Continuous Delivery is a concept first introduced in the book Continuous Delivery: Reliable Software Releases Through Build, Test, and Deployment Automation, written by Jez Humble and David Farley. By automating testing, building, and deployment, software can be released at the pace the market demands. It also improves collaboration between developers, operations, and testers, reducing communication effort and bugs. A CD pipeline aims to be a reliable and repeatable process, supported by tools, for delivering software.

Kubernetes is one of the destinations in a CD pipeline. This section describes how to deliver a new software release into Kubernetes with Jenkins and a Kubernetes deployment.

Knowledge of Jenkins is a prerequisite for this section. For details on how to build and set up Jenkins from scratch, refer to the Integrating with Jenkins section in this chapter. We will use the sample Flask (http://flask.pocoo.org) app my-calc mentioned in the Moving monolithic to microservices section.

Before setting up our Continuous Delivery pipeline with Kubernetes, we should know what a Kubernetes deployment is. A deployment in Kubernetes creates a replication controller, which maintains a certain number of pod replicas. When new software is released, you can roll out updates to, or recreate, the pods listed in the deployment configuration file, which ensures that your service is always alive.

Just like Jobs, deployment is part of the extensions API group and is still in the v1beta1 version. To enable the deployment resource, add the following flag to the API server configuration when launching it. If you have already launched the server, just modify the /etc/kubernetes/apiserver configuration file and restart the kube-apiserver service. Note that, for now, only the v1beta1 version is supported:

```
--runtime-config=extensions/v1beta1/deployments=true
```
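One way to confirm that the flag took effect is to ask the API server which resources it serves under extensions/v1beta1. The following Python sketch is an illustration, not part of the original recipe: it assumes an unauthenticated API server reachable over plain HTTP (the address 127.0.0.1:8080 is a placeholder), and that the discovery endpoint GET /apis/extensions/v1beta1 returns an APIResourceList whose resources entries carry a name field. The helper names are hypothetical.

```python
import json
import urllib.request

# Placeholder address; point this at your own kube-apiserver.
API_SERVER = "http://127.0.0.1:8080"
GROUP_VERSION = "extensions/v1beta1"


def deployments_enabled(resource_list: dict) -> bool:
    """Return True if an APIResourceList includes the 'deployments' resource."""
    return any(item.get("name") == "deployments"
               for item in resource_list.get("resources", []))


def fetch_resource_list(api_server: str = API_SERVER,
                        group_version: str = GROUP_VERSION) -> dict:
    """GET /apis/<group>/<version> and decode the JSON discovery document."""
    url = f"{api_server}/apis/{group_version}"
    with urllib.request.urlopen(url, timeout=5) as resp:
        return json.load(resp)
```

After restarting kube-apiserver with the flag, `deployments_enabled(fetch_resource_list())` should return True.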

After the API server starts successfully, we can start building up the service and creating the sample my-calc app. These steps are required because the concept of Continuous Delivery is to deliver your software from source code, through build and test, into your desired environment. We have to create the environment first.

Once the initial image has been pushed to the Docker registry with docker push, let's create a deployment named my-calc-deployment:

```
# cat my-calc-deployment.yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: my-calc-deployment
spec:
  replicas: 3
  template:
    metadata:
      labels:
        app: my-calc
    spec:
      containers:
      - name: my-calc
        image: msfuko/my-calc:1
        ports:
        - containerPort: 5000
// create deployment resource
# kubectl create -f my-calc-deployment.yaml
deployment "my-calc-deployment" created
```
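The YAML file above and the JSON body sent to the Kubernetes REST API later in this section describe the same object. As a sketch (the function names are hypothetical), the manifest can be built as a Python dictionary and serialized compactly; with the image set to the literal string msfuko/my-calc:${BUILD_NUMBER}, the output matches the body of the Jenkins curl command character for character.

```python
import json


def deployment_manifest(name: str, app: str, image: str,
                        replicas: int = 3, port: int = 5000) -> dict:
    """Build the same extensions/v1beta1 Deployment as my-calc-deployment.yaml."""
    return {
        "apiVersion": "extensions/v1beta1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "template": {
                # Every pod stamped from this template carries the app label.
                "metadata": {"labels": {"app": app}},
                "spec": {
                    "containers": [{
                        "name": app,
                        "image": image,
                        "ports": [{"containerPort": port}],
                    }]
                },
            },
        },
    }


def to_compact_json(manifest: dict) -> str:
    """Serialize without extra whitespace, like the body passed to curl -d."""
    return json.dumps(manifest, separators=(",", ":"))
```

Only the image tag changes from one release to the next, which is why the pipeline can regenerate the whole object on every build.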

Also, create a service to expose the port to the outside world:

```
# cat deployment-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: my-calc
spec:
  ports:
    - protocol: TCP
      port: 5000
  type: NodePort
  selector:
    app: my-calc
// create service resource
# kubectl create -f deployment-service.yaml
You have exposed your service on an external port on all nodes in your
cluster. If you want to expose this service to the external internet, you may
need to set up firewall rules for the service port(s) (tcp:31725) to serve traffic.
service "my-calc" created
```
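Because the service type is NodePort, Kubernetes allocated port 31725 on every node, so the app is reachable through any node's IP on that port. The Python sketch below builds the same Service as a dictionary and forms the external URL; it assumes the default kube-apiserver node port range of 30000-32767, which a cluster may override with --service-node-port-range. The helper names are hypothetical.

```python
# Default value of kube-apiserver's --service-node-port-range (30000-32767).
NODE_PORT_RANGE = range(30000, 32768)


def service_manifest(name: str, app: str, port: int = 5000) -> dict:
    """Build the NodePort Service from deployment-service.yaml as a dict."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "ports": [{"protocol": "TCP", "port": port}],
            "type": "NodePort",
            # Traffic is routed to any pod whose labels include app=<app>.
            "selector": {"app": app},
        },
    }


def external_url(node_ip: str, node_port: int, path: str = "/") -> str:
    """Return the URL that reaches the service through one node's NodePort."""
    if node_port not in NODE_PORT_RANGE:
        raise ValueError(f"{node_port} is outside the default NodePort range")
    return f"http://{node_ip}:{node_port}{path}"
```

The selector is the link to the deployment: it matches the app: my-calc label in the pod template, so pods from every release are served behind the same port.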

To set up the Continuous Delivery pipeline, perform the following steps:

- First, we'll create a Jenkins project named Deploy-My-Calc-K8S, as shown in the following screenshot:

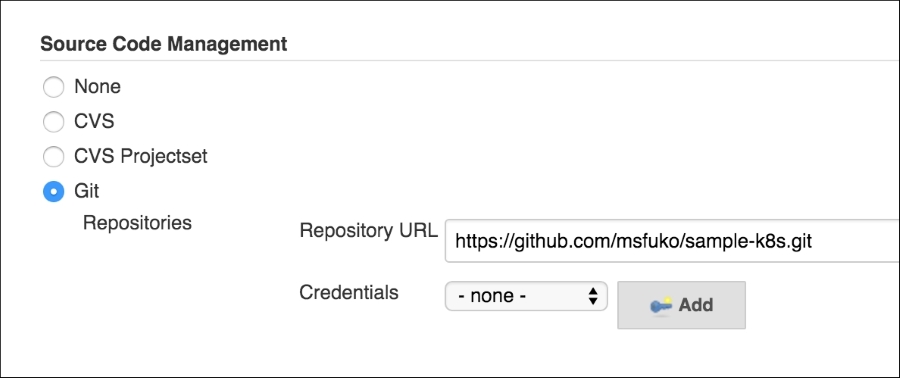

- Then, fill in the source code information in the Source Code Management section.

- Next, add the target Docker registry information to the Docker Build and Publish plugin in the Build step.

- Finally, add an Execute Shell section to the Build step and set the following command:

```
curl -XPUT \
  -d'{"apiVersion":"extensions/v1beta1","kind":"Deployment","metadata":{"name":"my-calc-deployment"},"spec":{"replicas":3,"template":{"metadata":{"labels":{"app":"my-calc"}},"spec":{"containers":[{"name":"my-calc","image":"msfuko/my-calc:'"${BUILD_NUMBER}"'","ports":[{"containerPort":5000}]}]}}}}' \
  http://54.153.44.46:8080/apis/extensions/v1beta1/namespaces/default/deployments/my-calc-deployment
```

Let's explain the command: it carries the same content as the following configuration file, just in a different format and with a different launching method. One uses the RESTful API; the other uses the kubectl command. The ${BUILD_NUMBER} tag is an environment variable in Jenkins, which is exported as the current build number of the project. In the command, the variable sits outside the single quotes so that the shell expands it; inside single quotes it would be sent literally:

```
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: my-calc-deployment
spec:
  replicas: 3
  template:
    metadata:
      labels:
        app: my-calc
    spec:
      containers:
      - name: my-calc
        image: msfuko/my-calc:${BUILD_NUMBER}
        ports:
        - containerPort: 5000
```

- After saving the project, we can start our build by clicking Build Now. Jenkins will pull the source code from your Git repository, build and push the image, and finally call the RESTful API of Kubernetes:

```
# showing the log in Jenkins about calling API of Kubernetes
...
[workspace] $ /bin/sh -xe /tmp/hudson3881041045219400676.sh
+ curl -XPUT -d'{"apiVersion":"extensions/v1beta1","kind":"Deployment","metadata":{"name":"my-cal-deployment"},"spec":{"replicas":3,"template":{"metadata":{"labels":{"app":"my-cal"}},"spec":{"containers":[{"name":"my-cal","image":"msfuko/my-cal:1","ports":[{"containerPort":5000}]}]}}}}' http://54.153.44.46:8080/apis/extensions/v1beta1/namespaces/default/deployments/my-cal-deployment
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
  0     0    0     0    0     0      0      0 --:--:-- --:--:-- --:--:--     0
100  1670  100  1407  100   263   107k  20534 --:--:-- --:--:-- --:--:--  114k
{
  "kind": "Deployment",
  "apiVersion": "extensions/v1beta1",
  "metadata": {
    "name": "my-calc-deployment",
    "namespace": "default",
    "selfLink": "/apis/extensions/v1beta1/namespaces/default/deployments/my-calc-deployment",
    "uid": "db49f34e-e41c-11e5-aaa9-061300daf0d1",
    "resourceVersion": "35320",
    "creationTimestamp": "2016-03-07T04:27:09Z",
    "labels": {
      "app": "my-calc"
    }
  },
  "spec": {
    "replicas": 3,
    "selector": {
      "app": "my-calc"
    },
    "template": {
      "metadata": {
        "creationTimestamp": null,
        "labels": {
          "app": "my-calc"
        }
      },
      "spec": {
        "containers": [
          {
            "name": "my-calc",
            "image": "msfuko/my-calc:1",
            "ports": [
              {
                "containerPort": 5000,
                "protocol": "TCP"
              }
            ],
            "resources": {},
            "terminationMessagePath": "/dev/termination-log",
            "imagePullPolicy": "IfNotPresent"
          }
        ],
        "restartPolicy": "Always",
        "terminationGracePeriodSeconds": 30,
        "dnsPolicy": "ClusterFirst"
      }
    },
    "strategy": {
      "type": "RollingUpdate",
      "rollingUpdate": {
        "maxUnavailable": 1,
        "maxSurge": 1
      }
    },
    "uniqueLabelKey": "deployment.kubernetes.io/podTemplateHash"
  },
  "status": {}
}
Finished: SUCCESS
```

- Let's check it with the kubectl command line after a few minutes:

```
// check deployment status
# kubectl get deployments
NAME                UPDATEDREPLICAS   AGE
my-cal-deployment   3/3               40m
```

We can see that there's a deployment named my-cal-deployment.

- Using kubectl describe, you can check the details:

```
// check the details of my-cal-deployment
# kubectl describe deployment my-cal-deployment
Name:                       my-cal-deployment
Namespace:                  default
CreationTimestamp:          Mon, 07 Mar 2016 03:20:52 +0000
Labels:                     app=my-cal
Selector:                   app=my-cal
Replicas:                   3 updated / 3 total
StrategyType:               RollingUpdate
RollingUpdateStrategy:      1 max unavailable, 1 max surge, 0 min ready seconds
OldReplicationControllers:  <none>
NewReplicationController:   deploymentrc-1448558234 (3/3 replicas created)
Events:
  FirstSeen  LastSeen  Count  From                      SubobjectPath  Reason     Message
  ─────────  ────────  ─────  ────                      ─────────────  ──────     ───────
  46m        46m       1      {deployment-controller }                 ScalingRC  Scaled up rc deploymentrc-3224387841 to 3
  17m        17m       1      {deployment-controller }                 ScalingRC  Scaled up rc deploymentrc-3085188054 to 3
  9m         9m        1      {deployment-controller }                 ScalingRC  Scaled up rc deploymentrc-1448558234 to 1
  2m         2m        1      {deployment-controller }                 ScalingRC  Scaled up rc deploymentrc-1448558234 to 3
```
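If you prefer a script to the curl one-liner in the Execute Shell step, the same PUT can be issued from Python using only the standard library. This is a sketch, not the method used in this recipe: it assumes Python 3 on the Jenkins agent, an API server that accepts unauthenticated HTTP as in this section, and that Jenkins exports BUILD_NUMBER; all helper names are hypothetical. It also checks that the Deployment returned by the server carries the image tag that was just requested.

```python
import json
import os
import urllib.request

# API server address used in this section; replace it with your own.
API_SERVER = "http://54.153.44.46:8080"
NAMESPACE = "default"
NAME = "my-calc-deployment"


def deployment_url(api_server: str, namespace: str, name: str) -> str:
    """URL of a single Deployment in the extensions/v1beta1 API group."""
    return (f"{api_server}/apis/extensions/v1beta1/namespaces/"
            f"{namespace}/deployments/{name}")


def build_body(build_number: str) -> bytes:
    """JSON body equivalent to the curl -d payload, tagged with the build."""
    manifest = {
        "apiVersion": "extensions/v1beta1",
        "kind": "Deployment",
        "metadata": {"name": NAME},
        "spec": {
            "replicas": 3,
            "template": {
                "metadata": {"labels": {"app": "my-calc"}},
                "spec": {"containers": [{
                    "name": "my-calc",
                    "image": f"msfuko/my-calc:{build_number}",
                    "ports": [{"containerPort": 5000}],
                }]},
            },
        },
    }
    return json.dumps(manifest).encode("utf-8")


def put_request(build_number: str) -> urllib.request.Request:
    """Prepare (but do not send) the PUT that replaces the Deployment."""
    return urllib.request.Request(
        deployment_url(API_SERVER, NAMESPACE, NAME),
        data=build_body(build_number),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


def image_of(deployment: dict) -> str:
    """Image of the first container in a Deployment's pod template."""
    return deployment["spec"]["template"]["spec"]["containers"][0]["image"]


def deploy() -> dict:
    """Send the PUT for $BUILD_NUMBER and verify the image the server stored."""
    build_number = os.environ["BUILD_NUMBER"]  # exported by Jenkins
    with urllib.request.urlopen(put_request(build_number), timeout=10) as resp:
        result = json.load(resp)
    expected = f"msfuko/my-calc:{build_number}"
    if image_of(result) != expected:
        raise RuntimeError(f"expected {expected}, server has {image_of(result)}")
    return result
```

Because Jenkins runs the step with /bin/sh -xe, as the log shows, an uncaught exception from deploy() would end the script with a non-zero status and mark the build as failed.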

In the kubectl describe output, we can see one interesting setting named RollingUpdateStrategy: 1 max unavailable, 1 max surge, and 0 min ready seconds. These values define how the update is rolled out. Currently it's the default setting: at most one pod can be unavailable during the deployment, at most one extra pod can be created above the desired replica count, and a newly created pod counts as available as soon as it is ready, with zero extra seconds of waiting. What about the replication controller? Will it be created properly?

```
// check ReplicationController
# kubectl get rc
CONTROLLER                CONTAINER(S)   IMAGE(S)          SELECTOR                                                         REPLICAS   AGE
deploymentrc-1448558234   my-cal         msfuko/my-cal:1   app=my-cal,deployment.kubernetes.io/podTemplateHash=1448558234   3          1m
```

We can see that this RC, named deploymentrc-${id}, has three replicas. Let's also check the pods:

```
// check Pods
# kubectl get pods
NAME                            READY     STATUS    RESTARTS   AGE
deploymentrc-1448558234-qn45f   1/1       Running   0          4m
deploymentrc-1448558234-4utub   1/1       Running   0          12m
deploymentrc-1448558234-iz9zp   1/1       Running   0          12m
```
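The chain from deployment to RC to pods is held together by labels. The RC selector shown above combines the app label with a deployment.kubernetes.io/podTemplateHash label, so each RC counts only the pods stamped from its own template; this is what lets an old and a new RC coexist during the update later in this section. The Python sketch below illustrates equality-based selector matching only; it does not implement set-based selectors, and the function names are hypothetical.

```python
def parse_selector(selector: str) -> dict:
    """Parse an equality-based selector such as 'app=my-cal,tier=web'."""
    pairs = {}
    for term in selector.split(","):
        key, sep, value = term.partition("=")
        # Accept the 'key==value' spelling as well as 'key=value'.
        value = value[1:] if value.startswith("=") else value
        if not sep or not key or key.endswith("!"):
            raise ValueError(f"unsupported selector term: {term!r}")
        pairs[key.strip()] = value.strip()
    return pairs


def matches(selector: dict, labels: dict) -> bool:
    """True if every key=value pair in the selector is present in labels."""
    return all(labels.get(key) == value for key, value in selector.items())
```

A pod carrying app=my-cal and the hash 1448558234 matches the RC above, while a pod created from an earlier template (a different hash) does not, even though both would match a plain app=my-cal selector.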

From these outputs, we can see that the deployment triggers RC creation, and the RC triggers pod creation. Let's check the response from our app my-calc:

```
# curl http://54.153.44.46:31725/
Hello World!
```
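In a pipeline, this manual curl check can become an automated smoke test run after the API call. Since the PUT returns before the rolling update finishes, the sketch below polls the service until the expected text appears. It is a Python illustration under stated assumptions: the node address and port come from this section, while the attempt count and delay are arbitrary, and the function names are hypothetical.

```python
import time
import urllib.request


def fetch_body(url: str, timeout: float = 5.0) -> str:
    """GET url and return the decoded response body."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")


def wait_for_body(url: str, expected: str, attempts: int = 30,
                  delay: float = 2.0) -> bool:
    """Poll url until its body contains expected; return False if it never does."""
    for attempt in range(attempts):
        try:
            if expected in fetch_body(url):
                return True
        except OSError:
            # URLError, HTTPError and socket timeouts all derive from OSError,
            # so an unreachable or not-yet-ready service just means "retry".
            pass
        if attempt < attempts - 1:
            time.sleep(delay)
    return False
```

For the release that follows, a check such as `wait_for_body("http://54.153.44.46:31725/", "Hello Calculator!")` would succeed only once pods running the new image answer requests.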

Assume that we have a newly released application: we'll change Hello World! to Hello Calculator!. After the code is pushed to GitHub, Jenkins can be triggered by an SCM webhook, run periodically, or be triggered manually:

```
[workspace] $ /bin/sh -xe /tmp/hudson877190504897059013.sh
+ curl -XPUT -d{"apiVersion":"extensions/v1beta1","kind":"Deployment","metadata":{"name":"my-calc-deployment"},"spec":{"replicas":3,"template":{"metadata":{"labels":{"app":"my-calc"}},"spec":{"containers":[{"name":"my-calc","image":"msfuko/my-calc:2","ports":[{"containerPort":5000}]}]}}}} http://54.153.44.46:8080/apis/extensions/v1beta1/namespaces/default/deployments/my-calc-deployment
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
  0     0    0     0    0     0      0      0 --:--:-- --:--:-- --:--:--     0
100  1695  100  1421  100   274  86879  16752 --:--:-- --:--:-- --:--:-- 88812
{
  "kind": "Deployment",
  "apiVersion": "extensions/v1beta1",
  "metadata": {
    "name": "my-calc-deployment",
    "namespace": "default",
    "selfLink": "/apis/extensions/v1beta1/namespaces/default/deployments/my-calc-deployment",
    "uid": "db49f34e-e41c-11e5-aaa9-061300daf0d1",
    "resourceVersion": "35756",
    "creationTimestamp": "2016-03-07T04:27:09Z",
    "labels": {
      "app": "my-calc"
    }
  },
  "spec": {
    "replicas": 3,
    "selector": {
      "app": "my-calc"
    },
    "template": {
      "metadata": {
        "creationTimestamp": null,
        "labels": {
          "app": "my-calc"
        }
      },
      "spec": {
        "containers": [
          {
            "name": "my-calc",
            "image": "msfuko/my-calc:2",
            "ports": [
              {
                "containerPort": 5000,
                "protocol": "TCP"
              }
            ],
            "resources": {},
            "terminationMessagePath": "/dev/termination-log",
            "imagePullPolicy": "IfNotPresent"
          }
        ],
        "restartPolicy": "Always",
        "terminationGracePeriodSeconds": 30,
        "dnsPolicy": "ClusterFirst"
      }
    },
    "strategy": {
      "type": "RollingUpdate",
      "rollingUpdate": {
        "maxUnavailable": 1,
        "maxSurge": 1
      }
    },
    "uniqueLabelKey": "deployment.kubernetes.io/podTemplateHash"
  },
  "status": {}
}
Finished: SUCCESS
```

Let's continue from the last action. We built a new image with the $BUILD_NUMBER tag and triggered Kubernetes, through the deployment, to replace the replication controller. Let's observe the behavior of the replication controllers:

```
# kubectl get rc
CONTROLLER                CONTAINER(S)   IMAGE(S)           SELECTOR                                                          REPLICAS   AGE
deploymentrc-1705197507   my-calc        msfuko/my-calc:1   app=my-calc,deployment.kubernetes.io/podTemplateHash=1705197507   3          13m
deploymentrc-1771388868   my-calc        msfuko/my-calc:2   app=my-calc,deployment.kubernetes.io/podTemplateHash=1771388868   0          18s
```

We can see that the deployment created another RC named deploymentrc-1771388868, whose pod count is currently 0. Let's wait a while and check again:

```
# kubectl get rc
CONTROLLER                CONTAINER(S)   IMAGE(S)           SELECTOR                                                          REPLICAS   AGE
deploymentrc-1705197507   my-calc        msfuko/my-calc:1   app=my-calc,deployment.kubernetes.io/podTemplateHash=1705197507   1          15m
deploymentrc-1771388868   my-calc        msfuko/my-calc:2   app=my-calc,deployment.kubernetes.io/podTemplateHash=1771388868   3          1m
```

The number of pods in the RC with the old image my-calc:1 has dropped to 1, and the RC with the new image has scaled up to 3. Checking once more:

```
# kubectl get rc
CONTROLLER                CONTAINER(S)   IMAGE(S)           SELECTOR                                                          REPLICAS   AGE
deploymentrc-1705197507   my-calc        msfuko/my-calc:1   app=my-calc,deployment.kubernetes.io/podTemplateHash=1705197507   0          15m
deploymentrc-1771388868   my-calc        msfuko/my-calc:2   app=my-calc,deployment.kubernetes.io/podTemplateHash=1771388868   3          2m
```

After a few seconds, the old pods are all gone, and the new pods have replaced them to serve users. Let's check the response from the service:

```
# curl http://54.153.44.46:31725/
Hello Calculator!
```

The pods have been updated to the new image one by one. The following illustration shows how this works: based on RollingUpdateStrategy, Kubernetes replaces the pods one at a time, and an old pod is destroyed only after a new pod launches successfully. The bubbles on the timeline arrow mark when the logs shown earlier in this section were captured. In the end, the new pods replace all the old pods.

Deployment is still in beta, and some functions are still under development, for example, deleting a deployment resource and support for the recreate strategy. However, it already lets Jenkins drive a working Continuous Delivery pipeline. It's pretty easy, and it keeps all the services online while they are updated. For more details on the RESTful API, refer to http://YOUR_KUBERNETES_MASTER_ENDPOINT:KUBE_API_PORT/swagger-ui/#!/v1beta1/listNamespacedDeployment.
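The guarantee that services stay online comes from the two bounds in RollingUpdateStrategy. As a rough illustration, here is a Python sketch of a simplified model, not the deployment controller's actual algorithm: it assumes every new pod becomes ready immediately and ignores minReadySeconds, and the function names are hypothetical. With 3 replicas, maxSurge 1, and maxUnavailable 1, the model never runs more than 4 pods or fewer than 2, and the RC replica counts observed earlier in this section (3 and 0, then 1 and 3, then 0 and 3) also stay under the 4-pod ceiling.

```python
def rolling_update_steps(replicas: int, max_surge: int, max_unavailable: int):
    """Simplified rolling update, assuming new pods are ready at once.

    Returns the (old, new) replica counts after each scaling action.
    """
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    ceiling = replicas + max_surge       # most pods allowed at any moment
    floor = replicas - max_unavailable   # fewest available pods allowed
    old, new = replicas, 0
    steps = [(old, new)]
    while old > 0 or new < replicas:
        # Scale the new RC up as far as the surge ceiling allows.
        up = min(replicas - new, ceiling - (old + new))
        if up > 0:
            new += up
            steps.append((old, new))
        # Scale the old RC down while keeping at least `floor` pods.
        down = min(old, (old + new) - floor)
        if down > 0:
            old -= down
            steps.append((old, new))
        if up <= 0 and down <= 0:
            raise RuntimeError("no progress possible with these bounds")
    return steps


def within_ceiling(snapshots, replicas: int, max_surge: int) -> bool:
    """Check that observed (old, new) RC counts never exceed the surge ceiling."""
    return all(old + new <= replicas + max_surge for old, new in snapshots)
```

For the default strategy, `rolling_update_steps(3, 1, 1)` yields `[(3, 0), (3, 1), (1, 1), (1, 3), (0, 3)]`. Because both old and new pods count as available in this model, it can drop two old pods in one step; in a real cluster, pods that are not ready yet do not count as available, so old pods are usually removed more gradually.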

With a deployment, we can achieve the goal of rolling updates. However, kubectl also provides a rolling-update command, which is really useful, too. Check out the following recipes:

- The Updating live containers and Ensuring flexible usage of your containers recipes in Chapter 3, Playing with Containers

- Moving monolithic to microservices

- Integrating with Jenkins

- The Working with a RESTful API and Authentication and authorization recipes in Chapter 7, Advanced Cluster Administration