![]()

SQL Server supports integer, decimal, and floating-point numbers. Working with numbers requires an understanding of the types available and what they are capable of. Implicit conversion rules sometimes lead to surprising results from seemingly simple-to-understand expressions. The recipes in this chapter show some of the more common operations as well as techniques for guarding against unexpected and unwanted results.

Problem

You are writing T-SQL or creating a table, and you want to represent integer data in a binary format.

Solution

Choose one of the four integer data types provided in SQL Server. The following is a code block showing the four types and their range of valid values:

DECLARE @bip bigint, @bin bigint

DECLARE @ip int, @in int

DECLARE @sip smallint, @sin smallint

DECLARE @ti tinyint

SET @bip = 9223372036854775807 /* 2^63-1 */

SET @bin = -9223372036854775808 /* -2^63 */

SET @ip = 2147483647 /* 2^31-1 */

SET @in = -2147483648 /* -2^31 */

SET @sip = 32767 /* 2^15-1 */

SET @sin = -32768 /* -2^15 */

SET @ti = 255 /* 2^8-1 */

SELECT 'bigint' AS type_name, @bip AS max_value, @bin AS min_value

UNION ALL

SELECT 'int', @ip, @in

UNION ALL

SELECT 'smallint', @sip, @sin

UNION ALL

SELECT 'tinyint', @ti, 0

ORDER BY max_value DESC

How It Works

SQL Server supports four integer data types. Each allocates a specific number of bytes for use in representing integer values. From largest to smallest, the types are as follows:

- bigint (eight bytes)

- int (four bytes)

- smallint (two bytes)

- tinyint (one byte)

The results from the solution example prove the range of values supported by each of the types:

type_name max_value min_value

--------- ------------------- --------------------

bigint 9223372036854775807 -9223372036854775808

int 2147483647 -2147483648

smallint 32767 -32768

tinyint 255 0

(4 row(s) affected)

Attempts to store an out-of-range value result in an overflow error. For example, decrement the minimum value for smallint by 1, attempt to store that value, and you’ll get the following results:

DECLARE @sin smallint

SET @sin = -32769

Msg 220, Level 16, State 1, Line 2

Arithmetic overflow error for data type smallint, value = -32769.

tinyint is a single byte limited to positive values only. The other three types take negative values as well. SQL Server does not provide for unsigned versions of bigint, int, and smallint.

Choose from among the integer types based upon the range of values that you are working with. Don’t forget to allow for future growth. If storing the national debt, for example, you might want to jump straight to the bigint data type. Any of the types may also be used in CREATE TABLE statements. You can create table columns based upon the four types, as well as T-SQL variables, as shown in the example.

![]() Note The absolute value range in the negative direction is one greater than in the positive direction. That is because of the two’s-complement notation used internally by the database engine. If you’re curious, you can read more about two’s-complement in the following Wikipedia article: http://en.wikipedia.org/wiki/Two%27s_complement.

Note The absolute value range in the negative direction is one greater than in the positive direction. That is because of the two’s-complement notation used internally by the database engine. If you’re curious, you can read more about two’s-complement in the following Wikipedia article: http://en.wikipedia.org/wiki/Two%27s_complement.

Integers decrease in size from eight bytes to one byte as you move from bigint to tinyint. But does SQL Server support anything smaller such as a single-bit integer? The answer is yes. Using the bit type, you can define a column or variable that can be set to 1, 0, or null. Here’s an example:

DECLARE @SunnyDayFlag bit

SET @SunnyDayFlag = 1;

SET @SunnyDayFlag = 'true'

SELECT @SunnyDayFlag

The values 'true' and 'false' (case-insensitive) equate to 1 and 0, respectively. SQL Server coalesces bit variables into groups of eight or fewer, storing up to eight values in a single byte. While the official documentation lumps bit with the integer types, it is a type better suited for true/false flags than for numeric values you want to use in expressions.

11-2. Representing Decimal Amounts

Problem

You are working with decimal data such as monetary amounts for which precise, base-10 representation is critical. You want to create a variable or table column of an appropriate type.

Solution

Use the decimal data type. Specify the total number of digits needed. Also specify how many of those digits are to the right of the decimal point. Here’s an example:

DECLARE @x0 decimal(7,0) = 1234567.

DECLARE @x1 decimal(7,1) = 123456.7

DECLARE @x2 decimal(7,2) = 12345.67

DECLARE @x3 decimal(7,3) = 1234.567

DECLARE @x4 decimal(7,4) = 123.4567

DECLARE @x5 decimal(7,5) = 12.34567

DECLARE @x6 decimal(7,6) = 1.234567

DECLARE @x7 decimal(7,7) = .1234567

SELECT @x0

SELECT @x1

SELECT @x2

SELECT @x3

SELECT @x4

SELECT @x5

SELECT @x6

SELECT @x7

The first parameter to decimal indicates the overall number of digits. The second parameter indicates how many of those digits are to the right of the decimal place.

How It Works

Choose the decimal type whenever accurate representation of decimal values is important. You’ll be able to accurately represent values to the number of digits you specify, with none of the rounding or imprecision that often results from floating-point types and their use of base-2.

The two parameters to a decimal declaration are termed precision and scale. Precision refers to the overall number of digits. Scale refers to the location of the decimal point in respect to those digits. The output from the solution example—modified somewhat for readability—is as follows:

----------

1234567

123456.7

12345.67

1234.567

123.4567

12.34567

1.234567

0.1234567

The number of digits of precision is held constant at seven. The changing location of the decimal point indicates the effects of different values for scale.

Monetary values are a particularly good application of the decimal type. The following is an example declaration allowing for values into the hundreds of millions of pounds, including pence:

decimal(11,2)

This declaration gives nine digits to the left of the decimal point and two to the right. The range of valid values is thus as follows:

-999,999,999.99 to 999,999,999.99

The default precision and scale are 18 and 0. Therefore, a declaration of decimal is equivalent to decimal(18,0). The maximum precision is 38, and the scale must be less than or equal to the precision.

11-3. Representing Monetary Amounts

Problem

You want to create a variable or a column for use in holding values representing money.

Solution #1

Choose the decimal type. Choose a suitable precision and scale. For example, to store rupees and paise to plus-or-minus 1 billion:

DECLARE @account_balance decimal(12,2)

Ten digits are to the left of the decimal, allowing you to reach into the single-digit billions. Having two digits to the right of the decimal enables you to store values to the pence.

Solution #2

Choose one of SQL Server’s built-in monetary data types. Here’s an example:

DECLARE @mp money, @mn money

DECLARE @smp smallmoney, @smn smallmoney

SET @mp = 922337203685477.5807

SET @mn = -922337203685477.5808

SET @smp = 214748.3647

SET @smn = -214748.3648

SELECT 'money' AS type_name, @mp AS max_value, @mn AS min_value

UNION ALL

SELECT 'smallmoney', @smp, @smn

How It Works

My preference leans toward Solution #1. Use the decimal type for money values. It is a standard, ISO SQL data type. You can specify however many digits to the right of the decimal point that you prefer. I often specify two digits to the right, because most money systems in use today resolve to the hundredth of their main unit. For example, there are 100 pence to the pound, 100 euro cents to the euro, 100 paise to the rupee, and so forth.

Your other option is to use one of SQL Server’s built-in money types: money and smallmoney. These types both give four digits of precision to the right of the decimal place. Their ranges are as shown in the results from the Solution #2 example:

type_name max_value min_value

---------- -------------------- ---------------------

money 922337203685477.5807 -922337203685477.5808

smallmoney 214748.3647 -214748.3648

If you do use money or smallmoney, then take care to carefully consider whether and when to round values to two decimal places. Imagine you are storing a checking account balance. What would it mean to tell a customer that their balance is, say, 59 dollars and 20 and 2/10ths cents? On the other hand, you might be storing the price of gasoline, which in the United States is generally priced to the tenth of a cent.

![]() Note The money types provoke a certain amount of discussion and debate. Aaron Bertrand has an interesting article in his blog on performance comparisons he made between money and decimal. You can find that article at http://sqlblog.com/blogs/aaron_bertrand/archive/2008/04/27/performance-storage-comparisons-money-vs-decimal.aspx.

Note The money types provoke a certain amount of discussion and debate. Aaron Bertrand has an interesting article in his blog on performance comparisons he made between money and decimal. You can find that article at http://sqlblog.com/blogs/aaron_bertrand/archive/2008/04/27/performance-storage-comparisons-money-vs-decimal.aspx.

11-4. Representing Floating-Point Values

Problem

You are performing scientific calculations and need the ability to represent floating-point values.

Solution

Choose one of the floating-point types supported by SQL Server. As a practical matter, you have the following choices:

DECLARE @x1 real /* same as float(24) */

DECLARE @x2 float /* same as float(53) */

DECLARE @x3 float(53)

DECLARE @x4 float(24)

How It Works

Table 11-1 gives the absolute-value ranges supported by the declarations in the solution. For example, the largest magnitude real is 3.40E+38. That value can, of course, be either positive or negative. The least magnitude value other than zero that you can represent is 1.18E-38. If you must represent a value of smaller magnitude, such as 1.18E-39, then you would need to look toward the float type.

Table 11-1. Floating-Point Value Ranges

| Declaration | Min Absolute Value | Max Absolute Value |

|---|---|---|

| real | 1.18E-38 | 3.40E+38 |

| float | 2.23E-308 | 1.79E+308 |

| float(53) | 2.23E-308 | 1.79E+308 |

| float(24) | 1.18E-38 | 3.40E+38 |

All values in Table 11-1 can be either positive or negative. Storing zero is also always an option.

You can specify float(n) using any n from 1 to 53. However, any value n from 1..24 is treated as 24. Likewise, any value n from 25..53 is treated as 53. A declaration of float(25) is thus the same as float(53).

Types real and float(24) are equivalent and require seven bytes of storage. Types float and float(53) are equivalent and require 15 bytes of storage.

11-5. Writing Mathematical Expressions

Solution

Write any expression you like, making use of SQL Server’s supported operators and functions. For example, the expression in the following code block computes the new balance of a home loan after a payment of $500. The loan balance is $94,235.49. The interest rate is 6 percent. Twelve monthly payments are made per year.

DECLARE @cur_bal decimal(7,2) = 94235.49

DECLARE @new_bal decimal(7,2)

SET @new_bal = @cur_bal - (500.00 - ROUND(@cur_bal * 0.06 / 12.00, 2))

SELECT @new_bal

The results are as follows:

---------

94206.67

How It Works

You can write expressions of arbitrary length involving combinations of values, function calls, and operators. In doing so, you must be aware of and respect the rules of operator precedence. For example, multiplication occurs before addition, as is standard in mathematics.

Table 11-2 lists operators in order of their evaluation priority. The table lists all operators, including the nonmathematical ones.

Table 11-2. Operator Precedence in SQL Server

| Priority Level | Operator | Description |

|---|---|---|

| 1 | ∼ | Bitwise NOT |

| 2 | *, /, % | Multiply, divide, modulo |

| 3 | +, - | Positive sign, negative sign |

| 3 | +, - | Add, subtract |

| 3 | + | String concatenate |

| 3 | &, ^, | | Bitwise AND, Bitwise exclusive OR, Bitwise OR |

| 4 | =, <, <=, !<, >, >=, !>, <>, != | Equals, less than, less than or equal, not less than, greater than, greater than or equal, not greater than, not equal, not equal |

| 5 | NOT | Logical NOT |

| 6 | AND | Logical AND |

| 7 | ALL, ANY, BETWEEN, IN, LIKE, OR, SOME | Logical OR and others |

| 8 | = | Assignment |

Use parentheses to override the default priority. The solution example includes parentheses as follows to force the monthly interest amount to be subtracted from the $500 monthly payment, leaving the amount to be applied to the principal.

(500.00 - ROUND(@cur_bal * 0.06 / 12.00 ,2))

Omit the outer parentheses, and you’ll get a very different result indeed.

Another issue to contend with is data type precedence and the presence or absence of implicit conversions. Recipe 11-6, coming next, helps you guard against incorrect results from mixing data types within an expression.

11-6. Guarding Against Errors in Expressions with Mixed Data Types

Problem

You want to guard against trouble when writing an expression involving values from more than one data type.

Solution

Consider explicitly converting values between types to maintain full control over your expressions and their results. For example, invoke CAST and CONVERT as follows to change values from one type to another:

SELECT 6/100,

CAST(6 AS DECIMAL(1,0)) / CAST(100 AS DECIMAL(3,0)),

CAST(6.0/100.0 AS DECIMAL(3,2))

SELECT 6/100,

CONVERT(DECIMAL(1,0), 6) / CONVERT(DECIMAL(3,0), 100),

CONVERT(DECIMAL(3,2), 6.0/100.0)

The results from both these queries are as follows:

--- -------- -----

0 0.060000 0.06

Choose either CAST or CONVERT depending upon the importance you attach to complying with the ISO SQL standard. CAST is a standard function. CONVERT is specific to SQL Server. My opinion is to favor CAST unless you have some specific need for functionality offered by CONVERT.

How It Works

One of the most common implicit conversion errors in SQL Server is actually the result of implicit conversion not occurring in a specific case when a cursory glance would lead one to expect it to occur. That case involves division of numeric values written as integers, such as 6/100.

Recall the solution example from Recipe 11-5. Instead of writing the 6 percent interest rate as 0.06, write it as 6/100 inside parentheses. Make just that one change, and the resulting code looks as follows:

DECLARE @cur_bal decimal(7,2) = 94235.49

DECLARE @new_bal decimal(7,2)

SET @new_bal = @cur_bal - (500.00 - ROUND(@cur_bal * (6/100) / 12.00 ,2))

SELECT @new_bal

Execute this code, and the result changes from the correct result of 94206.67 as given in Recipe 11-5 to the incorrect result of 93735.49. Why the change? It’s because 6 and 100 are written with no decimal points, so they are treated as integers. Integer division then ensues. The uninitiated expects 6/100 to evaluate to 0.06, but integer division leads to a result of zero. The interest rate evaluates to zero, and too much of the loan payment is applied to the principal.

![]() Caution Keep in mind that numeric constants written without a decimal point are treated as integers. When writing an expression involving constants along with decimal values, include decimal points in your constants so they are treated also as decimals—unless, of course, you are certain you want them written as integers.

Caution Keep in mind that numeric constants written without a decimal point are treated as integers. When writing an expression involving constants along with decimal values, include decimal points in your constants so they are treated also as decimals—unless, of course, you are certain you want them written as integers.

The solution in this case is to recognize that the expression requires decimal values and write either 0.06 or 6.0/100.0 instead. For example, the following version of the expression will yield the same correct results as in Recipe 11-5:

SET @new_bal = @cur_bal - (500.00 - ROUND(@cur_bal * (6.0/100.0) / 12.00 ,2))

What of the values 500.00 and 12.00? Can they be written as 500 and 12? It turns out that they can be written that way. The following expression yields correct results:

SET @new_bal = @cur_bal - (500 - ROUND(@cur_bal * (6.0/100.0) / 12 ,2))

You can get away in this case with 500 and 12, because SQL Server applies data type precedence. In the case of 500, the value being subtracted is a decimal value. Thus, the database engine implicitly converts 500 to a decimal. For much the same reason, the integer 12 is also promoted to decimal. That conversion makes sense in this particular case, but it may not always be what you want.

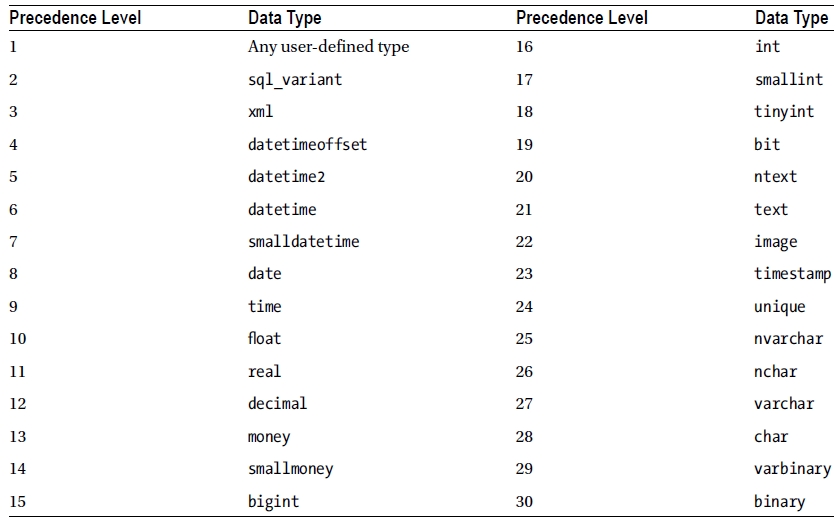

Table 11-3 lists data types by precedence. Anytime an operator works on values of two different types, the type lower on the scale is promoted to the type higher on the scale. If such a conversion is not what you want or if you just want to clearly specify the conversion to remove any doubt, invoke either the CAST or CONVERT function.

Table 11-3. Data Type Precedence in SQL Server

The following is one last restatement of Recipe 11-5’s solution. The original solution used ROUND to force the interest amount to two decimal places, but what was the resulting data type? Do you know? Perhaps it is better to be certain. The following code casts the result of the interest computation to the type decimal(7,2). The rounding still occurs, but this time as part of the casting operation.

DECLARE @cur_bal decimal(7,2) = 94235.49

DECLARE @new_bal decimal(7,2)

SET @new_bal = @cur_bal - (500.00 - CAST(@cur_bal * (6.0/100.0) / 12.00 AS decimal(7,2)))

SELECT @new_bal

The results are as follows:

--------

94206.67

Remember especially the tricky case of integer division in cases such as 6/100. That behavior is unintuitive and leads to many errors. Otherwise, the implicit conversions implied by the precedence in Table 11-3 tend to make sense and produce reasonable results. Whenever values from two types are involved in the same expression, the value of the type having the lower precedence is converted into an instance of the type having the higher precedence. Even so, I recommend explicit conversions in all but the obvious cases. If you aren’t absolutely certain at a glance just what is occurring within an expression, then make the conversions explicit.

11-7. Rounding

Solution

Invoke the ROUND function. Here’s an example:

SELECT EndOfDayRate,

ROUND(EndOfDayRate,0) AS EODR_Dollar,

ROUND(EndOfDayRate,2) AS EODR_Cent

FROM Sales.CurrencyRate

The results are as follows:

EndOfDayRate EODR_Dollar EODR_Cent

------------ ----------- ---------

1.0002 1.00 1.00

1.55 2.00 1.55

1.9419 2.00 1.94

1.4683 1.00 1.47

8.2784 8.00 8.28

...

How It Works

Invoke ROUND to round a number to a specific number of decimal places, as specified by the second argument. The solution example shows rounding to the nearest integer (zero decimal places) and to the nearest hundredth (two decimal places).

![]() Note Digit values of 5 and higher round upward. Rounding 0.5 to the nearest integer yields 1.0 as a result.

Note Digit values of 5 and higher round upward. Rounding 0.5 to the nearest integer yields 1.0 as a result.

You can invoke ROUND with a negative argument to round to the left of the decimal place. The following is an example that rounds product inventories to the nearest 10 units and to the nearest 100 units:

SELECT ProductID, SUM(Quantity) AS Quantity,

SUM(ROUND(Quantity,-1)) as Q10,

SUM(ROUND(Quantity,-2)) as Q100

FROM Production.ProductInventory

GROUP BY ProductID

The results show the effects of rounding away from the decimal place:

ProductID Quantity Q10 Q100...

--------- -------- ---- ----

1 1085 1080 1100

2 1109 1110 1100

3 1352 1350 1300

4 1322 1320 1300

ROUND usually returns a value. However, there is a case to beware of. It comes about because ROUND returns its result in the same data type as the input value. The following three statements illustrate the case in which ROUND will throw an error:

SELECT ROUND(500, -3)

SELECT ROUND(500.0, -4)

SELECT ROUND(500.0, -3)

The first and second statements will return 1000 and 0.0, respectively. But the third query will throw an error as follows:

Msg 8115, Level 16, State 2, Line 2

Arithmetic overflow error converting expression to data type numeric.

ROUND(500, -3) succeeds because the input value is an integer constant. (No decimal point means that 500 is considered as an integer.) The result is thus also an integer, and an integer is large enough to hold the value 1000.

ROUND(500.0, -4) returns zero. The input value indicates a type of decimal(4,1). The value rounds to zero because the value is being rounded too far to the left. Zero fits into the four-digit precision of the implied data type.

ROUND(500.0, -3) fails because the result is 1000. The value 1000 will not fit into the implied data type of decimal(4,1). You can get around the problem by casting your input value to a larger precision. Here’s an example:

SELECT ROUND(CAST(500.0 as DECIMAL(5,1)), -3)

------

1000.0

This time, the input value is explicitly made to be decimal(5,1). The five digits of precision leave four to the left of the decimal place. Those four are enough to represent the value 1000.

11-8. Rounding Always Up or Down

Problem

You want to force a result to an integer value. You want to always round either up or down.

Solution

Invoke CEILING to always round up to the next integer value. Invoke FLOOR to always round down to the next lowest integer value. Here’s an example:

SELECT CEILING(-1.23), FLOOR(-1.23), CEILING(1.23), FLOOR(1.23)

The results are as follows:

---- ---- ---- ----

-1 -2 2 1

How It Works

CEILING and FLOOR don’t give quite the same flexibility as ROUND. You can’t specify a number of decimal places. The functions simply round up or down to the nearest integer, period.

You can work around the nearest integer limitation using a bit of math. For example, to invoke CEILING to the nearest cent and to the nearest hundred, use this:

SELECT CEILING(123.0043*100.0)/100.0 AS toCent,

CEILING(123.0043/100.0)*100.0 AS toHundred

toCent toHundred

---------- ---------

123.010000 200.0

We don’t trust this technique for binary floating-point values. However, it should work fine on decimal values so long as the extra math doesn’t push those values beyond the bounds of precision and scale that decimal can support.

11-9. Discarding Decimal Places

Problem

You just want to “chop off” the digits past the decimal point. You don’t care about rounding at all.

Solution

Invoke the ROUND function with a third parameter that is nonzero. Here’s an example:

SELECT ROUND(123.99,0,1), ROUND(123.99,1,1), ROUND(123.99,-1,1)

------ ------ ------

123.00 123.90 120.00

How It Works

Some database brands (Oracle, for example) implement a TRUNCATE function to eliminate values past the decimal point. SQL Server accomplishes that task using the ROUND function. Make the third parameter anything but zero, and the function will truncate rather than round.

11-10. Testing Equality of Binary Floating-Point Values

Problem

You are testing two binary floating-point values for equality, but the imprecision inherent in floating-point representation is causing values that you consider equal to be rejected as not equal.

Solution

Decide on a difference threshold below which you will consider the two values to be equal. Then test the absolute value of the difference to see whether it is less than your threshold. For example, the following example assumes a threshold of 0.000001 (one one-millionth):

DECLARE @r1 real = 0.95

DECLARE @f1 float = 0.95

IF ABS(@r1-@f1) < 0.000001

SELECT 'Equal'

ELSE

SELECT 'Not Equal'

The difference is less than the threshold, so the values are considered as equal. The result is as follows:

-----

Equal

How It Works

Not all decimal values can be represented precisely in binary floating-point. In addition, different expressions that should in theory yield identical results sometimes differ by tiny amounts. The following is a query block to illustrate the problem:

DECLARE @r1 real = 0.95

DECLARE @f1 float = 0.95

SELECT @r1, @f1, @r1-@f1

Both values are the same but not really. The results are as follows:

---- ---- ----------------------

0.95 0.95 -1.19209289106692E-08

The fundamental problem is that the base-2 representation of 0.95 is a never-ending string of bits. The float type is larger, allowing for more bits, which is the reason for the nonzero difference. By applying the threshold method in the solution, you can pretend that the tiny difference does not exist.

![]() Caution The solution in this recipe represents a conscious decision to disregard small differences in order to treat two values as being equal. Make that decision while keeping in mind the context of how the values are derived and of the problem being solved.

Caution The solution in this recipe represents a conscious decision to disregard small differences in order to treat two values as being equal. Make that decision while keeping in mind the context of how the values are derived and of the problem being solved.

11-11. Treating Nulls as Zeros

Problem

You are writing expressions with numeric values that might be null. You want to treat nulls as zero.

Solution

Invoke the COALESCE function to supply a value of zero in the event of a null. For example, the following query returns the MaxQty column from Sales.SpecialOffer. That column is nullable. COALESCE is used to supply a zero as an alternate value.

SELECT SpecialOfferID, MaxQty, COALESCE(MaxQty, 0) AS MaxQtyAlt

FROM Sales.SpecialOffer

The results are as follows:

SpecialOfferID MaxQty MaxQtyAlt

-------------- ------- -----------

1 NULL 0

2 14 14

3 24 24

4 40 40

5 60 60

6 NULL 0

7 NULL 0

...

How It Works

COALESCE is an ISO standard function taking as input any number of values. It returns the first non-null value in the list. The solution example invokes COALESCE to return a zero in the event MaxQty is null.

SQL Server also implements an ISNULL function, which is propriety and takes only two arguments but otherwise is similar to COALESCE in that it returns the first non-null value in the list. You can implement the solution example using ISNULL as follows and get the same results:

SELECT SpecialOfferID, MaxQty, ISNULL(MaxQty, 0) AS MaxQtyAlt

FROM Sales.SpecialOffer

It’s generally good practice to avoid invoking COALESCE and ISNULL within a WHERE clause predicate. Applying functions to a column mentioned in a WHERE clause can inhibit the use of an index on the column. Here’s an example of what we try to avoid:

SELECT SpecialOfferID

FROM Sales.SpecialOffer

WHERE COALESCE(MaxQty,0) = 0

In a case like this, we prefer to write an IS NULL predicate as follows:

SELECT SpecialOfferID

FROM Sales.SpecialOffer

WHERE MaxQty = 0 OR MaxQty IS NULL

We believe the IS NULL approach preserves the greatest amount of flexibility for the optimizer.

11-12. Generating a Row Set of Sequential Numbers

Problem

You need to generate a row set with an arbitrary number of rows. For example, you want to generate one row per day in the year so that you can join to another table that might be missing rows for some the days, so as to ultimately create a row set having one row per day.

Solution

Many row-generator queries are possible. The following is one solution I particularly like. It is a variation on a technique introduced to me by database expert Vladimir Przyjalkowski in 2004. It returns rows in power-of-ten increments controlled by the number of joins that you write in the outer query’s FROM clause. This particular example returns 10,000 rows numbered from 0 to 9999.

WITH ones AS (

SELECT *

FROM (VALUES (0), (1), (2), (3), (4),

(5), (6), (7), (8), (9)) AS numbers(x)

)

SELECT 1000*o1000.x + 100*o100.x + 10*o10.x + o1.x x

FROM ones o1, ones o10, ones o100, ones o1000

ORDER BY x

The results are as follows:

x

----

0

1

2

3

...

9997

9998

9999

If you like, you can restrict the number of rows returned by wrapping the main query inside of an enclosing query that restricts the results. Be sure to keep the WITH clause first. And specify an alias for the new, enclosing query. The following example specifies n as the alias:

WITH ones AS (

SELECT *

FROM (VALUES (0), (1), (2), (3), (4),

(5), (6), (7), (8), (9)) AS numbers(x)

)

SELECT n.x FROM (

SELECT 1000*o1000.x + 100*o100.x + 10*o10.x + o1.x x

FROM ones o1, ones o10, ones o100, ones o1000

) n

WHERE n.x < 5000

ORDER BY x

This version returns 5,000 rows numbered from 0 through 4999.

How It Works

Row sets of sequential numbers are handy for data densification. Data densification refers to the filling in of missing rows, such as in time series data. Imagine, for example, that you want to generate a report showing how many employees were hired on each day of the year. A quick test of the data shows that hire dates are sparse—there are only a few days in a given year on which employees have been hired. Here’s an example:

SELECT DISTINCT HireDate

FROM HumanResources.Employee

WHERE HireDate >= '2006-01-01'

AND HireDate < '2007-01-01'

ORDER BY HireDate

The results indicate that hires occur sparsely throughout the year:

HireDate

----------

2006-05-18

2006-07-01

2006-11-01

Using the solution query, you can create a sequence table to use in densifying the data so as to return one row per day, regardless of number of hires. Begin by creating a 1,000-row table using a form of the solution query:

WITH ones AS (

SELECT *

FROM (VALUES (0), (1), (2), (3), (4),

(5), (6), (7), (8), (9)) AS numbers(x)

)

SELECT 100*o100.x + 10*o10.x + o1.x x

INTO SeqNum

FROM ones o1, ones o10, ones o100

Now it’s possible to join against SeqNum and use that table as the basis for generating one row per day in the year. Here’s an example:

SELECT DATEADD(day, x, '2006-01-01'), HireDate

FROM SeqNum LEFT OUTER JOIN HumanResources.Employee

ON DATEADD(day, x, '2006-01-01') = HireDate

WHERE x < DATEDIFF (day, '2006-01-01', '2007-01-01')

ORDER BY x

The results are as follows. The HireDate column is non-null for days on which a hire was made.

HireDate

----------------------- ----------

2006-01-01 00:00:00.000 NULL

2006-01-02 00:00:00.000 NULL

...

2006-05-17 00:00:00.000 NULL

2006-05-18 00:00:00.000 2006-05-18

2006-05-19 00:00:00.000 NULL

...

2006-12-29 00:00:00.000 NULL

2006-12-30 00:00:00.000 NULL

2006-12-31 00:00:00.000 NULL

Add a simple GROUP BY operation to count the hires per date, and we’re done! Here’s the final query:

SELECT DATEADD(day, x, '2006-01-01'), COUNT(HireDate)

FROM SeqNum LEFT OUTER JOIN HumanResources.Employee

ON DATEADD(day, x, '2006-01-01') = HireDate

WHERE x < DATEDIFF (day, '2006-01-01', '2007-01-01')

GROUP BY x

ORDER BY x

Results now show the number of hires per day. The following are results for the same days as in the previous output. This time, the count of hires is zero on all days having only null hire dates. The count is 1 on May 18, 2006, for the one person hired on that date.

----------------------- ----

2006-01-01 00:00:00.000 0

2006-01-02 00:00:00.000 0

...

2006-05-17 00:00:00.000 0

2006-05-18 00:00:00.000 1

2006-05-19 00:00:00.000 0

...

2006-12-29 00:00:00.000 0

2006-12-30 00:00:00.000 0

2006-12-31 00:00:00.000 0

You’ll receive a warning message upon executing the final query. The message is nothing to worry about. It reads as follows:

Warning: Null value is eliminated by an aggregate or other SET operation.

This message simply indicates that the COUNT function was fed null values. And indeed that is the case. Null hire dates were fed into the COUNT function. Those nulls were ignored and not counted, which is precisely the behavior one wants in this case.

11-13. Generating Random Integers in a Row Set

Problem

You want each row returned by a query to include a random integer value. You further want to specify the range within which those random values will fall. For example, you want to generate a random number between 900 and 1,000 for each product.

Solution

Invoke the built-in RAND() function as shown in the following example:

DECLARE @rmin int, @rmax int;

SET @rmin = 900;

SET @rmax = 1000;

SELECT Name,

CAST(RAND(CHECKSUM(NEWID())) * (@rmax-@rmin) AS INT) + @rmin

FROM Production.Product;

You’ll get results as follows, except that your random numbers might be different from mine:

Name

---------------------- ----

Adjustable Race 939

All-Purpose Bike Stand 916

AWC Logo Cap 914

BB Ball Bearing 992

Bearing Ball 975

How It Works

RAND() returns a random float value between 0 exclusive and 1 exclusive. RAND() accepts a seed parameter, and any given seed will generate the same result. These are two characteristics you must keep in mind and compensate for as you use the function.

The following is the simplest possible invocation of RAND() in a query against Production.Product. The resulting “random” number is not very random at all. SQL Server treats the function as deterministic because of the lack of a parameter, invokes the function just one time, and applies the result of that invocation to all rows returned by the query.

SELECT Name, RAND()

FROM Production.Product;

Name

----------------------- -----------------

Adjustable Race 0.472241415009636

All-Purpose Bike Stand 0.472241415009636

AWC Logo Cap 0.472241415009636

BB Ball Bearing 0.472241415009636

Bearing Ball 0.472241415009636

What’s needed is a seed value that changes for each row. A common and useful approach is to base the seed value on a call to NEWID(). NEWID() returns a value in a type not passable to RAND(). You can work around that problem by invoking CHECKSUM() on the NEWID() value to generate an integer value acceptable as a seed. Here’s an example:

SELECT Name, RAND(CHECKSUM(NEWID()))

FROM Production.Product;

Name

---------------------- ------------------

Adjustable Race 0.943863936349248

All-Purpose Bike Stand 0.562297100626295

AWC Logo Cap 0.459806720686023

BB Ball Bearing 0.328415563433923

Bearing Ball 0.859439320073147

The NEWID() function generates a globally unique identifier. Because the result must be globally unique, no two invocations of NEWID() will return the same result. The function is therefore not deterministic, and the database engine thus invokes the RAND(CHECKSUM(NEWID())) expression anew for each row.

Now comes some math. It’s necessary to shift the random values from their just-greater-than-zero to less-than-one range into the range, in this case, of 900 to 1000. Begin by multiplying the result from RAND() by the magnitude of the range. Do that by multiplying the random values by 100, which is the difference between the upper and lower bounds of the range. Here’s an example:

DECLARE @rmin int, @rmax int;

SET @rmin = 900;

SET @rmax = 1000;

SELECT Name,

RAND(CHECKSUM(NEWID())) * (@rmax-@rmin)

FROM Production.Product;

Name

---------------------- -----------------

Adjustable Race 12.5043506882683

All-Purpose Bike Stand 46.3611080374763

AWC Logo Cap 17.1908607269767

BB Ball Bearing 89.5318634996859

Bearing Ball 50.74511276104

...

Next is to shift the spread of values so that they appear in the desired range. Do that by adding the minimum value as shown in the following query and its output. The result is a set of random values beginning at just above 900 and going to just less than 1000.

DECLARE @rmin int, @rmax int;

SET @rmin = 900;

SET @rmax = 1000;

SELECT Name,

RAND(CHECKSUM(NEWID())) * (@rmax-@rmin) + @rmin

FROM Production.Product;

Name

---------------------- ----------------

Adjustable Race 946.885865947398

All-Purpose Bike Stand 957.087533428096

AWC Logo Cap 924.321027483594

BB Ball Bearing 988.996724323006

Bearing Ball 943.797723186947

11-14. Reducing Space Used by Decimal Storage

Problem

You have very large tables with a great many decimal columns holding values notably smaller than their precisions allow. You want to reduce the amount of space to better reflect the actual values stored rather than the possible maximums.

![]() Note The solution described in this recipe is available only in the Enterprise Edition of SQL Server.

Note The solution described in this recipe is available only in the Enterprise Edition of SQL Server.

Solution

Enable vardecimal storage for your database. Do that by invoking sp_db_vardecimal_storage_format as follows:

EXEC sp_db_vardecimal_storage_format 'AdventureWorks2012', 'ON'

Then estimate the amount of space to be saved per table. For example, issue the following call to sp_estimated_rowsize_reduction_for_vardecimal to determine the average row length before and after vardecimal is enabled on the Production.BillOfMaterials table:

EXEC sys.sp_estimated_rowsize_reduction_for_vardecimal 'Production.BillOfMaterials'

Your results should be similar to the following:

avg_rowlen_fixed_format avg_rowlen_vardecimal_format row_count

---------------------- ---------------------------- ---------

57.00 56.00 2679

A one-byte-per-row savings is hardly worth pursuing. However, pursue it anyway by enabling vardecimal storage on the table:

sp_tableoption 'Production.BillOfMaterials', 'vardecimal storage format', 1

Be aware that converting to vardecimal is an offline operation. Be sure you can afford to take the table offline for the duration of the process.

How It Works

By switching on vardecimal storage for a table, you allow the engine to treat decimal values as variable length in much the same manner as variable-length strings, trading an increase in CPU time for a reduction in storage from not having to store unused bytes. You enable the use of the option at the database level. Then you can apply the option on a table-by-table basis.

While the vardecimal option sounds great on the surface, we recommend some caution. Make sure that the amount of disk space saved makes it really worth the trouble of enabling the option. Remember that there is a CPU trade-off. The example enables the option for a 2,679-row table and would save about one byte per row on average. Such a savings is fine for a book example but hardly worth pursing in real life. Go for a big win, or don’t play at all.

![]() Note You’ll find a detailed and useful discussion of the vardecimal option at http://msdn.microsoft.com/en-us/library/bb508963(v=SQL.90).aspx.

Note You’ll find a detailed and useful discussion of the vardecimal option at http://msdn.microsoft.com/en-us/library/bb508963(v=SQL.90).aspx.

You can generate a list of databases on your server showing for which ones vardecimal is enabled. Issue the following command to do that:

EXEC sp_db_vardecimal_storage_format

Your results should resemble the following. The Database Name values may be displayed extremely wide in Management Studio. You may need to scroll left and right to see the Vardecimal State values. I’ve elided much of the space between the columns in this output for the sake of readability.

Database Name Vardecimal State

-------------------- ----------------

master OFF

tempdb OFF

model OFF

msdb OFF

ReportServer ON

ReportServerTempDB ON

AdventureWorks2008R2 ON

Similarly, you can issue the following query to generate a list of tables within a database for which the option is enabled. (Increase the VARCHAR size in the CAST if your table or schema names combine to be longer than 40 characters.)

SELECT CAST(ss.name + '.' + so.name AS VARCHAR(40)) AS 'Table Name',

CASE objectproperty(so.object_id, N'TableHasVarDecimalStorageFormat')

WHEN 1 then 'ON' ELSE 'OFF'

END AS 'Vardecimal State'

FROM sys.objects so JOIN sys.schemas ss

ON so.schema_id = ss.schema_id

WHERE so.type_desc = 'USER_TABLE'

ORDER BY ss.name, so.name

Your results should be similar to the following:

Table Name Vardecimal State

-------------------------- ----------------

dbo.AWBuildVersion OFF

dbo.DatabaseLog OFF

dbo.ErrorLog OFF

...

Production.BillOfMaterials ON

...

To disable vardecimal storage on a table, invoke the sp_tableoption procedure with a third parameter of 0 rather than 1. Disable the option at the database level by first disabling it for all tables and then by executing sp_db_vardecimal_storage_format with a second parameter of 'OFF'.