Chapter 6. Engineering Component Systems

Tidiness is next to Godliness, or so goes the mantra of the obsessive-compulsive. The partially decentralized society model of cooperation proves to be an important pattern when designing cooperative systems, but it leads to apparent complexity, too. An obvious way around this is to find a middle ground by dividing a system up into specialized components.

During the 1800s, Western culture became particularly obsessed with the need to categorize things and separate them tidily into boxes. One reason might be that we cannot hold many things in our minds because of our limited Dunbar slots. Whatever the reason, our cultural aesthetic is for putting things tidily into boxes, and arranging shelves neatly.

Meanwhile, jungles and ecosystems stubbornly refuse to be patterned in this oversimplistic way. Nature selected tangled webs as its strategy for stable survival, not carefully organized rows and columns, or taxonomic trees of partitioned species. Those organizational structures are all human designs.

There are plenty of reasons why tidiness would not be a successful strategy for keeping promises. The complexity of bonding and maintaining an interactive relationship across boundaries is the understated price we pay for a tidy separation of concerns, when pieces cannot really do without one another.

Yet, building things from replicable patterns (like cells) is exactly what nature does. So the idea of making components with different functional categories is not foreign to nature. Where does the balance lie? Let’s see what light promises can shed on this matter.

Reasoning with Cause

Engineering is based on a model of cause and effect. If we can identify a property or phenomenon X, such that it reliably implies phenomenon Y under certain circumstances, then we can use it to keep promises that make a functional world. Indeed, if we can promise these properties, then we can engineer with some certainty.

For example, if an agent promises to use a service X in order to keep a promise of its own Y, then we could infer that promise Y is fragile to the loss of service X. These are chains of prerequisite dependence (see Figure 6-1).
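To make prerequisite chains concrete, here is a minimal Python sketch that finds every promise put at risk when one service disappears. It assumes, conservatively, that a promise is lost if any one of its prerequisites is lost; the promise names X, Y, W, V, and Z are placeholders, not part of any real system.

```python
from collections import deque

# Hypothetical promise graph: each promise maps to the services it relies on.
DEPENDS_ON: dict[str, set[str]] = {
    "Y": {"X"},   # Y is kept by using service X
    "W": {"Y"},   # W in turn relies on Y
    "V": {"Z"},   # an unrelated chain
}


def fragile_to(lost: str, depends_on: dict[str, set[str]]) -> set[str]:
    """Return every promise that can no longer be kept if `lost` goes away.

    Assumes each promise needs *all* of its prerequisites, and follows the
    chain of prerequisite dependence transitively.
    """
    # Invert the graph: prerequisite -> promises that rely on it.
    dependents: dict[str, set[str]] = {}
    for promise, prereqs in depends_on.items():
        for prereq in prereqs:
            dependents.setdefault(prereq, set()).add(promise)

    # Breadth-first walk outwards from the lost service.
    broken: set[str] = set()
    queue = deque([lost])
    while queue:
        current = queue.popleft()
        for promise in dependents.get(current, set()):
            if promise not in broken:
                broken.add(promise)
                queue.append(promise)
    return broken


print(sorted(fragile_to("X", DEPENDS_ON)))  # ['W', 'Y']
```

Every extra link in the chain widens the set of promises that a single loss can take down, which is the house-of-cards effect described in Figure 6-1.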

Figure 6-1. Reasoning leads to branching processes that fan out into many possibilities and decrease certainty. The result can be as fragile as a house of cards.

Philosophers get into a muddle over what causation means, but engineers are pragmatic. We care less about whether we can argue precisely what caused an effect than we care about whether there is a possibility that an effect we rely on might go away. We manage such concerns by thinking about arranging key promises to dominate outcomes, in isolation from interfering effects. This is not quite the same thing as the “separation of concerns” often discussed in IT. Ironically, this usually means that if we need to couple systems together, we make as many of those couplings as possible weak, to minimize the channels of causal influence.

Componentization: Divide and Build!

The design of functional systems from components is a natural topic to consider from the viewpoint of autonomous agents and their promises. How will we break down a problem into components, and how do we put together new and unplanned systems from a preexisting set of parts? Indeed, the concept of autonomous agents exists for precisely this purpose.

Decomposing systems into components is not necessary for systems to work (an alternative with similar cost-benefit is the use of repeatable patterns during construction of a singular entity), but it is often desirable for economic reasons. A component design is really a commoditization of a reusable pattern, and the economics of component design are the same as the economics of mass production.

The electronics industry is a straightforward and well-known example of component design and use. There we have seen how the first components—resistors, inductors, capacitors, and transistors—were manufactured as ranges of separate atomic entities, each making a variety of promises about its properties. Later, as miniaturization took hold, many of these were packaged into chips that were more convenient, but less atomic, components. This has further led to an evolution of the way in which devices are manufactured and repaired. It is more common now to replace an entire integrated unit than to solder in a new capacitor, for example.

An analogy with medicine would be to favour transplanting whole organs rather than repairing existing ones.

What Do We Mean by Components?

Being more formal about what we mean by promises allows us to understand other definitions that build on them. Components are a good example of how to use the agent concept flexibly to reason about designs.

A component is an entity that behaves like an agent and makes a number of promises to other agents, within the context of a system. It could be represented as a bundle of promises emanating from a localized place. Components play a role within the scope of a larger system. For weakly coupled systems, components can be called standalone.

A standalone or independent component is an agent that makes only unconditional promises. For example, a candle stands alone, but is part of a room. In strongly coupled systems or subsystems, components are integral parts, embedded in a larger whole. A dependent (or embedded) component is an agent that makes one or more conditional promises, such as flour or sugar in a cake (see Figure 6-2).
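These two definitions can be sketched as a simple classifier: a component is standalone if every promise it makes is unconditional, and dependent as soon as one promise carries a condition. The `Promise` and `Component` classes, and the conditions attached to flour, are hypothetical modelling choices for illustration only.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Promise:
    body: str                                 # what is promised, e.g. "light"
    conditions: frozenset[str] = frozenset()  # what must be supplied first, if anything

    @property
    def conditional(self) -> bool:
        return bool(self.conditions)


@dataclass
class Component:
    name: str
    promises: list[Promise] = field(default_factory=list)

    def kind(self) -> str:
        """'standalone' if every promise is unconditional, else 'dependent'."""
        if any(p.conditional for p in self.promises):
            return "dependent"
        return "standalone"


candle = Component("candle", [Promise("light"), Promise("warmth")])
flour = Component("flour", [Promise("cake structure", frozenset({"water", "baking"}))])

print(candle.kind())  # standalone
print(flour.kind())   # dependent
```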

Figure 6-2. Components can be standalone, without dependencies, or dependent.

What Systemic Promises Should Components Keep?

In addition to the functional promises that components try to keep, there are certain issues of design that would tend to make us call an agent a component of a larger system. Typical promises that components would be designed to keep could include:

-

To be replaceable or upgradable

-

To be reusable, interchangeable, or compatible

-

To behave predictably

-

To be (maximally) self-contained, or avoid depending on other components to keep their promises

When an object is a component, it has become separated from the source of its intent. It is another brick in the wall.

Can Agents Themselves Have Components? (Superagents)

What about the internal structure of the agents that make promises? Can they have components? The answer depends on the level of detail to which we want to decompose an object and talk about its promises. Clearly, components can have as much private, internal structure as we like; that is a matter of design choice.

For example, we might consider a cake to be a component in a supermarket inventory. This makes certain promises like chocolate flavour, nut-free, low calorie, and so on. The cake has a list of ingredients or components too, like flour and sugar. The flour might promise to be whole grain, and the sugar might promise to be brown sugar. The definition of agents is a modelling choice.

When we aggregate agents into a new compound agency, I’ll use the term superagent.
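A minimal sketch of a superagent, using the cake example: the cake makes its own exterior promises, while looking one level inside reveals the promises of its ingredients. How deep we choose to look is the modelling choice mentioned above; the class layout and the method names are assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    name: str
    promises: set[str]                                     # promises made at this level
    parts: list["Agent"] = field(default_factory=list)     # non-empty for a superagent

    def is_superagent(self) -> bool:
        return bool(self.parts)

    def promises_at_depth(self, depth: int) -> set[str]:
        """Promises seen when looking `depth` levels inside this agent."""
        if depth == 0 or not self.parts:
            return set(self.promises)
        seen: set[str] = set()
        for part in self.parts:
            seen |= part.promises_at_depth(depth - 1)
        return seen


flour = Agent("flour", {"whole grain"})
sugar = Agent("sugar", {"brown sugar"})
cake = Agent("cake", {"chocolate flavour", "nut-free", "low calorie"}, [flour, sugar])

print(cake.is_superagent())               # True
print(sorted(cake.promises_at_depth(1)))  # ['brown sugar', 'whole grain']
```

The supermarket only needs depth 0; a baker, or someone with an allergy, may need to look deeper.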

Component Design and Roles

One simple structure for promise patterns is to map components onto roles. A component is simply a collection of one or more agents that forms a role, either by association or cooperation. Once embedded within a larger system, these roles can become appointed too, through the binding relationships with users and providers. Table 6-1 illustrates some example components and their roles in some common systems.

| System | Component | Role |
|---|---|---|
| Television | Integrated circuit | Amplifier |
| Pharmacy | Pill | Sedative |
| Patient | Intravenous drug | Antibiotic |
| Vertebrate | Bone | Skeleton |
| Cart | Wheel | Mobility enabler |
| Playlist | Song by artist | Soft jazz interlude |

Components Need to Be Assembled

A collection of components does not automatically become a system, unless perhaps it possesses swarm intelligence, like a flock of birds. Anyone who has been to IKEA knows that tables and chairs do not spontaneously self-assemble.

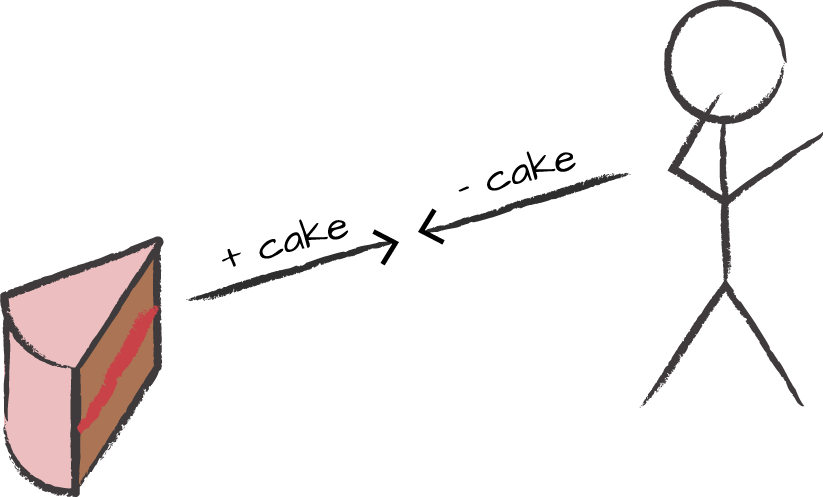

To complete a system composed of components, agents need to bind their matching promises together. Think of flat-packed furniture from IKEA, and you find clips, parts that plug into receptors, or screws that meet holes. These are effectively (+) and (-) promises made by the parts that say “I will bind to this kind of receptor” (see Figure 6-3).

Figure 6-3. Promises that give something (+), and promises that accept something (-), match in order to form a binding, much like a jigsaw puzzle.
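The matching of (+) and (-) promises can be sketched in a few lines of Python. This assumes, for simplicity, that a binding forms whenever a (+) offer and a (-) use-promise name exactly the same kind of thing and come from different agents; the furniture parts are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Offer:   # a (+) promise: "I give this kind of thing"
    agent: str
    kind: str


@dataclass(frozen=True)
class Use:     # a (-) promise: "I accept this kind of thing"
    agent: str
    kind: str


def bindings(offers: list[Offer], uses: list[Use]) -> list[tuple[str, str, str]]:
    """Pair each (+) promise with each (-) promise of the same kind from another agent."""
    return [
        (o.agent, u.agent, o.kind)
        for o in offers
        for u in uses
        if o.kind == u.kind and o.agent != u.agent
    ]


offers = [Offer("screw", "M6 thread"), Offer("dowel", "8mm peg")]
uses = [Use("table leg", "M6 thread"), Use("table top", "8mm peg"), Use("shelf", "clip")]

print(bindings(offers, uses))
# [('screw', 'table leg', 'M6 thread'), ('dowel', 'table top', '8mm peg')]
```

The shelf's use-promise for a clip finds no matching offer, so no binding forms; like the flat-packed furniture, the parts do not assemble themselves.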

In biology, there is the concept of receptors, too. Molecular receptors on cells (MHC) identify cells’ signatures to proteins that would bind to them. Viruses that have the proper parts to plug into these receptors can subvert the cell’s normal behaviour. Humans, making a semantic assessment, view this as a sickness because the cell is not behaving according to what they consider to be its intended purpose. Of course, evolution is not in the business of intent; it merely makes things that survive. We humans, on the other hand, project the hand of intent into everything.

The relationships formed between components need to be stable if the larger system is to remain stable.

Fragile Promises in Component Design

Building an appliance on components that make exclusive promises (i.e., promises that can be kept for only a limited number of users) is a fragile strategy. Designing to avoid such conflicts is a good idea; however, one often has incomplete information about how components will be used, which makes conflicts impossible to predict. This is one of the flaws in top-down design.

Some components contend for their dependencies. Components that make conditional promises, where the condition must be satisfied by only one exclusive promise from another component, may be called contentious or competitive. Multiple agents contend with one another to satisfy the condition, but only one agent can do so. For example, you can only plug one appliance into a power socket at a time. Only one patient can swallow a given pill, so a pill is a contentious agent that promises relief if taken.
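An exclusive promise can be sketched as a resource that binds to at most one consumer at a time; any other consumer whose promise is conditional on it must wait. The `ExclusivePromise` class and its method names are hypothetical, chosen only to illustrate contention.

```python
from typing import Optional


class ExclusivePromise:
    """A (+) promise that can be bound to only one consumer at a time."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.holder: Optional[str] = None   # the consumer currently bound, if any

    def bind(self, consumer: str) -> bool:
        """Try to satisfy a consumer's condition; False signals contention."""
        if self.holder is None:
            self.holder = consumer
            return True
        return False

    def release(self, consumer: str) -> None:
        """Only the current holder can release the binding."""
        if self.holder == consumer:
            self.holder = None


socket = ExclusivePromise("power socket")
print(socket.bind("kettle"))   # True
print(socket.bind("toaster"))  # False: the toaster's conditional promise cannot be kept yet
socket.release("kettle")
print(socket.bind("toaster"))  # True
```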

Designing with contentious components can sometimes save on costs, but can lead to bottlenecks and resource issues in continuous system operation. Contentious promises are therefore fragile.

-

For example, an engine that promises to run only if it gets a special kind of fuel would be risky. What if the fuel becomes scarce (i.e., it cannot be promised)?

-

Multiple electrical appliances connected to the same power line contend for the promise of electrical power. If the maximum rating is a promise of, say, 10 amperes, multiple appliances requiring a high current could easily exceed this.

-

A similar example can be made with traffic. Cars could be called contentious components of traffic, as they contend for the limited road and petrol/gas pumps at fueling stations.

-

Software applications running on a shared database or server have to contend for availability and capacity.
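The power-line example above amounts to a simple capacity check on a shared promise. The sketch assumes each appliance draws a fixed current in amperes; the appliance figures are invented for illustration.

```python
RATED_CURRENT_A = 10.0  # the power line's promise, e.g. 10 amperes


def overloaded(demands_a: dict[str, float], rating_a: float = RATED_CURRENT_A) -> bool:
    """True if the combined demand of the contending appliances exceeds the promised rating."""
    return sum(demands_a.values()) > rating_a


def headroom(demands_a: dict[str, float], rating_a: float = RATED_CURRENT_A) -> float:
    """Current still available to further contenders (negative when overloaded)."""
    return rating_a - sum(demands_a.values())


print(overloaded({"lamp": 0.3, "television": 0.5}))  # False
print(overloaded({"kettle": 8.7, "toaster": 4.2}))   # True
```

Each appliance's promise is fine on its own; the failure only appears when contenders are added together, which is why the designer of an isolated component cannot see it.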

The examples reveal the effect of imperfect information during component design. The designer of an appliance cannot know the availability of dependencies when designing an isolated component, which raises the question: should one try to design components without the context of their use? The alternative is to allow components to emerge through experience, and abstract them out of their contexts by observing a common pattern.

We usually make risky choices to minimize costs, given the probability of needing to deliver on a promise of a resource. The risk comes from the fact that contention between promises is closely related to single points of failure in coordination and calibration. Contention leads to fragility.

Reusability of Components

If we are able to partition systems into components that make well-understood promises, then it’s natural to suppose that some of these promises will be useful in more than one scenario. Then we can imagine making components of general utility—reusable components.

Reusability is about being able to take a component that promises to work in one scenario and use it in another. We achieve this when we take an agent and insert it into another system of agents, whose use-promises match our component’s promises.

Any agent or agency can be reusable, so don’t think just about electronic components, flour and sugar, and so on. Think also about organizations, accounts departments, human beings that promise skills, and even movies. For instance, a knife is a component that can be used in many different scenarios for cutting. It doesn’t matter if it is used to cut bread or cheese, or to perform surgery. Electrical power offers a promise that can be used by a large number of other components, so we may call that reusable. Reusability means that a component makes a promise that can be accepted by and bind to many other agents’ use-promises (see Figure 6-4).

Figure 6-4. Reusability of a power adapter as a component that makes promises to share electric current from a plug to a number of sockets.

Formally, a component cannot be intrinsically reusable, but it can be reusable relative to some other components within its field of use. Then we can say that a component is reusable if its promised properties meet or exceed the use-promises (requirements) of every environment in which it is needed.
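The relative definition of reusability can be sketched as a check of a component's promised properties against the use-promises of each environment. This assumes every property is numeric and that higher is better (so "meet or exceed" is a `>=` comparison); the adapter and environment figures are hypothetical.

```python
def meets(promised: dict[str, float], required: dict[str, float]) -> bool:
    """Every required property is promised at or above the required level."""
    return all(key in promised and promised[key] >= level for key, level in required.items())


def reusable(promised: dict[str, float], environments: dict[str, dict[str, float]]) -> bool:
    """Reusable relative to these environments only, never intrinsically."""
    return all(meets(promised, required) for required in environments.values())


adapter = {"outlets": 4, "max_current_a": 13}

home_office = {
    "desk lamp": {"outlets": 1, "max_current_a": 0.5},
    "laptop and monitor": {"outlets": 2, "max_current_a": 3},
}
workshop = {**home_office, "space heater": {"outlets": 1, "max_current_a": 15}}

print(reusable(adapter, home_office))  # True
print(reusable(adapter, workshop))     # False: the heater's use-promise is not met
```

The same adapter is reusable in one field of use and not in another, which is the sense in which reusability is relative rather than intrinsic.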

Interchangeability of Components

Two components might be called interchangeable, relative to an observer, if every promise made by the first component to the observer is also made identically by the second, and vice versa.
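Because interchangeability is defined relative to an observer, it can be sketched as an equality test on the promises each component makes to that particular observer. The knife data, including the "kitchen porter" observer, is hypothetical and only loosely follows the restaurant example below.

```python
PromisesByObserver = dict[str, frozenset[str]]


def interchangeable(a: PromisesByObserver, b: PromisesByObserver, observer: str) -> bool:
    """Same promises to this observer, no more and no less."""
    return a.get(observer, frozenset()) == b.get(observer, frozenset())


wooden_handled = {
    "diner": frozenset({"sharp edge", "cuts steak"}),
    "kitchen porter": frozenset({"hand wash only"}),
}
plastic_handled = {
    "diner": frozenset({"sharp edge", "cuts steak"}),
    "kitchen porter": frozenset({"dishwasher safe"}),
}

print(interchangeable(wooden_handled, plastic_handled, "diner"))           # True
print(interchangeable(wooden_handled, plastic_handled, "kitchen porter"))  # False
```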

When components are changed, or revised, the local properties of the resulting system could change. This might further affect the promises of the entire system, as perceived by a user, assuming that the promise bindings allow it. In continuous delivery systems, changes can be made at any time as long as the semantics are constant.

If the semantics of the components change, either the total composite system has to change its promises, or a total revision of the design might be needed to maintain the same promises of the total system. Interchangeable components promise that this will not happen (see Figure 6-5).

Figure 6-5. Interchangeability means that components have to make exactly the same promises. These two power strips are not interchangeable.

A knife can be interchanged with an equivalent new knife in a restaurant without changing the function, even if one has a wooden handle and the other a plastic one. A family pet probably can’t be interchanged with another family pet without changing the total family system.

Compatibility of Components

Compatibility of components is a weaker condition than interchangeability. Two components may be called compatible if one forms the same set of promise bindings with an agent as the second, but the details of the promises might not be identical in every aspect. A component might simply be “good enough” to be fit for the purpose.

Compatibility means that all the essential promises are in place. If components are compatible, it is possible for them to bind to the same contexts, even if the precise promises are not maintained at the same levels (see Figure 6-6).

Figure 6-6. Compatibility means that a replacement will do the job without all the promises being identical. This is up to the user of the promises to judge.

British power plugs are not compatible with the power sockets in America or the rest of Europe because they do not make complementary promises. Similarly, British and American power plugs are not interchangeable with one another. British power plugs are somewhat over-engineered for robustness and safety, with individual fuses to isolate damaged equipment.

Conversely, there might be cases where it doesn’t matter which of a number of alternative components is chosen—the promises made are unspecific and the minor differences in how promises are kept do not affect a larger outcome. In other scenarios, it could matter explicitly. Imagine two brands of chocolate cake at the supermarket. These offerings can be considered components in a larger network of promises. How do we select or design these components so that other promises can be kept?

-

Doesn’t matter: You would normally choose a cheap brand of cake, but it is temporarily unavailable, so you buy the more expensive premium version.

-

Matters: One of the cakes contains nuts that you are allergic to, so it does not fulfill your needs.

If the promise made by the cake is too unspecific for its consumer, there can be a mismatch between expectation and service.
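The cake scenarios can be sketched as a compatibility judgement made by the user: each essential promise must be present, and only the details the user pins down must match exactly. The promise keys and the convention that `None` means "any detail will do" are assumptions for illustration.

```python
from typing import Optional

Promises = dict[str, str]
Needs = dict[str, Optional[str]]  # None means "any detail will do"


def compatible(candidate: Promises, needs: Needs) -> bool:
    """The user's judgement: every essential promise is made, and pinned details match."""
    for kind, detail in needs.items():
        if kind not in candidate:
            return False
        if detail is not None and candidate[kind] != detail:
            return False
    return True


budget = {"flavour": "chocolate", "nuts": "none", "price": "low"}
premium = {"flavour": "chocolate", "nuts": "none", "price": "high"}
hazelnut = {"flavour": "chocolate", "nuts": "hazelnut", "price": "low"}

my_needs: Needs = {"flavour": "chocolate", "nuts": "none", "price": None}

print(budget == premium)               # False: not interchangeable
print(compatible(premium, my_needs))   # True: doesn't matter, it will do
print(compatible(hazelnut, my_needs))  # False: matters, because of the nut allergy
```

A vaguer set of needs (for example, pinning only the flavour) would accept the hazelnut cake too, which is the mismatch between expectation and service described above.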

Backward Compatibility

Replacement components are often said to require backward compatibility, meaning that a replacement component will not undermine the promises made by an existing system. In fact, if one wants status quo, the correct term should be interchangeability, as a new component might be compatible but still not function in the same way.

When repairing devices, we are tempted to replace components with new ones that are almost the same, but this can lead to problems, even if the new components are better in relation to their own promises. For example, the promise of increased power in a new engine for a plane might lead to an imbalance in the engines, making handling difficult. Similarly, changing one tyre of a car might cause the car to pull in one direction.

Making the same kinds of promises as an older component is a necessary condition for interchangeability, but it is not sufficient to guarantee no change in the total design. We need identical promises, no more and no less, else there is the possibility that new promises introduced into a component will conflict with the total system in such a way as to be misaligned with the goals of the total design.

Diesel fuel makes many of the same promises as petrol or gasoline, but these fuels are not interchangeable in current engines. A new super-brand of fuel might promise better performance, but might wear out the engine more quickly. The insertion of new output transistors with better specification into a particular hi-fi amplifier actually improves the sound quality of the device. The transistors cannot be considered interchangeable, even though there is an improvement, because the promises they make are not the same, and the total system is affected. Now the older system is weaker and cannot be considered an adequate replacement for the new one.

Upgrading and Regression Testing of Components

When upgrading components that are not identical, a possibility is to design in a compatibility mode such that no new promises are added when they are used in this way. This allows component promises to remain interchangeable and expectations to be maintained. Downgrading can also be compatible but not interchangeable.

Figure 6-7. A regression test involves assessing whether component promises are still kept after changing the component. Does today’s piece of cake taste as good as yesterday’s piece?

A regression test is a trip wire or probe that makes a promise (-) to assess a service promise made by some software. The same promise is made to every agent promising a different version of the service promise. The ability to detect faults during testing depends on both what is promised (+) and what is used (-) by the test-agent.
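A regression test can be sketched as a fixed set of cases (the test agent's (-) promise) applied unchanged to every version of a service. The name-formatting service and its three versions are hypothetical. Note how version 3 passes even though its behaviour changed: the test agent never uses an empty first name, so it cannot detect that change.

```python
from typing import Callable

Service = Callable[[str, str], str]

# The hypothetical test agent's (-) promise: the cases it will assess.
CASES: list[tuple[tuple[str, str], str]] = [
    (("Ada", "Lovelace"), "Lovelace, Ada"),
    (("Alan", "Turing"), "Turing, Alan"),
]


def regression_test(service: Service, cases=CASES) -> list[tuple[str, str]]:
    """Apply the same assessment to any version; return inputs whose promise was broken."""
    return [args for args, expected in cases if service(*args) != expected]


def format_v1(first: str, last: str) -> str:
    return f"{last}, {first}"


def format_v2(first: str, last: str) -> str:
    return f"{last.upper()}, {first}"               # changes the promised output


def format_v3(first: str, last: str) -> str:
    return f"{last}, {first}" if first else last   # differs only on empty first names


for version in (format_v1, format_v2, format_v3):
    print(version.__name__, regression_test(version))
# format_v1 []
# format_v2 [('Ada', 'Lovelace'), ('Alan', 'Turing')]
# format_v3 []
```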

Designing Promises for a Market

Imagine we have a number of components that make similar promises (e.g., screws, power sockets, servers, and software products). If the promises are identical, then the components are identical, and it clearly doesn’t matter which component we use. However, if the promises are generic and vague, there is a high level of uncertainty in forming expectations about the behaviour of the components.

For example, suppose a car rental company promises us a vehicle. It could give us a small model, a large model, a new model, a clapped-out wreck, or even a motorbike. It could be German, Japanese, American, etc. Clearly the promise of “some kind of vehicle” is not sufficiently specific for a user to necessarily form useful expectations.

Similarly, in a restaurant, it says “meat and vegetables” on the menu. Does this mean a medium-rare beef steak with buttered carrots and green beans, or baked rat with fries?

The potential for a mismatch of expectation steers how components should design their interfaces for consumers and systems with similar promises. It is often desirable for an agent consuming promises to have a simple point of contact (sometimes called a frontend) that gives them a point of one-stop shopping. This has both advantages and disadvantages to the user, as interchangeability of the backend promises is not assured.

Law of the Lowest Common Denominator

If a promiser makes a lowest common denominator promise, it is easy for users of the promise to consume (i.e., to understand and accept), but the vagueness of the promise makes it harder to trust in a desired outcome.

For example, supermarkets often rebrand their goods with generic labels like mega-store beans or super-saver ice cream. The lack of choice makes it easy for the user to choose, but it also means that the user doesn’t know what she is getting. Often in these cases, the promise given is implicitly one of a minimum level of quality.

In computing, a service provider offers a “SQL database” service. There are many components that offer this service, with very different implementations. PostgreSQL, MySQL, MSSQL, Oracle, and several others all claim to promise an SQL database. However, the detailed promises are very different from one another so that the components are not interchangeable. Writing software using a common interface to these databases thus leads to using only the most basic common features.

The law of the lowest common denominator. When a promise-giving component makes a generic promise interface to multiple components, it is forced to promise the least common denominator or minimal common functionality. Thus, generic interfaces lead to low certainty of outcome.
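The law can be illustrated by treating each backend's promises as a set: a frontend that is generic over all of them can promise only their intersection. The engine names and feature sets below are invented and simplified; they do not describe PostgreSQL, MySQL, or any other real product.

```python
# Hypothetical, heavily simplified feature sets for three backends.
BACKENDS: dict[str, set[str]] = {
    "engine_a": {"select", "insert", "join", "window functions", "upsert"},
    "engine_b": {"select", "insert", "join", "upsert", "json columns"},
    "engine_c": {"select", "insert", "join", "window functions"},
}


def common_interface(backends: dict[str, set[str]]) -> set[str]:
    """A generic frontend can only promise what every backend promises."""
    if not backends:
        return set()
    return set.intersection(*backends.values())


def coverage(backends: dict[str, set[str]]) -> dict[str, float]:
    """Fraction of each backend's own promises that survive behind the generic interface."""
    common = common_interface(backends)
    return {name: len(common) / len(features) for name, features in backends.items()}


print(sorted(common_interface(BACKENDS)))  # ['insert', 'join', 'select']
```

Adding a fourth backend can only shrink (or preserve) the common interface, never grow it.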

The user of a promise is both the driver and the filter to decide what must be satisfied (i.e., the agent’s requirements). This is how we paraphrase the idea that “the customer is always right” in terms of promises. If the user promises to use any meat-and-vegetables food promise by a caterer, he will doubtless end up with something of rather low quality, but if he promises to use only “seared filet, salted, no pepper,” the provider will have to promise to deliver on that, else the user would not accept it to form a promise binding.

Imposing Requirements: False Expectations

When designing or productizing components for consumers, it’s much more common to use the language of imposing requirements. Use-promises or impositions about specifications play equivalent roles in setting user expectations for promised outcomes. Both create a sense of obligation.

The problem is clear. If we try to start with user expectations, there is no guarantee that we will be able to fulfill them by putting together available components and resources. Only the component agents know what they can promise.

Cooperation is much more likely if intent is not imposed on individuals, especially when they are humans. Starting with what has been promised makes a lot more sense when agents only promise their own capabilities. Thus, if one can engineer voluntary cooperation by incentive, it is easier to trust the outcome of the promise.

This lesson has been seen in many cooperative scenarios, for example, where one group “throws a job over the wall” to another (“Catch!”) and expects it to be taken care of (see Figure 6-8).1

Figure 6-8. Throwing impositions over the wall is unreliable. A promise handshake gives better assurances.

If one trades this interaction for a continuous promise relationship, trust grows on both sides.

Component Choices That You Can’t Go Back On

Selecting one particular component from a collection has long-term consequences for component use and design if one selects a noninterchangeable component. The differences that are specific to its promises then lead the system along a path that moves farther away from the alternatives because other promises will come to rely on this choice through dependencies.

Choosing to use a fuel type to power a car (e.g., using a car that makes a diesel rather than a petrol/gasoline promise) has irreversible consequences for further use of that component. Choosing the manufacturing material for an aircraft, or the building site for a hotel, has irreversible consequences for their future use. Another example is the side of the road that countries drive on. Historians believe that driving on the left-hand side goes back to ancient Rome, and that this was the norm until Napoleon created deliberate contention in Europe. Today, driving on the right is the dominant promise, but countries like the UK are locked into the opposite promise by the sheer cost of changing.2 A similar argument could be made about the use of metric and imperial measures across the world.3

Choosing to use one political party has few irreversible consequences, as it is unlikely that any other promises by the agent making the choice would depend on this choice.

The Art of Versioning

When we develop a tool or service, we release versions of these to the world. Now we have to ask, do version changes and upgrades keep the same promises as before? If not, is it the same product?

Consider Figure 6-9, which shows how a single promise agent can depend on a number of other agents. If the horizontal stacking represents components in a design, and vertical stacking indicates different versions of the same component, then each agent would be a replaceable and upgradable part.

Figure 6-9. A hierarchy of component dependencies.

In a system with multiple cross-dependencies, different versions of one component may depend on different versions of others. How may we be certain to keep consistent promises throughout a total system made of many such dependencies, given that any of the components can be reused multiple times, and may be interchanged for another during the course of a repair or upgrade? There is a growth of combinatorial complexity, which can lead to collapse by information overload as we exceed the Dunbar slots we have at our disposal.5

Consider the component arrangement in Figure 6-10, showing a component X that relies on another component Y, which in turn relies on component Z. The components exist in multiple versions, and the conditional promises made by X and Y specifically refer to these versions, as they must in order to be certain of what detailed promise is being kept.

Figure 6-10. A flat arrangement of interdependent components.

This situation is fragile if components Y and Z are altered in some way. There is no guarantee that X can continue to promise the same as before, thus its promise to its users is conditional on a given version of the components that it relies on, and each change to a component should be named differently to distinguish among these versions.

To avoid this problem, we can make a number of rules that guide the design of components.

If components promise dependency usage in a version-specific way, supporting components can coexist without contention. Promises by one component to use another can avoid being broken by referring to the specific versions that quench their promises-to-use. This avoids the risk of later invalidation of the promises by the component as the dependency versions evolve.

Non-version-specific promises are simpler to make and maintain, but they do not live in their own branch of reality, and thus they cannot simultaneously coexist. This is why multiple versions must be handled such that they can coexist without confusion or conflict. This is the many worlds issue.
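A minimal sketch of version-specific dependency promises, loosely following Figure 6-10: keying the store by (name, version) lets two versions of Y coexist, so a component pinned to the old version is not invalidated when a new one appears. For brevity, X's pins list the Z that Y relies on directly. The store, the `install` and `resolve` helpers, and the version strings are all hypothetical.

```python
# Versioned store: (name, version) -> description of the promises kept.
store: dict[tuple[str, str], str] = {}


def install(name: str, version: str, promises: str) -> None:
    store[(name, version)] = promises   # different versions get different keys


def resolve(pins: dict[str, str]) -> dict[str, str]:
    """Look up each version-pinned dependency; fail loudly rather than substitute."""
    missing = [(name, ver) for name, ver in pins.items() if (name, ver) not in store]
    if missing:
        raise LookupError(f"unsatisfied dependencies: {missing}")
    return {name: store[(name, ver)] for name, ver in pins.items()}


install("Z", "1.0", "stores records")
install("Y", "1.0", "indexes records, flat")
install("Y", "2.0", "indexes records, sorted")   # coexists with Y 1.0

x_needs = {"Y": "2.0", "Z": "1.0"}   # X's conditional promise names exact versions
w_needs = {"Y": "1.0"}              # an older component still relying on Y 1.0

print(resolve(x_needs))
print(resolve(w_needs))
```

Had the store been keyed by name alone, installing Y 2.0 would have silently overwritten Y 1.0 and broken the older component's promise.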

Versioning takes care of the matter of component evolution and improvement in the presence of dependency. The next issue is what happens if a component is used multiple times within the same design. Can this lead to conflicts? There are now two issues surrounding repeated promises:

-

If multiple components, with possibly several versions, make completely identical promises, this presents no problem, as identical promises are idempotent, meaning that they lead to compatible outcomes.

-

Incompatible, also called exclusive, promises prevent the reuse of components.
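The difference between idempotent and exclusive repeated promises can be sketched by merging the promises that several component uses make about the same subjects: an identical repeat changes nothing, while a different value for the same subject is a conflict. The library path and version labels are hypothetical.

```python
def merge_promises(
    *components: list[tuple[str, str]],
) -> tuple[dict[str, str], list[tuple[str, str, str]]]:
    """Merge (subject, value) promises; report (subject, kept, rejected) conflicts."""
    merged: dict[str, str] = {}
    conflicts: list[tuple[str, str, str]] = []
    for promises in components:
        for subject, value in promises:
            if subject not in merged:
                merged[subject] = value
            elif merged[subject] != value:
                conflicts.append((subject, merged[subject], value))
            # An identical repeat falls through: idempotent, nothing changes.
    return merged, conflicts


web_a = [("/usr/lib/libfoo.so", "v3"), ("firewall port 8080", "open")]
web_b = [("/usr/lib/libfoo.so", "v3")]   # identical promise: harmless repeat
legacy = [("/usr/lib/libfoo.so", "v1")]  # exclusive with the v3 promise

print(merge_promises(web_a, web_b)[1])   # []
print(merge_promises(web_a, legacy)[1])  # [('/usr/lib/libfoo.so', 'v3', 'v1')]
```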

Note

Any component that can be used multiple times, for different purposes, within the same system, must be able to exist alongside other versions of the component and patterns of usage. Component versions that collaborate to avoid making conflicting promises across versions can more easily coexist.

The unique naming of components is a simple way to avoid some of these problems. We will describe this in the next section.

Names and Identifiers for “Branding” Component Promises

A simple matter like naming turns out to play an important role. A name is a promise that can imply many other promises in a given cultural arena. For example, if we promise someone a car, currently at least this would imply a promise of wheels.

What attributes qualify as names? Names do not have to be words. A name could be a shape. A cup does not need a name for us to recognize it. Components need to promise their function somehow, with some representation that users will recognize. Naming, in its broadest sense, allows components to be recognizable and distinguishable for reuse. Recognition may occur through shape, texture, or any form of sensual signalling.

In marketing, this kind of naming is often called branding. The concept of branding goes back to identifying marks burnt onto livestock. In marketing, branding looks at many aspects of the psychology of naming, as well as how to attract the attention of onlookers to make certain components more attractive to users than others.

It makes sense that bundles of promises make naming agreements within a system, according to their functional role, allowing components to be:

-

Quickly recognizable for use

-

Distinguishable from other components

-

Built from cultural or instinctive knowledge

Because an agent cannot impose its name on another agent, nor can it know how another agent refers to it, naming conventions have to be common knowledge within a collaborative subsystem. Establishing and trusting names is one of the fundamental challenges in systems of agents. This issue mirrors the acceptance of host and user identities in information systems using cryptographic keys, which can later be subjected to verification. The cryptographic methods mean nothing until one has an acceptable level of certainty about identity.

Naming needs to reflect variation in time and space, using version numbers and timestamps, or other virtual locational coordinates. Variation in space can be handled through scope, as it is parallel. However, variation in time is a serial property, indistinguishable from versioning, and therefore the name given to a set of promises must be unique within a given scope.

Note

A component name or identifier is any representative, promisable attribute, rendered in any medium, that maps uniquely to a set of two promises:

-

A description of the promises kept by the component (e.g., text like “Toyota Prius,” or a shape like a handle, indicating carrying or opening)

-

A description of the model or version in a family or series of evolving designs (e.g., 1984 model, version 2.3, or “panther”)

When components evolve through a number of related versions, we often keep a version identifier separate from the functional name because the name anchors the component to a set of broad promises, while the version number promises changes to the details.
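The identifier described in the note (a functional name plus a version), together with the rule that it must be unique within a scope, can be sketched as follows. `ComponentId`, `Scope`, and the example kettle promises are hypothetical.

```python
from typing import NamedTuple


class ComponentId(NamedTuple):
    name: str      # anchors the broad functional promises, e.g. "Toyota Prius"
    version: str   # promises the details, e.g. "1984 model", "2.3", "panther"


class Scope:
    """Names must be unique within a scope; separate scopes may reuse them."""

    def __init__(self, label: str) -> None:
        self.label = label
        self._registry: dict[ComponentId, frozenset[str]] = {}

    def register(self, cid: ComponentId, promises: frozenset[str]) -> None:
        known = self._registry.get(cid)
        if known is not None and known != promises:
            raise ValueError(f"{cid} already names different promises in scope {self.label!r}")
        self._registry[cid] = promises   # re-registering identical promises is harmless

    def lookup(self, cid: ComponentId) -> frozenset[str]:
        return self._registry[cid]


shop = Scope("shop")
shop.register(ComponentId("kettle", "2.3"), frozenset({"boils water"}))
shop.register(ComponentId("kettle", "2.4"), frozenset({"boils water", "keeps warm"}))
print(sorted(shop.lookup(ComponentId("kettle", "2.4"))))  # ['boils water', 'keeps warm']
```

Keeping the version as a separate field lets a user match on the name alone when only the broad promises matter, and on the full identifier when the details do.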

Naming Promisee Usage (-) Rather than Function (+)

Every time we make use of a promise, there are two parties: the promiser and the promisee. Sometimes, a functional (+) promise is conditional on input from the agent using it, which is also the promisee. The input changes the result. The different uses of a reusable promise could therefore be named individually by the promisee, based on what it feeds to the promiser, so that they are easy to distinguish. For example, a process agent, consuming input components and their promises, can promise differently named applications, each made from components, as if the applications were separate entities. A collection of transistors, resistors, and capacitors might be a radio or a television. A collection of fabrics might be clothing or a tent. A mathematical function, like a cosine, promises a different result depending on the number fed into it. In each case, the template of the promise is the same, but the details of how it is used vary. This is what we mean by the promise of parameterization.

Parameters are customizing options, and are well understood in computer programming or mathematics. A parameter is something that makes a generic thing unique by plugging a context into the generality.

A description of the input data, parameters, or arguments supplied to the component’s function supplements the name of the function itself. In computer science, we call this combination a function call. Different function calls promise different outcomes, and, as long as causation is uniquely determined, the call is just as good a name as the bundle of promises about what comes out. Indeed, it is probably more convenient, since we know the data we want to feed in, but not necessarily the promised data that comes out.
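To make the idea of a function call as a name concrete, here is a minimal Python sketch. `call_name` and `UsageLog` are hypothetical helpers; `math.cos` plays the role of the cosine promise mentioned earlier. The sketch assumes the function is deterministic, which is the condition that causation is uniquely determined; only then can the call name stand in for the outcome.

```python
import math
from typing import Any, Callable


def call_name(fn: Callable[..., Any], *args: Any) -> str:
    """Name one usage of a generic promise by the function plus its inputs."""
    arg_text = ", ".join(repr(a) for a in args)
    return f"{fn.__name__}({arg_text})"


class UsageLog:
    """Maps each call name to its outcome; valid only for a deterministic fn."""

    def __init__(self) -> None:
        self.outcomes: dict[str, Any] = {}

    def use(self, fn: Callable[..., Any], *args: Any) -> tuple[str, Any]:
        name = call_name(fn, *args)
        # If causation is uniquely determined, the same name always
        # identifies the same outcome, so we only compute it once.
        if name not in self.outcomes:
            self.outcomes[name] = fn(*args)
        return name, self.outcomes[name]


log = UsageLog()
name_a, result_a = log.use(math.cos, 0.0)      # name_a is "cos(0.0)"
name_b, result_b = log.use(math.cos, math.pi)  # a differently named usage
```

Here the caller knows the name (the inputs) before it knows the result, which is exactly why the call is the more convenient label.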

Attaching distinct names to instances of usage can be useful for identifying when and where a particular component was employed (e.g., when fault finding).

In computer programming, generic components are built into libraries that expose promises, in the form of patterns of information, to their users. This interface is usually called an API, or Application Programming Interface. The application programmer, or promise user, makes use of the library components in a particular context for a particular purpose. Each usage of a particular component may thus be identified by the information passed to it, or by the other components connected to it. We may give each such usage a name like “authentication dialogue,” “comment dialogue,” and so on. We can also use the set of parameters passed to an API promise as part of the unique name of the reference.
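The following sketch shows how a single generic library component might be used in several named contexts, with the parameters forming part of a unique reference that can later help with fault finding. The `dialogue` component, the `Usage` class, and the `dialogue_usage` function are all hypothetical, standing in for whatever API a real library exposes.

```python
from __future__ import annotations
from dataclasses import dataclass


@dataclass(frozen=True)
class Usage:
    """One named use of a generic library component (hypothetical model)."""
    label: str                           # human name, e.g. "comment dialogue"
    component: str                       # the generic API promise being used
    params: tuple[tuple[str, str], ...]  # inputs that make this usage unique

    def reference(self) -> str:
        """Unique reference: component name plus the parameters passed to it."""
        args = ",".join(f"{key}={value}" for key, value in self.params)
        return f"{self.component}({args})"


def dialogue_usage(label: str, **params: str) -> Usage:
    # Sort parameters so identical inputs always give the identical reference.
    return Usage(label, "dialogue", tuple(sorted(params.items())))


auth = dialogue_usage("authentication dialogue", title="Log in", fields="user+password")
comment = dialogue_usage("comment dialogue", title="Comment", fields="text")

# An index from reference to usage label, e.g. for tracing where a fault arose.
where_used = {u.reference(): u.label for u in (auth, comment)}
```

The human label and the parameter-based reference serve different readers: the label is for people navigating the system, while the reference is reproducible from the inputs alone.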

The Cost of Modularity

When does it make economic sense to build components? Each promise carries an expected value, assessed individually by the agents and other components in a system. A promise viewpoint allows us to enumerate costs by attaching the valuations made by each agent individually, from each viewpoint: the eye of the beholder.

Even from the viewpoint of a single agent, the cost associated with a modular design is not necessarily a simple calculation. It includes the ongoing collaborative cost of isolating components into weakly coupled parts, designing their interfaces, and also the cost of maintaining and evolving the parts over time. The accessibility of the parts adds a cost, too. If components are hard to change or maintain because they are inside a closed box, then it might be simpler to replace the whole box. However, if the component is cheap and the rest of the box is expensive in total, then the reckoning is different.
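The repair-or-replace reckoning in the paragraph above can be written as a simple comparison. This is a deliberately simplified sketch: `RepairOption` and `choose_repair` are hypothetical names, all prices are made-up illustrative numbers, and "access cost" is our own stand-in for how hard a part is to reach inside its box.

```python
from dataclasses import dataclass


@dataclass
class RepairOption:
    part_price: float   # price of the replacement component itself
    access_cost: float  # labour and tools to reach it; very high for a sealed box


def choose_repair(option: RepairOption, box_price: float) -> str:
    """Return the cheaper response to a single failure (illustrative model)."""
    part_total = option.part_price + option.access_cost
    return "replace part" if part_total < box_price else "replace box"


# Hypothetical numbers: an accessible part in an expensive box...
print(choose_repair(RepairOption(part_price=100, access_cost=20), box_price=20_000))  # replace part
# ...versus a cheap part sealed inside a cheap box.
print(choose_repair(RepairOption(part_price=0.01, access_cost=50), box_price=5))  # replace box
```

The model leaves out the ongoing costs of designing interfaces and maintaining parts, which the text also counts; in a fuller reckoning those would be added to the part side of the comparison.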

Making tyres into components makes sense. Changing a flat tyre rather than replacing an entire car is obviously sensible, because tyres are accessible and large, not to mention much cheaper than a whole car. Making electronic components like transistors, capacitors, and resistors certainly made sense to begin with, when they were large and experimentation was important. Failed components could then be replaced individually, saving the cost of unnecessary additional replacements. However, the miniaturization of electronics eventually changed this situation.

At some point it became cheap to manufacture integrated circuits that were so small that changing individual components became impractical. Thus the economics of componentization moved towards large-scale integration of parameterizable devices.

Virtual computational components, like software running on virtual servers in a data center, are apparently cheap to replace, and are used extensively to manage the evolution of software services. However, while these components are cheap to build, existing components gather history and contain runtime data that might be expensive to lose.

Modularity is generally considered to be a positive quality in a system, but when does componentization actually hurt?

-

When the cost of making and maintaining the components exceeds the cost of just building the total system, over the expected lifetime of the system. (Think of VLSI chips, which integrate anywhere from tens of thousands to billions of individual transistors and ancillary components.)

-

If the components are not properly adapted to the task because of poor understanding of the patterns of usage. (Think of making do with commodity goods, where custom made would be preferable.)

-

If the additional overhead of packaging and connecting the components (combinatorics) exceeds other savings.

-

When the number of components becomes so large that it contributes to the cognitive burden of navigating the complexity of the total system. (Think of software that imports dozens of modules into every program file, or a book that assumes too much foreknowledge.)
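The first and third conditions above can be compared numerically over a system's lifetime. The following sketch is a toy model under stated assumptions, not a costing method from the text: the function names are hypothetical, packaging and connection overhead is assumed to be a fixed amount per part, and an integrated system is assumed to be replaced whole at every failure. All figures are invented for illustration.

```python
def modular_lifetime_cost(n_parts: int, part_cost: float, interface_cost: float,
                          failures: int, repair_cost: float) -> float:
    """Build every part plus its packaging/connection overhead, then repair parts."""
    return n_parts * (part_cost + interface_cost) + failures * repair_cost


def integrated_lifetime_cost(build_cost: float, failures: int) -> float:
    """Build once; assume each failure means replacing the whole unit."""
    return build_cost * (1 + failures)


def componentization_hurts(modular: float, integrated: float) -> bool:
    """True when the modular design costs more over the expected lifetime."""
    return modular > integrated


# Hypothetical chip-like case: thousands of tiny parts, each needing packaging.
chip_modular = modular_lifetime_cost(10_000, 0.10, 0.50, failures=2, repair_cost=5)
chip_integrated = integrated_lifetime_cost(20, failures=2)

# Hypothetical car-like case: a few large, accessible parts.
car_modular = modular_lifetime_cost(5, 2_000, 100, failures=3, repair_cost=150)
car_integrated = integrated_lifetime_cost(10_000, failures=3)
```

If the overhead grew with the number of connections between parts rather than with the number of parts, the chip-like case would tilt even further against fine-grained componentization.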

Some Exercises

-

Think of some examples of business promises. Break these down into the promises made to support these “goals” within the business organization. Now compare this to the organization chart of the business. Do the promises match the job descriptions? Were jobs assigned by wishful thinking, or are they based on what the individual could promise to deliver?

-

Divide and conquer is a well-known concept. Delegating tasks (impositions) to different agents is one way to centrally share the burden of keeping a promise amongst a number of agents. However, it comes with its own cost of coordination.

Write down a promise you would like to keep, then write down the agencies to which you would like to delegate, and the promises each of them must now make, including the cost of coordinating them so that the original promise is still kept. How do you estimate the additional complexity of interacting with multiple agencies, compared to when a single agent was the point of contact? Is this an acceptable cost? When would it (not) be acceptable?

-

Innovation, in technology, can be helped or hindered by componentization. If you split a product into multiple parts, developed under independent responsibility, its success must depend on the degree of coordination between those parts. Think about what this means for the ability to innovate and improve parts independently and together as a whole. Is componentization an illusion, sometimes or always?

-

Very-large-scale integration (VLSI) in computer chips is an example of the opposite of componentization. When does such packing make economic sense? What are the benefits and disadvantages of bundling many promises into a single agency?

-

(For technical readers) Write down the promises that distinguish a process container (e.g., a Docker instance, or a Solaris zone) from a statically compiled binary, running under the same operating system.

1 In IT, the DevOps movement came about as a reaction to this kind of separation, in which software developers threw their latest creations over the proverbial wall to operations engineers to run in production.

2 Sweden made the change from driving on the left to the right overnight, avoiding accidents by arranging a campaign of staying at home to watch television.

3 The UK has made limited efforts to change to metric units by showing both units in some cases, but this has had little real impact.

4 This illustrates that when your issue is communication itself, it makes little sense to be stubborn about the language you speak, even if that language is imperfect.

5 See my book, In Search of Certainty, for a discussion of how Dunbar’s number applies to technology.