Chapter 5. Engineering Cooperation

Promising to work with peers is what we call cooperation. Promise Theory exposes cooperation as an exercise in information sharing. If we assess those other agents’ promises to be trustworthy, we can rely on them and use that as a basis for our own behaviour. This is true for humans, and it is true for technological proxies.

The subject of cooperation is about coordinating the behaviours of different autonomous agents, using promises to signal intent. We see the world as more than a collection of parts, and dare to design and construct larger, intentional systems that behave in beneficial ways.

Engineering Autonomous Agents

There are many studies of cooperation in the scientific literature from differing viewpoints. To make a claim of engineering, we propose to think in terms of tools and a somewhat precise language of technical meanings. This helps to minimize the uncertainty when interpreting agents’ promises and behaviours. Cooperation involves a few themes:

- Promises and intentions (the causes)
- Agreement
- Cooperation
- Equilibration
- Behaviour and state (outcomes)
- Emergence

In this chapter, I want to paint a picture of cooperation as a process just like any other described in natural science, by atomizing agency into abstract agents and then binding them back together in a documentable way through the promises they advertise. In this way, we develop a chemistry of intent.

Promisees, Stakeholders, and Trading Promises

So far, we’ve not said much about the promisees (i.e., those agents to whom promises are made). In many respects, the promisee is a relatively unimportant part of a promise, because the agent principally affected by a promise is the autonomous promiser itself. A promise is a self-imposition, after all.

The exception to this is when we consider cooperation between agents because of the value of recognizing whether their promises will be kept. When an agent assesses a promise as valuable (to itself), it becomes a stakeholder in its outcome. This is why we aim promises at particular individuals in the first place. Promises form a currency of cooperation. Anyone can observe a promise, and decide whether or not it was kept, without being directly affected by it, but only agents with a stake in the collaboration hold the outcome to be of particular importance.

Promisees and stakeholders have their moment when promises become part of a web of incentive and trade in the exchange of favours. Thus the subject of cooperation is perhaps the first place where the arrow in a promise finds its role.

Broken Promises

Cooperation is about the keeping of promises to stakeholders, but so far we’ve not mentioned what it means for promises to be broken. Most of us probably feel an intuitive understanding of this already, as we experience promises being broken every day. Nevertheless, it is useful to express this more clearly.

Our most common understanding of breaking a promise is that it can be passively broken. In other words, there is a promise that is not kept due to inaction, or due to a failure to keep the terms of the promise. To know when such a promise has not been kept, there has to be a time limit of some kind built into the promise. Once the time limit has been exceeded, we can say that the promise has not been kept. The time limit might be entirely in the perception of the agent assessing the promise, of course. Every agent makes this determination autonomously.

There is also an active way of breaking a promise. This is when an agent makes another promise that is in conflict with the first. If the second promise is incompatible with the first, in the view of the promisees or stakeholders, or the second promise prevents the promiser from keeping the first promise in any way, then we can also say that promises have been broken.

Broken promises thus span conflicts of interest, as well as failures to behave properly.
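The two ways of breaking a promise can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Promise` record, its `excludes` field, and the `assess` function are hypothetical names invented here, and the time limit is supplied by the assessing agent, because every agent makes this determination autonomously.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Promise:
    promiser: str
    promisee: str
    body: str
    # Bodies this promise cannot coexist with (a modelling assumption).
    excludes: frozenset = frozenset()


def assess(promise, kept_at, now, time_limit, other_promises=()):
    """One observer's assessment: 'kept', 'pending', or 'broken (...)'.

    kept_at is the time at which the observer saw the promise kept, or None.
    time_limit is the observer's own deadline for this promise.
    """
    # Active breakage: the same promiser has made an incompatible promise.
    for other in other_promises:
        if other.promiser == promise.promiser and other.body in promise.excludes:
            return "broken (conflict)"
    if kept_at is not None and kept_at <= time_limit:
        return "kept"
    # Passive breakage: the time limit passed and the promise was not kept.
    if now > time_limit:
        return "broken (timeout)"
    return "pending"


open_door = Promise("door", "alice", "open", frozenset({"closed"}))
closed_door = Promise("door", "bob", "closed", frozenset({"open"}))

print(assess(open_door, kept_at=None, now=11, time_limit=10))
print(assess(open_door, kept_at=None, now=1, time_limit=10,
             other_promises=[closed_door]))
```

A different observer could pass a different `time_limit` and reach a different assessment of the same promise, which is the point of making the limit an argument rather than a property of the promise.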

What Are the Prerequisites for Cooperation?

For autonomous agents to coordinate their intent, they need to be able to communicate proposals to one another. The minimum promise that an agent has to keep is therefore to accept proposals from other agents (i.e., to listen). From this starting point, any agent could impose proposals on another agent.

In a more civil arrangement, agents might replace impositions with promises to respond and alter proposals, forming an ongoing relationship between the agents. These ongoing relationships are the stable fodder of cooperation. They leave open a channel for negotiation and collaboration.

Who Is Responsible for Keeping Promises?

From the first promise principle, agents are always responsible for keeping their own promises. No agent may make a promise on behalf of another. Regrettably, we are predisposed to think in terms of impositions and obligations, not promises, which pretend the exact opposite.

Impositions might not encourage cooperation at all (indeed they sometimes provoke stubborn opposition), but there is another way to win the influence of agents. As agents begin to trade promises with one another, there are indirect ways to encourage others to behave to our advantage, voluntarily, by promising their own behaviour in trade.

Agencies guide the outcomes of processes with different levels of involvement, and indeed different levels of success. This happens through incentives that work on behalf of self-interest. Agents might be “hands-on” (explicit) or “hands-off” (implicit) in their use of incentives, but in all cases their own promises exist to coax cooperative promises from others for individual benefit.

Every agent is thus responsible for carrying the burden of its own promises. In order to use Promise Theory as a tool for understanding cooperation, an engineer therefore needs to adjust his or her thinking to adopt the perspective of each individual agent in a system, one by one. This might be remarkably difficult to do, given our natural tendency to command the parts into compliance, but it pays off. The difference is that, when we look at the world from the perspective of the agencies, we begin to understand their limitations and take them into account. Practicing this way of thinking will cleanse the windows of perception on cooperative engineering, and change the way you think about orchestrating behaviour.

Mutual Bindings and Equilibrium of Agreement

Collaboration towards a shared outcome, or a common purpose, happens when we exchange information. This information especially includes promises to one another. The beginning of cooperation lies in agreeing about promise bindings (i.e., pairs of promises that induce agents to cooperate voluntarily).

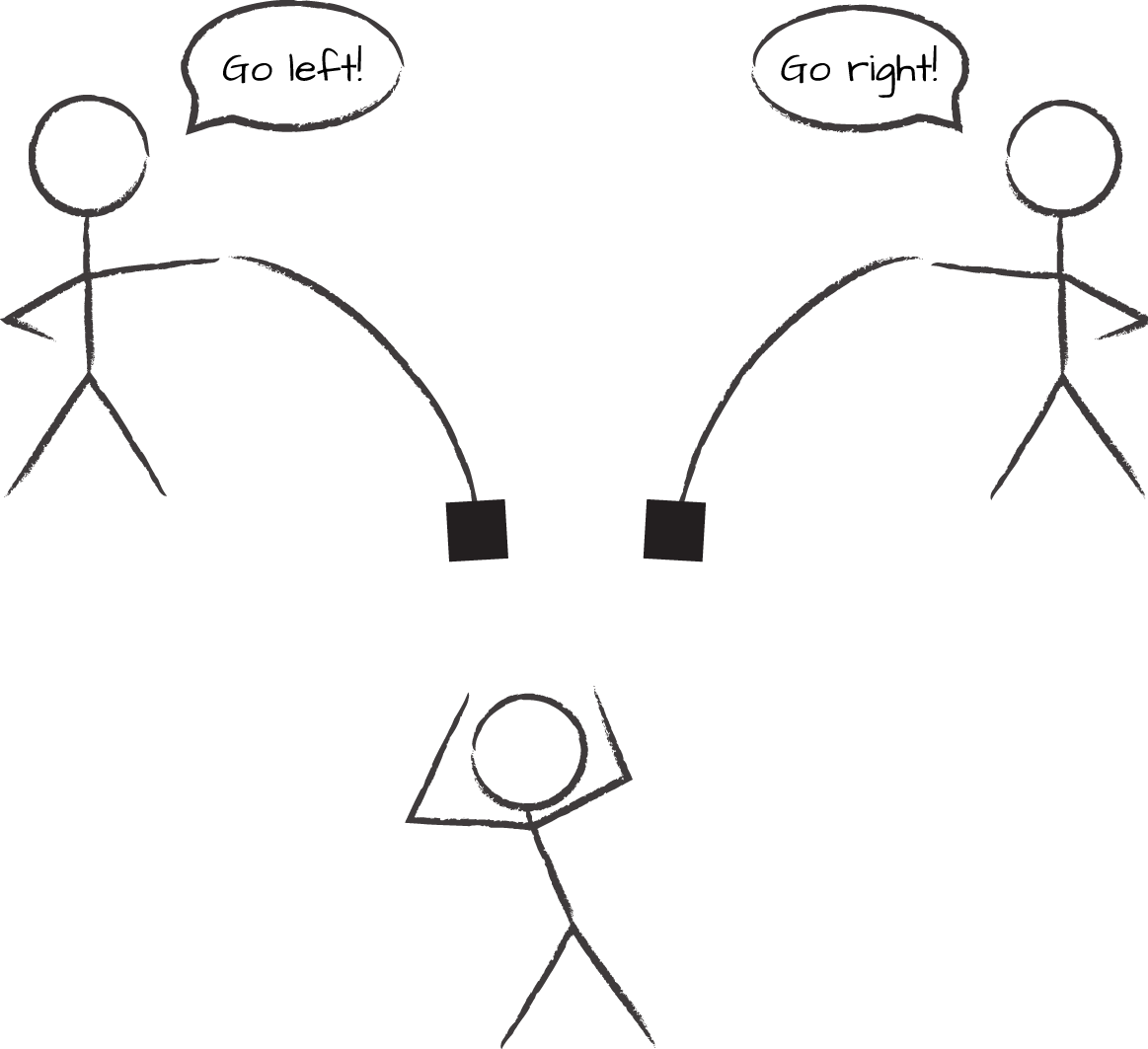

As agents negotiate, there is a tug-of-war between opposing interests, from the promises they are willing to make themselves, to those they are willing to accept from their counterparts. The theory of games refers to this tug-of-war stalemate as a Nash equilibrium. This is technical parlance for a compromise.

The process by which everyone ends up agreeing on the same thing is thus called equilibration. In information technology, this is sometimes called a consensus. Equilibrium means balance (think of the zodiac sign Libra, meaning balance), and this balance brings an intrinsic stability to processes and outcomes (see Figure 5-1). Although, culturally, we invariably favour the notion of “winning,” we should be very worried about making cooperative situations too one-sided.

The idea of winning and losing draws attention away from mutual cooperation and towards greed and instability. To have cooperation, we need a system of agents to form stable relationships. In other words, they need to keep their promises consistently, without wavering or changing their minds. The secret to balance is to find a formulation of mutual promises that each agent can maintain in a stable trade relationship with the others.

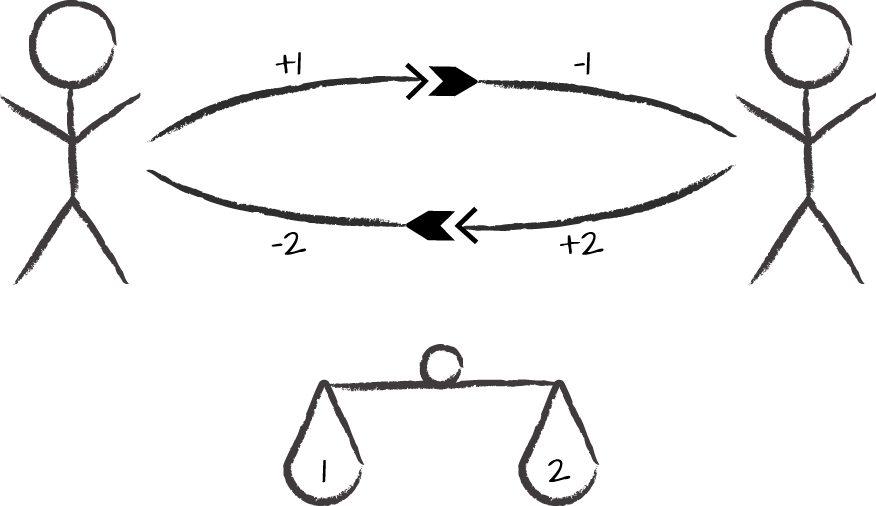

Figure 5-1. To talk bidirectionally and reach an equilibrium requires four promises! Promise bindings 1 and 2 must be assessed, by each agent, to be of acceptable return value in relation to the promise they give.

When one agent makes a promise to offer its service (+) and another accepts (-) that promise, it allows the first agent to pass information to the second. Both promises must be repeated in reverse order for the second agent to pass information back to the first.

This makes a total of four promises: two (+) and two (-). These promises form the basis for a common world view. Without that platform, it is difficult for agencies to work with common purpose.
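The count of four can be checked with a small model. The sketch below is an assumption-laden illustration, not a formal definition: `Promise`, `can_pass`, and `bidirectional` are hypothetical names, and for simplicity both directions carry the same promise body, `"info"`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Promise:
    promiser: str
    promisee: str
    body: str
    polarity: str  # "+" to give, "-" to accept


def can_pass(promises, sender, receiver, body):
    """True if sender offers (+) body and receiver accepts (-) it."""
    gives = Promise(sender, receiver, body, "+") in promises
    accepts = Promise(receiver, sender, body, "-") in promises
    return gives and accepts


def bidirectional(promises, a, b, body):
    """True only if information can flow both from a to b and from b to a."""
    return can_pass(promises, a, b, body) and can_pass(promises, b, a, body)


promises = {
    Promise("A", "B", "info", "+"),  # A offers to B
    Promise("B", "A", "info", "-"),  # B accepts from A
    Promise("B", "A", "info", "+"),  # B offers to A
    Promise("A", "B", "info", "-"),  # A accepts from B
}

print(bidirectional(promises, "A", "B", "info"))
```

Removing any one of the four promises leaves at most a one-way channel, so `bidirectional` returns `False`.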

Incompatible Promises: Conflicts of Intent

Some promises cannot be made at the same time. For instance, a door cannot promise to be both open and closed. A person cannot promise to be both in Amsterdam and New York at the same time. This can result in conflicts of intent.

- Conflicts of giving (+)

  An agent can promise X or Y, but not both, while two other agents can promise to accept without conflict; for example, an agent promises to lend a book to both X and Y at the same time.

- Conflicts of usage (-)

  A single agent promises to use incompatible promises X and Y from two different givers; for example, the agent promises to accept simultaneous flights to New York and Paris. The flights can both be offered, but cannot be simultaneously accepted.
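Both kinds of conflict can be detected mechanically if each promise names the thing it ties up. The following is a minimal sketch under that assumption: the `exclusive` field and the `conflicts` function are hypothetical, introduced only to make the two cases above concrete.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Promise:
    promiser: str
    other: str      # promisee for (+), giver for (-)
    body: str
    polarity: str   # "+" to give, "-" to use
    exclusive: str  # what can be committed only once (assumption)


def conflicts(promises):
    """Pairs of promises by one agent that commit the same exclusive thing."""
    found = []
    for p, q in combinations(promises, 2):
        same_agent = p.promiser == q.promiser and p.polarity == q.polarity
        if same_agent and p.exclusive == q.exclusive:
            kind = "giving" if p.polarity == "+" else "usage"
            found.append((kind, p, q))
    return found


lending = [
    Promise("owner", "X", "lend the book", "+", "the book"),
    Promise("owner", "Y", "lend the book", "+", "the book"),
    Promise("X", "owner", "borrow the book", "-", "X's shelf"),
    Promise("Y", "owner", "borrow the book", "-", "Y's shelf"),
]
flights = [
    Promise("airline-1", "traveller", "fly to New York", "+", "airline-1 seat"),
    Promise("airline-2", "traveller", "fly to Paris", "+", "airline-2 seat"),
    Promise("traveller", "airline-1", "fly to New York", "-", "traveller's time"),
    Promise("traveller", "airline-2", "fly to Paris", "-", "traveller's time"),
]

for kind, p, q in conflicts(lending) + conflicts(flights):
    print(kind, "conflict for", p.promiser)
```

In the lending case the conflict sits with the giver, and in the flight case with the user, even though every other promise in each list is individually unproblematic.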

Cooperating for Availability and the Redundancy Conundrum

One of the goals of systems and services, whether human-, mechanical-, or information-based, is to be reliable and predictable. Without a basic predictability, it is hard to make a functioning world. Although we haven’t fully specified what the capabilities of a promise agent are, realistically there must be limits to what it can promise: no agency is all-powerful.

It’s normal to call a single agent keeping a promise a single point of failure, because if, for whatever reason, it is either unable or unwilling to keep its promise, the promisee, relying on it, will experience a breach of trust. A design goal in the making of robust systems is to avoid single points of failure.

As usual, Promise Theory predicts two ways to improve the chances that a promise will be kept. The promiser can try harder to keep its (+) promise, and the promisee can try harder to secure its (-) promise to complete the binding. The issue is slightly different if, instead of a pre-agreed promise binding, the client imposes requests onto a promising agent without warning, as this indicates a divestment of responsibility, rather than a partnership to cooperate.

One mechanism for improving availability is repetition, or learning, as agents in a promise binding keep their promises repeatedly. This is the value of rehearsal, or “practice makes perfect.” The phrase anti-fragile has been used to describe systems that can strengthen their promise-keeping by trial and error. Even with rehearsals, there are limits to what a single agent can promise.

The principal strategy for improving availability is to increase the redundancy of the agencies that keep promises. If an agent is trying to work harder, it can club together with other agents and form a group of agents aligned in a collaborative role to keep the promise. If a client is trying harder to obtain a promise, it can seek out more agents offering the same promise, whether they are coordinated or not.

Let’s consider the cases for redundancy in turn, with the specific example of trying to obtain a taxi from one or more taxi providers.

A single taxi agent (promiser) cannot promise to be available to offer a ride to every client at all times, but several taxis can agree to form a collaborative group, placing themselves in the role of the taxi company (a role by cooperation). The taxi company, acting as a single super-agency, now promises to be available at all times. This must be a gamble, based on how many agents make the promise to drive a taxi and how many clients promise to use the taxi by booking up front.
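The size of the gamble can be estimated with a deliberately crude model. Assume, purely for illustration, that each taxi is free with the same probability and independently of the others; neither assumption comes from the text, and real fleets will violate both. The function names are hypothetical.

```python
def availability(p_free, n_taxis):
    """Chance that at least one of n independent taxis is free."""
    return 1 - (1 - p_free) ** n_taxis


def taxis_needed(p_free, target):
    """Smallest fleet whose availability reaches the target (target < 1)."""
    if not 0 < p_free <= 1 or not 0 <= target < 1:
        raise ValueError("need 0 < p_free <= 1 and 0 <= target < 1")
    n = 1
    while availability(p_free, n) < target:
        n += 1
    return n


print(availability(0.5, 3))
print(taxis_needed(0.5, 0.99))
```

Under this model the availability approaches certainty as the fleet grows but never reaches it for any `p_free` below 1, which is one way to see why a promise to be available "at all times" remains a gamble.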

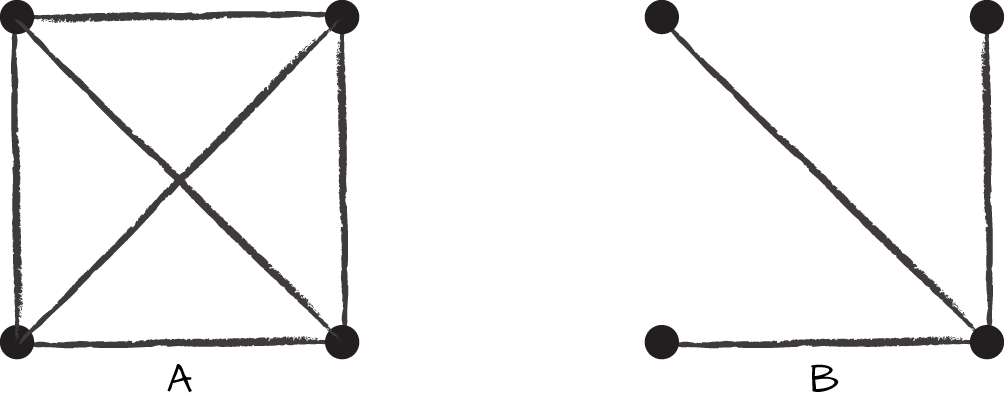

Figure 5-2. A stakeholder, wanting to use a promise provided redundantly, has to know all the possible agencies and their backups making the promise. It also wants a promise that all agents are equivalent, which means that those agencies need to promise to collaborate on consistency. These agents in turn might rely on other back-office services, provided redundantly, and so on, all the way down. Vertical promises are delegation promises, and horizontal promises are collaboration/coordination promises.

Clients can call any one of the taxis (as long as they know how to reach one of them), and enter into a promise binding that is conditional on finding a free car. The taxis can share the load between one another voluntarily, with a little pre-promised cooperative communication. If clients don’t book ahead, but call without warning, planning is harder, as the imposition must be directed to a single agent for all the collaborators. This might be a call centre. If that single agent is unavailable, it pushes the responsibility back onto the imposer to try another agent. This, in turn, means that the imposer personally has to know about all the agents it could possibly impose upon. That might include different taxi companies (i.e., different collaborative groups of agents making a similar promise). See Figure 5-3.

When multiple agents collaborate on keeping the same promises, they have to promise to coordinate with one another. The collaboration promises are auxiliary to the promises they will make to stakeholders: individual inward-facing promises to support a collective outward-facing promise. Sharing the load of impositions, however, requires a new kind of availability, with a new single point of failure: namely, the availability of the “point of presence” or call centre. The call centre solves one problem of locating redundant promisers at the expense of an exactly similar problem for the intermediate agency. I call this the load-balancing conundrum.

Figure 5-3. Taxis can collaborate in different groups to handle load by cooperation. Which provider should the user/stakeholder pick to keep the promise?

Now let’s flip the point of view over to the taxi-promise user (-), or promisee, and recall the basic principle of promises, namely that the user always has the last word on all decisions, because no agent can impose decisions.

Promisees or stakeholders, needing a ride, experience a problem with consuming redundancy. Each time a promisee has more than one alternative, it has to decide which agent to go to for a promise binding, and thus needs to know how to find all of the agents making the promise. This choice is independent of whatever the providers promise. The selection does not have to be within the same collaborative group: it could be from a competing taxi company, or from an independent operator.2

Again, one way to solve the problem of finding a suitable promiser, like the call centre, is to appoint an agent in the role of a directory service, broker, dispatcher, or load balancer (recall the DNS example, essentially like the phone book). This introduces a proxy agent, working on behalf of the user (we’ll return to discuss proxies in more detail in the next chapter). However, this addition once again introduces a new single point of failure, which also needs to be made redundant to keep the promise of availability. That simply brings back the same problem, one level higher. One can imagine adding layer upon layer, getting nowhere at all. There is no escape. It’s turtles all the way down.

What should be clear from the promise viewpoint is that the responsibility to find a provider always lies with the consumer. The consumer is the only acceptable single point of failure, because if it goes away, the problem is solved! The same arguments apply to load sharing with regard to availability. The conclusion is simple: introducing brokers and go-betweens might be a valid way to delegate, but in terms of redundancy, it makes no sense.

In software systems, it is common to divest responsibility and use middle-men load balancers, which introduce complexity and cost. The Promise Theory answer is simple: we should design software to know who to call. Software is best positioned to do its own load balancing, rather than relying on a proxy, but this means it needs to know all the addresses of all agents that can keep certain promises. Then, failing over, going to backup, or whatever we want to call it, has a maximum probability of delivering on the promise. Designing systems around promises rather than impositions leads to greater certainty.
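A client that knows who to call might look something like the sketch below. It is a minimal illustration, not a production pattern: `call_first_available` is a hypothetical name, the providers are plain Python callables standing in for remote services, and an unavailable provider is assumed to signal this by raising `ConnectionError`.

```python
def call_first_available(providers, request):
    """Try each known provider in turn; return (name, result) from the first
    one that keeps its promise. The client, not a proxy, holds the list."""
    failures = []
    for name, provider in providers:
        try:
            return name, provider(request)
        except ConnectionError as err:
            failures.append((name, str(err)))
    raise ConnectionError(f"no provider kept the promise: {failures}")


def busy(request):
    raise ConnectionError("no free car")


def free(request):
    return f"ride booked: {request}"


# The list may span competing companies and independent operators.
known_taxis = [("taxi-1", busy), ("taxi-2", free)]
print(call_first_available(known_taxis, "airport"))
```

A client could shuffle its list before each call to spread load across providers. The design choice that matters here is only that the list of addresses lives with the consumer, so that no intermediate agent becomes a new single point of failure.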

Agreement as Promises: Consensus of Intent

Agreement is a key element of cooperation. To collaborate on a common goal, we have to be able to agree on what that is. This usually involves exchanging proposals and decisions.

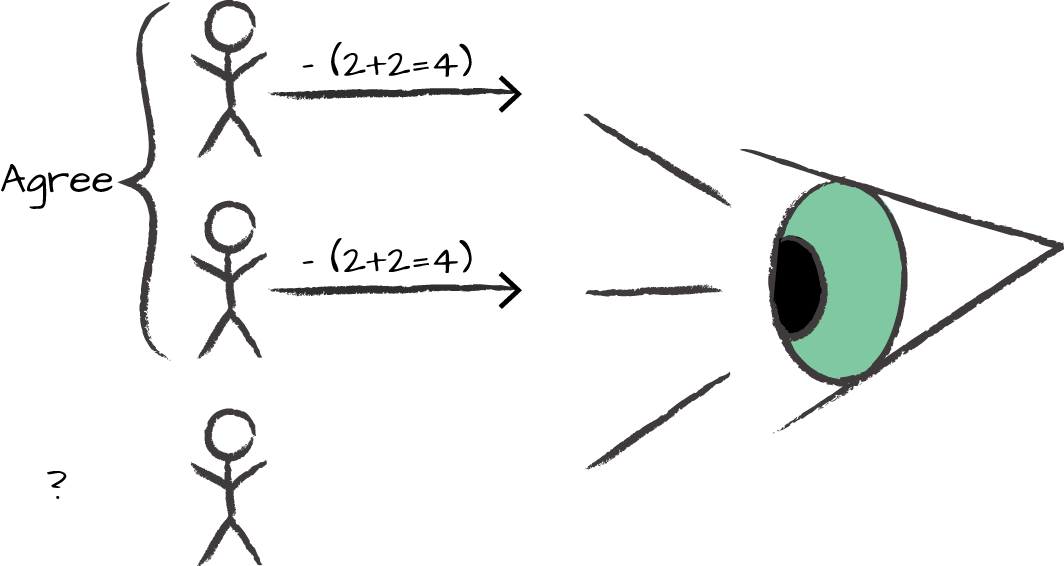

If agents promise to accept a proposal from another, then they attest to their belief in it. Suppose we have one or more agents promising to accept a promise. An observer, seeing such a promise to accept a proposal, could conclude that all of the agents (promising to accept the same proposal) in fact agreed about their position on this matter. So if there were three agents and only two agreed, it would look like Figure 5-4.

Figure 5-4. An observer sees two agents promise to use the same proposal, and hence agree. Nothing can be said about the third agent.

Agents, within some ensembles, may or may not know about their common state of agreement. However, an observer, privy to all the promises, observes a common pattern or role amongst the agents. The single agent making the promise accepted by the others assumes the role of a point of calibration for their behaviour.
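The observer's view can be sketched as a simple grouping of the accept-promises it happens to see. The function name `agreements` and the representation of a promise as an `(agent, proposal)` pair are assumptions made for this illustration.

```python
from collections import defaultdict


def agreements(accept_promises):
    """Group agents by the proposal they have promised to accept.

    accept_promises: iterable of (agent, proposal) pairs visible to the observer.
    """
    groups = defaultdict(set)
    for agent, proposal in accept_promises:
        groups[proposal].add(agent)
    return dict(groups)


# The observer sees promises from B and C, and nothing from D.
observed = [("B", "meet on Friday"), ("C", "meet on Friday")]
print(agreements(observed))
```

The result says that B and C agree. It says nothing about D, whose absence from the result reflects a lack of information rather than disagreement.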

When a promise proposal originates from another agent, accepting this proposal is an act of voluntary subordination (see Figure 5-5). If both agents trade such promises, the mutual subordination keeps a balance, like the tug-of-war we mentioned earlier.

Figure 5-5. Agent B agrees with agent A’s promised belief, subordinating to A. Here, only agent A can tell, because no one else sees B’s promise to accept the assertion.

It might be surprising to notice that agreement is a one-way promise. It is, in fact, a role by association. Agreement is also an autonomous decision: a single promise to accept a proposal. If we want to go further and have consensus, we need to arrange for mutual agreement. Such equilibrium of intent is the key to cooperation. Indeed, if both agents agreed with one another, we might speak not only of mutual agreement, but of an intent to cooperate (see Figure 5-6).

Figure 5-6. Cooperation means that agents promise to agree with one another about some subject.

Contractual Agreement

The preceding interpretation of agreement solves one of the long-running controversies in philosophy about whether contracts are bilateral sets of promises. This is now simply resolved with the notion of a promise proposal. A proposal is the text or body of a promise, without it having been adopted or made by any agent. Promise proposals are an important stage in the lifecycle of promises that allows promises to be negotiated.

A contract is a bundle of usually bilateral promise proposals between a number of agents that is intended to serve as the body of an agreement. A contract is not a proper agreement until it has been accepted by all parties (i.e., until the parties agree to it). This usually happens by signing.

All legal agreements, terms, and conditions may be considered sets of promises that define hypothetical boundary conditions for an interaction between parties or agents. The law is one such example. One expects that the promises described in the terms and conditions of the contract will rarely need to be enforced, because one hopes that things will never reach such a point; however, the terms define a standard response to such infractions (threats). Legal contracts define what happens mainly at the edge states of the behaviour one hopes to ensue.

Service-level agreements, for example, are legal documents describing a relationship between a provider and a consumer. The body of the agreement consists of the promised behaviours surrounding the delivery and consumption of a service. From this, we may define a service-level agreement as an agreement between two agents whose body describes a contract for service delivery and consumption.

Contracts and Signing

The act of signing a proposal is very similar to agreement. It is a realization of an act (+) that has the effect of making a promise to accept (-). This is an example of reinterpretation by a dual promise of the opposite polarity.

A signature thus behaves as a promise by the signing agent to use or accept a proposed bundle of promises. It is realized typically by attaching an agent’s unique identity, such as a personal mark, DNA, or digital key. During a negotiation, many promise proposals might be put forward, but only the ones that are signed turn into real promises (see Figure 5-7).

Figure 5-7. A signature is a promise to accept a proposal.
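The lifecycle from proposal to agreement can be sketched as follows. The `Contract` class and its method names are hypothetical, and the sketch treats a signature as nothing more than a recorded promise to accept; it does not model identity or cryptographic keys.

```python
from dataclasses import dataclass, field


@dataclass
class Contract:
    parties: frozenset
    proposals: tuple                       # bodies of the proposed promises
    signatures: set = field(default_factory=set)

    def sign(self, agent):
        """A signature is a promise (-) by the agent to accept the bundle."""
        if agent not in self.parties:
            raise ValueError(f"{agent} is not a party to this contract")
        self.signatures.add(agent)

    def is_agreement(self):
        """Only a contract accepted by all parties is an agreement."""
        return set(self.parties) <= self.signatures


policy = Contract(
    parties=frozenset({"client", "insurer"}),
    proposals=("insurer pays claims", "client pays premium"),
)
policy.sign("client")
print(policy.is_agreement())
policy.sign("insurer")
print(policy.is_agreement())
```

Until the second signature is recorded, the bundle remains a set of proposals. An implicit countersignature, such as company letterhead, would have to be modelled as a call to `sign` made on the insurer's behalf, which is exactly where a dispute could arise.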

For example, when a client signs an insurance contract, he or she agrees about the proposed content, and the contract comes into force by signing. What about the countersigning by the insurance company? Sometimes this is explicit, sometimes implicit. If there is no explicit countersigning, one presumes that the act of writing the contract proposal on company letterhead counts as a sufficient signature or implicit promise to accept the agreement. However, one could imagine a dispute in which the insurer tried to renege on the content of a contract (e.g., by claiming it was written by a rogue employee on their company paper).

Explicit promises help clarify both the process and limitations of an agreement. Each party might promise asymmetrically to keep certain behaviours, but the overlap is the only part on which they agree.

Agreement in Groups

One of the problems we face in everyday life, both between humans and between information systems, is how to reach an agreement when not all agents are fully in the frame of information. For instance, the following is a classic problem from distributed computing that we can all identify with. The classic solutions to these problems in computing involve protocols or agreed sequences of commands.3 A command-oriented view makes this all very complicated and uncertain, so let’s take a promise-oriented viewpoint.

Suppose four agents—A, B, C, and D—need to try to come to a decision about when to meet for dinner. If we think in terms of command sequences, the following might happen:

1. A suggests Wednesday to B, C, and D.
2. In private, D and B agree on Tuesday.
3. D and C then agree that Thursday is better, also in private.
4. A talks to B and C, but cannot reach D to determine which conclusion was reached.

This might seem like a trivial thing. We just check the time, and the last decision wins. Well, there are all kinds of problems with that view. First of all, without D to confirm the order in which the conversations with B and C occurred, A only has the word of B and C that their clocks were synchronized and that they were telling the truth (see Figure 5-8).

Figure 5-8. Looking for a Nash equilibrium for group agreement.

A is unable to find out what the others have decided, unable to agree with them by signing off on the proposal. To solve this, more information is needed by the agents. The Promise Theory principles of autonomous agents very quickly show where the problem lies. To see why, let’s flip the perspective from agents telling one another when to meet, to agents telling one another when they can promise to meet. Now they are talking about only themselves, and what they know.

1. A promises he can meet on Wednesday to B, C, D.
2. D and B promise each other that they can both meet on Tuesday.
3. D and C promise each other that they can both meet on Thursday.
4. A is made aware of the promises by B and C, but cannot reach D.

Each agent knows only what promises he has been exposed to. So A knows he has said Wednesday, and B, C, and D know this too. A also knows that B has said Tuesday and that C has said Thursday, but doesn’t know what D has promised.

There is no problem with any of this. Each agent could autonomously meet at the promised time, and they would keep their promises. The problem is only that the intention was that they should all meet at the same time. That intention was a group intention, which has not been satisfied. Somehow, this must be communicated to each agent individually, which is just as hard as the problem of deciding when to meet for dinner. Each agent has to promise (+) his intent, and accept (-) the intent of the others to coordinate.

Let’s assume that the promise to meet one another (above) implies that A, B, C, and D all should meet at the same time, and that each agent understands this. If we look again at the promises above, we see that no one realizes that there is a problem except for D. To agree with B and C, he had to promise to accept the promise by B to meet on Tuesday, and accept to meet C on Thursday. These two promises are incompatible. So D knows there is a problem, and is responsible for accepting these promises (his own actions). So theoretically, D should not accept both of these options, and either B or C would not end up knowing D’s promise.

To know whether there is a solution, a God’s-eye view observation of the agents (as we, the readers, have) only needs to ask: do the intentions of the four agents overlap at any time (see the right-hand side of Figure 5-8)? We can see that they don’t, but the autonomous agents are not privy to that information. The solution to the problem thus needs the agents to be less restrictive in their behaviour and to interact back and forth to exchange the information about suitable overlapping time. This process amounts to the way one would go about solving a game-theoretic decision by Nash equilibrium.
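The God's-eye question is just a set intersection. The sketch below assumes an observer who can see every agent's promised days at once, which is exactly the information the agents themselves lack; the function name `common_days` is hypothetical.

```python
def common_days(promised):
    """Days on which every agent has promised to be available."""
    day_sets = [set(days) for days in promised.values()]
    return set.intersection(*day_sets) if day_sets else set()


# The promises from the dinner example, as the reader sees them.
promised = {
    "A": {"Wednesday"},
    "B": {"Tuesday"},
    "C": {"Thursday"},
    "D": {"Tuesday", "Thursday"},  # D promised B one day and C another
}
print(common_days(promised))
```

The intersection is empty, so no meeting time satisfies the group intention. Each agent holds only one or two of these sets, which is why none of them except D can detect the problem locally.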

With only partial information, progress can still be made if the agents trust one another to inform each other about the history of previous communications.

1. A promises he can meet on Wednesday or Friday to B, C, and D.
2. D and B promise each other that they can both meet on Tuesday or Friday, given knowledge of item 1.
3. D and C promise each other that they can both meet on Tuesday or Thursday, given knowledge of items 1 and 2.
4. A is made aware of the promises by B and C, but cannot reach D.

Now A does not need to reach D as long as he trusts C to relay the history of the interactions. He can now see that the agents have not agreed on a time, but that if he can make Tuesday instead, there is a solution.
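A's reasoning can be sketched from A's own point of view, using only what A has promised and what C has relayed. This is an illustration under the assumption that the relayed history is truthful; the function name `propose_fix` and the day labels are hypothetical.

```python
def propose_fix(own_days, relayed):
    """Return (agreed, needed) from one agent's local view.

    own_days: the set of days this agent has promised.
    relayed: non-empty list of day sets promised by the others, as reported
             by a trusted agent.
    agreed: days already common to everyone.
    needed: days the others share that this agent has not yet promised.
    """
    others = set.intersection(*(set(days) for days in relayed))
    return own_days & others, others - own_days


a_days = {"Wednesday", "Friday"}
history_from_c = [
    {"Tuesday", "Friday"},    # D and B
    {"Tuesday", "Thursday"},  # D and C
]
agreed, needed = propose_fix(a_days, history_from_c)
print(agreed, needed)
```

Here nothing is agreed yet, and the only day the others share is Tuesday, so A can resolve the matter by promising Tuesday. If C's report were wrong, A's conclusion would be wrong too, which is the gamble that trust brings.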

The lesson is that, in a world of incomplete information, trust can help you or harm you, but it’s a gamble. The challenge in information science is to determine agreement based only on information that an agent can promise itself.

Promoting Cooperation by Incentive: Beneficial Outcome

Autonomous agents cannot be forced, but they can be voluntarily guided by incentives. Understanding what incentives motivate agents is not really a part of promise theory, per se; but, because there is a simple relationship between promises and mathematical games, it makes ample sense to adopt all that is known about the subject from noncooperative game theory. Making a bundle of promises may be considered a strategy in the game theoretical sense.

This means that if you want to promote cooperation, it’s best to make promises that have perceived value to other agents. We’d better be careful though, because autonomous agents might value something quite unexpected—and not necessarily what we value ourselves. Offering money to a man without a parachute, on the way down from a plane, might not be an effective incentive. Value is in the eye of the beholder.

Some promise principles help to see how value arises:

- Locality

  Avoid impositions, keep things close to you, and stay responsible.

- Reciprocity

  Nurture a repeated relationship, think of what drives the economic motor of agent relationships.

- Deal with uncertainties

  Have multiple contingencies for keeping promises. What will an agent do if a promise it relies on is not kept?

A television is a fragile system because it has no contingencies if a component, like the power supply, fails. An animal, on the other hand (or paw), has redundancy built in, and will not die from a single cell failure, or perhaps even the loss of a leg.

The Stability of Cooperation: What Axelrod Said

As hinted at in the last chapter, the science of cooperation has been studied at length using simple models of two-agent games, like the tug-of-war mentioned earlier. Political scientist Robert Axelrod, of the University of Michigan, explored the idea of games as models of cooperation in the 1970s. He asked: under what conditions will cooperative behaviour emerge in autonomous agents (i.e., without a God’s-eye view of cooperation)?

Axelrod showed that the Nash equilibrium from economic game theory allowed cooperation to take place quite autonomously, based entirely on individual promises and assessments. He did not use the language of promises, but the intent was the same. Agents could perceive value in mutual promises completely asymmetrically as long as they showed consistency in their promises. Each agent could have its own subjective view on the scale of payment and currency; after all, value is in the eye of the beholder. It is well worth reading Axelrod’s own account of these games, as there are several subtleties.4

The models showed that long-term value comes about when agents meet regularly. In a sense, they rehearse and reinforce their cooperation (as if rehearsing a piece on the piano) by reminding themselves of the value of it on a regular basis. This fits our everyday experience of getting the most out of a relationship when we practice, rehearse, revise, and repeat on a regular schedule. Scattered individuals who meet on an ad hoc basis will not form cooperative behaviour at all. Thus, the ongoing relationship may be essentially equated with the concept of lasting value. Put another way, it is not actions that represent lasting value, but rather the promise of repetition or persistently kept promises.
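The effect of repetition can be seen in a toy version of the iterated prisoner's dilemma, the game used in Axelrod's tournaments. The sketch below is a simplification for illustration only: the payoff values are the conventional ones and are an assumption here, and the two strategies shown are just two of many possible ones.

```python
# Payoffs as (first player, second player); "C" = cooperate, "D" = defect.
PAYOFF = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}


def tit_for_tat(opponent_history):
    """Cooperate first, then repeat whatever the other agent did last."""
    return opponent_history[-1] if opponent_history else "C"


def always_defect(opponent_history):
    return "D"


def play(strategy_a, strategy_b, rounds):
    """Total scores after the given number of meetings."""
    seen_by_a, seen_by_b = [], []  # each agent records only the other's moves
    score_a = score_b = 0
    for _ in range(rounds):
        move_a = strategy_a(seen_by_a)
        move_b = strategy_b(seen_by_b)
        pay_a, pay_b = PAYOFF[(move_a, move_b)]
        score_a += pay_a
        score_b += pay_b
        seen_by_a.append(move_b)
        seen_by_b.append(move_a)
    return score_a, score_b


print(play(always_defect, tit_for_tat, 1))
print(play(always_defect, tit_for_tat, 10))
print(play(tit_for_tat, tit_for_tat, 10))
```

In a single meeting the defector comes out ahead, but over ten meetings a pair of reciprocating agents each accumulate more than twice what the defector manages. Each agent decides using only its own record of the other's behaviour, with no God's-eye view.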

Interestingly, he also showed that stubborn strategies did not pay. Strategies in which agents behaved generously in an unconditional way, without putting preconditions ahead of their promises, led to the highest value outcomes for all parties as the cooperative relationship went on (Figure 5-9).

Figure 5-9. Unconditional promises win out in the long run.

The Need to Be Needed: Reinterpreting an Innate Incentive?

Semantics play a large role in cooperation. The role of semantics is often underestimated in decision models such as game theory, where it’s assumed that all the intents and purposes are agreed to in advance. So while deciding about fixed choices is straightforward, there are few simple rules about interpretation of intent. Let’s look at an interesting example of how agents can form their own interpretations of others’ promises, reading in their own meanings, if you like.

The need to feel needed is one of the motivating factors that makes humans engage in their jobs. Perhaps this is even an innate quality in humans. We don’t need warm, fuzzy feelings to see how this makes simple economic sense as a promise transaction. Pay attention to how we abstract away the humanity of a situation in order to turn a situation into something systemic. This is how we turn vagaries of human behaviour into engineering, and it is what Promise Theory does quite well by having just enough traces of the human analogues left to make a plausible formalization.

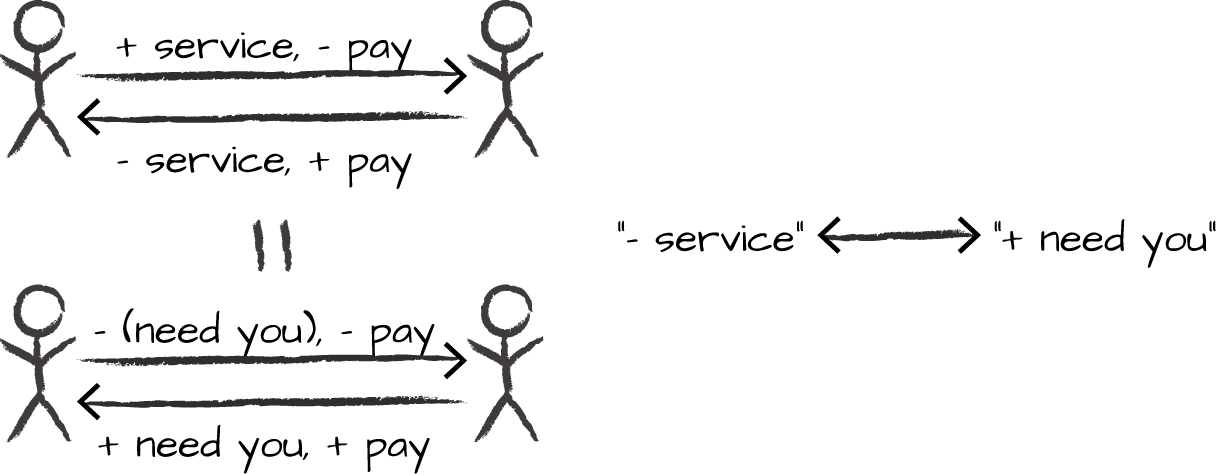

Let’s see what happens when we flip the signs of a promise to provide a service for payment (Figure 5-10). The counter-promise to use the service may be reinterpreted by saying that the service is needed or wanted by the other agent. Thus, if we flip the sign of the promise to give the service, this now translates into accepting the promise to be needed or wanted. In other words, providing a service plays the same role as needing to be needed, and it ends up next to the promise to receive actual payment.

Figure 5-10. We can understand the need to be needed from the duality of +/- promises. For every +/- promise, there is a dual -/+ interpretation too.

Conversely, we could have started with the idea that needing to be needed was a promise. Requirements form use-promises (-). If we then abstract self into a generic agency of “the service,” this becomes “need the service,” and “use service from.” Accepting the fact that your service is needed can straightforwardly, if mechanically, be interpreted as admitting that you will provide the service (without delving too deeply into the nuances of human wording). Flipping the polarity of complementary interpretations helps to unravel the mechanisms that lead to binding between agents.
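The sign flip described above can be written down mechanically. This is a minimal sketch under stated assumptions: the `Promise` record, the `DUAL_BODY` table, and the agent names are all hypothetical, chosen only to mirror the service example in the text.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Promise:
    promiser: str
    promisee: str
    body: str
    polarity: str  # "+" to give or provide, "-" to accept or use

# Assumed reinterpretation table: each body paired with its dual reading.
DUAL_BODY = {
    "service": "need for service",
    "need for service": "service",
}

def dual(promise):
    """Flip the polarity and swap the body for its complementary interpretation."""
    flipped = "-" if promise.polarity == "+" else "+"
    return Promise(promise.promiser, promise.promisee,
                   DUAL_BODY[promise.body], flipped)

give_service = Promise("provider", "client", "service", "+")
use_service = Promise("client", "provider", "service", "-")

if __name__ == "__main__":
    print(dual(give_service))  # provider accepts (-) being needed
    print(dual(use_service))   # client gives (+) its need for the service
```

Applying `dual` twice returns the original promise, which is the sense in which the two readings are complementary rather than different facts.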

Avoiding Conflicts of Interest

A conflict of interest is a situation where there are multiple promises and not all of them can be kept at the same time. How could we formulate promises so that conflicts of intent are minimized, and (should they occur) are obvious and fixable?

One way to avoid obvious difficulty is for each agent to divide up promises into types that do not interfere with one another. For example, I can promise to tie my shoelaces independently of promising to brush my teeth, but I can’t promise to ride a bike independently of promising to ride a horse, because riding is an exclusive activity, making the promises interdependent.5
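One way to make such interdependence visible is to record which exclusive activity, if any, each promise occupies, and to flag pairs that overlap. The sketch below assumes a hypothetical table, `EXCLUSIVE_NEEDS`, built from the examples in the text; it is an illustration of the idea, not a general conflict detector.

```python
from itertools import combinations

# Hypothetical table: promise body -> exclusive activities it occupies.
EXCLUSIVE_NEEDS = {
    "tie shoelaces": set(),
    "brush teeth": set(),
    "ride a bike": {"riding"},
    "ride a horse": {"riding"},
}

def conflicts(promises, needs=EXCLUSIVE_NEEDS):
    """Return the pairs of promises that claim the same exclusive activity."""
    return [
        (a, b)
        for a, b in combinations(promises, 2)
        if needs[a] & needs[b]
    ]

if __name__ == "__main__":
    print(conflicts(["tie shoelaces", "brush teeth", "ride a bike", "ride a horse"]))
```

Promises whose sets are disjoint can be made independently; any pair returned by `conflicts` has to be resolved by the single agent making them, which is what keeps the problem local and fixable.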

Figure 5-11. Conflicts arise immediately from impositions because the intent is not local and voluntary.

Impositions and obligations are a recipe for conflict (see Figure 5-11), because the source of intent is distributed over multiple agents. Promises can be made only about self, so they are always local and voluntary. Impositions can be refused, but this may also lead to confusion.

If obligations and requirements are made outside the location where they are to be implemented, the local implementors of that intent might not even know about them. This is a recipe for distributed conflict.

Emergent Phenomena as Collective Equilibria: Forming Superagents

When we think of emergent behaviour, we conjure pictures of ants’ nests or termite hills, flocks of birds, or shoals of fish (see Figure 5-12). However, most of the phenomena we rely on for our daily operations are emergent phenomena: the Internet, the economy, the weather, and so on. At some level, all behaviour of autonomous agents may be considered emergent. Unless explicit strong conditions are promised about every individual action, with a micromanaging level of detail, the behaviour cannot be deterministic.

As remarked previously, an emergent phenomenon is a mirage of semantics. An observer projects his own interpretation onto a phenomenon that appears to be organized, by assessing promises that are not really there. We imagine that the agencies we are observing must be making some kind of promise.

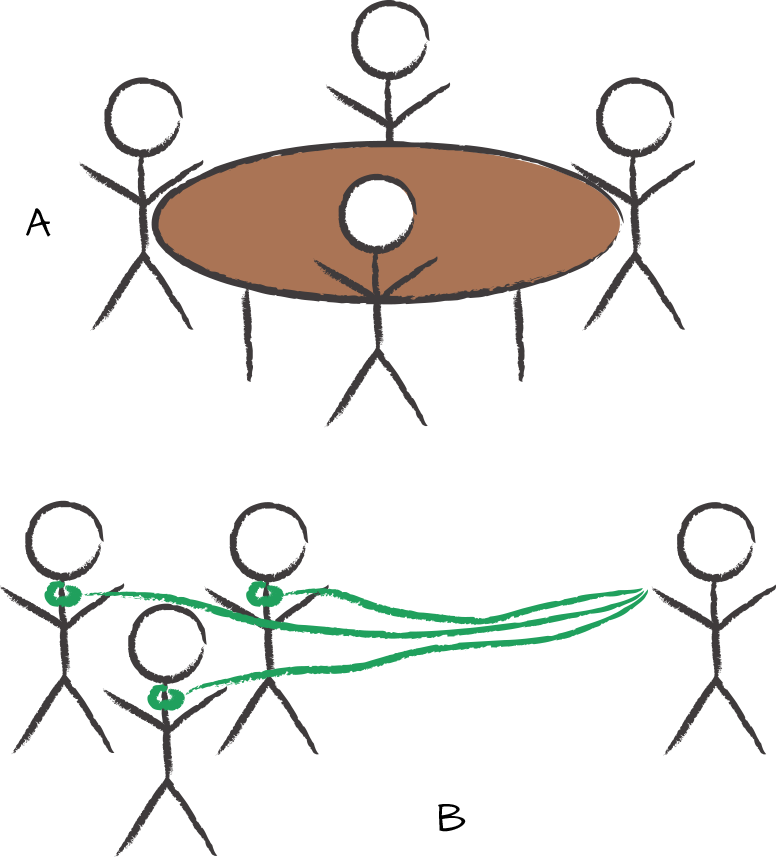

Figure 5-12. Cooperation by consensus or leadership.

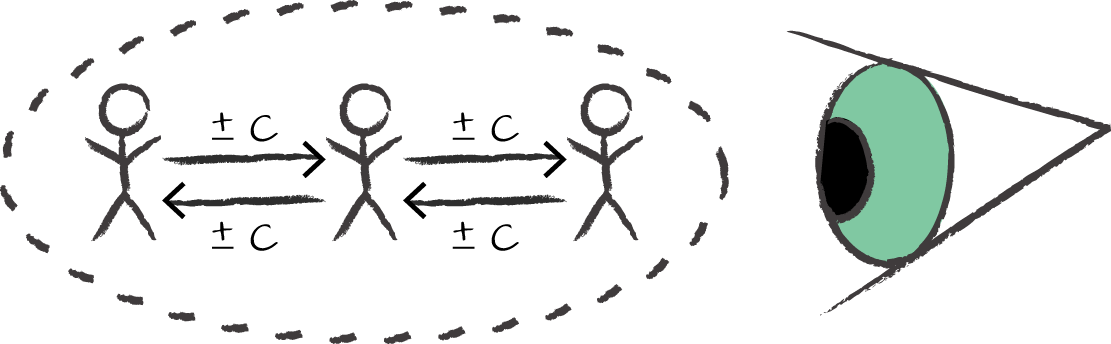

Emergent phenomena result from the stable outcomes of agents working at the point of balance or equilibrium (see Figure 5-13).

Figure 5-13. Two ways to equilibrate information/opinion: (a) swarm (everyone agrees with everyone individually as a society), (b) pilot leader (one leader makes an agreement with all subordinates, as a brain or glue for the collective).

Guiding the Outcome of Cooperation When It Is Emergent

Suppose we don’t know all the promises a system runs on, but assume that it is operating at some equilibrium, with an emergent behaviour that we can observe. Could we still influence the behaviour and obtain a sense of control?

The starting point for this must be that the actual agents can be influenced, not merely the collective “superagent” (which has no channel for communicating because it is only a ghost of the others). Are they individually receptive to change? If they react to one another, then they might react to external influence too, thus one might piggyback on this channel.

If there is a leader in the group, it has direct access to its subordinates, and is the natural place to effect change. If not, the process might take longer. There are two cases (see Figure 5-14).

Figure 5-14. (a) and (b) again. This time (a) means sitting around a table and talking in a group, while (b) means dragging a team along with a single leader.

-

In (a) there is no central decision point, but each agent has many links to equilibrate. The equilibration time might be longer with this system, but the result, once achieved, will be more robust. There is no single point that might fail.

-

In (b) equilibration is faster, as there is a single point of consistency, and also of failure. It might be natural to choose that point as the leader, though there is nothing in theory that makes this necessary. This is a kind of leadership.
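The trade-off between cases (a) and (b) can be made concrete by counting links and removing one agent. This is a rough sketch: it treats the number of links as a proxy for equilibration effort, which is an assumption, and the function names are hypothetical.

```python
def swarm_links(n):
    """Case (a): every agent has a link to every other agent."""
    return {frozenset((i, j)) for i in range(n) for j in range(i + 1, n)}

def leader_links(n, leader=0):
    """Case (b): every agent has a single link, to the leader."""
    return {frozenset((leader, i)) for i in range(n) if i != leader}

def still_connected(n, links, failed):
    """True if the surviving agents can all still reach one another."""
    alive = [i for i in range(n) if i != failed]
    if not alive:
        return True
    seen, frontier = {alive[0]}, [alive[0]]
    while frontier:
        node = frontier.pop()
        for link in links:
            # Ignore links that touch the failed agent.
            if node in link and failed not in link:
                (other,) = link - {node}
                if other not in seen:
                    seen.add(other)
                    frontier.append(other)
    return len(seen) == len(alive)

if __name__ == "__main__":
    n = 6
    print(len(swarm_links(n)), len(leader_links(n)))
    print(still_connected(n, swarm_links(n), failed=0))
    print(still_connected(n, leader_links(n), failed=0))
```

For six agents, the swarm has 15 links to equilibrate and the leader arrangement has 5. Losing any one agent leaves the swarm connected, whereas losing the leader leaves the remaining agents with no links at all.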

In a small team, you can have a strong leader and drag the others along by sheer effort. In a large team, the inertia of interacting with all of the subordinate agents will overwhelm the leader. If the leader agent loses its privileged position, then we revert to the default equilibration mechanism: autonomous, uncoordinated behaviour.

The structure of communications in these configurations suggests that change or adaptability, whether from within or without, favours small teams, or at least that the efficiency dwindles as teams get larger. Machines can be made to compensate for this by handling more communications, with greater brute force than humans can. Because of this, machines can handle larger groups without resorting to different tactics, but machines might not be able to keep the same promises as humans. This is one of the challenges of cybernetics and automation.

Although we might be able to influence the intention behind a cooperative group, we might not end up changing the behaviour if we ask it to do something that’s unstable. How stable is the result with respect to behaviour (dynamics), interpretation (semantics), and cost (economics)?

One of the important lessons of Promise Theory is that semantics and intent are always subordinate to the dynamical possibilities of a situation. If something cannot actually be realized, it doesn’t help to intend it furiously. Water will not flow uphill, no matter what the intent. With these caveats, one can still try to spread new actionable intentions to a group by starting somewhere and waiting for the changes to equilibrate through the cooperative channels that are already there. The more we understand the underlying promises, the easier it should be to crack the code.

Stability of Intent: Erratic Behaviour?

Cooperation can be either between different agents, or with oneself over time. If an agent seems uncoordinated with respect to itself, an observer might label it erratic, or even uncooperative.6 In a single agent, this could be dealt with as a problem in reliability. What about in a larger system?

Imagine a software company or service provider that changes its software promises over time. For example, a new version of a famous word processor changes the behaviour of a tool that you rely on. A website changes its layout, or some key functionality changes. These might not be actually broken promises, but are simply altered promises.

If the promises change too quickly, they are not useful tools for building expectation, and trust is stretched to a breaking point (see Figure 5-15). Such situations lessen the value of the promises. Users might begin to reevaluate whether they would trust an agent. In the example, software users want to trust the software or accept its promises, but a change throws their trust.

Figure 5-15. Converging to a target usually means behaving predictably! Lack of constant promises might lead to the perception of erratic behaviour.

Suppose an agent promises constant change, on the other hand. This is a possible way around the preceding issue. If promise users are promised constant change, then they can adjust their expectations accordingly. This is constancy at a meta-level. The point is that humans are creatures of habit or constant semantics. Changes in semantics lead to the reevaluation of trusted relationships, whether between humans directly, or by proxy through technology.

A way to avoid this is to maintain a principle of constancy of intent. This is actually the key to a philosophy that has grown up around so-called continuous delivery of services (see Chapter 8). One can promise change continuously, as long as the core semantics remain the same. New versions of reality for a product or service user must converge towards a stable set of intentions, and not be branching out into all kinds of possibilities.
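Constancy of intent can be checked mechanically if the core semantics are written down as a set of promises that every release must still make. The sketch below assumes hypothetical promise names and models "core semantics" as simple set inclusion, which is a deliberate simplification.

```python
# Hypothetical promise names, used only for illustration.
CORE_PROMISES = frozenset({"open documents", "save documents"})

def missing_core_promises(release, core=CORE_PROMISES):
    """Return the core promises that a release no longer makes."""
    return core - frozenset(release)

def keeps_constant_intent(releases, core=CORE_PROMISES):
    """True if every release in the sequence still makes all core promises."""
    return all(not missing_core_promises(r, core) for r in releases)

releases = [
    {"open documents", "save documents"},
    {"open documents", "save documents", "spell check"},
    {"open documents", "save documents", "spell check", "cloud sync"},
]

if __name__ == "__main__":
    print(keeps_constant_intent(releases))
    print(missing_core_promises({"open documents", "cloud sync"}))
```

Each release here adds promises without withdrawing a core one, so change is continuous while the intent stays put. A release that dropped "save documents" would be reported by `missing_core_promises`, signalling a change in semantics rather than an increment.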

This leads back to the matter of interpretation, however. Both human personalities and machine algorithms have different strategies for achieving constancy of intent. For example, searching for something in a store, or on the Internet, proposes a clear, intended target. The promise to search, however, can be approached in different ways. It might be implemented as a linear search (start at the beginning and work systematically through the list), or as a Monte Carlo approach, jumping to random locations and looking nearby. The latter approach sounds hopeless, but mathematically we know that this is by far the fastest approach to solving certain search problems.

Because perception often lies, agents have to be careful not to muddle desired outcomes with a preference for the modes of implementation.

When Being Not of One Mind Is an Advantage

Consensus and linear progressions are not always an advantage. There are methods in computation that rely on randomness, or unpredictable variation. These are called Monte Carlo methods, named after the Monte Carlo casino, because random choices play a role in finding shortcuts. They are especially valuable in locating or searching for things.

This is just like the scene in the movies, where the protagonist says: “Let’s split up. You search this way, I’ll search that!”

Randomness and variation, so-called entropy, lie at the heart of solving so-called NP-hard problems, many of which have to do with decision-making and optimizing behaviours. We should not be too afraid of letting go of determinism and a sense of control.7
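A small example shows when random probing beats a systematic scan. The sketch assumes a favourable case: the items we want are plentiful, but they all sit where a linear scan reaches them last. The function names and the `max_probes` cutoff are assumptions made for illustration, and the random search gives no guarantee of success, only good odds.

```python
import random

def linear_search(items, wanted):
    """Scan from the start; return (index, probes used), or (None, probes) if absent."""
    for index, item in enumerate(items):
        if wanted(item):
            return index, index + 1
    return None, len(items)

def random_search(items, wanted, rng, max_probes=None):
    """Probe random positions; return (index, probes used), or (None, probes) on giving up."""
    limit = max_probes if max_probes is not None else 10 * len(items)
    for probes in range(1, limit + 1):
        index = rng.randrange(len(items))
        if wanted(items[index]):
            return index, probes
    return None, limit

if __name__ == "__main__":
    # Half of the items match, but all of them are in the second half of the list.
    items = [0] * 500 + [1] * 500
    is_match = lambda item: item == 1
    print(linear_search(items, is_match))
    print(random_search(items, is_match, random.Random()))
```

The linear scan always needs 501 probes on this list. Each random probe succeeds with probability one half, so the random search typically needs only a handful, at the price of not promising when, or strictly whether, it will finish.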

Human Error or Misplaced Intent?

Agreement in a group is called consensus. There does not need to be consensus in order for cooperative behaviour to come about, but there does need to be a sufficient mutual understanding of the meaning of promises.

In some systems, a common view comes about slowly by painstaking equilibration of intent. In other cases, agents will elect to follow some guiding star that short-circuits the process. The analogues in politics include dictators and democracies. Dictatorship has some efficiency about it, but many feel it is dehumanizing. It works very well when the agents who follow the leader do not have a need for individual freedom (e.g., parts in a machine).

Individuality can be a burden if it stands in the way of cooperation. However, the way we scale large industrial efforts by brute human force has led up to the model of trying to build machines from human parts. If people can give up their individuality (but not their humanity), and act as one mind, rather than one body, engineering would be a simple optimization problem. Consider it a goal of Promise Theory to depersonalize matters so that this is not an emotional decision.

By putting humans into machine-like scenarios, we often assert that humans are to blame for errors and erratic behaviours, because we expect machine-like behaviours. From a promise viewpoint, we see that one cannot make promises of machine-like behaviour on behalf of humans. If a user of promises does not rate the performance of another agent highly, it is up to that user to find a more compatible agent. Thus, instead of saying that people are the problem, we can say that semantics are the problem.

Semantics come from people too, but from those who observe and judge the outcomes of promised situations. If we expect something that was not promised, the desire for blame is the emotional response, and the search for a replacement is the pragmatic response.

Organization: Centralization Versus Decentralization

The argument for centralizing or decentralizing control is one of those ongoing debates in every area of engineering and design. We gravitate towards centralized models of control because they are familiar and easy to understand.

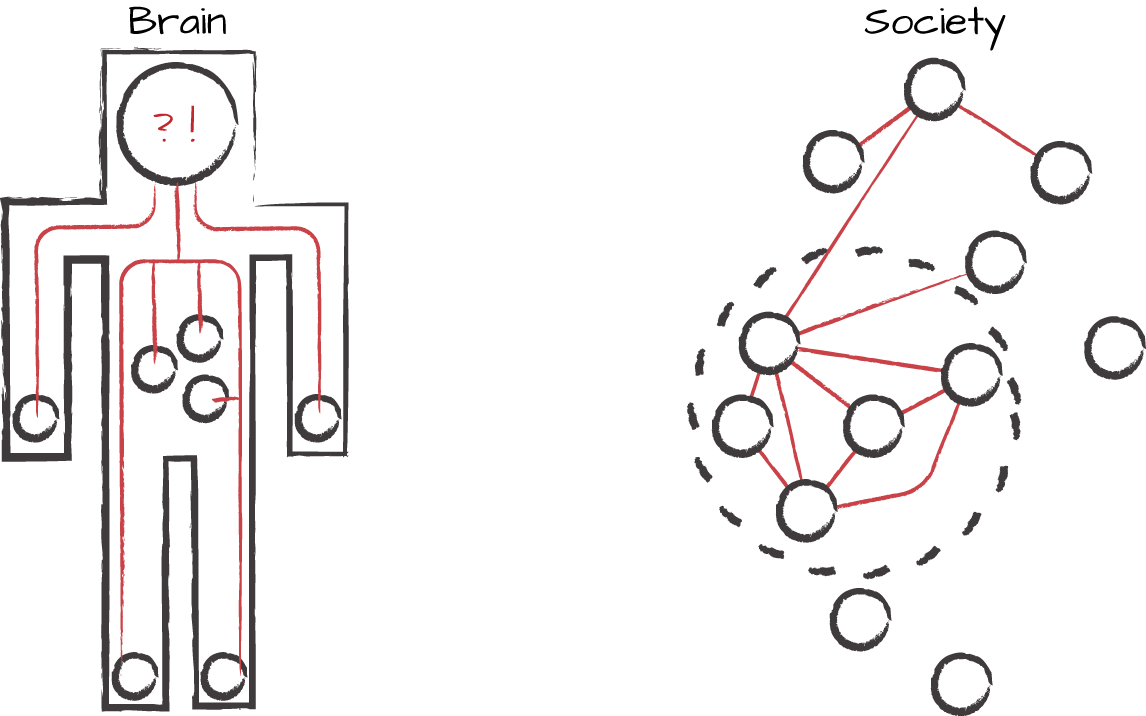

I like to call a centralized architecture a brain model (i.e., one in which there is a centralized controller that reaches out to a number of sensors and actuators through a network with the intent to control it). See Figure 5-16.

Figure 5-16. Central brains and distributed societies. A brainy dictator can be more agile than the slow consensus of a society, but is less robust.

Obviously, this is how brains are positioned (though not how they work internally); the brain is localized as a logically centralized controller, and the nervous system connects it to the rest of the body for sending and receiving pulses of communication. Not all of the body’s systems are managed in this way, of course, but the intentional aspects of our behaviour are.

A brain model is a signalling model. Signals are transmitted from a common command-and-control centre out to the provinces of an organism, and messages are returned to allow feedback. We are so familiar with this approach to controlling systems that we nearly always use it as the model of control. We see it in vertebrates; we use it to try to force-govern societies, based on police or military power; and we see it in companies. Most of management thinking revolves around a hierarchy of controllers. The advantage of this model is that we can all understand it. After all, we live it every day. But there is one problem: it doesn’t scale favourably to a large size.

The alternative to a brain model is what I’ll call a society model. I imagine a number of autonomous parts that are loosely coupled, without a single central controller. There can be cooperation between the parts (e.g., institutions or departments, communities or towns, depending on whether the clustering is logical or geographical). There are even places where certain information gets centralized (e.g., libraries, governing watchdogs, etc.), but the parts are capable of surviving independently and even reconfiguring without a central authority.

Societies can easily grow to a larger scale than brain models because work can be shared between several agents which interact weakly at the edges, trading or exchanging information. If one connection to a service fails, the service does not necessarily become cut off from the rest, and it has sufficient autonomy to reconfigure and adapt. There is no need for a path from every part of the system to a single God-like location, which then has to cope with the load of processing and decision-making by sheer brute force.

The autonomous agent concept in Promise Theory allows us to recognize the limitations of not having information in a single place. By bringing together information into one place, a centralized system, like a brain, can make decisions that require analysis more consistently since only a single point of calibration is needed. From a promise perspective, a brain is a single agent that defines a role. It must be in possession of all the knowledge, and it must trust that knowledge in order to use it decisively. If these things are promised, and all other agents subordinate to the brain, this can be an effective model of cooperation. Most hierarchical organizations follow this model.

A brain service, whether centralized or embedded in a society, promises to handle impositions thrust upon it by sensors, and to respond to actuators by returning its own impositions. It’s a pushy model. It also promises to process and make decisions; thus any brain will benefit from speed and processing power. Any agent can handle up to a certain maximum capacity of impositions per second before becoming oversubscribed. Thus every agent is a bottleneck to handling impositions. Horizontal expansion (like a society) by parallelization handles this in a scale-free manner. Vertical expansion (like a central brain) has to keep throwing brute force, capacity, and speed at the problem. Moore’s law notwithstanding, this probably has limits.
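The bottleneck argument reduces to simple arithmetic. The sketch below assumes that load divides evenly between agents and that coordination between them costs nothing, which real systems will not satisfy exactly; the function names and figures are hypothetical.

```python
import math

def is_oversubscribed(impositions_per_second, capacity_per_second):
    """True if an agent receives more impositions than it can handle."""
    return impositions_per_second > capacity_per_second

def society_size(total_load, capacity_per_agent):
    """Horizontal expansion: how many fixed-capacity agents are needed to share the load."""
    return math.ceil(total_load / capacity_per_agent)

if __name__ == "__main__":
    capacity = 500  # assumed impositions per second that one agent can handle
    for load in (400, 10_000, 1_000_000):
        print(load, is_oversubscribed(load, capacity), society_size(load, capacity))
```

With horizontal expansion, the capacity each agent must promise stays fixed while the number of agents grows with the load. With a single central brain, the number of agents stays at one, and the capacity it must promise has to grow with the load instead.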

Focused Interventions or Sweeping Policies?

The brain idea is a nice analogy, but it might be making you think too much about humans again, so let’s think about another example.

Fluorescent lightbulbs are an interesting case of a cooperative system of agents (i.e., gaseous atoms that emit light together by an avalanche effect when stimulated electrically). You’ve probably seen two kinds of these: long strip lights, and short, folded bulb replacements.

Quick-start tubes have starter circuits that dump a quick surge of electrical current through the gas in the tube to create a sudden avalanche effect that lights up the whole tube in one go. Such tubes use a lot of energy to get started, but they start quickly. Most of the energy is used in switching them on. Once they are running, they use very little energy. Their (conditional) promise is to give off a strong light at low cost if you leave them switched on for long periods of time.

Newer “energy-saving” lightbulbs make a similar promise, but unconditionally. They don’t use quick-start capacitors, so they take a long time to warm up. This uses a lot less energy during startup, but the atoms take longer to reach a consensus and join the cooperative party. Once all the atoms have been reached, the light is just as bright and behaves in the same way as the older tubes.

The starter circuit in the first kind of tube acts as a central brain, imposing a consensus onto the atoms by brute force. Once the light is working, it has no value anymore, and the atoms run away with the process quite autonomously. Sometimes a brief intervention can shift from one stable state to another, without carrying the burden constantly.

For humans, it’s a bit like that when we are rehearsing something. What we imagine as our brain really means our neocortex or logical brain. If we are learning a new piece on the piano, we need to use that intervention brain. But once we’ve practiced enough, the routine gets consigned to what we like to call muscle memory (the limbic system), which is much cheaper, and we no longer need to think about the process. We might try to cram knowledge into our brains to kickstart the process, but it only gets cheap to maintain with continuity.

When we can replace impositions with stable promises, interventions can be replaced by ongoing policies. The result is cheaper and longer lasting. It can be just as agile if the policy is one of continuous change.

Societies and Functional Roles

The alternative to the central brain approach is to allow agents to cooperate individually. In the extreme case, this adds a lot of peer-to-peer communication to the picture, but it still allows agents to develop specializations and work on behalf of one another cooperatively.

Specialization means regions of different agency, with benefits such as focused learning, and so on. Agents can save costs by focusing on a single discipline. In Promise Theory, specialists should be understood as separate agents, each of which promises to know how to do its job. Those regions could be decentralized, as in societal institutions, or they could be specialized regions of a central brain (as in Washington DC or the European parliament). The advantage of decentralization is that there are fewer bottlenecks that limit the growth of the system. The disadvantage is that agents are not subordinated uniquely to one agency, so control is harder to perceive, and slower to equilibrate. Thus, decentralized systems exhibit scalability without brute force, at the cost of being harder to comprehend and slower to change.

A centralized brain makes it easier (and faster) for these institutional regions to share data, assuming they promise to do so, but it doesn’t place any limits on possibility. Only observation and correlation (calibration) require aggregation of information in a single central location.

Relationships: What Dunbar Said

Every promise binding is the basis for a relationship. Robert Axelrod showed, using game theory, that when such relationships plan for long-term gains, they become stable and mutually beneficial to the parties involved. An interesting counterpoint to that observation comes from realizing that relationships cost effort to maintain.

Psychologist Robin Dunbar discovered an important limitation of relationships from an anthropological perspective while studying the brain sizes of humans and other primates. He discovered that the modern (neocortical) brain size in primates limits how many relationships we can keep going at the same time. Moreover, the closer or more intimately we revisit our relationships, the fewer we can maintain, because a close relationship costs more to maintain. This has to play a role in the economics of cooperation. In other words, relationships cost, and the level of attention limits how many we can maintain. This means that there is a limit to the number of promises we can keep. Each agent has finite resources.

We seem to have a certain number of slots we can use for different levels of repetitive cost. Human brains can manage around 5 intimate relationships, around 15 in a family-like group, about 30 to 40 in a tribal or departmental working relationship, and 100 to 150 general acquaintances. Whatever semantics we associate with a group, family, or team (i.e., whether we consider someone close a lover or a bitter enemy), it is the level of cognitive processing, or intimacy, that limits the number we can cope with. Once again, dynamics trump semantics.

We can now ask, what does this say about other kinds of relationships, with work processes, tools, or machinery? Cooperation is a promise we cannot take lightly.

Some Exercises

-

Think of daily activities where you believe you are in full control, like driving a car, making dinner, typing on your computer, giving orders at work, etc. Now try to think of the agents you rely on in these activities (car engine, tyres, cooker, coworkers, etc.) and rethink these cases as cooperative systems. What promises do the agencies you rely on make to you? What would happen if they didn’t keep their promises?

-

Compare so-called IT orchestration systems (scheduling and coordinating IT changes) that work by imposition (e.g., remote execution frameworks, network shells, push-based notification, etc.), with those that work by promising to coordinate (e.g., policy consistency frameworks with shared data). Compare the availability and robustness of the coordination by thinking about what happens if the promises they rely on are not kept.

-

Sketch out the promises and compare peer-to-peer systems (e.g., Skype, BitTorrent, OSPF, BGP, CFEngine, etc.), with follow-the-leader systems using directory services or control databases (e.g., etcd, Consul, ZooKeeper, Open vSwitch, CFEngine, etc.). What is the origin of control in these two approaches to collaboration?

-

In IT product delivery, the concept of DevOps refers to the promises made between software developers and operations or system administrators that enable smooth delivery of software from intended design to actual consumption by a user. Think of a software example, and document the agencies and their promises in such a delivery. You should include, at least, developers, operations engineers, and the end user.

1 One agent should not promise something the other wouldn’t; for example, it should not promise a “backdoor” to a service.

2 In a case like this, agents don’t usually broadcast their promises individually to potential users because that would be considered spam (in Internet parlance). Flood advertising is often unwelcome in our noisy society.

3 Technologies such as vector clocks and Paxos, for instance.

4 Robert Axelrod, The Complexity of Cooperation: Agent-Based Models of Competition and Cooperation, 1997.

5 In mathematics, this is like looking for a spanning set of orthogonal vectors in a space. A change in X does not lead to a change in Y, so there is no conflict by promising either independently.

6 Interestingly, in Myers-Briggs personality typing, erratic or intuitive thinking versus sequential thinking is one of the distinctions that does lead to the misinterpretation of “artistic types” whose thoughts flit around without a perceivable target, as being erratic.

7 I wrote about this in detail in my book, In Search of Certainty: The Science of Our Information Infrastructure.