Chapter 1

Introduction to Trade-Off Analysis

Gregory S. Parnell

Department of Industrial Engineering, University of Arkansas, Fayetteville, AR, USA

Matthew Cilli

U.S. Army Armament Research Development and Engineering Center (ARDEC), Picatinny, NJ, USA

Azad M. Madni

Department of Astronautical Engineering, Systems Architecting and Engineering and Astronautical Engineering, Viterbi School of Engineering, University of Southern California, Los Angeles, CA, USA

Garry Roedler

Corporate Engineering LM Fellow, Engineering Outreach Program Manager, Lockheed Martin Corporation, King of Prussia, PA, USA

The complexity of man-made systems has increased to an unprecedented level. This has led to new opportunities, but also to increased challenges for the organizations that create and utilize systems.

(ISO/IEC/IEEE 15288, 2015)

1.1 Introduction

This book is about trade-off analyses in the life cycle of a system. It is written from the perspective of engineers, systems engineers, and other decision-makers involved in the life cycle of a system. In this book, we present the best practices for performing systems engineering trade-off analyses in a step-by-step, structured manner. Our intent is to make it an easy-to-understand and useful reference for students, practitioners, and researchers.

Systems are developed to create value for stakeholders by providing desired capabilities. Stakeholders include investors, government agencies, customers/acquirers, end users/operators, system developers/integrators, trainers, and system maintainers, among others. Decisions are ubiquitous across the system life cycle. System decision-makers (DMs) are those individuals who make important decisions pertaining to the technical and management compromises that shape the concept definition, system definition, system realization, deployment and use, and product and service life management (including maintenance, enhancement, and disposal).

When there are multiple stakeholders, there are often competing objectives and requirements. To achieve a certain attainment level on one objective, a sacrifice or trade-off may be required in the attainment level of other objectives. Similarly, complex system designs may offer multiple alternatives to achieve the system's objectives, and this, too, requires analysis to achieve the best balance among the trade-offs. The process that leads to a reasoned compromise in these situations is commonly referred to as a “trade-off analysis” or a “trade study.”

This book project began with a request by the International Council on Systems Engineering (INCOSE) (INCOSE Home Page, 2015) Corporate Advisory Board (CAB) to the INCOSE Decision Analysis Working Group. The CAB identified the lack of effective trade-off analysis methods as a key concern and requested help in documenting best practices. This book project was also motivated by the need to formalize systems engineering trade-off analysis to help make it an integral part of the systems engineering life cycle. It provides essential elaboration of the decision management process in ISO/IEC/IEEE 15288, Systems and Software Engineering – System Life Cycle Processes, the INCOSE Systems Engineering Handbook Version 4, and the Systems Engineering Body of Knowledge (SEBoK, 2015).

Decision-makers (DMs), especially program managers and systems engineers, stand to benefit from a collaborative decision management process that engages all stakeholders (SHs) who have a say in system design decisions. In particular, systems engineers can exploit trade-off studies to help define the problem/opportunity, characterize the solution space, identify sources of value, identify and evaluate alternatives, identify risks, acquire insights, and provide recommendations to system SHs and other DMs.

This book focuses on engineering trade-off analysis techniques for both systems and systems of systems (Madni and Sievers, 2014a,b; Ordoukhanian and Madni, 2015). We recommend that trade-off studies be consistent with SE standards (ISO/IEC/IEEE 15288:2015), based on a formal lexicon, have a sound mathematical foundation, and provide credible and timely data to DMs and other SHs. We provide such a lexicon and a formal foundation (Chapter 2) based on decision analysis for effective and efficient trade-off studies. Our approach supplements decision analysis, a central part of decision-based design (Hazelrigg, 1998), with Value-Focused Thinking (Keeney, 1992) within a model-based engineering framework (Madni & Sievers, 2015).

1.2 Trade-off Analyses Throughout the Life Cycle

New system development entails a number of interrelated decisions. Table 1.1 provides a partial list of decision opportunities to improve system value that are commonly encountered throughout a system's life cycle. Many of these decisions stand to benefit from a holistic perspective that combines the systems engineering discipline with a composite decision model that aggregates the data produced by engineering, performance, and cost models and translates them into terms that are relevant and meaningful to the various stakeholders, especially DMs. This holistic perspective is especially valuable in gate (go/no-go funding) decisions to ensure that affordable alternatives are available for the next life cycle stage.

Table 1.1 Partial List of Decision Opportunities throughout the Life Cycle

| Life Cycle Stage | Decision Opportunity |

| Exploratory research | Assess technology opportunity/initial business case |

| Concept | Inform, generate, and refine a capability; Create solution class alternatives and select preferred alternative |

| Development | Select/define system elements; Select/design verification and validation methods |

| Production | Craft production plans |

| Operation, support | Generate maintenance approach |

| Retirement | Retirement plan |

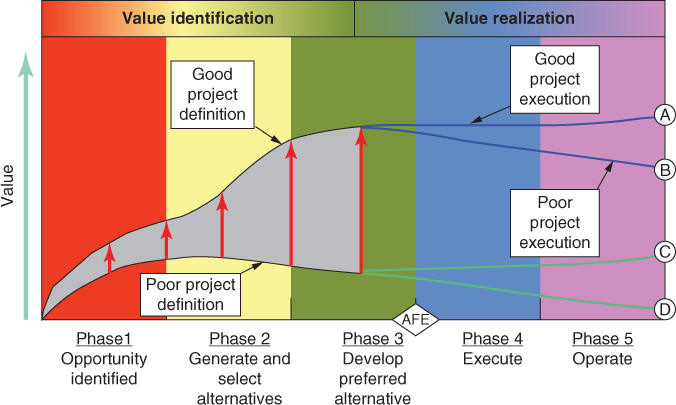

1.3 Trade-off Analysis to Identify System Value

Systems provide value through the capabilities they provide or the products and services they enable (Madni, 2012). Decision analysis is an operations research technique that provides models to define value and a sound data-driven, objective, defensible, mathematical foundation for trade-off analyses. The graphic shown in Figure 1.1 helps visualize the importance of opportunity definition (Chapter 6) to value creation. For example, Chevron uses the “Eagle's Beak”, as shown in this figure, to convey the importance of project definition and project execution. The five phases shown in Figure 1.1 constitute the project life cycle used by Chevron (Lavingia, 2014). The process leads to value identification and value realization. At Chevron, decision analysis plays an important role in the three phases of value identification: identify opportunity; generate and select alternatives; and develop the preferred opportunity. The Chevron process employs stages and gates similar to those found in most system life cycles. Each phase consists of activities that produce information; clearly defined deliverables; and an explicit decision to proceed, exit, or recycle. Chevron employs project management in all five phases of the Chevron Project Development and Execution Process (Decision-Making in an Uncertain World: A Chevron Case Study, 2014). Similarly, for the system life cycle, value identification occurs during the concept definition and system definition phases, and decision analysis plays the same important role.

Figure 1.1 Eagle's beak chart

Figure 1.1 highlights five important points. First, the problem or opportunity definition (see Chapter 6) is an important first step in value identification. Second, the generation of good alternatives (see Chapter 8) is critical to identifying higher value. Third, the development, evaluation, and selection of preferred alternatives can significantly increase value. Fourth, good project execution is required to realize potential value. Fifth, project execution is performed in the face of uncertainties (see Chapter 3). In this book, we focus on the value of using trade-off studies to help in the identification of both value and risk, as the timely identification of risk can help implementers mitigate potential barriers to value realization.

1.4 Trade-off Analysis to Identify System Uncertainties and Risks

System risks can affect performance, schedule, and cost. Building on several frameworks, Table 1.2 provides a list of the sources of systems risk (Parnell, 2009). The first column in Table 1.2 lists the potential source of risk. The second column lists the major questions defining the risk. The third column lists some of the major potential uncertainties for this risk source. The major questions and the uncertainties are meant to be illustrative and not all inclusive. Many of these risks create uncertainties, which should be considered in trade-off analyses. Chapter 3 provides techniques for using probability to model these uncertainties in trade-off analyses. Later chapters explicitly consider these uncertainties in illustrative trade-off analyses.

Table 1.2 Sources of Systems Risk

| Sources of Risk | Major Questions | Potential Uncertainties |

| Business | Will political, economic, labor, social, technological, environmental, legal, or other factors adversely affect the business environment? | Changes in political viewpoint (e.g., elections); Economic disruptions (e.g., recession); Global disruptions (e.g., supply chain); Changes to law; Disruptive technologies; Adverse publicity |

| Market | Will there be a market if the product or service works? | Consumer demand; Threats from competitors (quality and price) and adversaries (e.g., hackers and terrorists); Continuing stakeholder support |

| Performance (technical) | Will the product or service meet the required/desired performance? | Defining future requirements in dynamic environments; Understanding technical baseline; Technology maturity to meet performance; Adequate modeling, simulation, test, and evaluation capabilities to predict and evaluate performance; Impact to performance from external factors (e.g., interoperating systems); Availability of enabling systems needed to support use |

| Schedule | Can the system that provides the product or service be delivered on time? | Concurrency in development; Impact of uncertain events on schedule; Time and budget to resolve technical and cost risks |

| Development and production cost | Can the system be delivered within the budget? Will the cost be affordable? | Changes in concept definition (mission or needs); Technology maturity; Stability of the system definition; Hardware and software development processes; Industrial/supply chain capabilities; Production/facilities capabilities; Manufacturing processes |

| Management | Does the organization have the people, processes, and culture to manage a major system? | Organization culture; SE and management experience and expertise; Mature baselining (technical, cost, schedule) processes; Reliable cost-estimating processes |

| Operations and support cost | Can the owner afford to operate and support the system? | Increasing operations and support (e.g., resource or environmental) costs; Trades of performance versus ease/cost of operations and support; Adaptability of the design; Changes in maintenance or logistics strategy/needs |

| Sustainability | Will the system provide sustainable future value? | Availability of future resources and impact on the natural environment |

1.5 Trade-off Analyses can Integrate Value and Risk Analysis

Program managers for the development of a new system must consider performance, cost, and schedule, as they are all interrelated. We know that performance problems can cause cost increases and schedule delays. Similarly, schedule changes can increase costs. Finally, cost estimate increases can result in reduced performance targets or schedule delays to make the system more affordable. Trade-off analysis, cost analysis, and risk analysis are frequently separate analyses performed by different analysts. Cost analysts typically perform a cost-risk analysis using Monte Carlo simulation. Many trade-off studies ignore uncertainty and risk.

A major theme of this book is that trade-off analyses should be used to identify both system value and system risks and that the analysis needs to be performed in a more integrated manner. In Chapter 9, we discuss and provide examples of how system value, system costs, and system risks can be integrated by identifying the system features that impact value, cost, and risk.

1.6 Trade-off Analysis in the Systems Engineering Decision Management Process

Successful systems engineering requires sound decision making. Many systems engineering decisions are difficult because they include multiple competing objectives, numerous stakeholders, substantial uncertainty, significant consequences, and high accountability. In these cases, sound decision making requires a formal decision management process. The purpose of the decision management process, as defined by ISO/IEC/IEEE 15288:2015, is “…to provide a structured, analytical framework for objectively identifying, characterizing and evaluating a set of alternatives for a decision at any point in the life cycle and select the most beneficial course of action.” The process presented in this book aligns with the structure and principles of the decision management process of ISO/IEC/IEEE 15288, the INCOSE Systems Engineering Handbook v4.0 (INCOSE, 2015), the Systems Engineering Body of Knowledge (SEBoK), and an INCOSE proceedings paper that elaborated this process (Cilli & Parnell, 2014). This process was designed to use best practices and to avoid the trade-off analysis mistakes discussed in the next section.

The INCOSE decision management process, introduced in Figure 1.2, is presented in more detail in Chapter 5. The purpose of the process is to “provide a structured, analytical framework for objectively identifying, characterizing, and evaluating a set of alternatives for a decision at any point in the life cycle and select the most beneficial course of action.” The white text within the outer green ring identifies elements of a systems engineering process while the 10 blue arrows represent the 10 steps of the decision management process. Interactions between the systems engineering process and the decision management process are represented by the small, dotted green (outer ring to inner ring) or blue arrows (inner ring to outer ring). (The reader is referred to the online version of this book for color indication.)

Figure 1.2 INCOSE decision management process

The steps in the decision management process are briefly described in Table 1.3, with references to the primary chapters that provide additional details about each step. Chapter 5 describes and illustrates the INCOSE decision management process.

Table 1.3 Decision Management Process

| Process Step | Description | Primary Chapters |

| Frame decision | Describe the decision problem or opportunity that is the focus of the trade-off analysis in a particular system life cycle stage | Chapter 6 |

| Develop objectives and measures | Use mission and stakeholder analysis and the system artifacts in the life cycle stage (e.g., functions, requirements) to define the objectives that alternatives need to satisfy and the value measures for each objective | Chapter 7 |

| Generate creative alternatives | Use a divergent–convergent process to develop creative, feasible alternatives | Chapter 8 |

| Assess alternatives via deterministic analysis | Use a value model to perform deterministic analysis for trade-off analyses | Chapters 9–14 |

| Synthesize results | Provide an assessment of the value of each alternative and the cost versus value to identify the dominated alternatives | Chapters 9–14 |

| Identify uncertainty and conduct probabilistic analysis | Identify the major scenarios and system features that are uncertain and conduct probabilistic analysis | Chapters 9, 12–14 |

| Assess impact of uncertainty | Assess the impact of the uncertainties on value and cost | Chapters 9, 12–14 |

| Improve alternatives | Improve the alternatives by increasing their system value and/or reducing their associated system risk | Chapters 9–14 |

| Communicate trade-offs | Communicate the trade-off analysis results to decision-makers and other stakeholders | Chapters 9–14 |

| Present recommendations and implementation plan | Provide decision recommendations and an implementation plan to describe the next steps to implement the decision | Chapter 5 |
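The "Synthesize results" step in Table 1.3 compares cost with value to identify the dominated alternatives. The following is a minimal Python sketch of that screening, assuming each alternative has already been reduced to a single cost and a single value score. The `Alternative` type, the function names, and all numbers are hypothetical and are used only for illustration.

```python
from typing import NamedTuple


class Alternative(NamedTuple):
    name: str
    cost: float   # lower is better
    value: float  # higher is better


def dominates(a: Alternative, b: Alternative) -> bool:
    """True if a is no worse than b on cost and value and strictly better on one."""
    no_worse = a.cost <= b.cost and a.value >= b.value
    strictly_better = a.cost < b.cost or a.value > b.value
    return no_worse and strictly_better


def non_dominated(alternatives: list[Alternative]) -> list[Alternative]:
    """Return the alternatives that no other alternative dominates."""
    return [
        b for b in alternatives
        if not any(dominates(a, b) for a in alternatives)
    ]


# Hypothetical cost ($M) and value (0-100) pairs, for illustration only.
candidates = [
    Alternative("A", cost=10.0, value=40.0),
    Alternative("B", cost=12.0, value=35.0),  # costs more than A and delivers less
    Alternative("C", cost=20.0, value=70.0),
    Alternative("D", cost=25.0, value=70.0),  # same value as C at a higher cost
]
efficient = non_dominated(candidates)
print([alt.name for alt in efficient])
```

In this made-up data set, B and D are screened out, which leaves the decision-makers with the genuine trade-off between A (cheaper) and C (more valuable).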

1.7 Trade-off Analysis Mistakes of Omission and Commission

Using the INCOSE decision management process, we identify and discuss the most common trade-off study mistakes of omission and commission (Parnell et al., 2014). Mistakes of omission are errors made by not doing the right things, while mistakes of commission are errors made by doing the right things the wrong way. For each step in the decision process, Table 1.4 provides a list of trade-off mistakes, the type of mistake (omission or commission), and the potential impacts.

Table 1.4 Trade-Off Mistakes

| Step | Mistakes | Omission/Commission | Impacts |

| Overall process | Not having a decision management process | Omission | No trade-off studies or variable trade-off study quality of those conducted; Poor decisions; Potential selection of a poor design; Increased cost and schedule; Inadequate performance; Loss of SE credibility |

| Frame decision | Not obtaining access to key DM and SH; Decision frame not defined | Omission; Omission | No trade-off studies or trade-off studies on the wrong issues; Incorrect selection criteria; Loss of trade-off study and SE credibility |

| Develop objectives and measures | Objectives and/or measures not credible | Commission | Loss of trade-off study and SE credibility; Potential selection of a poor design |

| Generate creative alternatives | Decision space not defined; Doing an advocacy study | Omission; Commission | Potential selection of poor design; Potential increased cost and schedule; Loss of trade-off study and SE credibility |

| Assess alternatives via deterministic analysis | Using non-normalized value functions; Not using swing weights; No sensitivity analysis | Commission; Commission; Omission | Potential selection of poor designs; Loss of trade-off study and SE credibility |

| Synthesize results | Lack of a sound mathematical foundation | Omission | Potential selection of poor designs; Loss of trade-off study and SE credibility |

| Identify uncertainty and conduct probabilistic analysis | Not identifying uncertainties; Improper assessment of uncertainty | Omission; Commission | Loss of trade-off study and SE credibility; Potential selection of poor designs |

| Assess impact of uncertainty | Not integrating with system/program risk assessments | Omission | Potential selection of poor designs; Loss of SE credibility |

| Improve alternatives | Not improving alternatives | Omission | Loss of trade-off study and SE credibility; Potential selection of poor designs |

| Communicate trade-offs | Results not timely or understood | Commission | Recommendations not implemented; Loss of SE credibility |

| Present recommendations and implementation plan | Recommendations not implemented | Commission | Loss of trade-off study and SE credibility |

| Overall process | Not using trade-off study models on subsequent trade-off studies | Omission | Loss of trade-off study and SE credibility |

1.7.1 Mistakes of Omission

There are 10 common mistakes of omission.

1.7.1.1 Not Having a Decision Management Process

One of the most fundamental trade-off analysis mistakes is not having a decision management process that provides a foundation for all studies. The decision management process should have the acceptance and participation of the decision-makers and other stakeholders. To achieve stakeholder acceptance, the process should be tailorable to the needs of each specific trade-off analysis. Having a sound decision management process can save time while allowing for organizational learning and development of best practices. The INCOSE decision management process, shown in Figure 1.2, is an example of this kind of process. Without such a process, engineers in an organization are essentially free to use their own, invariably unsound process, and unsound processes can have a long lifetime! Since systems engineers are the ones who frequently perform trade-off analysis for critical system decisions, a natural home for the decision management process is the systems engineering organization.

1.7.1.2 Not Obtaining Access to Key DM, SH, and Subject Matter Experts (SMEs)

Framing any system decision can be a challenge, especially without the right stakeholders involved. Therefore, it is critically important to have access to key decision makers, stakeholders, and SMEs to ensure that the opportunity is adequately defined and the important objectives have been identified. Challenges include gaining access to leaders and senior decision makers despite their busy schedules, including stakeholders who are critical to the system or its impact on them, and assuring access to SMEs in all steps of the trade-off study. To achieve this end, experiential opportunities that allow all stakeholders to readily understand the context and situation without having to understand SE notations are imperative (Madni, 2016).

1.7.1.3 Decision Frame Not Defined

The first step in the decision management process is to identify and describe the decision opportunity in the context of the problem space. In decision analysis, we call this framing the decision. Experience has taught us that the initial problem is never the final problem (Madni, 2013; Madni et al., 1985). The frame describes how we look at the problem. A good decision frame begins with thorough research and mission/stakeholder analysis (Parnell et al., 2011). A decision hierarchy (Parnell et al., 2013), which lists the past decisions, the current decisions, and the subsequent decisions, can also be useful. A short paragraph, written in clear terms that define the problem, can be quite helpful to decision-makers, other stakeholders, and study participants.

1.7.1.4 Lack of a Sound Mathematical Foundation

To be credible and have defensible results, a trade-off study should be based on a sound mathematical foundation comprising both deterministic and probabilistic analyses. Several operations research and engineering analysis techniques (e.g., optimization, simulation, decision analysis) are potentially appropriate for trade-off studies. If all the objectives can be converted into dollars, then a net present value model would serve as a sound foundation. If not, then the mathematics of multiple objective decision analysis (MODA) offers a sound foundation for trade-off studies. Chapter 2 discusses this further.
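For the case in which every objective can be expressed in dollars, the net present value calculation can be sketched in a few lines of Python. This is a minimal illustration that assumes a constant annual discount rate and end-of-year cash flows. The function name, the rate, and the cash flows are hypothetical.

```python
def net_present_value(rate: float, cash_flows: list[float]) -> float:
    """Discount cash_flows[t] for t = 0, 1, 2, ... at a constant annual rate."""
    return sum(cf / (1.0 + rate) ** t for t, cf in enumerate(cash_flows))


# Hypothetical alternative: invest 100 now, receive 60 at the end of years 1 and 2.
npv = net_present_value(0.10, [-100.0, 60.0, 60.0])
print(f"NPV = {npv:.2f}")
```

Under these assumptions, alternatives can be ranked directly by NPV. When some objectives cannot credibly be converted into dollars, this single-number comparison is no longer available, and the MODA approach is the natural alternative.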

1.7.1.5 Undefined Decision Space

Some trade-off studies list alternatives that are not explicitly connected to the decision space. In many studies, alternatives are listed as bullets on a PowerPoint chart. In these cases, there is no explicit understanding of the decision space. The best techniques to help develop good alternatives are those that explicitly define the decision space (see Chapter 8). One best practice technique is called Zwicky's Morphological Box or Alternative Generation Table (Parnell et al., 2011). In decision analysis, the technique is called the Strategy Table (Parnell et al., 2013), and it seeks to design alternatives that span the decision space. When the decision space is explicitly defined, it becomes possible to explore the decision space, identify more decision options, and come up with a better set of alternatives (Madni, 2012; Madni et al., 1985). The impact of not defining the decision space is the loss of the opportunity to create better alternatives to achieve the desired system value and/or reduce risk.
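To make the idea of an explicitly defined decision space concrete, the sketch below lists a few design decisions with their options, in the spirit of an alternative generation table, and enumerates the combinations. The decisions, options, and feasibility rule are invented for illustration and do not come from the cited references.

```python
from itertools import product

# Hypothetical design decisions and options for a small unmanned aircraft.
decision_space = {
    "propulsion": ["electric", "piston"],
    "sensor": ["EO", "EO/IR", "radar"],
    "launch": ["hand", "catapult"],
}


def is_feasible(alt: dict[str, str]) -> bool:
    """Illustrative constraint: assume a radar payload is too heavy to hand launch."""
    return not (alt["sensor"] == "radar" and alt["launch"] == "hand")


names = list(decision_space)
all_combinations = [
    dict(zip(names, combo)) for combo in product(*decision_space.values())
]
feasible = [alt for alt in all_combinations if is_feasible(alt)]

print(len(all_combinations), "combinations,", len(feasible), "feasible")
```

Enumeration only shows how large the space is. In practice, a study team would select a small number of coherent alternatives that span the space rather than evaluate every combination.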

1.7.1.6 Absence of Sensitivity Analysis

Any deterministic trade-off study has to make multiple assumptions about parameters in the model(s). The parameters typically include shapes of the value curves, swing weights, scores on the performance measures, and other variables that are used to calculate the scores. There may be some uncertainty about what numerical value each parameter should have. The best practice is to perform sensitivity analysis to determine if the best alternative changes when the parameter settings are varied across a reasonable range. Based on the sensitivity analysis, additional effort should be devoted to understanding and modeling the most sensitive variables (Madni, 2015).
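A one-way sensitivity analysis on a swing weight can be sketched as follows. The sketch assumes an additive value model, normalized values on a 0-100 scale, and proportional rescaling of the remaining weights so that they still sum to 1. The measures, weights, and scores are hypothetical.

```python
def total_value(weights: dict[str, float], values: dict[str, float]) -> float:
    """Additive value: sum over measures of weight times single-measure value."""
    return sum(weights[m] * values[m] for m in weights)


def best_alternative(weights: dict[str, float],
                     alternatives: dict[str, dict[str, float]]) -> str:
    """Name of the alternative with the highest total value."""
    return max(alternatives, key=lambda name: total_value(weights, alternatives[name]))


def vary_weight(base_weights: dict[str, float], measure: str,
                new_weight: float) -> dict[str, float]:
    """Set one weight and rescale the others proportionally so all weights sum to 1."""
    others = {m: w for m, w in base_weights.items() if m != measure}
    scale = (1.0 - new_weight) / sum(others.values())
    adjusted = {m: w * scale for m, w in others.items()}
    adjusted[measure] = new_weight
    return adjusted


# Hypothetical normalized values (0-100) and baseline swing weights.
alternatives = {
    "Alt 1": {"range": 90.0, "payload": 30.0, "reliability": 60.0},
    "Alt 2": {"range": 40.0, "payload": 80.0, "reliability": 70.0},
}
base_weights = {"range": 0.5, "payload": 0.3, "reliability": 0.2}

for w in [0.1, 0.3, 0.5, 0.7, 0.9]:
    weights = vary_weight(base_weights, "range", w)
    print(f"range weight {w:.1f}: best = {best_alternative(weights, alternatives)}")
```

With these made-up numbers, the preferred alternative switches when the weight on range falls from 0.5 to 0.3. A switch inside the plausible range of a parameter is the signal that the parameter deserves additional assessment effort.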

1.7.1.7 Not Identifying Uncertainty and Performing Probabilistic Analysis

Deterministic trade-off studies ignore uncertainties. Since uncertainty and risk are inherent in the life cycle of new systems, this omission is problematic. When decision analysis is used, it is easy to identify key uncertainties in deterministic models using deterministic sensitivity analysis, assess the uncertainties, and perform probabilistic analysis using Monte Carlo simulation, decision trees, influence diagrams, or probability management decisions (Parnell et al., 2011; Parnell et al., 2013). The impact of not modeling uncertainty is that we forgo the opportunity to understand the sources of risk early in the system life cycle when it is invariably easier to avoid, mitigate, or manage risks.
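As a minimal sketch of the Monte Carlo option, the code below samples two uncertain value measures and combines them with an additive model. The triangular distributions, their parameters, and the weights are assumptions chosen for illustration. A real study would elicit them from subject matter experts.

```python
import random
import statistics


def simulate_value(rng: random.Random) -> float:
    """One trial: sample uncertain measure values and combine them additively."""
    # Hypothetical triangular distributions (low, high, mode) on a 0-100 value scale.
    performance = rng.triangular(40.0, 90.0, 70.0)
    reliability = rng.triangular(30.0, 80.0, 60.0)
    return 0.6 * performance + 0.4 * reliability  # assumed swing weights


rng = random.Random(42)  # fixed seed so that the run is repeatable
trials = sorted(simulate_value(rng) for _ in range(10_000))

mean_value = statistics.fmean(trials)
p05 = trials[int(0.05 * len(trials))]  # approximate 5th percentile
p95 = trials[int(0.95 * len(trials))]  # approximate 95th percentile
print(f"mean = {mean_value:.1f}, 5th percentile = {p05:.1f}, 95th percentile = {p95:.1f}")
```

Reporting the spread between the low and high percentiles, rather than a single expected value, is what allows decision-makers to see how much of an alternative's value is at risk.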

1.7.1.8 Not Improving Alternatives

Many trade-off studies assess only the proposed alternatives and never consider improving them. When every alternative is bad, even a “correctly performed” trade-off study can do no better than identify a bad alternative! Keeney calls the focus on existing options Alternative-Focused Thinking and advocates using Value-Focused Thinking to define our values, create decision opportunities, use our values to create better alternatives, and improve the proposed alternatives (Keeney, 1992). The decision analysis model provides useful data for Value-Focused Thinking, since it defines the ideal alternative and the gaps between the best alternative and the ideal alternative.

1.7.1.9 Failure to Integrate Trade-Off Study Uncertainty Analysis with System/Program Risk Assessments

Uncertainty analysis performed in trade-off studies should be integrated with the system/program risk assessment process. Unfortunately, trade-off studies often do a good job of analyzing uncertainty, but the results are not integrated into the system risk management process. On many programs, risk analysis is performed using a simple risk matrix with likelihood on the rows (columns) and consequences on the columns (rows). In this case, the risks being analyzed may or may not be linked to trade-off studies. An alternative approach is to use the trade-off analysis value and cost models to perform risk assessment. This approach may result in better assessment of the likelihood and consequences (the loss in potential value) of the risk. In addition, the results of the risk analysis can be used to identify the need for additional trade-off studies to mitigate or manage risk.
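One way to picture the alternative approach is to express each risk's consequence as the loss in value computed by the trade-off value model, rather than as a qualitative rating. The sketch below assumes that the value model has already produced a baseline value and a value for the case in which each risk event occurs. The `Risk` class, the risk names, and all numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: float       # assessed probability that the risk event occurs
    value_if_occurs: float  # alternative's total value (0-100) if the event occurs


def expected_value_loss(baseline_value: float, risk: Risk) -> float:
    """Likelihood times consequence, with consequence measured as lost value."""
    return risk.likelihood * (baseline_value - risk.value_if_occurs)


baseline = 72.0  # hypothetical total value of the alternative if no risk occurs
risks = [
    Risk("Immature sensor technology", likelihood=0.3, value_if_occurs=50.0),
    Risk("Supplier delay", likelihood=0.6, value_if_occurs=66.0),
    Risk("Interface change", likelihood=0.1, value_if_occurs=40.0),
]

ranked = sorted(risks, key=lambda r: expected_value_loss(baseline, r), reverse=True)
for r in ranked:
    print(f"{r.name}: expected value loss = {expected_value_loss(baseline, r):.1f}")
```

Because the consequences are on the same value scale as the trade-off study, the highest-ranked risks point directly to the measures where additional trade-off studies or mitigation might recover the most value.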

1.7.1.10 Failure to Use Trade-Off Models on Subsequent Studies

On some programs, each trade-off study is unique and there is no traceability between the results in one life cycle stage and the subsequent stages. This means the systems engineering organization might have been using very different value trade-offs for the same system without knowing it. A great deal of effort can go into developing trade-off study value models in early life cycle stages. The best practice is to use information from previous trade-off study value models (if available) and improve and tailor the model for subsequent studies. Using improved models can make the analysis results more accurate as well as more credible to decision-makers, stakeholders, and SMEs.

1.7.2 Mistakes of Commission

In addition to the 10 mistakes of omission, there are 6 common mistakes of commission.

1.7.2.1 Performing an Advocacy Study

Trade-off studies work best when a creative set of alternatives that span the decision space are developed (Madni, 2013). It is worth noting that the final decision will be only as good as the alternatives that are considered. Some project managers and systems engineers inappropriately convert a trade-off study to a biased advocacy study (Parnell et al., 2013). They advocate the alternative they recommend and use the study to highlight the weaknesses of other alternatives. Advocacy studies put a significant burden on the decision-makers and stakeholders to identify and ask the hard questions to make sure that the other potential alternatives do not provide higher value/lower risk than the advocated alternative. Decision-makers and stakeholders should insist on a clear definition of the opportunity and on a set of creative, feasible alternatives that cover the full range of possibilities to create value, including verified and validated data and selection criteria that are free of bias.

1.7.2.2 Objectives and/or Measures Not Credible

Trade-off studies require the development of a complete set of system objectives and measures. To meet the mathematical requirements of MODA, a nonoverlapping set of direct objectives is needed. In systems engineering, a great deal of effort is spent on identifying and analyzing system functions. The list of system functions can provide a good foundation for the development of objectives and value measures by constructing a functional value hierarchy (Buede & Miller, 2009; Parnell et al., 2011). The functional hierarchy has functions at the top level(s), then the objectives for each function, and value measures for each objective.

1.7.2.3 Using Measure Scores Instead of Normalized Value Functions

Trade-off studies require the ability to compare performance on one measure with performance on other measures. If we have converted every measure level into a common currency, for example, dollars, we can use dollars as the metric. If decision-makers are unwilling to use dollars, we can use MODA to quantify the value as a function of the capability versus the cost. MODA uses the value functions to enable this trade-off analysis. The value functions (sometimes called scoring functions) convert a value measure score into a normalized measure of value on a common scale. The most common scales are 0–1, 0–10, and 0–100. Value functions assess returns to scale on the range of the value measure score. Value functions usually are of four types: linear, diminishing returns, increasing returns, and S-curve (increasing, then linear, then decreasing returns). The value function will be increasing (for a maximize objective) or decreasing (for a minimize objective). The value functions allow us to compare apples and oranges. These functions must at least be on an interval scale (Keeney, 1992). Zero value on an interval scale means the minimum acceptable value and does not mean the lack of value. If a ratio scale is used, zero value would mean no value. The best practice is to obtain the shape of the curve and the rationale for the curve shape before you assess points on the curve. This will provide very useful information when a decision-maker or stakeholder challenges the value judgments of one or more alternatives. See Chapter 2 for additional information.
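The following Python sketch illustrates two of the curve shapes on a 0-100 scale. It is an illustration rather than the formulation used in Chapter 2: the exponential form is one common parametric choice, and the function names, the shape parameter `rho`, and the example scores are assumptions. Passing a worst level that is larger than the best level yields a decreasing value function for a minimize objective.

```python
import math


def normalized_fraction(score: float, worst: float, best: float) -> float:
    """Position of score between the minimum acceptable (0) and ideal (1) levels."""
    fraction = (score - worst) / (best - worst)
    return min(1.0, max(0.0, fraction))  # clamp scores outside the range


def linear_value(score: float, worst: float, best: float) -> float:
    """Linear value function on a 0-100 scale."""
    return 100.0 * normalized_fraction(score, worst, best)


def exponential_value(score: float, worst: float, best: float, rho: float) -> float:
    """Exponential value function on a 0-100 scale.

    rho is a nonzero, dimensionless shape parameter: rho > 0 gives
    diminishing returns and rho < 0 gives increasing returns.
    """
    f = normalized_fraction(score, worst, best)
    return 100.0 * (1.0 - math.exp(-f / rho)) / (1.0 - math.exp(-1.0 / rho))


# Hypothetical maximize measure: range in km, minimum acceptable 100, ideal 500.
print(linear_value(300.0, 100.0, 500.0))
print(exponential_value(300.0, 100.0, 500.0, rho=0.5))

# Hypothetical minimize measure: cost in $M, minimum acceptable 10, ideal 2.
print(linear_value(6.0, 10.0, 2.0))
```

At the midpoint of the range, the diminishing-returns curve returns more than half of the available value, which reflects a judgment that early gains in the measure matter more than later ones. That judgment, and the rationale for it, should come from the decision-makers and stakeholders.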

1.7.2.4 Use of Importance Weights Instead of Swing Weights

A critical mistake in trade-off studies is using importance weights instead of swing weights. MODA quantitatively assesses the trade-offs between conflicting objectives by evaluating the alternative's contribution to the value measures (a score converted to value by single-dimensional value functions) and the importance of each value measure across the range of variation of the value measure (the swing weight). Every MODA book identifies this as a major problem. For example, “some experimentation with different ranges will quickly show that it is possible to change the rankings of the alternatives by changing the range that is used for each evaluation measure. This does not seem reasonable. The solution is to use swing weights” (Kirkwood, 1997). Swing weights play a key role in the additive value model presented in Chapter 2. The swing weights depend on the measure scales' importance and range. The word “swing” refers to varying the range of the value measure from its minimum acceptable level to its ideal level. If we hold constant all other measure ranges and reduce the range of one of the measure scales, the measure's relative swing weight decreases, and the swing weight assigned to the others increases since the weights have to add to 1.0. The following story explains the need for swing weights (Parnell et al., 2013).

Not using swing weights can have significant consequences on the credibility of the trade-off study.
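The range effect that Kirkwood describes can be reproduced with a small numerical sketch. Everything in it is hypothetical: the two alternatives, the two measures, the linear value functions, and in particular the assumption that one unit of either measure is worth the same to the decision-maker (`unit_worth`). That assumption stands in for a real swing weight assessment, which would be elicited from decision-makers rather than computed.

```python
def linear_value(x, lo, hi):
    """Linear single-dimensional value function on a 0-1 scale."""
    return (x - lo) / (hi - lo)

def additive_value(scores, ranges, weights):
    """Additive value model: weighted sum of single-dimensional values."""
    return sum(w * linear_value(x, lo, hi)
               for x, (lo, hi), w in zip(scores, ranges, weights))

def swing_weights(ranges, unit_worth):
    """Weights proportional to the worth of swinging each measure across its range
    (assumes a constant worth per unit, a simplification for this sketch)."""
    swings = [u * (hi - lo) for (lo, hi), u in zip(ranges, unit_worth)]
    total = sum(swings)
    return [s / total for s in swings]

def best(alternatives, ranges, weights):
    """Name of the alternative with the highest additive value."""
    return max(alternatives,
               key=lambda name: additive_value(alternatives[name], ranges, weights))

# Hypothetical scores on two maximize measures.
alternatives = {"A": (80, 40), "B": (60, 70)}
narrow = [(50, 100), (0, 100)]   # measure 1 assessed over a narrow range
wide = [(0, 100), (0, 100)]      # same measure assessed over a wide range
importance = [0.5, 0.5]          # range-independent importance weights
unit_worth = [1.0, 1.0]          # assumed: a unit of either measure is equally valued

for label, ranges in (("narrow", narrow), ("wide", wide)):
    sw = swing_weights(ranges, unit_worth)
    print(label, best(alternatives, ranges, importance), best(alternatives, ranges, sw))
# prints:
# narrow A B
# wide B B
```

With fixed importance weights, the preferred alternative flips from A to B when only the range of the first measure changes. With weights that track the swing, B is preferred under both ranges, because the weights shrink or grow with the range they refer to.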

1.7.2.5 Improper Assessment of Uncertainty

Uncertainty assessment requires an understanding of heuristics and cognitive biases that humans exhibit when dealing with uncertain information (Kirkwood, 1997). For example, humans anchor on irrelevant data and do a poor job of assessing the range of uncertainty. Therefore, an assessment should never begin by asking an individual for the mean of the distribution, since this will anchor the individual on the mean. Once anchored on the mean, humans seldom identify the bounds that capture the true range of uncertainty. The best practice is to start with the extremes of the distribution and work toward the middle to avoid anchoring. Another useful best practice is to use an uncertainty assessment form that captures the key information on the assessment (Parnell et al., 2013).

1.7.2.6 Results Not Timely or Not Understood

Performing a system trade-off study, developing insights, and communicating key insights to decision-makers and stakeholders is a challenging and important task that is usually performed under significant time pressure. Late studies have no impact. Complex technical charts may be difficult to grasp for individuals who do not use them all the time or who are not wired to think that way (Madni and Sievers, 2016). In addition, some engineers tend to provide detailed, technical information that decision-makers and stakeholders neither need nor want. The analyst should take actions to understand what level of information the decision-makers want to support their decisions and then identify and communicate key insights as clearly and concisely as possible. So perhaps the most challenging task for the analyst is identifying the important insights and determining how to convey them to decision-makers and stakeholders. The ability to identify insights and display quantitative information is a soft skill that those conducting trade-off studies need to develop. One of the best sources of advice for excellence in the presentation of quantitative data is the work of Edward Tufte (Tufte, 1983).

1.7.3 Impacts of the Trade-Off Analysis Mistakes

Earlier, we discussed mistakes of omission and commission as distinct errors. Any one of these errors can have significant consequences in system design and, ultimately, on the program and the system (Madni, 2010, 2011). Unfortunately, it is quite common to find multiple mistakes made in trade-off studies with some errors leading to, or cascading with, other errors. These cascading errors can lead to adverse impacts for the trade-off study team, decision-makers, stakeholders, and the system or program. In addition, repeating these trade-off mistakes can undermine the credibility of the SE organization/enterprise (Madni et al., 2005). In Figure 1.3, we show the dependencies among these errors. A dotted arrow represents correlation. A solid arrow represents high correlation. These dependencies show how the mistakes can cascade to the impacts described as follows.

Figure 1.3 Relationships among trade-off study mistakes and impacts

1.7.3.1 Potential Selection of Poor Designs

The ultimate impact of mistakes made during the conduct of a system design trade-off study is the selection of poor designs. This includes missed opportunities to increase value and reduce risk. The selection of a poor design may then lead to significant impacts on cost, schedule, and technical performance. The primary sources of this shortcoming are a poor decision frame, alternatives that fail to span the decision space, not taking the time to improve alternatives, not integrating trade-off studies with system/program risk assessments, failure to implement recommendations, and results that are not produced in a timely fashion or are imperfectly understood. The most obvious, but often neglected, cause of poor designs is poor alternatives. There are two primary causes of poor alternatives. The first is beginning with a limited set of alternatives that does not span the decision space and then not applying techniques to expand the alternative set (Madni et al., 1985; Madni, 2013). The second cause is not systematically trying to improve and expand the alternatives during the trade-off study. The identification and improvement of a good set of alternatives are sometimes impeded by the biases of the people involved. For example, there are often preconceptions of adequate solutions that keep the team from looking at the full decision space. The alternative generation table and decision analysis models provide excellent information for Value-Focused Thinking to improve the alternatives (Parnell et al., 2011).

1.7.3.2 Loss of Systems Engineering (SE) Credibility

The long-term organizational impact of trade-off study mistakes is the loss of SE credibility in the program, organization, or enterprise, and ultimately with the customer or acquisition organization. Nearly any of the trade-off study errors can result in a loss of SE credibility, since they impact the quality of the analysis and the resulting decision. Once the SE credibility is lost, it takes a significant effort to restore it.

1.7.3.3 No Trade-Off Studies Conducted or Trade-Off Studies Conducted on Wrong Issues

A poor problem definition (decision frame) can lead to no trade-off studies being conducted or to trade-off studies being conducted on the wrong issues. Defining the problem(s) is difficult and time-consuming; however, it is critical. A great solution to the wrong problem may not even be a feasible solution to the real problem. If the error is not detected, it can lead to the selection of a poor design. The causes of poor problem definition are usually the lack of a clear problem statement and a poor framing of the decision. The typical cause for a poor decision frame is not having access to (or not listening to) decision-makers and stakeholders. While the decision frame can be improved late in the study, doing so will likely have schedule and cost impacts.

1.7.3.4 Loss of Trade-off Study Credibility

The loss of trade-off study credibility can usually be traced to multiple causes. The first is not having a sound mathematical foundation for the analysis. This typically results in not using normalized value functions and/or swing weights. The second is lack of confidence on the part of decision-makers and/or stakeholders in the objectives and measures, which they might perceive as incomplete or not credible. Not showing the linkage of objectives to system functions can contribute to this problem. The third is the lack of good alternatives that span the decision space. A symptom of this problem is an advocacy trade-off analysis that promotes the team's preferred alternative and denigrates the others. The fourth is not identifying uncertainties and performing risk analysis. Trade-off studies can be improved or even reconducted late in the study, but most likely not without adverse schedule and cost impacts.

1.7.3.5 Not Implementing Recommendations

Not having the trade-off study recommendations implemented can be a disheartening outcome. The reasons may lie with analysts, decision-makers, and/or program managers. The analyst may not have worked the right problem, may not have developed a credible model, may not have performed sensitivity/uncertainty/risk analysis to provide confidence in the decision, may not have communicated well, may not have provided an implementation plan, or may have delivered the study too late. The decision-maker may not have provided the analyst enough guidance to work on the right problem, may not have bothered to understand the rationale for the recommendation, or may have already made up their mind about the decision before or during the study. The program manager may have failed to secure the requisite funds to implement the recommendations (Neches and Madni, 2013).

1.8 Overview of the Book

Figure 1.4 provides a graphical overview of the organization of this book. The first four chapters provide the foundations for understanding trade-off analyses. This chapter has described the need for trade-off analysis and identified errors of omission and commission to avoid. Chapter 2 provides the mathematical foundations for trade-off analyses; Chapter 3 provides a review of the probability theory, cognitive biases, and probability assessment necessary to understand and model uncertainty; and Chapter 4 provides techniques for conducting resource analyses that are essential to assess system affordability.

Figure 1.4 Outline of the book

Chapter 5 presents the INCOSE decision management process for conducting a trade-off analysis and provides a detailed illustrative example using some of the techniques illustrated in this book. Chapter 6 presents techniques for defining the opportunity space that frames the trade-off analysis. Chapter 7 describes how to identify and structure objectives and, then, how to develop value measures that quantify the attainment of these objectives. Chapter 8 provides commonly used techniques to generate and evaluate alternatives and compares the ability of these techniques to generate and evaluate alternatives. Chapter 9 provides an example of how we can develop an integrated model of value that can be used to perform value and risk trade-off analysis. The next five chapters discuss the life cycle stage context and provide examples of techniques that can be used to perform trade-off analyses in that stage. Since different types of information are available in the different life cycle stages, different trade-off analysis techniques are more appropriate. Chapter 10 discusses trade-off analysis that is conducted in the conceptual design phase. Chapter 11 discusses architecture trade-off analysis. Chapter 12 discusses design trade-off analysis. Chapter 13 discusses sustainment trade-off analyses. Chapter 14 discusses programmatic trade-off analyses (acceptance and retirement). Chapter 15 provides a summary and discusses future developments that may impact trade-off analyses.

1.8.1 Illustrative Examples and Techniques Used in the Book

This book uses illustrative examples to demonstrate how trade-off analysis techniques can be used to define the opportunity, identify value, identify uncertainties, evaluate alternatives, and provide insights about the best alternatives. There are many qualitative and quantitative (deterministic and probabilistic) techniques that have been proposed for trade-off analyses. The chapter authors reference many of these techniques in their chapters. However, for their illustrative examples, the authors have selected qualitative techniques that have proven useful and quantitative techniques that use sound mathematics and have been used effectively to provide actionable insights to decision-makers and stakeholders. Table 1.5 lists the illustrative examples we have selected to illustrate how to perform trade-off analyses.

Table 1.5 Illustrative Examples

| Chapter | Title | Illustrative Examples | Qualitative Techniques | Deterministic Techniques | Probabilistic Techniques |
|---|---|---|---|---|---|
| 5 | Understanding decision management | Unmanned aeronautical vehicle (UAV) | Functional value hierarchy | Multiattribute value and life cycle cost | Monte Carlo simulation (@RISK) |
| 6 | Identifying opportunities | Drone performing community-based forest monitoring | Decision hierarchy | | |
| | | UAV to support dismounted soldiers on patrol | Decision hierarchy | | |
| 7 | Identifying objectives and value measures | Army squad enhancement problem | Functional value hierarchy | | |
| | | Global nuclear detection architecture | Objectives value hierarchy | | |
| 9 | An integrated model for trade-off analyses | Army squad enhancement problem | Influence diagram | Multiattribute value and life cycle cost | Agent-based simulation; Monte Carlo simulation (probability management) |
| 10 | Exploring concept trade-offs | Maritime security system | Objectives hierarchy | Optimization | Agent-based simulation; utility |
| 11 | Architecture evaluation framework | | Objectives hierarchy | | |
| 12 | Exploring the design space | Liftboat | | Design parameter trade-offs | Design of experiments (DOE) (JMP) |
| | | Commercial ship | | Design parameter trade-offs | DOE (JMP) |
| | | NATO ship | | Multiattribute value | Monte Carlo (Crystal Ball), DOE (JMP) |
| 13 | Performing programmatic trade-off analysis | Forest monitoring drone system | Influence diagram | Availability model and optimization | Monte Carlo simulation (@RISK) |
| 14 | Performing programmatic trade-off analyses | System acceptance model; System cancellation | | Operating characteristic curve and cost modeling | Statistics, hypothesis testing, decision trees |
| | | Software failure predictive model | | | Logistic regression model (R) |
| | | HR systems cancellation | | NPV, return on investment, and break-even analysis | |
| | | Decommissioning offshore oil and gas platforms in California | | Multiattribute value | |

1.9 Key Terms

- Decision: A choice among alternatives that results in an allocation of resources.

- Decision Management Process: “a structured, analytical framework for objectively identifying, characterizing, and evaluating a set of alternatives for a decision at any point in the life cycle and selecting the most beneficial course of action.” The decision management process uses the systems analysis process to perform the assessments (ISO/IEC/IEEE 15288, 2015).

- Life Cycle Model: “An abstract functional model that represents the conceptualization of the need for the system, its realization, utilization, evolution, and disposal” (ISO/IEC/IEEE 15288, 2015).

- Mistake of Omission: Mistakes of omission are errors made by not doing the right things.

- Mistake of Commission: Mistakes of commission are errors made by doing the right things the wrong way.

- Stakeholder: “An individual or organization having a right, share, claim or interest in a system or in its possession of characteristics that meet their needs and expectations” (ISO/IEC/IEEE 15288, 2015).

- System: “Systems are manmade, created and utilized to provide products or services in defined environments for the benefits of users and other stakeholders” (ISO/IEC/IEEE 15288, 2015).

- Risk: “The effect of uncertainty on objectives” (ISO/IEC/IEEE 15288, 2015).

- Uncertainty: Imperfect knowledge of the outcome of some future variable.

- Trade-off: “Decision making actions that select from various requirements and alternative solutions on the basis of net benefit to the stakeholders” (ISO/IEC/IEEE 15288, 2015).

- Trade-off Study: An engineering term for an analysis that provides insights to support system decision-making in a decision management process.

- Value: The benefits provided by a product or service to the stakeholders (customers, consumers, operators, etc.).

- Value Identification: The determination of the potential value of a new capability.

- Value Realization: The delivery of the value of a new capability.

1.10 Exercises

- 1.1 Trade-off analysis

- a. Provide a definition.

- b. Why are trade-offs needed?

- c. Who should participate in a trade-off analysis?

- d. Why is trade-off analysis different in different life cycle stages?

- e. Should trade-off analysis be performed to support life cycle gate decisions?

- f. Who should make the trade-off decisions?

- 1.2 Decision management

- a. What is a decision management process?

- b. Why does an organization need a decision management process?

- c. What are the benefits of having a decision management process?

- d. What is the impact of not having a decision management process?

- 1.3 Identify a system that is currently operational and answer the following questions:

- a. Identify the system stakeholders.

- b. Define value for the system.

- c. Describe the products and services of the system that provide value.

- d. Identify system risks.

- e. What is the anticipated life of the system?

- f. How difficult will it be to retire the system?

- 1.4 Identify a system early in its life cycle and answer the following questions:

- a. Identify the system stakeholders.

- b. Define value for the system.

- c. Describe the products and services of the system that provide value.

- d. Identify potential system risks.

- e. What is the anticipated life of the system?

- f. List one major decision in each future life cycle stage that could require a trade-off analysis.

- 1.5 Identify a system in development and answer the following questions:

- a. Describe the difference between value identification and value realization.

- b. What products and services will provide value?

- c. How are risks identified?

- d. What risks does the system have?

- e. What is the anticipated life of the system?

- f. List one major decision in each future life cycle stage that could require a trade-off analysis.

- 1.6 Trade-off analysis mistakes

- a. Why are trade-off analysis mistakes made?

- b. Describe the difference between a mistake of omission and a mistake of commission.

- c. What is the reason that trade-off analysis mistakes cascade?

- 1.7 Review a trade-off analysis paper in the proceedings of an engineering conference.

- a. How was value defined?

- b. What life cycle stage was considered?

- c. Were uncertainty and risk considered?

- d. Identify any mistakes of commission or omission in the paper.

- e. What trade-off analysis insights were provided?

- f. Was the trade-off analysis convincing?

- g. What decision was recommended or made?

- 1.8 Review a published trade-off analysis paper in a refereed engineering journal.

- a. How was value defined?

- b. What life cycle stage was considered?

- c. Were uncertainty and risk considered?

- d. Identify any mistakes of commission or omission in the paper.

- e. What trade-off analysis insights were provided?

- f. What decision was recommended or made?

- g. Was the trade-off analysis convincing?

References

- Buede, D.M. and Miller, W.D. (2009) The Engineering Design of Systems: Models and Methods, 2nd edn, Wiley Series in Systems Engineering, Wiley & Sons, Hoboken, NJ.

- Cilli, M. and Parnell, G. (2014). Systems Engineering Tradeoff Study Process Framework. International Council on Systems Engineering (INCOSE) International Symposium. June 30–July 3. Las Vegas, NV.

- Decision-making in an uncertain world: A Chevron Case Study. (2014). From Business Case Studies: http://businesscasestudies.co.uk/chevron/decision-making-in-an-uncertain-world/#axzz3NDPqSBNF (accessed 28 December 2014).

- Hazelrigg, G.A. (1998) A framework for decision-based engineering design. Journal of Mechanical Design, 120 (4), 653–658.

- INCOSE (2015) INCOSE systems engineering handbook v. 4.0. INCOSE, SE Handbook Working Group, INCOSE.

- INCOSE Home Page. (2015). http://www.incose.org/ (accessed 06 June 2015).

- ISO/IEC/IEEE (2015) Systems and Software Engineering - System Life Cycle Processes, International Organization for Standardization (ISO)/International Electrotechnical Commission (IEC)/Institute of Electrical and Electronics Engineers (IEEE), Geneva, Switzerland.

- Keeney, R.L. (1992) Value-Focused Thinking: A Path to Creative Decision Making, Harvard University Press, Cambridge, MA.

- Keeney, R. and Raiffa, H. (1976) Decisions with Multiple Objectives: Preferences and Value Tradeoffs, Wiley & Sons, New York.

- Kirkwood, C. (1997) Strategic Decision Making: Multiobjective Decision Analysis with Spreadsheets, Duxbury Press, Belmont, CA.

- Lavingia, N. J. (2014). Business Success through Excellence in Project Management. Critical Facilities Roundtable: http://www.cfroundtable.org/ldc/040706/excellence.pdf (accessed 28 December 2014).

- Madni, A.M. (2010) Integrating humans with software and systems: technical challenges and a research agenda. Systems Engineering, 13 (3), 232–245.

- Madni, A.M. (2011) Integrating humans with and within software and systems: challenges and opportunities (invited paper). CrossTalk, The Journal of Defense Software Engineering, May/June 2011, “People Solutions.”

- Madni, A. (2012) Adaptable platform-based engineering: key enablers and outlook for the future. Systems Engineering, 15 (1), 95–107.

- Madni, A.M. (2013) Generating novel options during systems architecting: psychological principles, systems thinking, and computer-based aiding. Systems Engineering, 16 (4), 1–9.

- Madni, A.M. (2015) Expanding stakeholder participation in upfront system engineering through storytelling in virtual worlds. Systems Engineering, 18 (1), 16–27.

- Madni, A.M., Brenner, M.A., Costea, I., MacGregor, D., and Meshkinpour, F. (1985) Option generation: problems, principles, and computer-based aiding. Proceedings of 1985 IEEE International Conference on Systems, Man, and Cybernetics, Tucson, Arizona, November 1985, pp. 757–760.

- Madni, A.M., Sage, A., and Madni, C.C. (2005) Infusion of cognitive engineering into systems engineering processes and practices. Proceedings of the 2005 IEEE International Conference on Systems, Man, and Cybernetics, October 10–12, 2005, Hawaii.

- Madni, A.M. and Sievers, M. (2014a) Systems integration: key perspectives, experiences, and challenges. Systems Engineering, 17 (1), 37–51.

- Madni, A.M. and Sievers, M. (2014b) System of systems integration: key considerations and challenges. Systems Engineering, 17 (3), 330–347.

- Madni, A. M. and Sievers, M. N. (2015). Model based systems engineering: motivation, current status and needed advances. Systems Engineering, Accepted for publication, 2016.

- Madni, A.M. and Sievers, M. (2016) Model based systems engineering: motivation, current status and needed advances. Systems Engineering, Accepted for publication, 2016.

- Neches, R. and Madni, A.M. (2013) Towards affordably adaptable and effective systems. Systems Engineering, 16 (2), 224–234.

- Ordoukhanian, E. and Madni, A. M. (2015) System Trade-offs in Multi-UAV Networks. AIAA SPACE 2015 Conference and Exposition.

- Parnell, G. (2009). Evaluation of risks in complex problems. in Making Essential Choices with Scant Information: Front-end Decision-Making in Major Projects (eds T. Williams, K. Sunnevåg, and K. Samset), Palgrave MacMillan, Basingstoke, UK, pp. 230–256.

- Parnell, G.S., Bresnick, T.A., Tani, S.N., and Johnson, E.R. (2013) Handbook of Decision Analysis, Wiley & Sons.

- Parnell, G., Cilli, M., and Buede, D. (2014). Tradeoff Study Cascading Mistakes of Omission and Commission. International Symposium. June 30–July 3. Las Vegas, NV: INCOSE.

- Parnell, G.S., Driscoll, P.J., and Henderson, D.L. (eds) (2011) Decision Making for Systems Engineering and Management, 2nd edn, Wiley & Sons.

- SEBoK. (2015). Systems Engineering Body of Knowledge (SEBoK) wiki page. SEBoK: http://www.sebokwiki.org (accessed 06 June 2016).

- Tufte, E.R. (1983) The Visual Display of Quantitative Information, Graphics Press, Cheshire, CT.