Fault Tolerance

3.2.1. Fault, Failure, Availability, Reliability

3.4. Typical Module Failure Rates

3.5. Hardware Approaches to Fault Tolerance

3.5.1. The Basic N-Plex Idea: How to Build Failfast Modules

3.5.2. Failfast versus Failvote Voters in an N-Plex

3.6.1. N-Version Programming and Software Fault Tolerance

3.7. Fault Model and Software Fault Masking

3.7.1. An Overview of the Model

3.7.2. Building Highly Available Storage

3.7.2.1. The Structure of Storage Modules

3.7.2.2. Definition of Store and of Store Read and Write

3.7.2.4. Reliable Storage via N-Plexing and Repair

3.7.3. Highly Available Processes

3.7.3.3. Reliable Processes via Checkpoint-Restart

3.7.3.4. Reliable and Available Processes via Process Pairs

3.7.3.5. The Finer Points of Process-Pair Takeover

3.7.3.6. Persistent Processes-The Taming of Process Pairs

3.7.3.7. Reliable Processes plus Transactions Make Available Processes

3.1 Introduction

This chapter presents three views of fault tolerance: the hardware view, the software view, and the global (holistic) view. It sketches the fault-tolerance problem and shows that the presence of design faults is the ultimate limit to system availability; we have techniques that mask other kinds of faults.

After a brief historical perspective, the chapter begins with standard definitions. Next, some empirical studies and measurements of current systems and typical module failure rates are presented. Then hardware approaches to fault tolerance are surveyed. This leads to the issue of software fault tolerance (tolerating software bugs). The bulk of the chapter presents the fault model and software masking techniques typical of non-transactional systems. Transaction processing systems use these more primitive techniques to implement transactional storage, execution, and messages. The chapter then mentions the meta-system design rules (KISS, Murphy’s Law, and end-to-end arguments) and concludes with a discussion of system delusion that demonstrates an end-to-end issue.

The material of this chapter requires some very simple probability theory. For readers who may have forgotten this theory, the chapter begins with a crash course on probability. Readers who already know this material can skip it, but if you do not know it, don’t panic! It is easy.

3.1.1 A Crash Course in Simple Probability

The probability of an event, A, happening in a certain period is denoted P(A). Probabilities range between zero and one. Zero probability means that the event never occurs; one means that the event certainly occurs. The probability that an event, A, does not occur is 1 – P(A). A and B are independent events if the occurrence of one does not affect the probability of the occurrence of the other. Given independent events A and B, consider the following possibilities and their equations:

Both happen. The probability of both A and B happening in that period is the product of their probabilities:

![]()

At least one happens. The probability of at least one of A and B happening is the probability that A happens plus the product of the probability that A does not happen and that B happens:

This equation has a small term P(A) • P(B) that can be ignored if both probabilities are very small.

![]()

For example, if the chance a system fails in a day is .01, the chance the telephone network fails is .02, and the chance the terminal fails is .03, then the chance of all three failing (using Equation 3.1) is .01 • .02 • .03, or 6 • 10−6. The chance of any one of the three failing (using Equation 3.3) is .01 + .02 + .03, or .06.

Failure rates are often memoryless: If the system has been operating for a month, it is no more likely to fail now than it was a month ago. It is as though in each time unit the system flips the failure coin, and with probability P(A) the coin comes up fail; otherwise, the system keeps working without failure. To illustrate: The chance of your dying in the next ten minutes is just about the same as the chance of your dying tomorrow at this time. In the short term, then, your death rate (hazard) is memory less. Of course, when you are 100 years old the hazard function is likely to be higher.

Failure rates are such tiny numbers (say, 10−6) that the reciprocals of the probabilities are used. If the event rate P(A) is memory less and much less than one, the mean time (average time) before the event is expected to occur MT(A) is the reciprocal of the rate, or 1/P(A):

Mean time to event. If the probability P(A) of event A per unit of time is much less than one and is memoryless, then the mean time to event A is the reciprocal of the probability of the event:

![]()

The mean time to failure of the components used in the previous example is 100 days (1/.01) for the system, 50 days (1/.02) for the network, and 33 days (1/.03) for the terminal. The mean time to any of them failing is about 17 days (1/.06), and the mean time to all of them failing is 170,000 days (1/(6 • 10−6)).

System designers are often presented with modules A, B, C, which fail independently and have mean times to failures MT(A), MT(B), MT(C). If they are simply combined into a group G, then the failure of any one is likely to fail the whole group. What is MT(G)? Using Equation 3.3, the probability, P(G), that any one of them fails is approximately P(A) + P(B) + P(C). So, using Equation 3.4:

Mean time to any event. If events A, B, C have mean time MT(A), MT(B), MT(C), then the mean time to the first one of the three events is:

Given N events A, all with the same mean time to occur, MT(A), the mean time to the first event is:

![]()

This is the math needed for this chapter. But, it is very important to understand three key assumptions that underlie these assumptions: (1) The event probabilities must be independent, (2) the probabilities must be small, and (3) the distributions must be memoryless. Exercises 1 and 2 explore these points.

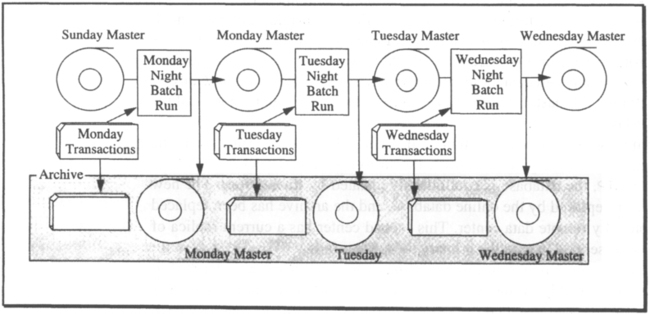

3.1.2 An External View of Fault Tolerance

Fault tolerance is a major concern of transaction processing. Early batch transaction processing systems achieved fault tolerance by using the old master-new master technique, in which the batch of transactions accumulated during the day, week, or month was applied to the old master file, producing a new master file. If the batch run failed, the operation was restarted. To protect against loss or damage of the transaction file (typically a deck of cards), or the master file (typically a tape), an archive copy of each was maintained. Recovery consisted of carefully making a copy of the old master or transaction batch, then using the copy to rerun the batch (see Figure 3.1). Copies of the old masters were retained for five days, then week-old, month-old, and year-old copies were kept. In addition, all transaction inputs were kept. With this data, any old state could be reconstructed.

Programs had internal checks to detect missing and incorrect data. When data was corrupted or missing, the redundant data in the old masters and in the transaction histories made it possible to reconstruct a correct current state.

This design tolerated any single failure and could tolerate several multi-failure scenarios. A failure might delay the batch processing, but the system could tolerate any failure short of destruction of both the archive and active copies of the files. In modern terminology, the old master-new master scheme tolerates any single fault; it is single-fault tolerant. It could be made n-fault tolerant by making n + 1 copies of each item, with n + 1 tape drives and operators, and storing them in n + 1 archives. But this was rarely done—single-fault tolerance was usually considered adequate, especially since the master file could probably be reconstructed from the old, old master (the Sunday Master in Figure 3.1) and the log (all the transactions since Sunday).

The more modern version of the same system is shown in Figure 3.2. In that system, the transactions are not batched but are processed while the customer waits. Using online transaction processing, or OLTP, the database is continuously updated by transactions. The new master database has been replaced by the online database, and the archive has been replaced by a second, geographically remote data center. This second center has a current replica of the data and can take over service in case the primary data center fails.

The bank with the system depicted in Figure 3.2 has gone to considerable lengths to provide its customers with a highly available service. It bought dual fault-tolerant data centers, dual telephone networks, and installed dual automated teller machines (ATMs) at each kiosk. In this example, the ATMs are the most unreliable component of the system, yielding about 99% availability. To mask the unreliability of the ATMs, the bank puts two at each customer site. If one ATM fails, the client can step to the adjacent one to perform the task. This is a good example of analyzing the overall system availability and applying redundancy where it is most appropriate.

Figure 3.2 raises the issue of what it means for a system of many components to be available. If the bank in that figure has 10,000 ATM kiosks, some kiosks (pairs of ATMs) will be out of service at any instant. In addition, it is always possible that the database is down, or at least the part that some customer wants to access is unavailable (being recovered). The system is probably never completely “up,” so the concept of system availability becomes:

System availability. The fraction of the offered load that is processed with acceptable response times.

System availability is usually expressed as a percentage. To apply this idea, suppose the ATMs in Figure 3.2 average one unscheduled outage every 100 days, and the problem takes on average a day to fix.1 Such a high failure rate (1%) dominates the system availability. From the customer’s perspective the system availability is 99% (=100% – 1%) without the duplexed ATMs. If the ATMs are duplexed at each site and if they have independent failure modes, then the customer is denied service only if both ATMs are broken. What is the chance of that? Using Equation 3.1, if the probability of one ATM failing in a day is 1%, then the chance of both breaking in the same day is .01% (.01 • .01). The ATM-pair availability is therefore 99.99%. Using Equation 3.4, this is a 10,000-day mean time to both failing. With 10,000 such kiosks, only one would normally be out of service at any instant.

A 99% availability was considered good in 1980, but by 1990 most systems are operating at better than 99.9% availability. After all, 99% availability is 100 minutes per week of denied service. As the nines begin to pile up in the availability measure, it becomes more convenient to think of availability in terms of denial-of-service measured in minutes per year. For example, 99.999% availability is about 5 minutes of service denial per year (see Table 3.3). Even this metric, though, is a little cumbersome so we introduce the concept of availability class, or simply class. This metric is analogous to the hardness of diamonds or the class of a clean room:

Table 3.3

Availability of typical systems classes. In 1990, the best systems were in the high-availability range with Class 5. The best of the general-purpose systems were in the fault-tolerant range.

| System Type | Unavailability(min/year) | Availability | Class |

| Unmanaged | 52,560 | 90.% | 1 |

| Managed | 5,256 | 99.% | 2 |

| Well-managed | 526 | 99.9% | 3 |

| Fault-tolerant | 53 | 99.99% | 4 |

| High-availability | 5 | 99.999% | 5 |

| Very-high-availability | .5 | 99.9999% | 6 |

| Ultra-availability | .05 | 99.99999% | 7 |

Availability class. The number of leading nines in the availability figure for a system or module. More formally, if the system availability is A, the system class is

![]()

Alternatively, the denial-of-service metric can be measured on a system-wide basis or on a per-customer basis. To see the per-customer view, consider the 99% available ATM that denies service to a customer an average of one time in 100 tries. If the customer uses an ATM twice a week, then a single ATM will deny a customer service once a year on average (≈ 2 • 52 weeks = 104 tries). A duplexed ATM will deny the customer service about once every hundred years. For most people, this is the difference between rarely down and never down.

To give a sense of these metrics, nuclear reactor monitoring equipment is specified to be class 5, telephone switches are specified to be class 6, and in-flight computers are specified to be class 9. In practice, these demanding specifications are sometimes met.

3.2 Definitions

Fault tolerance discussions benefit from terminology and concepts developed by an IFIP Working Group (IFIP WG 10.4) and by the IEEE Technical Committee on Fault-Tolerant Computing. The following sections review the key definitions set forth by these organizations.

3.2.1 Fault, Failure, Availability, Reliability

A system can be viewed as a single module, yet most systems are composed of multiple modules. These modules have internal structures consisting of smaller modules. Although this presentation discusses the behavior of a single module, the terminology applies recursively to modules with internal modules.

Each module has an ideal specified behavior and an observed behavior. A failure occurs when the observed behavior deviates from the specified behavior. A failure occurs because of an error, or a defect in the module. The cause of the error is a fault. The time between the occurrence of the error and the resulting failure is the error latency. When the error causes a failure, it becomes effective; before that, the failure is latent (see Figure 3.4).

For example, a programmer’s mistake is a fault. It creates a latent error in the software. When the erroneous instructions are executed with certain data values, they cause a failure and the error becomes effective. As a second example, a cosmic ray (fault) may discharge a memory cell, causing a memory error. When the memory is read, it produces the wrong answer (memory failure), and the error becomes effective.

The observed module behavior alternates between service accomplishment, when the module acts as specified, and service interruption, when the module’s behavior deviates from the specified behavior. These states are illustrated in Figure 3.4.

Module reliability measures the time from an initial instant to the next failure event. Reliability is statistically quantified as mean-time-to-failure (MTTF); service interruption is statistically quantified as mean-time-to-repair (MTTR).

Module availability measure the ratio of service-accomplishment to elapsed time. Availability is statistically quantified as

![]()

3.2.2 Taxonomy of Fault Avoidance and Fault Tolerance

Module reliability can be improved by reducing failures, and failures can be avoided by valid construction and by error correction. The following taxonomy of validation and correction from the IFIP Working Group [Laprie 1985] may help to define the terms.

Validation can remove errors during the construction process, thus ensuring that the constructed module conforms to the specified module. Since physical components fail during operation, validation alone cannot ensure high reliability or high availability. Error correction reduces failures by using redundancy to tolerate faults. Error correction is of two types.

The first type of error correction, latent error processing, tries to detect and repair latent errors before they become effective. Preventive maintenance is an example of latent error processing. The second type, effective error processing, tries to correct the error after it becomes effective. Effective error processing can either mask the error or recover from the error.

Error masking uses redundant information to deliver the correct service and to construct a correct new state. Error correcting codes (ECC) used for electronic, magnetic, and optical storage are examples of masking. Error recovery denies the requested service and sets the module to an error-free state. Error recovery can take two forms.

The first form of error recovery, backward error recovery, returns to a previous correct state. Checkpoint/restart is an example of backward error recovery. The second form, forward error recovery, constructs a new correct state. Redundancy in time, such as resending a damaged message or rereading a disk page, is an example of forward error recovery.

3.2.3 Repair, Failfast, Modularity, Recursive Design

Section 3.7 specifies the correct behavior and some faults of the classic software modules: processes, messages, and storage. A failure that merely constitutes a delay of the correct behavior—for example, responding in one day rather than in one second—is called a timing failure. Faults can be hard or soft. A module with a hard fault will not function correctly—it will continue with a high probability of failing—until it is repaired. A module with a soft fault appears to be repaired after the failure. Soft faults are also known as transient or intermittent faults. Timing faults, for example, are often soft.

Recall that the time between the occurrence of a fault (the error) and its detection (the failure) is called the fault latency. A module is failfast if it stops execution when it detects a fault (stops when it fails), and if it has a small fault latency (the fail and the fast). The term failstop is sometimes used to mean the same thing.

As shown later, failfast behavior is important because latent errors can turn a single fault into a cascade of faults when the recovery system tries to use the latent faulty components. Failfast minimizes fault latency and so minimizes latent faults.

Modules are built recursively. That is, the system is a module composed of modules, which in turn are composed of modules, and so on down to leptons and quarks. The goal is to start with ordinary hardware, organize it into failfast hardware and software modules, and build up a system (a super module) that has no faults and, accordingly, is a highly available system (module). This goal can be approached with the controlled use of redundancy and with techniques that allow the super-module to mask, or hide, the failures of its component modules. Many examples of this idea appear throughout this chapter and this book.

3.3 Empirical Studies

As Figures 3.1 and 3.2 show, there has been substantial progress in fault tolerance over the last few decades. Early computers were expected to fail once a day (very early ones failed even more often). Today it is common for modules, workstations, disks, memories, processors, and so on, to have mean-time-to-failure (MTTF) ratings of ten years or more (100,000 hours or more). Whole systems composed of hundreds or thousands of such modules can offer MTTF of one month if nothing special is done, or 100 years if great care is taken. The following subsections cite some of the empirical data that support this claim.

3.3.1 Outages Are Rare Events

In former times, everyone knew why computers failed: It was the hardware. Now that hardware is very reliable, outages (service unavailability) are rare, and, since much of our experience is anecdotal, patterns are not so easy to discern. The American telephone network had two major problems in 1990. In one outage, a fire in a midwest switch disabled most of the Chicago area for several days; in another outage, a software error clogged the long-haul network for eight hours. The New York Stock Exchange had four outages in the 1980s. It closed for a day because of a snowstorm, it closed for four hours due to a fire in the machine room, it closed 45 seconds early because of a software error, and trading stopped for three hours due to a financial panic. The Internet was accidentally clogged by a student one day, and so on.

If we look at hundreds of events from many different fault-tolerant systems, the main pattern that emerges is that these events are rare—in fact, very rare. Each is the combination of special circumstances. Some patterns do emerge, however, if we look at the sources of failures.

Perhaps the first thing to notice from the anecdotes above, and this is borne out in the broader context as well, is that few of the outages were caused by hardware faults. Fault-tolerance masks most hardware faults. If a fault-tolerant system failed due to a hardware fault, there was probably also a software error (the software should have masked the hardware fault) or an operator error (the operator did not initiate repair) or a maintenance error (all the standby spares were broken and had not been repaired) or an environmental failure (the machine room was on fire). As explained in greater detail in Sections 3.5 and 3.6, hardware designers have developed very simple and effective ways of making arbitrarily reliable hardware, and software designers have developed ways to mask most of the residual hardware faults. As hardware prices plummet, the use of these techniques is becoming standard. Outages (denial of service) can be traced to a few broad categories of causes:

Environment. Facilities failures such as the machine room, cooling, external power, data communication lines, weather, earthquakes, fires, floods, acts of war, and sabotage.

Operations. All the procedures and activities of normal system administration, system configuration, and system operation.

Maintenance. All the procedures and activities performed to maintain and repair the hardware and facilities. This does not include software maintenance, which is lumped in with software.

Hardware. The physical devices, exclusive of environmental support (air conditioning and utilities).

Software. All the programs in the system.

Process. Outages due to something else. Examples are labor disputes (strikes), shutdowns due to administrative decisions (the stock exchange shutdown at panic), and so on.

This taxonomy gives considerable latitude to interpretation. For instance, if a disgruntled operator destroys the system, is the damage due to sabotage (environment) or operations? Sabotage (environment) is probably the correct interpretation, because it was not a simple operations or maintenance mistake. If the system is located in an area of intense electrical storms, but lacks surge protection, is environment or process the cause? Process should probably be held accountable, because the problem has a solution that the customer has not installed.

3.3.2 Studies of Conventional Systems

Given this taxonomy, what emerges from measurements of “real” systems? Unfortunately, that is a secret. Almost no one wants to tell the world how reliable his system is. Since hundreds or thousands of systems must be examined to see any patterns, there is little hope of forming a clear picture of the situation.

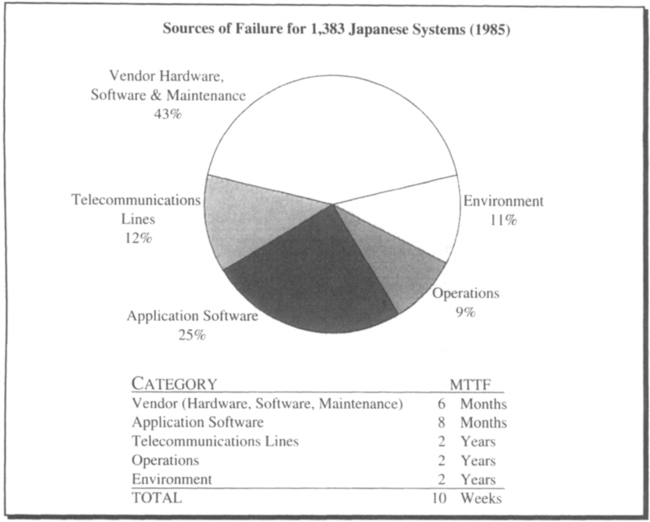

The most extensive public study was done by the Japanese Information Processing Development Corporation (JIPDEC) in 1985. It surveyed the outages reported by 1,383 institutions in 1985 [Watanabe 1986]. The institutions reported 7,517 outages, with average durations of 90 minutes, resulting in a MTTF of about ten weeks and an availability of 99.91%. The study is summarized in Figure 3.5.

This study is especially interesting since these are not fault-tolerant systems. They are just ordinary computers managed by people who have a justly deserved reputation for careful planning and good quality. These statistics compare favorably with the best numbers reported by comparable groups in the United States, and are better by a factor of ten than typical reports (see, for example, the study by Mourad [1985]).

The categories shown in Figure 3.5 do not correspond exactly to the ones introduced earlier; they lump hardware, vendor software, and vendor maintenance together. But the study shows one thing very clearly: if the vendor provided perfect hardware, software, and maintenance, the system MTTF would be four months (this is an application of Equation 3.5). If the goal is to build systems that do not fail for years or decades, then all aspects of availability must be addressed—simple hardware fault tolerance is not enough.

3.3.3 A Study of a Fault-Tolerant System

A more recent study of a fault-tolerant system provides a similar picture. Unfortunately, the study was based on outages reported to the vendor, and therefore it grossly underreports outages due to environment, operations, and application software. It really captures only the top wedge of the figure above. But since data is so scarce in this area, the results are presented with that caveat. The study covered the reported outages of Tandem computer systems between 1985 and 1990, encompassing approximately 7,000 customer years, 30,000 system years, 80,000 processor years, and over 200,000 disk years. The summary information for three periods is shown in Table 3.6.

Table 3.6

Summary of Tandem reported system outage data.

| 1985 | 1987 | 1989 | |

| Customers | 1,000 | 1,300 | 2,000 |

| Outage Customers | 176 | 205 | 164 |

| Systems | 2,400 | 6,000 | 9,000 |

| Processors | 7,000 | 15,000 | 25,500 |

| Disks | 16,000 | 46,000 | 74,000 |

| Reported Outages | 285 | 294 | 438 |

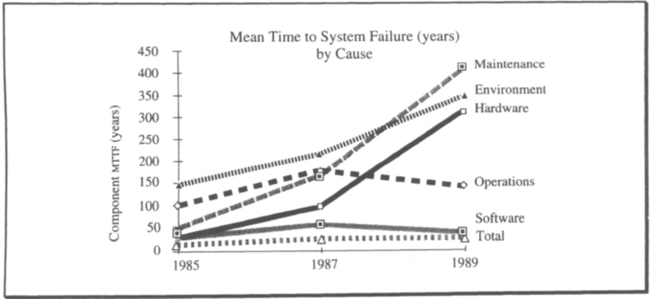

| System MTTF | 8 years | 20 years | 21 years |

Table 3.6 summarizes the statistical base, and Figures 3.7 and 3.8 display the information by category. They show the causes of outages and the historical trends: Reported system outages per 1,000 years (per millennium) improved by a factor of two by 1987 and then held steady. Most of the improvement came from improvements in hardware and maintenance, which together shrank from 50% of the outages to under 10%. By contrast, operations grew from 9% to 15% of outages. Software’s share of the problem got much bigger during the period, growing from 33% to more than 60% of the outages.

Figure 3.7 seems to imply that operations and software got worse, but that is not the case. Figure 3.8 shows that software and operations MTTF stayed about constant, while the other fault sources improved considerably.

Two forces explain the maintenance improvements: technology and design. Disks give the best example of both forces. In 1985, each disk had to be serviced once a year. This involved powering down the disk, replacing an air filter, adjusting the power system, and sometimes adjusting head alignment. In addition, the typical 1985 disk required one unscheduled service call per year for repair. This resulted in 32,000 tasks per year in 1985 for customer engineers, and it created many opportunities for mistakes. In addition, the disk cabinets and connectors were not designed for maintenance, rendering maintenance tasks awkward and requiring special tools. If technology and design had not changed, engineers would have been performing 150,000 maintenance tasks per year in 1989—the equivalent of 175 full-time people just doing the error-prone task of disk maintenance.

Instead, 1990-vintage disks have no scheduled maintenance. Fiber-optic connectors reduce cabling and connectors by a factor of 20, and installation and repair require no tools (only thumb screws are used). All field-replaceable units have built-in self-test and light-emitting diodes that indicate correct operation. In addition, disk MTTF rose from 8,000 hours to over 100,000 hours (observed) since 1985. Disk controllers and power supplies experienced similar dramatic improvements. The result was that the disk population grew ba factor of five while the absolute number of outages induced by disk maintenance shrank by a factor of four—a 2,000% improvement. Almost all the reported disk maintenance problems were with older disks or were incident to installing new disks.

This is just one example of how technology and design have improved the maintenance picture. Since 1985, the size of Tandem’s customer engineering staff has held almost constant and shifted its focus from maintenance to installation, even while the installed base tripled. This is an industry trend; other vendors report similar experience. Hardware maintenance is being simplified or eliminated.

Other hardware experienced a similar improvement in MTTF. Based on Table 3.6, during 1989 there were well over 30,000 hardware faults, yet only 29 resulted in a reported system outage. The vast majority were masked by the software (the Tandem system is single-fault tolerant, requiring two hardware faults to cause an outage). The MTTF of a duplexed pair goes up as the square of the MTTF of the individual modules (see Section 3.6). Thus, minor changes in module MTTF can have a dramatic effect on system MTTF. The clear conclusion is that hardware designers did a wonderful job. Hardware faults were rare, and all but 4% of the ones that did occur were masked by software.

While hardware and maintenance show a clear trend toward improvement, operations and software did not improve. The reason for this lies in the fact that few tools were provided to the operators; thus there is no reason to expect their performance to have changed. According to Figure 3.8, every 150 system-years some operator made a mistake serious enough to crash a system. Clearly, mistakes were made more frequently than that, but most operations mistakes were masked by the system, which has some operator fault tolerance.

Operations mistakes were split evenly between two broad categories: configuration and procedures. Configuration mistakes involve such things as having a startup file that asks the transaction manager to reinitialize itself. This works fine the first time the system starts, but it causes loss of transactions and data integrity when the system is restarted from a crash. Mixing incompatible software versions or using an old version of software are common configuration faults. The most common procedural mistake is letting the system fill up by allowing a file to get so big that either there is no more disk space for it or no new records can be added to it.

No clearer pattern of operations faults emerges from the Tandem study. The basic problem of improving operations is that the only known technique is to eliminate or automate the operations process, replacing operators with software.

As Figure 3.7 shows, software is a major source of outages. The software base (number of lines of code) grew by a factor of three during the study period, but the software MTTF held almost constant. This reflects a substantial improvement in software quality. But if these trends continue, the software will continue to grow at the same rate that the quality improves, and software MTTF will not improve. The software in this study uses many of the software fault tolerance techniques described later in this chapter. But that is not enough; a tenfold improvement in software quality is needed to achieve Class 5 availability. At present, no technique offers such an improvement without huge expense. Section 3.6 surveys the most effective approaches: N-version programming and process-pairs combined with transactions.

Thus far, we examined the failure rates of whole systems. We now look at typical failure rates for system components such as processors, disks, networks, and software.

3.4 Typical Module Failure Rates

System designers need rules of thumb for module failure modes, rates, and repair times. There are huge variations among these. Intervals between failures seem to obey exponential, or even hyper-exponential distributions. This makes talking about averages deceptive. In addition, failure rates often vary over time, following a bathtub curve (see Figure 3.9). The rate in the beginning, often called the burn-in rate, is high. After that, the rate remains low and nearly constant for many years. Then the device begins to age mechanically, or wear out, and the rate rises. Failure rates are usually quoted for the bottom of the bathtub curve. To mask burn-in, manufacturers often operate modules for a few hours or days to detect burn-in faults. Only modules passing this burn-in period are sent to customers.

Two kinds of failure rates are of interest: quoted and observed. Quoted failure rates are those promised by the vendor, while observed failure rates are those seen by the customer. For phone lines and for some modules, the observed numbers are much better than the quoted numbers because the quoted numbers are often guarantees, and the customer has a right to complain if the rate falls below the quoted rate. MTTF is usually quoted in hours, generally with only the first digit significant. Often only the magnitude of the MTTF is significant, so that 10,000 hours could actually be 7,000 or 12,000. With about 8,760 hours in a year, each year has about 10,000 hours. A 50,000-hour MTTF, therefore, means about a 5-year MTTF. Given these very approximate measures, here are some typical MTTFs:

Connectors and cables. Connectors and cables are commonly rated at 1,000 years MTTF. Their failure modes are corrosion of contacts, bent connector pins, and electrical erosion. Connectors and cables have high burn-in failure rates.

Logic boards. Depending on cooling, environment, and internal fault masking, logic boards are rated at between 3 and 20 years MTTF. The ratio of soft to hard faults varies from a low of 1:1 to 5:1 (typical) to greater than 10:1 for badly designed circuits.

Disks. Workstation and PC disks are notoriously unreliable (one-year MTTF observed), whereas “expensive disks” are quite reliable (rated at 5 years, with over 20 years MTTF observed). Table 3.10 gives these statistics (the term ECC refers to the extensive error correcting codes found in disk controllers to mask bad spots on the disk). Recent (June 1990) reports of IBM drives report 570 months MTTF for a population of 3,889 drives. This is approximately a 50-year MTTF. These drives will be obsolete long before they wear out or fail.

Table 3.10

mttf of various disk failures [Schulze 1989].

| Type of Error | MTTF | Recovery | Consequences |

| Soft data read error | 1 hour | Retry or ECC | None |

| Recoverable seek error | 6 hours | Retry | None |

| Maskable hard data read error | 3 days | ECC | Remap to new sector and rewrite good data |

| Unrecoverable data read error | 1 year | None | Remap to new sector, old data lost |

| Device needs repair | 5 years | Repair | Data unavailable |

| Miscorrected data read error | 107 years | None | Read wrong data |

Workstations. Workstation reliability varies enormously. The marketplace is extremely competitive, and manufacturers often economize by having inadequate power, cooling, or connectors. A minimal good-quality terminal is usually rated at three to five years (the display is often the weak link). Workstation hardware is usually rated at one to five years, disks often being the weak link. Workstation software seems to have a two-week MTTF [Long 1990].

Software. Production software has ≈3 design faults per 1,000 lines of code. Most of these bugs are soft; they can be masked by retry or restart. The ratio of soft to hard faults varies, but 100:1 is usual (see Subsection 3.6.2).

Data communications lines (USA). The telephone systems of North America and northern Europe are among the most reliable systems in the world. They are spectacularly well managed and provide admirable service. (The story is not so pleasant elsewhere.) With the advent of fiber optics, line quality is getting even better. Line quality is measured in error-free seconds. Raw fiber media rates are 10−9 BER (bit error rate). This suggests that one message in a million is lost. When all the intermediate components are added, error rates often rise to 10−5. If a message is about a kilobit, this suggests that 1:100 or 1:1000 messages are corrupted. These are soft error rates. Hard errors (outages) vary enormously and are clustered in bursts. Suppliers usually promise 95% error-free seconds, 10−6 BER, and 99.7% availability. There are frequent dropouts of 100 ms or less when no good data is transmitted. Duplexing the lines via independent paths should mask almost all such transient faults. Such duplexing is a service provided in most countries.

LANs. Raw cable and fiber are rated at 10−9 BER and 10−6 bad messages per hour. Most LAN problems arise from protocol violations or from overloads. One study of an Ethernet LAN over a 7-month period observed nine overload events due to broadcast storms, thrashing clients, babbling nodes, protocol mismatch, and analog noise [Maxion 1990]. This suggests a 3-week MTTF.

Power failures. Power is the most serious problem outside Japan and northern Europe. Based on Table 3.11, any system wanting MTTF measured in years should carefully condition its input power and should install a local uninterruptible power supply. In one study of a North American customer who did not do this, 75% of unscheduled outages were related to power failure. Table 3.11 summarizes the rates.

Table 3.11

Frequency and average duration of power outages in Europe and North America. Power in Japan is reputed to be comparable to that in Germany. Power in the Third World is reputed to be worse than that in Italy. Areas with severe electrical storms should expect much worse service. These numbers do not include sags (outages of less than a second) and surges (over-voltages that can burn out equipment). North American source is Tullis [1984].

| Out min/year | Events/year | Avg event(min) | ||

| North America | 125 | 2.3 | 54 | |

| England | 67 | 0.72 | 92 | |

| France | urban | 33 | 0.8 | 41 |

| rural | 390 | 5 | 78 | |

| Germany | urban | 7 | 0.33 | 20 |

| rural | 54 | 1.2 | 45 | |

| Italy | urban | 120 | 2.5 | 48 |

| rural | 300 | 5 | 60 |

These statistics indicate how frequently modules fail. The other important number is how quickly they are repaired. How long does it usually take a system to be repaired? Several studies indicate that two hours is a typical module repair time if the site is near a service center and reservoir of spare parts. Once repair is complete, the system must initiate recovery. The network probably will have shut down by then (most time-outs are less than two hours). One end-to-end indication of system repair times is the Japanese study mentioned previously (see Figure 3.5), which reported an average of 90 minutes. Another study of outage durations reports a median and mean of twice that (see Figure 3.12)—essentially two hours to diagnose and repair the system and about one hour to bring the application and network back to full operation.

Based on Figure 3.12, outages roughly follow a Poisson distribution. The distribution, however, has a very long tail, since long outages are over-represented.

The statistics in this section tell us that modules fail. The question, then, is how can we build a highly reliable system out of imperfect modules? The next few sections present the various approaches to this problem. First, the hardware approach is covered, then fault-tolerant and failfast software, and finally software masking of software and hardware faults.

3.5 Hardware Approaches to Fault Tolerance

John von Neumann pioneered the idea of building fault-tolerant computer systems. Starting with unreliable components, a model of neurons, he did a design for the human mind (a giant brain) with a 60-year MTTF. His charming and seminal paper concludes that the design requires a redundancy of about 20,000: 20,000 wires in each bundle and 20,000 neurons making each decision. Such redundancy was needed in his view, because he considered any fault in the system as a failure. His system had only one level of modularity, it lacked the notion of failfast, and it lacked the notion of repair. These three ideas and their interaction are extremely important. Because they allow computer systems to get by quite well with 104 times lower redundancy (2 rather than 20,000), they are well worth understanding.

3.5.1 The Basic N-Plex Idea: How to Build Failfast Modules

Failfast modules are easily constructed from ordinary hardware. The simplest design, called pairing or duplexing, connects the inputs and outputs of two modules to a comparator that stops if the module outputs disagree; this is the failfast aspect of the design. Although a pair fails about twice as often as a single module, a failfast module gives clean failure semantics; it also reduces the number of cases fault-tolerant software needs to deal with by converting all failures to a very simple class: stopping. To provide both failfast behavior and increased module MTTF, modules are n-plexed, and a voter takes a majority of their outputs. If there is no majority, the voter stops the n-plex module. The most common case in the n-plex design in which n is greater than 2 (n = 2 is simply pairing) is n = 3. This is generally called triple module redundancy, or TMR. The double and triple redundancy cases are shown in Part A of Figure 3.13.

The basic n-plex design generalizes to a recursive one that allows failfast modules to be aggregated into bigger failfast modules, and that tolerates voter failures and connector failures. In that design, each n-plexed module has n voters and n outputs (see Part B of Figure 3.13).

3.5.2 Failfast versus Failvote Voters in an N-Plex

As the voting scheme is described in the previous section, the voter requires that a majority of the modules be available. If there is no majority, the voter stops. This is called failvote. An alternative scheme, called either failfast voting or failfast represents a fundamental refinement. This scheme has the voter first sense which modules are available and then use the majority of the available modules. Thus, a failfast voter can operate with less than a majority of the modules.

If each of the component modules is failfast, then a failfast voter can operate by observing the outputs of the non-faulty modules. In theory, a voter combining the outputs of failfast sub-modules never detects a mismatch; it just detects missing votes from failed modules. For example, disk modules are usually assumed to be failfast. A failfast voter managing a pair of disks can detect disk failures and can still operate if only one of the two disks is functional. This permits the supermodule (disk pair) to function with only one module.

Even if the component modules are not failfast, the voter can still operate with failfast voting. To do so, a module is marked as failed each time it miscompares, and the voter ignores its inputs until it is repaired. Thus the voter forms a majority from the non-faulty modules. Take as an example a 10-plexed module composed of ordinary (not necessarily failfast) modules. A failvote voter operating the 10-plex will fail when five modules have failed. A failfast voter will continue operating until the ninth module fail, because a failfast voter on non-failfast modules requires only two available modules to operate. This indicates that fail-fast voting has better reliability than failvote voting.

Consider a failvote n-plex and ignore failures of the voters (comparators) and connectors. (For the scenario that follows, refer to Figure 3.14.) Given modules, each of which is failfast, with an MTTF of 10 years, the MTTF of the paired supermodule is about five years (10/2 years, using Equation 3.6).2 The MTTF for the triplex system is about 8.3 years (using Equation 3.6, it is 10/3, or about 3.3 years to the first failure and 10/2, or 5 years to the second and fatal failure). The analysis of pair-and-spare is a little more tricky, since the first pair will fail the first time one of the four processors fails. Equation 3.6 says that is expected to happen in 10/4, or 2.5 years. After one pair fails, the remaining pair is expected to operate for five years, as computed here. Thus, pair and spare has a 7.5-year MTTF. This logic (simply adding the times), incidentally, shows how the memoryless property simplifies analysis.

So far, all this redundancy does not look like a good idea. All three schemes cut the MTTF, making failure more likely. However, there are two extenuating circumstances. First, and most important, n-plexing yields failfast modules. Failfast modules have vastly simplified failure characteristics; they either work correctly or they stop. This simplicity makes it possible to reason about their behavior. If modules can have arbitrary failure behavior, there is little hope of building fault-tolerant systems.

The second benefit of triplexing and pair-and-spare is that if soft (transient) faults dominate, then pair-and-spare or TMR can be a big improvement (recall that modules continue to function after soft errors). Both schemes mask virtually all soft faults, and two of the three would have to have exactly the same fault for the voter to pass on the faulty result. Thus if the ratio of hard faults to total faults is 1:100, and if TMR masks all transient faults, the module MTTF rises by a factor of 100 to become 1,000 years MTTF; in addition, TMR failvote improves the MTTF from about 8.3 years to about 8,333 (≈10,000 • (1/3 + 1/2)) using Figure 3.14).

3.5.3 N-Plex plus Repair Results in High Availability

The key to getting this hundred-fold MTTF improvement is repairing the faulted module immediately after the fault. If the module has no internal state, recovery from a soft fault is easy; the module goes on to compute the next state from its next inputs. But typically, the faulted module is a processor or memory with an incorrect internal state. To repair it, the design must somehow resynchronize the faulted module with the other two modules. The easy way to do this is to reset all the modules to their initial states, but this will probably reflect the fault outside the module. Mechanisms to resynchronize the faulted module without disrupting the service of the other two modules are usually complex and ad hoc. For storage modules, such mechanisms consist of rewriting the state of the faulted storage cell. For processing modules, they generally set the state (registers) of the faulted processor to the state of one of the good processors.

The huge benefit of tolerating soft faults is just one aspect of the key to availability: repair. Soft errors postulate instant mean time to module repair; when the module faults, it is instantly repaired. Failvote duplex and failfast TMR both provide excellent reliability and availability if repair is added to the model.

The failure analysis of a failvote n-plex is easy to follow. Each module goes through the cycle of operation, fault, repair, and then operation again. The supermodule (the n-plex module) will fail if all the component modules fail at once. More formally, the n-plex will fail if all but one module are unavailable, and then the available module fails. The analysis first determines the probability that a particular module, N, will be the last to fail (all others have already failed and are still down). The probability that a particular module is unavailable is

![]()

Using Equation 3.1 with Equation 3.7, the probability that the other n – 1 modules are unavailable is then

![]()

Using Equation 3.4, the probability that a module, N, fails is

![]()

Equation 3.1 can combine Equations 3.8, and 3.9 to compute the probability that the last module N fails, and that all the other modules are unavailable:

![]()

This is the probability that module N causes the n-plex failure. There are n such identical modules. To compute the probability that any one of these n modules causes an n-plex failure, Equation 3.10 is combined n times using Equation 3.3:

![]()

Equation 3.11 is therefore the probability that a failvote n-plex will completely fail. Using Equation 3.4 with Equation 3.11 gives the mean time to total failure for a failfast n-plex:

![]()

Applying Equation 3.12 and using the 1-year MTTF with a 4-hour MTTR,

![]()

The corresponding result for a TMR group is

![]()

Starting with two modest one-year MTTF modules, we have now built a one-millennium module! Thousands of these could be used to build a supermodule with a one-year MTTF. The construction can be repeated to obtain a system with a one-millennium MTTF—a powerful idea (see Table 3.15).

Table 3.15

mttf estimates for various architectures using 1-year mttf modules with 4-hour mttr. Note: in the cost column, the letter ε represents a small additional cost.

| MTTF | Equation | Cost | |

| Simplex | 1 year | MTTF | 1 |

| Duplex: failvote | ≈0.5 years | ≈MTTF/2 | 2+ε |

| Duplex: failfast | ≈1.5 years | ≈MTTF(3/2) | 2+ε |

| Triplex: failvote | ≈.8 year | ≈MTTF(5/6) | 3+ε |

| Triplex: failfast | ≈1.8 year | ≈MTTF(11/6) | 3+ε |

| Pair and Spare: failvote | ≈7 year | ≈MTTF(3/4) | 4+ε |

| Triplex: failfast with repair | >10years | ≈MTTF/3MTTR | 3+ε |

| Duplex failfast + repair | >10 years | ≈MTTF/2MTTR | 2+ε |

3.5.4 The Voter’s Problem

There is a nasty flaw in the previous reasoning: Namely, the assumption that the voters and connectors are faultless. The recursive construction was careful to replicate the voters and connectors for this very reason, so that the construction actually tolerates failures in connectors and voters. Fundamentally, however, system reliability and availability are limited by the reliability of the top-level voters.

In some situations, voting can be carried all the way to the physical system. For example, by putting three motors on the airplane flaps or reactor rods and having them “vote” at the physical level, two can overcome the force of the third. Often the voter cannot be moved to the transducer. In those situations, the message itself must be failfast—it must have a checksum or some other consistency check—and the client must become the voter. Two examples of moving the voter to the client appear in Figure 3.16.

The first example in Figure 3.16 is a repetition of the banking system depicted in Figure 3.2. It shows the failure rates for the various system components and indicates that the ATM is the least reliable component. But using the pair-and-spare scheme produces instant repair times (repair in the sense of providing service): If one ATM fails, the client can step to the adjacent ATM. That moves the voter into the customer. If each ATM is failfast, the customer can operate with a correct one.

The second example in Figure 3.16 shows a disk pair. Data is read from either disk and written to both. Each disk and each controller is a failfast module. In addition, the wires are failfast, because the messages they carry are checksum protected. If the message checksum is wrong, the message is discarded. But the client (the processor in this case) must try the second channel (the second path) to the device if the first fails, much as the bank client must try the second ATM if the first fails. These are both examples of moving the voter into the client.

3.5.5 Summary

The foregoing presentation is from the traditional perspective of hardware fault tolerance. The next two sections develop similar ideas from a software perspective. To bridge these two perspectives, it is important to see the following connections:

Failfast is atomic. Failfast means that a hardware (or software) module either works correctly or does nothing. This is the atomic, all-or-nothing aspect of the ACID property (the A in ACID). Atomicity vastly simplifies reasoning about the way a system will fail.

Failfast is possible. There may be skepticism that it is possible to build failfast modules, but Figure 3.13 shows how to build arbitrarily complex hardware systems with the failfast property through suitable use of redundancy.

Reliable plus repair is consistent and durable. If modules fail and are repaired, then a module will always make correct state transitions (eventually), and the module state will be durable. The module will eventually be repaired and continue service. Consistent and durable are represented by the C and D of ACID.

To summarize, reliability plus repair means doing the right thing eventually; the module may stop, but eventually it is repaired and continues to function. Failfast is the A of ACID, and reliability plus repair are the A, C, and D of ACID. High availability modules can be built by n-plexing failfast modules and by using a failfast voting scheme.

Historically, transaction processing systems have been geared toward reliability—never losing the database. But for many reasons, they often delivered poor availability. Many TP systems were designed in an era when one failure per day was considered typical and when 98% availability was considered high. Technology advances and declining hardware prices have shifted the emphasis from reliability to availability, which means doing the right thing and doing it on time. Repair is the key to reliability, since with enough repair the job eventually gets done. Instant repair is the key to high availability: It almost always masks the failure such that module failures do not cause a denial of service. Combined with failfast, instant repair makes all single faults appear to be soft faults. Both TMR and pair-and-spare provide instant MTTR for hardware faults. These techniques make it possible to buy conventional components off the shelf and combine them to build super-reliable and super-available modules. The next sections show how to achieve instant software MTTR, implying highly available software systems.

3.6 Software Is the Problem

Both the Japanese study and the Tandem study of the causes of system failures point to software as a major source of problems (Subsection 3.3.2). There is a clear trend toward using software to mask hardware, environmental, operations, and maintenance faults. Thus, as all the other faults are masked, the software residue remains. In addition, software is being used to automate operations and simplify maintenance. All this implies millions of new lines of code. In general, production programs engineered using the best techniques (structured programming, walk-throughs, careful code inspections, extensive quality assurance, alpha and beta testing) have two or three bugs per thousand lines of code. Using this rule of thumb, a few million lines of code will have a few thousand bugs—megalines have kilobugs.

It is important to realize that perfect software is possible—it’s just a matter of time and money. The following program, for example, is perfect at adding unsigned integers modulo the machine word size:

As an amusing side note, our first version of this program had two bugs! It advertised add rather than modulo word size add, and it used signed integers, which meant it would get overflow and underflow traps on some machines. Now that it has been through QA, it is a perfect program: It does what it says it does.

Writing perfect software takes time for careful design, and money to pay for it. The U.S. space shuttle software is a case in point. At present, it costs $5,000 per line of code. This price3 includes careful design, code reviews, testing, and then more testing. Yet each time a shuttle flies, the pilots are given a known bug list. One such bug was that if two people typed on two keyboards at the same time, the input buffer would get the OR of the two keyboard inputs (the workaround was to only use one keyboard at a time). How could such a gross error get past such an expensive test process? Obviously, the U.S. government did not have enough time and money. No one, though, has more time and money than the U.S. government, which means that for practical purposes, perfect software of substantial complexity is impossible until someone breeds a species of super-programmers.

Few people believe design bugs can be eliminated. Good specifications, good design methodology, good tools, good management, and good designers are all essential to quality software. These are the fault-prevention approaches, and they do have a big payoff. However, after implementing all these improvements, there will still be a residue of problems.

3.6.1 N-Version Programming and Software Fault Tolerance

The main hope of dealing with design faults is for designers to develop techniques to tolerate design faults, much as hardware designers are able to tolerate hardware faults. Of course, hardware design is software, so hardware designers have the same problem: Their techniques to tolerate physical hardware faults do not mask design faults. There are two major software fault tolerance techniques:

N-version programming. Write the program n times, test each program carefully, and then operate all n programs in parallel, taking a majority vote for each answer. The resulting design diversity should mask many failures.

Transactions. Write each program as an ACID state transformation with consistency checks. At the end of the transaction, if the consistency checks are not met, abort the transaction and restart. Rerunning the transaction the second time should work.4

Both these approaches are statistical and both have merit, but both can fail if there is a fault in the original specification.

The n-version programming approach is expensive to implement and to maintain. That is because to get a majority, n must be at least 3. In addition, the n-version programming approach suffers from the “average IQ” problem. If n students are given a quiz, all of them will get the easy problems right, some will get the hard problems right, and almost none will get the hardest problem right (if it is a good quiz). Also, several will make the same mistakes. An n-version program taking this quiz would score in the 60th percentile. It would solve all the easy problems and might even solve a few of the harder problems. However, there would be no consensus, or consensus would be wrong, on the hardest problems.

A final problem with n-version programming is that module repair is not trivial. Since each module has a completely different internal state, one cannot simply copy the state of a good module to the state of a failing module. Without repair, n-plexing has a worse MTTF than simplexing (see Table 3.15).

N-version programming is often used in high-budget projects employing many low-budget programmers. You can imagine the controversy surrounding this idea. Better results might be obtained by spending three times more on better-quality programmers and on better infrastructure, or on more careful testing of one program.

3.6.2 Transactions and Software Fault Tolerance

Transactions are even more of a gamble. When a production computer system crashes due to software, computer users do not wait for the software to be fixed. They don’t wait for the next release, but instead restart the system and expect it to work the next time; after all, they reason, it worked yesterday. By using transactions, a recent consistent system state is restored so that service can continue. The theory is that it was a Heisenbug that crashed the system. A Heisenbug is a transient software error (a soft software error) that only appears occasionally and is related to timing or overload. Heisenbugs are contrasted to Bohrbugs which, like the Bohr atom, are good, solid things with deterministic behavior.

Although this is preposterous, the test of a theory is whether it explains the facts—and the Heisenbug theory does explain many observations. For example, a careful study by Adams [1984] of all software faults of large IBM systems over a five-year period showed that most bugs were Heisenbugs. The Adams study dichotomized bugs into benign bugs—ones that had bitten only one customer once—and virulent bugs—ones that had bitten many customers or had bitten one customer many times. The study showed that the vast majority (well over 99%) of the bugs were benign (Heisenbugs). Adams also concluded from this study that customers should not rush to install bug fixes for benign bugs, as the expense and risk are unjustified.

There are several other instances of the Heisenbug idea. Most large software systems have data structure repair programs that traverse data structures, looking for inconsistencies. Called auditors by AT&T and salvagers by others, these programs heuristically repair any inconsistencies they find. The code repairs the state by forming a hypothesis about what data is good and what data is damaged beyond repair. In effect, these programs try to mask latent faults left behind by some Heisenbug. Yet, their techniques are reported to improve system mean times to failure by an order of magnitude (for example, see the discussion of functional recovery routines in Mourad [1985] or in Chapter 8 of this book).

Heisenbug proponents suggest crashing the system and restarting at the first sign of trouble; this is the failfast approach. It appears to make things worse, since the system will be crashing all the time, and the database and network will be corrupted when the system is restarted. This is where transactions come in. Transactions, and their ACID properties, have four nice features:

Isolation. Each program is isolated from the concurrent activity of others and, consequently, from the failure of others.

Granularity. The effects of individual transactions can be discarded by rolling back a transaction, providing a fine granularity of failure.

Consistency. Rollback restores all state invariants, cleaning up any inconsistent data structures.

These features mean that transactions allow the system to crash and restart gracefully; the only thing lost is the time required to crash and restart. Transactions also limit the scope of failure by perhaps only undoing one transaction rather than restarting the whole system. But the core issue for distributed computing is that the whole system cannot be restarted; only pieces of it can be restarted, since a single part generally doesn’t control all the other parts of the network. A restart in a distributed system, then, needs an incremental technique (like transaction undo) to clean up any distributed state. Even if a transaction contains a Bohrbug, the correct distributed system state will be reconstructed by the transaction undo, and only that transaction will fail.

The programming style of failfast software designs is called defensive programming by analogy with the defensive automobile driving style advocated by traffic-safety experts. Defensive programming advocates that every software module check all its inputs and raise an exception if the inputs are incorrect. This essentially makes the software module failfast. The module checks all its parameters, and as it traverses internal data structures it checks their integrity. To give a specific example, a program traversing a doubly linked list checks the back-pointer in the next block to be sure that it points to the previous block, and the program checks other redundant fields in the block for sanity. If a list element does not satisfy these tests, then an error has been detected, and it is repaired by discarding the block or by repairing it. Whenever a module calls a subroutine, the callee checks the parameters for sanity, and the caller checks the routine’s results. In case an error is found, an exception handler is invoked. Exception handlers are much like the repair programs mentioned earlier in this section. The exception handler either masks the exception (if it is an internal inconsistency that can be repaired), or the module reflects the exception back to the caller (if it is the caller’s error). In extreme cases, the exception handler cannot mask the fault and, consequently, reflects it as a transaction abort, a subsystem restart, or another coarse form of recovery.

Failfast creates a need for instant crash and restart. This again may seem a preposterous approach, but computer system architectures are increasingly adopting this approach. The concept of process pair (covered in Subsection 3.7.3) specifies that one process should instantly (in milliseconds) take over for the other in case the primary process fails. In the current discussion, we take the more Olympian view of system pairs, that is two identical systems in two different places. The second system has all the data of the first and is receiving all the updates from the first. Figure 3.2 has an example of such a system pair. If one system fails, the other can take over almost instantly (within a second). If the primary crashes, a client who sent a request to the primary will get a response from the backup a second later. Customers who own such system pairs crash a node once a month just as a test to make sure that everything is working—and it usually is.

If Heisenbugs are the dominant form of software faults, then failfast plus transactions plus system pairs result in software fault tolerance. Geographically remote system pairs tolerate not just Heisenbugs, but many other problems as well. They tolerate environmental faults, operator faults, maintenance faults, and hardware faults. Two systems in two different places are not likely to have the same environmental problems: They are on different power grids, different phone grids, and different earthquake faults, and they have different weather systems overhead. They have independent operations staffs, different maintenance personnel, and different hardware. All this means that the two systems have largely independent failure modes. Section 12.6 develops the concept of system pairs in more detail.

3.6.3 Summary

Software faults are the dominant source of system failures. All other faults can be masked with a combination of redundancy, geographic diversity, and software to automate tasks. Software can automate system operations and mask operations and maintenance failures. Software faults, however, remain an unsolved problem.

There are two approaches to software fault tolerance: n-version programming and transactions. The two approaches could be combined. Advocates of n-version programming aim to combine several incorrect programs into a better, more reliable one. N-version programming may also be a good way to write failfast programs. Transaction advocates aim to detect incorrect programs and minimize their effects by undoing them. Transactions encourage a failfast design by allowing the system to quickly crash and restart in the most recent consistent state. By having a standby system, restart can begin within milliseconds.

3.7 Fault Model and Software Fault Masking

The application of the pair-and-spare or n-plex techniques to software modules is not obvious. How do you pair-and-spare a software module? How do you n-plex messages, remote procedure calls, and the like? The answer to these questions—process pairs—is neither trivial nor a direct application of the hardware n-plex and pair-and-spare approaches described in the previous section. To the authors’ knowledge, the best approach to process pairs was worked out in an unpublished classic written by Butler Lampson and Howard Sturgis in 1976 at Xerox. At about the same time Joel Bartlett designed and implemented similar ideas for Tandem’s Guardian operating system. The Lampson-Sturgis model has widely influenced subsequent work in the field; but, unfortunately it is not widely available. Bartlett’s work is even more inaccessible. The presentation here borrows heavily from those original works. The process pair discussion comes from experience with Bartlett’s design.

Designing fault-tolerant programs requires a model. The model must define correct behavior, and if the programs are to deal with faults, the model must describe the kinds of faults and their relative frequencies. Given such a model, programs can be written, system reliability can be estimated using probabilistic methods, and proofs that the programs are correct can be made with any desired degree of formality. The validity of the model cannot be established by proof, since there is no formal model of reality. The strongest possible claim is that the physical system behaves like the model with probability p, and that p is very close to 1. This claim can only be established empirically, by estimating p from measurements of real systems.

The model here involves three entity types: processes, messages, and storage.5 Each has a set of desired behaviors and a set of failure behaviors. This section shows how to transform each of the three entities from failfast entities into highly reliable and even highly available entities.

Faulty behavior is dichotomized into expected faults (those tolerated by the design), and unexpected faults (those that are not tolerated). Unexpected faults can be characterized as dense faults or Byzantine faults:

Dense faults. The algorithms will be n-fault tolerant. If there are more than n faults within a repair period, the service may be interrupted.

Byzantine faults. The fault model postulates certain behavior—for example it may postulate that programs are failfast. Faults in which the system does not conform to the model behavior are called Byzantine.

It is highly desirable that the system be failfast in the dense fault cases. That is, if there are more than n-faults in the repair window, the system should stop rather than continue the computation in some unspecified way. It is possible to measure the rate of unexpected faults in real systems and observe that the rate is small. Some representative measurements appeared in Sections 3.3 and 3.4.

Picking the model is a very painful and delicate process. One is constantly tom between simplicity and completeness. For example, when a storage module breaks and is repaired, in our model it returns to service in the empty state. A more complete model would have two kinds of repair. But a more complete model would be much bigger and would not make the ideas much clearer. The goal here is to provide a basis for understanding the fundamental issues and algorithms.

3.7.1 An Overview of the Model

The model describes each aspect of the system—storage, processors, and communications—by presenting a program that simulates the behavior of such entities, complete with their failure characteristics. For example, a storage module reads and writes data at storage addresses, but it also occasionally writes the data to the wrong place, invalidates a page, or damages the whole store (the latter two events require the store to be repaired). Given these errant storage modules, the presentation then shows programs to mask the failures through failvote duplexing and writes a program to repair the failures in the background.

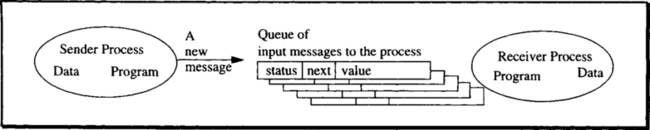

The presentation then models processes and messages. Messages can be lost, duplicated, delayed, corrupted, and permuted. By implementing sessions, timeouts, and message sequence numbers, all message failures are converted to lost messages. By combining this simple failure model with message acknowledgment and sender timeout plus message retransmission, the message system becomes highly available.

These techniques for building reliable stores and reliable messages demonstrate how software masks hardware faults, but they have little to do with masking software faults. Still, the presentation is instructive in that it sets the stage for the software fault tolerance discussion that follows.

Processes fail by occasionally being delayed for some repair period, having all their data reset to the null state, and then having all their input and output messages discarded. The presentation then uses this model to show how to build process-pairs. One process, called the primary, does all the work until it fails; then the second process, called the backup, takes over for the primary and continues the computation. During normal processing, the primary periodically sends I’m Alive messages to the backup. If the backup does not receive an I’m Alive message from the primary for a couple of messages periods, it assumes the primary has failed and takes over for the primary. Three kinds of takeover are described:

Checkpoint-restart. The primary records its state on a duplexed storage module. At takeover, the backup starts by reading these duplexed storage pages.

Checkpoint message. The primary sends its state changes as messages to the backup. At takeover, the backup gets its current state from the most recent message.

Persistent. The backup restarts in the null state and lets the transaction mechanism clean up (undo) any recent uncommitted state changes.

The benefits and programming styles of these three forms of process pairing are contrasted. To preview that discussion:

Quick repair. Process pairs obtain high availability processes by providing quick process repair.

Basic Pairs must Checkpoint. Certain programs are below the transaction level of abstraction and must therefore use some form of checkpointing to get highly available program execution. Examples of such primitive programs are the transaction mechanism itself, the operating system kernel, and the programs that control physical devices (disks and communications lines).

Persistent is Simple. Checkpointing of any sort is difficult to understand. Most people will want to use transactional persistent processes instead.

Process pairs mask hardware failures (processor failures) as well as transient software failures (Heisenbugs). As such, they are the key to software fault tolerance.

This concludes the overview. The following discussion turns to describing the behavior of storage, processes, and messages by the behavior of programs that simulate their behavior. The Lampson-Sturgis model is very instructive and provides a clear way of contrasting some subtle issues. However, the material is very challenging. Readers who do not care to delve in depth into this subject may wish to skim the rest of this section.

3.7.2 Building Highly Available Storage

Reliable storage is built as follows: First, the basic storage operations and failure behavior are defined. Then higher-level operations are defined; with high probability, these mask the failure behavior by n-plexing the devices. This is the analog of the hardware discussion of Section 3.6, but it is less abstract, since specific modules and specific failure modes are involved.

3.7.2.1 The Structure of Storage Modules

A storage module contains an array of pages and a status flag. If the module status is FALSE, then it has failed, and all operations on it return FALSE. Each page of a store has an address, a value, and a status. Addresses are positive integers up to some limit. The status is either TRUE or FALSE. If the page status is FALSE, the page value is invalid; otherwise, the page value stores the data most recently written to it. Two operations are defined on storage modules—one to write page values, and another to read them. Rewriting a page makes it valid (makes its status TRUE). Intuitively, these definitions are designed to model disks or RAM disks.

3.7.2.2 Definition of Store and of Store Read and Write

The definitions of the data structures for the programs are given in the following listing and are illustrated in Figure 3.17.

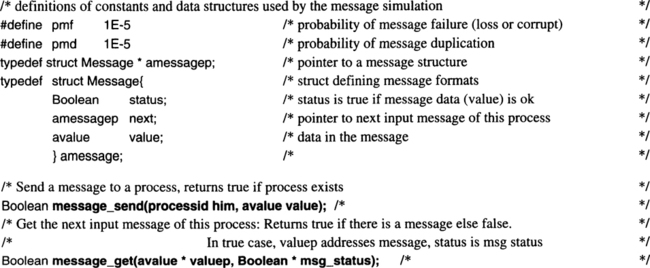

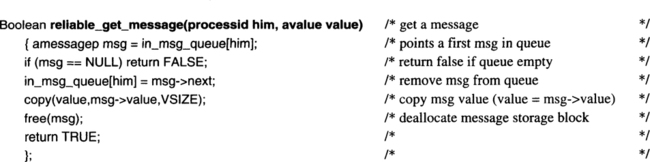

Storage objects support two operations: read and write. They are defined by the simple code fragments that follow. Notice in particular that writes may occasionally fail (with probability pwf) by having no effect.

These programs model, or simulate, durable storage devices such as disks, tapes, and battery-protected electronic RAM. For simplicity, the code does not model soft read faults (a read that fails but will be successful on retry). It does model the simple case of a write occasionally having no effect at all (null writes in the third statement of store_write()). This happens rarely, with a probability pwf (probability of write failure). As explained in the next paragraph, address faults (a write of a page other than the intended page) are modeled by page decay (spontaneous page failure) and by null writes (writes that have no effect).

The model assumes that reads and writes of incorrect locations produce a FALSE status flag and consequently are detectable errors. Both appear to be a spontaneous decay of the page (see below). Incorrect reads and writes are typically detected by storing the page address as part of the page value, so that a read of a wrong page produces a FALSE status flag. This mechanism also detects a write of a correct value to an incorrect address when the overwritten data is subsequently read, because the page address stored in the value will not match the page’s store address. In addition, each page is covered by a checksum that is used as follows:

Write. When a page is written, the writer computes the page checksum and stores it in the page, and

Read. When the page is read, the checksum is recomputed from the value and compared to the original checksum stored in the page.

If the checksums do not match, the page is invalid. All this validity checking is implicit in the status flag.

3.7.2.3 Storage Decay

Each store and each page may fail spontaneously, or a page may fail due to an incorrect store_write(). The spontaneous failure is modeled by a decay process for each store. Operating in the background, this decay process occasionally causes a page to become invalid (status = FALSE) or even invalidates the entire store. The model postulates that store and page errors are independent and that the frequency of such faults obeys a negative exponential distribution with means MTTVF and MTTSF, respectively. Table 3.10 suggests values for such means. The storage decay process is given in the code segment that follows.

As defined, storage gradually decays completely. According to the parameters described earlier, half the stores will have decayed after three years. Such stores are not reliable—reliable devices continue service from their pre-fault state when the fault is repaired. The model assumes that each store and each store page are failfast and are repaired and returned to service in an empty state; that is, all pages have status = FALSE. Algorithms presented next will make them reliable.

3.7.2.4 Reliable Storage via N-Plexing and Repair

In Section 3.6, we saw that repair is critical to availability. The goal is to build reliable stores from off-the-shelf, unreliable components. A reliable store can be constructed by n-plexing the stores, by reading and writing all members of an n-plex group, and by adding a page repair process for each group of stores. Storage is assumed to have exponentially distributed repair times with a mean of a few hours (MTSR ≈ 104 seconds). This includes the latency to detect storage faults.