Global resource serialization (GRS)

In a multitasking, multiprocessing environment, resource serialization is the technique used to coordinate access to resources that are used by more than one application task. When multiple users share data, a way to control access to that data is needed. Users that update data, for example, need exclusive access to that data. If several users try to update the same data at the same time, the result is data corruption. In contrast, users who only read data can safely access the same data at the same time.

Global Resource Serialization (GRS) is a z/OS component that offers the control needed to ensure the integrity of resources in a multisystem sysplex environment. Combining the z/OS systems that access shared resources into a global resource serialization complex enables you to serialize resources across multiple systems.

This chapter presents an overview of GRS covering the following topics:

•GRS benefits

•Resource access and GRS queue

•GRS services: enqueue, dequeue, and reserve

•GRS configurations: ring and star topology

•Migrating to a GRS star configuration

•Operational changes

•GRS exits

•GRS operator commands

4.1 GRS introduction

Figure 4-1 GRS introduction

GRS introduction

In a multisystem sysplex, z/OS provides a set of services that applications and subsystems can exploit. Multisystem applications reside on more than one z/OS system and give a single system image to the users. This requires serialization services that extend across z/OS systems.

GRS provides the services to ensure the integrity of resources in a multisystem environment. Combining the systems that access shared resources into a global resource serialization complex enables serialization across multiple systems.

GRS complex

In a GRS complex, programs can serialize access to data sets on shared DASD volumes at the data set level rather than at the DASD volume level. A program on one system can access one data set on a shared volume while other programs on any system can access other data sets on the same volume. Because GRS enables jobs to serialize resources at the data set level, it can reduce contention for these resources and minimize the chance of an interlock occurring between systems.

GRS ENQ

An ENQ (enqueue) occurs when GRS schedules use of a resource by a task. z/OS offers several techniques for implementing serialization (or semaphores) among dispatchable units (TCBs and SRBs), such as enqueues (ENQ), z/OS locks, and z/OS latches. GRS deals only with resources protected by ENQs.

4.2 Resource access and GRS queue

Figure 4-2 Resource access and GRS queue

Resource access and GRS queue

When a task (executing a program) requests access to a serially reusable resource, the access can be requested as exclusive or shared. When GRS grants shared access to a resource, no exclusive requester can hold the resource at the same time. Likewise, when GRS grants exclusive access to a resource, all other requesters for the resource wait until the exclusive requester frees the resource.

Because global resource serialization enables jobs to serialize their use of resources at the data set level, it can reduce contention for these resources and minimize the chance of an interlock occurring between systems. This ends the need to protect resources by job scheduling. Because global resource serialization maintains information about global resources in system storage, it does away with the data integrity exposure that occurs when there is a system reset while a reserve exists. A global resource serialization complex also allows serialization of shared logical resources – resources that might not be directly associated with a data set or DASD volumes. An ENQ with a scope of SYSTEMS might be used to synchronize processing in multisystem applications.

GRS resource requests

GRS manages the requests for a resource on a first-in, first-out (FIFO) basis. To understand how GRS manages a queue, consider the example shown in Figure 4-2:

1. At instance T1, user1 has exclusive access to the resource; all other users wait in the queue.

2. When user1 releases the resource, the next user in the queue (user2) receives access to the resource. User2 requested shared access. The following users in the queue requesting shared access also get access to the resource, as shown in the T2 instance. The queue stops at the next user waiting for exclusive access, user6. Note that users wanting shared access behind user6 still wait in the queue. This behavior prevents user6 from waiting indefinitely while later shared requests overtake it. As a consequence, a single exclusive requester can hold up the rest of the queue.

3. When the last shared user releases the resource, user6 (T3) receives access. Because user6 requested exclusive access, only user6 uses the resource. It doesn’t matter whether the access requested by the next user in the queue is shared or exclusive.

4. When user6 releases the resource, the next user in the queue (user7) receives access. Because the requested access is shared, GRS follows the queue, granting shared access, stopping at the next user requesting exclusive access, user10, as shown in T4.
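The queue behavior in steps 1 to 4 can be sketched in a few lines of Python. This is only an illustrative model, not how GRS is implemented: the class name `ResourceQueue`, the mode strings `"EXC"` and `"SHR"`, and the assumption that user2 to user5 and user7 to user9 are the shared requesters in Figure 4-2 are all introduced here for the example.

```python
from collections import deque


class ResourceQueue:
    """Toy model of the FIFO queue for one resource (hypothetical, not GRS code)."""

    def __init__(self):
        self.owners = []        # (user, mode) pairs that currently hold the resource
        self.waiters = deque()  # (user, mode) pairs still waiting, in arrival order

    def request(self, user, mode):
        """Queue a request; mode is 'EXC' or 'SHR'. Returns True if granted at once."""
        if mode not in ("EXC", "SHR"):
            raise ValueError("mode must be 'EXC' or 'SHR'")
        self.waiters.append((user, mode))
        self._grant()
        return user in self.owner_names()

    def release(self, user):
        """Drop the user's ownership and let the head of the queue advance."""
        self.owners = [(u, m) for u, m in self.owners if u != user]
        self._grant()

    def owner_names(self):
        return [u for u, _ in self.owners]

    def _grant(self):
        # Only the head of the queue is ever considered, so a shared request
        # can never overtake an exclusive request that arrived before it.
        while self.waiters:
            _, mode = self.waiters[0]
            if mode == "EXC" and self.owners:
                break  # exclusive needs the resource completely free
            if mode == "SHR" and any(m == "EXC" for _, m in self.owners):
                break  # shared cannot coexist with an exclusive owner
            self.owners.append(self.waiters.popleft())


def figure_walkthrough():
    """Replay T1..T4 with an assumed mix of shared and exclusive requesters."""
    modes = ["EXC", "SHR", "SHR", "SHR", "SHR", "EXC", "SHR", "SHR", "SHR", "EXC"]
    queue = ResourceQueue()
    for number, mode in enumerate(modes, start=1):
        queue.request(f"user{number}", mode)
    snapshots = [queue.owner_names()]            # T1
    queue.release("user1")
    snapshots.append(queue.owner_names())        # T2
    for user in ("user2", "user3", "user4", "user5"):
        queue.release(user)
    snapshots.append(queue.owner_names())        # T3
    queue.release("user6")
    snapshots.append(queue.owner_names())        # T4
    return snapshots
```

Calling `figure_walkthrough()` returns the list of owners at each instance, which makes it easy to see that the shared requesters behind user6 are not granted access until user6 has held and released the resource.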

4.3 Resource scope: Step, system, systems

Figure 4-3 Resource scope: Step, system, systems

Resource scope: Step, system, systems

When requesting access to a resource, the program must provide the scope of the resource. The scope can be STEP, SYSTEM, or SYSTEMS. To avoid data exposure or an impact on system performance, the correct resource scope must be given to GRS when requesting serialized access to a resource. Figure 4-3 illustrates how resource serialization behaves according to the scope requested:

1. Suppose TASK1, running in the CICSA address space, wants to alter an internal area named TAB_A, which is not used at the moment of the request. TAB_A is also accessible by other tasks running in the same address space, CICSA. To ensure data integrity, TASK1 must request exclusive access to TAB_A. A different resource, with the same name, exists also in another address space (CICSB) in system SYSB. Let’s see what happens with TAB_A with different scope scenarios:

STEP: GRS prevents any other task from accessing TAB_A in the CICSA address space. If TASK2 requests access, TASK2 must wait until TASK1 releases TAB_A.

SYSTEM: GRS prevents any other task in any address space in SYSA from using a resource with the same name.

SYSTEMS: GRS prevents any other task in any address space in the Parallel Sysplex from using a resource with the name TAB_A. As a result, if a task in the CICSB address space requests access to its own TAB_A, GRS queues that task until TASK1 releases TAB_A in the CICSA address space.

2. Suppose JOB A1, in SYSA, requests exclusive access to data set APPLIC.DSN, which is also accessible by other systems. To protect data set integrity, the resource access must be SYSTEMS.

3. Suppose JOB A2 requests access to data set SYS1.PARMLIB on VOL140, cataloged only in SYSA. At the same time JOB B2, in SYSB, requests access to SYS1.PARMLIB on VOL150, cataloged only in SYSB. Because these are different data sets that share a name, both jobs must request access with scope SYSTEM. Otherwise, the second request would wait even though the two jobs access different resources.
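One way to picture the three scopes is as the key under which a request competes with other requests: the narrower the scope, the more of the requester's location becomes part of the key. The following Python sketch illustrates that idea only. The `Requester` type, the function names, and the major name `APPL` are hypothetical and chosen for this example.

```python
from typing import NamedTuple


class Requester(NamedTuple):
    system: str  # z/OS image, for example "SYSA"
    asid: str    # address space, for example "CICSA"


def serialization_key(qname, rname, scope, who):
    """Return the key under which a request competes with other requests."""
    if scope == "STEP":
        # Serialized only within the requesting address space.
        return (qname, rname, scope, who.system, who.asid)
    if scope == "SYSTEM":
        # Serialized across all address spaces of one z/OS image.
        return (qname, rname, scope, who.system)
    if scope == "SYSTEMS":
        # Serialized across every system in the complex.
        return (qname, rname, scope)
    raise ValueError(f"unknown scope: {scope}")


def compete(scope, first, second, qname="APPL", rname="TAB_A"):
    """True when two requesters for the same name are serialized against each other."""
    return (serialization_key(qname, rname, scope, first)
            == serialization_key(qname, rname, scope, second))


task1 = Requester("SYSA", "CICSA")
task2 = Requester("SYSA", "CICSA")
cicsb_task = Requester("SYSB", "CICSB")
```

With these definitions, `compete("STEP", task1, task2)` is true, while `compete("SYSTEMS", task1, cicsb_task)` is also true even though the two TAB_A areas are unrelated, which is the unnecessary wait that the TAB_A example warns about.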

Local resource

A local resource is a resource requested with a scope of STEP or SYSTEM. It is serialized only within the z/OS processing the request for the resource. If a z/OS is not part of a global resource serialization complex, all resources (with the exception of resources serialized with the RESERVE macro), regardless of scope (STEP, SYSTEM, or SYSTEMS), are local resources.

Global resource

A global resource is a resource requested with a scope of SYSTEMS. It is serialized among all systems in the complex.

RESERVE macro

The RESERVE macro serializes access to a resource (a data set on a shared DASD volume) by obtaining control of the volume on which the resource resides to prevent jobs on other systems from using any data set on the entire volume. There is an ENQ associated with the volume RESERVE; this is required because a volume is reserved by the z/OS image and not the requester’s unit of work. As such, the ENQ is used to serialize across the requesters from the same system.

4.4 GRS macro services

Figure 4-4 GRS macro services

GRS macro services

GRS services are available through assembler macros: ENQ, DEQ, ISGENQ, and RESERVE. The issuer of the ENQ API provides a qname, rname, and scope to identify the resource to serialize; this is all that the DISPLAY GRS command can use to present information about the resource. In many cases, it is hard for the operator or systems programmer to understand what these values represent. Global resource serialization provides the ISGDGRSRES installation exit to allow the application to add information about a given resource to the DISPLAY GRS output.

ISGENQ macro

The ISGENQ macro combines the serialization abilities of the ENQ, DEQ, and RESERVE macros. ISGENQ supports AMODE 31 and 64 in primary or AR ASC mode.

With the ISGENQ macro you can:

•Obtain a single resource or multiple resources with or without associated device reserves.

•Change the status of a single existing ISGENQ request or multiple existing ISGENQ requests.

•Release serialization from a single or multiple ISGENQ requests.

•Test an obtain request.

•Reserve a DASD volume.

Local and global resource

When an application uses a macro (ISGENQ, ENQ, DEQ, and RESERVE) to serialize resources, global resource serialization uses the RNL, various global resource serialization installation exits, and the scope on the macro to determine whether a resource is a local resource or a global resource, as follows:

•A local resource is a resource requested with a scope of STEP or SYSTEM. It is serialized only within the system processing the request for the resource. If a system is not part of a global resource serialization complex, all resources (with the exception of resources serialized with the RESERVE macro), regardless of scope (STEP, SYSTEM, SYSTEMS), are local resources.

•A global resource is a resource requested with a scope of SYSTEMS or SYSPLEX. It is serialized among all systems in the complex.

The ISGENQ, ENQ, DEQ, and RESERVE macros identify a resource by its symbolic name. The symbolic name has three parts:

– Major name: qname

– Minor name: rname

– Scope: The scope is one of the following:

STEP - SYSTEM - SYSTEMS - SYSPLEX

The resource_scope parameter determines which other tasks, address spaces, or systems can use the resource.

Resource serialization

Resource serialization offers a naming convention to identify the resources. All programs (z/OS inclusive) that share the resources must use the major_name, minor_name, and scope value consistently.

A resource could have a name of APPL01,MASTER,SYSTEM. The major name (qname) is APPL01, the minor name (rname) is MASTER, and the scope is SYSTEM. Global resource serialization identifies each resource by its entire symbolic name. That means a resource that is specified as A,B,SYSTEMS is considered a different resource from A,B,SYSTEM or A,B,STEP because the scope of each resource is different. In addition, the ISGENQ macro uses the scope of SYSTEMS and the scope of SYSPLEX interchangeably, so a resource with the symbolic name of A,B,SYSTEMS would be the same as a resource with the name A,B,SYSPLEX.
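The identity rule described here can be expressed as a small normalization step. The sketch below is illustrative only; the function name `resource_identity` is hypothetical, and the only behavior it encodes is what the paragraph states: the whole symbolic name identifies the resource, and SYSPLEX is treated as a synonym for SYSTEMS.

```python
VALID_SCOPES = {"STEP", "SYSTEM", "SYSTEMS", "SYSPLEX"}


def resource_identity(qname, rname, scope):
    """Return a comparable identity for a symbolic name (qname, rname, scope)."""
    scope = scope.upper()
    if scope not in VALID_SCOPES:
        raise ValueError(f"unknown scope: {scope}")
    if scope == "SYSPLEX":
        scope = "SYSTEMS"  # the two scopes are used interchangeably
    return (qname, rname, scope)
```

Two requests refer to the same resource exactly when their identities compare equal, so `resource_identity("A", "B", "SYSTEMS")` equals `resource_identity("A", "B", "SYSPLEX")` but differs from `resource_identity("A", "B", "SYSTEM")`.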

Obtaining a resource is done through the ENQ (or ISGENQ) macro, which requests, by name, either shared or exclusive control of one or more serially reusable resources. Once control of a resource has been assigned to a task, it remains with that task until the DEQ macro is issued. If any of the resources are not available, the task is placed in a wait condition until all of the requested resources are available. If the request is granted immediately, the task is not placed in a wait state and execution continues with the next instruction after the ENQ.

Release resource access

The resource owner releases the resource by issuing the DEQ macro (or ISGENQ with REQUEST=RELEASE). Also, when a task ends abnormally, the recovery termination manager (a z/OS component) automatically issues DEQs for all the ENQ resources held by the abending task.


Note: z/OS services use standard major names for resource serialization. For data set access, the major name is SYSDSN and the minor name is the data set name.


4.5 RESERVE macro service

Figure 4-5 RESERVE macro service

RESERVE macro service

The RESERVE macro reserves a DASD device for use by a particular system. The macro must be issued by each task needing access to a shared DASD. The RESERVE macro locks out other systems sharing the device. Reserve is a combination of GRS ENQ (to protect tasks of the same z/OS) and hardware I/O reserve that is implemented by the DASD I/O controller.

The hardware I/O reserve actually occurs when the first I/O is done to the device after the RESERVE macro is issued, from the z/OS system where the reserve originated. The synchronous reserve feature (SYNCHRES option) allows an installation to specify whether z/OS should obtain the hardware reserve for a device before granting the global resource serialization ENQ. This option can help jobs that have a delay between issuing the reserve request and the first I/O operation to the device. Before the SYNCHRES option was implemented, a deadlock situation was more likely to occur.

Unlike ENQ and DEQ, which can have a scope of STEP, SYSTEM, or SYSTEMS, the RESERVE macro has a default scope of SYSTEMS. When the reserving program no longer needs the reserved device, it must issue a DEQ to release the resource.

Collateral effects of RESERVE

The RESERVE macro serializes access to a resource (a data set on a shared DASD volume) by obtaining control of the entire volume. This is due to the hardware reserve function done by the DASD controller. Then, jobs on other systems are prevented from using any data set on this volume. Serializing access to data sets on shared DASD volumes by RESERVE macro protects the resource, although it might create several critical problems:

•When a task on one system has issued a RESERVE macro to obtain control of a data set, no programs on other systems can access any other data set on that volume. Protecting the integrity of the resource means delay of jobs that need the volume but do not need that specific resource, as well as delay of jobs that need only read access to that specific resource. These jobs must wait until the system that issued the reserve releases the device. The problem is worse now that 3390 volumes can have a very large capacity, such as 27 GB.

•Because the reserve ties up an entire volume, it increases the chance of an interlock (also called a deadlock) occurring between two tasks on different systems, as well as the chance that the lock expands to affect other tasks. (An interlock is unresolved contention for use of a resource.)

•A single system can monopolize a shared device when it encounters multiple reserves for the same device because the system does not release the device until DEQ macros are processed for all of those reserves. No other system can use the device until the reserving system releases the device. This is also called a long reserve.

•A system reset while a reserve exists terminates the reserve. The loss of the reserve can leave the resource in a damaged state if, for example, a program had only partly updated the resource when the reserve ended. This type of potential damage to a resource is a data integrity exposure.

An installation using GRS can override RESERVE macros without changing existing programs, by using the GRS RESERVE conversion resource name list (RNL) defined in SYS1.PARMLIB. This technique overcomes the drawbacks of the RESERVE macro and can provide a path for installation growth by increasing the availability of the systems and the computing power available for applications. With the conversion list, the RESERVE macro behaves as a SYSTEMS ENQ; in other words, GRS itself takes charge of the data set serialization.
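The difference between a hardware reserve and a converted RESERVE can be illustrated with a small conflict check. This is a simplified sketch under stated assumptions: requests are modeled as hypothetical `(system, volume, resource)` triples for exclusive access, the function name `conflicts` is invented for the example, and timing details such as when the hardware reserve is actually obtained are ignored.

```python
def conflicts(held, wanted, reserve_converted):
    """Return True if the `wanted` request must wait for the `held` request.

    held and wanted are (system, volume, resource) triples.
    reserve_converted=True models a RESERVE that is treated as a SYSTEMS ENQ.
    """
    held_system, held_volume, held_resource = held
    want_system, want_volume, want_resource = wanted
    same_resource = (held_volume, held_resource) == (want_volume, want_resource)

    if reserve_converted:
        # Treated as a SYSTEMS ENQ: only requests for the same resource collide.
        return same_resource

    if held_system != want_system:
        # Hardware reserve: other systems are locked out of the whole volume.
        return held_volume == want_volume

    # Same system: the ENQ that accompanies the reserve serializes requesters.
    return same_resource


payroll = ("SYSA", "VOL001", "PAYROLL.DATA")
inventory = ("SYSB", "VOL001", "INVENTORY.DATA")
```

Here `conflicts(payroll, inventory, reserve_converted=False)` is true because both data sets are on VOL001, whereas with `reserve_converted=True` the two jobs proceed in parallel.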

The RESERVE macro is only recommended to serialize data sets among z/OS systems located in different sysplexes. In this case the current GRS version cannot protect the data set resource.

4.6 Resource name list (RNL)

Figure 4-6 Resource name list (RNL)

Resource name list (RNL)

Some environments may require a different resource scope from that specified by the application through ENQ and ISGENQ macros. GRS allows an installation to change the scope of a resource, without changing the application, through the use of lists named Resource Name List (RNL).

There are three RNLs available:

SYSTEM INCLUSION RNL: Lists resources for requests with scope SYSTEM in the macros to be converted to scope SYSTEMS.

SYSTEMS EXCLUSION RNL: Lists resources for requests with scope SYSTEMS in the macros to be converted to scope SYSTEM.

RESERVE CONVERSION RNL: Lists resources for RESERVE requests for which the hardware reserve is to be suppressed and the requests are treated as ENQ requests with scope SYSTEMS.

Resource name list specification

By placing the name of a resource in the appropriate RNL, GRS knows how to handle this specific resource. The RNLs enable you to build a global resource serialization complex without first having to change your existing programs.

Note that all the RNLs have sysplex scope; that is, all the z/OS systems in the sysplex must share the same set of RNLs. Use the RNLDEF statement to define an entry in an RNL, as shown in Figure 4-6. Each RNL entry indicates whether the name is specific, generic, or a pattern, as follows:

•A specific resource name entry matches a search argument when the two are exactly the same.

•A generic resource name entry is a portion of a resource name. A match occurs whenever the specified portion of the generic resource name entry matches the same portion of the input search argument.

•A pattern entry uses RNL wildcarding to match resource names: an asterisk (*) matches any number of characters, up to the length of the respective major_name (up to 8) or minor_name (up to 255), and a question mark (?) matches a single character.

Using wildcarding, you can convert all RESERVEs to global ENQs with a single statement. To use RNL wildcarding you have to specify TYPE(PATTERN) in the RNLDEF statement.
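The three entry types and the scope conversions performed by the RNLs can be sketched as follows. This is an illustration, not the GRS search algorithm: the `RnlEntry` type and the function names are hypothetical, a generic entry is assumed to match on the leading portion of the minor name (or on any minor name when none is given), and interactions between the lists, such as a name that appears in more than one RNL, are deliberately not modeled.

```python
import re
from typing import NamedTuple, Optional


class RnlEntry(NamedTuple):
    kind: str                    # "SPECIFIC", "GENERIC", or "PATTERN"
    qname: str
    rname: Optional[str] = None  # None: any minor name (assumed for generic entries)


def _wildcard_match(pattern, text):
    # '*' matches any run of characters, '?' matches exactly one character.
    regex = "".join(
        ".*" if ch == "*" else "." if ch == "?" else re.escape(ch) for ch in pattern
    )
    return re.fullmatch(regex, text, flags=re.DOTALL) is not None


def entry_matches(entry, qname, rname):
    """Check one RNL entry against a resource name (the search argument)."""
    if entry.kind == "SPECIFIC":
        return entry.qname == qname and entry.rname == rname
    if entry.kind == "GENERIC":
        return entry.qname == qname and (
            entry.rname is None or rname.startswith(entry.rname)
        )
    if entry.kind == "PATTERN":
        return _wildcard_match(entry.qname, qname) and _wildcard_match(
            entry.rname if entry.rname is not None else "*", rname
        )
    raise ValueError(f"unknown entry type: {entry.kind}")


def _listed(rnl, qname, rname):
    return any(entry_matches(entry, qname, rname) for entry in rnl)


def effective_scope(qname, rname, scope, inclusion, exclusion):
    """Apply the SYSTEM inclusion and SYSTEMS exclusion lists to a request."""
    if scope == "SYSTEM" and _listed(inclusion, qname, rname):
        return "SYSTEMS"
    if scope == "SYSTEMS" and _listed(exclusion, qname, rname):
        return "SYSTEM"
    return scope


def reserve_is_converted(qname, rname, conversion):
    """True when a RESERVE is to be treated as a SYSTEMS ENQ."""
    return _listed(conversion, qname, rname)


# A single pattern entry that matches every RESERVE request.
convert_all_reserves = [RnlEntry("PATTERN", "*", "*")]
```

The `convert_all_reserves` list mirrors the single-statement conversion mentioned above: every major and minor name matches the pattern, so `reserve_is_converted` returns true for any RESERVE.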

You can use the DISPLAY GRS,RNL=xxxx command to display all the lists or just one of them. You can also use the SET GRSRNL=xxxx command to change the RNLs in the sysplex dynamically.

When an application uses the ENQ, ISGENQ, DEQ, or RESERVE macros to serialize resources, GRS uses RNLs and the scope on the ENQ, DEQ, or RESERVE macro to determine whether a resource is a local resource or a global resource.

In IEASYSxx, the parameter GRSRNL=EXCLUDE means that GRS treats all resources as local. Its purpose is to allow customers to use a serialization product other than GRS. However, even in this case GRS must be active, because certain z/OS components may use the RNL=NO option on the ENQ (or ISGENQ) macro to force global serialization through GRS.


Important: RNLs for every system in the GRS complex must be identical; they must contain the same resource name entries, and these must appear in the same order. During initialization, global resource serialization checks to make sure that the RNLs on each system are identical. If they are different, global resource serialization does not allow the system to join the complex.


GRS exits

You can use ENQ/DEQ exits ISGGSIEX, ISGGSEEX, and ISGGRCEX to do the following:

•Change resource names (major and minor names)

•Change the resource SCOPE

•Change the UCB address (for a RESERVE)

•Indicate to convert a RESERVE to ENQ or ENQ to a RESERVE (add a UCB specification)

•Indicate to bypass RNL processing

These exits are invoked only for SYSTEM and SYSTEMS requests. Note that they have local scope, unlike RNLs, which apply at the sysplex level. They are required, for example, in the following scenario: all z/OS systems share the same SYS1.PARMLIB, except one that has its own SYS1.PARMLIB (with the same name). In this case, the exit is activated on that z/OS system to make the resource local.

GQSCAN macro service

The GQSCAN macro extracts information about contention (users and resources) from global resource serialization. It is used by performance monitors such as RMF. It causes GRS messages to be exchanged through XCF links.

4.7 GRSRNL=EXCLUDE migration to full RNLs

Figure 4-7 GRSRNL=EXCLUDE option

GRSRNL=EXCLUDE option

Migrating from a GRSRNL=EXCLUDE environment to full RNLs does not require a sysplex-wide IPL for certain environments. A FORCE option for the SET GRSRNL=xx command processing can dynamically switch to the specified RNLs and move all previous requests to the appropriate scope (SYSTEM or SYSTEMS) as if the RNLs were in place when the original ENQ requests were issued. This feature eliminates the IPL of the entire sysplex when making this change.

Usage restrictions

The usage restrictions are enforced to ensure data integrity. The migration is canceled if any of these requirements are not met and GRSRNL=EXCLUDE remains in effect. Consider the following:

•When in GRS Ring mode, the ISG248I message is issued indicating that the SET GRSRNL command is not accepted in a GRSRNL=EXCLUDE environment.

•When in GRS Star mode, before the SET GRSRNL=xx command migration begins, message ISG880D prompts the user to confirm the use of the FORCE option, as follows:

ISG880D WARNING: GRSRNL=EXCLUDE IS IN USE. REPLYING FORCE WILL RESULT IN THE USE OF SPECIFIED RNLS. REPLY C TO CANCEL

Usage considerations

The following restrictions are required for data integrity:

•The command can be issued only in a single-system GRS star sysplex with GRSRNL=EXCLUDE.

•No ISGNQXIT or ISGNQXITFAST exit routines can be active, nor requests that were affected by one.

•No ISGNQXITBATCH or ISGNQXITBATCHCND exit routines can be active.

•No local resource requests can exist that must become global where that global resource is already owned. This could result if some requests were issued with RNL=NO.

•No resources with a MASID request can exist where only some of the requesters will become global. This could result if some requests were issued with RNL=NO or if some requests were issued with different scopes.

•No RESERVEs can be held that were converted by the ISGNQXITBATCH or ISGNQXITBATCHCND exit that do not get converted by the new RNLs. This could result if a resource was managed globally by an alternative serialization product before the migration, but afterwards is to be serialized by a device RESERVE.


Note: Any errors in changing the RNL configuration can lead to deadlocks or data integrity errors. After migrating to the specified RNLs, the only way to move back to GRSRNL=EXCLUDE is a sysplex-wide IPL. The FORCE option cannot be used to move from the existing RNLs to another set of RNLs. Long-held resources can delay such a change indefinitely; in that case, canceling the jobs that hold them, or even a sysplex-wide outage, is required.


4.8 GRS configuration modes

Figure 4-8 GRS configuration modes

GRS configuration modes

Starting with MVS/ESA Version 4, it is required that GRS is active in the sysplex. Any multisystem sysplex is thus required to activate global serialization for all members of the sysplex. Any global ENQ implies that all GRS address spaces must be aware of such ENQ. There are two GRS configuration modes available, which make this awareness possible.

GRS ring mode

When GRS is configured in ring mode, each system in the GRS complex maintains a list of all requests for global resources in the complex, built from the global ENQ/DEQ information that flows between the GRS systems in a ring topology. This is the older configuration, and it is not recommended for performance and availability reasons.

GRS star mode

You can define a GRS complex in a star mode configuration. It is still possible to run all z/OS releases using the ring configuration. The main change in star mode is that requests for global ENQ resources are managed through a coupling facility lock structure, and each GRS maintains only its own global requests. The star configuration has positive effects on sysplex growth, response time for global requests, continuous availability, and processor and storage consumption.

If you build a multisystem sysplex, you automatically build a GRS complex with it. When a system joins the sysplex, the system also joins the global resource serialization complex.

4.9 GRS ring configuration

Figure 4-9 GRS ring configuration

GRS ring configuration

The first implementation of GRS was ring mode, which was originally the only method for serializing requests for global resources. The ring consists of one or more GRS systems connected to each other by communication links. The links are used to pass information about requests for global resources from one GRS to another in the complex. Requests are made by passing a message or token, called the ring system authority message (RSA-message), between systems in a ring fashion. When a system receives the RSA, it inserts its global ENQ and DEQ information and passes the RSA along to the next GRS to copy. It also makes a copy of the global ENQ/DEQ information that was inserted by other GRS address spaces. When the RSA returns to the originating system, that system knows that all other GRS systems have seen its request, so the request is removed from the RSA. The originating GRS can now process those requests by adding them to the global resource queues, and can determine which jobs own resources and which jobs must wait for resources owned by other jobs. Each system takes this information and updates its own global queues.
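The circulation of the RSA-message can be pictured with a short simulation. This is a toy model under stated assumptions, not the ring protocol itself: the `RingSystem` class and `circulate` function are hypothetical, a request is represented as an `(origin, operation, resource)` tuple, and failure handling, RSA size limits, and timing are all left out.

```python
class RingSystem:
    """One member of the ring (hypothetical model, not a GRS control block)."""

    def __init__(self, name):
        self.name = name
        self.pending = []       # local global requests not yet placed in the RSA
        self.global_queue = []  # this system's copy of all global requests


def circulate(systems, rsa, hops):
    """Pass the RSA `hops` times along `systems`; list order is ring order."""
    for hop in range(hops):
        member = systems[hop % len(systems)]

        # Requests that have come back to their originator were seen by every
        # other system, so they leave the RSA and are processed locally.
        for request in [r for r in rsa if r[0] == member.name]:
            rsa.remove(request)
            member.global_queue.append(request)

        # Copy the requests that other systems inserted.
        for request in rsa:
            if request not in member.global_queue:
                member.global_queue.append(request)

        # Insert this system's own new requests before passing the RSA on.
        rsa.extend(member.pending)
        member.pending = []
    return rsa


def demo():
    """One ENQ from SYSA travelling once around a three-system ring."""
    ring = [RingSystem("SYSA"), RingSystem("SYSB"), RingSystem("SYSC")]
    ring[0].pending.append(("SYSA", "ENQ", "APPLIC.DSN"))
    rsa = circulate(ring, [], hops=4)  # SYSA, SYSB, SYSC, and back to SYSA
    return ring, rsa
```

After `demo()`, every system's `global_queue` holds the same request and the RSA is empty again. The sketch also shows why the cost grows with the ring: the originator cannot process its own request until the RSA has visited every other member.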

Systems participating in a ring are dependent on each other to perform global resource serialization processing. For this reason, the following areas are all adversely affected as the number of systems in a ring increases:

•Storage consumption

•Processing capacity

•Response time

•CPU consumption

•Availability/Recovery time

4.10 GRS ring topology

Figure 4-10 GRS ring topology

GRS ring topology

A global resource serialization ring complex consists of one or more systems connected by communication links. Global resource serialization uses the links to pass information about requests for global resources from one system in the complex to another.

Figure 4-10 shows a four-system global resource serialization ring complex. When all four systems in the complex are actively using global resource serialization, the complex and the ring are the same. A GRS complex consists of one or more systems connected to each other in a ring configuration by using:

•XCF communication paths (CTCs) and signalling paths through a coupling facility, for the z/OS systems that belong to a sysplex. If all z/OS systems belong to a sysplex, then GRS uses XCF services to communicate along the GRS ring; this configuration is called a sysplex matching complex.

•GRS-managed channel-to-channel (CTC) adapters, for the z/OS systems that do not belong to a sysplex; this configuration is called a mixed complex.

The complex shown has a communication link between each system and every other system; such a complex is a fully-connected complex. A sysplex requires full connectivity between systems. Therefore, when the sysplex and the complex are the same, the complex has full connectivity. Although a mixed complex might not be fully connected, a fully-connected complex allows the systems to build the ring in any order and allows any system to withdraw from the ring without affecting the other systems. It also offers more options for recovery if a failure disrupts ring processing.

The concept of the global resource serialization ring is important because, regardless of the physical configuration of systems and links that make up the complex, global resource serialization uses a ring processing protocol to communicate information from one system to another. Once the ring is active, the primary means of communication is the ring system authority message (RSA-message).

RSA-message

The RSA-message contains information about requests for global resources (as well as control information). It passes from one system in the ring to another. No system can grant a request for a global resource until other systems in the ring know about the request; your installation, however, can control how many systems must know about the request before a system can grant access to a resource. The RSA-message contains the information each system needs to protect the integrity of resources; different systems cannot grant exclusive access to the same resource to different requesters at the same time.

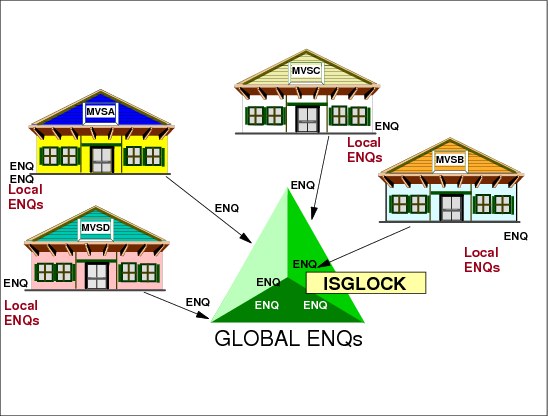

4.11 GRS star configuration

Figure 4-11 GRS star configuration

GRS star configuration

The star method of serializing global resources is built around a coupling facility in which the global resource serialization lock structure, ISGLOCK, resides. In a star complex, when an ISGENQ, ENQ, DEQ, or RESERVE macro is issued for a global resource, GRS uses information in the ISGLOCK structure to coordinate resource allocation across all z/OS systems in the sysplex.

In a star complex, GRS requires the use of the coupling facility for the serialization lock structure ISGLOCK. The coupling facility makes data sharing possible by allowing data to be accessed throughout the sysplex by ensuring the data remain consistent among each z/OS in the sysplex. The coupling facility provides the medium (global intelligent memory) in GRS to share information about requests for global resources between z/OS systems. Communication links (CF links) are used to connect the coupling facility with the GRS address spaces in the global resource serialization star sysplex.


Note: If you only have one coupling facility, using the star method is not recommended. Loss of that coupling facility would cause all systems in the complex to go into a wait state. In a production environment you should define at least two coupling facilities and implement GRS star instead of GRS ring.


4.12 GRS star topology

Figure 4-12 GRS star topology

GRS star topology

The star method of serializing global resources is built around a coupling facility in which the global resource serialization lock structure, ISGLOCK, resides. In a star complex, when an ISGENQ, ENQ, DEQ, or RESERVE macro is issued for a global resource, MVS uses information in the ISGLOCK structure to coordinate resource allocation across all systems in the sysplex.

Coupling facility lock structures

In a star complex, global resource serialization requires the use of the coupling facility. The coupling facility makes data sharing possible by allowing data to be accessed throughout the sysplex by ensuring the data remain consistent among each system in the sysplex. The coupling facility provides the medium in global resource serialization to share information about requests for global resources between systems. Communication links are used to connect the coupling facility with the systems in the global resource serialization star sysplex.

GRS uses contention detection and management capability of the XES lock structure to determine and assign ownership of a particular global resource. Each system maintains only a local copy of its own global resources, and the GRS coupling facility lock structure has the overall image of all system global resources in use.
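The division of knowledge in a star complex, where each system keeps only its own requests and the lock structure holds the overall picture, can be sketched as follows. This is a conceptual model only: `LockStructure` and `StarSystem` are hypothetical names, the lock structure is reduced to a dictionary keyed by resource name, each system is assumed to hold at most one request per resource, and queuing of waiters is not modeled.

```python
class LockStructure:
    """Stand-in for the coupling facility lock structure (greatly simplified)."""

    def __init__(self):
        self.interest = {}  # resource name -> {system name: "EXC" or "SHR"}

    def obtain(self, system, resource, mode):
        """Record ownership if compatible; return False when there is contention."""
        holders = self.interest.setdefault(resource, {})
        other_modes = [m for s, m in holders.items() if s != system]
        if other_modes and (mode == "EXC" or "EXC" in other_modes):
            return False
        holders[system] = mode
        return True

    def release(self, system, resource):
        self.interest.get(resource, {}).pop(system, None)


class StarSystem:
    """One z/OS image; it knows only about the global requests it issued."""

    def __init__(self, name, lock_structure):
        self.name = name
        self.lock_structure = lock_structure
        self.own_requests = {}  # resource name -> (mode, "OWNER" or "WAITING")

    def enq(self, resource, mode):
        granted = self.lock_structure.obtain(self.name, resource, mode)
        self.own_requests[resource] = (mode, "OWNER" if granted else "WAITING")
        return granted

    def deq(self, resource):
        self.own_requests.pop(resource, None)
        self.lock_structure.release(self.name, resource)
```

In this model a request is resolved by a single interaction with the shared structure instead of a trip around all systems, and adding a system does not enlarge the tables held by the existing systems.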

Figure 4-12 shows a GRS star complex, where the global ENQ/DEQ services use the coupling facility lock structure to manage and arbitrate the serialization requests, and the global GQSCAN service uses the XCF services to communicate across the sysplex.

4.13 GRS star highlights

Figure 4-13 GRS star highlights

Central storage consumption

In a GRS ring configuration, each system in the ring maintains a queue of all global resource serialization requests for the entire sysplex. Because of the frequency of ENQ/DEQ processing, each system uses central storage that is proportional to the number of outstanding resource requests and the number of z/OS systems in the sysplex. In a GRS ring, therefore, the amount of real storage consumed by GRS in each system goes up linearly with the number of z/OS systems in the sysplex. With GRS star support, no system in the sysplex maintains a complete queue of all the global resource requests for the entire sysplex. Because of this approach, the central storage consumed by GRS on each system is governed only by the number of requests from that z/OS system.
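The storage argument can be made concrete with a toy model. The sketch below is illustrative only: it assumes storage is proportional to the number of queued requests a system must hold, and the function names and request counts are hypothetical, not GRS internals.

```python
def ring_entries_per_system(requests_by_system):
    """Ring: every system holds the complete global request queue."""
    total = sum(requests_by_system.values())
    return {name: total for name in requests_by_system}


def star_entries_per_system(requests_by_system):
    """Star: each system holds only the requests it originated."""
    return dict(requests_by_system)


# Hypothetical outstanding global requests per system.
outstanding = {"SC63": 1000, "SC64": 1500, "SC65": 500, "SC70": 2000}
ring = ring_entries_per_system(outstanding)
star = star_entries_per_system(outstanding)
for name in outstanding:
    print(name, "ring:", ring[name], "star:", star[name])
```

In this model, adding a system to the ring raises the entry count on every existing system, while in the star each system's count is unaffected by the others.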

Processing capacity

In a GRS ring, all global resource serialization requests are circulated around the ring in a single message buffer called the ring system authority (RSA). Basically, the RSA can be viewed as a floating data area that is shared by all systems in the sysplex, but owned by only one system at a time. To handle a global resource request, the originating system must place the request in the RSA, and pass it around the ring so all other systems are aware of the request. Clearly, since the RSA is a shared data area of limited size, the number of requests that each system can place in the RSA diminishes as the number of systems sharing the RSA increases. Also, as the number of z/OS systems in the ring increases, the length of time for the RSA to be passed around the ring increases. The net effect of this is that the GRS processing capacity goes down as the number of z/OS systems in the ring is increased (that is, fewer requests per second are handled by the ring).

In the case of the GRS star support, this problem is eliminated since all of the requests are handled by mapping ENQ/DEQ requests to XES lock structure requests. With this approach, there is no need to wait for the serialization mechanism, the RSA, to be held by this z/OS before processing the global resource request. Being able to process requests as they are received does improve processing capacity. For instance, there can never be a situation where the system will have to defer excess requests (when the RSA is full).

GRS response time

Very closely related to the capacity problem is the response time that it takes for GRS to handle a request. As can be seen from the capacity discussion, an increase in the number of z/OS systems in the GRS ring results in an increase in the average length of time a given z/OS must wait before it can receive control of the RSA to initiate a new request. Additionally, the length of time that it then takes to circulate the request around the ring before it can be processed is also increased. As a result, the GRS response time on a per request basis increases linearly as the number of z/OS systems in the ring is increased. In the case of the GRS star support, the same processing flow that addressed the capacity constraint also addresses the problem of responsiveness.
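A back-of-the-envelope model shows why ring capacity and response time degrade as systems are added. This is a sketch under simplifying assumptions that are not from the GRS design: a fixed per-hop transfer time, an average wait of half a lap for the RSA, and an RSA capacity shared equally among systems. All names and numbers are hypothetical.

```python
def ring_response_time(n_systems, hop_time_ms):
    """Average wait for the RSA (half a lap) plus one full lap to circulate the request."""
    lap = n_systems * hop_time_ms
    return 0.5 * lap + lap


def ring_requests_per_lap(rsa_capacity, n_systems):
    """Share of a fixed-size RSA available to each system on one lap."""
    return rsa_capacity // n_systems


for n in (2, 4, 8, 16):
    print(n, "systems:",
          ring_response_time(n, hop_time_ms=2.0), "ms per request,",
          ring_requests_per_lap(64, n), "requests per system per lap")
```

Under these assumptions the response time grows linearly with the number of systems while the per-system share of the RSA shrinks, which is the behavior described for the ring.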

CPU consumption

In a GRS ring, each z/OS must maintain a view of the entire global resource queue. This means each z/OS must not only process the requests originating on it, but also must process the global requests originating on all the other z/OS systems in the ring. The effect of this is that each global ENQ/DEQ request generated in a ring consumes processor time on all the z/OS systems in the sysplex, and as the sysplex grows, the amount of processor time GRS ring consumes across the sysplex grows. In the case of the GRS star support, the overhead of processing an ENQ/DEQ request is limited to only the z/OS on which the request originates. Thus, the total processor time consumed across the sysplex is less than that consumed by a GRS ring. However, take into consideration that the GRS star topology consumes coupling facility resources, such as ICF cycles, storage, and CF link bandwidth.

Availability and recovery

In a GRS ring, if one of the z/OS systems fails, all the other z/OS systems are affected during the ring reconstruction time. None of the remaining z/OS systems in the sysplex can process any further ENQ/DEQ requests until the ring is reconstructed. To rebuild the ring, each of the remaining z/OS systems must resynchronize and rebuild their view of the global resource queue. The amount of time this may take depends on the number of z/OS systems in the sysplex and the number of requests in the global resource queue. In the case of the GRS star support, availability is improved by the fact that there is less interdependency between the z/OS systems in the GRS complex. Whenever a z/OS fails, no action need be taken by the other z/OS systems in the sysplex, because there is no resource request data kept on any other z/OS for the requesters on the failing z/OS. All that needs to occur is the analysis of the contention for the resource, which could determine a new owner (or owners) of the resource, should the resource have been owned by the requester on the failing z/OS. This approach allows for a much quicker recovery time from the z/OS systems remaining in the sysplex, since there is no longer a need to clean up the resource queues or determine a new ring topology for the remaining z/OS systems.

Ring versus star topology

A GRS ring configuration can be used when you have no coupling facility available. Remember that GRS in ring mode can use CTCs to pass the RSA, without the need of XCF. The GRS star configuration is suggested for all Parallel Sysplex configurations. The GRS star configuration allows for sysplex growth, and also is of value to installations currently running a sysplex because of the improved responsiveness, the reduced consumption of processor and storage, and the better availability and recovery time.

4.14 GRS star configuration planning

Figure 4-14 GRS star configuration planning

Number of z/OS systems

The design of GRS allows for an unlimited number of z/OS systems. The star topology for serializing global resources can support complexes of up to 32 z/OS systems, a limit imposed by Parallel Sysplex. These 32 z/OS systems are supported efficiently and effectively.

Avoid data integrity exposures

To avoid a data integrity exposure, ensure that no z/OS outside the sysplex can access the same shared DASD as any z/OS in the sysplex. If that is unavoidable, as often happens, you must serialize the data on the shared DASD with the RESERVE macro and must not include those resources in the RESERVE conversion RNL. The sample GRS exit ISGGREX0 may help in managing DASD sharing within and outside sysplexes.

As stated before, in a star complex, GRS uses the lock services of the cross-system extended services (XES) component of z/OS to serialize access to global resources, and the GRS star method for processing global requests operates in a sysplex like any other z/OS component that uses the coupling facility. Therefore, a coupling facility with connectivity from all z/OS systems in the GRS complex is required.

GRS star connectivity

The overall design of GRS star makes the connectivity configuration relatively simple. GRS star uses the sysplex couple data sets, which are shared by all z/OS systems in the sysplex. An alternate sysplex couple data set is recommended, both to facilitate the migration from a ring to a star complex and for availability reasons.

XCF stores information related to sysplex, systems, and XCF groups (such as global resource serialization), on the sysplex couple data set. GRS star stores RNL information into the sysplex couple data set.

Couple data sets

The following policies located in couple data sets are used to manage GRS star:

•Coupling facility resource management (CFRM) policy, which is required, defines how XES manages coupling facility structures (ISGLOCK in particular).

•Sysplex failure management (SFM) policy, which is optional, defines how system and signaling connectivity failures should be managed. Using an SFM policy is the recommended way of operating.

Other requirements

In designing a GRS star configuration, verify that the following requirements are met:

•Hardware requirements:

A fully interconnected coupling facility (accessible by all z/OS systems)

•All z/OS systems in a GRS star complex must be in the same sysplex

•All z/OS systems in a star complex must be connected to a coupling facility containing the GRS lock structure whose name should be ISGLOCK. For availability reasons in case of coupling facility failure, a second coupling facility should be available to rebuild the structure used by GRS.

•A GRS star complex and a ring complex cannot be interconnected, and therefore they cannot coexist in a single GRS complex.

•GRS star does not support a mixed complex: all z/OS systems in the sysplex should participate in the star complex.

•All z/OS systems must be at z/OS Version 1 Release 2 level or above.

4.15 GRS star implementation

Figure 4-15 GRS star implementation

GRS star implementation

Before we start, it is important to mention that the vast majority of customers have already migrated from ring to star. This subject is included here only for completeness.

Figure 4-15 shows the steps necessary to implement the GRS star complex. It is assumed that a sysplex has already been implemented, that a coupling facility is operational, and that a GRS ring complex that matches the sysplex is working. This also means that the RNLs have been implemented according to the installation needs.

Sysplex couple data set definition

Add the GRS parameter to the sysplex couple data set for the star complex. The sysplex couple data set must be formatted with the IXCL1DSU utility. The GRS parameter is in the DEFINEDS statement. If this is not done, XCF issues the message:

IXC291I ITEM NAME NOT DEFINED, GRS

For more information on the IXCL1DSU utility, see: z/OS MVS Setting Up a Sysplex, SA22-7625

Figure 4-16 Example of IXCL1DSU format utility statements

The ITEM NAME(GRS) NUMBER(1) statement allocates space in the couple data set for GRS usage. The support for star complex does not introduce changes in RNL functions or in the dynamic RNL changes.

Make the new CDS available to sysplex

The SETXCF command allows you to add the newly formatted data sets dynamically:

SETXCF COUPLE,PSWITCH Switches the current alternate sysplex CDS to primary. The primary old sysplex CDS is removed.

SETXCF COUPLE,ACOUPLE=dsname1 Specifies the new CDS to be used as an alternate sysplex CDS.

SETXCF COUPLE,PSWITCH Switches the current alternate sysplex CDS to primary. This command removes the primary old sysplex CDS.

SETXCF COUPLE,ACOUPLE=dsname2 Add the second new formatted data set as alternate CDS.

The COUPLExx parmlib member must reflect the sysplex couple data set names for future IPLs. An example of COUPLExx member entry is:

COUPLE SYSPLEX(WTSCPLX1)

PCOUPLE(SYS1.XCF.CDS01)

ACOUPLE(SYS1.XCF.CDS02)


4.16 Define GRS lock structure

Figure 4-17 Define the GRS lock structure

Define the GRS lock structure

Figure 4-17 shows an example of a GRS lock structure definition. Use the IXCMIAPU utility to define the structure in a new CFRM policy.

For more information on the IXCMIAPU utility, see z/OS MVS Setting Up a Sysplex, SA22-7625. GRS uses the XES lock structure to reflect a composite system level of interest for each global resource. The interest is recorded in the user data associated with the lock request.

The name of the lock structure must be ISGLOCK, and the size depends on the following factors:

•The number of z/OS systems in the sysplex

•The type of workload being performed

Use the CFSIZER tool to determine the best size for your ISGLOCK lock structure. This tool is available via the Web page:

CFSIZER structure size calculations are always based on the highest available CFCC level. Input for the tool is the peak global resources value. The GRS help link of CFSIZER contains information on how to determine this value.

4.17 Parmlib changes

Figure 4-18 Parmlib changes

IEASYSxx parmlib member

Initializing a star complex requires specifying STAR on the GRS= system parameter in the IEASYSxx parmlib member, or in response to message IEA101A during IPL. The START, JOIN, and TRYJOIN options apply to a ring complex only.

The PLEXCFG parameter (also in IEASYSxx) should be MULTISYSTEM. The relation between the GRS= and PLEXCFG parameters is:

•Use GRS=NONE and PLEXCFG=XCFLOCAL or MONOPLEX when there is a single-system sysplex and no GRS complex.

•Use GRS=TRYJOIN (or GRS=START or JOIN) and PLEXCFG=MULTISYSTEM when there is a sysplex of two or more z/OS systems and the GRS ring complex uses XCF signalling services.

•Use GRS=STAR and PLEXCFG=MULTISYSTEM when there is a GRS star complex.
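The pairing rules in this list lend themselves to a simple consistency check before an IPL. The following is a minimal sketch, assuming only the combinations listed above are of interest; the table and function name are hypothetical, and other valid combinations may exist that this check does not cover.

```python
# Combinations taken from the list above; anything else is reported as
# inconsistent by this sketch, even if z/OS might accept it.
ALLOWED = {
    "NONE": {"XCFLOCAL", "MONOPLEX"},
    "START": {"MULTISYSTEM"},
    "JOIN": {"MULTISYSTEM"},
    "TRYJOIN": {"MULTISYSTEM"},
    "STAR": {"MULTISYSTEM"},
}


def check_ieasys(grs, plexcfg):
    """Return True when the GRS= and PLEXCFG= values match a listed pairing."""
    grs, plexcfg = grs.upper(), plexcfg.upper()
    if grs not in ALLOWED:
        raise ValueError(f"unknown GRS= value: {grs}")
    return plexcfg in ALLOWED[grs]


print(check_ieasys("STAR", "MULTISYSTEM"))
print(check_ieasys("STAR", "MONOPLEX"))
```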

GRSCNFxx parmlib member

The GRSCNFxx member of parmlib is not required when initializing a star complex that uses the default CTRACE parmlib member, CTIGRS00, which is supplied with z/OS. If you want to initialize a star complex using a CTRACE parmlib member other than the default, you must use the GRSDEF statement in the GRSCNFxx parmlib member. The CTRACE member describes the options for the GRS component trace. IBM requests this trace when an error is suspected in the code, which is an extremely rare situation. See Figure 4-19 for a GRSCNFxx parmlib member example.

Figure 4-19 GRSCNFxx parmlib member example

GRS ring to star conversions

Apart from the two GRSDEF parameters listed in the example, all the other parameters on the GRSDEF statement (ACCELSYS, RESMIL, TOLINT, CTC, REJOIN, and RESTART) apply only to z/OS systems initializing in a ring complex. Although they can be specified on the GRSDEF statement and are parsed and syntax checked, they are not used when initializing z/OS systems into a GRS star complex. GRS ignores the parameters that are not applicable on the GRSDEF statement when initializing z/OS systems into a star complex, as well as when initializing z/OS systems into a ring complex.

If the ring parameters are left in the GRSCNFxx parmlib member, there is the potential, due to making an error in the specification of the GRS= parameter in IEASYSxx, to create two GRS complexes.

For this reason, even if it is possible for an installation to create a single GRSCNFxx parmlib member that can be used for the initialization of either a star or a ring complex, it is suggested you have different GRSCNFxx members, one for star and one for ring, and use the GRSCNF= keyword in IEASYSxx to select the proper one. This helps in the transition from a ring to a star complex if the installation elects to use the SETGRS MODE=STAR capability to make the transition.

4.18 GRS ring to GRS star

Figure 4-20 GRS ring to GRS star

GRS ring to GRS star

The SETGRS command is used to migrate an active ring to a star complex. There is no SETGRS option to migrate from a star to a ring complex. Returning to a ring complex requires an IPL of the entire sysplex.

The following command is used to migrate from a ring complex to a star complex:

SETGRS MODE=STAR

The SETGRS command can be issued from any z/OS in the complex and has sysplex scope.

While a SETGRS MODE=STAR command is being processed, GRS ENQ, DEQ, and RESERVE requests are suspended, and GQSCAN requests for global resource data fail with RC=X'0C', RSN=X'10'. GRS requesters may be suspended for a few minutes while the ISGLOCK lock structure and the GRS sysplex couple data set records are initialized, and while the internal GRS control block structures are updated.

Migration considerations

The migration should be invoked at a time when the amount of global resource request activity is likely to be minimal.

The SETGRS MODE=STAR request is valid under the following conditions:

•GRS is currently running a ring complex that exactly matches the sysplex.

•All z/OS systems in the ring complex support z/OS Version 1 Release 2 or later.

•All z/OS systems in the ring complex are connected to a coupling facility.

•All z/OS systems can access the ISGLOCK lock structure on the coupling facility.

•The GRS records are defined on the sysplex couple data sets.

•There are no active dynamic RNL changes in progress.
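The conditions above amount to a pre-migration checklist. The sketch below shows one way to express it; the `ComplexState` fields are hypothetical stand-ins for facts an operator would verify manually (for example, with display commands), not data returned by any GRS interface.

```python
from dataclasses import dataclass


@dataclass
class ComplexState:
    """Hypothetical snapshot of the facts an operator would verify by hand."""
    ring_matches_sysplex: bool
    systems_at_required_level: bool
    systems_connected_to_cf: bool
    systems_can_access_isglock: bool
    grs_records_in_sysplex_cds: bool
    rnl_change_in_progress: bool


def migration_blockers(state):
    """Return the unmet conditions; an empty list means the request would be valid."""
    checks = [
        (state.ring_matches_sysplex, "ring complex does not exactly match the sysplex"),
        (state.systems_at_required_level, "a system is below the required z/OS level"),
        (state.systems_connected_to_cf, "a system is not connected to a coupling facility"),
        (state.systems_can_access_isglock, "a system cannot access ISGLOCK"),
        (state.grs_records_in_sysplex_cds, "GRS records are not defined in the sysplex CDS"),
        (not state.rnl_change_in_progress, "a dynamic RNL change is in progress"),
    ]
    return [reason for ok, reason in checks if not ok]


ready = ComplexState(True, True, True, True, True, False)
not_ready = ComplexState(True, True, True, False, True, True)
print(migration_blockers(ready))
print(migration_blockers(not_ready))
```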

GRS commands

The DISPLAY GRS (D GRS) command shows the state of each z/OS in the complex. The D GRS shows z/OS status only as it relates to the GRS ring.

You can also use D GRS to display the local and global resources requested by the z/OS systems in the ring, contention information, the contents of the RNLs, and jobs that are delaying or suspended by a SET GRSRNL command.

You can issue D GRS from any z/OS in the ring and at any time after the ring has been started. The D GRS display shows the status of the ring from that system's point of view; thus, the displays issued from different systems might show different results.

4.19 GRS star complex overview

Figure 4-21 GRS star complex overview

GRS star complex overview

In a GRS star complex, when an ENQ, RESERVE, or DEQ request is issued for a global resource, the request is converted to an XES lock request. The XES lock structure coordinates the requests it receives to ensure proper resource serialization across all GRSs in the complex, and notifies the originating GRS about the status of each request. Based on the results of these lock requests, GRS responds to the requester (sometimes placing it in a wait) with the result of the global request.

As shown in Figure 4-21, each system in the GRS star complex has a server task (dispatchable unit) executing in the GRS address space. All ENQ, DEQ, RESERVE, and GQSCAN requests for global resources that are issued from any of the address spaces on a system are always first passed to the GRS address space on the requester’s system. In the GRS address space, the server task performs the processing necessary to handle a given request and interfaces with the XES lock structure, if necessary, to process the request. In case of a GQSCAN request for global resource information, the server task packages the request for transmission and sends it to each of the other server tasks in the GRS complex using the XCF services. This is necessary because the status of global requests is maintained at the system level.

Request for a global resource

The request for global resource information is queued to the GQSCAN/ISGQUERY server on that system. Global resource serialization suspends the GQSCAN/ISGQUERY requester.

GQSCAN/ISGQUERY users that do not need information about other systems and cannot tolerate suspension can request that no cross-system processing be performed (for GQSCAN, the XSYS=NO option). Otherwise, global resource serialization processing packages the request for transmission and sends it to each of the other systems in the star complex.

Each system in the complex scans its system global resource queue for global resource requests that match the GQSCAN/ISGQUERY selection criteria, and responds to the originating system server with the requested global resource information, if any, and a return code.

The originating system waits for responses from all of the systems in the sysplex and builds a composite response, which is returned to the caller’s output area through the GQSCAN/ISGQUERY back-end processing.

Control is then returned to the caller with the appropriate return code.

4.20 Global ENQ processing

Figure 4-22 Global ENQ processing

Global ENQ processing

ENQ processing for the RET=NONE and RET=ECB keywords is shown at a high level in Figure 4-22. These operands request control of all the resources. If the resources are not immediately available, the requesting task is placed in a wait state (RET=NONE) or is notified through an ECB when they become available (RET=ECB). In a GRS star complex, no GRS maintains a complete view of the outstanding global resource requests, in contrast with the GRS ring philosophy of maintaining a complete view of the global resource requests on all systems in the complex. Instead, each system maintains a queue of its own local requests, called the system global resource queue. Arbitrating requests for global resources from different systems in the complex is managed by putting a subset of the information from the system global resource queue into the user data associated with the lock request made to the XES lock structure for a particular resource.

Using lock structures

In a GRS star complex, requests for ownership of global resources are handled through a lock structure on a coupling facility that is fully interconnected with all the systems in the sysplex. GRS interfaces with the XES lock structure to reflect a composite system-level view of interest in each of the global resources for which there is at least one requester. This interest is recorded in the user data associated with the lock request.

Each time there is a change in the composite state of a resource, GRS updates the user data reflecting the new state of interest in the XES lock structure. In general, this composite state is altered each time a change is made to the set of owners of the resource or the set of waiters for the resource.

ENQ requests

Each time an ENQ request is received, the server task analyzes the state of the resource request queue for the resource. If the new request alters the composite state of the queue, an IXLLOCK request is made to reflect the changed state of the resource for the requesting system. If the resource is immediately available, the IXLLOCK request indicates that the system can grant ownership of the resource. If the XES lock structure cannot immediately grant ownership of the resource, the IXLLOCK request completes with the return code X'04', and the server task holds the request pending and the requester in a wait state until the completion exit is driven for the request.

Only one IXLLOCK can ever be in progress from a particular system and for a particular global resource at one time. Requests for a resource that are received while a request is in progress are held pending until all preceding requests for the resource have been through IXLLOCK processing. The requests are processed in first-in first-out (FIFO) order. If contention exists for the resource, the requester is not granted ownership until some set of requesters dequeue from the resource. When the requester is to be assigned ownership of the resource, the contention exit, driven as the result of a DEQ request, drives the notify exit of the server task that is managing the requester.
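The "one request in progress, the rest pending in FIFO order" rule can be illustrated with a small queue model. This is a simplified sketch, not the server task's actual logic: it assumes every request leads to a lock request (in GRS, only requests that alter the composite state do), and the class and method names are hypothetical.

```python
from collections import deque


class ResourceLockQueue:
    """Per-resource, per-system queue: at most one lock request in progress."""

    def __init__(self):
        self.in_progress = None   # request currently in lock processing
        self.pending = deque()    # requests held until earlier ones finish
        self.issued = []          # order in which lock requests were issued

    def submit(self, request_id):
        """Issue the lock request now, or hold it behind the one in progress."""
        if self.in_progress is None:
            self._issue(request_id)
        else:
            self.pending.append(request_id)

    def _issue(self, request_id):
        self.in_progress = request_id
        self.issued.append(request_id)

    def complete(self):
        """Finish the in-progress request and start the oldest pending one."""
        done = self.in_progress
        self.in_progress = None
        if self.pending:
            self._issue(self.pending.popleft())
        return done


q = ResourceLockQueue()
for job in ("JOBA", "JOBB", "JOBC"):
    q.submit(job)
first = q.complete()
second = q.complete()
third = q.complete()
print(q.issued)
```

Here JOBB and JOBC arrive while JOBA is in progress, so they are held and then issued strictly in arrival order.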

Limits for ENQs

GRS allows flexibility and control over the system-wide maximums. The default values are:

•16,384 for unauthorized requests

•250,000 for authorized requests

This allows you to set the system-wide maximum values. Furthermore, it is now possible for an authorized caller to set its own address space-specific limits. These new values substantially increased STAR mode capacity. The majority of the persistent ENQs are global ENQs because they are data set related. There is only minimal relief for GRS=NONE, GRS=RING mode systems, and systems running some ISV serialization products.

ISGADMIN service

The ISGADMIN service allows you to programmatically change the maximum number of concurrent ENQ, ISGENQ, RESERVE, GQSCAN, and ISGQUERY requests in an address space. This is useful for subsystems such as CICS and DB2, which have large numbers of concurrently outstanding ENQs, query requests, or both. Using ISGADMIN, you can set the maximum limits of unauthorized and authorized concurrent requests. It is impossible to set the maximums lower than the system-wide default values.

New keywords in the GRSCNFxx parmlib member

To allow you to control the system-wide maximums, two new keywords are added to the GRSCNFxx parmlib member:

ENQMAXU(value) Identifies the system-wide maximum of concurrent ENQ requests for unauthorized requesters. The ENQMAXU range is 16,384 to 99,999,999. The default is 16,384.

ENQMAXA(value) Identifies the system-wide maximum of concurrent ENQ requests for authorized requesters. The ENQMAXA range is 250,000 to 99,999,999. The default is 250,000.

Use the SETGRS ENQMAXU command to set the system-wide maximum number of concurrent unauthorized requests, and the SETGRS ENQMAXA command to set the system-wide maximum number of concurrent authorized requests.

SETGRS ENQMAXU=nnnnnnnn[,NOPROMPT|NP]

SETGRS ENQMAXA=nnnnnnnn[,NOPROMPT|NP]
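When these commands are generated by automation, it can help to validate the value before issuing the command. The sketch below builds the command text from the syntax shown above. It assumes the command accepts the same ranges that are documented for the GRSCNFxx keywords; the helper name is hypothetical, and nothing here actually issues a command.

```python
# Ranges as documented for the GRSCNFxx keywords; assumed to apply to the
# SETGRS command as well.
LIMITS = {
    "ENQMAXU": (16_384, 99_999_999),
    "ENQMAXA": (250_000, 99_999_999),
}


def setgrs_command(keyword, value, noprompt=False):
    """Return the SETGRS command text, or raise ValueError if out of range."""
    if keyword not in LIMITS:
        raise ValueError(f"unsupported keyword: {keyword}")
    low, high = LIMITS[keyword]
    if not low <= value <= high:
        raise ValueError(f"{keyword} must be between {low} and {high}")
    command = f"SETGRS {keyword}={value}"
    if noprompt:
        command += ",NOPROMPT"
    return command


print(setgrs_command("ENQMAXU", 32768))
print(setgrs_command("ENQMAXA", 500000, noprompt=True))
```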

4.21 Global DEQ processing

Figure 4-23 Global DEQ processing

Global DEQ processing

In a GRS star complex, releasing a resource has the effect of altering the system’s interest in the resource. GRS alters the user data associated with the resource, and issues an IXLLOCK request to reflect the new interest. Figure 4-23 illustrates the global DEQ process.

After resuming the requesting task, the server task performs local processing and issues an IXLLOCK request if the DEQ results in an alteration of the system’s composite state of the resource. If there is no global contention on the resource, XES returns from the IXLLOCK service with a return code of X'0', indicating that no asynchronous ownership processing is occurring.

When IXLLOCK completes with return code X'04', XES indicates that there may be contention for the resource and that asynchronous processing occurs for this resource. The server task places this resource into a pending state, keeping all subsequent requests queued in FIFO order until the asynchronous processing completes. See "Contention notification system (CNS)" in 4.22.

GRS contention exit

XES gathers the user data from each of the z/OS systems interested in the resource and presents it to the contention exit that GRS provides. The contention exit determines, based on each of the systems’ composite states, which systems have owners of the resource. The contention exit causes the notify exit on each of the systems to be driven. The notify exit informs the server task of the change in ownership of the resource. The server task posts the new owner (or owners) of the resource.
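To show the kind of decision involved in assigning ownership after a DEQ, the sketch below applies the usual shared/exclusive ENQ rule to a single ordered queue of requesters. This is a deliberate simplification and an assumption on our part: the real contention exit works from each system's composite state in the lock user data, not from one merged queue, and the names used here are hypothetical.

```python
def current_owners(queue):
    """queue: list of (requester, mode), oldest first; mode is 'EXCL' or 'SHR'.

    An exclusive request at the head owns the resource alone; otherwise all
    shared requests ahead of the first exclusive request own it together.
    """
    if not queue:
        return []
    first_requester, first_mode = queue[0]
    if first_mode == "EXCL":
        return [first_requester]
    owners = []
    for requester, mode in queue:
        if mode != "SHR":
            break
        owners.append(requester)
    return owners


def dequeue(queue, requester):
    """Remove a requester and return (remaining queue, new owners)."""
    remaining = [(r, m) for r, m in queue if r != requester]
    return remaining, current_owners(remaining)


queue = [("SC64/JOBA", "EXCL"), ("SC65/JOBB", "SHR"),
         ("SC70/JOBC", "SHR"), ("SC63/JOBD", "EXCL")]
print(current_owners(queue))
queue, owners = dequeue(queue, "SC64/JOBA")
print(owners)
```

After the exclusive owner on SC64 dequeues, the two shared waiters become owners together, which corresponds to the "new owner (or owners)" the server tasks post.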

GRS fast DEQ requests

In a GRS star complex, a DEQ request is handled as a fast DEQ under the following conditions:

•The DEQ is for a single resource.

•The resource request (ENQ for the resource) has already been reflected in the GRS lock structure.

•The request for the resource is not the target of a MASID/MTCB request.

This is equivalent to the implementation of fast DEQ in a GRS ring complex.

If the resource request has not yet been reflected in the GRS lock structure, the DEQ requester waits until the IXLLOCK for that request has been completed. Following this, the request is resumed in parallel with the completion of the DEQ request.

4.22 Contention notification

Figure 4-24 Contention notification

Contention notification system (CNS)

In ring mode, each system in the global resource serialization complex knows about the complex-wide SYSTEMS scope ENQs that allow each system to issue the appropriate event notification facility (ENF) signal 51 contention notification event. However, in star mode, each system only knows about the ENQs that are issued by the system.

Resource contention

Resource contention can result in poor system performance. When resource contention lasts over a long period of time, it can result in program starvation or deadlock conditions. When running in ring mode, each system in the GRS complex is aware of the complex-wide ENQs, which allows each system to issue the appropriate ENF 51 contention notification event. However, when running in star mode, each system only knows the ENQs that are issued by itself.

ENF 51 signal

To ensure that the ENF 51 contention notification event is issued on all systems in the GRS complex in a proper sequential order, one system in the complex is appointed as the sysplex-wide contention notifying system (CNS). All ENQ contention events are sent to the CNS, which then issues a sysplex-wide ENF 51. GRS provides two APIs to query for contention information:

ISGECA Obtains waiter and blocker information for GRS-managed resources.

ISGQUERY Obtains the status of resources and the requesters of those resources.

GRS issues an ENF 51 to notify monitoring programs to track resource contention. This is useful in determining contention bottlenecks, preventing bottlenecks, and potentially automating correction of these conditions.

During an IPL, or if the CNS can no longer perform its duties, any system in the complex can act as the CNS. You can determine which system is the current CNS with the DISPLAY GRS command. In the example shown in Figure 4-25, you can see that the CNS is SC64. Figure 4-26 shows an example of changing the CNS from system SC64 to system SC70.


D GRS

ISG343I 15.44.37 GRS STATUS 544

SYSTEM STATE SYSTEM STATE

SC65 CONNECTED SC64 CONNECTED

SC70 CONNECTED SC63 CONNECTED

GRS STAR MODE INFORMATION

LOCK STRUCTURE (ISGLOCK) CONTAINS 1048576 LOCKS.

THE CONTENTION NOTIFYING SYSTEM IS SC64

SYNCHRES: YES

ENQMAXU: 16384

ENQMAXA: 250000

GRSQ: CONTENTION


Figure 4-25 Output of the DISPLAY GRS command


SETGRS CNS=SC70

*092 ISG366D CONFIRM REQUEST TO MIGRATE THE CNS TO SC70. REPLY CNS=SC70

TO CONFIRM OR C TO CANCEL.

092CNS=SC70

IEE600I REPLY TO 092 IS;CNS=SC70

IEE712I SETGRS PROCESSING COMPLETE

ISG364I CONTENTION NOTIFYING SYSTEM MOVED FROM SYSTEM SC64 TO SYSTEM SC70. OPERATOR COMMAND INITIATED.

D GRS

ISG343I 15.54.47 GRS STATUS 565

SYSTEM STATE SYSTEM STATE

SC65 CONNECTED SC64 CONNECTED

SC70 CONNECTED SC63 CONNECTED

GRS STAR MODE INFORMATION

LOCK STRUCTURE (ISGLOCK) CONTAINS 1048576 LOCKS.

THE CONTENTION NOTIFYING SYSTEM IS SC70

SYNCHRES: YES

ENQMAXU: 16384

ENQMAXA: 250000

GRSQ: CONTENTION


Figure 4-26 Setting the CNS using SETGRS command

4.23 GQSCAN request for global resource data

Figure 4-27 GQSCAN request for global resource data

GQSCAN request for global resource data

The basic flow of a GQSCAN request for global resource data in a star complex is shown in Figure 4-27. In the case of a GQSCAN request for global resource information, the request is passed from the issuing address space to the GRS server task on that z/OS system, and the GQSCAN requester is placed in a wait. The server task then packages the request for transmission and sends it to each of the server tasks in the GRS star complex.

Each server task in the complex scans its system global resource queue for requests that match the GQSCAN selection criteria, and responds to the originating system with the requested global resource information (if any) and a return code. The originating server task waits for responses from all of the server tasks in the complex and builds a composite response to be returned to the caller.

Placing the issuer of GQSCAN in a wait when global resource information is requested is a significant change from the way GQSCAN works in a GRS ring complex. In a GRS ring complex, GQSCAN processing is performed synchronously with respect to the caller’s task.

A GQSCAN macro option is provided to allow the issuer to indicate whether or not cross-system processing for global resource information should be performed. The no cross-system option (XSYS=NO) is provided primarily for GQSCAN issuers that cannot be put into a wait and do not require data about requesters on other systems in the complex. XSYS=YES is the default option.
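The scan-and-merge flow, including the effect of the cross-system option, can be sketched as follows. This is an illustrative model only: real requests travel between server tasks through XCF and the caller is suspended meanwhile, whereas the sketch uses plain function calls. The data layout, the function names, and the single `qname` selection criterion are all hypothetical.

```python
def scan_local(queue, qname):
    """One system scans its own system global resource queue."""
    return [entry for entry in queue if entry["qname"] == qname]


def gqscan(origin, queues_by_system, qname, xsys=True):
    """Build the composite response on the originating system.

    xsys=True models XSYS=YES: every system is asked to scan its queue.
    xsys=False models XSYS=NO: only the originating system's queue is scanned.
    """
    if xsys:
        targets = queues_by_system
    else:
        targets = {origin: queues_by_system[origin]}
    composite = []
    for system, queue in targets.items():
        for entry in scan_local(queue, qname):
            composite.append({"system": system, **entry})
    return composite


queues = {
    "SC64": [{"qname": "SYSDSN", "rname": "PROD.PAYROLL", "job": "JOBA"}],
    "SC70": [{"qname": "SYSDSN", "rname": "PROD.PAYROLL", "job": "JOBB"},
             {"qname": "SYSVSAM", "rname": "CAT1", "job": "JOBC"}],
}
print(gqscan("SC64", queues, "SYSDSN"))
print(gqscan("SC64", queues, "SYSDSN", xsys=False))
```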

4.24 ISGGREX0 RNL conversion exit

Figure 4-28 ISGGREX0 RNL conversion exit

ISGGREX0 RNL conversion exit

When GRS receives an ENQ/ISGENQ/RESERVE/DEQ request for a resource with a scope of SYSTEM or SYSTEMS, the installation-replaceable scan RNL exit (ISGGREX0) is invoked to search the appropriate RNL. However, this exit is not invoked if the request specifies RNL=NO or if you have specified GRSRNL=EXCLUDE in the IEASYSxx parmlib member. A return code from ISGGREX0 indicates whether or not the input resource name exists in the RNL.

Input to the ISGGREX0 consists of the QNAME, the RNAME, and the RNAME length of the resource. The IBM default ISGGREX0 exit search routine finds a matching RNL entry when:

•A specific resource name entry in the RNL matches the specific resource name in the search argument.

The length of the specific RNAME is important. A specific entry does not match a resource name that is padded with blank characters to the right.

•A generic QNAME entry in the RNL matches the QNAME of the search argument.

•A generic QNAME entry in the RNL matches the corresponding portion of the resource name in the search argument.
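The matching rules above can be illustrated with a short Python sketch. It is not the ISGGREX0 implementation. The RnlEntry type and the function names are hypothetical, and the sketch assumes that a generic entry with an RNAME matches on the leading characters of the requested RNAME.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class RnlEntry:
    kind: str                     # "SPECIFIC" or "GENERIC"
    qname: str
    rname: Optional[str] = None   # None on a generic entry means "QNAME only"


def entry_matches(entry, qname, rname):
    """Return True if one RNL entry matches the requested resource name."""
    if entry.qname != qname:
        return False
    if entry.kind == "SPECIFIC":
        # Exact comparison: the length matters, so blank padding does not match.
        return entry.rname == rname
    if entry.rname is None:
        # Generic QNAME entry: any RNAME under this QNAME matches.
        return True
    # Assumption: a generic entry with an RNAME matches the corresponding
    # leading portion of the requested RNAME.
    return rname.startswith(entry.rname)


def scan_rnl(rnl, qname, rname):
    """Return True if any entry in the RNL matches the resource name."""
    return any(entry_matches(entry, qname, rname) for entry in rnl)
```

For example, a specific entry for SYSDSN and SYS1.PARMLIB matches only that exact name, while a generic entry for SYSDSN with RNAME SYS1. would also match SYS1.LINKLIB.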

4.25 ISGGREX0 conversion exit flow

Figure 4-29 ISGGREX0 conversion exit flow

ISGGREX0 conversion exit flow

GRS has a user exit, ISGGREX0, that receives control whenever an ENQ/DEQ/RESERVE request is issued for a resource. ISGGREX0 scans the input resource name list (RNL) for the resource name specified in the input parameter element list (PEL). A return code from the exit routine indicates whether or not the input resource name appears in the RNL.

You can use ISGGREX0 to determine whether the input resource name exists in the RNL. Replacing ISGGREX0 changes the technique that GRS normally uses to scan an RNL. Changing the search technique can have an adverse effect on system performance, especially when the RNLs contain many specific entries.

The routine has three external entry points:

•ISGGSIEX scans the SYSTEM inclusion RNL.

•ISGGSEEX scans the SYSTEMS exclusion RNL.

•ISGGRCEX scans the RESERVE conversion RNL.

Invoking the exit routine

Depending on the RNL that the request requires, the exit routine is invoked at the appropriate entry point for the SYSTEM inclusion RNL, the SYSTEMS exclusion RNL, or the RESERVE conversion RNL.

You can modify the IBM-supplied exit routine to perform the processing necessary for your system. Use the source version of the exit routine provided as member ISGGREXS in SYS1.SAMPLIB.

For example, ISGGRCEX can be used to avoid converting reserves against a volume on a system outside the global resource serialization complex.

The ISGGREX0 exit routine in each system belonging to the same GRS complex must yield the same scan results; otherwise, resource integrity cannot be guaranteed. For the same reason, the RNLs themselves must be the same. The exit routine resides in the nucleus, so a sysplex-wide IPL is required to change or activate it. See z/OS MVS: Installation Exits, SA22-7593, for details.

4.26 Shared DASD between sysplexes

Figure 4-30 Shared DASD between sysplexes

Shared DASD between sysplexes

The resources your systems need to share determine the systems in the complex. The most likely candidates, of course, are those systems that are already serializing access to resources on shared DASD volumes and, especially, those systems where interlocks, contention, or data protection by job scheduling are causing significant problems.

It is possible to support shared DASD with z/OS systems across GRSplexes, as shown in Figure 4-30, by coding a special exit routine for the GRS ISGNQXIT exit point. A single installation can have two or more global resource serialization complexes, each operating independently. However, the independent complexes cannot share resources. Also, be certain there are no common links available to global resource serialization on any two complexes.

To avoid a data integrity exposure, ensure that no system outside the complex can access the same shared DASD as any system in the complex. If that is unavoidable, however, you must serialize the data on the shared DASD with the RESERVE macro. You need to decide how many of these systems to combine in one complex.

ISGNQXIT exit

This exit routine ensures that RESERVE requests for the volumes specified in the RNL always result in a hardware (HW) RESERVE. To use it, activate the ISGNQXIT exit and add an RNL definition to the conversion RNL with a QNAME that is not used by the system and RNAMEs that identify the volumes to be shared outside of GRS. RESERVE requests for the volumes included in these RNL definitions then always result in an HW RESERVE.

RNLDEF RNL(CON) TYPE(SPECIFIC|GENERIC)

QNAME(HWRESERV)

RNAME(VOLSER|volser-prefix)

The same RESERVE requests, when they address other volumes, can still be filtered through the conversion RNL so that the HW RESERVE is eliminated.
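The effect of the HWRESERV definitions can be sketched as a two-step decision. The Python below is only an illustration of the behavior described in this section, not the sample exit itself. The function names and outcome strings are hypothetical, RNL entries are modeled as simple (type, QNAME, RNAME) tuples, and generic entries are assumed to match on a volume serial prefix.

```python
HW_QNAME = "HWRESERV"  # QNAME from the RNLDEF example; assumed unused by the system


def rnl_hit(rnl, qname, rname):
    """Return True if a (type, qname, rname) entry in the RNL matches."""
    for kind, entry_qname, entry_rname in rnl:
        if entry_qname != qname:
            continue
        if kind == "SPECIFIC" and entry_rname == rname:
            return True
        if kind == "GENERIC" and rname.startswith(entry_rname):
            return True
    return False


def reserve_outcome(conversion_rnl, qname, rname, volser):
    """Decide what happens to a RESERVE for a resource on the given volume."""
    # Step 1 (exit logic as described): volumes listed under HWRESERV
    # always keep the hardware RESERVE.
    if rnl_hit(conversion_rnl, HW_QNAME, volser):
        return "HW_RESERVE_FORCED"
    # Step 2: normal conversion RNL processing for all other volumes.
    if rnl_hit(conversion_rnl, qname, rname):
        return "CONVERTED"
    return "HW_RESERVE"
```

In this model a volume such as SHR001 that is listed under HWRESERV keeps its hardware RESERVE even when a generic conversion entry would otherwise convert the same request.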

Prior to RNL processing, your system can implement the ISGNQXIT or the ISGNQXITFAST installation exits. These exit routines will affect the way ISGENQ, ENQ, DEQ, and RESERVE requests are handled. The following attributes can be altered (note that for certain types of requests, the exits can change the return/reason code):

•QName

•RName

•Scope

•Device UCB address

•Convert Reserve

•Bypass RNLs.

4.27 ISGNQXITFAST and ISGNQXIT exits

Figure 4-31 ISGNQXITFAST and ISGNQXIT exits

ISGNQXITFAST and ISGNQXIT exits

For each ENQ/DEQ/RESERVE request with SCOPE=SYSTEM or SCOPE=SYSTEMS, the system invokes the ENQ/DEQ installation exit points.

For this purpose, GRS with wildcard support provides exit point ISGNQXIT. GRS also provides the exit point ISGNQXITFAST, which is intended to offer a higher performance alternative to ISGNQXIT.

Both installation exits can modify attributes of the ENQ/DEQ/RESERVE request prior to Resource Name List (RNL) processing; the only difference is the environment in which the exits receive control. For additional information, refer to z/OS MVS: Installation Exits, SA22-7593.

For the purpose of cross-sysplex DASD sharing, only one of the provided sample installation exits ISGNQXITFAST and ISGNQXIT should be active. Both installation exits provide the same functionality and have the same external parameters.

Note: System programmers use only RNLs and either the ISGNQXITFAST or the ISGNQXIT installation exit. All of the other exits are intended for independent software vendors (ISVs). As of z/OS V1R9, the installation exits that are used by the ISVs and the ISGNQXITFAST exit (but not the ISGNQXIT exit) can get control in a cross memory environment.

Whenever global resource serialization encounters a request for a resource with a scope of SYSTEM or SYSTEMS, global resource serialization searches the appropriate RNL to determine the scope of the requested resource. Global resource serialization will not scan the RNL if one of the following is true:

•The request specifies RNL=NO.

•During the system IPL, GRSRNL=EXCLUDE was specified.

•During the system IPL, GRS=NONE in IEASYSxx was specified.

•The ISGNQXIT or ISGNQXITFAST installation exit routines are installed and active on the system.

GRS enhancements for dynamic exits

All major internal GRS control blocks are moved above the bar. This caused problems for alternate serialization products that extend their monitoring software through externally available interfaces. The dynamic GRS exits were enhanced so that alternate serialization products can fully support the ENQ, DEQ, and RESERVE interfaces (for both PCs and SVCs) in their resource monitoring.

The relevant GRS dynamic exits that alternate serialization products can use are as follows (ISGCNFXITSYSTEM and ISGCNFXITSYSPLEX were updated, and ISGNQXITQUEUED2 is completely new):

•ISGNQXITPREBATCH: performance-oriented exit used to determine whether ISGNQXITBATCHCND should be called for a particular ENQ request.

•ISGNQXITBATCHCND: details of a particular ENQ request prior to GRS queuing it.

•ISGCNFXITSYSTEM: filtering exit for contention seen for SCOPE=SYSTEM ENQs.

•ISGENDOFLQCB: notification that the last requester of a resource has issued a DEQ.

•ISGCNFXITSYSPLEX: filtering exit for contention seen for SCOPE=SYSTEMS ENQs.

•ISGNQXITQUEUED1: follow-up from the batch exit where local resources have been queued.

•ISGNQXITQUEUED2: follow-up from the batch and queued1 exits where global resources have been queued.

4.28 GRS star operating (1)

Figure 4-32 GRS star operating (1)

GRS star operating (1)

The DISPLAY GRS command shows the state of each system in the complex. The system status is not an indication of how well a system is currently running. In the sample output in Figure 4-32, there are four systems in the complex, all with a GRS state of CONNECTED. The ISGLOCK structure contains 1048576 locks and the GRS contention notifying system is SC70.

DISPLAY GRS,CONTENTION is useful for analyzing whether resource contention exists in the complex. In the sample output in Figure 4-32, ASID 0073 and ASID 0075 each hold a resource exclusively, and the same two ASIDs are also waiting for exclusive access. The problem looks like a contention deadlock.

To get more details, DISPLAY GRS,ANALYZE,BLOCKER or ANALYZE,WAITER or ANALYZE,DEPENDENCY can be used to find out why a resource is blocked and which job or ASID is responsible for holding the resource.
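The reasoning behind "looks like a deadlock" can be made concrete with a small wait-for graph. The Python sketch below is only an illustration and is not how the DISPLAY GRS,ANALYZE processing is implemented. The function name and the resource names used in the example are hypothetical, because the figure's resource names are not reproduced in the text.

```python
def find_deadlock(owners, waiters):
    """Return a list of ASIDs forming a wait-for cycle, or None.

    owners:  resource name -> set of ASIDs that hold it
    waiters: resource name -> set of ASIDs waiting for it
    """
    # Build the wait-for graph: each waiter waits for every other owner.
    wait_for = {}
    for resource, waiting in waiters.items():
        for asid in waiting:
            blockers = owners.get(resource, set()) - {asid}
            wait_for.setdefault(asid, set()).update(blockers)

    def visit(node, path):
        # Reaching a node already on the current path closes a cycle.
        if node in path:
            return path[path.index(node):]
        for blocker in sorted(wait_for.get(node, ())):
            cycle = visit(blocker, path + [node])
            if cycle:
                return cycle
        return None

    for start in sorted(wait_for):
        cycle = visit(start, [])
        if cycle:
            return cycle
    return None
```

If ASID 0073 holds one resource and waits for a second that ASID 0075 holds, while 0075 waits for the first, the function reports the two ASIDs as a cycle. When only one of them is waiting, it returns None, which indicates ordinary contention rather than a deadlock.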

4.29 GRS star operating (2)

Figure 4-33 GRS star operating (2)

GRS star operating (2)

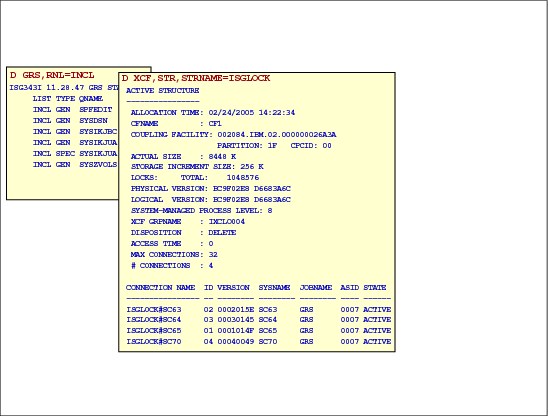

DISPLAY GRS,RNL=INCL shows the contents of the inclusion RNL. See 4.6, “Resource name list (RNL)” on page 195 for more details.

Figure 4-33 shows a partial display of the ISGLOCK structure details gathered with the D XCF,STR,STRNAME=ISGLOCK command. This display is useful for seeing the ISGLOCK structure CFRM policy definition parameters. The output also shows the systems connected to the structure and their connection names.

4.30 Global resource serialization latch manager

Figure 4-34 Global resource serialization latch manager

Global resource serialization latch manager

The global resource serialization latch manager is a service that authorized programs can use to serialize resources within an address space or, using cross memory capability, within a single MVS system. Programs can call the latch manager services while running in task or service request block (SRB) mode.

GRS ENQ and latch services

GRS provides two sets of critical system serialization services. The GRS ENQ services provide the ability to serialize an abstract resource within the scope of a JOB STEP, SYSTEM, or multisystem complex (GRS complex). The GRS complex is usually equal to the sysplex. Through the HW RESERVE function, DASD volumes can be shared between systems that are not in the same GRS complex or that do not even run the same operating system, for example, between z/VM and z/OS. ENQ/RESERVE services can be used by authorized or unauthorized users. Almost every component and subsystem, and many applications, use ENQ in some form.

The GRS latch services provide a high-speed serialization service for authorized callers only. User-provided storage is used to manage a lock table that is indexed by a user-defined lock number. GRS latches are also widely used. Heavy users include USS, System Logger, RRS, MVS, and many others.

New latch request information

A unit of work address and the “latch request hold elapsed time” are displayed in the D GRS,CONTENTION,LATCH command output. This solves a long-standing problem determination issue: identifying which units of work within an ASID are affected, and whether the displayed contention is a new or an old instance of contention. Before this enhancement, this was impossible to determine.

The difference is between long-term contention and new instances of short-term contention. For example, every time you look, the same players are in contention but you do not know whether something is moving or not. Some latches can be in frequent contention.

The new information provided is as follows:

•The holding and waiting units of work (TCB or SRB) within an ASID

•The amount of time that the latch is in contention

Not having this information makes it impossible to determine whether a latch was in contention continuously between intervals. For example, a latch might have gone in and out of contention, yet every time you look the same players are in contention.

Command examples

If latch contention exists, the system displays the messages shown in Figure 4-35.


D GRS,LATCH,CONTENTION

ISG343I 23.00.04 GRS LATCH STATUS 886

LATCH SET NAME: MY.FIRST.LATCHSET

CREATOR JOBNAME: APPINITJ CREATOR ASID: 0011

LATCH NUMBER: 1

REQUESTOR ASID EXC/SHR OWN/WAIT WORKUNIT TCB ELAPSED TIME

MYJOB1 001 EXCLUSIVE OWN 006E6CF0 Y 00:00:40.003

DATACHG 0019 EXCLUSIVE WAIT 006E6B58 Y 00:00:28.001

DBREC 0019 SHARED WAIT 006E6CF0 Y 00:00:27.003

LATCH NUMBER: 2

REQUESTOR ASID EXC/SHR OWN/WAIT WORKUNIT TCB ELAPSED TIME

PEEKDAT1 0011 SHARED OWN 007E6CF0 Y 00:00:32.040

PEEKDAT2 0019 SHARED OWN 007F6CF0 Y 00:00:32.040

CHGDAT 0019 EXCLUSIVE WAIT 007D6CF0 Y 00:00:07.020

LATCH SET NAME: SYS1.FIRST.LATCHSET

CREATOR JOBNAME: INITJOB2 CREATOR ASID: 0019

LATCH NUMBER: 1

REQUESTOR ASID EXC/SHR OWN/WAIT WORKUNIT TCB ELAPSED TIME

MYJOB2 0019 SHARED OWN 006E6CF0 Y 00:01:59.030

LATCH NUMBER: 2

REQUESTOR ASID EXC/SHR OWN/WAIT WORKUNIT TCB ELAPSED TIME

TRANJOB1 0019 SHARED OWN 006E7B58 Y 01:05:06.020

TRANJOB2 0019 EXCLUSIVE WAIT 006E9B58 Y 00:01:05.003


Figure 4-35 D GRS,LATCH,CONTENTION command output
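The elapsed time column makes it possible to separate long-term waiters from short-lived ones. The following Python sketch parses request lines shaped like those in Figure 4-35 and lists waiters at or above a threshold. It is only an illustration: the function names and the threshold are hypothetical, and it assumes that each request line consists of seven whitespace-separated fields in the order shown in the figure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LatchRequest:
    requestor: str
    asid: str
    mode: str          # EXCLUSIVE or SHARED
    state: str         # OWN or WAIT
    workunit: str
    tcb: str
    elapsed_seconds: float


def parse_elapsed(text):
    """Convert hh:mm:ss.ttt into seconds."""
    hours, minutes, seconds = text.split(":")
    return int(hours) * 3600 + int(minutes) * 60 + float(seconds)


def parse_request_line(line):
    """Return a LatchRequest, or None for headers and other lines."""
    fields = line.split()
    if len(fields) != 7 or fields[3] not in ("OWN", "WAIT"):
        return None
    try:
        elapsed = parse_elapsed(fields[6])
    except ValueError:
        return None
    return LatchRequest(*fields[:6], elapsed)


def long_waiters(lines, threshold_seconds):
    """List waiting requests whose elapsed time meets the threshold."""
    parsed = (parse_request_line(line) for line in lines)
    return [r for r in parsed
            if r is not None
            and r.state == "WAIT"
            and r.elapsed_seconds >= threshold_seconds]
```

Running the same check at two points in time and comparing the work unit addresses and elapsed times indicates whether the same request has been waiting throughout or whether new instances of contention have occurred.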


Note: Refer to z/OS MVS Diagnosis: Reference, GA22-7588 and component-specific documentation for additional information on what specific latch contention could mean and what steps should be taken for different circumstances.
