
Chapter 7

Evaluating, comparing, and expanding models


In the previous chapter we discussed discrepancy measures for checking the fit of a model to data. In this chapter we seek not to check models but to compare them and explore directions for improvement. Even if all of the models being considered have mismatches with the data, it can be informative to evaluate their predictive accuracy and consider where to go next. The challenge we focus on here is estimating a model's predictive accuracy while correcting for the bias inherent in evaluating a model's predictions of the data that were used to fit it.

We proceed as follows. First we discuss measures of predictive fit, using a small linear regression as a running example. We consider the differences between external validation, fit to training data, and cross-validation in Bayesian contexts. Next we describe information criteria, which are estimates and approximations to cross-validated or externally validated fit, used for adjusting for overfitting when measuring predictive error. Section 7.3 considers the use of predictive error measures for model comparison using the 8-schools model as an example. The chapter continues with Bayes factors and continuous model expansion and concludes in Section 7.6 with an extended discussion of robustness to model assumptions in the context of a simple but nontrivial sampling example.

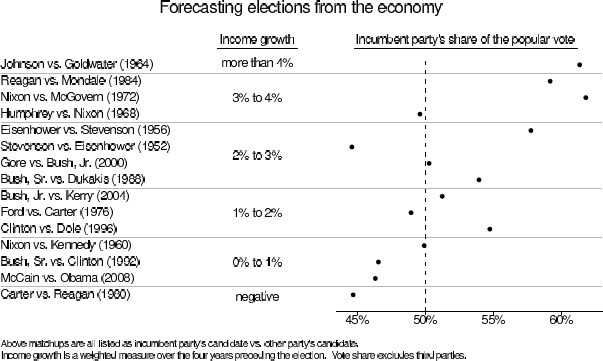

Example. Forecasting presidential elections

We shall use a simple linear regression as a running example. Figure 7.1 shows a quick summary of economic conditions and presidential elections over the past several decades. It is based on the ‘bread and peace’ model created by political scientist Douglas Hibbs to forecast elections based solely on economic growth (with corrections for wartime, notably Adlai Stevenson’s exceptionally poor performance in 1952 and Hubert Humphrey’s loss in 1968, years when Democrats were presiding over unpopular wars). Better forecasts are possible using additional information such as incumbency and opinion polls, but what is impressive here is that this simple model does pretty well all by itself.

For simplicity, we predict y (vote share) solely from x (economic performance), using a linear regression, $y \sim \mathrm{N}(a + bx, \sigma^2)$, with a noninformative prior distribution, $p(a, b, \log\sigma) \propto 1$. Although these data form a time series, we are treating them here as a simple regression problem.

The posterior distribution for linear regression with a conjugate prior is normal-inverse-$\chi^2$. We go over the derivation in Chapter 14; here we quickly present the distribution for our two-coefficient example so that we can go forward and use this distribution in our predictive error measures. The posterior distribution is most conveniently factored as $p(a, b, \sigma^2 \mid y) = p(\sigma^2 \mid y)\, p(a, b \mid \sigma^2, y)$:

• The marginal posterior distribution of the variance parameter is

$$\sigma^2 \mid y \sim \text{Inv-}\chi^2(n - J, s^2),$$

where

$$s^2 = \frac{1}{n - J}\,(y - X\hat{\beta})^T (y - X\hat{\beta}),$$

and X is the n × J matrix of predictors, in this case the 15 × 2 matrix whose first column is a column of 1’s and whose second column is the vector x of economic performance numbers.

• The conditional posterior distribution of the vector of coefficients, β = (a, b), is

$$\beta \mid \sigma^2, y \sim \mathrm{N}(\hat{\beta}, V_\beta \sigma^2),$$

where

$$\hat{\beta} = (X^T X)^{-1} X^T y, \qquad V_\beta = (X^T X)^{-1}.$$

For the data at hand, s = 3.6, $\hat{\beta} = (45.9, 3.2)$, and

$$V_\beta = \begin{pmatrix} 0.21 & -0.07 \\ -0.07 & 0.04 \end{pmatrix}.$$

Figure 7.1 Douglas Hibbs’s ‘bread and peace’ model of voting and the economy. Presidential elections since 1952 are listed in order of the economic performance at the end of the preceding administration (as measured by inflation-adjusted growth in average personal income). The better the economy, the better the incumbent party’s candidate generally does, with the biggest exceptions being 1952 (Korean War) and 1968 (Vietnam War).
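The factorization above translates directly into a two-step simulation: draw $\sigma^2$ from its scaled inverse-$\chi^2$ marginal, then draw $\beta$ given $\sigma^2$. The following is a minimal pure-Python sketch for the two-coefficient case, assuming the noninformative prior $p(a, b, \log\sigma) \propto 1$. The function name `posterior_draws` is hypothetical, and because the election data are not reproduced here, the usage line runs on made-up numbers.

```python
import math
import random


def posterior_draws(x, y, n_draws, seed=0):
    """Simulate (a, b, sigma2) from the normal-inverse-chi^2 posterior of
    y ~ N(a + b*x, sigma^2) under p(a, b, log sigma) ∝ 1 (J = 2 coefficients)."""
    rng = random.Random(seed)
    n, J = len(y), 2
    # Entries of X^T X and X^T y for X = [column of 1's, x].
    sx, sxx = sum(x), sum(xi * xi for xi in x)
    sy, sxy = sum(y), sum(xi * yi for xi, yi in zip(x, y))
    det = n * sxx - sx * sx
    # V_beta = (X^T X)^{-1}, written out for the 2 x 2 case.
    v = [[sxx / det, -sx / det], [-sx / det, n / det]]
    # beta_hat = V_beta X^T y
    a_hat = v[0][0] * sy + v[0][1] * sxy
    b_hat = v[1][0] * sy + v[1][1] * sxy
    s2 = sum((yi - a_hat - b_hat * xi) ** 2 for xi, yi in zip(x, y)) / (n - J)
    # Cholesky factor L of V_beta, so that V_beta = L L^T.
    l11 = math.sqrt(v[0][0])
    l21 = v[1][0] / l11
    l22 = math.sqrt(v[1][1] - l21 * l21)
    draws = []
    for _ in range(n_draws):
        # sigma^2 | y ~ Inv-chi^2(n - J, s^2), i.e. (n - J) s^2 / chi^2_{n-J};
        # a chi^2_k variate is Gamma(shape k/2, scale 2).
        chi2 = rng.gammavariate((n - J) / 2, 2.0)
        sigma2 = (n - J) * s2 / chi2
        # beta | sigma^2, y ~ N(beta_hat, V_beta sigma^2).
        z1, z2 = rng.gauss(0, 1), rng.gauss(0, 1)
        sd = math.sqrt(sigma2)
        a = a_hat + sd * l11 * z1
        b = b_hat + sd * (l21 * z1 + l22 * z2)
        draws.append((a, b, sigma2))
    return (a_hat, b_hat), s2, v, draws


# Made-up data, for illustration only.
(a_hat, b_hat), s2, v, draws = posterior_draws([0, 1, 2, 3], [1, 3, 5, 8], n_draws=1000)
```

With these four made-up points the least-squares fit is a = 0.8, b = 2.3, with $s^2 = 0.15$; the draws in `draws` can then feed any of the predictive accuracy summaries discussed below.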

7.1 Measures of predictive accuracy

One way to evaluate a model is through the accuracy of its predictions. Sometimes we care about this accuracy for its own sake, as when evaluating a forecast. In other settings, predictive accuracy is valued for comparing different models rather than for its own sake. We begin by considering different ways of defining the accuracy or error of a model’s predictions, then discuss methods for estimating predictive accuracy or error from data.

Preferably, the measure of predictive accuracy is tailored to the application at hand and measures as accurately as possible the benefit (or cost) of predicting future data with the model. Examples of application-specific measures are classification accuracy and monetary cost. More examples are given in Chapter 9 in the context of decision analysis. For many data analyses, however, explicit benefit or cost information is not available, and the predictive performance of a model is assessed by generic scoring functions and rules.

In point prediction (predictive point estimation or point forecasting) a single value is reported as a prediction of the unknown future observation. Measures of predictive accuracy for point prediction are called scoring functions. We consider squared error as an example because it is the most common scoring function in the literature on prediction.

Mean squared error. A model’s fit to new data can be summarized in point prediction by mean squared error, $\frac{1}{n}\sum_{i=1}^n \big(y_i - \mathrm{E}(y_i \mid \theta)\big)^2$, or a weighted version such as $\frac{1}{n}\sum_{i=1}^n \big(y_i - \mathrm{E}(y_i \mid \theta)\big)^2/\mathrm{var}(y_i \mid \theta)$. These measures have the advantage of being easy to compute and, more importantly, to interpret, but the disadvantage of being less appropriate for models that are far from the normal distribution.
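Both summaries are one-liners once the model's predictive mean $\mathrm{E}(y_i \mid \theta)$ and variance $\mathrm{var}(y_i \mid \theta)$ are available for each data point. The sketch below takes them as plain lists; the function names are hypothetical.

```python
def mean_squared_error(y, pred_mean):
    """(1/n) * sum_i (y_i - E(y_i | theta))^2."""
    return sum((yi - m) ** 2 for yi, m in zip(y, pred_mean)) / len(y)


def weighted_mean_squared_error(y, pred_mean, pred_var):
    """(1/n) * sum_i (y_i - E(y_i | theta))^2 / var(y_i | theta)."""
    return sum((yi - m) ** 2 / v for yi, m, v in zip(y, pred_mean, pred_var)) / len(y)


print(mean_squared_error([1, 2, 3], [1, 2, 4]))
print(weighted_mean_squared_error([1, 2, 3], [1, 2, 4], [1, 1, 4]))
```

Dividing each squared error by its predictive variance puts points that the model predicts with different precision on a common scale.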

In probabilistic prediction (probabilistic forecasting) the aim is to report inferences about $\tilde{y}$ in such a way that the full uncertainty over $\tilde{y}$ is taken into account. Measures of predictive accuracy for probabilistic prediction are called scoring rules. Examples include the quadratic, logarithmic, and zero-one scores. Good scoring rules for prediction are proper and local: propriety of the scoring rule motivates the decision maker to report his or her beliefs honestly, and locality incorporates the possibility that bad predictions for some $\tilde{y}$ may be judged more harshly than others. It can be shown that the logarithmic score is the unique (up to an affine transformation) local and proper scoring rule, and it is commonly used for evaluating probabilistic predictions.

Log predictive density or log-likelihood. The logarithmic score for predictions is the log predictive density $\log p(y \mid \theta)$. If the model is normal with constant variance $\sigma^2$, this equals a constant minus $n/(2\sigma^2)$ times the mean squared error, so for fixed $\sigma$ ranking predictions by one is equivalent to ranking them by the other. The log predictive density is also sometimes called the log-likelihood. The log predictive density has an important role in statistical model comparison because of its connection to the Kullback-Leibler information measure. In the limit of large sample sizes, the model with the lowest Kullback-Leibler information (and thus the highest expected log predictive density) will have the highest posterior probability. Thus, it seems reasonable to use expected log predictive density as a measure of overall model fit. Due to its generality, we use the log predictive density to measure predictive accuracy in this chapter.
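The link between the logarithmic score and squared error for a normal model can be checked numerically: with a common $\sigma$, the summed log density equals $-\frac{n}{2}\log(2\pi\sigma^2) - \frac{n}{2\sigma^2}\,\text{MSE}$. A minimal sketch with made-up numbers (function names hypothetical):

```python
import math


def normal_log_score(y, mu, sigma):
    """sum_i log N(y_i | mu_i, sigma^2), computed term by term."""
    return sum(
        -0.5 * math.log(2 * math.pi * sigma**2) - (yi - mi) ** 2 / (2 * sigma**2)
        for yi, mi in zip(y, mu)
    )


def log_score_from_mse(y, mu, sigma):
    """The same quantity written as an affine function of the mean squared error."""
    n = len(y)
    mse = sum((yi - mi) ** 2 for yi, mi in zip(y, mu)) / n
    return -0.5 * n * math.log(2 * math.pi * sigma**2) - n * mse / (2 * sigma**2)


y, mu = [1.0, 2.5, 3.2], [1.2, 2.0, 3.0]
print(normal_log_score(y, mu, 0.5), log_score_from_mse(y, mu, 0.5))
```

The equivalence breaks down as soon as the predictive variance differs across points or across models, which is one reason the log score is the more general choice.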

Given that we are working with the log predictive density, the question may arise: why not use the log posterior? Why only use the data model and not the prior density in this calculation? The answer is that we are interested here in summarizing the fit of model to data, and for this purpose the prior is relevant in estimating the parameters but not in assessing a model’s accuracy.

We are not saying that the prior cannot be used in assessing a model’s fit to data; rather we say that the prior density is not relevant in computing predictive accuracy. Predictive accuracy is not the only concern when evaluating a model, and even within the bailiwick of predictive accuracy, the prior is relevant in that it affects inferences about θ and thus affects any calculations involving $p(y \mid \theta)$. In a sparse-data setting, a poor choice of prior distribution can lead to weak inferences and poor predictions.

Predictive accuracy for a single data point

The ideal measure of a model’s fit would be its out-of-sample predictive performance for new data produced from the true data-generating process (external validation). We label f as the true model, y as the observed data (thus, a single realization of the dataset y from the distribution f(y)), and $\tilde{y}$ as future data or alternative datasets that could have been seen. The out-of-sample predictive fit for a new data point $\tilde{y}_i$ using logarithmic score is then,

$$\log p_{\text{post}}(\tilde{y}_i) = \log \mathrm{E}_{\text{post}}\big(p(\tilde{y}_i \mid \theta)\big) = \log \int p(\tilde{y}_i \mid \theta)\, p_{\text{post}}(\theta)\, d\theta.$$

In the above expression, $p_{\text{post}}(\tilde{y}_i)$ is the predictive density for $\tilde{y}_i$ induced by the posterior distribution $p_{\text{post}}(\theta)$. We have introduced the notation $p_{\text{post}}$ here to represent the posterior distribution because our expressions will soon become more complicated and it will be convenient to avoid explicitly showing the conditioning of our inferences on the observed data y. More generally we use $p_{\text{post}}$ and $\mathrm{E}_{\text{post}}$ to denote any probability or expectation that averages over the posterior distribution of θ.

Averaging over the distribution of future data

We must then take one further step. The future data $\tilde{y}_i$ are themselves unknown and thus we define the expected out-of-sample log predictive density for a new data point,

$$\text{elpd} = \mathrm{E}_f\big(\log p_{\text{post}}(\tilde{y}_i)\big) = \int \big(\log p_{\text{post}}(\tilde{y}_i)\big)\, f(\tilde{y}_i)\, d\tilde{y}_i. \tag{7.1}$$
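When the true data distribution f is known, as in a simulation study, the expectation defining the elpd can be approximated by drawing new points from f and averaging the log posterior predictive density. The sketch below assumes a hypothetical model $y_i \sim \mathrm{N}(\mu, \sigma^2)$ with σ known and a flat prior on μ, for which $p_{\text{post}}(\tilde{y}_i) = \mathrm{N}(\tilde{y}_i \mid \bar{y}, \sigma^2(1 + 1/n))$, and a hypothetical true f equal to $\mathrm{N}(\mu_{\text{true}}, \sigma^2)$; the closed-form value is returned alongside as a check.

```python
import math
import random


def normal_logpdf(x, mean, var):
    return -0.5 * math.log(2 * math.pi * var) - (x - mean) ** 2 / (2 * var)


def elpd_normal_mean(y, sigma, mu_true, n_sim=100_000, seed=1):
    """elpd for y_i ~ N(mu, sigma^2), sigma known, flat prior on mu, when the
    true f is N(mu_true, sigma^2). Returns (Monte Carlo estimate, closed form)."""
    n = len(y)
    ybar = sum(y) / n
    # Posterior predictive density for one new point: N(ybar, sigma^2 (1 + 1/n)).
    pred_var = sigma**2 * (1 + 1 / n)
    rng = random.Random(seed)
    # E_f(log p_post(y_tilde)), approximated by averaging over draws y_tilde ~ f.
    mc = sum(normal_logpdf(rng.gauss(mu_true, sigma), ybar, pred_var) for _ in range(n_sim)) / n_sim
    # Closed form, using E_f (y_tilde - ybar)^2 = sigma^2 + (mu_true - ybar)^2.
    exact = -0.5 * math.log(2 * math.pi * pred_var) - (sigma**2 + (mu_true - ybar) ** 2) / (2 * pred_var)
    return mc, exact


mc, exact = elpd_normal_mean([0.3, -0.5, 1.2, 0.1], sigma=1.0, mu_true=0.0)
print(mc, exact)
```

In real applications f is not available, which is exactly the difficulty the rest of this chapter addresses.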

In any application, we would have some $p_{\text{post}}$ but we do not in general know the data distribution f. A natural way to estimate the expected out-of-sample log predictive density would be to plug in an estimate for f, but this will tend to imply too good a fit, as we discuss in Section 7.2. For now we consider the estimation of predictive accuracy in a Bayesian context.

To keep comparability with the given dataset, one can define a measure of predictive accuracy for the n data points taken one at a time, the expected log pointwise predictive density for a new dataset:

$$\text{elppd} = \sum_{i=1}^n \mathrm{E}_f\big(\log p_{\text{post}}(\tilde{y}_i)\big), \tag{7.2}$$

which must be defined based on some agreed-upon division of the data y into individual data points $y_i$. The advantage of using a pointwise measure, rather than working with the joint posterior predictive distribution $p_{\text{post}}(\tilde{y})$, is in the connection of the pointwise calculation to cross-validation, which allows some fairly general approaches to approximation of out-of-sample fit using available data.

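It is sometimes useful to consider predictive accuracy given a point estimate $\hat{\theta}(y)$, thus,

$$\text{elpd}_{\hat{\theta}} = \mathrm{E}_f\big(\log p(\tilde{y} \mid \hat{\theta})\big). \tag{7.3}$$

For models with independent data given parameters, there is no difference between joint or pointwise prediction given a point estimate, as $p(\tilde{y} \mid \hat{\theta}) = \prod_{i=1}^n p(\tilde{y}_i \mid \hat{\theta})$.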

Evaluating predictive accuracy for a fitted model

In practice the parameter θ is not known, so we cannot know the log predictive density $\log p(y \mid \theta)$. For the reasons discussed above we would like to work with the posterior distribution, $p_{\text{post}}(\theta) = p(\theta \mid y)$, and summarize the predictive accuracy of the fitted model to data by the log pointwise predictive density,

$$\text{lppd} = \log \prod_{i=1}^n p_{\text{post}}(y_i) = \sum_{i=1}^n \log \int p(y_i \mid \theta)\, p_{\text{post}}(\theta)\, d\theta. \tag{7.4}$$

To compute this predictive density in practice, we can evaluate the expectation using draws from $p_{\text{post}}(\theta)$, the usual posterior simulations, which we label $\theta^s$, $s = 1, \dots, S$:

$$\text{computed lppd} = \sum_{i=1}^n \log\left(\frac{1}{S}\sum_{s=1}^S p(y_i \mid \theta^s)\right). \tag{7.5}$$

We typically assume that the number of simulation draws S is large enough to fully capture the posterior distribution; thus we shall refer to the theoretical value (7.4) and the computation (7.5) interchangeably as the log pointwise predictive density or lppd of the data.
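In code, (7.5) is best computed on the log scale, since the individual densities $p(y_i \mid \theta^s)$ can underflow. The sketch below assumes the pointwise log-likelihoods have already been stored as an S × n nested list with `log_lik[s][i]` $= \log p(y_i \mid \theta^s)$; that layout and the function names are hypothetical, and the averaging uses the standard log-sum-exp shift.

```python
import math


def log_mean_exp(values):
    """log((1/S) * sum_s exp(v_s)), shifted by the max to avoid underflow."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values) / len(values))


def computed_lppd(log_lik):
    """Computed lppd from an S x n table with log_lik[s][i] = log p(y_i | theta^s)."""
    n = len(log_lik[0])
    return sum(log_mean_exp([row[i] for row in log_lik]) for i in range(n))


log_lik = [[-1.2, -0.7, -2.3], [-1.0, -0.9, -2.0], [-1.4, -0.6, -2.6]]
print(computed_lppd(log_lik))
```

Note that the average is taken over densities, not over log densities; averaging the logs instead would give a different (smaller) quantity, which reappears below in the effective-number-of-parameters corrections.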

As we shall discuss in Section 7.2, the lppd of observed data y is an overestimate of the elppd for future data (7.2). Hence the plan is to start with (7.5) and then apply some sort of bias correction to get a reasonable estimate of (7.2).

Choices in defining the likelihood and predictive quantities

As is well known in hierarchical modeling, the line separating prior distribution from likelihood is somewhat arbitrary and is related to the question of what aspects of the data will be changed in hypothetical replications. In a hierarchical model with direct parameters $\alpha_1, \dots, \alpha_J$ and hyperparameters $\phi$, we can imagine replicating new data in existing groups (with the ‘likelihood’ being proportional to $p(y \mid \alpha_j)$) or new data in new groups (a new $\alpha_{J+1}$ is drawn, and the ‘likelihood’ is proportional to $p(y \mid \phi) = \int p(\alpha_{J+1} \mid \phi)\, p(y \mid \alpha_{J+1})\, d\alpha_{J+1}$). In either case we can easily compute the posterior predictive density of the observed data y:

• When predicting $\tilde{y} \mid \alpha_j$ (that is, new data from existing groups), we compute $p(y \mid \alpha_j^s)$ for each posterior simulation $\alpha_j^s$ and then take the average, as in (7.5).

• When predicting $\tilde{y} \mid \alpha_{J+1}$ (that is, new data from a new group), we sample $\alpha_{J+1}^s$ from $p(\alpha_{J+1} \mid \phi^s)$ to compute $p(y \mid \alpha_{J+1}^s)$.
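The two kinds of replication differ only in where the group-level parameter comes from. The sketch below assumes a hypothetical normal hierarchical model $y_{ij} \sim \mathrm{N}(\alpha_j, \sigma^2)$, $\alpha_j \sim \mathrm{N}(\mu, \tau^2)$, with σ known and $\phi = (\mu, \tau)$; the posterior draws are supplied as plain lists from whatever sampler was used, and all function names are illustrative.

```python
import math
import random


def log_mean_exp(values):
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values) / len(values))


def normal_logpdf(x, mean, sd):
    return -0.5 * math.log(2 * math.pi * sd**2) - (x - mean) ** 2 / (2 * sd**2)


def log_pred_existing_group(y_j, alpha_j_draws, sigma):
    """log (1/S) sum_s p(y_j | alpha_j^s): data treated as coming from existing group j."""
    return log_mean_exp([sum(normal_logpdf(y, a, sigma) for y in y_j) for a in alpha_j_draws])


def log_pred_new_group(y_new, mu_draws, tau_draws, sigma, seed=0):
    """For each draw phi^s = (mu^s, tau^s), sample alpha_{J+1}^s ~ N(mu^s, (tau^s)^2),
    then average p(y | alpha_{J+1}^s): data treated as coming from a new group."""
    rng = random.Random(seed)
    per_draw = []
    for mu, tau in zip(mu_draws, tau_draws):
        alpha_new = rng.gauss(mu, tau)
        per_draw.append(sum(normal_logpdf(y, alpha_new, sigma) for y in y_new))
    return log_mean_exp(per_draw)


mu_s, tau_s, alpha_s = [0.1, 0.3, -0.2], [0.5, 0.8, 0.6], [0.2, 0.4, 0.0]
print(log_pred_existing_group([0.5, 0.1], alpha_s, sigma=1.0))
print(log_pred_new_group([0.5, 0.1], mu_s, tau_s, sigma=1.0))
```

When τ is close to zero every group shares the same effect, and the two calculations coincide.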

Similarly, in a mixture model, we can consider replications conditioning on the mixture indicators, or replications in which the mixture indicators are redrawn as well.

Similar choices arise even in the simplest experiments. For example, in the model $y_1, \dots, y_n \sim \mathrm{N}(\mu, \sigma^2)$, we have the option of assuming the sample size is fixed by design (that is, leaving n unmodeled) or treating it as a random variable and allowing a new ñ in a hypothetical replication.

We are not bothered by the nonuniqueness of the predictive distribution. Just as with posterior predictive checks, different distributions correspond to different potential uses of a posterior inference. Given some particular data, a model might predict new data accurately in some scenarios but not in others.

7.2 Information criteria and cross-validation

For historical reasons, measures of predictive accuracy are referred to as information criteria and are typically defined based on the deviance (the log predictive density of the data given a point estimate of the fitted model, multiplied by -2; that is, $-2 \log p(y \mid \hat{\theta})$).

A point estimate $\hat{\theta}$ and posterior distribution $p_{\text{post}}(\theta)$ are fit to the data y, and out-of-sample predictions will typically be less accurate than implied by the within-sample predictive accuracy. To put it another way, the accuracy of a fitted model’s predictions of future data will generally be lower, in expectation, than the accuracy of the same model’s predictions for observed data, even if the family of models being fit happens to include the true data-generating process, and even if the parameters in the model happen to be sampled exactly from the specified prior distribution.

We are interested in prediction accuracy for two reasons: first, to measure the performance of a model that we are using; second, to compare models. Our goal in model comparison is not necessarily to pick the model with lowest estimated prediction error or even to average over candidate models—as discussed in Section 7.5, we prefer continuous model expansion to discrete model choice or averaging—but at least to put different models on a common scale. Even models with completely different parameterizations can be used to predict the same measurements.

When different models have the same number of parameters estimated in the same way, one might simply compare their best-fit log predictive densities directly, but when comparing models of differing size or differing effective size (for example, comparing logistic regressions fit using uniform, spline, or Gaussian process priors), it is important to make some adjustment for the natural ability of a larger model to fit data better, even if only by chance.

Estimating out-of-sample predictive accuracy using available data

Several methods are available to estimate the expected predictive accuracy without waiting for out-of-sample data. We cannot compute formulas such as (7.1) directly because we do not know the true distribution, f. Instead we can consider various approximations. We know of no approximation that works in general, but predictive accuracy is important enough that it is still worth trying. We list several reasonable-seeming approximations here. Each of these methods has flaws, which tells us that any predictive accuracy measure that we compute will be only approximate.

• Within-sample predictive accuracy. A naive estimate of the expected log predictive density for new data is the log predictive density for existing data. As discussed above, we would like to work with the Bayesian pointwise formula, that is, lppd as computed using the simulation (7.5). This summary is quick and easy to understand but is in general an overestimate of (7.2) because it is evaluated on the data from which the model was fit.

• Adjusted within-sample predictive accuracy. Given that lppd is a biased estimate of elppd, the next logical step is to correct that bias. Formulas such as AIC, DIC, and WAIC (all discussed below) give approximately unbiased estimates of elppd by starting with something like lppd and then subtracting a correction for the number of parameters, or the effective number of parameters, being fit. These adjustments can give reasonable answers in many cases but have the general problem of being correct at best only in expectation, not necessarily in any given case.

• Cross-validation. One can attempt to capture out-of-sample prediction error by fitting the model to training data and then evaluating its predictive accuracy on a holdout set. Cross-validation avoids the problem of overfitting but remains tied to the data at hand and thus can be correct at best only in expectation. In addition, cross-validation can be computationally expensive: a stable estimate typically requires many data partitions and fits. At the extreme, leave-one-out cross-validation (LOO-CV) requires n fits unless some computational shortcut can be used to approximate the computations.
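For a model simple enough to refit in closed form, the gap between within-sample and leave-one-out accuracy can be computed exactly. The sketch assumes a hypothetical model $y_i \sim \mathrm{N}(\mu, \sigma^2)$ with σ known and a flat prior on μ: the full-data posterior predictive for $y_i$ is $\mathrm{N}(\bar{y}, \sigma^2(1 + 1/n))$, and leaving out $y_i$ gives $\mathrm{N}(\bar{y}_{-i}, \sigma^2(1 + 1/(n-1)))$, so the n refits reduce to n updates of a mean.

```python
import math


def normal_logpdf(x, mean, var):
    return -0.5 * math.log(2 * math.pi * var) - (x - mean) ** 2 / (2 * var)


def within_sample_lppd(y, sigma):
    """lppd: each y_i scored by the posterior predictive fitted to all n points."""
    n = len(y)
    ybar = sum(y) / n
    return sum(normal_logpdf(yi, ybar, sigma**2 * (1 + 1 / n)) for yi in y)


def loo_lppd(y, sigma):
    """LOO-CV: each y_i scored by the posterior predictive fitted without y_i."""
    n = len(y)
    total = sum(y)
    out = 0.0
    for yi in y:
        ybar_minus_i = (total - yi) / (n - 1)
        out += normal_logpdf(yi, ybar_minus_i, sigma**2 * (1 + 1 / (n - 1)))
    return out


y = [0.3, -0.5, 1.2, 0.1, -0.8]
print(within_sample_lppd(y, 1.0), loo_lppd(y, 1.0))
```

In this model each held-out point is both farther from its predictive mean and scored with a wider predictive variance than in the within-sample calculation, so the LOO total is always the smaller of the two.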

Log predictive density asymptotically, or for normal linear models

Before introducing information criteria it is useful to discuss some asymptotic results. Under conditions specified in Chapter 4, the posterior distribution, $p(\theta \mid y)$, approaches a normal distribution in the limit of increasing sample size. In this asymptotic limit, the posterior is dominated by the likelihood (the prior contributes only one factor, while the likelihood contributes n factors, one for each data point), and so the likelihood function also approaches the same normal distribution.

Figure 7.2 Posterior distribution of the log predictive density $\log p(y \mid \theta)$ for the election forecasting example. The variation comes from posterior uncertainty in θ. The maximum value of the distribution, -40.3, is the log predictive density when θ is at the maximum likelihood estimate. The mean of the distribution is -42.0, and the difference between the mean and the maximum is 1.7, which is close to the value of 3/2 that would be predicted from asymptotic theory, given that we are estimating 3 parameters (two coefficients and a residual variance).

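As sample size $n \to \infty$, we can label the limiting posterior distribution as $\theta \mid y \to \mathrm{N}(\theta_0, V_0/n)$. In this limit the log predictive density is

$$\log p(y \mid \theta) = c(y) - \frac{1}{2}\left(k \log(2\pi) + \log\lvert V_0/n\rvert + (\theta - \theta_0)^T (V_0/n)^{-1} (\theta - \theta_0)\right),$$

where c(y) is a constant that only depends on the data y and the model class but not on the parameters θ.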

The limiting multivariate normal distribution for θ induces a posterior distribution for the log predictive density that ends up being a constant (equal to $c(y) - \frac{1}{2}(k \log(2\pi) + \log\lvert V_0/n\rvert)$) minus 1/2 times a $\chi^2_k$ random variable, where k is the dimension of θ, that is, the number of parameters in the model. The maximum of this distribution of the log predictive density is attained when θ equals the maximum likelihood estimate (of course), and its posterior mean is at a value k/2 lower. For actual posterior distributions, this asymptotic result is only an approximation, but it will be useful as a benchmark for interpreting the log predictive density as a measure of fit.
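The k/2 benchmark is easy to reproduce by simulation: under the limiting normal posterior, the quadratic form $(\theta - \theta_0)^T (V_0/n)^{-1} (\theta - \theta_0)$ is a sum of k squared standard normals. The minimal sketch below (function name hypothetical) simulates the drop of the log predictive density below its maximum; k = 3 corresponds to the 3/2 benchmark quoted for the election example.

```python
import random


def mean_drop_below_max(k, n_draws=100_000, seed=2):
    """Under the limiting normal posterior, log p(y | theta) equals its maximum minus
    0.5 * chi^2_k; simulate that drop and return its posterior mean (about k / 2)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_draws):
        chi2_k = sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(k))
        total += 0.5 * chi2_k
    return total / n_draws


print(mean_drop_below_max(3))
```

For a real posterior the simulated draws would instead be $\log p(y \mid \theta^s)$, and departures from k/2 indicate that the asymptotic approximation is imperfect.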

With singular models (such as mixture models and, more generally, the overparameterized complex models discussed in Part V of this book), a single data model can arise from more than one possible parameter vector, the Fisher information matrix is not positive definite, plug-in estimates are not representative of the posterior distribution, and the deviance does not converge to a $\chi^2$ distribution.

Example. Fit of the election forecasting model: Bayesian inference

The log predictive probability density of the data is $\sum_{i=1}^{15} \log \mathrm{N}(y_i \mid a + bx_i, \sigma^2)$, with an uncertainty induced by the posterior distribution, $p_{\text{post}}(a, b, \sigma^2)$.

Posterior distribution of the observed log predictive density, $\log p(y \mid \theta)$. The posterior distribution $p_{\text{post}}(\theta) = p(a, b, \sigma^2 \mid y)$ is normal-inverse-$\chi^2$. To get a sense of uncertainty in the log predictive density $\log p(y \mid \theta)$, we compute it for each of S = 10,000 posterior simulation draws of θ. Figure 7.2 shows the resulting distribution, which looks roughly like a $\chi^2_3$ (no surprise: three parameters are being estimated, namely two coefficients and a variance, and the sample size of 15 is large enough that we would expect the asymptotic normal approximation to the posterior distribution to be pretty good), scaled by a factor of -1/2 and shifted so that its upper limit corresponds to the maximum likelihood estimate (with log predictive density of -40.3, as noted earlier). The mean of the posterior distribution of the log predictive density is -42.0, and the difference between the mean and the maximum is 1.7, which is close to the value of 3/2 that would be predicted from asymptotic theory, given that 3 parameters are being estimated.

Akaike information criterion (AIC)

In much of the statistical literature on predictive accuracy, inference for θ is summarized not by a posterior distribution $p_{\text{post}}$ but by a point estimate $\hat{\theta}$, typically the maximum likelihood estimate. Out-of-sample predictive accuracy is then defined not by the expected log posterior predictive density (7.1) but by $\text{elpd}_{\hat{\theta}}$ defined in (7.3), where both y and $\tilde{y}$ are random. There is no direct way to calculate (7.3); instead the standard approach is to use the log predictive density of the observed data y given a point estimate, $\log p(y \mid \hat{\theta})$, and correct for bias due to overfitting.

Let k be the number of parameters estimated in the model. The simplest bias correction is based on the asymptotic normal posterior distribution. In this limit (or in the special case of a normal linear model with known variance and uniform prior distribution), subtracting k from the log predictive density given the maximum likelihood estimate is a correction for how much the fitting of k parameters will increase predictive accuracy, by chance alone:

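$$\widehat{\text{elpd}}_{\text{AIC}} = \log p(y \mid \hat{\theta}_{\text{mle}}) - k. \tag{7.6}$$

AIC is defined as the above quantity multiplied by -2; thus $\text{AIC} = -2 \log p(y \mid \hat{\theta}_{\text{mle}}) + 2k$.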
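For the simple regression used as the running example, the maximized log-likelihood has a closed form: with $\hat{\sigma}^2_{\text{mle}} = \text{SSE}/n$, it equals $-\frac{n}{2}\log(2\pi\hat{\sigma}^2_{\text{mle}}) - \frac{n}{2}$. The sketch below computes (7.6) and AIC with k = 3 (a, b, σ); the function name is hypothetical and the usage data are made up.

```python
import math


def aic_simple_regression(x, y):
    """Return (log p(y | theta_mle), elpd_AIC, AIC) for y ~ N(a + b x, sigma^2)."""
    n = len(y)
    xbar, ybar = sum(x) / n, sum(y) / n
    sxx = sum((xi - xbar) ** 2 for xi in x)
    sxy = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y))
    b = sxy / sxx
    a = ybar - b * xbar
    sse = sum((yi - a - b * xi) ** 2 for xi, yi in zip(x, y))
    sigma2_mle = sse / n  # the MLE divides by n, not by n - J
    loglik = -0.5 * n * math.log(2 * math.pi * sigma2_mle) - 0.5 * n
    k = 3  # two coefficients and the residual scale
    elpd_aic = loglik - k
    return loglik, elpd_aic, -2 * elpd_aic


print(aic_simple_regression([0, 1, 2, 3], [1, 3, 5, 8]))
```

Here the maximum likelihood estimate of $\sigma^2$ is used, rather than the unbiased $s^2$, because AIC is defined in terms of the maximized log-likelihood.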

It makes sense to adjust the deviance for fitted parameters, but once we go beyond linear models with flat priors, we cannot simply subtract k from the log predictive density (equivalently, add 2k to the deviance). Informative prior distributions and hierarchical structures tend to reduce the amount of overfitting, compared to what would happen under simple least squares or maximum likelihood estimation.

For models with informative priors or hierarchical structure, the effective number of parameters strongly depends on the variance of the group-level parameters. We shall illustrate in Section 7.3 with the example of educational testing experiments in 8 schools. Under the hierarchical model in that example, we would expect the effective number of parameters to be somewhere between 8 (one for each school) and 1 (for the average of the school effects).

Deviance information criterion (DIC) and effective number of parameters

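DIC is a somewhat Bayesian version of AIC that takes formula (7.6) and makes two changes, replacing the maximum likelihood estimate $\hat{\theta}$ with the posterior mean $\hat{\theta}_{\text{Bayes}} = \mathrm{E}(\theta \mid y)$ and replacing k with a data-based bias correction. The new measure of predictive accuracy is,

$$\widehat{\text{elpd}}_{\text{DIC}} = \log p(y \mid \hat{\theta}_{\text{Bayes}}) - p_{\text{DIC}},$$

where $p_{\text{DIC}}$ is the effective number of parameters, defined as,

$$p_{\text{DIC}} = 2\left(\log p(y \mid \hat{\theta}_{\text{Bayes}}) - \mathrm{E}_{\text{post}}\big(\log p(y \mid \theta)\big)\right), \tag{7.8}$$

where the expectation in the second term is an average of θ over its posterior distribution. Expression (7.8) is calculated using simulations $\theta^s$, $s = 1, \dots, S$ as,

$$\text{computed } p_{\text{DIC}} = 2\left(\log p(y \mid \hat{\theta}_{\text{Bayes}}) - \frac{1}{S}\sum_{s=1}^S \log p(y \mid \theta^s)\right).$$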

The posterior mean of θ produces the maximum log predictive density when it happens to coincide with the mode, and $p_{\text{DIC}}$ can be negative if the posterior mean is far from the mode.

An alternative version of DIC uses a slightly different definition of effective number of parameters:

$$p_{\text{DIC alt}} = 2\,\mathrm{var}_{\text{post}}\big(\log p(y \mid \theta)\big). \tag{7.10}$$

Both $p_{\text{DIC}}$ and $p_{\text{DIC alt}}$ give the correct answer in the limit of fixed model and large n and can be derived from the asymptotic $\chi^2$ distribution (shifted and scaled by a factor of -1/2) of the log predictive density. For linear models with uniform prior distributions, both these measures of the effective number of parameters reduce to k. Of these two measures, $p_{\text{DIC}}$ is more numerically stable but $p_{\text{DIC alt}}$ has the advantage of always being positive. Because DIC is easier and more stable to approximate by Monte Carlo than earlier proposals for estimating the effective number of parameters, it quickly became popular.

The actual quantity called DIC is defined in terms of the deviance rather than the log predictive density; thus,

$$\text{DIC} = -2\log p(y\mid\hat\theta_{\text{Bayes}}) + 2p_{\text{DIC}}.$$
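Both versions of the effective number of parameters can be computed directly from posterior simulations. The sketch below is a minimal illustration, not a reference implementation. It assumes a hypothetical one-parameter model, $y_i \sim \text{N}(\theta, 1)$ with a flat prior, chosen because the posterior $\text{N}(\bar y, 1/n)$ can be sampled exactly and both $p_{\text{DIC}}$ and $p_{\text{DIC alt}}$ should then come out close to $k = 1$. The names `log_lik`, `p_dic`, and `p_dic_alt` are our own.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical example: y_i ~ N(theta, 1) with a flat prior on theta, so that
# theta | y ~ N(ybar, 1/n) and the posterior can be sampled exactly.
n, S = 100, 10_000
y = rng.normal(2.0, 1.0, size=n)
theta = rng.normal(y.mean(), 1.0 / np.sqrt(n), size=S)  # posterior draws theta^s


def log_lik(th):
    """Total log-likelihood log p(y | theta) for each value in th (returns an array)."""
    th = np.atleast_1d(th)
    return np.sum(-0.5 * np.log(2 * np.pi) - 0.5 * (y[None, :] - th[:, None]) ** 2, axis=1)


ll_draws = log_lik(theta)               # log p(y | theta^s), s = 1, ..., S
ll_at_mean = log_lik(theta.mean())[0]   # log p(y | theta_hat_Bayes), plug-in at the posterior mean

p_dic = 2 * (ll_at_mean - ll_draws.mean())   # Monte Carlo version of (7.8)
p_dic_alt = 2 * np.var(ll_draws, ddof=1)     # (7.10), with the sample variance over draws
elpd_dic = ll_at_mean - p_dic
dic = -2 * elpd_dic
print(f"p_DIC = {p_dic:.2f}, p_DIC alt = {p_dic_alt:.2f}, DIC = {dic:.1f}")
```

With a real model, `theta` would hold the draws from whatever sampler was used and `log_lik` would evaluate that model's likelihood; the two correction formulas stay the same.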

Watanabe-Akaike or widely applicable information criterion (WAIC)

WAIC is a more fully Bayesian approach for estimating the out-of-sample expectation (7.2), starting with the computed log pointwise posterior predictive density (7.5) and then adding a correction for effective number of parameters to adjust for overfitting.

Two adjustments have been proposed in the literature. Both are based on pointwise calculations and can be viewed as approximations to cross-validation, based on derivations not shown here.

The first approach is a difference, similar to that used to construct pDIC:

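$$p_{\text{WAIC 1}} = 2\sum_{i=1}^n\left(\log\left(\text{E}_{\text{post}}\,p(y_i\mid\theta)\right) - \text{E}_{\text{post}}\left(\log p(y_i\mid\theta)\right)\right),$$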

which can be computed from simulations by replacing the expectations by averages over the S posterior draws θs:

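$$p_{\text{WAIC 1}} = 2\sum_{i=1}^n\left(\log\left(\frac{1}{S}\sum_{s=1}^S p(y_i\mid\theta^s)\right) - \frac{1}{S}\sum_{s=1}^S \log p(y_i\mid\theta^s)\right).$$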

The other measure uses the variance of individual terms in the log predictive density summed over the n data points:

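$$p_{\text{WAIC 2}} = \sum_{i=1}^n \text{var}_{\text{post}}\left(\log p(y_i\mid\theta)\right). \tag{7.11}$$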

This expression looks similar to (7.10), the formula for pDIC alt (although without the factor of 2), but is more stable because it computes the variance separately for each data point and then sums; the summing yields stability.

To calculate (7.11), we compute the posterior variance of the log predictive density for each data point $y_i$, that is, $V_{s=1}^S \log p(y_i\mid\theta^s)$, where $V_{s=1}^S$ represents the sample variance, $V_{s=1}^S a_s = \frac{1}{S-1}\sum_{s=1}^S (a_s - \bar a)^2$. Summing over all the data points $y_i$ gives the effective number of parameters:

$$p_{\text{WAIC 2}} = \sum_{i=1}^n V_{s=1}^S\left(\log p(y_i\mid\theta^s)\right).$$

We can then use either pWAIC 1 or pWAIC 2 as a bias correction:

$$\widehat{\text{elpd}}_{\text{WAIC}} = \text{lppd} - p_{\text{WAIC}}. \tag{7.13}$$

In the present discussion, we evaluate both pWAIC 1 and pWAIC 2. For practical use, we recommend pWAIC 2 because its series expansion has closer resemblance to the series expansion for LOO-CV and also in practice seems to give results closer to LOO-CV.

As with AIC and DIC, we define WAIC as -2 times the expression (7.13) so as to be on the deviance scale. In Watanabe’s original definition, WAIC is the negative of the average log pointwise predictive density (assuming the prediction of a single new data point) and thus is divided by n and does not have the factor 2; here we scale it so as to be comparable with AIC, DIC, and other measures of deviance.

For a normal linear model with large sample size, known variance, and uniform prior distribution on the coefficients, pWAIC 1 and pWAIC 2 are approximately equal to the number of parameters in the model. More generally, the adjustment can be thought of as an approximation to the number of ‘unconstrained’ parameters in the model, where a parameter counts as 1 if it is estimated with no constraints or prior information, 0 if it is fully constrained or if all the information about the parameter comes from the prior distribution, or an intermediate value if both the data and prior distributions are informative.
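A minimal sketch of these WAIC computations, assuming the log-likelihood has already been evaluated at each posterior draw and stored as an $S \times n$ array (this layout and the function name `waic` are our own choices, not a fixed convention). The log of the average density is computed after subtracting the column maximum, to avoid underflow. The demonstration uses a hypothetical normal model with known variance and a single mean parameter under a flat prior, for which both corrections should be close to 1.

```python
import numpy as np


def waic(log_lik):
    """WAIC quantities from an S x n matrix whose (s, i) entry is log p(y_i | theta^s)."""
    m = log_lik.max(axis=0)
    log_mean_p = m + np.log(np.exp(log_lik - m).mean(axis=0))  # log((1/S) sum_s p(y_i | theta^s))
    lppd = log_mean_p.sum()
    p_waic1 = 2 * np.sum(log_mean_p - log_lik.mean(axis=0))
    p_waic2 = np.sum(np.var(log_lik, axis=0, ddof=1))           # sample variance V over draws
    elpd_waic = lppd - p_waic2                                   # (7.13), using p_WAIC 2
    return {"lppd": lppd, "p_waic1": p_waic1, "p_waic2": p_waic2, "waic": -2 * elpd_waic}


# Hypothetical check: y_i ~ N(theta, 1) with a flat prior (one parameter, known variance).
rng = np.random.default_rng(3)
n, S = 100, 4000
y = rng.normal(0.0, 1.0, size=n)
y = (y - y.mean()) / y.std()
theta = rng.normal(y.mean(), 1.0 / np.sqrt(n), size=S)
ll = -0.5 * np.log(2 * np.pi) - 0.5 * (y[None, :] - theta[:, None]) ** 2
result = waic(ll)
print({k: round(float(v), 3) for k, v in result.items()})
```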

Compared to AIC and DIC, WAIC has the desirable property of averaging over the posterior distribution rather than conditioning on a point estimate. This is especially relevant in a predictive context, as WAIC is evaluating the predictions that are actually being used for new data in a Bayesian context. AIC and DIC estimate the performance of the plug-in predictive density, but Bayesian users of these measures would still use the posterior predictive density for predictions.

Other information criteria are based on Fisher’s asymptotic theory assuming a regular model for which the likelihood or the posterior converges to a single point, and where maximum likelihood and other plug-in estimates are asymptotically equivalent. WAIC also works with singular models and thus is particularly helpful for models with hierarchical and mixture structures in which the number of parameters increases with sample size and where point estimates often do not make sense.

For all these reasons, we find WAIC more appealing than AIC and DIC. A cost of using WAIC is that it relies on a partition of the data into n pieces, which is not so easy to do in some structured-data settings such as time series, spatial, and network data. AIC and DIC do not make this partition explicitly, but derivations of AIC and DIC assume that residuals are independent given the point estimate $\hat\theta$: conditioning on a point estimate $\hat\theta$ eliminates posterior dependence at the cost of not fully capturing posterior uncertainty.

Effective number of parameters as a random variable

It makes sense that pDIC and pWAIC depend not just on the structure of the model but on the particular data that happen to be observed. For a simple example, consider the model $y_1, \dots, y_n \sim \text{N}(\theta, 1)$, with $n$ large and $\theta \sim \text{U}(0, \infty)$. That is, θ is constrained to be positive but otherwise has a noninformative uniform prior distribution. How many parameters are being estimated in this model? If the sample mean $\bar y$ is close to zero (relative to its standard error $1/\sqrt{n}$), then the effective number of parameters p is approximately 1/2, since roughly half the information in the posterior distribution is coming from the data and half from the prior constraint of positivity. However, if $\bar y$ is positive and large, then the constraint is essentially irrelevant, and the effective number of parameters is approximately 1. This example illustrates that, even with a fixed model and fixed true parameters, it can make sense for the effective number of parameters to depend on data.
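The dependence on the data can be checked by simulation. This sketch assumes the positive-mean model above with known unit variance, draws from the truncated posterior by simple rejection, and uses $p_{\text{WAIC 2}}$ as the measure of effective number of parameters (other measures give somewhat different values). Here $p_{\text{WAIC 2}}$ is approximately $n\,\text{var}(\theta\mid y)$, so with $\bar y = 0$, where the posterior is half-normal with variance $(1 - 2/\pi)/n$, we would expect roughly $1 - 2/\pi \approx 0.36$ rather than exactly 1/2, but still well below 1; with $\bar y$ far from zero it should be close to 1.

```python
import numpy as np


def p_waic2(y, theta_draws):
    """p_WAIC 2 for y_i ~ N(theta, 1): sum over i of the posterior variance of log p(y_i | theta)."""
    log_lik = -0.5 * np.log(2 * np.pi) - 0.5 * (y[None, :] - theta_draws[:, None]) ** 2
    return float(np.sum(np.var(log_lik, axis=0, ddof=1)))


def positive_posterior_draws(y, S, rng):
    """Posterior under theta ~ U(0, inf): N(ybar, 1/n) truncated to theta > 0, drawn by rejection."""
    draws = rng.normal(y.mean(), 1.0 / np.sqrt(len(y)), size=4 * S)
    draws = draws[draws > 0]
    return draws[:S]


rng = np.random.default_rng(5)
n, S = 500, 4000
noise = rng.normal(0.0, 1.0, size=n)
noise = (noise - noise.mean()) / noise.std()  # mean 0, variance 1, so ybar is set exactly below

p_eff = {}
for ybar in (0.0, 3.0):
    y = noise + ybar
    p_eff[ybar] = p_waic2(y, positive_posterior_draws(y, S, rng))
print(p_eff)
```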

‘Bayesian’ information criterion (BIC)

There is also something called the Bayesian information criterion (a misleading name, we believe) that adjusts for the number of fitted parameters with a penalty that increases with the sample size, $n$. The formula is $\text{BIC} = -2\log p(y\mid\hat\theta) + k\log n$, which for large datasets gives a larger penalty per parameter compared to AIC and thus favors simpler models. BIC differs from the other information criteria considered here in being motivated not by an estimation of predictive fit but by the goal of approximating the marginal probability density of the data, p(y), under the model, which can be used to estimate relative posterior probabilities in a setting of discrete model comparison. For reasons described in Section 7.4, we do not typically find it useful to think about the posterior probabilities of models, but we recognize that others find BIC and similar measures helpful for both theoretical and applied reasons. At this point, we merely point out that BIC has a different goal than the other measures we have discussed. It is completely possible for a complicated model to predict well and have a low AIC, DIC, and WAIC, but, because of the penalty function, to have a relatively high (that is, poor) BIC. Given that BIC is not intended to predict out-of-sample model performance but rather is designed for other purposes, we do not consider it further here.
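A quick check on the claim that BIC penalizes each parameter more heavily than AIC for large datasets: write AIC on the deviance scale as $-2\log p(y\mid\hat\theta) + 2k$ (that is, $-2$ times formula (7.6)), use the same maximum likelihood estimate $\hat\theta$ in both criteria, and subtract.

$$\text{BIC} - \text{AIC} = \bigl(-2\log p(y\mid\hat\theta) + k\log n\bigr) - \bigl(-2\log p(y\mid\hat\theta) + 2k\bigr) = k(\log n - 2).$$

This is positive whenever $n > e^2 \approx 7.4$, so with eight or more data points the BIC penalty per parameter ($\log n$) exceeds the AIC penalty (2), and the gap keeps growing with $n$.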

Leave-one-out cross-validation

In Bayesian cross-validation, the data are repeatedly partitioned into a training set $y_{\text{train}}$ and a holdout set $y_{\text{holdout}}$, and then the model is fit to $y_{\text{train}}$ (thus yielding a posterior distribution $p_{\text{train}}(\theta) = p(\theta\mid y_{\text{train}})$), with this fit evaluated using an estimate of the log predictive density of the holdout data, $\log p_{\text{train}}(y_{\text{holdout}}) = \log\int p(y_{\text{holdout}}\mid\theta)\,p_{\text{train}}(\theta)\,d\theta$. Assuming the posterior distribution $p(\theta\mid y_{\text{train}})$ is summarized by $S$ simulation draws $\theta^s$, we calculate the log predictive density as $\log\left(\frac{1}{S}\sum_{s=1}^S p(y_{\text{holdout}}\mid\theta^s)\right)$.

For simplicity, we restrict our attention here to leave-one-out cross-validation (LOO-CV), the special case with n partitions in which each holdout set represents a single data point. Performing the analysis for each of the n data points (or perhaps a random subset for efficient computation if n is large) yields n different inferences $p_{\text{post}(-i)}$, each summarized by $S$ posterior simulations, $\theta^{is}$.

The Bayesian LOO-CV estimate of out-of-sample predictive fit is

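$$\text{lppd}_{\text{loo-cv}} = \sum_{i=1}^n \log p_{\text{post}(-i)}(y_i), \quad\text{calculated as}\quad \sum_{i=1}^n \log\left(\frac{1}{S}\sum_{s=1}^S p(y_i\mid\theta^{is})\right).$$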

Each prediction is conditioned on n - 1 data points, which causes underestimation of the predictive fit. For large n the difference is negligible, but for small n (or when using k-fold cross-validation) we can use a first order bias correction b by estimating how much better predictions would be obtained if conditioning on n data points:

$$b = \text{lppd} - \overline{\text{lppd}}_{-i},$$

where

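$$\overline{\text{lppd}}_{-i} = \frac{1}{n}\sum_{i=1}^n\sum_{j=1}^n \log p_{\text{post}(-i)}(y_j), \quad\text{calculated as}\quad \frac{1}{n}\sum_{i=1}^n\sum_{j=1}^n \log\left(\frac{1}{S}\sum_{s=1}^S p(y_j\mid\theta^{is})\right).$$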

The bias-corrected Bayesian LOO-CV is then

$$\text{lppd}_{\text{cloo-cv}} = \text{lppd}_{\text{loo-cv}} + b.$$

The bias correction b is rarely used as it is usually small, but we include it for completeness.

To make comparisons to other methods, we compute an estimate of the effective number of parameters as

$$p_{\text{loo-cv}} = \text{lppd} - \text{lppd}_{\text{loo-cv}}, \tag{7.15}$$

or, using bias-corrected LOO-CV,

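$$p_{\text{cloo-cv}} = \text{lppd} - \text{lppd}_{\text{cloo-cv}} = \overline{\text{lppd}}_{-i} - \text{lppd}_{\text{loo-cv}}.$$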
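The brute-force computation described in this section can be sketched as follows. It assumes a hypothetical normal model with known unit variance and a flat prior, where "refitting" without $y_i$ just means drawing from $\text{N}(\bar y_{-i}, 1/(n-1))$. The same standard-normal draws are reused for every refit (common random numbers); this is our choice to reduce Monte Carlo noise in small differences such as $b$, not part of the method.

```python
import numpy as np


def log_mean_exp(a, axis=0):
    """log(mean(exp(a))) along an axis, computed stably."""
    m = a.max(axis=axis, keepdims=True)
    return np.squeeze(m, axis=axis) + np.log(np.exp(a - m).mean(axis=axis))


def log_lik(y, theta):
    """S x len(y) matrix of log N(y_j | theta^s, 1)."""
    return -0.5 * np.log(2 * np.pi) - 0.5 * (y[None, :] - theta[:, None]) ** 2


rng = np.random.default_rng(11)
n, S = 50, 4000
y = rng.normal(0.0, 1.0, size=n)
y = (y - y.mean()) / y.std()   # standardized hypothetical data
z = rng.standard_normal(S)     # common random numbers, reused for every refit


def posterior_draws(y_fit):
    """'Fit' y_i ~ N(theta, 1) with a flat prior: theta | y_fit ~ N(mean(y_fit), 1/len(y_fit))."""
    return y_fit.mean() + z / np.sqrt(len(y_fit))


lppd = log_mean_exp(log_lik(y, posterior_draws(y))).sum()

lppd_loo = 0.0  # sum_i log p_post(-i)(y_i)
lppd_bar = 0.0  # (1/n) sum_i sum_j log p_post(-i)(y_j)
for i in range(n):
    theta_i = posterior_draws(np.delete(y, i))  # brute-force refit without y_i
    lp = log_mean_exp(log_lik(y, theta_i))      # log p_post(-i)(y_j) for every j
    lppd_loo += lp[i]
    lppd_bar += lp.sum() / n

b = lppd - lppd_bar            # first-order bias correction
lppd_cloo = lppd_loo + b
p_loo = lppd - lppd_loo
p_cloo = lppd - lppd_cloo
print(f"p_loo-cv = {p_loo:.3f}, b = {b:.4f}, p_cloo-cv = {p_cloo:.3f}")
```

For this one-parameter model both effective-parameter estimates should be near 1, and $b$ should be tiny, in line with the remark that the correction is usually small.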

Cross-validation is like WAIC in that it requires data to be divided into disjoint, ideally conditionally independent, pieces. This represents a limitation of the approach when applied to structured models. In addition, cross-validation can be computationally expensive except in settings where shortcuts are available to approximate the distributions ppost(-i) without having to re-fit the model each time. For the examples in this chapter such shortcuts are available, but we use the brute force approach for clarity. If no shortcuts are available, a common approach is to use k-fold cross-validation, in which the data are partitioned into k sets. With a moderate value of k, for example 10, computation time is reasonable in most applications.
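A minimal k-fold sketch, assuming the same kind of hypothetical normal-mean model (known unit variance, flat prior) so that each fold's posterior predictive density is available in closed form, $\text{N}(\bar y_{\text{train}}, 1 + 1/n_{\text{train}})$, and no simulation is needed. The function names are illustrative. Setting $k = n$ recovers leave-one-out cross-validation, which gives a simple consistency check.

```python
import numpy as np


def log_pred(y_hold, y_train):
    """Pointwise log p(y_hold | y_train) for y_i ~ N(theta, 1) with a flat prior on theta.

    The posterior predictive is N(mean(y_train), 1 + 1/len(y_train)), so no sampling is needed.
    """
    var = 1.0 + 1.0 / len(y_train)
    return -0.5 * np.log(2 * np.pi * var) - 0.5 * (y_hold - y_train.mean()) ** 2 / var


def kfold_lppd(y, k, rng):
    """Sum of held-out log predictive densities over a random partition of the data into k folds."""
    idx = np.arange(len(y))
    total = 0.0
    for hold in np.array_split(rng.permutation(idx), k):
        train = np.setdiff1d(idx, hold)
        total += log_pred(y[hold], y[train]).sum()
    return float(total)


rng = np.random.default_rng(0)
y = rng.normal(1.0, 1.0, size=40)
print("10-fold:", kfold_lppd(y, 10, rng))
print("k = n (leave-one-out):", kfold_lppd(y, len(y), rng))
```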

Under some conditions, different information criteria have been shown to be asymptotically equal to leave-one-out cross-validation (in the limit n → ∞, the bias correction can be ignored in the proofs). AIC has been shown to be asymptotically equal to LOO-CV as computed using the maximum likelihood estimate. DIC is a variation of the regularized information criteria which have been shown to be asymptotically equal to LOO-CV using plug-in predictive densities. WAIC has been shown to be asymptotically equal to Bayesian LOO-CV.

For finite n there is a difference, as LOO-CV conditions the posterior predictive densities on n - 1 data points. These differences can be apparent for small n or in hierarchical models, as we discuss in our examples. Other differences arise in regression or hierarchical models. LOO-CV assumes the prediction task $p(\tilde y_i\mid\tilde x_i, y_{-i}, x_{-i})$ while WAIC estimates $p(\tilde y_i\mid y, x_i) = p(\tilde y_i\mid y_i, x_i, y_{-i}, x_{-i})$, so WAIC is making predictions only at x-locations already observed (or in subgroups indexed by $x_i$). This can make a noticeable difference in flexible regression models such as Gaussian processes or hierarchical models where prediction given $x_i$ may depend only weakly on all other data points $(y_{-i}, x_{-i})$. We illustrate with a simple hierarchical model in Section 7.3.

The cross-validation estimates are similar to the non-Bayesian resampling method known as the jackknife. Even though we are working with the posterior distribution, our goal is to estimate an expectation averaging over yrep in its true, unknown distribution, f; thus, we are studying the frequency properties of a Bayesian procedure.

Comparing different estimates of out-of-sample prediction accuracy

All the different measures discussed above are based on adjusting the log predictive density of the observed data by subtracting an approximate bias correction. The measures differ both in their starting points and in their adjustments.

AIC starts with the log predictive density of the data conditional on the maximum likelihood estimate $\hat\theta$, DIC conditions on the posterior mean $\text{E}(\theta\mid y)$, and WAIC starts with the log predictive density, averaging over $p_{\text{post}}(\theta) = p(\theta\mid y)$. Of these three approaches, only WAIC is fully Bayesian and so it is our preference when using a bias correction formula. Cross-validation can be applied to any starting point, but it is also based on the log pointwise predictive density.

Example. Predictive error in the election forecasting model

We illustrate the different estimates of out-of-sample log predictive density using the regression model of 15 elections introduced on page 165.

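AIC. Fit to all 15 data points, the maximum likelihood estimate of the vector $(\hat a, \hat b, \hat\sigma)$ is (45.9, 3.2, 3.6). Since 3 parameters are estimated, the value of $\widehat{\text{elpd}}_{\text{AIC}}$ is

$$\widehat{\text{elpd}}_{\text{AIC}} = \sum_{i=1}^{15}\log\text{N}(y_i\mid 45.9 + 3.2x_i, 3.6^2) - 3,$$

and $\text{AIC} = -2\,\widehat{\text{elpd}}_{\text{AIC}}$.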

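DIC. The relevant formula is $p_{\text{DIC}} = 2\left(\log p(y\mid\text{E}_{\text{post}}(\theta)) - \text{E}_{\text{post}}(\log p(y\mid\theta))\right)$.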

The second of these terms is invariant to reparameterization; we calculate it as

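$$\text{E}_{\text{post}}\left(\log p(y\mid\theta)\right) \approx \frac{1}{S}\sum_{s=1}^S\sum_{i=1}^{15}\log\text{N}\left(y_i\mid a^s + b^s x_i, (\sigma^s)^2\right),$$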

based on a large number S of simulation draws.

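The first term is not invariant. With respect to the prior $p(a, b, \log\sigma) \propto 1$, the posterior means of a and b are 45.9 and 3.2, the same as the maximum likelihood estimate. The posterior means of σ, σ², and log σ, however, imply three different plug-in values for σ, namely $\text{E}(\sigma\mid y)$, $\sqrt{\text{E}(\sigma^2\mid y)}$, and $\exp(\text{E}(\log\sigma\mid y))$, and therefore three different values of the first term. Parameterizing using σ, we get

$$p_{\text{DIC}} = 2\left(\sum_{i=1}^{15}\log\text{N}\bigl(y_i\mid 45.9 + 3.2x_i, \text{E}(\sigma\mid y)^2\bigr) - \text{E}_{\text{post}}\left(\sum_{i=1}^{15}\log\text{N}(y_i\mid a + bx_i, \sigma^2)\right)\right),$$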

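which gives $\widehat{\text{elpd}}_{\text{DIC}} = \log p(y\mid\hat\theta_{\text{Bayes}}) - p_{\text{DIC}}$ and $\text{DIC} = -2\,\widehat{\text{elpd}}_{\text{DIC}}$.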
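To see why the first term of $p_{\text{DIC}}$ depends on the parameterization, the sketch below simulates from the posterior of a simple linear regression under $p(a, b, \log\sigma) \propto 1$ and plugs in three different "posterior means" of σ. The data are simulated stand-ins, not the election data, so the printed numbers are not those of this example; only the qualitative point carries over. By Jensen's inequality the plug-in values are ordered $\exp(\text{E}(\log\sigma\mid y)) < \text{E}(\sigma\mid y) < \sqrt{\text{E}(\sigma^2\mid y)}$, and each gives a different $p_{\text{DIC}}$.

```python
import numpy as np

rng = np.random.default_rng(7)

# Hypothetical data standing in for the election series (NOT the real data).
n = 15
x = rng.uniform(-1.0, 4.0, size=n)
y = 46.0 + 3.0 * x + rng.normal(0.0, 3.5, size=n)

X = np.column_stack([np.ones(n), x])
beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
resid = y - X @ beta_hat
nu = n - X.shape[1]
s2 = resid @ resid / nu

# Posterior draws under p(a, b, log sigma) ∝ 1:
#   sigma^2 | y ~ scaled Inv-chi^2(nu, s2);  (a, b) | sigma, y ~ N(beta_hat, sigma^2 (X'X)^-1)
S = 20_000
sigma2 = nu * s2 / rng.chisquare(nu, size=S)
chol = np.linalg.cholesky(np.linalg.inv(X.T @ X))
beta = beta_hat + np.sqrt(sigma2)[:, None] * (rng.standard_normal((S, 2)) @ chol.T)
sigma = np.sqrt(sigma2)


def log_lik(a, b, sig):
    """log p(y | a, b, sigma) summed over the n data points."""
    return np.sum(-0.5 * np.log(2 * np.pi * sig**2) - 0.5 * ((y - (a + b * x)) / sig) ** 2)


# Second term of p_DIC: posterior mean of the log-likelihood (invariant to parameterization).
mu = beta[:, [0]] + beta[:, [1]] * x[None, :]
e_log_lik = np.mean(np.sum(-0.5 * np.log(2 * np.pi * sigma2[:, None])
                           - 0.5 * (y[None, :] - mu) ** 2 / sigma2[:, None], axis=1))

# First term: plug in posterior means, but of which transformation of sigma?
a_bar, b_bar = beta.mean(axis=0)
plug_in_sigma = {
    "sigma": sigma.mean(),
    "sigma^2": np.sqrt(sigma2.mean()),
    "log sigma": np.exp(np.log(sigma).mean()),
}
p_dic = {name: 2 * (log_lik(a_bar, b_bar, s) - e_log_lik) for name, s in plug_in_sigma.items()}
for name in plug_in_sigma:
    print(f"{name:>9}: plug-in sigma = {plug_in_sigma[name]:.3f}, p_DIC = {p_dic[name]:.3f}")
```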

WAIC. The log pointwise predictive probability of the observed data under the fitted model is

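$$\text{lppd} = \sum_{i=1}^{15}\log\left(\frac{1}{S}\sum_{s=1}^S \text{N}\left(y_i\mid a^s + b^s x_i, (\sigma^s)^2\right)\right).$$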

The effective number of parameters can be calculated as

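$$p_{\text{WAIC 1}} = 2\sum_{i=1}^{15}\left(\log\left(\frac{1}{S}\sum_{s=1}^S p(y_i\mid\theta^s)\right) - \frac{1}{S}\sum_{s=1}^S \log p(y_i\mid\theta^s)\right)$$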

or

$$p_{\text{WAIC 2}} = \sum_{i=1}^{15} V_{s=1}^S\left(\log p(y_i\mid\theta^s)\right).$$

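Then $\widehat{\text{elpd}}_{\text{WAIC}} = \text{lppd} - p_{\text{WAIC}}$ and $\text{WAIC} = -2\,\widehat{\text{elpd}}_{\text{WAIC}}$.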

Leave-one-out cross-validation. We fit the model 15 times, leaving out a different data point each time. For each fit of the model, we sample S times from the posterior distribution of the parameters and compute the log predictive density. The cross-validated pointwise predictive accuracy is

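$$\text{lppd}_{\text{loo-cv}} = \sum_{i=1}^{15}\log\left(\frac{1}{S}\sum_{s=1}^S \text{N}\left(y_i\mid a^{is} + b^{is}x_i, (\sigma^{is})^2\right)\right),$$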

which equals -43.8. Multiplying by -2 to be on the same scale as AIC and the others, we get 87.6. The effective number of parameters from cross-validation, from (7.15), is $p_{\text{loo-cv}} = \text{lppd} - \text{lppd}_{\text{loo-cv}}$.

Given that this model includes two linear coefficients and a variance parameter, these all look like reasonable estimates of effective number of parameters.

External validation. How well will the model predict new data? The 2012 election gives an answer, but it is just one data point. This illustrates the difficulty with external validation for this sort of problem.

7.3 Model comparison based on predictive performance

There are generally many options in setting up a model for any applied problem. Our usual approach is to start with a simple model that uses only some of the available information—for example, not using some possible predictors in a regression, fitting a normal model to discrete data, or ignoring evidence of unequal variances and fitting a simple equal-variance model. Once we have successfully fitted a simple model, we can check its fit to data (as discussed in Sections 6.3 and 6.4) and then expand it (as discussed in Section 7.5).

There are two typical scenarios in which models are compared. First, when a model is expanded, it is natural to compare the smaller to the larger model and assess what has been gained by expanding the model (or, conversely, if a model is simplified, to assess what was lost). This generalizes into the problem of comparing a set of nested models and judging how much complexity is necessary to fit the data.

In comparing nested models, the larger model typically has the advantage of making more sense and fitting the data better but the disadvantage of being more difficult to understand and compute. The key questions of model comparison are typically: (1) is the improvement in fit large enough to justify the additional difficulty in fitting, and (2) is the prior distribution on the additional parameters reasonable?

The second scenario of model comparison is between two or more nonnested models—neither model generalizes the other. One might compare regressions that use different sets of predictors to fit the same data, for example, modeling political behavior using information based on past voting results or on demographics. In these settings, we are typically not interested in choosing one of the models—it would be better, both in substantive and predictive terms, to construct a larger model that includes both as special cases, including both sets of predictors and also potential interactions in a larger regression, possibly with an informative prior distribution if needed to control the estimation of all the extra parameters. However, it can be useful to compare the fit of the different models, to see how either set of predictors performs when considered alone.

In any case, when evaluating models in this way, it is important to adjust for overfitting, especially when comparing models that vary greatly in their complexity.

Example. Expected predictive accuracy of models for the eight schools

In the example of Section 5.5, three modes of inference were proposed:

• No pooling: Separate estimates for each of the eight schools, reflecting that the experiments were performed independently and so each school’s observed value is an unbiased estimate of its own treatment effect. This model has eight parameters: an estimate for each school.

• Complete pooling: A combined estimate averaging the data from all schools into a single number, reflecting that the eight schools were actually quite similar (as were the eight different treatments), and also reflecting that the variation among the eight estimates (the left column of numbers in Table 5.2) is no larger than would be expected by chance alone given the standard errors (the rightmost column in the table). This model has only one, shared, parameter.

• Hierarchical model: A Bayesian meta-analysis, partially pooling the eight estimates toward a common mean. This model has eight parameters but they are constrained through their hierarchical distribution and are not estimated independently; thus the effective number of parameters should be some number less than 8.

Table 7.1 Deviance (-2 times log predictive density) and corrections for parameter fitting using AIC, DIC, WAIC (using the correction pWAIC 2), and leave-one-out cross-validation for each of three models fitted to the data in Table 5.2. Lower values of AIC/DIC/WAIC imply higher predictive accuracy.

Blank cells in the table correspond to measures that are undefined: AIC is defined relative to the maximum likelihood estimate and so is inappropriate for the hierarchical model; cross-validation requires prediction for the held-out case, which is impossible under the no-pooling model.

The no-pooling model has the best raw fit to data, but after correcting for fitted parameters, the complete-pooling model has lowest estimated expected predictive error under the different measures. In general, we would expect the hierarchical model to win, but in this particular case, setting τ = 0 (that is, the complete-pooling model) happens to give the best average predictive performance.

Table 7.1 illustrates the use of predictive log densities and information criteria to compare the three models—no pooling, complete pooling, and hierarchical—fitted to the SAT coaching data. We only have data at the group level, so we necessarily define our data points and cross-validation based on the 8 schools, not the individual students.

We shall go down the rows of Table 7.1 to understand how the different information criteria work for each of these three models, then we discuss how these measures can be used to compare the models.

AIC. The log predictive density is higher—that is, a better fit—for the no pooling model. This makes sense: with no pooling, the maximum likelihood estimate is right at the data, whereas with complete pooling there is only one number to fit all 8 schools. However, the ranking of the models changes after adjusting for the fitted parameters (8 for no pooling, 1 for complete pooling), and the expected log predictive density is estimated to be the best (that is, AIC is lowest) for complete pooling. The last column of the table is blank for AIC, as this procedure is defined based on maximum likelihood estimation which is meaningless for the hierarchical model.
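The AIC comparison for the two models that have maximum likelihood estimates can be reproduced from the school-level estimates and standard errors of Table 5.2. This is a minimal sketch of the plug-in calculation only. It treats the standard errors as known, as in Section 5.5, and does not attempt the other rows of Table 7.1.

```python
import math

# Eight-schools estimates y_j and standard errors sigma_j (Table 5.2).
y = [28, 8, -3, 7, -1, 1, 18, 12]
sigma = [15, 10, 16, 11, 9, 11, 10, 18]


def log_normal(v, mean, sd):
    """log N(v | mean, sd^2)."""
    return -0.5 * math.log(2 * math.pi * sd**2) - 0.5 * ((v - mean) / sd) ** 2


# No pooling: each school's maximum likelihood estimate is its own y_j (zero residuals); k = 8.
lpd_none = sum(log_normal(yj, yj, sj) for yj, sj in zip(y, sigma))
aic_none = -2 * (lpd_none - 8)

# Complete pooling: the MLE of the common effect is the precision-weighted mean; k = 1.
w = [1 / sj**2 for sj in sigma]
mu_hat = sum(wj * yj for wj, yj in zip(w, y)) / sum(w)
lpd_complete = sum(log_normal(yj, mu_hat, sj) for yj, sj in zip(y, sigma))
aic_complete = -2 * (lpd_complete - 1)

print(f"no pooling:       -2 lpd = {-2 * lpd_none:.1f}, AIC = {aic_none:.1f}")
print(f"complete pooling: -2 lpd = {-2 * lpd_complete:.1f}, AIC = {aic_complete:.1f}, mu_hat = {mu_hat:.1f}")
```

The no-pooling model has the higher log density at its maximum likelihood estimate, but after the penalty of 8 versus 1 parameters the complete-pooling model ends up with the lower AIC.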

DIC. For the no-pooling and complete-pooling models with their flat priors, DIC gives results identical to AIC (except for possible simulation variability, which we have essentially eliminated here by using a large number of posterior simulation draws). DIC for the hierarchical model gives something in between: a direct fit to data (lpd) that is better than complete pooling but not as good as the (overfit) no pooling, and an effective number of parameters of 2.8, closer to 1 than to 8, which makes sense given that the estimated school effects are pooled almost all the way back to their common mean. Adding in the correction for fitting, complete pooling wins, which makes sense given that in this case the data are consistent with zero between-group variance.

WAIC. This fully Bayesian measure gives results similar to DIC. The fit to observed data is slightly worse for each model (that is, the numbers for lppd are slightly more negative than the corresponding values for lpd, higher up in the table), accounting for the fact that the posterior predictive density has a wider distribution and thus has lower density values at the mode, compared to the predictive density conditional on the point estimate. However, the correction for effective number of parameters is lower (for no pooling and the hierarchical model, pWAIC is about half of pDIC), consistent with the theoretical behavior of WAIC when there is only a single data point per parameter, while for complete pooling, pWAIC is only a bit less than 1 (roughly consistent with what we would expect from a sample size of 8). For all three models here, pWAIC is much less than pDIC, with this difference arising from the fact that the lppd in WAIC is already accounting for much of the uncertainty arising from parameter estimation.

Cross-validation. For this example it is impossible to cross-validate the no-pooling model as it would require the impossible task of obtaining a prediction from a held-out school given the other seven. This illustrates one main difference from information criteria, which assume new prediction for these same schools and thus also work for the no-pooling model. For complete pooling and for the hierarchical model, we can perform leave-one-out cross-validation directly. In this model the local prediction of cross-validation is based only on the information coming from the other schools, while the local prediction in WAIC is based on the local observation as well as the information coming from the other schools. In both cases the prediction is for unknown future data, but the amount of information used is different and thus predictive performance estimates differ more when the hierarchical prior becomes more vague (with the difference going to infinity as the hierarchical prior becomes uninformative, to yield the no-pooling model).

Comparing the three models. For this particular dataset, complete pooling wins the expected out-of-sample prediction competition. Typically it is best to estimate the hierarchical variance but, in this case, τ = 0 is the best fit to the data, and this is reflected in the center column in Table 7.1, where the expected log predictive densities are higher than for no pooling or the hierarchical model.

That said, we still prefer the hierarchical model here, because we do not believe that τ is truly zero. For example, the estimated effect in school A is 28 (with a standard error of 15) and the estimate in school C is -3 (with a standard error of 16). This difference is not statistically significant and, indeed, the data are consistent with there being zero variation of effects between schools; nonetheless we would feel uncomfortable, for example, stating that the posterior probability is 0.5 that the effect in school C is larger than the effect in school A, given data that show school A looking better. It might, however, be preferable to use a more informative prior distribution on τ, given that very large values are both substantively implausible and also contribute to some of the predictive uncertainty under this model.

In general, predictive accuracy measures are useful in parallel with posterior predictive checks to see if there are important patterns in the data that are not captured by each model. As with predictive checking, the log score can be computed in different ways for a hierarchical model depending on whether the parameters θ and replications yrep correspond to estimates and replications of new data from the existing groups (as we have performed the calculations in the above example) or new groups (additional schools from the N(μ, τ2) distribution in the above example).

Evaluating predictive error comparisons

When comparing models in their predictive accuracy, two issues arise, which might be called statistical and practical significance. Lack of statistical significance arises from uncertainty in the estimates of comparative out-of-sample prediction accuracy and is ultimately associated with variation in individual prediction errors which manifests itself in averages for any finite dataset. Some asymptotic theory suggests that the sampling variance of any estimate of average prediction error will be of order 1, so that, roughly speaking, differences of less than 1 could typically be attributed to chance. But this asymptotic result does not necessarily hold for nonnested models. A practical estimate of related sampling uncertainty can be obtained by analyzing the variation in the expected log predictive densities $\widehat{\text{elpd}}_i$ using parametric or nonparametric approaches.
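One simple nonparametric version of this idea, sketched below, treats the pointwise differences in $\widehat{\text{elpd}}_i$ between two models as roughly independent and estimates the standard error of their sum as $\sqrt{n}$ times their sample standard deviation. The independence assumption and the function name are ours; with strongly dependent data this estimate can be poor. The pointwise values in the example are made up.

```python
import math


def elpd_diff(elpd_a, elpd_b):
    """Total difference in pointwise elpd (model A minus model B) and its standard error.

    Treats the n pointwise differences as roughly independent, so the variance of their
    sum is estimated as n times their sample variance.
    """
    diffs = [a - b for a, b in zip(elpd_a, elpd_b)]
    n = len(diffs)
    mean = sum(diffs) / n
    var = sum((d - mean) ** 2 for d in diffs) / (n - 1)
    return sum(diffs), math.sqrt(n * var)


# Hypothetical pointwise values for four observations (made up for illustration).
total, se = elpd_diff([-1.0, -1.2, -0.7, -1.0], [-1.1, -1.0, -1.0, -1.0])
print(f"difference = {total:.2f}, se = {se:.2f}")
```

Here the difference of 0.2 is small compared with its standard error of about 0.42, so these made-up numbers would give no clear preference between the two models.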

Sometimes it may be possible to use an application-specific scoring function that is so familiar to subject-matter experts that they can interpret the practical significance of differences. For example, epidemiologists are used to looking at differences in the area under the receiver operating characteristic curve (AUC) for classification and survival models. In settings without such conventional measures, it is not always clear how to interpret the magnitude of a difference in log predictive probability when comparing two models. Is a difference of 2 important? 10? 100? One way to understand such differences is to calibrate based on simpler models. For example, consider two models for a survey of n voters in an American election, with one model being completely empty (predicting p = 0.5 for each voter to support either party) and the other correctly assigning probabilities of 0.4 and 0.6 (one way or another) to the voters. Setting aside uncertainties involved in fitting, the expected log predictive probability is log(0.5) = -0.693 per respondent for the first model and 0.6log(0.6) + 0.4log(0.4) = -0.673 per respondent for the second model. The expected improvement in log predictive probability from fitting the better model is then 0.02n. So, for n = 1000, this comes to an improvement of 20, but for n = 100 the predictive improvement is only 2. This would seem to accord with intuition: going from 50/50 to 60/40 is a clear win in a large sample, but in a smaller predictive dataset the modeling benefit would be hard to see amid the noise.
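The arithmetic in the voter example is easy to check. This short sketch assumes, as in the text, that uncertainty from fitting is ignored; the function name is our own.

```python
import math


def expected_log_score(p_true, p_pred):
    """Expected log predictive probability per respondent for a binary vote,
    when the true probability is p_true and the model predicts p_pred."""
    return p_true * math.log(p_pred) + (1 - p_true) * math.log(1 - p_pred)


# By symmetry the 0.4 and 0.6 voters contribute the same expected score, so use p_true = 0.6.
empty = expected_log_score(0.6, 0.5)    # empty model: log(0.5) for everyone
better = expected_log_score(0.6, 0.6)   # model with the correct probabilities
gain = better - empty                   # about 0.02 per respondent

for n in (10, 100, 1000):
    print(f"n = {n:4d}: expected improvement in log predictive probability = {n * gain:.1f}")
```

The loop prints totals of about 0.2, 2.0, and 20.1, showing how the same per-respondent gain becomes visible only in larger samples.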

In our studies of public opinion and epidemiology, we have seen cases where a model that is larger and better (in the sense of giving more reasonable predictions) does not appear dominant in the predictive comparisons. This can happen because the improvements are small on an absolute scale (for example, changing the predicted average response among a particular category of the population from 55% Yes to 60% Yes) and concentrated in only a few subsets of the population (those for which there is enough data so that a more complicated model yields noticeably different predictions). Average out-of-sample prediction error can be a useful measure but it does not tell the whole story of model fit.

Bias induced by model selection

Cross-validation and information criteria make a correction for using the data twice (in constructing the posterior and in model assessment) and obtain asymptotically unbiased estimates of predictive performance for a given model. However, when these methods are used for model selection, the predictive performance estimate of the selected model is biased due to the selection process.

If the number of compared models is small, the bias is small, but if the number of candidate models is very large (for example, if the number of models grows exponentially as the number of observations n grows, or the number of predictors p is much larger than log n in covariate selection) a model selection procedure can strongly overfit the data. It is possible to estimate the selection-induced bias and obtain unbiased estimates, for example by using another level of cross-validation. This does not, however, prevent the model selection procedure from possibly overfitting to the observations and consequently selecting models with suboptimal predictive performance. This is one reason we view cross-validation and information criteria as an approach for understanding fitted models rather than for choosing among them.
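A toy simulation shows the selection effect. It assumes 200 hypothetical candidate models that are all, by construction, exactly as good as a baseline, with each estimated elpd difference perturbed by independent unit-variance noise (an arbitrary scale). Picking the best-looking candidate produces an estimated gain that is clearly positive even though the true gain is zero.

```python
import numpy as np

rng = np.random.default_rng(2024)

# Toy setting (an assumption for illustration): the true elpd difference of every candidate
# from the baseline is exactly zero, but each estimate carries independent N(0, 1) noise.
n_models = 200
true_diff = np.zeros(n_models)
estimated_diff = true_diff + rng.normal(0.0, 1.0, size=n_models)

# Select the candidate with the best estimated predictive performance.
best = int(np.argmax(estimated_diff))
print(f"selected model {best}: estimated gain {estimated_diff[best]:.2f}, true gain {true_diff[best]:.2f}")

# Repeat the whole selection many times to see the average optimism of the winner's estimate.
n_reps = 1000
winner_estimates = rng.normal(0.0, 1.0, size=(n_reps, n_models)).max(axis=1)
print(f"average estimated gain of the selected model: {winner_estimates.mean():.2f} (true gain 0)")
```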

Challenges

The current state of the art of measurement of predictive model fit remains unsatisfying. Formulas such as AIC, DIC, and WAIC fail in various examples: AIC does not work in settings with strong prior information, DIC gives nonsensical results when the posterior distribution is not well summarized by its mean, and WAIC relies on a data partition that would cause difficulties with structured models such as for spatial or network data. Cross-validation is appealing but can be computationally expensive and also is not always well defined in dependent data settings.

For these reasons, Bayesian statisticians do not always use predictive error comparisons in applied work, but we recognize that there are times when it can be useful to compare highly dissimilar models, and, for that purpose, predictive comparisons can make sense. In addition, measures of effective numbers of parameters are appealing tools for understanding statistical procedures, especially when considering models such as splines and Gaussian processes that have complicated dependence structures and thus no obvious formulas to summarize model complexity.

Thus we see the value of the methods described here, for all their flaws. Right now our preferred choice is cross-validation, with WAIC as a fast and computationally convenient alternative. WAIC is fully Bayesian (using the posterior distribution rather than a point estimate), gives reasonable results in the examples we have considered here, and has a more-or-less explicit connection to cross-validation, as can be seen from its formulation based on the pointwise predictive density.
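Because WAIC is built from the pointwise predictive density, it can be computed directly from a matrix of log-likelihood values, with one row per posterior draw and one column per data point. The sketch below follows the definitions used earlier in the chapter: lppd sums the log of the posterior-averaged likelihood for each point, and p_waic sums the posterior variance of each point's log-likelihood. The small normal model in the usage part (known standard deviation 1, flat prior on the mean, hypothetical data) is an assumption chosen so that posterior draws can be generated exactly.

```python
import math
import random
import statistics


def log_mean_exp(values):
    """Numerically stable log of the average of exp(v) over values."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values) / len(values))


def waic(log_lik):
    """Return (lppd, p_waic, elpd_waic, waic) from a draws-by-points matrix.

    log_lik[s][i] holds log p(y_i | theta^s) for posterior draw s and point i.
    p_waic is the sum over points of the sample variance across draws.
    """
    lppd = 0.0
    p_waic = 0.0
    for i in range(len(log_lik[0])):
        column = [draw[i] for draw in log_lik]
        lppd += log_mean_exp(column)
        p_waic += statistics.variance(column)
    elpd_waic = lppd - p_waic
    return lppd, p_waic, elpd_waic, -2.0 * elpd_waic


# Hypothetical usage: y_i ~ N(mu, 1) with a flat prior on mu,
# so the posterior is mu | y ~ N(ybar, 1/n) and can be sampled directly.
y = [1.2, 0.4, -0.3, 2.1]
n = len(y)
ybar = statistics.mean(y)
rng = random.Random(0)
draws = [rng.gauss(ybar, 1.0 / math.sqrt(n)) for _ in range(4000)]
log_lik = [[-0.5 * math.log(2 * math.pi) - 0.5 * (yi - mu) ** 2 for yi in y]
           for mu in draws]
lppd, p_waic, elpd_waic, waic_value = waic(log_lik)
print(lppd, p_waic, elpd_waic, waic_value)
```

With one free parameter, p_waic should come out close to 1. Reporting WAIC on the deviance scale (multiplying by -2) is only a convention. Comparisons can just as well be made on elpd_waic.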

7.4 Model comparison using Bayes factors

So far in this chapter we have discussed model evaluation and comparison based on expected predictive accuracy. Another way to compare models is through a Bayesian analysis in which each model is given a prior probability which, when multiplied by the marginal likelihood (the probability of the data given the model), yields a quantity that is proportional to the posterior probability of the model. This fully Bayesian approach has some appeal but we generally do not recommend it because, in practice, the marginal likelihood is highly sensitive to aspects of the model that are typically assigned arbitrarily and are untestable from data. Here we present the general idea and illustrate with two examples, one where it makes sense to assign prior and posterior probabilities to discrete models, and one where it does not.

In a problem in which a discrete set of competing models is proposed, the term Bayes factor is sometimes used for the ratio of the marginal probability density under one model to the marginal density under a second model. If we label two competing models as H1 and H2, then the ratio of their posterior probabilities is

$$\frac{p(H_2 \mid y)}{p(H_1 \mid y)} = \frac{p(H_2)}{p(H_1)} \times \text{Bayes factor}(H_2; H_1),$$

where

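$$\text{Bayes factor}(H_2; H_1) = \frac{p(y \mid H_2)}{p(y \mid H_1)} = \frac{\int p(\theta_2 \mid H_2)\, p(y \mid \theta_2, H_2)\, d\theta_2}{\int p(\theta_1 \mid H_1)\, p(y \mid \theta_1, H_1)\, d\theta_1}. \tag{7.16}$$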

In many cases, the competing models have a common set of parameters, but this is not necessary; hence the notation θi for the parameters in model Hi. As expression (7.16) makes clear, the Bayes factor is only defined when the marginal density of y under each model is proper.

The goal when using Bayes factors is to choose a single model Hi or average over a discrete set using their posterior probabilities, p(Hi|y). As we show in examples in this book, we generally prefer to replace a discrete set of models with an expanded continuous family. The bibliographic note at the end of the chapter provides pointers to more extensive treatments of Bayes factors.

Bayes factors can work well when the underlying model is truly discrete and it makes sense to consider one or the other model as being a good description of the data. We illustrate with an example from genetics.

Example. A discrete example in which Bayes factors are helpful

The Bayesian inference for the genetics example in Section 1.4 can be fruitfully expressed using Bayes factors, with the two competing ‘models’ being H1: the woman is affected, and H2: the woman is unaffected, that is, θ = 1 and θ = 0 in the notation of Section 1.4. The prior odds are p(H2)/p(H1) = 1, and the Bayes factor of the data that the woman has two unaffected sons is p(y|H2)/p(y|H1) = 1.0/0.25. The posterior odds are thus p(H2|y)/p(H1|y) = 4. Computation by multiplying odds ratios makes the accumulation of evidence clear.
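The odds arithmetic can be written out exactly. This is a minimal sketch that assumes, as in Section 1.4, that the sons' statuses are conditionally independent given θ. Each unaffected son then contributes a Bayes factor of 1.0/0.5 = 2 in favor of H2, and the evidence accumulates by multiplication. Exact fractions avoid any rounding.

```python
from fractions import Fraction


def posterior_odds(prior_odds, bayes_factors):
    """Multiply prior odds p(H2)/p(H1) by a sequence of independent Bayes factors."""
    odds = Fraction(prior_odds)
    for bf in bayes_factors:
        odds *= Fraction(bf)
    return odds


prior_odds = Fraction(1)                  # p(H2)/p(H1)
bf_per_son = Fraction(1) / Fraction(1, 2)  # p(son unaffected | H2) / p(son unaffected | H1)

odds_two_sons = posterior_odds(prior_odds, [bf_per_son, bf_per_son])
prob_h1 = 1 / (1 + odds_two_sons)         # p(H1 | y), posterior probability she is affected
odds_three_sons = posterior_odds(prior_odds, [bf_per_son] * 3)
print(odds_two_sons, prob_h1, odds_three_sons)
```

The two-son Bayes factor of 4 is the product of two per-son factors of 2. The resulting posterior probability that the woman is affected, 1/5, matches the result in Section 1.4. A hypothetical third unaffected son would simply double the odds again.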

This example has two features that allow Bayes factors to be helpful. First, each of the discrete alternatives makes scientific sense, and there are no obvious scientific models in between. Second, the marginal distribution of the data under each model, p(y|Hi), is proper.

Bayes factors do not work so well for models that are inherently continuous. For example, we do not like models that assign a positive probability to the event θ = 0, if θ is some continuous parameter such as a treatment effect. Similarly, if a researcher expresses interest in comparing or choosing among various discrete regression models (the problem of variable selection), we would prefer to include all the candidate variables, using a prior distribution to partially pool the coefficients to zero if this is desired. To illustrate the problems with Bayes factors for continuous models, we use the example of the no-pooling and complete-pooling models for the 8 schools problem.

Example. A continuous example where Bayes factors are a distraction

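We now consider a case in which discrete model comparisons and Bayes factors distract from scientific inference. Suppose we had analyzed the data in Section 5.5 from the 8 schools using Bayes factors for the discrete collection of previously proposed standard models, no pooling (H1) and complete pooling (H2):

$$
\begin{aligned}
H_1&: \quad p(y \mid \theta_1, \dots, \theta_J) = \prod_{j=1}^{J} \mathrm{N}(y_j \mid \theta_j, \sigma_j^2), \qquad p(\theta_1, \dots, \theta_J) \propto 1,\\
H_2&: \quad p(y \mid \theta_1, \dots, \theta_J) = \prod_{j=1}^{J} \mathrm{N}(y_j \mid \theta_j, \sigma_j^2), \qquad \theta_1 = \dots = \theta_J = \theta, \qquad p(\theta) \propto 1.
\end{aligned}
$$

(Recall that the standard deviations σj are assumed known in this example.)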

If we use Bayes factors to choose or average among these models, we are immediately confronted with the fact that the Bayes factor, the ratio p(y|H1)/p(y|H2), is not defined: because the prior distributions are improper, the ratio of density functions is 0/0. Consequently, if we wish to continue with the approach of assigning posterior probabilities to these two discrete models, we must consider (1) proper prior distributions, or (2) improper prior distributions that are carefully constructed as limits of proper distributions. In either case, we shall see that the results are unsatisfactory. More explicitly, suppose we replace the flat prior distributions in H1 and H2 by independent normal prior distributions, N(0, A²), for some large A. The resulting posterior distribution for the effect in school j is

$$p(\theta_j \mid y) = (1 - \lambda)\, p(\theta_j \mid y, H_1) + \lambda\, p(\theta_j \mid y, H_2),$$

where the two conditional posterior distributions are normal and centered near yj and the pooled estimate ȳ.. (the precision-weighted average of the yj), respectively. Here λ = p(H2|y) is the posterior probability of the complete pooling model; its odds, λ/(1 − λ), equal the prior odds times the Bayes factor, which is a function of the data and A (see Exercise 7.4). The Bayes factor for this problem is highly sensitive to the prior variance, A². As A increases (with fixed data and fixed prior odds, p(H2)/p(H1)), the posterior distribution becomes more and more concentrated on H2, the complete pooling model. Therefore, the Bayes factor cannot be reasonably applied to the original models with noninformative prior densities, even if they are carefully defined as limits of proper prior distributions.

Yet another problem with the Bayes factor for this example is revealed by considering its behavior as the number of schools being fitted to the model increases. The posterior distribution for θj under the mixture of H1 and H2 turns out to be sensitive to the dimensionality of the problem: very different inferences would be obtained if, for example, the model were applied to similar data on 80 schools (see Exercise 7.4). It makes no scientific sense for the posterior distribution to be highly sensitive to aspects of the prior distributions and problem structure that are scientifically incidental.
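Both sensitivities can be checked numerically. The sketch below computes log Bayes factor(H2; H1) under the N(0, A²) priors described above, using the 8 schools estimates and standard errors from Section 5.5.

- Under H1, each yj is marginally N(0, σj² + A²).
- Under H2, the common θ makes y multivariate normal with covariance diag(σj²) + A²11ᵀ. The determinant and the quadratic form of this matrix have closed forms via the matrix determinant lemma and the Sherman-Morrison formula.

The ‘80 schools’ case is approximated by repeating the same 8 schools ten times. This is a hypothetical stand-in for ‘similar data’, not data from the book.

```python
import math

# Estimated treatment effects and standard errors for the 8 schools (Section 5.5).
Y = [28.0, 8.0, -3.0, 7.0, -1.0, 1.0, 18.0, 12.0]
SIGMA = [15.0, 10.0, 16.0, 11.0, 9.0, 11.0, 10.0, 18.0]


def log_marginal_no_pooling(y, sigma, a):
    """log p(y | H1): independent theta_j ~ N(0, a^2), so y_j ~ N(0, sigma_j^2 + a^2)."""
    total = 0.0
    for yj, sj in zip(y, sigma):
        v = sj ** 2 + a ** 2
        total += -0.5 * math.log(2 * math.pi * v) - yj ** 2 / (2 * v)
    return total


def log_marginal_complete_pooling(y, sigma, a):
    """log p(y | H2): common theta ~ N(0, a^2), so y ~ N(0, diag(sigma^2) + a^2 * 11')."""
    w = [1.0 / sj ** 2 for sj in sigma]
    sum_w = sum(w)
    sum_wy = sum(wj * yj for wj, yj in zip(w, y))
    sum_wyy = sum(wj * yj ** 2 for wj, yj in zip(w, y))
    # Matrix determinant lemma: det = prod(sigma_j^2) * (1 + a^2 * sum_w).
    log_det = sum(math.log(sj ** 2) for sj in sigma) + math.log1p(a ** 2 * sum_w)
    # Sherman-Morrison: y' C^{-1} y without forming the J x J matrix.
    quad = sum_wyy - a ** 2 * sum_wy ** 2 / (1.0 + a ** 2 * sum_w)
    return -0.5 * (len(y) * math.log(2 * math.pi) + log_det + quad)


def log_bayes_factor_h2_h1(y, sigma, a):
    """log Bayes factor(H2; H1) = log p(y | H2) - log p(y | H1)."""
    return log_marginal_complete_pooling(y, sigma, a) - log_marginal_no_pooling(y, sigma, a)


def prob_complete_pooling(y, sigma, a, prior_odds=1.0):
    """lambda = p(H2 | y), from posterior odds = prior odds * Bayes factor."""
    log_odds = math.log(prior_odds) + log_bayes_factor_h2_h1(y, sigma, a)
    return 1.0 / (1.0 + math.exp(-log_odds))


for a in (10.0, 100.0, 1000.0):
    print(a, log_bayes_factor_h2_h1(Y, SIGMA, a), prob_complete_pooling(Y, SIGMA, a))

# Hypothetical "similar data on 80 schools": the same 8 schools repeated ten times.
print(log_bayes_factor_h2_h1(Y * 10, SIGMA * 10, 100.0))
```

For large A, the log Bayes factor grows roughly like (J − 1) log A. With J = 8, doubling A therefore adds about 7 log 2 ≈ 4.85 to the log Bayes factor, and λ is driven toward 1 by the choice of A alone, not by the data. With J = 80 the same A yields a far larger log Bayes factor, which shows the dependence on the number of schools.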

Thus, if we were to use a Bayes factor for this problem, we would find a problem in the model-checking stage (a discrepancy between posterior distribution and substantive knowledge), and we would be moved toward setting up a smoother, continuous family of models to bridge the gap between the two extremes. A reasonable continuous family of models is yj ~ N(θj, σj²), θj ~ N(μ, τ²), with flat prior distributions on μ and on τ over the range [0, ∞); this is the model we used in Section 5.5. Once the continuous expanded model is fitted, there is no reason to assign discrete positive probabilities to the values τ = 0 and τ = ∞, considering that neither makes scientific sense.
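In the continuous family, the two discrete models become the endpoints τ = 0 and τ = ∞, and the data inform τ through its marginal posterior. The sketch below evaluates p(τ|y) on a grid, with μ and the θj integrated out analytically as in the hierarchical normal model of Chapter 5, again using the 8 schools data. Two choices here are assumptions for illustration: truncating the grid at τ = 40 and using 400 grid steps.

```python
import math

# 8 schools estimates and standard errors (Section 5.5).
Y = [28.0, 8.0, -3.0, 7.0, -1.0, 1.0, 18.0, 12.0]
SIGMA = [15.0, 10.0, 16.0, 11.0, 9.0, 11.0, 10.0, 18.0]


def log_marginal_posterior_tau(tau, y, sigma):
    """log p(tau | y) up to a constant, assuming p(mu, tau) is proportional to 1.

    Uses p(tau | y) proportional to V_mu^(1/2) * prod_j (sigma_j^2 + tau^2)^(-1/2)
    * exp(-(y_j - mu_hat)^2 / (2 (sigma_j^2 + tau^2))).
    """
    v = [sj ** 2 + tau ** 2 for sj in sigma]
    prec = [1.0 / vj for vj in v]
    v_mu = 1.0 / sum(prec)
    mu_hat = v_mu * sum(pj * yj for pj, yj in zip(prec, y))
    out = 0.5 * math.log(v_mu)
    for yj, vj in zip(y, v):
        out += -0.5 * math.log(vj) - (yj - mu_hat) ** 2 / (2.0 * vj)
    return out


def tau_posterior_on_grid(y, sigma, upper=40.0, steps=400):
    """Normalized p(tau | y) on an evenly spaced grid over [0, upper]."""
    grid = [upper * k / steps for k in range(steps + 1)]
    logs = [log_marginal_posterior_tau(t, y, sigma) for t in grid]
    top = max(logs)
    dens = [math.exp(lv - top) for lv in logs]
    total = sum(dens)
    return grid, [d / total for d in dens]


grid, probs = tau_posterior_on_grid(Y, SIGMA)
prob_tau_below_10 = sum(p for t, p in zip(grid, probs) if t < 10.0)
print(prob_tau_below_10)
```

The posterior spreads its mass continuously over τ. Nothing in this calculation places a point mass at τ = 0 or at τ = ∞.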

7.5 Continuous model expansion

Sensitivity analysis

In general, the posterior distribution of the model parameters can either overestimate or underestimate different aspects of ‘true’ posterior uncertainty. The posterior distribution typically overestimates uncertainty in the sense that one does not, in general, include all of one’s substantive knowledge in the model; hence the utility of checking the model against one’s substantive knowledge. On the other hand, the posterior distribution underestimates uncertainty in two senses: first, the assumed model is almost certainly wrong—hence the need for posterior model checking against the observed data—and second, other reasonable models could have fit the observed data equally well, hence the need for sensitivity analysis. We have already addressed model checking. In this section, we consider the uncertainty in posterior inferences due to the existence of reasonable alternative models and discuss how to expand the model to account for this uncertainty. Alternative models can differ in the specification of the prior distribution, in the specification of the likelihood, or both. Model checking and sensitivity analysis go together: when conducting sensitivity analysis, it is only necessary to consider models that fit substantive knowledge and observed data in relevant ways.

The basic method of sensitivity analysis is to fit several probability models to the same problem. It is often possible to avoid surprises in sensitivity analyses by replacing improper prior distributions with proper distributions that represent substantive prior knowledge. In addition, different questions are differently affected by model changes. Naturally posterior inferences concerning medians of posterior distributions are generally less sensitive to changes in the model than inferences about means or extreme quantiles. Similarly, predictive inferences about quantities that are most like the observed data are most reliable; for example, in a regression model, interpolation is typically less sensitive to linearity assumptions than extrapolation. It is sometimes possible to perform a sensitivity analysis by using ‘robust’ models, which ensure that unusual observations (or larger units of analysis in a hierarchical model) do not exert an undue influence on inferences. The typical example is the use of the t distribution in place of the normal (either for the sampling or the population distribution). Such models can be useful but require more computational effort. We consider robust models in Chapter 17.
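Fitting several probability models to the same problem can be as simple as changing the sampling distribution and comparing the resulting inferences. The sketch below is a stand-in for the robust models treated in Chapter 17, not a substitute for them. It uses a grid to compute the posterior median of a location parameter μ under a normal likelihood and under a t likelihood. Several settings are assumptions for illustration: the data (with one outlying value), the known scale of 1, the flat prior on μ, and ν = 4 degrees of freedom.

```python
import math


def grid_posterior_median(log_density, lo, hi, steps):
    """Posterior median from an unnormalized log density evaluated on a grid."""
    grid = [lo + (hi - lo) * k / steps for k in range(steps + 1)]
    logs = [log_density(m) for m in grid]
    top = max(logs)
    weights = [math.exp(lv - top) for lv in logs]
    total = sum(weights)
    cumulative = 0.0
    for m, w in zip(grid, weights):
        cumulative += w / total
        if cumulative >= 0.5:
            return m
    return grid[-1]


def normal_loglik(mu, y, scale=1.0):
    """Normal log-likelihood up to a constant, known scale."""
    return sum(-0.5 * ((yi - mu) / scale) ** 2 for yi in y)


def t_loglik(mu, y, nu=4.0, scale=1.0):
    """Student-t log-likelihood up to a constant, known scale and degrees of freedom."""
    return sum(-0.5 * (nu + 1.0) * math.log1p(((yi - mu) / scale) ** 2 / nu) for yi in y)


y = [-1.0, -0.5, 0.0, 0.3, 0.8, 10.0]  # hypothetical data with one outlier
normal_median = grid_posterior_median(lambda m: normal_loglik(m, y), -5.0, 15.0, 4000)
t_median = grid_posterior_median(lambda m: t_loglik(m, y), -5.0, 15.0, 4000)
print(normal_median, t_median)
```

Under the normal model the outlier pulls the posterior median up to the sample mean, 1.6. Under the t model it stays near the bulk of the data. A large gap like this flags an inference that depends on the tail assumption.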

Adding parameters to a model

There are several possible reasons to expand a model:

1. If the model does not fit the data or prior knowledge in some important way, it should be altered in some way, possibly by adding enough new parameters to allow a better fit.

2. If a modeling assumption is questionable or has no real justification, one can broaden the class of models (for example, replacing a normal by a t, as we do in Section 17.4 for the SAT coaching example).

3. If two different models, p1(y, θ) and p2(y, θ), are under consideration, they can be combined into a larger model using a continuous parameterization that includes the original models as special cases. For example, the hierarchical model for SAT coaching in Chapter 5 is a continuous generalization of the complete-pooling (τ = 0) and no-pooling (τ = ∞) models.
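Item 3 can be made concrete with the hierarchical normal model. Conditional on μ and τ, the posterior mean of θj is a precision-weighted average of yj and μ, so τ = 0 recovers complete pooling and τ → ∞ recovers no pooling. The sketch below uses school A's estimate from Section 5.5 (28, with standard error 15). It fixes μ at a hypothetical value of 8 purely for illustration. In the full analysis, μ and τ have posterior distributions of their own.

```python
import math


def conditional_posterior_mean(y_j, sigma_j, mu, tau):
    """E(theta_j | mu, tau, y) for y_j ~ N(theta_j, sigma_j^2), theta_j ~ N(mu, tau^2).

    tau = 0 gives complete pooling (returns mu); tau = inf gives no pooling (returns y_j).
    """
    if tau == 0.0:
        return mu
    if math.isinf(tau):
        return y_j
    w_data = 1.0 / sigma_j ** 2  # precision of the school's own estimate
    w_pop = 1.0 / tau ** 2       # precision of the population distribution
    return (w_data * y_j + w_pop * mu) / (w_data + w_pop)


mu_hypothetical = 8.0
for tau in (0.0, 5.0, 10.0, 25.0, math.inf):
    print(tau, conditional_posterior_mean(28.0, 15.0, mu_hypothetical, tau))
```

As τ increases, the estimate moves smoothly from μ toward yj. The two discrete models are simply the ends of this continuum.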

4. A model can be expanded to include new data; for example, an experiment previously analyzed on its own can be inserted into a hierarchical population model. Another common example is expanding a regression model of y|x to a multivariate model of (x, y) in order to model missing data in x (see Chapter 18).

All these applications of model expansion have the same mathematical structure: the old model, p(y, θ), is embedded in or replaced by a new model, p(y, θ, φ) or, more generally, p(y, y*, θ, φ), where y* represents the added data.

The joint posterior distribution of the new parameters, φ, and the parameters θ of the old model is

$$p(\theta, \phi \mid y, y^*) \propto p(\phi)\, p(\theta \mid \phi)\, p(y, y^* \mid \theta, \phi).$$

The conditional prior distribution, p(θ|φ), and the likelihood, p(y, y*|θ, φ), are determined by the expanded family. The marginal distribution of φ is obtained by averaging over θ:

$$p(\phi \mid y, y^*) \propto p(\phi) \int p(\theta \mid \phi)\, p(y, y^* \mid \theta, \phi)\, d\theta.$$
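When the integral over θ has no closed form, it can be approximated numerically for each value of φ. The sketch below does this with the trapezoidal rule for a toy expansion chosen so that the answer can be checked. The toy model is an assumption for illustration: θ|φ ~ N(0, φ²), a single observation y|θ ~ N(θ, 1), and no added data y*. For this model the exact marginal likelihood is N(y|0, 1 + φ²).

```python
import math


def normal_pdf(x, mean, sd):
    """Density of N(mean, sd^2) at x."""
    return math.exp(-0.5 * ((x - mean) / sd) ** 2) / (sd * math.sqrt(2.0 * math.pi))


def marginal_likelihood_given_phi(y, phi, lo=-50.0, hi=50.0, steps=20000):
    """Trapezoidal approximation of the integral of p(theta | phi) p(y | theta) over theta.

    Toy model (assumption): theta | phi ~ N(0, phi^2), y | theta ~ N(theta, 1).
    The grid spacing must be small relative to phi for the rule to be accurate.
    """
    h = (hi - lo) / steps
    total = 0.0
    for k in range(steps + 1):
        theta = lo + k * h
        f = normal_pdf(theta, 0.0, phi) * normal_pdf(y, theta, 1.0)
        total += f if 0 < k < steps else 0.5 * f
    return total * h


def unnormalized_marginal_posterior_phi(y, phi, prior_phi):
    """p(phi) times the integral over theta, i.e. p(phi | y) up to a constant."""
    return prior_phi(phi) * marginal_likelihood_given_phi(y, phi)


numeric = marginal_likelihood_given_phi(1.5, 2.0)
exact = normal_pdf(1.5, 0.0, math.sqrt(1.0 + 2.0 ** 2))
print(numeric, exact)
```

Evaluating unnormalized_marginal_posterior_phi on a grid of φ values and normalizing gives a grid approximation to p(φ|y). The 8 schools calculation of p(τ|y) has the same structure, with θ and μ integrated out analytically rather than numerically.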

In any expansion of a Bayesian model, one must specify a set of prior distributions, p(θ|φ), to replace the old p(θ), and also a hyperprior distribution p(