Chapter Four. Algorithms and Data Structures

This chapter presents fundamental data types that are essential building blocks for a broad variety of applications. We present full implementations, even though some of them are built into Python, so that you can have a clear idea of how they work and why they are important.

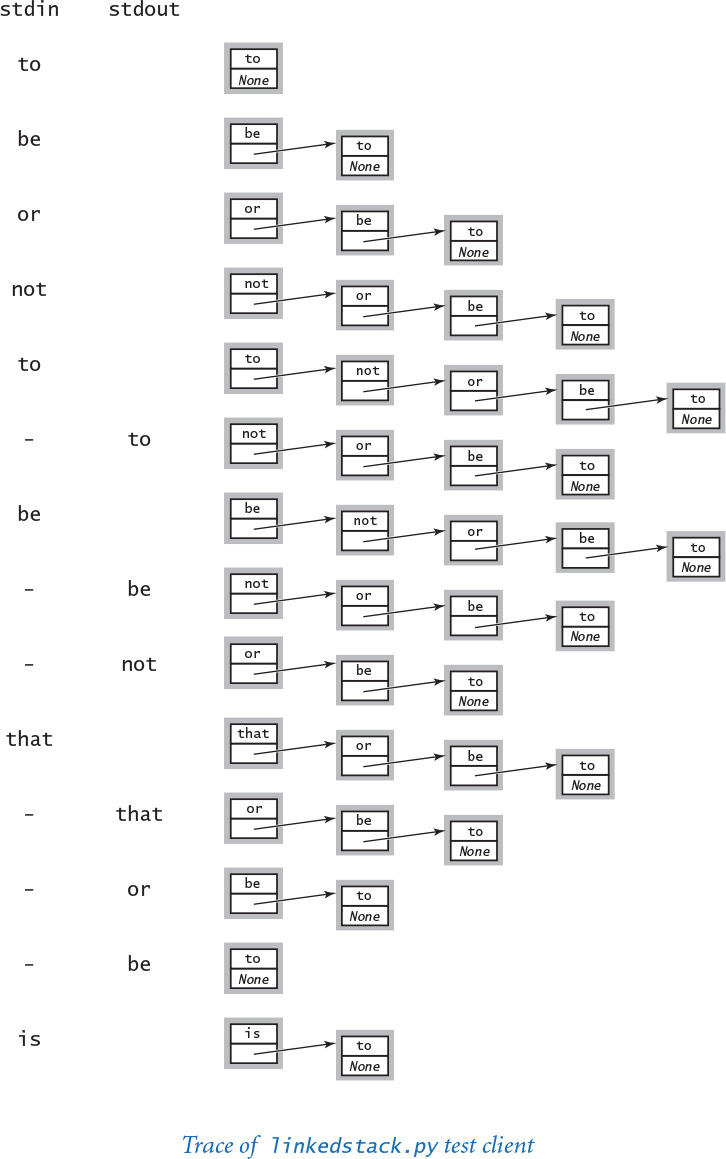

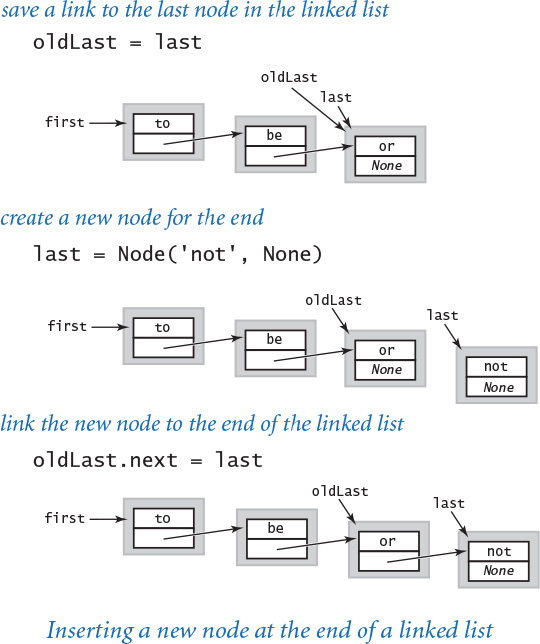

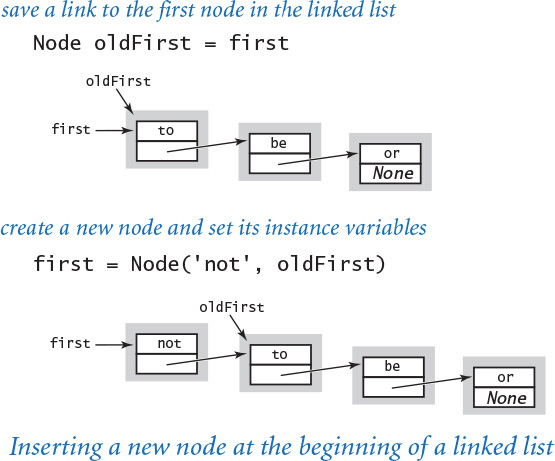

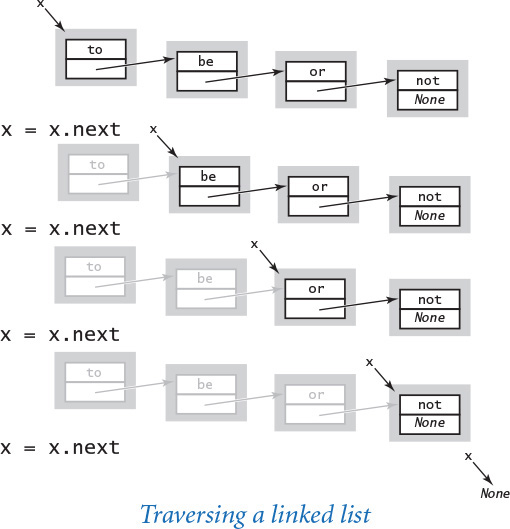

Objects can contain references to other objects, so we can build structures known as linked structures, which can be arbitrarily complex. With linked structures and arrays, we can build data structures to organize information in such a way that we can efficiently process it with associated algorithms. In a data type, we use the set of values to build data structures and the methods that operate on those values to implement algorithms.

The algorithms and data structures that we consider in this chapter introduce a body of knowledge developed over the past several decades that constitutes the basis for the efficient use of computers for a broad variety of applications. From n-body simulation problems in physics to genetic sequencing problems in bioinformatics, the basic approaches we describe have become essential in scientific research; from database systems to search engines, these methods are the foundation of commercial computing. As the scope of computing applications continues to expand, so grows the impact of these basic approaches.

Algorithms and data structures themselves are valid subjects of scientific inquiry. Accordingly, we begin by describing a scientific approach for analyzing the performance of algorithms, which we use throughout the chapter to study the performance characteristics of our implementations.

4.1 Performance

In this section, you will learn to respect a principle that is succinctly expressed in yet another mantra that should live with you whenever you program: Pay attention to the cost. If you become an engineer, that will be your job; if you become a biologist or a physicist, the cost will dictate which scientific problems you can address; if you are in business or become an economist, this principle needs no defense; and if you become a software developer, the cost will dictate whether the software that you build will be useful to your clients.

To study the cost of running our programs, we study the programs themselves via the scientific method, the commonly accepted body of techniques that scientists use to develop knowledge about the natural world. We also apply mathematical analysis to derive concise models of the cost.

Which features of the natural world are we studying? In most situations, we are interested in one fundamental characteristic: time. Whenever we run a program, we are performing an experiment involving the natural world, putting a complex system of electronic circuitry through a series of state changes involving a huge number of discrete events that we are confident will eventually stabilize to a state with results that we want to interpret. Although developed in the abstract world of Python programming, these events most definitely are happening in the natural world. What will be the elapsed time until we see the result? It makes a great deal of difference to us whether that time is a millisecond, a second, a day, or a week. Therefore, we want to learn, through the scientific method, how to properly control the situation, just as when we launch a rocket, build a bridge, or smash an atom.

On the one hand, modern programs and programming environments are complex; on the other hand, they are developed from a simple (but powerful) set of abstractions. It is a small miracle that a program produces the same result each time we run it. To predict the time required, we take advantage of the relative simplicity of the supporting infrastructure that we use to build programs. You may be surprised at the ease with which you can develop cost estimates and predict the performance characteristics of many of the programs that you compose. Briefly summarized, the scientific method consists of the following five steps:

• Observe some feature of the natural world.

• Hypothesize a model that is consistent with the observations.

• Predict events using the hypothesis.

• Verify the predictions by making further observations.

• Validate by repeating until the hypothesis and observations agree.

One of the key tenets of the scientific method is that the experiments we design must be reproducible, so that others can convince themselves of the validity of the hypothesis. In addition, the hypotheses we formulate must be falsifiable—we have the possibility of knowing for sure when a hypothesis is wrong (and thus needs revision).

Observations

Our first challenge is to make quantitative measurements of the running time of our programs. Although measuring the exact running time of our program is difficult, usually we are happy with estimates. There are a number of tools available to help us obtain such approximations. Perhaps the simplest is a physical stopwatch or the Stopwatch data type (see PROGRAM 3.2.2). We can simply run a program on various inputs, measuring the amount of time to process each input.
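The Stopwatch data type itself is defined in PROGRAM 3.2.2 and is not reproduced here. As a rough stand-in, here is a minimal sketch of a class with the same elapsedTime() method, assuming only the standard time.perf_counter() function; the book's own implementation may differ, and the workload function busy_loop() is hypothetical, chosen only to have something to time.

```python
import time


class Stopwatch:
    """Minimal stand-in for a stopwatch data type (a sketch, not PROGRAM 3.2.2)."""

    def __init__(self):
        # Record the moment the stopwatch is created.
        self._start = time.perf_counter()

    def elapsedTime(self):
        # Seconds elapsed since creation, as a float.
        return time.perf_counter() - self._start


def busy_loop(n):
    # Hypothetical workload: sum the integers 0..n-1 with an explicit loop.
    total = 0
    for i in range(n):
        total += i
    return total


if __name__ == '__main__':
    # Run the workload on several problem sizes and write each running time.
    for n in [100000, 200000, 400000]:
        watch = Stopwatch()
        busy_loop(n)
        print(n, watch.elapsedTime())
```

Measured times vary from run to run and machine to machine, which is why the rest of this section concentrates on ratios of running times rather than on absolute values.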

Our first qualitative observation about most programs is that there is a problem size that characterizes the difficulty of the computational task. Normally, the problem size is either the size of the input or the value of a command-line argument. Intuitively, the running time should increase with the problem size, but the question of how much it increases naturally arises every time we develop and run a program.

Another qualitative observation for many programs is that the running time is relatively insensitive to the input itself; it depends primarily on the problem size. If this relationship does not hold, we need to run more experiments to better understand, and perhaps better control, the running time’s sensitivity to the input. Since this relationship does often hold, we focus now on the goal of better quantifying the correspondence between problem size and running time.

As a concrete example, we start with threesum.py (PROGRAM 4.1.1), which counts the number of triples in an array of n numbers that sum to 0. This computation may seem contrived to you, but it is deeply related to numerous fundamental computational tasks, particularly those found in computational geometry, so it is a problem worthy of careful study. What is the relationship between the problem size n and the running time for threesum.py?

Hypotheses

In the early days of computer science, Donald Knuth showed that, despite all of the complicating factors in understanding the running times of our programs, it is possible in principle to create accurate models that can help us predict precisely how long a particular program will take. Proper analysis of this sort involves:

• Detailed understanding of the program

• Detailed understanding of the system and the computer

• Advanced tools of mathematical analysis

Thus it is best left for experts. Every programmer, however, needs to know how to make back-of-the-envelope performance estimates. Fortunately, we can often acquire such knowledge by using a combination of empirical observations and a small set of mathematical tools.

Doubling hypotheses

For a great many programs, we can quickly formulate a hypothesis for the following question: What is the effect on the running time of doubling the size of the input? For clarity, we refer to this hypothesis as a doubling hypothesis. Perhaps the easiest way to pay attention to the cost is to ask yourself this question about your programs during development and also as you use them in practical applications. Next, we describe how to develop answers via the scientific method.

Empirical analysis

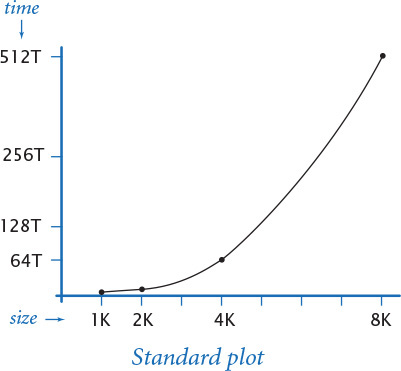

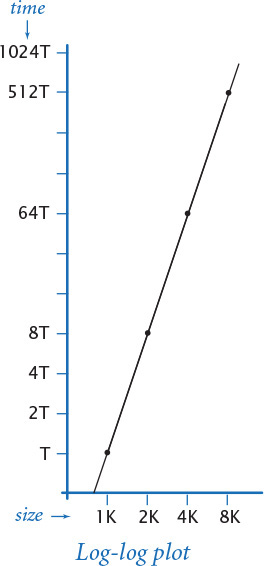

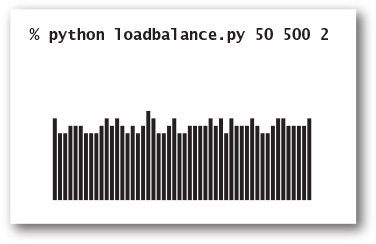

We can get a head start on developing a doubling hypothesis by doubling the size of the input and observing the effect on the running time. For example, doublingtest.py (PROGRAM 4.1.2) generates a sequence of random input arrays for threesum.py, doubling the array length at each step, and writes the ratio of the running time of threesum.countTriples() for each input to the running time for the previous input (which was one-half the size). If you run this program, you will find yourself caught in a prediction–verification cycle: it writes several lines very quickly, but then begins to slow down. Each time it writes a line, you find yourself wondering how long it will be until it writes the next line. Checking a stopwatch as the program runs, you can easily predict that the elapsed time increases by about a factor of 8 to write each line. This prediction is verified by the Stopwatch measurements that the program writes, and it leads immediately to the hypothesis that the running time increases by a factor of 8 when the input size doubles. We might also plot the running times, either on a standard plot (left), which shows that the rate of increase of the running time increases with input size, or on a log-log plot. In the case of threesum.py, the log-log plot (below) is a straight line with slope 3, which clearly suggests the hypothesis that the running time satisfies a power law of the form $cn^3$ (see EXERCISE 4.1.29).
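If the running time satisfies a power law $T(n) \sim cn^b$, then on a log-log plot the exponent b is the slope of the line, and two measurements are enough to estimate it. The sketch below uses a hypothetical helper, estimateExponent(), and feeds it the doubling ratios that doublingtest.py writes in PROGRAM 4.1.2; since only the ratio of the two times matters, it treats the earlier time as 1.

```python
import math


def estimateExponent(n1, t1, n2, t2):
    # Slope of the line through (lg n1, lg t1) and (lg n2, lg t2) on a log-log plot.
    return math.log2(t2 / t1) / math.log2(n2 / n1)


# Doubling ratios reported by doublingtest.py (PROGRAM 4.1.2).
for n, ratio in [(512, 8.09), (1024, 8.07), (2048, 7.97)]:
    # Treat T(n // 2) as 1 and T(n) as ratio; the constant c cancels.
    b = estimateExponent(n // 2, 1.0, n, ratio)
    print(n, round(b, 2))
```

Each estimate comes out close to 3, consistent with the hypothesis that the running time of threesum.py is proportional to $n^3$.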

Program 4.1.1 3-sum problem (threesum.py)

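```python
import stdarray
import stdio

def writeTriples(a):
    # See Exercise 4.1.1. One possible solution: write each triple
    # that sums to 0, one triple per line.
    n = len(a)
    for i in range(n):
        for j in range(i+1, n):
            for k in range(j+1, n):
                if (a[i] + a[j] + a[k]) == 0:
                    stdio.writeln(str(a[i]) + ' ' + str(a[j]) + ' ' + str(a[k]))

def countTriples(a):
    n = len(a)
    count = 0
    for i in range(n):
        for j in range(i+1, n):
            for k in range(j+1, n):
                if (a[i] + a[j] + a[k]) == 0:
                    count += 1
    return count

def main():
    a = stdarray.readInt1D()
    count = countTriples(a)
    stdio.writeln(count)
    if count < 10:
        writeTriples(a)

if __name__ == '__main__': main()
```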

a[] | array of integers

n | length of a[]

count | number of triples that sum to 0

This program reads an array of integers from standard input and writes to standard output the number of triples in the array that sum to 0. If that number is less than 10, it also writes the triples. The file 1000ints.txt contains 1,000 random 32-bit integers (between $-2^{31}$ and $2^{31} - 1$). Such a file is not likely to have such a triple (see EXERCISE 4.1.27).

```
% more 8ints.txt
8
30
-30
-20
-10
40
0
10
5

% python threesum.py < 8ints.txt
4
30 -30 0
30 -20 -10
-30 -10 40
-10 0 10

% python threesum.py < 1000ints.txt
0
```

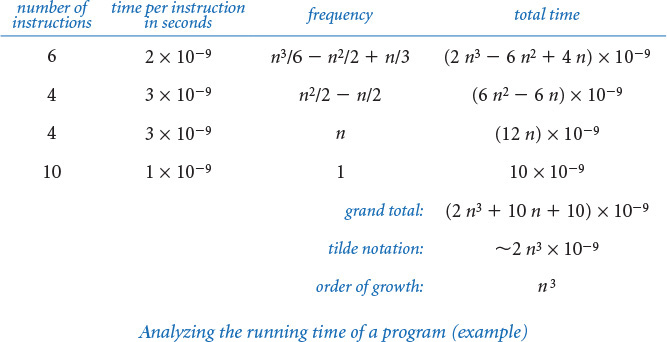

Mathematical analysis

Knuth’s basic insight on building a mathematical model to describe the running time of a program is simple: the total running time is determined by two primary factors:

• The cost of executing each statement

• The frequency of execution of each statement

The former is a property of the system, and the latter is a property of the algorithm. If we know both for all instructions in the program, we can multiply them together and sum for all instructions in the program to get the running time.

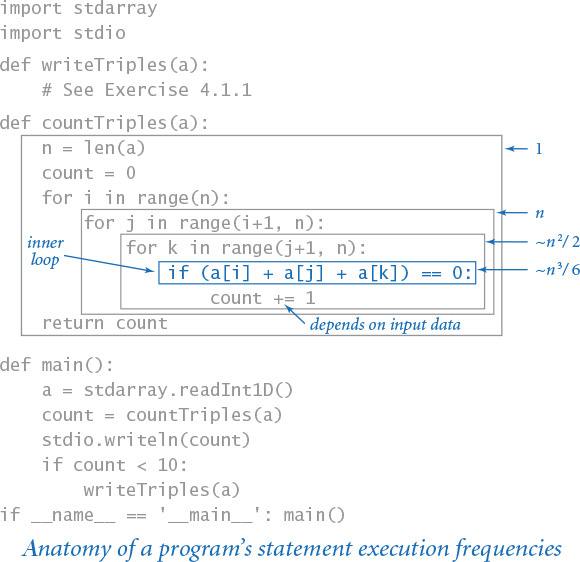

The primary challenge is to determine the frequency of execution of the statements. Some statements are easy to analyze; for example, the statement that initializes count to 0 in threesum.countTriples() is executed only once. Others require higher-level reasoning; for example, the if statement in threesum.countTriples() is executed precisely $n(n-1)(n-2)/6$ times (which is precisely the number of ways to pick three different numbers from the input array; see EXERCISE 4.1.5).
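The frequency claim is easy to check by brute force. This sketch, with a hypothetical function name countInnerLoop(), reproduces only the loop structure of countTriples() (no array is needed) and counts how many times the if statement would be reached.

```python
def countInnerLoop(n):
    # Same three nested loops as countTriples(); count executions of the
    # innermost statement instead of testing array elements.
    executions = 0
    for i in range(n):
        for j in range(i + 1, n):
            for k in range(j + 1, n):
                executions += 1
    return executions


for n in [3, 10, 50, 100]:
    # Measured frequency next to the closed form n(n-1)(n-2)/6.
    print(n, countInnerLoop(n), n * (n - 1) * (n - 2) // 6)
```

The two columns agree for every n, as expected from counting the ways to choose three distinct indices.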

Frequency analyses of this sort can lead to complicated and lengthy mathematical expressions. To substantially simplify matters in the mathematical analysis, we develop simpler approximate expressions in two ways. First, we work with the leading term of mathematical expressions by using a mathematical device known as the tilde notation. We write ~ f(n) to represent any quantity that, when divided by f(n), approaches 1 as n grows. We also write g(n) ~ f(n) to indicate that g(n) / f(n) approaches 1 as n grows. With this notation, we can ignore complicated parts of an expression that represent small values. For example, the if statement in threesum.py is executed $\sim n^3/6$ times because $n(n-1)(n-2)/6 = n^3/6 - n^2/2 + n/3$, which, when divided by $n^3/6$, approaches 1 as n grows. This notation is useful when the terms after the leading term are relatively insignificant (for example, when n = 1,000, this assumption amounts to saying that $-n^2/2 + n/3 \approx -499{,}667$ is relatively insignificant by comparison with $n^3/6 \approx 166{,}666{,}667$, which it is). Second, we focus on the instructions that are executed most frequently, sometimes referred to as the inner loop of the program. In this program it is reasonable to assume that the time devoted to the instructions outside the inner loop is relatively insignificant.
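A few lines of arithmetic make the tilde approximation concrete. The sketch below (tildeRatio() is a hypothetical helper) divides the exact frequency by its leading term; algebraically the ratio equals $1 - 3/n + 2/n^2$, so it approaches 1 as n grows.

```python
def tildeRatio(n):
    # Exact frequency of the if statement divided by its leading term n^3/6.
    exact = n * (n - 1) * (n - 2) / 6
    leading = n ** 3 / 6
    return exact / leading


for n in [10, 100, 1000, 10000]:
    print(n, tildeRatio(n))

# The lower-order terms at n = 1,000, compared with the leading term.
n = 1000
print(round(-n ** 2 / 2 + n / 3), round(n ** 3 / 6))
```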

Program 4.1.2 Validating a doubling hypothesis (doublingtest.py)

```python
import sys
import stdarray
import stdio
import stdrandom
import threesum
from stopwatch import Stopwatch

# Time to solve a random three-sum instance of size n.
def timeTrial(n):
    a = stdarray.create1D(n, 0)
    for i in range(n):
        a[i] = stdrandom.uniformInt(-1000000, 1000000)
    watch = Stopwatch()
    count = threesum.countTriples(a)
    return watch.elapsedTime()

n = int(sys.argv[1])
while True:
    previous = timeTrial(n // 2)
    current = timeTrial(n)
    ratio = current / previous
    stdio.writef('%7d %4.2f\n', n, ratio)
    n *= 2
```

n | problem size

a[] | random integers

watch | stopwatch

n | problem size

previous | running time for n // 2

current | running time for n

ratio | ratio of running times

This program writes to standard output a table of doubling ratios for the three-sum problem. The table shows how doubling the problem size affects the running time of the function call threesum.countTriples() for problem sizes that double for each row of the table. These experiments lead to the hypothesis that the running time increases by a factor of 8 when the input size doubles. When you run the program, note carefully that the elapsed time increases by a factor of 8 for each line written, validating the hypothesis.

```
% python doublingtest.py 256
    256 7.52
    512 8.09
   1024 8.07
   2048 7.97
...
```

The key point in analyzing the running time of a program is this: for a great many programs, the running time satisfies the relationship

T(n) ∼ c f(n)

where c is a constant and f(n) is a function known as the order of growth of the running time. For typical programs, f(n) is a function such as log n, n, n log n, $n^2$, or $n^3$, as you will soon see (customarily, we express order-of-growth functions without any constant coefficient). When f(n) is a power of n, as is often the case, this assumption is equivalent to saying that the running time satisfies a power law. In the case of threesum.py, it is a hypothesis already verified by our empirical observations: the order of growth of the running time of threesum.py is $n^3$. The value of the constant c depends both on the cost of executing instructions and on details of the frequency analysis, but we normally do not need to work out the value, as you will now see.

The order of growth is a simple but powerful model of running time. For example, knowing the order of growth typically leads immediately to a doubling hypothesis. In the case of threesum.py, knowing that the order of growth is $n^3$ tells us to expect the running time to increase by a factor of 8 when we double the size of the problem because

$$\frac{T(2n)}{T(n)} \to \frac{c\,(2n)^3}{c\,n^3} = 8$$

This matches the value resulting from the empirical analysis, thus validating both the model and the experiments. Study this example carefully, because you can use the same method to better understand the performance of any program that you compose.
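The same cancellation works for any order of growth: the predicted doubling ratio is f(2n)/f(n), and the constant c never enters. A small sketch, with hypothetical names ORDERS and doublingRatio(), tabulates this prediction for the functions listed above at n = 1,024.

```python
import math

# Order-of-growth functions from the text (constant coefficients omitted).
ORDERS = {
    'log n': lambda n: math.log2(n),
    'n': lambda n: n,
    'n log n': lambda n: n * math.log2(n),
    'n^2': lambda n: n ** 2,
    'n^3': lambda n: n ** 3,
}


def doublingRatio(f, n):
    # Predicted T(2n)/T(n) when T(n) ~ c f(n); the constant c cancels.
    return f(2 * n) / f(n)


for name, f in ORDERS.items():
    print('%-8s %6.3f' % (name, doublingRatio(f, 1024)))
```

For powers of n the prediction does not depend on n at all (4 for quadratic, 8 for cubic); for log n and n log n it depends slightly on n and slowly approaches 1 and 2, respectively.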

Knuth showed that it is possible to develop an accurate mathematical model of the running time of any program, and many experts have devoted much effort to developing such models. But you do not need such a detailed model to understand the performance of your programs: it is typically safe to ignore the cost of the instructions outside the inner loop (because that cost is negligible by comparison to the cost of the instructions in the inner loop) and not necessary to know the value of the constant in the running-time approximation (because it cancels out when you use a doubling hypothesis to make predictions).

These approximations are significant because they relate the abstract world of a Python program to the real world of a computer running it. The approximations are such that characteristics of the particular machine that you are using do not play a significant role in the models—the analysis separates the algorithm from the system. The fact that the order of growth of the running time of threesum.py is $n^3$ does not depend on whether it is implemented in Python or whether it is running on your laptop, someone else’s mobile phone, or a supercomputer; rather, it depends primarily on the fact that it examines all the triples. The properties of the computer and the system are all summarized in various assumptions about the relationship between program statements and machine instructions, and in the actual running times that we observe as the basis for the doubling hypothesis. The algorithm that you are using determines the order of growth. This separation is a powerful concept because it allows us to develop knowledge about the performance of algorithms and then apply that knowledge to any computer. In fact, much of the knowledge about the performance of classic algorithms was developed decades ago, but that knowledge is still relevant to today’s computers.

Empirical and mathematical analyses like those we have described constitute a model (an explanation of what is going on) that might be formalized by listing all of the assumptions mentioned (each instruction takes the same amount of time each time it is executed, running time has the given form, and so forth). Not many programs are worthy of a detailed model, but you need to have an idea of the running time that you might expect for every program that you compose. Pay attention to the cost. Formulating a doubling hypothesis—through empirical studies, mathematical analysis, or (preferably) both—is a good way to start. This information about performance is extremely useful, and you will soon find yourself formulating and validating hypotheses every time you run a program. Indeed, doing so is a good use of your time while you wait for your program to finish!

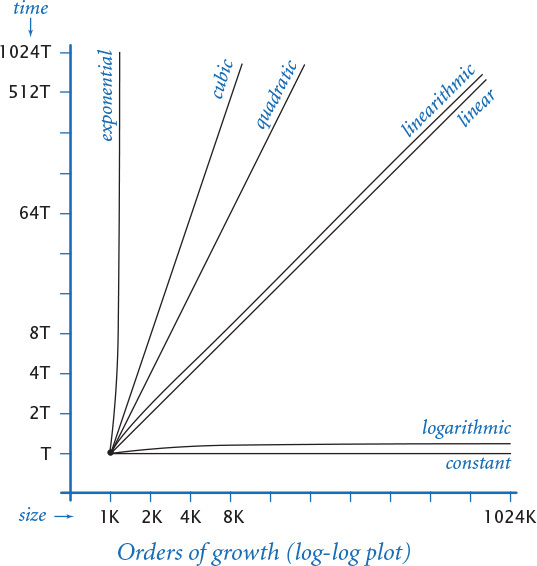

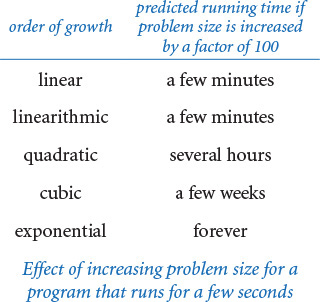

Order of growth classifications

We use just a few structural primitives (statements, conditionals, loops, and function calls) to build Python programs, so very often the order of growth of our programs is one of just a few functions of the problem size, summarized in the table on the next page. These functions immediately lead to a doubling hypothesis, which we can verify by running the programs. Indeed, you have been running programs that exhibit these orders of growth, as you can see in the following brief discussions.

Constant

A program whose running time’s order of growth is constant executes a fixed number of statements to finish its job; consequently, its running time does not depend on the problem size. Our first several programs in CHAPTER 1—such as helloworld.py (PROGRAM 1.1.1) and leapyear.py (PROGRAM 1.2.5)—fall into this category: they each execute several statements just once.

All of Python’s operations on standard numeric types take constant time. That is, applying an operation to a large number consumes no more time than does applying it to a small number. (One exception is that operations involving integers with a huge number of digits can consume more than constant time; see the Q&A at the end of this section for details.) The functions in Python’s math module also take constant time. Note that we do not specify the size of the constant. For example, the constant for math.atan2() is somewhat larger than the constant for math.hypot().

Logarithmic

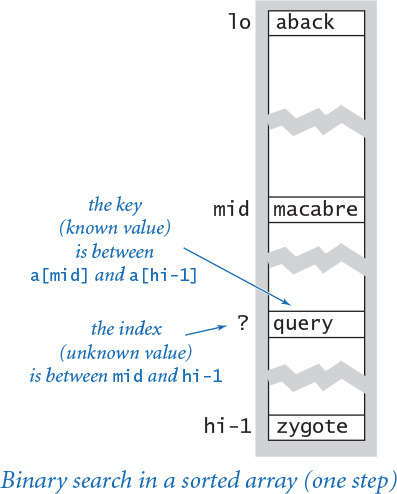

A program whose running time’s order of growth is logarithmic is barely slower than a constant-time program. The classic example of a program whose running time is logarithmic in the problem size is looking for an element in a sorted array, which we consider in the next section (see PROGRAM 4.2.3, binarysearch.py). The base of the logarithm is not relevant with respect to the order of growth (since all logarithms with a constant base are related by a constant factor), so we use log n when referring to the order of growth. Occasionally, we write more precise formulas using lg n (base 2, or binary log) or ln n (base e, or natural logarithm) because both arise naturally when studying computer programs. For example, $\lfloor \lg n \rfloor + 1$ (lg n rounded down, plus 1) is the number of bits in the binary representation of a positive integer n, and ln n arises in the analysis of binary search trees (see SECTION 4.4).
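The bit-count relationship can be checked directly. The sketch below uses a hypothetical helper, floorLg(), that computes $\lfloor \lg n \rfloor$ by repeatedly halving n; that loop itself runs a logarithmic number of times. It compares the result with Python's built-in int.bit_length() method.

```python
def floorLg(n):
    # Number of times n can be halved (integer division) before reaching 1.
    # The loop body runs floor(lg n) times, so the function is logarithmic.
    count = 0
    while n > 1:
        n //= 2
        count += 1
    return count


for n in [1, 7, 8, 1000, 1000000]:
    # floor(lg n) + 1 equals the number of bits in n's binary representation.
    print(n, floorLg(n), floorLg(n) + 1, n.bit_length())
```

Even for n = 1,000,000 the loop runs only 19 times, which illustrates why a logarithmic program is barely slower than a constant-time one.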

Linear

Programs that spend a constant amount of time processing each piece of input data, or that are based on a single for loop, are quite common. The order of growth of such a program is said to be linear—its running time is directly proportional to the problem size. PROGRAM 1.5.3 (average.py), which computes the average of the numbers on standard input, is prototypical, as is our code to shuffle the elements in an array in SECTION 1.4. Filters such as plotfilter.py (PROGRAM 1.5.5) also fall into this category, as do the various image-processing filters that we considered in SECTION 3.2, which perform a constant number of arithmetic operations per input pixel.

Linearithmic

We use the term linearithmic to describe programs whose running time for a problem of size n has order of growth n log n. Again, the base of the logarithm is not relevant. For example, couponcollector.py (PROGRAM 1.4.2) is linearithmic. The prototypical example is mergesort (see PROGRAM 4.2.6). Several important problems have natural solutions that are quadratic but clever algorithms that are linearithmic. Such algorithms (including mergesort) are critically important in practice because they enable us to address problem sizes far larger than could be addressed with quadratic solutions. In the next section, we consider a general design technique for developing linearithmic algorithms.

Quadratic

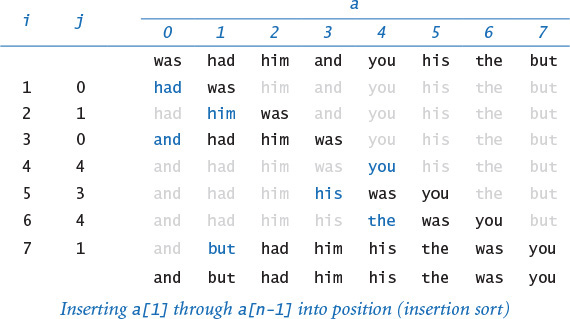

A typical program whose running time has order of growth $n^2$ has two nested for loops, used for some calculation involving all pairs of n elements. The force update double loop in universe.py (PROGRAM 3.4.2) is a prototype of the programs in this classification, as is the elementary sorting algorithm insertion sort (see PROGRAM 4.2.4).

Cubic

Our example for this section, threesum.py, is cubic (its running time has order of growth $n^3$) because it has three nested for loops, to process all triples of n elements. The running time of matrix multiplication, as implemented in SECTION 1.4, has order of growth $m^3$ to multiply two m-by-m matrices, so the basic matrix multiplication algorithm is often considered to be cubic. However, the size of the input (the number of elements in the matrices) is proportional to $n = m^2$, so the algorithm is best classified as $n^{3/2}$, not cubic.
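The distinction between m and n is easy to see by counting operations. This is a sketch of the standard triple-loop algorithm with an added operation counter, not a reproduction of the SECTION 1.4 code; the function name multiply() is hypothetical, and identity matrices are used only as convenient input.

```python
def multiply(a, b):
    # Triple-loop multiplication of two m-by-m matrices (lists of lists).
    # Returns the product and the number of inner-loop multiply-adds.
    m = len(a)
    c = [[0.0] * m for _ in range(m)]
    operations = 0
    for i in range(m):
        for j in range(m):
            for k in range(m):
                c[i][j] += a[i][k] * b[k][j]
                operations += 1
    return c, operations


for m in [10, 20, 40]:
    identity = [[1.0 if i == j else 0.0 for j in range(m)] for i in range(m)]
    _, ops = multiply(identity, identity)
    n = m * m  # input size (elements per matrix) is proportional to m^2
    # ops = m^3 = n^(3/2), so the last column is 1 up to floating-point rounding.
    print(m, n, ops, round(ops / n ** 1.5, 6))
```

Doubling m multiplies the work by 8, but doubling the input size n multiplies it by only $2^{3/2} \approx 2.83$.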

Exponential

As discussed in SECTION 2.3, both towersofhanoi.py (PROGRAM 2.3.2) and beckett.py (PROGRAM 2.3.3) have running times proportional to $2^n$ because they process all subsets of n elements. Generally, we use the term “exponential” to refer to algorithms whose order of growth is $b^n$ for any constant b > 1, even though different values of b lead to vastly different running times. Exponential algorithms are extremely slow—you should never run one of them for a large problem. They play a critical role in the theory of algorithms because there exists a large class of problems for which it seems that an exponential algorithm is the best possible choice.
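To see exponential growth in numbers, the sketch below counts the moves made by the usual recursive towers-of-Hanoi strategy (move n-1 discs aside, move the largest disc, move the n-1 discs back on top). It is a hypothetical counting function, hanoiMoves(), not a reproduction of towersofhanoi.py; the recurrence gives exactly $2^n - 1$ moves.

```python
def hanoiMoves(n):
    # Moves made by the recursive strategy: two subproblems of size n-1
    # plus one move of the largest disc.
    if n == 0:
        return 0
    return 2 * hanoiMoves(n - 1) + 1


for n in [1, 2, 10, 20, 30]:
    # Each extra disc doubles the work (plus one move).
    print(n, hanoiMoves(n), 2 ** n - 1)
```

At n = 30 the count already exceeds a billion moves, so adding even a few more discs quickly puts the computation out of reach.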

These classifications are the most common, but certainly not a complete set. Indeed, the detailed analysis of algorithms can require the full gamut of mathematical tools that have been developed over the centuries. Understanding the running time of programs such as factors.py (PROGRAM 1.3.9), primesieve.py (PROGRAM 1.4.3), and euclid.py (PROGRAM 2.3.1) requires fundamental results from number theory. Classic algorithms like hashst.py (PROGRAM 4.4.3) and bst.py (PROGRAM 4.4.4) require careful mathematical analysis. The programs sqrt.py (PROGRAM 1.3.6) and markov.py (PROGRAM 1.6.3) are prototypes for numerical computation: their running time depends on the rate of convergence of a computation to a desired numerical result. Monte Carlo simulations such as gambler.py (PROGRAM 1.3.8) and percolation.py (PROGRAM 2.4.6) and variants are of interest precisely because detailed mathematical models for them are not available.

Nevertheless, a great many of the programs that you will compose have straightforward performance characteristics that can be described accurately by one of the orders of growth that we have considered, as documented in the table on the preceding page. Accordingly, we can usually work with simple higher-level hypotheses, such as the order of growth of the running time of mergesort is linearithmic. For economy, we abbreviate such a statement to just say mergesort is linearithmic. Most of our hypotheses about cost are of this form, or of the form mergesort is faster than insertion sort. Again, a notable feature of such hypotheses is that they are statements about algorithms, not just about programs.

Predictions

You can always try to estimate the running time of a program by simply running it, but that might be a poor way to proceed when the problem size is large. In that case, it is analogous to trying to estimate where a rocket will land by launching it, how destructive a bomb will be by igniting it, or whether a bridge will stand by building it.

Knowing the order of growth of the running time allows us to make decisions about addressing large problems so that we can invest whatever resources we have to deal with the specific problems that we actually need to solve. We typically use the results of verified hypotheses about the order of growth of the running time of programs in one of the following ways.

Estimating the feasibility of solving large problems

To pay attention to the cost, you need to answer this basic question for every program that you compose: Will this program be able to process this input data in a reasonable amount of time? For example, a cubic algorithm that runs in a couple of seconds for a problem of size n will require a few weeks for a problem of size 100n because it will be a million ($100^3$) times slower, and a couple of million seconds is a few weeks. If that is the size of the problem that you need to solve, you have to find a better method. Knowing the order of growth of the running time of an algorithm provides precisely the information that you need to understand limitations on the size of the problems that you can solve. Developing such understanding is the most important reason to study performance. Without it, you are likely to have no idea how much time a program will consume; with it, you can perform a back-of-the-envelope calculation to estimate costs and proceed accordingly.
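The back-of-the-envelope calculation fits in a few lines. This sketch assumes a measured time of 2 seconds (one reading of "a couple of seconds") and a running time of the form $cn^b$; predictTime() is a hypothetical helper.

```python
def predictTime(seconds, sizeFactor, exponent):
    # If T(n) ~ c n^b, then T(k n) / T(n) -> k^b, independent of c.
    return seconds * sizeFactor ** exponent


SECONDS_PER_WEEK = 7 * 24 * 60 * 60  # 604,800

# Assumed measurement: 2 seconds for size n; cubic algorithm; size 100n.
t = predictTime(2.0, 100, 3)
print(t, t / SECONDS_PER_WEEK)
```

The prediction is 2,000,000 seconds, or roughly 3.3 weeks.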

Estimating the value of using a faster computer

To pay attention to the cost, you also may be faced with this basic question: How much faster can I solve a problem if I run it on a faster computer? Again, knowing the order of growth of the running time provides precisely the information that you need. A famous rule of thumb known as Moore’s law implies that you can expect to have a computer with about double the speed and double the memory 18 months from now, or a computer with about 10 times the speed and 10 times the memory in about 5 years. It is natural to think that if you buy a new computer that is 10 times faster and has 10 times more memory than your old one, you can solve a problem 10 times the size, but that is unmistakably not the case for quadratic or cubic algorithms. Whether it is an investment banker running daily financial models or a scientist running a program to analyze experimental data or an engineer running simulations to test a design, it is not unusual for people to regularly run programs that take several hours to complete. Suppose that you are using a program whose running time is cubic, and then buy a new computer that is 10 times faster with 10 times more memory, not just because you need a new computer, but because you face problem sizes that are 10 times larger. The rude awakening is that your program will take 100 times longer than before! This kind of situation is the primary reason that linear and linearithmic algorithms are so valuable: with such an algorithm and a new computer that is 10 times faster with 10 times more memory than the old computer, you can solve a problem that is 10 times larger than could be solved by the old computer in the same amount of time. In other words, you cannot keep pace with Moore’s law if you are using a quadratic or a cubic algorithm.
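The arithmetic behind the "rude awakening" is the same cancellation. Assuming a running time of the form $cn^b$, a problem 10 times larger on a computer 10 times faster takes $10^b / 10$ times as long; slowdown() below is a hypothetical helper that computes this factor.

```python
def slowdown(exponent, sizeFactor=10, speedFactor=10):
    # New time / old time when the problem grows by sizeFactor and the
    # computer becomes speedFactor times faster, assuming T(n) ~ c n^exponent.
    return sizeFactor ** exponent / speedFactor


for name, b in [('linear', 1), ('quadratic', 2), ('cubic', 3)]:
    print(name, slowdown(b))
```

A linear program keeps pace (factor 1), a quadratic one takes 10 times longer, and a cubic one takes 100 times longer. For a linearithmic program the factor is lg(10n)/lg n, which is only slightly more than 1 for large n.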

Comparing programs

We always seek ways to improve our programs, and we can often extend or modify our hypotheses to evaluate the effectiveness of various improvements. With the ability to predict performance, we can make design decisions during development that can guide us toward better, more efficient implementations. In many cases, we can determine the order of growth of the running times and develop accurate hypotheses about comparative performance. The order of growth is extremely useful in this process because it allows us to compare one particular algorithm with whole classes of algorithms. For example, once we have a linearithmic algorithm to solve a problem, we become less interested in quadratic or cubic algorithms to solve the same problem.

Caveats

There are many reasons that you might get inconsistent or misleading results when trying to analyze program performance in detail. All of them have to do with the idea that one or more of the basic assumptions underlying our hypotheses might not be quite correct. We can develop new hypotheses based on new assumptions, but the more details that we take into account, the more care we need in the analysis.

Instruction time

The assumption that each instruction always takes the same amount of time is not always correct. For example, most modern computer systems use a technique known as caching to organize memory, in which case accessing elements in huge arrays can take much longer if they are not close together in the array. You may be able to observe the effect of caching for threesum.py by letting doublingtest.py run for a while. After seeming to converge to 8, the ratio of running times may jump to a larger value for large arrays because of caching.

Nondominant inner loop

The assumption that the inner loop dominates may not always be correct. The problem size n might not be sufficiently large to make the leading term in the mathematical description of the frequency of execution of instructions in the inner loop so much larger than lower-order terms that we can ignore them. Some programs have a significant amount of code outside the inner loop that needs to be taken into consideration.

System considerations

Typically, there are many, many things going on in your computer. Python is just one application of many competing for resources, and Python itself has many options and controls that significantly affect performance. Such considerations can interfere with the bedrock principle of the scientific method that experiments should be reproducible, since what is happening at this moment in your computer will never be reproduced again. Whatever else is going on in your system (that is beyond your control) should in principle be negligible.

Too close to call

Often, when we compare two different programs for the same task, one might be faster in some situations, and slower in others. One or more of the considerations just mentioned could make the difference. Again, there is a natural tendency among some programmers (and some students) to devote an extreme amount of energy running such horseraces to find the “best” implementation, but such work is best left for experts.

Strong dependence on input values

One of the first assumptions that we made to determine the order of growth of a program’s running time was that the running time should be relatively insensitive to the input values. When that is not the case, we may get inconsistent results or be unable to validate our hypotheses. Our running example threesum.py does not have this problem, but we will see several examples in this chapter of programs whose running time does depend on the input values. Often, a prime design goal is to eliminate such dependence on the input values. If we cannot do so, we need to more carefully model the kind of input to be processed in the problems that we need to solve, which may be a significant challenge. For example, if we are composing a program to process a genome, how do we know how it will perform on a different genome? But a good model describing the genomes found in nature is precisely what scientists seek, so estimating the running time of our programs on data found in nature actually makes a valuable contribution to that model!

Multiple problem parameters

We have been focusing on measuring performance as a function of a single parameter n, generally the value of a command-line argument or the size of the input. However, it is not unusual to measure performance using two (or more) parameters. For example, suppose that a[] is an array of length m and b[] is an array of length n. Consider the following code fragment that counts the number of pairs i and j for which a[i] + b[j] equals 0:

```python
count = 0
for i in range(m):
    for j in range(n):
        if a[i] + b[j] == 0:
            count += 1
```

In such cases, we treat the parameters m and n separately, holding one fixed while analyzing the other. For example, the order of growth of the running time of this code fragment is mn.
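To see the mn growth concretely, the following sketch wraps the fragment in a function and also counts how many times the inner-loop test executes. The function name count_pairs and the steps counter are illustrative additions, not part of the original fragment.

```python
def count_pairs(a, b):
    """Return (pairs with a[i] + b[j] == 0, number of inner-loop tests)."""
    m, n = len(a), len(b)
    count = 0
    steps = 0  # how many times the inner-loop test runs
    for i in range(m):
        for j in range(n):
            steps += 1
            if a[i] + b[j] == 0:
                count += 1
    return count, steps

a = list(range(-50, 50))     # m = 100
b = list(range(-100, 100))   # n = 200
print(count_pairs(a, b))         # (100, 20000)
print(count_pairs(a, b + b))     # (200, 40000): m fixed, n doubled
print(count_pairs(a + a, b + b)) # (400, 80000): both doubled
```

Holding m fixed and doubling n doubles the number of inner-loop tests; doubling both parameters quadruples it, as the mn order of growth predicts.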

Despite all these caveats, understanding the order of growth of the running time of each program is valuable knowledge for any programmer, and the methods that we have described are powerful and broadly applicable. Knuth’s insight was that we can carry these methods through to the last detail in principle to make detailed, accurate predictions. Typical computer systems are extremely complex and close analysis is best left for experts, but the same methods are effective for developing approximate estimates of the running time of any program. A rocket scientist needs to have some idea of whether a test flight will land in the ocean or in a city; a medical researcher needs to know whether a drug trial will kill or cure all the subjects; and any scientist or engineer using a computer program needs to have some idea of whether it will run for a second or for a year.

Performance guarantees

For some programs, we demand that the running time of a program be less than a certain bound for any input of a given size. To provide such performance guarantees, theoreticians take an extremely pessimistic view: what would the running time be in the worst case?

For example, such a conservative approach might be appropriate for the software that runs a nuclear reactor or an air traffic control system or the brakes in your car. We must guarantee that such software completes its job within the bounds that we set because the result could be catastrophic if it does not. Scientists normally do not contemplate the worst case when studying the natural world: in biology, the worst case might be the extinction of the human race; in physics, the worst case might be the end of the universe. But the worst case can be a very real concern in computer systems, where the input is generated by another (potentially malicious) user, rather than by nature. For example, websites that do not use algorithms with performance guarantees are subject to denial-of-service attacks, where hackers flood them with pathological requests that make them run much slower than planned.

Performance guarantees are difficult to verify with the scientific method, because we cannot test a hypothesis such as *mergesort is guaranteed to be linearithmic* without trying all possible inputs, which we cannot do because there are far too many of them. We might falsify such a hypothesis by providing inputs for which mergesort is slow, but how can we prove it to be true? We must do so not with experimentation, but rather with mathematical models.

It is the task of the algorithm analyst to discover as much relevant information about an algorithm as possible, and it is the task of the applications programmer to apply that knowledge to develop programs that effectively solve the problems at hand. For example, if you are using a quadratic algorithm to solve a problem but can find an algorithm that is guaranteed to be linearithmic, you will usually prefer the linearithmic one. On rare occasions, you might still prefer the quadratic algorithm because it is faster on the kinds of inputs that you need to solve or because the linearithmic algorithm is too complex to implement.

Ideally, we want algorithms that lead to clear and compact code that provides both a good worst-case guarantee and good performance on inputs of interest. Many of the classic algorithms that we consider in this chapter are of importance for a broad variety of applications precisely because they have all of these properties. Using these algorithms as models, you can develop good solutions yourself for typical problems that you face while programming.

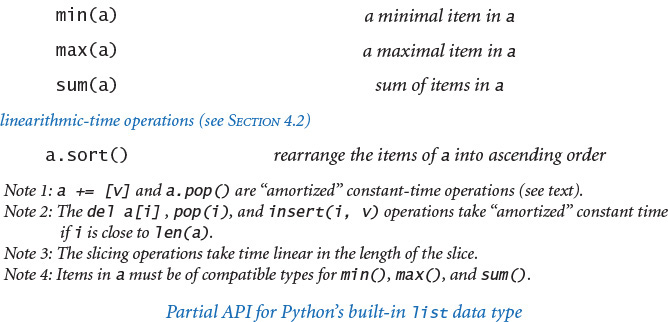

Python lists and arrays

Python’s built-in list data type represents a mutable sequence of objects. We have been using Python lists throughout the book—recall that we use Python lists as arrays because they support the four core array operations: creation, indexed access, indexed assignment, and iteration. However, Python lists are more general than arrays because you can also insert items into and delete items from Python lists. Even though Python programmers typically do not distinguish between lists and arrays, many other programmers do make such a distinction. For example, in many programming languages, arrays are of fixed length and do not support insertions or deletions. Indeed, all of the array-processing code that we have considered in this book so far could have been done using fixed-length arrays.

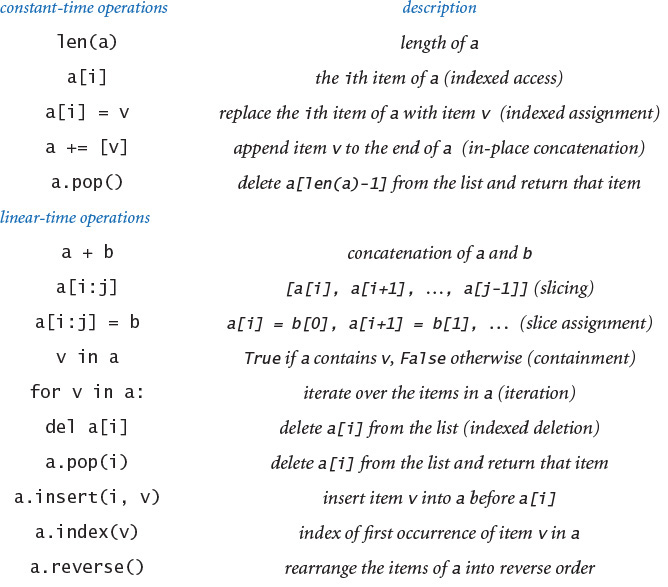

The table on the facing page gives the most commonly used operations for Python lists. Note that several of these operations—indexing, slicing, concatenation, deletion, containment, and iteration—enjoy direct language support in the form of special syntax. As illustrated in the table at right, some of these operations return values while others mutate the invoking list.

We have deferred this API to this section because programmers who use Python lists without following our mantra *pay attention to the cost* are in for trouble. For example, consider these two code snippets:

```python
n = 10000  # problem size (any value)

# Quadratic time: each insert at index 0 shifts every item already in the list.
a = []
for i in range(n):
    a.insert(0, 'slow')

# Linear time: index i is the current end of the list, so nothing shifts.
a = []
for i in range(n):
    a.insert(i, 'fast')
```

The first loop takes quadratic time; the second takes linear time. To understand why Python list operations have the performance characteristics that they do, you need to learn more about Python’s resizing array representation of lists, which we discuss next.

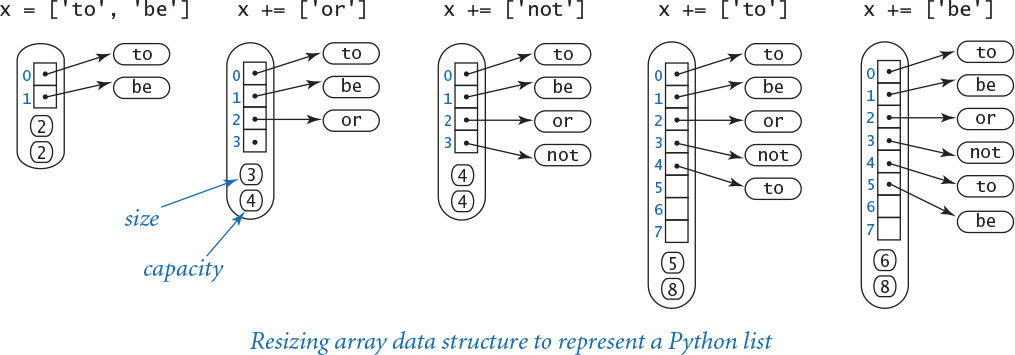

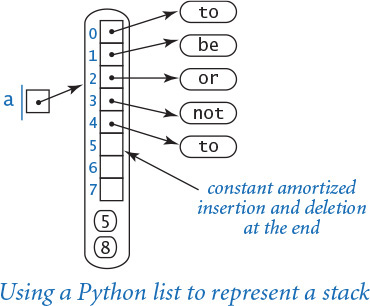

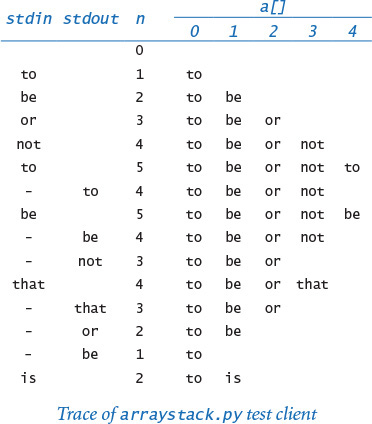

Resizing arrays

A resizing array is a data structure that stores a sequence of items (not necessarily fixed in length), which can be accessed by indexing. To implement a resizing array (at the machine level), Python uses a fixed-length array (allocated as one contiguous block of memory) to store the item references. The array is divided into two logical parts: the first part of the array contains the items in the sequence; the second part of the array is unused and reserved for subsequent insertions. Thus, we can append or remove items from the end in constant time, using the reserved space. We use the term size to refer to the number of items in the data structure and the term capacity to refer to the length of the underlying array.

The main challenge is ensuring that the data structure has sufficient capacity to hold all of the items, but is not so large as to waste an excessive amount of memory. Achieving these two goals turns out to be remarkably easy.

First, if we want to append an item to the end of a resizing array, we check its capacity. If there is room, we simply insert the new item at the end. If not, we double its capacity by creating a new array of twice the length and copying the items from the old array into the new array.

Similarly, if we want to remove an item from the end of the resizing array, we check its capacity. If it is excessively large, we halve its capacity by creating a new array of half the length and copying the items from the old array into the new array. An appropriate test is to check whether the size of the resizing array is less than one-fourth of its capacity. That way, after the capacity is halved, the resizing array is about half full and can accommodate a substantial number of insertions before we have to change its capacity again.

The doubling-and-halving strategy guarantees that the resizing array remains between 25% and 100% full, so that space is linear in the number of items. The specific strategy is not sacrosanct. For example, typical Python implementations expand the capacity by a factor of 9/8 (instead of 2) when the resizing array is full. This wastes less space (but triggers more expansion and shrinking operations).
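The doubling-and-halving strategy can be sketched as a small class. This is an illustration under stated assumptions, not how CPython implements lists: a preallocated Python list (never appended to or popped) stands in for the fixed-length machine-level array, and the class name ResizingArray and its methods are hypothetical.

```python
class ResizingArray:
    """Sketch of a resizing array using doubling and halving.

    A fixed-length Python list stands in for the contiguous array;
    we only index into it and never call its append() or pop().
    """

    def __init__(self):
        self._a = [None]  # underlying array; capacity 1
        self._n = 0       # size: number of items stored

    def __len__(self):
        return self._n

    def capacity(self):
        return len(self._a)

    def __getitem__(self, i):
        if not 0 <= i < self._n:
            raise IndexError('index out of range')
        return self._a[i]

    def _resize(self, capacity):
        # Allocate a new fixed-length array and copy the items into it.
        new = [None] * capacity
        for i in range(self._n):
            new[i] = self._a[i]
        self._a = new

    def append(self, item):
        if self._n == len(self._a):          # full: double the capacity
            self._resize(2 * len(self._a))
        self._a[self._n] = item
        self._n += 1

    def pop(self):
        if self._n == 0:
            raise IndexError('pop from empty array')
        self._n -= 1
        item = self._a[self._n]
        self._a[self._n] = None              # drop the stale reference
        if 0 < self._n < len(self._a) // 4:  # under one-quarter full: halve
            self._resize(len(self._a) // 2)
        return item

ra = ResizingArray()
for i in range(10):
    ra.append(i)
print(len(ra), ra.capacity())  # 10 16
for _ in range(8):
    ra.pop()
print(len(ra), ra.capacity())  # 2 8
```

After the eight removals, the size drops below one-quarter of the capacity at size 3, so the capacity is halved from 16 to 8, leaving the array between 25% and 100% full as described above.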

Amortized analysis

The doubling-and-halving strategy is a judicious tradeoff between wasting space (by setting the capacity to be too large and leaving much of the array unused) and wasting time (either by creating a new array or by reorganizing the existing array). More important, we can prove that the cost of doubling and halving is always absorbed (to within a constant factor) in the cost of other Python list operations.

More precisely, starting from an empty Python list, any sequence of n operations labeled as “constant time” in the API table on page 531 takes time linear in n. In other words, the total cost of any such sequence of Python list operations divided by the number of operations is bounded by a constant. This kind of analysis is known as amortized analysis. This guarantee is not as strong as saying that each operation is constant-time, but it has the same implications in many applications (for example, when our primary interest is in total running time). We leave the full details as an exercise for the mathematically inclined.

For the special case where we perform a sequence of n insertions into an empty resizing array, the idea is simple: each insertion takes constant time to add the item; each insertion that triggers a resizing (when the current size k is a power of 2) takes additional time proportional to k to copy the elements from the old array of length k to a new array of length 2k. Thus, assuming n is a power of 2 for simplicity, the total cost is proportional to

$$\underbrace{(1 + 1 + 1 + \cdots + 1)}_{n \text{ terms}} + (1 + 2 + 4 + 8 + \cdots + n) \sim 3n$$

The first term (which sums to n) accounts for the n insertion operations; the second term (which sums to 2n − 1) accounts for the lg n resizing operations.
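The bound can be checked by simulation. This sketch assumes, as in the description above, that an append doubles the capacity only when it finds the array full, and it charges one unit per array write (the function name is hypothetical). For n a power of 2 this version never pays for a final doubling at size n, so its count comes to 2n − 1, comfortably within the 3n bound.

```python
def total_insertion_cost(n):
    """Count array writes needed to append n items with capacity doubling.

    Each append costs 1 (writing the new item); an append that finds the
    array full first costs `size` more (copying every existing item).
    """
    capacity, size, cost = 1, 0, 0
    for _ in range(n):
        if size == capacity:
            cost += size   # copy size items into a new array of length 2*size
            capacity *= 2
        cost += 1          # write the new item
        size += 1
    return cost

for n in [2**10, 2**15, 2**20, 10**6 + 1]:
    c = total_insertion_cost(n)
    print(n, c, c / n)     # the ratio stays below 3
```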

Understanding resizing arrays is important in Python programming. For example, it explains why creating a Python list of n items by repeatedly appending items to the end takes time proportional to n (and why creating a list of n items by repeatedly prepending items to the front takes time proportional to $n^2$). Such performance pitfalls are precisely the reason that we recommend using narrower interfaces that address performance.

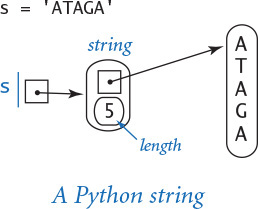

Strings

Python’s string data type has some similarity to Python lists, with one very important exception: strings are immutable. When we introduced strings, we did not emphasize this fact, but it makes lists and strings quite different. You may not have noticed at the time, but you cannot change a character in a string. For example, you might think that you could capitalize a string s having the value 'hello' with s[0] = 'H', but that will result in this run-time error:

TypeError: 'str' object does not support item assignment

If you want 'Hello', you need to create a completely new string. This difference reinforces the idea of immutability and has significant implications with regard to performance, which we now examine.
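A short sketch of the error and of one common way to build the new string (slicing plus concatenation; the built-in s.capitalize() would also work here):

```python
s = 'hello'
try:
    s[0] = 'H'              # strings are immutable: item assignment fails
except TypeError as e:
    print('TypeError:', e)  # TypeError: 'str' object does not support item assignment

t = 'H' + s[1:]             # build a completely new string instead
print(s, t)                 # hello Hello
```

Note that s itself is unchanged; t refers to a different string object.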

Internal representation

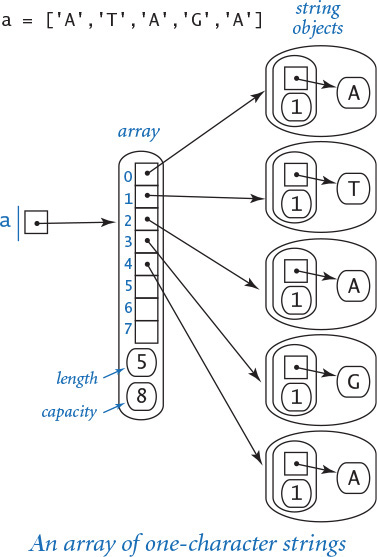

First, Python uses a much simpler internal representation for strings than for lists/arrays, as detailed in the diagram at left. Specifically, a string object contains two pieces of information:

• A reference to a place in memory where the characters in the string are stored contiguously

• The length of the string

By contrast, consider the diagram at right, which is an array of one-character strings. We will consider a more detailed analysis later in this section, but you can see that the string representation is significantly simpler. It uses much less space per character and provides faster access to each character. In many applications, these characteristics are very important because strings can be very long. So, it is important both that memory usage be not much more than what is required for the characters themselves and that characters can be quickly accessed by their index, as in an array.

Performance

As for arrays, indexed access and computing the length of strings are constant-time operations. It is clear from the API at the beginning of SECTION 3.1 that most other operations take linear time as a function of the length of the input string or strings, because they must create a new string. In particular, concatenating a character to a string takes linear time and concatenating two strings takes time proportional to the length of the result. An example is shown at right. With respect to performance, this is the most significant difference between strings and lists/arrays: Python does not have resizable strings, because strings are immutable.

Example

Not understanding the performance of string concatenation often leads to performance bugs. The most common performance bug is building up a long string one character at a time. For example, consider the following code fragment to create a new string whose characters are in reverse order of the characters in a string s:

```python
n = len(s)
reverse = ''
for i in range(n):
    reverse = s[i] + reverse
```

During iteration i of the for loop, the string concatenation operator produces a string of length i+1. Thus, the overall running time is proportional to $1 + 2 + \cdots + n \sim n^2/2$ (see EXERCISE 4.1.4). That is, the code fragment takes quadratic time as a function of the string length n (see EXERCISE 4.1.13 for a linear-time solution).
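One way to avoid the quadratic behavior, sketched here and not necessarily the solution intended by EXERCISE 4.1.13, is to collect the characters in a Python list (where appending takes amortized constant time) and join them into a string once at the end. Both function names are illustrative.

```python
def reverse_quadratic(s):
    # Each concatenation copies the whole partial result: ~n^2/2 character copies.
    reverse = ''
    for i in range(len(s)):
        reverse = s[i] + reverse
    return reverse

def reverse_linear(s):
    # Appending to a list is amortized constant time; join() builds the
    # result string once, in time proportional to its length.
    chars = []
    for i in range(len(s) - 1, -1, -1):
        chars.append(s[i])
    return ''.join(chars)

print(reverse_linear('stressed'))  # desserts
```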

Understanding differences between data types like strings and Python lists is critical in learning any programming language, and programmers must be vigilant to ensure they avoid performance bugs like the one just described. This has been the case since the early days of data abstraction. For example, the string data type in the C language that was developed in the 1970s has a linear-time length function, and countless programmers using the length in a for loop that iterates through the string have found themselves with a quadratic-time performance bug for a simple linear-time job.

Memory

As with running time, a program’s memory usage connects directly to the physical world: a substantial amount of your computer’s circuitry enables your program to store values and later retrieve them. The more values you need to store at any given instant, the more circuitry you need. To pay attention to the cost, you need to be aware of memory usage. You probably are aware of limits on memory usage on your computer (even more so than for time) because you probably have paid extra money to get more memory.

At the outset, you should be aware that the flexibility that we get from Python’s pure approach to object-oriented programming (everything is an object, even boolean values) comes with costs, and one of the most significant costs is the amount of memory consumed. This will become more clear as we examine some specific examples.

Memory usage is well defined for Python on your computer (every value will require precisely the same amount of memory each time that you run your program), but Python is implemented on a very wide range of computational devices, and memory consumption is implementation-dependent. Different versions of Python might implement the same data type in different ways. For economy, we use the word typical to signal values that are particularly subject to machine dependencies. Analyzing memory usage is somewhat different from analyzing time usage, primarily because one of Python’s most significant features is its memory allocation system, which is supposed to relieve you of having to worry about memory. Certainly, you are well advised to take advantage of this feature when appropriate. Still, it is your responsibility to know, at least approximately, when a program’s memory requirements might prevent you from solving a given problem.

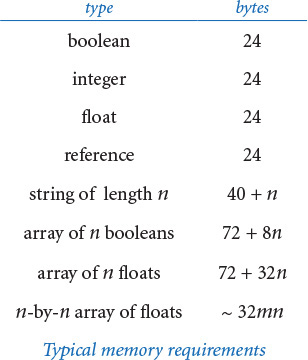

Computer memory is divided into bytes, where each byte consists of 8 bits, and where each bit is a single binary digit. To determine the memory usage of a Python program, we count up the number of objects used by the program and weight them by the number of bytes that they require, according to their type. To use that approach, we must know the number of bytes consumed by an object of any given type. To determine the memory consumption of an object, we add the amount of memory used by each of its instance variables to the overhead associated with each object.

Python does not define the sizes of the built-in data types that we have been using (int, float, bool, str, and list); the sizes of objects of those types differ from system to system. Accordingly, the sizes of data types that you create also will differ from system to system because they are based on these built-in data types. The function call sys.getsizeof(x) returns the number of bytes that a built-in object x consumes on your system. The numbers that we give in this section are observations gathered by using this function in interactive Python on one typical system. We encourage you to do the same on your computer!
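A minimal experiment along these lines prints the size that sys.getsizeof() reports for a few built-in objects. Expect your numbers to differ from the ones quoted in this section, which were gathered on a typical Python 2 system.

```python
import sys

# Sizes are in bytes and depend on the Python version and platform.
samples = [0, 1234, 2**100, 3.14, True, 'abc', 'abcdefghijklmnopqr',
           [], [0.3, 0.6, 0.1]]
for x in samples:
    print(type(x).__name__, repr(x), sys.getsizeof(x))
```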

Python 3 alert

The numbers we give in this section are for a typical Python 2 system. Python 3 uses a more complicated memory model. For example, the memory used by the int object 0 is not the same as the memory used by the int object 1.

Integers

To represent an int object whose value is in the range $-2^{63}$ to $2^{63} - 1$, Python uses 16 bytes for overhead and 8 bytes (that is, 64 bits) for the numeric value. So, for example, Python uses 24 bytes to represent the int object whose value is 0, 24 bytes to represent the int object whose value is 1234, and so forth. In most applications, we are not dealing with huge integers outside this range, so the memory consumption for each integer is 24 bytes. Python switches to a different internal representation for integers outside this range, which consumes memory proportional to the number of digits in the integer, as is the case with strings (see below).

Floats

To represent a float object, Python uses 16 bytes for overhead and 8 bytes for the numeric value (that is, the mantissa, exponent, and sign), no matter what value the object has. So a float object always consumes 24 bytes.

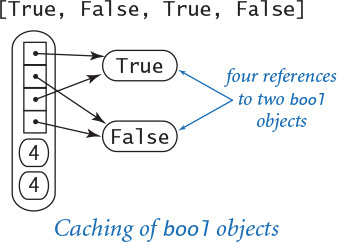

Booleans

In principle, Python could represent a boolean value using a single bit of computer memory. In practice, Python represents boolean values as integers. Specifically, Python uses 24 bytes to represent the bool object True and 24 bytes to represent the bool object False. That is a factor of 192 higher than the minimum amount needed! However, this wastefulness is partially mitigated because Python “caches” the two boolean objects, as we discuss next.

Caching

To save memory, Python creates only one copy of objects with certain values. For example, Python creates only one bool object with value True and only one with value False. That is, every boolean variable holds a reference to one of these two objects. This caching technique is possible because the bool data type is immutable. On typical systems, Python also caches small int values (between –5 and 256), as they are the ones that programmers use most often. Python does not typically cache float objects.
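The is operator tests whether two references point to the same object, so it can reveal caching. In this sketch, the bool results are guaranteed by the language; the int results reflect CPython's small-int cache, an implementation detail that other Python implementations need not share.

```python
# bool values are singletons: every True is the same object.
x = (3 > 2)
y = bool(7)
print(x is True, y is True)  # True True

# Small-int caching is a CPython implementation detail; do not rely on it.
a = int('100')
b = int('100')
print(a is b)                # True on CPython (100 is in the cached range)
c = int('1000')
d = int('1000')
print(c is d)                # typically False on CPython
print(c == d)                # True: always compare values with ==
```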

Strings

To represent a str object, Python uses 40 bytes for overhead (including the string length), plus one byte for each character of the string. So, for example, Python represents the string 'abc' using 40 + 3 = 43 bytes and represents the string 'abcdefghijklmnopqr' using 40 + 18 = 58 bytes. Python typically caches only string literals and one-character strings.

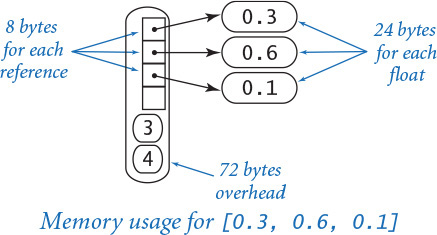

Arrays (Python lists)

To represent an array, Python uses 72 bytes for overhead (including the array length) plus 8 bytes for each object reference (one for each element in the array). So, for example, the Python representation of the array [0.3, 0.6, 0.1] uses 72 + 8*3 = 96 bytes. This does not include the memory for the objects that the array references, so the total memory consumption for the array [0.3, 0.6, 0.1] is 96 + 3*24 = 168 bytes. In general, the memory consumption for an array of n integers or floats is 72 + 32n bytes. This total is likely to be an underestimate, because the resizing array data structure that Python uses to implement arrays may consume an additional n bytes in reserved space.

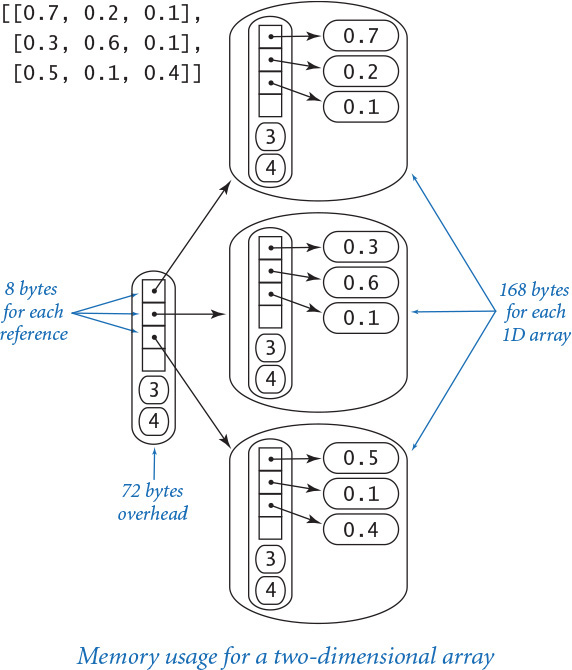

Two-dimensional arrays and arrays of objects

A two-dimensional array is an array of arrays, so we can calculate the memory consumption of a two-dimensional array with m rows and n columns from the information in the previous paragraph. Each row is an array that consumes 72 + 32n bytes, so the total is 72 (overhead) plus 8m (references to the rows) plus m(72 + 32n) (memory for the m rows) bytes, for a grand total of 72 + 80m + 32mn bytes. The same logic works for an array of any type of object: if an object uses x bytes, an array of m such objects consumes a total of 72 + m(x+8) bytes. Again, this is likely to be a slight underestimate because of the resizing array data structure Python uses to represent arrays. Note: Python’s sys.getsizeof(x) is not much help in these calculations because it does not calculate the memory for the objects themselves—it returns 72 + 8m for any array of length m (or any two-dimensional array with m rows).
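Because sys.getsizeof() counts only the list object itself, a rough total must add the sizes of the referenced objects. The following sketch (the function name estimated_size is hypothetical) does this recursively for lists. Like the formulas above, it counts a shared object once per reference, so it is an estimate rather than an exact measurement.

```python
import sys

def estimated_size(x):
    """Rough total bytes for x plus the objects it references (lists only).

    sys.getsizeof() reports the list header plus one reference per slot
    (including reserved slots), so we add the sizes of the items.
    Shared objects are counted once per reference.
    """
    size = sys.getsizeof(x)
    if isinstance(x, list):
        for item in x:
            size += estimated_size(item)
    return size

a = [0.3, 0.6, 0.1]
grid = [[0.0] * 4 for _ in range(3)]  # m = 3 rows, n = 4 columns
print(sys.getsizeof(a), estimated_size(a))
print(sys.getsizeof(grid), estimated_size(grid))
```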

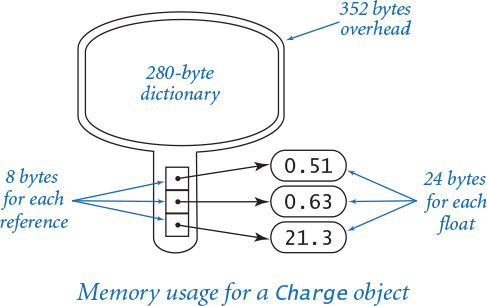

Objects

A key question for Python programming is the following: How much memory is required to represent a user-defined object? The answer to this question may surprise you, but is important to know: hundreds of bytes, at least. Specifically, Python uses 72 bytes of overhead plus 280 bytes for a dictionary that binds instance variables to objects (we will discuss dictionaries in SECTION 4.4) plus 8 bytes for a reference to each instance variable plus memory for the instance variables themselves. For example, to represent a Charge object, Python uses at least 72 + 280 = 352 bytes for overhead, 8 * 3 = 24 bytes to store the object references for the three instance variables, 24 bytes to store the float object referenced by the _rx instance variable, 24 bytes to store the float object referenced by the _ry instance variable, and 24 bytes to store the float object referenced by the _q instance variable, for a grand total of (at least) 448 bytes. The total might be even higher on your system, because some implementations consume even more overhead.
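You can approximate the same accounting on your own system. This sketch uses a minimal stand-in for the Charge type (just a constructor setting _rx, _ry, and _q) and adds the size of the object, its instance-variable dictionary (obtained with vars()), and the three float objects. On recent Python 3 versions the dictionary figure is likely to be smaller than 280 bytes, since instances of a class can share their dictionaries' keys.

```python
import sys

class Charge:
    # Minimal stand-in for the book's Charge type: three instance variables.
    def __init__(self, x0, y0, q0):
        self._rx = x0
        self._ry = y0
        self._q = q0

c = Charge(0.51, 0.63, 21.3)
obj = sys.getsizeof(c)          # the object itself
d = sys.getsizeof(vars(c))      # its instance-variable dictionary
vals = sum(sys.getsizeof(v) for v in vars(c).values())  # the three floats
print(obj, d, vals, obj + d + vals)
```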

These basic mechanisms are effective for estimating the memory usage of a great many programs, but there are numerous complicating factors that can make the task significantly more difficult. For example, we have already noted the potential effect of caching. Moreover, memory consumption is a complicated dynamic process when function calls are involved because the system memory allocation mechanism plays a more important role. For example, when your program calls a function, the system allocates the memory needed for the function (for its local variables) from a special area of memory called the stack, and when the function returns to the caller, this memory is returned to the stack. For this reason, creating arrays or other large objects in recursive functions is dangerous, since each recursive call implies significant memory usage. When you create an object, the system allocates the memory needed for the object from another special area of memory known as the heap. Every object lives until no references to it remain, at which point a system process known as garbage collection reclaims its memory for the heap. Such dynamics can make the task of precisely estimating memory usage of a program challenging.

Despite all these caveats, it is important for every Python programmer to understand that each object of a user-defined type is likely to consume a large amount of memory. So, a Python program that defines a large number of objects of a user-defined type can use much more space (and time) than you might expect. That is especially true if each object contains only a few instance variables—in that case, the ratio of memory devoted to overhead versus memory devoted to instance variables is very high. For example, if you compare the performance of our Complex type with Python’s built-in complex type for mandelbrot.py, you will certainly notice this effect. Numerous object-oriented languages have come and gone since the concept was introduced decades ago, and many of them eventually embraced lightweight objects for user-defined types. Python offers two advanced features for this purpose—named tuples and slots—but we will not take advantage of such memory optimizations in this book.

Perspective

Good performance is important. An impossibly slow program is almost as useless as an incorrect one, so it is certainly worthwhile to pay attention to the cost at the outset, so as to have some idea of what sorts of problems you might feasibly address. In particular, it is always wise to have some idea of which code constitutes the inner loop of your programs.

Perhaps the most common mistake made in programming is to pay too much attention to performance characteristics. Your first priority is to make your code clear and correct. Modifying a program for the sole purpose of speeding it up is best left for experts. Indeed, doing so is often counterproductive, as it tends to create code that is complicated and difficult to understand. C. A. R. Hoare (the inventor of quicksort and a leading proponent of composing clear and correct code) once summarized this idea by saying that “premature optimization is the root of all evil,” to which Knuth added the qualifier “(or at least most of it) in programming.” Beyond that, improving the running time is not worthwhile if the available cost benefits are insignificant. For example, improving the running time of a program by a factor of 10 is inconsequential if the running time is only an instant. Even when a program takes a few minutes to run, the total time required to implement and debug an improved algorithm might be substantially longer than the time required simply to run a slightly slower one—you may as well let the computer do the work. Worse, you might spend a considerable amount of time and effort implementing ideas that do not actually make a program any faster.

Perhaps the second most common mistake made in developing an algorithm is to ignore performance characteristics. Faster algorithms are often more complicated than brute-force solutions, so you might be tempted to accept a slower algorithm to avoid having to deal with more complicated code. However, you can sometimes reap huge savings with just a few lines of good code. Users of a surprising number of computer systems lose substantial time waiting for simple quadratic algorithms to finish solving a problem, even though linear or linearithmic algorithms are available that are only slightly more complicated and could therefore solve the problem in a fraction of the time. When we are dealing with huge problem sizes, we often have no choice but to seek better algorithms.

Improving a program to make it clearer, more efficient, and more elegant should be your goal every time that you work on it. If you pay attention to the cost all the way through the development of a program, you will reap the benefits every time you use it.

Q&A

Q. The text notes that operations on very large integers can consume more than constant time. Can you be more precise?

A. Not really. The definition of “very large” is system dependent. For most practical purposes, you can consider operations applied to 32- or 64-bit integers to work in constant time. Modern applications in cryptography involve huge numbers with hundreds or thousands of digits.

Q. How do I find out how long it takes to add or multiply two floats on my computer?

A. Run some experiments! The program timeops.py on the booksite uses Stopwatch to test the execution time of various arithmetic operations on integers and floats. This technique measures the actual elapsed time as would be observed on a wall clock. If your system is not running many other applications, it can produce accurate results. Python also includes the timeit module for measuring the running time of small code fragments.
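As a minimal sketch with the timeit module (the statements and setup values here are arbitrary choices), timeit.timeit() runs a statement a given number of times and returns the total elapsed time in seconds:

```python
import timeit

# Time one million float additions and one million int multiplications.
n = 1_000_000
t_add = timeit.timeit('x + y', setup='x = 1.5; y = 2.25', number=n)
t_mul = timeit.timeit('i * j', setup='i = 12345; j = 6789', number=n)
print(f'float add: {t_add / n * 1e9:.1f} ns per operation')
print(f'int mul:   {t_mul / n * 1e9:.1f} ns per operation')
```

The per-operation figures include the overhead of the timing loop itself, so treat them as rough upper estimates.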

Q. Is there any way to measure processor time instead of wall clock time?

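A. On some older systems (Python 2, and Python 3 before version 3.8), the function call time.clock() returns the current processor time as a float, expressed in seconds; when it is available, you can use time.clock() in place of time.time() for benchmarking Python programs. In Python 3.3 and later, time.process_time() returns the processor time of the current process, and time.clock() was removed in Python 3.8.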
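The difference between the two measurements shows up when a program waits instead of computing. This sketch (the helper names busy_work and measure are hypothetical) uses time.perf_counter() for wall-clock time and time.process_time() for processor time, both available in Python 3.3 and later.

```python
import time

def busy_work(n):
    # Pure computation: processor time and wall-clock time should be similar.
    total = 0
    for i in range(n):
        total += i * i
    return total

def measure(f, *args):
    """Return (wall-clock seconds, processor seconds) for one call f(*args)."""
    wall0, cpu0 = time.perf_counter(), time.process_time()
    f(*args)
    return time.perf_counter() - wall0, time.process_time() - cpu0

wall, cpu = measure(busy_work, 10**6)
print(f'busy_work: wall {wall:.3f} s, cpu {cpu:.3f} s')
wall, cpu = measure(time.sleep, 0.2)
print(f'sleep:     wall {wall:.3f} s, cpu {cpu:.3f} s')  # cpu stays near 0
```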

Q. How much time do functions such as math.sqrt(), math.log(), and math.sin() take?

A. Run some experiments! Stopwatch makes it easy to compose programs such as timeops.py to answer questions of this sort for yourself. You will be able to use your computer much more effectively if you get in the habit of doing so.

Q. Why does allocating an array (Python list) of size n take time proportional to n?

A. Python initializes all array elements to whatever values the programmer specifies. That is, in Python there is no way to allocate memory for an array without also assigning an object reference to each element of the array. Assigning object references to each element of an array of size n takes time proportional to n.

Q. How do I find out how much memory is available for my Python programs?

A. Since Python will raise a MemoryError when it runs out of memory, it is not difficult to run some experiments. For example, use this program (bigarray.py):

```python
import sys
import stdarray
import stdio

n = int(sys.argv[1])
a = stdarray.create1D(n, 0)
stdio.writeln('finished')
```

and run it like this:

```
% python bigarray.py 100000000
finished
```

to show that you have room for 100 million integers. But if you type

```
% python bigarray.py 1000000000
```

Python will hang, crash, or raise a run-time error; you can conclude that you do not have room for an array of 1 billion integers.

Q. What does it mean when someone says that the worst-case running time of an algorithm is $O(n^2)$?

A. That is an example of a notation known as big-O notation. We write f(n) is O(g(n)) if there exist constants $c$ and $n_0$ such that $f(n) \le c\,g(n)$ for all $n > n_0$. In other words, the function f(n) is bounded above by g(n), up to constant factors and for sufficiently large values of n. For example, the function $30n^2 + 10n + 7$ is $O(n^2)$. We say that the worst-case running time of an algorithm is O(g(n)) if the running time as a function of the input size n is O(g(n)) for all possible inputs. This notation is widely used by theoretical computer scientists to prove theorems about algorithms, so you are sure to see it if you take a course in algorithms and data structures. It provides a worst-case performance guarantee.
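To check the example against the definition, one convenient (but not unique) choice of constants follows from bounding each lower-order term by a multiple of $n^2$, which is valid once $n \ge 1$:

$$30n^2 + 10n + 7 \;\le\; 30n^2 + 10n^2 + 7n^2 \;=\; 47n^2 \qquad \text{for all } n \ge 1,$$

so $c = 47$ and $n_0 = 1$ satisfy $f(n) \le c\,g(n)$ for all $n > n_0$ with $g(n) = n^2$.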

Q. So can I use the fact that the worst-case running time of an algorithm is $O(n^3)$ or $O(n^2)$ to predict performance?

A. No, because the actual running time might be much less. For example, the function $30n^2 + 10n + 7$ is $O(n^2)$, but it is also $O(n^3)$ and $O(n^{10})$ because big-O notation provides only an upper bound on the worst-case running time. Moreover, even if there is some family of inputs for which the running time is proportional to the given function, perhaps these inputs are not encountered in practice. Consequently, you cannot use big-O notation to predict performance. The tilde notation and order-of-growth classifications that we use are more precise than big-O notation because they provide matching upper and lower bounds on the growth of the function. Many programmers incorrectly use big-O notation to indicate matching upper and lower bounds.

Q. How much memory does Python typically use to store a tuple of n items?

A. 56 + 8n bytes, plus whatever memory is needed for the objects themselves. This is a bit less than for arrays because Python can implement a tuple (at the machine level) using an array instead of a resizing array.
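In the spirit of running experiments, here is a minimal sketch that measures tuple sizes with the standard sys.getsizeof() function. It assumes a CPython build; the constant overhead it reports varies with the Python version and platform, so it may not match 56 bytes exactly, but on a 64-bit build the cost per item should be 8 bytes, the size of one object reference. As in the answer above, getsizeof() does not count the objects that the items refer to.

```python
import struct
import sys

def tuple_size(n):
    """Bytes used by a tuple of n items, not counting the items themselves."""
    return sys.getsizeof((0,) * n)

for n in (10, 100, 1000):
    print(n, tuple_size(n))

# The difference for one extra item should equal the size of a reference.
print('per item:', tuple_size(1001) - tuple_size(1000))
print('reference size:', struct.calcsize('P'))
```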

Q. Why does Python use so much memory (280 bytes) to store a dictionary that maps an object's instance variables to their values?

A. In principle, different objects from the same data type can have different instance variables. To support this possibility, Python needs some way to manage an arbitrary number of instance variables for each object, and it uses a dictionary for that purpose. But most Python code does not call for this (and, as a matter of style, we never need it in this book).
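The following sketch illustrates the point with a hypothetical data type Point: two objects of the same type end up with different sets of instance variables, which the built-in vars() function exposes as each object's dictionary.

```python
class Point:
    """A hypothetical data type with two instance variables."""
    def __init__(self, x, y):
        self.x = x
        self.y = y

p = Point(1, 2)
q = Point(3, 4)
q.z = 5            # legal: adds a third instance variable to q only

print(vars(p))     # {'x': 1, 'y': 2}
print(vars(q))     # {'x': 3, 'y': 4, 'z': 5}
```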

Exercises

4.1.1 Implement the function writeAllTriples() for threesum.py, which writes all of the triples that sum to zero.
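One possible sketch for 4.1.1, using a brute-force triple loop over i < j < k. It uses print() in place of the book's stdio module so that it runs with the standard library alone, and the generator zero_triples() is a hypothetical helper introduced here.

```python
def zero_triples(a):
    """Generate each triple (a[i], a[j], a[k]) with i < j < k that sums to zero."""
    n = len(a)
    for i in range(n):
        for j in range(i+1, n):
            for k in range(j+1, n):
                if a[i] + a[j] + a[k] == 0:
                    yield a[i], a[j], a[k]

def writeAllTriples(a):
    """Write all of the triples in a that sum to zero, one per line."""
    for x, y, z in zero_triples(a):
        print(x, y, z)

writeAllTriples([30, -40, -20, -10, 40, 0, 10, 5])
```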

4.1.2 Modify threesum.py to take a command-line argument x and find a triple of numbers on standard input whose sum is closest to x.

4.1.3 Compose a program foursum.py that reads an integer n from standard input, then reads n integers from standard input, and counts the number of distinct 4-tuples that sum to zero. Use a quadruply nested loop. What is the order of growth of the running time of your program? Estimate the largest n that your program can handle in an hour. Then, run your program to validate your hypothesis.

4.1.4 Prove that 1 + 2 + ... + n = n(n+1)/2.

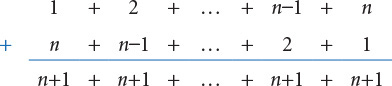

Solution: We proved this by induction at the beginning of SECTION 2.3. Here is the basis for another proof:

4.1.5 Prove by induction that the number of distinct triples of integers between 0 and n–1 is $n(n-1)(n-2)/6$.

Solution: The formula is correct for n = 2 (there are no triples, and the formula gives 0). For n > 2, count all the triples that do not include n–1, which is $(n-1)(n-2)(n-3)/6$ by the inductive hypothesis, and all the triples that do include n–1, which is $(n-1)(n-2)/2$ (the number of ways to choose the other two integers from 0 to n–2), to get the total

$$\frac{(n-1)(n-2)(n-3)}{6} + \frac{(n-1)(n-2)}{2} = \frac{(n-1)(n-2)\big((n-3) + 3\big)}{6} = \frac{n(n-1)(n-2)}{6}.$$

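4.1.6 Show by approximating with integrals that the number of distinct triples of integers between 0 and n–1 is about $n^3/6$.

Solution:

$$\sum_{i=0}^{n-1}\sum_{j=i+1}^{n-1}\sum_{k=j+1}^{n-1} 1 \;\approx\; \int_0^n\!\int_x^n\!\int_y^n dz\,dy\,dx = \int_0^n\!\int_x^n (n-y)\,dy\,dx = \int_0^n \frac{(n-x)^2}{2}\,dx = \frac{n^3}{6}.$$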

4.1.7 What is the value of x (as a function of n) after running the following code fragment?

```python
x = 0
for i in range(n):
    for j in range(i+1, n):
        for k in range(j+1, n):
            x += 1
```

Solution: $n(n-1)(n-2)/6$.

4.1.8 Use tilde notation to simplify each of the following formulas, and give the order of growth of each:

a. $n(n-1)(n-2)(n-3)/24$

b. $(n-2)(\lg n - 2)(\lg n + 2)$

c. $n(n+1) - n^2$

d. $n(n+1)/2 + n \lg n$

e. $\ln((n-1)(n-2)(n-3))^2$

4.1.9 Is the following code fragment linear, quadratic, or cubic (as a function of n)?

```python
for i in range(n):
    for j in range(n):
        if i == j: c[i][j] = 1.0
        else:      c[i][j] = 0.0
```

4.1.10 Suppose the running time of an algorithm on inputs of size 1,000, 2,000, 3,000, and 4,000 is 5 seconds, 20 seconds, 45 seconds, and 80 seconds, respectively. Estimate how long it will take to solve a problem of size 5,000. Is the algorithm linear, linearithmic, quadratic, cubic, or exponential?
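A minimal sketch of the doubling hypothesis applied to the data in 4.1.10. It assumes a power law $T(n) \sim a n^b$, estimates b from the ratio of running times for doubled input sizes, and then extrapolates from the largest observation.

```python
import math

# Observations from the exercise: input size -> running time in seconds.
sizes = [1000, 2000, 3000, 4000]
times = [5.0, 20.0, 45.0, 80.0]

# If T(n) ~ a n^b, then T(2n)/T(n) ~ 2^b, so b ~ lg(T(2n)/T(n)).
ratio = times[1] / times[0]            # T(2000) / T(1000)
b = math.log2(ratio)
print('T(2000)/T(1000) =', ratio, ' T(4000)/T(2000) =', times[3] / times[1])
print('estimated exponent b =', b)

# Extrapolate from n = 4000 to n = 5000: T(5000) ~ T(4000) * (5000/4000)^b.
predicted = times[3] * (5000 / sizes[3]) ** b
print('predicted T(5000) =', predicted, 'seconds')
```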

4.1.11 Which would you prefer: a quadratic, linearithmic, or linear algorithm?

Solution: While it is tempting to make a quick decision based on the order of growth, it is very easy to be misled by doing so. You need to have some idea of the problem size and of the relative values of the leading coefficients of the running times. For example, suppose that the running times are $n^2$ seconds, $100 n \log_2 n$ seconds, and $10{,}000n$ seconds. The quadratic algorithm will be fastest for n up to about 1,000 (it beats the linearithmic one exactly when $n < 100 \log_2 n$), and the linear algorithm will never be faster than the linearithmic one in practice: $10{,}000n < 100 n \log_2 n$ only when $\log_2 n > 100$, so n would have to be greater than $2^{100}$, which is far too large to bother considering.
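For the hypothetical running times in the solution above, a short search locates the crossover points. The three functions below simply encode those formulas, and first_n_where() is a helper introduced here; the same loop is not used for linear versus linearithmic, since that crossover is near $2^{100}$.

```python
import math

# The three hypothetical running times (in seconds) from the solution above.
def quadratic(n):
    return n * n

def linearithmic(n):
    return 100 * n * math.log2(n)

def linear(n):
    return 10000 * n

def first_n_where(faster, slower, start=2):
    """Return the smallest n >= start at which faster(n) < slower(n)."""
    n = start
    while not faster(n) < slower(n):
        n += 1
    return n

# Smallest n for which the linearithmic algorithm beats the quadratic one.
print(first_n_where(linearithmic, quadratic))
# Quadratic versus linear: 10,000n < n^2 first holds at n = 10,001.
print(first_n_where(linear, quadratic))
```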

4.1.12 Apply the scientific method to develop and validate a hypothesis about order of growth of the running time of the following code fragment, as a function of the argument n:

```python
def f(n):
    if (n == 0): return 1
    return f(n-1) + f(n-1)
```
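Because the running time of f() is proportional to the number of calls it makes, one way to develop a hypothesis without timing noise is to count calls. The sketch below uses a hypothetical instrumented version, f_calls(), that returns both f(n) and the call count; if each increment of n roughly doubles the count, that suggests exponential growth, which you can then validate with Stopwatch timings.

```python
def f_calls(n):
    """Return (f(n), number of calls to f needed to compute it)."""
    if n == 0:
        return 1, 1
    v1, c1 = f_calls(n - 1)
    v2, c2 = f_calls(n - 1)
    return v1 + v2, c1 + c2 + 1

prev = f_calls(0)[1]
for n in range(1, 16):
    calls = f_calls(n)[1]
    print(n, calls, calls / prev)   # the ratio approaches 2
    prev = calls
```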

4.1.13 Apply the scientific method to develop and validate a hypothesis about order of growth of the running time of each of the following code fragments as a function of n:

```python
s = ''
for i in range(n):
    if stdrandom.bernoulli(0.5): s += '0'
    else:                        s += '1'
```

```python
s = ''
for i in range(n):
    oldS = s
    if stdrandom.bernoulli(0.5): s += '0'
    else:                        s += '1'
```

Solution: On many systems, the first is linear and the second is quadratic. You would have no way of knowing why from the code alone: in the first case, Python (CPython, in particular) detects that s is the only variable that refers to the string, so it appends each character to the string in place, as it would with a list (in amortized constant time), even though strings are immutable! In the second case, oldS also refers to the string, so Python must build a new string for each concatenation, which takes time proportional to the length of s. A safer alternative is to create a list containing the characters and concatenate them by calling the join() method:

```python
a = []
for i in range(n):
    if stdrandom.bernoulli(0.5): a += ['0']
    else:                        a += ['1']
s = ''.join(a)
```

4.1.14 Each of the five Python functions below returns a string of length n whose characters are all x. Determine the order of growth of the running time of each function. Recall that concatenating two strings in Python takes time proportional to the sum of their lengths.

```python
def f1(n):
    if (n == 0):
        return ''
    temp = f1(n // 2)
    if (n % 2 == 0): return temp + temp
    else:            return temp + temp + 'x'

def f2(n):
    s = ''
    for i in range(n):
        s += 'x'
    return s

def f3(n):
    if (n == 0): return ''
    if (n == 1): return 'x'
    return f3(n//2) + f3(n - n//2)

def f4(n):
    temp = stdarray.create1D(n, 'x')
    return ''.join(temp)

def f5(n):
    return 'x' * n
```

4.1.15 Each of the three Python functions below returns the reverse of a string of length n. What is the order of growth of the running time of each function?

```python
def reverse1(s):
    n = len(s)
    reverse = ''
    for i in range(n):
        reverse = s[i] + reverse
    return reverse

def reverse2(s):
    n = len(s)
    if (n <= 1):
        return s
    left = s[0 : n//2]
    right = s[n//2 : n]
    return reverse2(right) + reverse2(left)

def reverse3(s):
    return s[::-1]
```

The slice expression s[::-1] uses an optional third argument to specify the step size.

4.1.16 The following code fragment (adapted from a Java programming book) creates a random permutation of the integers from 0 to n–1. Determine the order of growth of its running time as a function of n. Compare its order of growth with the shuffling code in SECTION 1.4.

```python
a = stdarray.create1D(n, 0)
taken = stdarray.create1D(n, False)
count = 0
while (count < n):
    r = stdrandom.uniform(0, n)
    if not taken[r]:
        a[r] = count
        taken[r] = True
        count += 1
```
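A minimal Python version of the fragment above, assuming random.randrange() as the source of random integers in [0, n), instrumented to count the random draws. For comparison, shuffle_permutation() is a standard Fisher-Yates (Knuth) shuffle, which makes n – 1 draws. Both function names are our own.

```python
import random

def rejection_permutation(n):
    """The fragment above, in plain Python; also returns the number of draws."""
    a = [0] * n
    taken = [False] * n
    count = 0
    draws = 0
    while count < n:
        r = random.randrange(n)          # random integer in [0, n)
        draws += 1
        if not taken[r]:
            a[r] = count
            taken[r] = True
            count += 1
    return a, draws

def shuffle_permutation(n):
    """Fisher-Yates shuffle of 0..n-1: exactly n - 1 random draws."""
    a = list(range(n))
    for i in range(n - 1):
        r = random.randrange(i, n)       # random integer in [i, n)
        a[i], a[r] = a[r], a[i]
    return a

# Watch how the number of draws grows as n doubles.
for n in (1000, 2000, 4000, 8000):
    _, draws = rejection_permutation(n)
    print(n, draws, draws / n)
```

If draws / n keeps growing slowly as n doubles, that is consistent with a linearithmic hypothesis (the loop is a coupon collector in disguise), whereas shuffle_permutation() does a constant amount of work per element.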

4.1.17 How many times does the following code fragment execute the first if statement in the triply nested loop?

```python
for i in range(n):
    for j in range(n):
        for k in range(n):
            if (i < j) and (j < k):
                if a[i] + a[j] + a[k] == 0:
                    count += 1
```

Use tilde notation to simplify your answer.

4.1.18 Apply the scientific method to develop and validate a hypothesis about order of growth of the running time of the collect() method in coupon.py (PROGRAM 2.1.3), as a function of the argument n. Note: Doubling is not effective for distinguishing between the linear and linearithmic hypotheses—you might try squaring the size of the input.

4.1.19 Apply the scientific method to develop and validate a hypothesis about order of growth of the running time of markov.py (PROGRAM 1.6.3), as a function of the arguments moves and n.

4.1.20 Compose a program mooreslaw.py that takes a command-line argument n and writes the increase in processor speed over a decade if processor speed doubles every n months. How much will processor speed increase over the next decade if speeds double every n = 15 months? 24 months?
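One possible sketch of the computation behind mooreslaw.py: a decade is 120 months, so speed doubles 120/n times. The function name speedup() is our own, and the command-line handling is left out so that the sketch runs as is.

```python
def speedup(n):
    """Factor by which processor speed grows in one decade (120 months)
    if speed doubles every n months."""
    return 2 ** (120 / n)

for n in (15, 24):
    print(n, 'months:', speedup(n))
```

With doubling every 15 months the factor is $2^8 = 256$; with doubling every 24 months it is $2^5 = 32$.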

4.1.21 Using the memory model from the text, give the memory requirements for each object of the following data types from CHAPTER 3:

a. Stopwatch

b. Turtle

c. Vector

d. Body

e. Universe

4.1.22 Estimate, as a function of the grid size n, the amount of memory used by visualizev.py (PROGRAM 2.4.4) with vertical percolation detection (PROGRAM 2.4.2). Extra credit: Answer the same question for the case where the recursive percolation detection method percolation.py (PROGRAM 2.4.6) is used.

4.1.23 Estimate the size of the largest n-by-n array of integers that your computer can hold, and then try to allocate such an array.

4.1.24 Estimate, as a function of the number of documents n and the dimension d, the amount of space used by comparedocuments.py (PROGRAM 3.3.5).

4.1.25 Compose a version of primesieve.py (PROGRAM 1.4.3) that uses an array of integers instead of an array of booleans and uses 32 bits in each integer, to raise the largest value of n that it can handle by a factor of 32.

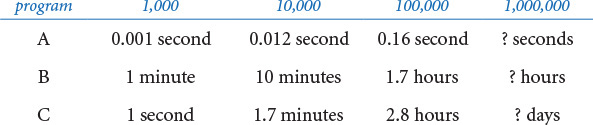

4.1.26 The following table gives running times for various programs for various values of n. Fill in the blanks with estimates that you think are reasonable on the basis of the information given.

Give hypotheses for the order of growth of the running time of each program.

Creative Exercises

4.1.27 Three-sum analysis. Calculate the probability that no triple among n random 32-bit integers sums to 0, and give an approximate estimate for n equal to 1,000, 2,000, and 4,000. Extra credit: Give an approximate formula for the expected number of such triples (as a function of n), and run experiments to validate your hypothesis.

4.1.28 Closest pair. Design a quadratic algorithm that finds the pair of integers that are closest to each other. (In the next section you will be asked to find a linearithmic algorithm.)
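A minimal brute-force sketch for 4.1.28 (the name closest_pair() is ours): it compares every pair of entries, which is what makes it quadratic.

```python
def closest_pair(a):
    """Return (x, y) with x <= y: two entries of a that are closest in value.

    Examines all n(n-1)/2 pairs, so the running time is quadratic.
    """
    if len(a) < 2:
        raise ValueError('need at least two integers')
    best = None
    for i in range(len(a)):
        for j in range(i + 1, len(a)):
            d = abs(a[i] - a[j])
            if best is None or d < best[0]:
                best = (d, min(a[i], a[j]), max(a[i], a[j]))
    return best[1], best[2]

print(closest_pair([7, 1, 13, 5, 20]))
```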

4.1.29 Power law. Show that a log-log plot of the function $cn^b$ has slope b and y-intercept log c. What are the slope and y-intercept for $4n^3(\log n)^2$?

4.1.30 Sum furthest from zero. Design an algorithm that finds the pair of integers whose sum is furthest from zero. Can you discover a linear-time algorithm?
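One linear-time approach, offered as a sketch rather than the book's intended solution: the largest pair sum comes from the two largest values and the smallest pair sum from the two smallest, so the sum furthest from zero is one of those two. A single pass can track both pairs. The function name is hypothetical.

```python
def furthest_from_zero(a):
    """Return the pair of entries of a whose sum has the largest absolute value."""
    if len(a) < 2:
        raise ValueError('need at least two integers')
    max1 = max2 = float('-inf')   # the two largest values seen so far
    min1 = min2 = float('inf')    # the two smallest values seen so far
    for x in a:
        if x > max1:
            max1, max2 = x, max1
        elif x > max2:
            max2 = x
        if x < min1:
            min1, min2 = x, min1
        elif x < min2:
            min2 = x
    if abs(max1 + max2) >= abs(min1 + min2):
        return max1, max2
    return min1, min2

print(furthest_from_zero([1, -5, 3, -2, 2]))
```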

4.1.31 The “beck” exploit. A popular web server supports a function no2slash() whose purpose is to collapse multiple / characters. For example, the string /d1///d2////d3/test.html becomes /d1/d2/d3/test.html. The original algorithm was to repeatedly search for a / and copy the remainder of the string:

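```python
def no2slash(name):
    # name is a list of characters, since Python strings are immutable.
    x = 1
    while x < len(name):
        if (name[x-1] == '/') and (name[x] == '/'):
            # Copy the remainder of the string one position to the left.
            for y in range(x+1, len(name)):
                name[y-1] = name[y]
            name.pop()    # the last character is now duplicated; drop it
        else:
            x += 1
    return name
```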

Unfortunately, the running time of this code is quadratic in the number of / characters in the input. By sending multiple simultaneous requests with large numbers of / characters, a hacker can deluge a server and starve other processes for CPU time, thereby creating a denial-of-service attack. Develop a version of no2slash() that runs in linear time and does not allow for this type of attack.
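A sketch of one linear-time alternative (the function name is hypothetical): instead of shifting the rest of the string each time it finds //, it copies characters once into a list, skipping any / that immediately follows another /, and joins the list at the end.

```python
def no2slash_linear(name):
    """Collapse each run of '/' characters in name to a single '/'.

    Each character is examined once and appended at most once, and
    ''.join() is linear, so the running time is linear in len(name).
    """
    result = []
    for c in name:
        if c == '/' and result and result[-1] == '/':
            continue               # skip a '/' that follows another '/'
        result.append(c)
    return ''.join(result)

print(no2slash_linear('/d1///d2////d3/test.html'))
```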

4.1.32 Young tableaux. Suppose you have in memory an n-by-n array of integers a[][] such that a[i][j] < a[i+1][j] and a[i][j] < a[i][j+1] for all i and j, like the table below:

```
 5 23 54 67 89
 6 69 73 74 90
10 71 83 84 91
60 73 84 86 92
99 91 92 93 94
```

Devise an algorithm whose order of growth is linear in n to determine whether a given integer x is in a given Young tableau.
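A sketch of one linear approach, sometimes called a staircase search: start at the upper-right corner; if that entry is too large, no entry below it in its column can match, so move left; if it is too small, no entry to its left in its row can match, so move down. Each step eliminates a row or a column, so there are at most 2n steps. The function name young_contains() is hypothetical, and the sketch assumes the ordering property stated in the exercise.

```python
def young_contains(a, x):
    """Return True if x appears in the Young tableau a (a list of rows)."""
    if not a or not a[0]:
        return False
    i, j = 0, len(a[0]) - 1            # start at the upper-right corner
    while i < len(a) and j >= 0:
        if a[i][j] == x:
            return True
        elif a[i][j] > x:
            j -= 1                     # entries below in column j are even larger
        else:
            i += 1                     # entries to the left in row i are even smaller
    return False

t = [[1, 4, 7],
     [2, 5, 8],
     [3, 6, 9]]
print(young_contains(t, 5), young_contains(t, 10))
```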

4.1.33 Subset sum. Compose a program anysum.py that takes an integer n from standard input, then reads n integers from standard input, and counts the number of subsets that sum to 0. Give the order of growth of the running time of your program.
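A brute-force sketch (the function name is hypothetical) that enumerates subsets with the bits of an integer mask. It assumes that the empty subset should not be counted and that equal values at different positions count as different subsets.

```python
def count_zero_subsets(a):
    """Count the nonempty subsets of a whose elements sum to 0.

    Bit i of mask says whether a[i] is in the subset. There are 2^n masks
    and each takes time proportional to n, so the order of growth is n 2^n.
    """
    n = len(a)
    count = 0
    for mask in range(1, 1 << n):      # skip mask 0, the empty subset
        total = 0
        for i in range(n):
            if mask & (1 << i):
                total += a[i]
        if total == 0:
            count += 1
    return count

print(count_zero_subsets([1, -1, 2, -2]))
```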

4.1.34 Array rotation. Given an array of n elements, give a linear-time algorithm to rotate the array by k positions. That is, if the array contains $a_0, a_1, \ldots, a_{n-1}$, the rotated array is $a_k, a_{k+1}, \ldots, a_{n-1}, a_0, \ldots, a_{k-1}$. Use at most a constant amount of extra space (array indices and array values). Hint: Reverse three subarrays.
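A sketch of the hint's three-reversal method (function names are our own). Reversing a[0..k-1] and a[k..n-1] separately and then reversing the whole array moves the first k elements to the end without an auxiliary array.

```python
def reverse(a, lo, hi):
    """Reverse a[lo..hi] in place (inclusive bounds)."""
    while lo < hi:
        a[lo], a[hi] = a[hi], a[lo]
        lo += 1
        hi -= 1

def rotate(a, k):
    """Rotate a left by k positions in place, using constant extra space."""
    n = len(a)
    if n == 0:
        return
    k %= n
    reverse(a, 0, k - 1)    # a[0..k-1] reversed
    reverse(a, k, n - 1)    # a[k..n-1] reversed
    reverse(a, 0, n - 1)    # whole array reversed: a[k..n-1] then a[0..k-1]

a = list(range(7))
rotate(a, 3)
print(a)
```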

4.1.35 Finding a duplicated integer. (a) Given an array of n integers from 1 to n with one value repeated twice and one missing, give an algorithm that finds the missing integer, in linear time and constant extra space. (b) Given a read-only array of n integers, where each value from 1 to n–1 occurs once and one occurs twice, give an algorithm that finds the duplicated value, in linear time and constant extra space. (c) Given a read-only array of n integers with values between 1 and n–1, give an algorithm that finds a duplicated value, in linear time and constant extra space.
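Sketches for parts (b) and (c), with hypothetical function names. Part (b) compares the sum of the array with $1 + 2 + \cdots + (n-1) = (n-1)n/2$; this counts as constant extra space under the usual assumption that each integer takes a fixed amount of memory. Part (c) uses Floyd's tortoise-and-hare cycle-finding method, a standard technique that the exercise does not name.

```python
def duplicate_by_sum(a):
    """Part (b): a holds each of 1..n-1 once and one value twice (len(a) == n)."""
    n = len(a)
    return sum(a) - (n - 1) * n // 2

def duplicate_by_cycle(a):
    """Part (c): len(a) == n, values in 1..n-1; returns some duplicated value.

    Treat i -> a[i] as a function on indices 0..n-1. Starting from index 0
    (which no entry points to), the sequence 0, a[0], a[a[0]], ... must enter
    a cycle, and the entry point of that cycle is a value that appears twice.
    Floyd's method finds it without modifying a, using constant extra space.
    """
    tortoise = hare = 0
    while True:                        # phase 1: find a meeting point on the cycle
        tortoise = a[tortoise]
        hare = a[a[hare]]
        if tortoise == hare:
            break
    tortoise = 0
    while tortoise != hare:            # phase 2: walk to the cycle's entry point
        tortoise = a[tortoise]
        hare = a[hare]
    return hare

print(duplicate_by_sum([1, 2, 3, 2]), duplicate_by_cycle([3, 1, 3, 4, 2]))
```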

4.1.36 Factorial. Design a fast algorithm to compute n! for large values of n. Use your program to compute the longest run of consecutive 9s in 1000000!. Develop and validate a hypothesis for the order of growth of the running time of your algorithm.

4.1.37 Maximum sum. Design a linear-time algorithm that finds a contiguous subsequence of length at most m in a sequence of n integers that has the highest sum among all such subsequences. Implement your algorithm, and confirm that the order of growth of its running time is linear.
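A sketch of one linear-time method (our choice; other approaches exist), based on prefix sums and a double-ended queue from the standard collections module. It returns only the best sum; recording the start and end indices as well is a small extension. The function name is hypothetical.

```python
from collections import deque

def max_sum_at_most_m(a, m):
    """Largest sum of a contiguous subsequence of a with length 1..m.

    With prefix sums P[j] = a[0] + ... + a[j-1], the subsequence a[i..j-1]
    has sum P[j] - P[i], so for each j we want the smallest P[i] with
    j - m <= i < j. A deque of candidate indices, kept in increasing order
    of P, supplies that minimum; each index enters and leaves the deque at
    most once, so the total running time is linear.
    """
    if m < 1 or not a:
        raise ValueError('need a nonempty sequence and m >= 1')
    n = len(a)
    prefix = [0] * (n + 1)
    for j in range(n):
        prefix[j + 1] = prefix[j] + a[j]
    candidates = deque()
    best = None
    for j in range(1, n + 1):
        # Index j-1 becomes an eligible start; drop candidates it dominates.
        while candidates and prefix[candidates[-1]] >= prefix[j - 1]:
            candidates.pop()
        candidates.append(j - 1)
        # Drop starts that would make the subsequence longer than m.
        while candidates[0] < j - m:
            candidates.popleft()
        s = prefix[j] - prefix[candidates[0]]
        if best is None or s > best:
            best = s
    return best

print(max_sum_at_most_m([1, 2, 3, -10, 4], 2))
```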

4.1.38 Pattern matching. Given an n-by-n array of black (1) and white (0) pixels, design a linear algorithm that finds the largest square subarray consisting entirely of black pixels. As an example, the following 8-by-8 array contains a 3-by-3 subarray entirely of black pixels:

```
1 0 1 1 1 0 0 0
0 0 0 1 0 1 0 0
0 0 1 1 1 0 0 0
0 0 1 1 1 0 1 0
0 0 1 1 1 1 1 1
0 1 0 1 1 1 1 0
0 1 0 1 1 0 1 0
0 0 0 1 1 1 1 0
```

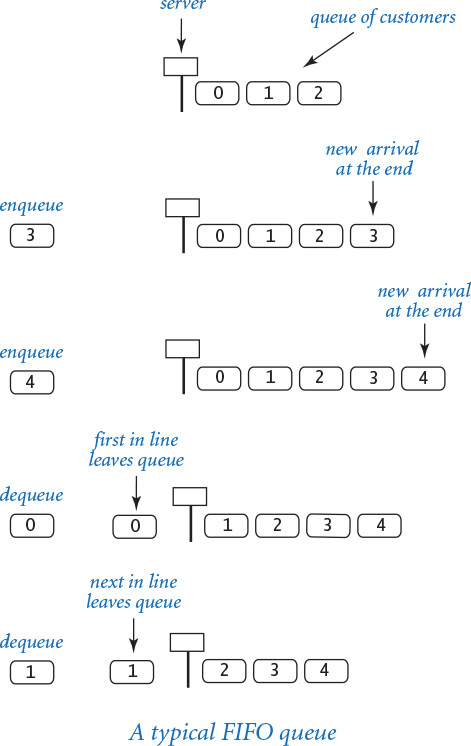

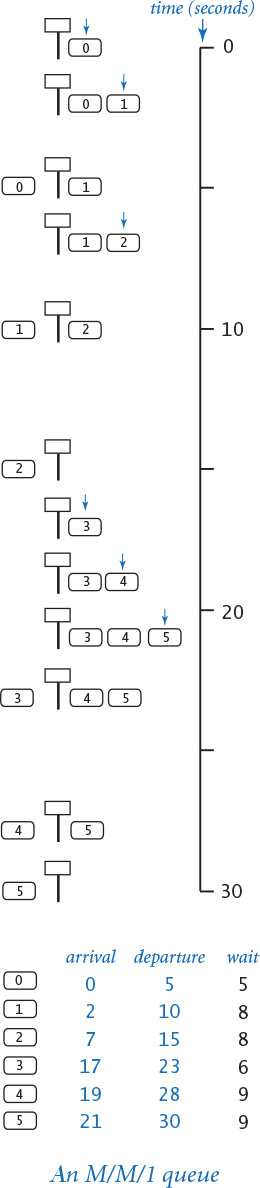

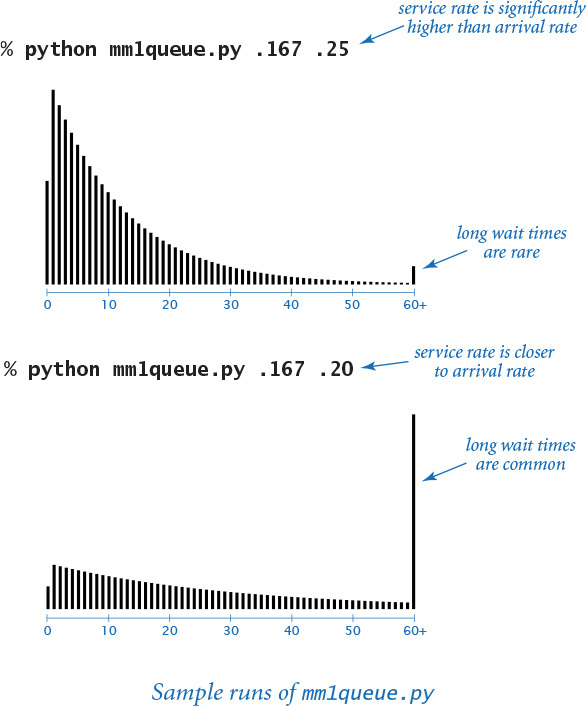

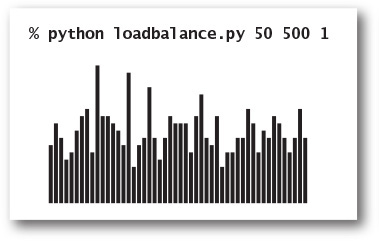

Implement your algorithm and confirm that the order of growth of its running time is linear in the number of pixels. Extra credit: Design an algorithm to find the largest rectangular black subarray.
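A dynamic-programming sketch for the square case (the approach and the function name are our own choices, not necessarily the book's intended solution). Applied to the 8-by-8 example above, it should report a side length of 3.

```python
def largest_black_square(pixels):
    """Side length of the largest all-1 square subarray of a 0/1 grid.

    size[i][j] is the side of the largest black square whose lower-right
    corner is at (i, j): 1 plus the minimum of the values above, to the
    left, and diagonally above-left. Each pixel is processed once, so the
    running time is linear in the number of pixels.
    """
    rows = len(pixels)
    cols = len(pixels[0]) if rows else 0
    size = [[0] * cols for _ in range(rows)]
    best = 0
    for i in range(rows):
        for j in range(cols):
            if pixels[i][j] == 1:
                if i == 0 or j == 0:
                    size[i][j] = 1
                else:
                    size[i][j] = 1 + min(size[i-1][j], size[i][j-1], size[i-1][j-1])
                best = max(best, size[i][j])
    return best

example = [[1, 0, 1, 1, 1, 0, 0, 0],
           [0, 0, 0, 1, 0, 1, 0, 0],
           [0, 0, 1, 1, 1, 0, 0, 0],
           [0, 0, 1, 1, 1, 0, 1, 0],
           [0, 0, 1, 1, 1, 1, 1, 1],
           [0, 1, 0, 1, 1, 1, 1, 0],
           [0, 1, 0, 1, 1, 0, 1, 0],
           [0, 0, 0, 1, 1, 1, 1, 0]]
print(largest_black_square(example))
```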