This is the longest chapter of the audio section, and may actually be the longest chapter of the entire book. But don't feel too discouraged, because the length is not related to the difficulty. We are going to cover multiple APIs, comparing their functionality to give you a better understanding of how they work. The goal is to help you select the API that accomplishes the most in the least amount of effort for your own game.

First, we will look at how you might use large audio files in your games. Then we will cover the Media Player framework, which allows you to access the iPod music library from within your application. We will walk through an example that lets the user construct a playlist from the iPod music library to be used as background music for Space Rocks!.

Next, we will move on to the more generic audio streaming APIs: AVFoundation, OpenAL, and Audio Queue Services. Using each of these APIs, we will repeat implementing background music for Space Rocks!. And in the OpenAL example, we will do sort of a grand finale and embellish the game by adding streaming speech and fully integrating it with the OpenAL capabilities we implemented in the previous two chapters.

Then we will turn our attention to audio capture. Once again, we will compare and contrast three APIs: Audio Queue Services, AVFoundation, and OpenAL.

Finally, we bring things full circle and close with some OpenGL and OpenAL optimization tips.

In the previous chapters, we focused on playing short sound effects. But what about longer things like music? Most games have background music, right?

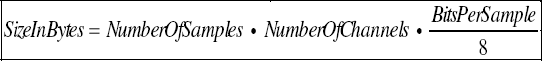

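For performance, we have been preloading all the audio files into RAM. But this isn't feasible for large audio files. A 44.1 kHz, 60-second stereo sample takes about 10MB of memory to hold (as linear PCM). For those wondering how to do the math, assuming 16-bit (2-byte) samples: 44,100 samples per second × 60 seconds × 2 channels × 2 bytes per sample = 10,584,000 bytes, or roughly 10MB.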

Now, 10MB is a lot of RAM to use, particularly when we are listening to only a small piece of the 1-minute audio sample at any given moment. And on a RAM-limited device such as an iPhone, a more memory-efficient approach is critical if you hope to do anything else in your game, such as graphics.
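The arithmetic behind that 10MB figure can be sketched as a small C helper. This is only an illustration: the function name pcm_bytes and its parameter names are made up for this example and are not part of any Apple API, and the sketch assumes uncompressed linear PCM with a whole number of bytes per sample.

```c
#include <assert.h>
#include <stdint.h>

/* Bytes needed to hold uncompressed linear PCM audio in memory.
   Hypothetical helper for illustration; not part of any Apple API. */
uint64_t pcm_bytes(uint32_t sample_rate_hz, uint32_t seconds,
                   uint32_t channels, uint32_t bits_per_sample)
{
    /* Assumption: samples occupy whole bytes (8, 16, 24, or 32 bits). */
    assert(bits_per_sample % 8 == 0);

    /* samples per second * seconds * channels * bytes per sample */
    return (uint64_t)sample_rate_hz * seconds * channels * (bits_per_sample / 8);
}
```

With the values from the text, pcm_bytes(44100, 60, 2, 16) works out to 10,584,000 bytes. Halving the sample rate to 22,050 Hz halves the footprint to 5,292,000 bytes, which is still far too much to keep resident for a full soundtrack.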

In this chapter, we will look at APIs to help us deal with large amounts of audio data. Since the device we are talking about originates from a portable music player, it is only reasonable that iPhone OS provides special APIs to allow you to access the iPod music library. We will also look at the more general audio APIs that allow us to play our own bundled music. These general audio APIs are often referred to as buffer-queuing APIs or streaming APIs, because you continuously feed small chunks of data to the audio system. But I don't want you to get myopia for just music—these audio APIs are general purpose, and can handle other types of audio.

You might be thinking that you don't need anything beyond music in your game. However, there are other types of long-duration sounds that you may require. Speech is the most notable element. Speech can find many ways into games. Adventure games with large casts of characters with dialogue, such as the cult classic Star Control 2: The Ur-Quan Masters and the recently rereleased The Secret of Monkey Island (see Figures 12-1 and 12-2) are prime examples of games that make heavy use of both simultaneous music and speech throughout the entire game.

Figure 12.1. The Secret of Monkey Island is a prime example of a game that has both music and speech. It's now available for iPhone OS!

Figure 12.2. In-game screenshot of The Secret of Monkey Island. Voice actors provide speech for the dialogue to enhance the quality of the game.

It is also common for games to have an opening sequence that provides a narrator. And even action-oriented games need speech occasionally. Half-Life opened with an automated train tour guide talking to you as you descended into the compound, and you meet somewhat chatty scientists along the way. And for many people, the most memorable things from Duke Nukem 3D are the corny lines Duke says throughout the game.

Quick, arcade-style games may use streaming sound—perhaps for an opening or to tell you "game over"—because the sound is too infrequently used to be worth keeping resident. I recall seeing a port of Street Fighter 1 on the semi-obscure video game console TurboGrafx-16 with the CD-ROM drive add-on. To get the "Round 1 fight" and "<Character> wins" speeches, the console accessed the CD-ROM. The load times were hideously slow on this machine, so the game would pause multiple seconds to pull this dialogue. But the console had very limited memory, so the game developers decided not to preload these files. Whether they actually streamed them, I cannot say definitively, but they could have.

Games built around immersive environments that might not have a dedicated soundtrack still might need streaming. Imagine entering a room like a bar that has a TV, a radio, and a jukebox within earshot. You will be hearing all these simultaneously, and they are probably not short looping samples if they are going to be interesting.

You may remember my anecdote about SDL_mixer from Chapter 10, and how it ultimately led me to try OpenAL. My problem with SDL_mixer was it got tunnel vision on music. SDL_mixer made a strong distinction between sound effects (the short-duration sounds completely preloaded for performance) and music. SDL_mixer had a distinct API for dealing with music that was separate from sound effects. This in itself was not necessarily bad, but SDL_mixer made the assumption that you would only ever need one "music" channel. The problem I ran into was that I needed both speech and music. But speech was too long to be a "sound effect." Furthermore, at the time, SDL_mixer didn't support highly compressed audio such as MP3 for the nonmusic channel, which was one of the odd things about having a distinct API for music and sound effects. So SDL_mixer was pretty much a dead-end for my usage case without major hacking. This event scarred me for life, so I encourage you not to box yourself in by thinking too small.

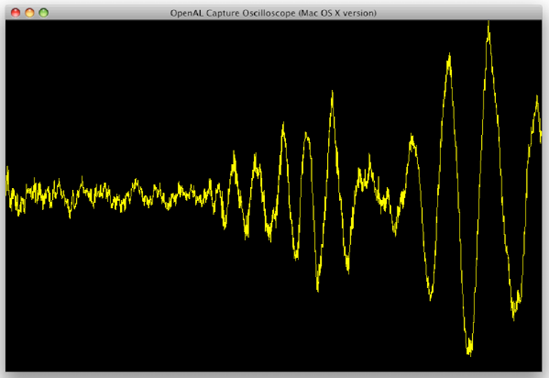

And on that note, there is one final thing we will briefly address in this chapter that is related to streaming: audio input, also known as audio capture or audio recording. Audio capture also deals with continuous small chunks of data, but the difference is that it is coming from the audio system, rather than having you submit it to the audio system.

iPhone OS 3.0 was the first version to let you access the iPod music library programmatically via the Media Player framework. You can access songs, audiobooks, and podcasts from the user's iPod music library and play them in your application. This is one step beyond just setting the audio session to allow the user to mix in music from the iPod application (see Figure 12-3). This framework gives you ultimate authority over which songs are played. You can also present a media item picker so the user may change songs without quitting your application (and returning to the iPod application).

Figure 12.3. The built-in iPod application in Cover Flow mode. Prior to iPhone OS 3.0, allowing your application to mix audio with the playing iPod application was the only way to let users play their own music in your application, albeit manually. Now with the Media Player framework, this can be accomplished more directly.

Note

The Media Player framework does not allow you to get direct access to the raw files or to the PCM audio data being played. There is also limited access to metadata. That means certain applications are not currently possible. Something as simple as a music visualizer really isn't possible because you can't analyze the PCM audio as it plays. And any game that hopes to analyze the music data, such as a beat/rhythm type of game, will not be able to access this data.

We will go through a short example. This example will probably be quite different from what you'll find in other books and documentation. Basic playback is quite simple, so there generally is an emphasis on building rich UIs to accompany your application. These include notifications to know when a song has changed (so you can update status text), how to search through collections to find songs that meet special criteria, and how to retrieve album artwork and display it in your app. But this is a game book, so we are going to take another approach.

Here, we are going to access the iPod library, present a media item picker, and play. The unique aspect is that we will use our existing Space Rocks! code and mix that in with our existing OpenAL audio that we used for sound effects.

First, you should make sure you have at least one song installed in your device's iPod library. (Production code should consider what to do in the case of an empty iPod library.) Also note that the iPhone simulator is currently not supported, so you must run this on an actual device.

Second, I want to remind you that we have been setting our audio session to kAudioSessionCategory_AmbientSound in the Space Rocks! code thus far. This allows mixing your application's audio with other applications. More specifically, this allows you to hear both your OpenAL sound effects and the iPod. (If you haven't already tried it, you might take a moment to go to the iPod application and start playing a song. Then start up Space Rocks! and hear the mixed sound for yourself.) When using the Media Player framework, you must continue to use the AmbientSound mode if you want to hear both your OpenAL sound effects and the Media Player framework audio.

Finally, we need to make a decision about how we want our media player to behave, as Apple gives us two options. Apple provides an application music player and an iPod music player. The iPod music player ties in directly to the built-in iPod music player application and shares the same state (e.g., shuffle and repeat modes). When you quit your application using this player, music still playing will continue to play. In contrast, the application music player gets its own state, and music will terminate when you quit your application. For this example, we will use the iPod music player, mostly because I find its seamless behavior with the built-in iPod player to be distinctive from the other streaming APIs we will be looking at later. Those who wish to use the application music player shouldn't fret about missing out. The programming interface is the same.

We will continue building on Space Rocks! from the previous chapter, specifically, the project SpaceRocksOpenAL3D_6_SourceRelative. The completed project for this example is SpaceRocksMediaPlayerFramework.

To get started, we will create a new class named IPodMusicController to encapsulate the Media Player code. We also need to add the Media Player framework to the project.

Our IPodMusicController will be a singleton. It will encapsulate several convenience methods and will conform to Apple's MPMediaPickerControllerDelegate protocol so we can respond to the MPMediaPickerController's delegate callback methods.

    #import <UIKit/UIKit.h>
    #import <MediaPlayer/MediaPlayer.h>

    @interface IPodMusicController : NSObject <MPMediaPickerControllerDelegate>
    {
    }

    + (IPodMusicController*) sharedMusicController;
    - (void) startApplication;
    - (void) presentMediaPicker:(UIViewController*)current_view_controller;

    @end

The startApplication method will define what we want to do when our application starts. So what do we want to do? Let's keep it fairly simple. We will get an iPod music player and start playing music if it isn't already playing.

Apple's MPMusicPlayerController class represents the iPod music player. It also uses a singleton pattern, which you've seen multiple times in previous chapters. For brevity, the implementation of the singleton accessor method is omitted here. See the finished example for the method named sharedMusicController.

Let's focus on the startApplication method.

    - (void) startApplication
    {
        MPMusicPlayerController* music_player = [MPMusicPlayerController iPodMusicPlayer];

        // Set or otherwise take iPod's current modes
        // [music_player setShuffleMode:MPMusicShuffleModeOff];
        // [music_player setRepeatMode:MPMusicRepeatModeNone];

        if(MPMusicPlaybackStateStopped == music_player.playbackState)
        {
            // Get all songs in the library and make them the list to play
            [music_player setQueueWithQuery:[MPMediaQuery songsQuery]];
            [music_player play];
        }
        else if(MPMusicPlaybackStatePaused == music_player.playbackState)
        {
            // Assuming that a song has already been selected to play
            [music_player play];
        }
        else if(MPMusicPlaybackStatePlaying == music_player.playbackState)
        {
            // Do nothing, let it continue playing
        }
        else
        {
            // Cast so the value matches the %d format specifier
            NSLog(@"Unhandled MPMusicPlayerController state: %d", (int)music_player.playbackState);
        }
    }

As you can see in the startApplication method, to get the iPod music player, we simply do this:

MPMusicPlayerController* music_player = [MPMusicPlayerController iPodMusicPlayer];

If we wanted to get the application music player instead, we would invoke this method instead:

MPMusicPlayerController* music_player = [MPMusicPlayerController applicationMusicPlayer];

Optionally, we can set up some properties, such as the shuffle and repeat modes, like this:

    [music_player setShuffleMode:MPMusicShuffleModeOff];
    [music_player setRepeatMode:MPMusicRepeatModeNone];

We won't set them for this example, and instead rely on the iPod's current modes.

Next, we need to find some songs to play and start playing them. The MPMediaQuery class allows you to form queries for specific files. It also provides some convenient methods, which we will take advantage of to query for all songs contained in the library. The MPMusicPlayerController has a method called setQueueWithQuery, which will add the results of the query to the iPod's play queue. So by the end of it, we will construct a queue containing all of the songs in the library.

[music_player setQueueWithQuery:[MPMediaQuery songsQuery]];

Then to play, we just invoke play:

[music_player play];

But you can see from this method implementation that we get a little fancy. We have a large if-else block to detect whether the iPod is currently playing audio, using the MPMusicPlayerController's playbackState property. We can instruct the iPod to create a new queue only if it is not already playing audio. There are six different states: playing, paused, stopped, interrupted (e.g., phone call interruption), seeking forward, and seeking backward. For this example, we will concern ourselves with only the first three states.

Tip

You may encounter the paused state more frequently than you might initially expect. This is because the iPod application doesn't have a stop button; it has only a pause button. Users who were playing music on their iPod and "stopped" it in the middle of a song are likely to be paused. You will encounter the stopped state if the user has just rebooted the device or let the iPod finish playing a playlist to completion. I separate the case for demonstration purposes, but for real applications, you may consider lumping stopped and paused into the same case, as the users may not remember they were in the middle of a song.

Now we need to invoke this method from Space Rocks! We will return to BBSceneController.m and add the following line to its init method:

[[IPodMusicController sharedMusicController] startApplication];

(Don't forget to #import "IPodMusicController.h" at the top of the file.)

Now, when the game loads, you should hear music playing from your iPod. And you should notice that the OpenAL audio still works. Congratulations!

Note

MPMusicPlayerController has a volume property. However, this volume is the master volume control, so if you change the volume, it affects both the iPod music and the OpenAL audio in the same way. This may make volume balancing between the iPod and OpenAL very difficult, if not impossible, because you have fine-grained control only over the OpenAL gain levels, and not over the iPod volume in isolation.

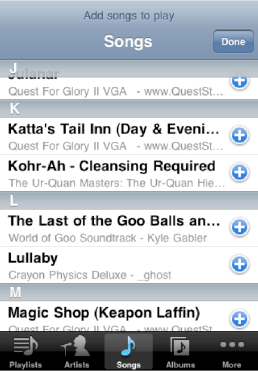

Now we will go one extra step and allow the user to build a list of songs from a picker (see Figures 12-4 and 12-5). Unfortunately, our Space Rocks! code really didn't intend us to do this kind of thing. So what you are about to see is kind of a hack. But I don't want to overwhelm you with a lot of support code to make this clean, as our focus is on the picker and music player.

Figure 12.4. Scrolling through the list of albums presented in the media item picker in the albums display mode, showing my geeky but on-topic/game-related iPod music library.

Figure 12.5. Scrolling through the same media item picker in the songs display mode. Note the buttons on the right side of the table view list entries that allow you to add a song to the playlist you are constructing.

As you might have noticed earlier, I listed a prototype in IPodMusicController for this method:

- (void) presentMediaPicker:(UIViewController*)current_view_controller;

We will write this method to encapsulate creating the picker and displaying it.

Apple provides a ready-to-use view controller subclass called MPMediaPickerController, which will allow you to pick items from your iPod library. Apple calls this the media item picker. It looks very much like the picker in the iPod application. One very notable difference, however, is that there is no Cover Flow mode with the media item picker.

Despite the shortcoming, we will use this class for our picker. Add the implementation for presentMediaPicker in IPodMusicController.m.

    - (void) presentMediaPicker:(UIViewController*)current_view_controller
    {
        MPMediaPickerController* media_picker = [[[MPMediaPickerController alloc]
            initWithMediaTypes:MPMediaTypeAnyAudio] autorelease];
        [media_picker setDelegate:self];
        [media_picker setAllowsPickingMultipleItems:YES];

        // For a message at the top of the view
        media_picker.prompt = NSLocalizedString(@"Add songs to play",
            @"Prompt in media item picker");

        [current_view_controller presentModalViewController:media_picker animated:YES];
    }

In the first line, we create a new MPMediaPickerController. It takes a mask parameter that allows you to restrict which media types you want to display in your picker. Valid values are MPMediaTypeMusic, MPMediaTypePodcast, MPMediaTypeAudioBook, and the convenience mask MPMediaTypeAnyAudio. We will use the last of these, since the user may have been listening to a podcast or audiobook before starting Space Rocks!, and I don't see any reason to restrict it.

In the next line, we set the picker's delegate to self. Remember that earlier we made the IPodMusicController class conform to the MPMediaPickerControllerDelegate protocol. We will implement the delegate methods in this class shortly. Setting the delegate to self will ensure our delegate methods are invoked.

Next, we set an option on the picker to allow picking multiple items. This will allow the users to build a list of songs they want played, rather than just selecting a single song. This isn't that critical for our short game, but it is an option that you might want to use in your own games.

Then we set another option to show a text label at the very top of the picker. We will display the string "Add songs to play". We set the prompt property with this string. You may have noticed the use of NSLocalizedString. In principle, when generating text programmatically, you should always think about localization. Since this is a Cocoa-level API, we can use Cocoa's localization support functions. Since we are not actually localizing this application, this is a little overkill, but it's here as a reminder to you to think about localization.

Now we are ready to display the media item picker. Notice that we passed in a parameter called current_view_controller. This represents our current active view controller. We want to push our media item picker view controller onto our active view controller, so we send the presentModalViewController message to our active view controller, with the media picker view controller as the parameter.

Next, let's implement the two delegate methods. The first delegate method is invoked after the user selects songs and taps Done. The second delegate method is invoked if the user cancels picking (e.g., taps Done without selecting any songs).

    #pragma mark MPMediaPickerControllerDelegate methods

    - (void) mediaPicker:(MPMediaPickerController*)media_picker
        didPickMediaItems:(MPMediaItemCollection*)item_collection
    {
        MPMusicPlayerController* music_player = [MPMusicPlayerController iPodMusicPlayer];
        [music_player setQueueWithItemCollection:item_collection];
        [music_player play];

        [media_picker.parentViewController dismissModalViewControllerAnimated:YES];
    }

    - (void) mediaPickerDidCancel:(MPMediaPickerController *)media_picker
    {
        [media_picker.parentViewController dismissModalViewControllerAnimated:YES];
    }

The code in the first method should seem familiar, as it is almost the same as our startApplication code. We get the iPod music player, set the queue, and call play. Since this delegate method provides us the item collection, we use the setQueueWithItemCollection: method instead of the query version. Lastly, in both methods, we dismiss the media item picker so we can get back to the game.

That's the media item picker in a nutshell. Now we need to design a way for the user to bring up the picker. As I said, this is a hack. Rather than spending time trying to work in some new button, we will exploit the accelerometer and use a shake motion to be the trigger for bringing up a picker. (This also has the benefit of being kind of cool.) We will embark on another little side quest to accomplish this. (You might think that a better thing to do is shuffle the songs on shake, which you might like to try on your own.)

In Chapter 3, you learned how to use the accelerometer. But we are going to spice things up and do something a little different. In iPhone OS 3.0, Apple introduced new APIs to make detecting shakes much easier:

    - (void) motionBegan:(UIEventSubtype)the_motion withEvent:(UIEvent*)the_event;
    - (void) motionEnded:(UIEventSubtype)the_motion withEvent:(UIEvent*)the_event;
    - (void) motionCancelled:(UIEventSubtype)the_motion withEvent:(UIEvent*)the_event;

These were added into the UIResponder class, so you no longer need to access the accelerometer directly and analyze the raw accelerometer data yourself for common motions like shaking. Here, we will just use motionBegan:withEvent:.

Since this is part of the UIResponder class, we need to find the best place to add this code. This happens to be BBInputViewController, where we also handle all our touch events.

We will add the method and implement it as follows:

    - (void) motionBegan:(UIEventSubtype)the_motion withEvent:(UIEvent *)the_event
    {
        if(UIEventSubtypeMotionShake == the_motion)
        {
            [[IPodMusicController sharedMusicController] presentMediaPicker:self];
        }
    }

This is very straightforward. We just check to make sure the motion is a shake event. If it is, we invoke our presentMediaPicker: method, which we just implemented. We pass self as the parameter because our BBInputViewController instance is the current active view controller to which we want to attach the picker.

For this code to actually work though, we need to do some setup. We need to implement three more methods for this class to make our BBInputViewController the first responder. Otherwise, the motionBegan:withEvent: method we just implemented will never be invoked. To do this, we drop in the following code:

    - (BOOL) canBecomeFirstResponder
    {
        return YES;
    }

    - (void) viewDidAppear:(BOOL)is_animated
    {
        [self becomeFirstResponder];
        [super viewDidAppear:is_animated];
    }

    - (void) viewWillDisappear:(BOOL)is_animated
    {
        [self resignFirstResponder];
        [super viewWillDisappear:is_animated];
    }

Now you are ready to try it. Start up Space Rocks! and give the device a shake to bring up the picker. Select some songs and tap Done. You should hear the music change to your new selection. Shake it again and pick some other songs. Fun, eh? (Yes, pausing the game when presenting the media item picker would be a great idea, but since we currently lack a game pause feature, I felt that would be one side quest too far.)

This concludes our Media Player framework example. We will now move to general streaming APIs.

Audio streaming is the term I use to describe dealing with large audio data. Ultimately, the idea is to break down the audio into small, manageable buffers. Then in the playback case, you submit each small buffer to the audio system to play when it is ready to receive more data. This avoids needing to load the entire thing into memory at the same time and exhausting your limited amount of RAM.
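The buffer-by-buffer idea can be sketched in plain C, independent of any particular audio API. Everything named here (stream_in_chunks, chunk_consumer, count_chunks) is hypothetical and exists only for this illustration; the callback stands in for whatever call hands a buffer to the audio system. A real player would submit the next buffer only when the audio system signals that it is ready for more data, whereas this sketch simply loops as fast as it can read.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>

/* Hypothetical callback standing in for "hand this chunk to the audio system". */
typedef void (*chunk_consumer)(const unsigned char* data, size_t length, void* user_data);

/* Reads `source` in chunks of at most `chunk_size` bytes, reusing the single
   caller-supplied `chunk` buffer, and passes each chunk to `consume`.
   Returns the total number of bytes delivered. Only one chunk is ever held
   in memory, no matter how large the source is. */
size_t stream_in_chunks(FILE* source, unsigned char* chunk, size_t chunk_size,
                        chunk_consumer consume, void* user_data)
{
    size_t total = 0;
    size_t bytes_read;

    assert(source != NULL && chunk != NULL && chunk_size > 0 && consume != NULL);

    /* fread returns fewer bytes than requested (possibly 0) at end of data. */
    while ((bytes_read = fread(chunk, 1, chunk_size, source)) > 0)
    {
        consume(chunk, bytes_read, user_data);
        total += bytes_read;
    }
    return total;
}

/* Example consumer: counts how many chunks arrived. */
void count_chunks(const unsigned char* data, size_t length, void* user_data)
{
    (void)data;
    (void)length;
    *(size_t*)user_data += 1;
}
```

The design point is that memory use is bounded by the chunk size rather than by the length of the audio: a 10,000-byte source read through a 4,096-byte buffer arrives as three chunks, and the same loop works unchanged for a source of any size.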

The type of streaming I'm describing here is not the same as network audio streaming, which is often associated with things like Internet radio. With network audio streaming, the emphasis is on the network part. The idea is that you are reading in packets of data over the network and playing it. In principle, the audio streaming I am describing is pretty much the same idea, but lower level and only about the audio system. It doesn't really care where the data came from—it could be from the network, from a file, captured from a microphone, or dynamically generated from an algorithm.

While the concept is simple, depending on the API you use, preparing the buffers for use and knowing when to submit more data can be tedious. We will discuss three native APIs you can use for streaming: AVFoundation, OpenAL, and Audio Queue Services. Each has its own advantages and disadvantages.

AVFoundation is the easiest to use but the most limited in capabilities. OpenAL is lower-level, but more flexible than AVFoundation and can work seamlessly with all the cool OpenAL features we've covered in the previous two chapters. Audio Queue Services is at about the same level of difficulty as OpenAL, but may offer features and conveniences that OpenAL does not provide.

For applications that are not already using OpenAL for nonstreaming audio, but need audio streaming capabilities that AVFoundation does not provide, Audio Queue Services is a compelling choice. But if your application is already using OpenAL for nonstreaming audio, the impedance mismatch between Audio Queue Services and OpenAL may cause you to miss easy opportunities to exploit cool things OpenAL can already do for you, such as spatializing your streaming audio (as demonstrated in this chapter).

Here's some good news: You already know how to play long files (stream) with AVAudioPlayer. We walked through an example in Chapter 9. AVAudioPlayer takes care of all the messy bookkeeping and threading, so you don't need to worry about it if you use this API.

There is more good news: iPhone OS allows you to use different audio APIs without jumping through hoops. We can take our Space Rocks! OpenAL-based application and add background music using AVAudioPlayer.

One thing to keep in mind is that you want to set the audio session only once (don't set it up in your AVFoundation code, and again in your OpenAL code). But interruptions must be handled for each API.

We will continue building on SpaceRocksOpenAL3D_6_SourceRelative from the previous chapter. (Note that this version does not include the changes we made for background music using the Media Player framework.) The completed project for this example is SpaceRocksAVFoundation. We will go through this very quickly, since you've already seen most of it in Chapter 9.

As a baseline template, let's copy over our AVPlaybackSoundController class from Chapter 9 into the Space Rocks! project. We will then gut the code to remove the things we don't need. We will also add a few new methods to make it easier to integrate with Space Rocks! In truth, it would probably be just as easy to use Apple's AVAudioPlayer directly in the BBSceneController, but I wanted a separate place to put the interruption delegate callbacks to keep things clean.

Starting with the AVPlaybackSoundController header, let's delete all the old methods. Then delete the separate speech and music player. In its place, we'll create a generic "stream" player. The idea is that if the game needs multiple streams, we can instantiate multiple instances of this class. I suppose this makes this class less of a "controller," but oh well.

The class also conformed to the AVAudioSessionDelegate protocol. We will let the OpenAL controller class continue to set up and manage the audio session, so we can delete this, too. We need a way to specify an arbitrary sound file, so we'll make a new designated initializer called initWithSoundFile:, which takes an NSString* parameter. Finally, we'll add some new methods and properties to control playing, pausing, stopping, volume, and looping. The modified AVPlaybackSoundController.h file should look like this:

    #import <AVFoundation/AVFoundation.h>
    #import <UIKit/UIKit.h>

    @interface AVPlaybackSoundController : NSObject <AVAudioPlayerDelegate>
    {
        AVAudioPlayer* avStreamPlayer;
    }

    @property(nonatomic, retain) AVAudioPlayer* avStreamPlayer;
    @property(nonatomic, assign) NSInteger numberOfLoops;
    @property(nonatomic, assign) float volume;

    - (id) initWithSoundFile:(NSString*) sound_file_basename;
    - (void) play;
    - (void) pause;
    - (void) stop;

    @end

In our implementation, we delete almost everything except the AVAudioPlayerDelegate methods. We will purge the audioPlayerDidFinishPlaying:successfully:-specific implementation though. We then just implement the new methods. Most of them are direct pass-throughs to AVAudioPlayer. The one exception is our new initializer. This code will create our new AVAudioPlayer instance. We also copy and paste the file-detection code we use from the OpenAL section to locate a file without requiring an extension. The new AVPlaybackSoundController.m file looks like this:

    #import "AVPlaybackSoundController.h"

    @implementation AVPlaybackSoundController

    @synthesize avStreamPlayer;

    - (id) initWithSoundFile:(NSString*)sound_file_basename
    {
        NSURL* file_url = nil;
        NSError* file_error = nil;

        // Create a temporary array containing the file extensions we want to handle.
        // Note: This list is not exhaustive of all the types Core Audio can handle.
        NSArray* file_extension_array = [[NSArray alloc]
            initWithObjects:@"caf", @"wav", @"aac", @"mp3", @"aiff", @"mp4", @"m4a", nil];

        for(NSString* file_extension in file_extension_array)
        {
            // We need to first check to make sure the file exists;
            // otherwise pathForResource:ofType: returns nil, and NSURL's
            // initFileURLWithPath: raises an exception when passed nil.
            NSString* full_file_name = [NSString stringWithFormat:@"%@/%@.%@",
                [[NSBundle mainBundle] resourcePath], sound_file_basename, file_extension];
            if(YES == [[NSFileManager defaultManager] fileExistsAtPath:full_file_name])
            {
                file_url = [[[NSURL alloc] initFileURLWithPath:[[NSBundle mainBundle]
                    pathForResource:sound_file_basename ofType:file_extension]] autorelease];
                break;
            }
        }
        [file_extension_array release];

        if(nil == file_url)
        {
            NSLog(@"Failed to locate audio file with basename: %@", sound_file_basename);
            // Release the allocated instance so it does not leak when we bail out early.
            [self release];
            return nil;
        }

        self = [super init];
        if(nil != self)
        {
            avStreamPlayer = [[AVAudioPlayer alloc] initWithContentsOfURL:file_url
                error:&file_error];
            if(file_error)
            {
                NSLog(@"Error loading stream file: %@", [file_error localizedDescription]);
            }
            avStreamPlayer.delegate = self;

            // Optional: Presumably, the player will start buffering now instead of on play.
            [avStreamPlayer prepareToPlay];
        }
        return self;
    }

    - (void) play
    {
        [self.avStreamPlayer play];
    }

    - (void) pause
    {
        [self.avStreamPlayer pause];
    }

    - (void) stop
    {
        [self.avStreamPlayer stop];
    }

    - (void) setNumberOfLoops:(NSInteger)number_of_loops
    {
        self.avStreamPlayer.numberOfLoops = number_of_loops;
    }

    - (NSInteger) numberOfLoops
    {
        return self.avStreamPlayer.numberOfLoops;
    }

    - (void) setVolume:(float)volume_level
    {
        self.avStreamPlayer.volume = volume_level;
    }

    - (float) volume
    {
        return self.avStreamPlayer.volume;
    }

    - (void) dealloc
    {
        [avStreamPlayer release];
        [super dealloc];
    }

#pragma mark AVAudioPlayer delegate methods

- (void) audioPlayerDidFinishPlaying:(AVAudioPlayer*)which_player

successfully:(BOOL)the_flag

{

}

- (void) audioPlayerDecodeErrorDidOccur:(AVAudioPlayer*)the_player

error:(NSError*)the_error

{

NSLog(@"AVAudioPlayer audioPlayerDecodeErrorDidOccur: %@", [the_error

localizedDescription]);

}

- (void) audioPlayerBeginInterruption:(AVAudioPlayer*)which_player

{

}

- (void) audioPlayerEndInterruption:(AVAudioPlayer*)which_player

{

[which_player play];

}

@end

We probably should do something interesting with audioPlayerDidFinishPlaying:successfully: to integrate it with the rest of the game engine, similar to what we did with the OpenAL resource manager. But since this example is concerned only with playing background music, which we will infinitely loop, we won't worry about that here.

Now for the integration. Remember to add AVFoundation.framework to the project so it can be linked. In BBConfiguration.h, we'll add our background music file. Prior to this point, I've been making my own sound effects, or in a few instances, finding public domain stuff on the Internet. But creating a good soundtrack exceeds my talents, and it is very hard to find one in the public domain or free for commercial use. Fortunately for us, Ben Smith got permission from the musician he used for Snowferno to reuse one of his soundtracks for Space Rocks! for our book. Please do not use this song for your own projects, as this permission does not extend beyond this book's example.

Attribution Credits: Music by Michael Shaieb © Copyright 2009 FatLab Music From "Snowferno" for iPhone/iPod touch

Also, for demonstration purposes, I have compressed the soundtrack using AAC into an .m4a container file. This is to demonstrate that we can use restricted compression formats for our audio files in our game. Chapter 11 covered audio file formats. Remember that the hardware decoder can decode only one compressed file at a time. While compressing the soundtrack, I also downsampled it to 22 kHz to match the sample rate of our other audio files for performance. You'll find the file D-ay-Z-ray_mix_090502.m4a in the completed project. Make sure to add it to your project if you are following along.

Add this line to BBConfiguration.h:

#define BACKGROUND_MUSIC @"D-ay-Z-ray_mix_090502"

In BBSceneController.h, we're going to add a new instance variable:

@class AVPlaybackSoundController;

@interface BBSceneController : NSObject <EWSoundCallbackDelegate> {

...

AVPlaybackSoundController* backgroundMusicPlayer;

}

In BBSceneController.m, we are going to create a new instance of the backgroundMusicPlayer in the init method. We want to use our background music file and set the player to infinitely loop. To avoid overwhelming all the other audio, we will reduce the volume of the music. Once this is all set up, we tell the player to play.

- (id) init

{

self = [super init];

if(nil != self)

{

SetPreferredSampleRate(22050.0);

[[OpenALSoundController sharedSoundController] setSoundCallbackDelegate:self];

[self invokeLoadResources];

[[OpenALSoundController sharedSoundController]

setDistanceModel:AL_INVERSE_DISTANCE_CLAMPED];

[[OpenALSoundController sharedSoundController] setDopplerFactor:1.0];

[[OpenALSoundController sharedSoundController] setSpeedOfSound:343.3];

backgroundMusicPlayer = [[AVPlaybackSoundController alloc]

initWithSoundFile:BACKGROUND_MUSIC];

backgroundMusicPlayer.numberOfLoops = -1; // loop music

backgroundMusicPlayer.volume = 0.5;

}

return self;

}

Conversely, in our dealloc method, we should remember to stop and release the player for good measure.

[backgroundMusicPlayer stop];

[backgroundMusicPlayer release];

And, of course, we need to actually start playing the music. We could do this in init, but the startScene method seems to be more appropriate. So add the following line there:

[backgroundMusicPlayer play];

Finally, in the audio session initialization code in our OpenALSoundController class, we should switch the mode from Ambient to Solo Ambient, because we are using an .m4a file for our background music, and we would like to minimize the burden on the CPU by using the hardware decoder. If we don't do this, the music will play using the software decoder, which will work but could significantly degrade the performance of Space Rocks!, since the game already pushes the CPU pretty hard, even without audio. And it doesn't make a lot of sense to keep using Ambient mode, which would allow mixing iPod music, now that we have our own soundtrack. Alternatively, we could avoid using a restricted compression format for our audio and not worry about this. But since we have an idle hardware decoding unit and can benefit from higher compression, we will exploit these capabilities. The remaining streaming examples will also switch to Solo Ambient for these same reasons.

Find our call to InitAudioSession in OpenALSoundController.m's init method, and change the first parameter to kAudioSessionCategory_SoloAmbientSound.

InitAudioSession(kAudioSessionCategory_SoloAmbientSound, MyInterruptionCallback, self, PREFERRED_SAMPLE_OUTPUT_RATE);

Congratulations! Not only do you have background music, but you have also learned all the basic essential elements in creating a complete game solution with respect to audio. Many developers will often be satisfied here. They can play OpenAL sound effects and stream audio easily with AVFoundation.

But I encourage you to stick around for the next section on the OpenAL streaming solution. While it's more difficult, you'll continue to get features such as spatialized sound. You'll also be able to reuse more infrastructure, so things will integrate more smoothly, instead of having two disparate audio systems that don't really talk to each other. For example, we currently do nothing with AVAudioPlayer's audioPlayerDidFinishPlaying:successfully: method because we would have to think about how it would integrate with the rest of the system. We already defined all those behaviors for our OpenAL system, so there is less ambiguity on how we should handle it with OpenAL streaming.

Before we jump into the technical specifics of how OpenAL handles streaming, it may be a useful thought exercise to imagine how you might accomplish streaming with what you already know. Let's start with the three fundamental objects in OpenAL: buffers, sources, and listeners. Listeners are irrelevant to this discussion, so we can ignore them. It's going to come down to buffers and sources.

Conceptually, streaming is just playing small buffers sequentially. You use small buffers so you don't consume too much RAM trying to load a whole giant file.

To implement streaming with what you already know, you might try something like this (pseudo code):

while( StillHaveDataInAudioFile(music_file) )

{

pcm_data = GetSmallBufferOfPCMDataFromFile(music_file);

alBufferData(al_buffer, pcm_data, ...); // copy the data into an OpenAL buffer

alSourcei(al_source, AL_BUFFER, al_buffer); // attach OpenAL Buffer to OpenAL source

alSourcePlay(al_source);

MagicFunctionThatWaitsForPlayingToStop();

}

In this pseudo code, we read a small chunk of data from a file, copy the data into OpenAL, and then play the data. Once the playback ends, we repeat the process until we have no more audio data.

In a theoretical world, this code could work. But in the real world, it has a fatal flaw: latency. Once the playback ends, you need to load the next chunk of data and start playing it before the user perceives a pause in the playback. If you have a very fast, low-latency computer, you might get away with this, but probably not.

You could try to improve on this function by using a double-buffer technique (often employed in graphics). You would create two OpenAL buffers. While the source is playing, you could start reading and copying the next chunk of data. Then when the playback ends, you can immediately feed the source the next buffer. You still have a latency risk, in that you might not be able to call play fast enough, but your overall latency has been greatly reduced. To mitigate the case of being overloaded and falling behind, you could simply extend the number of buffers you attempt to preload. Instead of having two buffers, try ten or a hundred.

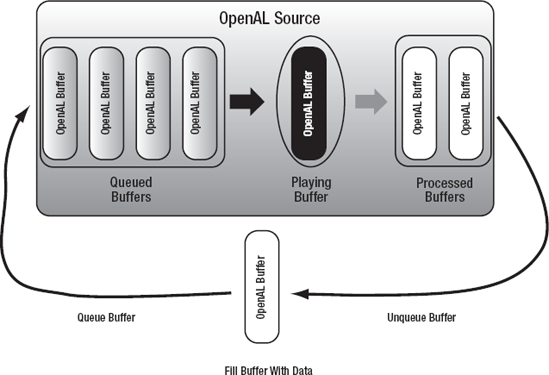

Fortunately, OpenAL solves this last remaining problem by providing several additional API functions that allow you to queue buffers to a playing (or stopped) source. That way, you don't need to worry about swapping the buffer at the perfect time and calling play to restart. OpenAL will do that on your behalf behind the scenes. This is a reasonably elegant design, in that you don't need to introduce any new object types. You remain with just buffers, sources, and listeners. Figure 12-6 illustrates how buffer queuing works in OpenAL.

Figure 12-6. The OpenAL buffer queuing life cycle. OpenAL allows you to queue OpenAL buffers on an OpenAL source. The source will play a buffer, and when finished, it will mark the buffer as processed. The source will then automatically start playing the next buffer in the queue. To keep the cycle going, you should reclaim the processed buffers, fill them with new audio data, and queue them again to the source. Note that you are permitted to have multiple OpenAL sources doing buffer queuing.

That said, there is some grunt work and bookkeeping you must do. You will need to manage multiple buffers, and find opportune times to fill the buffers with new data and add them to the queue on your designated source(s). You are also required to unqueue[30] buffers from your source(s) when OpenAL is done with them (called processed buffers).
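To make the life cycle concrete before looking at real OpenAL calls, here is a minimal simulation in plain C. It does not call OpenAL at all: SimSource and the sim_* functions are hypothetical stand-ins invented for this sketch, and the fixed queue capacity is an assumption. It only models the rule that buffers are played in FIFO order and that only processed buffers may be unqueued.

```c
#include <assert.h>
#include <stddef.h>

#define SIM_MAX_QUEUED 8  /* arbitrary capacity chosen for this sketch */

/* Hypothetical stand-in for an OpenAL source; not part of the OpenAL API. */
typedef struct {
    unsigned int queue[SIM_MAX_QUEUED]; /* buffer ids in FIFO order */
    size_t head;       /* index of the oldest buffer still on the queue */
    size_t queued;     /* buffers on the queue (processed + not yet played) */
    size_t processed;  /* buffers the source has finished playing */
} SimSource;

static void sim_init(SimSource *s)
{
    s->head = 0;
    s->queued = 0;
    s->processed = 0;
}

/* Append a filled buffer to the back of the queue. Returns 0 on success. */
static int sim_queue_buffer(SimSource *s, unsigned int buffer_id)
{
    if (s->queued == SIM_MAX_QUEUED) return -1;
    s->queue[(s->head + s->queued) % SIM_MAX_QUEUED] = buffer_id;
    s->queued++;
    return 0;
}

/* Pretend the source finished playing one buffer, which marks it processed.
   Returns -1 when nothing is left to play (the underrun case). */
static int sim_finish_one(SimSource *s)
{
    if (s->processed == s->queued) return -1;
    s->processed++;
    return 0;
}

/* Reclaim the oldest processed buffer so it can be refilled and requeued. */
static int sim_unqueue_buffer(SimSource *s, unsigned int *buffer_id)
{
    if (s->processed == 0) return -1; /* only processed buffers may be removed */
    *buffer_id = s->queue[s->head];
    s->head = (s->head + 1) % SIM_MAX_QUEUED;
    s->queued--;
    s->processed--;
    return 0;
}
```

In use, the application side of the cycle is: queue some filled buffers, let the source play, then repeatedly unqueue whatever has been processed, refill it, and queue it again before the source runs dry.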

Because there is enough going on, I felt it would be better to start with a simple isolated example before trying to integrate streaming into Space Rocks! We will start with a new project. The completed project for this example is called BasicOpenALStreaming. The project has a UI that is similar to the interface we constructed in Chapter 9 for AVPlayback, but even simpler (see Figure 12-7).

In this example, we will just play music and forgo the speech player. The play button will play and pause the music. For interest, we will add a volume slider. The volume slider is connected to the OpenAL listener gain, so we are using it like a master volume control.

All the important code is contained in the new class OpenALStreamingController. Much of this class should look very familiar to you, as it is mostly a repeat of the first OpenAL examples in Chapter 10 (i.e., initialize an audio session, create a source, and so on).

Following the pattern we used for OpenALSoundController in Chapter 10, let's examine the changes we need to make for OpenALStreamingController.m's initOpenAL method.

- (void) initOpenAL

{

openALDevice = alcOpenDevice(NULL);

if(openALDevice != NULL)

{

// Use the Apple extension to set the mixer rate

alcMacOSXMixerOutputRate(44100.0);

// Create a new OpenAL context

// The new context will render to the OpenAL device just created

openALContext = alcCreateContext(openALDevice, 0);

if(openALContext != NULL)

{

// Make the new context the current OpenAL context

alcMakeContextCurrent(openALContext);

}

else

{

NSLog(@"Error, could not create audio context.");

return;

}

}

else

{

NSLog(@"Error, could not get audio device.");

return;

}

alGenSources(1, &streamingSource);

alGenBuffers(MAX_OPENAL_QUEUE_BUFFERS, availableALBufferArray);

availableALBufferArrayCurrentIndex = 0;

// File is from Internet Archive Open Source Audio, US Army Band, public domain

// http://www.archive.org/details/TheBattleHymnOfTheRepublic_993

NSURL* file_url = [NSURL fileURLWithPath:[[NSBundle mainBundle]

pathForResource:@"battle_hymn_of_the_republic" ofType:@"mp3"]];

if(file_url)

{

streamingAudioRef = MyGetExtAudioFileRef((CFURLRef)file_url,

&streamingAudioDescription);

}

else

{

NSLog(@"Could not find file!");

streamingAudioRef = NULL;

}

intermediateDataBuffer = malloc(INTERMEDIATE_BUFFER_SIZE);

}

The only new/customized code is at the bottom of the method after the OpenAL context is initialized.

First, we use alGenSources to create an OpenAL source, which we will use to play our music. Next, we create five OpenAL buffers using the array version of alGenBuffers. The number of buffers was picked somewhat arbitrarily. We'll talk about picking the number a little later, but for now, just know that our program will have a maximum of five buffers queued at any one time.

Then we open the music file we are going to play. I am reusing the same file from the AVPlayback example in Chapter 9.

The first semi-new thing is that we call MyGetExtAudioFileRef. Recall that in our side quest in Chapter 10 when we loaded files into OpenAL using Core Audio, we deliberately broke up the function into three pieces, but we used only the third piece, MyGetOpenALAudioDataAll, which is built using the other two pieces. MyGetOpenALAudioDataAll loads the entire file into a buffer. But since we don't want to load the entire file for this streaming example, we need to use the two other pieces. The first piece, MyGetExtAudioFileRef, will just open the file using Core Audio (Extended File Services) and return a file handle (ExtAudioFileRef). We save this file reference in an instance variable, because we need to read the data from it later as we stream. We also have streamingAudioDescription as an instance variable, because we need to keep the audio description metadata around for the other function. (We'll get to the second piece a little later.)

Next, we allocate memory for a PCM buffer. This is the memory into which Extended File Services will decode file data so we can use it. I have hard-coded the buffer to be 32KB, which means this demo is going to stream audio data in 32,768-byte chunks.

That concludes our initialization. Let's now go to the heart of the program, which is in the animationCallback: method in OpenALStreamingController. This is where all the important stuff happens.

We need to do two things with OpenAL streaming: queue buffers to play and unqueue buffers that have finished playing (processed buffers). We will start with unqueuing (and reclaiming) the processed buffers. I like to do this first, because I can then turn around and immediately use that buffer again for the next queue.

First, OpenAL will let us query how many buffers have been processed using alGetSourcei with AL_BUFFERS_PROCESSED. In OpenALStreamingController.m's animationCallback: method, we need to have the following:

ALint buffers_processed = 0;

alGetSourcei(streamingSource, AL_BUFFERS_PROCESSED, &buffers_processed);

Next, we can write a simple while loop to unqueue and reclaim each processed buffer, one at a time.[31] To actually unqueue a buffer, OpenAL provides the function alSourceUnqueueBuffers. You provide it the source you want to unqueue from, the number of buffers you want to unqueue, and a pointer to where the unqueued buffer IDs will be returned.

while(buffers_processed > 0)

{

ALuint unqueued_buffer;

alSourceUnqueueBuffers(streamingSource, 1, &unqueued_buffer);

availableALBufferArrayCurrentIndex--;

availableALBufferArray[availableALBufferArrayCurrentIndex] = unqueued_buffer;

buffers_processed--;

}

We keep an array of available buffers so we can use them in the next step. We use the array like a stack, hence the incrementing and decrementing of the availableALBufferArrayCurrentIndex. Don't get too hung up on this part. Just consider it an opaque data structure. Because we're doing a minimalist example, I wanted to avoid using Cocoa data structures or introducing my own. The next example will not be minimalist.
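For readers who do want to peek inside that opaque data structure, the following is a minimal sketch of the index scheme in plain C. BufferPool and the pool_* functions are hypothetical names invented here (the example itself uses bare instance variables), and the buffer IDs are ordinary integers rather than real OpenAL buffers. The invariant is that every slot from the current index to the end of the array holds a buffer that is free to use.

```c
#include <assert.h>

#define POOL_SIZE 5  /* mirrors MAX_OPENAL_QUEUE_BUFFERS in the example */

/* Hypothetical pool type for illustration only. */
typedef struct {
    unsigned int slots[POOL_SIZE];
    int next_available; /* slots[next_available .. POOL_SIZE-1] are free */
} BufferPool;

/* Start with every buffer available, as right after alGenBuffers. */
static void pool_init(BufferPool *p, const unsigned int ids[POOL_SIZE])
{
    for (int i = 0; i < POOL_SIZE; i++) {
        p->slots[i] = ids[i];
    }
    p->next_available = 0;
}

/* Nonzero when at least one buffer can still be queued. */
static int pool_has_available(const BufferPool *p)
{
    return p->next_available < POOL_SIZE;
}

/* Take a free buffer (done just before queuing it on the source). */
static unsigned int pool_take(BufferPool *p)
{
    assert(pool_has_available(p));
    return p->slots[p->next_available++];
}

/* Give back a buffer that was just unqueued from the source. */
static void pool_give_back(BufferPool *p, unsigned int id)
{
    assert(p->next_available > 0);
    p->slots[--p->next_available] = id;
}
```

Taking a buffer increments the index and giving one back decrements it, which is the same incrementing and decrementing described above, just wrapped in named functions.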

Now we are ready to queue a buffer. When we queue a buffer, we need to load a chunk of the data from the file, get the data into OpenAL, and queue it to the OpenAL source. We are going to queue only one buffer in a single function pass. The idea is that this function will be called repeatedly (30 times a second or so). The assumption is the function call rate will be faster than OpenAL can use up the buffer, so there will always be multiple buffers queued in a given moment, up to some maximum number of queue buffers that we define. For this example, MAX_OPENAL_QUEUE_BUFFERS is hard-coded as follows:

#define MAX_OPENAL_QUEUE_BUFFERS 5

We continue adding code to animationCallback: in OpenALStreamingController.m. The first thing we do is check to make sure we have available buffers to queue. If not, then we don't need to do anything.

if(availableALBufferArrayCurrentIndex < MAX_OPENAL_QUEUE_BUFFERS)

{

Once we establish we need to queue a new buffer, we do it.

ALuint current_buffer = availableALBufferArray[availableALBufferArrayCurrentIndex];

ALsizei buffer_size;

ALenum data_format;

ALsizei sample_rate;

MyGetDataFromExtAudioRef(streamingAudioRef, &streamingAudioDescription,

INTERMEDIATE_BUFFER_SIZE, &intermediateDataBuffer, &buffer_size,

&data_format, &sample_rate);

if(0 == buffer_size) // will loop music on EOF (which is 0 bytes)

{

MyRewindExtAudioData(streamingAudioRef);

MyGetDataFromExtAudioRef(streamingAudioRef, &streamingAudioDescription,

INTERMEDIATE_BUFFER_SIZE, &intermediateDataBuffer, &buffer_size,

&data_format, &sample_rate);

}

alBufferData(current_buffer, data_format, intermediateDataBuffer, buffer_size,

sample_rate);

alSourceQueueBuffers(streamingSource, 1, &current_buffer);

availableALBufferArrayCurrentIndex++;

We use our MyGetDataFromExtAudioRef function (which is our second piece of the Core Audio file loader from Chapter 10) to fetch a chunk of data from our file. We provide the file handle and the audio description, which you can consider as opaque data types for Core Audio. We provide the buffer size and buffer to which we want the data copied. The function will return by reference the amount of data we actually get back, the OpenAL data format, and the sample rate. We can then feed these three items directly into alBufferData. We use alBufferData for simplicity in this example. For performance, we should consider using alBufferDataStatic, since this function is going to be called a lot, and we are going to be streaming in the middle of game play where performance is more critical. We will change this in the next example.

Finally, we call OpenAL's function alSourceQueueBuffers to queue the buffer. We specify the source, the number of buffers, and an array containing the buffer IDs.

There is a corner case handled in the preceding code. If we get 0 bytes back from MyGetDataFromExtAudioRef, it means we hit the end of the file (EOF). For this example, we want to loop the music, so we call our custom helper function MyRewindExtAudioData, which rewinds the file pointer to the beginning. We then grab the data again. If you were thinking you could use OpenAL's built-in loop functionality, this won't work. OpenAL doesn't have access to the full data anymore, since we broke everything into small pieces and unqueued the old data (the beginning of the file). We must implement looping ourselves.
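The rewind-on-EOF pattern can be sketched on its own, away from Core Audio. The version below is an assumption-laden stand-in: PcmStream is a hypothetical in-memory replacement for the ExtAudioFileRef, stream_read plays the role of MyGetDataFromExtAudioRef, and resetting the position plays the role of MyRewindExtAudioData. It also makes the looping behavior optional through a should_loop flag, which the basic example hard-codes.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical in-memory stand-in for the decoded audio file. */
typedef struct {
    const unsigned char *data;
    size_t size;
    size_t position;
} PcmStream;

/* Copy up to max_bytes into out; returns the bytes copied (0 means EOF). */
static size_t stream_read(PcmStream *s, unsigned char *out, size_t max_bytes)
{
    size_t remaining = s->size - s->position;
    size_t count = remaining < max_bytes ? remaining : max_bytes;
    memcpy(out, s->data + s->position, count);
    s->position += count;
    return count;
}

/* Read the next chunk. On EOF, either rewind and read again (looping)
   or report 0 bytes so the caller knows the stream has ended. */
static size_t stream_read_looping(PcmStream *s, unsigned char *out,
                                  size_t max_bytes, int should_loop)
{
    size_t count = stream_read(s, out, max_bytes);
    if (count == 0 && should_loop) {
        s->position = 0; /* rewind to the beginning of the data */
        count = stream_read(s, out, max_bytes);
    }
    return count;
}
```

Note that the last chunk before EOF is usually shorter than the requested size, which is why the number of bytes actually read, not the size of the intermediate buffer, is what gets passed along to alBufferData.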

We've now queued the buffer, but there is one more step we may need to take. It could happen that we were not quick enough in queuing more data, and OpenAL ran out of data to play. If OpenAL runs out of data to play, it must stop playing. This makes sense, because OpenAL can't know whether we were too slow at queuing more data or we actually finished playing (and don't plan to loop). I call the case where we were too slow a buffer underrun.

To remedy the potential buffer underrun case, our task is to find out if the OpenAL source is still playing. If it is not playing, we need to determine if it is because of a buffer underrun or because we don't want to play (e.g., finished playing, wanted to pause, etc.).

We will use alGetSourcei with AL_SOURCE_STATE to find out whether the source is playing. If it is not, we use alGetSourcei again with AL_BUFFERS_QUEUED to get the number of buffers queued, which helps determine if we are in a buffer underrun situation. (We will have at least one queued buffer now, since we just added one in the last step.) Then we make sure the user didn't pause the player, which would be a legitimate reason for not playing even though we have buffers queued. If we determine we should, in fact, be playing, we simply call alSourcePlay.

ALenum current_playing_state;

alGetSourcei(streamingSource, AL_SOURCE_STATE, &current_playing_state);

// Handle buffer underrun case

if(AL_PLAYING != current_playing_state)

{

ALint buffers_queued = 0;

alGetSourcei(streamingSource, AL_BUFFERS_QUEUED, &buffers_queued);

if(buffers_queued > 0 && NO == self.isStreamingPaused)

{

// Need to restart play

alSourcePlay(streamingSource);

}

}

We use alSourcePause for the first time in this example. It probably behaves exactly as you think it does, so there is not much to say about it.

You may be wondering why we removed the fast-forward and rewind buttons from the AVPlayback example. The reason is that seeking is more difficult with OpenAL streaming than it is with AVAudioPlayer.

OpenAL does have support for seeking. There are three different attributes you can use with alSource* and alGetSource*: AL_SEC_OFFSET, AL_SAMPLE_OFFSET, and AL_BYTE_OFFSET. These deal with the positions in seconds, samples, or bytes. With fully loaded buffers, seeking is pretty easy. But with streaming, you will need to do some additional bookkeeping.

The problem is that with streaming, OpenAL can seek only within the range of what is currently queued. If you seek to something that is outside that range, it won't work. In addition, you need to make sure your file handle is kept in sync with your seek request; otherwise, you will be streaming new data in at the wrong position. Thus, you really need to seek at the file level instead of the OpenAL level.

Generally, emptying the current queue and rebuffering it at the new position is the easiest solution. (If you don't empty the queue, you may have a lag between when the users requested a seek and when they actually hear it.) But, of course, as you've just emptied your queue and need to start over, you may have introduced some new latency issues. For this kind of application, probably no one will care though. For simplicity, this was omitted from the example. For the curious, notice that our MyRewindExtAudioData function wraps ExtAudioFileSeek. This is what you'll probably want to use to seek using Extended File Services.
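If you do add seeking, one small piece of the bookkeeping is converting a time in seconds into the sample-frame offset that ExtAudioFileSeek expects. The helper below is a hypothetical sketch of that conversion only; it does not call Core Audio, and the function name and the clamping policy are my own assumptions. My understanding is that the offset must be expressed at the file's own sample rate, so check this against the Extended Audio File Services documentation before relying on it.

```c
#include <assert.h>

/* Convert a seek target in seconds to a sample-frame offset, clamped to
   the range [0, total_frames]. Hypothetical helper for illustration. */
static long long frame_offset_for_seconds(double seconds,
                                          double file_sample_rate,
                                          long long total_frames)
{
    assert(file_sample_rate > 0.0 && total_frames >= 0);
    if (seconds <= 0.0) {
        return 0; /* seeking before the start lands on the first frame */
    }
    double target = seconds * file_sample_rate;
    if (target >= (double)total_frames) {
        return total_frames; /* seeking past the end lands on EOF */
    }
    return (long long)target;
}
```

After seeking the file to the resulting offset, you would stop the source, unqueue everything, and refill the queue from the new position, as described above.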

Recall that in this example, we fill only one buffer per update loop pass. This means the game loop must be run through at least five times before we can fill our queue to our designated maximum of MAX_OPENAL_QUEUE_BUFFERS. This brings up the question, "What is the best strategy for filling the queue?"

There are two extremes to consider. The first extreme is what we did in the example. We fill a single buffer and move on. The advantages of this strategy are that it is easy to implement and it has a cheap startup cost. It allows us to spread the buffer fill-up cost over a longer period of time. The disadvantage is that when we first start up, we don't have a safety net. We are particularly vulnerable to an untimely system usage spike that prevents us from being able to queue the next buffer in time.

The other extreme is that we fill up our queue to our designated maximum when we start playing. So, in this example, rather than filling just one buffer, we would fill up five buffers. While this gives us extra protection from starvation, we have a trade-off of a longer startup cost. This may manifest itself as a performance hiccup in the game if the startup cost is too great. For example, the player may fire a weapon that we tied to streaming. If we spend too much time up front to load more buffers, the user may perceive a delay between the time he touched the fire button and when the weapon actually seemed to fire in the game.

You will need to experiment to find the best pattern for you and your game. You might consider hybrids of the two extremes, where on startup, you queue two or three buffers instead of the maximum. You might also consider varying the buffer sizes and the number of buffers you queue based on metrics such as how empty the queue is or how taxed the CPU is. And, of course, multithreading/concurrency can be used, too.
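One way to express such a hybrid is a small policy function that decides how many buffers to fill on each update pass. This is only a sketch under assumptions: buffers_to_fill and its parameters are hypothetical names, and treating an empty queue as the signal for "starting up or recovering from an underrun" is my own simplification, not something the example code does.

```c
#include <assert.h>

/* Decide how many buffers to fill on this update pass.
   available:       buffers currently free to be filled
   queued:          buffers currently queued on the source
   startup_prefill: how many buffers to fill at once when the queue is empty */
static int buffers_to_fill(int available, int queued, int startup_prefill)
{
    assert(available >= 0 && queued >= 0 && startup_prefill >= 1);
    if (available == 0) {
        return 0; /* queue is already at its maximum */
    }
    if (queued == 0) {
        /* Empty queue: pay a larger up-front cost to build a safety net. */
        return available < startup_prefill ? available : startup_prefill;
    }
    return 1; /* steady state: spread the cost, one buffer per pass */
}
```

With a prefill of 1, this reduces to the single-buffer strategy used in the example; with a prefill equal to the maximum queue size, it becomes the fill-everything extreme.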

Finally, you may be wondering why we picked five buffers and made our buffers 32KB. This is somewhat of a guessing game. You generally need to find values that give good performance for your case. You are trying to balance different things.

The buffer size will affect how long it takes to load new data from the file. Larger buffers take more time. If you take too much time, you will starve the queue, because you didn't get data into the queue fast enough. In my personal experience, I have found 1KB to 64KB to be the range for buffer sizes. More typically, I tend to deal with 8KB to 32KB. Apple seems to like 16KB to 64KB. Some of Apple's examples include a CalculateBytesForTime function, which will dynamically decide the size based on some criteria. (It's worth a look.)

I also like powers of two because of a hard-to-reproduce bug I had way back with the Loki OpenAL implementation. When not using power-of-two buffer sizes, I had certain playback problems. That implementation is dead now, but the habit stuck with me. Some digital signal processing routines, such as the Fast Fourier Transform (FFT), often prefer arrays in power-of-two sizes for performance reasons. So it doesn't hurt.[32]

As for the number of buffers, you basically want enough to prevent buffer underruns. The more buffers you have queued, the less likely it will be that you deplete the queue before you can add more. However, if you have too many buffers, you are wasting memory. The point of streaming is to save memory. And, obviously, there is a relationship with the buffer size. Larger buffer sizes will last longer, so you need fewer buffers.
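To reason about the relationship between buffer size and buffer count, it helps to convert bytes into playback time. The functions below are a hypothetical sketch of that arithmetic for uncompressed linear PCM; the function names are invented here, and the 16-bit stereo format used in the follow-up numbers is an assumption, since the actual format depends on what the file decodes to.

```c
#include <assert.h>
#include <stddef.h>

/* Seconds of audio held by one linear PCM buffer of the given size. */
static double pcm_buffer_seconds(size_t buffer_bytes, double sample_rate,
                                 int channels, int bits_per_sample)
{
    assert(sample_rate > 0.0 && channels > 0 && bits_per_sample > 0);
    double bytes_per_second = sample_rate * channels * (bits_per_sample / 8.0);
    return (double)buffer_bytes / bytes_per_second;
}

/* Seconds of audio held by a full queue of equally sized buffers. */
static double pcm_queue_seconds(size_t buffer_bytes, int buffer_count,
                                double sample_rate, int channels,
                                int bits_per_sample)
{
    return buffer_count * pcm_buffer_seconds(buffer_bytes, sample_rate,
                                             channels, bits_per_sample);
}
```

Assuming 16-bit stereo at 44,100 Hz, a 32KB buffer holds roughly 0.19 seconds of audio, so a full queue of five buffers covers a little under one second. At about 30 update passes per second, a single buffer lasts between five and six passes, which is the margin you have before an underrun when only one buffer is queued.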

Also, you might think about the startup number of buffers. In our example, we queue only one buffer to start with. One consequence of that is we must make our buffer larger to avoid early starvation. If we had queued more buffers, the buffer size could be smaller.

This is the moment you've been waiting for. Now that you understand OpenAL buffer queuing, let's integrate it into Space Rocks!, thereby completing our game engine.

We will again use SpaceRocksOpenAL3D_6_SourceRelative from the previous chapter as our starting point for this example. The completed project for this example is SpaceRocksOpenALStreaming1. (This version does not include the changes we made for background music using Media Player framework or AVFoundation. This will be an exclusively OpenAL project.)

The core changes will occur in two locations. We will need to add streaming support to our update loop in OpenALSoundController, and we need a new class to encapsulate our stream buffer data.

Let's start with the new class. We will name it EWStreamBufferData and create .h and .m files for it. The purpose of this class is analogous to the EWSoundBufferData class we made earlier, except it will be for streamed data.

@interface EWStreamBufferData : NSObject

{

ALenum openalFormat;

ALsizei dataSize;

ALsizei sampleRate;

In Chapter 10, we implemented a central/shared database of audio files with soundFileDictionary and EWSoundBufferData. We will not be repeating that pattern with EWStreamBufferData. This is because it makes no sense to share streamed data. With fully loaded sounds, we can share the explosion sound between the UFO and the spaceship. But with streamed data, we can't do this because we have only a small chunk of the data in memory at any given time. So, in the case of the explosion sound, the spaceship might be starting to explode while the UFO is ending its explosion. Each needs a different buffer segment in the underlying sound file. For streamed data, if both the UFO and spaceship use the same file, they still need to have separate instances of EWStreamBufferData. Because of the differences between the two classes, I have opted to not make EWStreamBufferData a subclass of EWSoundBufferData. You could do this if you want, but you would need to modify the existing code to then be aware of the differences.

This class will contain the multiple OpenAL buffers needed for buffer queuing. In the previous example, we used five buffers. This time, we will use 32. (I'll explain the increase in buffers later.) We will use the alBufferDataStatic extension for improved performance, so we also need 32 buffers for raw PCM data.

#define EW_STREAM_BUFFER_DATA_MAX_OPENAL_QUEUE_BUFFERS 32

ALuint openalDataBufferArray[EW_STREAM_BUFFER_DATA_MAX_OPENAL_QUEUE_BUFFERS];

void* pcmDataBufferArray[EW_STREAM_BUFFER_DATA_MAX_OPENAL_QUEUE_BUFFERS];

We will also need to keep track of which buffers are queued and which are available. In the previous example, I apologized for being too minimalist for using a single C array. This time, we will use two NSMutableArrays for more clarity.

NSMutableArray* availableDataBuffersQueue;

NSMutableArray* queuedDataBuffersQueue;

This class will also contain the ExtAudioFileRef (file handle) to the audio file (and AudioStreamBasicDescription) so we can load data into the buffers from the file as we need it.

ExtAudioFileRef streamingAudioRef;

AudioStreamBasicDescription streamingAudioDescription;

We also have a few properties. We have a streamingPaused property in case we need to pause the audio, which will allow us to disambiguate from a buffer underrun, as in the previous example. (We will not actually pause the audio in our game.) We add audioLooping so it can be an option instead of hard-coded. And we add an atEOF property so we can record if we hit the end of file.

BOOL audioLooping;

BOOL streamingPaused;

BOOL atEOF;

}

@property(nonatomic, assign, getter=isAudioLooping) BOOL audioLooping;

@property(nonatomic, assign, getter=isStreamingPaused) BOOL streamingPaused;

@property(nonatomic, assign, getter=isAtEOF) BOOL atEOF;

We will also add three methods:

+ (EWStreamBufferData*) streamBufferDataFromFileBaseName:(NSString*)sound_file_basename;

- (EWStreamBufferData*) initFromFileBaseName:(NSString*)sound_file_basename;

- (BOOL) updateQueue:(ALuint)streaming_source;

@end

The initFromFileBaseName: instance method is our designated initializer, which sets the file to be used for streaming. For convenience, we have a class method named streamBufferDataFromFileBaseName:, which ultimately does the same thing as initFromFileBaseName:, except that it returns an autoreleased object (as you would expect, following standard Cocoa naming conventions).

You might have noticed that this design contrasts slightly with the method soundBufferDataFromFileBaseName:, which we placed in OpenALSoundController instead of EWSoundBufferData. The main reason is that for EWSoundBufferData objects, we had a central database to allow resource sharing. Since OpenALSoundController is a singleton, it was convenient to put the central database there. EWStreamBufferData differs in that we won't have a central database. Since this class is relatively small, I thought we might take the opportunity to relieve the burden on OpenALSoundController. Ultimately, the two classes are going to work together, so it doesn't matter too much. But for symmetry, we will add a convenience method to OpenALSoundController named streamBufferDataFromFileBaseName:, which just calls the one in this class.

The updateQueue: method is where we are going to do most of the unqueuing and queuing work. We could do this in the OpenALSoundController, but as you saw, the code is a bit lengthy. So again, I thought we might take the opportunity to relieve the burden on OpenALSoundController.

Finally, because we are using alBufferDataStatic, we want an easy way to keep our PCM buffer associated with our OpenAL buffer, particularly with our NSMutableArray queues. We introduce a helper class that just encapsulates both buffers so we know they belong together.

@interface EWStreamBufferDataContainer : NSObject

{

ALuint openalDataBuffer;

void* pcmDataBuffer;

}

@property(nonatomic, assign) ALuint openalDataBuffer;

@property(nonatomic, assign) void* pcmDataBuffer;

@end

In our initialization code for EWStreamBufferData, we need to allocate a bunch of memory: 32 OpenAL buffers (via alGenBuffers), 32 PCM buffers (via malloc), and 2 NSMutableArrays, which are going to mirror the buffer queue state by recording which buffers are queued and which are available. Let's first look at the code related to initialization:

@implementation EWStreamBufferData

@synthesize audioLooping;

@synthesize streamingPaused;

@synthesize atEOF;

- (void) createOpenALBuffers

{

for(NSUInteger i=0; i<EW_STREAM_BUFFER_DATA_MAX_OPENAL_QUEUE_BUFFERS; i++)

{

pcmDataBufferArray[i] = malloc(EW_STREAM_BUFFER_DATA_INTERMEDIATE_BUFFER_SIZE);

}

alGenBuffers(EW_STREAM_BUFFER_DATA_MAX_OPENAL_QUEUE_BUFFERS, openalDataBufferArray);

availableDataBuffersQueue = [[NSMutableArray alloc]

initWithCapacity:EW_STREAM_BUFFER_DATA_MAX_OPENAL_QUEUE_BUFFERS];

queuedDataBuffersQueue = [[NSMutableArray alloc]

initWithCapacity:EW_STREAM_BUFFER_DATA_MAX_OPENAL_QUEUE_BUFFERS];

for(NSUInteger i=0; i<EW_STREAM_BUFFER_DATA_MAX_OPENAL_QUEUE_BUFFERS; i++)

{

EWStreamBufferDataContainer* stream_buffer_data_container =

[[EWStreamBufferDataContainer alloc] init];

stream_buffer_data_container.openalDataBuffer = openalDataBufferArray[i];

stream_buffer_data_container.pcmDataBuffer = pcmDataBufferArray[i];

[availableDataBuffersQueue addObject:stream_buffer_data_container];

[stream_buffer_data_container release];

}

}

- (id) init

{

self = [super init];

if(nil != self)

{

[self createOpenALBuffers];

}

return self;

}

- (EWStreamBufferData*) initFromFileBaseName:(NSString*)sound_file_basename

{

self = [super init];

if(nil != self)

{

[self createOpenALBuffers];

NSURL* file_url = nil;

// Create a temporary array containing all the file extensions we want to handle.

// Note: This list is not exhaustive of all the types Core Audio can handle.

NSArray* file_extension_array = [[NSArray alloc]

initWithObjects:@"caf", @"wav", @"aac", @"mp3", @"aiff", @"mp4", @"m4a", nil];

for(NSString* file_extension in file_extension_array)

{

// We need to first check to make sure the file exists;

// otherwise pathForResource:ofType: returns nil, and NSURL's initFileURLWithPath: raises an exception when passed nil

NSString* full_file_name = [NSString stringWithFormat:@"%@/%@.%@",

[[NSBundle mainBundle] resourcePath], sound_file_basename, file_extension];

if(YES == [[NSFileManager defaultManager] fileExistsAtPath:full_file_name])

{

file_url = [[NSURL alloc] initFileURLWithPath:[[NSBundle mainBundle]

pathForResource:sound_file_basename ofType:file_extension]];

break;

}

}

[file_extension_array release];

if(nil == file_url)

{

NSLog(@"Failed to locate audio file with basename: %@", sound_file_basename);

[self release];

return nil;

}

streamingAudioRef = MyGetExtAudioFileRef((CFURLRef)file_url,

&streamingAudioDescription);

[file_url release];

if(NULL == streamingAudioRef)

{

NSLog(@"Failed to load audio data from file: %@", sound_file_basename);

[self release];

return nil;

}

}

return self;

}

+ (EWStreamBufferData*) streamBufferDataFromFileBaseName:(NSString*)sound_file_basename

{

return [[[EWStreamBufferData alloc] initFromFileBaseName:sound_file_basename] autorelease];

}

Let's examine the initFromFileBaseName: method. The first part of the method is just a copy-and-paste of our file-finding code that tries to guess file extensions. The following is the only important line in this method:

streamingAudioRef = MyGetExtAudioFileRef((CFURLRef)file_url, &streamingAudioDescription);

This opens our file and returns the file handle and AudioStreamBasicDescription.

The streamBufferDataFromFileBaseName: convenience class method just invokes the initFromFileBaseName: instance method.

Finally, in the createOpenALBuffers method, the final for loop fills our availableDataBuffersQueue with our EWStreamBufferDataContainer wrapper objects. That way, it is easy to get at both the PCM buffer and the OpenAL buffer from the array object.

You might be wondering why we have two arrays when the previous example had only one. With two arrays, we can easily know if a buffer is queued or available. We are doing a little extra work here by mirroring (or shadowing) the OpenAL state, but it's not much more work.
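To make the mirroring idea concrete, here is a minimal sketch in plain C, with no OpenAL calls, of two FIFO lists that shadow a source's buffer queue. The names `BufferPair`, `Fifo`, `mirror_queue`, and `mirror_unqueue` are hypothetical, and the capacity of 4 is only illustrative; the class in this chapter uses NSMutableArray and 32 buffers.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_BUFFERS 4 /* illustrative; the chapter's class uses 32 */

/* Hypothetical stand-in for EWStreamBufferDataContainer. */
typedef struct {
    unsigned int openal_buffer; /* would be an ALuint */
    void* pcm_buffer;           /* would be malloc'd PCM storage */
} BufferPair;

/* Fixed-capacity FIFO implemented as a ring buffer. */
typedef struct {
    BufferPair items[MAX_BUFFERS];
    size_t head;  /* index of the oldest element */
    size_t count; /* number of elements currently stored */
} Fifo;

static void fifo_push(Fifo* q, BufferPair p)
{
    assert(q->count < MAX_BUFFERS);
    q->items[(q->head + q->count) % MAX_BUFFERS] = p;
    q->count++;
}

static BufferPair fifo_pop(Fifo* q)
{
    assert(q->count > 0);
    BufferPair p = q->items[q->head];
    q->head = (q->head + 1) % MAX_BUFFERS;
    q->count--;
    return p;
}

/* Call alongside alSourceQueueBuffers: available -> queued. */
static BufferPair mirror_queue(Fifo* available, Fifo* queued)
{
    BufferPair p = fifo_pop(available);
    fifo_push(queued, p);
    return p;
}

/* Call alongside alSourceUnqueueBuffers: oldest queued -> available. */
static BufferPair mirror_unqueue(Fifo* available, Fifo* queued)
{
    BufferPair p = fifo_pop(queued);
    fifo_push(available, p);
    return p;
}
```

Because OpenAL processes queued buffers in the order they were queued, the oldest entry in the queued list is always the next one to be unqueued. That is why a "buffer available?" test reduces to checking whether the available list is nonempty.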

For correctness, we also should write our dealloc code:

- (void) dealloc

{

[self destroyOpenALBuffers];

if(streamingAudioRef)

{

ExtAudioFileDispose(streamingAudioRef);

}

[super dealloc];

}

- (void) destroyOpenALBuffers

{

[availableDataBuffersQueue release];

[queuedDataBuffersQueue release];

alDeleteBuffers(EW_STREAM_BUFFER_DATA_MAX_OPENAL_QUEUE_BUFFERS,

openalDataBufferArray);

for(NSUInteger i=0; i<EW_STREAM_BUFFER_DATA_MAX_OPENAL_QUEUE_BUFFERS; i++)

{

free(pcmDataBufferArray[i]);

pcmDataBufferArray[i] = NULL;

}

}

There shouldn't be any surprises here, except that this class also keeps a file handle, so we need to remember to close the file handle if it is open.

The real meat of this class is the updateQueue: method. As I said, we are going to move everything from the update loop of the BasicOpenALStreaming example into updateQueue:. The code should look very familiar to you, as it is the same algorithm. For brevity, I won't reproduce the entire body of the code here, but it's included with the completed project example. However, we will zoom in on subsections of the method next.

As in the BasicOpenALStreaming example, let's start with unqueuing the processed buffers in the updateQueue method.

ALint buffers_processed = 0;

alGetSourcei(streaming_source, AL_BUFFERS_PROCESSED, &buffers_processed);

while(buffers_processed > 0)

{

ALuint unqueued_buffer;

alSourceUnqueueBuffers(streaming_source, 1, &unqueued_buffer);

[availableDataBuffersQueue insertObject:[queuedDataBuffersQueue lastObject]

atIndex:0];

[queuedDataBuffersQueue removeLastObject];

buffers_processed--;

}

Astute observers might notice that we do nothing with the unqueued_buffer we retrieve from OpenAL. This is because we are mirroring (shadowing) the OpenAL buffer queue with our two arrays, so we already know which buffer was unqueued.[33]

Continuing in updateQueue, let's queue a new buffer if we haven't gone over the number of queued buffers specified by our self-imposed maximum, which is 32 buffers. Since we have a data structure that holds all our available buffers, we can just check if the data structure has any buffers.

if([availableDataBuffersQueue count] > 0 && NO == self.isAtEOF)

{

// Have more buffers to queue

EWStreamBufferDataContainer* current_stream_buffer_data_container =

[availableDataBuffersQueue lastObject];

ALuint current_buffer = current_stream_buffer_data_container.openalDataBuffer;

void* current_pcm_buffer = current_stream_buffer_data_container.pcmDataBuffer;

ALenum al_format;

ALsizei buffer_size;

ALsizei sample_rate;

MyGetDataFromExtAudioRef(streamingAudioRef, &streamingAudioDescription,

EW_STREAM_BUFFER_DATA_INTERMEDIATE_BUFFER_SIZE,

&current_pcm_buffer, &buffer_size, &al_format, &sample_rate);

if(0 == buffer_size) // will loop music on EOF (which is 0 bytes)

{

if(YES == self.isAudioLooping)

{

MyRewindExtAudioData(streamingAudioRef);

MyGetDataFromExtAudioRef(streamingAudioRef, &streamingAudioDescription,

EW_STREAM_BUFFER_DATA_INTERMEDIATE_BUFFER_SIZE,

&current_pcm_buffer, &buffer_size, &al_format, &sample_rate);

}

else

{

self.atEOF = YES;

}

}

if(buffer_size > 0)

{

alBufferDataStatic(current_buffer, al_format, current_pcm_buffer, buffer_size,

sample_rate);

alSourceQueueBuffers(streaming_source, 1, &current_buffer);

[queuedDataBuffersQueue insertObject:current_stream_buffer_data_container

atIndex:0];

[availableDataBuffersQueue removeLastObject];

There are just a few minor new things here. First, audio looping is now an option, so we have additional checks for atEOF. If we are at an EOF, we don't want to do anything. (We will have additional logic at the end of this method to determine what to do next if we do encounter EOF.) If we encounter EOF while fetching data, we record that in our instance variable, but only if we are not supposed to loop audio. If we are supposed to loop, we pretend we never hit EOF and just rewind the file.

The other minor thing is we now use alBufferDataStatic.
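The loop-or-mark-EOF decision described above can be isolated from OpenAL and Core Audio entirely. The following is a rough sketch in plain C under stated assumptions: `MockStream`, `mock_read`, `mock_rewind`, and `fill_next_buffer` are hypothetical names, with an in-memory byte array standing in for the ExtAudioFileRef and for the MyGetDataFromExtAudioRef and MyRewindExtAudioData helpers.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical in-memory "file" standing in for an ExtAudioFileRef. */
typedef struct {
    const unsigned char* data;
    size_t length;
    size_t position;
} MockStream;

/* Copy up to max_bytes from the stream; returns 0 at end of data. */
static size_t mock_read(MockStream* s, void* out, size_t max_bytes)
{
    size_t remaining = s->length - s->position;
    size_t n = remaining < max_bytes ? remaining : max_bytes;
    memcpy(out, s->data + s->position, n);
    s->position += n;
    return n;
}

static void mock_rewind(MockStream* s)
{
    s->position = 0;
}

/* Fill one buffer. A zero-byte read means end of file: if looping,
 * rewind and read again; otherwise record EOF through *at_eof.
 * Returns the number of bytes to queue (0 means queue nothing). */
static size_t fill_next_buffer(MockStream* s, void* out, size_t max_bytes,
                               bool looping, bool* at_eof)
{
    assert(at_eof != NULL);
    size_t n = mock_read(s, out, max_bytes);
    if (0 == n) {
        if (looping) {
            mock_rewind(s);
            n = mock_read(s, out, max_bytes);
        } else {
            *at_eof = true;
        }
    }
    return n;
}
```

Note that a short final read (fewer bytes than requested) is still queued as-is; only a read of exactly zero bytes is treated as EOF, which matches the `0 == buffer_size` test in the listing.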

Still in updateQueue, let's handle the buffer underrun case.

ALenum current_playing_state;

alGetSourcei(streaming_source, AL_SOURCE_STATE, &current_playing_state);

// Handle buffer underrun case

if(AL_PLAYING != current_playing_state)

{

ALint buffers_queued = 0;

alGetSourcei(streaming_source, AL_BUFFERS_QUEUED, &buffers_queued);

if(buffers_queued > 0 && NO == self.isStreamingPaused)

{

// Need to restart play

alSourcePlay(streaming_source);

}

}

}

}

This code is essentially identical to the previous example.

The part to handle EOF and finished playing is new but simple. For convenience, the updateQueue: method ends by returning YES if we discover that the source has finished playing its sound. (The OpenALSoundController will use this information later.) This requires two conditions:

We must have encountered an EOF.

The OpenAL source must have stopped playing.

Once we detect those two conditions, we just record the state so we can return it. We also add a repeat check for processed buffers, since typically, once it is detected that the sound is finished playing, this method will no longer be invoked for that buffer instance. This is a paranoid check. In theory, there is a potential race condition between OpenAL processing buffers and us trying to remove them. It is possible that once we pass the processed buffers check at the top of this method, OpenAL ends up processing another buffer while we are in the middle of the method. We would like to leave everything in a pristine state when it is determined that we are finished playing, so we run the processed buffers check one last time and remove the buffers as necessary.

if(YES == self.isAtEOF)

{

ALenum current_playing_state;

alGetSourcei(streaming_source, AL_SOURCE_STATE, &current_playing_state);

if(AL_STOPPED == current_playing_state)

{

finished_playing = YES;

alGetSourcei(streaming_source, AL_BUFFERS_PROCESSED, &buffers_processed);

while(buffers_processed > 0)

{

ALuint unqueued_buffer;

alSourceUnqueueBuffers(streaming_source, 1, &unqueued_buffer);

[availableDataBuffersQueue insertObject:[queuedDataBuffersQueue lastObject]

atIndex:0];

[queuedDataBuffersQueue removeLastObject];

buffers_processed--;

}

}

}

return finished_playing;

}

Whew! You made it through this section. I hope that wasn't too bad, as you've already seen most of it before. Now we need to make some changes to the OpenALSoundController.

Since the OpenALSoundController is our centerpiece for all our OpenAL code, we need to tie in the stuff we just wrote with this class. There are only a few new methods we are going to introduce in OpenALSoundController:

- (EWStreamBufferData*) streamBufferDataFromFileBaseName:(NSString*)sound_file_basename;

- (void) playStream:(ALuint)source_id streamBufferData:(EWStreamBufferData*)stream_buffer_data;

- (void) setSourceGain:(ALfloat)gain_level sourceID:(ALuint)source_id;

The method streamBufferDataFromFileBaseName: is just a pass-through to EWStreamBufferData's streamBufferDataFromFileBaseName:, which we add mostly for aesthetic reasons. This gives us symmetry with OpenALSoundController's soundBufferDataFromFileBaseName: method.

- (EWStreamBufferData*) streamBufferDataFromFileBaseName:(NSString*)sound_file_basename

{

return [EWStreamBufferData streamBufferDataFromFileBaseName:sound_file_basename];

}

The setSourceGain: method is kind of a concession/hack. Prior to this, only our BBSceneObjects made sound. They all access their OpenAL source properties (such as gain) through EWSoundSourceObjects. But because we are focusing on background music, it doesn't make a lot of sense to have a BBSceneObject to play background music. So, this is a concession to let us set the volume level on our background music without needing the entire SceneObject infrastructure.

- (void) setSourceGain:(ALfloat)gain_level sourceID:(ALuint)source_id

{

alSourcef(source_id, AL_GAIN, gain_level);