
Performance Monitoring and Optimizing

An installed Linux server comes with default performance settings, which means that it will perform well for an average workload. Many servers, however, run workloads that are far from average, and those servers can benefit from optimization. In this chapter, you’ll read how to monitor and optimize performance. The first part of this chapter is about performance monitoring. In the second part, you’ll learn how to optimize performance.

The following topics are covered in this chapter:

- Performance Monitoring

- Optimizing Performance

- Optimizing Linux Performance Using Cgroups

Performance Monitoring

Before you can actually optimize anything, you have to know what’s going on. In this first section of the chapter, you’ll learn how to analyze performance. We’ll start with one of the most common but also one of the most informative tools: top.

Interpreting What’s Going On: top

Before starting to look at details, you should have a general overview of the current state of your server. The top utility is an excellent tool to help you with that. Let’s start by having a look at a server that is used as a virtualization server, hosting multiple virtual machines (see Listing 15-1).

Listing 15-1. Using top on a Busy Server

top - 10:47:49 up 1 day, 16:56, 3 users, load average: 0.08, 0.06, 0.10

Tasks: 409 total, 1 running, 408 sleeping, 0 stopped, 0 zombie

%Cpu(s): 1.6 us, 0.4 sy, 0.0 ni, 98.0 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st

KiB Mem: 16196548 total, 13197772 used, 2998776 free, 4692 buffers

KiB Swap: 4194300 total, 0 used, 4194300 free. 4679428 cached Mem

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

1489 root 20 0 1074368 23568 11836 S 3.3 0.1 51:18.32 libvirtd

12730 root 20 0 6018668 2.058g 56760 S 2.7 13.3 52:07.62 virt-manager

19586 qemu 20 0 1320328 532616 8028 S 2.0 3.3 23:08.54 qemu-kvm

13719 qemu 20 0 1211512 508476 8028 S 1.7 3.1 23:42.33 qemu-kvm

18450 qemu 20 0 1336528 526252 8016 S 1.7 3.2 23:39.71 qemu-kvm

18513 qemu 20 0 1274928 463408 8036 S 1.7 2.9 23:28.97 qemu-kvm

18540 qemu 20 0 1274932 467276 8020 S 1.7 2.9 23:32.23 qemu-kvm

19542 qemu 20 0 1320840 514224 8032 S 1.7 3.2 23:03.55 qemu-kvm

19631 qemu 20 0 1315620 501828 8012 S 1.7 3.1 23:10.92 qemu-kvm

24773 qemu 20 0 1342848 547784 8016 S 1.7 3.4 23:38.80 qemu-kvm

3572 root 20 0 950484 148812 42644 S 1.3 0.9 39:24.33 firefox

16388 qemu 20 0 1275076 465400 7996 S 1.3 2.9 22:51.46 qemu-kvm

18919 qemu 20 0 1318728 510000 8020 S 1.3 3.1 23:46.81 qemu-kvm

28791 root 20 0 123792 1876 1152 R 0.3 0.0 0:00.03 top

1 root 20 0 53500 7644 3788 S 0.0 0.0 0:07.07 systemd

2 root 20 0 0 0 0 S 0.0 0.0 0:00.13 kthreadd

3 root 20 0 0 0 0 S 0.0 0.0 0:03.27 ksoftirqd/0

5 root 0 -20 0 0 0 S 0.0 0.0 0:00.00 kworker/0:0H

7 root rt 0 0 0 0 S 0.0 0.0 0:00.19 migration/0

8 root 20 0 0 0 0 S 0.0 0.0 0:00.00 rcu_bh

9 root 20 0 0 0 0 S 0.0 0.0 0:00.00 rcuob/0

10 root 20 0 0 0 0 S 0.0 0.0 0:00.00 rcuob/1

11 root 20 0 0 0 0 S 0.0 0.0 0:00.00 rcuob/2

CPU Monitoring with top

When analyzing performance, you start at the first line of the top output. The load average parameters at the end of the line are of special interest. There are three of them, indicating the load average for the last minute, the last five minutes, and the last fifteen minutes. The load average gives the average number of processes that were in the run queue. Stated otherwise, the load average counts the processes that are actually being handled or are waiting to be handled. As a CPU core can ultimately handle only one process at any moment, a load average of 1.00 on a single-CPU system would be the ideal load, indicating that the CPU is completely busy.

Looking at load average in this way is a little bit too simple, though. Some processes don’t demand that much from the CPU; other processes do. So, in some cases, performance can be good on a 1-CPU system that gives a load average of 8.00, while on other occasions, performance might be suffering, if load average is only at 1.00. Load average is a good start, but it’s not good enough just by itself.

Consider, for example, a task that is running completely on the CPU. You can force such a task by entering the following code line:

while true; do true; done

This task will completely claim one CPU core, thus causing a workload of 1.00. Because this task doesn’t do any input/output (I/O), it has no waiting times. For a task like this, 1.00 is therefore considered a heavy workload: if another task is started, processes will have to be queued owing to a lack of available resources.

Let’s now consider a task that is I/O intensive, such as a task in which your complete hard drive is copied to the null device (dd if=/dev/sda of=/dev/null). This task will also easily cause a workload that is 1.00 or higher, but because there is a lot of waiting for I/O involved in a task like that, it’s not as bad as the while true task. That is because while waiting for I/O, the CPU can do something else. So don’t be too quick in drawing conclusions from the load line.

When you see that your server’s CPUs are very busy, you should analyze further. First, you should relate the load average to the number of CPUs in your server. By default, top provides a summary for all CPUs in your server. Press 1 on the keyboard to show a line for each CPU core in your server. Because most modern servers are multi-core, you should apply this option, as it gives you information about the multiprocessing environment as well. In Listing 15-2, you can see an example in which usage statistics are provided on a four-core server:

Listing 15-2. Monitoring Performance on a Four-Core Server

top - 11:06:29 up 1 day, 17:15, 3 users, load average: 6.80, 4.20, 1.95

Tasks : 424 total, 3 running, 421 sleeping, 0 stopped, 0 zombie

%Cpu0 : 84.9 us, 11.7 sy, 0.0 ni, 2.0 id, 0.7 wa, 0.0 hi, 0.7 si, 0.0 st

%Cpu1 : 86.6 us, 9.4 sy, 0.0 ni, 3.0 id, 0.3 wa, 0.0 hi, 0.7 si, 0.0 st

%Cpu2 : 86.6 us, 9.7 sy, 0.0 ni, 2.7 id, 0.7 wa, 0.0 hi, 0.3 si, 0.0 st

%Cpu3 : 88.0 us, 9.0 sy, 0.0 ni, 2.7 id, 0.3 wa, 0.0 hi, 0.0 si, 0.0 st

KiB Mem: 16196548 total, 16021536 used, 175012 free, 3956 buffers

KiB Swap: 4194300 total, 10072 used, 4184228 free. 3700732 cached Mem

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

29694 qemu 20 0 1424580 658276 8068 S 72.6 4.1 3:30.70 qemu-kvm

29934 qemu 20 0 1221208 614936 8064 S 69.7 3.8 1:08.35 qemu-kvm

29863 qemu 20 0 1386616 637948 8052 S 56.7 3.9 1:54.51 qemu-kvm

29627 qemu 20 0 1417552 643716 8064 S 56.1 4.0 4:37.15 qemu-kvm

29785 qemu 20 0 1425656 657500 8064 S 54.7 4.1 2:39.03 qemu-kvm

12730 root 20 0 7276512 2.566g 70496 R 26.5 16.6 54:20.94 virt-manager

3225 root 20 0 1950632 215728 35300 S 25.2 1.3 14:52.82 gnome-shell

1489 root 20 0 1074368 23600 11836 S 6.6 0.1 52:09.98 libvirtd

1144 root 20 0 226540 51348 35704 S 6.3 0.3 4:19.12 Xorg

18540 qemu 20 0 1274932 467276 8020 R 6.0 2.9 23:47.89 qemu-kvm

18450 qemu 20 0 1336528 526252 8016 S 2.3 3.2 23:55.18 qemu-kvm

18919 qemu 20 0 1318728 510000 8020 S 1.0 3.1 24:02.42 qemu-kvm

19631 qemu 20 0 1315620 501828 8012 S 1.0 3.1 23:26.65 qemu-kvm

24773 qemu 20 0 1334652 538816 8016 S 1.0 3.3 23:54.71 qemu-kvm

3572 root 20 0 950484 172500 42636 S 0.7 1.1 39:36.99 firefox

28791 root 20 0 123792 1876 1152 R 0.7 0.0 0:03.25 top

339 root 0 -20 0 0 0 S 0.3 0.0 0:04.00 kworker/1:1H

428 root 20 0 0 0 0 S 0.3 0.0 0:39.65 xfsaild/dm-1

921 root 20 0 19112 1164 948 S 0.3 0.0 0:09.19 irqbalance

26424 root 20 0 0 0 0 S 0.3 0.0 0:00.13 kworker/u8:2
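Relating the load average to the number of CPU cores is a simple division. The following is a minimal sketch rather than a finished tool; load_per_cpu is a hypothetical helper name, and the sketch assumes that awk is installed. With the values from Listing 15-2 (a one-minute load average of 6.80 on four cores), it reports 1.70 processes per core.

```shell
# load_per_cpu LOAD NCPU
# Divide a load average by the number of CPU cores.
# (load_per_cpu is an illustrative name, not an existing command.)
load_per_cpu() {
    awk -v load="$1" -v ncpu="$2" 'BEGIN {
        if (ncpu < 1) { print "invalid CPU count"; exit 1 }
        printf "%.2f\n", load / ncpu
    }'
}
```

On a live system, you could call it as load_per_cpu "$(cut -d ' ' -f 1 /proc/loadavg)" "$(nproc)". A result that stays well above 1.00 suggests that processes are queuing, but as explained earlier, the type of workload still matters.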

When considering exactly what your server is doing, the CPU lines are an important indicator. In there, you can monitor CPU performance, divided in different performance categories. In the following list, you can see these options summarized:

- us: This refers to a workload in user space. Typically, this relates to processes that are started by end users and don’t run with root priorities. If you see a high load in here, that means that your server is heavily used by applications.

- sy: This refers to the work that is done in system space. These are important tasks in which the kernel of your operating system is involved as well. The percentage here should, in general, not be too high. You can see it elevated when particular jobs are executed, for example, a large compiling job. If the load here is high, it can indicate elevated hardware activity.

- ni: This indicator relates to the percentage of CPU time spent on jobs that run with an adjusted nice value.

- id: Here you can see how busy the idle loop is. This special loop indicates the amount of time that your CPU is doing nothing. Therefore, a high percentage in the idle loop means a CPU that is not too busy.

- wa: This is an important indicator. The wa parameter refers to the amount of time that your CPU is waiting for I/O. If the value that you see here is often above 30 percent, that could indicate a problem on the I/O-channel, which involves storage and network. See the sections “Monitoring Storage Performance” and “Understanding Network Performance” later in this chapter to find out what may be happening.

- hi: The hi parameter relates to the time the CPU spends handling hardware interrupts. You will see some utilization here when a device is particularly busy (optical drives do stress this parameter from time to time), but normally you won’t ever see it above a few percent.

- si: This parameter relates to software interrupts. Typically, these are lower priority interrupts that are created by the kernel. You will probably never see a high utilization in this field.

- st: The st parameter relates to an environment in which virtualization is used. It shows the percentage of time that a virtual CPU had to wait for a physical CPU because the hypervisor was serving another virtual machine; this time is “stolen” from the virtual machine, hence “st.” If this occurs, you will see some utilization in the st field. If the utilization here starts getting really high, you should consider off-loading virtual machines from the host server.
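The percentages in this list are derived from tick counters that the kernel exposes on the first line of /proc/stat, in the order user, nice, system, idle, iowait, irq, softirq, steal. As a rough sketch of how a tool such as top could arrive at its numbers, the following function compares two snapshots of that line. The name cpu_breakdown is hypothetical, and the sketch ignores the guest counters that follow the steal field.

```shell
# cpu_breakdown "FIRST_CPU_LINE" "SECOND_CPU_LINE"
# Print the share of each CPU state between two snapshots of the
# aggregate "cpu" line from /proc/stat, in the order top uses.
# (cpu_breakdown is an illustrative name, not an existing command.)
cpu_breakdown() {
    printf '%s\n%s\n' "$1" "$2" | awk '
        NR == 1 { for (i = 2; i <= 9; i++) first[i] = $i }
        NR == 2 {
            for (i = 2; i <= 9; i++) { d[i] = $i - first[i]; total += d[i] }
            if (total == 0) { print "no ticks elapsed"; exit 1 }
            # /proc/stat order: 2=user 3=nice 4=system 5=idle
            #                   6=iowait 7=irq 8=softirq 9=steal
            printf "us=%.1f sy=%.1f ni=%.1f id=%.1f wa=%.1f hi=%.1f si=%.1f st=%.1f\n",
                100 * d[2] / total, 100 * d[4] / total,
                100 * d[3] / total, 100 * d[5] / total,
                100 * d[6] / total, 100 * d[7] / total,
                100 * d[8] / total, 100 * d[9] / total
        }'
}
```

To try it, take two snapshots a few seconds apart: a=$(head -n 1 /proc/stat); sleep 2; b=$(head -n 1 /proc/stat); cpu_breakdown "$a" "$b".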

Memory Monitoring with top

The second set of information to get from top concerns the lines about memory and swap usage. The memory lines contain five parameters (of which the last is in the swap line). These are

- total: This is the total amount of physical memory installed in your server.

- used: The amount of memory that is currently in use by something. This includes memory in buffers and cache.

- free: The amount of memory that is not currently in use. On a typical server that is operational for more than a couple of hours, you will always see that this value is rather low (see, for example, Listing 15-2, in which it has dropped down to next to nothing).

- buffers: This parameter relates to the write cache that your server uses. It also contains file system tables and other unstructured data that the server has to have in memory. All data that a server has to write to disk is written to the write cache first. From there, the disk controller takes care of this data when it has time to write it. The advantage of using write cache is that from the perspective of the end user, the data is written, so the application the user is using does not have to wait anymore. This buffer cache, however, is memory that is used for nonessential purposes, and when an application requires more memory and can’t allocate that from the pool of free memory, the write cache can be written to disk (flushed), so that memory that was used by the write cache is available for other purposes. Essentially, write cache is a good thing that makes your server perform faster.

- cached: When a user requests a file from the server, the file normally has to be read from the hard disk. Because a hard disk is typically about 1,000 times slower than RAM, this process causes major delays. For that reason, every time, after fetching a file from the server hard drive, the file is stored in cache. This is a read cache and has one purpose only: to speed up reads. When memory that is currently allocated to the read cache is needed for other purposes, the read cache can be freed immediately, so that more memory can be added to the pool of available (“free”) memory. Your server will typically see a high amount of cached memory. Especially if your server is used for reads mostly, this is considered good, as it will speed up your server. In case your server is used for reads mostly, and this parameter falls below 30 percent of total available memory, you will most likely get a slowed-down performance. Add more RAM if this happens. Be aware that there are exceptions, though. Servers running large databases typically don’t have a very high read cache, as the data are stored in memory that is claimed by the database, and they are not managed by the Linux kernel.

Understanding swap

When considering memory usage, you should also consider the amount of swap that is being allocated. Swap is RAM that is emulated on disk. That may sound like a bad idea that really slows down server performance, but it doesn’t have to be.

To understand swap usage, you should understand the different kinds of memory that are in use on a Linux server. Linux distinguishes between active and inactive memory, and between file and anon memory. You can get these parameters from the /proc/meminfo file (see Listing 15-3).

Listing 15-3. Getting Detailed Memory Information from /proc/meminfo

[root@lab ~]# cat /proc/meminfo

MemTotal: 16196548 kB

MemFree: 1730808 kB

MemAvailable: 5248720 kB

Buffers: 3956 kB

Cached: 4045672 kB

SwapCached: 0 kB

Active: 10900288 kB

Inactive: 3019436 kB

Active(anon): 9725132 kB

Inactive(anon): 627268 kB

Active(file): 1175156 kB

Inactive(file): 2392168 kB

Unevictable: 25100 kB

Mlocked: 25100 kB

SwapTotal: 4194300 kB

SwapFree: 4194300 kB

Anon (anonymous) memory refers to memory that is allocated by programs. File memory refers to memory that is used as cache or buffers. On any Linux system, these two kinds of memory can be flagged as active or inactive. Inactive file memory typically exists on a server that doesn’t need the RAM for anything else. If memory pressure arises, the kernel can clear this memory immediately to make more RAM available. Inactive anon memory is memory that has to be allocated. However, as it hasn’t been used actively, it can be moved to a slower kind of memory. That exactly is what swap is used for.

If in swap there’s only inactive anon memory, swap helps optimizing the memory performance of a system. By moving out these inactive memory pages, more memory becomes available for caching, which is good for the overall performance of a server. Hence, if a Linux server shows some activity in swap, that is not a bad sign at all.

EXERCISE 15-1. MONITORING BUFFER AND CACHE MEMORY

In this exercise, you’ll monitor how buffer and cache memory are used. To start with a clean image, you’ll first restart your server, so that no old data is in buffers or cache. Next, you’ll run some commands that will cause the buffer and cache memory to be filled. At the end, you’ll clear the total amount of buffer and cache memory by using /proc/sys/vm/drop_caches.

- Reboot your server.

- After rebooting, open two root console windows. In one window, start top, so that you’ll have a real-time overview of what’s happening. Note the current memory allocation. Buffers and cache should be low, and your server should have a relatively large amount of free memory available.

- Run the following script to read data, which will fill your server cache.

cd /etc

for I in *

do

cat "$I" > /dev/null 2>&1

done

- You should now see an increase in cache (probably not much, as the /etc directory typically isn’t that large).

- Run the following command to fill the buffer cache: ls -Rl / > /dev/null &.

- You’ll notice that the buffer cache has filled a bit as well.

- Optionally, you can run some more commands that will fill buffers as well as cache, such as dd if=/dev/sda of=/dev/null & (which has a much greater impact than the previous commands).

- Once finished, type free -m, to observe the current usage of buffers and cache.

- Tell the kernel to drop all buffers and cache that it doesn’t really need at this moment, by using echo 3 > /proc/sys/vm/drop_caches.
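If you prefer to compare exact numbers before and after each step of this exercise, you can read the Buffers and Cached values from /proc/meminfo. The following is a small sketch under that assumption; cache_mib is a hypothetical helper name, and the optional argument lets you point it at a saved copy of the file.

```shell
# cache_mib [FILE]
# Print buffer and cache memory in MiB from a meminfo-style file
# (defaults to /proc/meminfo). Values are truncated to whole MiB.
# (cache_mib is an illustrative name, not an existing command.)
cache_mib() {
    awk '
        $1 == "Buffers:" { buffers = $2 }
        $1 == "Cached:"  { cached = $2 }
        END {
            printf "buffers: %d MiB, cached: %d MiB\n", buffers / 1024, cached / 1024
        }
    ' "${1:-/proc/meminfo}"
}
```

Run cache_mib after rebooting, after each command that fills the cache, and once more after writing to drop_caches, to see the values rise and fall.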

Process Monitoring with top

The lower part of top is reserved for information about the most active processes. In this part, you’ll see a few parameters related to these processes. By default, the following parameters are shown:

- PID: The Process ID of the process

- USER: The user who started the process

- PR: The priority of the process. The priority of any process is determined automatically, and the process with the highest priority is eligible to be serviced first from the queue of runnable processes. Some processes run with a real-time priority, which is indicated as RT. Processes with this priority can claim CPU cycles in real time, which means that they will always have highest priority.

- NI: The nice value with which the process was started

- VIRT: The total amount of virtual memory that the process has reserved

- RES: Stands for resident memory. This relates to the amount of memory that a process is really using. You will see that in some cases, this is considerably lower than the parameter mentioned in the VIRT column. This is because many processes like to over-allocate. This means they claim more memory than they really need, just in case they’ll need it at some point.

- SHR: The amount of memory this process uses that is shared with another process

- S: The status of a process

- %CPU: Relates to the percentage of CPU time this process is using. You will normally see the process with the highest CPU utilization mentioned on top of this list.

- %MEM: The percentage of memory that this process has claimed

- TIME+: The total amount of time that this process has been using CPU cycles

- COMMAND: The name of the command that relates to this process

Understanding Linux Memory Allocation

When analyzing Linux memory usage, you should know how Linux uses virtual and resident memory. Virtual memory on Linux is to be taken literally: it is a nonexistent amount of memory that the Linux kernel can refer to. When looking at the contents of the /proc/meminfo file, you can see that the amount of virtual memory is set to approximately 35TB:

VmallocTotal: 34359738367 kB

VmallocUsed: 486380 kB

VmallocChunk: 34359160008 kB

Virtual memory is used by the Linux kernel to allow programs to make a memory reservation. After making this reservation, no other application can reserve the same memory. Making the reservation is a matter of setting pointers and nothing else. It doesn’t mean that the memory reservation is also actually going to be used. Only when a program starts using the memory it has reserved, by accessing it for the first time, does the kernel back it with physical memory, which means that the memory is actually allocated. At that moment, we’re talking about resident memory.

That Linux uses virtual memory when reserving memory may cause trouble later on. A program that has reserved memory—even if it is virtual memory—would expect that it can also use that memory. But that is not the case, as virtual memory, in general, is much more than the amount of physical RAM + Swap that is available. This is known as memory over-commit or over-allocation, and in some cases, memory over-allocation can cause trouble. If a process has reserved virtual memory that cannot be mapped to physical memory, you may encounter an OOM (out of memory) situation. If that happens, processes will get killed. In the “Optimizing Performance” section, later in this chapter, you’ll learn about some parameters that tell you how to prevent such situations.
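To get a feeling for how much processes over-allocate, you can compare the virtual and resident sizes that ps reports in its vsz and rss columns. The function below is only a sketch: virt_vs_res is a hypothetical name, and it expects lines of the form "PID VSZ RSS COMMAND" on standard input. Kernel threads, which have no resident memory, are skipped.

```shell
# virt_vs_res
# Read "PID VSZ RSS COMMAND" lines from standard input and print the
# ratio of virtual to resident memory for each process.
# (virt_vs_res is an illustrative name, not an existing command.)
virt_vs_res() {
    awk '$3 ~ /^[0-9]+$/ && $3 > 0 {
        printf "%s %s VIRT/RES=%.1f\n", $1, $4, $2 / $3
    }'
}
```

A possible invocation is ps -eo pid=,vsz=,rss=,comm= | virt_vs_res. For the first qemu-kvm process in Listing 15-1 (1320328 KiB virtual, 532616 KiB resident), the ratio is about 2.5.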

Analyzing CPU Performance

The top utility offers a good starting point for performance tuning. However, if you really need to dig deep into a performance problem, top does not offer sufficient information, and more advanced tools will be required. In this section, you’ll learn what you can do to find out more about CPU performance-related problems.

Most people tend to start analyzing a performance problem at the CPU, since they think CPU performance is the most important on a server. In most situations, this is not true. Assuming that you have a recent CPU, and not an old 486-based CPU, you will not often see a performance problem that really is related to the CPU. In most cases, a problem that appears to be CPU-related is likely caused by something else. For example, your CPU may just be waiting for data to be written to disk. Before getting into details, let’s have a look at a brief exercise that teaches how CPU performance can be monitored.

EXERCISE 15-2. ANALYZING CPU PERFORMANCE

In this exercise, you’ll run two different commands that will both analyze CPU performance. You’ll notice a difference in the behavior of both commands.

- Log in as root and open two terminal windows. In one of these windows, start top.

- In the second window, run the command dd if=/dev/urandom of=/dev/null. You will see the usage percentage in the us column going up, as well as the usage in the sy column. Press 1 if you have a multi-core system. You’ll notice that one CPU core is completely occupied by this task.

- Stop the dd job and write a small script in the home directory of user root that has the following content:

[root@hnl ~]# cat wait

#!/bin/bash

COUNTER=0

while true

do

dd if=/dev/urandom of=/root/file.$COUNTER bs=1M count=1

COUNTER=$(( COUNTER + 1 ))

[ "$COUNTER" -eq 1000 ] && exit

done

- Run the script. You’ll notice that first, the sy parameter in top goes up, and after a while, the wa parameter goes up as well. This is because the I/O channel gets too busy, and the CPU has to wait for data to be committed to I/O. Based on the hardware you’re using, you might not see immediate results. If that is the case, start the script a second time.

- Make sure that both the script and the dd command have stopped, and close the root shells.

Understanding CPU Performance

To monitor what is happening on your CPU, you should know how the Linux kernel works with the CPU. A key component is the run queue. Before being served by the CPU, every process enters the run queue. There’s a run queue for every CPU core in the system. Once a process is in the run queue, it can be runnable or blocked. A runnable process is a process that is competing for CPU time; a blocked process is just waiting.

The Linux scheduler decides which runnable process to run next, based on the current priority of the process. A blocked process doesn’t compete for CPU time. The load average line in top gives a summary of the workload that results from all runnable and blocked processes combined. If you want to know how many of the processes are currently in either runnable or blocked state, use vmstat. The columns r and b show the number of runnable and blocked processes. In Listing 15-4, you can see what this looks like on a system in which vmstat has polled the system five times, with a two-second interval.

Listing 15-4. Use vmstat to See How Many Processes Are in Runnable or Blocked State

[root@lab ~]# vmstat 2 5

procs -----------memory----------- --swap-- ----io---- --system-- ------cpu-----

r b swpd free buff cache si so bi bo in cs us sy id wa st

0 0 0 1412260 3956 3571532 0 0 39 62 0 71 3 1 97 0 0

0 0 0 1412252 3956 3571564 0 0 0 0 1217 3478 2 1 97 0 0

0 0 0 1412376 3956 3571564 0 0 0 0 1183 3448 2 1 97 0 0

0 0 0 1412220 3956 3571564 0 0 0 0 1189 3388 2 1 97 0 0

0 0 0 1412252 3956 3571564 0 0 0 0 1217 3425 2 1 97 0 0
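When vmstat runs for a longer period, it can be convenient to summarize the r and b columns instead of reading every line. The following sketch does that; runq_summary is a hypothetical name, and the function takes the number of CPU cores as its only argument while reading vmstat output on standard input.

```shell
# runq_summary NCPU
# Read vmstat output on standard input and report the highest number
# of runnable (r) and blocked (b) processes that was seen.
# (runq_summary is an illustrative name, not an existing command.)
runq_summary() {
    awk -v ncpu="$1" '
        $1 ~ /^[0-9]+$/ {              # skip the two header lines
            if ($1 + 0 > maxr) maxr = $1 + 0
            if ($2 + 0 > maxb) maxb = $2 + 0
            n++
        }
        END {
            printf "samples=%d max_r=%d max_b=%d", n, maxr, maxb
            if (maxr > ncpu) printf " (run queue exceeded %d CPUs)", ncpu
            printf "\n"
        }'
}
```

For example, vmstat 2 5 | runq_summary "$(nproc)" summarizes five samples. On the idle system in Listing 15-4, both maxima would be 0.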

Context Switches and Interrupts

A modern Linux system is always a multitasking system. This is true for every processor architecture that can be used, because the Linux kernel constantly switches between different processes. In order to perform this switch, the CPU needs to save all the context information for the old process and retrieve the context information for the new process. The performance price of these context switches, therefore, is heavy.

In an ideal world, you have to make sure that the number of context switches is limited as much as possible. You may do this by using a multi-core CPU architecture or a server with multiple CPUs, or a combination of both, but if you do, you have to make sure that processes are locked to a dedicated CPU core, to prevent context switches.

Processes that are serviced by the kernel scheduler, however, are not the only cause of context switching. Another important reason for a context switch to occur is hardware interrupts. This is a piece of hardware demanding processor time. To see what it has been doing, you can look at the contents of the /proc/interrupts file (see Listing 15-5).

Listing 15-5. The /proc/interrupts File Shows You Exactly How Many of Each Interrupt Has Been Handled

[root@lab proc]# cat interrupts

CPU0 CPU1 CPU2 CPU3

0: 54 0 0 0 IR-IO-APIC-edge timer

8: 0 0 0 1 IR-IO-APIC-edge rtc0

9: 0 0 0 0 IR-IO-APIC-fasteoi acpi

23: 0 0 36 1 IR-IO-APIC-fasteoi ehci_hcd:usb1

56: 0 0 0 0 DMAR_MSI-edge dmar0

57: 0 0 0 0 DMAR_MSI-edge dmar1

58: 68468 113385 59982 38591 IR-PCI-MSI-edge xhci_hcd

59: 17 9185792 29 6 IR-PCI-MSI-edge eno1

60: 660908 640712 274180 280446 IR-PCI-MSI-edge ahci

61: 379094 149796 827403 152584 IR-PCI-MSI-edge i915

62: 13 0 0 0 IR-PCI-MSI-edge mei_me

63: 263 1 6 1 IR-PCI-MSI-edge snd_hda_intel

64: 1770 506 106 516 IR-PCI-MSI-edge snd_hda_intel

NMI: 967 983 762 745 Non-maskable interrupts

LOC: 32241233 32493830 20152850 20140483 Local timer interrupts

SPU: 0 0 0 0 Spurious interrupts

PMI: 967 983 762 745 Performance monitoring interrupts

IWI: 122505 122449 110316 112272 IRQ work interrupts

RTR: 0 0 0 0 APIC ICR read retries

RES: 2486212 2351025 1841935 1821599 Rescheduling interrupts

CAL: 483791 496810 318516 290537 Function call interrupts

TLB: 231573 234010 173163 171368 TLB shootdowns

TRM: 0 0 0 0 Thermal event interrupts

THR: 0 0 0 0 Threshold APIC interrupts

MCE: 0 0 0 0 Machine check exceptions

MCP: 512 512 512 512 Machine check polls
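Because /proc/interrupts lists a counter per CPU, it is not always obvious at a glance which interrupt source is the busiest overall. As an illustration only, the following function adds up the per-CPU counters on each line and prints the largest totals. The name irq_totals is hypothetical; the third output column is simply the last word of each description.

```shell
# irq_totals [FILE] [COUNT]
# Sum the per-CPU counters of every line in an interrupts-style file
# (default /proc/interrupts) and print the COUNT busiest (default 5)
# as "total label last-word-of-description".
# (irq_totals is an illustrative name, not an existing command.)
irq_totals() {
    awk '
        NR == 1 { ncpu = NF; next }    # header line: one field per CPU
        {
            sum = 0
            for (i = 2; i <= ncpu + 1 && i <= NF; i++) {
                if ($i !~ /^[0-9]+$/) break
                sum += $i
            }
            label = $1
            sub(/:$/, "", label)
            printf "%.0f %s %s\n", sum, label, $NF
        }' "${1:-/proc/interrupts}" | sort -rn | head -n "${2:-5}"
}
```

In Listing 15-5, the local timer interrupts (LOC) would come out on top, and of the device interrupts, the network card eno1 (interrupt 59) is by far the busiest.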

As mentioned, in a multi-core environment, context switches can cause a performance overhead. You can see if these occur often by using the top utility. It can provide information about the CPU that was last used by any process, but you have to switch this on. To do that, from the top utility, first press f and then type j (on some distributions, you’ll have to scroll instead to select the appropriate option). This will switch the option last used CPU (SMP) on for an SMP environment. In Listing 15-6, you can see the interface from which you can do this. Note that to make this setting permanent, you can use the W command from top. This causes all modifications to the top program to be written to the ~/.toprc file, so that they can be loaded again at restart of top.

Listing 15-6. After Pressing the F Key, You Can Switch Different Options On or Off in top

Fields Management for window 1:Def, whose current sort field is %CPU

Navigate with Up/Dn, Right selects for move then <Enter> or Left commits,

'd' or <Space> toggles display, 's' sets sort. Use 'q' or <Esc> to end!

* PID = Process Id TIME = CPU Time

* USER = Effective User Name SWAP = Swapped Size (KiB)

* PR = Priority CODE = Code Size (KiB)

* NI = Nice Value DATA = Data+Stack (KiB)

* VIRT = Virtual Image (KiB) nMaj = Major Page Faults

* RES = Resident Size (KiB) nMin = Minor Page Faults

* SHR = Shared Memory (KiB) nDRT = Dirty Pages Count

* S = Process Status WCHAN = Sleeping in Function

* %CPU = CPU Usage Flags = Task Flags <sched.h>

* %MEM = Memory Usage (RES) CGROUPS = Control Groups

* TIME+ = CPU Time, hundredths SUPGIDS = Supp Groups IDs

* COMMAND = Command Name/Line SUPGRPS = Supp Groups Names

PPID = Parent Process pid TGID = Thread Group Id

UID = Effective User Id ENVIRON = Environment vars

RUID = Real User Id vMj = Major Faults delta

RUSER = Real User Name vMn = Minor Faults delta

SUID = Saved User Id USED = Res+Swap Size (KiB)

SUSER = Saved User Name nsIPC = IPC namespace Inode

GID = Group Id nsMNT = MNT namespace Inode

GROUP = Group Name nsNET = NET namespace Inode

PGRP = Process Group Id nsPID = PID namespace Inode

TTY = Controlling Tty nsUSER = USER namespace Inode

TPGID = Tty Process Grp Id nsUTS = UTS namespace Inode

SID = Session Id

nTH = Number of Threads

P = Last Used Cpu (SMP)

After switching the last used CPU option on, you will see the column P in top that displays the number of the CPU that was last used by a process.

Using vmstat

To monitor CPU utilization, top offers a very good starting point. If that doesn’t offer you enough, you may prefer the vmstat utility. With vmstat, you can get a nice, detailed view of what is happening on your server. Of special interest is the CPU section, which contains the five most important parameters on CPU usage:

- cs: The number of context switches per second

- us: The percentage of time the CPU has spent in user space

- sy: The percentage of time the CPU has spent in system space

- id: The percentage of CPU utilization in the idle loop

- wa: The percentage of time the CPU was waiting for I/O

When working with vmstat, you should know that there are two ways to use it. Probably the most useful way to run it is in the so-called sample mode. In this mode, a sample is taken every n seconds. Specify the number of seconds between samples as an argument when starting vmstat. Running performance monitoring utilities in this way is always good, because it shows how the values develop over a given amount of time. You may find it useful, as well, to run vmstat for a limited number of samples only, by specifying a count as the second argument, as in vmstat 2 5.
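When you work with samples, keep in mind that the first line vmstat prints contains averages since boot, so it is usually left out of any calculation. The sketch below averages the cs column over the remaining samples. The name avg_cs is hypothetical, and the function locates the column through the header line rather than assuming a fixed position.

```shell
# avg_cs
# Read vmstat sample output on standard input and print the average
# number of context switches, ignoring the first sample (which holds
# averages since boot).
# (avg_cs is an illustrative name, not an existing command.)
avg_cs() {
    awk '
        $1 == "r" {                    # header line: find the cs column
            for (i = 1; i <= NF; i++) if ($i == "cs") col = i
            next
        }
        col && $1 ~ /^[0-9]+$/ {
            seen++
            if (seen == 1) next        # skip the since-boot averages
            sum += $col; n++
        }
        END {
            if (n) printf "%.0f\n", sum / n
            else print "not enough samples"
        }'
}
```

A possible invocation is vmstat 2 5 | avg_cs, which averages the last four of the five samples.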

Another useful way to run vmstat is with the option -s. In this mode, vmstat shows you the statistics since the system has booted. As you can see in Listing 15-7, apart from the CPU-related options, vmstat shows information about processors, memory, swap, io, and system as well. These options are covered later in this chapter.

Listing 15-7. Using vmstat -s

[root@lab ~]# vmstat -s

16196548 K total memory

14783440 K used memory

11201308 K active memory

3031324 K inactive memory

1413108 K free memory

3956 K buffer memory

3571580 K swap cache

4194300 K total swap

0 K used swap

4194300 K free swap

1562406 non-nice user cpu ticks

1411 nice user cpu ticks

294539 system cpu ticks

57856573 idle cpu ticks

22608 IO-wait cpu ticks

12 IRQ cpu ticks

5622 softirq cpu ticks

0 stolen cpu ticks

23019937 pages paged in

37008693 pages paged out

842 pages swapped in

3393 pages swapped out

129706133 interrupts

344528651 CPU context switches

1408204254 boot time

132661 forks

Analyzing Memory Usage

Memory is probably the most important component of your server, from a performance perspective. The CPU can only work smoothly if processes are ready in memory and can be offered from there. If this is not the case, the server has to get its data from the I/O channel, which is about 1,000 times slower to access than memory. From the processor’s point of view, even system RAM is relatively slow. Therefore, modern server processors have large amounts of cache, which is even faster than memory.

You have read how to interpret basic memory statistics, as provided by top earlier in this chapter; therefore, I will not cover them again. In this section, you can read about some more advanced memory-related information.

Page Size

A basic concept in memory handling is the memory page size. On an x86_64 system, typically 4KB pages are used. This means that everything that happens, happens in chunks of 4KB. Nothing wrong with that, if you have a server handling large amounts of small files. If, however, your server handles huge files, it is highly inefficient if only these small 4KB pages are used. For that purpose, your server can use huge pages with a default size of 2MB per page. Later in this chapter, you’ll learn how to configure huge pages.
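A quick calculation shows why the page size matters for large files. The following sketch computes how many pages are needed to hold a given number of bytes; pages_needed is a hypothetical helper name, and it uses nothing but shell arithmetic. A file of 1GiB requires 262,144 pages of 4KB, but only 512 huge pages of 2MB.

```shell
# pages_needed BYTES PAGE_SIZE_BYTES
# Print how many pages of the given size are needed to hold BYTES,
# rounding up to a whole page.
# (pages_needed is an illustrative name, not an existing command.)
pages_needed() {
    echo $(( ($1 + $2 - 1) / $2 ))
}
```

You can find the page size of your own system with getconf PAGESIZE, and pass it in as pages_needed 1073741824 "$(getconf PAGESIZE)".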

A server can run out of memory. In that event, it uses swapping. Swap memory is emulated RAM on the server’s hard drive. Because in swap the hard disk is involved, you should avoid it, if possible. Access times to a hard drive are about 1,000 times slower than access times to RAM. To monitor current swap use, you can use free -m, which will show you the amount of swap that is currently being used. See Listing 15-8 for an example.

Listing 15-8. free -m Provides Information About Swap Usage

[root@lab ~]# free -m

total used free shared buffers cached

Mem: 15816 14438 1378 475 3 3487

-/+buffers/cache: 10946 4870

Swap: 4095 0 4095

As you can see in the preceding listing, on the server where this sample comes from, nothing is wrong; there is no swap usage at all, and that is good.

If, on the other hand, you see that your server is swapping, the next thing you must know is how actively it is swapping. The vmstat utility provides this information in the si (swap in) and so (swap out) columns.

If swap space is used, you should also have a look at the /proc/meminfo file, to relate the use of swap to the amount of inactive anon (anonymous) memory pages. If the amount of swap that is in use is smaller than the amount of inactive anon memory pages in /proc/meminfo, there’s no problem, and you’re good. If, however, you have more memory in swap than the amount of inactive anon memory pages, you’re probably in trouble, because active memory is being swapped. That means that there’s too much I/O traffic, which will slow down your system, and if that happens, you must install more RAM.
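The comparison between swap in use and inactive anon memory is easy to automate. The following is a minimal Python sketch, not an official tool. The function names are made up for this example, and it assumes the SwapTotal, SwapFree, and Inactive(anon) fields that /proc/meminfo provides on current kernels.

```python
def parse_meminfo(text):
    """Return a dict that maps /proc/meminfo field names to their numeric values."""
    values = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        if not sep:
            continue
        fields = rest.split()
        if fields:
            # Most values are in kB; the unit, if present, is the second field.
            values[name.strip()] = int(fields[0])
    return values


def active_memory_swapped(text):
    """Return True if more swap is in use than there is inactive anon memory."""
    info = parse_meminfo(text)
    swap_used = info["SwapTotal"] - info["SwapFree"]
    return swap_used > info["Inactive(anon)"]
```

On a live server, you could call active_memory_swapped(open("/proc/meminfo").read()). A result of True corresponds to the situation described above, in which active memory is probably being swapped.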

Kernel Memory

When analyzing memory usage, you should also take into account the memory that is used by the kernel itself. This is called slab memory. You can see in the /proc/meminfo file the amount of slab currently in use. Normally, the amount of kernel memory that is in use is relatively small. To get more information about it, you can use the slabtop utility.

This utility provides information about the different parts (referred to as objects) of the kernel and how much memory they are using. For normal performance analysis purposes, the CACHE SIZE and NAME columns are the most interesting ones. The other columns are of interest mainly to programmers and kernel developers and, therefore, are not described in this chapter. In Listing 15-9, you can see an example of information provided by slabtop.

Listing 15-9. The slabtop Utility Provides Information About Kernel Memory Usage

Active / Total Objects (% used) : 1859018 / 2294038 (81.0%)

Active / Total Slabs (% used) : 56547 / 56547 (100.0%)

Active / Total Caches (% used) : 75 / 109 (68.8%)

Active / Total Size (% used) : 275964.30K / 327113.79K (84.4%)

Minimum / Average / Maximum Object : 0.01K / 0.14K / 15.69K

OBJS ACTIVE USE OBJ SIZE SLABS OBJ/SLAB CACHE SIZE NAME

1202526 786196 65% 0.10K 30834 39 123336K buffer_head

166912 166697 99% 0.03K 1304 128 5216K kmalloc-32

134232 134106 99% 0.19K 6392 21 25568K dentry

122196 121732 99% 0.08K 2396 51 9584K selinux_inode_security

115940 115940 100% 0.02K 682 170 2728K fsnotify_event_holder

99456 98536 99% 0.06K 1554 64 6216K kmalloc-64

79360 79360 100% 0.01K 155 512 620K kmalloc-8

70296 70296 100% 0.64K 2929 24 46864K proc_inode_cache

64512 63218 97% 0.02K 252 256 1008K kmalloc-16

38248 26376 68% 0.57K 1366 28 21856K radix_tree_node

29232 29232 100% 1.00K 1827 16 29232K xfs_inode

28332 28332 100% 0.11K 787 36 3148K sysfs_dir_cache

28242 27919 98% 0.21K 1569 18 6276K vm_area_struct

18117 17926 98% 0.58K 671 27 10736K inode_cache

14992 14150 94% 0.25K 937 16 3748K kmalloc-256

10752 10752 100% 0.06K 168 64 672K anon_vma

9376 8206 87% 0.12K 293 32 1172K kmalloc-128

8058 8058 100% 0.04K 79 102 316K Acpi-Namespace

7308 7027 96% 0.09K 174 42 696K kmalloc-96

4788 4788 100% 0.38K 228 21 1824K blkdev_requests

4704 4704 100% 0.07K 84 56 336K Acpi-ParseExt

The most interesting information a system administrator would receive from slabtop is the amount of memory a particular slab (part of the kernel) is using. If, for instance, you’ve recently performed some tasks on the file system, you may find that the inode_cache is relatively high. If that is just for a short period of time, it’s no problem. The Linux kernel allocates these caches when they are needed and can release them quickly when they’re no longer needed. If, however, you see that one slab continuously uses high amounts of memory, that might be an indication that you have some optimization to do.
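If you save slabtop output to a file, a few lines of Python are enough to list the caches that use the most memory. This is a minimal sketch that assumes the eight-column row layout shown in Listing 15-9; the function name largest_caches is made up for this example.

```python
def largest_caches(slabtop_text, count=3):
    """Return (cache_size_in_KB, name) tuples for the largest slab caches."""
    caches = []
    for line in slabtop_text.splitlines():
        fields = line.split()
        # A data row has eight columns; the seventh is the cache size, such as 123336K.
        if len(fields) != 8:
            continue
        size = fields[6]
        if not (size.endswith("K") and size[:-1].isdigit()):
            continue
        caches.append((int(size[:-1]), fields[7]))
    caches.sort(reverse=True)
    return caches[:count]
```

Applied to the output in Listing 15-9, the function returns buffer_head first, followed by proc_inode_cache and xfs_inode. The header and summary lines are skipped, because they do not match the eight-column layout.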

EXERCISE 15-3. ANALYZING KERNEL MEMORY

In this exercise, you’ll cause a little bit of stress on your server, and you’re going to use slabtop to find out which parts of the kernel are getting busy. As the Linux kernel is sophisticated and uses its resources as efficiently as possible, you won’t see huge changes, but some subtle changes can be detected anyway.

- Open two terminal windows in which you are root.

- On one terminal window, type slabtop and have a look at what the different slabs are currently doing.

- In the other terminal window, use ls -lR /. You should see the dentry cache increasing. This is the part of memory where the kernel caches directory entries.

- Once the ls -lR command has finished, type dd if=/dev/sda of=/dev/null, to create some read activity. You’ll see the buffer_head parameter increasing. These are the file system buffers that are used to cache information the dd command uses.

Using ps for Analyzing Memory

When tuning memory utilization, there is one more utility that you should never forget, and that is ps. The advantage of ps is that it gives memory usage information on all processes on your server, and it is easy to grep its output to find information about particular processes. To monitor memory usage, the ps aux command is very useful. It provides memory information in the VSZ and the RSS columns. The VSZ (Virtual Size) parameter provides information about the virtual memory that is used. This relates to the total amount of memory that is claimed by a process. The RSS (Resident Set Size) parameter refers to the amount of memory that is really in use, that is, the part that currently resides in physical RAM. Both values are shown in kilobytes. Listing 15-10 gives an example of some lines of ps aux output.

Listing 15-10. ps aux Gives Memory Usage Information for Particular Processes

USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND

root 1 0.0 0.0 53500 7664 ? Ss Aug16 0:07 /usr/lib/systemd/systemd --switched-root --system --deserialize 23

root 2 0.0 0.0 0 0 ? S Aug16 0:00 [kthreadd]

...

qemu 31274 2.0 2.5 1286920 407748 ? Sl 11:16 4:56 /usr/libexec/qemu-kvm -name vm

root 31276 0.0 0.0 0 0 ? S 11:16 0:00 [vhost-31274]

root 31280 0.0 0.0 0 0 ? S 11:16 0:00 [kvm-pit/31274]

qemu 31301 2.0 2.5 1287656 412868 ? Sl 11:16 4:58 /usr/libexec/qemu-kvm -name vm

root 31303 0.0 0.0 0 0 ? S 11:16 0:00 [vhost-31301]

root 31307 0.0 0.0 0 0 ? S 11:16 0:00 [kvm-pit/31301]

root 31314 0.0 0.0 0 0 ? S 11:16 0:00 [kworker/u8:2]

qemu 31322 2.1 2.5 1284036 413216 ? Sl 11:16 5:01 /usr/libexec/qemu-kvm -name vm

root 31324 0.0 0.0 0 0 ? S 11:16 0:00 [vhost-31322]

root 31328 0.0 0.0 0 0 ? S 11:16 0:00 [kvm-pit/31322]

qemu 31347 2.1 2.5 1284528 408636 ? Sl 11:16 5:01 /usr/libexec/qemu-kvm -name vm

root 31350 0.0 0.0 0 0 ? S 11:16 0:00 [vhost-31347]

root 31354 0.0 0.0 0 0 ? S 11:16 0:00 [kvm-pit/31347]

When looking at the output of ps aux, you may notice that there are two different kinds of processes. The names of some are between square brackets; the names of others are not. If the name of a process is between square brackets, the process is a kernel thread, which is part of the kernel itself. All other processes are “normal” processes.
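Because ps aux produces plain text in fixed columns, it is easy to process further. The following is a minimal Python sketch that sorts processes by the RSS column; the function name processes_by_rss is made up for this example, and it assumes the eleven-column layout shown in Listing 15-10.

```python
def processes_by_rss(ps_aux_text):
    """Return (rss_kb, vsz_kb, pid, command) tuples, largest RSS first."""
    rows = []
    lines = ps_aux_text.strip().splitlines()
    for line in lines[1:]:  # the first line is the column header
        # Split into at most 11 fields, so that the command keeps its arguments.
        fields = line.split(None, 10)
        if len(fields) < 11:
            continue
        rows.append((int(fields[5]), int(fields[4]), int(fields[1]), fields[10]))
    rows.sort(key=lambda row: row[0], reverse=True)
    return rows
```

Kernel threads, such as [vhost-31274], show 0 for both VSZ and RSS and therefore end up at the bottom of the result.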

If you need more information about a process and what exactly it is doing, there are two ways to get that information. First, you can check the /proc directory for the particular process, for example, /proc/5658 gives information for the process with PID 5658. In this directory, you’ll find the maps file that gives some more insight into how memory is mapped for this process. As you can see in Listing 15-11, this information is rather detailed. It includes the exact memory addresses this process is using and even tells you about subroutines and libraries that are related to this process.

Listing 15-11. The /proc/PID/maps File Gives Detailed Information on Memory Utilization of Particular Processes

00400000-004dd000 r-xp 00000000 fd:01 134326347 /usr/bin/bash

006dc000-006dd000 r--p 000dc000 fd:01 134326347 /usr/bin/bash

006dd000-006e6000 rw-p 000dd000 fd:01 134326347 /usr/bin/bash

006e6000-006ec000 rw-p 00000000 00:00 0

014d0000-015d6000 rw-p 00000000 00:00 0 [heap]

7fcae4779000-7fcaeaca0000 r--p 00000000 fd:01 201334187 /usr/lib/locale/locale-archive

7fcaeaca0000-7fcaeacab000 r-xp 00000000 fd:01 201334158 /usr/lib64/libnss_files-2.17.so

7fcaeacab000-7fcaeaeaa000 ---p 0000b000 fd:01 201334158 /usr/lib64/libnss_files-2.17.so

7fcaeaeaa000-7fcaeaeab000 r--p 0000a000 fd:01 201334158 /usr/lib64/libnss_files-2.17.so

7fcaeaeab000-7fcaeaeac000 rw-p 0000b000 fd:01 201334158 /usr/lib64/libnss_files-2.17.so

7fcaeaeac000-7fcaeb062000 r-xp 00000000 fd:01 201334140 /usr/lib64/libc-2.17.so

7fcaeb062000-7fcaeb262000 ---p 001b6000 fd:01 201334140 /usr/lib64/libc-2.17.so

7fcaeb262000-7fcaeb266000 r--p 001b6000 fd:01 201334140 /usr/lib64/libc-2.17.so

7fcaeb266000-7fcaeb268000 rw-p 001ba000 fd:01 201334140 /usr/lib64/libc-2.17.so

7fcaeb268000-7fcaeb26d000 rw-p 00000000 00:00 0

The pmap command also shows what a process is doing. It gets its information from the /proc/PID/maps file. One of the advantages of the pmap command is that it presents this information in a more readable way, including the size of each mapping. You can see the external libraries that the process has mapped, as well as additional memory allocation (malloc) requests that the program is doing, as reflected in the lines that have [anon] at the end.
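To show how the address ranges in /proc/PID/maps translate into sizes, here is a minimal Python sketch that adds up the mapped memory per file. The function name mapped_kib_by_path is made up for this example, and labeling mappings without a path as [anon] is a choice made here to mimic pmap.

```python
from collections import defaultdict


def mapped_kib_by_path(maps_text):
    """Return a dict that maps each path in a maps file to its mapped size in KB."""
    totals = defaultdict(int)
    for line in maps_text.splitlines():
        # Fields: address range, permissions, offset, device, inode, optional path
        fields = line.split(None, 5)
        if len(fields) < 5:
            continue
        start, end = (int(address, 16) for address in fields[0].split("-"))
        path = fields[5].strip() if len(fields) > 5 else "[anon]"
        totals[path] += (end - start) // 1024
    return dict(totals)
```

For the three /usr/bin/bash lines in Listing 15-11, this adds up to 924KB: 884KB for the executable code (r-xp), 4KB of read-only data, and 36KB of writable data.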

Monitoring Storage Performance

One of the hardest things to do properly is the monitoring of storage utilization. The reason is that the storage channel typically is at the end of the chain. Other elements in your server can have a positive as well as a negative influence on storage performance. For example, if your server is low on memory, that will be reflected in storage performance, because if you don’t have enough memory, there can’t be a lot of cache and buffers, and thus, your server has more work to do on the storage channel.

Likewise, a slow CPU can have a negative impact on storage performance, because the queue of runnable processes can’t be cleared fast enough. Therefore, before jumping to the conclusion that you have bad performance on the storage channel, you should really try to take other factors into consideration as well.

It is generally hard to optimize storage performance on a server. The best behavior really depends on the kind of workload your server typically has. For instance, a server that mainly reads has other needs than a server that mainly writes. A server that is doing writes most of the time can benefit from a storage channel with many disks, because more controllers can work on clearing the write buffer cache from memory. If, however, your server is mainly reading data, the effect of having many disks is just the opposite. Because of the large number of disks, seek times will increase, and therefore, performance will be negatively affected.

Following are some indicators of storage performance problems. Have a look and see if one of these is the case with your server, and if it is, go and analyze what is happening.

- Memory buffers and cache are heavily used while CPU utilization is low.

- There is high disk or controller utilization.

- Long network response times are occurring while network utilization is low.

- The wa parameter in top is very high.

Understanding Disk Working

Before trying to understand storage performance, there is another factor that you should consider, and that is the way that storage activity typically takes place. First, a storage device, in general, handles large sequential transfers better than small random transfers. This is because, in memory, you can configure read ahead and write ahead, which means that the storage controller already goes to the next block it probably has to go to. If your server handles small files mostly, read ahead buffers will not help. On the contrary, they will only slow it down.

In addition, you should be aware that in modern environments, three different types of storage devices are used. If storage is handled by a Storage Area Network (SAN), it’s often not possible to do much about storage optimization. If local storage is used, it makes a big difference if that is SSD-based storage or storage that uses rotating platters.

From the tools perspective, there are three tools that really count when doing disk performance analysis. The first tool to start your disk performance analysis is vmstat. This tool has a couple of options that help you see what is happening on a particular disk device, such as -d, which gives you statistics for individual disks, or -p, which gives partition performance statistics. As you have already seen, you can use vmstat with an interval parameter and a count parameter as well. In Listing 15-12, you can see the result of the command vmstat -d, which gives detailed information on storage utilization for all disk devices on your server.

Listing 15-12. To Understand Storage Usage, Start with vmstat

[root@lab ~]# vmstat -d

disk- ------------reads------------ ------------writes----------- -----IO------

total merged sectors ms total merged sectors ms cur sec

sda 932899 1821123 46129712 596065 938744 2512536 74210979 3953625 0 731

dm-0 1882 0 15056 537 3397 0 27160 86223 0 0

dm-1 17287 0 1226434 17917 62316 0 17270450 2186073 0 93

sdb 216 116 1686 182 0 0 0 0 0 0

dm-2 51387 0 2378598 16168 58063 0 3224216 130009 0 35

dm-3 51441 0 2402329 25443 55309 0 3250147 140122 0 40

In the output of this command, you can see detailed statistics about the reads and writes that have occurred on a disk. The following parameters are displayed when using vmstat -d:

- reads: total: The total number of reads that was requested

- reads: merged: The total number of adjacent locations that have been merged to improve performance. This is the result of the read ahead parameter. High numbers are good. A high number here means that within the same read request, a couple of adjacent blocks have been read as well.

- reads: sectors: The total number of disk sectors that has been read

- reads: ms: The total time in milliseconds your system has spent reading from disk

- writes: total: The total number of writes

- writes: merged: The total number of writes to adjacent sectors

- writes: sectors: The total number of sectors that has been written

- writes: ms: The total time in milliseconds your system has spent writing data

- IO: cur: The total number of I/O requests currently in progress

- IO: sec: The total amount of time in seconds spent waiting for I/O to complete
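The totals in vmstat -d become more meaningful if you relate them to one another. As a minimal sketch, the following Python function divides the total time by the number of requests, to get the average time per read and per write for one device. The function name average_latency_ms is made up for this example, and it assumes the column order shown in Listing 15-12.

```python
def average_latency_ms(vmstat_d_row):
    """Return (device, average ms per read, average ms per write) for one row."""
    fields = vmstat_d_row.split()
    device = fields[0]
    reads, read_ms = int(fields[1]), int(fields[4])
    writes, write_ms = int(fields[5]), int(fields[8])
    # Guard against division by zero for devices without any reads or writes.
    avg_read = read_ms / reads if reads else 0.0
    avg_write = write_ms / writes if writes else 0.0
    return device, avg_read, avg_write
```

For the sda line in Listing 15-12, this gives roughly 0.64 milliseconds per read and 4.21 milliseconds per write, averaged over the entire time since boot.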

Another way of monitoring disk performance with vmstat is by running it in sample mode. For example, the command vmstat 2 10 will run ten samples with a two-second interval. Listing 15-13 shows the result of this command.

Listing 15-13. In Sample Mode, You Can Get a Real-Time Impression of Disk Utilization

[root@lab ~]# vmstat 2 10

procs -----------memory---------- ---swap-- -----io---- -system-- ------cpu-----

r b swpd free buff cache si so bi bo in cs us sy id wa st

0 0 0 1319012 3956 3574176 0 0 36 58 26 8 3 1 97 0 0

0 0 0 1318532 3956 3574176 0 0 0 2 1212 3476 2 1 97 0 0

0 0 0 1318540 3956 3574176 0 0 0 0 1189 3469 2 1 97 0 0

0 0 0 1318788 3956 3574176 0 0 0 0 1250 3826 3 1 97 0 0

0 0 0 1317852 3956 3574176 0 0 0 0 1245 3816 3 1 97 0 0

0 0 0 1318044 3956 3574176 0 0 0 0 1208 3675 2 0 97 0 0

1 0 0 1318044 3956 3574176 0 0 0 0 1193 3384 2 1 97 0 0

0 0 0 1318044 3956 3574176 0 0 0 0 1212 3419 2 0 97 0 0

0 0 0 1318044 3956 3574176 0 0 0 0 1229 3506 2 1 97 0 0

3 0 0 1318028 3956 3574176 0 0 0 0 1227 3738 2 1 97 0 0

The columns that count in the preceding sample listing are the io: bi and io: bo columns, because they show the number of blocks that came in from the storage channel (bi) and the number of blocks that were written to the storage channel (bo).

Another tool to monitor performance on the storage channel is iostat. It is not installed by default. Use zypper in sysstat, if you don’t have it. It provides an overview per device of the number of reads and writes. In the example in Listing 15-14, you can see the following device parameters being displayed:

- tps: The number of transactions (read plus writes) that was handled per second

- kB_read/s: The number of kilobytes that was read per second (older versions of iostat report this in blocks, as Blk_read/s)

- kB_wrtn/s: The number of kilobytes that was written per second

- kB_read: The total number of kilobytes that was read since startup

- kB_wrtn: The total number of kilobytes that was written since startup

Listing 15-14. The iostat Utility Provides Information About the Number of Blocks That Was Read and Written per Second

[root@hnl ~]# iostat

Linux 3.10.0-123.el7.x86_64 (lab.sandervanvugt.nl) 08/18/2014 _x86_64_ (4 CPU)

avg-cpu: %user %nice %system %iowait %steal %idle

2.63 0.00 0.53 0.04 0.00 96.80

Device: tps kB_read/s kB_wrtn/s kB_read kB_wrtn

sda 11.28 138.98 223.59 23064928 37106736

dm-0 0.03 0.05 0.08 7528 13580

dm-1 0.48 3.70 52.04 613289 8636472

sdb 0.00 0.01 0.00 843 0

dm-2 0.66 7.17 9.71 1189299 1612108

dm-3 0.64 7.24 9.79 1201164 1625073

dm-4 0.65 7.24 9.62 1201986 1596805

dm-5 0.65 7.38 9.62 1225284 1596418

dm-6 0.65 7.38 9.57 1224767 1588105

dm-7 0.65 7.31 9.53 1213582 1582201

If, when used in this way, iostat doesn’t give you enough detail, you can use the -x option as well. This option gives much more information and, therefore, doesn’t fit on the screen nicely, in most cases. In Listing 15-15, you can see an example.

Listing 15-15. iostat -x Gives You Much More Information About What Is Happening on the Storage Channel

[root@hnl ~]# iostat -x

Linux 3.10.0-123.el7.x86_64 (lab.sandervanvugt.nl) 08/18/2014 _x86_64_ (4 CPU)

avg-cpu: %user %nice %system %iowait %steal %idle

2.63 0.00 0.53 0.04 0.00 96.80

Device: rrqm/s wrqm/s r/s w/s rkB/s wkB/s avgrq-sz avgqu-sz await r_await w_await svctm %util

sda 10.97 15.13 5.62 5.66 138.94 223.52 64.29 0.03 2.43 0.64 4.21 0.39 0.44

dm-0 0.00 0.00 0.01 0.02 0.05 0.08 8.00 0.00 16.43 0.29 25.38 0.15 0.00

dm-1 0.00 0.00 0.10 0.38 3.69 52.02 231.77 0.01 27.61 1.04 34.96 1.18 0.06

sdb 0.00 0.00 0.00 0.00 0.01 0.00 7.81 0.00 0.84 0.84 0.00 0.82 0.00

When using the -x option, iostat gives you the following information:

- rrqm/s: Reads per second merged before issued to disk. Compare this to the information in the r/s column, to find out how much gain of efficiency you have because of read ahead.

- wrqm/s: Writes per second merged before issued to disk. Compare this to the w/s parameter, to see how much performance gain you have because of write ahead.

- r/s: The number of real reads per second

- w/s: The number of real writes per second

- rkB/s: The number of kilobytes that was read per second

- wkB/s: The number of kilobytes that was written per second

- avgrq-sz: The average size of disk requests in sectors. This parameter provides important information, as it shows you the size of the average files that were requested from disk. Based on the information that you receive from this parameter, you can optimize your file system.

- avgqu-sz: The average size of the disk request queue. This should be low at all times, because it gives the number of pending disk requests. A high number here means that your storage channel cannot keep up with the requests it receives.

- await: The average waiting time in milliseconds. This is the time the request has been waiting in the I/O queue plus the time that it actually took to service this request. This parameter should also be low in all cases.

- svctm: The average service time in milliseconds. This is the time it took to service a request once it was issued to the disk. If this parameter is below a couple of milliseconds (never more than ten), nothing is wrong with your server. If, however, this parameter is higher than ten, something is wrong, and you should consider doing some storage optimization.

- %util: The percentage of time during which the device was busy handling I/O requests. A value close to 100 percent means that the device is saturated.
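To compare the merged requests to the requests that were really issued, you can turn a line of iostat -x output into named values. The following is a minimal Python sketch with made-up function names. Treating the sum of merged and issued requests as the total number of incoming requests is a simplification that is made here for illustration only.

```python
def parse_iostat_row(header_line, device_line):
    """Combine an iostat -x header line and a device line into a dict."""
    names = header_line.split()
    values = device_line.split()
    # The first column holds the device name; all other columns are numbers.
    stats = dict(zip(names[1:], (float(value) for value in values[1:])))
    stats["device"] = values[0]
    return stats


def merged_share(merged_per_second, issued_per_second):
    """Return the fraction of requests that was merged before reaching the disk."""
    total = merged_per_second + issued_per_second
    return merged_per_second / total if total else 0.0
```

With the sda line from Listing 15-15, merged_share(stats["rrqm/s"], stats["r/s"]) comes out at about 0.66, which suggests that roughly two out of three read requests were merged with an adjacent request.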

Finding Most Busy Processes with iotop

The most useful tool to analyze I/O performance on a server is iotop. This tool also is not installed by default. Use zypper install iotop to install it. Running iotop is as easy as running top. Just start the utility, and you will see which process is causing you an I/O headache. The busiest process is listed on top, and you can also see details about the reads and writes that this process performs (see Listing 15-16).

Within iotop, you’ll see two different kinds of processes. There are processes whose name is written between square brackets. These are kernel processes that aren’t loaded as a separate binary but are a part of the kernel itself. All other processes listed are normal binaries.

Listing 15-16. Analyzing I/O Performance with iotop

[root@hnl ~]# iotop

Total DISK READ : 0.00 B/s | Total DISK WRITE : 0.00 B/s

Actual DISK READ: 0.00 B/s | Actual DISK WRITE: 0.00 B/s

TID PRIO USER DISK READ DISK WRITE SWAPIN IO> COMMAND

24960 be/4 root 0.00 B/s 0.00 B/s 0.00 % 0.01 % [kworker/1:2]

1 be/4 root 0.00 B/s 0.00 B/s 0.00 % 0.00 % systemd --switche~ --deserialize 23

2 be/4 root 0.00 B/s 0.00 B/s 0.00 % 0.00 % [kthreadd]

3 be/4 root 0.00 B/s 0.00 B/s 0.00 % 0.00 % [ksoftirqd/0]

16388 be/4 qemu 0.00 B/s 0.00 B/s 0.00 % 0.00 % qemu-kvm -name vm~us=pci.0,addr=0x7

5 be/0 root 0.00 B/s 0.00 B/s 0.00 % 0.00 % [kworker/0:0H]

16390 be/4 root 0.00 B/s 0.00 B/s 0.00 % 0.00 % [vhost-16388]

7 rt/4 root 0.00 B/s 0.00 B/s 0.00 % 0.00 % [migration/0]

8 be/4 root 0.00 B/s 0.00 B/s 0.00 % 0.00 % [rcu_bh]

9 be/4 root 0.00 B/s 0.00 B/s 0.00 % 0.00 % [rcuob/0]

10 be/4 root 0.00 B/s 0.00 B/s 0.00 % 0.00 % [rcuob/1]

11 be/4 root 0.00 B/s 0.00 B/s 0.00 % 0.00 % [rcuob/2]

12 be/4 root 0.00 B/s 0.00 B/s 0.00 % 0.00 % [rcuob/3]

Normally, you would start analyzing I/O performance because of an abnormality in the regular I/O load. For example, you may find a high wa indicator in top. In Exercise 15-4, you’ll explore an I/O problem using this approach.

EXERCISE 15-4. EXPLORING I/O PERFORMANCE

In this exercise, you’ll start a couple of I/O-intensive tasks. You’ll first see abnormal behavior occurring in top, after which you’ll use iotop to explore what is going on.

- Open two root shells. In one shell, run top. In the second shell, start the command dd if=/dev/sda of=/dev/null. Run this command four times.

- Observe what happens in top. You will notice that the wa parameter goes up. Press 1. If you’re using a multi-core system, you should also see that the workload is evenly load-balanced between cores.

- Start iotop. You will see that the four dd processes are listed on top, but you’ll notice no other kernel processes that are significantly high in the list.

- Use find / -exec xxd {} \; to create some read activity. In iotop, you should see the process itself listed near the top, but no further significant workload.

- Create a script with the following content:

#!/bin/bash

# Keep copying and removing files to generate write activity; stop with Ctrl-C

while true

do

cp -R / /blah.tmp

rm -rf /blah.tmp

sync

done

- Run the script and observe the list of processes in iotop. You should occasionally see the flush process doing a lot of work. This is to synchronize the newly written files back from the buffer cache to disk.

Understanding Network Performance

On a typical server, network performance is as important as disk, memory, and CPU performance. After all, the data has to be delivered over the network to the end user. The problem, however, is that things aren’t always as they seem. In some cases, a network problem can be caused by a shortage of server RAM. If, for example, packets get dropped on the network, the reason may very well be that your server just doesn’t have enough buffers reserved for receiving packets, which may be because your server is low on memory. Again, everything is related, and it’s your task to find the real cause of the troubles.

When considering network performance, you should always ask yourself what exactly you want to know. As you are aware, several layers of communication are used on the network. If you want to analyze a problem with your Samba server, that requires a completely different approach from analyzing a problem with dropped packets. A good network performance analysis always works bottom-up. That means that you first have to check what is happening at the physical layer of the OSI model and then go up through the Ethernet, IP, TCP/UDP, and protocol layers.

When analyzing network performance, you should always start by checking the status of the network interface itself. Don’t use ifconfig; it really is a deprecated utility. Use ip -s link instead (see Listing 15-17).

Listing 15-17. Use ip -s link to See What Is Happening on Your Network Board

[root@vm8 ~]# ip -s link

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

RX: bytes packets errors dropped overrun mcast

0 0 0 0 0 0

TX: bytes packets errors dropped carrier collsns

0 0 0 0 0 0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP mode DEFAULT qlen 1000

link/ether 52:54:00:30:3f:94 brd ff:ff:ff:ff:ff:ff

RX: bytes packets errors dropped overrun mcast

2824323 53309 0 0 0 0

TX: bytes packets errors dropped carrier collsns

8706 60 0 0 0 0

The most important information that is given by ip -s link is the number of packets that have been transmitted and received.

It’s not so much the total number of packets that is of interest here but, mainly, the number of erroneous packets. In fact, all of the error counters should be 0 at all times. If you see anything else, you should check what is going on. The following error indicators are displayed:

- Errors: The number of packets that had an error. Typically, this is due to bad cabling or a duplex mismatch. In modern networks, duplex settings are detected automatically, and most of the time, that goes quite well. So, if you see an increasing number here, it might be a good idea to replace the patch cable to your server.

- Dropped: A packet gets dropped if there is no memory available on the server to receive it. This typically happens on a server that is running out of memory, so make sure that you have enough physical memory installed in your server.

- Overruns: An overrun will occur if your NIC gets overwhelmed with packets. If you are using up-to-date hardware, overruns may indicate that someone is doing a denial of service attack on your server.

- Carrier: The carrier is the electrical wave that is used for modulation of the signal. It really is the component that carries the data over your network. The error counter should be 0 at all times, and if it isn’t, you probably have a physical problem with the network board, so it’s time to replace the network board itself.

- Collisions: You may see this error in Ethernet networks where a hub is used instead of a switch. Modern switches make packet collisions impossible, so you will probably never see this error anymore.
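Checking these counters by eye works for one or two interfaces, but on a server with many interfaces, you may want to automate it. The following is a minimal Python sketch that parses saved ip -s link output in the layout shown in Listing 15-17 and reports every error counter that is not 0. The function names are made up for this example, and other versions of ip may use a slightly different layout.

```python
import re

# Columns that count regular traffic rather than problems
TRAFFIC_COLUMNS = {"bytes", "packets", "mcast"}


def link_counters(ip_output):
    """Return {interface: {"RX": {column: value}, "TX": {column: value}}}."""
    result = {}
    iface = None
    pending = None
    for line in ip_output.splitlines():
        line = line.strip()
        if not line:
            continue
        match = re.match(r"^\d+:\s+([^:@\s]+)", line)
        if match:
            # A line such as "2: eth0: <...>" starts a new interface.
            iface = match.group(1)
            result[iface] = {}
            pending = None
        elif iface and line.startswith(("RX:", "TX:")):
            # This line names the columns; the values follow on the next line.
            direction, *names = line.split()
            pending = (direction.rstrip(":"), names)
        elif iface and pending:
            direction, names = pending
            values = [int(value) for value in line.split()]
            result[iface][direction] = dict(zip(names, values))
            pending = None
    return result


def nonzero_error_counters(counters):
    """Return (interface, direction, column, value) for each error counter above 0."""
    problems = []
    for iface, directions in counters.items():
        for direction, columns in directions.items():
            for name, value in columns.items():
                if name not in TRAFFIC_COLUMNS and value > 0:
                    problems.append((iface, direction, name, value))
    return problems
```

For the output in Listing 15-17, nonzero_error_counters returns an empty list, which is exactly what you want to see.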

If you see a problem when using ip -s link, the next step should be to check your network board settings. Use ethtool to find out the settings you’re currently using and make sure they match the settings of other network components, such as switches. (Note that this command does not work on many KVM virtual machines.) Listing 15-18 shows what you can expect.

Listing 15-18. Use ethtool to Check Settings of Your Network Board

[root@lab ~]# ethtool eno1

Settings for eno1:

Supported ports: [ TP ]

Supported link modes: 10baseT/Half 10baseT/Full

100baseT/Half 100baseT/Full

1000baseT/Full

Supported pause frame use: No

Supports auto-negotiation: Yes

Advertised link modes: 10baseT/Half 10baseT/Full

100baseT/Half 100baseT/Full

1000baseT/Full

Advertised pause frame use: No

Advertised auto-negotiation: Yes

Speed: 1000Mb/s

Duplex: Full

Port: Twisted Pair

PHYAD: 2

Transceiver: internal

Auto-negotiation: on

MDI-X: off (auto)

Supports Wake-on: pumbg

Wake-on: g

Current message level: 0x00000007 (7)

drv probe link

Link detected: yes

Typically, there are just a few parameters from the ethtool output that are of interest, and these are the Speed and Duplex settings. They show you how your network board is talking to other nodes. If you see, for example, that your server is set to full duplex, whereas all other nodes in your network use half duplex, you’ve found your problem and know what you need to fix. Duplex setting misconfigurations are becoming more and more uncommon, however. A common error is that the supported link speed cannot be reached. If a network card supports gigabit, but only gives 100Mbit/s, that is often due to a hardware misconfiguration of one of the network devices that is involved.
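If you have to check these settings on many servers, you can extract them from saved ethtool output. The following is a minimal Python sketch; the function name link_settings is made up for this example, and it assumes the "Name: value" layout shown in Listing 15-18.

```python
WANTED = ("Speed", "Duplex", "Auto-negotiation", "Link detected")


def link_settings(ethtool_output):
    """Return a dict with the speed, duplex, and related settings as strings."""
    settings = {}
    for line in ethtool_output.splitlines():
        # Split at the first colon only, so values that contain colons stay intact.
        key, sep, value = line.strip().partition(":")
        if sep and key in WANTED:
            settings[key] = value.strip()
    return settings
```

With the output from Listing 15-18, the result contains "1000Mb/s" for Speed and "Full" for Duplex. A script could compare these values to what you expect for that server and warn you about any difference.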

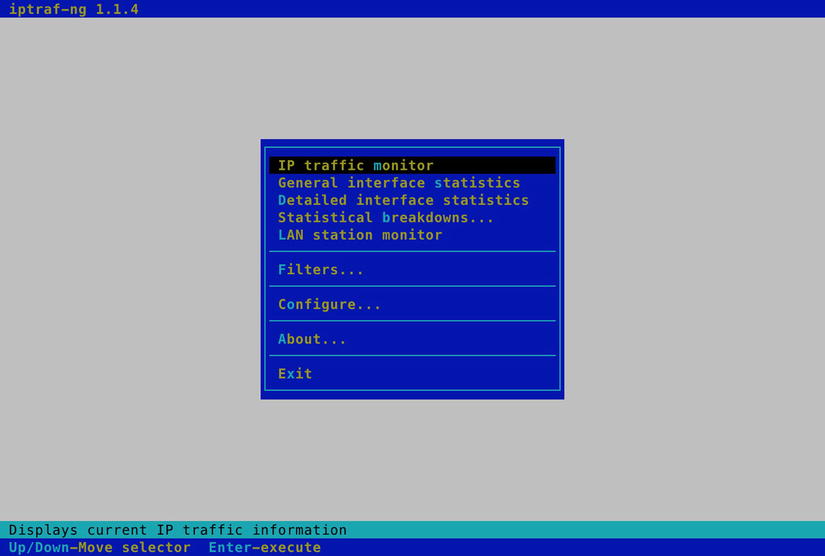

Another good tool with which to monitor what is happening on the network is IPTraf-ng (start it by typing iptraf-ng). This useful tool, however, is not included in the default installation or SLES repositories. You can download the RPM from the Internet, after which it can be installed manually. This is a real-time monitoring tool that shows what is happening on the network from a text-user interface. After starting, it will show you a menu from which you can choose what you want to see. Different useful filtering options are offered. (See Figure 15-1.)

Figure 15-1. IPTraf allows you to analyze network traffic from a menu

Before starting IPTraf, use the configure option. From there, you can specify exactly what you want to see and how you want it to be displayed. For instance, a useful setting to change is the additional port range. By default, IPTraf shows activity on privileged TCP/UDP ports only. If you have a specific application that you want to monitor that doesn’t use one of these privileged ports, select Additional ports from the configuration interface and specify additional ports that you want to monitor. (See Figure 15-2.)

Figure 15-2. Use the filter options to select what you want to see

After telling IPTraf how to do its work, use the IP traffic monitor option to start the tool. Next, you can select the interface on which you want to listen, or just hit Enter to listen on all interfaces. This will start the IPTraf interface, which displays everything that is going on at your server and also exactly on what port it is happening. In Figure 15-3, you can see that the server that is monitored currently has two sessions enabled, and also you can see which are the IP addresses and ports involved in that session.

Figure 15-3. IPTraf gives a quick overview of the kind of traffic sent on an interface

If it’s not so much the performance on the network board that you are interested in but more what is happening at the service level, netstat is a good basic network performance tool. It uses different parameters to show you what ports are open and on what ports your server sees activity. My personal favorite way of using netstat is by issuing the netstat -tulpn command. This gives an overview of all listening ports on the server and even tells you which program has opened a particular port. See Listing 15-19 for an overview.

Listing 15-19. With netstat, You Can See What Ports Are Listening on Your Server and Who Is Connected

[root@lab ~]# netstat -tulpn

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 127.0.0.1:5913 0.0.0.0:* LISTEN 31322/qemu-kvm

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 1980/master

tcp 0 0 127.0.0.1:5914 0.0.0.0:* LISTEN 31347/qemu-kvm

tcp 0 0 127.0.0.1:6010 0.0.0.0:* LISTEN 28676/sshd: sander@

tcp 0 0 0.0.0.0:48702 0.0.0.0:* LISTEN 1542/rpc.statd

tcp 0 0 0.0.0.0:2022 0.0.0.0:* LISTEN 1509/sshd

tcp 0 0 127.0.0.1:5900 0.0.0.0:* LISTEN 13719/qemu-kvm

tcp 0 0 127.0.0.1:5901 0.0.0.0:* LISTEN 16388/qemu-kvm

tcp 0 0 127.0.0.1:5902 0.0.0.0:* LISTEN 18513/qemu-kvm

tcp 0 0 127.0.0.1:5903 0.0.0.0:* LISTEN 18540/qemu-kvm

tcp 0 0 0.0.0.0:111 0.0.0.0:* LISTEN 1498/rpcbind

tcp 0 0 127.0.0.1:5904 0.0.0.0:* LISTEN 18450/qemu-kvm

tcp 0 0 127.0.0.1:5905 0.0.0.0:* LISTEN 18919/qemu-kvm

tcp 0 0 127.0.0.1:5906 0.0.0.0:* LISTEN 19542/qemu-kvm

tcp 0 0 127.0.0.1:5907 0.0.0.0:* LISTEN 19586/qemu-kvm

tcp 0 0 127.0.0.1:5908 0.0.0.0:* LISTEN 19631/qemu-kvm

tcp 0 0 127.0.0.1:5909 0.0.0.0:* LISTEN 24773/qemu-kvm

tcp 0 0 192.168.122.1:53 0.0.0.0:* LISTEN 2939/dnsmasq

tcp 0 0 127.0.0.1:5910 0.0.0.0:* LISTEN 31234/qemu-kvm

tcp 0 0 127.0.0.1:5911 0.0.0.0:* LISTEN 31274/qemu-kvm

tcp 0 0 127.0.0.1:631 0.0.0.0:* LISTEN 3228/cupsd

tcp 0 0 127.0.0.1:5912 0.0.0.0:* LISTEN 31301/qemu-kvm

tcp6 0 0 ::1:25 :::* LISTEN 1980/master

tcp6 0 0 ::1:6010 :::* LISTEN 28676/sshd: sander@

tcp6 0 0 :::2022 :::* LISTEN 1509/sshd

tcp6 0 0 :::111 :::* LISTEN 1498/rpcbind

tcp6 0 0 :::58226 :::* LISTEN 1542/rpc.statd

tcp6 0 0 :::21 :::* LISTEN 25370/vsftpd

tcp6 0 0 fe80::fc54:ff:fe88:e:53 :::* LISTEN 2939/dnsmasq

tcp6 0 0 ::1:631 :::* LISTEN 3228/cupsd

udp 0 0 192.168.122.1:53 0.0.0.0:* 2939/dnsmasq

udp 0 0 0.0.0.0:67 0.0.0.0:* 2939/dnsmasq

udp 0 0 0.0.0.0:111 0.0.0.0:* 1498/rpcbind

udp 0 0 0.0.0.0:123 0.0.0.0:* 926/chronyd

udp 0 0 127.0.0.1:323 0.0.0.0:* 926/chronyd

udp 0 0 0.0.0.0:816 0.0.0.0:* 1498/rpcbind

udp 0 0 127.0.0.1:870 0.0.0.0:* 1542/rpc.statd

udp 0 0 0.0.0.0:35523 0.0.0.0:* 891/avahi-daemon: r

udp 0 0 0.0.0.0:52582 0.0.0.0:* 1542/rpc.statd

udp 0 0 0.0.0.0:5353 0.0.0.0:* 891/avahi-daemon: r

When using netstat, many options are available. The following list gives an overview of the most interesting ones:

- p: Shows the PID of the program that has opened a port

- c: Updates the display every second

- s: Shows statistics for IP, UDP, TCP, and ICMP

- t: Shows TCP sockets

- u: Shows UDP sockets

- w: Shows RAW sockets

- l: Shows listening ports

- n: Shows numerical addresses and port numbers instead of resolving them to names
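To show how these options can be combined with other tools, here is a minimal sketch that summarizes netstat output per program. The function name listeners_per_program is hypothetical; it reads the output of netstat -tlpn on standard input and assumes the column layout shown in Listing 15-19.

```shell
#!/bin/sh
# Count listening TCP sockets per program from `netstat -tlpn` output on stdin.
# listeners_per_program is a hypothetical helper name, not part of netstat.
listeners_per_program() {
    awk '$6 == "LISTEN" {
             split($7, part, "/")               # "31322/qemu-kvm" -> PID, program
             name = part[2]
             sub(/:$/, "", name)                # "sshd:" (from "sshd: user@") -> "sshd"
             if (name == "") name = "unknown"   # no PID shown, e.g. when not root
             count[name]++
         }
         END { for (name in count) print count[name], name }' | sort -k1,1nr -k2
}

# Typical use (run as root so that the PID/Program column is filled in):
# netstat -tlpn | listeners_per_program
```

On a server like the one in Listing 15-19, the qemu-kvm line would come first, because every virtual machine opens its own listening port.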

There are many other tools to monitor the network as well, but most of them fall beyond the scope of this chapter, because they are rather protocol- or service-specific and won’t help you as much in finding performance problems on the network. There is, however, one very simple performance-testing method that I always use when analyzing a performance problem, which I will talk about at the end of this section.

In many cases, to judge network performance, you’re only interested in knowing how fast data can be copied to and from your server. After all, that’s the only parameter that you can change. To measure that, you can use a simple test. I like to create a big file (1GB, for example) and copy that over the network. To measure time, I use the time command, which gives a clear impression of how long it really took to copy the file. For example, time scp server:/bigfile /localdir will end with a summary of the total time it took to copy the file over. This is an excellent test, especially when you start optimizing performance, as it will show you immediately whether or not you’ve reached your goals.
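The real time reported by time is easier to compare between runs when you convert it to a transfer rate. The following is a small sketch of that arithmetic; the function name throughput_mib_s is hypothetical, and the numbers in the example call are made up for illustration.

```shell
#!/bin/sh
# throughput_mib_s BYTES SECONDS: print the transfer rate in MiB/s.
# Hypothetical helper; it only does the division, it does not copy anything.
throughput_mib_s() {
    awk -v bytes="$1" -v secs="$2" 'BEGIN {
        if (secs <= 0) { print "n/a"; exit }
        printf "%.1f\n", bytes / 1048576 / secs
    }'
}

# Example: a 1GB file (1073741824 bytes) copied in 16 seconds of real time.
throughput_mib_s 1073741824 16   # prints 64.0
```

Run the same copy before and after a change, and compare the two rates rather than relying on a single measurement.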

Optimizing Performance

Now that you know what to look for in your server’s performance, it’s time to start optimizing. Optimizing performance is a complicated job, and you shouldn’t have the impression that after reading the tips in this chapter you know everything about server performance optimization. Nevertheless, it’s good to know about at least some of the basic approaches to make your server perform better.

You can look at performance optimization in two different ways. For some people, it involves just changing some parameters and seeing what happens. That is not the best approach. A much better approach is when you first start with performance monitoring. This will give you some clear ideas on what exactly is happening with performance on your server. Before optimizing anything, you should know what exactly to optimize. For example, if the network performs poorly, you should know if that is because of problems on the network, or just because you don’t have enough memory allocated for the network. So make sure you know what to optimize. You’ve just read in the previous sections how you can do this.

Once you know what to optimize, it comes down to doing it. In many situations, optimizing performance means writing a parameter to the /proc file system. This file system is created by the kernel when your server comes up and normally contains the settings that your kernel is working with. Under /proc/sys, you’ll find many system parameters that can be changed. The easy way to do this is by just echoing the new value to the configuration file. For example, the /proc/sys/vm/swappiness file contains a value that indicates how willing your server is to swap. The range of this value is between 0 and 100. A low value means that your server will avoid swapping as long as possible; a high value means that your server is more willing to swap. The default value in this file is 60. If you think your server is too eager to swap, you could change it, using the following:

echo "30" > /proc/sys/vm/swappiness
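Before changing a value in /proc/sys, it helps to note the old value, so that you can go back to it if the change makes things worse. A minimal sketch of that habit follows; set_tunable is a hypothetical helper name, and it does nothing more than wrap cat and a redirect.

```shell
#!/bin/sh
# set_tunable FILE VALUE: show the old value, write the new one, confirm.
# set_tunable is a hypothetical helper; it only wraps cat and a redirect.
set_tunable() {
    file=$1
    new=$2
    old=$(cat "$file") || return 1
    printf '%s\n' "$new" > "$file" || return 1
    # Read the file back, so the message shows what the kernel accepted.
    printf '%s: %s -> %s\n' "$file" "$old" "$(cat "$file")"
}

# As root:
# set_tunable /proc/sys/vm/swappiness 30
```

The helper takes the file name as an argument, so you can use it for any other parameter under /proc/sys as well.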

Writing a value directly to /proc works well, but there is a problem: as soon as the server restarts, you will lose this value. So the better solution is to store it in a configuration file and make sure that the configuration file is read when your server comes up again. A configuration file exists for this purpose, and the name of the file is /etc/sysctl.conf. When booting, your server starts the sysctl service, which reads this configuration file and applies all settings in it.

In /etc/sysctl.conf, you refer to files that exist in the /proc/sys hierarchy. So the name of the file you are referring to is relative to this directory. Also, instead of using a slash as the separator between directory, subdirectories, and files, it is common to use a dot (even if the slash is accepted as well). That means that to apply the change to the swappiness parameter as explained above, you would include the following line in /etc/sysctl.conf:

vm.swappiness=30
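The mapping between a file under /proc/sys and its sysctl name is mechanical, as the following sketch shows. Both function names are hypothetical. The sketch assumes that no path component contains a dot itself, which is not always true (network interface names can contain dots, for example).

```shell
#!/bin/sh
# proc_to_sysctl PATH: /proc/sys/vm/swappiness -> vm.swappiness
# sysctl_to_proc NAME: vm.swappiness -> /proc/sys/vm/swappiness
# Hypothetical helpers; they assume no component contains a literal dot.
proc_to_sysctl() {
    printf '%s\n' "${1#/proc/sys/}" | tr '/' '.'
}

sysctl_to_proc() {
    printf '/proc/sys/%s\n' "$(printf '%s' "$1" | tr '.' '/')"
}

proc_to_sysctl /proc/sys/vm/swappiness   # prints vm.swappiness
sysctl_to_proc vm.swappiness             # prints /proc/sys/vm/swappiness
```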

A line such as vm.swappiness=30 in /etc/sysctl.conf is applied the next time that your server reboots. Instead of just writing it to the configuration file, you can apply it to the current sysctl settings as well. To do that, use the sysctl command. The following command can be used to apply this setting immediately:

sysctl -w vm.swappiness=30

Using sysctl -w has exactly the same effect as the echo "30" > /proc/sys/vm/swappiness command; it does not write the setting to the sysctl.conf file. The most practical way of applying these settings is to write them to /etc/sysctl.conf first and then activate them using sysctl -p /etc/sysctl.conf. Once activated in this way, you can also get an overview of all current sysctl settings, using sysctl -a. In Listing 15-20, you can see a part of the output of this command.

Listing 15-20. sysctl -a Shows All Current sysctl Settings

vm.min_free_kbytes = 67584

vm.min_slab_ratio = 5

vm.min_unmapped_ratio = 1

vm.mmap_min_addr = 4096

vm.nr_hugepages = 0

vm.nr_hugepages_mempolicy = 0

vm.nr_overcommit_hugepages = 0

vm.nr_pdflush_threads = 0

vm.numa_zonelist_order = default

vm.oom_dump_tasks = 1

vm.oom_kill_allocating_task = 0

vm.overcommit_kbytes = 0

vm.overcommit_memory = 0

vm.overcommit_ratio = 50

vm.page-cluster = 3

vm.panic_on_oom = 0

vm.percpu_pagelist_fraction = 0

vm.scan_unevictable_pages = 0

vm.stat_interval = 1

vm.swappiness = 60

vm.user_reserve_kbytes = 131072

vm.vfs_cache_pressure = 100

vm.zone_reclaim_mode = 0

The output of sysctl -a is overwhelming, as all the kernel tunables are shown, and there are hundreds of them. I recommend that you use it in combination with grep, to find the information you need. For example, sysctl -a | grep huge would only show you lines that have the text huge in their output.
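The approach of writing a setting to /etc/sysctl.conf first and activating it afterward can be sketched as a small helper. The name persist_sysctl is hypothetical. The sketch assumes one key=value assignment per line and replaces an existing assignment of the same key instead of adding a second one.

```shell
#!/bin/sh
# persist_sysctl KEY VALUE [CONF]: make CONF contain exactly one KEY=VALUE line.
# persist_sysctl is a hypothetical helper; CONF defaults to /etc/sysctl.conf.
persist_sysctl() {
    key=$1
    value=$2
    conf=${3:-/etc/sysctl.conf}
    tmp=$(mktemp) || return 1
    [ -f "$conf" ] || : > "$conf" || return 1
    # Copy every line except existing assignments of KEY (exact string match).
    awk -v k="$key" '{
        line = $0
        sub(/^[ \t]+/, "", line)
        n = split(line, kv, /[ \t]*=/)
        if (n == 0 || kv[1] != k) print
    }' "$conf" > "$tmp" || return 1
    printf '%s=%s\n' "$key" "$value" >> "$tmp"
    # Overwrite in place, so that CONF keeps its owner and permissions.
    cat "$tmp" > "$conf" && rm -f "$tmp"
}

# As root, write the setting, activate it, and verify the result:
# persist_sysctl vm.swappiness 30 && sysctl -p /etc/sysctl.conf
# sysctl -a | grep swappiness
```

Comments and all other settings in the file are left untouched, so you can run the helper repeatedly while testing different values.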

Using a Simple Performance Optimization Test

Although sysctl and its configuration file sysctl.conf are very useful tools to change performance-related settings, you shouldn’t use them immediately. Before writing a parameter to the system, make sure this really is the parameter you need. The big question, though, is how to know that for sure. There’s only one answer to that: testing. Before starting any test, be aware that tests always have their limitations. The test proposed here is far from perfect, and you shouldn’t use this test alone to draw definitive conclusions about the performance optimization of your server. Nevertheless, it gives a good impression especially of the write performance on your server.

The test consists of creating a 1GB file, using the following:

dd if=/dev/zero of=/root/1GBfile bs=1M count=1024

By copying this file around and measuring the time it takes to copy it, you can get a decent idea of the effect of some of the parameters. Many tasks you perform on your Linux server are I/O-related, so this simple test can give you an impression of whether or not there is any improvement. To measure the time it takes to copy this file, use the time command, followed by cp, as in time cp /root/1GBfile /tmp. In Listing 15-21, you can see what this looks like when doing it on your server.

Listing 15-21. Timing How Long It Takes to Copy a Large File Around, to Get an Idea of the Current Performance of Your Server

[root@hnl ~]# dd if=/dev/zero of=/1Gfile bs=1M count=1024

1024+0 records in

1024+0 records out

1073741824 bytes (1.1 GB) copied, 16.0352 s, 67.0 MB/s

[root@hnl ~]# time cp /1Gfile /tmp

real 0m20.469s

user 0m0.005s

sys 0m7.568s

The time command gives you three different indicators: the real time, the user time, and the sys (system) time it took to complete the command. Real time is the elapsed (wall-clock) time from initiation to completion of the command. User time is the CPU time the command spent executing in user space, and sys time is the CPU time the kernel spent working on behalf of the command. When doing a test such as this, it is important to interpret it in the right way. Consider, for example, Listing 15-22, in which the same command is repeated a few seconds later.

Listing 15-22. The Same Test, Ten Seconds Later

[root@hnl ~]# time cp /1Gfile /tmp

real 0m33.511s

user 0m0.003s

sys 0m7.436s
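One way to read these numbers is to subtract the user and sys time from the real time. For a single-threaded command such as cp, what remains is roughly the time the command spent waiting, mostly for I/O. The following sketch does that subtraction for both runs; wait_seconds is a hypothetical helper name.

```shell
#!/bin/sh
# wait_seconds REAL USER SYS: wall-clock time not accounted for by CPU time.
# Hypothetical helper; only meaningful for single-threaded commands.
wait_seconds() {
    awk -v r="$1" -v u="$2" -v s="$3" 'BEGIN { printf "%.3f\n", r - u - s }'
}

wait_seconds 20.469 0.005 7.568   # first run (Listing 15-21), prints 12.896
wait_seconds 33.511 0.003 7.436   # second run (Listing 15-22), prints 26.072
```

The CPU time is nearly identical in both runs; only the waiting time has changed.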

As you can see, the command is now slower than the first time it was run. This is only in real time, however, and not in sys time. Is this the result of a performance parameter that I’ve changed in between? No, but let’s have a look at the result of free -m, as in Listing 15-23.

Listing 15-23. Take Other Factors into Consideration

root@hnl:~# free -m

total used free shared buffers cached

Mem: 3987 2246 1741 0 17 2108

-/+buffers/cache: 119 3867

Swap: 2047 0 2047

Any idea what has happened here? The entire 1GB file was put in cache when the command was first executed. As you can see, free -m shows almost 2GB of data in cache that wasn’t there before, and that influences the time it takes to copy a large file around.
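To watch this effect yourself, you can read the size of the page cache before and after each copy. The following sketch takes the value from the Cached: line in /proc/meminfo, which is reported in kB; the function name cached_mib is hypothetical.

```shell
#!/bin/sh
# cached_mib [FILE]: print the page cache size in MiB (Cached: line of meminfo).
# Hypothetical helper; FILE defaults to /proc/meminfo.
cached_mib() {
    awk '$1 == "Cached:" { print int($2 / 1024) }' "${1:-/proc/meminfo}"
}

# Typical use around a test run:
# cached_mib; time cp /1Gfile /tmp; cached_mib
```

If the second number is about 1,000 higher than the first, the file that you have just copied is now in cache.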

So what lesson is there to learn? Performance optimization is complex. You have to take into account multiple factors that all have an influence on the performance of your server. Only when this is done the right way will you truly see how your server performs and whether or not you have succeeded in improving its performance. When not looking properly, you may miss things and think you have improved performance, while in reality, you have worsened it. So, it is important to develop reliable procedures for performance testing and stick to them.
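As a starting point for such a procedure, the following sketch repeats the copy test a few times and tries to start every run from the same state. It assumes that you run it as root, so that it can empty the page cache by writing 3 to /proc/sys/vm/drop_caches; without that permission, it skips this step. The name timed_copy is hypothetical, and the timing is in whole seconds only.

```shell
#!/bin/sh
# timed_copy SRC DSTDIR [RUNS]: copy SRC to DSTDIR several times and print
# the elapsed whole seconds of each run. Hypothetical helper.
timed_copy() {
    src=$1
    dstdir=$2
    runs=${3:-3}
    i=1
    while [ "$i" -le "$runs" ]; do
        sync
        # Start each run with a cold page cache when we are allowed to (root).
        if [ -w /proc/sys/vm/drop_caches ]; then
            { echo 3 > /proc/sys/vm/drop_caches; } 2>/dev/null || true
        fi
        start=$(date +%s)
        cp "$src" "$dstdir"/ || return 1
        sync                      # include the time needed to flush to disk
        end=$(date +%s)
        echo "run $i: $((end - start)) s"
        i=$((i + 1))
    done
}

# Example, using the file from the previous test:
# timed_copy /1Gfile /tmp 3
```

The sync after cp is a design choice: it makes sure that the time needed to write the data to disk is part of the measurement, instead of being left in the write cache.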

CPU Tuning

In this section, you’ll learn what you can do to optimize the performance of your server’s CPU. First, you’ll learn about some aspects of the working of the CPU that are important when trying to optimize performance parameters for the CPU. After that, you’ll read about some common techniques to optimize CPU utilization.

Understanding CPU Performance