Chapter 2. With a License to Intend

Intentionality is that elusive quality that describes purpose. It is a distinctly human judgement. When we intend something, it contributes meaning to our grand designs. We measure our lives by this sense of purpose. It is an intensely sensitive issue for us. Purpose is entirely in the eye of the beholder, and we are the beholders.

An Imposition Too Far

Throwing a ball to someone, without warning, is an imposition. There was no preplanned promise that advertised the intention up front; the ball was simply aimed and thrown. The imposition obviously does not imply an obligation to catch the ball. The imposee (catcher) might not be able to receive the imposition, or might be unwilling to receive it. Either way, the autonomy of the catcher is not compromised by the fact that the thrower attempts to impose upon it.

The thrower might view the purpose of the imposition as an act of kindness, for example, inviting someone to join in the fun. The recipient might simply be annoyed by the imposition, being uninterested in sport or busy with something else.

In the second part of Figure 2-1, the two players have promised to throw and catch, perhaps as part of the agreement to play a game. Was this a rule imposed on them? Perhaps, but in that case they accepted it and decided to promise compliance. If not, perhaps they made up the “rule” themselves. A rule is merely a seed for voluntary cooperation.

Figure 2-1. An imposition does not imply coercion. Autonomous agents still participate voluntarily. On the right, a lack of a promise to respond might be an unwillingness to respond or an inability to respond.

Reformulating Your World into Promises

Seeing the world through the lens of promises, instead of impositions, is a frame of mind, one that will allow you to see obvious strengths and weaknesses quickly when thinking about intended outcomes.

Promises are about signalling purpose through the language of intended outcomes. Western culture has come to use obligation and law as a primary point of view, rather than autonomous choice, which is more present in some Eastern cultures. There is a lawmaking culture that goes back at least as far as the Biblical story of Moses. Chances are, then, that you are not particularly used to thinking about everyday matters in terms of promises. It turns out, however, that they are absolutely everywhere.

Here are some examples of the kinds of statements we may refer to as promises:

- I promise you that I will walk the dog.
- I promise you that I fed your cat while you were away.
- We promise to accept cash payments.
- We promise to accept validated credit cards.
- I’ll lock the door when I leave.
- I promise not to lock the door when I leave.
- We’ll definitely wash our hands before touching the food.

These examples might be called something like service promises, as they are promises made by one agent about something potentially of value to another. We’ll return to the idea of service promises often. They are all intended outcomes yet to be verified.

Proxies for Human Agency

Thanks to human ingenuity and propensity for transference (some might say anthropomorphism, if they can pronounce it), promises can be made by inanimate agents too. Inanimate objects frequently serve as proxies for human intent. Thus it is useful to extend the notion of promises to allow inanimate objects and other entities to make promises. Consider the following promises that might be made in the world of information technology:

- The Internet Service Provider promises to deliver broadband Internet for a fixed monthly payment.
- The security officer promises that the system will conform to security requirements.
- The support personnel promise to be available by pager 24 hours a day.
- Support staff promises to reply to queries within 24 hours.
- Road markings promise you that you may park in a space.
- An emergency exit sign promises you a way out.

These are straightforward promises that could be made more specific. The support staff’s promise could also be restated in more abstract terms, transferring the promise to an abstract entity, “the help desk”:

- The company help desk promises to reply to service requests within 24 hours.
- The weather promises to be fine.

This latter example illustrates how we transfer the intentions of promises to entities that we consider to be responsible by association. It is a small step from this transference to a more general assignment of promises to individual components in a piece of technology. We abstract agency by progressive generalization:

- Martin on the front desk promised to give me a wake-up call at 7 a.m.
- The person on the front desk promised to give me a wake-up call at 7 a.m.
- The front desk promised to give me a wake-up call at 7 a.m.

Suddenly what started out as a named individual finds proxy in a piece of furniture.

In a similar way, we attach agency to all manner of tools, which may be considered to issue promises about their intended function:

- I am a doctor and I promise to try to heal you.
- I am a meat knife and promise to cut efficiently through meat.
- I am a logic gate and promise to transform a TRUE signal into a FALSE signal, and vice versa.
- I am a variable that promises to represent the value 17 of type integer.
- I am a command-line interpreter and promise to accept input and execute commands from the user.
- I am a router and promise to accept packets from a list of authorized IP addresses.
- I am a compliance monitor and promise to verify and automatically repair the state of the system based on this description of system configuration and policy.
- I am a high availability server, and I promise you service delivery with 99.9999% availability.
- I am an emergency fire service, and I promise to come to your rescue if you dial 911.

From these examples we see that the essence of promises is quite general. Indeed, such promises are all around us in everyday life, in mundane guises as well as in technical disciplines. Statements about engineering specifications can also profitably be considered as promises, even though we might not ordinarily think of them in this way.

Practice reformulating your issues as promises.

What Are the Agencies of Promises?

Look around you and consider what things (effectively) make promises.

- A friend or colleague
- The organization you work for
- A road sign
- A pharmaceutical drug
- A table
- A nonstick pan
- A window
- A raincoat
- The floor
- The walls
- The book you are reading
- The power socket

What Things Can Be Promised?

Things that make sense to promise are things we know can be kept (i.e., brought to a certain state and maintained).

- States, arrangements, or configurations, like a layout
- Idempotent operations (things that need to happen only once, because repeating them changes nothing further): delete a file, empty the trash
- Regular, steady state, or continuous change: constant speed
- An event that already happened

What Things Can’t Be Promised?

We already said that the basic rule of autonomy is that an agent cannot make a promise about anyone or anything other than itself. This is a simple rule of thumb. If an agent did make such a promise, another agent assessing it would be right to reject it as a breach of trust and to devalue the promiser’s reputation, since the promise is clearly speculation.

For example, a manager might try to promise that her team will deliver a project by a deadline. If she is honest, she will make this conditional on the cooperation of the members of her team; otherwise, this is effectively an imposition on her team (with or without their knowing). It might even be a lie or a deception (the dark side of promises). Without promises from each of her team members, she has no way of knowing that they will be able to deliver the project by the deadline. If this seems silly, please think again. The aim of science is realism, not unrealistic authority. Impositions do not bring certainty; promises that we trust are a kind of best guess.

Are there any other limitations?

Changes that are relative rather than absolute don’t make much sense as promises—for example, turn left, turn upside down. Technically, these are imperatives, or nonidempotent commands. Each time we make the change, the outcome changes, so we can’t make a promise about a final outcome. If we are talking about the action itself, how do we verify that it was kept? How many times should we turn? How often?
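The difference between an absolute outcome and a relative command can be sketched in a few lines of Python. This is only an illustration under assumed names: the `Compass` class, its methods, and the `headings_after` helper are hypothetical and not part of Promise Theory itself.

```python
class Compass:
    """A toy agent whose only state is the heading it faces, in degrees."""

    def __init__(self, heading=0):
        self.heading = heading % 360

    def face(self, target):
        """Absolute change: the outcome is the same however often it is applied."""
        self.heading = target % 360

    def turn_left(self, degrees=90):
        """Relative change: every application moves the outcome again."""
        self.heading = (self.heading - degrees) % 360


def headings_after(action, repeats):
    """Apply an action repeatedly to a fresh compass and record each heading."""
    compass = Compass()
    seen = []
    for _ in range(repeats):
        action(compass)
        seen.append(compass.heading)
    return seen


absolute = headings_after(lambda c: c.face(270), 3)
relative = headings_after(lambda c: c.turn_left(), 3)
print(absolute)  # one final outcome that can be verified at any time
print(relative)  # the outcome depends on how many times we acted
```

An assessor can check "faces 270 degrees" at any moment without knowing the history, whereas verifying "turned left" requires knowing how many turns were intended and how many were made.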

The truth is, anyone can promise anything at all, as in Monty Python’s sketch “Stake Your Claim!”, where contestants promise: “I claim that I can burrow through an elephant!” and “I claim that I wrote all of Shakespeare’s plays, and my wife and I wrote his sonnets.” These things are, formally, promises. However, they are obviously deceptions, or outright lies. If we lie and are found out, the value of our promises is reduced as a consequence.

The impartial thing to do would be to leave this as a matter for other agents to assess, but there are some basic things that nature itself promises, which allow us to draw upon a few rules of thumb.

A minimum requirement for a promise might be for there to exist some kind of causal connection between the promiser and the outcome of a promise in order to be able to keep it (i.e., in order for it to be a plausible promise).1 So it would be fine to promise that you fed someone’s cat, but less plausible to promise that you alone created the universe you were born into.

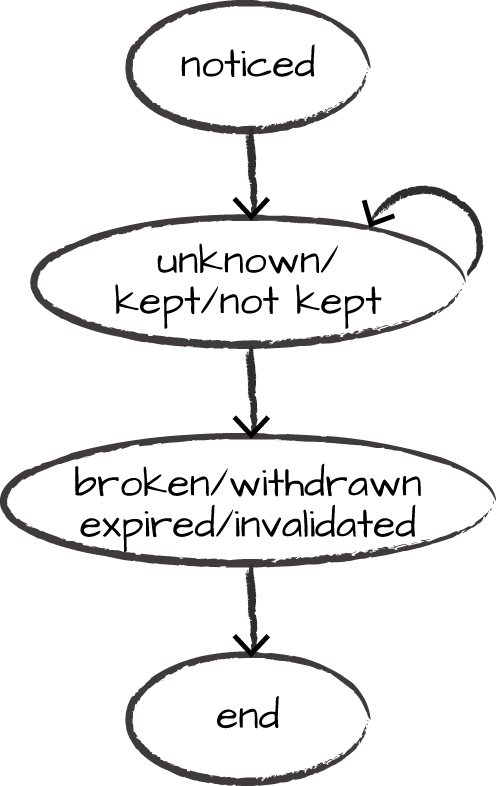

The Lifecycle of Promises

The promise lifecycle refers to the various states through which a promise passes, from proposal to the end (see Table 2-1). The lifecycle of a promise may be viewed from the perspective of the agent making the promise (Figure 2-2), from the perspective of the promisee (Figure 2-3), or in fact from that of any other agent that is external but in scope of the promise.

| Promise State | Description |
|---|---|
| proposed | A promise statement has been written down but not yet made. |
| issued | A promise has been published to everyone in its scope. |
| noticed | A published promise is noticed by an external agent. |
| unknown | The promise has been published, but its outcome is unknown. |
| kept | The promise is assessed to have been kept. |
| not kept | The promise is assessed to have been not kept. |
| broken | The promise is assessed to have been broken. |
| withdrawn | The promise is withdrawn by the promiser. |
| expired | The promise has passed some expiry date. |
| invalidated | The original assumptions allowing the promise to be kept have been invalidated by something beyond the promiser’s control. |
| end | The time at which a promise ceases to exist. |

Once a promise is broken or otherwise enters one of its end states (invalidated, expired, etc.), its lifecycle is ended, and further intentions about the subject must be described by new promises.
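As a rough sketch, the states in Table 2-1 can be written down as an enumeration, with a guard that refuses further changes once the lifecycle has ended. The class names, the `move_to` method, and the choice to treat `withdrawn` as an end state are assumptions made for illustration; the exact transitions between states are not modelled here.

```python
from enum import Enum


class PromiseState(Enum):
    PROPOSED = "proposed"
    ISSUED = "issued"
    NOTICED = "noticed"
    UNKNOWN = "unknown"
    KEPT = "kept"
    NOT_KEPT = "not kept"
    BROKEN = "broken"
    WITHDRAWN = "withdrawn"
    EXPIRED = "expired"
    INVALIDATED = "invalidated"
    END = "end"


# Assumption: these are the states after which the lifecycle is over.
END_STATES = {
    PromiseState.BROKEN,
    PromiseState.WITHDRAWN,
    PromiseState.EXPIRED,
    PromiseState.INVALIDATED,
}


class Promise:
    """A promise body plus the state it is currently assessed to be in."""

    def __init__(self, body):
        self.body = body
        self.state = PromiseState.PROPOSED
        self.history = [self.state]

    def move_to(self, new_state):
        """Record a new state, unless the lifecycle has already ended."""
        ended = self.state is PromiseState.END or (
            self.state in END_STATES and new_state is not PromiseState.END
        )
        if ended:
            raise ValueError("lifecycle ended; make a new promise instead")
        self.state = new_state
        self.history.append(new_state)


walk = Promise("I will walk the dog")
walk.move_to(PromiseState.ISSUED)
walk.move_to(PromiseState.UNKNOWN)
walk.move_to(PromiseState.KEPT)
print([state.value for state in walk.history])
```

Note that `kept` is deliberately not an end state in this sketch: a promise assessed as kept today may be assessed again tomorrow.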

Figure 2-2. The promise lifecycle for a promiser.

From the perspective of the promisee, or other external agent in scope, we have a similar lifecycle, except that the promise is first noticed when published by the promiser (Figure 2-3).

Figure 2-3. The promise lifecycle for a promisee.

Keeping Promises

What does it mean to keep a promise? What is the timeline? When is a promise kept? How many events need to occur? What needs to happen? What is the lifecycle of a promise? Probably it means that some essential state in an agent’s world has been changed, or has been maintained or preserved. The configuration of the world measures the outcome of a promise. The result also has a value to the agent. These things are assessments to be made by any agent that knows about the promise. Agents can assess promises differently, each based on their own standards. What one agent considers as a promise kept might be rejected by another as inadequate.
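To make the point about differing standards concrete, here is a small sketch in which two agents assess the same observation of the support staff’s promise to reply within 24 hours. The assessor functions and the 50% slack figure are invented for illustration; they stand in for whatever private standards real agents apply.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Observation:
    """What an assessor saw: the promised limit and the actual reply time."""
    promised_hours: float
    actual_hours: float


def strict_assessor(obs):
    """Holds the promiser to the letter of the promise."""
    return "kept" if obs.actual_hours <= obs.promised_hours else "not kept"


def tolerant_assessor(obs, slack=0.5):
    """Allows some slack (hypothetical: 50%) before judging the promise not kept."""
    limit = obs.promised_hours * (1 + slack)
    return "kept" if obs.actual_hours <= limit else "not kept"


reply = Observation(promised_hours=24, actual_hours=30)
verdicts = {
    "strict": strict_assessor(reply),
    "tolerant": tolerant_assessor(reply),
}
print(verdicts)
```

Both assessors see the same facts, yet they reach different verdicts, and neither verdict is privileged.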

Cooperation: The Polarity of Give and Take

When promises don’t go in both directions, a cooperative relationship is in danger of falling apart and we should be suspicious. Why would one agent be interested in the other agent, if not for mutual intent? This is a sign of potential instability. At first this might seem trite, and a human-only limitation, but even machinery works in this way. Physics itself has such mechanisms built into it.

In reality, promises and impositions are always seen from the subjective vantage point of one of the autonomous agents. There is not automatically any “God’s-eye view” of what all agents know. We may call such a subjective view the “world” of the agent. In computer science, we would call this a distributed system. Promises have two polarities, with respect to this world: inwards or outwards from the agent.

- Promises and impositions to give something (outwards from an agent)
- Promises and impositions to receive something (inwards to an agent)

In the mathematical formulation of promises, we use positive and negative signs for these polarities, as if they were charges. It’s easy to visualize the differences with examples:

- A (+) promise (to give) could be: “I promise you a rose garden,” or “I promise email service on port 25.”
- A (-) promise (to use/receive) could be: “I accept your offer of marriage,” or “I accept your promise of data, and add you to my access control list.”
- A (+) imposition (to give) could be: “You’d better give me your lunch money!” or “You’d better give me the address of this DNS domain!”
- A (-) imposition (to use/receive) could be: “Catch this!” or “Process this transaction!”
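The four combinations above can be captured in a small data structure. The sketch below is one possible encoding, not the mathematical formulation itself: the names `Intention`, `Kind`, `Polarity`, and `binds` are hypothetical, and comparing promise bodies by exact string equality is a deliberate simplification.

```python
from dataclasses import dataclass
from enum import Enum


class Polarity(Enum):
    GIVE = "+"
    RECEIVE = "-"


class Kind(Enum):
    PROMISE = "promise"
    IMPOSITION = "imposition"


@dataclass(frozen=True)
class Intention:
    kind: Kind
    polarity: Polarity
    source: str  # the agent announcing the intention
    target: str  # the agent it is directed at
    body: str    # what is to be given or received


def binds(offer, acceptance):
    """True when a (+) promise is matched by a (-) promise in the opposite direction."""
    return (
        offer.kind is Kind.PROMISE
        and acceptance.kind is Kind.PROMISE
        and offer.polarity is Polarity.GIVE
        and acceptance.polarity is Polarity.RECEIVE
        and offer.source == acceptance.target
        and offer.target == acceptance.source
        and offer.body == acceptance.body
    )


offer = Intention(Kind.PROMISE, Polarity.GIVE,
                  "mail server", "client", "email service on port 25")
accept = Intention(Kind.PROMISE, Polarity.RECEIVE,
                   "client", "mail server", "email service on port 25")
throw = Intention(Kind.IMPOSITION, Polarity.RECEIVE,
                  "thrower", "catcher", "ball")

print(binds(offer, accept))  # a voluntary match in both directions
print(binds(offer, throw))   # an imposition is not an acceptance
```

Only the pair of promises forms a binding; the imposition remains a one-sided attempt that the catcher is free to ignore.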

For every promise made to serve or provide something (call it X) by one agent in a supply chain, the next agent has to promise to use the result X in order to promise Y to the next agent, and so on. Someone has to receive or use the service (promises labelled “-”) that is offered or given (promises labelled “+”). So there is a simple symmetry of intent, with polarity like a battery driving the activity in a system. Chains, however, are fragile: every agent is a single point of failure, so we try for short-tiered cooperatives. In truth, most organizations are not chains, but networks of promises.
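The fragility of chains can be illustrated with a back-of-the-envelope calculation. As an assumption made purely for this sketch, suppose each agent in the chain keeps its promise with the same probability, independently of the others; then the chance that the whole chain delivers is the product of those probabilities. The function name and the 0.99 figure are illustrative only.

```python
def chain_reliability(per_agent, length):
    """Chance that every promise in a chain is kept, assuming independent agents."""
    return per_agent ** length


# Assumed figure: each agent keeps its promise 99% of the time.
for length in (1, 5, 20):
    print(length, round(chain_reliability(0.99, length), 3))
```

Under these assumptions, every extra tier lowers the reliability of the whole, which is one way to see why short-tiered cooperatives are preferred.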

If one agent promises to give something, this does not imply that the recipient agent promises to accept it, since that would violate the principle of autonomy. This also applies if an agent imposes on another agent, for example to give something (please contribute to our charity), or to receive something (you really must accept our charity). Neither of these need influence the agent on which one imposes these suggestions, but one can try nevertheless.

The plus-minus symmetry means that there are two viewpoints to every problem. You can practice flipping these viewpoints to better understand systems.

How Much Does a Promise Binding Count?

An author promises his editor to write 10 pages, and the editor promises to accept 5 pages. The probable outcome is that 5 pages will end up in print. Similarly, if the author promises to write 5 pages and the editor promises to print 10, the probable outcome is 5 pages, though it might be zero if there is an additional condition about all or nothing. If a personal computer has a gigabit network adapter, but the wall connection promises to deliver only 100 megabits, then the binding will be 100 megabits.
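In each of these cases the binding is the overlap between what is offered and what is accepted, which for simple quantities is the smaller of the two. A minimal sketch follows; the `binding` function and its `all_or_nothing` flag are hypothetical names, and the flag encodes just one possible reading of the "all or nothing" condition (the acceptor takes nothing unless the offer covers everything it promised to accept).

```python
def binding(offered, accepted, all_or_nothing=False):
    """Overlap between a (+) promise to give and a (-) promise to accept."""
    overlap = min(offered, accepted)
    if all_or_nothing and overlap < accepted:
        # Assumed reading: a shortfall against the acceptor's promise voids the binding.
        return 0
    return overlap


pages_a = binding(offered=10, accepted=5)   # author writes 10, editor accepts 5
pages_b = binding(offered=5, accepted=10)   # author writes 5, editor would print 10
pages_c = binding(offered=5, accepted=10, all_or_nothing=True)
link_mbit = binding(offered=100, accepted=1000)  # wall socket vs. gigabit adapter

print(pages_a, pages_b, pages_c, link_mbit)
```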

Promises and Trust Are Symbiotic

The usefulness of a promise is intimately connected with our trust in the agents making promises. Equivalently we can talk of the belief that promises are valid, in whatever meaning we choose to apply. In a world without trust, promises would be completely ineffective.

For some, this aspect of promises could be disconcerting. In particular, those who are progeny of the so-called “exact sciences” are taught to describe the world in apparently objective terms, and the notion of something that involves human subjective appraisal feels intuitively wrong. However, the role of promises is to offer a framework for reducing the uncertainty about the outcome of certain events, not to offer guarantees or some insistence on determinism. In many ways, this is like the modern theories of the natural world where indeterminism is built in at a fundamental level, without sacrificing the ability to make predictions.

Promoting Certainty

Promises express outcomes, hopefully in a clear way. If promises are unclear, they have little value. The success of promises lies in being able to make assertions about the intent of a thing or a group of things.

The autonomy principle means that we always start with the independent objects and see how to bring them together. This bottom-up strategy combines many lesser things into a larger group of greater things. This coarse graining improves certainty because you end up with fewer things, or a reduction of detail. The space of possible outcomes is always shrinking.

We are often taught to think top-down, in a kind of divide-and-conquer strategy. This is the opposite of aggregation: it is a branching process. It starts with a root, or point of departure, and diverges exponentially into a great number of outcomes. How could one be sure of something in an exponentially diverging space of outcomes?

For promises to lead to certainty, they need to be noncontentious (see Chapter 6). Promises can coexist as long as they do not overlap or tread on each other’s toes. Avoiding conflict between agents leads to certainty in a group. Ultimately Promise Theory makes conflict resolution easy.

Because agents are autonomous and can promise only their own behaviour, they cannot inflict outcomes on other agents (impositions try to do this, but can be ignored, at least in principle).

- If a single agent promises (offers or +) two things that are in conflict, it knows because it has all the information; it can resolve the conflict because it has all the control.
- If an agent accepts (uses or -) two promises that are in conflict, it is aware because it has accepted them; it has all the information and all the control to stop accepting one.

In other words, the strategy of autonomy puts all of the information in one place. Autonomy makes information local. This is what brings certainty.
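Because all of an agent’s own promises live in one place, a conflict check needs no outside information. The sketch below uses the door-locking promises from earlier in the chapter; the `Agent` class and the representation of a promise as a subject paired with an outcome are assumptions made for illustration.

```python
class Agent:
    """An autonomous agent that records only the promises it makes itself."""

    def __init__(self, name):
        self.name = name
        self.promised = {}  # subject -> promised outcome

    def conflicts_with(self, subject, outcome):
        """A new promise conflicts if it contradicts one already made."""
        return subject in self.promised and self.promised[subject] != outcome

    def promise(self, subject, outcome):
        """Make the promise only if it is consistent with earlier ones."""
        if self.conflicts_with(subject, outcome):
            return False
        self.promised[subject] = outcome
        return True


me = Agent("me")
first = me.promise("door locked when I leave", True)
second = me.promise("door locked when I leave", False)
print(first, second)
```

The second promise is refused without consulting any other agent: the information and the control needed to resolve the conflict are both local.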

Some Exercises

- Look at the examples of (+) and (-) promises, in “Cooperation: The Polarity of Give and Take”. For each (+) promise, what would be the matching (-) promise, and vice versa?
- Now think of an IT project to build a server array for your latest software. The purpose of this thought experiment is to try making predictions along and against the grain of causation.

How might you go about specifying requirements for the server array? For example, would you start from an expectation of usage demand? Would you base the requirements on a limited budget? Does this help you design an architecture for the server array? How can you decide what hardware to buy from this?

Now instead of thinking about requirements, look at some hardware catalogues for servers and network gear. Look at the device specifications, which are promises. From these promises, can you determine what kind of hardware you need to buy? How can you predict what service level the hardware will deliver? Does this change your architectural design?

Try going in the opposite direction and rewrite the hardware promises from the catalogue as project requirements. Does this make sense? Would it be possible to require twice the specifications that you see in the catalogue? If so, how would you go about fulfilling those requirements?

1 Statisticians these days are taught that causation is a dirty word because there was a time when it was common to confuse correlation with causation. Correlation is a mutual (bidirectional) property, with no arrow. Causation proper, on the other hand, refers to the perceived arrow of time, and basically says that if A precedes B, and there is an interaction between the two, then A might contribute to B, like a stepping stone. This is unproblematic.