Chapter 5: Request, Receive, Load, and Transform Data

Requesting Medicare Administrative Claims and Enrollment Data

Sources of Medicare Claims and Enrollment Data

Our Project’s Data Requirements and Request Specifications

Contacting ResDAC and Completing Paperwork

Receiving, Decrypting, and Loading Medicare Administrative Claims and Enrollment Data

Receiving Our Medicare Administrative Data

Decrypting Our Medicare Administrative Data

Loading Our Medicare Administrative Data into SAS Data Sets

Algorithms: Transforming Base Claim and Line Level Data Sets into Single Claim-Level Files

Transforming Base Claim and Line-Level Carrier Data into a Claim-Level File

The purpose of this chapter is to discuss the process for requesting, receiving, loading, and transforming Medicare administrative claims and enrollment data. As discussed in Chapter 1, the online companion to this book is at http://support.sas.com/publishing/authors/gillingham.html. Here, you will find information on creating dummy source data, the code in this and subsequent chapters, as well as answers to the exercises in this book. I expect you to visit the book’s website, create your own dummy source data, and run the code yourself.

Requesting Medicare Administrative Claims and Enrollment Data1

From scheduling and budget perspectives, it is very important to keep in mind that a data request can take a lot of time and cost a lot of money to fulfill (although there are ways to defray this cost for researchers2). Therefore, it is important to plan as much as possible prior to initiating our data request so that we minimize the likelihood of needing to request a re-extraction because of missing files or variables. Because research programming involves an iterative investigatory process, we will never be perfectly certain that we have identified every data need. However, I have found that executing the kind of planning discussed in Chapter 4 not only minimizes the risk of rework, but also improves the project’s ability to absorb rework if necessary. Therefore, now that we have determined our research programming project’s plan and spent some time thinking about the high-level requirements, we know enough about our project to request data. More to the point, we know the research questions we need to answer and the data we will use to answer those questions. We will use this information to guide our data request. Indeed, as we will see, the successful completion of our programming project requires requesting and using personally identifiable beneficiary-level claims and enrollment data.

The data we need to complete our research programming project are not generally available to the public. We must obtain the data from CMS, a process that requires requesting, receiving, and loading the data. Recall that we touched on the concept of Medicare administrative data sources in Chapter 3 in order to frame the discussion of the contents and structure of the Medicare claims and enrollment data we will use to complete our research programming project. We will now review and expand on that discussion.

There are several routes to procuring Medicare data for research purposes.3 Depending on our source of funding, some routes may not be available to us. In the extreme case, employees of CMS have access to data from a variety of internal sources, including CMS’s Data Extract System (DESY), CMS’s Virtual Research Data Center (VRDC), and the Integrated Data Repository (IDR). Researchers not employed by CMS, including federal contractors and academics, may also have access to DESY, the VRDC, and the IDR but, depending on the source of funding for their project, use of these sources may not be permissible. Instead, contractors and academics may need to access Medicare claims and enrollment data by working with CMS’s Research Data Assistance Center (ResDAC) and CMS’s data distribution contractor.

So where are we going to get the data for our research programming project? From an instructional perspective, this is a tough question. Indeed, there are differences involved in using each of the sources that would be useful to know and that can influence what programming techniques we discuss in this text. Here are some examples:

• Submitting a request through ResDAC to be filled by CMS’s data distribution contractor involves planning for certain paperwork and delivery timeframes. Using DESY, the VRDC, or the IDR also involves certain planning activities, such as acquiring a user account with CMS.

• Using the IDR requires skill in querying a relational database, as well as more specialized knowledge to take advantage of attributes like Teradata indexing.

• Each data source may deliver the data in a different format. For example, data from DESY is typically delivered on a mainframe environment as flat text files (access to CMS’s mainframe environment, as well as knowledge of how to load the flat file, is required to work with these files).

With this in mind, our instructional plan is based on three key assumptions:

1. We will request our source data through ResDAC and CMS’s data distribution contractor because it is probably the most common route to Medicare data traveled by the research community. Besides, as we just discussed, other routes like DESY and the IDR require specialized skills that fall outside the scope of this text, and access to these routes is uncommon for researchers. Therefore, in this chapter, we will review how to request and receive identifiable Medicare claims and enrollment data through ResDAC and CMS’s data distribution contractor; we will no longer focus on other data sources, like DESY, the VRDC, or the IDR.

2. Our source data will arrive from CMS’s data distribution contractor as files that we must read into SAS data sets. However, we will not spend time learning to load the flat files into SAS data sets because CMS’s data distribution contractor provides SAS programs that load the files into SAS data sets. We start our programming work by using SAS data sets, assuming we have already gone through the process of loading the files we received from CMS’s data distribution contractor into these SAS data sets.

3. Our source data will arrive in separate files by claim type, with each claim type separated into files for claim header (called “base claim” files) and claim line information. We will create files that contain both base claim and claim line data in a single record (thus halving the number of claims data sets we work with) by combining the base claim and line files.4 Creating and using claim-level files for instructional purposes makes it easier to understand the concept of a claim as a bill for services rendered because all of the information on a claim is contained in a single record. Well, at least it has always been easier for me to understand it and teach it that way! Most importantly, it provides an achievable, common starting point irrespective of the source of our data, and thereby makes our discussions accessible to a broader audience.5

With our instructional plan set, let’s now discuss a little more about data available through CMS’s data distribution contractor, describe the process of requesting the data needed for our example research programming project, and write code to create the claims and enrollment data used throughout the remainder of this text.

Our example research programming project calls for the use of personally identifiable (we define “personally identifiable” below) claims and enrollment data files. However, it is worthwhile to pause for a moment and discuss other data available to the research community from CMS. A complete review of all Medicare data available to the research community is outside the scope of this book, but I would hate to leave the reader without a general understanding of the Medicare data CMS offers to the research community. Who knows, you may need something like non-identifiable data for future projects, so we may as well spend a little time obtaining a broader view of the Medicare research data landscape!

CMS offers a dizzying array of datasets for research purposes. The most straightforward method of understanding the Medicare data available to the research community is to group the files into the following three categories:

1. Research Identifiable Files (RIFs): Claims and enrollment files containing personally identifiable information used to identify specific Medicare beneficiaries and providers, including the beneficiary identifier (which can be a Social Security Number), provider identifier (providers can be identified using a Tax Identifier, which in some cases is a Social Security Number), date of birth, and geographic place of residence. The files available include paid institutional and non-institutional claims for Medicare Fee-for-Service beneficiaries, and enrollment data like the Master Beneficiary Summary File (MBSF). These datasets can be queried to extract information for a specific population of beneficiaries or providers. In addition, CMS creates files containing claims and enrollment data for samples of 5% of the Medicare beneficiary population, eliminating the need for researchers to request the full datasets (the size of which is overwhelming) and create their own samples. Because the RIFs contain personally identifiable information, the use of such files is subject to strict oversight by CMS, and access to these data is provided only when necessary. The data request for our sample research programming project will be from the RIFs.

2. Limited Data Sets (LDS): Claims and enrollment files containing the same information provided in the RIFs except for certain confidential identifiers. Even with such exclusions, LDS files are considered personally identifiable datasets and are subject to strict privacy rules. LDS files can be requested by completing an LDS data request packet.

3. Public Use Files (PUFs, or Non-Identifiable Files): These datasets generally contain summary-level, aggregated information on the Medicare program. Some research projects only require the aggregated results that are found in these non-identifiable files, eliminating the need for the researcher to acquire the data and personally aggregate the files. Note that requests for PUFs are made directly to CMS, though payment may still be required. Visit https://www.cms.gov/NonIdentifiableDataFiles/ for more details. In addition, note that CMS also provides synthetic PUFs for data entrepreneurs. See http://www.cms.gov/Research-Statistics-Data-and-Systems/Statistics-Trends-and-Reports/SynPUFs/DE_Syn_PUF.html for additional details.

In the case of our research programming project, we want the ability to report results by provider, necessitating the identification of providers. In addition, we will be looking at beneficiary-level information, necessitating data at the beneficiary level. Of course, as we explained earlier, although we pretend to obtain our data from CMS’s data distribution contractor, we actually use the synthetic PUFs for data entrepreneurs as the source data for this book. Therefore, we are simply simulating the exercise of requesting, obtaining, and using personally identifiable data.

Because it is relevant to understanding our work in future chapters, let’s provide additional details on the personally identifiable data we are requesting, as well as the file we are using for our data request. In Chapter 1, we discussed that the starting point for our example research programming project is a file provided by CMS that contains identifiers for the providers that participated in the example pilot program we are studying, along with identifiers for the beneficiaries associated with those providers. Associating providers and beneficiaries to assign responsibility for a beneficiary’s care is called attribution. Constructing algorithms for provider attribution is outside of the scope of this text, so we will simply assume that this file of providers and associated beneficiaries provided by CMS can be used for provider attribution. From this point forward, we refer to this data set as a “finder file” (because we will send it to CMS’s data distribution contractor upon request for their use in finding all of the claims for the beneficiaries listed in the file). We may also refer to this file as an “attribution file” (because in subsequent chapters we will use it to describe the providers associated with the beneficiaries in the file). Further, we assume this finder file will be provided to us as both a flat text file and a SAS data set (so we will not present code for reading the file into a SAS data set).

Our request to CMS must describe the need for identifying claims and enrollment information associated with the entities in our finder file. Therefore, we will ask CMS’s data distribution contractor to extract claims and enrollment data for beneficiaries associated with the providers who participated in our example pilot program by providing the data distribution contractor with our finder file. Our data request will be for the following files for calendar year 2010: Part B carrier claims, DME claims (even though we will not use them in this book), inpatient claims, outpatient claims, SNF claims, hospice claims, home health claims, and MBSF enrollment data.6 We will use the data received from CMS’s data distribution contractor to develop algorithms to produce summaries of payment, utilization, and quality outcomes. In Chapter 10, we will use these analytic file summaries of beneficiary-level data to analyze the providers who participated in the pilot program (and we will use the aforementioned “attribution file” assumed to be provided by CMS to accomplish this task).

It is important to keep in mind that ResDAC’s mission is to assist researchers with CMS data. When you work on your own research programming project, you will need to contact ResDAC and obtain the latest instructions on how to execute a data request. Not only does ResDAC’s expert staff answer questions about obtaining data, but they also answer questions about how to use the data. In other words, there are many resources for you to turn to in the data extraction process: from this book, to ResDAC, to fellow professional research systems specialists, to fellow researchers, to folks at CMS! The following high-level instructions for completing a data request to ResDAC are intended to introduce the topic of requesting data and are not a substitute for contacting ResDAC directly. ResDAC staff are the real experts, and the remainder of this chapter is no substitute for seeking their know-how.

Our first step is to visit the ResDAC and data distribution contractor’s websites7 to obtain the most up-to-date forms and information on data (like data dictionaries). We may wish to contact ResDAC to discuss our planned request, the research questions we need to answer, the data we believe we must request in order to answer those questions, and other pertinent information like the source of our funding. We may also wish to request an estimate of the cost to fulfill our data request at this time. (Depending on your source of funding, you may need to request a cost estimate prior to agreeing to undertake research just to ensure that your project is able to afford the data necessary to perform research. As with most tasks in project work, making contact and gathering information as early as possible typically pays large dividends down the road.)

Now that we have made initial contact and have an idea of what is contained in the request packet and the process we will need to work through, we can begin to fill out the paperwork. Walking through every step in the request is beyond the scope of this book (and not necessary given that ResDAC experts are available to provide guidance as you complete the request). Therefore, we will simply review the major steps in the process and highlight important information.

1. Complete a Data Use Agreement (CMS form CMS-R-0235, commonly referred to as a DUA, and contained in the request packet we downloaded from the ResDAC website). All requests for identifiable data must be accompanied by a DUA. A DUA is a contract between the users of the data (in the case of our research programming project, us!) and the owners of the data (in all cases, CMS). The contract specifies rules to keep the use of CMS’s data in compliance with CMS’s data use policies, including the Privacy Act of 1974, a federal law that covers the use of personally identifiable information. The DUA specifies the person responsible for the data (called the Data Custodian), as well as authorized users of the data. Use of the data is strictly limited to those individuals listed on the DUA (or who have a signed DUA signature addendum). The contract also specifies how to deal with the data at the close of the project (more on that in Chapter 10).

2. Complete the remainder of the data request packet. This collection of documents contains everything we need for our request as well as detailed instructions and checklists for completing the required forms. A precise and methodical approach to completing the request packet will minimize back-and-forth throughout the request process. For example, many of the documents in the request packet ask us to describe our research programming project and the identifiable files we are requesting. All such descriptive information should be consistent across all documents. The request packet includes the following documents:

a. A request for the identifiable data on our organization’s letterhead. A sample request letter is included in the request packet. The letter simply describes the purpose of our research programming project and how it relates to our need for the identifiable data files we are requesting.

b. An Executive Summary form that asks us to describe our study and our plan for managing the data (e.g., how the data will be stored and destroyed and who will have access to it). ResDAC provides documents that guide the completion of the Executive Summary form.

c. A Research Study Protocol Format form that asks us to describe the background, objectives, and methods of our study, as well as how the files included in our data request are necessary to meet these objectives.8

d. Our completed Data Use Agreement form.

e. Documentation that our request has been reviewed and approved by an Institutional Review Board (IRB). This authorization is necessary for CMS to be able to release personally identifiable data.

f. Documentation that our project has funding for the purchase of the data. The data can be expensive, so we must provide evidence that we have received funding internally from our own organization or from something like a grant or a contract with a federal agency.

g. A Specification Worksheet that describes the user of the data, the data custodian, payment information, and information needed to perform the data extract. For example, we must describe the operating system of the hardware we will use to process the data and the type of media on which we wish to receive the data.

h. A completed request for a CMS Cost Estimate. This document asks questions pertaining to the complexity of the data request.

3. Next, we must email a draft of the request packet to ResDAC for their review and commentary. It is important to allow plenty of time in your project schedule to receive ResDAC’s comments on the draft materials, respond to those comments, and resend the modified draft request packet for approval.

4. Once ResDAC approves the draft request packet, ResDAC submits the materials to CMS for review and approval. The approval process contains many steps so it is important to allow plenty of time for the process to play out. For example, the request is assigned a control number and is subsequently presented to CMS’s Privacy Board for review and approval. It is prudent to plan for a minimum of three or four weeks to pass between the submission of the final packet to CMS and getting word regarding the final approval of our request.

5. Once our request is reviewed and approved, we can make payment. It can take two weeks for the payment process to run its course.

6. Immediately upon submitting payment (we do not have to wait for confirmation), we can submit the instructions we would like to use for data extraction, including the aforementioned finder file on which our extraction will be based. However, processing will not begin until payment has been finalized by CMS.

7. Sit back, relax, and wait for your data to arrive! Go see a movie, get a massage, or take a vacation. Once CMS has finalized our payment and submitted our request to the data distribution contractor for processing, it can take four to six weeks or longer to receive the data files we have been longing for!

Our patience has been rewarded; we have received the Medicare data we requested! We tear into the shipping package to reveal a mass storage device that contains our claims and enrollment data. We plug the storage device into our PC and find that it contains the following files:

• The requested data formatted as flat files with fixed columns compressed and encrypted into self-decrypting archive files (SDAs, recognizable by the file extension .dat)

• SAS code for loading the files into SAS datasets (recognizable by the file extension .sas)

• Summary information detailing the data transmission (recognizable by the file extension .fts, short for “file transfer summary”)

• A host of documentation and data dictionaries including a ‘readme’ file listing the files that comprise the data transmission package, instructions for decrypting the data, spreadsheets detailing the variables included in each file, information on the diagnosis and procedure codes in the data, and data dictionaries

• Although not included in our package, remember that we have our finder file (or attribution file) for use in later chapters! We assume that this finder file is a SAS data set.

Our first hurdle is to uncompress and decrypt our data. We received our decryption key (a fancy way of saying “password”) via email from CMS’s data distribution contractor. One of the most common questions I receive relates to the inability to decrypt a file; this problem is usually rooted in accidentally attempting to decrypt the file to the media on which we received our data. Copying the files to our hard drive prior to attempting to decrypt the data avoids this problem. I recommend an additional step of copying all of the files included on the mass storage device into a directory and then making the directory read-only. This ensures we cannot accidentally modify our data, and we can work from this directory with confidence. Because the files are SDAs, we do not need to acquire any special decryption software; aside from the email containing our decryption key, everything we need for the decryption process is included in our shipment including an executable file (.exe) that runs the decryption process. We need simply to follow the instructions for decrypting the data files included in our data shipment.

Once our files are copied and decrypted, we must load them into SAS datasets. As stated above, CMS’s data distribution contractor makes the process as simple as possible by including SAS code and the instructions necessary to complete the task in our data shipment. Therefore, we will not spend time (nor will we provide code) discussing how to load our source files into SAS datasets, but it is worth the time to discuss a few details.

First, note that we received the following files:

• Base claim and revenue center files for all institutional claims (inpatient, outpatient, SNF, home health, and hospice). Recall that we discussed the difference between the base claim and the revenue center files in Chapter 3.8

• Base claim and claim line files for all non-institutional claims (DME and Carrier). Recall that we discussed the difference between the base claim and the line level files in Chapter 3.

• We also received the Master Beneficiary Summary File (MBSF) containing enrollment data. Recall that we discussed the MBSF in Chapter 3.

In addition, note that we received file transfer summary files (the aforementioned files with the extension .fts) and SAS program files that correspond in name to each of the claims and enrollment files. The transfer summary files contain information on the contents of each of our claims and enrollment files that we can use to confirm we loaded the data into SAS correctly. For example, the files contain information on the number of columns and rows, the length of the rows, and the size of the file. Once we load the flat files, the number of records in our SAS datasets should match the count of rows in the file transfer summary.
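As a minimal sketch of this check, the following code compares the number of records in one loaded SAS data set against the row count reported in its file transfer summary. The macro variable EXPECTED_ROWS, its value, and the helper macro CHECK_ROWS are assumptions made for illustration; they are not part of the data shipment, and we would copy the expected count by hand from the matching .fts file. The data set SRC.CARRIER2010LINE is used only as an example.

```sas
/* Placeholder: row count copied by hand from the matching .fts file (hypothetical value) */
%let expected_rows = 0;

/* Count the records actually loaded into the SAS data set */
proc sql noprint;
   select count(*) into :actual_rows trimmed
   from src.carrier2010line;
quit;

/* Hypothetical helper macro: write the result of the comparison to the log */
%macro check_rows(expected=, actual=);
   %if &expected = &actual %then
      %put NOTE: Record count matches the file transfer summary (&actual rows).;
   %else
      %put WARNING: Loaded &actual rows but the file transfer summary reports &expected rows.;
%mend check_rows;

%check_rows(expected=&expected_rows, actual=&actual_rows)
```

Repeating the comparison for each claims and enrollment file gives us a simple record of the load that we can keep with our project documentation.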

Finally, when loading the flat files into SAS datasets, we must include the proper SAS LIBNAMEs to ensure we are reading from and writing to the proper locations (remembering that we assigned read-only rights to our source data directory). We must also be cognizant of how our file sizes will change as we move the data from compressed, encrypted files to flat files, and from flat files to SAS datasets. Planning ahead to ensure that we have the appropriate amount of storage allocated for our project is important. We listed the LIBNAMEs we will use for this project in Chapter 4. As a reminder, we will load the raw data received from CMS’s data distribution contractor (as well as any other source data) into a library nicknamed SRC. We will transform this data and save it to a library nicknamed ETL.
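For illustration only, the two libraries might be assigned as follows once the load is complete. The directory paths are hypothetical and must be replaced with the locations on your own system. The ACCESS=READONLY option is my suggestion rather than a requirement of the data distribution contractor; it asks SAS itself to refuse writes to the SRC library, so it should be added only after the source data sets have been loaded.

```sas
/* Hypothetical directory paths; substitute the locations used on your own system */

/* Raw source data: opened read-only so our code cannot modify it by accident */
libname src "C:\medicare\src" access=readonly;

/* Transformed data sets created by our code */
libname etl "C:\medicare\etl";
```

With these assignments in place, any step that attempts to write to SRC fails with an error instead of silently overwriting our source data.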

After loading our claims and enrollment data into SAS data sets, we have the following files stored in our library with the alias SRC:

• Carrier base claim and line level files (SRC.CARRIER2010CLAIM and SRC.CARRIER2010LINE, respectively)

• DME base claim and line level files (SRC.DME2010CLAIM and SRC.DME2010LINE, respectively)

• Inpatient base claim and revenue center level files (SRC.IP2010CLAIM and SRC.IP2010LINE, respectively)

• Skilled Nursing Facility base claim and revenue center level files (SRC.SNF2010CLAIM and SRC.SNF2010LINE, respectively)

• Outpatient base claim and revenue center level files (SRC.OP2010CLAIM and SRC.OP2010LINE, respectively)

• Home health base claim and revenue center level files (SRC.HH2010CLAIM and SRC.HH2010LINE, respectively)

• Hospice base claim and revenue center level files (SRC.HS2010CLAIM and SRC.HS2010LINE, respectively)

• Master Beneficiary Summary File enrollment data (SRC.MBSF_AB_2010)

• Social Security Administration (SSA) state and county codes, and corresponding state and county names (more on this in Chapter 6)

• The finder file of provider identifiers and the beneficiaries attributed to those providers, received from CMS at the outset of the project and used in the extraction of our claims and enrollment data (SRC.PROV_BENE_FF)

The above data sets are available on the book’s website (http://support.sas.com/publishing/authors/gillingham.html) and represent the starting point for our programming work. Note that the file names and variable names we use may differ from those provided by CMS’s data distribution contractor.
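Before starting our programming work, it can be helpful to confirm that the expected data sets are actually present in the SRC library. The following sketch assumes only that the SRC library has already been assigned; it lists the members of the library without printing the full variable detail for each one.

```sas
/* List every data set in the SRC library; NODS suppresses the per-data-set detail */
proc contents data=src._all_ nods;
run;
```

Removing the NODS option prints the variable names, types, and lengths for each data set, which is useful for comparing what we loaded against the data dictionaries included in the shipment.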

Let’s start our programming work at the point after we have loaded the data we received from CMS’s data distribution contractor into SAS data sets saved in the library with the alias SRC (again, we will not program the actual loading of the data because the data distribution contractor provides the programs for that task). At this point, we have base claim and revenue center level SAS data sets for each institutional claim type. In addition, we have base claim and line level SAS data sets for each non-institutional claim type. As mentioned above, we will combine the base claim and revenue center (or line) files into a single file per claim type, with one record for each claim and its associated claim lines.9 Note that we will not provide code for executing this task for each claim type, nor will we provide code for using all variables in these data sets. Rather, we will provide a set of code for transforming data sets for Carrier claims, using a limited set of variables.10 Using these examples, the reader can easily create code for performing this procedure on other claim types and additional variables.

Let’s combine our base claim and line level carrier SAS data sets into a single carrier SAS data set with one record for each claim and its associated claim lines. In Step 5.1, we sort the carrier line file (SRC.CARRIER2010LINE) by the variables BENE_ID, CLM_ID, and CLM_LN.

/* STEP 5.1: SORT CARRIER LINE FILE IN PREPARATION FOR TRANSFORMATION */

proc sort data=src.carrier2010line out=carrier2010line;

by bene_id clm_id clm_ln;

run;

In Step 5.2, we transform the sorted carrier line file (CARRIER2010LINE) from one record per line (identified using the variable CLM_LN) to one record for each claim, with the claim line variables arrayed in that record. Because carrier claims can have up to 13 claim lines, we must create 13 new variables for each line-level variable that we array.11 As you can see in the code below, we do this for every variable we keep from our carrier line file. These variables will be used in our work in later chapters.

/* STEP 5.2: TRANSFORM CARRIER LINE FILE */

data carrier2010line_wide(drop=i expnsdt1 expnsdt2 hcpcs_cd line_icd_dgns_cd clm_ln linepmt prfnpi tax_num);

format expnsdt1_1-expnsdt1_13 mmddyy10.

expnsdt2_1-expnsdt2_13 mmddyy10.

line_icd_dgns_cd1-line_icd_dgns_cd13 $5.

hcpcs_cd1-hcpcs_cd13 $7.

linepmt1-linepmt13 10.2

prfnpi1-prfnpi13 $12.

tax_num1-tax_num13 $10.;

set carrier2010line;

by bene_id clm_id clm_ln;

retain expnsdt1_1-expnsdt1_13

expnsdt2_1-expnsdt2_13

line_icd_dgns_cd1-line_icd_dgns_cd13

hcpcs_cd1-hcpcs_cd13

linepmt1-linepmt13

prfnpi1-prfnpi13

tax_num1-tax_num13;

array xline_icd_dgns_cd(13) line_icd_dgns_cd1-line_icd_dgns_cd13;

array xexpnsdt1_(13) expnsdt1_1-expnsdt1_13;

array xexpnsdt2_(13) expnsdt2_1-expnsdt2_13;

array xhcpcs_cd(13) hcpcs_cd1-hcpcs_cd13;

array xlinepmt(13) linepmt1-linepmt13;

array xprfnpi(13) prfnpi1-prfnpi13;

array xtax_num(13) tax_num1-tax_num13;

/* AT THE START OF EACH CLAIM, RESET ALL 13 RETAINED SLOTS SO THAT VALUES FROM THE PREVIOUS CLAIM DO NOT CARRY OVER */

if first.clm_id then do;

do i=1 to 13;

xline_icd_dgns_cd(i)='';

xexpnsdt1_(i)=.;

xexpnsdt2_(i)=.;

xhcpcs_cd(i)='';

xlinepmt(i)=.;

xprfnpi(i)='';

xtax_num(i)='';

end;

end;

xline_icd_dgns_cd(clm_ln)=line_icd_dgns_cd;

xexpnsdt1_(clm_ln)=expnsdt1;

xexpnsdt2_(clm_ln)=expnsdt2;

xhcpcs_cd(clm_ln)=hcpcs_cd;

xlinepmt(clm_ln)=linepmt;

xprfnpi(clm_ln)=prfnpi;

xtax_num(clm_ln)=tax_num;

if last.clm_id then output;

run;
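Because Step 5.2 uses CLM_LN as an array subscript, a line number outside the range 1 to 13 would stop the DATA step with an "array subscript out of range" error. As a hedged sketch, and assuming CLM_LN is stored as a numeric variable, the following query can be run before Step 5.2 to confirm that the line numbers fall within the expected range. The column names MIN_CLM_LN, MAX_CLM_LN, and N_OUT_OF_RANGE are made up for this example.

```sas
/* Summarize the range of line numbers in the carrier line file */
proc sql;
   select min(clm_ln) as min_clm_ln,
          max(clm_ln) as max_clm_ln,
          /* A missing CLM_LN compares as less than 1, so it is counted here too */
          sum(clm_ln < 1 or clm_ln > 13) as n_out_of_range
   from src.carrier2010line;
quit;
```

If N_OUT_OF_RANGE is greater than zero, we would want to investigate those records before transforming the file.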

Figure 5.1 shows the observations for a specific BENE_ID in the CARRIER2010LINE data set. Note how the variables that describe a service, like the diagnosis code variable (LINE_ICD_DGNS_CD), are stored as individual records for different line numbers (CLM_LN) of the same claim (CLM_ID).

Figure 5.1: Sorted Carrier Line File

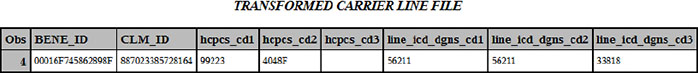

Figure 5.2 is a single observation taken from the CARRIER2010LINE_WIDE data set for the same beneficiary displayed above. You can see that the procedure code and diagnosis code variables formerly stored in individual rows are now arrayed in a single row.

Figure 5.2: Transformed Carrier Line File

In Step 5.3, we sort the base claim SAS data set (SRC.CARRIER2010CLAIM) and the transformed claim line SAS data set (CARRIER2010LINE_WIDE) by BENE_ID and CLM_ID in preparation for merging the two files to create a carrier SAS data set that contains one record per base claim, with all associated claim lines. We save the output of the sort of the carrier base claim file, named CARRIER2010CLAIM, to our work directory.

/* STEP 5.3: SORT BASE CLAIM AND TRANSFORMED LINE FILES IN PREPARATION FOR MERGE */

proc sort data=src.carrier2010claim out=carrier2010claim;

by bene_id clm_id;

run;

proc sort data=carrier2010line_wide;

by bene_id clm_id;

run;
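The merge in the next step assumes that the base claim file contains exactly one record for each combination of BENE_ID and CLM_ID. As a simple check of that assumption, the following sketch writes any repeated keys to a work data set. The data set name CARR_CLAIM_DUPS and the column N_RECORDS are hypothetical names chosen for this example.

```sas
/* Identify any BENE_ID and CLM_ID combinations that appear more than once */
proc sql;
   create table carr_claim_dups as
   select bene_id,
          clm_id,
          count(*) as n_records
   from carrier2010claim
   group by bene_id, clm_id
   having count(*) > 1;
quit;
```

An empty CARR_CLAIM_DUPS data set indicates that each claim appears once in the base claim file, which is what we expect.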

Figure 5.3 is a single observation taken from the CARRIER2010CLAIM data set for the same beneficiary displayed above.

Figure 5.3: Sorted Carrier Base Claim File
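The merge in Step 5.4 produces one record per claim only if the base claim file contains a single record for each combination of BENE_ID and CLM_ID. The following is a minimal sketch of one way to check that assumption, using the sorted CARRIER2010CLAIM data set from Step 5.3. The output data set name CARR_CLAIM_DUPS is hypothetical.

```sas
/* SKETCH: FLAG BASE CLAIM RECORDS THAT SHARE A BENE_ID AND CLM_ID */
/* Requires the input to be sorted by BENE_ID and CLM_ID (Step 5.3). */
data carr_claim_dups;
  set carrier2010claim;
  by bene_id clm_id;
  /* Keep a record only when its key appears more than once */
  if not (first.clm_id and last.clm_id);
run;
```

An empty CARR_CLAIM_DUPS data set suggests that each claim appears once in the base claim file. Any records it does contain are worth reviewing before merging.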

In Step 5.4, we merge the CARRIER2010CLAIM and CARRIER2010LINE_WIDE files by the unique beneficiary identifier (BENE_ID) and the unique claim identifier (CLM_ID), using an “if a and b” merge that keeps only the claims found in both files. We output a SAS data set called ETL.CARR_2010. This carrier SAS data set, sorted by BENE_ID and CLM_ID, will be used for identifying Part B carrier services throughout the remainder of this book. For quality assurance purposes, we also output a file called CARR_NOMATCH that contains the records that did not match by BENE_ID and CLM_ID (mismatches indicate a potential issue with the data or your code).

/* STEP 5.4: MERGE BASE CLAIM AND TRANSFORMED LINE FILES */

data etl.carr_2010 carr_nomatch;

merge carrier2010claim(in=a) carrier2010line_wide(in=b);

by bene_id clm_id;

if a and b then output etl.carr_2010;

else output carr_nomatch;

run;

Figure 5.4 is a single record from the ETL.CARR_2010 data set for the same beneficiary displayed above. You can see that the base claim and line files have been merged such that one record per claim exists. Variables like procedure code and diagnosis code, formerly stored in individual rows in the line file, are now arrayed in a single row, along with the claim dates from the base claim file (FROM_DT and THRU_DT).

Figure 5.4: Merged Transformed Carrier Line and Base Claim Files
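The CARR_NOMATCH file from Step 5.4 does not record which input file each unmatched claim came from. The following is a minimal sketch of one way to capture that information for quality assurance. The data set name CARR_NOMATCH_FLAGGED and the variables IN_CLAIM and IN_LINE are hypothetical names introduced for illustration.

```sas
/* SKETCH: IDENTIFY WHICH FILE EACH UNMATCHED CLAIM CAME FROM */
data carr_nomatch_flagged;
  merge carrier2010claim(in=a keep=bene_id clm_id)
        carrier2010line_wide(in=b keep=bene_id clm_id);
  by bene_id clm_id;
  /* Keep only the claims that are missing from one of the two files */
  if not (a and b);
  in_claim = a;  /* 1 if the claim is in the base claim file */
  in_line  = b;  /* 1 if the claim is in the transformed line file */
run;

/* Count the unmatched claims by source */
proc freq data=carr_nomatch_flagged;
  tables in_claim*in_line / list missing;
run;
```

A base claim with no lines and a set of lines with no base claim may point to different problems, so it can help to review the two groups separately.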

In this chapter, we learned how to request, receive, load, and transform the Medicare administrative claims and enrollment data we will use to complete our research programming project. To this end, we discussed the following information:

• A data request can take a lot of time and cost a lot of money to fulfill, so it is important to plan as much as possible prior to initiating our data request.

• Depending on the source of your funding, there are several routes to procuring Medicare data for research purposes. We discussed CMS’s Integrated Data Repository (IDR), Data Extract System (DESY), and Virtual Research Data Center (VRDC). In addition, we discussed the Research Data Assistance Center (ResDAC) and CMS’s data distribution contractor.

• ResDAC and CMS’s data distribution contractor are the research community’s gateway to CMS data.

• Our instructional plan is to request our data from CMS’s data distribution contractor and quickly convert the claims data we receive into separate files for each claim type that contain all of the information for a claim in one record.

• CMS offers a variety of data sets for research purposes, which we grouped into three categories: Research Identifiable Files (RIFs), Limited Data Sets (LDS), and Public Use Files (PUFs). Consult with ResDAC to ensure that you are requesting the right kind of data for your research.

• Our data request utilized a finder file provided by CMS to query the following claims and enrollment data for calendar year 2010: Part B Carrier claims, DME claims, inpatient claims, outpatient claims, SNF claims, hospice claims, home health claims, and MBSF enrollment data.

• We discussed a high-level overview of the data acquisition process.

• The package of data we received from CMS’s data distribution contractor contained our requested data (in encrypted files), the SAS code for loading the files into SAS data sets, summary information detailing the data transmission, and a host of documentation and data dictionaries.

• We decrypted our data files and loaded them into SAS data sets. The administrative claims files arrived separated by claim type and, within each claim type, into header and line information. We combined them into one file per claim type that contains all information on the paid claim in a single record. We retained access to the finder file (also called our attribution file) for use in subsequent chapters.

1 As stated in Chapter 3, all discussions of acquisition of source data are for instructional purposes. The data we use in this book are fake, and subject to the disclaimer presented in Chapter 1. In other words, although we pretend to go through the process of requesting data from CMS, we are simply describing how the process would occur, and we did not actually procure the data used in this book through that process.

2 See, for example, ResDAC’s Data Request for Student Research webpage, available at http://www.resdac.org/data-request-student-research.

3 The list of data sources is not exhaustive, and new sources of data do become available.

4 For instructional purposes, as part of our analytic work we will transpose some of the files we create in this chapter to create a file containing one record per line.

5 All this said, it is important to note that there are advantages to maintaining separate claim header and claim line data sets, and using PROC SQL to join the data sets when necessary. In your work, and consistent with our discussion about planning, you should consider the most efficient way to arrange your data and write your SAS code.

6 The reader may wish to request a prior or subsequent year of data to study some measures like hospital readmissions, or to make comparisons to prior years of performance outcomes (e.g., compare a provider’s performance in 2010 to her performance in 2009). For our simple examples, this will not be necessary.

7 See www.resdac.org and www.ccwdata.org, respectively.

8 For a federally funded study, include the methods section rather than writing up a new protocol per the packet sample.

9 The institutional files can also include other reference code files for things like condition and span codes. We will not use these files, so we will not discuss them further.

10 Although it is important to be aware of the fact that inpatient claims can spill over into more than one claim record, it does not happen often, so we will not write code to handle this situation.

11 Our code and explanation draw on a helpful SAS Learning Module provided by UCLA’s Institute for Digital Research and Education, available at http://www.ats.ucla.edu/stat/sas/modules/longtowide_data.htm.