But a visualization is only useful if it helps you understand what the model has learned and how. To build a better intuition for what the model has learned, we will use TensorBoard.

TensorBoard is a powerful tool for plotting metrics to monitor your models during training, inspecting deep learning architectures, and exploring word embeddings. Let's build a TensorBoard embedding projection and use it for various kinds of analysis.

To build an embedding plot in TensorBoard, we need to perform the following steps:

- Collect the words and their respective tensors (300-D vectors) that we learned in the previous steps.

- Create a variable in the graph which will hold the tensors.

- Initialize the projector.

- Add an embedding entry to the projector and give it the name of the variable created above.

- Store all the words in a .tsv metadata file. TensorBoard uses this file to load and display the words.

- Link the .tsv metadata file to the projector object.

- Write the projector configuration and save the checkpoint that holds the embedding variable.

The following code completes these seven steps:

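    import os

    import tensorflow as tf
    # Assumes TensorFlow 1.x, where the projector plugin lives under tf.contrib.
    from tensorflow.contrib.tensorboard.plugins import projector

    # Words and their 300-D vectors from the previous steps.
    vocab_list = points.word.values.tolist()
    embeddings = all_word_vectors_matrix

    # Variable in the graph that holds the embedding tensor.
    embedding_var = tf.Variable(embeddings, dtype='float32', name='embedding')

    # Projector config with one embedding entry tied to the variable.
    projector_config = projector.ProjectorConfig()
    embedding = projector_config.embeddings.add()
    embedding.tensor_name = embedding_var.name

    LOG_DIR = './'
    metadata_file = "sample.tsv"

    # Write one word per line, in the same order as the rows of the matrix
    # (Python 3: open the file as UTF-8 text instead of encoding each word).
    with open(os.path.join(LOG_DIR, metadata_file), 'wt', encoding='utf-8') as metadata:
        metadata.writelines("%s\n" % w for w in vocab_list)

    # Link the metadata file to the embedding entry.
    embedding.metadata_path = os.path.join(os.getcwd(), metadata_file)

    # Use the same LOG_DIR where you stored your checkpoint.
    summary_writer = tf.summary.FileWriter(LOG_DIR)

    # The next line writes a projector_config.pbtxt in the LOG_DIR. TensorBoard will
    # read this file during startup.
    projector.visualize_embeddings(summary_writer, projector_config)

    saver = tf.train.Saver([embedding_var])
    with tf.Session() as sess:
        # Initialize the model
        sess.run(tf.global_variables_initializer())
        saver.save(sess, os.path.join(LOG_DIR, metadata_file + '.ckpt'))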
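
The projector matches each row of the metadata file to the embedding row at the same position. A file with a single column (one word per line) has no header row, while a file with several columns needs one. The sketch below shows the multi-column case using only the standard library. The helper name `write_metadata`, the `count` column, and the sample words and counts are hypothetical and not part of the original code.

```python
import csv
import os
import tempfile


def write_metadata(path, rows, header=("word", "count")):
    """Write a multi-column, tab-separated metadata file.

    `rows` must be in the same order as the rows of the embedding matrix.
    The header row is written because there is more than one column;
    a single-column file would be written without it.
    """
    with open(path, "w", encoding="utf-8", newline="") as f:
        writer = csv.writer(f, delimiter="\t", lineterminator="\n")
        writer.writerow(header)
        writer.writerows(rows)


# Hypothetical vocabulary with made-up frequency counts.
example_rows = [("king", 120), ("queen", 87), ("castle", 45)]
example_path = os.path.join(tempfile.gettempdir(), "sample_with_counts.tsv")
write_metadata(example_path, example_rows)
```

Extra columns like this can be used in the projector to label or color the points, which is the main reason to go beyond a plain word list.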

Once the TensorBoard preparation code has run, the binaries, metadata, and checkpoints are stored on disk (as shown in the following figure):

To launch TensorBoard, run the following command in a command prompt:

tensorboard --logdir=/path/of/the/checkpoint/

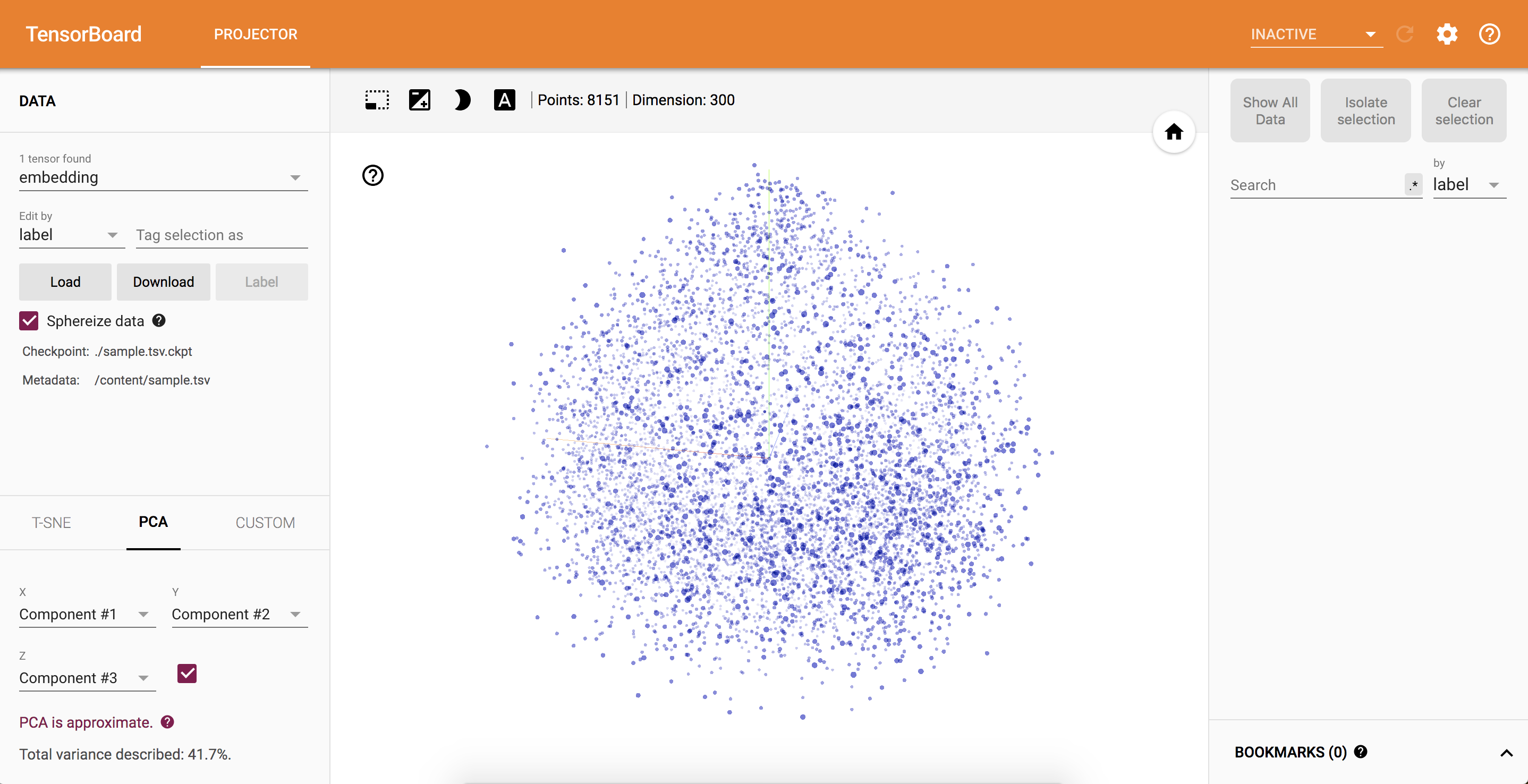
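
If the Projector tab comes up empty, a quick look at the log directory can help narrow down the cause. The following is a minimal sketch, not part of the original code: the helper name `missing_projector_files` is hypothetical, and the `*.ckpt*` and `*.tsv` patterns assume the file naming used in this article, with the metadata file stored inside the log directory.

```python
import glob
import os


def missing_projector_files(log_dir):
    """Return descriptions of expected files that are absent from log_dir."""
    missing = []
    # Written by projector.visualize_embeddings.
    if not os.path.isfile(os.path.join(log_dir, "projector_config.pbtxt")):
        missing.append("projector_config.pbtxt")
    # Index file written by the saver next to the checkpoint data.
    if not os.path.isfile(os.path.join(log_dir, "checkpoint")):
        missing.append("checkpoint")
    # Checkpoint data files (assumes a '.ckpt' suffix in the save path).
    if not glob.glob(os.path.join(log_dir, "*.ckpt*")):
        missing.append("*.ckpt* (saved variables)")
    # Metadata file with the words (assumes it lives in log_dir).
    if not glob.glob(os.path.join(log_dir, "*.tsv")):
        missing.append("*.tsv (metadata)")
    return missing


if __name__ == "__main__":
    print(missing_projector_files("./"))
```

An empty list means all four kinds of files were found; anything else names what to regenerate before launching TensorBoard again.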

Now open http://localhost:6006/#projector in a browser. This is TensorBoard with all the data points projected in 3D space. You can zoom in and out, search for a specific word, and re-project the embeddings using t-SNE to visualize the cluster formation of the dataset: