1. What Is a Robot Anyway?

Robot Sensitivity Training Lesson #1: All robots are machines, but not all machines are robots.

Ask any 10 people what a robot is and you’re bound to get at least 10 different opinions: radio-controlled toy dogs, automated bank teller machines, remote-controlled battle-bots, self-operating vacuum cleaners, drones that fly unattended, voice-activated smartphones, battery-operated action figures, titanium-plated hydraulic-powered battle chassis exoskeletons, and the list goes on.

It might not be easy to define what a robot is, but we all know one when we see one, right? The rapid growth of software-controlled devices has blurred the line between automated devices and robots. Just because an appliance or device is software-controlled does not make that appliance or device a robot. And being automated or self-operated is not enough for a machine to be given the privileged status of robot.

Many remote-controlled, self-operated devices and machines are given the status of robot but don’t make the cut. Table 1.1 shows a few of the wide-ranging and sometimes contradictory dictionary-type definitions for robot.

Table 1.1 Wide-Ranging, Sometimes Contradictory Definitions for the term Robot

The Seven Criteria for Defining a Robot

Before we can embark on our mission to program robots, we need a good definition for what makes a robot a robot. So when does a self-operating, software-controlled device qualify as a robot? At ASC (Advanced Software Construction, where the authors build smart engines for robots and softbots), we require a machine to meet the following seven criteria:

1. It must be capable of sensing its external and internal environments in one or more ways through the use of its programming.

2. Its reprogrammable behavior, actions, and control must be the result of executing a programmed set of instructions.

3. It must be capable of affecting, interacting with, or operating on its external environment in one or more ways through its programming.

4. It must have its own power source.

5. It must have a language suitable for the representation of discrete instructions and data as well as support for programming.

6. Once initiated, it must be capable of executing its programming without the need for external intervention (controversial).

7. It must be a nonliving machine.
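The seven criteria work as an all-or-nothing test: a machine may have more characteristics, but it qualifies as a robot only if every criterion holds. The minimal Python sketch below encodes that rule. The `MachineProfile` class, its field names, and the sample answers for a remote-controlled toy are hypothetical and chosen only for illustration; the answers for a real device would have to come from examining it.

```python
from dataclasses import dataclass, fields


@dataclass
class MachineProfile:
    """Hypothetical yes/no answers for the seven robot criteria."""
    senses_environment: bool       # 1. senses internal/external environment
    programmable_behavior: bool    # 2. behavior results from reprogrammable instructions
    affects_environment: bool      # 3. affects or operates on its environment
    own_power_source: bool         # 4. has its own power source
    instruction_language: bool     # 5. has a language for instructions and data
    autonomous_once_started: bool  # 6. runs without external intervention
    nonliving: bool                # 7. is a nonliving machine


def failed_criteria(machine: MachineProfile) -> list[str]:
    """Return the names of the criteria the machine does not meet."""
    return [f.name for f in fields(machine) if not getattr(machine, f.name)]


def is_robot(machine: MachineProfile) -> bool:
    """A machine counts as a robot only if it meets all seven criteria."""
    return not failed_criteria(machine)


# Illustrative: a remote-controlled toy that needs a human driver.
rc_toy = MachineProfile(False, False, True, True, False, False, True)
print(is_robot(rc_toy))         # False
print(failed_criteria(rc_toy))
```

Reporting the failed criteria, rather than only a yes/no answer, shows why a given machine does not make the cut.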

Let’s take a look at these criteria in more detail.

Criterion #1: Sensing the Environment

For a robot to be useful or effective, it must have some way of sensing, measuring, evaluating, or monitoring its environment and situation. What senses a robot needs and how the robot uses those senses in its environment are determined by the task(s) the robot is expected to perform. A robot might need to identify objects in the environment, record or distinguish sounds, take measurements of materials encountered, locate or avoid objects by touch, and so on. Without the capability of sensing its environment and situation in some way, it would be difficult for a robot to perform tasks. In addition to having some way to sense its environment and situation, the robot must be capable of accepting instructions on how, when, where, and why to use its senses.

Criterion #2: Programmable Actions and Behavior

There must be some way to give a robot a set of instructions detailing:

- What actions to perform
- When to perform actions
- Where to perform actions
- Under what situations to perform actions
- How to perform actions

As we see throughout this book, programming a robot amounts to giving it a set of instructions on what, when, where, why, and how to perform a set of actions.
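One way to picture such an instruction is as a record with one field per question in the list above. The sketch below is a hypothetical Python representation, not a format any particular robot uses. The `Instruction` fields and the `should_run` helper are illustrative names, and the "situation" is modeled, as an assumption, as a simple test over the latest sensor readings.

```python
from dataclasses import dataclass
from typing import Callable

Readings = dict[str, float]  # hypothetical: sensor name -> latest value


@dataclass
class Instruction:
    what: str                              # the action to perform
    when: float                            # earliest time (seconds) to perform it
    where: tuple[float, float]             # target (x, y) position
    situation: Callable[[Readings], bool]  # under what situation it applies
    how: dict[str, float]                  # parameters such as speed or force


def should_run(instr: Instruction, now: float, readings: Readings) -> bool:
    """Run only when the time has come and the situation holds."""
    return now >= instr.when and instr.situation(readings)


pick_up = Instruction(
    what="grip",
    when=5.0,
    where=(1.0, 2.5),
    situation=lambda r: r.get("distance_cm", 999.0) < 10.0,
    how={"grip_force": 0.4},
)
print(should_run(pick_up, now=6.0, readings={"distance_cm": 8.0}))   # True
print(should_run(pick_up, now=6.0, readings={"distance_cm": 30.0}))  # False
```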

Criterion #3: Change, Interact with, or Operate on Environment

For a robot to be useful, it has to not only sense but also change its environment or situation in some way. In other words, a robot has to be capable of doing something to something, or doing something with something! A robot has to be capable of making a difference in its environment or situation or it cannot be useful. Taking an action or carrying out a task has to affect or operate on the environment; otherwise, there wouldn’t be any way of knowing whether the robot’s actions were effective. A robot’s actions change its environment, scenario, or situation in some measurable way, and that change should be a direct result of the set of instructions given to the robot.

Criterion #4: Power Source Required

One of the primary functions of a robot is to perform some kind of action. That action causes the robot to expend energy. That energy has to come from some kind of power source: battery, electrical, wind, water, solar, and so on. A robot can operate and perform actions only as long as its power source supplies energy.

Criterion #5: A Language Suitable for Representing Instructions and Data

A robot has to be given a set of instructions that determine how, what, where, when, and under which situations or scenarios an action is to take place. Some instructions for action are hard-wired into the robot. These are instructions the robot always executes as long as it has an active power source and regardless of the situation the robot is in. This is part of the “machine” aspect of a robot.

One of the most important differences between a regular machine and a robot is that a robot can receive new instructions without having to be rebuilt, have its hardware changed, or be rewired. A robot has a language used to receive instructions and commands. The robot’s language must be capable of representing commands and data used to describe the robot’s environment, scenario, or situation. The robot’s language facility must allow instructions to be given without the need for physical rewiring. That is, a robot must be reprogrammable through a set of instructions.

Criterion #6: Autonomy Without External Intervention

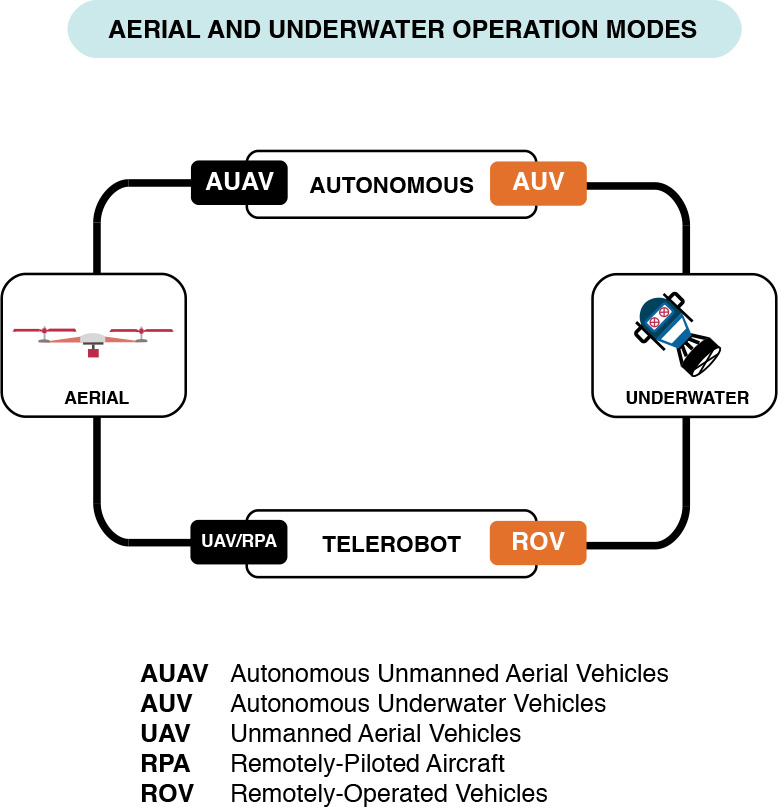

We take a hard line, and a controversial position, on this requirement. For our purposes, true robots are fully autonomous. Not all roboticists share this position. Figure 1.1 is from our robot boot camp and shows the two basic categories of robot operation.

Robot operation or robot control can be generally divided into two categories:

- Autonomous robots
- Telerobots

Like autonomous robots, telerobots receive instructions, but telerobots receive them in real time or delayed time from external sources (humans, computers, or other machines). Instructions are sent with a remote control of some type, and the robot performs the action the instruction requires. Sometimes one signal from a remote control triggers multiple actions once the robot receives it; in other cases it is a one-for-one proposition, with each signal corresponding to one action.

The key point to note here is that without the remote control or external intervention, the telerobot does not perform any action. An autonomous robot’s instructions, on the other hand, are stored before the robot takes a set of actions. An autonomous robot has its marching orders and does not rely on remote controls to perform or initiate each action. To be clear, there are hybrid robots and variations on the theme: some telerobots have autonomous actions, and some autonomous robots have a puppet mode.
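The difference can be made concrete with a small sketch. In the hypothetical Python classes below, the telerobot acts only when a signal arrives and looks up the action or actions that signal triggers (one signal may map to several actions), while the autonomous robot holds its full list of instructions before it starts. The signal names and action strings are invented for illustration, and "performing" an action is simulated by appending it to a log.

```python
class Telerobot:
    """Performs actions only in response to external signals."""

    def __init__(self, signal_map: dict[str, list[str]]):
        # One signal may trigger one action or several.
        self.signal_map = signal_map
        self.log: list[str] = []

    def receive(self, signal: str) -> None:
        for action in self.signal_map.get(signal, []):
            self.log.append(action)


class AutonomousRobot:
    """Executes instructions stored before it starts; no remote control needed."""

    def __init__(self, stored_instructions: list[str]):
        self.stored_instructions = list(stored_instructions)
        self.log: list[str] = []

    def run(self) -> None:
        for action in self.stored_instructions:
            self.log.append(action)


tele = Telerobot({"FWD": ["forward"], "GRAB": ["open_claw", "close_claw"]})
print(tele.log)   # [] : nothing happens without a signal
tele.receive("GRAB")
print(tele.log)   # ['open_claw', 'close_claw']

auto = AutonomousRobot(["forward", "open_claw", "close_claw", "reverse"])
auto.run()
print(auto.log)   # ['forward', 'open_claw', 'close_claw', 'reverse']
```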

However, we distinguish between fully autonomous and semiautonomous robots, and throughout this book we may refer to strong autonomy and weak autonomy. This book introduces the reader to the concepts and techniques involved in programming fully autonomous robots; remote-control programming techniques are not covered.

Criterion #7: A Nonliving Machine

Although plants and animals are sometimes considered programmable machines, we exclude them from the definition of robot. Many ethical considerations must be worked out as we build, program, and deploy robots. If we ever reach a point in robotics where robots are considered living, then a change to the definition of robot will definitely be in order.

Robot Categories

While many types of machines may meet one or more of our seven criteria, a machine must meet all seven to be considered a true robot. To be clear, a robot may have more than these seven characteristics but not fewer. Fortunately, there is no requirement that a robot be humanlike, possess intelligence, or have emotions. In fact, most robots in use today have little in common with humans. Robots fall into three basic categories, as shown in Figure 1.2.

The three categories of robot can also be further divided based on how they are operated and programmed. We previously described robots as being either telerobots or autonomous robots. So we can have ground-based, aerial, or underwater robots that are teleoperated or autonomous. Figure 1.3 shows a simple breakdown for aerial and underwater robots.

Aerial and Underwater Robots

Aerial robots are referred to as UAVs (unmanned aerial vehicles) or AUAVs (autonomous unmanned aerial vehicles). Not every UAV and AUAV qualifies as a robot. Remember our seven criteria. Most UAVs are just machines, but some meet all seven criteria and can be programmed to perform tasks. Underwater robots are referred to as ROVs (remotely operated vehicles) and AUVs (autonomous underwater vehicles). Like UAVs, most ROVs are just machines and don’t rise to the level of robot, but the ones that do can be programmed and controlled like any other robot.

As you might have guessed, aerial and underwater robots regularly face issues that ground-based robots typically don’t face. For instance, underwater robots must be programmed to navigate and perform underwater and must handle all the challenges that come with aquatic environments (e.g., water pressure and currents). Most ground-based robots are not programmed to operate in aquatic environments and are not typically designed to withstand getting wet.

Aerial robots are designed to take off and land and typically operate hundreds or thousands of feet above the ground. UAV robot programming has to take into consideration all the challenges that an airborne machine must face (e.g., what happens if an aerial robot loses all power?). A ground-based robot, by contrast, may simply stop when it runs out of power.

UAVs and ROVs can face certain disaster if there are navigational or power problems. But ground-based robots can also meet disaster. They can fall off edges, tumble down stairs, run into liquids, or get caught in bad weather. Ordinarily, robots are designed and programmed to operate in one of these categories; it is difficult to build and program a robot that can operate in multiple categories. UAVs don’t usually do water, and ROVs don’t usually do air.

Although most of the examples in this book focus on ground-based robots, the robot programming concepts and techniques that we introduce can be applied to robots in all three categories. A robot is a robot. Figure 1.4 shows a simplified robot component skeleton.

All true robots have the basic component skeleton shown in Figure 1.4. No matter which category a robot falls in (ground-based, aerial, or underwater), it contains at least four types of programmable components:

- One or more sensors
- One or more actuators
- One or more end-effectors/environmental effectors
- One or more microcontrollers

These four types of components are at the heart of most basic robot programming. In its simplest form, programming a robot boils down to programming the robot’s sensors, actuators, and end-effectors using the controller. Yes, there is more to programming a robot than just dealing with the sensors, actuators, effectors, and controllers, but these components make up most of the stuff that is in, on, and part of the robot. The other major aspect of robot programming involves the robot’s scenario, which we introduce later. But first let’s take a closer (although simplified) look at these four basic programmable robot components.
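The four component types can be sketched as minimal Python classes, with the microcontroller as the only part that holds a program and drives the others. Everything here is hypothetical: real sensors, motors, and grippers are reached through hardware-specific libraries, which this sketch replaces with stored values so it can run anywhere. The 5 cm threshold and the class names are illustrative assumptions.

```python
class Sensor:
    def __init__(self, name: str, value: float = 0.0):
        self.name = name
        self.value = value  # stands in for a real hardware reading

    def read(self) -> float:
        return self.value


class Actuator:
    def __init__(self, name: str):
        self.name = name
        self.speed = 0.0

    def set_speed(self, speed: float) -> None:
        self.speed = speed


class EndEffector:
    def __init__(self, name: str):
        self.name = name
        self.closed = False

    def close(self) -> None:
        self.closed = True


class Microcontroller:
    """Holds the program and is the only component that drives the others."""

    def __init__(self, sensor: Sensor, motor: Actuator, gripper: EndEffector):
        self.sensor, self.motor, self.gripper = sensor, motor, gripper

    def step(self) -> None:
        # Program: drive toward an object, then stop and grip it when close.
        if self.sensor.read() > 5.0:  # distance in cm (assumed unit)
            self.motor.set_speed(0.5)
        else:
            self.motor.set_speed(0.0)
            self.gripper.close()


mcu = Microcontroller(Sensor("ultrasonic", 20.0), Actuator("drive"), EndEffector("claw"))
mcu.step()
print(mcu.motor.speed, mcu.gripper.closed)  # 0.5 False
mcu.sensor.value = 3.0                      # simulate the object getting close
mcu.step()
print(mcu.motor.speed, mcu.gripper.closed)  # 0.0 True
```

Note that the sensor, actuator, and end-effector do nothing on their own; every change happens because the microcontroller’s program asked for it.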

What Is a Sensor?

Sensors are the robot’s eyes and ears to the world. They are components that allow a robot to receive input, signals, data, or information about its immediate environment. Sensors are the robot’s interface to the world. In other words, the sensors are the robot’s senses.

Robot sensors come in many different types, shapes, and sizes. There are robot sensors that are sensitive to temperature; sensors that sense sound, infrared light, motion, radio waves, gas, and soil composition; and sensors that measure gravitational pull. Some sensors use a camera for vision, and others identify direction. Whereas human beings are limited to the five basic senses of sight, touch, smell, taste, and hearing, a robot’s potential for sensors is almost limitless. A robot can have almost any kind of sensor and can be equipped with as many sensors as its power supply supports. We cover sensors in detail in Chapter 5, “A Close Look at Sensors.” But for now just think of sensors as the devices that provide a robot with input and data about its immediate situation or scenario.

Each sensor is responsible for giving the robot some kind of feedback about its current environment. Typically, a robot reads values from a sensor or sends values to a sensor. But sensors don’t just automatically sense. The robot’s programming dictates when, how, where, and to what extent to use the sensor. The programming determines what mode the sensor is in and what to do with feedback received from the sensor.

For instance, say I have a robot equipped with a light sensor. Depending on the sensor’s sophistication, I can instruct the robot to use its light sensor to determine whether an object is blue and then to retrieve only blue objects. If a robot is equipped with a sound sensor, I can instruct it to perform one action if it hears one kind of sound and execute another action if it hears a different kind of sound.
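In code, both examples come down to the program deciding what to do with each reading. The sketch below assumes a hypothetical light sensor that reports an (r, g, b) triple in the range 0 to 255 and a sound sensor whose output has already been classified into a label. The color thresholds, sound labels, and action names are illustrative only.

```python
def is_blue(rgb: tuple[int, int, int]) -> bool:
    """Crude rule (assumed): the blue channel clearly dominates red and green."""
    r, g, b = rgb
    return b > 100 and b > r + 40 and b > g + 40


def choose_objects(readings: dict[str, tuple[int, int, int]]) -> list[str]:
    """Return the names of objects the robot should retrieve (blue ones only)."""
    return [name for name, rgb in readings.items() if is_blue(rgb)]


SOUND_ACTIONS = {"clap": "start", "whistle": "stop"}  # hypothetical mapping


def react_to_sound(label: str) -> str:
    """One kind of sound triggers one action; a different sound, another."""
    return SOUND_ACTIONS.get(label, "ignore")


seen = {"cup": (20, 30, 200), "ball": (220, 40, 30), "block": (60, 90, 180)}
print(choose_objects(seen))    # ['cup', 'block']
print(react_to_sound("clap"))  # start
```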

Not all sensors are created equal. For any sensor that you can equip a robot with, there is a range of low-end to high-end sensors in that category. For instance, some light sensors can detect only four colors, while other light sensors can detect 256 colors. Part of programming a robot includes becoming familiar with the robot’s sensor set and with each sensor’s capabilities and limitations. The more versatile and sophisticated a sensor is, the more elaborate the associated robot task can be.

A robot’s effectiveness can be limited by its sensors. A robot that has a camera sensor with a range limited to a few inches cannot see something a foot away. A robot with a sensor that detects only light in the infrared cannot see ultraviolet light, and so on. We discuss robot effectiveness in this book and describe it in terms of the robot skeleton (refer to Figure 1.4). We rate a robot’s potential effectiveness based on:

- Actuator effectiveness
- Sensor effectiveness
- End-effector effectiveness
- Microcontroller effectiveness

REQUIRE

By this simple measure of robot effectiveness, sensors count for approximately one-fourth of the potential robot effectiveness. We have developed a method of measuring robot potential that we call REQUIRE (Robot Effectiveness Quotient Used in Real Environments). We use REQUIRE as an initial litmus test in determining what we can program a robot to do and what we won’t be able to program that robot to do. We explain REQUIRE later and use it as a robot performance metric throughout the book. It is important to note that 25% of a robot’s potential effectiveness is determined by the quality of its sensors and how they can be programmed.
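REQUIRE itself is explained later; the sketch below illustrates only the equal one-fourth weighting described above. It assumes, hypothetically, that each of the four components is rated on a 0-to-1 scale. The function name and the rating scale are illustrative, and this is not the REQUIRE calculation.

```python
COMPONENTS = ("actuators", "sensors", "end_effectors", "microcontroller")


def effectiveness_quotient(ratings: dict[str, float]) -> float:
    """Equal-weight score in [0, 1]; an assumption, not the REQUIRE formula."""
    for name in COMPONENTS:
        if not 0.0 <= ratings[name] <= 1.0:
            raise ValueError(f"{name} rating must be between 0 and 1")
    # Each component contributes at most one-fourth of the total.
    return sum(0.25 * ratings[name] for name in COMPONENTS)


ratings = {"actuators": 0.8, "sensors": 0.4, "end_effectors": 1.0, "microcontroller": 0.6}
print(round(effectiveness_quotient(ratings), 3))  # 0.7
```

Under this assumption, even a perfect sensor set with every other component rated 0 contributes at most 0.25, which is the one-fourth share described above.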

What Is an Actuator?

Actuators are the components that move a robot’s parts. Motors usually play the role of actuators for a robot. A motor could be electric, hydraulic, or pneumatic, or it could use some other source of potential energy. The actuator provides movement for a robot arm or for tractors, wheels, propellers, paddles, wings, or robotic legs. Actuators allow a robot to move its sensors and to rotate, shift, open, close, raise, lower, twist, and turn its components.

Programmable Robot Speed and Robot Strength

The actuators/motors ultimately determine how fast a robot can move. A robot’s acceleration is tied to its actuators. The actuators are also responsible for how much a robot can lift or how much a robot can hold. Actuators are intimately involved in how much torque or force a robot can generate. Fully programming a robot involves giving the robot instructions for how, when, why, where, and to what extent to use the actuators. It is difficult to imagine or build a robot that has no movement of any sort. That movement might be external or internal, but it is present.


Tip

Recall criterion #3 from our list of robot requirements: It must be able to affect, interact with, or operate on its external environment in one or more ways through its programming.


A robot must operate on its environment in some way, and the actuator is a key component of this interaction. Like the sensor set, a robot’s actuators can enable or limit its potential effectiveness. For example, an actuator that permits a robot to rotate its arm only 45 degrees would not be effective in a situation requiring a 90-degree rotation. Likewise, if the actuator could spin a propeller at only 200 rpm where 1000 rpm is required, that actuator would prevent the robot from properly performing the required task.

The robot has to have the right type, range, speed, and degree of movement for the occasion. Actuators are the programmable components providing this movement. Actuators also determine how much weight or mass a robot can move. If the task requires the robot to lift a 2-liter (about 68-oz.) container of liquid, but the robot’s actuators support a maximum of only 12 ounces, the robot is doomed to fail.
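Matching actuators to a task is essentially a comparison of each required value against the corresponding rated limit. The sketch below checks requirements like those in the examples above (rotation angle, propeller speed, and payload). The capability keys and the 32-oz. sample load are hypothetical, and a missing capability is treated, as an assumption, as a limit of zero.

```python
def shortfalls(capabilities: dict[str, float], requirements: dict[str, float]) -> list[str]:
    """Return each requirement that exceeds what the actuators can deliver."""
    return [
        f"{key}: need {needed}, have {capabilities.get(key, 0.0)}"
        for key, needed in requirements.items()
        if needed > capabilities.get(key, 0.0)  # missing capability counts as 0
    ]


arm = {"rotation_deg": 45.0, "payload_oz": 12.0}
print(shortfalls(arm, {"rotation_deg": 90.0}))   # ['rotation_deg: need 90.0, have 45.0']
print(shortfalls(arm, {"rotation_deg": 30.0}))   # []

propeller_motor = {"rpm": 200.0}
print(shortfalls(propeller_motor, {"rpm": 1000.0}))

# Illustrative load heavier than the arm's 12-oz. limit.
print(shortfalls(arm, {"payload_oz": 32.0}))
```

Returning every shortfall, rather than stopping at the first one, makes it easier to see all the ways a robot is mismatched to its task.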

A robot’s effectiveness is often measured by how useful it is in the specified situation or scenario. The actuators often dictate how much work a robot can or cannot perform. Like the sensors, the robot’s actuators don’t simply actuate by themselves; they must be programmed. Like sensors, actuators range from low-end, simple functions to high-end, adaptable, sophisticated functions. The more flexible the programming facilities, the better. We discuss actuators in more detail in Chapter 7, “Programming Motors and Servos.”

What Is an End-Effector?

The end-effector is the hardware that allows the robot to handle, manipulate, alter, or control objects in its environment. The end-effector is the hardware that causes a robot’s actions to have an effect on its environment or scenario. Actuators and end-effectors are usually closely related. Most end-effectors require the use of or interaction with the actuators.


Note

End-effectors also help to fulfill part of criterion #3 for our definition of robot. A robot has to be capable of doing something to something, or doing something with something! A robot has to make a difference in its environment or situation or it cannot be useful.

Robot arms, grippers, claws, and hands are common examples of end-effectors. End-effectors come in many shapes and sizes and have a variety of functions. For example, a robot can use components as diverse as drills, projectiles, pulleys, magnets, lasers, sonic blasts, or even fishnets for end-effectors. Like sensors and actuators, end-effectors are also under the control of the robot’s programming.

Part of the challenge of programming any robot is instructing the robot to use its end-effectors well enough to get the task done. The end-effectors can also limit a robot’s successful completion of a task. As with sensors, a robot can be equipped with multiple end-effectors, allowing it to manipulate different types of objects, or several similar objects simultaneously. Yes, in some cases the best end-effectors are the ones that work in groups of two, four, six, and so on. The end-effectors must be capable of interacting with the objects the robot needs to manipulate within its scenario or environment. We take a detailed look at end-effectors in Chapter 7.

What Is a Controller?

The controller is the component that the robot uses to “control” its sensors, end-effectors, actuators, and movement. The controller is the “brain” of the robot. The controller function could be divided among multiple controllers in the robot but is usually implemented as a microcontroller. A microcontroller is a small computer on a single chip. Figure 1.5 shows the basic components of a microcontroller.

The controller or microcontroller is the component of the robot that is programmable and supports the programming of the robot’s actions and behaviors. By definition, machines without at least one microcontroller can’t be robots. But keep in mind that being programmable is only one of the seven criteria.


Note

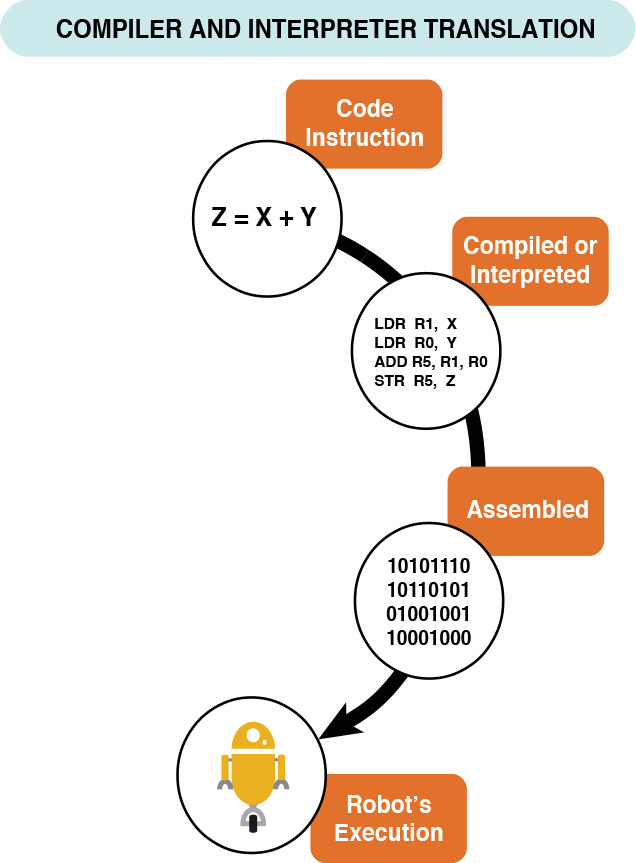

The processor executes instructions. Each processor has its own kind of machine language, and any set of instructions sent to a processor must ultimately be translated into that language. So if we start with a set of instructions in English that we want a processor to execute, those instructions must ultimately be translated into instructions the processor inside the microcontroller can understand and execute. Figure 1.6 shows the basic steps from “bright idea to processor” machine instruction.

The controller controls the robot and also contains the robot’s memory. Note that Figure 1.5 shows four components. The processor is responsible for calculations, symbolic manipulation, executing instructions, and signal processing. The input ports receive signals from sensors and pass those signals to the processor for processing. The processor sends signals or commands to the output ports connected to actuators so they can perform actions.

Signals sent to sensors put the sensors into a particular sensor mode. Signals sent to actuators initialize the actuators, set motor speeds, start motor movement, stop motor movement, and so on.

So the processor gets feedback through the input ports from the sensors and sends signals and commands to the output ports that ultimately are directed to motors; robot arms; and robot-movable parts such as tractors, pulley wheels, and other end-effectors.
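The flow just described (readings in through input ports, a decision by the processor, commands out through output ports) is often written as a loop. The sketch below simulates the ports with Python dictionaries and lists. The port numbers and names, the light threshold of 30, and the command strings are hypothetical; on a real microcontroller these would be hardware registers or vendor library calls.

```python
class SimulatedController:
    def __init__(self, sensor_samples: dict[int, list[int]]):
        # Each input port yields a pre-recorded sequence of sensor readings.
        self.input_ports = {port: iter(samples) for port, samples in sensor_samples.items()}
        self.output_ports: dict[str, list[str]] = {"A": [], "B": []}
        self.memory: list[int] = []  # current operation data

    def read_input(self, port: int) -> int:
        return next(self.input_ports[port])

    def write_output(self, port: str, command: str) -> None:
        self.output_ports[port].append(command)

    def run(self, cycles: int) -> None:
        for _ in range(cycles):
            light = self.read_input(1)   # sense: input port 1 (light sensor)
            self.memory.append(light)    # store the reading in memory
            if light < 30:               # process: assumed "too dark" threshold
                self.write_output("A", "stop")
                self.write_output("B", "stop")
            else:                        # act: drive both motors forward
                self.write_output("A", "forward 50")
                self.write_output("B", "forward 50")


ctrl = SimulatedController({1: [80, 75, 20]})
ctrl.run(cycles=3)
print(ctrl.output_ports["A"])  # ['forward 50', 'forward 50', 'stop']
print(ctrl.memory)             # [80, 75, 20]
```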

In this book, BURT, discussed previously in the robot boot camp, is used to translate bright ideas to machine language, so you can be clear on what takes place during the robot programming process. Unless you have a microcontroller that uses English or some other natural language as its internal language, all instructions, commands, and signals have to be translated from whatever form they start out in to a format that can be recognized by the processor within the microcontroller. Figure 1.7 shows a simple look at the interaction between the sensors, actuators, end-effectors, and the microcontroller.

The memory component in Figure 1.7 is the place where the robot’s instructions are stored and where the robot’s current operation data is stored. The current operation data includes data from the sensors, actuators, processor, or any other peripherals needed to store data or information that must be ultimately processed by the controller’s processor. We have in essence reduced a robot to its basic robot skeleton:

- Sensors
- Actuators
- Microcontrollers

At its most basic level, programming a robot amounts to manipulating the robot’s sensors, end-effectors, and motion through a set of instructions given to the microcontroller. Regardless of whether we are programming a ground-based, aerial, or underwater robot, the basic robot skeleton is the same, and a core set of primary programming activities must be tackled. Programming a robot to autonomously execute a task requires that we communicate that task in some way to the robot’s microcontroller. Only after the microcontroller has the task can the robot execute it. Figure 1.8 shows our translated robot skeleton.

Figure 1.8 shows what the basic robot components are analogous to. Robot sensors, actuators, and end-effectors can definitely come in more elaborate forms, but Figure 1.8 shows some of the more commonly used components and conveys the basic ideas we use in this book.


Note

We place a special emphasis on the microcontroller because it is the primary programmable component of a robot, and when robot programming is discussed, the microcontroller is usually what is under discussion. The end-effectors and sensors are important, but the microcontroller is in the driver’s seat and is what sets and reads the sensors and controls the movement of the robots and the use of the end-effectors. Table 1.2 lists some of the commonly used microcontrollers for low-cost robots.

Although most of the examples in this book were developed using ATmega, ARM7, and ARM9 microcontrollers, the programming concepts we introduce can be applied to any robot that has the basic robot skeleton referred to in Figure 1.4 and that meets our seven robot criteria.

What Scenario Is the Robot In?

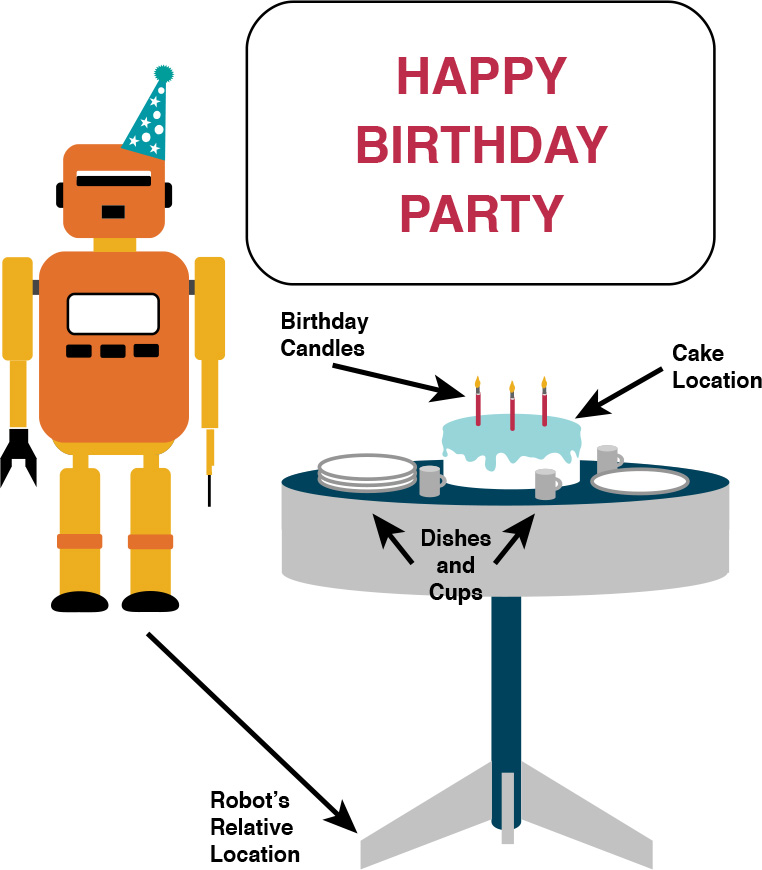

The robot skeleton tells only half the story of programming a robot. The other half, which is not part of the actual robot, is the robot’s scenario or situation. Robots perform tasks of some type in a particular context. For a robot to be useful, it must cause some kind of effect in context. The robot’s tasks and environments are not just random, unspecified notions. Useful robots execute tasks within specified scenarios or situations. The robot is given some role to play in the scenario and situation. Take, for example, the robot at the birthday party shown in Figure 1.9.

We want to assign the robot (let’s call it BR-1) two tasks:

- Light the candles on the cake.
- Clear away the dishes and cups after the party ends.

The birthday party is the robot’s scenario. The robot, BR-1, has the role of lighting the candles and clearing away the dishes and cups. Scenarios come with expectations. Useful robots are expectation driven. There are expectations at a birthday party. A typical birthday party has a location, cake, ice cream, guests, someone celebrating a birthday, a time, and possibly presents. For BR-1 to carry out its role at the birthday party, it must have instructions that address scenario-specific or situation-specific things such as:

- The location of the cake
- How many candles to light
- The robot’s location relative to the cake
- When to light the candles
- The number of dishes and cups and so on
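Those scenario details are data the robot must be given along with its tasks. As a hypothetical sketch (the class, field names, coordinates, time format, and units are illustrative, not a format defined in this chapter), BR-1’s birthday-party scenario could be recorded like this, with the robot’s location relative to the cake computed from two positions in a shared room frame:

```python
import math
from dataclasses import dataclass


@dataclass
class PartyScenario:
    cake_xy: tuple[float, float]   # location of the cake (meters, room frame)
    robot_xy: tuple[float, float]  # where BR-1 currently is
    candles: int                   # how many candles to light
    light_at: str                  # when to light them, e.g., "18:30"
    dishes: int
    cups: int

    def robot_relative_to_cake(self) -> tuple[float, float]:
        """Robot position minus cake position: (dx, dy) from cake to robot."""
        return (self.robot_xy[0] - self.cake_xy[0], self.robot_xy[1] - self.cake_xy[1])

    def distance_to_cake(self) -> float:
        return math.hypot(*self.robot_relative_to_cake())


party = PartyScenario(cake_xy=(4.0, 3.0), robot_xy=(1.0, -1.0),
                      candles=8, light_at="18:30", dishes=12, cups=12)
print(party.robot_relative_to_cake())  # (-3.0, -4.0)
print(party.distance_to_cake())        # 5.0
```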

The robot’s usefulness and success are determined by how well it fulfills its role in the specified situation. Every scenario or situation has a location, a set of objects, conditions, and a sequence of events. Autonomous robots are situated within scenarios and are expectation driven. When a robot is programmed, it is expected to participate in and affect a situation or scenario in some way. Representing and communicating the scenario and set of expectations to the robot is the second half of the story of robot programming.


Tip

In a nutshell, programming a robot to be useful amounts to programming the robot to use its sensors and end-effectors to accomplish its role and meet its expectations by executing a set of tasks in some specified situation or scenario.

Programming a useful robot can be broken down into two basic areas:

- Instructing the robot to use its basic capabilities to fulfill some set of expectations
- Explaining to the robot what the expectations are in some given situation or scenario

An autonomous robot is useful when it meets its expectations in the specified situation or scenario through its programming without the need for human intervention. So then half the job requires programming the robot to execute some task or set of tasks.

The other half requires instructing the robot to execute its function in the specified scenario or scenarios. Our approach to robot programming is scenario driven. Robots have roles in situations and scenarios, and those situations and scenarios must be part of the robot’s instructions and programming for the robot to be successful in executing its task(s).

Giving the Robot Instructions

If we expect a robot to play some role in some scenario, how do we tell it what to do? How do we give it instructions? In the answers to these questions lie the adventure, challenge, wonder, dread, and possibly regret in programming a robot.

Humans use natural languages, gestures, body language, and facial expressions to communicate. Robots are machines and only understand the machine language of the microcontroller. Therein lies the rub. We speak and communicate one way and robots communicate another. And we do not yet know how to build robots to directly understand and process human communication. So even if we have a robot that has the sensors, end-effectors, and capabilities to do what we require it to do, how do we communicate the task? How do we give it the right set of instructions?

Every Robot Has a Language

So exactly what language does a robot understand? The native language of the robot is the language of the robot’s microcontroller. No matter how one communicates to a robot, ultimately that communication has to be translated into the language of the microcontroller. The microcontroller is a computer, and most computers speak machine language.

Machine Language

Machine language is made of 0s and 1s. So, technically, the only language a robot really understands is strings and sequences of 0s and 1s. For example:

0000001, 1010101, 00010010, 10101010, 11111111

And if you wanted to (or were forced to) write a set of instructions for the robot in the robot’s native language, it would consist of line upon line of 0s and 1s. For example, Listing 1.1 is a simple ARM machine language (sometimes referred to as binary language) program.

Listing 1.1 ARM Machine Language Program

11100101100111110001000000010000

11100101100111110000000000001000

11100000100000010101000000000000

11100101100011110101000000001000

This program loads numbers from two memory locations into processor registers, adds them together, and stores the result in a third memory location. Most robot controllers speak a language similar to the machine language shown in Listing 1.1.

The arrangement and number of 0s and 1s may change from controller to controller, but what you see is what you get. Pure machine language can be difficult to read, and it’s easy to flip a 1 or 0, or count the 1s and 0s incorrectly.
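To see that the 0s and 1s are not arbitrary, the sketch below splits each 32-bit word into the bit fields defined for 32-bit ARM (A32) instructions and prints the matching assembly. It handles only the two formats used in Listing 1.1: LDR/STR with an immediate offset and ADD with a register operand. Loads and stores are shown in their PC-relative form (`[PC, #offset]`), which is how an assembler encodes a memory label such as X. The second word is written with destination register R0 (bits 15-12 = 0000) to match `LDR R0, Y` in Listing 1.2. This is a teaching sketch, not a general disassembler.

```python
def decode(word: str) -> str:
    """Decode one 32-bit ARM (A32) word; supports only the formats in Listing 1.1."""
    if len(word) != 32 or set(word) - {"0", "1"}:
        raise ValueError("expected a string of 32 binary digits")
    bits = int(word, 2)

    def field(hi: int, lo: int) -> int:
        """Extract bits hi..lo (inclusive) as an unsigned integer."""
        return (bits >> lo) & ((1 << (hi - lo + 1)) - 1)

    if field(31, 28) != 0b1110:  # condition code 1110 means "always"
        return "unsupported: conditional instruction"

    if field(27, 25) == 0b010 and field(24, 24) == 1 and field(21, 21) == 0:
        # Single data transfer, immediate offset, no write-back.
        op = "LDR" if field(20, 20) else "STR"  # L bit: load or store
        if field(22, 22):
            op += "B"                            # B bit: byte transfer
        sign = "" if field(23, 23) else "-"      # U bit: add or subtract offset
        rn, rd, offset = field(19, 16), field(15, 12), field(11, 0)
        base = "PC" if rn == 15 else f"R{rn}"
        return f"{op} R{rd}, [{base}, #{sign}{offset}]"

    if field(27, 25) == 0b000 and field(24, 21) == 0b0100 and field(11, 4) == 0:
        # Data processing: ADD with an unshifted register as the second operand.
        rn, rd, rm = field(19, 16), field(15, 12), field(3, 0)
        suffix = "S" if field(20, 20) else ""    # S bit: update condition flags
        return f"ADD{suffix} R{rd}, R{rn}, R{rm}"

    return "unsupported instruction format"


listing = [
    "11100101100111110001000000010000",
    "11100101100111110000000000001000",
    "11100000100000010101000000000000",
    "11100101100011110101000000001000",
]
for word in listing:
    print(decode(word))
# LDR R1, [PC, #16]
# LDR R0, [PC, #8]
# ADD R5, R1, R0
# STR R5, [PC, #8]
```

Each field sits at a fixed bit position, so flipping or miscounting a single digit changes the register, the offset, or even the operation.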

Assembly Language

Assembly language is a more readable form of machine language that uses short symbols for instructions and represents binary using hexadecimal or octal notation. Listing 1.2 is an assembly language example of the kind of operations shown in Listing 1.1.

Listing 1.2 Assembly Language Version of Listing 1.1

LDR R1, X

LDR R0, Y

ADD R5, R1, R0

STR R5, Z

Listing 1.2 is more readable than Listing 1.1, and entering assembly language programs is less prone to errors than entering machine language programs, but microcontroller assembly language is still a bit cryptic. It’s not immediately obvious that we’re taking two numbers, X and Y, adding them together, and then storing the result in Z.


Note

Machine language is sometimes referred to as a first-generation language. Assembly language is sometimes referred to as a second-generation language.

In general, the closer a computer language gets to a natural language the higher the generation designation it receives. So a third-generation language is closer to English than a second-generation language, and a fourth-generation language is closer than a third-generation language, and so on.

So ideally, we want to use a language as close to our own language as possible to instruct our robot. Unfortunately, a higher generation of language typically requires more hardware resources (e.g., circuits, memory, processor capability) and requires the controller to be more complex and less efficient. So the microcontrollers tend to have only second-generation instruction sets.

What we need is a universal translator, something that allows us to write our instructions in a human language like English or Japanese and automatically translate it into machine language or assembly language. The field of computers has not yet produced such a universal translator, but we are halfway there.

Meeting the Robot’s Language Halfway

Compilers and interpreters are software programs that convert one language into another language. They allow a programmer to write a set of instructions in a higher generation language and then convert it to a lower generation language. For example, the assembly language program from Listing 1.2

LDR R1, X

LDR R0, Y

ADD R5, R1, R0

STR R5, Z

can be written using the instruction

Z = X + Y
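A real compiler does far more than this, but a toy translator shows the basic idea of turning a higher-generation instruction into a lower-generation one. The Python sketch below is hypothetical: compile_add recognizes only statements of the form DEST = A + B and always uses R1, R0, and R5, the registers that appear in Listing 1.2. A production compiler would parse a full grammar and choose registers itself.

```python
import re

def compile_add(statement: str) -> list[str]:
    """Translate 'DEST = A + B' into ARM-style assembly like Listing 1.2.

    Toy sketch: only one statement form is recognized, and the register
    choices (R1, R0, R5) are fixed to mirror Listing 1.2.
    """
    match = re.fullmatch(r"\s*(\w+)\s*=\s*(\w+)\s*\+\s*(\w+)\s*", statement)
    if match is None:
        raise ValueError(f"unsupported statement: {statement!r}")
    dest, left, right = match.groups()
    return [
        f"LDR R1, {left}",   # load the first operand from memory
        f"LDR R0, {right}",  # load the second operand from memory
        "ADD R5, R1, R0",    # add the two registers, result in R5
        f"STR R5, {dest}",   # store the sum back to memory
    ]

for line in compile_add("Z = X + Y"):
    print(line)
```

Any statement outside the single supported pattern is rejected with a ValueError rather than guessed at, which keeps the sketch honest about how little it understands.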

Notice in Figure 1.10 that the compiler or interpreter converts our higher-level instruction into assembly language, but an assembler is the program that converts the assembly language to machine language. Figure 1.10 also shows a simple version of the idea of a tool-chain.
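The assembler step can also be sketched in a few lines. The hypothetical assemble function below encodes LDR, STR, and ADD instructions like those in Listing 1.2 into 32-bit words. It assumes classic ARM (A32) encodings, PC-relative addressing for the variables, and a made-up data layout (SYMBOLS) that places Y, X, and Z just after the four instructions. In ARM state the PC reads as the current instruction's address plus 8, which is why the offset is computed that way.

```python
# Hypothetical data layout (an assumption for this sketch): the variables
# live just after the four 4-byte instructions, at these byte addresses.
SYMBOLS = {"Y": 20, "X": 24, "Z": 28}

def reg(token: str) -> int:
    """Turn a register name such as 'R5' into its number, 5."""
    return int(token.strip().upper().lstrip("R"))

def assemble(lines: list[str], symbols: dict[str, int]) -> list[int]:
    """Encode a tiny subset of classic ARM (A32) instructions into 32-bit words."""
    words = []
    for index, line in enumerate(lines):
        pc = index * 4 + 8  # in ARM state, PC reads as instruction address + 8
        op, operand_text = line.split(maxsplit=1)
        args = [a.strip() for a in operand_text.split(",")]
        if op in ("LDR", "STR"):
            # Single data transfer, immediate offset from PC (Rn = 1111), U = 1.
            offset = symbols[args[1]] - pc
            if not 0 <= offset <= 0xFFF:
                raise ValueError(f"offset out of range for {line!r}")
            base = 0xE59F0000 if op == "LDR" else 0xE58F0000
            words.append(base | (reg(args[0]) << 12) | offset)
        elif op == "ADD":
            # Data processing, register operand, no shift, S = 0.
            rd, rn, rm = (reg(a) for a in args)
            words.append(0xE0800000 | (rn << 16) | (rd << 12) | rm)
        else:
            raise ValueError(f"unsupported instruction: {op}")
    return words

program = ["LDR R1, X", "LDR R0, Y", "ADD R5, R1, R0", "STR R5, Z"]
for word in assemble(program, SYMBOLS):
    print(f"{word:032b}")
```

Running it prints one 32-bit binary string per instruction, in the same format as Listing 1.1. Real assemblers also handle labels, many more instructions, and output file formats, but the core job is this kind of table-driven bit packing.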

Tool-chains are used in the programming of robots. Although we can’t use natural languages yet, we have come a long way from assembly language as the only choice for programming robots. In fact, today we have many higher-level languages for programming robots, including graphic languages such as LabVIEW, graphic environments such as Choregraphe, puppet modes, and third-, fourth-, and fifth-generation programming languages.

Figure 1.11 shows a taxonomy of some commonly used generations of robot programming languages.

Figure 1.11 A taxonomy of some commonly used robot programming languages and graphical programming environments

Graphic languages are sometimes referred to as fifth- and sixth-generation programming languages. This is in part because graphic languages allow you to express your ideas more naturally rather than expressing your ideas from the machine’s point of view. So one of the challenges of instructing or programming robots lies in the gap between expressing the set of instructions the way you think of them versus expressing the set of instructions the way a microcontroller sees them.

Graphical robot programming environments and graphic languages attempt to address the gap by allowing you to program the robots using graphics and pictures. The Bioloid¹ Motion Editor and the Robosapien² RS Media Body Con Editor are two examples of this kind of environment and are shown in Figure 1.11.

1. Bioloid is a modular robotics system manufactured by Robotis.

2. Robosapien is a self-contained robotics system manufactured by WowWee.

These kinds of environments work by allowing you to graphically manipulate or set the movements of the robot and initial values of the sensors and speeds and forces of the actuators. The robot is programmed by setting values and moving graphical levers and graphical controls. In some cases it’s a simple matter of filling out a form in the software that has the information and data that needs to be passed to the robot. The graphical environment isolates the programmer from the real work of programming the robot.

It is important to keep in mind that ultimately the robot’s microcontroller is expecting assembly/machine language. Somebody or something has to provide the native instructions. So these graphical environments have internal compilers and interpreters doing the work of converting the graphical ideas into lower-level languages and ultimately into the controller’s machine language. Expensive robotic systems have had these graphical environments for some time, but they are now available for low-cost robots. Table 1.3 lists several commonly used graphical environments/languages for low-cost robotic systems.

Puppet Modes

Closely related to the idea of visually programming the robot is the notion of direct manipulation, or puppet mode. Puppet modes allow the programmer to manipulate a graphic of the robot using the keyboard, mouse, touchpad, touchscreen, or some other pointing device, and they also allow the programmer to physically move the parts of the robot into the desired positions.

The puppet mode acts as a kind of action recorder. If you want the robot’s head to turn left, you can point the graphic of the robot’s head to the left and the puppet mode remembers that. If you want the robot to move forward, you move the robot’s parts into the forward position, whether it be legs, wheels, tractors, and so on, and the puppet mode remembers that. Once you have recorded all the movements you want the robot to perform, you then send that information to the robot (usually using some kind of cable or wireless connection), and the robot physically performs the actions recorded in puppet mode.
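At its core, a puppet mode is a record-and-replay loop. The Python sketch below is a hypothetical illustration, not any vendor's actual implementation: PuppetRecorder stores a sequence of poses (each pose maps an actuator name to a target value, in made-up units), and playback hands each pose, in order, to send_to_robot, a stand-in for the cable or wireless link to the robot.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical pose format: actuator name -> target value (made-up units).
Pose = Dict[str, float]

@dataclass
class PuppetRecorder:
    """Toy action recorder: remembers poses, then replays them in order."""
    frames: List[Pose] = field(default_factory=list)

    def record(self, pose: Pose) -> None:
        # Store a copy so later edits to the caller's dict don't alter the recording.
        self.frames.append(dict(pose))

    def playback(self, send_to_robot: Callable[[Pose], None]) -> int:
        # send_to_robot stands in for the link that delivers each pose to the robot.
        for pose in self.frames:
            send_to_robot(pose)
        return len(self.frames)  # number of poses sent

recorder = PuppetRecorder()
recorder.record({"head_pan": -45.0})               # turn the head to the left
recorder.record({"head_pan": 0.0, "wheels": 1.0})  # face forward and drive ahead
recorder.playback(lambda pose: print("send", pose))
```

Copying each pose on record is a deliberate choice: the recorder keeps a snapshot of the robot as it was at that moment, even if the caller keeps modifying the same dictionary between recordings.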

Likewise, if the programmer puts the robot itself into puppet mode (provided the robot has one), the physical manipulation the programmer performs is recorded. Once the programmer takes the robot out of record mode, the robot executes the sequence of actions recorded during the puppet mode session. Puppet mode is a kind of programming by imitation: the robot records how it is being manipulated, remembers those manipulations, and then duplicates the sequence of actions. Using a puppet mode allows the programmer to bypass typing in a sequence of instructions and relieves the programmer of having to figure out how to represent a sequence of actions in a robot language.

The availability of visual languages, visual environments, and puppet modes for robot systems will only grow, and so will their sophistication. However, most visual programming environments have a couple of critical shortcomings.

How Is the Robot Scenario Represented in Visual Programming Environments?

Recall that half the effort of programming an effective autonomous robot lies in instructing the robot on how to execute its role within a scenario or situation. Typically, visual robot programming environments such as those listed in Table 1.3 have no easy way to represent the robot’s situation or scenario. They usually include only a simulation of the robot in a generic space, possibly with a simple object or two.

For example, there would be no easy way to represent our birthday party scenario in the average visual robot environment. The visual/graphical environments and puppet mode approach are effective at programming a sequence of movements that don’t have to interact with anything. But autonomous robots need to affect the environment to be useful and need to be instructed about the environment to be effective, and graphical environments fall short in this regard. The robot programming we do in this book requires a more effective approach.

Midamba’s Predicament

Robots have a language. Robots can be instructed to execute tasks autonomously. Robots can be instructed about situations and scenarios. Robots can play roles within situations and scenarios and effect change in their environments. This is the central theme of this book: instructing a robot to execute a task in the context of a specific situation, scenario, or event. And this brings us to the unfortunate dilemma of our poor stranded Sea-Dooer, Midamba.

Robot Scenario #1

When we last saw Midamba in our robot boot camp in the introduction, his electric-powered Sea-Doo had run low on battery power. Midamba had a spare battery, but the spare had acid corrosion on the terminals. He had just enough power to get to a nearby island where he might possibly find help.

Unfortunately for Midamba, the only thing on the island was an experimental chemical facility totally controlled by autonomous robots. But all was not lost; Midamba figured if there was a chemical in the facility that could neutralize the battery acid, he could clean his spare battery and be on his way.

A front office to the facility was occupied by a few robots. There were also some containers, beakers, and test tubes of chemicals, but he had no way to determine whether any of them could be used. The office was separated from a warehouse area where other chemicals were stored, and there was no apparent way into that area. On two monitors, Midamba could see robots moving about the warehouse, transporting containers, marking containers, lifting objects, and so on.

The front office also held a computer, a microphone, and a manual titled Robot Programming: A Guide to Controlling Autonomous Robots by Cameron Hughes and Tracey Hughes. With a little luck, he might find something in the manual that would show him how to program one of the robots to find and retrieve the chemical he needed. Figure 1.12 and Figure 1.13 show the beginning of Midamba’s Predicament.

We follow Midamba as an example as he steps through the manual. The first chapter he turns to is “Robot Vocabularies.”

What’s Ahead?

In Chapter 2, “Robot Vocabularies,” we will discuss the language of the robot and how human language will be translated into the language the robot can understand in order for it to perform the tasks you want it to perform autonomously.