2. Robot Vocabularies

Robot Sensitivity Training Lesson #2: A robot’s actions are only as good as the instructions given that describe those actions.

Robots have language. They speak the language of the microcontroller. Humans speak what are called natural languages (e.g., Cantonese, Yoruba, Spanish). We communicate with each other using natural languages, but to communicate with robots we have to either build robots that understand natural languages or find some way to express our intentions in a language the robot can process.

So far, little progress has been made in building robots that can fully understand natural languages. So we are left with the task of finding ways to express our instructions and intentions in something other than natural language.

Recall that the role of the interpreter and compiler (shown previously in Figure 1.10 and here again in Figure 2.1) is to translate from a higher-level language like Java or C++ to a lower-level language like assembly, byte-code, or machine language (binary).

Figure 2.1 The role of the interpreter and compiler: translation from a higher-level language to a lower-level language

Note

Important Terminology: The controller or microcontroller is the component of the robot that is programmable and supports the programming of the robot’s actions and behaviors. By definition, machines without at least one microcontroller are not robots.

One strategy is to meet the robot halfway: find a language that is easy for humans to use and not too hard to translate into the language of the robot (i.e., the microcontroller), and then use a compiler or interpreter to do the translation for us. Java and C++ are high-level languages used to program robots. They are third-generation languages and a big improvement over programming directly in machine language (first generation) or assembly language (second generation), but they are not natural languages. Expressing human ideas and intentions in them still requires additional effort.

Why the Additional Effort?

It usually takes several robot instructions to implement one high-level or human-language instruction. For example, consider this single, simple human-language instruction:

Robot, hold this can of oil

On the robot side, this involves several instructions. Figure 2.2 shows a partial BURT (Basic Universal Robot Translator) translation of this instruction into code that could be used to communicate it to a robot: Arduino sketch code (C language) and RS Media code (Java language). RS Media is a bipedal robot from WowWee that uses an ARM9 microcontroller with embedded Linux.

Figure 2.2 A partial BURT translation of a natural language instruction into RS Media code (Java language) and Arduino sketch code (C language).

Notice that the BURT translation requires multiple lines of code. In both cases, a compiler translates from a high-level language to the robot’s ARM assembly language. The Java code is further removed from ARM than the Arduino code: the Java compiler compiles Java into machine-independent byte-code, which requires another layer to translate it into machine-specific code. Figure 2.3 shows some of the layers involved in translating C and Java programs to ARM assembly.

Many of the microcontrollers used to control low-cost robots are ARM-based microcontrollers. If you are interested in using or understanding assembly language to program a low-cost robot, the ARM controller is a good place to start.

Note

Important Terminology: Assembly language is a more readable form of machine language that uses short symbols for instructions and represents binary using hexadecimal or octal notation.

Figure 2.3 shows the software layers required to go from C or Java to ARM assembly. But what about the work needed to translate “Robot, hold this can of oil” into the C or Java code? The BURT translation shows that we start with an English instruction and somehow translate it into C and Java.

How is this done? Why is this done? Notice that the instructions in the BURT translation do not mention can or oil or the concept of “hold this.” These concepts are not directly part of the microcontroller language, and they are not built-in commands in the high-level C or Java languages.

They are part of a robot vocabulary that we want to use and want the robot to understand, but that does not yet exist.

Note

A robot vocabulary is the language you use to assign a robot tasks for a specific situation or scenario. A primary function of programming useful autonomous robots is the creation of robot vocabularies.

However, before we can decide what our robot vocabulary will look like, we need to be familiar with the robot’s profile and its capabilities. Consider these questions:

What kind of robot is it?

What kinds of sensors does the robot have, and how many?

What kinds of end-effectors does the robot have, and how many?

How does it move, and so on?

The robot vocabulary has to be tied to the robot’s capabilities. For example, we cannot give a robot an instruction to lift something if the robot has no hardware designed for lifting. We cannot give a robot an instruction to move to a certain location if the robot is not mobile.
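The rule that every vocabulary word must be backed by hardware can be checked mechanically. Below is a minimal Java sketch, assuming a hypothetical `Capability` enum and a hand-written table listing the capabilities each word needs. Neither comes from the RS Media or Arduino libraries.

```java
import java.util.EnumSet;
import java.util.Map;
import java.util.Set;

// Hypothetical capability categories; a real robot's capability matrix defines its own.
enum Capability { MOBILITY, LIFTING, CAMERA }

class VocabularyChecker {
    // Hypothetical table: the hardware capabilities each vocabulary word depends on.
    private static final Map<String, Set<Capability>> REQUIRES = Map.of(
        "lift", EnumSet.of(Capability.LIFTING),
        "travel", EnumSet.of(Capability.MOBILITY),
        "photograph", EnumSet.of(Capability.CAMERA),
        "fetch", EnumSet.of(Capability.MOBILITY, Capability.LIFTING));

    // A word is usable only if it is known and the robot has every capability it needs.
    static boolean supports(Set<Capability> robot, String word) {
        Set<Capability> needed = REQUIRES.get(word);
        return needed != null && robot.containsAll(needed);
    }

    public static void main(String[] args) {
        Set<Capability> stationaryArm = EnumSet.of(Capability.LIFTING, Capability.CAMERA);
        System.out.println(supports(stationaryArm, "lift"));   // true
        System.out.println(supports(stationaryArm, "travel")); // false: the robot is not mobile
    }
}
```

Unknown words are rejected along with unsupported ones. A vocabulary can only grow by adding an entry that names the hardware it relies on.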

Note

Important Terminology: Sensors are the robot’s eyes and ears. They are components that allow a robot to receive input, signals, data, or information about its immediate environment. Sensors are the robot’s interface to the world.

The end-effector is the hardware that allows the robot to handle, manipulate, alter, or control objects in its environment. The end-effector is the hardware that causes a robot’s actions to have an effect on its environment.

We can approach the robot and its vocabulary in two ways:

Create a vocabulary and then obtain a robot that has the related capabilities.

Create a vocabulary based on the capabilities of the robot that will be used.

In either case, the robot’s capabilities must be identified. A robot capability matrix can be used for this purpose.

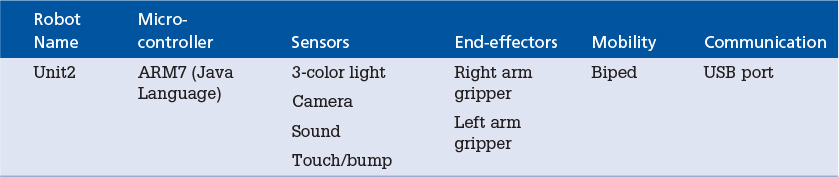

Table 2.1 shows a sample capability matrix for the robot called Unit2, the RS Media robot we will use for some of the examples in this book.

A capability matrix gives us an easy-to-use reference for the robot’s potential skill set. The initial robot vocabulary has to rely on the robot’s base set of capabilities.

You are free to design your own robot vocabularies based on your robot’s particular capabilities and the scenarios it will be used in. Notice in Table 2.1 that Unit2 has a camera sensor and color sensors, which allow it to see objects. This means our robot vocabulary could use words like watch, look, photograph, or scan.

Note

There is no one right robot vocabulary. Sure, there are some common actions that robots use, but the vocabulary chosen for a robot in any given situation is totally up to the designer or programmer.

Being a biped means that Unit2 is mobile, so the vocabulary could include words like move, travel, walk, or proceed. The fact that Unit2 has a USB port suggests our robot vocabulary could use words like open, connect, disconnect, send, and receive. The initial robot vocabulary forms the basis of the instructions you give the robot to make it perform the required tasks.
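One way to make this derivation explicit is a lookup from capabilities to candidate words. The Java sketch below uses exactly the words suggested in the two preceding paragraphs. The `Feature` enum and `VocabularyBuilder` class are hypothetical names, and a full capability matrix would contribute many more entries.

```java
import java.util.EnumSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical encoding of three Unit2 capabilities mentioned in the text.
enum Feature { CAMERA, BIPED, USB_PORT }

class VocabularyBuilder {
    // Candidate words suggested by each capability, as discussed above.
    private static final Map<Feature, List<String>> WORDS = Map.of(
        Feature.CAMERA, List.of("watch", "look", "photograph", "scan"),
        Feature.BIPED, List.of("move", "travel", "walk", "proceed"),
        Feature.USB_PORT, List.of("open", "connect", "disconnect", "send", "receive"));

    // Collects (sorted, without duplicates) the words for every capability the robot has.
    static Set<String> candidateWords(Set<Feature> robot) {
        Set<String> words = new TreeSet<>();
        for (Feature f : robot) {
            words.addAll(WORDS.getOrDefault(f, List.of()));
        }
        return words;
    }

    public static void main(String[] args) {
        System.out.println(candidateWords(EnumSet.of(Feature.CAMERA, Feature.BIPED)));
    }
}
```

The result is only a starting list. The designer still picks which words to keep, since there is no one right robot vocabulary.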

If we look again at the capability matrix in Table 2.1, a possible vocabulary that would allow us to give Unit2 instructions might include the following:

Travel forward

Scan for a blue can of oil

Lift the blue can of oil

Photograph the can of oil

One of the most important concepts you are introduced to in this book is how to come up with a robot vocabulary, how to implement a vocabulary using the capabilities your robot has, and how to determine the vocabularies the robot’s microcontroller can process. In the preceding example, Unit2’s ARM9 microcontroller would not recognize the following command:

Travel forward or scan for a blue can of oil

But that is the vocabulary we want to use with the robot. In this case, the tasks that we want the robot to perform involve traveling forward, looking for a blue can of oil, lifting it up, and then photographing it. We want to be able to communicate this set of tasks as simply as we can.

We have a situation or scenario in which we want an autonomous robot to play a role. We develop a list of instructions that describes the role we want the robot to play, and we want that list to represent the robot’s task faithfully. Creating a robot vocabulary is part of the overall process of successfully programming an autonomous robot to perform useful roles in particular situations or scenarios.

Identify the Actions

One of the first steps in coming up with a robot vocabulary is to create the capability matrix and then, based on the matrix, identify the kinds of actions the robot can perform. For example, in the case of Unit2 from Table 2.1, the actions might be listed as follows:

Scan

Lift

Travel

Stop

Connect

Disconnect

Put Down

Drop

Move Forward

Move Backward
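Once identified, the actions can be written down in Java as a closed set, for example as an enum. This sketch (a hypothetical `RobotAction` type) also shows a lookup from a word such as “Put Down” to the matching constant. Unknown words are rejected rather than guessed.

```java
import java.util.Locale;
import java.util.Optional;

// The actions listed above for Unit2, encoded as a hypothetical enum.
enum RobotAction {
    SCAN, LIFT, TRAVEL, STOP, CONNECT, DISCONNECT, PUT_DOWN, DROP, MOVE_FORWARD, MOVE_BACKWARD;

    // Maps a word such as "Put Down" to PUT_DOWN; an unknown word yields Optional.empty().
    static Optional<RobotAction> fromWord(String word) {
        String key = word.trim().toUpperCase(Locale.ROOT).replace(' ', '_');
        for (RobotAction action : values()) {
            if (action.name().equals(key)) {
                return Optional.of(action);
            }
        }
        return Optional.empty();
    }
}

class ActionDemo {
    public static void main(String[] args) {
        System.out.println(RobotAction.fromWord("Put Down")); // Optional[PUT_DOWN]
        System.out.println(RobotAction.fromWord("Juggle"));   // Optional.empty
    }
}
```

At this point the enum only names the actions. What each one means for the hardware still has to be defined.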

Eventually we have to tell the robot what we mean by scan, travel, connect, and so on. We described Unit2 as potentially being capable of scanning for a blue can of oil. Where does “blue can of oil” fit into our basic vocabulary?

Although Table 2.1 specifies that our robot has color sensors, there is nothing about cans of oil in the capability matrix. This brings us to another important point that we expand on throughout the book:

Half the robot’s vocabulary is based on the robot’s profile or capabilities.

The other half of the robot’s vocabulary is based on the scenario or situation where the robot is expected to operate.

These are important concepts essential to programming useful autonomous robots. The remainder of this book is spent elaborating on these two important ideas.
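To show how the two halves combine, here is a minimal Java sketch in which an instruction pairs a verb from the capability half with an object description from the scenario half. The type names (`Verb`, `ScenarioObject`, `Instruction`) are illustrative assumptions, not an established API.

```java
import java.util.Locale;

// Capability half: verbs the robot's hardware can carry out (hypothetical subset).
enum Verb { SCAN_FOR, LIFT, PHOTOGRAPH }

// Scenario half: properties the capability matrix knows nothing about.
record ScenarioObject(String color, String kind) {
    String describe() {
        return color + " " + kind;
    }
}

// An instruction joins one word from each half.
record Instruction(Verb verb, ScenarioObject target) {
    String describe() {
        String verbText = verb.name().toLowerCase(Locale.ROOT).replace('_', ' ');
        return verbText + " the " + target.describe();
    }
}

class InstructionDemo {
    public static void main(String[] args) {
        ScenarioObject can = new ScenarioObject("blue", "can of oil");
        System.out.println(new Instruction(Verb.SCAN_FOR, can).describe());
        // prints: scan for the blue can of oil
    }
}
```

Moving the robot to a new scenario changes only the `ScenarioObject` side. The verbs stay tied to the hardware.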

The Autonomous Robot’s ROLL Model

Programming autonomous robots is all about designing and implementing two kinds of description: how your robot is to use its basic capabilities, and what its role is in a given situation or scenario. To program your robot, you need to be able to describe:

What to do

When to do it

Where to do it

How to do it

Equally important is describing to your robot what “it” is and what “where” and “when” refer to. As we will see, this requires several levels of robot vocabulary.
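One way to keep the four questions in view is to make them required fields of an instruction. The `TaskSpec` record below is a hypothetical sketch. It uses the BR-1 birthday scenario from Chapter 1 with made-up steps, and plain strings stand in for the richer vocabulary that the higher levels supply.

```java
import java.util.List;

// Hypothetical record that forces an instruction to answer what, when, where, and how.
record TaskSpec(String what, String when, String where, List<String> how) {
    TaskSpec {
        // Reject an instruction that leaves any of the four questions unanswered.
        if (what.isBlank() || when.isBlank() || where.isBlank() || how.isEmpty()) {
            throw new IllegalArgumentException("what, when, where, and how are all required");
        }
        how = List.copyOf(how); // defensive, unmodifiable copy of the steps
    }
}

class TaskSpecDemo {
    public static void main(String[] args) {
        TaskSpec lightCandles = new TaskSpec(
            "light the candles",
            "after the cake is placed on the table",
            "at the birthday table",
            List.of("move to the table", "position the lighter", "ignite"));
        System.out.println(lightCandles);
    }
}
```

Each string here (“the candles,” “the table”) is exactly the kind of “it” or “where” that still needs its own definition.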

Note

In this book, we use the word ontology to refer to a description of a robot scenario or robot situation.

For our purposes, an autonomous robot has seven levels of robot vocabulary that we call the robot’s ROLL (Robot Ontology Language Level) model. Figure 2.4 shows the seven levels of the robot’s ROLL model.

For now, we focus only on the robot programming that can occur at any one of the seven levels. The seven levels can be roughly divided into two groups:

Robot capabilities

Robot roles
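For reference, the seven levels and their two groups can be written as a Java enum. The names for levels 3 through 7 follow this chapter’s descriptions. Reading levels 1 and 2 as machine language and assembly language is an assumption, based on the first- and second-generation languages mentioned earlier.

```java
// The seven ROLL levels. Levels 1 and 2 as machine/assembly language is an assumption.
enum RollLevel {
    MACHINE_LANGUAGE(1),
    ASSEMBLY_LANGUAGE(2),
    THIRD_GENERATION_LANGUAGE(3),
    BASE_VOCABULARY(4),
    SITUATION_VOCABULARY(5),
    TASK_VOCABULARY(6),
    SCENARIO_VOCABULARY(7);

    final int number;

    RollLevel(int number) {
        this.number = number;
    }

    // Levels 1-4 address the robot's capabilities; levels 5-7 address its roles.
    String group() {
        return number <= 4 ? "robot capabilities" : "robot roles";
    }
}

class RollDemo {
    public static void main(String[] args) {
        for (RollLevel level : RollLevel.values()) {
            System.out.println(level.number + " " + level + " -> " + level.group());
        }
    }
}
```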

Tip

Keep the ROLL model handy because we refer to it often.

Robot Capabilities

The robot vocabulary in levels 1 through 4 is basically directed at the capabilities of the robot—that is, what actions the robot can take and how those actions are implemented for the robot’s hardware. Figure 2.5 shows how the language levels are related.

The level 4 robot vocabulary is implemented by level 3 instructions: we use level 3 instructions to define what we mean by the level 4 robot base vocabulary. We expand on this later. As you recall, level 3 instructions (third-generation languages) are translated by interpreters or compilers into level 2 or level 1 microcontroller instructions.
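The relationship between levels 4 and 3 can be sketched with a Java interface. The interface names the base vocabulary, and a class written in ordinary Java defines what each word means for one robot. `BaseVocabulary`, `LoggingRobot`, and the logged step strings are hypothetical. A real implementation would call the robot’s own motor and sensor libraries instead of recording strings.

```java
import java.util.ArrayList;
import java.util.List;

// Level 4: the base vocabulary as an interface (hypothetical method names).
interface BaseVocabulary {
    void travel(int centimeters);
    void scan();
    void stop();
}

// Level 3: plain Java that gives each word its meaning for one robot.
// Steps are recorded as strings here instead of driving real hardware.
class LoggingRobot implements BaseVocabulary {
    final List<String> log = new ArrayList<>();

    @Override
    public void travel(int centimeters) {
        log.add("motors on");
        log.add("advance " + centimeters + " cm");
        log.add("motors off");
    }

    @Override
    public void scan() {
        log.add("camera capture");
    }

    @Override
    public void stop() {
        log.add("motors off");
    }
}

class BaseVocabularyDemo {
    public static void main(String[] args) {
        LoggingRobot robot = new LoggingRobot();
        robot.travel(50);
        robot.scan();
        System.out.println(robot.log);
    }
}
```

Code written against `BaseVocabulary` does not change when a different robot supplies its own implementation. Only the level 3 class does.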

Robot Roles in Scenarios and Situations

Levels 5 through 7 from the robot ROLL model shown in Figure 2.4 handle the robot vocabulary used to give the robot instructions about particular scenarios and situations. Autonomous robots execute tasks for particular scenarios or situations.

Let’s take, for example, BR-1, our birthday robot from Chapter 1, “What Is a Robot Anyway?”, Figure 1.9. We had a birthday party scenario where the robot’s task was to light the candles and remove the plates and cups after the party was over.

A situation is a snapshot of an event in the scenario. For instance, one situation for BR-1, our birthday party robot, is a cake on a table with unlit candles. Another situation is the robot moving toward the cake. Another situation is positioning the lighter over the candles, and so on. Any scenario can be divided into a collection of situations. When you put all the situations together you have a scenario. The robot is considered successful if it properly executes all the tasks in its role for a given scenario.
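The scenario/situation relationship and the success criterion can be sketched in a few lines of Java. This makes a simplifying assumption: each hypothetical `Situation` carries only a description and a flag saying whether the robot’s task in that snapshot was completed.

```java
import java.util.List;

// A snapshot of one event, plus whether the robot's task for it was carried out.
record Situation(String snapshot, boolean taskDone) {}

// A scenario is the ordered collection of its situations.
record Scenario(String name, List<Situation> situations) {
    // Success as defined above: every task in the robot's role was executed.
    boolean succeeded() {
        return situations.stream().allMatch(Situation::taskDone);
    }
}

class ScenarioDemo {
    public static void main(String[] args) {
        Scenario party = new Scenario("birthday party", List.of(
            new Situation("cake on a table with unlit candles", true),
            new Situation("robot moving toward the cake", true),
            new Situation("lighter positioned over the candles", false)));
        System.out.println(party.succeeded()); // false: one task was not completed
    }
}
```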

Level 5 Situation Vocabularies

Level 5 has a vocabulary that describes particular situations in a scenario. Let’s take a look at some of the situations from Robot Scenario #1 in Chapter 1, where Midamba finds himself stranded. There are robots in a research facility; there are chemicals in the research facility. Some chemicals are liquids; others are gases. Some robots are mobile and some are not. The research facility has a certain size, shelves, and containers. The robots are at their designated locations in the facility. Figure 2.6 shows some of the things that have to be defined using the level 5 vocabulary for Midamba’s predicament.

One of the situations in Midamba’s Scenario #1 requires a vocabulary that the robots can process and that handles the following:

Size of their area in the research facility

Robot’s current location

Locations of containers in the area

Sizes of containers in the area

Heights of shelves

Types of chemicals and so on
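A level 5 vocabulary for this situation needs types that can express these properties. The records below are a hypothetical Java sketch. The units (meters, liters) and the `containersWithin` query are assumptions added for illustration, not part of Scenario #1.

```java
import java.util.List;

// Hypothetical level 5 types for the research-facility situation.
record Point(double x, double y) {}
record Container(Point location, double volumeLiters, String chemical) {}
record Shelf(String id, double heightMeters) {}

record FacilitySituation(double areaWidthM, double areaDepthM, Point robotLocation,
                         List<Container> containers, List<Shelf> shelves) {

    // Example query: containers within a given straight-line distance of the robot.
    List<Container> containersWithin(double radiusM) {
        return containers.stream()
            .filter(c -> Math.hypot(c.location().x() - robotLocation.x(),
                                    c.location().y() - robotLocation.y()) <= radiusM)
            .toList();
    }
}

class FacilityDemo {
    public static void main(String[] args) {
        FacilitySituation s = new FacilitySituation(10, 8, new Point(0, 0),
            List.of(new Container(new Point(3, 4), 2.0, "oil"),
                    new Container(new Point(6, 8), 1.0, "acid")),
            List.of(new Shelf("A", 1.5)));
        System.out.println(s.containersWithin(5.0));
    }
}
```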

Note

Notice that a robot vocabulary that describes these properties differs from the kind of vocabulary necessary to describe the robot’s basic capabilities. Before each action a robot takes, we can describe the current situation. After each action the robot takes, we can describe the current situation. The actions a robot takes always change the situation in one or more ways.
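This before/after view can be modeled by treating each situation as an immutable snapshot and each action as a function that returns a new one. The `State` record here is a deliberately tiny, hypothetical situation that tracks only the robot’s position and the item it holds.

```java
// Hypothetical minimal situation: where the robot is and what it is holding.
record State(int position, String holding) {
    // Each action returns a new snapshot, so the "before" state is preserved.
    State travel(int distance) {
        return new State(position + distance, holding);
    }

    State lift(String item) {
        return new State(position, item);
    }
}

class StateDemo {
    public static void main(String[] args) {
        State before = new State(0, "nothing");
        State after = before.travel(3).lift("blue can of oil");
        System.out.println(before + " -> " + after);
    }
}
```

Keeping both snapshots makes it easy to describe, and check, exactly how an action changed the situation.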

Level 6 Task Vocabulary

A level 6 vocabulary is similar to a level 4 vocabulary in that it describes actions and capabilities of the robot. The difference is that level 6 vocabularies describe actions and capabilities that are situation specific.

For example, if Midamba had access to our Unit2 robot with the hardware listed in the capability matrix of Table 2.1, an appropriate task vocabulary would support instructions like these:

Action 1. Scan the shelves for a blue can of oil.

Action 2. Measure the grade of the oil.

Action 3. Retrieve the can of oil if it is grade A and contains at least 2 quarts.
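Action 3 contains a situation-specific condition that maps directly to code. The following is a minimal Java sketch, assuming a hypothetical `OilCan` record that holds the readings produced by Actions 1 and 2.

```java
import java.util.List;

// Hypothetical readings for one can, as Actions 1 and 2 might report them.
record OilCan(String color, char grade, double quarts) {}

class RetrievalRule {
    // Action 3's condition: retrieve only grade A cans holding at least 2 quarts.
    static boolean shouldRetrieve(OilCan can) {
        return can.grade() == 'A' && can.quarts() >= 2.0;
    }

    public static void main(String[] args) {
        List<OilCan> shelf = List.of(
            new OilCan("blue", 'A', 2.5),
            new OilCan("blue", 'B', 3.0),
            new OilCan("blue", 'A', 1.0));
        shelf.stream().filter(RetrievalRule::shouldRetrieve).forEach(System.out::println);
    }
}
```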

Note

Notice that all the actions involve vocabulary specific to a particular situation. So the level 6 task vocabulary can be described as a situation-specific level 4 vocabulary.

Level 7 Scenario Vocabulary

Our novice robot programmer Midamba has a scenario where one or more robots in the research area must identify some kind of chemical that can help him charge his primary battery or neutralize the acid on his spare battery. Once the chemical is identified, the robots must deliver it to him. The sequence of tasks in the situations makes up the scenario. The scenario vocabulary for Unit2 would support a scenario description like the following:

Unit2, from your current position scan the shelves until you find a chemical that can either charge my primary battery or clean the acid from my spare battery and then retrieve that chemical and return it to me.
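Reduced to its control flow, this scenario is a search that stops at the first suitable chemical. The Java sketch below assumes a hypothetical `Chemical` record whose two flags stand for what the robot’s scanning and analysis would report. Retrieving the chemical and returning it to Midamba are left as a comment.

```java
import java.util.List;
import java.util.Optional;

// Hypothetical summary of what scanning reports about one chemical.
record Chemical(String name, boolean canChargeBattery, boolean canNeutralizeAcid) {}

class BatteryRescue {
    // Scan shelf by shelf, in order, until a chemical that can help is found.
    static Optional<Chemical> scanShelves(List<List<Chemical>> shelves) {
        for (List<Chemical> shelf : shelves) {
            for (Chemical c : shelf) {
                if (c.canChargeBattery() || c.canNeutralizeAcid()) {
                    return Optional.of(c); // next: retrieve it and return it to Midamba
                }
            }
        }
        return Optional.empty(); // nothing suitable in this robot's area
    }

    public static void main(String[] args) {
        List<List<Chemical>> shelves = List.of(
            List.of(new Chemical("sample-1", false, false)),
            List.of(new Chemical("sample-2", false, true)));
        scanShelves(shelves).ifPresentOrElse(
            c -> System.out.println("retrieve " + c.name()),
            () -> System.out.println("nothing found"));
    }
}
```

A level 7 vocabulary aims to let the programmer state this whole loop as the single instruction quoted above.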

Ideally, the level 7 robot vocabulary would allow you to program the robot to accomplish this set of tasks in a straightforward manner. Utilitarian autonomous robots have to have some kind of level 7 vocabulary to be useful and effective. The relationship between robot vocabulary levels 5 through 7 is shown in Figure 2.7.

The vocabulary of levels 5 through 7 allows the programmer to describe the robot’s role in a given situation and scenario and how the robot is to accomplish that role.

What’s Ahead?

The first part of this book focuses on programming robot capabilities, such as sensor, movement, and end-effector programming. The second part of this book focuses on programming robots to execute roles in particular situations or scenarios. Keep in mind you can program a robot at any of these levels.

In some cases, some of the programming may already be done for you. But truly programming an autonomous robot to be successful in a scenario requires an appropriate ROLL model and implementation. Before describing Midamba’s robots and their scenarios in more detail, we describe how to RSVP your robot in Chapter 3, “RSVP: Robot Scenario Visual Planning.”