6.2 Wizard of Oz

A human ‘Wizard’ controls how your sketch responds to a person’s interactions

Most of the interactions seen so far can be described by fairly simple state transition diagrams. We’ve also shown how prospective ‘users’ can drive the animation of such systems through their own actions, giving the illusion that the system actually exists. Yet this only works when we know which user action will lead from one state to another. There are two situations where this next step cannot be anticipated easily.

1. Difficulty of understanding input. Unlike a simple button press or menu selection, your animated sketch may not be able to understand the user’s input action, e.g., gestures, speech, or the meaning of entered text.

2. Responding to input. The response of your animated sketch depends on the user’s action and thus cannot be anticipated, e.g., the actual text entered.

The problem is that your animated sketch has no real back-end to understand and to respond to complex input. The solution is to make a human – a Wizard – the back-end. We’ll illustrate how this works by examples that people have actually used to examine futuristic system ideas.

Example 1: The Listening Typewriter

In the early 1980s, senior executives did not normally use computers. The issue was that they saw typing as something that secretaries did. To solve this problem, John Gould and his colleagues at IBM wanted to develop a ‘listening typewriter’, where executives would dictate to the computer, using speech to compose and edit letters, memos, and documents. While such speech recognition systems are now commonly available, at that time Gould didn’t know if such systems would actually be useful or if they would be worth IBM’s high development cost. He decided to prototype a listening typewriter using Wizard of Oz. He also wanted to look at two conditions: a system that could understand isolated words spoken one at a time (i.e., with pauses between words), and a continuous speech recognition system. Our example will illustrate the isolated words condition.

The Wizard of Oz method is named after the well-known 1939 movie of the same name. The Wizard is an intimidating being who appears as a large disembodied face surrounded by smoke and flames and who speaks in a booming voice. However, Toto the dog exposes the Wizard as a fake when he pulls away a curtain to reveal a very ordinary man operating a console that controls the appearance and sound of the Wizard.

Around 1980, John Kelley adapted the ‘Wizard of Oz’ term to experimental design, where he acted as the ‘man behind the curtain’ to simulate a computer’s response to people’s natural language commands.

John Gould and his colleagues popularized the idea in 1983 through their listening typewriter study, as detailed in Example 1.

What the User Saw

The sketch below shows what the user – an executive – would see. The user would speak individual words into the microphone, and the spoken words (if known by the computer) would appear as text on the screen. If the computer did not know the word, a series of XXXXs would appear. The user could also correct errors with special commands. For example, if the user said ‘NUTS’ the computer would erase the last word, while ‘NUTS 5’ would erase the last 5 words. Other special words let the user tell the computer to spell out unknown words (via ‘SPELLMODE’ and ‘ENDSPELLMODE’) and add formatting (e.g., ‘CAPIT’ to capitalize the first letter of a word, and ‘NEWPARAGRAPH’ for a new paragraph).

What Was Actually Happening

Computers at that time could not do this kind of speech recognition reliably, so Gould and colleagues simulated it. They combined a human Wizard, who interpreted what the user said, with a computer that applied simple rules to the words the Wizard typed into it. The sketch below shows how this was done. The Wizard typist, located in a different room, listened to each word the user said, and then typed that word into the computer. The computer would then check each typed word to see if it was in its limited dictionary. If it was, the computer would display it on the user’s screen. If it wasn’t, the computer would display XXXXs. For special command words, the typist would enter an abbreviation of the command, which the computer would interpret as a command to trigger the desired effect on the user’s screen.
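The computer’s side of this arrangement can be sketched in a few lines of code. This is only an illustration under stated assumptions, not the software Gould’s team used: the vocabulary, the abbreviations `/n` and `/c`, and the class name `ListeningTypewriter` are all hypothetical, and only the erase and capitalize commands are modelled.

```python
# Minimal sketch of the computer-side rules in a listening-typewriter
# simulation. Vocabulary and abbreviations are hypothetical.

VOCABULARY = {"dear", "sir", "thank", "you", "for", "your", "letter"}

ERASE = "/n"       # assumed abbreviation for the spoken command 'NUTS'
CAPITALIZE = "/c"  # assumed abbreviation for the spoken command 'CAPIT'


class ListeningTypewriter:
    def __init__(self, vocabulary):
        self.vocabulary = vocabulary
        self.words = []               # the words shown on the user's screen
        self.capitalize_next = False

    def enter(self, typed):
        """Handle one entry typed by the Wizard, followed by <enter>."""
        parts = typed.strip().split()
        if not parts:
            return
        head = parts[0]
        if head == ERASE:
            # '/n' erases one word; '/n 5' erases the last five words.
            has_count = len(parts) > 1 and parts[1].isdigit()
            count = int(parts[1]) if has_count else 1
            if count > 0:
                del self.words[-count:]
        elif head == CAPITALIZE:
            self.capitalize_next = True
        else:
            word = head.lower()
            if word in self.vocabulary:
                shown = word.capitalize() if self.capitalize_next else word
            else:
                shown = "XXXX"        # unknown word: show a placeholder
            self.capitalize_next = False
            self.words.append(shown)

    def screen(self):
        return " ".join(self.words)


typewriter = ListeningTypewriter(VOCABULARY)
for entry in ["/c", "dear", "sir", "zebra", "/n", "thank", "you"]:
    typewriter.enter(entry)
print(typewriter.screen())  # prints: Dear sir thank you
```

Note that the program never hears the user. All the ‘recognition’ happens in the Wizard’s head, while the program only enforces the limits of the imagined system.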

Keeping It Real

The chief danger in using a Wizard of Oz is that the Wizard can have powers of comprehension that no system could have. For example, a human Wizard can understand complex speech or gestural input that cannot be implemented reliably. The solution – as done in the listening typewriter – is to limit the Wizard’s intelligence to things that can be implemented realistically (see the 1993 paper by Maulsby, Greenberg, and Mander for more details and another example of how this can be done).

1. The Wizard’s understanding of user input is based on a constrained input interaction model that explicitly lists the kinds of instructions that a system – if implemented – can understand and the feedback it can formulate. For example, even though the typist could understand and type continuous speech, the typist was told to listen to just a single spoken word, type in that spoken word, and then hit <enter>. The typist was also told to recognize and translate certain words as commands, which were then entered as an abbreviation. The computer further constrained input by recognizing only those words and commands in its limited vocabulary.

2. The Wizard’s response should be based on an ‘algorithm’ or ‘rules’ that limits the actions it takes to those that can be realistically implemented at a later time. For example, if the user said “type 10 exclamation marks”, the Wizard’s algorithm would be to just type in that phrase exactly, rather than ‘!!!!!!!!!!’. Similarly, the computer substituted XXXXs for words it could not understand, and could respond to only a small set of editing commands.
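One way to make these two constraints concrete is to write the Wizard’s rules down as if they were a program. The sketch below is an assumption-laden illustration: the abbreviation table and the function name `wizard_entries` are hypothetical, and the original study gave these rules to a human typist as instructions rather than as code.

```python
# Hypothetical interaction model for an isolated-word dictation Wizard.
# Spoken command words map to the abbreviation the Wizard types.
COMMAND_ABBREVIATIONS = {
    "NUTS": "/n",
    "CAPIT": "/c",
    "NEWPARAGRAPH": "/p",
}


def wizard_entries(utterance):
    """Return the entries a constrained Wizard would type, one per word.

    Words are typed literally. The Wizard never interprets the meaning
    of a phrase, even though a human easily could.
    """
    entries = []
    for word in utterance.split():
        key = word.upper()
        if key in COMMAND_ABBREVIATIONS:
            entries.append(COMMAND_ABBREVIATIONS[key])
        elif (word.isdigit() and entries
              and entries[-1] == COMMAND_ABBREVIATIONS["NUTS"]):
            # A count spoken right after 'NUTS' belongs to that command.
            entries[-1] += " " + word
        else:
            entries.append(word.lower())
    return entries


print(wizard_entries("type 10 exclamation marks"))
# prints: ['type', '10', 'exclamation', 'marks']
```

Writing the rules in this form is a useful check even if a person carries them out: anything the rules cannot express is something the Wizard should not do.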

Example 2: Robotic Interruption

In their 2011 paper, Paul Saulnier and his colleagues wanted to see how a person would respond to a robot that interrupted him, where the robot’s behavior depended on the urgency of the situation. They were interested in how simple robot actions are interpreted by a person. This includes: how the robot moves toward and looks at a person seated in an office, whether the robot does this from outside or at the doorway, how close the robot approaches, and its speed of motion. Yet to build such a robot would be difficult, as the robot would have to know how to locate the person and move toward him, all while doing appropriate physical behaviors. Instead, they simulated the robot’s behavior by Wizard of Oz.

What the Person Saw

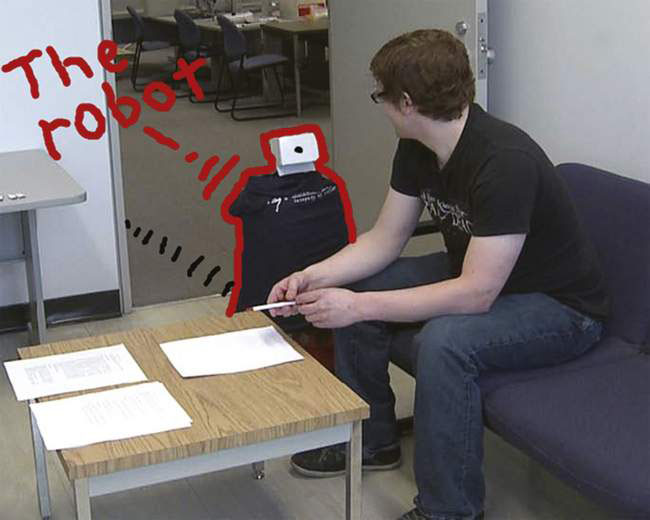

The person, who was seated in a room near a doorway, would see the robot move outside the doorway. In one condition, the person would see the robot slowly come to the doorway, glance in at him for several seconds, and then leave. In another condition, the person would see the robot enter the room doorway, gaze directly at him (as seen in the figure), and even rotate its head back and forth to try to give the person a sense of urgency.

What Was Actually Happening

As with the Listening Typewriter, a Wizard, sitting out of sight just outside the doorway, was actually remotely controlling the robot. Unlike the Listening Typewriter, the person knew that the Wizard was there. The Wizard controlled the robot with a gamepad controller: its directional controls set the robot’s direction and speed, and its buttons triggered short pre-programmed sequences of robot behavior (e.g., head shaking).
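A control mapping of this kind could look roughly like the sketch below. Everything specific in it is an assumption made for illustration: the button names, the behavior names, the speed limit, and the dead zone are hypothetical and are not taken from Saulnier and colleagues’ setup.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical button-to-behavior table; names are illustrative only.
BEHAVIORS = {"A": "shake_head", "B": "glance_at_person"}
MAX_SPEED = 0.5  # assumed top speed, in metres per second
DEAD_ZONE = 0.1  # ignore tiny accidental stick movements


@dataclass
class RobotCommand:
    speed: float             # forward (+) or backward (-) speed
    turn: float              # -1.0 (full left) to 1.0 (full right)
    behavior: Optional[str]  # pre-programmed sequence to play, if any


def interpret(stick_x, stick_y, pressed):
    """Translate one reading of the Wizard's gamepad into a robot command."""
    def clean(value):
        value = max(-1.0, min(1.0, value))
        return 0.0 if abs(value) < DEAD_ZONE else value

    # Use the first pressed button that has a behavior attached to it.
    behavior = next((BEHAVIORS[b] for b in pressed if b in BEHAVIORS), None)
    return RobotCommand(
        speed=clean(stick_y) * MAX_SPEED,
        turn=clean(stick_x),
        behavior=behavior,
    )


print(interpret(0.05, 1.0, []))
print(interpret(0.0, 0.0, ["A"]))
```

Pre-programmed sequences make the robot behave the same way for every participant, which is harder to guarantee when a Wizard steers every movement by hand.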

Example 3: The Fax Machine

As a teaching exercise, I (author Greenberg) used Wizard of Oz to illustrate how even a very simple sketch could be used to test an interface. In the previous chapter, I described how I created a sketch of a fax machine, projected that sketch onto a wall, and then invited a student to explain it so I could determine his initial mental model. We continue from there. I told the student that the feed tray was at the bottom of the fax, and then handed the student a sheet of paper and said, “Send this document to our main office, whose fax number is 666-9548. As you do so, tell me what you are doing and thinking.” This method, called think aloud, will be discussed in detail in Chapter 6.3.

Typically, a student would start by touching the numbers on the dial pad on the projected screen and say something like what is shown in the first image: “I enter the number 666-9548 on this keypad…” as he pressed each key. The problem is that the “fax machine” was just a single sketched image, so it could not respond to his actions. To fix this problem, I became the Wizard and told the student how the fax machine would respond, for example “666-9548 appears on the little display.”

I even simulated noises, such as the modem sound that occurs when the person presses the “send” button at the bottom, as shown in the second image.

This example differs considerably from the previous ones in that the system “responses” are not actually done on the sketch, but occur in the imagination of the students as they listen to the Wizard’s description. Yet in spite of this, students bought into this easily. As students tried to do more complex tasks (such as “store your number so you can redial it later”), they were clearly exploring the interface of the fax machine and its responses (as told to them by the Wizard) to try to learn how to complete their task. They became immersed in the “system” as sketch.

This example also shows why it is important for the Wizard to understand the algorithm – in this case the responses of the fax machine – to a user’s input. The Wizard needed to know what each button press would do. In difficult tasks, such as the number storing one, the Wizard had to know the ‘correct’ sequence of events to store a number. The Wizard also had to know what would happen if the user got things wrong, where the user would press other incorrect buttons and button sequences during an attempt to solve the task.
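The Wizard’s ‘algorithm’ for a sketch like this can be prepared in advance as a small table of states and responses. The following is a minimal sketch with hypothetical state names, button names, and wording. It covers only dialing and sending, and it shows that incorrect presses need a defined response too.

```python
# Hypothetical response rules a Wizard might prepare for a sketched fax
# machine. States, button names, and wording are illustrative only.
DIGITS = set("0123456789")


def respond(state, display, press):
    """Return (next_state, display, wizard_says) for one button press."""
    if state in ("idle", "dialing") and press in DIGITS:
        display += press
        return "dialing", display, f"{display} appears on the little display."
    if state == "dialing" and press == "send":
        return "sending", display, "You hear the modem dialing."
    if press == "cancel":
        return "idle", "", "The display clears."
    # Any other press: the Wizard still needs a defined answer.
    return state, display, "The machine beeps and nothing changes."


state, display = "idle", ""
for press in list("6669548") + ["send"]:
    state, display, says = respond(state, display, press)
    print(f"{press}: {says}")
```

Whether the rules live in code or on a sheet of paper, working through them before the session reveals the button sequences for which the Wizard has no answer yet.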

Does the Wizard have to be the “man behind the curtain”?

The three examples differ considerably in the relationship between the Wizard and the participant. The participant in the Listening Typewriter was ‘deceived’ into thinking the system was real, as he was completely unaware of the Wizard. In Robotic Interruption, the participant was introduced to the Wizard and told that the Wizard was operating the robot, but the Wizard was kept out of view during the actual simulation. In the Fax Machine, the participant was very aware of the Wizard, as the Wizard was not only next to him but was also telling him what the response would be as part of their conversation. No system feedback was provided by the sketch itself.

Yet in all cases, people usually buy into the simulation. We suspect that in routine Wizard of Oz simulations, the degree to which you have to hide the presence of the Wizard won’t be critical. Of course, there will always be exceptions to this rule that depend on the situation.

Gould J.D., Conti J., Hovanyecz T. Composing Letters with a Simulated Listening Typewriter. Communications of the ACM. 1983;26(4):295–308.

Kelley J.F. An Iterative Design Methodology for User-Friendly Natural Language Office Information Applications. ACM Transactions on Office Information Systems. 1984;2(1):26–41.

Maulsby D., Greenberg S., Mander R. Prototyping an Intelligent Agent through Wizard of Oz. Proceedings of the ACM CHI’93 Conference on Human Factors in Computing Systems. Amsterdam, The Netherlands: ACM Press; 1993:277–284.

Saulnier P., Sharlin E., Greenberg S. Exploring Minimal Nonverbal Interruption in HRI. Proceedings of the IEEE International Symposium on Robot and Human Interactive Communication (Ro-Man 2011). Atlanta, Georgia: IEEE Press; 2011.

You Now Know

You can act as the ‘back-end’ of your system, where you act as a Wizard that interprets people’s input actions and where you trigger (or perform) the expected system response. To keep this real, you need to constrain your interpretations and responses to those that your sketched system could actually perform.