6 Information per bit

6.1 Information as the reciprocal of uncertainty

Remember the complaint about traditional interval arithmetic wasting bits: If you use two fixed-size floats to represent bounds, then either you have a tight bound (which means most of the bits in the two numbers are the same and therefore redundant), or the bound is loose (which means both floats have more precision than is justified). Unums and ubounds are not only more bit-efficient than traditional intervals, they also provide a way to manage the ratio of information to storage, either automatically or at the request of the programmer.

Information, or answer quality, is inversely proportional to the width of a bound. If you have no bound at all on an answer, then your information is zero because the bound on the answer is [– ∞, ∞].

We use computers to give us information about something, whether it is the chance of rain three days from now or the estimated value of an investment portfolio or where to find good coffee nearby.

The idea that information is measurable with a real number seems peculiar to computer people who only work with integer (or fixed point) problems, where you either get the answer or you don’t. Too many computer science degrees have been earned by solving homework problems drawn only from the integer world, like “find all the ways to arrange eight chess queens on a chessboard such that none of them can attack each other.”

Programs involving real numbers need to track and maximize information about the answer. They also need to optimize the ratio of information to compute time; that often means optimizing the ratio of information to the number of bits, since moving bits around takes most of the time. Readers familiar with Claude Shannon’s definition of “information” may notice a concept similarity.

We need a couple more tools in our arsenal for allowing speed-accuracy trade-offs to be done, either by the computer automatically, or as a user choice.

6.2 “Unifying” a ubound to a single unum

Suppose we are using the {4, 6} environment (capable of IEEE double precision) and the result of a unum calculation is the interval (12.03, 13.98), expressed as a ubound. Because the ubound is a pair of unums, it requires 122 bits of storage. That is more compact than traditional interval arithmetic, but it is still an awful lot of bits just to say a value is “between twelve and fourteen, roughly.” The answer information is the reciprocal of the width of the bound, approximately

Instead, we could opt to use a ubound with only one unum to represent the interval (12, 14), and accept the slight loss of information about the answer. The ubound that represents (12, 14) takes up only 17 bits. From 122 bits to 17 bits with almost the same information about the answer means an approximately sevenfold increase in information per bit.

For intermediate calculations, it might not be a good idea to loosen the bound. However, when it comes time to declare something a final answer, and place it back into main memory or present it to the computer user, optimizing the ratio of information to bits might make sense. Unlike rounding of floats, the act of loosening a ubound should always be an explicit, optional command.

In an ideal unum computing environment, the unification function would be mostly or entirely baked into the hardware to make it fast. The prototype spells out the logic that would have to be turned into an equivalent circuit.

6.3 Unification in the prototype

6.3.1 The option of lossy compression

In the prototype, the unify [ub] function finds the smallest single unum that contains the general interval represented by a ubound ub, if such a unum exists. If no such unum exists, ub is returned without change. The result must be either exact, or one ULP wide. If inexact, the approach is to find the smallest ULP that describes the separation of the two bounds, express each bound as a multiple of that ULP value, and while they do not differ by exactly one ULP, double the size of the ULP. Eventually we find a one-ULP separation and can represent the range with a single inexact unum. We cannot unify any ranges that span 0, ±1, or ±2, or ±3 since the coarsest unums do not include those points. There are other special cases to deal with. The code for unify is in Appendix C.6.

When we construct unum arithmetic operations, we will typically compute the left bound and the right bound separately. The unify routine can be the final pass that notices if a lossless compression of the answer to a single unum is possible. For example, in computing the square root of the open interval (1, 4), the algorithm finds the square root of the left and right endpoints, marks both as open with the ubit, and initially stores it as a ubound pair {. However, that pair represents the same general interval as ![]() + ubitmask,

+ ubitmask, ![]() - ubitmask}

- ubitmask}{, because the ULP width is 1. Lossless compression requires no permission; unum arithmetic should do it automatically, whenever possible.![]() + ubitmask}

+ ubitmask}

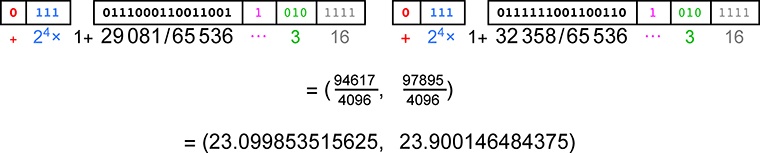

As an example from the prototype, we can unify a ubound that encloses the interval (23.1, 23.9). In the {3, 4 } environment, it takes 57 bits to express the two-part ubound:

The function nbits [u] counts the number of bits in a ubound u. It adds the number of bits in the one or two unums that make up the ubound, and adds 1 for the bit that says whether the ubound is a single unum or a pair of unums.

Now if we compute unify [{23.1, 23.9}], the result is a one-part ubound, with far fewer bits:

That looks like a 16-bit number, but again, we also count the bit that indicates whether the ubound contains one or two unums:

Knowledge about the answer dropped because the bound is now wider. The bound expanded by the ratio , so the information decreased by the reciprocal of that: , a twenty percent drop. However, the bits needed to express the bound improved by . So information per bit improves by a factor of 0.8 × 3.35 ⋯ = 2.68 ⋯.

While the unum philosophy is to automate as much error control as possible, there is always the option of “switching to manual,” since there may be a decision about the trade-off between accuracy and cost (storage, energy, time) that only a human can make, and it varies according to the application. There is a straightforward way to give the computer a guideline instead of the user having to decide each case.

6.3.2 Intelligent unification

A unum environment can have a policy regarding when to unify to replace precise bounds with looser ones in the interest of saving storage and bandwidth. For example, a policy might be that if unification improves information-per-bit by more than r (set either automatically or by the programmer), then it is worth doing. This is the kind of intelligence a human uses when doing calculations by hand. The routine that does intelligent unification in the prototype is smartunify [u,r ], where u is a ubound, and r is a required improvement ratio in information-per-bit.

Using a ratio of 1.0 says we have to get the same information-per-bit or better. An r ratio of 0.25 means we will accept a unify result even if it makes the information-perbit as much as four times less than before, a valid goal if data compression is far more important than accuracy. A ratio of 1.5 would only unify if a 1.5 times improvement in the information-per-bit is possible. Many other more sophisticated policies are possible.

As an example of conditional unification, try smartunify on the interval used in the previous example, with a requested improvement factor of at least 3:

It did not change the ubound, since it could not achieve the requested improvement factor. Try again, but this time ask for an improvement factor of at least 2.5:

The prototype code for smartunify is shown in Appendix C.6, and the reader is encouraged to think about other policies that could automate the management of error and maximize information-per-bit. For example, information-per-bit can be improved while still keeping two separate unum endpoints, but using endpoints with fewer fraction bits. In the {3, 5} environment, a ubound representing (23.1, 23.9) demands the full 32-bit fraction size for each endpoint, yet the bounds are so far apart that it might make sense to relax the bound to, say, (23.0625, 23.9375) where the fractions only take 8 bits to express. Doing so reduces the storage from 91 to 43 bits, an improvement of about 2.1 times, while the bound only gets looser by about nine percent, so information-per-bit goes up by a factor of about 1.9.

One can imagine making the improvement ratio r part of the environment settings, just like esizesize and fsizesize. Just as in compressing music or video, sometimes you want the result to take less storage and sometimes you want higher fidelity. The programmer still has to exercise some initial judgment, but the computer does the hard part of the work managing the calculation after it is given that guidance.

Incidentally, asking for aggressive unification after every operation reduces a unum environment to something very much like significance arithmetic, with its much more limited ability to express the inexactness of left and right endpoints separately. Doing so might be useful in limited situations where input numbers are involved in only short sequences of calculations before output, but in general it is better to wait until the very end of a calculation to look for opportunities to increase information-per-bit.

6.3.3 Much ado about almost nothing and almost infinite

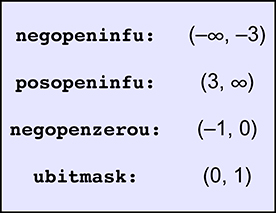

While there is only one way to express exact values – ∞ or + ∞, in any environment, there are generally many unums expressing open intervals that have – ∞ as the lower bound or + ∞ as the upper bound. For economy, we use the most compact unums that represent open intervals starting at – ∞ or ending in + ∞. In the prototype, they are negopeninfu and posopeninfu. Doing a better job than floats at handling “overflow” does not need to cost many bits.

There is a corresponding compact way to express the “almost zero” values, both positive and negative. If the upper bound is zero and open, then the smallest unum with that upper bound is (–1, 0), so we will keep negopenzerou handy as well. These unum values are among those that are changed automatically whenever setenv is called. The positive equivalent of negopenzero is a number we have already seen: ubitmask. We show it again in the table below for the sake of completeness.

These values only require three bits to the left of the utag. One exception: If the environment is {0, 0 }, the range of negopeninfu is (-∞, -2), and posopeninfu represents (2, ∞).

Exercise for the reader: What three bits left of the utag generate the intervals in the above table?

Suppose we need to express the range of values (-0.3, ∞), in a medium-precision {3, 4} environment. The expensive way to express the open infinity on the right is to use the unum for maxreal but with ubitmask added, which represents

![]()

That unum consumes 33 bits in addition to the 8 bits in the utag, and we disregard the huge number on the left end anyway when using this as the right-hand endpoint of a ubound. If we instead use the unum for posopeninfu, it only requires three bits in addition to the utag.

So the compact way to express (-0.3, ∞) as a ubound is with {-0.3, posopeninfu}:

That entire ubound takes only 39 bits to express.

6.4 Can ubounds save storage compared with floats?

Earlier we argued that unums can often save storage compared with floats. With ubounds, however, there is a good chance of needing two unums instead of one float. Is there still hope that, on average, ubound math will take fewer bits than fixed-size floats, even without doing frequent unification operations? First, let’s take a closer look at the storage requirements of unums versus floats.

You might suspect that most numbers are inexact in actual applications, so that with unums you will almost always be using the maximum number of bits as well as the utag, and thus winding up using more storage than floats. However, this usually is not the case, for five reasons.

Reason 1: Exponent waste. The number of exponent bits in a float is almost always overkill in practical computations, so a unum will frequently use far fewer exponent bits. Even single-precision IEEE floats span 85 orders of magnitude; have you ever seen a program that legitimately needs that much dynamic range? If a single-precision or double-precision calculation stays mostly within the range of about –500 to 500, you will usually only need four bits of exponent. Staying in that range saves four bits versus single precision, seven bits versus double precision, and eleven bits versus quad precision.

Reason 2: No trailing zeros for exact values. If the fraction bits are random and the number is exact, then there is a 50% chance the last bit is 0 and can be compacted away, a 25% chance that the last two bits are 0 and can be compacted away, and so on. The average compression from this effect alone is bits.

Just from these first two reasons, we get back most of the bits spent appending a utag to every bit string.

Reason 3: Shorter strings for common numbers. Many real-life calculations involve floating point versions of small integers or simple fractions. Browse through any physics or math textbook and you will see numbers like –1, 3, , 4, etc., in the formulas. Like the “ ” used when you compute kinetic energy as m v2, for example.

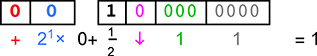

With conventional floating point, adding the real number 1 in double precision requires the 64-bit float representing 1.0, which has a lot of wasteful bits:

![]()

Whereas for unums, storing the value 1.0 always takes only three bits in addition to the size of the tag: 0, 0, and 1. In a {4, 6 } environment capable of IEEE double precision, the unum for 1.0 only takes 15 bits to express:

Unums are very concise when expressing small integers times a power of two, and such numbers arise more often than you might think in calculations.

Reason 4: Left-digit destruction saves space. Adding or subtracting numbers often destroys significant bits on the left or right side of the fraction field, and unum format is smart enough to exploit that loss by compressing away the lost bits. The savings from this effect depends on the calculation, which is why we need plenty of experiments on real problems to get a feel for the amount of savings that is possible.

Reason 5: No over-insurance. Computing with guaranteed bounds frees the user to ask only for a few decimals, instead of having to over-insure the calculation with far more decimals. Even half-precision floats start to seem excessive for many important applications, like the analysis of seismic data for oil exploration or the imaging of a medical scan.

In many cases, even a pair of unums needs fewer total bits to store than a single floating point number, so ubounds still wind up being a very economical way to express rigorous arithmetic. The comparison is not really fair, since floats usually produce an erroneous answer and unums produce a correct one, but it is intriguing that in many cases, it is not a trade-off; we can get better answers with fewer bits. And don’t forget that when a result is finally ready to send to off-chip memory, it can usually be unified to a single unum. The need for two-unum ubounds mainly occurs in the on-chip calculations.

Some believe the solution to rounding error is to push for quad precision in hardware. That goes exactly the wrong direction, since it doubles the energy, power, bandwidth, and storage consumed by every operation, and it still provides no measure of information loss at the end of a calculation. What is interesting is to go the other direction, and ask:

How tiny can a unum string be and still be useful for computing with real numbers?