The first time we saw a description of Test-Driven Development (TDD), our immediate thought was: "That's just backwards!" Wanting to give the process the benefit of the doubt, Matt went ahead and put TDD into practice on his own projects, attended seminars, kept up with the latest test-driven trends, and so forth. But the nagging feeling was still there that it just didn't feel right to drive the design from the tests. There was also the feeling that TDD is highly labor-intensive, luring developers into an expensive and ultimately futile chase for the holy grail of 100% test coverage. Not all code is created equal, and some code benefits more from test coverage than other code.[1] There just had to be a better way to benefit from automated testing.

Design-Driven Testing (DDT) was the result: a fusion of up-front analysis and design with an agile, test-driven mindset. In many ways it's a reversal of the thinking behind TDD, which is why we had some fun with the name. But still, somebody obviously has it backwards. We invite you to walk through the next few chapters and decide for yourself who's forwards and who's backwards.

In this chapter we provide a high-level overview of DDT, and we compare the key differences between DDT and TDD. As DDT also covers analysis and acceptance testing, sometimes we compare DDT with practices that are typically layered on top of TDD (courtesy of XP or newer variants, such as Acceptance TDD).

Then, in Chapters 2 and 3, we walk through a "Hello world!" example, first using TDD and then using DDT.

The rest of the book puts DDT into action with a full-on example based on a real project: a hotel finder application built on a heterogeneous architecture, with a Flex front-end and a Java back-end, that serves up maps from ArcGIS Server and queries an XML-based hotel database. It's a non-trivial project with a mixture of technologies and languages (increasingly common these days), and it presents many challenges that put DDT through its paces.

In some ways DDT is an answer to the problems that become apparent with other testing methodologies, such as TDD; but it's also very much an answer to the much bigger problems that occur when:

- no testing is done (or no automated tests are written)
- some testing is done (or some tests are written), but it's all a bit aimless and ad hoc
- too much testing is done (or too many low-leverage tests are written)

That last one might seem a bit strange: surely there can be no such thing as too much testing? But if the testing is counter-productive, or repetitive, then the extra time spent on testing could be time wasted (law of diminishing returns and all that). There are only so many ways you can press a doorbell, and how many of those will be done in real life? Is it really necessary to prove that the doorbell still works when submerged in 1000 ft. of water? The idea behind DDT is that the tests you write and perform are closely tied to the customer's requirements, so you spend time testing only what needs to be tested.

Let's look at some of the problems that you should be able to address using DDT.

When writing tests, it's sometimes unclear when you're "done"... you could go on writing tests of all kinds, forever, until your codebase is 100% covered with tests. But why stop there? There are still unexplored permutations to be tested, additional tests to write... and so on, until the customer gives up waiting for something to be delivered, and pulls the plug. With DDT, your tests are driven directly from your use cases and conceptual design; it's all quite systematic, so you'll know precisely when you're done.

Note

At a client Doug visited recently, the project team decided they were done with acceptance testing when their timebox indicated they had better start coding. We suspect this is fairly common.

Code coverage has become synonymous with "good work." Code metrics tools such as Clover provide reports that over-eager managers can print out, roll up, and bash developers over the head with if the developers are not writing enough unit tests. But if 100% code coverage is practically unattainable, you could be forgiven for asking: why bother at all? Why set out knowing in advance that the goal can never be achieved? By contrast, we wanted DDT to provide a clear, achievable goal. "Completeness" in DDT isn't about blanket code coverage; it's about ensuring that the key decision points in the code (the logical software functions) are adequately tested.
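To illustrate the difference, here is a minimal Java sketch. `Hotel`, `HotelFilter`, and `HotelFilterTest` are hypothetical names invented for this example, not code from Mapplet 2.0. The getters on `Hotel` are boilerplate of the kind footnote 1 mentions and get no tests of their own; the effort goes into `matches()`, whose two decisions (a price ceiling and a required amenity) are each checked for every outcome. Plain Java checks stand in for a unit testing framework so that the sketch runs without dependencies.

```java
import java.util.Set;

// Hypothetical domain class: its getters are boilerplate, not worth a dedicated test.
final class Hotel {
    private final String name;
    private final int nightlyRate;          // whole dollars
    private final Set<String> amenities;

    Hotel(String name, int nightlyRate, Set<String> amenities) {
        this.name = name;
        this.nightlyRate = nightlyRate;
        this.amenities = amenities;
    }

    String getName() { return name; }
    int getNightlyRate() { return nightlyRate; }
    Set<String> getAmenities() { return amenities; }
}

// Hypothetical filter: this is where the decision points live.
final class HotelFilter {
    private final int maxRate;
    private final String requiredAmenity;   // null means "no amenity required"

    HotelFilter(int maxRate, String requiredAmenity) {
        this.maxRate = maxRate;
        this.requiredAmenity = requiredAmenity;
    }

    boolean matches(Hotel hotel) {
        if (hotel.getNightlyRate() > maxRate) {             // decision 1: price ceiling
            return false;
        }
        return requiredAmenity == null                      // decision 2: amenity
                || hotel.getAmenities().contains(requiredAmenity);
    }
}

// One check per outcome of each decision; the getters get no tests of their own.
final class HotelFilterTest {
    static void check(boolean condition, String message) {
        if (!condition) throw new AssertionError(message);
    }

    static void runAll() {
        Hotel seaside = new Hotel("Seaside", 120, Set.of("pool"));
        check(new HotelFilter(150, "pool").matches(seaside), "within budget, has amenity");
        check(!new HotelFilter(100, "pool").matches(seaside), "over budget is rejected");
        check(!new HotelFilter(150, "spa").matches(seaside), "missing amenity is rejected");
        check(new HotelFilter(150, null).matches(seaside), "no amenity required");
    }

    public static void main(String[] args) {
        runAll();
        System.out.println("HotelFilterTest passed");
    }
}
```

Four small checks cover every branch that matters here; chasing 100% coverage would add tests for the getters without telling you anything new.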

You still see software processes that treat "testing" as a self-contained phase, placed after the requirements, design, and coding phases. It's well established that leaving bug-hunting and fixing until late in a project increases the time and cost involved in eliminating those bugs. While it does make sense intuitively that you can't test something before it exists, DDT (like TDD and other agile processes) gets into the nooks and crannies of development, and provides early feedback on the state of your code and the design.

It sounds obvious, but code that is badly designed tends to be rigid, difficult to adapt or re-use in some other context, and full of side effects. This all makes it extremely difficult to write tests for. In the TDD world, the code you create will be inherently testable, because you write the tests first. But you end up with an awful lot of unit tests of questionable value, and it's tempting to skip valuable parts of the analysis and design thought process because "the code is the design." With DDT, we wanted a testing process that inherently promotes good design and well-written, easily testable code.
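One widely used way to get that "easily testable" quality is to hand a class its collaborators instead of letting it construct them. The sketch below is hypothetical: `RateSource`, `QuoteCalculator`, and the 100-per-night fake are invented for illustration and are not part of Mapplet 2.0. If `QuoteCalculator` created its own network-backed rate source internally, every test of it would depend on that service being up; with constructor injection, a one-line lambda is enough.

```java
// Hypothetical collaborator: in production this might query a remote hotel
// database, which would make any test that touches it slow and fragile.
interface RateSource {
    int nightlyRate(String hotelId);
}

// The collaborator is passed in through the constructor rather than created
// inside the class, so a test can substitute a fake without a live database.
final class QuoteCalculator {
    private final RateSource rates;

    QuoteCalculator(RateSource rates) {
        this.rates = rates;
    }

    int totalFor(String hotelId, int nights) {
        if (nights <= 0) {
            throw new IllegalArgumentException("nights must be positive");
        }
        return rates.nightlyRate(hotelId) * nights;
    }
}

final class QuoteCalculatorTest {
    public static void main(String[] args) {
        // A lambda stands in for the real rate source: every hotel costs 100 per night.
        QuoteCalculator calculator = new QuoteCalculator(hotelId -> 100);
        int total = calculator.totalFor("hotel-42", 3);
        if (total != 300) {
            throw new AssertionError("expected 300 but got " + total);
        }
        System.out.println("QuoteCalculatorTest passed");
    }
}
```

The same change that makes the class testable also loosens its coupling to the data source, which is the link between testability and good design that this chapter keeps returning to.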

Note

Every project that Matt has joined halfway through, without exception, has been written in such a way as to make the code difficult (or virtually impossible) to test. Coders often try adding tests to their code and quickly give up, having come to the conclusion that unit testing is too hard. It's a widespread problem, so we devote Chapter 9 to the problem of difficult-to-test code, and look at just why particular coding styles and design patterns make unit testing difficult.

TDD is, by its nature, all about testing at the detailed design level. We hate to say it, but in its separation from Extreme Programming, TDD lost a valuable companion: acceptance tests. Books on TDD omit this vital aspect of automated testing entirely, and, instead, talk about picking a user story (aka requirement) and immediately writing a unit test for it.

DDT promotes writing both acceptance tests and unit tests, but at its core are controller tests, which sit halfway between the two. Controller tests are "developer tests" that look like unit tests but operate at the conceptual design level (aka "logical software functions"). They provide a highly beneficial glue between analysis (the problem space) and design (the solution space).

It's not uncommon for developers to write a few tests, discover that they haven't achieved any tangible results, and go back to cranking out untested code. In our experience, the 100% code coverage "holy grail," in particular, can breed complacency among developers. If 100% is impossible or impractical, is 90% okay? How about 80%? "I didn't write tests for these classes over here, but the universe didn't implode (yet)... so why bother at all?" If the goal set out by your testing process is easily and obviously achievable, you should find that the developers in your team go at it with a greater sense of purpose. This brings us to the last issue that DDT tackles.

Aimless testing is sometimes worse than not testing at all, because it provides an illusion of safety. This is true of both manual testing (where a tester follows a test script, or just clicks around the UI and deems the product good to ship), and writing of automated tests (where developers write a bunch of tests in an ad hoc fashion).

Aimless unit testing is also a problem because unit tests mean more code to maintain and can make it difficult to modify existing code without breaking tests that make too many assumptions about the code's internals. Moreover, writing the tests themselves eats up valuable time.

Knowing why you're testing, and knowing why you're writing a particular unit test (being able to state succinctly what the test is proving), ensures that each test pulls its own weight. Its existence, and the time spent writing it, must be justified. The purpose of DDT tests is simple: to prove systematically that the design fulfills the requirements and that the code matches up with the design.

In this section we provide a lightning tour of the DDT process, distilled down to the cookbook steps. While DDT can be adapted to the OOAD process of your choice, it was originally designed to be used with the ICONIX Process (an agile OOAD process that uses a core subset of UML).[2] Each step in the ICONIX Process is matched by a corresponding DDT step; the idea is that DDT provides instant feedback, validating each step in the analysis/design process.

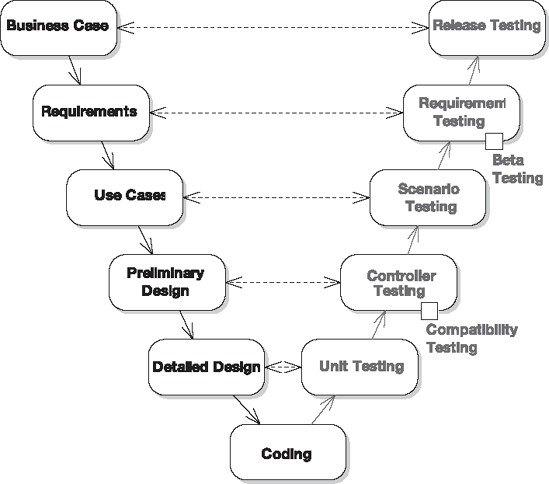

Figure 1-1 shows the four principal test artifacts: unit tests, controller tests, scenario tests, and business requirement tests. As you can see, unit tests are fundamentally rooted in the design/solution/implementation space. They're written and "owned" by coders. Above these, controller tests are sandwiched between the analysis and design spaces, and help to provide a bridge between the two. Scenario tests belong in the analysis space. They are manual test specs containing step-by-step instructions for the testers to follow, and they expand out all the sunny-day/rainy-day permutations of a use case. Once you're comfortable with the process and the organization is more amenable to the idea, we also highly recommend basing "end-to-end" integration tests on the scenario test specs.[4] Finally, business requirement tests are almost always manual test specs; they facilitate the "human sanity check" before a new version of the product is signed off for release into the wild.

The tests vary in granularity (that is, the amount of underlying code that each test is validating), and in the number of automated tests that are actually written. We show this in Figure 1-2.

Figure 1-2. Test granularity: the closer you get to the design/code, the more tests are written; but each test is smaller in scope.

Figure 1-2 also shows that—if your scenario test scripts are also implemented as automated integration tests—each use case is covered by exactly one test class (it's actually one test case per use case scenario; but more about that later). Using the ICONIX Process, use cases are divided into controllers, and (as you might expect) each controller test covers exactly one controller. The controllers are then divided into actual code functions/methods, and the more important methods get one or more unit test methods.[3]

You've probably seen the traditional "V" model of software development, with analysis/design/development activities on the left, and testing activities on the right. With a little wrangling, DDT actually fits this model pretty well, as we show in Figure 1-3.

Each step on the left is a step in the ICONIX Process, which DDT is designed to complement; each step on the right is a part of DDT. As you create requirements, you create a requirements test spec to provide feedback—so that, at a broad level, you'll know when the project is done. The real core part of DDT (at least for programmers) centers around controller testing, which provides feedback for the conceptual design step and a systematic method of testing software behavior. The core part of DDT for analysts and QA testers centers around scenario testing.

With the V diagram in Figure 1-3 as a reference point, let's walk through ICONIX/DDT one step at a time. We're deliberately not stipulating any tools here. However, we encourage you to have a peek at Chapter 3, where we show a "Hello world!" example (actually a Login use case) of DDT in action, using Enterprise Architect (EA).


For each controller, create a test case. These test cases validate the critical behavior of your design, as driven by the controllers identified in your use cases during robustness analysis (hence the name "controller test"). Each controller test is created as a method in your chosen unit testing framework (JUnit, FlexUnit, NUnit, etc.). Because each test covers a "logical" software function (a group of "real" functions with one collective output), you'll end up writing fewer controller tests than you would TDD-style unit tests.

For each controller test case, think about the expected acceptance criteria—what constitutes a successful run of this part of the use case? (Refer back to the use case alternate courses for a handy list.)
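As a minimal sketch of the two steps above, suppose robustness analysis for a Login use case turned up a controller we'll call "Validate login details." The controller, its `Result` values, and the three alternate courses are hypothetical, invented for illustration; in a real project they would come from your own use case text. The test drives the one logical function through its single entry point, with one test method for the basic course and one per alternate course. Plain Java checks stand in for JUnit's `@Test` methods and assertions so the sketch runs without dependencies.

```java
import java.util.Map;

// Hypothetical controller: one logical software function that groups several
// "real" checks but produces one collective output.
final class LoginDetailsValidator {
    enum Result { OK, MISSING_FIELD, UNKNOWN_USER, WRONG_PASSWORD }

    // username -> password; plain text only to keep the sketch short
    private final Map<String, String> accounts;

    LoginDetailsValidator(Map<String, String> accounts) {
        this.accounts = accounts;
    }

    Result validate(String username, String password) {
        if (isBlank(username) || isBlank(password)) return Result.MISSING_FIELD;
        String expected = accounts.get(username);
        if (expected == null) return Result.UNKNOWN_USER;
        return expected.equals(password) ? Result.OK : Result.WRONG_PASSWORD;
    }

    private static boolean isBlank(String s) {
        return s == null || s.trim().isEmpty();
    }
}

// Controller test: one method per course of the use case, all driven
// through the controller's single entry point.
final class ValidateLoginDetailsTest {
    private static final LoginDetailsValidator validator =
            new LoginDetailsValidator(Map.of("alice", "secret"));

    static void expect(LoginDetailsValidator.Result expected, LoginDetailsValidator.Result actual) {
        if (expected != actual) throw new AssertionError("expected " + expected + " but got " + actual);
    }

    // Basic course: valid username and password.
    static void acceptsValidDetails() {
        expect(LoginDetailsValidator.Result.OK, validator.validate("alice", "secret"));
    }

    // Alternate course: the user leaves a field blank.
    static void rejectsBlankField() {
        expect(LoginDetailsValidator.Result.MISSING_FIELD, validator.validate("alice", " "));
    }

    // Alternate course: the username isn't recognized.
    static void rejectsUnknownUser() {
        expect(LoginDetailsValidator.Result.UNKNOWN_USER, validator.validate("bob", "secret"));
    }

    // Alternate course: the password is wrong.
    static void rejectsWrongPassword() {
        expect(LoginDetailsValidator.Result.WRONG_PASSWORD, validator.validate("alice", "guess"));
    }

    public static void main(String[] args) {
        acceptsValidDetails();
        rejectsBlankField();
        rejectsUnknownUser();
        rejectsWrongPassword();
        System.out.println("All controller test cases passed");
    }
}
```

Note that the test never calls `isBlank()` or the map lookup directly; those "real" functions are covered through the controller's collective output.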


If you're familiar with TDD, you'll notice that this process differs significantly. There are actually many ways in which the two processes are similar (both in mechanics and underlying goals). However, there are also both practical and philosophical differences. We cover the main differences in the next section.

The techniques that we describe in this book are not, for the most part, incompatible with TDD—in fact, we hope TDDers can take these principles and techniques and apply them successfully in their own projects. But there are some fundamental differences between our guiding philosophy and those of the original TDD gurus, as we show in Table 1-1. We explain our comments further in the "top 10" lists at the start of Chapters 2 and 3.

Table 1-1. Differences Between TDD and ICONIX/DDT

| TDD | ICONIX/DDT |
|---|---|
| Tests are used to drive the design of the application. | With DDT it's the other way around: the tests are driven from the design, and, therefore, the tests are there primarily to validate the design. That said, there's more common ground between the two processes than you might think. A lot of the "design churn" (aka refactoring) to be found in TDD projects can be calmed down and given some stability by first applying the up-front design and testing techniques described in this book. |
| The code is the design and the tests are the documentation. | The design is the design, the code is the code, and the tests are the tests. With DDT, you'll use modern development tools to keep the documented design model in sync with the code. |
| Following TDD, you may end up with a lot of tests (and we mean a lot of tests). | DDT takes a "test smarter, not harder" approach, meaning tests are more focused on code "hot spots." |
| TDD tests have their own purpose; therefore, on a true test-first project the tests will look subtly different from a "classical" fine-grained unit test. A TDD unit test might test more than a single method at a time. | In DDT, a unit test is usually there to validate a single method. DDT unit tests are closer to "real" unit tests. As you write each test, you'll look at the detailed design, pick the next message being passed between objects, and write a test case for it. DDT also has controller tests, which are broader in scope.[a] So TDD tests are somewhere between unit tests and controller tests in terms of scope. |
| TDD doesn't have acceptance tests unless you mix in part of another process. The emphasis (e.g., with XP) tends to be on automated acceptance tests: if your "executable specification" (aka acceptance tests) can't be automated, then the process falls down. As we explore in Part 3, writing and maintaining automated acceptance tests can be very difficult. | DDT "acceptance tests" (which encompass both scenario tests and business requirement tests) are "manual" test specs for consumption by a human. Scenario tests can be automated (and we recommend doing this if at all possible), but the process doesn't depend on it. |
| TDD is much finer-grained when it comes to design.[b] With the test-first approach, you pick a story card from the wall, discuss the success criteria on the back of the card with the tester and/or customer representative, write the first (failing) test, write some code to make the test pass, write the next test, and so on, until the story card is implemented. You then review the design and refactor if needed, i.e., "after-the-event" design. | We actually view DDT as pretty fine-grained: you might base your initial design effort on, say, a package of use cases. From the resulting design model you identify your tests and classes, and go ahead and code them up. Run the tests as you write the code. |
| A green bar in TDD means "all the tests I've written so far are not failing." | A green bar in DDT means "all the critical design junctures, logical software functions, and user/system interactions I've implemented so far are passing as designed." We know which result gives us more confidence in our code... |
| After making a test pass, review the design and refactor the code if you think it's needed. | With DDT, "design churn" is minimized because the design is thought through with a broader set of functionality in mind. We don't pretend that there'll be no design changes when you start coding, or that the requirements will never change, but the process helps keep these changes to a minimum. The process also allows for changes (see Chapter 4). |
| An essential part of the TDD process is to first write the test and then write the code. | With DDT we don't stipulate: if you feel more comfortable writing the test before the accompanying code, absolutely go ahead. You can be doing this and still be "true" to DDT. |
| With TDD, if you feel more comfortable doing more up-front design than your peers consider to be cool... go ahead. You can be doing this and still be "true" to TDD. (That said, doing a lot of up-front design and writing all those billions of tests would represent a lot of duplicated effort.) | With DDT, an essential part of the process is to first create a design, and then write the tests and the code. However, you'll end up writing fewer tests than in TDD, because the tests you'll most benefit from writing are pinpointed during the analysis and design process. |

[a] If some code is already covered by a controller test, you don't need to write duplicate unit tests to cover the same code, unless the code is algorithmic or mission-critical, in which case it's an area of code that will benefit from additional tests. We cover design-driven algorithm testing in Chapter 12.

[b] Kent Beck's description of this was "a waterfall run through a blender."
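To make the table's unit-test row concrete, here is a minimal sketch of a DDT-style unit test that validates a single method. Assume (hypothetically; this is not taken from the Mapplet 2.0 design) that a sequence diagram shows a hotel list sending a "miles between" message to a `GeoDistance` helper. That method is algorithmic, exactly the kind of code footnote [a] says benefits from its own tests. The implementation uses the standard haversine formula with an approximate mean Earth radius of 3958.8 miles; the class names and constant are assumptions for illustration.

```java
// Hypothetical helper that might appear in a hotel finder's detailed design
// (e.g. when sorting hotels by distance from a map point).
final class GeoDistance {
    static final double EARTH_RADIUS_MILES = 3958.8; // approximate mean Earth radius

    // Great-circle distance between two lat/lon points, via the haversine formula.
    static double milesBetween(double lat1, double lon1, double lat2, double lon2) {
        double phi1 = Math.toRadians(lat1);
        double phi2 = Math.toRadians(lat2);
        double dPhi = Math.toRadians(lat2 - lat1);
        double dLambda = Math.toRadians(lon2 - lon1);
        double a = Math.sin(dPhi / 2) * Math.sin(dPhi / 2)
                + Math.cos(phi1) * Math.cos(phi2) * Math.sin(dLambda / 2) * Math.sin(dLambda / 2);
        return 2 * EARTH_RADIUS_MILES * Math.atan2(Math.sqrt(a), Math.sqrt(1 - a));
    }
}

// Unit test: validates this one method in isolation.
final class GeoDistanceTest {
    static void assertClose(double expected, double actual, double tolerance) {
        if (Math.abs(expected - actual) > tolerance) {
            throw new AssertionError("expected " + expected + " but got " + actual);
        }
    }

    static void runAll() {
        // Same point: zero distance.
        assertClose(0.0, GeoDistance.milesBetween(40.0, -74.0, 40.0, -74.0), 1e-9);
        // Along a meridian the distance is R times the latitude difference in radians.
        double oneDegree = GeoDistance.EARTH_RADIUS_MILES * Math.toRadians(1.0);
        assertClose(oneDegree, GeoDistance.milesBetween(0.0, 0.0, 1.0, 0.0), 1e-6);
        // Symmetry: A to B equals B to A.
        assertClose(GeoDistance.milesBetween(34.0, -118.2, 40.7, -74.0),
                    GeoDistance.milesBetween(40.7, -74.0, 34.0, -118.2), 1e-9);
    }

    public static void main(String[] args) {
        runAll();
        System.out.println("GeoDistanceTest passed");
    }
}
```

Each check states one property the method must satisfy, so it is easy to say what the test is proving and why it exists.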

So, with DDT you don't drive the design from the unit tests. This is not to say that the design in a DDT project isn't affected by the tests. Inevitably you'll end up basing the design around testability. As we'll explore in Chapter 3, code that hasn't been written with testability in mind is an absolute pig to test. Therefore, it's important to build testability into your designs from as early a stage as possible. It's no coincidence that code that is easily tested also generally happens to be well-designed code. To put it another way, the qualities of a code design that result in it being easy to unit-test are also the same qualities that make the code clear, maintainable, flexible, malleable, well factored, and highly modular. It's also no coincidence that the ICONIX Process and DDT place their primary emphasis on creating designs that have these exact qualities.

The main example that we'll use throughout this book is known as Mapplet 2.0, a real-world hotel-finder street map that is hosted on vresorts.com (a travel web site owned by one of the co-authors). We invite you to compare the use cases in this book with the finished product on the web site.

Mapplet 2.0 is a next-generation version of the example developed for our earlier book, Agile Development with ICONIX Process, which has been used successfully as a teaching example in open enrollment training classes by ICONIX over the past few years. The original "Mapplet 1.0" was a thin-client application written using HTML and JavaScript with server-side C#, whereas the groovy new version has a Flex rich-client front-end with server-side components written in Java. In today's world of heterogeneous enterprise technologies, a mix of languages and technologies is a common situation, so we won't shy away from the prospect of testing an application that is collectively written in more than one language. As a result, you'll see some tests written in Flex and some in Java. We'll demonstrate how to write tests that clearly validate the design and the requirements, walking across boundaries between architectural layers to test individual business concerns.

Figures 1-4 and 1-5 show the original, HTML-based Mapplet 1.0 in action.

Figure 1-4. The HTML/Ajax-based Mapplet 1.0 following the "US Map" use case: starting with the US map, zoom in to your region of interest.

By contrast, Figure 1-6 leaps ahead to the finished product, the Flash-based Mapplet 2.0.

In this chapter we introduced the key concepts that we'll be exploring in this book. We gave a 40,000-ft. overview of DDT and compared it with Test-Driven Development, which in many organizations is becoming increasingly synonymous with "writing some unit tests." We also introduced the main project that we'll be following, a real-life GIS/hotel-search application for a travel web site.

Although this book isn't specifically about Test-Driven Development, it does help to make comparisons with it, as TDD is very much in the developer consciousness. So in the next chapter, we'll walk through a "Hello world!" example—actually a "Login" use case—developed first using TDD, and then (in Chapter 3) using DDT.

In Part 2, we'll begin to explore the Mapplet 2.0 development—backwards. In other words, we'll start with the finished product in Chapter 4, and work our way back in time, through detailed design, conceptual design, use cases, and finally (or firstly, depending which way you look at it) the initial set of business requirements in Chapters 5–8.

We'll finish this first chapter by introducing the DDT process diagram (see Figure 1-7). Each chapter in Part 2 begins with this diagram, along with a sort of "you are here" circle to indicate what's covered in that chapter. As you can see, DDT distinguishes between a "developer's zone" of automated tests that provide feedback into the design, and a "customer zone" of test scripts (the customer tests can also be automated, but that's advanced stuff—see Chapter 11).

If your team is debating whether to use DDT in place of TDD, we would suggest that you point out that controller tests are essentially a "drop-in" replacement for TDD-style unit tests—and you don't have to write as many!

[1] Think algorithms vs. boilerplate code such as property getters and setters.

[2] We provide enough information on the ICONIX Process in this book to allow you to get ahead with DDT on your own projects; but if you also want to learn the ICONIX Process in depth, check out our companion book, Use Case Driven Object Modeling with UML: Theory and Practice.

[3] So (you might well be wondering), how do I decide whether to cover a method with a unit test or a controller test? Simple—implement the controller tests first; if there's any "significant" (non-boilerplate) code left uncovered, consider giving it a unit test. There is more about this decision point in Chapters 5 and 6.

[4] Integration tests have their own set of problems that, depending on factors such as the culture of your organization and the ready availability of up-to-date, isolated test database schemas, aren't always easily surmounted. There is more about implementing scenario tests as automated integration tests in Part 3.