Automated integration testing is a pig, but it's an important pig. And it's important for precisely the reasons it's so difficult.

The wider the scope of your automated test, the more problems you'll encounter; but these are also "real-world" problems that will be faced by your code, both during development and when it's released. So an integration test that breaks—and they will break frequently, e.g., when a dependent system has changed unexpectedly—isn't just a drag, it's providing an early warning that the system is about to break "for real." Think of an integration test as your network canary.

Integration tests are also important, and worth the pain of setting them up and keeping them working, because without them, you've just got unit tests. By themselves, unit tests are too myopic; they don't assert that a complete operation works, from the point when a user clicks "Go" through the complete operation, to the results displayed on the user's screen. An end-to-end test confirms that all the pieces fit together as expected. Integration is potentially problematic in any project, which is why it's so important to test.

Note

The scope of an integration test can range from a simple controller test (testing a small group of functions working together) to a complete end-to-end scenario encompassing several tiers—middleware, database, etc.—in an enterprise system. In this chapter we'll focus on integration tests that involve linking remote systems together.

However, there's no getting around the fact that automated integration tests are also difficult and time-consuming to write and maintain. So we've put this chapter in the Advanced section of the book. This topic shouldn't be viewed as "optional," but you may find that it helps to have a clear adoption path while you're implementing DDT in your project.

Another reason we've separated out integration testing is that, depending on your organization, it might simply not be possible (at the current time) to implement meaningful integration tests there; e.g., the DBAs may not be prepared to set up a test database containing specific test data that can be restored each time a script is run. If this is the case, we hope you still get what you can from this chapter, and that when other teams start to see the results of your testing efforts, they might warm to the benefits of automated integration tests.

Keep in mind that we're using "integration testing" to refer to both "external system calls" and "end-to-end testing," i.e., "putting it all together tests." Here are our top ten integration testing guidelines:

10. Look for test patterns in your conceptual design.

9. Don't forget security tests.

8. Decide which "level" of integration test to write.

7. Drive unit/controller-level integration tests from your conceptual design.

6. Drive scenario tests from your use case scenarios.

5. Write end-to-end scenario tests, but don't stall the project if they're too difficult.

4. Use a "business-friendly" testing framework to house scenario tests.

3. Test GUI code as part of your scenario tests.

2. Don't underestimate how problematic integration tests are to write and maintain, but...

1. Don't underestimate their value.

The rest of this chapter is structured around this list...

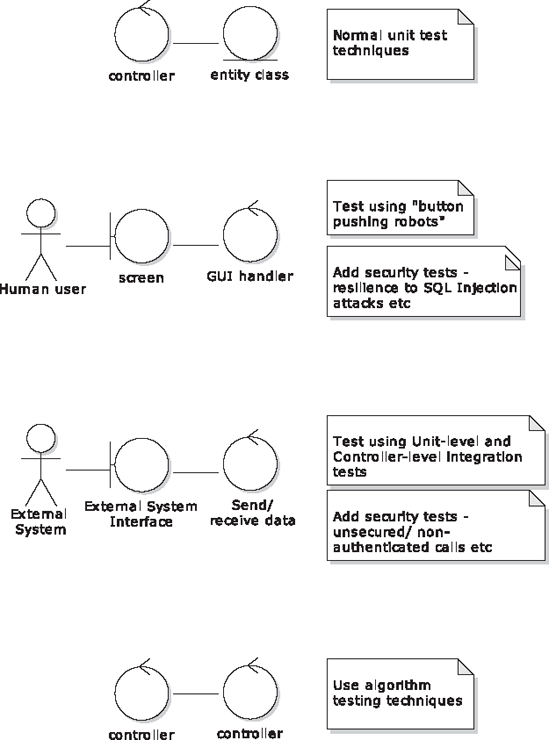

Examine your conceptual design to find test patterns. These patterns will indicate when you might need a GUI test, an external system integration test, an algorithm test, etc. As Figure 11-1 shows, a robustness diagram (i.e., conceptual design) provides a handy way of determining what type of test to write.

If you have a controller talking to an entity class, then that's the easiest case: use an isolated unit or controller test (see Chapters 5 and 6).

If you have a controller that is handling a GUI event (e.g., the user clicked a button or moved a slider), enlist the help of a GUI testing toolkit (more about these later in the chapter), aka a button-pushing robot, to simulate the user input. This level is also the natural place for a standard set of tests that check for susceptibility to SQL injection attacks, cross-site scripting, etc.

If you have a controller talking to an external system interface (usually remote, i.e., on a separate server somewhere), then it's time to write a unit-level integration test (more about these in the next section). Again, this would be a good opportunity to include security tests, this time from the client's point of view: ensure that the client can't make unsecured calls, or call an external service without the correct (or any) credentials. If you're also developing the server-side module that the client converses with, then it also makes sense to implement the server-side counterpart to these security tests.

Finally, a controller talking to another controller suggests that this is some algorithmic code, and potentially could do with some "zoomed-in" tests that are finer-grained even than normal unit tests (i.e., sub-atomic). We cover design-driven algorithm testing in Chapter 12.

As you can see, none of these are really "end-to-end" tests: they're integration tests, but focusing only on the objects immediately on either side of the interface. Keeping the tests focused in this way—keeping everything scoped down to manageable, bite-sized chunks—can reduce many of the headaches we described earlier in this chapter.

However, automated end-to-end testing does also have its uses—more about this level of testing later.

Remember to test security (it's really important, and it's not that difficult to do). It seems like a week doesn't go by without a report of an SQL injection attack or cross-site scripting exploit impacting a high-profile web site. It's bamboozling, since SQL injection attacks, in particular, are so simple to avoid.

While lack of security is an ever-present issue that your tests must be designed to expose, the flipside—security that works—can also pose a problem for your tests. We'll talk about that in a moment, but first let's take a look at one of the more common—and easily prevented—security holes.

SQL injection isn't the only type of exploit, of course, but it's worth a brief description as it's such an outrageously common, and easily avoided, form of attack. The attack, in a nutshell, is made possible when a value from a search form is inserted directly into an SQL query. For example, if the user searches for hotels in Blackpool, the SQL query constructed in your server code would look something like this:

```sql
SELECT * FROM hotels WHERE city = 'Blackpool';
```

In the search form, the user would enter a town/city; the server component extracts this value from the submitted form, and places it directly into the query. The Java code would look something like this:

```java
String city = form.getValue("city");
// The user's input is pasted straight into the SQL text
String query = "SELECT * FROM hotels WHERE city = '" + city + "';";
```

Giving users effectively free access to the database in this way opens up all sorts of potential for sneaky shenanigans from unscrupulous exploiters. All the user has to do is add his or her own closing single-quote in the search form, as in the following:

```text
Blackpool'
```

Then the constructed query passed to the database will look like this:

```sql
SELECT * FROM hotels WHERE city = 'Blackpool'';
```

The database would, of course, reject this with a syntax error. How this is shown in the front end depends on how you code the error handling: another "rainy day scenario" that must be accounted for, with its own controller in the conceptual design and, therefore, its own test case.

But it's a simple task for the user to turn this into a valid query, by way of an SQL comment:

```text
Blackpool'; --
```

This will be passed directly to the database as the following:

```sql
SELECT * FROM hotels WHERE city = 'Blackpool'; --';
```

Everything after the -- is treated as a comment, so the door to your data is now wide open. How about if the user types into the search form the following?

```text
Blackpool'; DROP TABLE hotels; --
```

Your server-side code will faithfully construct this into the following SQL, and pass it straight to the database, which, in turn, will chug away without question, just following orders:

```sql
SELECT * FROM hotels WHERE city = 'Blackpool'; DROP TABLE hotels; --';
```
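The mechanics are easy to reproduce without a database. This minimal sketch (the InjectionDemo class name is hypothetical) repeats the string concatenation from the server code above and prints the SQL that each of the three inputs discussed here would produce:

```java
public class InjectionDemo {

    // Same vulnerable pattern as the server code: user input is
    // concatenated directly into the SQL text.
    static String buildQuery(String city) {
        return "SELECT * FROM hotels WHERE city = '" + city + "';";
    }

    public static void main(String[] args) {
        String[] inputs = {
            "Blackpool",                          // legitimate search
            "Blackpool'",                         // stray quote: syntax error
            "Blackpool'; DROP TABLE hotels; --"   // injected second statement
        };
        for (String input : inputs) {
            System.out.println(buildQuery(input));
        }
    }
}
```

Nothing in buildQuery() distinguishes data from SQL syntax, which is exactly what the attacker relies on.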

Depending on how malicious the attacker is, he or she could wreak all sorts of havoc, or (worse, in a way) build on the "base exploit" to extract other users' passwords from the database. There's a good list of SQL injection examples at http://unixwiz.net.[60]

Protecting against this type of attack isn't simply a case of "sanitizing" the single quotes by stripping them out, as that would break valid names such as "Brer O'Hare," in which a quote is a perfectly valid character. Depending on which language/platform/database you're using, there are plenty of libraries whose creators have thought through all the possible combinations of "problem characters." The library function will escape these characters for you; e.g., MySQL provides mysql_real_escape_string(). You just have to make sure you call one. Or, even better, use bind variables and avoid the problem altogether.
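In Java, bind variables mean a java.sql.PreparedStatement with ? placeholders. Here is a minimal sketch, assuming a hotels table with name and city columns; the HotelQueries class and findHotelNamesByCity() method are hypothetical names for illustration:

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.util.ArrayList;
import java.util.List;

public class HotelQueries {

    // The SQL text is fixed; the city value travels separately as a parameter,
    // so quotes or "--" in the input are never parsed as SQL.
    static final String FIND_BY_CITY = "SELECT name FROM hotels WHERE city = ?";

    public static List<String> findHotelNamesByCity(Connection conn, String city)
            throws SQLException {
        List<String> names = new ArrayList<>();
        try (PreparedStatement stmt = conn.prepareStatement(FIND_BY_CITY)) {
            stmt.setString(1, city); // bind the raw user input to the first "?"
            try (ResultSet rs = stmt.executeQuery()) {
                while (rs.next()) {
                    names.add(rs.getString("name"));
                }
            }
        }
        return names;
    }
}
```

With this approach, input containing a quote (as in O'Hare) is matched literally, and `Blackpool'; DROP TABLE hotels; --` is simply an odd city name that matches no rows. No escaping function is needed.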

But either way, make sure you write tests that confirm your application is immune from SQL injection attacks. For example, use Selenium (or directly use HttpClient in your JUnit test) to post a "malicious" form to the webserver, and then query the test database directly to make sure the attack didn't work.
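A minimal sketch of the "attack" half of such a test, using the JDK's own java.net.http.HttpClient (Java 11 and later) rather than a test framework. The InjectionAttackClient class, the endpoint URL, and the city form field are assumptions for illustration. After posting, a real test would go on to query the test database directly to confirm that the hotels table still exists and its contents are unchanged; that part isn't shown here.

```java
import java.io.IOException;
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

public class InjectionAttackClient {

    // A shareable list of hostile inputs; extend it as new exploits appear.
    static final List<String> PAYLOADS = List.of(
        "Blackpool'",
        "Blackpool'; --",
        "Blackpool'; DROP TABLE hotels; --"
    );

    // Builds (but does not send) a form POST carrying the payload in one field.
    static HttpRequest buildFormPost(URI endpoint, String field, String payload) {
        String body = URLEncoder.encode(field, StandardCharsets.UTF_8) + "="
                + URLEncoder.encode(payload, StandardCharsets.UTF_8);
        return HttpRequest.newBuilder(endpoint)
                .header("Content-Type", "application/x-www-form-urlencoded")
                .POST(HttpRequest.BodyPublishers.ofString(body))
                .build();
    }

    // Posts every payload to the (hypothetical) search endpoint; returns status codes.
    static List<Integer> postAll(HttpClient client, URI endpoint)
            throws IOException, InterruptedException {
        List<Integer> statuses = new ArrayList<>();
        for (String payload : PAYLOADS) {
            HttpResponse<String> response = client.send(
                    buildFormPost(endpoint, "city", payload),
                    HttpResponse.BodyHandlers.ofString());
            statuses.add(response.statusCode());
        }
        return statuses;
    }
}
```

Keeping the payload list separate from any one project makes it easy to reuse across components.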

Also keep an eye on the SANS "top cyber security risks" page,[61] and keep thinking about tests you can write—which can usually be shared among projects or components—to verify that your system isn't vulnerable to these kinds of attacks.

Setting up a secure session for automated tests can be a bit of a drag, but usually you only have to do it once. It's worth doing, because it brings the test system much closer to being a realistic environment—meaning the tests stand a better chance of catching "real-life" problems before the system's released into the wild.

However, one issue with security is that of passwords. Depending on how tightly your system is integrated with Microsoft Exchange, LDAP, Single Sign-On (SSO), Windows (or Unix) logins, etc., there may be an issue with storing a "real" password so that the test code can log in as a user and run its tests. The answer is, of course, to set up a test account whose password is commonly known, and whose access is restricted to the test environment. The ability to do this will depend on just how co-operative your organization's network services team happens to be.
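One way for test code to pick up that shared test account, without hard-coding it into every test, is to read it from the environment of the machine that runs the integration tests. This is a minimal sketch under that assumption; the TestAccount class and the INTEGRATION_TEST_USER / INTEGRATION_TEST_PASSWORD variable names are hypothetical. The method takes a Map so it can be exercised in isolation; in real use you'd pass System.getenv().

```java
import java.util.Map;

public final class TestAccount {
    final String username;
    final String password;

    private TestAccount(String username, String password) {
        this.username = username;
        this.password = password;
    }

    // Reads the shared, test-environment-only account; fails fast with a clear
    // message if the machine running the tests hasn't been configured.
    static TestAccount fromEnvironment(Map<String, String> env) {
        String user = env.get("INTEGRATION_TEST_USER");
        String password = env.get("INTEGRATION_TEST_PASSWORD");
        if (user == null || password == null) {
            throw new IllegalStateException("Integration test account not configured: "
                    + "set INTEGRATION_TEST_USER and INTEGRATION_TEST_PASSWORD");
        }
        return new TestAccount(user, password);
    }
}
```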

As ever, we want to make your life easier by recommending that you write tests that give you as much leverage as possible and avoid duplicated effort. So, with that in mind, here are the three levels of DDT integration testing, starting from the most "macroscopic," zoomed-out level, down to the microscopic, zoomed-in world of unit tests:

Scenario-level integration tests

Controller-level integration tests

Unit-level integration tests

Table 11-1 describes the properties of each level of integration test.

Table 11-1. How the Three Levels of Integration Test Differ

| Level | Scope | What it covers |
|---|---|---|
| Scenario | A "broadly scoped" end-to-end test | A whole use case scenario |
| Controller | A "medium-sized" test | A logical software function |
| Unit | A "small" test | One function or method |

The key characteristic of all three is that you don't use mock objects or stunt services to isolate the code. Instead, let the code run wild (so to speak), calling out to whatever services or databases it needs to. Naturally, these will need to be in a test environment, and the test data will need to be strictly controlled; otherwise you'll have no end of problems with tests breaking because someone's been messing with the test data.

These tests will need to be run separately from the main build: because they're so easily broken by other teams, you definitely don't want your build to be dependent on them working. You do want the tests to be run automatically, just not as often as the isolated tests. Setting them up to run once an hour, or even just nightly, would be perfectly fine.

Start by identifying and writing integration tests at the scenario level. These are "end-to-end" tests, as they involve the entire system running, from one end of a use case scenario to the other. ("Start-to-finish" might be a more apt description than "end-to-end"!) They also involve simulating a user operating the GUI—clicking buttons, selecting rows in a table, etc.; most importantly, they involve the code sending actual requests to actual remote systems. These are true integration tests, as they involve every part of all the finished components working together as one fully integrated system.

Usually, scenario-level integration tests are quite straightforward to write, once you know how. But on occasion they can be difficult to write, due to some technical quirks or limitations in the system or framework you're utilizing. In this case, don't "bust a gut" or grind progress to a halt while trying to implement them. Instead, move to the next level down.

Controller-level integration tests are exactly like the controller tests in Chapter 6: they're there to validate that the logical software functions identified during conceptual design have been implemented correctly—and remain implemented correctly. However, there's one key difference: the integration tests don't isolate the software functions inside a walled garden; they call out to external services, and other classes within the same system. This makes them susceptible to the problems we described at the start of this chapter, so you'd normally only write an integration test at the controller level if the software function in question is solely focused on making a remote call.

If there are any gaps in the fence left over, plug them up with unit-level integration tests. These are the most "zoomed-in" and the most focused of the tests.

Note

You could feasibly cover all the integration points with unit-level tests, but if you're also writing scenario-level tests, there's no point duplicating the integration points they already cover.

Let's walk through the three levels, from the narrowest in scope up to the broadest.

We cover unit-level and controller-level integration tests together because, when they're making external calls, there's very little to separate them in practice. It sometimes just helps to think of them as distinct concepts when deciding how much code an individual test should cover.

As Figure 11-2 shows, each external system call is a potential point of failure, and should be covered by a test. You want to line the "seams" of your remote interface with tests. So each controller (software function) on the left potentially gets its own unit/controller test. (We say "potentially" because some of these control-points may already be covered by a broader-scoped integration test.)

It's well worth writing unit-level integration tests if each call can be isolated as in Figure 11-2. However, you'll sometimes find that your code makes a series of remote system calls, and it's difficult to isolate one aspect of the behavior to test. This is one time when broader-scoped, scenario-level tests can actually be easier to write. Deciding which level of test to write is simply a judgment call, depending on a) how straightforward it is to isolate the software behavior, and b) how detailed or complex the code is, and whether you feel that it would benefit from a finer-grained integration test.

Later in the chapter we talk about the complexities, barriers, and wrinkles you'll face while writing integration tests. But for now, it's worth comparing the sort of "walled garden" isolated controller tests we showed in Chapter 6 with an equivalent controller-level integration test.

Chapter 6 dealt with the Advanced Search use case, in which the user enters search criteria and gets back a collection of matching hotels, shown on a map and listed in the search widget for further whittling-down. Here's a sample test method that initiates a sequence in which a call is made to an HTTP service, the XML response is parsed, and a HotelCollection containing the matching hotels is returned. The test then confirms that the number of hotels returned is as expected:

```java
// JUnit 4: org.junit.Test and static org.junit.Assert.assertEquals
@Test
public void searchForHotels() throws Exception {
    HotelSearchClient searchClient = new HotelSearchClient();
    Object[] params = {
        "city", "New York",
        "coordinates", new String[] {
            "latitude", "123",
            "longitude", "456",
            "maximumDistance", "7"
        }
    };
    // Calls the live HTTP service and parses its XML response
    HotelCollection hotels = searchClient.runQuery(params);
    assertEquals(4, hotels.size());
}
```

The runQuery() method encapsulates the work of querying the HTTP service and parsing the XML response into a HotelCollection object; i.e., there are several methods being called "behind the scenes" in the search code.

So this is both a controller test and, essentially, an integration test, as the code is calling out to an external service. The point here is that the result-set isn't really under our control. There may be four matching hotels returned today, but tomorrow one of the hotels could close down, or more hotels may be added to the database. So the test would fail, even though the service hasn't really changed and still technically works. Add to this all of the problems you'll face with integration tests, which we describe later in this chapter, and it quickly becomes obvious that there's a trade-off in terms of time spent dealing with these issues vs. the value you get from integration tests.

If you just want to test the part of the code that parses the XML response and constructs the HotelCollection, then you'd use an isolated controller test: use a "mock service" so that HotelSearchClient always receives the same XML response.

Here's the same test method, with four new lines (each marked with a "New" comment) that transform it from an integration test into an isolated controller test:

```java
// JUnit 4 (org.junit.Test, static org.junit.Assert.assertEquals) plus
// Mockito (static org.mockito.Mockito.mock and when)
@Test
public void searchForHotels() throws Exception {
    HotelSearchClient searchClient = new HotelSearchClient();
    Object[] params = {
        "city", "New York",
        "coordinates", new String[] {
            "latitude", "123",
            "longitude", "456",
            "maximumDistance", "7"
        }
    };
    // New: load a canned XML response from a file (load() is a test helper)
    String xmlResponse = load("AdvancedSearch.xml");
    // New: create a mock of the HTTP client that HotelSearchClient uses
    HttpClient mockClient = mock(HttpClient.class);
    // New: make the mock return the canned XML instead of calling the real service
    when(mockClient.execute(params)).thenReturn(xmlResponse);
    // New: inject the mock into the code under test
    searchClient.setHttpClient(mockClient);
    HotelCollection hotels = searchClient.runQuery(params);
    assertEquals(4, hotels.size());
}
```

This version loads up the simulated XML response from a file, and then inserts the mock HttpClient into the code being tested. We've fenced the code in so that it thinks it's making an external call, but is really being fed a simulated response.

It really is that simple to pass in a mock object—a minute's work—and it makes life much easier in terms of testing.[62]

However (and that should really be a big "however" in a 50-point bold+italic font), hiding the live interface loses a major benefit of integration testing: the whole point of integration testing is that we are testing the live interface. The number of hotels may change, but what if the service vendor unexpectedly changed the format of the XML? Our system would break, and we might not find out until end users report that they're seeing errors. A controller-level integration test like the first version of searchForHotels() would catch this change straightaway.

You could, of course, reduce the integration test's dependence on specific data by changing the nature of the assertion; e.g., instead of checking the number of hotels returned, check that one particular expected hotel is in the result set, and that its fields are in the right place (the hotel name is in the Name field, city is in the City field, etc.). There'll still be cases where the test has to check data that's likely to change; in this case, you'll just have to grit your teeth and update the test each time.
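A data-tolerant assertion of that kind might look like the following minimal sketch. The chapter doesn't show the element type inside HotelCollection, so the Hotel record with name and city fields is an assumption, as are the HotelAssertions class and assertContainsHotel() names:

```java
import java.util.List;

public class HotelAssertions {

    // Hypothetical stand-in for one parsed hotel in the result set.
    record Hotel(String name, String city) {}

    // Passes if the expected hotel is present with its name in the name field
    // and its city in the city field, however many other hotels came back.
    static void assertContainsHotel(List<Hotel> hotels, String expectedName, String expectedCity) {
        boolean found = hotels.stream().anyMatch(h ->
                expectedName.equals(h.name()) && expectedCity.equals(h.city()));
        if (!found) {
            throw new AssertionError("Expected hotel '" + expectedName + "' in "
                    + expectedCity + " not found in " + hotels);
        }
    }
}
```

Because the match checks each value against a specific field, an XML format change that swaps or drops fields still fails the test, while hotels opening or closing elsewhere in the result set don't.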

Note

Both of the tests we just showed are valid: they're achieving different things. The integration test is checking that the live interface hasn't inexplicably changed; the isolated test is focused on the XML parsing and HotelCollection construction. While there appears to be an overlap between them, they both need to exist in separate test suites (one run during the build, the other on a regular basis but independently of the build).

So the "pain" of integration testing is worth enduring, as it's quite minor compared with the pain of producing a system without integration tests.

Scenario-level integration tests are "end-to-end" tests. By this, we mean that the test verifies the complete scenario, from the initial screen being displayed, through each user step and system response, to the conclusion of the scenario. If you're thinking that scenario-level tests sound just like automated versions of the "use case thread expansion" scenario test scripts from Chapter 7, you'd be right: that's exactly what they are. So, however you go about automating them, the first stage in automating scenario-level tests is to follow the steps described in Chapter 7, to identify the individual use case threads and define test cases and test scenarios for each one.

Tip

There's a lot to be said for being able to run a full suite of end-to-end integration tests through a repeatable suite of user scenarios, just by clicking "Run". And there's even more to be said for making these end-to-end tests run automatically, either once per hour or once per night, so you don't have to worry about people forgetting to run them.

There are many, many options regarding how to write scenario-level tests. In the next section we'll suggest a few, and give some pointers for further reading, though none of these is really DDT-specific or counts as "the DDT way." The main thing to keep in mind is that an automated scenario-level test needs to follow a specific use case scenario as closely as possible, and should have the same pass/fail conditions as the acceptance criteria you identified for your "manual" scenario tests (again, see Chapter 7).

Another thing to keep in mind is that scenario-level integration tests are simply unit tests with a much broader scope: so they can be written just like JUnit tests, with setUp(), tearDown(), and test methods, and with an "assert" statement at the end to confirm that the test passed. The language or the test framework might differ, but the principle is essentially the same.

It'll always be important to have real-life humans running your scenario tests (see Chapter 7), using initiative, patience and intuition to spot errors; but there's also value in automating these same scenario tests so that they can be run repeatedly, rather like a Mechanical Turk on steroids.

That said, automated scenario tests do have their own set of unique challenges. If the project hasn't been designed from the ground up to make testing easy, then automating these tests might be more trouble than it's worth. If you find that the project is in danger of stalling because everyone's struggling to automate scenario/acceptance tests, just stick to manual testing using the test cases discussed in Chapter 7, and fall back on unit- and controller-level integration tests.

The challenges when automating scenario tests are these:

Simulating a user interacting with the UI

Running the tests in a specific sequence, to emulate the steps in a scenario

Sharing a test database with other developers and teams

Connecting to a "real" test environment (databases, services, etc.)

We've already discussed the last item, and the first item has its own "top ten items" (which we'll get to shortly); so let's look at the other two, and then show an example based on the Mapplet's Advanced Search use case from Chapter 6.

To emulate the steps in a scenario, you'll want to run the tests in a specific sequence.

Scenario-level tests have a lot in common with behavior-driven development (BDD), which also operates at the scenario level. In fact, BDD uses "scenario files," each of which describes a series of steps: the user does this, then the user does that... BDD scenarios are very close to use case scenarios, so close that it's possible to use BDD test frameworks such as JBehave or NBehave to implement DDT scenario-level integration tests.

Another possibility is to use a customer/business-oriented acceptance testing framework such as FitNesse: essentially a collaborative wiki in which BAs and testers can define test scenarios (really, inputs and expected outputs on existing functions), which are then linked to code-level tests.

Because JUnit and FlexUnit don't (at least at the time of writing) provide a mechanism for specifying the order in which their test methods/functions are executed, one final possibility, which xUnit purists will shoot us for suggesting, is to write a single test function in your scenario test case, which then makes a series of function calls, each function mapping to one step in your use case scenario.
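The single-test-function approach can be sketched in plain Java. The ScenarioSteps class and its runInOrder() helper are hypothetical: it runs named steps strictly in insertion order and, if one fails, reports which step of the use case scenario broke. A single test method would build the step map and call runInOrder().

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class ScenarioSteps {

    // Runs each step in insertion order, stopping at the first failure and
    // naming the failed step; returns the names of the steps that completed.
    static List<String> runInOrder(Map<String, Runnable> steps) {
        List<String> completed = new ArrayList<>();
        for (Map.Entry<String, Runnable> step : steps.entrySet()) {
            try {
                step.getValue().run();
            } catch (RuntimeException | AssertionError e) {
                throw new AssertionError("Scenario failed at step '" + step.getKey() + "'", e);
            }
            completed.add(step.getKey());
        }
        return completed;
    }

    public static void main(String[] args) {
        // Hypothetical steps loosely mirroring a use case scenario; in a real
        // test each lambda would drive the UI or assert on what it shows.
        Map<String, Runnable> steps = new LinkedHashMap<>(); // keeps insertion order
        steps.put("click Advanced Search icon", () -> System.out.println("icon clicked"));
        steps.put("enter reservation detail", () -> System.out.println("dates entered"));
        steps.put("click FIND", () -> System.out.println("search run"));
        System.out.println(runInOrder(steps));
    }
}
```

A LinkedHashMap is used deliberately: a plain HashMap would not preserve the order of the scenario's steps.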

Databases are possibly the most problematic aspect of integration testing. If your test searches for a collection of hotels balanced on a sandy island in the Maldives, the expectation that the same set of hotels will always be returned might not remain correct over time. It's a reasonable expectation: a test needs consistent, predictable data to check against its expected results. However, who's to say that another team won't update the same test database with a new set of hotels? Your test will break, due to something outside your team's control.

A list of hotels should be fairly static, but the problem is exacerbated when the data is more dynamic, e.g., a client's account balance. Your test might perform an operation that updates the balance, and then query the database to check that the new balance is correct; but in that moment someone else's test runs and changes the balance to something else, pulling the rug out from beneath the feet of your own test. Data contention with multiple tests running against a shared database is, as you'd expect, unpredictable: not good for a repeatable test.

One particularly tempting answer is to isolate a copy of the database for your own tests. This may or may not be practicable, depending on the size of the database, and how often it's updated. Each time someone adds a new column to a table, or inserts a new foreign key, every test instance of the database, on each developer's PC and the testers' virtual desktops, would need to be updated.

A simple answer is to run the integration tests less often, and only run them from one location—a test server that's scheduled to run the integration tests each night at 1 a.m., say. In fact, this is a fairly vital setup for integration tests. They just can't be run in the same "free-for-all" way that unit tests are run.

But there's still the problem that the database may not be in a consistent state at the start of each test.

From a data perspective, think of each integration test as a very large unit test: self-contained, affecting a limited/bounded portion of the overall database. Just as a unit test initially sets up its test fixtures (in the setUp() method), so an integration test can prepare the database with just the data it needs to run the test: a restricted set of hotels and their expected amenities, for example.

Tip

Identify a partial dataset for each integration test, and set it up when the test case is run.

For example, the following method (part of the Hotel class) calculates the overall cost of staying at a hotel over a number of nights (ignoring complications like different prices for different nights during the stay):

```java
public double calculateCostOfStay(int numberOfNights, String discountCode) {
    double price = getPricePerNight(new Date());
    double discount = getDiscount(discountCode);
    return (price * numberOfNights) - discount;
}
```

We could test this method of Hotel with the following JUnit code:

```java
@Test
public void calculateCostOfStay() throws Exception {
    Hotel hotel = HotelsDB.find("Hilton", "Dusseldorf");
    double cost = hotel.calculateCostOfStay(7, "VRESORTS SUPERSAVER");
    assertEquals(1300, cost, 1e-8);
}
```

This code attempts to find a hotel from a live connection to the test database, and then calls the method we want to test. There's a pretty big assumption here: that the rooms will cost $200 per night, and that there's an applicable discount code that will produce a $100 discount (resulting in a total cost of stay for seven consecutive nights of $1300). That might have been correct when the test was written; however, you'd expect the database to be updated at random times, e.g., the QA team might from time to time re-inject it with a fresh set of data from the production environment, with a totally different set of costs per night, discount codes, and so on.

To get around this problem, your integration test's setup code could prepare the test data by actually writing what it needs to the database. In the following example, JUnit's @BeforeClass annotation is used so that this setup code is run once for the whole test case (and not repeatedly for each individual test method):

```java
@BeforeClass
public static void prepareTestData() throws Exception {
    Hotel hotel = HotelsDB.findOrCreate("Hilton", "Dusseldorf");
    hotel.setPricePerNight(200.00);
    hotel.addDiscount(100.00, "VRESORTS SUPERSAVER");
    hotel.save();
}
```

As long as it's a fair bet that other people won't be updating the test database simultaneously (e.g., run your integration tests overnight), this is an easy, low-impact way of ensuring that the test data is exactly what that precise test needs, updated "just-in-time" before the test is run.

This approach keeps the amount of setup work needed to a minimum, and, in fact, makes the overall task much less daunting than having to keep an entire database in a consistent and repeatable state for the tests.

We'll run through a quick example using one of the scenarios (or "use case threads") from the Mapplet—specifically, the basic course for Advanced Search. Here it is as a quick reminder:

The system enables the Advanced Search widget when an AOI exists of "local" size. The user clicks the Advanced Search icon; the system expands the Advanced Search widget and populates Check-in/Check-out fields with defaults.

The user specifies their Reservation Detail including Check-in and Check-out dates, number of adults and number of rooms; the system checks that the dates are valid.

The user selects additional Hotel Filter criteria including desired amenities, price range, star rating, hotel chain, and the "hot rates only" check box.

The user clicks FIND. The system searches for hotels within the current AOI, and filters the results according to the Hotel Filter Criteria, producing a Hotel Collection.

Invoke Display Hotels on Map and Display Hotels on List Widget.

Tip

We were asked whether the first sentence of this use case should be changed to something like "The advanced search widget is enabled when an AOI of 'local' size exists." The simple answer is: Nooooooooo. Your use case should always be written in active voice, so that it translates well into an object-oriented design. You can find (lots) more information about the subject of use case narrative style in Use Case Driven Object Modeling with UML: Theory and Practice.

We want to write an automated scenario test that will step through each step in the use case, manipulating the GUI and validating the results as it goes along. Ultimately we're interested in the end-result displayed on the screen, based on the initial input; but a few additional checks along the way (without going overboard, and as long as they don't cause the test to delve too deeply inside the product code) are worth doing.

An xUnit test class is the simplest way to implement a scenario test, being closest to the code—therefore, the test won't involve any additional files or setup. However, using a unit test is also the least satisfactory way, as it offers zero visibility to the customer, testers, and Business Analysts (BAs), all of whom we assume aren't programmers or don't want to be asked to roll their sleeves up and get programming.

The Mapplet scenario tests are implemented in FlexUnit, because the Flex client is where the UI action happens. So even though the system being tested does traverse the network onto a Java server, the actual interaction—and thus the scenario tests—are all Flex-based.

The scenario test for the Advanced Search basic course looks like this:

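[Test]
public function useAddress_BasicCourse():void {
    // Precondition: an AOI of "local" size exists, so the system enables the widget
    findLocalAOI();
    enableAdvancedSearchWidget();

    // User clicks the Advanced Search icon; system expands the widget and fills in defaults
    clickAdvancedSearchIcon();
    confirmAdvancedSearchWidgetExpanded();
    confirmFieldsPopulatedWithDefaults();

    // User enters the Reservation Detail; system checks that the dates are valid
    enterReservationDetail();
    confirmValidationPassed();

    // User selects Hotel Filter criteria and clicks FIND; results appear on the map and list
    selectHotelFilterCriteria();
    clickFIND();
    confirmMatchingHotelsOnMap();
    confirmMatchingHotelsOnListWidget();
}

Notice how this covers each user-triggered step in the use case. For example, the clickAdvancedSearchIcon() function will programmatically click the Advanced Search icon, triggering the same event that would happen if the user clicked the icon.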

We don't add separate steps for events that happen after the user has clicked a button, say, because those events happen as a natural consequence of the click. However, we do include "confirm" functions, in which we assert that the UI is being shown as expected. For example, confirmAdvancedSearchWidgetExpanded() does exactly that: it confirms that, in the UI, the "state" of the Advanced Search widget is expanded.

As we mentioned, this style of scenario test is the easiest for a developer to write and maintain, but you'll never get a customer or business analyst to pore over your test code and sign off on it (at least, not meaningfully). So, depending on the needs of your project, you might prefer a more "business-friendly" test framework, such as FitNesse[63] or one of the BDD frameworks.

House your scenario tests in a business-friendly testing framework, such as a Behavior-Driven Development (BDD) framework.[64] BDD is an approach to agile testing that encourages developers, QA, and customers/BAs to work more closely together. To use a BDD framework for your scenario tests, you'll need to map each use case scenario to a BDD-style scenario. This isn't as difficult as it sounds, because the two are already pretty similar.

A BDD version of the Advanced Search use case scenario would look like this:

Given an AOI exists of "local" size

When the system enables the Advanced Search widget

And the user clicks the Advanced Search icon

And the user specifies Reservation Details of check-in today and check-out next week, 2 adults and 1 room

And the user selects Hotel Filter criteria

And the user clicks FIND

Then the system displays the Hotels on the map and List Widget

(In this context at least, And is syntactically equivalent to When.)[65]

Typically the BDD framework text-matches each line with a particular Step method (exactly how it does this depends on the framework). It then runs the Step methods in the sequence specified by the scenario, passing the appropriate values ("check-in," "check-out," number of adults and rooms, etc.) as arguments to the relevant Step methods.
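The matching step can be sketched in plain Java. The class below is a hypothetical, minimal stand-in for what a BDD framework does internally. It is not JBehave's actual API. Each step is registered with a keyword and a regular expression, the captured groups become the step's arguments, and "And" simply reuses the previous line's keyword. The step wording comes from the scenario above. The regexes, the StepMatcher name, and the runDemo() helper are our own assumptions.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical minimal step matcher; NOT the JBehave API, just the underlying idea. */
public class StepMatcher {

    /** A registered step: its keyword, a regex for the step text, and the code to run. */
    private static final class Step {
        final String keyword;
        final Pattern pattern;
        final Consumer<List<String>> action;

        Step(String keyword, String regex, Consumer<List<String>> action) {
            this.keyword = keyword;
            this.pattern = Pattern.compile(regex);
            this.action = action;
        }
    }

    private final List<Step> steps = new ArrayList<>();
    private String previousKeyword = "Given";

    public void register(String keyword, String regex, Consumer<List<String>> action) {
        steps.add(new Step(keyword, regex, action));
    }

    /** Runs one scenario line; "And" reuses the keyword of the line before it. */
    public void run(String line) {
        int space = line.indexOf(' ');
        if (space < 0) {
            throw new IllegalArgumentException("Not a step: " + line);
        }
        String keyword = line.substring(0, space);
        String text = line.substring(space + 1);
        if (keyword.equals("And")) {
            keyword = previousKeyword;
        }
        previousKeyword = keyword;
        for (Step step : steps) {
            Matcher m = step.pattern.matcher(text);
            if (step.keyword.equals(keyword) && m.matches()) {
                List<String> args = new ArrayList<>();
                for (int i = 1; i <= m.groupCount(); i++) {
                    args.add(m.group(i)); // captured values become the step's arguments
                }
                step.action.accept(args);
                return;
            }
        }
        throw new IllegalStateException("No step matches: " + line);
    }

    /** Registers three steps from the Advanced Search scenario, runs them, and records what each received. */
    public static List<String> runDemo() {
        List<String> log = new ArrayList<>();
        StepMatcher matcher = new StepMatcher();
        matcher.register("Given", "an AOI exists of \"(\\w+)\" size",
                a -> log.add("aoi=" + a.get(0)));
        matcher.register("When",
                "the user specifies Reservation Details of check-in (.+) and check-out (.+), (\\d+) adults and (\\d+) rooms?",
                a -> log.add("in=" + a.get(0) + ", out=" + a.get(1) + ", adults=" + a.get(2) + ", rooms=" + a.get(3)));
        matcher.register("When", "the user clicks (\\w+)",
                a -> log.add("click=" + a.get(0)));
        matcher.run("Given an AOI exists of \"local\" size");
        matcher.run("When the user specifies Reservation Details of check-in today and check-out next week, 2 adults and 1 room");
        matcher.run("And the user clicks FIND");
        return log;
    }

    public static void main(String[] args) {
        runDemo().forEach(System.out::println);
    }
}
```

A real framework adds a lot more, such as parameter type conversion, pending steps, and reporting. The core idea is the same, though: text-match a line, then pass the captured values to a method.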

Note

One similarity between the two styles is that the "Given" step at the start of a BDD scenario is usually equivalent to the initial "Display" step in an ICONIX use case. For example, "The system displays the Quick Search window" translates to "Given the system is displaying the Quick Search window."

You may have noticed already that only about half of the original use case is described here. That's because BDD scenarios tend to focus on the user steps, finishing up with a "Then" step that is the system's eventual response, the condition to test for. However, with ICONIX/DDT use case scenarios, pretty much every user step has a system response: click a button, the system responds. Type something in, the system responds. And so on. To bring the BDD scenario closer to an ICONIX-style use case scenario, almost every line should be followed by a "Then" that shows how the system responded and allows the test to confirm that this actually happened.

To generate a DDT-equivalent BDD scenario, each "When" step would be immediately followed by a counterpart "Then" system response. So the example BDD scenario, written in a more DDT-like style, would read as follows:

Given the system displays the Map Viewer

And an AOI exists of "local" size

Then the system enables the Advanced Search widget

When the user clicks the Advanced Search icon

Then the system expands the Advanced Search widget and populates fields with defaults

When the user specifies Reservation Details of check-in today and check-out next week, 2 adults and 1 room

Then the system checks that the dates are valid

When the user selects Hotel Filter criteria

And the user clicks FIND

Then the system displays the Hotels on the map and List Widget

It should then be a relatively straightforward task to map each test case to a specific BDD step.
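Step arguments such as "check-in today and check-out next week" are relative dates, so the step method has to turn them into actual dates. A scenario test that reads the system clock directly will behave differently depending on when it runs. One way to keep such a step deterministic is to inject a java.time.Clock. In this sketch, ReservationDates, the supported phrases, and the validity rules (check-in not in the past, check-out after check-in) are all assumptions made for illustration. The use case itself doesn't spell out what "valid" means.

```java
import java.time.Clock;
import java.time.Instant;
import java.time.LocalDate;
import java.time.ZoneOffset;

/** Hypothetical helper behind the "Reservation Details" and "dates are valid" steps. */
public class ReservationDates {

    /** Turns the relative phrases used in the scenario into dates, relative to the injected clock. */
    public static LocalDate resolve(String phrase, Clock clock) {
        LocalDate today = LocalDate.now(clock);
        switch (phrase) {
            case "today":
                return today;
            case "tomorrow":
                return today.plusDays(1);
            case "next week":
                return today.plusWeeks(1);
            default:
                throw new IllegalArgumentException("Unsupported date phrase: " + phrase);
        }
    }

    /** Assumed validity rules: check-in is not in the past, and check-out is after check-in. */
    public static boolean datesAreValid(LocalDate checkIn, LocalDate checkOut, Clock clock) {
        return !checkIn.isBefore(LocalDate.now(clock)) && checkOut.isAfter(checkIn);
    }

    public static void main(String[] args) {
        // A fixed clock makes "today" the same date on every run of the scenario test.
        Clock clock = Clock.fixed(Instant.parse("2010-06-01T12:00:00Z"), ZoneOffset.UTC);
        LocalDate checkIn = resolve("today", clock);
        LocalDate checkOut = resolve("next week", clock);
        System.out.println(checkIn + " -> " + checkOut + " valid=" + datesAreValid(checkIn, checkOut, clock));
        // prints: 2010-06-01 -> 2010-06-08 valid=true
    }
}
```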

At the time of writing, there's a paucity of BDD support for Flex developers. So we'll show the example here using the Java-based JBehave (which also happens to leverage JUnit, meaning that your JBehave-based scenario tests can be run on any build/test server that supports running JUnit tests).

Note

ICONIX-style "active voice" use cases often start with a screen being displayed. So we changed the opening part of the scenario to begin with displaying the Map Viewer. This results in a check being performed to verify that the system properly enables the Advanced Search widget.

Developers often shy away from testing GUI code, because it looks like the most difficult part of automated testing. In the past it certainly was, with heavyweight "robot"-style frameworks simulating mouse clicks at rigid X, Y screen coordinates. Naturally such frameworks were ridiculously fragile. Even a change in screen resolution or window decoration (anything that slightly offsets the X, Y coordinates) would break the entire suite of tests and require every single test to be redone.

GUI testing has come a long way in recent times, though. Swing developers have it easy with toolkits such as Abbot,[66] UISpec4J,[67] and FEST.[68] Swing testing is actually pretty straightforward even without an additional toolkit, because everything is accessible via the code and built-in methods such as button.doClick() are already available.
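Here's a minimal sketch of the doClick() point that uses only the standard Swing classes. The panel (a text field, a FIND button, and a result label) is hypothetical. doClick() fires the button's ActionListeners just as a real mouse click would, and no screen coordinates are involved. The interaction runs on the event dispatch thread via SwingUtilities.invokeAndWait, because Swing components aren't thread-safe.

```java
import java.lang.reflect.InvocationTargetException;
import java.util.concurrent.atomic.AtomicReference;
import javax.swing.JButton;
import javax.swing.JLabel;
import javax.swing.JTextField;
import javax.swing.SwingUtilities;

/** Hypothetical search panel, used only to show a programmatic click. */
public class SwingClickDemo {

    final JTextField cityField = new JTextField(20);
    final JButton findButton = new JButton("FIND");
    final JLabel resultLabel = new JLabel();

    SwingClickDemo() {
        // Stand-in "product code": clicking FIND echoes the search text into the label.
        findButton.addActionListener(e -> resultLabel.setText("Searching: " + cityField.getText()));
    }

    /** Drives the panel as a user would, on the event dispatch thread, and returns what the UI shows. */
    public static String searchFor(String city) {
        AtomicReference<String> shown = new AtomicReference<>();
        try {
            SwingUtilities.invokeAndWait(() -> {
                SwingClickDemo panel = new SwingClickDemo();
                panel.cityField.setText(city);
                panel.findButton.doClick(); // fires the same ActionEvent as a real mouse click
                shown.set(panel.resultLabel.getText());
            });
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (InvocationTargetException e) {
            throw new IllegalStateException(e.getCause());
        }
        return shown.get();
    }

    public static void main(String[] args) {
        System.out.println(searchFor("Seattle")); // prints "Searching: Seattle"
    }
}
```

The components never need to be shown on screen, which is why this style of test also runs on a headless build server.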

.NET developers may like to check out NUnitForms,[69] an NUnit extension for Windows Forms testing.

Flex developers also have plenty of options, e.g., FlexMonkey[70] and RIATest.[71]

And finally, web developers are probably already familiar with the deservedly popular Selenium web testing framework.[72] The ever-changing nature of web development—where the "delivery platform of the month" is usually specific versions of half a dozen different web browsers, and IE 6, but next month could involve a whole new set of browser versions—means that automated scenario testing is more important than ever for web developers. Swapping a different browser version into your tests provides a good early indication of whether a browser update will cause problems for your application.

Integration tests can be problematic. Don't underestimate how much.

Unit testing—"testing in the small"—is really about matching up the interface between the calling code and the function being tested. Interfaces are inherently tricky, because they can change. So what happens if you "zoom out" from this close-in picture, and start to look at remote interfaces? It's easy (and rather common) to picture a remote systems environment like the one in Figure 11-3. Client code makes a call out over the network to a remote interface, retrieves the result, and (in the case of a test) asserts that the result is as expected.

Unfortunately, the reality is more like Figure 11-4.

The same problems that take place between code interfaces during unit or controller testing become much bigger, and a whole new breed of problems is also introduced. Here are some of the typical issues that you may well encounter when writing remote integration tests in a shared environment:

We've been looking at some of these issues in the course of this chapter, presenting ways to mitigate each one. Let's have a quick run-through of the remaining issues here.

Note

We hope that, by the end of the chapter, you'll agree with us that the problems we've just described aren't just limited to integration tests—they're typical of systems development in general, and integration tests actually highlight these problems earlier, saving you time overall.

Socket code that works perfectly on one PC with local loopback might fail unexpectedly when connecting over a network, simply because communication takes longer. The programmer might not have thought to include a "retry" function, because the code never timed out when he ran it locally. Similarly, he may not have included buffering, and instead simply read data until no more bytes were available (when more could still be on the way), because locally the data always arrived all at once.

This is a good example of where struggling to create an integration test (and the test environment, with network-deployed middleware, etc.) can actually save time in the long run. Encountering these real issues in an end-to-end test means they'll be addressed during development, rather than later when the allegedly "finished" system is deployed, and discovered not to be working.
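You can reproduce the buffering problem without a network at all. The sketch below assumes a simple wire format: a 4-byte length prefix followed by a UTF-8 payload. It uses a hypothetical TrickleInputStream that hands back at most a few bytes per read() call, the way a slow network link might. The naive reader assumes that one read() returns the whole message, which tends to hold on loopback. The robust reader uses DataInputStream.readFully(), which keeps reading until the declared number of bytes has arrived or throws EOFException. The sketch covers only the buffering half of the problem; retries and timeouts need their own handling.

```java
import java.io.ByteArrayInputStream;
import java.io.DataInputStream;
import java.io.FilterInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

public class FragmentedReadDemo {

    /** Simulates a slow network link: each read(byte[], int, int) returns at most 'chunk' bytes. */
    static final class TrickleInputStream extends FilterInputStream {
        private final int chunk;

        TrickleInputStream(InputStream in, int chunk) {
            super(in);
            this.chunk = chunk;
        }

        @Override
        public int read(byte[] b, int off, int len) throws IOException {
            return super.read(b, off, Math.min(len, chunk));
        }
    }

    /** Assumed wire format: 4-byte big-endian length, then the UTF-8 payload. */
    static byte[] frame(String message) {
        byte[] payload = message.getBytes(StandardCharsets.UTF_8);
        return ByteBuffer.allocate(4 + payload.length).putInt(payload.length).put(payload).array();
    }

    /** Naive: trusts a single read() to deliver the whole payload (usually true on loopback). */
    static String naiveRead(InputStream in) throws IOException {
        DataInputStream data = new DataInputStream(in);
        byte[] buf = new byte[data.readInt()];
        int n = data.read(buf); // BUG: may return fewer than buf.length bytes
        return new String(buf, 0, Math.max(n, 0), StandardCharsets.UTF_8);
    }

    /** Robust: keeps reading until the declared number of bytes has arrived. */
    static String readMessage(InputStream in) throws IOException {
        DataInputStream data = new DataInputStream(in);
        byte[] buf = new byte[data.readInt()];
        data.readFully(buf); // loops internally; throws EOFException if the stream ends early
        return new String(buf, StandardCharsets.UTF_8);
    }

    /** Reads the same framed message both ways over a link that delivers 'chunk' bytes at a time. */
    public static String[] demo(String message, int chunk) {
        try {
            String naive = naiveRead(new TrickleInputStream(new ByteArrayInputStream(frame(message)), chunk));
            String robust = readMessage(new TrickleInputStream(new ByteArrayInputStream(frame(message)), chunk));
            return new String[] {naive, robust};
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        String[] results = demo("Hotel Collection: 42 hotels", 3);
        System.out.println("naive:  " + results[0]); // naive:  Hot
        System.out.println("robust: " + results[1]); // robust: Hotel Collection: 42 hotels
    }
}
```

With a large chunk size (say, 1024) both readers return the full message. This is exactly why the naive version passes every local test and then fails once it's deployed across a real network.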

We touched on this in the previous section, but the problem of a database changing (tables being dropped or renamed, new columns added/renamed/removed, or their type being changed, foreign keys randomly removed, and so on) seems to have a particularly harsh effect on automated tests. Each time the database is changed, it's not just the product code that needs to be updated, but the tests that assume the previous structure as well.

Keeping your tests "black box" can help a lot here. In fact, as you'll see later, the only real way for integration tests to be viable is to keep them as "black box" as possible. Because their scope is much broader than myopic unit tests, they're susceptible to more changes at each juncture in the system they pass over. So the fewer assumptions about the system under test that are placed in the test code, the more resilient your integration tests will be to changes. In the case of database changes, this means not putting any "knowledge" of the database at all (column names, table names, etc.) in the tests, but making sure everything goes through a business domain-level interface.
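Here's a minimal sketch of what "going through a business domain-level interface" might look like. HotelSearch, Hotel, and checkMinStarFilter() are hypothetical names. The point is that the check is phrased entirely in domain terms, so a renamed table or column changes only the implementation behind the interface, not the test. The in-memory lambda in main() exists only so the sketch runs on its own. In an actual integration test you'd pass in the production implementation, wired to the test database.

```java
import java.util.List;
import java.util.stream.Collectors;

public class BlackBoxHotelTest {

    /** Domain-level result: the test deals in hotels, not rows, tables, or column names. */
    public static final class Hotel {
        final String name;
        final int starRating;

        public Hotel(String name, int starRating) {
            this.name = name;
            this.starRating = starRating;
        }
    }

    /** Hypothetical business-domain interface; any SQL lives behind it, in product code. */
    public interface HotelSearch {
        List<Hotel> findHotels(String areaOfInterest, int minStars);
    }

    /** The black-box check: it knows only the HotelSearch contract. */
    public static void checkMinStarFilter(HotelSearch search) {
        List<Hotel> hotels = search.findHotels("local", 4);
        if (hotels.isEmpty()) {
            throw new AssertionError("expected at least one hotel rated 4 stars or more");
        }
        for (Hotel h : hotels) {
            if (h.starRating < 4) {
                throw new AssertionError(h.name + " has only " + h.starRating + " stars");
            }
        }
    }

    public static void main(String[] args) {
        // Stand-in implementation so the sketch runs on its own.
        List<Hotel> data = List.of(new Hotel("Harbor Inn", 3), new Hotel("Grand Plaza", 5));
        HotelSearch fake = (aoi, minStars) -> data.stream()
                .filter(h -> h.starRating >= minStars)
                .collect(Collectors.toList());
        checkMinStarFilter(fake);
        System.out.println("min-star filter check passed");
    }
}
```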

Evolutionary development (as "agile" used to be known) has become rather popular, because it gives teams the excuse to design the system as they go along, refactoring services, interfaces, and data structures on the way.[73] While the opposite antipattern (the rigid refusal to change anything once done) is also bad, being too fluid or agile can be highly problematic in a multi-team project. Team A publishes an interface that is consumed by team B's client module. Then team A suddenly changes their interface, breaking team B's module, because team A had somehow forgotten that other teams were using their public interface. It happens all the time, and it slows development to a crawl because teams only find out that their (previously working) system has broken at the most inopportune moments.

Again, this will break an automated test before it breaks the "real" deployed product code. This may be regarded as an example of why automated integration tests are so fragile, but, in fact, it's another example of the automated tests providing an early warning that the real system will break if it's deployed in its current state.

It's to be expected that the remote system is under construction just as your client system is. So the team developing the other system is bound to have their fair share of teething troubles and minor meltdowns. A set of automated integration tests will find a problem straightaway, causing the tests to break, when otherwise the other team might have quietly fixed the problem before anyone even noticed. So automated tests can amplify integration issues, forcing teams to address a disproportionately high number of problems. This can be viewed as a good or a bad thing depending on how full or empty your cup happens to be on that day. On the positive side, bugs will probably be fixed a lot quicker if they're discovered and raised much sooner (i.e., as soon as one of your tests breaks).

A "cloudy day" isn't a tangible problem—in fact, perhaps we're just feeling a bit negative after listing all these other problems! But there are definitely days when nothing on an agile project seems to fit together quite right—you know, those days when the emergent architecture emerges into a Dali-like dreamscape. Whole days can be wasted trying to get client module A to connect with and talk to middleware module B, Figure 11-5 being a case in point! If client module A happens to be test code, it's tempting to give up, deeming the test effort "not worth it," and return to the product code—storing up the integration issues for later.

With these problems in mind, it could be easy to dismiss integration tests as just too difficult to be worth bothering with. But that would be to miss their point—and their intrinsic value—entirely.

Integrating two systems is one of the most problematic areas of software development. Because it's so difficult, automated integration tests are also difficult to write and maintain—not least because they keep breaking, for exactly the same reasons remote code keeps breaking. So many teams simply avoid writing integration tests, pointing out their problematic nature as the reason. Kind of ironic, huh?

As we demonstrated earlier in this chapter with the Hotel Search controller-level test, the real value of integration tests is in providing a consistent, relentless checking of how your code matches up with live, external interfaces. External interfaces are, by their nature, outside your team's control; so there's value in an ever-present "breaker switch" that trips whenever an interface has been updated with a change that breaks your code.

A breaking integration test also immediately pinpoints the cause of a problem. Otherwise, the error might manifest with a seemingly unrelated front-end error or oddity—a strange message or garbled data appearing in your web application—which would take time to trace back to some random change made by one of your service vendors, or by another team that you're working with. The integration test, conversely, will break precisely because the external interface has done something unexpected.
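One way to make a breaking integration test pinpoint the cause is to have it check the specific parts of the external interface your code relies on, and report each mismatch by name. In this sketch the response is assumed to have already been parsed into a Map, using whatever JSON or XML library you happen to use. The expected field names and types are hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class InterfaceContractCheck {

    /** Fields (and their expected Java types) that our client code relies on; hypothetical names. */
    static final Map<String, Class<?>> EXPECTED = new LinkedHashMap<>();
    static {
        EXPECTED.put("hotelId", String.class);
        EXPECTED.put("name", String.class);
        EXPECTED.put("starRating", Integer.class);
        EXPECTED.put("latitude", Double.class);
        EXPECTED.put("longitude", Double.class);
    }

    /** Returns one precise message per broken expectation; an empty list means the contract holds. */
    public static List<String> violations(Map<String, Object> response) {
        List<String> problems = new ArrayList<>();
        for (Map.Entry<String, Class<?>> field : EXPECTED.entrySet()) {
            Object value = response.get(field.getKey());
            if (value == null) { // absent, or explicitly null
                problems.add("missing field '" + field.getKey() + "'");
            } else if (!field.getValue().isInstance(value)) {
                problems.add("field '" + field.getKey() + "' is " + value.getClass().getSimpleName()
                        + ", expected " + field.getValue().getSimpleName());
            }
        }
        return problems;
    }

    public static void main(String[] args) {
        // Simulates a provider that renamed "starRating" to "stars" and now sends latitude as a string.
        Map<String, Object> response = new LinkedHashMap<>();
        response.put("hotelId", "H-1001");
        response.put("name", "Grand Plaza");
        response.put("stars", 5);
        response.put("latitude", "47.6");
        response.put("longitude", -122.3);
        violations(response).forEach(System.out::println);
    }
}
```

Run against the simulated response in main(), it prints two lines: missing field 'starRating' and field 'latitude' is String, expected Double. That message tells you straight away what the other team changed. Compare that with a garbled hotel list turning up somewhere in the UI.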

We'll round off the chapter by summing up some of the key points (and adding a couple of new ones) to think about while writing integration tests. Here is our list:

Create a test environment (test database, etc.) to run the tests in. This should be as close as possible to the live release environment. A frequent data refresh from the live system will keep the test database fresh and realistic. Multiple test environments may be necessary, but try not to go crazy as they all need to be maintained. However, you might want one database that can be torn down and reinstated regularly to provide a clean environment every time, plus a database with a dataset similar to the live system, to allow system, load, and performance testing.

Start each test scenario with a script that creates a "test sandbox" containing just the data needed for that test. A common reason given for not running integration tests against a real database is that it would take too much effort to "reset" the database prior to each run of the test suite. In fact, it's much easier to simply have each test scenario set up just the data it needs to run the scenario.

Don't run a tear-down script after the test. That might sound odd, but if a test has to clean up the database after itself so that it can run successfully next time, then it totally relies on its cleanup script having run successfully the previous time. If the cleanup failed last time, then the test can't run again (at least, not without some cursing, hunting for the problem, and figuring out which cleanup script to run manually to get the tests up and running again). It's much easier if you consistently just run a "setup script" at the start of each test scenario.

Don't run the scenario tests as part of your regular build, or you'll end up with a very fragile build process. The tests will be making calls to external systems, and will be highly dependent on test data being in a specific state, not to mention other unpredictable factors such as two people running the same tests at the same time, against the same test database. It's great for unit and controller tests to be part of your automated build because their output is always deterministic, but the scenario tests, not so much. That said, you still want the scenario tests to be run regularly. If they're not set up to run automatically, chances are they'll be forgotten about, and left to gradually decay until it would take too much effort to get them all passing again. So a good "middle ground" is to schedule them to run automatically on a server, either hourly or (at a minimum) once per night. Make sure the test results are automatically emailed to the whole team.

Think of scenario tests as "black box" tests, because they don't "know" about the internals of the code under test. Conversely, unit tests are almost always "white box" because they often need to set internal parameters in the code under test, or substitute services with mock objects. Controller tests, meanwhile, are "gray box" tests: it's preferable for them to be black box, but occasionally they do need to delve into the code under test.
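The "setup script, no tear-down" advice can be sketched as follows. A TreeMap stands in for the shared test database. The key scheme (TEST-scenario-owner-) is an assumption. Because every record is scoped by scenario and by the person or machine running it, a rerun first wipes its own leftovers, even if the previous run crashed halfway. It also means that two people running the same scenario at the same time don't trample each other's data. A real version would issue the equivalent DELETE and INSERT statements, or service calls, against the test environment.

```java
import java.util.Map;
import java.util.TreeMap;

public class ScenarioSandbox {

    /** Stand-in for the shared test database: key -> record. */
    private final Map<String, String> db = new TreeMap<>();

    /**
     * Setup-only sandbox: removes anything left under this scenario's prefix by an earlier
     * run, then inserts exactly the data the scenario needs. No tear-down is ever required.
     */
    public String setUp(String scenario, String owner) {
        String prefix = "TEST-" + scenario + "-" + owner + "-";
        db.keySet().removeIf(key -> key.startsWith(prefix));
        db.put(prefix + "hotel-1", "Grand Plaza, 5 stars, local AOI");
        db.put(prefix + "hotel-2", "Harbor Inn, 3 stars, local AOI");
        return prefix;
    }

    public int countRecords(String prefix) {
        return (int) db.keySet().stream().filter(k -> k.startsWith(prefix)).count();
    }

    public int totalRecords() {
        return db.size();
    }

    public static void main(String[] args) {
        ScenarioSandbox sandbox = new ScenarioSandbox();
        String alice = sandbox.setUp("advancedSearch", "alice");
        sandbox.setUp("advancedSearch", "alice");             // a rerun: still two records, not four
        String bob = sandbox.setUp("advancedSearch", "bob");  // a concurrent user gets a separate sandbox
        System.out.println(sandbox.countRecords(alice) + " " + sandbox.countRecords(bob) + " " + sandbox.totalRecords());
    }
}
```

Running alice's setup twice still leaves two records under her prefix, and bob's sandbox adds two more, so main() prints 2 2 4. Leftover sandboxes from abandoned runs are harmless, and the regular refresh of the test database clears them out.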

Alice was just beginning to think to herself, "Now, what am I to do with this creature when I get it home?" when the baby grunted again, so violently, that she looked down into its face in some alarm. This time there could be no mistake about it: it was neither more nor less than a pig, and she felt that it would be quite absurd for her to carry it further.[74]

In this chapter we've illustrated some of the main issues you'll encounter while writing integration tests, but countered these with the point that it's those same issues that actually make integration testing so darned important. While integration testing is a pig, it's an important pig that should be put on a pedestal (preferably a reinforced one).

By "forcing the issue" and addressing integration issues as soon as they arise—i.e., as soon as an integration test breaks, pinpointing the issue—you can prevent the problems from piling up until they're all discovered later in the project (or worse, discovered by irate end-users).

We also demonstrated some key techniques for writing integration tests, DDT-style: first, by identifying the type of test to write by looking for patterns in your conceptual design diagrams, and then by writing the tests at varying levels of granularity. Unit-level and controller-level integration tests help to line the seams between your code and external interfaces, while scenario-level integration tests provide "end-to-end" testing, automating the "use case scenario thread" test scripts that we described in Chapter 7.

In the next chapter, we swap the "integration testing telescope" with an "algorithm testing microscope," and change the scope entirely to look at finely detailed, sub-atomic unit tests.

[60] http://unixwiz.net/techtips/sql-injection.html

[61] www.sans.org/top-cyber-security-risks

[62] One side-effect that we should point out is that we've just transformed the test from a "black box" test to a "white box" (or "clear box") test—because the test code now "knows" that HotelSearchClient uses an HttpClient instance internally. As we discussed in Chapters 5 and 6, this is sometimes a necessary trade-off in order to isolate the code under test, but it does mean that your code is that bit less maintainable—because a change inside the code, e.g., replacing HttpClient with some other toolkit, or even just an upgraded version of HttpClient with its own API change, means that the test must also be updated.

[63] See http://fitnesse.org.

[64] See http://behaviour-driven.org/Introduction.

[65] "And being equivalent to when is very curious, indeed," said Alice. "It's almost as if 'and are they equivalent?' is the same as 'when are they equivalent'? And how do I say 'and when are they equivalent?'"

[66] http://abbot.sourceforge.net/doc/overview.shtml

[67] www.uispec4j.org

[68] http://fest.easytesting.org/swing/wiki/pmwiki.php

[69] http://nunitforms.sourceforge.net/

[70] www.gorillalogic.com/flexmonkey

[71] www.riatest.com

[72] http://seleniumhq.org/

[73] Our favorite example of this is Scott Ambler's epic work on "Agile Database Techniques" (Wiley, October 2003) much beloved by DBAs everywhere. And our favorite line: "Therefore if data professionals wish to remain relevant they must embrace evolutionary development." Riiiiight...