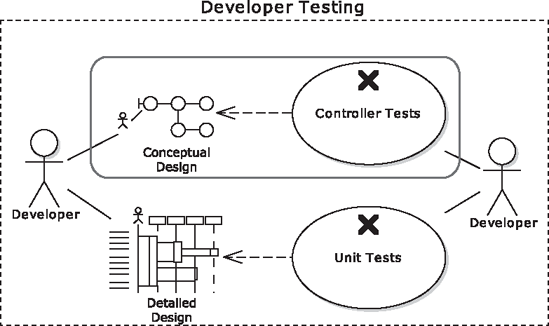

As you saw in Chapter 5, unit testing doesn't have to involve exhaustively covering every single line of code, or even every single method, with tests. There's a law of diminishing returns (and increasing difficulty) as you push the code coverage percentage ever higher. By taking a step back and looking at the design on a broader scale, it's possible to pick out the key areas of code that act as input/output junctures, and focus the tests on those areas.

But what if you could take an even smarter approach? What if, rather than manually picking out the "input/output junctures" by sight, you could identify these areas systematically, using a conceptual design of the system? This would give your tests more leverage: proportionately fewer tests covering greater ground, but with less chance of missing key logic.

DDT provides controller tests for exactly this purpose. Controller tests are just like unit tests, but broader-grained: the controller tests are based on "logical software functions" (we'll describe that term in just a moment).

Meanwhile, the more traditional, finer-grained unit tests do still have a place: they're still perfect for testing algorithmic code and business logic, which is often at the core of a system and should be covered with tests from all angles, like soldiers surrounding a particularly dangerous alien rabbit.[34] But you should find that you need to write far fewer unit tests if you write the controller tests first.

Whereas a unit test may be "concerned" with one software method, a controller test operates on a small group of closely related methods that together perform a useful function. You can do controller testing with DDT because the ICONIX Process supports a "conceptual design" stage, which uses robustness diagrams as an intermediate representation of a use case at the conceptual level. The robustness diagram is a pictorial version of a use case, with individual software behaviors ("controllers") linked up and represented on the diagram.

Note

Developers indoctrinated with the TDD mindset are certainly welcome to pursue full and rigorous comprehensive unit testing until they've passed out at the keyboard. But those developers who would like to get 95% of the benefits for 25% of the work should find this chapter to be quite useful! If you've opened this book looking for the "test smarter, not harder" part, you'll want to pay close attention to this chapter.

As with the unit tests in Chapter 5, this chapter is chiefly about controller tests that "isolate" the code being tested: that is, they substitute external system calls with "pretend" versions, so as to keep the tests focused on the software functions they're meant to exercise. Integration tests, where the code is allowed to call out to external systems, are still incredibly important, as they test a fragile and inherently error-prone part of any system, but they're also harder to write and maintain. Without lessening their importance, we treat integration tests as an advanced topic and cover them in Chapter 11.

Note

Traditionally, "integration tests" involve testing groups of units that are all part of the system under test, as well as testing external interfaces—i.e., not just tests involving external systems. With DDT, the former is covered by controller tests, so we've reserved the term "integration test" to mean purely tests that involve code calling out to external systems.

Let's now start with our top ten controller testing "to-do" list, which we'll base the whole chapter around, following the Mapplet Advanced Search use case for the examples.

When you're exploring your project's conceptual design and writing the corresponding controller tests, be sure to follow these ten "to-do" items.

10. Start with a robustness diagram that shows the conceptual design of your use case.

9. Create a separate test case for each controller.

8. For each test case, define one or more test scenarios.

7. Fill in the Description, Input, and Acceptance Criteria fields for each test scenario. These will be copied right into the test code as comments, making the perfect "test recipe" to code the tests from.

6. Generate JUnit, FlexUnit, or NUnit test classes from your test scenarios.

5. Implement the tests, using the test comments to stay on course.

4. Write code that's easy to test.

3. Write "gray box" controller tests.

2. String controller tests together, just like molecular chains, and verify the results at each step in the chain.

1. Write a separate suite of controller-level integration tests.

Begin your journey into controller testing by creating a robustness diagram showing the conceptual design of your use case. In this chapter we'll follow along with the Advanced Search use case from the Mapplet project. In Chapter 5 we covered the server-side part of this interaction with unit tests. In this chapter we'll cover the client-side, focusing on the "Dates are correct?" controller.

To recap, here's the use case that we'll be working from in our example:

- BASIC COURSE:

The system enables the Advanced Search widget when an AOI exists of "local" size. The user clicks the Advanced Search icon; the system expands the Advanced Search widget and populates Check-in/Check-out fields with defaults.

The user specifies their Reservation Detail including check-in and check-out dates, number of adults, and number of rooms; the system checks that the dates are valid.

The user selects additional Hotel Filter criteria including desired amenities, price range, star rating, hotel chain, and the "hot rates only" check box.

The user clicks "Find." The system searches for hotels within the current AOI, and filters the results according to the Hotel Filter Criteria, producing a Hotel Collection.

Invoke Display Hotels on Map and Display Hotels on List Widget.

- ALTERNATE COURSES:

Check-out date prior to check-in date: The system displays the user-error dialog "Check-out date prior to Check-in date."

Check-in date prior to today: The system displays the user-error dialog: "Check-in date is in the past."

System did not return any matching hotels: The system displays the message "No hotels found."

"Show Landmarks" button is enabled: The system displays landmarks on the map.

User clicks the Clear button: Refine Hotel Search clears all entries and populates check-in/check-out fields automatically with defaults.

If you're wondering how to get from this kind of behavioral description to working source code, that's what the ICONIX Process is all about. DDT provides the corollary to this process: as you analyze the use case and turn it into a conceptual design, you create controller tests based on the design. The controller tests provide a duality: they're like yin to the conceptual design's yang. But what is a conceptual design, and how do you create controller tests from it? Let's look at that next.

The ICONIX Process supports a "conceptual design" stage, which uses robustness diagrams as an intermediate representation of a use case at the conceptual level. The robustness diagram is a pictorial version of a use case, with individual software behaviors ("controllers") linked up and represented on the diagram.[35] Figure 6-2 shows an example robustness diagram created for the Advanced Search use case. Notice that each step in the use case's description (on the left) can be traced along the diagram on the right.

A robustness diagram is made up of some key pictorial elements: boundary objects (the screens or web pages), entities (classes that represent data, and, we hope, some behavior too), and controllers (the actions, or verbs, or software behavior). Figure 6-1 shows what each of these looks like:

Note

In Flex, MXML files are the boundary objects. The Advanced Search widget will be mapped out in AdvancedSearchWidget.mxml. It's good OO design to keep business logic (e.g., validation) out of the boundary objects, and instead place the behavior code where the data lives—on the entity objects. In practical terms, this means creating a separate ActionScript class for each entity, and mapping controllers to ActionScript functions.[36]

So in Figure 6-2, which follows, the user interacts with the Advanced Search widget (boundary object, i.e., MXML). Clicking the "Find" button triggers the "Search for hotels and filter results" controller. Similarly, selecting check-in and check-out dates triggers the creation of a Reservation Detail object (entity) and validation of the reservation dates that the user entered. You should be able to read the use-case scenario text while following along the robustness diagram. Because all the behavior in the scenario text is also represented in the controllers on the diagram, it is an excellent idea to write a test for each controller... done consistently, this will provide complete test coverage of all the system behavior specified in your use cases!

Tip

When drawing robustness diagrams, don't forget to also map out the alternate courses, or "rainy day scenarios." These represent all the things that could go wrong, or tasks that the user might perform differently; often the bulk of a system's code is written to cover alternate courses.

Robustness diagrams can seem a little alien at first (we've heard them referred to as "Martian"), but as soon as you realize exactly what they represent (and what their purpose is), you should find that they suddenly click into place. And then you'll find that they're a really useful tool for turning a use case into an object-oriented design and accompanying set of controller tests. It's sometimes useful to think of them like an object-oriented "stenographer's shorthand" that helps you to construct an object model from a use case.

It's easiest to view a robustness diagram as somewhere between an activity diagram (aka flowchart) and a class diagram. Its main purpose is to help you create use cases that can be designed from, and to take the first step in creating an OO design—"objectifying" your use case. Not by coincidence, the same process that turns your use case into a concrete, unambiguous, object-oriented description of software behavior is also remarkably effective at identifying the "control points" in your design that want to be tested the most.

To learn about robustness diagrams in the context of the ICONIX Process and DDT, we suggest you read this book's companion volume, Use Case Driven Object Modeling with UML: Theory and Practice.

Figure 6-2. Robustness diagram for the Advanced Search use case (shading indicates alternate course-driven controllers)

Notice that the use case Basic Course starts with the system displaying the Advanced Search widget; this is reflected in the diagram with the Display widget controller pointing to the Advanced Search widget boundary object. Display code tends to be non-trivial, so it's important for it to be accounted for. This way you'll also end up with a controller test for displaying the window.

One of the major benefits of robustness analysis is disambiguation of your use-case scenario text. This will not only make the design stage easier, it'll also make the controller tests easier to write. Note that the acceptance tests (which we cover in Chapters 7 and 8) shouldn't be affected by these changes, as we haven't changed the intent of the use case, or the sequence of events described in the scenarios from an end-user or business perspective. All we've really done is tighten up the description from a technical, or design, perspective.

For each controller, create a single test case.

Take another look at the controllers that we created in the previous step (see Figure 6-2). Remember from Figure 6-1 that the controllers are the circles with an arrow at the top: they represent the logical functions, or system behavior (the verbs/actions). When you create the detailed design (as you did in Chapter 5), each of these "logical functions" is implemented as one or more real or "physical" software functions (see Figure 6-3). For testing purposes, the logical function is close enough to the business to be a useful "customer-level" test, and close enough to the real code to be a useful design-level test—sort of an über unit test.

Figure 6-3. During detailed design, the logical functions are mapped to "real" implemented functions or methods. They're also allocated to classes. In the conceptual design we simply give the function a name.

Note

Figure 6-3 illustrates why controller testing gives you more "bang for your buck" than unit testing: it takes less test code to cover one controller than to individually cover all the associated fine-grained (or "atomic") software methods. But the pass/fail criteria are essentially the same. You do, however, always have the option to test at the atomic level. We're not saying "don't test at the atomic level"; it's always the programmer's choice whether to test at the physical or logical levels. If you're running out of time with your unit testing[37], it's good to know that you do at least have all the logical functions covered with controller tests.

When each controller begins executing, you can reasonably expect the system to be in a specific state. And when the controller finishes executing, you can expect the system to have moved on, and for its outputs to be in a predictable state given those initial values. The controller tests verify that the system does indeed end up in that predicted state after each controller has run (given the specific inputs, or initial state).

Let's now create some test cases for the controllers in our robustness diagram. To do this in EA, use the Agile/ICONIX add-in (as in Chapter 5). Right-click the diagram and choose Create Tests from Robustness (see Figure 6-4).

The new test cases are shown in Figure 6-5. The original controllers (from the robustness diagram) are shown on the left; each one now has its own test case (shown on the right).

Each test case has one scenario, "Default Run Scenario." You'll want to add something more interesting and specific to your application's behavior; so let's start to add some new test scenarios.

Take each test case that you have, and define one or more scenarios that exercise it. For the example in this chapter, we'll zero in on the "Dates are correct?" controller, and its associated "Dates are correct?" test case. Before we create the test scenarios for the Mapplet, let's pause for a moment to think about what a test scenario actually is, and the best way to go about identifying it.

Individual test scenarios are where you put one juncture in your code through a number of possible inputs. If you have a test case called "Test Validate Date Range," various test scenarios could be thrown at it, e.g., "Check-in date is in the past," "Check-out date is in the past," or "Check-out date is earlier than the check-in date."

Thinking up as many test scenarios as possible is a similar thought process to use-case modeling, when looking for alternate courses. Both are brainstorming activities in which you think: "What else could happen?" "Then what else?" "Anything else?" And you keep on going until the well is dry. The main differences between test scenarios and use-case scenarios (alternate courses) are the following:

So with test scenarios you're often concerned with trying different sorts of boundary conditions.

Note

Remember that controllers are logical software functions. Each logical function will map to one or more "real" code functions/methods.

Another way to view controller test scenarios is that they're a way of specifying a contract for a software function. In other words, given a set of inputs, you expect the function to return this specific value (assuming the inputs are valid), or to throw an exception if the inputs are invalid.

There tend to be a finite number of reasonable inputs into a software function, so the inputs can be readily identified by thinking along these lines:

What are the legal (or valid, expected) input values?

What are the boundaries (the minimum and maximum allowed values)?

Will the function cope with "unexpected" values? (e.g., for a date range, what if the "to" date is before the "from" date?)

What if I pass in some "curve ball" values (null, empty Strings, zero, −1, a super-long String)?

If the function takes an array of values, should the ordering of the values make a difference?
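As a rough illustration of the questions above, here is a minimal sketch in Java (chosen because JUnit is one of the frameworks this chapter targets; the chapter's own listings are in ActionScript). The `DateRangeContract` class, its `isValidRange` method, and the rule that a null date is rejected with an exception are all assumptions made up for this example, not part of the Mapplet code.

```java
import java.time.LocalDate;

// Hypothetical function under test: a range is valid when both dates are
// present and the "to" date is strictly after the "from" date.
class DateRangeContract {

    static boolean isValidRange(LocalDate from, LocalDate to) {
        if (from == null || to == null) {
            // Assumed contract: missing input is an error, not just "invalid".
            throw new IllegalArgumentException("both dates are required");
        }
        return to.isAfter(from);
    }

    // Helper: true if the call rejects its input by throwing.
    static boolean rejects(LocalDate from, LocalDate to) {
        try {
            isValidRange(from, to);
            return false;
        } catch (IllegalArgumentException expected) {
            return true;
        }
    }

    public static void main(String[] args) {
        LocalDate day = LocalDate.of(2024, 2, 28); // arbitrary fixed date

        // Legal, expected input
        System.out.println(isValidRange(day, day.plusDays(7)));  // true
        // Boundary: the shortest legal range
        System.out.println(isValidRange(day, day.plusDays(1)));  // true
        // Boundary: the same day on both sides
        System.out.println(isValidRange(day, day));              // false
        // "Unexpected" value: "to" date before "from" date
        System.out.println(isValidRange(day, day.minusDays(1))); // false
        // "Curve ball" value: null
        System.out.println(rejects(null, day));                  // true
    }
}
```

Each call corresponds to one of the questions in the list, so each would become its own test scenario rather than one combined test.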

Tip

Remember to add test scenarios that also fall outside the basic path. If you're writing a high throughput, low latency table with thousands of rows of data, everyone will be focused on testing its scalability and responsiveness under heavy load. So remember to also test it with just one or two rows, or even zero rows of data. You'd be surprised how often such edge cases can trip up an otherwise well-tested system.

Naturally, you may well discover even more alternate paths while brainstorming test scenarios. When you discover them, add 'em into the use case... this process is all about comprehensively analyzing all the possible paths through a system. Discovering new scenarios now is far less costly than discovering them after you've completed the design and begun coding. That said, it makes sense to place a cap on this part of the process. It's to be expected that you won't discover every single possible eventuality or permutation of inputs and outputs. Gödel's incompleteness theorem suggests (more or less) that all possible eventualities cannot possibly be defined. So if it can't logically be done for a finite, internally consistent, formal system, then a team stands little chance of completely covering something as chaotic as real life. When you're identifying test scenarios, you'll recognize the law of diminishing returns when it hits, and realize that you've got plenty of scenarios and it's time to move on.[38]

When defining test scenarios, it's worth spending time making sure each "contract" is basically sound:

Soundness is much more important than completeness, in the sense that more harm is usually done if a wrong statement is considered correct, than if a valid statement cannot be shown.[39]

It's worth clarifying exactly where we stand on this point:

You should spend the time needed to thoroughly analyze use-case scenarios, identifying every alternate course or thing that can go wrong that you and the project stakeholders can possibly think of.

You should spend some time analyzing test scenarios, identifying as many as you can think of within a set time frame. But put more effort into checking and making sure the test scenarios you have are actually correct, than in thinking up new and ever more creative ones.

As there's a finite number of reasonable inputs into a software function, it makes sense to limit the time spent on this particular activity.

Now that we've discussed some of the theory about creating test scenarios, let's add some to the Mapplet's "Dates are correct?" test case.

If you bring up the Testing view (press Alt+3) and click the first test case, you can now begin to add individual scenarios to each test case (see Figure 6-6).

As you can see in Figure 6-6, we've replaced the Default Run scenario with four of our own scenarios. Each one represents a particular check that we'll want the code to perform, in order to validate that the dates are correct.

You may be wondering about the tabs running along the bottom of the screen in Figure 6-6: Unit, Integration, System, Acceptance, and Scenario. We've put the controller test scenarios on the Unit tab, as controller tests essentially are just big ol' unit tests. When it comes to writing the code for them and running them, most of the same rules apply (except for the white box/gray box stuff, which we'll discuss elsewhere in this chapter).

If you look at Figure 6-6, each test scenario has its own Description, Input, and Acceptance Criteria fields. These define the inputs and the expected valid outputs (acceptance criteria) that we've been banging on about in this chapter. It's worth spending a little time filling in these details, as they are really the crux of each test scenario. In fact, the time spent doing this will pay back by the bucket-load later. When EA generates the test classes, the notes that you enter into the Description, Input, and Acceptance Criteria fields will be copied right into the test code as comments, making the perfect "test recipe" from which to implement the tests.

Table 6-1 shows the details that we entered into these fields for the "Dates are correct?" test case.

Table 6-1. Test Scenario Details for the "Dates Are Correct?" Controller/Test Case

| Scenario | Description | Input | Acceptance Criteria |
|---|---|---|---|
| Check-in date earlier than today | Validation should fail on the dates passed in. | Check-in date: Yesterday; Check-out date: Any | The dates are rejected as invalid. |
| Check-out date earlier than check-in date | Validation should fail on the dates passed in. | Check-in date: Tomorrow; Check-out date: Today | The dates are rejected as invalid. |
| Check-out date same as check-in date | Validation should fail on the dates passed in. | Check-in date: Today; Check-out date: Today | The dates are rejected as invalid. |
| Good dates | Validation should succeed for the dates passed in: Check-out date is later than the check-in date, and the check-in date is later than yesterday | Check-in date: Today; Check-out date: Tomorrow | The dates are accepted. |
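To show how directly the rows of Table 6-1 translate into checks, here is a small Java sketch. The `DatesAreCorrect` class, its `datesAreCorrect` method, and the idea of passing "today" in as a parameter are hypothetical stand-ins for the real controller; the scenario names, inputs, and expected outcomes come from the table.

```java
import java.time.LocalDate;

// Hypothetical Java stand-in for the "Dates are correct?" controller.
class DatesAreCorrect {

    // Valid when check-in is today or later and check-out is after check-in.
    // "today" is a parameter so the scenarios do not depend on the real clock.
    static boolean datesAreCorrect(LocalDate checkIn, LocalDate checkOut, LocalDate today) {
        if (checkIn.isBefore(today)) {
            return false;
        }
        return checkOut.isAfter(checkIn);
    }

    // Fails loudly if a scenario's outcome differs from its acceptance criteria.
    static void check(String scenario, boolean expected, boolean actual) {
        if (expected != actual) {
            throw new AssertionError("Scenario failed: " + scenario);
        }
        System.out.println("Scenario passed: " + scenario);
    }

    public static void main(String[] args) {
        LocalDate today = LocalDate.of(2024, 6, 15); // arbitrary fixed "today"
        LocalDate yesterday = today.minusDays(1);
        LocalDate tomorrow = today.plusDays(1);

        // The table says check-out is "Any" for the first row; tomorrow is used here.
        check("Check-in date earlier than today", false,
                datesAreCorrect(yesterday, tomorrow, today));
        check("Check-out date earlier than check-in date", false,
                datesAreCorrect(tomorrow, today, today));
        check("Check-out date same as check-in date", false,
                datesAreCorrect(today, today, today));
        check("Good dates", true,
                datesAreCorrect(today, tomorrow, today));
    }
}
```

Each `check` call pairs one row's Input column with its Acceptance Criteria column, which is the same pairing the generated test comments preserve.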

This is where the up-front effort really begins to pay off. You've driven your model and test scenarios from use cases, and you've disambiguated the use case text and gained sign-off from the customer and BAs. You can now make good use of the model and all your new test scenarios. Now is the time to generate classes. As we go to press, EA supports generating JUnit, FlexUnit, and NUnit test classes.

If you're using EA to generate your FlexUnit tests, be sure to configure it to generate ActionScript 3.0 code instead of ActionScript 2.0 (which it is set to by default). Doing this is a simple case of going into Tools -> Options -> Source Code Engineering -> ActionScript. Then change the Default Version from 2.0 to 3.0—see Figure 6-7.

JUnit users also have a bit of setting up to do. Because EA generates setUp() and tearDown() methods, you won't need a default (empty) constructor in the test class. Also, you don't want a finalize() method, as relying on finalize() for tidying up is generally bad practice anyway, and definitely of no use in a unit test class. Luckily, EA can be told not to generate either of these. Just go into Tools -> Options -> Source Code Engineering -> Object Lifetimes. Then deselect the Generate Constructor and Generate Destructor check boxes—see Figure 6-8.

Now that you've filled in the inputs, acceptance criteria, etc., and told EA exactly how you want the test classes to look, it's time to generate some code. There are really two steps to code generation, but there's no additional thought or "doing" in between running the two steps—in fact, EA can run the two steps combined—so we present them here as essentially one step. They are as follows:

Transform your test cases into UML test classes.

Use EA's usual code-generation templates to turn the UML test classes into Flex/Java/C# (etc) classes.

First, open up your test case diagram, right-click a test case, and choose Transform... You'll see the dialog shown in Figure 6-9. Choose the transformation type (Iconix_FlexUnit in this case) and the target package. The target package is where the new test classes will be placed in the model. EA replicates the whole package structure, so the target package should be a top-level package, separate from the main model.

Tip

Create a top-level package called "Test Cases," and send all your test classes there.

To generate code at this stage as well, select the "Generate Code on result" check box. Then click the "Do Transform" button.

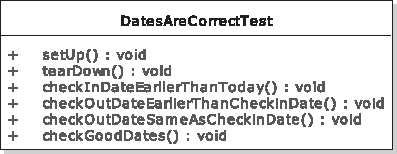

Figure 6-10 shows the generated UML test class. As you can see, each test scenario has been "camel-cased." So "check-in date earlier than today" now has a test method called checkInDateEarlierThanToday().

Note

The original test case had a question mark in its name ("Dates are correct?"). We've manually removed the question mark from the test class, although we hope that a near-future release of EA will strip out non-compiling characters from class names automatically.
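The name mapping described above can be approximated in a few lines of Java. This is only a sketch of the general idea (split on characters that cannot appear in an identifier, then camel-case the words); the `ScenarioNames` class and `toMethodName` method are hypothetical, and EA's own transformation may differ in its details, as the generated name checkGoodDates() suggests.

```java
import java.util.Locale;

// Hypothetical helper that approximates turning a scenario or test case name
// into a legal method-style identifier.
class ScenarioNames {

    // Splits on anything that is not a letter or digit, lower-cases the first
    // word, and capitalizes the first letter of every later word.
    static String toMethodName(String scenario) {
        StringBuilder name = new StringBuilder();
        for (String word : scenario.trim().split("[^A-Za-z0-9]+")) {
            if (word.isEmpty()) {
                continue; // produced by leading punctuation
            }
            String lower = word.toLowerCase(Locale.ROOT);
            if (name.length() == 0) {
                name.append(lower);
            } else {
                name.append(Character.toUpperCase(lower.charAt(0)))
                    .append(lower.substring(1));
            }
        }
        return name.toString();
    }

    public static void main(String[] args) {
        // prints checkInDateEarlierThanToday
        System.out.println(toMethodName("Check-in date earlier than today"));
        // prints datesAreCorrect (the question mark is dropped)
        System.out.println(toMethodName("Dates are correct?"));
    }
}
```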

If you selected the "Generate Code on result" check box, you'll already be delving through the lovely auto-generated Flex code; if not, just right-click the new test class and choose Generate Code. (EA also has a Synchronize Code option if you've previously generated test code and modified the target class, but now want to re-generate it from the model.) For the Path, point EA directly to the folder containing your unit and controller tests, in the package where you want the tests to go.

Here's the ActionScript code that the ICONIX add-in for EA generates for the "Dates are correct?" test case:

```actionscript
import flexunit.framework.*;

class DatesAreCorrectTest
{
    [Before]
    public function setUp(): void
    {
        // set up test fixtures here...
    }

    [After]
    public function tearDown(): void
    {
        // destroy test fixtures here...
    }

    /**
     * Validation should fail on the dates passed in.
     * Input: Check-in date: Yesterday
     * Check-out date: Any
     * Acceptance Criteria: The dates are rejected as invalid
     */
    [Test]
    public function checkInDateEarlierThanToday(): void
    {
    }

    /**
     * Validation should fail on the dates passed in.
     * Input: Check-in date: Tomorrow
     * Check-out date: Today
     * Acceptance Criteria: The dates are rejected as invalid
     */
    [Test]
    public function checkOutDateEarlierThanCheckinDate(): void
    {
    }

    /**
     * Validation should fail on the dates passed in.
     * Input: Check-in date: Today
     * Check-out date: Today
     * Acceptance Criteria: The dates are rejected as invalid
     */
    [Test]
    public function checkOutDateSameAsCheckinDate(): void
    {
    }

    /**
     * Validation should succeed for the dates passed in:
     * Check-out date is later than the check-in date,
     * and the check-in date is later than yesterday
     * Input: Check-in date: Today
     * Check-out date: Tomorrow
     * Acceptance Criteria: The dates are accepted
     */
    [Test]
    public function checkGoodDates(): void
    {
    }
}
```

Notice how each test method is headed up with the test scenario details from the model. When you start to write each test, this information acts as a perfect reminder of what the test is intended to achieve, what the inputs should be, and the expected outcome that you'll need to test for. Because these tests have been driven comprehensively from the use cases themselves, you'll also have the confidence that these tests span a "complete enough" picture of the system behavior.

Now that the test classes are generated, you'll want to fill in the details—the actual test code. Let's do that next.

Having generated the classes, you can move on to implementing the tests. When doing that, use the test comments to help yourself stay on course.

Let's look at the first generated test function, checkInDateEarlierThanToday():

```actionscript
/**
 * Validation should fail on the dates passed in.
 *
 * Input: Check-in date: Yesterday
 * Check-out date: Any
 * Acceptance Criteria: The dates are rejected as invalid
 */
[Test]
public function checkInDateEarlierThanToday(): void
{
}
```

A programmer staring at this blank method might initially scratch his head and wonder what to do next. Faced with a whole ocean of possibilities, he may then grab a snack from the passing Vol-au-vents trolley and get to work writing some random test code. As long as there are tests and a green bar, then that's all that matters... Luckily, the test comments that you added into the test scenario earlier are right there above the test function, complete with the specific inputs and the expected valid output (acceptance criteria). So the programmer stays on track, and you end up with tightly coded controller tests that can be traced back to the discrete software behavior defined in the original use case.

We have plenty to say about writing code that's easy to test in Part 3. However, for now, it's worth dipping into a quick example to illustrate the difference between an easily tested function and one that's virtually impossible to test.

When the user selects a new check-in or check-out date in the UI, the following ActionScript function (part of the MXML file) is called to validate the date range. If the validation fails, then an alert box is displayed with a message for the user. This is essentially what we need to write a controller test for:

```actionscript
private function checkValidDates () : void
{
    isDateRangeValid = true;
    var currentDate : Date = new Date();
    if ( compareDayMonthYear( checkinDate.selectedDate, currentDate ) < 0 )
    {
        Alert.show( "Invalid Checkin date. Please enter current or future date." );
        isDateRangeValid = false;
    }
    else if ( compareDayMonthYear ( checkoutDate.selectedDate, currentDate ) < 1 )
    {
        Alert.show( "Invalid Checkout date. Please enter future date." );
        isDateRangeValid = false;
    }
    else if ( compareDayMonthYear ( checkoutDate.selectedDate, checkinDate.selectedDate ) < 1 )
    {
        Alert.show( "Checkout date should be after Checkin date." );
        isDateRangeValid = false;
    }
}
```

As a quick exercise, grab a pencil and try writing the checkInDateEarlierThanToday() test function to test this code. There are two problems:

Because this is part of the MXML file, you would need to instantiate the whole UI and then prime the checkinDate and checkoutDate date selector controls with the required input values. That's way outside the scope of this humble controller test.

A test can't reasonably check that an Alert box has been displayed. (Plus, the test code would need a way of programmatically dismissing the Alert box—meaning added complication.)

In short, displaying an Alert box isn't an easily tested output of a function. That sort of code is a test writer's nightmare.

Code like this is typically seen when developers have abandoned OO design principles, and instead lumped the business logic directly into the presentation layer (the Flex MXML file, in this example). Luckily, the checkValidDates() function you just saw was really a bad dream, and, in reality, our intrepid coders followed a domain-driven design and created a ReservationDetail class. This class has its own checkValidDates() function, which returns a String containing the validation message to show the user, or null if validation passed.

Here's the relevant part of ReservationDetail, showing the function under test:

```actionscript
public class ReservationDetail
{
    private var checkinDate: Date;
    private var checkoutDate: Date;

    public function ReservationDetail(checkinDate: Date, checkoutDate: Date)
    {
        this.checkinDate = checkinDate;
        this.checkoutDate = checkoutDate;
    }

    // Returns the validation message to show the user, or null if the dates are valid.
    // compareDayMonthYear() and isDateRangeValid are assumed to be declared in the
    // part of the class that isn't shown here.
    public function checkValidDates(): String
    {
        var currentDate : Date = new Date();

        // The fields are plain Date objects here, not UI date selectors,
        // so they are compared directly.
        if ( compareDayMonthYear( checkinDate, currentDate ) < 0 )
        {
            return "Invalid Checkin date. Please enter today or a date in the future.";
        }
        if ( compareDayMonthYear ( checkoutDate, currentDate ) < 1 )
        {
            return "Invalid Checkout date. Please enter a date in the future.";
        }
        if ( compareDayMonthYear ( checkoutDate, checkinDate ) < 1 )
        {
            return "Checkout date should be after Checkin date.";
        }
        isDateRangeValid = true;
        return null;
    }
}
```

Writing a test for this version is almost laughably easy by comparison. Here's our implementation of the checkInDateEarlierThanToday() test scenario:

    /**
     * Validation should fail on the dates passed in.
     *
     * Input: Check-in date: Yesterday
     *        Check-out date: Any
     * Acceptance Criteria: The dates are rejected as invalid
     */
    [Test]
    public function checkInDateEarlierThanToday(): void
    {
        var yesterday: Date = new Date(); // now
        yesterday.time -= 24 * 60 * 60 * 1000; // move back 24 hours
        var reservation: ReservationDetail = new ReservationDetail(yesterday, new Date());
        var result: String = reservation.checkValidDates();
        assertNotNull(result);
    }

This test function simply creates a ReservationDetail with a check-in date of yesterday and a check-out date of today, then attempts to validate the reservation dates, and finally asserts that a non-null (i.e., validation failed) String is returned.
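
For readers working in Java rather than Flex, the same controller and its test scenarios might look roughly like the sketch below. It is an illustrative port, not code from the Mapplet: the use of java.time.LocalDate, the decision to pass "today" in as a parameter (so that the scenarios don't depend on the system clock), and the second and third scenario names are all assumptions made for this example.

```java
import java.time.LocalDate;

// Hypothetical Java port of the ReservationDetail class shown above.
final class ReservationDetail {
    private final LocalDate checkinDate;
    private final LocalDate checkoutDate;

    ReservationDetail(LocalDate checkinDate, LocalDate checkoutDate) {
        this.checkinDate = checkinDate;
        this.checkoutDate = checkoutDate;
    }

    // Returns the validation message to show the user, or null if validation passed.
    // Assumption: "today" is passed in so that tests can use a fixed date.
    String checkValidDates(LocalDate today) {
        if (checkinDate.isBefore(today)) {
            return "Invalid Checkin date. Please enter today or a date in the future.";
        }
        if (!checkoutDate.isAfter(today)) {
            return "Invalid Checkout date. Please enter a date in the future.";
        }
        if (!checkoutDate.isAfter(checkinDate)) {
            return "Checkout date should be after Checkin date.";
        }
        return null;
    }
}

// One method per test scenario; each returns the validation result for inspection.
final class ReservationDetailScenarios {
    static final LocalDate TODAY = LocalDate.of(2010, 6, 15); // arbitrary fixed date

    static String checkInDateEarlierThanToday() {
        return new ReservationDetail(TODAY.minusDays(1), TODAY).checkValidDates(TODAY);
    }

    // Hypothetical scenario name: both dates are in the future, but in the wrong order.
    static String checkOutDateBeforeCheckInDate() {
        return new ReservationDetail(TODAY.plusDays(2), TODAY.plusDays(1)).checkValidDates(TODAY);
    }

    // Hypothetical scenario name: the "sunny day" case.
    static String validDates() {
        return new ReservationDetail(TODAY, TODAY.plusDays(1)).checkValidDates(TODAY);
    }

    public static void main(String[] args) {
        System.out.println("Check-in yesterday:         " + checkInDateEarlierThanToday());
        System.out.println("Check-out before check-in:  " + checkOutDateBeforeCheckInDate());
        System.out.println("Valid dates:                " + validDates());
    }
}
```

Each scenario touches only the constructor and checkValidDates(), so the tests would survive a rewrite of the comparison logic as long as the messages and the null-on-success contract stay the same.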

In the software testing world, tests are generally seen as either black box or white box, and rarely in shades of gray. Controller tests, however, are best thought of as "gray box" tests. The less your tests know about the internals of the code, the more maintainable they'll be.

A "white box" test is one that sees inside the code under test, and knows about the code's internals. As you saw in Chapter 5, unit tests tend to be white box tests. Often the existence of mock objects passed into the method under test signifies that it's a white box test, because the test has to "know" about the calls that the code makes—meaning that if the method's implementation changes, the test will likely need to be updated as well.

At the other end of the scale, scenario-level integration tests (which you'll find out about in Part 3) tend to be black box tests, because they have no knowledge whatsoever of the internals of the code under test: they just set the ball rolling, and measure the result.

Controller tests are somewhere in between the two: a controller test is really a unit test, but it's most effective when it has limited or no knowledge of the code under test, aside from the signature of the method that it's calling. Because controller tests operate on groups of functions (rather than on a single function, as a unit test does), they need to construct comparatively fewer "walled gardens" around the code under test, so they pass in fewer mock objects.

Tip

Always set out to make your controller tests "black box." But if you find that you need to pass in a mock object to get the software function working in isolation, don't sweat it. We see mock objects as a practical means to "grease the skids" and keep the machine moving along, rather than a semi-religious means to strive for 100% code coverage.
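
To make the white box versus gray box distinction concrete, here is a small Java sketch. Everything in it is hypothetical and invented for illustration (HotelCatalog, SearchHotelsController, and the hand-rolled RecordingCatalog that stands in for a mock object); it isn't taken from the Mapplet code. Both tests pass in the same stand-in, but only the white box test inspects what the controller did with it.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Hypothetical collaborator that the controller calls out to.
interface HotelCatalog {
    List<String> findHotelNames(String city);
}

// Hypothetical controller: looks up hotels and returns their names sorted.
final class SearchHotelsController {
    private final HotelCatalog catalog;

    SearchHotelsController(HotelCatalog catalog) {
        this.catalog = catalog;
    }

    List<String> search(String city) {
        List<String> names = new ArrayList<>(catalog.findHotelNames(city));
        Collections.sort(names);
        return names;
    }
}

// Hand-rolled stand-in for a mock: returns canned data and records the calls it receives.
final class RecordingCatalog implements HotelCatalog {
    final List<String> requestedCities = new ArrayList<>();

    public List<String> findHotelNames(String city) {
        requestedCities.add(city);
        return Arrays.asList("Ritz", "Astoria");
    }
}

final class BoxColorDemo {
    // Gray box: knows only the method signature and checks only the returned value.
    static boolean grayBoxTestPasses() {
        SearchHotelsController controller = new SearchHotelsController(new RecordingCatalog());
        return controller.search("Paris").equals(Arrays.asList("Astoria", "Ritz"));
    }

    // White box: also checks which calls were made internally, so it would need
    // updating if the controller started caching or made a second lookup.
    static boolean whiteBoxTestPasses() {
        RecordingCatalog catalog = new RecordingCatalog();
        new SearchHotelsController(catalog).search("Paris");
        return catalog.requestedCities.equals(Arrays.asList("Paris"));
    }

    public static void main(String[] args) {
        System.out.println("gray box test passes:  " + grayBoxTestPasses());
        System.out.println("white box test passes: " + whiteBoxTestPasses());
    }
}
```

The gray box version still needs a stand-in to run in isolation, which is the "grease the skids" use of mocks described in the Tip; what it avoids is asserting on how that stand-in was used.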

String controller tests together, just like molecular chains. Then verify the results at each step in the chain.

You may have noticed from the robustness diagram in Figure 6-1 that controllers tend to form chains. Each controller is an action performed by the system. It'll change the program state in some way, or simply pass a value into the next controller in the chain.

Note

This particular test pattern should be used with caution. There's a school of thought that says that groups of tests that are dependent on each other are fragile and a "test antipattern," because if something changes in one of the functions, all the tests from that point onwards in the chain will break: a sort of domino effect. But there are sometimes clear benefits from "chained tests," in that each test doesn't need to set up its own set of simulated test fixtures: it can just use the output or program state from the previous test, like the baton in a relay race.

Think of it in terms of a bubblegum factory. Gum base, sugar, and corn syrup are passed into the mixing machine, and a giant slab of bubblegum rolls out. The next machine in the chain, the "slicer and dicer," expects the slab of bubblegum from the previous machine to be passed in, and we expect little slivers of gum to be the output. The next machine, the wrapping machine, expects slivers of gum, silver foil strips, and paper slips to be passed in... and so on. As you've probably guessed, each machine represents a controller (or "discrete" function), and a controller test could verify that the output is as expected given the inputs.

Figure 6-11 shows some examples of controllers that are linked to form a chain. The first example shows our (slightly contrived) bubblegum factory example; the second is an excerpt from the Advanced Search use case; the third, also from Advanced Search, shows a two-controller chain for the validation example that we're following. In the first two examples, the values being passed along are pretty clear: a tangible value returned from one function is passed along into the next function. At each stage your test could assert that the output value is as expected, given the value returned from the previous function.

You'll normally want to create a test class for each one of these controllers (each test scenario is then a separate test method). Where controllers are linked together, because each controller will effectively have a separate test class, it would make sense to have a separate "program state tracking class," which survives across tests.
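
As a rough illustration of a chain with a state tracking class, here is the bubblegum factory as a plain Java sketch. All of the names (FactoryState, BubblegumFactory, BubblegumChainTest) are hypothetical, the "machines" are trivial string manipulations, and the foil strips and paper slips are left out for brevity. To keep the sketch short, the three tests share one class rather than having a test class each.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical "program state tracking class": carries each controller's
// output along to the next test in the chain.
final class FactoryState {
    String gumSlab;
    List<String> gumSlivers;
    List<String> wrappedSticks;
}

// Hypothetical controllers, one per machine.
final class BubblegumFactory {
    static String mix(String gumBase, String sugar, String cornSyrup) {
        return "slab[" + gumBase + "+" + sugar + "+" + cornSyrup + "]";
    }

    static List<String> slice(String gumSlab, int count) {
        List<String> slivers = new ArrayList<>();
        for (int i = 1; i <= count; i++) {
            slivers.add("sliver " + i + " of " + gumSlab);
        }
        return slivers;
    }

    static List<String> wrap(List<String> slivers) {
        List<String> sticks = new ArrayList<>();
        for (String sliver : slivers) {
            sticks.add("wrapped(" + sliver + ")");
        }
        return sticks;
    }
}

final class BubblegumChainTest {
    static void check(boolean condition, String message) {
        if (!condition) {
            throw new AssertionError(message);
        }
    }

    // Each test verifies one controller, then leaves its output in the shared state.
    static void mixingTest(FactoryState state) {
        state.gumSlab = BubblegumFactory.mix("gum base", "sugar", "corn syrup");
        check(state.gumSlab.startsWith("slab["), "mixer should return a slab");
    }

    static void slicingTest(FactoryState state) {
        state.gumSlivers = BubblegumFactory.slice(state.gumSlab, 3);
        check(state.gumSlivers.size() == 3, "slicer should return three slivers");
    }

    static void wrappingTest(FactoryState state) {
        state.wrappedSticks = BubblegumFactory.wrap(state.gumSlivers);
        check(state.wrappedSticks.size() == state.gumSlivers.size(),
                "wrapper should return one stick per sliver");
    }

    // The chain only makes sense when the tests run in this order.
    static FactoryState runChain() {
        FactoryState state = new FactoryState();
        mixingTest(state);
        slicingTest(state);
        wrappingTest(state);
        return state;
    }

    public static void main(String[] args) {
        FactoryState state = runChain();
        System.out.println(state.wrappedSticks.size() + " sticks wrapped");
    }
}
```

The sketch calls the three tests explicitly, in order, because the pattern depends entirely on that order being respected.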

However (and this is a pretty major caveat), JUnit and its ilk have traditionally not provided a way of specifying the order in which tests should be run; ordering annotations arrived only in later releases, such as @FixMethodOrder in JUnit 4.11. There are test frameworks that do allow you to specify the run order. JBehave (itself based on JUnit) is one such framework. It has its roots firmly planted in Behavior-Driven Development (BDD), and allows you to specify a test scenario that consists of a series of steps that take place in the specified order.[40] For example, the Bubblegum Factory controller chain would look like this as a BDD scenario, where each line here is a step:

Given I have a bubblegum factory and lots of raw ingredients

When I pour the ingredients into a mixing machine

Then gum slabs should be returned

When I put the gum slabs into the dicing machine

Then gum slivers should be returned

When I put the gum slivers, silver foil strips, and paper slips into the wrapping machine

Then wrapped gum sticks should be returned

Let's go back to the Mapplet. The "Dates are correct?" controller would look like this as a BDD scenario:

Given the user entered a Reservation Detail with a check-in date of Tomorrow and a check-out date of Today

When the dates are validated

Then the system should display a user error dialog with "Check-out date prior to Check-in date"

Each step in these scenarios maps to a test method—so that's very close to the step-by-step execution of a controller chain.

If you're using "vanilla" JUnit, or any test framework that doesn't allow tests to run in a specific order, then you'll need to set up the expected state prior to each individual test method.
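
A minimal Java sketch of that alternative follows. Each test simulates the output of the previous controller instead of depending on it, so the tests can run in any order. The class and method names are hypothetical, and plain methods stand in for JUnit test methods; in JUnit 4 the fixture setup would typically live in an @Before method.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical controllers for two machines in the bubblegum chain.
final class GumMachines {
    static List<String> slice(String gumSlab, int count) {
        List<String> slivers = new ArrayList<>();
        for (int i = 1; i <= count; i++) {
            slivers.add("sliver " + i + " of " + gumSlab);
        }
        return slivers;
    }

    static List<String> wrap(List<String> slivers) {
        List<String> sticks = new ArrayList<>();
        for (String sliver : slivers) {
            sticks.add("wrapped(" + sliver + ")");
        }
        return sticks;
    }
}

final class IsolatedMachineTests {
    // Simulated fixtures: stand-ins for what the previous controller would have produced.
    static String simulatedSlab() {
        return "test slab";
    }

    static List<String> simulatedSlivers() {
        return Arrays.asList("sliver A", "sliver B");
    }

    // Does not need the mixing machine to have run first.
    static boolean slicingTestPasses() {
        List<String> slivers = GumMachines.slice(simulatedSlab(), 4);
        return slivers.size() == 4;
    }

    // Does not need the slicer to have run first.
    static boolean wrappingTestPasses() {
        List<String> sticks = GumMachines.wrap(simulatedSlivers());
        return sticks.equals(Arrays.asList("wrapped(sliver A)", "wrapped(sliver B)"));
    }

    public static void main(String[] args) {
        // Deliberately run "out of order" to show that the order doesn't matter.
        System.out.println("wrapping test passes: " + wrappingTestPasses());
        System.out.println("slicing test passes:  " + slicingTestPasses());
    }
}
```

The trade-off is the one described in the Note above: each test carries its own fixture setup, but a change in one machine no longer topples the tests for the machines downstream of it.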

Finally, write a separate suite of controller-level integration tests. Just as with unit tests, there are essentially two types of controller tests: isolated tests (the main topic of this chapter), and integration tests.[41] All the comments in "top ten" item #1 for unit tests (see Chapter 5) also apply to controller tests, so, rather than repeating them here, we'll point you straight to Chapter 11, where we show you how to write controller-level integration tests (that is, tests that validate a single logical software function and freely make calls out to remote services and databases).

Note that you may also find that, as you begin to master the technique, you'll write more controller tests as integration/external tests than as isolated tests. As soon as your project is set up to support integration tests, you'll probably find that you prefer to write integrated controller tests, as it's a more "complete" test of a logical software function. This is absolutely fine and won't cause an infection. But do remember to keep the integration tests separate from your main build.

Another important caveat is that, while the integration tests may be run less often than the isolated tests, they must still be run automatically (e.g., on a nightly schedule). As soon as people forget to run the tests, they'll be run less and less often, until they become so out-of-sync with the code base that half of them don't pass anymore; the effort involved to fix them means they'll most likely just be abandoned. So it's really important to set up the integration tests to be run automatically!

In this chapter we walked through a behavioral unit testing technique that is in many ways the core of DDT. Controller tests bridge the gap between programmer-centric, code-level testing and business-centric testing. They cover the logical functions you identified in your conceptual design (during robustness analysis).

Controller tests are best thought of as broad-grained unit tests. They cover many of the functions of unit tests, meaning you can write far fewer tests; unit tests themselves are best kept for algorithmic logic where the test needs to track the internal state of a function.

We also suggested that, while you may start out writing controller tests that "isolate" the code being tested, you might well end up writing more of them as integration tests, which is absolutely fine and, in fact, provides a more thorough test of the logical software function. There will be more about this in Part 3.

So far we've covered "design-oriented" testing, with unit tests and controller tests. In the next couple of chapters we'll cover "analysis-oriented" testing, with acceptance tests that have a different audience—customers, business analysts, and QA testers.

[34] One of the authors may have been watching too much Doctor Who recently.

[35] For more about conceptual design and drawing robustness diagrams to "disambiguate" your use case scenarios, see Chapter 5 of Use Case Driven Object Modeling with UML: Theory and Practice.

[36] An example Flex design pattern that helps you achieve this is "Code Behind": www.insideria.com/2010/05/code-behind-vs-template-compon.html.

[37] In theory you might not worry about running out of time while unit testing, but in practice it often becomes an issue, especially if you're using a Scrum approach with sprints and timeboxes. So, in practice it's a great idea to get breadth of test coverage first, and then dive down deeper when testing the trickier parts of your code.

[38] This is also an argument against the notion of 100% code coverage, which, in Chapter 5, we suggested to be a self-defeating exercise.

[39] Verification of Object-Oriented Software: The KeY Approach. ISBN: 978-3-540-68977-5

[40] In Chapter 11 we discuss using BDD and JBehave to automatically test your use case scenarios "end-to-end."

[41] To reiterate, for the purposes of this book, "integration tests" are tests where the code calls out to external systems, while "controller tests" operate on a group of units or functions.