In this chapter, you'll learn how to generate customer-facing business requirement tests from the requirements in your model, how to organize those tests, and how to generate a test plan document from them. To illustrate this, we'll once again make use of the "Agile/ICONIX add-in" for Enterprise Architect.

Have a look at the chapter-opening diagram, which should look familiar by now. In Chapter 7 we described the bottom half of this picture: you saw how use cases define the behavioral requirements of the system, and scenario tests are used as "end-to-end" automated tests to verify that the system behaves as expected. In this chapter, we'll describe the top half of the picture: you'll see how business requirements define what you want the system to do—and why.

Here's how business requirements differ from behavioral requirements (from Chapter 7):

Business requirements define the system from the customer's perspective, without diving into details about how the system will be implemented.

Behavioral requirements define how the system will work from the end-user's perspective (i.e., the "user manual" view).

Or, from a testing and feedback perspective:

Requirement tests verify that the developers have implemented the system as specified by the business requirements.

Scenario tests verify that the system behaves as specified by the behavioral requirements.

Requirement tests and scenario tests are really two sides of the same coin, i.e., they're both a form of acceptance tests.

As usual, we'll begin the chapter with our top ten "to-do" list. The rest of the chapter is structured around this list, using the Mapplet 2.0 project as the example throughout. Here's the list:

10. Start with a domain model. Write everything—requirements, tests, use cases—in the business language that your domain model captures.

9. Write business requirement tests. While writing requirements, think about how each one will be tested. How will a test "prove" that a requirement has been fulfilled? Capture this thought within the EA Testing View as the Description of the test. Be sure to focus on the customer's perspective.

8. Model and organize requirements. Model your requirements in EA. Then organize them into packages.

7. Create test cases from requirements. The Agile ICONIX add-in for EA makes this step easy: it creates test cases directly from your requirements.

6. Review your plan with the customer. EA can be used to generate a test report based on the requirements. This makes an ideal "check-list" to walk through with the customer. With it, you're basically asking: "Given that these are our acceptance criteria, have we missed anything?"

5. Write manual test scripts. Write these test scripts as step-by-step recipes that a human can follow easily.

4. Write automated requirement tests. Automated tests won't always make sense. But when they do, write them. Just don't delay the project for the sake of automating the tests.

3. Export the requirement test cases. To help you track progress and schedule work, export your test cases to a task-tracking and scheduling tool such as Jira.

2. Make the test cases visible. Everyone involved in the project should have access to the test cases. Make them available. Keep them up-to-date.

1. Involve your team! Work closely with your testing team in the process of creating requirement tests. Give them ownership of the tests.

When the Mapplet 2.0 project was just getting underway, the ESRI project team and VResorts.com client met to define the project's scope, and to kickstart the project into its initial modeling phase: to define the requirements, and agree on a common set of business terms—the domain model—with which the "techies and the suits" (now there's a dual cliché!) would be able to communicate with each other. Figure 8-1 shows the resultant initial domain model.

Note

The domain model in Figure 8-1 differs from the one we showed you in Chapter 4. The version shown here is from the start of the project, while the one in Chapter 4 evolved during conceptual design. You should find that the domain model evolves throughout the project, as the team's understanding of the business domain evolves.

As you can see in Figure 8-1, the Map Viewer supports a Quick Search and an Advanced Search. The result of a Quick Search is an Area of Interest that can display Hotels, Landmarks, and a Photography Overlay. A Quick Search specifies an Area of Interest via Bookmarks, an Address, or a Point of Interest (which might be a Landmark). An Advanced Search specifies a Hotel Filter that is used to declutter the map display. The Hotel Filter includes an Amenity Filter, an Availability Filter (based on check-in, check-out dates, number of rooms requested, etc.), a Price Range and Star Rating Filter, a Hot Rates Filter, and a Chain Filter.

Once you have a domain model in place, write everything—requirements, tests, use cases—in the business language that you've captured in the domain model. As the requirements are turned into use cases, and the use cases into design diagrams and code, the domain objects you defined should be used for class names, test names, and so forth.
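To illustrate carrying the domain model's vocabulary into code, here is a minimal Python sketch of part of the Hotel Filter from Figure 8-1. The class names come from the domain model; the attributes (amenity strings, nightly rate, sold-out dates) and the method names are assumptions made for illustration, not the Mapplet's actual code.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import Optional


@dataclass
class Hotel:
    # Hypothetical attributes; Figure 8-1 names the class, not its fields.
    name: str
    amenities: frozenset[str]
    nightly_rate: float
    sold_out_dates: frozenset[date] = frozenset()


@dataclass
class AmenityFilter:
    required: frozenset[str] = frozenset()

    def accepts(self, hotel: Hotel) -> bool:
        # Pass only hotels that offer every required amenity.
        return self.required <= hotel.amenities


@dataclass
class AvailabilityFilter:
    check_in: Optional[date] = None
    check_out: Optional[date] = None

    def accepts(self, hotel: Hotel) -> bool:
        if self.check_in is None or self.check_out is None:
            return True  # no dates chosen, so don't filter on availability
        # Nights stayed run from check-in up to (not including) check-out.
        nights = (self.check_in + timedelta(days=i)
                  for i in range((self.check_out - self.check_in).days))
        return not any(night in hotel.sold_out_dates for night in nights)


@dataclass
class PriceRangeFilter:
    low: float = 0.0
    high: float = float("inf")

    def accepts(self, hotel: Hotel) -> bool:
        return self.low <= hotel.nightly_rate <= self.high


@dataclass
class HotelFilter:
    # A subset of the sub-filters that Figure 8-1 shows inside the Hotel Filter.
    amenity: AmenityFilter = field(default_factory=AmenityFilter)
    availability: AvailabilityFilter = field(default_factory=AvailabilityFilter)
    price_range: PriceRangeFilter = field(default_factory=PriceRangeFilter)

    def declutter(self, hotels: list[Hotel]) -> list[Hotel]:
        # Keep only the hotels that every sub-filter accepts.
        return [h for h in hotels
                if self.amenity.accepts(h)
                and self.availability.accepts(h)
                and self.price_range.accepts(h)]
```

One design choice worth noting: leaving the check-in and check-out dates unset skips availability filtering altogether, rather than filtering against some default date range.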

Tip

Don't stop at identifying the names of domain objects: also write a brief definition of each one—one or two sentences each. You'll be surprised how many additional domain objects you discover this way, as you dig into the nooks and crannies of the business domain.[44]

Business requirement tests should be written from the customer's perspective. But before "testing" a requirement, it makes sense to think about what you want to achieve by testing it. Requirements are the documented wants of the customer, so requirement tests are very much from the customer's point of view. To test that a requirement has been implemented, you'll need to put on your "customer hat." So when you're identifying and writing the business requirement tests, it's perfectly reasonable (essential, even) to have the customer in the room.

Here's an example of a business requirement:

17. map/photos -- photographic overlay links

The user can click a photographic overlay icon on the map in order to open a link to the destination web page and/or picture/movie.

And here are the sorts of acceptance criteria that a customer would be thinking about:

Does this work with photos, videos? Does the screen overlay display correctly?

Model your requirements using EA, and then organize them into packages.

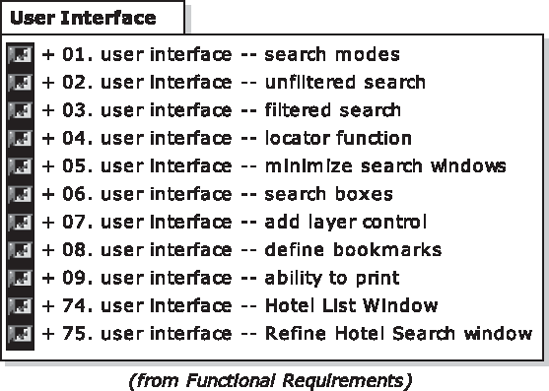

With the Mapplet 2.0 domain model in place, it wasn't long before a comprehensive set of requirements was being captured. These were all modeled in EA (see Figures 8-2 through 8-6), inside these packages:

Functional Requirements

|__ User Interface

|__ Hotel Filter

|__ Map

Non-Functional Requirements

The team also created a package called Optional Requirements (a euphemism for "Perhaps in the next release"), which we haven't shown here.

You'll often hear people talking about "functional" and "non-functional" requirements, but what exactly makes a requirement one or the other? To answer this question, it helps to think about the criteria often known as the "ilities": capability, maintainability, usability, reusability, reliability, and extendability.[45] Functional requirements represent the capabilities of the system; non-functional requirements represent all the other "ilities" that we just listed.

Tip

Storing requirements in a modeling tool rather than a Word document has a huge benefit: the requirements model comes to life (in a manner of speaking). You can link use cases and design elements back to individual requirements, and you can create test cases for each requirement, too.

Figure 8-3. Mapplet 2.0 user interface requirements, nested inside the Functional Requirements package

You've probably gathered by now that we quite like EA. One reason is that you can easily use EA along with the Agile ICONIX add-in to create test cases from your requirements.

In EA, right-click either a single requirement, a package of requirements, or inside a requirements diagram. Then choose "Create Tests from Requirements"[46] (see Figure 8-7). This will produce something like the diagram shown in Figure 8-8.

In Figure 8-8, the requirement on the left is linked to the test case on the right. The diagram also shows which package (Hotel Filter) the requirement is from. Initially, EA creates one default scenario for the test case, and makes it a "Unit" test scenario. That isn't what you want right now, though: you want Acceptance test scenarios (this does matter, as you'll see shortly when you create a Requirements test report). So let's delete the default Unit test scenario and create some Acceptance test scenarios instead (see Figure 8-8).

First switch on EA's Testing View: go to the View menu, and choose Testing—or press Alt+3. In the Testing View, you'll see some tabs at the bottom of the screen: Unit, Integration, System, Acceptance, and Scenario. (Recall from Chapter 7 that we spent most of our time on the Scenario tab, and in Chapter 5, on the Unit tab.) Click the "red X" toolbar button to delete the Default Run scenario from the Unit tab; then click over to the Acceptance tab (see Figure 8-9).
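EA and the add-in maintain the link between each requirement and its test case for you, but the bookkeeping behind it is simple enough to sketch. The Python below is a hypothetical model, not EA's object model or the add-in's API: each requirement gets one test case holding typed scenarios, the test case starts with a single default Unit scenario, and `missing_acceptance_tests` flags any requirement that doesn't yet have an Acceptance scenario. All class and function names are invented for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum


class ScenarioType(Enum):
    # Mirrors the tabs in EA's Testing View.
    UNIT = "Unit"
    INTEGRATION = "Integration"
    SYSTEM = "System"
    ACCEPTANCE = "Acceptance"
    SCENARIO = "Scenario"


@dataclass
class Scenario:
    name: str
    kind: ScenarioType
    description: str = ""
    acceptance_criteria: str = ""


@dataclass
class Requirement:
    req_id: int
    title: str


@dataclass
class RequirementTestCase:
    requirement: Requirement
    scenarios: list[Scenario] = field(default_factory=list)


def create_test_case(req: Requirement) -> RequirementTestCase:
    """Create a test case linked to req, starting with one default Unit scenario."""
    return RequirementTestCase(req, [Scenario("Default Run", ScenarioType.UNIT)])


def missing_acceptance_tests(cases: list[RequirementTestCase]) -> list[int]:
    """Return IDs of requirements whose test case has no Acceptance scenario."""
    return [c.requirement.req_id for c in cases
            if not any(s.kind is ScenarioType.ACCEPTANCE for s in c.scenarios)]
```

For example, a freshly created test case for requirement 31 is flagged until its default scenario is replaced with Acceptance scenarios such as "Individual Amenity Filtering."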

Figure 8-9. Make sure you're creating Acceptance test scenarios for requirements, not Unit test scenarios.

Higher-level requirements will aggregate these tests with other "filter" tests. For example, when testing the Filter Hotels requirement, the test plan should specify testing an "amenity filter set" in combination with a date range:

Find a golf resort on Maui that's available on the last weekend in May (over Memorial Day weekend).

Figure 8-10 shows the Testing View with the Acceptance test scenarios added.

With the requirement tests (or "acceptance tests" in EA nomenclature) added in, wouldn't it be nice if you could generate a clear, uncluttered test report that can be handed straight to the testers and the customer? Let's do that next...

One of the key stages of specifying a business requirement involves asking why the requirement is needed. This question gets asked a second time while the tests are being written. If the customer is involved in either writing or reviewing the test plan, they will think of new things to be tested. Some of these will very likely lead to new requirements that nobody had thought of in the first place. Writing and reviewing the requirement tests can also help to pick holes in the requirements.

Another way to look at this process is the following:

When you're writing and reviewing the requirement tests, you're validating the requirements themselves.

When you're running (or executing) the requirement tests, you're validating that the finished software matches the requirements.

Because the tests play a key role in shaping and validating the requirements, it pays to write the requirement tests early in the project.

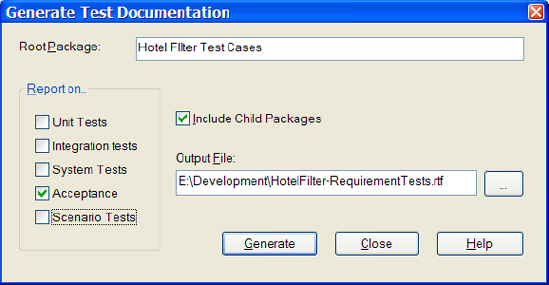

EA can be used to generate a test report based on the requirements. To do this, first select a package of requirements test cases, and then go to Project

Table 8-1. The Work-in-Progress Generated Report for the Hotel Filter Test Cases

| Name | Requirement / Description | Input | Acceptance Criteria / Result Details |
|---|---|---|---|
| Test for no side-effects | 31. hotel filter -- hotel query by amenities | Amenity Criteria Set; Area of Interest (at a "local" map scale); Initial map display (showing hotels within AOI) | All hotels on the decluttered map display must match the selected filter criteria. Verify that amenity selection does not remove too many hotels from the map (i.e., filtering on "Room Service" shouldn't inadvertently affect other amenities). |
| Filtering by sets of amenities | 31. hotel filter -- hotel query by amenities. A series of "visual inspection" tests will be conducted to verify that amenity filtering is working properly. Each of these tests will involve checking various sets of amenities (for example "Pool, Room Service, Bar," or "Pet-friendly, Restaurant, Babysitting," or "Golf Course, Tennis, Fitness Center"), and then inspecting all hotels that remain on the map after filtering to make sure the amenity filtering criteria are met correctly. Tests should be run in areas of interest that make sense for various "amenity sets," e.g., golf resorts in Tucson, AZ; beachfront resorts in Waikiki; hotels with bar and concierge in midtown Manhattan, etc. | Amenity Criteria Set; Area of Interest (at a "local" map scale); Initial map display (showing hotels within AOI) | All hotels on the decluttered map display must match the selected filter criteria. Result: decluttered map display (showing a filtered subset of Hotels on the map). |
| Individual Amenity Filtering | 31. hotel filter -- hotel query by amenities. A series of visual inspection tests exercising each individual amenity, one at a time. | Amenity; Area of Interest (at a "local" map scale); Initial map display (showing hotels within AOI) | All hotels on the decluttered map display must match the selected filter criteria. Result: decluttered map display (showing a filtered subset of Hotels on the map). |
| | 30. hotel filter -- filter criteria. Starting from a Quick Search result screen showing hotels on the map, filter the display using a combination of price and date ranges. | | |
| Test a combination of amenity and price range filtering | 30. hotel filter -- filter criteria. Starting from a Quick Search result screen showing hotels on the map, filter the display using a combination of amenities and price ranges. For example, looking for hotels in midtown Manhattan with 24-hour room service and a concierge, for under $200/night, will likely declutter the map display by a great deal. | | |
| Test a combination of amenity and date range filtering | 30. hotel filter -- filter criteria. Starting from a Quick Search result screen showing hotels on the map, filter the display using a combination of amenities and date ranges. Some creativity may be needed to find a combination of location and date range that will result in "Sold Out" hotels. Examples might be New Orleans hotels during Mardi Gras, hotels in Rio de Janeiro during Carnival, Waikiki Beach on Memorial Day, Times Square at New Year's Eve, etc. | | |
| Test with a date range where all hotels are available | 32. hotel filter -- hotel query by date range. | | |
| Test with a date range where some hotels are unavailable | 32. hotel filter -- hotel query by date range. | | Unavailable hotels should be grayed-out on the map. |
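Most tests in Table 8-1 are written for visual inspection, but the first two acceptance criteria ("all hotels must match" and "no side-effects") can also be expressed as automated checks whenever the hotels on the map are available as data. This is a minimal sketch under an assumed, hypothetical data shape (hotel name mapped to a set of amenity strings); the function names are ours, not part of EA or the Mapplet.

```python
from collections.abc import Iterable, Mapping

# Hypothetical data shape: hotel name -> amenities that hotel offers.
HotelAmenities = Mapping[str, frozenset[str]]


def all_match(displayed: Iterable[str], hotels: HotelAmenities,
              required: frozenset[str]) -> bool:
    """Every hotel still on the decluttered map offers all required amenities."""
    return all(required <= hotels[name] for name in displayed)


def no_side_effects(before: Iterable[str], after: Iterable[str],
                    hotels: HotelAmenities, required: frozenset[str]) -> bool:
    """Only hotels that fail the criteria were removed from the map."""
    removed = set(before) - set(after)
    return not any(required <= hotels[name] for name in removed)
```

Both checks are needed: if a hotel that does have a golf course disappears from the map (say, because of an unrequested date filter), `all_match` still passes, and only `no_side_effects` catches it.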

The test report makes an ideal check-list to walk through with the customer. With it, you're basically asking: "Given that these are our acceptance criteria, have we missed anything?"[47] The customer may also use the test report to walk through the delivered product and test to his or her own satisfaction that it's all there. But whatever happens, don't rely on the customer to do this for you. There's nothing worse than having a customer say "Those tests you told me to run... they just confirmed that your software's broken!" In other words, make sure your own testers sign off on the tests before the product is handed over. This will make for a happier customer.

Given that you want your testers to be able to confirm that the finished software passes the requirement tests, you'll want to make sure that each test has a tangible, unambiguous pass/fail criterion. To that end, write test scripts that your testers can manually execute. Make each script a simple, step-by-step recipe. The test report in Table 8-1 shows some examples: it's easy to see from the Acceptance Criteria column what the tester will need to confirm for each test.
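One way to keep each manual script an unambiguous recipe is to pair every action with the observation that decides pass or fail. The sketch below is hypothetical (Python is used only to illustrate the format, and the step wording is adapted from the Individual Amenity Filtering test in Table 8-1): it prints a numbered checklist and treats the script as passed only when the tester confirms every step.

```python
from dataclasses import dataclass


@dataclass
class Step:
    action: str    # what the tester does
    expected: str  # what the tester must observe for this step to pass


def render_script(title: str, steps: list[Step]) -> str:
    """Format a manual test script as a numbered, printable recipe."""
    lines = [title]
    for n, step in enumerate(steps, start=1):
        lines.append(f"{n}. {step.action}")
        lines.append(f"   Expected: {step.expected}   [ ] Pass  [ ] Fail")
    return "\n".join(lines)


def verdict(results: list[bool]) -> str:
    """A script passes only if the tester confirmed every step."""
    return "PASS" if results and all(results) else "FAIL"
```

An empty result list counts as a failure, so a script nobody actually ran can't be reported as passing.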

As you identify and write the business requirements, you'll want to think about how the requirements are going to be tested. Some requirement tests can naturally be automated: non-functional requirements such as scalability will benefit from automation; e.g., you could write a script that generates a high volume of web site requests for "max throughput tests." Many business requirement tests, however, depend more on human observation. When possible, write automated tests, but don't delay the project in order to write them.
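As a sketch of the "max throughput" idea, the following Python sends a batch of concurrent requests and reports requests per second. It takes the request as a callable, `send_request`, a hypothetical stand-in that returns True on success; in practice you might wrap an HTTP call to a test environment (for example, with `urllib.request.urlopen`). The default request and worker counts are illustrative, not recommendations.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def measure_throughput(send_request: Callable[[], bool],
                       total_requests: int = 1000,
                       workers: int = 50) -> tuple[float, int]:
    """Fire total_requests calls across a thread pool.

    Returns (requests per second, number of failed requests).
    """
    def attempt(_: int) -> bool:
        try:
            return bool(send_request())
        except Exception:
            return False  # treat errors such as timeouts as failures

    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(attempt, range(total_requests)))
    elapsed = max(time.perf_counter() - start, 1e-9)  # guard against zero
    return total_requests / elapsed, results.count(False)
```

You'd then compare the measured rate and failure count against whatever threshold the non-functional requirement states.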

Note

As with scenario tests, automated requirement tests are really integration tests, as they test the product end-to-end across connected systems. We explore integration testing in Chapter 11.

As we explored earlier in this chapter, the business requirement tests are a customer-facing document: a methodical way of demonstrating that the business requirements have been met. So automating these might defeat their purpose. Admittedly, we've seen great results from projects that have automated requirement tests. However, the use case testing already takes care of "end-to-end" testing for user scenarios. Non-functional requirements—such as scalability or performance requirements—are natural contenders for automated scripts, e.g., to generate thousands of deals per minute, or price updates per second, in an algorithmic trading system; but it wouldn't make sense to try to generate these from the requirements model.

Export your requirement test cases to a task-tracking and scheduling tool. You'll want the test cases to be linked back to your requirements; during development, you'll want to track your requirements as tasks or features within a task-tracking tool such as Jira or Doors.[48] A "live link" back to the EA test cases is ideal, as you then don't need to maintain lists of requirement tests in two places. But failing that, exporting the test cases directly into your task-tracking tool—and then linking the development tasks to them—is an acceptable second-best. The goal is to make sure there's a tight coupling between the requirement tests, the requirements themselves, and the development tasks that your team will use to track progress.
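If a live link isn't available, the export can be as simple as a CSV file that your tracker's import tool can read. The sketch below is hypothetical: the column names are placeholders you would map onto your tracker's own fields during import (this is not Jira's or EA's actual export format). The key point is that every row carries the ID of the requirement it tests, so development tasks can be linked back to it.

```python
import csv
import io
from dataclasses import dataclass


@dataclass
class RequirementTest:
    requirement_id: int
    requirement: str
    test_name: str
    acceptance_criteria: str


def export_csv(tests: list[RequirementTest]) -> str:
    """Write one row per requirement test, keeping the requirement ID for traceability."""
    out = io.StringIO()
    writer = csv.writer(out)
    writer.writerow(["Summary", "Requirement ID", "Requirement", "Acceptance Criteria"])
    for t in tests:
        writer.writerow([t.test_name, t.requirement_id, t.requirement,
                         t.acceptance_criteria])
    return out.getvalue()
```

Because `csv.writer` quotes fields as needed, descriptions containing commas (like the amenity sets in Table 8-1) survive the round trip intact.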

Communication is, as we all know, a vital factor in any project's success. The requirement tests, in particular, are the criteria for the project's completion, so everyone should be well aware of their existence. The requirement tests redefine the business specification in terms of "How do I know this is done?" With that in mind, it's important to make the requirement tests visible, easy for anyone in the project to access, and, of course, up to date. The best way to achieve this will vary depending on the project and even your organization's culture. But here are some possibilities, all of which work well in the right environment:

Publish the generated test report to a project wiki.

Email a link to the wiki page each time the test cases are updated (but don't email the test cases as an attachment, as people will just work off that version forever, no matter how out of date it becomes).

Print the test cases onto individual "story cards" (literally, little cards that can be shuffled and scribbled on) and pin them up on the office wall.

Print the requirements onto individual story cards, with the requirement on one half and the test cases summarized on the other half.

Make sure to involve your testing team closely in the process of creating requirement tests. We've seen many projects suffer from "over the fence syndrome"—that is, the business analysts put together a specification (if you're lucky) and throw it over the fence to the developers. The developers code up the system and throw it over the next fence along to the testers. The testers sign off on the developed system, maybe even referring back to the original requirements spec, and then the system gets released—thrown over the really big fence (with the barbed wire at the top) to the customer waiting outside. That's not the way to operate.

In theory, the sort of project we've just described ticks the boxes of "failsafe development." There are requirements specs, analysts, even a team of testers, and a release process. So what could possibly go wrong? The problem is that in this kind of setup, nobody talks to anybody else. Each department regards itself as a separate entity, with delineated delivery gateways between them. Throw a document into the portal, and it emerges at some other point in the universe, like an alien tablet covered in arcane inscriptions that will take thousands of years for linguistic experts to decipher. Naturally, requirements are going to be misinterpreted. The "sign-off" from the testers will be pretty meaningless, as they were never closely involved in the requirements process, so their understanding of the true business requirements will be superficial at best.

Really effective testers will question the requirements and spot loopholes in business logic. And you'll be eternally grateful to them when they prevent a project from being released into production with faulty requirements. But the testers don't stand a chance of doing this if they're not closely involved with developing the requirement tests.

Tip

Let the testers "own" the requirement tests (i.e., be responsible for their development and maintenance). The customer and your business analysts build the requirements up; the testers write tests to knock them down again.

Another way to think of the business test suite is as an independent audit created by a testing organization to show that the system created by the developers does what the customer intended. If the developers are left to manage the testing themselves, then you end up with the "Green Bar of Shangri-La" that we discussed in Chapter 1: the system tells us there's a green bar, so everything's okay... This is why it's vital to have a separate team (the QA testers) verifying the software. And if the testers are involved early enough, they will also verify the requirements themselves.

In this chapter we looked at the "other half" of acceptance testing, business requirement tests (where the first half was scenario tests, in Chapter 7). We also looked at the business requirements for Mapplet 2.0, which brings us to the conclusion of the Mapplet 2.0 project. Once the requirements were verified, the software was ready for release. We invite you to view the final Mapplet on www.VResorts.com!

Our strategy for requirement testing, combined with the scenario testing we discussed in Chapter 7, supports an independent Quality Assurance organization that provides a level of assurance that the customer's requirements have been met, beyond the development team asserting that "all our unit tests light up green."

The capabilities of Enterprise Architect's Testing View, combined with the Agile ICONIX add-in, support requirement testing by allowing the following:

Automated creation of tests from requirements

Ability to create multiple test scenarios for each requirement test

Ability to populate test scenarios with Description, Acceptance Criteria, etc.

Ability to automatically generate test plan reports

This concludes Part 2 of the book, where we've illustrated both developer testing and acceptance testing for the Mapplet 2.0 project. Part 3 introduces some fairly technical "Advanced DDT" concepts that we hope the developers who are reading will find useful. Less technical folks might want to skip directly to Appendix "A for Alice", which we hope you'll enjoy as much as we do.

[43] See Extreme Programming Refactored - Part IV, "The Perpetual Coding Machine" and Chapter 11, starting on p. 249, for a satirical look at this philosophy. Then, if you'd like to know what we really think, just ask.

[44] Doug recently had one ICONIX training client (with a very complex domain model) report that he identified 30% more domain classes just by writing one-sentence definitions for the ones he had. That's a pretty good "bang for the buck."

[45] See Peter de Grace's insightful book The Olduvai Imperative: CASE and the State of Software Engineering Practice (Yourdon Press, 1993). It's unfortunately out of print, but the chapter "An Odyssey Through the Ilities" by itself makes the book well worth hunting down.

[46] At the time of writing, this is under the Add-In... ICONIX Agile Process sub-menu.

[47] In practice, these tests indeed did catch several things on the Mapplet 2.0 project. Testing for no side-effects revealed that always filtering against a default date setting, even when the defaults were unmodified, resulted in too many hotels being removed from the map as "unavailable", and testing by date range where some hotels are unavailable discovered that the "unavailable hotels should be grayed-out" requirement had inadvertently been declared to be "Optional", with (pre-deployment) fixes underway as the book went to press. Are requirement tests important? We vote yes.

[48] If you happen to be using both EA and JIRA, and want a nice way to integrate them, check out the Enterprise Tester product, available from: www.iconix.org. Enterprise Tester is a web-based test management solution that provides traceability between UML requirements and test cases.