18

Evaluation and Testing

Once a commercial videogame is released to the public, the only evaluation and testing the game needs is the return on investment (ROI) it generates. It’s either a hit or a miss. While much focus group testing will be done if a sequel gets placed on the drawing boards, the creators are able to move on quickly. The release date is the finish line.

Pedagogical and training simulations are a different matter. The ROI isn’t measured in dollars, but whether users learn and grow. Precisely measuring inherently internal states has forever been the bane of education experts. While we can more easily evaluate rote memorization and ability and speed in accomplishing certain tasks, quantifying improvements in understanding and experience is much more difficult, even though outcome-based assessments have been a key emphasis of pedagogical evaluation this past decade.

DEVELOPING COHERENT PEDAGOGY

On the plus side, computer simulations offer the potential of gathering and culling rich data sets, which should aid in comparative and contextual evaluations. However, knowing what user behavior and input to log—and how to draw meaning from raw data—doesn’t come so easily.

As discussed earlier in this book, research, need, and observation should drive the formulation of the pedagogy to be transferred to the user. But sometimes the purpose of the training or pedagogy can get lost in the data collection and research. Once teaching points and decision crossroads are forged, they need to be sifted and checked against the “Big Picture” of the pedagogical goals. The training or pedagogy needs a coherent throughline: both for the user, who must navigate the complex pedagogical storyworld and quickly master the transferable content, and for the evaluator, who must effectively measure the content transfer.

Without a clear-cut throughline, pedagogy can easily fragment into disparate pieces of knowledge transmission, some redundant or even contradictory. In attempting to teach a lot, authors and content experts can wind up teaching very little. Users finding contradictory material can begin to feel: “I don’t trust this game. It doesn’t even know when it contradicts itself.” Similarly, redundant or contradictory pedagogy can trigger a loss of user motivation, threatening the integrity of all collected data. (Users who lose the desire to show they’re mastering material may begin behaving inconsistently or irrationally, a sign they’re bored or confused.)

The dangers of unfocused pedagogy increase the more that the desired content transfer is high level. If we’re teaching users how to dismantle a bomb, we can more easily design evaluation tools that measure our success or shortcomings. If we’re teaching executive management skills for a variety of typical situations, measuring progress can become more difficult and unreliable, especially if the skills we’re teaching are too varied or hard to define.

Our goal should be to construct a Big Picture of the pedagogical content. The content itself should have narrative fidelity and coherence, in other words: a storyline. And simulations should focus on the transfer of one or two Big Picture concepts. Given the cost of simulation development (especially in the initial ramp-up), it’s easy to feel compelled to try to cover everything in a single experience. But training and skill advancement should be viewed as a continual process. And if simulation design and construction workflow are in place, bringing new simulations online should become progressively less expensive.

PEDAGOGY MUST DRIVE SIMULATION DESIGN

If simulation design occurs prior to the development of pedagogy, final assessments of the pedagogical transfer may become unreliable. Questions will always arise as to whether the authored content, the user experience, the user input, and the selected evaluation tools were appropriate for the content. Indeed, successful integration of pedagogical content with inappropriate delivery, media, and interactivity would be impossible.

It can be very tempting to use the latest and greatest in technology. Often, the selection of “sexy” technology can aid fundraising and help garner project go-aheads. However, many simulations do not need the latest 3D game engine or virtual reality environment to achieve their goals—in fact, simulations can become less immersive the more they rely on cutting-edge technology.

For example, a simulation located in the stock-trading world might be better served by using a strictly 2D, Internet-delivered platform. While it would be possible to create a navigable 3D world where a user could walk onto the trading floor and interact with other traders, this may be distracting when the primary goal is (let’s say) the improvement of user decision making during a world economic crisis (which is supposed to be based on incoming 2D data). User distraction is bound to negate some of the learning and also offer up potentially misleading data when measuring user comprehension and advancement.

Clearly, project budget, along with available facilities, personnel and technology, will also impact the choice of simulation platform and environment. But given that the least expensive approaches always entail unexpected costs—and that the cost of today’s high-end technology can suddenly plummet—first emphasis still needs to be placed on how the selection of platform, interactivity, and media can further content transfer and assessments of that transfer.

As discussed in the previous section, only a coherent pedagogy will give us a reliable sense of our technology, interface, usability, and measurement needs. With this in hand, we can begin to ask questions about how to achieve effective teaching and about the user experience that may facilitate this.

Are we stressing interaction with fellow humans (whether fellow users or synthetic characters) in a spatial, synchronous (i.e., face-to-face) environment—or is the desired kind of interaction asynchronous and remote (via e-mails, documents, voicemails, etc.)? Are we focusing on the rapid assessment of similar kinds of input (spreadsheets, charts, etc.), or of disparate types of input (spreadsheets, colleague concerns expressed face-to-face, news videos, an unpleasant supervisor encounter, unexpected workplace events [e.g., a layoff, an industrial accident] that unfold, etc.)? Are we more interested in rapid decision making, or in contextual user behavior? What knowledge level are users starting out at, and what knowledge level do we want them to ascend to? Are users comfortable within this simulation environment, or will there be a steep learning curve and likely discomfort throughout the experience? (For example: users younger than 30 will probably be very comfortable in a real-time, 3D, computer graphics environment, and more inclined to enjoy the training; middle-aged users will probably be more comfortable with a 2D environment, and may not be motivated to master a 3D simulation.)

Deconstructing our pedagogy, and exhaustively examining the user experience needed to both simulate the real-world environment and open the door to teaching and modeling behavior and knowledge, should begin to lead us to the appropriate selection of platform, simulation environment, media, and user interactivity. However, we will also need to ask whether the platform will allow us to effectively measure progress, retention, and mastery of material.

Only when we’ve reliably determined how the pedagogy can best be rolled out, what the user interactivity needs to be to advance the pedagogy, and how we can evaluate user learning, can we make a fully informed decision about simulation design.

STORY CONTENT AND INTERACTIVITY AS IT RELATES TO PEDAGOGICAL EVALUATION AND TESTING

In earlier chapters, we’ve provided evidence that story content can help transfer pedagogical content. However, story content needs to be examined in light of the evaluation and testing to be done. Are the pedagogical goals remaining “loud and clear” after the infusion of story, or, are the training elements getting diluted or contradicted by the story elements? Will the story content undermine learning evaluations, or even worse, render them invalid?

The story needs to be analyzed for its ability to capture the attention of the user and its ability to complement, and not overwhelm or distract from, the training goals. Is the story aiding in motivating the user, or is it obscuring the primary intent of the simulation? If the story is viewed as being a drag on the user or getting in the way of the user (as many videogame storylines do), then new effort needs to be placed on creating a more compelling and necessary story. (Perhaps the story has been pasted on top of the primary simulation, or fails to integrate with user interactivity and testing.)

The addition of story content can easily lead to a lengthening of simulation running times and user sessions, and the attention span of a typical user needs to be considered. Complexities of story can diminish user focus and attentiveness, decreasing learning retention and comprehension and threatening in-game evaluations. Story content needs to be “bite-sized,” or easily re-accessed if necessary, to support user attention spans and pedagogical activities.

We need to look at the assessment process as well, particularly if we are considering repurposing previously designed learning assessments. These older assessments will not be focused on how the story content moves the learning narrative along, and may not adequately embrace the added nuances and ambiguities that story content has brought to the experience. Consequently, the assessments may be one-dimensional and misleading with respect to what the user has learned.

Rather than being created in a vacuum, assessments will need to be created with an eye toward what the story content has added to (or subtracted from) the experience. Assessments themselves may need more nuance and precision, and may need a complete redesign, rather than a band-aid modification for the new simulation.

With the further addition of user interactivity into the simulation, evaluation and testing becomes even more complex. If there are branching storylines, will every path offer the same matrix of pedagogical content? If not, will the evaluation tools take this into account and recognize different levels of content transfer? If the simulation offers more nuanced and complex user interaction (where the simulation dynamically adjusts content and builds stories on the fly depending on the simulation state engine), will testing be able to fully evaluate the user’s decision making and performance? If solutions are open ended, how do we measure user reasoning and skill acquisition, rather than simply marking the “correct” solution? If cooperative teamwork and leadership are at the heart of the interactivity, how can we quantify their progress?

In short, the addition of story and interactivity will have tremendous impact on the evaluation and testing we do; and unless we account for this impact, we will fall short of fully evaluating user progress.

FORMATIVE EVALUATION

Once the research and data collection have coalesced into (1) a coherent pedagogical narrative, and (2) discrete teaching points and decision points, a front-end evaluation of the planned user demographic should be done. This is often called a formative evaluation, which typically explores a simple but critical question: Where do our users currently stand in relation to the pedagogical content? We need to set a baseline. Where are they strong, where are they weak, and how do they evaluate their own skills and knowledge in the area?

These formative evaluations should be done on paper and in focus groups, so we can gather a broad spectrum of data sets and have confidence in our understanding of the proposed users. Obviously, the evaluation instruments may vary wildly: Do we need to measure students’ mastery of soft skills, or their ability to plan strategies, or their situational awareness and tactical decision making in a dangerous situation?

USC professor Dr. Patti Riley, who led the evaluation and testing for Leaders, says that testing is more than a matter of putting together a couple of questionnaires and tabulating results: “You need someone with a couple of years of college statistics [to guide the testing and develop useful measurement tools].” This person on the development team should collaborate with a subject matter expert to help shape and guide the evaluations—and not lose sight of the pedagogy’s “Big Picture.”

Aside from knowledge in statistics and analytics, the ideal assessment leader should have a good foundation in psychometrics. Psychometrics studies the seemingly unquantifiable: cultural baggage, quality of life, personality, and other high-level mental abstractions. Psychometric assessments and data collecting will broaden the scope of our initial testing and end-of-simulation testing.

The more we know about our users, the better we can design the simulation around their expectations, behaviors, and needs. Given the addition of story content into the learning arena, we should also find out how comfortable users are with ambiguity and nuances. Can they see the underlying learning principles within the context of story, or are they going to need additional help in navigating the story space?

If users have previously been getting the training content via a classroom setting or assigned readings or quasi-simulation experiences, then attention needs to be paid to the progress they typically made in these situations. Part of the summative evaluation (which we’ll soon discuss) will be determining whether the story-driven simulation has enabled students to make greater progress with content or enabled them to master content more quickly.

Good testing and evaluation practices dictate that summative evaluation commences with the simulation’s “proof of concept.” The proof of concept should dole out enough content transfer that user progress can be measured, and the summative evaluations planned for the full project can be rolled out, tested, and refined.

EVALUATIONS DURING THE SIMULATION

In an interactive environment, it becomes tempting to allow the user complete freedom of action. If the user makes mistake after mistake after mistake . . . well, that’s real life, and the user has the right to do this in an interactive story space.

However, according to Dr. Riley, research has consistently shown that users showing a pattern of incorrect decision making need intervention. Allowing the user to consistently fail, with no feedback on the shortcomings, will actually reinforce the undesired behavior. In addition, user data and evaluations will begin to lose their integrity and value if users are allowed to compound their errors over a sustained period.

In addition, users begin to get demotivated if they are recognizing that their performance is sub par; and if the storyspace environment is giving them no feedback whatsoever on their performance, then that’s an inherent gameplay design flaw (users should be able to see or experience results deriving from their input). Instead, users need to get frequent feedback on their progress, and even more feedback when they’re not engaging with and understanding the pedagogical content.

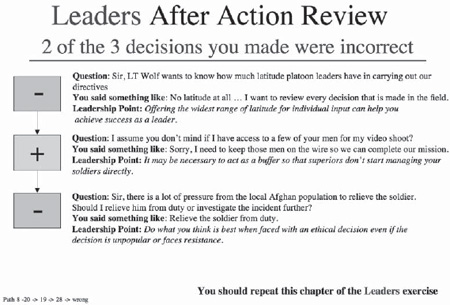

This certainly argues for simulations to be broken up into identifiable chapters or intervals, with “after action reviews” or “in process assessments” concluding each chapter. Ideally, the after action review can be designed to gather further insight into user reasoning and motivation for decisions, and can offer guidance for the user who’s gone astray. The most sophisticated interactive simulations will then be able to customize the next chapter (or an additional chapter) of the story space to give the user further opportunity to master the training material. (See earlier discussions on the Experience Manager (Chapter 10) and on automated story generation (Chapter 16).) An alternative approach is to guide users in repeating the chapter, so they can implement the feedback they’ve received in the after action review.

The instructor-in-the-loop approach may allow for minute interactions with users to keep them on-track, and prevent them from continual repetition of incorrect behaviors. In essence, the after action review would be happening throughout the experience. For certain simulations, it may be preferable to offer nearly continual feedback to users. However, this will clearly diminish the feeling of “free will” that interactivity grants users, and this amount of handholding may reinforce an undesirable dependency on superiors, rather than independent decision making and confidence building.

Dr. Riley recommends that pedagogical content be “chunked,” with smaller issues or less complex material appearing in the early chapters or levels of a simulation, allowing for a user ramp-up in the uses of the system and a more holistic understanding of the story space. Fortunately, this type of rollout complements the classic story structure, which should also ramp up plot developments and character revelations. In addition, this mirrors the training and assessment techniques that commercial videogames already use: most games will start with one or more tutorials, gradually ramping up the level of difficulty and familiarizing the user with the game environment, control behaviors, and game flow.

Figure 18.1 Sample after action review screen from the Leaders simulation.

One critical component in users’ absorption of learning materials in a simulation environment is whether both the learning and the simulation space meets their expectations. Learning that seems disconnected from the simulation environment, or from users’ constructions of their own realities and needs, becomes easy to ignore or distort. User progress will stall, and learning assessments will be less revealing.

In this also, the learning process mirrors the suspension of disbelief necessary for stories. Most narratives carry with them genre expectations: we know the basic rhythms and underlying assumptions of a crime story, a horror story, a teen comedy, and so on. And when these are severely violated, defeating our expectations, we have difficulty enjoying the story.

As discussed earlier in this chapter, the pedagogy needs to drive the simulation design. Designers, training leaders, and evaluators need to collaborate in making sure that the learning is matched to the simulation environment and narrative, and that both are matched to user expectations.

SUMMATIVE EVALUATIONS

Summative evaluations attempt to verify whether defined objectives are met, and whether teaching content has been effectively received by the target audience.

If the simulation has been designed hand-in-hand with testing and evaluation instruments, and if the data logs (and page tagging, if used) are accurately constructing the reality of the story space, the potential exists for deriving extremely rich assessment data: data whose capture could only be dreamed of before. On the simplest level, this can include user elapsed time in meeting objectives or handling tasks, number of self-corrections, number of mistakes made or dead ends taken, and so on.

With the totality of this kind of data, evaluators should be able to study the internal learning process that users undertake: they can measure users against themselves (such as runners are always competing against their previous race times) and see how users change the way they make decisions. Assessments can also get to the underlying reasons for user assumptions and user decisions, via a comprehensive or “final” after action review that would be a full debriefing of the experience.

Dr. Riley envisions the day when evaluators may be able to “slot in” an assessment package with large databases of peer data, means, and standard deviations, making learning assessments for a simulation much easier to design, execute, and evaluate. But for now, each story-driven simulation is likely to require highly customized assessment tools and instruments.

Simultaneously, we can expect to see pedagogy engines and assessment engines that are as robust as today’s game engines. Work in this arena is already underway. But for now, pedagogical and assessment implementation will still need to be “hand-tooled” and will lag behind the game technology itself.

Despite this, we can see that possibly the biggest single advantage of computer-based pedagogical simulations is in the collection and analysis of complex assessment data, allowing training leaders to better refine their teaching, target more precisely the difficult areas of their pedagogy, and build better and more complex simulations. This advancement from multiple-choice exams and computer-read scantron forms is like the advancement from a stone-flaking tool to computer-based 3D modeling.

SUMMARY

For effective, trustworthy evaluations and assessments to be performed on a simulation, a very coherent pedagogy needs to be designed: the “Big Picture” of the intended learning. This pedagogy should drive the design of the simulation. While simulation design is being undertaken, formative testing should commence: gathering data on the expected user population, evaluating previous methods of disseminating training, and assembling a user baseline for the start of the simulation. Assessment instruments need to take into account the challenges that story narratives and user interactivity present: old assessment tools will need substantial redesign, or more likely, replacement. Throughout the simulation, users should get both in-game feedback and after action reviews, particularly to avoid the reinforcement of poor user decision making or incomplete comprehension of pedagogy, as well as user demotivation. If the proper formative testing and in-game feedback have been done, summative testing of story based simulation training holds the potential for gathering very rich assessment data, giving a much better picture of user progress and behavior patterns, and contributing to the building of even better or more complex simulations.