25

Audio

Audio is probably the most underrated and undervalued media asset, yet its functionality, malleability, and power are enormous. Theatrical films ignored sound for three decades, and although this was largely due to technological limitations, sound design and sound delivery have been catching up ever since the advent of talkies. While CinemaScope, 3D, and other visual breakthroughs arrived in the 1950s, Dolby sound and the THX format came about a quarter century later.

Similarly, until relatively recently, television sound was delivered out of a tinny monaural speaker, long after color broadcasts, chroma-keying, and other visual techniques had matured.

And because computers could initially deliver only beeps, and later came equipped with speakers that could have been castoffs from cheap 1960s AM radios, sound was an all but ignored component of videogames. Once again, audio has been playing catch-up.

Paying attention to audio design, audio recording and capture, and audio delivery will yield major dividends in any simulation project. The creative use of audio can vastly enhance both the immersiveness and the pedagogical breadth and depth of a project, and can often disguise a constrained production budget and limited production resources. Audio can even salvage segments or entire projects that have fallen short of their goals.

Let’s take a look at different types of audio, and how we can better enhance a simulation environment through their shrewd design and production. These audio types include onscreen nonplayer character (NPC) and user dialogue, offscreen NPC and user dialogue, voiced narration and instruction, sound effects, and music. (Dialogue, as used here, denotes any speech from a character, as it is defined in screenwriting and playwriting. Consequently, an NPC or user soliloquy is still considered dialogue.)

ONSCREEN NPC DIALOGUE

People rarely pay much attention to the silent security video monitors stationed in department stores, supermarkets, and so on. However, if these monitors suddenly carried audio and began eavesdropping on conversations, passers-by would soon be riveted to the screens. You’ve seen this behavior in action if you’ve ever shopped for a television set at an electronics store: characters talking onscreen are difficult to ignore, and shoppers have a hard time pulling their attention away from the demo sets. Similarly, if you’re hosting a party, you’d better make sure the TV is off: strangely, once the sound is turned up and onscreen characters are talking, the TV has an almost hypnotic effect, even when flesh-and-blood people are also in the room!

The power of onscreen characters who actually speak is undeniable. The fact that they talk makes them more believable, pulling us deeper into the simulation. Onscreen characters accompanied by pop-up dialogue balloons (à la comic books) or by text windows containing dialogue remind us of the artificiality of the simulation experience, working against the goals we have in applying story elements to simulation.

However, synchronizing (i.e., “syncing”) voiced character dialogue to character lip movement is difficult and expensive to achieve (whether with live-action actors or animated/virtual actors). If we’ve decided to include character dialogue, do we need to sync it to lip movement?

If we are using live-action onscreen actors, the answer is yes. Most of us have seen a badly dubbed foreign film in which dialogue is clearly out of sync with lip movement. We know how distancing this is: the immersive movie experience takes a back seat to our constant awareness of the dubbing, and even dramatic scenes often become comic in the rendering.

However, we seem to be far more forgiving of out-of-sync dialogue when it comes from the mouth of a virtual actor. Nobody expects Homer Simpson’s lips to conform exactly to his dialogue, and the same goes for characters in Grand Theft Auto. Some of this has to do with how closely our virtual actors resemble human actors; some of it has to do with the size of the screen. One of the objections some viewers had to The Polar Express and to Final Fantasy: The Spirits Within was the very slight disconnect between lip movement and dialogue. But the virtual actors cast as NPCs in Leaders, though three-dimensional and clearly human, didn’t aim to replicate live-action figures exactly. As a consequence, relatively primitive lip movement sufficed for delivering character dialogue.

Tools to achieve character lip sync keep improving. For example, Di-O-Matic’s Voice-O-Matic (http://www.di-o-matic.com/products/Plugins/VoiceOMatic) is a 3ds Max plug-in that automates lip syncing for 3D models. While its lip syncing isn’t perfect, it’s pretty good. As lip-syncing tools continue to develop, the problems of achieving good character lip sync diminish.

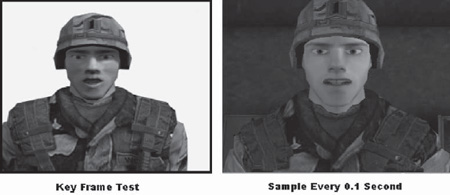

Figure 25.1 Presentation of some of the experimentation with automated lip sync that was carried out as part of the Leaders project. Various automated syncing methods were tried, including random mouth movements, which seemed to work quite well.
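The caption notes that random mouth movements worked quite well. A minimal sketch of that idea follows, assuming the dialogue clip has already been reduced to one normalized loudness value per animation frame. The function name, the silence threshold, and the openness range are hypothetical, and gating on loudness (so the mouth closes during pauses) is an added assumption, not a documented detail of Leaders.

```python
import random


def random_mouth_track(amplitudes, threshold=0.05, min_open=0.2,
                       max_open=1.0, seed=None):
    """Return one mouth-openness value (0.0 = closed) per animation frame.

    amplitudes: per-frame loudness of the dialogue clip, normalized to 0..1
    (an assumed input; how it is measured is left to the audio pipeline).
    """
    rng = random.Random(seed)
    track = []
    for amp in amplitudes:
        if amp < threshold:
            # Silence: keep the mouth closed so pauses read as pauses.
            track.append(0.0)
        else:
            # Speech: choose a random openness so the mouth "flaps" while talking.
            track.append(rng.uniform(min_open, max_open))
    return track
```

For example, `random_mouth_track([0.0, 0.3, 0.6, 0.01, 0.4], seed=1)` yields closed mouths on the first and fourth frames and random openings on the others. Passing a seed makes the track repeatable, which helps when the same line must look identical on every playback.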

Internet delivery of your simulation may complicate the lip-sync decision, since users of commercially available DSL may experience latency and lag in dialogue cueing. Clearly, a proof of concept should precede a final go-ahead in this situation. Similarly, dialogue cueing may be more problematic on a less-than-robust local area network (LAN), particularly if the LAN is serving other purposes at the same time.

One of the “cheats” that can be used to deliver non-synced onscreen NPC dialogue is the technique of “degraded video.” Back in the 1990s, when audio and video storage and bandwidth were still at a premium, the massive (and highly successful) videogames Wing Commander III and Wing Commander IV used precisely this technique to deliver many hundreds of lines of dialogue. The games’ central conceit turned the player into a space fighter pilot flying with a wingman. It was believable that, even far in the future, transmitting high-grade video between fighter ships approaching the speed of light would be impossible. Thus, postage-stamp-sized video, grainy and stepped down in frame rate, conveyed a close-up of a wingman (or a mothership communications officer) who would frequently talk to you. None of the dialogue was lip synced, but the player never knew the difference.

This technique has to “play” believably, of course. But many situations suggest the use of “degraded video” to convey the presence of an NPC. For example, the NPC could be speaking via a satellite-uplinked videophone (as seen on CNN) or via videoconferencing (where the frame rate is stepped down and the video window is reduced). The NPC could be speaking in a darkened room or via streaming video onto a handheld device. The NPC could be a mechanic working underneath a car, or a patient whose face is bandaged after cosmetic facial surgery (very believable in a simulation about cosmetic surgery or bedside manner!).

Some simulations (such as the Wing Commander series) will lend themselves to the degraded-video technique; others will allow for only a very spare use of the cheat. But look for opportunities to exploit this technique: in varying the overall look of the simulation, you’ll enhance the immersiveness of the project. (In the real world, we’re used to contending with different, and not always optimal, visual conditions.)

In sum, there is less excuse than ever for presenting NPC speech as text in a simulation. Users expect to hear audio when NPC dialogue is included, and nonaudio substitutions will reduce the immersiveness of the experience.

PRERECORDED VS. REAL-TIME SYNTHETIC NPC DIALOGUE

One decision to make is whether to rely on prerecorded comments, responses, questions, and monologues for your NPCs, or whether to attempt delivery of more truly synthetic, AI-driven and assembled speech, based on a library of phrases, phonemes, etc. (See Chapter Sixteen for discussion of on-the-fly narrative construction.)

Clearly, prerecording all NPC dialogue will restrict the flexibility, replayability, and illusion of spontaneity of the dialogue. This can be somewhat ameliorated by randomizing NPC responses. For example, three or four content-identical pieces of dialogue can be rotated in and out, either on a purely randomized or a sequenced basis (e.g., selection 1 is played the first time, selection 2 the second, etc.), or some combination of the two.
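The rotation schemes just described can be sketched as a small helper. This is a hypothetical illustration rather than code from any particular engine: the class name, the mode names, and the file names are assumptions, and the "shuffle" mode is only one possible reading of "some combination of the two."

```python
import random


class LineRotator:
    """Rotate content-identical recordings of one NPC line (hypothetical helper).

    mode="sequence": take 1 the first time, take 2 the second, and so on, wrapping around.
    mode="random":   any take, chosen independently each time.
    mode="shuffle":  every take plays once per round, in random order,
                     before the round starts over.
    """

    def __init__(self, takes, mode="sequence", seed=None):
        if not takes:
            raise ValueError("need at least one take")
        if mode not in ("sequence", "random", "shuffle"):
            raise ValueError(f"unknown mode: {mode}")
        self.takes = list(takes)
        self.mode = mode
        self.rng = random.Random(seed)
        self.count = 0   # how many times this line has been requested
        self.bag = []    # takes still unplayed in the current shuffle round

    def next_take(self):
        if self.mode == "sequence":
            take = self.takes[self.count % len(self.takes)]
        elif self.mode == "random":
            take = self.rng.choice(self.takes)
        else:
            if not self.bag:
                # Start a new round: refill and shuffle the bag.
                self.bag = self.takes[:]
                self.rng.shuffle(self.bag)
            take = self.bag.pop()
        self.count += 1
        return take
```

For example, `LineRotator(["greet_1.wav", "greet_2.wav", "greet_3.wav"]).next_take()` returns `"greet_1.wav"` on the first call and `"greet_2.wav"` on the second. The shuffle mode guarantees that every take is heard once per round, which tends to hide repetition better than pure randomness, where the same take can come up twice in a row.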

However, this approach increases the burden of scripting, budgeting, casting, production, asset management, and postproduction for audio dialogue. Consequently, we have to weigh the costs of repetition and lack of content customization against development and production costs. In addition, the game engine will have to manage an ever-larger library of audio media assets, which can affect performance.

Creating truly synthetic dialogue, on the other hand, may exact even higher costs. We must develop either an expansive phrase library (one that can recombine into almost countless dialogue snippets) or a complete phoneme library. We must also have in place a robust assembly AI, along with a state-of-the-art speech engine capable of seamlessly swapping phrases in and out or blending phonemes on the fly, based on a vast vocabulary library. Most of us have experienced some form of synthetic speech, usually on the telephone, and we know that this speech doesn’t sound “human.” The human voice is almost infinitely nuanced, and synthetic speech has not yet come anywhere near that level of sophistication.

Consequently, while prerecorded dialogue blocks may defeat immersiveness through repetition or slight inappropriateness, even state-of-the-art synthetic speech will remind us of the mechanics beneath it. At this moment, neither approach is fully satisfactory. For most projects, however, prerecording all NPC dialogue is currently the more economical and efficient choice, though it is not ideal.

USES OF ONSCREEN NPC DIALOGUE

The uses of onscreen NPC dialogue are many and varied, of course. An NPC can directly address the user, offering backstory, situational exposition, instructions, agendas, plans, choices, and other informational, pedagogical, and testing content. NPCs can also engage in dialogues with each other that accomplish any of the above.

The typical talking-heads scene will involve two NPCs in conversation (perhaps with the user avatar as listener or participant, perhaps as a “movie scene” that cheats point-of-view since the user is not officially “present”). However, we can vary this as much as we want, borrowing from cinematic language.

For example: multiple, simultaneous NPC conversations can quickly immerse us in a world. Robert Altman has used this technique many times in early scenes of his films, as a moving camera eavesdrops on numerous, independent conversations (whether on a studio lot, at a sumptuous dinner table, or elsewhere). Skillfully done, this can quickly convey the rules of the world, the current conflicts, and the central participants in a story-driven simulation. Somewhat paradoxically, the semi-omniscient camera feels “real” to us. All of us have had the experience of moving through a movie theater, ballroom, or sports stadium, hearing snatches of conversation (which we’d often love to hear more of).

Onscreen, this technique feels authentic because it gives us the “feel” of a larger space and larger environment that spills out from the rectangular boundaries of the screen.

The art of NPC dialogue lies in making it sound natural rather than didactic. Again, television shows and films are the best examples: their dialogue is always carrying expository material, character backstory, and other information, but at its best, it seems driven purely by character agendas, actions, and reactions.

NPC dialogue might also come from a guide, mentor, or guardian angel character who enters the screen when the user asks for help (or when the user’s input clearly indicates confusion or regression). To some degree, this may diminish the immersiveness and realism of the simulation, but if it is set up cleverly, the technique need not feel particularly intrusive. The angel in It’s a Wonderful Life, for example, is a believable character within the context of the fantasy. On the other hand, Clippy, the help avatar in Microsoft Office 97 through Office 2003, is an example of a “spirit guide” who breaks the immersive nature of the environment. Rather than an elegant and seamlessly integrated feature, Clippy feels crude and out of place.

Our mentor character might be a background observer to many or all key actions in our simulation, or could be someone “on call” whenever needed (who also “happens” to be available when the user gets in trouble). A supervisor character can also be a mentor. For example, in the Wing Commander series, the ship’s captain would devise missions and advise the user avatar, while in THQ’s Destroy All Humans, the aliens’ leader teaches the user avatar how to destroy Homo sapiens.

Whether the mentor should be an NPC or a live instructor is a question taken up in other chapters of this book, which weigh the pros and cons of each approach.

ONSCREEN USER DIALOGUE

But what of user dialogue (i.e., user responses, user questions, user exclamations, etc.) delivered via a headset or from a keyboard (with text then translated to speech) that could then be delivered by an onscreen user avatar and understood and responded to by NPCs?

As previously discussed, the decision made in Leaders was to avoid (for now) any use of audio in rendering user dialogue. The Natural Language Interface had enough difficulty parsing text input from a keyboard. Translating text input in real time into digitized speech that would become an active part of the immersive experience was impossible (the slowness of keyboard input alone is reason enough to avoid the effort). Translating spoken language into textual data that could then be parsed was equally beyond the capabilities of the technology.

One can imagine a simulation that might succeed with a natural language recognition system that looks for one or two keywords in user-delivered dialogue, but this seems to imply very simplistic content and responses. (You may have encountered natural language recognition systems, used by the phone company or the state motor vehicles department, that attempt to understand your speech; they work reasonably well provided you don’t stray beyond a vocabulary of five or six words.)
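A keyword-spotting approach of this kind can be sketched in a few lines. The sketch assumes the user's speech has already been turned into text by some recognizer (not shown), and the intent names and keyword sets are hypothetical examples. Its brittleness is exactly the limitation described above: anything outside the keyword list simply returns no match.

```python
import re

# Hypothetical intents for a simple scenario; each is triggered by a few keywords.
INTENTS = {
    "request_help": {"help", "assist", "stuck"},
    "ask_location": {"where", "location", "map"},
    "agree": {"yes", "agree", "okay"},
}


def spot_intent(utterance, intents=INTENTS):
    """Return the intent with the most keyword hits in the utterance, or None.

    Ties go to whichever intent appears first in the dictionary.
    """
    words = set(re.findall(r"[a-z']+", utterance.lower()))
    best, best_hits = None, 0
    for intent, keywords in intents.items():
        hits = len(words & keywords)
        if hits > best_hits:
            best, best_hits = intent, hits
    return best
```

Here `spot_intent("Where is the map?")` returns `"ask_location"`, while `spot_intent("Tell me about the weather")` returns `None`, leaving the simulation to fall back on a canned "I don't understand" reply.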

Though text-to-speech and speech-to-text translation engines continue to improve, the error rate in these translations probably still prohibits robust use of user-voiced responses and questions within a pedagogically based, story-driven simulation employing NPCs. However, this element has tremendous potential for the future. (See http://www.oddcast.com for a demonstration of a text-to-speech engine with animated 2D avatars. The results are surprisingly effective.)

For now, we can probably incorporate user-voiced dialogue only when our simulation characters are primarily other users, rather than NPCs. Indeed, this device is already used in team combat games, where remotely located players can issue orders, confirm objectives, offer information, and taunt enemies and other team players, using their headsets. The human brain will essentially attach a given user’s dialogue (heard on the headset) to the appropriate avatar, even though that avatar has made little or no lip movement.

Provided the dialogue doesn’t need parsing by a text engine or language recognition engine, it can add tremendous realism and immersiveness to an experience. However, without some guidance and monitoring from an instructor or facilitator, this user-created dialogue can deflect or even undermine the pedagogical material ostensibly being delivered.

As noted elsewhere, users will always test the limits of an immersive experience. In Leaders, non sequiturs and unfocused responses would trigger an “I don’t understand” reply, and continued off-topic input would soon trigger a guiding hand that brought the conversation back on track. If most or all dialogue is user created, we can easily imagine conversations devolving into verbal flame wars or taunt fests, with the intended pedagogical content taking a back seat to short-term user entertainment.
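The escalation described for Leaders can be sketched as a simple counter. This is a hypothetical illustration, not the Leaders Natural Language Interface: how an input is judged "understood" is left to the language-parsing layer, and both the threshold of two confused replies and the wording of the guiding line are assumptions.

```python
CONFUSED_REPLY = "I don't understand."
# Hypothetical wording for the "guiding hand" intervention.
GUIDING_REPLY = "Let's get back to the problem in front of us."


class OffTopicGuard:
    """Escalate from a confused reply to a guiding reply (hypothetical sketch).

    max_confusions: how many consecutive "I don't understand" replies are
    given before the guiding reply is used instead.
    """

    def __init__(self, max_confusions=2):
        self.max_confusions = max_confusions
        self.misses = 0  # consecutive inputs the parser could not use

    def respond(self, understood, on_topic_reply=None):
        """Pick the NPC's reply for one user input.

        understood: True if the language layer matched the input to the topic.
        on_topic_reply: the reply to give when the input was understood.
        """
        if understood:
            self.misses = 0  # user is back on track, so reset the count
            return on_topic_reply
        self.misses += 1
        if self.misses > self.max_confusions:
            self.misses = 0  # start counting again after steering the user
            return GUIDING_REPLY
        return CONFUSED_REPLY
```

With the default threshold, two off-topic inputs in a row each get "I don't understand," and a third triggers the guiding line. Any understood input resets the count, so an occasional slip never escalates.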

In addition, with no textual parsing and logging of this onscreen dialogue, the material becomes useless for evaluation of a user’s knowledge, learning curve, strengths, weaknesses, and tendencies. Its sole value, then, becomes the added user involvement it brings, and must be weighed against the cost of the processing needed to deliver this style of audio.

Clearly, one of the users of our simulation might actually be the instructor or facilitator: the man in the loop. While a live instructor embedded into a simulation might be able to keep dialogue on track and focused on pedagogical content, issues regarding delivery of his or her dialogue remain the same. Does the instructor speak into a mike and have the system digitize the speech? Or is text-to-speech used?

OFFSCREEN NPC AND USER DIALOGUE

It’s easy to overlook opportunities to use offscreen character dialogue, yet this type of audio can add tremendous texture and richness to our simulation. NPCs can talk to the user by phone, intercom, or iPod.

Indeed, if the conceit is constructed correctly, the NPC could still be speaking to us via video, provided he or she remains offscreen. Perhaps the stationary camera is aimed at a piece of technology, a disaster site, or something else more important than the speaker. Or perhaps the video is being shot by the speaker, who remains behind the camera: a first-person vlog (video blog).

Another potential conceit is recorded archival material, e.g., interviews, testimony, meeting minutes, dictation, instructions, and raw audio capture. Any of these may work as a credible story element that will enrich the simulation, and indeed, intriguing characters may be built primarily out of this kind of audio material.

If we have crowd scenes, we can also overlay dialogue snippets, blurring the line between onscreen and offscreen dialogue (depending on where the real or virtual camera is, we would likely be unable to identify who said what and when, or whether the speaking character is even onscreen at that moment).

Not surprisingly, we can also use offscreen user (and instructor) speech. Many of the devices suggested above will work for both NPCs and users. But while offscreen delivery eliminates worries about syncing speech to lips, the other issues discussed earlier remain.

Speech that is merely translated from text, or digitized from spoken audio, is likely to have little pedagogical value (although it can add realism). And the difficulties of real-time natural language processing, needed to extract metadata that provides input and evaluative material, are nearly as daunting. If we can disguise the inherent lag in creating digitized speech from typed text (which is more feasible when the speaking character is offscreen), this type of audio becomes more practical, but it requires an extremely fast typist capable of consistently terse and incisive replies.

VOICED NARRATION

From the beginning of sound in the movies, spoken narration has been a standard audio tool. Certainly, we can use narration in our simulation. However, narration is likely to function as a distancing device, reminding the user of the authoring of the simulation, rather than immersing the user directly in the simulation. Narration might be most useful in introducing a simulation, and perhaps in closing out the simulation. Think of this as a transitional device, moving us from the “real world” to the simulation world, and then back again.

Obviously, narration might also be used to segment chapters or levels in our simulation, and narration can be used to point out issues, problems, solutions, and alternatives. But the more an omniscient sort of narrator is used, the more artificial our simulation will seem. In real life, narrators imposing order and meaning do not exist. Before using the device of a narrator, ask yourself if the information can be conveyed through action, events, and agenda-driven characters confronting obstacles. These elements are more likely to ensure an immersive, story-driven simulation.

SOUND EFFECTS

The addition of sound effects to a project may seem like a luxury, something that can easily be jettisoned for reasons of budget or time. Avoid the temptation. Sound effects are some of the best and cheapest elements for achieving added immersion in a simulation. They are easily authored or secured, and most game engines or audio-processing engines are capable of managing an effects library and delivering the scripted effect.

In Leaders, simple effects like footsteps, trucks moving, shovels hitting dirt, crickets chirping, and background landscape ambience all contributed to the immersive environment. Right now, as this is being written, one can hear the background whir of a refrigerator coolant motor; a slight digital tick-tock from a nearby clock; the barely audible hum of a television; and the soft sound of a car moving on the street outside. We’re surrounded by sounds all the time: their presence grounds us in reality.

Whether you need to have leaves rustling, a gurney creaking, first-aid kits rattling, coffee pouring, a television broadcasting a football game, or a garbage disposal grinding, pay attention to the background sounds that seem to be a logical part of your simulation space. If dialogue is being delivered by phone, don’t be afraid to distort the sound occasionally (just as we experience cellphone dropouts and warblings).
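As a rough illustration of such phone-style degradation, the sketch below dulls the high frequencies of a mono clip with a one-pole low-pass filter and occasionally mutes short stretches to imitate dropouts. The function name, parameters, and default values are assumptions. A real telephone channel is band-limited to roughly 300 to 3400 Hz, and this simplified sketch filters only the upper end.

```python
import math
import random


def phone_effect(samples, sample_rate=44100, cutoff_hz=3400.0,
                 dropout_chance=0.02, dropout_ms=80, seed=None):
    """Return a phone-like copy of a mono clip (floats in -1.0..1.0).

    A one-pole low-pass filter rolls off content above cutoff_hz, and at the
    start of each dropout-length block there is a dropout_chance probability
    that the whole block is muted.
    """
    rng = random.Random(seed)
    # Smoothing coefficient for a one-pole low-pass at the chosen cutoff.
    alpha = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    dropout_len = max(1, int(sample_rate * dropout_ms / 1000))
    out = []
    y = 0.0         # filter state
    mute_left = 0   # samples still to be muted in the current dropout
    for i, x in enumerate(samples):
        y += alpha * (x - y)  # low-pass: move partway toward the new sample
        if i % dropout_len == 0 and mute_left == 0 and rng.random() < dropout_chance:
            mute_left = dropout_len
        if mute_left > 0:
            out.append(0.0)
            mute_left -= 1
        else:
            out.append(y)
    return out
```

Applying it sparingly, for instance only to a few lines of a long phone conversation, keeps the distortion believable without making the dialogue hard to follow.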

Sound effects libraries are easily purchased or licensed, and effective sound effects usage will enhance the realism of your simulation, and perhaps even mask (or divert attention from) other shortcomings.

MUSIC

We might expect music to be an intrusive, artificial element in our simulation. Certain simulations, of course, may demand ambient music: for example, a radio or CD player might be playing in a space or in the background of a telephone call. But most of the time, music will not be a strictly realistic element within the simulation environment.

Nevertheless, soundtrack music may often help to set the tone and help with a user’s transition into the simulation space. Inevitably, a simulation is asking users to suspend their disbelief and surrender to as deep a level of immersion as possible. Because of our understanding of the audio grammar of film and television, soundtrack music may indeed make the simulation more believable.

Hip-hop music might settle the user into an urban environment; a Native American flute might better suggest the wide-open spaces of the Southwest; a quiet guitar might transition the user from the climax of a previous level or chapter into a contemplative mental space for the next chapter or level or evaluation.

In Leaders, introductory Middle Eastern ethnic music immediately helped the users understand that they were in Afghanistan, far away from home. Different snippets helped close out chapters and begin new ones, while reminding the users where they were.

Music libraries are easily purchased or licensed, and a number of rhythm- or music-authoring programs for the nonmusician are available if you’d like to create your own background tracks. While not every simulation project will call for music, the use of music should never be summarily dismissed. If its use might have the effect of augmenting user immersion, it should be given strong consideration.

SUMMARY

Audio is probably the single most underrated media element. Its use as dialogue, effects, and ambient background will enrich any simulation, and can easily be the most cost-effective production you can undertake. Audio can deliver significant pedagogical content (particularly via dialogue), while encouraging users to spend more time with a simulation and immerse themselves more fully in the space. Many decisions have to be made on what types of audio to use in a project: How will they support the pedagogy and the simulation, and at what cost? Will true synthetic speech be used, or prerecorded human speech? Will users be able to contribute their own audio? And how deep and complex will the use of effects be (i.e., how dense an environment do I need to achieve)? Effective audio deployment is bound to contribute to the success of a simulation.