Chapter 6. Metadata

Elements of organization

If thorough and consistent metadata has been created, it is possible to conceive of its use in an almost infinite number of new ways …

If thorough and consistent metadata has been created, it is possible to conceive of its use in an almost infinite number of new ways …

Metadata, often described as

data about data, is critical to all forms of organized digital content. It is the means by which all organization in a digital library is achieved, and much of what you have read in this book so far implicitly assumes its existence. Whether in the creation of surrogates (Section 3.3), categories of video browsing (Section 5.4), or usage information (Section 2.2), it is metadata that yields collections that are

organized, rather than digital piles of unstructured and unconnected objects.

Specifically, metadata enables almost all the examples of digital library technology that we have seen in this book, including

• the item displays and surrogates in the Pergamos Digital Library in Figure 1.10;

• the browsing structures of

subjects,

titles a-z,

organization, and

how to of the

Village Brickmaking collection in Figure 3.1;

• the table of contents of the

Otago Witness newspaper in Figure 3.4d;

• the colored tabs marking the chapters in the realistic book

Farming Snails I in Figure 3.5b;

• the entire record display in Figure 3.9a;

• the structured form searching interface in Figure 3.15;

• the searching interface in Figure 3.17;

• the structured subject browsing of Figure 3.20;

• the user log graphs in Figure 2.4.

Metadata is generally taken to be structured information about a particular information resource. Information is “structured” if it can be meaningfully manipulated without understanding its content. For example, given a collection of source documents, bibliographic information about each document would be metadata for the collection—the structure is made plain, in terms of which pieces of text represent author names, which represent titles, and so on. But given a collection of bibliographic information, metadata might also comprise some information about each bibliographic item, such as who compiled it and when.

The role of metadata in your digital library can be clarified by considering such questions as:

• Where does your metadata come from? Is it automatically extracted from digital objects, manually assigned, or imported from an external source?

• How will the metadata affect document display, browsing, searching, and maintenance of the digital library?

• Does the metadata need any extra processing before use? For example, do different versions of people's names need to be harmonized?

• Is the metadata in your digital library affected by the activities of the end-users?

• Can you monitor the metadata in your library to continually assess its quality?

• Is the metadata private to your library or can it be shared with others?

• Can you migrate your metadata to another software application?

This chapter starts with a description of different perspectives on metadata and how these perspectives frame the answers to the questions above. Then we consider the evolution of metadata in library catalog systems, as this has had a lasting influence on digital library systems. Following this, we describe how metadata represents material like multimedia content (Section 6.3) and complex compound objects (Section 6.4). We then consider how to process and assess metadata within the workflow of a digital library. Finally, in Section 6.6 we examine how metadata can be extracted from source documents—a key process in automating the construction of large-scale collections.

6.1. Characteristics of Metadata

Imagine when electronic books are cheap and high-quality enough to begin displacing printed books. Every time a student highlights or annotates a page, that information will be used—with permission—to enhance the public metadata about the book. Even how long it takes people to get through pages or how often they go back to particular pages…. We’ll be able to ask our books to highlight the passages most often read by poets, A [grade] students, professors of literature, or Buddhist priests.

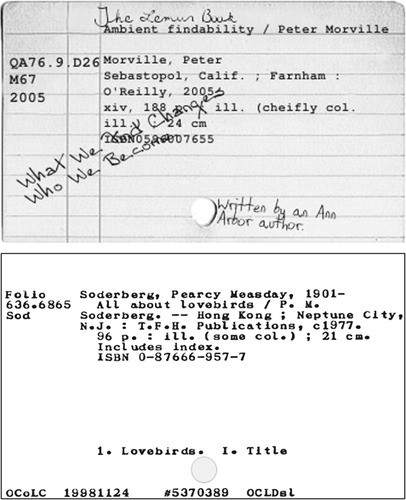

Some of the earliest organized external descriptions for physical objects are found in Sumerian collections of clay tablets used for recording commercial transactions. As the number of items in a collection increased, the need for efficient mechanisms to find particular items and to simply manage the collection grew accordingly. Gradually, physical descriptions grew very complex and very large, as illustrated by the card catalogs of the 20th century (Figure 6.1). As with many other data-intensive applications, computers evolved as the appropriate tool for managing these descriptions. Some argue that descriptions only formally became

metadata when they were moved to an electronic context, but we prefer the inclusive approach that emphasizes the continuity of resource description.

The objects of description have also evolved, to include varied media, and, in museums, a panoply of diverse physical objects. As the described objects become digital, we move from the library to the digital library—but the principles of description for access and management remain largely the same. The topic of metadata for

digital libraries inherits a vast amount of work performed by librarians in creating, sharing, applying, and managing metadata for library collections.

There are several approaches to classifying metadata, depending on its source, purpose, audience, and format. In building a digital library, a practical concern is the source of the metadata: Where do the metadata values come from? How do we know that the author of the Word file on our hard disc is

John Smith? Broadly speaking, there are two possibilities: either a human has declared that Smith is the author, or a computer program has determined the author's identity.

In the case of

human-assigned metadata, a human being examines the digital document (or its physical counterpart) and assigns a particular value (

John Smith) to a particular metadata element (

the author). This person (often a librarian) may consult other documents or other people in the process of determining the metadata value, but the ultimate source of the metadata assignment is the human brain.

In the case of

computationally assigned metadata, a computer program, after processing the digital document (by comparing it with other documents or using remote online resources), outputs a value for the metadata element. If the document was

born digital (such as a word-processed document or an image from a digital camera), metadata may have been embedded within the file at the moment of its creation. If the document was digitized from an analog source (using OCR, for example), the software applications used often will have embedded metadata within the file (e.g., TIFF tags, see Section 6.3). Such embedded metadata is usually easily extracted from digital objects, although it is often of less use than metadata derived from human judgments.

For a specific library system, metadata values may be imported from an external source, but if you follow the metadata creation process back far enough, eventually you will reach either a human being or a computer program.

Irrespective of its source, where does the metadata actually reside? Is it embedded within a digital document, as in HTML or XML files, for example, or is it stored separately in library catalog records or some remote metadata repository? Many file formats contain embedded metadata that needs to be

extracted from the file before it can used to organize items in a digital library. The central issue is whether the file format is clearly documented and available for inspection. Where formats are publicly specified (e.g., PNG, PDF, ODF), it is relatively straightforward to extract metadata. However, when file formats are proprietary, programmers often have to resort to reverse engineering the format, which is time consuming and less reliable.

An alternative perspective is to consider the function of different types of metadata, such as

•

administrative metadata for managing resources, such as rights information;

•

descriptive metadata for describing resources, such as the cards in Figure 6.1;

•

preservation metadata for describing resources, such as recording preservation actions;

•

technical metadata related to low-level system information, such as data formats and any data compression used;

•

usage metadata related to system use, such as tracking user behavior.

One important aspect of this categorization is that it highlights the fact that the end user's view of metadata (Figure 6.1) is only the tip of the iceberg. Much of the metadata is not intended for public display, and in the case of

usage metadata, the ALA Code of Ethics (Figure 2.2) reminds us that some parts may be confidential. The categorization further illustrates that metadata is not

static. Although we might expect that data like author and publisher might not change over the lifetime of the object, metadata related to preservation and use is likely to change, perhaps frequently. We would also expect metadata to be generated by user enhancements such as annotations (see Section 2.5).

6.2. Bibliographic Metadata

Anyone working with digital libraries needs to know about the different standard methods for representing document metadata. Much of the work on metadata in digital libraries is based on practices that have been adopted in library cataloging, particularly the MARC (

machine-readable cataloging) format that has been used by libraries internationally for decades. The spread of electronic resources in the 1990s led to the creation of a new scheme called Dublin Core, which is an intentionally minimalist standard intended to be applied to a wide range of digital library materials by people who are not trained in library cataloging. These two schemes are of interest not only for their practical value, but also for highlighting diametrically opposed underlying philosophies. We also include descriptions of two bibliographic metadata formats: BibTeX and EndNote. The former is common in scientific and technical fields (and a companion to the LaTeX format described in Section 4.6), while the latter is in widespread general use (and integrates with Microsoft Word, for example).

MARC

[The MARC standard] forever changed the relationship of a library to its users, and the relationship of geography to information.

Managing metadata with software tools has a long history in libraries, mostly deriving from the necessity to move from card catalogs to computer-based records. The core work was the development of the MARC standard in the late 1960s by Henriette Avram at the Library of Congress. Once metadata was expressed in computer-based MARC records, it could be copied, distributed, and shared among libraries. MARC is a comprehensive and detailed standard whose use is carefully controlled and transmitted to budding librarians in library science courses. Here we have space to present only an outline of all its richness.

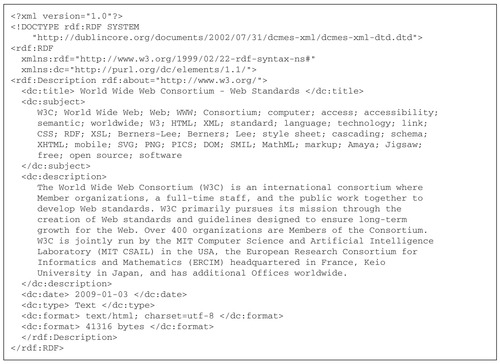

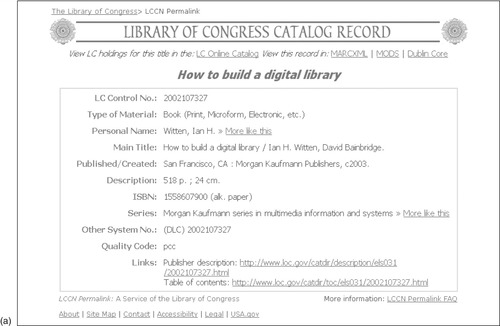

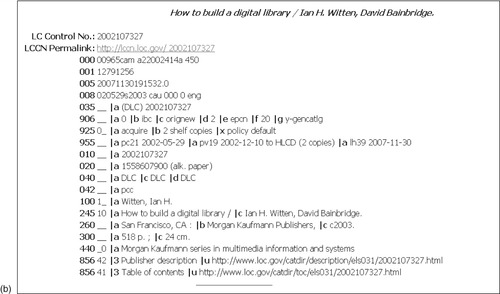

Figure 6.2a shows an entry that was obtained from the Library of Congress online catalog giving metadata for the first edition of this book,

How to Build a Digital Library. It includes information about authorship, topic coverage, details about the physical book itself, the publisher, various identification numbers, and links to more detailed information. This is an electronic record that describes a physical object, presented in a classic, familiar, library catalog style.

|

|

|

|

|

|

|

| Figure 6.2: |

Producing a MARC record for a particular publication is an onerous undertaking that is governed by a detailed set of rules and guidelines called the

Anglo-American Cataloging Rules, familiarly referred to by librarians as

AACR2R (the

2 stands for second edition, the final

R for revised). These rules, inscribed in a formidable handbook, are divided into two parts: Part 1 applies mostly to the description of documents, Part 2 to the description of works. Part 2, for example, treats

Headings, Uniform titles, and References (i.e., entries starting with “See …” that capture relationships between works). Under

Headings there are sections on how to write people's names, geographic names, and corporate bodies. Appendices describe rules for capitalization, abbreviations, and numerals.

The rules in

AACR2R are highly detailed, almost persnickety. It is hard to convey their flavor in a few words. Here is one example: How should you name a local church? Rules under

corporate bodies give the answer. The first choice of name is that “of the person(s), object(s), place(s), or event(s) to which the local church … is dedicated or after which it is named.” The second is “a name beginning with a word or phrase descriptive of a type of local church.” The third is “a name beginning with the name of the place in which the local church … is situated.” Now you know. If rules like this interest you, there are thousands more in

AACR2R.

Internally, MARC records are stored as a collection of tagged fields in a fairly complex format. Figure 6.2b gives something close to the internal representation of the catalog record, while Table 6.1 lists some of the field codes and their meaning. Many of the fields contain identification codes. For example, field 008 contains fixed-length data elements, such as the source of the cataloging for the item and the language in which the book is written. Many of the variable-length fields contain subfields, which are labeled

a,

b,

c, and so on, each with their own distinct meaning (in the computer file they are separated by a special subfield delimiter character). For example, field 100 is the personal name of the author, with subfields indicating the standard form of the name, full forenames, and dates. Field 260 gives the imprint, with subfields indicating the place of publication, publisher, and date. The information in the more legible representation of Figure 6.2a is evident in the coded form of Figure 6.2b. Some fields can occur more than once, such as the subject headings stored in field 650.

The MARC format covers more than just bibliographic records. It is also used to represent authority records—that is, standardized forms that are part of the librarian's controlled vocabulary (see Section 6.5).

The rules and detailed formatting of the MARC standard are what allows these records to be exchanged between different library software systems. Rather than each library creating a new record for a book, it can be created once and then shared. The use of standardized records allows for great efficiencies in metadata creation and also facilitates the construction of so-called union catalogs that combine metadata from several libraries. The WorldCat union catalog at the Online Computer Library Center, a U.S. organization that helps libraries locate, acquire, catalog, and lend library materials, holds more than 125 million records from 112 countries. Cooperation on this scale is based on a consortium approach common in the library community and made practical through the widespread use of standards like MARC.

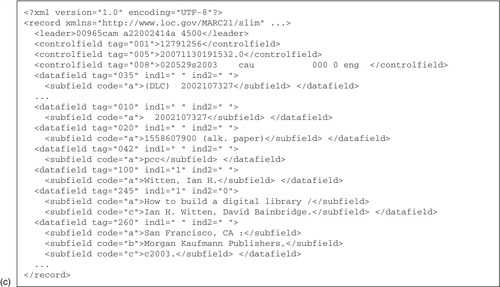

MARCXML

The Library of Congress guided the development of a standard way of representing MARC data in the XML language, called MARCXML. Figure 6.2c shows an example that contains an abbreviated version of the record in Figure 6.2b. Field codes are represented as the values of attributes within the opening tag of

datafield elements. Within these elements,

subfield elements represent the MARC subfields, with

code attributes to distinguish them. For example, the last few lines of Figure 6.2c give the publisher information in MARC field 260, including place of publication, publisher name, and publication date. The simplicity of this transformation emphasizes one of the design goals of MARCXML: lossless transformation between it and MARC in both directions. This round-trip compatibility is useful because there are many digital library applications where data is transformed or migrated between different systems.

MARCXML uses XML in a different way from other XML-based metadata standards, where element names usually convey semantic information (e.g., <title>, <publisher>, etc.). This characteristic makes it hard to read for a human unfamiliar with MARC. For comparison, look at the MODS representation of the same data in Figure 6.2e, also expressed in XML, which you will probably find much easier to understand. However, MARCXML provides a useful mechanism for connecting the wealth of library data in MARC format with XML processing technologies, such as XSL (Section 4.4).

Dublin Core: DC

The Dublin Core is a set of metadata elements that are designed specifically for non-specialist use. It is intended for the description of electronic materials—such as a Web page or site—which will almost certainly not receive a full MARC catalog entry. The result of a collaborative effort by a large group of people, Dublin Core is named for Dublin, Ohio, where the first meeting was held in 1995. It received the approval of ANSI, the American National Standards Institute, in 2001.

Compared with the MARC format, Dublin Core has a refreshing simplicity. Table 6.2 summarizes the metadata elements it contains: just 15, rather than the several hundred used by MARC. As the name implies, the elements are intended to form a “core” set that may be augmented by additional elements for local purposes. In addition, the existing elements can be refined through the use of qualifiers. All elements can be repeated where it is appropriate. Dublin Core uses the general term

resource for what is being described—the term subsumes pictures, illustrations, movies, animations, simulations, and even virtual reality artifacts, as well as textual documents. Indeed,

resource has been defined in Dublin Core documents as “anything that has identity.”Figure 6.2d shows a Dublin Core metadata record (in an XML format) for the book in Figure 6.2a; naturally, it contains only a subset of the full information.

The

Creator might be a photographer, an illustrator, or an author. The

Subject is typically expressed as a keyword or phrase that describes the topic or the content of the resource. The

Description might be an abstract of a textual document, or a textual account of a non-textual resource, such as a picture or an animation. The

Publisher is generally a publishing house, a university department, or a corporation. A

Contributor could be an editor, a translator, or an illustrator. The

Date is the date of resource creation, not the date or dates covered by its contents. For example, a history book will have an associated

Coverage date range that defines the historical time period it covers, as well as a publication

Date. Alternatively (or in addition),

Coverage might be defined in terms of geographical locations that pertain to the content of the resource. The

Type might indicate a home page, research report, working paper, poem, or any of the media types listed above. The

Format is used to help identify software systems needed to access the resource.

Qualified Dublin Core

An extension of the basic 15-element Dublin Core described above was introduced to add greater expressivity and to extend the range of uses. Two forms of qualification were added:

element refinement and

encoding schemes.

Any element can be refined or qualified. For example, the

Date element can be refined as

date created,

date valid,

date available,

date issued, or

date modified. The key principle in element refinement is “dumbing down”; the qualifier can be safely removed and the element value interpreted as a simple element. So the

date created, for instance, can be safely interpreted as a

Date. This principle allows qualified and simple Dublin Core to co-exist easily inside digital libraries.

Encoding schemes address the permissible ranges of element values. For example, a controlled vocabulary for an element could be expressed as an encoding scheme—that is, the Library of Congress Subject Headings could be used for the Dublin Core element

Subject. Given such an encoding scheme, digital library software can expect that only valid subject headings will appear as values in the

Subject element. Alternatives to the Library of Congress Subject Headings include the Dewey Decimal Classification and the Medical Subject Headings (MeSH) designed by the U.S. National Library of Medicine. A different form of encoding scheme can specify that a value should follow a precise structural rule, such as a date format, URL, or language-encoding scheme. The restrictions imposed by encoding schemes are valuable, because it is easier to write software to deal with constrained values than it is to write software for free text.

Table 6.3 gives details of the qualifiers and encoding schemes that are specified in qualified Dublin Core, including some notes on encoding schemes suggested by the official Dublin Core Metadata Initiative Usage Board. Table 6.3a includes qualifiers for

Audience,

Coverage,

Date,

Description,

Format,

Identifier,

Relation, and

Rights metadata fields (among others), and encoding schemes for both spatial and temporal coverage, date, identifiers, languages, and subjects. Table 6.3b gives suggested standards for encoding such things as regions of space, periods of time, particular locations, subjects, and languages.

| Accrual Method, Accrual Periodicity, Accrual Policy, Contributor, Creator, Instructional Method, Provenance, Publisher, and Rights Holder do not have refinements or encoding schemes. | ||||||

| (a) Sample elements, qualifiers, and encoding schemes | ||||||

|---|---|---|---|---|---|---|

| Element | Qualifier | Encoding schemes | ||||

| Audience | Education Level, Mediator | |||||

| Coverage | Spatial, Temporal | DCMI Point, ISO 3166, DCMI Box, TGN | ||||

| Date | Created, Valid, Available, Issued, Modified, Date Accepted, Date Modified, Date Submitted | DCMI Period, W3CDTF | ||||

| Description | Table of Contents | |||||

| Abstract | ||||||

| Format | Extent, Medium | IMT | ||||

| Identifier | Bibliographic Citation | URI | ||||

| Language | ISO 639-2, RFC4646 | |||||

| Relation | 13 different relations are specified as qualifiers, including Is Part Of, Has Version, and Is Referenced By | |||||

| Rights | Access Rights, License | |||||

| Source | URI | |||||

| Subject | LCSH, MeSH, DDC, LCC, UDC | |||||

| Title | Alternative | |||||

| Type | DCMIType | |||||

| (b) Notes on encoding schemes | ||||||

| Scheme | Type | What it encodes | Example | |||

| DCMI Box | Syntax | Region of space | The Western Hemisphere: westlimit=180; eastlimit=0 | |||

| DCMI Period | Syntax | Period of time | World War II: name=World War II; start=1939; end=1945 | |||

| DCMI Point | Syntax | A particular location | Perth, Western Australia: name=Perth, W.A.; east=115.85717; north=−31.95301 | |||

| DCMIType | Vocabulary | Type of resource | Quicktime video file: MovingImage | |||

| DDC | Vocabulary | Dewey Decimal Classification | 599 Mammalia. Mammals | |||

| IMT | Vocabulary | Internet Media Type—also known as MIME types | Quicktime video file: video/quicktime | |||

| ISO 639-2 | Syntax | Three-letter codes for languages | Arabic: ara | |||

| LCC | Vocabulary | Library of Congress Classification | QL700-739.8 | |||

| LCSH | Vocabulary | Library of Congress Subject Headings | Animal Psychology | |||

| MeSH | Vocabulary | Medical Subject Headings | Animals, Wild | |||

| RFC4646 | Syntax | Languages | English as used in the U.S.: en-US | |||

| TGN | Vocabulary | Getty Thesaurus of Geographic Names | Perth in Western Australia: Perth (inhabited place) ID: 7001977 | |||

| UDC | Vocabulary | Universal Decimal Classification | 599 Mammalia. Mammals | |||

| URI | Syntax | Uniform Resource Identifiers | http://www.ietf.org/rfc/rfc3986.txt | |||

| W3CDTF | Syntax | Date and time | November 5, 1994, 8:15:30 a.m., U.S. Eastern Standard Time: 1994-11-05T08:15:30-05:00 | |||

Metadata Object Description Schema: MODS

The Metadata Object Description Schema (MODS) is a bibliographic XML-based metadata format that can be used to represent a subset of MARC (specifically, the MARC21 variant that is a combination of the United States and Canadian MARC formats). Because it uses a subset of a particular MARC variant, general MARC records cannot be converted losslessly to MODS, and so round-trip compatibility is sacrificed.

Figure 6.2e shows a MODS record that describes the first edition of this book. The MARC field 260 publisher information we looked at in the last few lines of Figure 6.2c can be found starting at the tenth line of Figure 6.2e, within the

originInfo element:

<originInfo>

<place>

<placeTerm type="code" authority="marccountry">cau</placeTerm>

</place>

<place>

<placeTerm type="text">San Francisco, CA</placeTerm>

</place>

<publisher>Morgan Kaufmann Publishers</publisher>

<dateIssued>c2003</dateIssued>

<dateIssued encoding="marc">2003</dateIssued>

<issuance>monographic</issuance>

</originInfo>

This extract illustrates a key quality of well-designed XML-based representations: it is easily readable by people as well as by computers. As you can see, MODS allows richer descriptions than Dublin Core and is a useful alternative when qualified Dublin Core is inadequate for describing your resources.

Along with Dublin Core, MODS can be used within the METS standard described in Section 6.4. It does not in itself address issues of authority control, but there is an analog to MARC authority data in the MADS format described in Section 6.5.

BibTeX

Scientific and technical authors, particularly those using mathematical notation, often favor a widely used generalized document-processing system called TeX (pronounced

tech), or a customized version called LaTeX (see Section 4.6). This freely available package contains a subsystem called BibTeX that manages bibliographic data and references within documents.

Figure 6.2f shows a record in BibTeX format. Records are grouped into files, and files can be brought together to form a database. Each field can flow freely over line boundaries—extra white space is ignored. Records begin with the @ symbol followed by a keyword naming the record type: article, book, and so forth. The content follows in braces and starts with an alphanumeric string that acts as a key for the record. Keys in a BibTeX database must be unique. Within a record, individual fields take the form

name=value, with a comma separating entries. Names specify bibliographic entities such as author, publisher, address, and year of publication. Each item type can be included only once, and values are typically enclosed in braces (or double quotation marks) to protect spaces. Certain standard abbreviations, such as abbreviated month names, can be used, and users can define their own abbreviations.

Two items deserve explanation. First, the

author field is used for multiple authors, and names are separated by the word

and, rather than by commas, with a final

and as in ordinary English prose. This is because the tools that process BibTeX files incorporate bibliographic standards for presenting names. The fact that the two names in Figure 6.2f use different conventions is of no consequence: both will be presented correctly in whatever style has been chosen for the bibliography. Second, titles are also presented in whatever style has been chosen for the bibliography—for example, only the first word may be capitalized, or all content words may be capitalized—regardless of how they appear in the BibTeX file. Braces override this and preserve the original capitalization, so that the proper names can appear correctly capitalized in the document.

Unlike in other bibliographic standards, in BibTeX the set of attribute names is determined by a style file named in the source document and is used to formulate citations in the text as well as to format the references as footnotes or in a list of references. The style file is couched in a full programming language and can support any vocabulary. However, there is general consensus in the TeX community about the keywords to use. Advantage can be taken of TeX's programmability to generate XML syntax instead; alternatively, there are many standalone applications that simply parse BibTeX source files.

Academic authors often create BibTeX bibliographic collections for publications in their area of expertise, accumulating references over the years into large and authoritative repositories of metadata. Aided by software heuristics to identify duplicate entries, the repositories constitute a useful resource for digital libraries in scientific and technical areas.

EndNote

A format promoted by the popular bibliographic tool EndNote is illustrated in Figure 6.2g, which shows the same basic bibliographic record. The syntax is based on an earlier format that, like BibTeX, was designed for use by scientific and technical researchers. Today, many systems for maintaining bibliographic databases can export databases in this format. It is formatted line by line, and records are separated by a blank line. Each line starts with a key character, introduced by a percent symbol, that signals the kind of information the line contains. The rest of the line gives the data itself.

The format has a fixed set of keywords, listed in Table 6.4. The keyword

%0, or percent zero, appears as the first line of a record to make the type explicit—Book, in our example. Unlike BibTeX, EndNote repeats the author field (

%A) for multiple authors; the ordering reflects the document's authorship.

6.3. Metadata for Multimedia

Metadata is by no means confined to textual documents. In fact, because it is much harder to search the content of image, audio, or multimedia data than to search full text, flexible ways of specifying metadata become even more important for locating these resources.

The image file formats described in Chapter 5 (Section 5.3) incorporate some limited ways of specifying image-related metadata. For example, GIF and PNG files include the height and width of the image (in pixels), and the number of bits per pixel (up to 8 for GIF, 48 for PNG). PNG specifies the color representation (palletized, grayscale, or true color) and includes the ability to store text strings representing metadata. JPEG also specifies the horizontal and vertical resolution. But these formats do not include provision for other kinds of structured metadata, and when they are used in a digital library, image metadata is usually put elsewhere.

We describe several metadata formats for images and multimedia. The Tagged Image File Format, or TIFF, is a practical scheme for associating metadata with image files that has been in widespread use for well over a decade. TIFF is often used storing for images—including document images—in today's digital libraries. EXIF, XMP/IPTC, and MIX are alternative approaches for image metadata: embedding metadata in the image file itself or separating the metadata from the image data. Temporal multimedia, such as audio and video, offer limited facilities for storing metadata within the files themselves. MPEG-7 is a far more sophisticated and ambitious scheme for defining and storing metadata associated with any multimedia information.

The following sections describe TIFF in specific detail, briefly cover metadata for temporal formats, and proceed to outline the facilities that MPEG-7 provides. Finally, we outline the usage-based framework of the MPEG-21 standard.

Image metadata: TIFF

The Tagged Image File Format, TIFF, described in Section 5.3, incorporates extensive facilities for descriptive metadata. It is used to describe image data that comes from scanners, frame-grabbers, paint programs, and photo-retouching programs. It is a rich format that can take advantage of many image requirements but is not tied to particular input or output devices. It provides numerous options—for example, several different compression schemes and comprehensive information for color calibration. It is designed so that private and special-purpose information can be included.

A single TIFF file can include several images, each of which is characterized by tags whose values define particular properties of the image. Most tags contain integers, but some contain ASCII text—and provision is made for tags containing floating-point and rational numbers. Baseline TIFF has a dozen or so mandatory tags that give physical characteristics and features of images: their dimensions, compression, various metrics associated with the color specification, and information about where they are stored in the file.

Table 6.5 shows some TIFF tags, all of which except the last group are mandatory. The first group specifies the image dimensions in pixels, along with enough information to allow conversion to physical units where possible. All images are rectangular. The second group gives color information. For bilevel images, the color group defines whether they are standard black-on-white or reversed; for grayscale it gives the number of bits per pixel; for palette images, it specifies the color palette. The third group specifies the compression method—baseline TIFF allows only extremely simple schemes. Finally, a TIFF image can be broken into strips for efficient input/output buffering, and the last group of mandatory tags specifies their location and size.

Additional features go far beyond the baseline illustrated in Table 6.5 and users can define new TIFF tags and compression. This makes the TIFF format even more flexible than the official list of extensions given in the standard, although naturally care needs to be taken to ensure that the software used to read and write image files is conversant with the tags they use. To avoid conflict, a registration process is provided for allocating private tags. There are over 70 such tag sets, including support for EXIF (see next subsection). More radical extensions include GeoTIFF, which permits the addition of geographic information associated with cartographic raster data and remote sensing applications, such as projections and datum reference points. Many digital cameras produce TIFF files, and Kodak has a PhotoCD file format based on TIFF with proprietary color space and compression methods.

Most digital library projects of images use the TIFF format to store and archive the original captured images, even though they may convert them to other formats for display. At the bottom of Table 6.5 are some optional fields that are widely used in digital library work. Some, such as the name of the program that generated the image and the date and time it was generated, are usually filled in automatically by scanner programs and other image-creation software. Digital library projects often establish conventions for the use of the other fields. For example, in a digitization project, the

Document name field might contain the catalog ID of the original document. These fields are coded in ASCII, but there is no reason why they should not contain data that is further structured. For example, the

Image description field might contain an XML specification that itself includes several subfields.

Image metadata: EXIF, XMP, IPTC, and MIX

The exchangeable image file format (EXIF) is a standard for embedding technical metadata in image files that many camera manufacturers use and many image-processing programs support. EXIF metadata can be embedded in TIFF and JPEG images. Table 6.6 shows a metadata record extracted from a JPEG image produced by a digital camera: it includes the exposure, resolution, focal length, and whether flash was used. The record shows that the GIMP image-editing program has been used to process the file, which illustrates that embedded metadata expressed in EXIF is only as accurate as the last application that saved the file and may have been altered by several programs before it is added to your digital library. EXIF metadata can include a thumbnail image—in this case the thumbnail is a 196 × 147 pixel JPEG that occupies 3.6 KB, compared with the original 2,048 × 1,536 (262 KB) image. Including the thumbnail, the metadata itself occupies 4.4 KB.

The DIG35 standard provides an extensible XML framework that can be used to embed metadata in the private tags of image formats like TIFF and JPEG. DIG35 groups elements into four main categories: image creation, content description, provenance, and intellectual property rights. Many elements are optional. The JPEG 2000 file format has an XML

Box container that can include any XML data, although DIG35 is suggested.

In 2001, Adobe introduced the

Extensible Metadata Platform (XMP) to provide a general solution for embedding metadata within files like images and PDF documents. XMP values are expressed in XML using a subset of RDF (see Section 6.4), allowing great flexibility in metadata expression. XMP's model is flexible enough to allow structural metadata to be expressed—so that, for example, different pages in a document can be described independently.

Within XMP, metadata can be expressed using different schemas and can be extended by adding new schemas. Schemas can be based on external standards (e.g., Dublin Core and EXIF) or internal schemas (e.g., XMP Rights Management) and can be associated with specific file types (e.g., PDF) or applications (e.g., Adobe Photoshop). Many applications now provide custom dialog windows to simplify the manual entry and editing of XMP metadata.

The XMP metadata itself is embedded in files in a serialized

Packet, optionally surrounded by a wrapper consisting of a Header, Padding, and Trailer sections. The suggested size for the Padding section is 2–4 KB, allowing space for editing and expanding the metadata without overwriting application data. The actual mechanism for embedding the XMP packet varies depending on the particular file format. In PDF, for example, the packet is embedded in a metadata stream stored within a PDF object (see Section 4.5). Using custom embedding techniques allows XMP to be applied to a wide range of file types, including GIF, PNG, JPEG, JPEG 2000, Photoshop, MP3, MPEG-4, HTML, and WAV. When XMP cannot reliably be embedded in the native file format, as with MPEG-2, the packet is placed in a small “sidecar” file with the same filename and the suffix

.xmp.

In 2008 the International Press Telecommunications Council (IPTC) released the

IPTC Photo Metadata 2008 standard, based on requirements gathered from device manufacturers and photographers, which is implemented as a core XMP schema. Many image-processing applications provide support for XMP metadata, and it is likely that support for IPTC embedded metadata will grow quickly.

Metadata for Images in XML (MIX) is a new standard for image metadata that, in contrast to the

de facto use of EXIF, is being coordinated and promoted by the Library of Congress. Like EXIF, it addresses technical rather than descriptive metadata. Its elements represent scanner resolutions, details of camera exposure, and processing software—and nothing about the image content or who took a particular photograph. Its flavor is illustrated by Figure 6.3, which shows a fragment of a MIX document for a photograph.

Both EXIF and MIX-style metadata address the technical and preservation aspects of the five-part metadata classification in Section 6.1. EXIF is almost universal in consumer electronics but is restricted to certain image types, whereas MIX, being relatively new, is not yet widely used. The IPTC Photo Metadata standard is very new but has strong growth potential: it is open, has an extensible metadata model, and is based on the well-known XMP approach.

Audio metadata

Although audio resources are often described using external metadata schemes, such as Dublin Core, several audio formats contain embedded metadata. The ubiquitous MP3 format is an interesting case. Initially it had virtually no internal metadata, apart from the technical audio information needed to play the file.

The first set of metadata tags for MP3 was developed in 1996 and is known as ID3 (for “IDentify an MP3”). ID3 tags were placed at the end of the audio data (in an attempt to minimize disruption to software). They were limited to 128 bytes: 3 for the starting string “TAG,” which identified the metadata block, 30 bytes for the title, 30 for the artist, 30 for the album, 4 for the year, 1 for track number, 1 for genre, and 30 for comments. The genre byte was an index into a list of 80 predefined genres. These limits were found to be too restrictive for describing music metadata and the ID3 format has been evolving ever since to provide more flexible metadata containers.

The current ID3v2 tag format allows many

frames of data of variable size (up to 16 MB each) that can contain text, images, and technical metadata about the audio data. They are normally located at the beginning of the file to assist media streaming software. There are 39 predefined text frames, including composer, length, copyright, publisher, and title. Other frames provide scope for URLs, images (such as CD cover art), synchronized lyrics, and, in a “commercial

” frame, for advertising. All frames are optional, and users can also define their own. MP3 ID3 is not a formal standard, so metadata extracted from MP3 collections is likely to be incomplete and inconsistent.

The Ogg container format that wraps Vorbis audio (Section 5.2, Post-MP3 formats) does not include a structured metadata component, although there is a comment facility for simple textual values. Vorbis comments take the form

fieldname=data, where the

fieldname is a case-insensitive ASCII string and

data is a UTF-8 encoded string. A proposed set of field names includes TITLE, COPYRIGHT, and GENRE, but, as with MP3, none is mandatory and usage is variable. FLAC files support Vorbis comments along with a PICTURE block for storing an image and a CUESHEET block that uses track and index points compatible with the CD audio standard.

WAV audio is a form of RIFF file consisting of

chunks (Section 5.2, Early formats). A LIST chunk with a type of INFO provides a limited facility for embedded metadata. A variety of text chunks are available, including genre, source, and copyright, and there is a display chunk that allows text or images to be associated with the audio data. AIFF files have optional chunks for name, author, copyright, annotation, and comments. Table 5.1 lists some of the chunk types for WAV and AIFF files. The AU format has a single unstructured text field.

The WAV

cue chunk (or the comparable AIFF

marker) allows points of interest and text to be associated with particular points in an audio track internally. These cue points can provide useful structural metadata, and the text can be used to supplement the listening experience for users. Alternatively, the Continuous Media Markup Language (CMML) is a generalized approach to linking metadata with a specific point in temporal media. CMML is an XML format for linking text to time periods through

clip elements, such as

<clip id="painting" start="npt:3.5" end="npt:5:5.9">

<img src="painting1.jpg"/>

<desc>A close up of the painting</desc>

<meta name="Subject" content="painting"/>

</clip>

which defines a description, subject metadata, and image—and associates it with a period of audio. CMML can be used to synchronize lyrics with music, to allow metadata values to be assigned with temporal precision, and to provide subtitling for video. CMML can be serialized into data that can be inserted into container formats such as Ogg.

Neither AAC nor MPEG-4 audio includes a standard metadata format. However, Apple's tagging format has become a

de facto standard for AAC because of its use in the popular iTunes music store. It exploits the generic user-data (“udta”)

atom (or

box), and metadata is stored as tag-value pairs. The values themselves are flexible, textual metadata (e.g., the

aART tag for album artist,

cprt for copyright, and ©

nam for title). They are stored as UTF-8 strings. iTunes also sets a

covr value for a cover art image (typically JPEG or PNG).

Video metadata

Much video data is delivered within container formats like AVI, MPEG-4, Flash, or Ogg, and these containers often provide embedded metadata support. Apple's QuickTime format became the basis for the MPEG-4 container format, so MPEG-4 handles metadata in a similar way to the iTunes AAC audio discussed above. AVI is a form of RIFF file, so it handles metadata in a similar way to WAV. Ogg Theora video uses the same container format as Ogg Vorbis audio, so the same comment structure is supported. However, software support for these video comments is currently limited.

MPEG-2 video has no textual metadata support. However, with some extensions and restrictions, it is used to add subtitles and menu information to DVD video. The added information is put on the DVD as images rather than text—thus avoiding the need for DVD players to include the entire set of Unicode characters as fonts. The lack of metadata support in MPEG-2 led to the MPEG-7 format described in the next section.

The basic idea of CMML—to associate external resources with time points—is used by several subtitling/captioning technologies, such as MPEG-4 Timed Text (supported in Apple's Quicktime tools). Most subtitles are simply plain text rather than structured metadata, although they could be used to enable limited text searching of video collections. The larger idea of coordinating temporal multimedia content is embodied in QuickTime, SMIL, and Flash (Sections 5.4 and 5.5).

The relative lack of structured metadata embedded in video files has been addressed in two ways: expanded container formats with explicit metadata support, and placing the metadata in external storage, such as linked XML files.

The Material Exchange Format (MXF) is a new container format that can hold many types of video data in a platform-independent manner. It is managed by the Society of Motion Picture and Television Engineers (SMPTE), who maintain central lists of metadata labels, metadata dictionaries, and the basic Descriptive Metadata Schema (known as DMS-1). MXF metadata is based on keys (e.g.,

Titles:MainTitle) and values and can be defined either globally or for specific time intervals.

MPEG-7 has an alternative but complementary approach, where external metadata is expanded to include a low-level description of content as well as the familiar Dublin Core-like high-level descriptions.

It is early days for video content, at least in terms of digital library usage, and few tools support metadata editing. Despite the availability of methods for splicing sophisticated metadata into video container file formats, in practice the video material that is actually supplied is often inadequate for the needs of digital libraries. As we have seen, collections like the Open Video project (Section 5.4) augment the video material with external metadata that meets their needs.

Multimedia metadata: MPEG-7

We learned about the MPEG family of standards in Section 5.4. MPEG-7, which is formally called the

multimedia content description interface, is intended to provide a set of tools for describing multimedia content. The aim is to allow the user to search for audiovisual material that has associated MPEG-7 metadata. The material can include still pictures, graphics, 3D models, audio, speech, video, and any combination of these elements in a multimedia presentation. It can be information that is stored or that is streamed from an online real-time source. The MPEG-7 standard was developed by broadcasters, electronics manufacturers, content creators and managers, publishers, and telecommunication service providers under the aegis of the International Organization for Standardization (ISO).

MPEG-7 has exceptionally wide scope. For example, it foresees that metadata may be used to answer queries like:

• The user plays a few notes on a keyboard and retrieves musical pieces with similar melodies, rhythms, or emotions.

• The user draws a few lines on a screen and retrieves images containing similar graphics, logos, or ideograms.

• The user defines objects, including color or texture patches, and retrieves similar examples.

• The user describes movements and relations between a set of given multimedia objects and retrieves animations that exhibit them.

• The user describes actions and retrieves scenarios containing them.

• Using an excerpt of Pavarotti's voice, the user obtains a list of his records and video clips and photographic material portraying him.

We encountered systems that embody the first and third of these examples in Chapter 3. However, it should be noted that the way in which MPEG-7 metadata is used to support such queries is beyond the scope of the standard.

MPEG-7 is based on four components

: Descriptors,

Description Schemes, Description Definition Language, and

Systems Tools. Descriptors represent low-level features, the fundamental qualities of audiovisual content. They range from statistical models of signal amplitude to the fundamental frequency of a signal, from emotional content to parameters of an explicit sound-effect model. Description Schemes specify the types of Descriptors that can be used and the relationships between them or between other Description Schemes. The Description Definition Language (DDL) allows users to extend the predefined description capabilities of Descriptors and Description Schemes. DDL uses XML syntax and is a form of XML Schema (more details appear in an appendix at the book's Web site). In this case, XML Schema alone is not flexible enough to handle low-level audiovisual forms, so DDL was formulated to address these needs. Finally, the Systems Tools are a suite of basic tools to synchronize metadata descriptions with content encoding and transmission.

A Description Scheme, together with instantiated values for a Descriptor, produces an actual Description, such as the one shown in Figure 6.4. The three AttributeValuePairs in the lower half (un-indented) give a trio of values of features (called

HSV_lin-4x4x4-G,

tamuraS-2-A, and

gabor-6-4) that were derived by analyzing the image whose URL is specified in the

MediaUri element. The actual values appear in the three lists of numbers (the

FloatMatrixValue elements). In fact, this example is from the system we described under Content-Based Image Search in Section 3.4 (illustrated in Figure 3.17), which, as we learned there, derives several mysteriously named features from the image content.

The MPEG-7 DDL is able to express spatial, temporal, structural, cardinal and data-type relationships between Descriptors and Description Schemas. For example, structural constraints specify the rules that a valid Description should obey: what child elements must be present for each node, or what attributes must be associated with elements. Cardinality constraints specify the number of times an element may occur. Data-type constraints specify the type and the possible values for data or Descriptors within the Description.

There are different Descriptors for audio, visual, and multimedia data. The audio description framework operates in both the temporal and spectral dimensions, the former for sequences of sounds or sound samples, and the latter for frequency spectra that comprise different components. At the lowest level, you can represent such things as instantaneous waveform and power values, various features of frequency spectra, fundamental frequency of quasi-periodic signals, a measure of spectral flatness, and so on. There is a way to construct a temporal

series of values from a set of individual samples, and a spectral

vector of values, such as a sampled frequency spectrum. At a higher level, description tools are employed for sound effects, instrumental timbre, spoken content, and melodic descriptors to facilitate query-by-humming.

In the visual domain, basic features include color, texture, region-based and contour-based shapes, and camera and object motion. Another basic feature is a notion of

localization in both time and space, and these dimensions can be combined in a space-time trajectory. These basic features can be built into structures like a grid of pixels or a time series of video frames.

Multimedia features include low-level audiovisual attributes like color, texture, motion, and audio energy; high-level features of objects, events, and abstract concepts; and information about compression and storage media. Browsing and retrieval of audiovisual content can be facilitated by defining summaries, partitions, and decompositions. Summaries, for example, allow an audiovisual object to be navigated either hierarchically or sequentially. For hierarchical navigation, material is organized into successive levels that describe it at different levels of detail, from coarse to fine. For sequential navigation, images or video frames can be arranged in sequences and possibly synchronized with audio and text so that they compose a slide show or audiovisual synopsis.

MPEG-7 descriptions can be entered by hand or extracted automatically from the signal (as in Figure 6.4). Some features (color, texture) can best be extracted automatically, while others (“this scene contains three shoes,” “that music was recorded in 1995”) cannot be extracted automatically.

The application areas envisaged for MPEG-7 are many and varied and stretch well beyond the ambit of what most people mean by digital libraries. They include education, journalism, tourist information, cultural services, entertainment, geographical information systems, remote sensing, surveillance, biomedical applications, shopping, architecture, real estate, interior design, film, video and radio archives, and even dating services.

Multimedia application metadata: MPEG-21

MPEG-7 provides a description framework for multimedia content that supports many digital library functions, such as structured browsing and surrogate creation. However, in reality, multimedia like music and video participate in complex international flows of content that are worth vast amounts of money. As we all know, some of this content is illegally distributed at various points in its lifecycle, due to the simplicity and fidelity of digital copying. MPEG-21 is a broad and ambitious standard that links multimedia producers and consumers to address issues of content control. According to the official overview,

MPEG-21 aims at defining a normative open framework for multimedia delivery and consumption for use by all the players in the delivery and consumption chain. This open framework will provide content creators, producers, distributors and service providers with equal opportunities in the MPEG-21 enabled open market. This will also be to the benefit of the content consumer, providing them access to a large variety of content in an interoperable manner.

MPEG-21 is founded upon two concepts: a

Digital Item (such as a video or music album) and the

User's Interaction with it. Because it is concerned with the access, consumption, and exchange of multimedia content, MPEG-21 emphasizes aspects of user interaction that are not considered by other metadata frameworks for digital content. Technically, Digital Items are compound objects that include multimedia content and metadata. Each Digital Item is defined by a Digital Item Declaration (DID) expressed in the Digital Item Declaration Language (DIDL) and written as an XML Schema. Another key part of the MPEG-21 framework is the Rights Expression Language, also written using XML Schema. The Rights Expression Language is based on licenses that grant principals (the users) the right to use a resource (such as a video) under certain conditions (such as a time limit). The intention is that MPEG-21 can link a multimedia resource with a machine-readable statement, such as

Alice permits Bob to play the video “Stupid Pet Tricks vol 26.mpg” during November 2011.

The development of MPEG-21 illustrates two important factors in the future of digital libraries: 1) the computational specification of rights management and 2) increasingly complex compound digital objects.

6.4. Metadata for Compound Objects

As we have seen, the descriptions of digital objects are becoming richer, and they are ever more interlinked with other objects or online resources—and the metadata needed to represent these connections is itself a source of complexity. In this section we provide examples of technologies that allow complex

compound objects to be rigorously defined.

Resource Description Framework: RDF

The Resource Description Framework (RDF) is designed to facilitate the interoperability of metadata, particularly in the realm of the World Wide Web. Because metadata covers too great a variety of information to specify exhaustively and categorically, RDF follows the lead of XML: rather than providing a set of possibilities, it supplies a means for describing a valid system. It is expected that communities of users will assemble to establish RDF systems suited to their collective needs. They will have to agree on a vocabulary, its meaning, and the structures that can be formed from it. This is done by specifying an RDF Schema, just as DTDs and XML Schemas are used to control XML vocabulary and structure.

RDF is a way of modeling as a resource anything that can be represented as a Universal Resource Identifier. What is a URI? Well, officially, according to the W3C standard (RFC 3986), a Universal Resource

Identifier is anything expressed in the appropriate format that serves to identify a Web resource, and thus a URI includes both locators—URLs—and names—URNs. Universal Resource

Locators are a subset of URIs that, in addition to identifying the resource, describe its location on the network. Universal Resource

Name refers to any URI that is guaranteed to remain globally unique and persistent even when the resource is deleted or becomes unavailable. A Web page, part of a document, an FTP site, or an e-mail address are all examples of the former, and the International Standard Book Number (ISBN) is an example of the latter.

RDF resources are described in a machine-readable fashion through a framework for specifying—in digital library terminology—metadata. The framework is compositional: new resources can be built from existing ones.

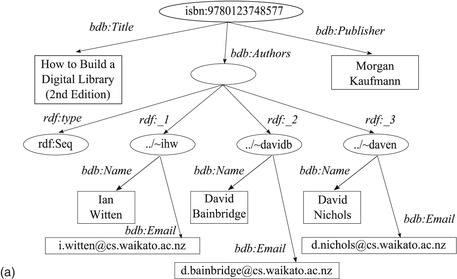

Figure 6.5 uses RDF to give a graphical description of the book you are holding in your hands. The book is represented by its ISBN (International Standard Book Number) in URI syntax. The top-level description comprises a title, three authors, and a publisher. The authors in turn are characterized by a name and an e-mail address.

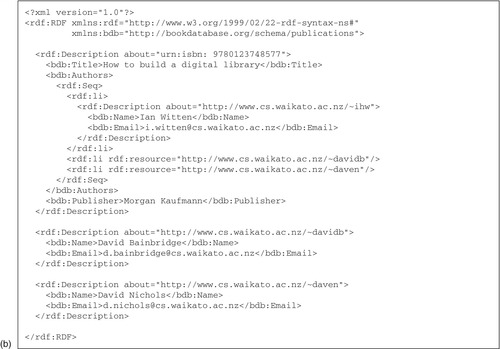

Mapping the graphical description into a character stream is a process of serialization, and for RDF the language of choice is (again) XML. Figure 6.5b shows the description of the example graph. This representation obscures some of the abstract aspects of RDF and makes it seem like just another example of an XML language—especially since we have recently seen so many of them! When considering RDF, you should keep in mind that it is a sister format to XML, not a subsidiary. However, this medium bestows practical benefits: software support for parsing and editing, transparent handling of international characters, and so on.

The basic construction in RDF (indeed as it is in English) is to connect a subject to an object using a predicate. This is known as a statement. Subjects and objects are nodes in the graph. The directed arc that joins them shows the connection; a label on the arc identifies the predicate used. To take an example from Figure 6.5a,

How to Build a Digital Library (object) is the

Title (predicate) of the resource

ISBN: 9780123748577 (subject). The subject is a resource, represented in the diagram as an oval node. An object may be either a resource or a string literal. In our case it is a string, represented as a rectangular node.

Now let's match up the graphical representation with the serialized form in Figure 6.5b. It begins with the familiar processing instruction, and a root node that declares some namespaces. In this case there are two: one for RDF and another for “Book Database,” denoted by the prefix

bdb. This represents a hypothetical organization that has developed an XML schema for representing metadata about books in terms of titles, authors, names, e-mail addresses, and publishers.

The first child of the root node is an

rdf:Description element that includes an

about attribute to specify the resource as a URI that gives the ISBN. The

bdb:Title tag that follows sets the predicate, and its content represents the string literal object that forms the title itself. Two other predicates connected to the ISBN resource are

Authors and

Publisher. The latter, like

Title, declares a string literal object (the string “Morgan Kaufmann”); the former, however, is more complex. Thus we see how the top level of the tree in Figure 6.5 is constructed.

Not only is

bdb:Authors the first object encountered in our explanation that is itself a resource, but also it is an example of an

anonymous resource—an intermediary node that has no specific resource name but is itself the subject of further qualifying statements. The counterpart in the graphical version of the model in Figure 6.5a is the node at the end of the

Authors predicate, which is nameless.

The anonymous resource also demonstrates a new structure called a

container, which is used to group resources together. RDF has three types of container:

bag,

sequence, and

alternative. A

bag is an unordered list of resources or string literals; a

sequence is an ordered list; and an

alternative represents the selection of just one item from the list. Each item in a

container is denoted by an

rdf:li tag. The example uses

rdf:Seq to represent a sequence of authors because the order is significant. The container type is represented by the

rdf:type predicate, and its contents are numbered

rdf:_1, rdf:_2, rdf:_3. These are implicitly inferred from the XML description (Figure 6.5b) but are shown explicitly in the pictorial version (Figure 6.5a).

There are three list items in the example, one for each author, and they happen to be compound resources. They are introduced by the RDF list item tag

rdf:li, and for illustrative purposes they are specified in different ways. The first is embedded in-line by starting a new

rdf:Description tag. The second and third receive a more compact

rdf:li tag that defers the resource's definition through the

rdf:resource attribute. The missing detail is filled in when a resource description is encountered whose

rdf:about attribute matches the named list item. This occurs in the lower third of the example.

RDF is designed to be created and read by machines, rather than people, so its verbosity is less important than its descriptive power and flexibility as a format. Figure 6.6 shows an automatically generated RDF file that describes a Web resource, the home page of the World Wide Web Consortium. The page has been downloaded and analyzed to extract metadata values, which have been expressed as Dublin Core metadata and included in the RDF file by importing the Dublin Core namespace in the

rdf element (the value of attribute

xmlns:dc, near the beginning). This example shows how RDF can interoperate with other metadata schemas to contribute to the Semantic Web. An extension of URIs called Internationalized Resource Identifiers (IRIs) has been proposed to extend RDF to resources that use Chinese, Korean, and Cyrillic characters.

RDF is a rich framework whose design draws upon structured documents, entity relationships, object orientation, and knowledge representation. We cannot illustrate all aspects here, but the above examples convey the flavor of what it does.

Metadata Encoding and Transmission Standard: METS

The Metadata Encoding and Transmission Standard (METS) is designed to permit the representation, maintenance, and exchange of the increasingly complex digital objects that make up digital libraries. Library catalogs have for years used MARC records to fulfill these functions for their materials. The METS initiative aims to do the same for digital collections.

METS is an open standard implemented in XML that encodes three of the metadata types identified in Section 6.1: descriptive, structural, and administrative. It also contains an extension mechanism, through which it can encompass further areas. A METS document consists of six major sections:

Structural Map,

Structural Links, File,

Descriptive Metadata,

Administrative Metadata, and

Behaviors. The Structural Map provides the structure that links content and metadata together; it is the only compulsory element. Structural Links allow METS creators to record the existence of hyperlinks between nodes in the Structural Map. The File section lists the actual files that make up the digital object. Descriptive Metadata and Administrative Metadata come in two possible forms: external metadata, such as a MARC record in a library catalog, and internal metadata, such as a Dublin Core or MODS record for Descriptive Metadata or a MIX or PREMIS (see below) record for Administrative Metadata. Finally, the Behaviors section is used to associate executable behavior with content in a METS object.

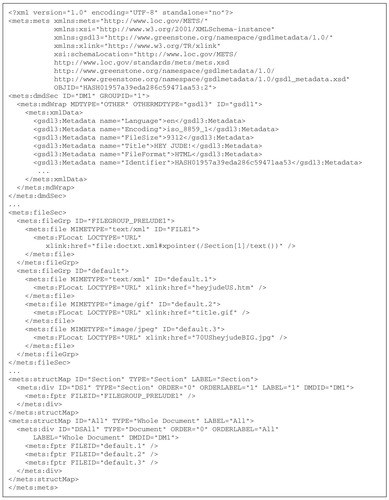

METS is a complex format and can be used in different ways—for example, as the internal format of a digital library, or as an exchange format. Figure 6.7 shows an example document. The simplest part is the File section in the middle (

<mets:fileSec> element), which identifies the content files. The

Flocat elements all use a URL and an XLink pointer (see the appendix at the book's Web site) to locate a file, together with a MIME type and an identifier, so that other parts of the description can refer to it.

The Structural Map parts at the end (<

mets:structMap>) use

div elements to indicate divisions within the document (following a convention established in HTML), and

<mets:fptr> elements to indicate which content files contribute to each division (using the ID values from the

Flocat elements in the File section). There are two Structural Maps in Figure 6.7, each with one

div; the second consists of three content files. Structural Maps could reflect the physical structure of a scanned book in pages or its logical structure in chapters: the indirect way in which they are expressed allows multiple perspectives to be described for the same basic content.

The Descriptive Metadata section (<

mets:dmdSec>) continues the theme of flexibility. Figure 6.7 has embedded metadata near the top (indicated by the <

mdWrap> element) of type

Other—in this case, a specific Greenstone style of metadata. More standard metadata types, such as MODS or Dublin Core, could be included instead (or as well), either embedded within a <

mdWrap> element or linked externally through a <

mdRef> element.

Figure 6.7 does not show the Administrative Metadata section (<

mets:amdSec>), which encodes technical metadata (such as MIX), intellectual property rights, information about a physical source document, and preservation metadata. A typical component for the last is PREMIS, which describes information in terms of Intellectual Entities, Objects, Events, Rights, and Agents. Practically, such metadata will be split into groups to match METS sub-elements of <

mets:amdSec>. The use of PREMIS highlights the redundancy that often occurs when multiple metadata schemes are combined—file sizes and checksums can be part of an

objectCharacteristic element in PREMIS and an attribute of a

file element in METS. Redundancy is not necessarily a bad thing, and here it is reasonable to use both.

Finally, as noted above, the Structural Links section of a METS document includes hyperlinks between parts of the Structural Map, in order to model Web content, while the Behavior section associates executable behavior with METS objects.

One of the consequences of the complexity and richness of METS is that its use requires extensive support in the form of software tools. Even for simple objects, descriptions can easily be 50 lines of XML, and complex objects can produce documents 50 KB or more in size.

A useful component of the METS framework is the ability to express a profile. Profiles represent constraints and requirements that METS documents must fulfill to conform to the profile. Restrictions on the document values make it easier for software tools to process the documents and simplify exchange and aggregation. For example, a profile can require that there is no Behavior section, that the images referenced from the document must be in TIFF v6.0 format, or that subject headings in an embedded MODS section must use Library of Congress Subject Headings. Profile requirements are expressed in natural language, so conformance to a profile cannot be checked automatically.

Collection-level metadata

The examples of metadata seen so far have been for individual items. Typically, many items are grouped together in collections, and it becomes useful to consider metadata at the collection level. Such metadata could include the people who collated the collection, background on why it was created, and who to contact for maintenance requests. Collection-level metadata can also include information derived automatically from the items, such as their total number, the earliest item, or recent additions.

Collection-level metadata helps to contextualize item-level metadata that has been divorced from its collection. The need for this is exemplified by the so-called “on a horse” problem, where a collection of material about

Theodore Roosevelt (collection-level metadata) contains a photograph with the description

on a horse (item-level metadata). The description makes sense within the bounds of the collection, but the item-level metadata will be incomprehensible if it is separated from the collection (e.g., through harvesting via OAI-PMH, as described in Chapter 7).

This problem shows that collection-level metadata can be used to supplement item-level metadata to enhance user understanding, shareability, and searching and browsing. It also allows users to start their information-gathering process one step earlier by interrogating a set of collections to determine which best suits their need. For this idea to work across different digital library systems, standard terms and meanings are required—exactly what RDF, for example, was designed for.

The Research Support Libraries Programme (RSLP) Collection Description project is an example of collection-level metadata. The project uses a model that represents

Collections,

Locations, and

Agents as RDF resources. There are three types of agent—

Collector,

Owner, and

Administrator—reflecting the roles that people or organizations play in providing and maintaining a collection. The bulk of the detail is contained in the collection resource, which may in turn reference further resources, such as a location and a collector.

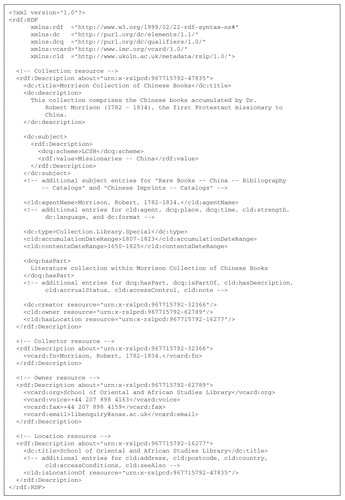

Figure 6.8 shows an abridged version of a description for the Morrison Collection of Chinese Books housed at the School of Oriental and African Studies Library in London, England. Four existing namespaces provide relevant elements and attributes: RDF (naturally), unqualified Dublin Core, qualified Dublin Core, and vCard, which is a namespace devised by the Internet Mail Consortium to represent fax numbers, phone numbers, and so on for electronic business cards. An additional namespace for RSLP covers attributes and elements not defined elsewhere (prefix

cld).

The example contains four top-level resource descriptions:

Collection,

Collector,

Owner, and

Location (marked with XML comments). There is no

Administrator resource in this example. Most of the information supplied in the collection resource description is through Dublin Core. In particular, the

<dc:subject> element shown uses the anonymous resource mechanism mentioned earlier to embed another resource, which is a Library of Congress Subject Heading, expressed using qualified Dublin Core.

About halfway down the collection resource description, the Dublin Core

<dc:type> element is used to give the collection's type. The RSLP collection description defines an enumerated list of possible types, starting with broad classifications, such as Catalog and Index, and then proceeding to more specific items, such as Library, Museum, and Archive, and finally defining even more specific items, such as Text, Image, and Sound. A collection can be given more than one of these types by separating the terms by a period (.), as can be seen in the figure.

Elements

<dq:hacsPart>,

<dcq:isPartOf>, and

<cld:hasDescription> are examples of external relationships. They identify or name other resources that have a bearing on the collection being described. There are seven external relationships in all. The ones appearing here are used to name subcollections (

hasPart), the larger library this collection fits into (

isPartOf), and where a description of the collection appears (

hasDescription).

The lower part of the collection resource description contains references to the collector, owner, and location resources through

dc:creator,

cld:owner, and

cld:hasLocation, respectively. The first two are examples of agents and make use of

vcard elements to supply the necessary information. A location can be electronic or physical, and most of its elements are defined by RSLP namespace elements.

Open Archives Initiative Object Reuse and Exchange: OAI-ORE

The Open Archives Initiative Object Reuse and Exchange (OAI-ORE) is a standard designed expressly for representing aggregations of digital objects. An extension of the OAI project (described in Chapter 7), it can be applied when separate (perhaps disparate) objects need to be treated as a group. Grouping objects is a natural human activity: photos are grouped into albums, music tracks are grouped into playlists, documents are grouped into collections.

OAI-ORE introduces a specific resource, called an

Aggregation, that refers to a set of objects. An aggregation is described by a

Resource Map that associates metadata with it. For example, Figure 6.9 shows an OAI-ORE document that describes this book. The aggregation contains four items: TIFF images of the front and back covers and PDF documents for the two parts of the book. The first <

rdfDescription> element is declared to be an OAI-ORE Aggregation by reference to the term http://www.openarchives.org/ore/terms/Aggregation. This element also contains Dublin Core metadata

dc:creator and

dc:title. The second <

rdfDescription> element is a resource map: it declares that the RDF file at http://www.cs.waikato.ac.nz/~ihw/rdf/htbadl2/ is a description (or resource map) of the aggregation. The remainder of the file attaches Dublin Core metadata to the individual items as item-level metadata.

OAI-ORE is a new framework with many potential uses in representing resources and metadata. It can link together different versions of the same document (e.g., PDF, PostScript, and Word). It can express parallel aggregations like a photograph collection containing themed groups (holiday in France, New Year party, graduation ceremony), all defined without affecting the base collection. The resources are URIs, so items can be defined across collections as well, from anywhere on the Internet that has a URI; thus, virtual collections can be built from remote resources. Because aggregations are themselves resources, they can themselves be aggregated into groups of collections, which can then be further aggregated.

The design of OAI-ORE is reminiscent of the METS Structural Map, which illustrates one of the virtues of the digital world: the ability to break apart, recombine, and restructure content in new and creative ways.

Metadata for education: LOM and SCORM

Libraries have long supported a variety of educational activities, and digital libraries for education have driven both content creation and library technology. Educational technologists talk of

Learning Objects and have devised a metadata scheme called Learning Object Metadata (LOM) to describe them. According to the IEEE Learning Technology Standards Committee,

the LOM standard will specify the syntax and semantics of Learning Object Metadata, defined as the attributes required to fully/adequately describe a Learning Object. Learning Objects are defined here as any entity, digital or non-digital, which can be used, reused or referenced during technology supported learning.

As you can see, the scope of Learning Objects is very wide. Figure 6.10 shows a sample LOM record; it is expressed in XML, is quite readable, and resembles other XML metadata representations, such as MODS.

The resource that this metadata record describes is titled

A Teddy Bear Picnic, which is stated in the <

general> section as the <

title> tag. The previous section gives its subject classification as

Arts. The LOM standard allows information to be provided in more than one language if desired. In this case, the overall language of the resource is set to English through the <

language> tag. Individual <