Chapter 4. Textual documents

The raw material

Documents are the digital library's building blocks. It is time to step down from our high-level discussion of digital libraries—what they are, how they are organized, and what they look like—to nitty-gritty details of how to represent the documents they contain. To do a thorough job for international documents in non-Roman alphabets, we will have to descend even further and look at the representation of the characters that make up textual documents.

Documents are the digital library's building blocks. It is time to step down from our high-level discussion of digital libraries—what they are, how they are organized, and what they look like—to nitty-gritty details of how to represent the documents they contain. To do a thorough job for international documents in non-Roman alphabets, we will have to descend even further and look at the representation of the characters that make up textual documents.

A great practical problem anyone faces when writing about the innards of digital libraries is the high rate of change in the core technologies. Perhaps the most striking feature of the digital library field is the inherent tension between two extremes: the dizzying pace of technological change and the very long-term view that libraries must take. We must reconcile any aspirations to surf the leading edge of technology with the literally static ideal of archiving material “for ever and a day.”

Document formats are where the rubber meets the road. Over the last two decades a cornucopia of different representations have emerged. Some have become established as standards, either official or

de facto, and it is on these that we focus. There are plenty of them: the nice thing about standards, they say, is that there are so many different ones to choose from!

The standard ASCII (American Standard Code for Information Interchange) code used by computers has been extended in dozens of different and often incompatible ways to deal with other character sets. Some Web pages specify the character set explicitly. Internet Explorer recognizes over 100 different character sets, mostly extensions of ASCII and another early standard called EBCDIC, some of which have half a dozen different names. Without a unified coding scheme, search programs must know about all this to work correctly under every circumstance.

Fortunately, there is an international standard for representing characters: Unicode. It emerged over the last 20 years and is now stable and widely used—although it is still being extended and developed to cover languages and character sets of scholarly interest (e.g., archaic ones). It allows the content of digital libraries, and their user interfaces, to be internationalized. The basics of Unicode are introduced in the next section: a more comprehensive account is included in Chapter 8 on internationalization. Of course, Unicode only helps with character

representation. Language translation and cross-language searching are thorny matters of computational linguistics that lie completely outside its scope (and outside this book's scope, too).

Section 4.1 discusses the representation of plain text documents, and related issues. Even the most basic character representation, ASCII, can present ambiguities in interpretation. We also sketch how an index can be created for textual documents in order to facilitate rapid full-text searching. Before the index is created, the input must be split into words. This involves a few mundane practical decisions—and introduces deeper issues for languages that are not traditionally written with spaces between words.

Probably the single most important issue when contemplating a digital library project is whether you plan to digitize the material for the collection from ordinary books and other documents. This inevitably involves manually handling physical material, and perhaps also manual correction of computer-based text recognition. It generally represents the vast bulk of the work involved in building a digital library. Section 4.2 describes the process of digitizing textual documents, by far the most common source of digital library material. Most library builders end up outsourcing the operation to a specialist; this section alerts you to the issues you will need to consider when planning this part of the project.

The next section describes HTML, the Hypertext Markup Language, which was designed specifically to allow references, or

hyperlinks, to other files, including picture files, giving a natural way to embed illustrations in the body of an otherwise textual document

Electronic documents have two complementary aspects: structure and appearance. Structural markup makes certain aspects of the document structure explicit: section divisions, headings, subsection structure, enumerated and bulleted lists, emphasized and quoted text, footnotes, tabular material, and so on. Appearance is controlled by

presentation or

formatting markup that dictates how the document appears typographically: page size, page headers and footers, fonts, line spacing, how section headers look, where figures appear, and so on. Structure and appearance are related by the design of the document, that is, a catalog—often called a

style sheet—of how each structural item should be presented. While HTML supports this separation, it does not enforce it and, worse, given the format's chaotic beginnings it quickly became augmented with a host of other facilities that blurred this distinction.

Whatever its faults, HTML, being the foundation for the Web, is a phenomenally successful way of representing documents. However, when dealing with collections of documents, different ways of expressing formatting in HTML tend to generate inconsistencies—even though the documents may look the same. Although these inconsistencies matter little to human readers, they are a bane for automatic processing of document collections. Next we describe XML, a more modern development that is intended to solve some of the problems that arise with HTML. XML is an

extensible markup language that allows you to declare what syntactic rules govern a particular group of files. More precisely, it is referred to as a

metalanguage—a language used to define other languages. XML provides a flexible framework for describing document structure and metadata, making it ideally suited to digital libraries. It has achieved widespread use in a short period of time—reflecting a great demand for standard ways of incorporating metadata into documents—and underpins many other standards. Among these are ways of specifying style sheets that define how particular families of XML documents should appear on the screen or printed page. There is also XHTML, a recasting of this format using the stricter syntax of XML.

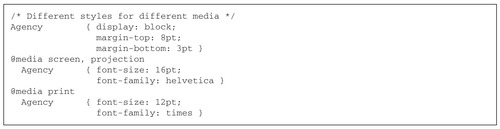

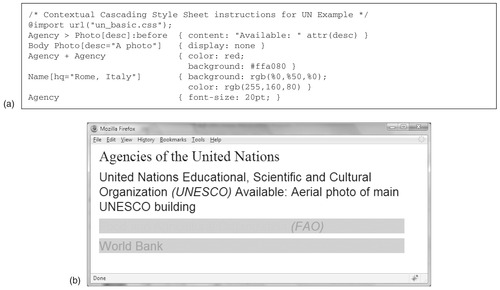

Style sheets have been mentioned several times. Associated with the markup languages HTML and XML are the stylesheet languages CSS (cascading style sheets) and XSL (extensible stylesheet language), and these are introduced in Section 4.4.

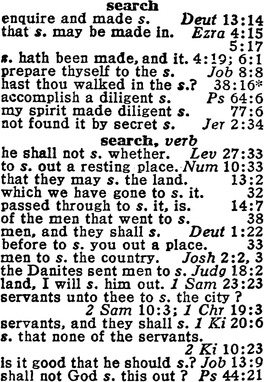

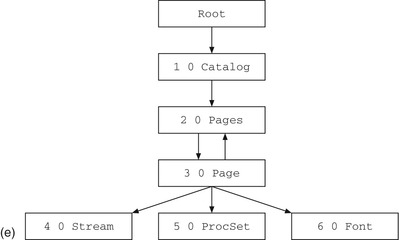

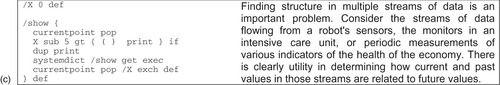

After thoroughly reviewing HTML and XML, we move on to more comprehensive ways of describing finished documents. Desktop publishing empowers ordinary people to create carefully designed and lavishly illustrated documents and to publish them electronically, often dispensing with print altogether. People have quickly become accustomed to reading publication-quality documents online. It is worth reflecting on the extraordinary change in our expectations of online document presentation since, say, 1990. This revolution has been fueled largely by the PostScript language and its successor, the Portable Document Format (PDF). These are both

page description languages: they combine text and graphics by treating the glyphs that comprise text as little pictures in their own right and allowing them to be described, denoted, and placed on an electronic page alongside conventional illustrations.

Page description languages portray finished documents, ones that are not intended to be edited. In contrast, word processors represent documents in ways that are expressly designed to support interactive creation and editing. Society's notion of

document has evolved from painstakingly engraved Chinese steles, literally “carved in stone,” to hand-copied medieval manuscripts; from the interminable revisions of loose-leaf computer manuals in the 1960s and 1970s to continually evolving Web sites whose pages are dynamically composed on demand from online databases, and it seems inevitable that more and more documents will be circulated in word-processor formats.

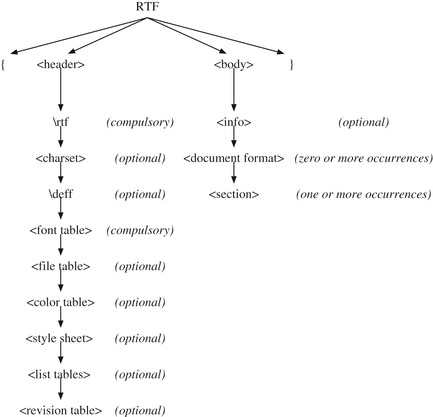

Section 4.6 describes examples of word-processor documents, starting with the ubiquitous Microsoft Word format and Rich Text Format (RTF). Word is intended to represent working documents inside a word processor. It is a proprietary format that depends on the exact version of Microsoft Word that was used and it is not always backward-compatible. RTF is more portable, being intended to transmit documents to other computers and other versions of the software. It is an open standard, defined by Microsoft, for exchanging word-processor documents between different applications.

Winds of change are sweeping through the world of document representation. XML and associated standards are beginning to be adopted for fully fledged word-processor documents. The Open Document Format (ODF) is an XML-based format that can describe word-processor documents and many more: from spreadsheets to charts, from presentations to mathematical formulae. ODF has been developed and promoted by a not-for-profit consortium called the Organization for the Advancement of Structured Information Standards, which is strongly associated with open source software, and whose mission is to drive the development and adoption of open standards for the global information society. Office Open XML (OOXML) is a similar (but, unfortunately, different) XML-based document format being developed by Microsoft to represent documents in its Office line of products. These two standards are vying to be the dominant format for describing word-processor documents, and we discuss the controversy and describe ODF in Section 4.6. We also describe the format used by the LaTeX document processing system, a system which has been around for over 20 years and is widely used to represent documents in the scientific and mathematical community.

Finally, Section 4.7 takes a brief look at other predominantly textual document formats: spreadsheets, presentation files, and e-mail.

This chapter gets down to details, the dirty details (where the devil is). You may find the level uncomfortably low. Why do you need to know all this? The answer is that when building digital libraries you will be presented with documents in many different formats, yet you will yearn for standardization. You have to understand how different formats work in order to appreciate their strengths and limitations. Examples? When converted from PDF to PostScript, documents lose interactive features like hyperlinks. Converting HTML to PostScript is easy (your browser does it every time you print a Web page), but converting an arbitrary PostScript file to HTML is next to impossible if you want a completely accurate visual replica.

Even if your project starts with paper documents, you still need to know about online formats. The optical character recognition process may produce Microsoft Word documents, retaining much of the formatting in the original and leaving illustrations and pictures

in situ. But how easy is it to extract the plain text for indexing purposes? To highlight search terms in the text? To display individual pages? Perhaps another format is preferable?

4.1. Representing Textual Documents

Way back in 1963, at the dawn of interactive computing, the American National Standards Institute (ANSI) began work on a character set that would standardize text representation across a range of computing equipment and printers. (At the time, a variety of codes were in use by different computer manufacturers, such as an extension of a

binary-coded decimal punched card code to deal with letters—EBCDIC, or Extended Binary Coded Decimal for Information Interchange—and the European Baudot code for teleprinters that accommodated mixed-case text by switching between upper- and lowercase modes.) In 1968, ANSI finally ratified the result, called ASCII: American Standard Code for Information Interchange. Until recently, ASCII dominated text representation in computing.

ASCII

Table 4.1 shows the ASCII character set, with code values in decimal, octal, and hexadecimal. Codes 65–90 (decimal) represent the uppercase letters of the Roman alphabet, while codes 97–122 are lowercase letters. Codes 48–57 give the digits zero through nine. Codes 0–32 and 127 are

control characters that have no printed form. Some of these govern physical aspects of the printer—for instance, BEL rings the bell (now downgraded to an electronic beep), BS backspaces the print head (now the cursor position). Others indicate parts of a communication protocol: SOH starts the header, STX starts the transmission. Interspersed between these blocks are sequences of punctuation and other nonletter symbols (codes 33–47, 58–64, 91–96, 123–126). Each code is represented in seven bits, which fits into a computer byte with one bit (the top one) free. In the original vision for ASCII, this was earmarked for a parity check.

ASCII was a great step forward. It helped computers evolve over the following decades from scientific number-crunchers and fixed-format card-image data processors to interactive information appliances that permeate all walks of life. However, it has proved a great source of frustration to speakers of other languages. Many different extensions have been made to the basic character set, using codes 128–255 to specify accented and non-Roman characters for particular languages. ISO 8859-1, from the International Organization for Standardization (the international counterpart of the American standards organization, ANSI), extends ASCII for Western European languages. For example, it represents

é as the single decimal value 233 rather than the ASCII sequence “

e followed by backspace followed by ´.” The latter is alien to the French way of thinking, for

é is really a single character, generated by a single keystroke on French keyboards. For non-European languages like Hebrew and Chinese, ASCII is irrelevant. For them, other schemes have arisen: for example, GB and Big-5 are competing standards for Chinese; the former is used in the People's Republic of China and the latter in Taiwan and Hong Kong.

As the Internet exploded into the World Wide Web and burst into all countries and all corners of our lives, the situation became untenable. The world needed a new way of representing text.

Unicode

In 1988, Apple and Xerox began work on Unicode, a successor to ASCII that aimed to represent all the characters used in all the world's languages. As word spread, the Unicode Consortium, a group of international and multinational companies, government organizations, and other interested parties, was formed in 1991. The result was a new standard, ISO-10646, ratified by the International Organization for Standardization in 1993. In fact, the standard melded the Unicode Consortium's specification with ISO's own work in this area.

Unicode continues to evolve. The main goal of representing the scripts of languages in use around the world has been achieved. Current work is addressing historic languages, such as Egyptian hieroglyphics and Indo-European languages, and notations like music. A steady stream of additions, clarifications, and amendments eventually lead to new published versions of the standard. Of course, backward compatibility with the existing standard is taken for granted.

A standard is sterile unless it is adopted by vendors and users. Programming languages like Java have built-in Unicode support. Earlier ones—C, Perl, Python, to name a few—have standard Unicode libraries. Today's operating systems all support Unicode, and application programs, including Web browsers, have passed on the benefits to the end user. Unicode is the default encoding for HTML and XML. People of the world, rejoice!

Unicode is universal: any document in an existing character set can be mapped into it. But it also satisfies a stronger requirement: the resulting Unicode file can be mapped back to the original character set without any loss of information. This requirement is called

round-trip compatibility with existing coding schemes, and it is central to Unicode's design. If a letter with an accent is represented as a single character in some existing character set, then an equivalent must also be placed in the Unicode set, even though there might be another way to achieve the same visual effect. Because the ISO 8859-1 character set mentioned above includes

é as a single character, it must be represented as a single Unicode character—even though an identical glyph can be generated using a sequence along the lines of “

e followed by backspace followed by ´.”

Round-trip compatibility is an attractive way to facilitate integration with existing software and was most likely motivated by the pressing need for a nascent standard to gain wide acceptance. You can safely convert any document to Unicode, knowing that it can always be converted back again if necessary to work with legacy software. This is indeed a useful property. However, multiple representations for the same character can cause complications. These issues are discussed further in Chapter 8 (Section 8.2), which gives a detailed account of the Unicode standard.

In the meantime, it is enough to know that the most popular method of representing Unicode is called UTF-8. UTF stands for “UCS Transformation Format,” which is a nested acronym: UCS is Unicode Character Set—so called because Unicode characters are “transformed” into this encoding format. UTF-8 is a variable-length encoding scheme where the basic entity is a byte. ASCII characters are automatically 1-byte UTF-8 codes; existing ASCII files are valid UTF-8.

Plain text

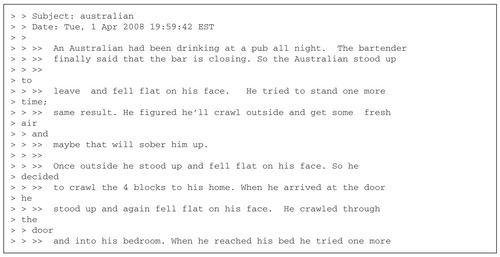

Unicode provides an all-encompassing form for representing characters, including manipulation, searching, storage, and transmission. Now we turn attention to document representation. The lowest common denominator for documents on computers has traditionally been plain, simple, raw ASCII text. Although there is no formal standard for this, certain conventions have grown up.

A text document comprises a sequence of character values interpreted in ordinary reading order: left to right, top to bottom. There is no header to denote the character set used. While 7-bit ASCII is the baseline, the 8-bit ISO ASCII extensions are often used, particularly for non-English text. This works well when text is processed by just one application program on a single computer, but when transferring between different applications—perhaps through e-mail, news, the Web, or file transfer—the various programs involved may make different assumptions. An alphabet mismatch often means that characters in the range 128–255 are displayed incorrectly.

Plain text documents are formatted in simple ways. Explicit line breaks are usually included. Paragraphs are separated by two consecutive line breaks, or else the first line is indented. Tabs are frequently used for indentation and alignment. A fixed-width font is assumed; tab stops usually occur at every eighth character position. Common typing conventions are adopted to represent characters like dashes (two hyphens in a row). Headings are underlined manually using rows of hyphens or equal signs. Emphasis is often indicated by surrounding text with a single underscore (_like this_), or flanking words with asterisks (*like* *this*).

Different operating systems have adopted conflicting conventions for specifying line breaks. Historically, teletypes were modeled after typewriters. The line-feed character (ASCII 10, LF in Table 4.1) moves the paper up one line but retains the position of the print head. The carriage-return character (ASCII 13, CR in Table 4.1) returns the print head to the left margin but does not move the paper. A new line is constructed by issuing

carriage return followed by

line feed (logically the reverse order could be used, but the

carriage return line feed sequence is conventional, and universally relied upon). Microsoft Windows uses this teletype-oriented interpretation. However, Unix and the Apple Macintosh adopt a different convention: the ASCII line-feed character moves to the next line

and returns the print head to the left margin. This difference in interpretation can produce a strange-looking control character at the end of every line:

∧M or “carriage return.” (Observe that

CR and

M are in the same row of Table 4.1. Control characters in the first column are often made visible by prefixing the corresponding character in the second column with

∧.) While the meaning of the message is not obscured, the effect is distracting.

People who use the standard Internet file transfer protocol (FTP) sometimes wonder why it has separate ASCII and binary modes. The difference is that in ASCII mode, new lines are correctly translated when copying files between different systems. It would be wrong to apply this transformation to binary files, however. Modern text-handling programs conceal the difference from users by automatically detecting which new-line convention is being used and behaving accordingly. Of course, this can lead to brittleness: if assumptions break down, all line breaks are messed up and users are mystified.

Plain text is a simple, straightforward, but impoverished representation of documents in a digital library. Metadata cannot be included explicitly (except, possibly, as part of the file name). However, automatic processing is sometimes used to extract title, author, date, and so on. Extraction methods rely on informal document structuring conventions. The more consistent the structure, the easier extraction becomes. Conversely, the simpler the extraction technique, the more seriously things break down when formatting quirks are encountered. Unfortunately you cannot normally expect complete consistency and accuracy in large plain text document collections.

Indexing

Rapid searching is a core function of digital libraries that distinguishes them from physical libraries. The ability to search full text adds great value when large document collections are used for study or reference. Search can be used for particular words, sets of words, or sequences of words. Obviously, it is less important in recreational reading, which normally takes place sequentially, in one pass.

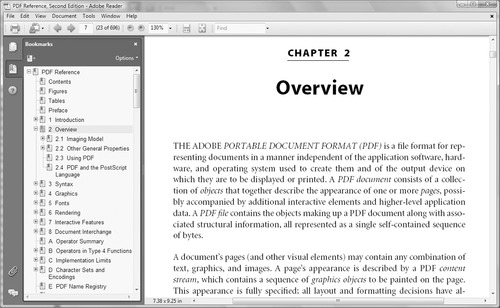

Before computers, full-text searching was confined to highly valued—often sacred—works for which a concordance had already been prepared. For example, some 300,000 word appearances are indexed in Cruden's concordance of the Bible, printed on 774 pages. They are arranged alphabetically, from

Aaron to

Zuzims, and any particular word can be located quickly using binary search. Each probe into the index halves the number of potential locations for the target, and the correct page for an entry can be located by looking at no more than 10 pages—fewer if the searcher interpolates the position of an entry from the position of its initial letter in the alphabet. A term can usually be located in a few seconds, which is not bad considering that simple manual technology is being employed. Once an entry has been located, the concordance gives a list of references that the searcher can follow up. Figure 4.1 shows some of Cruden's concordance entries for the word

search.

|

| Figure 4.1: Cruden's Complete Concordance to the Old and New Testaments by A. Cruden, C. J. Orwom, A. D. Adams, and S. A. Waters. 1941, Lutterworth Press |

In digital libraries searching is done by a computer, rather than by a person, but essentially the same techniques are used. The difference is that things happen faster. Usually it is possible to keep a list of terms in the computer's main memory, and the list can be searched in a matter of microseconds. When the computer's equivalent of the concordance entry becomes too large to store in main memory, secondary storage (usually a disk) is accessed to obtain the list of references, which typically takes a few milliseconds.

A full-text index to a document collection gives, for each word, the position of every occurrence of that word in the collection's text. A moment's reflection shows that the size of the index is commensurate with the size of the text, because an occurrence position is likely to occupy roughly the same number of bytes as a word in the text. (Four-byte integers, which are convenient in practice, are able to specify word positions in a 4-billion-word corpus. Conversely, an average English word has five or six characters and so also occupies a few bytes, the exact number depending on how it is stored and whether it is compressed.) We have implicitly assumed a word-level index, where occurrence positions give actual word locations in the collection. Space will be saved if locations are recorded within a unit, such as a paragraph, chapter, or document, yielding a coarser index—partly because pointers can be smaller, but chiefly because when a particular word occurs several times in the same unit, only one pointer is required for that unit.

A comprehensive index, capable of rapidly accessing all documents that satisfy a particular query, is a large data structure. Size, as well as being a drawback in its own right, also affects retrieval time, for the computer must read and interpret appropriate parts of the index to locate the desired information. Fortunately, there are interesting data structures and algorithms that can be applied to solve these problems. They are beyond the scope of this book, but references can be found in the “Notes and sources” section at the end of the chapter.

The basic function of a full-text index is to provide, for any particular query term, a list of all the units that contain it, along with (for reasons to be explained shortly) the number of times it occurs in each unit on the list. It's simplest to think of the “units” as being documents, although the granularity of the index may use paragraphs or chapters as the units instead—or even individual words, in which case what is returned is a list of the word numbers corresponding to the query term. And it's simplest to think of the query term as a word, although if stemming or case-folding is in effect, the term may correspond to several different words. For example, with stemming, the term

computer may correspond to the words

computer,

computers,

computation,

compute, and so on; and with case-folding it may correspond to

computer,

Computer,

COMPUTER, and even

CoMpUtEr (an unusual word, but not completely unknown—for example, it appears in this book!).

When one indexes a large text, it rapidly becomes clear that just a few common words—such as

of,

the, and

and—account for a large number of the entries in the index. People have argued that these words should be omitted, since they take up so much space and are not likely to be needed in queries, and for this reason they are often called

stop words. However, some index compilers and users have observed that it is better to leave stop words in. Although a few dozen stop words may account for around 30 percent of the references that an index contains, it is possible to represent them in a way that consumes relatively little space.

A query to a full-text retrieval system usually contains several words. How they are interpreted depends on the type of query. Two common types, both explained in Section 3.4, are

Boolean queries and

ranked queries. In either case, the process of responding to the query involves looking up, for each term, the list of documents it appears in, and performing logical operations on these lists. In the case of Boolean queries, the result is a list of documents that satisfy the query, and this list (or the first part of it) is displayed to the user.

In the case of ranked queries, the final list of documents is sorted according to the ranking heuristic that is in place. As Section 3.4 explains, these heuristics gauge the similarity of each document to the set of terms that constitute the query. For each term, they weigh the frequency with which it appears in the document being considered (the more it is mentioned, the greater the similarity) against its frequency in the document collection as a whole (common terms are less significant). This is why the index stores the number of times each word appears in each document. A great many documents—perhaps all documents in the collection—may satisfy a particular ranked query (if the query contains the word

the, all English documents would probably qualify). Retrieval systems take great pains to work efficiently even on such queries; for example, they use techniques that avoid the need to sort the list fully in order to find the top few elements.

In effect, the indexing process treats each document (or whatever the unit of granularity is) as a “bag of words.” What matters is the words that appear in the document and (for ranked queries) the frequency with which they appear. The query is also treated as a bag of words. This representation provides the foundation for full-text indexing. Whenever documents are presented in forms other than word-delineated plain text, they must be reduced to this form so that the corresponding bag of words can be determined.

Word segmentation

Before an index is created, the text must first be divided into words. A word is a sequence of alphanumeric characters surrounded by white space or punctuation. Usually some large limit is placed on the length of words—perhaps 16 characters, or 256 characters. Another practical rule of thumb is to limit numbers that appear in the text to a far smaller size—perhaps four numeric characters, so that only numbers less than 9,999 are indexed. Without this restriction, the size of the vocabulary might be artificially inflated—for example, a long document with numbered paragraphs could contain hundreds of thousands of different integers—which negatively affects certain technical aspects of the indexing procedure. Years, however, which have four digits, should be preserved as single words.

In some languages, plain text presents special problems. Languages like Chinese and Japanese are written without any spaces or other word delimiters (except for punctuation marks). This causes obvious problems in full-text indexing: to get a bag of words, we must be able to identify the words. One possibility is to treat each character as an individual word. However, this produces poor retrieval results. An extreme analogy is an English-language retrieval system that, instead of finding all documents containing the words

digital library, found all documents containing the constituent letters

a b d g i l r t y. Of course the searcher will receive all sought-after documents, but they are diluted with countless others. And ranking would be based on letter frequencies, not word frequencies. The situation in Chinese is not so bad, for individual characters are far more numerous, and more meaningful, than individual letters in English. But they are less meaningful than words. Chapter 8 discusses the problem of segmenting such languages into words.

4.2. Textual Images

Plain text documents in digital libraries are often produced by digitizing paper documents. Digitization is the process of taking traditional library materials, typically in the form of books and papers, and converting them to electronic form, which can be stored and manipulated by a computer. Digitizing a large collection is a time-consuming and expensive process that should not be undertaken lightly.

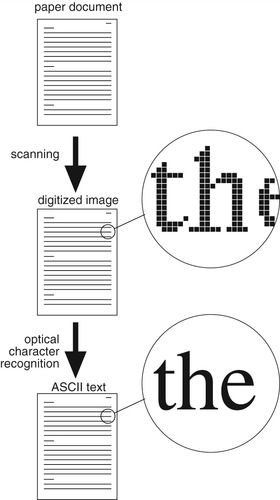

Digitizing proceeds in two stages, illustrated in Figure 4.2. The first stage produces a digitized image of each page using a process known as

scanning. The second stage produces a digital representation of the textual content of the pages using optical character recognition (OCR). In many digital library systems, what is presented to library readers is the result of the scanning stage: page images, electronically delivered. The OCR stage is necessary if a full-text index is to be built that will allow searchers to locate any combination of words, or if any automatic metadata extraction technique is contemplated, such as identifying document titles by seeking them in the text. Sometimes the second stage is omitted, but full-text search is then impossible, which negates a prime advantage of digital libraries.

If, as is usually the case, OCR is undertaken, the result can be used as an alternative way of displaying the page contents. The display will be more attractive if the OCR system not only is able to interpret the text in the page image, but also can retain the page layout as well. Whether it is a good idea to display OCR output depends on how well the page content and format are captured by the OCR process, among other things.

Scanning

The result of the first stage, scanning, is a digitized image of each page. The image resembles a digital photograph, although its picture elements or

pixels may be either black or white—whereas photos have pixels that come in color, or at least in different shades of gray. Text is well represented in black and white, but if the image includes nontextual material, such as pictures, or exhibits artifacts like coffee stains or creases, grayscale or color images will resemble the original pages more closely. Image digitization is discussed more fully in the next chapter.

When scanning page images you need to decide whether to use black-and-white, grayscale, or color, and you also need to determine the resolution of the digitized images—that is, the number of pixels per linear unit. A familiar example of black-and-white image resolution is the ubiquitous laser printer, which generally prints 600–1200 dots per inch. Table 4.2 shows the resolution of several common imaging devices.

The number of bits used to represent each pixel also helps to determine image quality. Most printing devices are black and white: one bit is allocated to each pixel. When putting ink on paper, this representation is natural—a pixel is either inked or not. However, display technology is more flexible, and computer screens allow several bits per pixel. Color displays range up to 24 bits per pixel, encoded as 8 bits for each of the colors red, green, and blue, or even 32 bits per pixel, encoded in a way that separates the chromatic, or color, information from the achromatic, or brightness, information. Color scanners can be used to capture images having more than 1 bit per pixel.

More bits per pixel can compensate for a lack of linear resolution and vice versa. Research on human perception has shown that if a dot is small enough, its brightness and size are interchangeable—that is, a small bright dot cannot be distinguished from a larger, dimmer one. The critical size below which this phenomenon takes effect depends on the contrast between dots and their background, but corresponds roughly to a very low-resolution (640 × 480) display at normal viewing levels and distances.

When digitizing documents for a digital library, think about what you want the user to be able to see. How closely does it need to resemble the original document pages? Are you concerned about preserving artifacts? What about pictures in the text? Will users see one page on the screen at a time? Will they want to magnify the images?

You will need to obtain scanned versions of several sample pages, chosen to cover the kinds and quality of images in the collection, and digitized to a range of different qualities (e.g., different resolutions, different gray levels, and color versus monochrome). You should conduct trials with end users of the digital library to determine what qualities are necessary for actual use.

It is always tempting to say that quality should be as high as it possibly can be. But there is a cost: the downside of accurate representation is increased storage space on the computer and—probably more importantly—increased time required for page access by users, particularly remote users. Doubling the linear resolution quadruples the number of pixels, and although this increase is ameliorated by compression techniques, users still pay a toll in access time. Your trials should take place on typical computer configurations using typical communications facilities, so that you can assess the effect of download time as well as image quality. You might also consider generating thumbnail images, or images at several different resolutions, or using a “progressive refinement” form of image transmission (see Section 5.3), so that users who need high-quality pictures can be sure they’ve got the right one before embarking on a lengthy download.

Optical character recognition

The second stage of digitizing library material is to transform the scanned image into a digitized representation of the page

content—in other words, a character-by-character representation rather than a pixel-by-pixel one. This is known as

optical character recognition (OCR). Although the OCR process itself can be entirely automatic, subsequent manual cleanup is invariably necessary and is usually the most expensive and time-consuming operation involved in creating a digital library from printed material. OCR might be characterized as taking “dumb” page images that are nothing more than images and producing “smart” electronic text that can be searched and processed in many different ways.

As a rule of thumb, a resolution of 300 dpi is needed to support OCR of regular fonts (10-point or greater), and 400 to 600 dpi for smaller fonts (9-point or less). Many OCR programs can tune the brightness of grayscale images appropriately for the text being recognized, so grayscale scanning tends to yield better results than black-and-white scanning. However, black-and-white images generate much smaller files than grayscale ones.

Not surprisingly, the quality of the output of an OCR program depends critically on the quality of the input. With clear, well-printed English, on clean pages, in ordinary fonts, digitized to an adequate resolution, laid out on the page in the normal way, with no tables, images, or other nontextual material, a leading OCR engine is likely to be 99.9 percent accurate or above—say 1 to 4 errors per 2,000 characters, which is a little under a page of this book. Accuracy continues to increase, albeit slowly, as technology improves. Replicating the exact format of the original image is more difficult, although for simple pages an excellent approximation will be achieved.

Unfortunately, the OCR operation is rarely presented with favorable conditions. Problems occur with proper names, with foreign names and words, and with special terminology—like Latin names for biological species. Problems are incurred with strange fonts, and particularly with alphabets that have accents or diacritical marks, or non-Roman characters. Problems are generated by all kinds of mathematics, by small type or smudgy print, and by overly dark characters that have smeared or bled together or overly light ones whose characters have broken up. OCR has problems with tightly packed or loosely set text where, to justify the margins, character and word spacing diverge widely from the norm. Hand annotation interferes with print, as does water-staining, or extraneous marks like coffee stains or squashed insects. Multiple columns, particularly when set close together, are difficult. Other problems are caused by any kind of pictures or images—particularly ones that contain some text; by tables, footnotes, and other floating material; by unusual page layouts; and by text in the image that is skewed, or lines of text that are bowed from the attempt to place book pages flat on the scanner platen, or by the book's binding if it interferes with the scanned text. These problems may sound arcane, but almost all OCR projects encounter them.

The highest and most expensive level of accuracy attainable from commercial service bureaus is typically 99.995 percent, or 1 error in 20,000 characters of text (approximately six pages of this book). Such a level is often most easily achieved by having the text retyped manually rather than by having it processed automatically by OCR. Each page is processed twice, by different operators, and the results are compared automatically. Any discrepancies are resolved manually.

As a rule of thumb, OCR becomes less efficient than manual keying when its accuracy rate drops below 95 percent. Moreover, once the initial OCR pass is complete, costs tend to double with each halving of the accuracy rate. However, in a large digitization project, errors are usually non-uniformly distributed over pages: often 80 percent of errors come from 20 percent of the page images. It may be worthwhile to have the worst of the pages manually keyed and to perform OCR on the remainder.

Human intervention is often valuable for cleaning up both the image before OCR and, afterward, the text produced by OCR. The actual recognition part can be time-consuming—maybe one or two minutes per page—and it is useful to perform interactive preprocessing for a batch of pages, have them recognized offline, and return them to the batch for interactive cleanup. Careful attention to such practical details can make a great deal of difference in a large-scale project.

Interactive OCR involves six steps: image acquisition, cleanup, page analysis, recognition, checking, and saving.

Acquisition, cleanup, and page analysis

Images are acquired either by inputting them from a document scanner or by reading a file that contains predigitized images. In the former case, the document is placed on the scanner platen and the program produces a digitized image. Most digitization software can communicate with a wide variety of image acquisition devices. An OCR program may be able to scan a batch of several pages and let you work interactively on the other steps afterward. This is particularly useful if you have an automatic document feeder.

The cleanup stage applies image-processing operations to the image. For example, a despeckle filter cleans up isolated pixels or “pepper and salt” noise. It may be necessary to rotate the image by 90 or 180 degrees, or to automatically calculate a skew angle and deskew the image by rotating it back by that angle. Images may be converted from white-on-black to the standard black-on-white representation, and double-page spreads may be converted to single-image pages. These operations may be invoked manually or automatically. If you don't want to recognize certain parts of the image, or if it contains large artifacts—such as photocopied parts of the document's binding—you may need to remove them manually by selecting the unwanted area and clearing it.

The page analysis stage examines the layout of the page and determines which parts to process and in what order. Again, page analysis can be either manual or automatic. The result divides the page into blocks of different types, typically text blocks, which will be interpreted as ordinary running text, table blocks, which will be further processed to analyze the layout before reading each table cell, and picture blocks, which will be ignored in the character recognition stage. During page analysis, multicolumn text layouts are detected and are sorted into correct reading order.

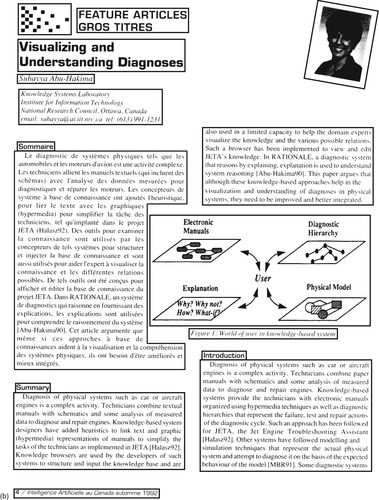

Figure 4.3a shows an example of a scanned document with regions that contain different types of data: text, two graphics, and a photographic image. In Figure 4.3b, bounding boxes have been drawn (manually in this case) around these regions. This particular layout is interesting because it contains a region—the large text block halfway down the left-hand column—that is clearly nonrectangular, and another region—the halftone photograph—that is tilted. Because layouts like this present significant challenges to automatic page analysis algorithms, many interactive OCR systems show users the result of automatic page analysis and offer the option of manually overriding it.

|

|

| Figure 4.3: (a) Document image containing different types of data; (b) the document image segmented into different regions. Copyright © 1992 Canadian Artificial Intelligence Magazine |

It is also useful to be able to manually set up a template that applies to a whole batch of pages. For example, you might define header and footer regions, and specify that each page contains a double column of text—perhaps even give the bounding boxes of the columns. Perhaps the page analysis process can be circumvented by specifying in advance that all pages contain single-column running text, without headers, footers, pictures, or tables. Finally, although word spacing is usually ignored, in some cases spaces may be significant—as in formatted computer programs.

Tables are particularly difficult for page analysis. For each table, the user may be able to specify interactively such things as whether the table has one line per entry or contains multiline cells, and whether the number of columns is the same throughout or some rows contain merged cells. As a last resort, it may be necessary for the user to specify every row and column manually.

Recognition

The recognition stage reads the characters on the page. This is the actual “OCR” part. One parameter that may need to be specified is the typeface (e.g., regular typeset text, fixed-width typewriter print, or dot-matrix characters). Another is the alphabet or character set, which is determined by the language used. Most OCR packages deal with only the Roman alphabet, although some accept Cyrillic, Greek, and Czech as well. Recognition of Arabic text, the various Indian scripts, or ideographic languages like Chinese and Korean calls for specialist software.

Furthermore, character-set variations occur even within the Roman alphabet. While English speakers are accustomed to the 26-letter alphabet, many languages do not employ all the letters—Māori, for example, uses only 15. Documents in German include an additional character, ß or

scharfes s, which is unique because, unlike all other German letters, it exists only in lowercase. (A recent change in the official definition of the German language has replaced some, but not all, occurrences of ß by

ss.) European languages use accents: the German umlaut (

ü); the French acute (

é), grave (

à), circumflex (

ô), and cedilla (

ç); the Spanish tilde (

ñ). Documents may, of course, be multilingual.

For certain document types it may help to create a new “language” to restrict the characters that can be recognized. For example, a particular set of documents may be all in uppercase, or consist of nothing but numbers and associated punctuation.

In some OCR systems, the recognition engine can be trained to attune it to the peculiarities of the documents being read. Training may be helpful if the text includes decorative fonts or special characters like mathematical symbols. It may also be useful for recognition of large batches of text (100 pages or more) in which the print quality is low.

For example, the letters in some particular character sequences may have bled or smudged together on the page so that they cannot be separated by the OCR system's segmentation mechanism. In typographical parlance they form a

ligature: a combination of two or three characters set as a single glyph—such as

fi,

fl, and

ffl in the font in which this book is printed. Although OCR systems recognize standard ligatures as a matter of course, printing occasionally contains unusual ligatures, as when particular sequences of two or three characters are systematically joined together. In these cases it may be helpful to train the system to recognize each combination as a single unit.

Training is accomplished by making the system process a page or two of text in a special training mode. When unrecognized characters are encountered, the user can enter them as new patterns. It may first be necessary to adjust the bounding box to include the whole pattern and exclude extraneous fragments of other characters. Recognition accuracy will improve if several examples of each pattern are supplied. When naming the pattern, its font properties (italic, bold, small capitals, subscript, superscript) may need to be specified along with the actual characters that comprise the pattern.

There is a limit to the amount of extra accuracy that training can achieve. OCR still does not perform well with more stylized typefaces, such as Gothic, that are significantly different from modern ones—and training may not help much.

Obviously, better results can be obtained if a language dictionary is used. It is far easier to distinguish letters like

o,

0,

O, and

Q in the context of the words in which they occur. Most OCR systems include predefined dictionaries and are able to use domain-specific ones containing technical terms, common names, abbreviations, product codes, and the like. Particular words may be constrained to particular styles of capitalization. Regular words may appear with or without an initial capital letter and may also be written in all capitals. Proper names must begin with a capital letter (and may be written in all capitals too). Some acronyms are always capitalized, while others may be capitalized in fixed but arbitrary ways.

Just as the language determines the basic alphabet, it may also preclude many letter combinations. Such information can greatly constrain the recognition process, and some OCR systems let users provide it.

Checking and saving

The next stage of OCR is manual checking. The output is displayed on the screen, with problems highlighted in color. One color may be reserved for unrecognized and uncertainly recognized characters, another for words that do not appear in the dictionary. Different display options can suppress some of this information. The original image is displayed too, perhaps with an auxiliary magnification window that zooms in on the region in question. An interactive dialog, similar to the spell-check mode of word processors, focuses on each error and allows the user to ignore this instance, ignore all instances, correct the word, or add it to the dictionary. Other options allow users to ignore words with digits and other nonalphabetic characters, ignore capitalization mismatches, normalize spacing around punctuation marks, and so on.

You may also want to edit the format of the recognized document, including font type, font size, character properties like italics and bold, margins, indentation, table operations, and so on. Ideally, general word-processor options will be offered within the OCR package, to save having to alternate between the OCR program and a word processor.

The final stage is to save the OCR result, usually to a file (alternatives include copying it to the clipboard or sending it by e-mail). Supported formats might include plain text, HTML, RTF, Microsoft Word, and PDF. There are many possible options. You may want to remove all formatting information before saving, or include the “uncertain character” highlighting in the saved document, or include pictures in the document. Other options control page size, font inclusion, and picture resolution. In addition, it may be necessary to save the original page image as well as the OCR text. In PDF format (described in Section 4.5), you can save the text and pictures only, or save the text under (or over) the page image, where the entire image is saved as a picture and the recognized text is superimposed upon it, or hidden underneath it. This hybrid format has the advantage of faithfully replicating the look of the original document—which can have useful legal implications. It also reduces the requirement for super-accurate OCR. Alternatively, you might want to save the output in a way that is basically textual, but with the image form substituted for the text of uncertainly recognized words.

Page handling

Let us return to the process of scanning the page images and consider some practical issues. Physically handling the pages is easiest if you can “disbind” the documents by cutting off their bindings (obviously, this destroys the source material and is only possible when spare copies exist). At the other extreme, originals cans be unique and fragile, and specialist handling is essential to prevent their destruction. For example, most books produced between 1850 and 1950 were printed on acid paper (paper made from wood pulp generated by an acidic process), and their life span is measured in decades—far shorter than earlier or later books. Toward the end of their lifetime they decay and begin to fall apart (see Chapter 9).

Sometimes the source material has already been collected on microfiche or microfilm, and the expense of manual paper handling can be avoided. Although microfilm cameras are capable of very high resolution, quality is compromised because an additional generation of reproduction is interposed; furthermore, the original microfilming may not have been done carefully enough to permit digitized images of sufficiently high quality for OCR. Even if the source material is not already in this form, microfilming may be the most effective and least damaging means of preparing content for digitization. It capitalizes on substantial institutional and vendor expertise, and as a side benefit the microfilm masters provide a stable long-term preservation format.

Generally the two most expensive parts of the whole process are handling the source material on paper, and the manual interactive processes of OCR. A balance must be struck. Perhaps it is worth the extra generation of reproduction that microfilm involves to reduce paper handling, at the expense of more labor-intensive OCR; perhaps not.

Microfiche is more difficult to work with than microfilm, because it is harder to reposition automatically from one page to the next. Moreover, it is often produced from an initial microfilm, in which case one generation of reproduction can be eliminated by digitizing directly from the film.

Image digitization may involve other manual processes apart from paper handling. Best results may be obtained by manually adjusting settings like contrast and lighting individually for each page or group of pages. The images may have to be manually deskewed. In some cases, pictures and illustrations will need to be copied from the digitized images and pasted into other files.

Planning an image digitization project

Any significant image digitization project will normally be outsourced. The cost varies greatly with the size of the job and the desired quality. For a small simple job, with material in a form that can easily be handled (e.g., books whose bindings can be removed), text that is clear and problem-free, and with few images and tables that need to be handled manually, you could expect to pay

• 50¢/page for fully automated OCR processing

• $1.25/page for 99.9 percent quality (3 incorrect characters per page)

• $2/page for 99.95 percent quality (1.5 incorrect characters per page)

• $4/page for 99.995 percent quality (1 incorrect character every 6 pages)

for scanning and OCR, with a discount for large quantities. If difficulties arise, costs increase to many dollars per page. Using a third-party service bureau eliminates the need for you to become an expert in state-of-the-art image digitization and OCR. However, you will need to set standards for the project and to ensure that they are adhered to.

Most of the factors that affect digitization can be evaluated only by practical tests. You should arrange for samples to be scanned and OCR'd by competing companies and then compare the results. For practical reasons (because it is expensive or infeasible to ship valuable source materials around), the scanning and OCR stages may be contracted out separately. Once scanned, images can be transmitted electronically to potential OCR vendors for evaluation. You should probably obtain several different scanned samples—at different resolutions, different numbers of gray levels, from different sources, such as microfilm and paper—to give OCR vendors a range of different conditions. You should select sample images that span the range of challenges that your material presents.

Quality control is clearly a central concern in any image digitization project. An obvious quality-control approach is to load the images into your system as soon as they arrive from the vendor and to check them for acceptable clarity and skew. Images that are rejected are returned to the vendor for rescanning. However, this strategy is time-consuming and may not provide sufficiently timely feedback to allow the vendor to correct systematic problems. It may be more effective to decouple yourself from the vendor by batching the work. Quality can then be controlled on a batch-by-batch basis, where you review a statistically determined sample of the images and accept or reject whole batches.

Inside an OCR shop

Because it's labor-intensive, OCR work is often outsourced to developing countries like India, the Philippines, and Romania. Ten years ago, one of the authors visited an OCR shop in a small two-room unit on the ground floor of a high-rise building in a country town in Romania. It contained about a dozen terminals, and every day from 7:00 am through 10:30 pm the terminals were occupied by operators who were clearly working with intense concentration. There were two shifts a day, with about a dozen people in each shift and two supervisors—25 employees in all.

Most of the workers were university students who were delighted to have this kind of employment—it compared well with the alternatives available in their town. Pay was by results, not by the hour—and this was quite evident as soon as you walked into the shop and saw how hard people worked. They regarded their shift at the terminal as an opportunity to earn money, and they made the most of it.

This firm uses two different commercial OCR programs. One is better for processing good copy, has a nicer user interface, and makes it easy to create and modify custom dictionaries. The other is preferred for tables and forms; it has a larger character set with many unusual alphabets (e.g., Cyrillic). The firm does not necessarily use the latest version of these programs; sometimes earlier versions have special advantages.

The principal output formats are Microsoft Word and HTML. Again, the latest release of Word is not necessarily the one that is used. A standalone program is used for converting Word documents to HTML because it greatly outperforms Word's built-in facility. The people in the firm are expert at decompiling software and patching it. For example, they were able to fix some errors in the conversion program that affected how nonstandard character sets are handled. Most HTML is edited by hand, although they use a WYSIWYG (What You See Is What You Get) HTML editor for some of the work.

A large part of the work involves writing scripts or macros to perform tasks semiautomatically. Extensive use is made of Visual Basic for Applications (VBA). Although Photoshop is used for image work, they also employ a scriptable image processor for repetitive operations. MySQL, an open-source SQL implementation, is used for forms databases. Java is used for animation and for implementing Web-based questionnaires.

These people have a wealth of detailed knowledge about the operation of different versions of the software packages they use, and they constantly review and reassess the situation as new releases emerge. But perhaps their chief asset is their set of in-house procedures for dividing up work, monitoring its progress, and checking the quality of the result. They claim an accuracy of around 99.99 percent for characters, or 99.95 percent for words—an error rate of 1 word in 2,000. This is achieved by processing every document twice, with different operators, and comparing the result. In 1999, their throughput was around 50,000 pages/month, although the firm's capability is flexible and can be expanded rapidly on demand. Basic charges for ordinary work are around $1 per page (give or take a factor of two), but vary greatly depending on the difficulty of the job.

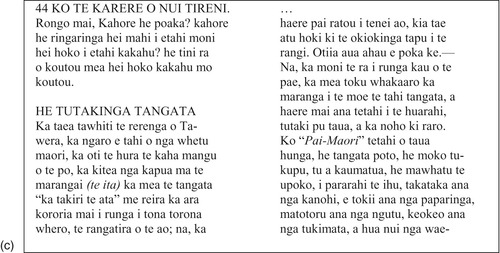

An example project

The New Zealand Digital Library undertook a project to put a collection of historical New Zealand Māori newspapers on the Web, in fully indexed and searchable form. There were about 20,000 original images, most of them double-page spreads. Figure 4.4 shows a sample image, an enlarged version of the beginning, and some of the text captured using OCR. The particular image shown was difficult to work with because some areas are smudged by water-staining. Fortunately, not all the images were so poor. As you can see by attempting to decipher it yourself, high accuracy requires a good knowledge of the language in which the document is written.

The first task was to scan the images into digital form. Gathering together paper copies of the newspapers would have been a massive undertaking, since the collection comprises 40 different newspaper titles that are held in a number of libraries and collections scattered throughout the country. Fortunately, New Zealand's national archive library had previously produced a microfiche containing all the newspapers for the purposes of historical research. The library provided us with access not just to the microfiche result, but also to the original 35-mm film master from which it had been produced. This simultaneously reduced the cost of scanning and eliminated one generation of reproduction. The photographic images were of excellent quality because they had been produced specifically to provide microfiche access to the newspapers.

Once the image source has been settled on, the quality of scanning depends on the scanning resolution and the number of gray levels or colors. These factors also determine how much storage is required for the information. After some testing, it was determined that a resolution of approximately 300 dpi on the original printed newspaper was adequate for the OCR process. Higher resolutions yielded no noticeable improvement in recognition accuracy. OCR results from a good black-and-white image were found to be as accurate as those from a grayscale one. Adapting the threshold to each image, or each batch of images, produced a black-and-white image of sufficient quality for the OCR work. However, grayscale images were often more satisfactory and pleasing for the human reader.

Following these tests, the entire collection was scanned by a commercial organization. Because the images were supplied on 35-mm film, the scanning could be automated and proceeded reasonably quickly. Both black-and-white and grayscale images were generated at the same time to save costs, although it was still not clear whether both forms would be used. The black-and-white images for the entire collection were returned on eight CD-ROMs; the grayscale images occupied 90 CD-ROMs.

Once the images had been scanned, the OCR process began. First attempts used Omnipage, a widely used proprietary OCR package. But a problem quickly arose: the Omnipage software is language-based and insists on utilizing one of its known languages to assist the recognition process. Because the source material was in the Māori language, additional errors were introduced when the text was automatically “corrected” to more closely resemble English. Although other language versions of the software were available, Māori was not among them, and it proved impossible to disable the language-dependent correction mechanism. In previous versions of Omnipage one can subvert the language-dependent correction by simply deleting the dictionary file (and the Romanian organization described above used an obsolete version for precisely this reason.) The result was that recognition accuracies of not much more than 95 percent were achieved at the character level. This meant a high incidence of word errors in a single newspaper page, and manual correction of the Māori text proved extremely time-consuming.

A number of alternative software packages and services were considered. For example, a U.S. firm offered an effective software package for around $10,000 and demonstrated its use on some sample pages with impressive results. The same firm offers a bureau service and was prepared to undertake the basic OCR for only $0.16 per page (plus a $500 setup fee). Unfortunately, this did not include verification, which had been identified as the most critical and time-consuming part of the process—partly because of the Māori language material.

Eventually, we located an inexpensive software package that had high accuracy and allowed for the provision of a tailor-made language dictionary. It was decided that the OCR process would be done in house, and this proved to be an excellent decision; however, it is heavily conditioned on the unusual language in which the collection is written and the local availability of fluent Māori speakers.

A parallel task to OCR was to segment the double-page spreads into single pages for the purposes of display, in some cases correcting for skew and page-border artifacts. Software was produced for segmentation and skew detection, and a semiautomated procedure was used to display segmented and deskewed pages for approval by a human operator. The result of these labors, turned in to a digital library, is described in Section 8.1.

4.3. Web Documents: HTML and XML

HTML, the Hypertext Markup Language, is the underlying document format of the World Wide Web, which makes it an important baseline for interactive viewing. Like all major, long-standing document formats, HTML has undergone growing pains, and its history reflects the anarchy that has characterized the Web's evolution. Since HTML's conception in 1989, its development has been largely driven by software vendors who compete for the Web browser market by inventing new features to make their product distinctive—the so called “browser wars.”

Many of the introduced features played on people's desires to exert more control over how their documents appear. Who gets to control font attributes like typeface and size—writer or reader? If you think this is a trivial issue, imagine what it means for the visually disabled. Allowing authors to dictate details of how their documents appear conflicts sharply with the original vision for HTML, which divorced document structure from presentation and left decisions about rendering documents to the browser itself. It makes the pages less predictable because viewing platforms differ in the support they provide. For example, HTML text can be marked up as “emphasized,” and while it is common practice to render such items in italics, there is no requirement to follow this convention: boldface would convey the same intention.

Out of the maelstrom, a series of HTML standards has emerged, although HTML's evolution continues—indeed, a major new revision, HTML 5, is under development although the warring parties (with vested interests) still clash: on this occasion a key controversy centers on whether or not native support for patent-free audio and video formats should be included in the standard.

The birth of HTML did not occur in a vacuum. Dipping further back in time, during the 1970s and 1980s, a generalized system for structural markup was developed called the Standard Generalized Markup Language (SGML); it was ratified as an ISO international standard in 1986. SGML is not a markup language but a metalanguage for describing markup formats. The original HTML format was expressed using SGML, and large organizations like government offices and the military also made significant use of it; however, SGML is rather intricate, and it has proven difficult to develop flexible software tools for the fully blown standard. This fact was the catalyst for the

extensible markup language, XML.

XML is a simplified version of SGML designed specifically for interoperability over the Web. Informally speaking, it is a dialect of SGML (whereas HTML is an example of a markup language that SGML can describe). XML provides a flexible way of characterizing document structure and metadata, making it well suited to digital libraries. It has achieved widespread use in a very short stretch of time.

XML has strict syntactic rules that prevent it from describing ancient forms of HTML exactly. The differences expose parts of the early specifications that were loosely formed—ones that cause difficulty when parsing and processing documents. However, with a little trickery—for example, judicious placement of white space—it is possible to generate an XML specification of an extremely close approximation to HTML. Put another way, you can take advantage of HTML's sloppy specification to produce files that are valid XML. Such files have twin virtues: they can be viewed in any Web browser, and they can be parsed and processed by XML tools. The idea is formalized in HTML 5, which includes parallel specifications: one for “classic” HTML and another, XHTML, which is XML-compliant.

We start this section by describing the development of markup languages and their relation to stylesheet languages. Then we describe the basics of HTML and explain how it can be used in a digital library. Following that, we describe the XML metalanguage and again discuss its role in digital libraries. Section 4.4 covers stylesheet languages for both HTML and XML.

Markup and stylesheet languages

Web culture has advanced at an extraordinary pace, creating a melee of incremental—and at times conflicting—additions and revisions to HTML, XML, and related standards. Figure 4.5 summarizes the main developments.

Although it has been retrospectively fitted with XML descriptions, HTML was created before XML was conceived and drew on the more general expressive capabilities of SGML. It was also forged in the heat of the browser wars, in which Web browsers sprouted a proliferation of innovative nonstandard features that vendors thought would make their products more appealing. As a result, browsers became forgiving: they process files that flagrantly violate SGML syntax. One example is tag scope overlap—writing <

i>

one <

b>

two </

i>

three </

b> to produce

one

twothree—despite SGML's requirement that tags be strictly nested. During subsequent attempts at standardization, more tags were added that control typeface and layout, features deliberately excluded from HTML's original design.

The notion of

style sheets was introduced to resolve the conflict between presentation and structure by moving formatting and layout specifications to a separate file. Style sheets purify the HTML markup to reflect, once again, nothing but document structure. Different documents can share a uniform appearance by adopting the same style sheet. Equally, different style sheets can be associated with the same document, for instance defining one for on-screen viewing and another for printing Style sheets can specify a sequence—a cascade—of inherited stylistic properties and are dubbed

cascading style sheets.

The first specification of cascading style sheets was in 1996, and this was quickly followed by an expanded backward-compatible version two years later. Style sheets can be adapted to different media by including formatting commands that are grouped together and associated with a given medium—screen, print, projector, handheld device, and so on. Guided by the user (or otherwise), applications that process the document use the relevant set of style commands. A Web browser might choose

screen for online display but switch to

print when rendering the document in PostScript.

Modern versions of HTML promote the use of style sheets. Moreover, they encourage them by officially deprecating formatting tags and other elements that affect presentation rather than structure. This is accomplished through three subcategories to the standard.

Strict HTML expresses layout exclusively through style sheets: frameset commands and all deprecated tags and elements listed in the standard are excluded. In

transitional HTML, style sheets are the principal way of specifying layout, but to provide compatibility with older browsers deprecated commands are permitted, although framesets are prohibited.

Frameset HTML permits frameset commands and deprecated elements. Modern HTML files declare their subcategory at the start of the document. The format also adds improved support for multidirectional text (not just left to right) and enhancements for improved access by people with disabilities.

An HTML subset called XHTML has been defined that obeys the stricter syntactic rules imposed by the XML metalanguage. For instance, tags in XML are case sensitive, so XHTML tags and attributes are defined to be lowercase. Attributes within a tag must be enclosed in quotes. Each opening tag must be balanced by a corresponding closing one (there are also single tags that combine opening and closing, with their own special syntax).

The power and flexibility of XML are further increased by related standards. Three are given in Figure 4.5 (there are many others). The

extensible stylesheet language XSL described in Section 4.4 represents a more sophisticated approach than cascading style sheets: it can also transform data. The

XML linking language XLink provides a more powerful method for connecting resources than HTML hyperlinks: it has bidirectional links, can link more than two entities, and associates metadata with links. Finally, XML Schema provides a rich mechanism for combining components and controlling the overall structure, attributes, and data types used in a document.

From a technical standpoint, it is easier to work with XML and its siblings than HTML because they conform to a strictly defined syntax and are therefore easier to parse. In reality, however, digital libraries have to handle legacy material gracefully. Today's browsers cope remarkably well with the wide range of HTML files: they take backward compatibility to truly impressive levels. To help promote standardization, an open source software utility called HTML Tidy has been developed that converts older formats. The process is largely automatic, but human intervention may be required if files deviate radically from recognized norms.

Basic HTML

Modern markup languages use words enclosed in angle brackets as tags to annotate text. For example, <

title>

A really exciting story</

title> defines the title element of an HTML document. In HTML, tag names are case insensitive—<

Title> is the same as <

title>. For each tag, the language defines a “closing” version, which gives the tag name preceded by a slash character (/). However, closing tags can be omitted in certain situations—a practice that some decry as impure while others endorse as legitimate shorthand. For example, <

p> is used to mark up paragraphs, and subsequent <

p>s are assumed to automatically end the previous paragraph—no intervening </

p> is necessary. The shortcut is possible because nesting a paragraph within a paragraph—the only other plausible interpretation on encountering the second <

p>—is invalid in HTML.

Opening tags can include a list of qualifiers known as

attributes. These have the form

name="value". For example, <

img src="gsdl.gif" width="537" height="17"> specifies an image with source file name

gsdl.gif and dimensions 537 × 17 pixels.

Because the language uses characters such as <, >, and " as special markers, a way is needed to display these characters literally. In HTML these characters are represented as special forms called

entities and given names like &

lt; for “less than” (<) and &

gt; for “greater than” (>). This convention makes ampersand (&) into a special character, which is displayed by &

amp; when it appears literally in documents. The semicolon needs no such treatment because its literal use and its use as a terminator are syntactically distinct. The same kind of special form can be used to specify Unicode characters beyond the ASCII range, such as &

egrave; for

è.

Figure 4.6 shows a sample page that illustrates several parts of HTML, along with a snapshot of how it is rendered by a Web browser. It contains “typical” code that you find on the Web, rather than exemplary HTML. Some attributes miss out double quotes (such as

align and

valign used in some of the table elements), and not all the elements stipulate all the attributes they should (e.g., two of the <

img> tags are missing their

alt attribute through which an alternative text description is given). The example would not even pass the test for transitional HTML, let alone strict HTML; however, as Figure 4.6b shows, the Web browser renders it just fine.

|

|

| Figure 4.6: |

HTML documents are divided into a header and a body. The header gives global information: the title of the document, the character encoding scheme, any metadata. The <

meta> tag is used in Figure 4.6a to acknowledge the New Zealand Digital Library Project as the document's creator.

Creator imitates the Dublin Core metadata element (see Section 6.2) that is used to represent the name of the entity responsible for generating a document, be it a person, organization, or software application; however, there is no requirement in HTML to conform to such standards. Following the header is a comment and a command that sets the background to a Polynesian motif.

This particular page is laid out as two tables. The first controls the main layout. The second, nested within it, lays out the poem and the image of a greenstone pendant. The tags <

tr> and <

td> are used to mark table rows and cells, respectively. The list item <

li> near the end illustrates various special characters. Most take the &

…; form, but the last two ( ; and #) do not need to be escaped because their normal meaning is syntactically unambiguous. To generate the letter

a with a line above (called a