Cryptography Uses and Firewalls

CHAPTER 16

NOW THAT WE HAVE COVERED NETWORK SECURITY and some of the basics of cryptography as ways to defend against the interception of information as it transits from point to point, or while it is stored on host platforms, laptops, or even smart phones, we will turn our attention to some other uses of cryptography. We will also elaborate further on how virtual private networks (VPNs) operate, and we will then cover the vanguard of corporate infrastructure: the firewall, including firewall implementations, configuration, management, and architecture.

Chapter 16 Topics

This chapter:

• Discusses certificates (such as X.509) and certificate authorities.

• Presents internal aspects of IPSec.

• Describes various firewall implementations.

• Covers screened subnet firewall architecture.

Chapter 16 Goals

When you finish this chapter, you should:

Understand some of the ways that cryptography is used.

Become familiar with how virtual private networks (VPNs) work.

Know how routers and hosts implement firewalls, and what they are capable of at the various layers of the network protocol stack where they are implemented.

Know the differences between filtering and firewalls.

Understand some characteristics of common firewall architectures.

16.1 Cryptography in Use

Earlier, we introduced cryptography and network security protocols such as IPSec as ways of defending against interception and the unauthorized release of message contents, a security breach in which someone obtains information he or she is not authorized to have. These are complicated topics, so we will return to them incrementally. With a basic understanding of how encryption works, in this chapter we turn to the ways cryptography is used in practice to secure information assets. Cryptography can appear at many layers of the network stack, depending on what information must be secured and how much of it. At the network layer, we can use IPSec, as mentioned before. At the transport layer, we will discuss Transport Layer Security (TLS), the successor to the Secure Sockets Layer (SSL) protocol, and at the application layer, we will present S/MIME. Before we elaborate on those, however, we need to present more information about digital certificates. Recall that digital certificates and certificate authorities are frequently used to authenticate the entities involved in a communication session and to help securely generate and exchange the symmetric session keys used to encrypt the communication channel for the life of a session.

16.1.1 Who Knows Whom: X.509 Certificates

When someone sends you a message, how do you know that the “someone” is who he or she purports to be? Suppose Mike has stolen Dan’s email login and sends you a message, apparently from Dan, asking for the confidential company document you and Dan were working on because Dan supposedly lost his copy. This question is at the heart of why people use digital certificates. X.509 is a standard issued by the International Telecommunication Union (ITU) that defines a mechanism for establishing trust relationships in public key (asymmetric) cryptography (which we will cover in more detail later). Although there are other standards, X.509 is among the most widely adopted, and its importance lies in its use for digital certificates. We introduced the idea of digital certificates previously when we discussed the web of trust and network security, but now we will expand on the topic.

In Focus

There are two broad families, public key and private key cryptography, which we will cover in the next chapter. For now, realize that public key cryptography uses a pair of mathematically related keys: the public key can be distributed to anyone, while the other part (the private key) is kept secret and is needed to complete the “puzzle.” With private key cryptography, by contrast, the full secret is shared between the two parties, and we must therefore trust that each will keep it safe and secure.

A digital certificate is a verification of an entity by a trusted third party called a certificate authority (CA). It asserts that the public key bound to the certificate belongs to the entity named in the certificate. This bound identity, along with the public key, is digitally signed by the CA with its private key. The CA’s public key is bound to a special certificate, called a root certificate, which is distributed to anyone who needs to validate the digital signature.
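To make the sign-then-verify relationship above concrete, here is a minimal Python sketch. It is a toy, not real PKI: the CA’s “private key” is a textbook-sized RSA exponent (n = 3233), the certificate is a plain dictionary rather than an X.509 structure, and the names `ca_issue` and `verify` and the subject values are hypothetical. It only shows that the CA signs a hash of the identity and public key with its private key, and that anyone holding the root certificate’s public key can check that signature.

```python
import hashlib

# Toy RSA key pair for a hypothetical CA, textbook-sized and NOT secure:
# p = 61, q = 53, n = 3233, phi(n) = 3120, e = 17, d = 2753 (17 * 2753 = 15 * 3120 + 1).
CA_N, CA_E, CA_D = 3233, 17, 2753


def digest(subject: str, public_key: str, n: int) -> int:
    """Hash the bound identity and public key, reduced modulo n to fit the toy key."""
    h = hashlib.sha256(f"{subject}|{public_key}".encode()).digest()
    return int.from_bytes(h, "big") % n


def ca_issue(subject: str, public_key: str) -> dict:
    """The CA signs (subject, public key) with its private exponent CA_D."""
    signature = pow(digest(subject, public_key, CA_N), CA_D, CA_N)
    return {"subject": subject, "public_key": public_key, "signature": signature}


def verify(cert: dict, root_public_key=(CA_N, CA_E)) -> bool:
    """Anyone holding the root certificate's public key (n, e) can check the signature."""
    n, e = root_public_key
    return pow(cert["signature"], e, n) == digest(cert["subject"], cert["public_key"], n)


cert = ca_issue("dan@example.com", "dan-public-key")
print(verify(cert))  # True: the CA vouches that this key belongs to Dan
forged = dict(cert, signature=(cert["signature"] + 1) % CA_N)
print(verify(forged))  # False: the altered signature no longer matches
```

With a real key size, changing the subject or the public key would also break verification; with this tiny modulus, accidental hash collisions are possible, which is one reason real CAs use keys thousands of bits long.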

In addition to a CA and a root certificate, the infrastructure for handling digital certificates, often referred to as the public key infrastructure (PKI), must provide some way to revoke a certificate that has been compromised. Revocation is accomplished using a certificate revocation list (CRL), a special list issued by a CA that identifies the certificates it has digitally signed that are no longer to be trusted. A certificate may be revoked, for example, if its private key is compromised or if it was issued to a party later found to be fraudulent. Certificates also have a limited validity period (a “shelf-life”); once a certificate expires, it is no longer trusted, whether or not it appears on a CRL.
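The two checks described above, revocation and expiration, can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: the `Certificate` class and `check_certificate` function are hypothetical, the CRL is modeled as a set of serial numbers, and the CA’s signature on the certificate is assumed to have been verified already.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Certificate:
    serial: int
    subject: str
    not_before: datetime
    not_after: datetime


def check_certificate(cert: Certificate, crl_serials: set, now: datetime) -> tuple:
    """Return (trusted, reason); assumes the CA signature was already verified."""
    if not (cert.not_before <= now <= cert.not_after):
        return False, "outside validity period"
    if cert.serial in crl_serials:
        return False, "revoked (listed on the CA's CRL)"
    return True, "ok"


crl = {1002}  # hypothetical serial numbers published on the CA's CRL
now = datetime(2024, 6, 1, tzinfo=timezone.utc)
start = datetime(2024, 1, 1, tzinfo=timezone.utc)
end = datetime(2025, 1, 1, tzinfo=timezone.utc)
good = Certificate(1001, "dan@example.com", start, end)
revoked = Certificate(1002, "mike@example.com", start, end)
expired = Certificate(1003, "old@example.com",
                      datetime(2022, 1, 1, tzinfo=timezone.utc),
                      datetime(2023, 1, 1, tzinfo=timezone.utc))
for c in (good, revoked, expired):
    print(c.subject, check_certificate(c, crl, now))
```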

In Focus

Microsoft Windows has a set of wizards that facilitate installing certificates from a provider such as VeriSign or Comodo and importing them into applications such as Outlook (email). However, organizations such as Carnegie Mellon University can also set up a “sort of private” CA. The ultimate question is: How much trust does your recipient need to place in your certificate? If we want to sign a document we pass to a colleague, a CMU-managed (or other free, trusted intermediary) S/MIME certificate may be sufficient, but if we sell products in the worldwide market to consumers who do not know us at all, we may want to invest in commercial certificates from VeriSign, Wisekey, or another well-known commercial CA.

16.1.2 IPSec Implementation

As discussed in the network security chapter, IPSec is fundamental to the security paradigm of IPv6. Through the Authentication Header (AH) (RFC 4302) and Encapsulating Security Payload (ESP) (RFC 2406) standards, it provides multiple security advantages to IP networks, including “access control, connectionless integrity, data origin authentication, protection against replays (a form of partial sequence integrity), confidentiality (encryption), and limited traffic flow confidentiality” (RFC 2410) [1].
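The “protection against replays” listed above is typically implemented with a per-packet sequence number and a sliding window at the receiver. The following minimal Python sketch assumes a 64-packet window and ignores 32-bit wraparound; the `ReplayWindow` class is hypothetical. In a real implementation, the window is updated only after the packet’s integrity check has succeeded.

```python
class ReplayWindow:
    """Sliding anti-replay window over sequence numbers (simplified sketch)."""

    def __init__(self, size: int = 64):
        self.size = size
        self.highest = 0   # highest sequence number accepted so far
        self.bitmap = 0    # bit i set => sequence number (highest - i) already seen

    def accept(self, seq: int) -> bool:
        if seq == 0:
            return False                      # sequence numbers start at 1
        if seq > self.highest:                # newer than anything seen: slide the window
            shift = seq - self.highest
            self.bitmap = ((self.bitmap << shift) | 1) & ((1 << self.size) - 1)
            self.highest = seq
            return True
        offset = self.highest - seq
        if offset >= self.size:
            return False                      # too old: left of the window
        if self.bitmap & (1 << offset):
            return False                      # duplicate: a replay
        self.bitmap |= 1 << offset            # late but new: mark it as seen
        return True


w = ReplayWindow()
print([w.accept(s) for s in (1, 3, 2, 2, 100, 36, 37)])
# [True, True, True, False, True, False, True]
```

Packet 2 is accepted once (out of order but new) and rejected the second time. After packet 100 arrives, packet 36 falls outside the 64-packet window and is dropped, even though it was never seen.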

The security architecture document for IPSec is RFC 2401. Both the AH and ESP standards can be used in one of two modes, transport or tunnel. Transport mode is designed to secure communications between a host and another host or gateway, whereas tunnel mode is commonly used to connect two gateways together. In transport mode, the AH or ESP header is inserted after the IP header, providing protection for the data in the packet. In tunnel mode, the entire IP packet to be secured is encapsulated in a new IP packet with AH or ESP headers, providing a means to protect the entire original packet, including the static and non-static fields in the original header.
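The difference between the two modes is easiest to see by laying out the resulting packets. The Python sketch below is illustrative only: headers are plain strings, the ESP trailer and integrity check value are omitted, and the function name `esp_encapsulate` and the gateway names are hypothetical. It returns the packet layout and the parts that ESP would encrypt in each mode.

```python
def esp_encapsulate(mode, ip_header, payload, outer_header=None):
    """Return (packet_layout, encrypted_parts) for a simplified ESP packet.

    The ESP trailer and integrity check value are omitted for brevity.
    """
    if mode == "transport":
        # The original IP header stays in front; only the payload is protected.
        return [ip_header, "ESP", payload], [payload]
    if mode == "tunnel":
        if outer_header is None:
            raise ValueError("tunnel mode needs an outer (gateway-to-gateway) IP header")
        # The whole original packet becomes the protected payload of a new packet.
        return [outer_header, "ESP", ip_header, payload], [ip_header, payload]
    raise ValueError(f"unknown mode: {mode}")


inner = "IP(src=10.1.1.5, dst=10.2.2.7)"
outer = "IP(src=gw-a, dst=gw-b)"
print(esp_encapsulate("transport", inner, "TCP+data"))
print(esp_encapsulate("tunnel", inner, "TCP+data", outer))
```

In tunnel mode, an observer on the path sees only the gateway addresses in the outer header; the original source and destination travel inside the encrypted portion.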

Whether AH or ESP provides the protection, IPSec requires Security Associations (SAs) that specify how the communicating entities will secure the communications between them. An SA is one-way, so a two-way conversation needs an SA for each direction. Each SA is identified by a 32-bit security parameter index (SPI), the destination address for the traffic, and an indicator of whether ESP or AH is used. Together, these three values uniquely identify an SA and serve as the index into the security association database (SAD) when inbound packets are processed; the security policy database (SPD), which is consulted for all traffic, determines for outbound packets which SA, if any, must be applied. Minimally, each SAD record must include the authentication mechanism, cryptographic algorithm, algorithm mode, key length, and initialization vector (IV).
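A security association database can be pictured as a lookup table keyed by the (SPI, destination, protocol) triple described above. The Python sketch below is a simplified model: the class names, addresses, SPI values, and the placeholder IV are hypothetical, and a real SAD also holds keys, lifetimes, and sequence-number state.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SecurityAssociation:
    spi: int              # 32-bit Security Parameter Index
    destination: str      # destination IP address
    protocol: str         # "AH" or "ESP"
    auth_algorithm: str
    cipher: str
    cipher_mode: str
    key_length_bits: int
    iv: bytes


class SecurityAssociationDatabase:
    def __init__(self) -> None:
        self._entries = {}

    def add(self, sa: SecurityAssociation) -> None:
        if not 0 <= sa.spi < 2**32:
            raise ValueError("SPI must fit in 32 bits")
        if sa.protocol not in ("AH", "ESP"):
            raise ValueError("protocol must be AH or ESP")
        # The (SPI, destination, protocol) triple is the lookup key.
        self._entries[(sa.spi, sa.destination, sa.protocol)] = sa

    def lookup(self, spi: int, destination: str, protocol: str) -> Optional[SecurityAssociation]:
        return self._entries.get((spi, destination, protocol))


sad = SecurityAssociationDatabase()
placeholder_iv = b"\x00" * 8  # placeholder only; real IVs must be unpredictable
# One SA per direction: traffic to 192.0.2.20 and traffic back to 192.0.2.10.
sad.add(SecurityAssociation(0x1001, "192.0.2.20", "ESP", "HMAC-SHA1", "3DES", "CBC", 192, placeholder_iv))
sad.add(SecurityAssociation(0x2002, "192.0.2.10", "ESP", "HMAC-SHA1", "3DES", "CBC", 192, placeholder_iv))
print(sad.lookup(0x1001, "192.0.2.20", "ESP").cipher)  # 3DES
```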

In Focus

Despite its name, an initialization vector has nothing to do with the geometric notion of a vector as a value with magnitude and direction. In the context of IPSec, an IV is a block of bits that the cipher combines with the data so that the same encryption key produces a unique output for each message, without having to regenerate the keys.
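The effect of an IV can be demonstrated with a few lines of Python. This is a stand-in, not an IPSec algorithm: ESP historically used ciphers such as DES or 3DES in CBC mode, whereas this sketch builds a counter-style keystream from HMAC-SHA256 (keyed with the session key and fed the IV plus a counter) because the standard library has no block cipher. The function names are hypothetical. The point is only that one unchanged key with two different IVs turns the same plaintext into different ciphertexts.

```python
import hashlib
import hmac


def keystream(key: bytes, iv: bytes, length: int) -> bytes:
    """Counter-style keystream: HMAC-SHA256(key, iv || counter) blocks."""
    out = b""
    counter = 0
    while len(out) < length:
        out += hmac.new(key, iv + counter.to_bytes(4, "big"), hashlib.sha256).digest()
        counter += 1
    return out[:length]


def xor_crypt(key: bytes, iv: bytes, data: bytes) -> bytes:
    """Encrypts or decrypts (XOR with the same keystream is its own inverse)."""
    return bytes(d ^ k for d, k in zip(data, keystream(key, iv, len(data))))


key = b"k" * 32  # one shared key, never regenerated in this demo
message = b"transfer $100 to account 42"
c1 = xor_crypt(key, b"\x00" * 16, message)  # first IV
c2 = xor_crypt(key, b"\x01" * 16, message)  # second IV
print(c1 != c2)  # same key, same plaintext, different ciphertext
print(xor_crypt(key, b"\x01" * 16, c2) == message)  # True
```

In a counter-style construction like this one, reusing an IV with the same key would expose the XOR of the two plaintexts, which is why IVs must not repeat under one key.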

Whereas ESP provides data confidentiality through payload encryption, AH provides only data integrity and data origin authentication (by computing an HMAC, a Hash-based Message Authentication Code, over the packet) and protection against replay attacks. The algorithms minimally specified for IPSec include DES, HMAC-MD5, and HMAC-SHA, but many implementations also support other cryptographic algorithms such as 3DES and Blowfish. Although it is possible to create all the SAs manually, doing so for a large enterprise would require a lot of administrative overhead. To manage the SA database dynamically, IPSec uses the Internet Key Exchange (IKE) protocol specified in RFC 2409.
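The HMAC computation described above can be sketched with Python’s standard `hmac` module. The sketch assumes the HMAC-SHA-1-96 variant (the full HMAC-SHA-1 output truncated to 96 bits, as specified for AH and ESP in RFC 2404) and treats the packet as an opaque byte string. Real AH zeroes mutable IP header fields such as the TTL before computing the value, which is not modeled here. The key and packet contents are hypothetical.

```python
import hashlib
import hmac

ICV_LENGTH = 12  # HMAC-SHA-1-96 keeps only the first 96 bits (12 bytes) of the HMAC


def compute_icv(key: bytes, packet: bytes) -> bytes:
    """Integrity Check Value over the packet bytes (mutable IP fields assumed zeroed)."""
    return hmac.new(key, packet, hashlib.sha1).digest()[:ICV_LENGTH]


def verify_icv(key: bytes, packet: bytes, icv: bytes) -> bool:
    # compare_digest avoids leaking timing information during the comparison.
    return hmac.compare_digest(compute_icv(key, packet), icv)


sa_key = bytes(range(20))                    # hypothetical key taken from the SA
packet = b"ip-header|ah-header|payload"      # stand-in for real packet bytes
icv = compute_icv(sa_key, packet)
print(verify_icv(sa_key, packet, icv))                                   # True
print(verify_icv(sa_key, packet.replace(b"payload", b"PAYLOAD"), icv))   # tampered packet
```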

As stated in RFC 2409, IKE is a hybrid protocol designed “to negotiate, and provide authenticated keying material for, security associations in a protected manner” [2]. IKE accomplishes this by using parts of two key exchange frameworks, Oakley and SKEME, together with the Internet Security Association and Key Management Protocol (ISAKMP), a generic key exchange and authentication framework defined in RFC 2408.

16.1.3 IPSec Example

An in-depth examination of cryptographic uses is beyond the scope of this chapter, but it is important to understand the basics of how two entities can negotiate a secure connection, as that is fundamental to most encrypted communications we encounter. IKE defines eight different exchanges that can be used to authenticate the peers and establish keys, but we will examine the single required one: authentication with a shared secret key in main mode. In this exchange, two entities we will call Alice and Bob already share a secret key. This may be the case, for example, with an employee working from home who needs to establish a secure connection to the company network.

Although this protocol has some known issues, it still serves as an example of how key exchange can be accomplished. It begins with Alice sending Bob a message listing the combinations of cryptographic parameters she supports. These include encryption algorithms (DES, 3DES, IDEA), hash algorithms (SHA, MD5), authentication methods (pre-shared keys, public key encryption, etc.), and Diffie-Hellman parameters (including a prime and a primitive root). Bob receives this message, chooses from the sets Alice offered, and responds with his selection. Once Alice receives Bob’s selection, she sends Bob her computed Diffie-Hellman value and a random value called a nonce.
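The Diffie-Hellman values that Alice and Bob exchange can be computed as in the following Python sketch. It uses the classic textbook parameters (prime 23, primitive root 5) and fixed private exponents so that the arithmetic is easy to follow. These numbers are far too small to be secure (real IKE groups use primes of 768 bits or more), and real private exponents are chosen at random.

```python
P = 23  # toy prime modulus (real IKE groups use primes of 768 bits or more)
G = 5   # primitive root modulo 23

# G really is a primitive root: its powers produce every nonzero residue mod P.
assert len({pow(G, k, P) for k in range(1, P)}) == P - 1


def public_value(private_exponent: int) -> int:
    return pow(G, private_exponent, P)


def shared_secret(peer_public: int, own_private: int) -> int:
    return pow(peer_public, own_private, P)


alice_private, bob_private = 4, 3             # normally random and never sent
alice_public = public_value(alice_private)    # 5^4 mod 23 = 4, sent to Bob
bob_public = public_value(bob_private)        # 5^3 mod 23 = 10, sent to Alice
print(shared_secret(bob_public, alice_private), shared_secret(alice_public, bob_private))  # 18 18
```

Only the public values 4 and 10 cross the network; an eavesdropper would have to recover a private exponent from them to compute the shared value 18, which is infeasible when the prime is large.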

In Focus

In regard to information security, a nonce (short for “number used once”) is usually a (nearly) random number issued in an authentication protocol to help ensure that old communications cannot be reused by an eavesdropper in replay attacks.

The purpose of the nonce is to add a unique value to the communication session so that it cannot be recorded and played back (a replay attack) to Alice or Bob in a future, “new” session. Bob receives the information from Alice and sends back his Diffie-Hellman value and a nonce he creates. Bob and Alice can then each compute a shared session key from their shared secret key, their computed Diffie-Hellman shared secret, Alice’s nonce, Bob’s nonce, and two unique session cookies that are a function of a secret value and their IP addresses. Alice then uses this key to encrypt a message proving that she is Alice, sends it to Bob, and asks Bob to do the same [3]. This process results in a (reasonably) secure exchange.
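The key computation described above follows the pre-shared-key formulas in RFC 2409, section 5. There, SKEYID is a pseudo-random function of the pre-shared key and both nonces, and three further keys (for deriving later keys, for authentication, and for encryption) mix in the Diffie-Hellman result and both cookies. The Python sketch below assumes HMAC-SHA256 as the pseudo-random function (in IKE it is negotiated). The cookie construction, addresses, and secrets are hypothetical illustrations.

```python
import hashlib
import hmac
import secrets


def prf(key: bytes, data: bytes) -> bytes:
    # Assumption: HMAC-SHA256 stands in for the prf negotiated in the first exchange.
    return hmac.new(key, data, hashlib.sha256).digest()


def make_cookie(local_secret: bytes, own_ip: str, peer_ip: str) -> bytes:
    # Hypothetical 8-byte cookie derived from a local secret and the IP addresses.
    return prf(local_secret, f"{own_ip}|{peer_ip}".encode())[:8]


def derive_keys(psk, nonce_i, nonce_r, g_xy, cky_i, cky_r) -> dict:
    """Pre-shared-key derivation in the style of RFC 2409, section 5."""
    skeyid = prf(psk, nonce_i + nonce_r)
    skeyid_d = prf(skeyid, g_xy + cky_i + cky_r + b"\x00")             # derives later keys
    skeyid_a = prf(skeyid, skeyid_d + g_xy + cky_i + cky_r + b"\x01")  # authentication key
    skeyid_e = prf(skeyid, skeyid_a + g_xy + cky_i + cky_r + b"\x02")  # encryption key material
    return {"d": skeyid_d, "a": skeyid_a, "e": skeyid_e}


psk = b"secret-set-up-for-the-remote-employee"   # hypothetical pre-shared key
nonce_a, nonce_b = secrets.token_bytes(16), secrets.token_bytes(16)
g_xy = (18).to_bytes(1, "big")                   # toy Diffie-Hellman result
cky_i = make_cookie(b"alice-local-secret", "198.51.100.7", "203.0.113.1")
cky_r = make_cookie(b"bob-local-secret", "203.0.113.1", "198.51.100.7")
alice_keys = derive_keys(psk, nonce_a, nonce_b, g_xy, cky_i, cky_r)
bob_keys = derive_keys(psk, nonce_a, nonce_b, g_xy, cky_i, cky_r)
print(alice_keys == bob_keys)  # True: both sides hold the same keying material
```

Because fresh nonces feed into SKEYID, recording this session and replaying it later would not reproduce the keys of a new session.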

16.1.4 SSL/TLS

Another common cryptographic implementation that we often encounter is the Transport Layer Security (TLS) protocol specified in RFC 5246, which we mentioned earlier in relation to SSH. As with IKE in IPSec, TLS is used to negotiate a shared session key that secures the communication channel between two entities. Most modern implementations of HTTPS for secure web traffic, of the Session Initiation Protocol (SIP) for Voice over IP (VoIP) traffic, and of the Simple Mail Transfer Protocol (SMTP) use TLS to authenticate and secure the communication.

TLS itself is composed of two layers: the TLS record layer and the TLS handshake layer. The TLS record protocol sits on top of the TCP layer and encapsulates the protocols that are “above it” on the network stack. The record layer protocol ensures that the communications are private, through the use of symmetric encryption algorithms such as AES, and are reliable by using message authentication codes (MACs). The handshake protocol ensures that both entities in the communication can authenticate each other, and securely and reliably negotiate the cryptographic protocols and the shared secret [4].

The TLS record protocol is in charge of encrypting and decrypting the information passed to it. Once it receives information to encrypt, it may optionally compress that information (RFC 3749, for example, defines a DEFLATE compression method for TLS). The protocol allows the (possibly compressed) data to be encrypted using either a block or a stream cipher, and the keys used for the communication are derived from information exchanged in the handshake. The master secret computed during the handshake is expanded into client and server MAC keys, client and server encryption keys, and, if required, client and server initialization vectors, which the record layer then uses to provide encryption and authentication [5].
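The expansion of the master secret described above can be sketched in Python using the TLS 1.2 pseudo-random function from RFC 5246 (P_SHA256, sections 5 and 6.3). The key sizes follow the RFC’s implementation note for AES_256_CBC_SHA256: two 32-byte MAC keys and two 32-byte encryption keys, with no IVs derived, because TLS 1.2 CBC suites send an explicit IV with each record. The random values and premaster secret are hypothetical placeholders.

```python
import hashlib
import hmac


def p_sha256(secret: bytes, seed: bytes, length: int) -> bytes:
    """P_SHA256 data-expansion function (RFC 5246, section 5)."""
    out, a = b"", seed                                    # A(0) = seed
    while len(out) < length:
        a = hmac.new(secret, a, hashlib.sha256).digest()  # A(i) = HMAC(secret, A(i-1))
        out += hmac.new(secret, a + seed, hashlib.sha256).digest()
    return out[:length]


def prf(secret: bytes, label: bytes, seed: bytes, length: int) -> bytes:
    return p_sha256(secret, label + seed, length)


def master_secret(pre_master: bytes, client_random: bytes, server_random: bytes) -> bytes:
    return prf(pre_master, b"master secret", client_random + server_random, 48)


def key_block(master: bytes, client_random: bytes, server_random: bytes,
              mac_len: int, key_len: int, iv_len: int) -> dict:
    # Note the order: the key expansion seed is server_random + client_random.
    names_sizes = [("client_write_MAC_key", mac_len), ("server_write_MAC_key", mac_len),
                   ("client_write_key", key_len), ("server_write_key", key_len),
                   ("client_write_IV", iv_len), ("server_write_IV", iv_len)]
    block = prf(master, b"key expansion", server_random + client_random,
                sum(size for _, size in names_sizes))
    keys, offset = {}, 0
    for name, size in names_sizes:
        keys[name] = block[offset:offset + size]
        offset += size
    return keys


client_random = bytes(32)              # hypothetical hello random values
server_random = bytes(range(32))
pre_master = b"\x03\x03" + bytes(46)   # hypothetical 48-byte premaster secret
master = master_secret(pre_master, client_random, server_random)
keys = key_block(master, client_random, server_random, mac_len=32, key_len=32, iv_len=0)
print(len(master), {name: len(value) for name, value in keys.items()})
```

Each direction gets its own MAC and encryption key, so the client and server never protect traffic with the same key.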

The TLS handshake protocol allows the communicating entities to “agree on a protocol version, select cryptographic algorithms, optionally authenticate each other, and use public-key encryption techniques to generate shared secrets” [4]. As specified in the RFC, the handshake has the following steps [4]:

1. Exchange hello messages to agree on algorithms, exchange random values, and check for session resumption.

2. Exchange the necessary cryptographic parameters to allow the client and server to agree on a premaster secret.

3. Exchange certificates and cryptographic information to allow the client and server to authenticate each other.

4. Generate a master secret from the premaster secret and exchanged random values.

5. Provide security parameters to the record layer.

6. Allow the client and server to verify that their peer has calculated the same security parameters and that the handshake occurred without tampering by an attacker.
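In practice, applications rarely implement these steps themselves. With Python’s standard `ssl` module, for example, the whole handshake runs inside `wrap_socket`, and the context object controls which protocol versions are offered in step 1 and whether the server’s certificate and host name must be verified in step 3. The function names below are hypothetical, and a TLS 1.2 minimum is an example policy choice, not a requirement from the RFC.

```python
import socket
import ssl


def make_client_context() -> ssl.SSLContext:
    # Trusts the platform's root CA certificates and requires a valid server
    # certificate whose name matches the host (step 3 above).
    ctx = ssl.create_default_context()
    # Do not offer anything older than TLS 1.2 in the hello messages (step 1 above).
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def open_tls(host: str, port: int = 443, timeout: float = 5.0):
    """Connect, run the full handshake, and report what was negotiated."""
    ctx = make_client_context()
    with socket.create_connection((host, port), timeout=timeout) as raw:
        # wrap_socket performs every handshake step before returning.
        with ctx.wrap_socket(raw, server_hostname=host) as tls:
            return tls.version(), tls.cipher()
```

Calling `open_tls` with a reachable host name returns the negotiated protocol version and a tuple describing the chosen cipher suite. If the server’s certificate does not chain to a trusted root or does not match the host name, the handshake fails with an `ssl.SSLCertVerificationError`.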

In Focus

Unlike IKE, TLS specifies a limited set of predefined cipher suites, each identified by a number. Because the suites are predefined, choosing what to offer can be simpler.
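Because suites are addressed by number, negotiating one amounts to finding a common value in two lists. The Python sketch below uses a few registered suite numbers. The selection policy (honor the client’s preference order) is one simple assumption; real servers may apply their own preference order instead. The client and server configurations are hypothetical.

```python
# A few cipher suite numbers from the IANA TLS Cipher Suites registry.
CIPHER_SUITES = {
    0x002F: "TLS_RSA_WITH_AES_128_CBC_SHA",
    0x0035: "TLS_RSA_WITH_AES_256_CBC_SHA",
    0x003D: "TLS_RSA_WITH_AES_256_CBC_SHA256",
    0xC02F: "TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256",
}


def select_suite(client_offer, server_supported):
    """Return the first suite in the client's preference order that the server supports."""
    for suite in client_offer:
        if suite in server_supported:
            return suite
    return None  # no common suite: the handshake would fail


client_hello = [0xC02F, 0x003D, 0x002F]  # hypothetical client preference order
server = {0x002F, 0x0035}                # hypothetical server configuration
chosen = select_suite(client_hello, server)
print(hex(chosen), CIPHER_SUITES[chosen])  # 0x2f TLS_RSA_WITH_AES_128_CBC_SHA
```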

Finally, there is an application-layer cryptographic standard, Secure/Multipurpose Internet Mail Extensions (S/MIME), defined in RFC 5751. S/MIME allows a compliant mail user agent to add authentication, confidentiality, integrity, and non-repudiation of message content through the use of digital certificates [6]. One benefit of using S/MIME over other means of encrypting and signing email is that S/MIME certificates are normally X.509 compliant and are sent along with the email itself. As long as the certificate was issued by a CA that both parties trust, exchanging digitally signed and encrypted email is relatively easy.

16.1.5 Virtual Private Networks (VPNs)

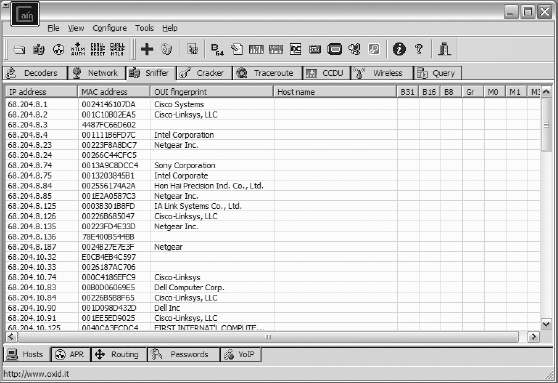

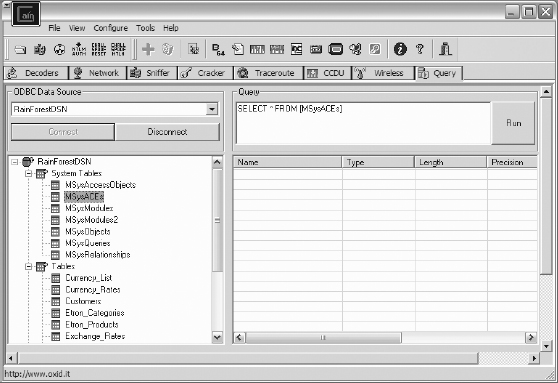

As we have learned, many kinds of attacks can take place across a network. Recall that passive attacks consist of unauthorized interception (eavesdropping) of communications, carried out covertly to steal information. Active attacks, on the other hand (using tools such as Achilles or Cain), may attempt to gather information in order to penetrate defenses or cause damage, and include protocol (e.g., ARP) poisoning, traffic injection, SQL injection, and the deletion, delay, or replay of messages (Figures 16.1 through 16.4). Even though active attacks may be devastating, passive attacks are the most difficult to detect, because in many if not most cases people are unaware that the attack is taking place. We have discussed that encryption is the most common defense against these passive attacks, but even when encryption is used, some information may still be vulnerable.

In Focus

Tools such as Achilles and Cain are designed for legitimate purposes such as vulnerability scanning. Recall our earlier discussion that technologies are value neutral: it is up to people whether they are used for good or bad purposes.

Moreover, as discussed earlier, in network security, link-to-link security is provided at the connection level (network layer and below), whereas end-to-end security is provided at the software level (transport layer and above). One of the main strengths, and also one of the main weaknesses, of IP security is that network information is encapsulated in each layer’s header, yet header information must remain readable for routing and other controls.

With link-to-link security, there is no notion of which processes at the end points are associated with the traffic, and each node must be secured and trusted, which is of course impractical on the Internet. To help mitigate this, the Secure Shell (SSH) protocol, which uses encryption, is often used for Internet and web security purposes, but SSH is an application-layer security protocol. Even when SSH is used, its data are carried in the payload of a TCP segment, and the TCP segment is carried in the payload of an IP packet whose header must remain readable, because routing protocols such as the Border Gateway Protocol (BGP), as well as ARP, depend on header data. Thus headers at the routing layer over a WAN such as the Internet may not be encrypted, and a significant amount of information is therefore exposed for exploitation.

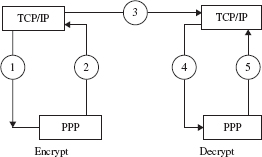

One common exploit at the connection layer is traffic analysis, in which attackers examine header contents to determine how much information is flowing between end points. To help with this problem, we can set up a virtual private network (VPN), in which the original header information is encrypted and then re-encapsulated, for example using the Point-to-Point Protocol (PPP). This is analogous to hiding a real address inside a proxy address. The packet cycles through the data link layer twice (a double loop process), once to encapsulate the VPN address and once again to encapsulate the routable (BGP) address, which allows the VPN address to be encrypted (Figure 16.5).

In Focus

There are many types of VPNs, including intranet VPNs, extranet VPNs, and remote access VPNs. Many of them have used the Point-to-Point Tunneling Protocol (PPTP), which carries PPP through a tunnel for VPN use. (For more specific details, see [7].)

© Massimiliano Montoro

FIGURE 16.1

Achilles man-in-the-middle example.

© Massimiliano Montoro

FIGURE 16.2

Cain IP/MAC capture example.

© Massimiliano Montoro

FIGURE 16.3

Cain SQL injection example part 1.

© Massimiliano Montoro

FIGURE 16.4

Cain SQL injection example part 2.

FIGURE 16.5

A VPN encryption process.

The IP Security Working Group of the IETF designed the security architecture and protocols for cryptographically based security encapsulation in IPv6, which became IPSec. As work progressed, some of the security architecture proposed for IPv6 was also applied to IPv4. Retrofitting IPSec protocols into IPv4 has continued, in particular where plaintext packets are encrypted and encapsulated by an outer IP packet; this is the essence of most VPN tunnels. This security architecture generally involves two main protocols, the Internet Protocol Security Protocol (IPSP) and the Internet Key Management Protocol (IKMP), but a number of IP encapsulation techniques have emerged, such as SwIPe, SP3, NLSP, and I-NLSP, along with specific implementations of IKMP such as MKMP, SKIP, Photuris, Skeme, ISAKMP, and OAKLEY.

16.2 Firewall Systems

There are many definitions of what constitutes a firewall. Additionally, the term firewall is not used consistently; for example, sometimes the term is used in conjunction with intrusion detection systems. We will strive to clarify by presenting an overview of a variety of firewall types, cover briefly how they work in general, and present a few samples of firewall architecture.

Generally speaking, firewalls do not protect information as it traverses a network, but they do help neutralize some of the threats that come from connections and through the network infrastructure. Firewalls are implemented both in routers and on host computers, and they typically use filtering and rules to manage the traffic in and out of a network segment [8]. There are also many kinds of firewalls, which differ both in how deeply they inspect traffic, from basic filtering to inspection of packet contents, and in the layer of the network protocol stack at which they operate.

16.2.1 Stateless Screening Filters

A screening filter can operate at the network layer or the transport layer by filtering packets in a router or other network device. Network-layer packet filtering allows (permits) or disallows (denies) packets through the firewall based on what is contained in the IP header. The rules that determine which actions are allowed or disallowed are defined in the device’s security policy and expressed as access control lists (ACLs). A rule set may also take one of two stances: a pessimistic stance, in which packets (say, from a particular IP address, port, or protocol type) that are not explicitly permitted are denied (a white list), or an optimistic stance, in which packets that are not explicitly denied are permitted (a black list).
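A stateless screening filter with the two stances described above can be sketched in Python as a first-match rule list followed by a default action. The `Rule` structure, the rule set, and the addresses (taken from documentation ranges) are hypothetical. Real router ACL syntax differs by vendor, although first-match evaluation is common.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Optional


@dataclass(frozen=True)
class Rule:
    action: str                     # "permit" or "deny"
    source: str                     # source network in CIDR form
    protocol: str = "any"           # "tcp", "udp", "icmp", or "any"
    dst_port: Optional[int] = None  # None matches any port


def matches(rule: Rule, packet: dict) -> bool:
    return (ip_address(packet["src"]) in ip_network(rule.source)
            and rule.protocol in ("any", packet["protocol"])
            and (rule.dst_port is None or rule.dst_port == packet.get("dst_port")))


def filter_packet(rules, packet: dict, stance: str) -> str:
    """The first matching rule wins; otherwise the stance decides."""
    for rule in rules:
        if matches(rule, packet):
            return rule.action
    return "deny" if stance == "pessimistic" else "permit"


acl = [Rule("deny", "198.51.100.0/24"),            # hypothetical blocked network
       Rule("permit", "0.0.0.0/0", "tcp", 443)]    # allow HTTPS from anywhere
dns = {"src": "203.0.113.5", "protocol": "udp", "dst_port": 53}
print(filter_packet(acl, dns, "pessimistic"))  # deny (white list: not explicitly permitted)
print(filter_packet(acl, dns, "optimistic"))   # permit (black list: not explicitly denied)
```

Every decision here uses only header fields, which is exactly why such a filter cannot tell whether a packet belongs to a legitimate, established conversation.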

In Focus

Lower-layer filtering is more efficient to process than filtering at higher layers, but it is less effective. Managers and administrators have to decide on the tradeoff based on the objectives of the filter and the performance requirements of what lies behind the firewall.

Accordingly, a network administrator may configure a router’s rules to deny any packets that have an internal source address but are arriving from the outside (an address spoof). The administrator may even nest the rules so that a request from an IP address that elicits a response from the router (e.g., a ping) is permitted, while any ICMP redirect from that same IP address to a host behind the router is denied [9]. At the transport layer, the firewall can inspect more information by examining transport protocol header fields, such as source and destination port numbers and, in the case of TCP, some information about the connection. As with network-layer filtering, transport-layer inspection allows the firewall to determine the origin of a packet, such as whether it came from the Internet or an internal network, but it can also determine whether the traffic is a request or a response between a client and a server [10]. However, these stateless types of packet filtering have limitations because they have no “awareness” of upper-layer protocols or of the exact state of an existing connection. They function simply by applying rules to addresses and protocol types, but many threats are encapsulated at higher layers of the protocol stack and bypass these filters.
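The two example rules above, anti-spoofing and permitting pings while denying ICMP redirects, can be sketched as a single stateless decision function in Python. The internal address ranges, interface names, and function name are hypothetical, and the rest of a real ACL is omitted. The ICMP type numbers (8 for echo request, 5 for redirect) are the standard ones.

```python
from ipaddress import ip_address, ip_network

# Hypothetical internal address space behind the router.
INTERNAL = [ip_network("10.0.0.0/8"), ip_network("192.168.0.0/16")]
ICMP_ECHO_REQUEST, ICMP_REDIRECT = 8, 5


def ingress_decision(src: str, interface: str, protocol: str, icmp_type=None) -> str:
    """Decide on a packet arriving at the router using header fields only."""
    if interface == "external" and any(ip_address(src) in net for net in INTERNAL):
        return "deny"    # internal source address arriving from outside: spoofed
    if protocol == "icmp" and icmp_type == ICMP_REDIRECT:
        return "deny"    # never let outsiders redirect hosts behind the router
    return "permit"      # e.g., pings; the rest of the ACL is omitted in this sketch


print(ingress_decision("10.1.2.3", "external", "tcp"))                            # deny
print(ingress_decision("203.0.113.9", "external", "icmp", ICMP_ECHO_REQUEST))    # permit
print(ingress_decision("203.0.113.9", "external", "icmp", ICMP_REDIRECT))        # deny
```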

The rules used in these firewalls are also fairly simplistic, essentially permitting or denying connections or packets through the filtering router. Consequently, it is impossible at these lower layers to completely filter out TCP packets that are not valid or that do not belong to a complete, active connection. In other words, these filters cannot tell where a TCP packet falls in the request and response sequence of a connection, such as whether it is part of a three-way handshake that has been left “half-open,” a condition exploited by a particular kind of attack (the half-open connection denial-of-service attack).

16.2.2 Stateful Packet Inspection

Stateful packet inspection is sometimes called session-level packet filtering. As indicated before, a packet filter is generally implemented by a router using access control lists (ACLs) and is geared toward inspecting packet headers as they traverse the router, both ingress and egress. On the other hand, many applications, such as email, depend on the state of the underlying TCP protocol; more specifically, the client and server in a session rely on initiating and acknowledging each other’s requests and transfers. Because TCP/IP does not actually implement a session layer as in the OSI model, a stateful packet inspection firewall creates a state table (described shortly) for each established connection. This table enables the firewall to filter traffic based on the end-point profiles and the state of the communication, and so to prevent certain kinds of session-layer attacks or hijacking [11].

In Focus

Because UDP is stateless, filtering UDP packets is more difficult for firewalls to manage than filtering TCP packets.

As such, stateful packet inspection firewalls are said to be connection-aware and have fine-grained filtering capabilities. The validation in a connection-aware firewall extends up through the three-way handshake of the TCP protocol. As mentioned earlier, TCP synchronizes its session between peer systems by sending a packet with the SYN flag set and a sequence number. The sending system then awaits an acknowledgment from the destination. During the time that a sender awaits an acknowledgment, the connection is “half-open.”

The validation is important to prevent a perpetrator from manipulating TCP packets to create many half-open connections. Because most TCP implementations have limits on how many connections they can service in a period of time, a barrage of half-open connections can lead to an attack called a SYN flood attack, draining the system’s memory and overwhelming the processor trying to service the requests, and this can cause the system to slow down or even crash [10].
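A connection-aware firewall can notice a barrage of half-open connections by counting unfinished handshakes. The Python sketch below is deliberately simplified. It assumes a fixed per-source limit, tracks only the client’s SYN and final ACK (not the server’s SYN-ACK or timeouts), and the class name and limit are hypothetical. Attackers who forge many different source addresses would evade a purely per-source count.

```python
from collections import defaultdict


class HalfOpenTracker:
    """Counts half-open TCP handshakes per source and drops SYNs above a limit."""

    def __init__(self, limit_per_source: int = 100):
        self.limit = limit_per_source
        # source IP -> set of (source port, destination IP, destination port)
        self.half_open = defaultdict(set)

    def on_syn(self, src, sport, dst, dport) -> str:
        pending = self.half_open[src]
        if len(pending) >= self.limit:
            return "drop"   # too many unfinished handshakes: possible SYN flood
        pending.add((sport, dst, dport))
        return "pass"

    def on_final_ack(self, src, sport, dst, dport) -> None:
        # The client's ACK completes the three-way handshake: no longer half-open.
        self.half_open[src].discard((sport, dst, dport))


t = HalfOpenTracker(limit_per_source=2)
print([t.on_syn("203.0.113.9", p, "10.0.0.80", 80) for p in (1001, 1002, 1003)])
# ['pass', 'pass', 'drop']
t.on_final_ack("203.0.113.9", 1001, "10.0.0.80", 80)
print(t.on_syn("203.0.113.9", 1004, "10.0.0.80", 80))  # pass
```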

To accomplish session-level filtering and monitoring, as noted earlier, stateful packet inspection utilizes a state table called a virtual circuit table that contains connection information for end-point communications. Specifically, when a connection is established, the firewall first records a unique session identifier for the connection that is used for tracking and monitoring purposes. The firewall then records the state of the connection, such as whether it is in the handshake stage, whether the connection has been established, or whether the connection is closing, and it records the sequencing data, the source and destination IP addresses, and the physical network interfaces on which the data arrive and are sent out [11].

Using this virtual circuit table approach with TCP/IP allows the firewall to perform filtering and sentinel services, letting packets pass through the firewall only when their connection information is consistent with the entries in the virtual circuit table. To ensure this, the firewall checks the header information contained in each packet to determine whether the sender has permission to send data to the receiver and whether the receiver has permission to receive it. When the connection is closed, the entries in the table are removed and the virtual circuit between the peer systems is terminated [12].
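The virtual circuit table described in the last two paragraphs can be modeled in Python as a dictionary of connection entries holding a session identifier, state, expected sequence number, addresses, and interfaces. This is a minimal sketch under simplifying assumptions: one direction of a connection is tracked, permission checks beyond table consistency are omitted, and the class names, addresses, and interface names are hypothetical.

```python
import itertools
from dataclasses import dataclass


@dataclass
class CircuitEntry:
    session_id: int
    state: str        # "handshake", "established", or "closing"
    next_seq: int     # next sequence number expected from the sender
    src: str          # "ip:port"
    dst: str          # "ip:port"
    in_iface: str
    out_iface: str


class VirtualCircuitTable:
    def __init__(self) -> None:
        self._ids = itertools.count(1)
        self._table = {}  # (src, dst) -> CircuitEntry

    def open(self, src, dst, syn_seq, in_iface, out_iface) -> CircuitEntry:
        # A SYN consumes one sequence number, so the next expected is syn_seq + 1.
        entry = CircuitEntry(next(self._ids), "handshake", syn_seq + 1, src, dst, in_iface, out_iface)
        self._table[(src, dst)] = entry
        return entry

    def establish(self, src, dst) -> None:
        self._table[(src, dst)].state = "established"

    def allow(self, src, dst, seq, length, in_iface) -> bool:
        entry = self._table.get((src, dst))
        if entry is None or entry.state != "established":
            return False
        if in_iface != entry.in_iface or seq != entry.next_seq:
            return False   # inconsistent with the recorded circuit
        entry.next_seq += length
        return True

    def close(self, src, dst) -> None:
        self._table.pop((src, dst), None)   # tear down the virtual circuit


vct = VirtualCircuitTable()
a, b = "10.0.0.5:50000", "203.0.113.80:443"
vct.open(a, b, syn_seq=1000, in_iface="inside", out_iface="outside")
vct.establish(a, b)
print(vct.allow(a, b, 1001, 100, "inside"))  # True: matches the table
print(vct.allow(a, b, 1001, 100, "inside"))  # False: sequence number already used
```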

Although session-level firewalls are an improvement over transport-layer firewalls because they operate on a “session” and therefore have some capability to monitor connection-oriented protocols such as TCP, they are not a complete solution. Session-level (connection-oriented) firewall security is limited to the connection itself: if the connection is allowed, the packets associated with it are routed through the firewall according to the routing rules with minimal further security checks [13].

16.2.3 Circuit Gateway Firewalls

When using the terms circuit-level gateway or circuit-level firewall, it is important to realize that the term “circuit” might be a little misleading in this context because, technically, there is no “circuit” involved in TCP. The term is applied because TCP is considered a “connection-oriented” protocol: it maintains a session through a setup (the three-way handshake) and an exchange of acknowledgments and sequence numbers between client and server. To understand a true circuit type of network, however, we refer back to Asynchronous Transfer Mode (ATM).

In packet-switching networks such as TCP/IP, a circuit-level firewall or gateway is one that validates connections before allowing data to be exchanged. In other words, it uses a proxy, such as the proxy software called SOCKS, to broker the “connection” or “circuit.” Therefore, a circuit-level firewall or gateway is not just a packet filter that allows or denies packets or connections. It determines whether the connection between end points is valid according to configurable rules, and then permits a session only from the allowed source through the proxy, and perhaps only for a limited period of time [12, 13].
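To see how a SOCKS-style gateway brokers a connection, the Python sketch below builds the SOCKS version 5 greeting and IPv4 CONNECT request (byte layout per RFC 1928) and shows a gateway-side check that validates the requested destination against configurable rules before any data would be relayed. The rule format and the `gateway_check` function are hypothetical illustrations of such a policy, not part of the SOCKS protocol itself.

```python
import ipaddress
import struct

SOCKS_VERSION = 5
CMD_CONNECT = 1
ATYP_IPV4 = 1
NO_AUTH = 0


def greeting(methods=(NO_AUTH,)) -> bytes:
    # VER, NMETHODS, METHODS...
    return bytes([SOCKS_VERSION, len(methods), *methods])


def connect_request(dst_ip: str, dst_port: int) -> bytes:
    # VER, CMD, RSV, ATYP, DST.ADDR (4 bytes), DST.PORT (2 bytes, network order)
    return (bytes([SOCKS_VERSION, CMD_CONNECT, 0x00, ATYP_IPV4])
            + ipaddress.IPv4Address(dst_ip).packed
            + struct.pack("!H", dst_port))


def gateway_check(request: bytes, allowed) -> bool:
    """Parse an IPv4 CONNECT request and validate it against (network, port) rules."""
    ver, cmd, _rsv, atyp = request[:4]
    if ver != SOCKS_VERSION or cmd != CMD_CONNECT or atyp != ATYP_IPV4:
        return False
    dst = ipaddress.IPv4Address(request[4:8])
    (port,) = struct.unpack("!H", request[8:10])
    return any(dst in ipaddress.ip_network(net) and port == p for net, p in allowed)


rules = [("203.0.113.0/24", 443)]  # hypothetical policy: HTTPS to one network only
print(gateway_check(connect_request("203.0.113.10", 443), rules))  # True
print(gateway_check(connect_request("203.0.113.10", 22), rules))   # False
```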

The notion of “circuit level” in firewall terms, then, means that the firewall executes at the transport layer but is combined with stateful packet inspection so that it can uniquely identify and track connection pairs between client and server processes. In addition, the circuit proxy (such as SOCKS) can filter input based on its audit logs and previous transactions and screen the traffic (both inbound and outbound) accordingly. Therefore, a circuit-level firewall has many of the characteristics of an application-layer firewall, such as the ability to perform authentication, but it does not filter on payload contents at the application layer [14]. That function is the domain of a host-based application-layer firewall.

16.2.4 Application-Layer Firewall

Although the term “application-layer firewall” is a little misleading in the context of TCP/IP, as one would expect, it is an end-to-end communications firewall and therefore resides on the host computer to provide firewall services up through the application layer. Hence these are sometimes called application-layer firewall monitors. They filter the information and connections that are passed up through to the application layer and are able to evaluate network packets for valid data at the application layer before allowing a connection, inbound or outbound, similar to circuit gateways.

In Focus

The two core aspects of the application-layer firewall are the services a host provides and the sockets used in the communications.

Beyond this, most application-layer firewalls are able to disguise hosts from outside the private network infrastructure. Because they operate at the application layer, they can keep spyware, botnets, and other malware from using programs such as browsers to send information from the host to the Internet. They are also designed to work in conjunction with antivirus software, and each can automatically update the other. For example, if an antivirus application discovers an infection, it may notify the application-layer firewall so that the firewall tries to block that infection’s signature from passing through in the future.

This type of dynamic filtering is especially important for email and web browsing applications. For example, it can help prevent an infection delivered via an ActiveX control or Java component downloaded during a browsing session. Moreover, application-layer firewalls have monitors and audit logs that let users view the connections made, the protocols used, the IP addresses contacted, and the executables that were run. They also typically show which services were invoked, by which protocol, and on what port [9].

The capabilities of application-layer firewalls are not deep: they typically do not inspect lower-layer protocol headers, because those headers have been stripped off by the time the data reach the application layer. They are, however, broad in terms of their protections. With complex rule sets, they can thoroughly analyze the data to provide close security checks. They can also interact with other security technologies such as virus scanners to provide value-added services such as email filtering and user authentication [13].

Finally, most application-layer firewalls present a dialog to a “human-in-the-loop” for a novel or unusual action, and the user then has the option to permit or deny it. However, studies of human-computer interaction (e.g., [15]) have shown that people sometimes do not pay close attention to these dialogs. Furthermore, the option to permanently allow or disallow an action can have harmful side effects. For example, a user may be fooled into permanently allowing harmful processes to run, or may permanently block a useful action such as security patch updates. This happens because people are conditioned to ignore some information and are reinforced to dismiss annoying cues, such as pop-up dialogs that interfere with their primary goals. Although application-layer firewalls offer a significant amount of control, that control is often negated by human action (or inaction). This is where the concept of defense in depth comes into play, which we will explore later using the example of a screened subnet firewall architecture. In any case, security managers and administrators should regularly examine the firewall logs in case alerts were missed or not reported (Figure 16.6).
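The per-program decisions, the "ask the user" path for novel actions, and the audit log described in this subsection can be modeled in a short Python sketch. Everything here is hypothetical and simplified: rules are keyed only on the executable path and remote port. A real host firewall such as the one shown in Figure 16.6 tracks far more context.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set, Tuple

LogEntry = Tuple[str, str, str, int, str]  # (exe, protocol, remote IP, port, decision)


@dataclass
class AppFirewall:
    """Hypothetical per-program rule table with an audit log."""
    rules: Dict[str, Set[int]] = field(default_factory=dict)  # exe -> permitted remote ports
    log: List[LogEntry] = field(default_factory=list)

    def check(self, exe: str, proto: str, ip: str, port: int) -> Optional[bool]:
        """True = allow, False = deny, None = novel program, so ask the user."""
        permitted = self.rules.get(exe)
        if permitted is None:
            result, decision = None, "ask"
        elif port in permitted:
            result, decision = True, "allow"
        else:
            result, decision = False, "deny"
        # Every decision is logged so administrators can review it later.
        self.log.append((exe, proto, ip, port, decision))
        return result

    def remember(self, exe: str, port: int) -> None:
        """Store a user's 'always allow' answer: convenient, but risky."""
        self.rules.setdefault(exe, set()).add(port)
```

The `remember` method shows why permanent answers are dangerous. Once a user has said "always allow," the program never triggers a dialog again, and only the log records what it did.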

16.2.5 Bastion Hosts

Given some of the limitations in various firewall technologies and approaches, a bastion host (or gateway) can be a useful complement to them. A bastion host is said to be “hardened” because it serves a specialized purpose, such as to act as a gateway in and out of a protected area known as a DMZ. Therefore, it only exposes specific intended services and has all non-essential software, such as word processing systems, removed from it.

ZoneAlarm and Check Point are either registered trademarks or trademarks of Check Point Software Technologies, Inc., a wholly owned subsidiary of Check Point Software Technologies Ltd., in the United States and/or other countries.

FIGURE 16.6

Example of firewall log (from ZoneAlarm).

As we learned earlier, the Internet enables access to information using a variety of techniques and technologies. In a typical communication over the Internet or a LAN, a connection is made by means of a socket. A socket, as we learned, is a collection of software, data structures, and system calls that forms an end-point between communicating systems, identified by an IP address and port number pair. The socket API provides the operations that programs use to create, connect, and communicate through sockets. Port numbers designate the services to which connections are bound. Server processes “listen” on ports for requests from clients. For example, the smtpd server process listens on port 25 for email messages to arrive, and the httpd server listens on port 80 (by default) for connections from browsers. Once a connection is made, communication begins.
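The listen/connect pattern described above can be seen with Python's standard `socket` module. This is a minimal sketch under one assumption: it runs entirely on the loopback address and lets the operating system choose a free port. Binding to well-known ports such as 25 or 80 normally requires administrative privileges. The echo "service" is purely illustrative.

```python
import socket
import threading


def echo_once(server: socket.socket) -> None:
    # Server side: accept one client, read until it stops sending, then reply.
    conn, _addr = server.accept()
    with conn:
        data = b""
        while chunk := conn.recv(1024):
            data += chunk
        conn.sendall(b"echo: " + data)


def demo() -> bytes:
    # The server process binds a socket to an (IP address, port) pair and listens.
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))  # port 0 asks the OS for any free port
    server.listen(1)
    port = server.getsockname()[1]
    worker = threading.Thread(target=echo_once, args=(server,))
    worker.start()

    # The client connects to that end-point; the OS picks the client's own port.
    with socket.create_connection(("127.0.0.1", port)) as client:
        client.sendall(b"hello")
        client.shutdown(socket.SHUT_WR)  # tell the server the request is complete
        reply = b""
        while chunk := client.recv(1024):
            reply += chunk

    worker.join()
    server.close()
    return reply


if __name__ == "__main__":
    print(demo())  # b'echo: hello'
```

Each connection is identified by both end-points: the server's listening address and port, and the client's address and OS-assigned port.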

For protection, bastion hosts often use specialized proxy services for the services they do expose. Proxy services are special-purpose programs that intervene in the communications flow for a given service, such as HTTP or FTP. For example, smap and smapd are proxies for the UNIX sendmail program. Because sendmail has been notorious for security flaws, smap intercepts messages and places them in a special storage area where they can be inspected, electronically or manually, before delivery. smapd scans this storage area at certain intervals. When it locates messages that have been marked “clean,” it delivers them to the intended user’s email through the regular sendmail system. Proxy services can therefore provide increased access control and more careful checks for valid data. They can also be equipped to generate audit records about the traffic they transfer. Auditing software can be made to trigger alarms, or the administrator can go back through the audit logs when necessary to conduct a trace in a forensic analysis [16].
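The store-and-forward pattern behind smap and smapd can be illustrated with a small Python model. This is a hypothetical sketch, not the actual smap/smapd code. The inspection and delivery steps are passed in as functions; in practice, delivery would hand the message to sendmail. The spool is also an in-memory list rather than a directory on disk.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Message:
    sender: str
    recipient: str
    body: str
    clean: bool = False  # set once the message has passed inspection


class SpoolProxy:
    """Hypothetical store-and-forward mail proxy, loosely modeled on smap/smapd."""

    def __init__(self, inspect: Callable[[Message], bool],
                 deliver: Callable[[Message], None]) -> None:
        self.spool: List[Message] = []
        self.audit: List[str] = []
        self.inspect = inspect
        self.deliver = deliver

    def accept(self, msg: Message) -> None:
        # smap's role: accept the message and only write it to the spool.
        self.spool.append(msg)
        self.audit.append(f"spooled {msg.sender} -> {msg.recipient}")

    def scan(self) -> None:
        # smapd's role: periodically scan the spool and deliver clean messages.
        remaining: List[Message] = []
        for msg in self.spool:
            msg.clean = self.inspect(msg)
            if msg.clean:
                self.deliver(msg)
                self.audit.append(f"delivered {msg.sender} -> {msg.recipient}")
            else:
                remaining.append(msg)  # held for manual inspection
                self.audit.append(f"held {msg.sender} -> {msg.recipient}")
        self.spool = remaining
```

The design point is separation. The component facing the network never runs the complex mail program, and nothing reaches sendmail until it has been inspected and recorded in the audit trail.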

A bastion host is a system dedicated to providing network services, and is not used for any other purpose such as general-purpose computing.

For additional protection, a bastion host may be configured as a dual-homed bastion gateway. Dual-homed refers to having two network interface cards (homes), one attached to the external network and one to the internal network, so that the host divides the two. This division allows further vetting of communications before traffic is allowed into an internal network, and each connection and process that is allowed is carefully monitored and audited [13]. Still, if left on their own, bastion hosts can represent a single point of failure and a single point of compromise. Moreover, these systems require vigilant monitoring by administrators because they tend to bear the brunt of an attack. Bastion hosts can enforce security rules on communications between the networks their interfaces separate. Even so, their visibility means these systems generally require additional layers of protection, such as other firewalls and intrusion-detection systems [14]. Let’s now examine firewall architecture and discuss how bastion hosts can be used as part of various firewall designs.

16.3 Firewall Architecture

Firewall architecture is a layering concept. For example, we can use a packet filtering router to screen connections to a bastion host, which inspects whatever gets through the screen before delivering it to an interior network and the systems that reside there. In many cases this is a sufficient level of protection, such as for a web server exposed to the Internet that only displays content. However, if systems are to perform transactions such as those in e-commerce, we need to better protect both the customer information and our internal resources. That kind of activity is likely to need more stringent countermeasures.

16.3.1 “Belt and Braces” Architecture

When a network security policy is implemented, the policies must be clear and operationalized, which means that they are determinate and measurable. The boundaries must also be well defined so that the policies can be enforced. These boundaries are called perimeter networks. To establish a collection of perimeter networks, the systems within each network to be protected must be defined, along with the network security mechanisms that protect them. A network security perimeter implements one or more firewall servers to act as gateways for all communications between trusted networks, and between those and distrusted or unknown networks.

The term belt and braces refers to the combination of a screening router and a bastion host with network address translation [11]. The belt portion of this architecture is the screening filter, and the braces involve the NAT and the bastion host. This type of architecture has the following advantages:

1. Filters are generally faster than packet inspection or application-layer firewall technologies because they perform fewer evaluations,

2. A single rule can help protect an entire network by blacklisting (prohibiting) connections between specific network sources such as the Internet and the bastion host, and

3. In conjunction with network address translation and proxy software, we can shield internal IP addresses from exposure to distrusted networks and perform relatively thorough filtering and monitoring. The key here is that it forms a sort of DMZ between the outside world and the internal infrastructure.
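The second advantage, a single rule protecting an entire network, follows from matching on address ranges rather than individual hosts. The first-match screening filter below is a minimal sketch using Python's standard `ipaddress` module. The rule format and the blocked range are hypothetical; the addresses come from the documentation ranges reserved for examples.

```python
import ipaddress
from typing import List, Tuple

# A rule is (action, source network, destination network); first match wins.
Rule = Tuple[str, ipaddress.IPv4Network, ipaddress.IPv4Network]


def screen(rules: List[Rule], src: str, dst: str, default: str = "permit") -> str:
    """Return 'permit' or 'deny' for a packet from src to dst."""
    s, d = ipaddress.ip_address(src), ipaddress.ip_address(dst)
    for action, src_net, dst_net in rules:
        if s in src_net and d in dst_net:
            return action
    # Black list stance: anything not explicitly denied is permitted.
    return default


# One hypothetical rule blocks an entire hostile /24 from every destination.
RULES: List[Rule] = [
    ("deny", ipaddress.ip_network("198.51.100.0/24"), ipaddress.ip_network("0.0.0.0/0")),
]
```

The filter looks only at header fields and stops at the first matching rule. That is why screening is cheap compared with packet inspection or application-layer checks.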

In Focus

Trusted networks are those that reside inside a bounded security perimeter (such as a DMZ). Distrusted networks are those that reside outside the well-defined security perimeter and are not under control of a network administrator.

If there is an attack, the bastion host will take the brunt of it. Thus, when monitored closely, it can simplify the administration of the network and its security. The bastion host can also perform NAT to translate between internal and external addresses. This can be done using static NAT, which maps a given private IP address to a given public one on a one-to-one basis. Static NAT is useful when an internal system, such as a web server, must be reachable from the Internet.
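A static NAT mapping is essentially a fixed two-way lookup table. The following is a minimal sketch with hypothetical addresses. As an added assumption, addresses with no mapping return `None`, meaning the packet would be dropped rather than forwarded with its private address exposed.

```python
from typing import Dict, Optional

# Hypothetical one-to-one mapping for an internal web server.
STATIC_NAT: Dict[str, str] = {"192.168.10.20": "203.0.113.20"}
REVERSE_NAT: Dict[str, str] = {pub: priv for priv, pub in STATIC_NAT.items()}


def nat_outbound(src_ip: str) -> Optional[str]:
    """Rewrite a private source address to its fixed public address (None = drop)."""
    return STATIC_NAT.get(src_ip)


def nat_inbound(dst_ip: str) -> Optional[str]:
    """Map a public destination back to the same private host every time (None = drop)."""
    return REVERSE_NAT.get(dst_ip)
```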

The bastion host may instead be configured to use dynamic NAT, which maps private IP addresses to public ones allocated from a pool (which may be assigned using DHCP). In this configuration there is still a one-to-one mapping between public and private addresses, but the public IP address is assigned upon request and released when the connection is closed. This has some administrative advantages, but it is not as secure as static NAT. The bastion host may also use NAT overloading, which translates multiple private IP addresses into a single public one by using different (called rolling) TCP source ports. This is useful as a link-to-link filtering technique because the translation hides and changes the topology and addressing schemes. That makes it more difficult for an attacker to profile the target network topology and connectivity, or to identify the types of host systems and software on the interior network. This helps to reduce IP packet injection, SYN flood DoS attacks, and other similar attacks [10].
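NAT overloading (often called port address translation) can be sketched as a table that assigns each internal (address, port) pair its own source port on the shared public address. This Python model is hypothetical and simplified. It hands out ports sequentially from an arbitrary starting value and ignores port exhaustion and timeouts.

```python
import itertools
from typing import Dict, Optional, Tuple

Endpoint = Tuple[str, int]  # (IP address, port)


class PatTable:
    """Hypothetical NAT-overload table: many private hosts, one public address."""

    def __init__(self, public_ip: str, first_port: int = 40000) -> None:
        self.public_ip = public_ip
        self._ports = itertools.count(first_port)  # next public source port
        self._out: Dict[Endpoint, int] = {}
        self._in: Dict[int, Endpoint] = {}

    def outbound(self, priv_ip: str, priv_port: int) -> Endpoint:
        # Reuse an existing mapping or allocate a new public port for this flow.
        key = (priv_ip, priv_port)
        if key not in self._out:
            pub_port = next(self._ports)
            self._out[key] = pub_port
            self._in[pub_port] = key
        return self.public_ip, self._out[key]

    def inbound(self, pub_port: int) -> Optional[Endpoint]:
        # Replies are matched by public port; unsolicited traffic finds no entry.
        return self._in.get(pub_port)

    def release(self, priv_ip: str, priv_port: int) -> None:
        # Free the mapping when the connection closes.
        pub_port = self._out.pop((priv_ip, priv_port), None)
        if pub_port is not None:
            del self._in[pub_port]
```

From the outside, every internal host appears as the single public address. Inbound packets that do not match an active mapping cannot be routed to any interior host.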

In Focus

Firewall architecture, being the blueprints of the network topology and security control infrastructure, must take into account the operational level (technical issues), the tactical level (policies and procedures), and the strategic level (the directives and overall mission) in order to be effective.

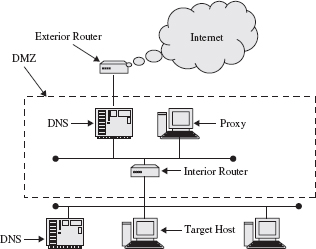

16.3.2 Screened Subnet Architecture

As we discussed, screening packet filters are used to separate trusted autonomous networks from each other, such as a network that hosts DNS servers for multiple zones, or one that contains an LDAP directory or Active Directory. They are also used as a choke point at the ingress gateway from distrusted networks into a bounded security perimeter called a DMZ. This approach is used in combination with the screened subnet firewall architecture (Figure 16.7). Applying this less secure filtering approach toward distrusted networks such as the Internet may seem counterintuitive. However, filtering is efficient at processing packets while still allowing some control. The main idea behind a screened subnet is that a network can have multiple perimeters, classified as the outermost perimeter, internal perimeters, and the innermost perimeter network. At the gateways of these networks, the defenses are layered so that if one layer is breached, the threat is met at the next layer.

The outermost perimeter network demarcates the separation point between external networks and systems and internal perimeter proxy systems. Here, the filtering firewall assumes a black list (optimistic) stance for efficiency, and yet maintains the ability to block known attacks. Data passed into the internal perimeter network are bounded by the network fabric and systems, which are collectively considered the DMZ. An internal perimeter network typically only exposes the most basic of services such as a hosted web server, and the proxy systems such as bastion hosts and proxy DNS. Because this area is most exposed, it is the most frequently attacked, usually by an attempt to gain access to the internal networks, but also by DoS or defacement.

The screened subnet architecture then uses a white list (pessimistic) firewall to insulate the internal assets of the innermost perimeter network from the internal perimeter network. In other words, traffic intended for a system inside the innermost perimeter network is intercepted by proxy systems in the DMZ before it is relayed to the internal system. Therefore, as seen in the figure, this configuration uses at least two screening routers to create the DMZ. The exterior router is set up for an optimistic stance, which is to say it uses a black list ACL in which all connections are allowed except those that are explicitly denied [8]. The interior router is set up for a pessimistic stance in which all connections are denied except those that are explicitly permitted. Requests from an exterior connection, such as the Internet, pass through the exterior router destined for a host on an interior network. Within the DMZ, a DNS server is set up to answer exterior requests with the IP address of a DMZ proxy for the interior host. The proxy is a bastion host that acts as a relay system [16].
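The difference between the two router stances comes down to what happens to traffic that no rule mentions. Here is a minimal Python sketch of the idea. Packets are reduced to hypothetical (source, destination, port) labels rather than real headers, and the host names are illustrative.

```python
from typing import Callable, Iterable, Tuple

Packet = Tuple[str, str, int]  # (source, destination, destination port), simplified


def make_filter(rules: Iterable[Packet], optimistic: bool) -> Callable[[Packet], bool]:
    """Build a screening filter that returns True when a packet may pass.

    optimistic=True  -> black list: listed packets are denied, all others permitted.
    optimistic=False -> white list: listed packets are permitted, all others denied.
    """
    listed = frozenset(rules)
    if optimistic:
        return lambda pkt: pkt not in listed
    return lambda pkt: pkt in listed


# Hypothetical hosts: the exterior router blocks a known-bad service, and the
# interior router admits only the DMZ proxy's DNS query and relayed traffic.
exterior = make_filter({("internet", "dmz-proxy", 23)}, optimistic=True)
interior = make_filter(
    {("dmz-proxy", "interior-dns", 53), ("dmz-proxy", "app-server", 443)},
    optimistic=False,
)
```

With this arrangement, an outside host that tries to reach an interior server directly is stopped at the interior router, even though the exterior router let it through.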

FIGURE 16.7

Screened subnet architecture.

When communications are passed from the exterior to the proxy, the proxy can then inspect the content of the communications to ensure that it is conventional and free from viruses or other malware before forwarding it to the target interior host. The forwarding from the proxy relay to the target host is done by a relay query of the interior DNS, which is the only connection permitted by the interior router other than the proxy, and this returns the IP address of the target host to the proxy. The transaction is completed by the proxy passing the data to the target host where it is subject to additional inspection before being delivered to the requested application. As seen, the screened subnet architecture is very secure, but is also complex to manage and requires a great deal of processing overhead.
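The relay sequence above can be traced with a small Python model. It is a hypothetical sketch of the control flow only, not a working proxy. The two DNS views are plain dictionaries, the names and addresses are made up, and content inspection and delivery are supplied as functions.

```python
from typing import Callable, Dict

# Hypothetical name tables for the two DNS views.
EXTERIOR_DNS: Dict[str, str] = {"app.example.com": "192.0.2.10"}  # answers with the DMZ proxy
INTERIOR_DNS: Dict[str, str] = {"app.example.com": "10.1.1.25"}   # answers with the real host


def proxy_relay(name: str, payload: bytes,
                inspect: Callable[[bytes], bool],
                deliver: Callable[[str, bytes], None]) -> bool:
    """Relay one request from the DMZ proxy to the interior host."""
    if not inspect(payload):
        return False                 # content rejected inside the DMZ
    target = INTERIOR_DNS.get(name)  # relay query to the interior DNS
    if target is None:
        return False
    deliver(target, payload)         # interior host applies its own checks too
    return True
```

An outside client resolving `app.example.com` only ever learns the proxy's address. The interior address is looked up by the proxy and never leaves the DMZ.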


16.3.3 Ontology-Based Architecture

Although ontology-based defenses are not technically firewalls, they can be used to perform many of the screening and monitoring functions of firewalls. In conventional firewall architecture, security policies are predefined for a given security stance and a given set of platforms. As an alternative, security policy ontologies have become a popular way to decouple policies from some of the underlying technological interdependencies. In this approach, security policy rules are created with a graphical user interface, and the underlying technology generates the ontology markup (e.g., in OWL).

In their interesting work on security incidents and vulnerabilities, Moreira and his colleagues [17] presented an approach to filtering and monitoring based on a triple-layered set of ontologies: operational, tactical, and strategic. Because ontologies are expressed as markup documents, they can be easily shared among disparate systems. The operational level consists of daily transactions and is governed by the tactical level. The tactical level consists of the rules that control access to resources, the technologies that make up the storage and retrieval methods for information and service resources, the processes used in these activities, and the people who use them.

The strategic level is concerned with directives and governance. Using the approach of Moreira et al. [17], security policy ontologies can draw from the Common Vulnerabilities and Exposures (CVE) ontology. This ontology catalogs common vulnerabilities and incidents and is updated as new ones are reported, for example, by CERT at the Software Engineering Institute. The primary function of the security ontology is to define a set of standards, criteria, and behaviors that direct the efforts of a filtering and monitoring system.

A network security policy ontology could, for example, describe threats from remote or local access, types of vulnerabilities such as SQL injection and buffer overflow, and their consequences, including severity and which systems could be affected. The ontology could also record whether a corrective security patch has been released for the vulnerability, along with other relevant information. This approach facilitates the automation of network policy enforcement at a gateway, similar to application-layer firewalls and monitors.
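To show how such ontology facts might drive enforcement at a gateway, here is a hypothetical Python sketch. A real system would query OWL markup. This one flattens a single vulnerability entry into a record holding the attributes listed above: access, vulnerability type, severity, affected systems, and patch status. It then applies an invented decision rule. The severity scale, threshold, and action names are all assumptions for illustration.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class VulnEntry:
    """Hypothetical flattened record drawn from a vulnerability ontology."""
    identifier: str              # placeholder ID, not a real CVE number
    access: str                  # "remote" or "local"
    vuln_type: str               # e.g., "sql-injection", "buffer-overflow"
    severity: int                # hypothetical 1 (low) to 10 (critical) scale
    affected: FrozenSet[str]     # services the vulnerability affects
    patched: bool                # whether a corrective patch has been released


def gateway_action(entry: VulnEntry, service: str, host_patched: bool) -> str:
    """Invented rule: decide how the gateway treats traffic to `service`."""
    if service not in entry.affected:
        return "allow"
    if entry.patched and host_patched:
        return "allow-and-monitor"
    if entry.access == "remote" and entry.severity >= 7:
        return "block"
    return "monitor"
```

Because the facts live in a shared ontology rather than in the code, updating the entry (for instance, when a patch is released) changes the gateway's behavior without rewriting its rules.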

In Focus

Codified into information and communications systems, security policies define the rules and permissions for system operations. For example, a router’s security policy may permit only egress ICMP messages and deny those that are ingress. Similarly, a host computer’s security policy may prohibit files from copying themselves, accessing an email address book, or modifying the Microsoft Windows Registry.

A web service, agent framework, or object request broker is then used for policy discovery and enforcement at runtime. In other cases, security policies may be learned by running an application in a controlled environment to discover its normal behavior. The application is then monitored to determine whether it deviates from this learned behavior; if it does, execution is intercepted, as in the case where the application attempts to make prohibited privileged system calls [18]. This flexibility makes the ontology approach to filtering and monitoring attractive for mobile networks and peer-to-peer (P2P) network topologies, where devices may join and leave the network unpredictably.
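The learn-then-enforce idea can be illustrated with a deliberately simple Python sketch. The "normal behavior" profile here is just the set of system call names seen during a training run. That is an assumption for illustration: practical systems usually model sequences or arguments of calls. The class name is hypothetical.

```python
from typing import Iterable, Set


class BehaviorProfile:
    """Hypothetical learn-then-enforce monitor for one application."""

    def __init__(self) -> None:
        self.allowed: Set[str] = set()
        self.learning = True

    def observe(self, syscall: str) -> bool:
        """Return True if the call may proceed, False if it is intercepted."""
        if self.learning:
            self.allowed.add(syscall)   # controlled environment: record normal behavior
            return True
        return syscall in self.allowed  # enforcement: block calls never seen in training

    def train(self, trace: Iterable[str]) -> None:
        for call in trace:
            self.observe(call)
        self.learning = False
```

After training on a trace such as `open`, `read`, `write`, `close`, a later attempt to call something unexpected (for example, a debugging or privilege-related call) is intercepted.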

Just as there are many forms of cryptography, there are many uses beyond creating ciphertext. As we have seen, cryptography is used to create message digests to indicate whether information has been tampered with, and in authentication of users by comparing secret passwords. Still, the concept of defense in depth requires that additional measures be used in combination. A complement to protecting integrity and authenticity of information with cryptography is the use of firewalls that can help control access to services and network components on host computers.

Administrators must carefully watch the access points to “trusted networks”; the term trusted network implies that an administrator has control over the network. In some cases, particularly in the government sector, the term is more specific and refers to a bounded collection of hosts that can only accept packets specifically addressed to them. In this environment, trusted hosts perform extra security checks to ensure that the information contained within a packet is addressed only to, and processed only by, that host.

A distrusted network might be configured with very tight constraints. Most firewalls allow for a hierarchy in which different constraints can be placed between zones, and on distrusted networks versus unknown networks. Packet filtering is a technique and a set of technologies rather than technically a firewall. Still, the technique is useful for protecting systems and networks from intrusions and for helping to defend against denial of service attacks from outside the perimeter. Monitoring using security vulnerability ontologies is also not technically a firewall, but it is increasingly used in place of, or as a complement to, firewalls, especially for networks composed of mobile devices.

Topic Questions

16.1: Revocation of a digital certificate is accomplished by using what?

16.2: A “hardened” computer that has at least two LAN cards is called what?

16.3: A combination of exterior filtering routers, proxy systems, network address translation, and interior filtering routers is characteristic of what?

16.4: RFC 5751 defines:

___ X.509 certificates

___ S/MIME

___ PKI

___ IPSec

16.5: A screening filter operates at:

___ The network layer

___ The transport layer

___ Both the network and transport layer

___ The application layer

16.6: An optimistic stance means:

___ That which is not explicitly permitted is disallowed.

___ Everything not explicitly denied is permitted.

___ No vulnerabilities are expected in a system.

___ No threats to a system are expected.

16.7: IPv6 IPSec can be retrofitted into IPv4.

___ True

___ False

16.8: A nonce is:

___ A tunneling mechanism used in virtual private networks for integrity checks

___ A secure key cipher that creates a dual-handshake

___ A symmetric cipher that creates a dual-handshake

___ A Diffie-Hellman value and random data to create a one-time use element

16.9: A dual-homed host refers to:

___ Having two network cards

___ A computer that connects to both a wired and a wireless network

___ A host that has two servers for redundancy

___ A host that has two CPUs for redundancy

16.10: Stateful packet inspection uses:

___ A physical circuit

___ A virtual circuit table

___ A cell-switching technology

___ Only a permit or deny rule

Questions for Further Study

Q16.1: Contrast CHAP with Kerberos for the purpose of authentication.

Q16.2: Distinguish between an application-layer firewall and a filtering technique.

Q16.3: Discuss the concept of false positives in relationship to firewall monitors.

Q16.4: Discuss what the concept of tunneling involves in relation to VPNs.

Q16.5: Explain how to generate a digital signature. Give an example.

Ontology-based defenses are not technically firewalls, but they can perform many of the screening and monitoring functions of firewalls.

Perimeter networks are at the boundaries of an internal network.

Screened subnet has multiple perimeters and uses proxy systems inside a trusted zone (DMZ).

Stateful packet inspection is sometimes called session-level packet filtering.

X.509 is a standard issued by the International Telecommunication Union (ITU).

References

1. Kent, S., & Atkinson, R. (1998). RFC 2401—Security architecture for the Internet protocol. Internet Engineering Task Force. Retrieved from http://www.ietf.org/rfc/rfc2401.txt

2. Harkins, D., & Carrel, D. (1998). RFC 2409—The Internet key exchange (IKE). Internet Engineering Task Force. Retrieved from http://www.ietf.org/rfc/rfc2409.txt

3. Kaufman, C., Perlman, R., & Speciner, M. (2002). Network security: Private communication in a public world. Upper Saddle River, NJ: Prentice Hall.

4. Dierks, T., & Rescorla, E. (2008). RFC 5246—The Transport Layer Security (TLS) protocol version 1.2. Internet Engineering Task Force.

5. Solomon, M. G., & Chapple, M. (2005). Information security illuminated. Sudbury, MA: Jones and Bartlett Publishers.

6. Ramsdell, B., & Turner, S. (2010). RFC 5751—Secure/Multipurpose Internet Mail Extensions (S/MIME) Version 3.2 Message Specification. Internet Engineering Task Force. Retrieved from http://tools.ietf.org/html/rfc5751

7. Straub, D. W., Goodman, S., & Baskerville, R. L. (2008). Information security: Policy, processes, and practices. Armonk, NY: Sharpe.

8. Stewart, J. M. (2011). Network security, firewalls, and VPNs. Sudbury, MA: Jones & Bartlett Learning.

9. Habraken, J. (1999). Cisco routers. Indianapolis, IN: Que Books.

10. Thomas, T. (2004). Network security: First step. Indianapolis, IN: Cisco Press.

11. Stallings, W. (2003). Network security essentials: Applications and standards. Upper Saddle River, NJ: Pearson/Prentice Hall.

12. Tjaden, B. C. (2004). Fundamentals of secure computer systems. Wilsonville, OR: Franklin, Beedle & Associates.

13. Chapman, D. B., & Zwicky, E. D. (1995). Building Internet firewalls. Sebastopol, CA: O’Reilly & Associates.

14. Goldman, J. E. (2008). Firewalls. In H. Bidgoli (Ed.), Handbook of computer networks (pp. 553–569). Hoboken, NJ: John Wiley & Sons.

15. Ford, R. (2008). Conditioned reflex and security oversights. Unpublished research presentation. Melbourne, FL: Florida Institute of Technology.

16. Zwicky, E. D., Cooper, S., & Chapman, D. B. (2000). Building Internet firewalls. Sebastopol, CA: O’Reilly Media.

17. Moreira, E., Martimiano, L., Brandao, A., & Bernardes, M. (2008). Ontologies for information security management and governance. Information Management & Computer Security, 16, 150–165.

18. Sterne, D., Balasubramanyam, P., Carman, D., Wilson, B., Talpade, R., Ko, C., … Rowe, J. (2005). A general cooperative intrusion detection architecture for MANETs, pp. 57–70. Proceedings of the Third IEEE International Workshop on Information Assurance (IWIA). Washington, DC.