Learning how to use Web performance tests to simulate user activity on your Web site

Testing the capability of your Web site to accommodate multiple simultaneous users with load testing

Understanding how to analyze the results of your Web performance tests and load tests to identify performance and scalability bottlenecks

This chapter continues coverage of the testing features of Visual Studio 2010 by describing Web performance and load tests.

With Web performance testing, you can easily build a suite of repeatable tests that can help you analyze the performance of your Web applications and identify potential bottlenecks. Visual Studio enables you to easily create a Web performance test by recording your actions as you use your Web application. In this chapter, you will learn how to create, edit, and run Web performance tests, and how to analyze the results.

Sometimes you need more flexibility than a recorded Web performance test can offer. In this chapter, you will learn how to use coded Web performance tests to create flexible and powerful Web performance tests using Visual Basic or C# and how to leverage the Web performance testing framework.

Verifying that an application is ready for production involves additional analysis. How will your application behave when many people begin using it concurrently? The load-testing features of Visual Studio enable you to execute one or more tests repeatedly, tracking the performance of the target system. The second half of this chapter examines how to load test with the Load Test Wizard, and how to use the information Visual Studio collects to identify problems before users do.

Finally, because a single machine may not be able to generate enough load to simulate the number of users an application will have in production, you'll learn how to configure your environment to run distributed load tests. A distributed load test enables you to spread the work of creating user load across multiple machines, called agents. Details from each agent are collected by a controller machine, enabling you to see the overall performance of your application under stress.

Web performance tests enable verification that a Web application's behavior is correct. They issue an ordered series of HTTP/HTTPS requests against a target Web application, and analyze each response for expected behaviors. You can use the integrated Web Test Recorder to create a test by observing your interaction with a target Web site through a browser window. Once the test is recorded, you can use that Web performance test to consistently repeat those recorded actions against the target Web application.

Web performance tests offer automatic processing of redirects, dependent requests, and hidden fields, including ViewState. In addition, coded Web performance tests can be written in Visual Basic or C#, enabling you to take full advantage of the power and flexibility of these languages.

Warning

Although you will likely use Web performance tests with ASP.NET Web applications, you are not required to do so. In fact, while some features are specific to testing ASP.NET applications, any Web application can be tested via a Web performance test, including applications based on classic ASP or even non-Microsoft technologies.

Later in this chapter, you will learn how to add your Web performance tests to load tests to ensure that a Web application behaves as expected when many users access it concurrently.

At first glance, the capabilities of Web performance tests may appear similar to those of coded user interface (UI) tests (see Chapter 15). But while some capabilities do overlap (for example, record and playback, response validation), the two types of tests are designed to achieve different testing goals and should be applied appropriately. Web performance tests should be used primarily for performance testing, and can be used as the basis for generating load tests. Coded UI tests should be used for ensuring proper UI behavior and layout. Coded UI tests cannot be easily used to conduct load testing. Conversely, while Web performance tests can be programmed to perform simple validation of responses, coded UI tests are much better suited for this task.

Before creating a Web performance test, you'll need a Web application to test. While you could create a Web performance test by interacting with any live Web site such as Microsoft.com, Facebook, or YouTube, those sites will change and will likely not be the same by the time you read this chapter. Therefore, the remainder of this chapter is based on a Web site created with the Personal Web Site Starter Kit.

The Personal Web Site Starter Kit is a sample ASP.NET application provided by Microsoft. The Personal Web Site Starter Kit first shipped with Visual Studio 2005 and ASP.NET 2.0, but there is a version which is compatible with Visual Studio 2010 and ASP.NET 4.0 at the Web site for this title. If you intend to follow along with the sample provided in this chapter, first visit www.wrox.com to download and install the Personal Web Site Starter Kit project template.

Once you have downloaded and installed the Personal Web Site Starter Kit project template, you must create an instance of this Web site. Open Visual Studio 2010 and click File

Visual Studio will then create a new Web site, with full support for users and authentication and features such as a photo album, links, and a place for a resume.

This site will become the basis of some recorded Web performance tests. Later, you will assemble these Web performance tests into a load test in order to put stress on this site to determine how well it will perform when hundreds of friends and family members converge simultaneously to view your photos.

Before you create tests for your Web site, you must create a few users for the site. You'll do this using the Web Site Administration Tool that is included with ASP.NET applications created with Visual Studio.

Select Website

Note

The first time the site is run, the application start event will also create these roles for you.

You now have two roles into which users can be placed. Click the Security tab again, and then click Create user. You will then see the window shown in Figure 13-1.

Your tests will assume the following users have been created:

Admin — In the Administrator role

Sue — In the Friends role

Daniel — In the Friends role

Andrew — In the Friends role

For purposes of this example, enter @qwerty@ for the Password of each user, and any values you want for the E-mail and Security Question fields.

It is common (but certainly not required) to run Web performance tests against a Web site hosted on the local development machine. If you are testing against a remote machine, you must create a virtual directory or Web site, and deploy your sample application. You may also choose to create a virtual directory on your local machine.

Visual Studio includes a feature called the ASP.NET Development Server. This is a lightweight Web server, similar to (but not the same as) IIS, that chooses a port and temporarily hosts a local ASP.NET application. The hosted application accepts only local requests, and is torn down when Visual Studio exits.

The Development Server defaults to selecting a random port each time the application is started. To execute Web performance tests, you'd have to manually adjust the port each time it was assigned. To address this, you have two options.

The first option is to select your ASP.NET project and choose the Properties window. Change the Use Dynamic Ports property to false, and then select a port number, such as 5000. You can then hard-code this port number into your local Web performance tests.

The second (and more flexible) option is to use a special value, called a context parameter, which will automatically adjust itself to match the server, port, and directory of the target Web application. You'll learn how to do this shortly.

Later in this chapter, you'll see that, unlike Web performance tests, load tests are typically run against sites hosted on machines other than those conducting tests.

There are three main methods for creating Web performance tests. The first (and, by far, the most common) is to use the Web Test Recorder. This is the recommended way of getting started with Web performance testing, and is the approach discussed in this chapter. The second method is to create a test manually, using the Web Test Editor to add each step. Using this approach is time-consuming and error-prone, but may be desired for fine-tuning Web performance tests. Finally, you can create a coded Web performance test that specifies each action via code, and offers a great deal of customization. You can also generate a coded Web performance test from an existing Web performance test. Coded Web performance tests are described later in this chapter.

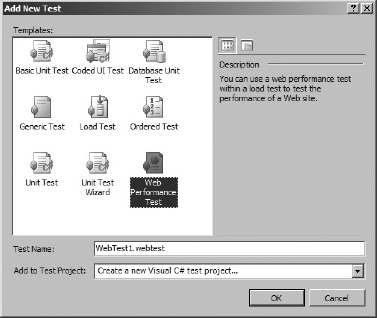

To create a new Web performance test, you may either create a test project beforehand, or allow one to be created for you. If you already have a test project, right-click it and select Add

You have several test types from which to choose. Select Web Performance Test and give your test a name. Web performance tests are stored as XML files with a .webtest extension.

Note

See Chapter 12 for more details on creating and configuring test projects.

After clicking OK, you will be prompted to provide a name for your new test project. For this example, use SampleWebTestProject and click Create.

Note

Once you have a test project, you can quickly create other Web performance tests by right-clicking on your test project and selecting Add

Once your Web performance test is created, an instance of Internet Explorer will be launched with an integrated Web Test Recorder docked window. Begin by typing the URL of the application you wish to test. For the SampleWeb application on a local machine, this will be something like http://localhost:5000/SampleWeb/default.aspx. Remember that supplying a port number is only necessary if you're using the built-in ASP.NET Development Server, and is generally not necessary when using IIS.

The ASP.NET Development Server must also be running before you can navigate to your site. If it isn't already running (as indicated by an icon in the taskbar notification area), you can start it by selecting your SampleWeb project in Visual Studio and pressing Ctrl + F5. This will build and launch your Personal Web Site project in a new browser instance. Take note of the URL being used, including the port number. You may now close this new browser instance (the Development Server will keep running) and return to the browser instance that was launched when you created a new Web test (with the Web Test Recorder docked window). Enter the URL for your Personal Web Site into this browser instance. Be sure to include the default.aspx portion of the URL (using the pattern shown in the previous paragraph).

Note

If you don't see the Web Test Recorder within Internet Explorer at this time, then you might be encountering one of the known issues documented at Mike Taute's blog. See http://tinyurl.com/9okwqp for a list of troubleshooting steps and possible fixes.

Recording a Web performance test is straightforward. Using your Web browser, simply use the Web application as if you were a normal user. Visual Studio automatically records your actions, saving them to the Web performance test.

First, log in as the Admin user (but do not check the "Remember me next time" option). The browser should refresh, showing a "Welcome Admin!" greeting. This is only a short test, so click Logout at the upper-right corner.

Your browser should now appear as shown in Figure 13-3. The steps have been expanded so you can see the details of the Form Post Parameters that were recorded automatically for you.

You'll learn more about these later in this chapter, but, for now, notice that the second request automatically includes ViewState, as well as the Username and Password form fields you used to log in.

Note

The Web Test Recorder will capture any HTTP/HTTPS traffic sent or received by your instance of Internet Explorer as soon as it is launched. This includes your browser's home page, and may include certain browser add-ins and toolbars that send data. For pristine recordings, you should set your Internet Explorer home page to be blank, and disable any add-ins or toolbars that generate excess noise.

The Web Test Recorder provides several options that may be useful while recording. The Pause button in the upper-left corner temporarily suspends recording and timing of your interaction with the browser, enabling you to use the application or get a cup of coffee without affecting your Web performance test. You will learn more about the importance of timing of your Web performance test later, as this can affect playback conditions. Click the X button if you want to clear your recorded list. The other button, Add a Comment, enables you to add documentation to your Web performance test, perhaps at a complex step. These comments are very useful when you convert a Web performance test to a coded Web performance test, as you'll see later.

Note

Calls to Web pages are normally composed of a main request followed by a number of dependent requests. These dependent requests are sent separately to obtain items such as graphics, script sources, and stylesheets. The Web Test Recorder does not display these dependent requests explicitly while recording. You'll see later that all dependent requests are determined and processed automatically when the Web test is run.
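To make the idea of dependent requests concrete, the following Python sketch scans an HTML response for images, scripts, and stylesheets and resolves each against the page URL. It is an illustration only, not how Visual Studio implements this; the class name, the sample page, and the port 5000 URL are assumptions chosen for the example.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class DependentRequestFinder(HTMLParser):
    """Collects URLs a browser would fetch after the main page (illustrative)."""

    # Tag name -> attribute that points at a dependent resource.
    RESOURCE_ATTRS = {"img": "src", "script": "src", "link": "href"}

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.dependents = []

    def handle_starttag(self, tag, attrs):
        wanted = self.RESOURCE_ATTRS.get(tag)
        if wanted is None:
            return
        attr_map = dict(attrs)
        # Only stylesheet links count; other <link> elements are skipped.
        if tag == "link" and (attr_map.get("rel") or "").lower() != "stylesheet":
            return
        value = attr_map.get(wanted)
        if value:
            self.dependents.append(urljoin(self.base_url, value))


def find_dependent_requests(base_url, html):
    """Return the absolute URLs of resources referenced by the page."""
    finder = DependentRequestFinder(base_url)
    finder.feed(html)
    return finder.dependents


# Hypothetical page content used only for this example.
SAMPLE_PAGE = """
<html><head>
  <link rel="stylesheet" href="styles/site.css">
  <script src="/SampleWeb/scripts/menu.js"></script>
</head><body><img src="images/logo.png"></body></html>
"""

if __name__ == "__main__":
    page_url = "http://localhost:5000/SampleWeb/default.aspx"
    for url in find_dependent_requests(page_url, SAMPLE_PAGE):
        print(url)
```

One main request therefore fans out into several follow-up requests, which is why a recorded test with only three steps can still produce a longer list of requests in its results.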

When you're finished recording your Web performance test, click Stop and the browser will close, displaying the Web Test Editor with your recorded Web performance test, as shown in Figure 13-4.

The Web Test Editor displays your test as a series of requests to be sent to the Web application. The first request is the initial page being loaded. The second request is the login request being sent. And the third request is the logout request.

Frequently, you'll need to use the Web Test Editor to change settings or add features to the tests you record. This may include adding validation, extracting data from Web responses, and reading data from a source. These topics are covered later in this chapter, but, for now, you'll use this test as recorded.

You may recall from the earlier section "Configuring the Sample Application for Testing," that using the ASP.NET Development Server, while convenient, poses a slight challenge because the port it uses is selected randomly with each run. While you could set your Web site to use a static port, there is a better solution.

Using the Web Test Editor, click on the toolbar button labeled Parameterize Web Servers. (You can hover your mouse cursor over each icon to see the name of each command.) You could also right-click on the Web test name and choose Parameterize Web Servers. In the resulting dialog, click the Change button. You will see the Change Web Server dialog, shown in Figure 13-5.

Use this dialog to configure your Web performance test to target a standard Web application service (such as IIS), or to use the ASP.NET Development Server. In this example, you are using the Development Server, so choose that option. The rest of the parameters are provided for you by default. The Web application root in this case is simply the name of the site, /SampleWeb. Click OK twice.

You will notice the Web Test Editor has automatically updated all request entries, replacing the static Web address with a reference to this context parameter, using the syntax {{WebServer1}}. In addition, the context parameter WebServer1 has been added at the bottom of the Web performance test under Context Parameters. (You'll see later in this chapter the effect of this on the sample Web performance test in Figure 13-10.)
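The double-brace syntax behaves like simple token substitution. The Python sketch below shows the idea under stated assumptions: the `resolve` function and the value assigned to WebServer1 are hypothetical, and this is not the Web performance testing framework's own code.

```python
import re

# Matches a {{Name}} token and captures the name between the braces.
PARAM_PATTERN = re.compile(r"\{\{([^{}]+)\}\}")


def resolve(url_template, context):
    """Replace each {{Name}} token with the matching context value."""
    def lookup(match):
        name = match.group(1)
        if name not in context:
            raise KeyError("context parameter not set: " + name)
        return context[name]

    return PARAM_PATTERN.sub(lookup, url_template)


# Assumed value for illustration; the real value is supplied at run time.
context = {"WebServer1": "http://localhost:5000/SampleWeb"}
print(resolve("{{WebServer1}}/default.aspx", context))
```

Because every request refers to the token rather than to a literal address, changing the one context value retargets the whole test.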

Note

Context parameters (which are named variables that are available to each step in a Web performance test) are described in the section "Extraction Rules and Context Parameters," later in this chapter.

Now, you can run the Web performance test and Visual Studio will automatically find and connect to the port and address necessary when the ASP.NET Development Server is started. If the ASP.NET Development Server is not started, it will be launched automatically. If you have more than one target server or application, you can repeat this process as many times as necessary, creating additional context parameters.

Before you run a Web performance test, you may wish to review the settings that will be used for the test's runs. Choose Test

The option "Fixed run count" enables you to specify the number of times your Web performance tests will be executed when included in a test run. Running your test a few times (for example, three to ten times) can help eliminate errant performance timings caused by system issues on the client or server, and can help you derive a better estimate for how your Web site is actually performing. Note that you should not enter a large number here to simulate load through your Web performance test. Instead, you will want to create a load test (discussed later in this chapter) referencing your Web performance test. Also, if you assign a data source to your Web performance test, you may instead choose to run the Web performance test one time per entry in the selected data source. Data-driven Web performance tests are examined in detail later in this chapter.
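To see why a handful of runs gives a steadier estimate than a single run, consider the small Python sketch below. The timings are invented for illustration, and the `summarize` helper is hypothetical; it simply contrasts the mean with the median when one run is disturbed.

```python
from statistics import mean, median

# Hypothetical response times (seconds) from five runs of the same test.
# The fourth run was disturbed by an unrelated spike on the machine.
timings = [0.42, 0.40, 0.44, 2.90, 0.41]


def summarize(samples):
    """Return the mean and median of the samples, rounded to milliseconds."""
    return {
        "mean": round(mean(samples), 3),
        "median": round(median(samples), 3),
    }


print(summarize(timings))
```

With these sample values, the single slow run pulls the mean well above the typical response time, while the median stays close to the other four runs.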

The browser type setting enables you to simulate using one of a number of browsers as your Web performance test's client. This will automatically set the user agent field for requests sent by the Web performance test to simulate the selected browser. By default, this will be Internet Explorer, but you may select other browsers (such as Netscape or a Smartphone).

Note

Changing the browser type will not help you determine if your Web application will render as desired in a given browser type, since Web performance tests only examine HTTP/HTTPS responses and not the actual rendering of pages. Changing the browser type is only important if the Web application being tested is configured to respond differently based on the user agent sent by the requesting client. For example, a Web application may send a more lightweight user interface to a mobile device than it would to a desktop computer.

Note

If you want to test more than one browser type, you'll need to run your Web performance test multiple times, selecting a different browser each time. However, you can also add your Web performance test to a load test and choose your desired browser distributions. This will cause each selected type to be simulated automatically. You'll learn how to do this later in this chapter in the section "Load Tests."

The final option here, "Simulate think times," enables the use of delays in your Web performance test to simulate the normal time taken by users to read content, modify values, and decide on actions. When you recorded your Web performance test, the time it took for you to submit each request was recorded as the "think time" property of each step. If you turn this option on, that same delay will occur between the requests sent by the Web performance test to the Web application. Think times are disabled by default, causing all requests to be sent as quickly as possible to the Web server, resulting in a faster test. Later in this chapter, you will see that think times serve an important role in load tests.
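The effect of the think time setting can be sketched in a few lines of Python. This is a simplified model, not the test engine itself: the step names, the recorded delays, and the choice to pause after each step are all assumptions made for the example. The `sleep` argument is injectable so the sketch can be exercised without actually waiting.

```python
import time

# Hypothetical recorded steps: (description, think time in seconds).
RECORDED_STEPS = [
    ("Initial site request", 0.0),
    ("Login", 8.0),
    ("Logout", 3.0),
]


def play_back(steps, simulate_think_times, send, sleep=time.sleep):
    """Send each step; pause for its think time only when asked to.

    Returns the total delay introduced by think times.
    """
    total_delay = 0.0
    for name, think_time in steps:
        send(name)
        if simulate_think_times and think_time > 0:
            sleep(think_time)
            total_delay += think_time
    return total_delay


if __name__ == "__main__":
    # Record the pauses instead of sleeping, so the demo finishes instantly.
    pauses = []
    delay = play_back(RECORDED_STEPS, True, print, pauses.append)
    print("Total think time:", delay, "seconds")
```

With think times off, the same three requests are sent back to back, which is why the test finishes faster.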

Visual Studio also allows you to emulate different network speeds for your tests. From within Test Settings, select "Data and Diagnostics" on the left. Enable the Network Emulation adapter and click Configure. From here you can select a variety of network speeds (such as a dial-up 56K connection) to examine the effect that slower connection speeds have on your Web application.

Note

Note that these settings affect every run of this Web performance test. These settings are ignored, however, when performing a load test. Later in this chapter, you will see that load tests have their own mechanism for configuring settings such as browser type, network speed, and the number of times a test should be run.

To run a Web performance test, click the Run button (the leftmost button on the Web Test Editor toolbar, as shown in Figure 13-4). As with all other test types in Visual Studio, you can use the Test Manager and Test View windows to organize and execute tests. Chapter 12 provides full details on these windows.

Note

You can also run Web performance tests from the command line. See the section "Command-Line Test Execution," later in this chapter.

When the test run is started, a window specific to that Web performance test execution will appear. If you are executing your Web performance test from the Web Test Editor window, you must click the Run button in this window to launch the test. The results will automatically be displayed, as shown in Figure 13-7. You may also choose to step through the Web performance test, one request at a time, by choosing Run Test (Pause Before Starting), available via the drop-down arrow attached to the Run button.

Note that if you choose to run your Web performance tests from Test View or Test Manager, the results will be summarized in the Test Results window, docked at the bottom of the screen. To see each Web performance test's execution details, as shown in Figure 13-7, double-click on the Web performance test's entry in the Test Results window.

This window displays the results of all interactions with the Web application. A toolbar, the overall test status, and two hyperlinked options are shown at the top. The first will rerun the Web performance test and the second allows you to change the browser type via the Web Test Run Settings dialog.

Note

Changes made in this dialog will only affect the next run of the Web performance test and will not be saved for later runs. To make permanent changes, modify the test settings using Test

Below that, each of the requests sent to the application is shown. You can expand each top-level request to see its dependent requests. These are automatically handled by the Web performance test system and can include calls to retrieve graphics, script sources, cascading stylesheets, and more.

Each item in this list shows the request target, as well as the response's status, time, and size. A green check indicates a successful request and response, whereas a red icon indicates failure.

Note

Identifying which requests failed (and why) can be difficult if you have a large number of requests. Unfortunately, there is no summary view to see only failed requests with the reason for failure (for example, violating a validation rule). For large Web performance tests, you must scroll through all of the requests and open failed requests to see failure details.

The lower half of the window enables you to see full details for each request. The first tab, Web Browser, shows you the rendered version of the response. As you can see in Figure 13-7, the response includes "Welcome Admin!" text, indicating that you successfully logged in as the Admin account.

The Request tab shows the details of what was supplied to the Web application, including all headers and any request body, such as might be present when an HTTP POST is made.

Similarly, the Response tab shows all headers and the body of the response sent back from the Web application. Unlike the Web Browser tab, this detail is shown textually, even when binary data (such as an image) is returned.

The Context tab lists all of the context parameters and their values at the time of the selected request. Finally, the Details tab shows the status of any assigned validation and extraction rules. This tab also shows details about any exception thrown during that request. Context parameters and rules are described later in this chapter.

You'll often find that a recorded Web performance test is not sufficient to fully test your application's functionality. You can use the Web Test Editor, as shown in Figure 13-4, to further customize a Web performance test, adding comments, extraction rules, data sources, and other properties.

Warning

It is recommended that you run a recorded Web performance test once before attempting to edit it. This will verify that the test was recorded correctly. If you don't do this, you might not know whether a test is failing because it wasn't recorded correctly or because you introduced a bug through changes in the Web Test Editor.

From within the Web Test Editor, right-click on a request and choose Properties. If the Properties window is already displayed, simply selecting a request will show its properties. You will be able to modify settings such as cache control, target URL, and whether the request automatically follows redirects.

The Properties window also offers a chance to modify the think time of each request. For example, perhaps a co-worker dropped by with a question while you were recording your Web performance test and you forgot to pause the recording. Use the Think Time property to adjust the delay to a more realistic value.

Comments are useful for identifying the actions of a particular section of a Web performance test. In addition, when converting your Web performance test to a coded Web performance test, your comments will be preserved in code.

Because the requests in this example all refer to the same page, it is helpful to add comments to help distinguish them. Add a comment by right-clicking on the first request and choosing Insert Comment. Enter Initial site request. In the same way, add the comment Login to the second request and Logout to the third request.

A transaction is used to monitor a group of logically connected steps in your Web performance test. A transaction can be tracked as a unit, giving details such as number of times invoked, request time, and total elapsed time.

Note

Don't confuse Web performance test transactions with database transactions. While both are used for grouping actions, database transactions offer additional features beyond those of Web performance test transactions.

To create a transaction, right-click a request and select Insert Transaction. You will be prompted to name the transaction and to select the start and end request from drop-down lists.
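Conceptually, a transaction is a named range of requests whose timings are reported together. The Python sketch below illustrates that grouping; the request labels, the millisecond timings, and the `transaction_time` helper are hypothetical and do not reflect how Visual Studio stores or computes transaction data.

```python
# Hypothetical per-request timings in milliseconds, in recorded order.
REQUESTS = [
    ("Initial site request", 310),
    ("Login", 520),
    ("Logout", 180),
]


def transaction_time(requests, start, end):
    """Total time for the requests from start to end, inclusive."""
    names = [name for name, _ in requests]
    first = names.index(start)
    last = names.index(end)
    if last < first:
        raise ValueError("end request comes before start request")
    return sum(elapsed for _, elapsed in requests[first:last + 1])


# A transaction covering the login and logout steps only.
print(transaction_time(REQUESTS, "Login", "Logout"))
```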

Transactions are primarily used when running Web performance tests under load with a load test. You will learn more about viewing transaction details in the section "Viewing and Interpreting Load Test Results," later in this chapter.

Extraction rules are used to retrieve specific data from a Web response. This data is stored in context parameters, which live for the duration of the Web performance test. Context parameters can be read from and written to by any request in a Web performance test. For example, you could use an extraction rule to retrieve an order confirmation number, storing that in a context parameter. Then, subsequent steps in the test could access that order number, using it for verification or supplying it with later Web requests.
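The order confirmation scenario can be sketched in Python as follows. This is an illustration of the concept rather than the framework's API: the response text, the regular expression, the parameter name OrderNumber, and the follow-up URL are all invented for the example.

```python
import re


def extract_to_context(response_body, pattern, parameter_name, context):
    """Store the first capture group in the context; report success."""
    match = re.search(pattern, response_body)
    if match is None:
        return False
    context[parameter_name] = match.group(1)
    return True


# Hypothetical response from an order confirmation page.
confirmation_page = "<p>Thank you! Your order number is <b>A-10482</b>.</p>"

context = {}
found = extract_to_context(
    confirmation_page,
    r"order number is <b>([\w-]+)</b>",
    "OrderNumber",
    context,
)

# A later step reads the stored value to build its own request.
next_url = "/orders/status.aspx?id=" + context["OrderNumber"]
print(found, next_url)
```

The extraction step and the step that consumes the value never talk to each other directly; the context is the only thing they share.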

Note

Context parameters are similar in concept to the HttpContext.Items collection from ASP.NET. In both cases, you can add names and values that can be accessed by any subsequent step. Whereas HttpContext.Items entries are valid for the duration of a single page request, Web performance test context parameters are accessible throughout a single Web performance test run.

Referring to Figure 13-4, notice that the first request has an Extract Hidden Fields entry under Extraction Rules. This was added automatically when you recorded the Web performance test because the system recognized hidden fields in the first form you accessed. Those hidden fields are now available to subsequent requests via context parameters.
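As a rough illustration of what hidden field extraction involves, the Python sketch below collects every hidden input from a form and stores each value in a context dictionary. The form markup, the field values, and the `$HIDDEN1.` prefix used for the parameter names are assumptions for this example; it is not the rule's actual implementation.

```python
from html.parser import HTMLParser


class HiddenFieldExtractor(HTMLParser):
    """Collects name/value pairs from <input type="hidden"> elements."""

    def __init__(self):
        super().__init__()
        self.fields = {}

    def handle_starttag(self, tag, attrs):
        if tag != "input":
            return
        attr_map = dict(attrs)
        is_hidden = (attr_map.get("type") or "").lower() == "hidden"
        if is_hidden and attr_map.get("name"):
            self.fields[attr_map["name"]] = attr_map.get("value") or ""


def extract_hidden_fields(html, context, prefix="$HIDDEN1."):
    """Copy each hidden field into the context under a prefixed name."""
    extractor = HiddenFieldExtractor()
    extractor.feed(html)
    for name, value in extractor.fields.items():
        context[prefix + name] = value
    return context


# Hypothetical login form; real ViewState values are much longer.
FORM = (
    '<form><input type="hidden" name="__VIEWSTATE" value="dDwtMTA4" />'
    '<input type="text" name="UserName" />'
    '<input type="hidden" name="__EVENTVALIDATION" value="abc123" /></form>'
)

print(extract_hidden_fields(FORM, {}))
```

The visible UserName field is ignored; only the hidden fields are carried forward so that the next request can post them back unchanged.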

A number of context parameters are set automatically when you run a Web performance test, including the following:

$TestDir — The working directory of the Web performance test.

$WebTestIteration — The current run number. For example, this would be useful if you selected more than one run in the Test Settings and needed to differentiate the test runs.

$ControllerName and $AgentName — Machine identifiers used when remotely executing Web performance tests. You'll learn more about this topic later in this chapter.

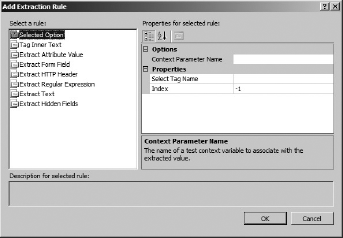

To add an extraction rule to a Web performance test, right-click on any request and select Add Extraction Rule. The dialog shown in Figure 13-8 will appear.

The built-in extraction rules can be used to extract any attribute, HTTP header, or response text. Use Extract Regular Expression to retrieve data that matches the supplied expression. Use Extract Hidden Fields to easily find and return a value contained in a hidden form field of a response. Extracted values are stored in context parameters whose names you define in the properties of each rule.

You can add your own custom extraction rules by creating classes that derive from the ExtractionRule class found in the Microsoft.VisualStudio.TestTools.WebTesting namespace.

Generally, checking for valid Web application behavior involves more than just getting a response from the server. You must ensure that the content and behavior of that response is correct. Validation rules offer a way to verify that those requirements are met. For example, you may wish to verify that specific text appears on a page after an action, such as adding an item to a shopping cart. Validation rules are attached to a specific request, and will cause that request to show as failed if the requirement is not satisfied.

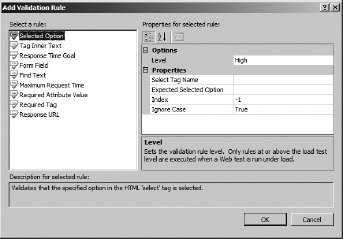

Let's add a validation rule to the test to ensure that the welcome message is displayed after you log in. Right-click on the second request and choose Add Validation Rule. You will see the dialog shown in Figure 13-9.

As with extraction rules, you can also create your own custom validation rules by inheriting from the base ValidationRule class, found in the WebTestFramework assembly, and have them appear in this dialog. Choose the Find Text rule and set the Find Text value to Welcome Admin. Set Ignore Case to false, and Pass If Text Found to true. This rule will search the Web application's response for a case-sensitive match on that text and will pass if found. Click OK and the Web performance test should appear as shown in Figure 13-10.

Verify that this works by running or stepping through the Web performance test. You should see that this test actually does not work as expected. You can use the details from the Web performance test's results to find out why.

View the Details tab for the second request. You'll see that the Find Text validation rule failed to find a match. Looking at the text of the response on the Response tab shows why: the response contains a tab, rather than a space, between "Welcome" and "Admin". You will need to modify the validation rule to match this text.

To fix this, you could simply replace the space in the Find Text parameter with a tab. However, you could use a regular expression as well. First, change the Find Text parameter to Welcome\s+Admin. This indicates you expect one or more whitespace characters of any kind between the words, not just a space character. To enable that property to behave as a regular expression, set the Use Regular Expression parameter to true.

Save your Web performance test and rerun it. The Web performance test should now pass.
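The difference between the literal search and the regular expression Welcome\s+Admin can be checked with a short Python sketch. The `find_text` function below is a hypothetical stand-in that mirrors the rule's parameters by name; it is not the Find Text rule itself, and the sample response is invented.

```python
import re


def find_text(response_body, text, ignore_case=False,
              use_regex=False, pass_if_found=True):
    """Return True when the rule passes for the given response."""
    # Treat the text literally unless a regular expression was requested.
    pattern = text if use_regex else re.escape(text)
    flags = re.IGNORECASE if ignore_case else 0
    found = re.search(pattern, response_body, flags) is not None
    return found == pass_if_found


# Hypothetical response fragment with a tab between the two words.
response = "<span>Welcome\tAdmin!</span>"

print(find_text(response, "Welcome Admin"))                     # literal space
print(find_text(response, r"Welcome\s+Admin", use_regex=True))  # any whitespace
```

The first call fails because a literal space does not match a tab; the second passes because \s+ accepts any run of whitespace.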

Note

Bear in mind that the validation logic available within Web performance tests is not as sophisticated as that of coded UI tests (see Chapter 15). With coded UI tests, it is easier to confirm that a given string appears in the right location of a Web page, whereas with Web performance test validation rules, you are generally just checking to confirm that the string appears somewhere in the response.

The functionality that extraction and validation rules provide comes at the expense of performance. If you wish to call your Web performance test from a load test, you might wish to improve performance at the expense of ignoring a number of extraction or validation rules.

Each rule has an associated property called Level. This can be set to Low, Medium, or High. When you create a load test, you can similarly specify a validation level of Low, Medium, or High. This setting specifies the maximum level of rule that will be executed when the load test runs.

For example, a validation level of Medium will run rules with a level of Low or Medium, but will exclude rules marked as High.
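The filtering just described amounts to a simple comparison between each rule's level and the load test's validation level. The sketch below illustrates that comparison in Python. The Level enum, the rules_to_run function, and the rule names are hypothetical illustrations, not part of the Web testing framework.

```python
from enum import IntEnum


class Level(IntEnum):
    """Ordered levels, so that LOW < MEDIUM < HIGH."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def rules_to_run(rules, load_test_level):
    """Return the names of rules whose level does not exceed the load test's level."""
    return [name for name, level in rules if level <= load_test_level]


# Hypothetical rules, each tagged with its Level property.
rules = [
    ("response status check", Level.LOW),
    ("find welcome text", Level.MEDIUM),
    ("full form field check", Level.HIGH),
]

for load_test_level in Level:
    print(load_test_level.name, "->", rules_to_run(rules, load_test_level))
```

A load test at the High level therefore runs every rule, while one at the Low level runs only the cheapest checks.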

You can satisfy many testing scenarios using the techniques described so far, but you can go beyond those techniques to easily create data-driven Web performance tests. A data-driven Web performance test connects to a data source and retrieves a set of data. Pieces of that data can be used in place of static settings for each request.

For example, in your Web performance test, you may wish to ensure that the login and logout processes work equally well for all of the configured users. You'll learn how to do this next.

You can configure your Web performance test to connect to a database (for example, SQL Server or Oracle), a comma-separated value (CSV) file, or an XML file. For this example, a CSV file will suffice. Using Notepad, create a new file and insert the following data:

    Username,Password
    Admin,@qwerty@
    Sue,@qwerty@
    Daniel,@qwerty@
    Andrew,@qwerty@

Save this file as Credentials.csv.

The next step in creating a data-driven Web performance test is to specify your data source. Using the Web Test Editor, you can either right-click on the top node of your Web performance test and select Add Data Source, or click the Add Data Source button on the toolbar.

In the New Test Data Source Wizard, select CSV File and click Next. Browse to the Credentials.csv file you just created and click Next. You will see a preview of the data contained in this file. Note that the first row of your file was converted to the appropriate column headers for your data table. Click Finish. You will be prompted to make the CSV file a part of your test project. Click Yes to continue. When the data source is added, you will see it at the bottom of your Web performance test in the Web Test Editor, and the Credentials.csv file will be added to the Solution Explorer.

Expand the data source to see that there is a new table named Credentials in your Web Test Editor. Click this table and view the Properties window. Notice that one of the settings is Access Method. This has three valid settings:

Sequential — Reads each record in first-to-last order from the source. This will loop back to the first record and continue reading if the test uses more iterations than the source has records.

Random — Reads each record randomly from the source and, like sequential access, will continue reading as long as necessary.

Unique — Reads each record in first-to-last order, but will do so only once.

Use this setting to determine how the data source will feed rows to the Web performance test. For this test, choose Sequential.
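One way to picture the three access methods is as three different iterators over the same list of rows. The following Python sketch is only an illustration of the behavior described above; the function names are hypothetical and do not correspond to anything in Visual Studio.

```python
import itertools
import random


def sequential(rows):
    """Yield rows first to last, looping back to the start indefinitely."""
    return itertools.cycle(rows)


def random_access(rows, rng=None):
    """Yield a randomly chosen row for as long as the caller keeps asking."""
    rng = rng or random.Random()
    while True:
        yield rng.choice(rows)


def unique(rows):
    """Yield each row once, first to last, then stop."""
    return iter(rows)


users = ["Admin", "Sue", "Daniel", "Andrew"]

# Six iterations against four rows: sequential access wraps around.
print(list(itertools.islice(sequential(users), 6)))

# Unique access is exhausted after one pass through the rows.
print(list(unique(users)))
```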

Several types of values can be bound to a data source, including form post and URL query parameters' names and values, HTTP headers, and file upload field names. Expand the second request in the Web Test Editor, expand Form Post Parameters, click the parameter for UserName, and view the Properties window. Click the down arrow that appears in the Value box.

You will then see the data-binding selector, as shown in Figure 13-11.

Expand your data source, choose the Credentials table, and then click on the Username column to bind to the value of this parameter. A database icon will appear in that property, indicating that it is a bound value. You can select the Unbind entry to remove any established data binding. Repeat this process for the Password parameter.

Note

When binding to a database you may choose to bind to values from either a table or a view. Binding to the results of stored procedures is not supported for Web performance tests.

Before you run your Web performance test, you must indicate that you want to run the test one time per row of data in the data source. Refer to the earlier section "Test Settings" and Figure 13-6. In the Web Tests section of your test settings, choose the "One run per data source row" option.

The next time you run your Web performance test, it will automatically read from the target data source, supplying the bound fields with data. The test will repeat one time for each row of data in the source. Your test should now fail, however, since you are still looking for the text "Welcome Admin" to appear after the login request is sent.

To fix this, you must modify your validation rule to look for welcome text corresponding to the user being authenticated. Select the Find Text validation rule and view the Properties window. Change the Find Text value to Welcome\s+{{DataSource1.Credentials#csv.Username}} and re-run your test. The double braces mark a bound value that is replaced with the current row's Username before the rule runs. Your test should now pass again.
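The combination of one run per data source row and a bound Find Text value can be sketched outside Visual Studio as follows. This Python example is an approximation under stated assumptions: the bind helper imitates the double-brace substitution, and fake_login_response is a hypothetical stand-in for the Web application's reply.

```python
import csv
import io
import re

# Same content as Credentials.csv, inlined so the sketch is self-contained.
CREDENTIALS_CSV = """Username,Password
Admin,@qwerty@
Sue,@qwerty@
Daniel,@qwerty@
Andrew,@qwerty@
"""

FIND_TEXT = r"Welcome\s+{{DataSource1.Credentials#csv.Username}}"


def bind(template, context):
    """Replace each {{name}} placeholder with the matching context value."""
    return re.sub(r"\{\{(.+?)\}\}", lambda m: context[m.group(1)], template)


def fake_login_response(username):
    """Hypothetical response body; a tab separates the two words."""
    return f"<h1>Welcome\t{username}</h1>"


results = []
for row in csv.DictReader(io.StringIO(CREDENTIALS_CSV)):
    # One iteration per row, with the row's columns exposed as context values.
    context = {"DataSource1.Credentials#csv." + col: val for col, val in row.items()}
    pattern = bind(FIND_TEXT, context)
    passed = re.search(pattern, fake_login_response(row["Username"])) is not None
    results.append((row["Username"], passed))

print(results)
```

Note that the bound value is inserted into the pattern as-is in this sketch, so a username containing regular expression metacharacters would change the meaning of the pattern.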

As flexible as Web performance tests are, there may be times when you need more control over the actions that are taken. Web performance tests are stored as XML files with .webtest extensions. Visual Studio uses this XML to generate the code that is executed when the Web performance test is run. You can tap into this process by creating a coded Web performance test, enabling you to execute a test from code instead of from XML.

Coded Web performance tests enable you to perform actions not possible with a standard Web performance test. For example, you can perform branching based on the responses received during a Web performance test or based on the values of a data-bound test. A coded Web performance test is limited only by your ability to write code. The language of the generated code is determined by the language of the test project that contains the source Web performance test.

A coded Web performance test is a class that inherits from either a base WebTest class for C# tests, or from a ThreadedWebTest base for Visual Basic tests. These classes can be found in the Microsoft.VisualStudio.TestTools.WebTesting namespace. All of the features available to Web performance tests that you create via the IDE are implemented in classes and methods contained in that namespace.

Note

While you always have the option to create a coded Web performance test by hand, the most common (and the recommended) method is to generate a coded Web performance test from a Web performance test that was recorded with the Web Test Recorder and then customize the code as needed.

You should familiarize yourself with coded Web performance tests by creating a number of different sample Web performance tests through the IDE and generating coded Web performance tests from them to learn how various Web performance test actions are accomplished with code.

Using the example Web performance test, click the Generate Code button on the Web Test Editor toolbar. You will be prompted to name the generated file. Open the generated file and review the generated code.

Here is a segment of the C# code that was generated from the example Web performance test (some calls have been removed for simplicity):

    public override IEnumerator<WebTestRequest> GetRequestEnumerator()
    {
        ...
        // Initial site request
        ...
        yield return request1;
        ...
        // Login
        ...
        WebTestRequest request2 = new
            WebTestRequest((this.Context["WebServer1"].ToString() +
            "/SampleWeb/default.aspx"));
        ...
        request2.ThinkTime = 14;
        request2.Method = "POST";
        FormPostHttpBody request2Body = new FormPostHttpBody();
        ...
        request2Body.FormPostParameters.Add(
            "ctl00$Main$LoginArea$Login1$UserName",
            this.Context["DataSource1.Credentials#csv.Username"].ToString());
        request2Body.FormPostParameters.Add(
            "ctl00$Main$LoginArea$Login1$Password",
            this.Context["DataSource1.Credentials#csv.Password"].ToString());
        ...
        if ((this.Context.ValidationLevel >=
            Microsoft.VisualStudio.TestTools.WebTesting.ValidationLevel.High))
        {
            ValidationRuleFindText validationRule3 = new ValidationRuleFindText();
            validationRule3.FindText = ("Welcome\\s+" +
                this.Context["DataSource1.Credentials#csv.Username"].ToString());
            validationRule3.IgnoreCase = false;
            validationRule3.UseRegularExpression = true;
            validationRule3.PassIfTextFound = true;
            // Register the rule so that it runs when the response arrives
            request2.ValidateResponse +=
                new EventHandler<ValidationEventArgs>(validationRule3.Validate);
        }
        ...
        yield return request2;
        ...
        // Logout
        ...
        WebTestRequest request3 = new
            WebTestRequest((this.Context["WebServer1"].ToString() +
            "/SampleWeb/default.aspx"));
        request3.Method = "POST";
        ...
        yield return request3;
        ...
    }

This GetRequestEnumerator method uses the yield statement to provide WebTestRequest instances, one per HTTP request, back to the Web test system.

Visual Basic test projects generate slightly different code than C# tests because Visual Basic does not currently support iterators and the yield statement. Instead of having a GetRequestEnumerator method that yields WebTestRequest instances one at a time, there is a Run subroutine that uses the base ThreadedWebTest.Send method to execute each request.
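If iterators are unfamiliar, the following Python analogy may help show how a yield-based test hands requests to the engine one at a time, and how that leaves room for branching between requests. Everything here is a hypothetical stand-in: the Request class, the run function, and the admin.aspx page are invented for illustration and are not part of the WebTesting namespace.

```python
from dataclasses import dataclass


@dataclass
class Request:
    """Simplified stand-in for a Web test request."""
    url: str
    method: str = "GET"
    think_time: int = 0


def get_request_enumerator(context):
    """Yield requests one at a time, as the C# iterator does."""
    base = context["WebServer1"]
    yield Request(base + "/SampleWeb/default.aspx")                      # initial request
    yield Request(base + "/SampleWeb/default.aspx", "POST", think_time=14)  # login
    # Branching on a data-bound value: only possible in a coded test.
    if context["DataSource1.Credentials#csv.Username"] == "Admin":
        yield Request(base + "/SampleWeb/admin.aspx")                    # hypothetical page
    yield Request(base + "/SampleWeb/default.aspx", "POST")              # logout


def run(context, send):
    """Stand-in for the engine: pull a request, send it, then pull the next."""
    for request in get_request_enumerator(context):
        send(request)


sent = []
run({"WebServer1": "http://localhost",
     "DataSource1.Credentials#csv.Username": "Admin"}, sent.append)
print([r.url for r in sent])
```

The generator body does not advance past a yield until the caller asks for the next request, which is what allows the decision about the next request to depend on what has already happened.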

Regardless of the language used, notice that the methods and properties are very similar to what you have already seen when creating and editing Web performance tests in the Web Test Editor. You'll also notice that the comments you added in the Web Test Editor appear as comments in the code, making it very easy to identify where each request begins.

Taking a closer look, you see that the Find Text validation rule you added earlier is now specified with code. First, the code checks the ValidationLevel context parameter to verify that you're including rules marked with a level of High. If so, the ValidationRuleFindText class is instantiated and the parameters you specified in the IDE are now set as properties of that instance. Finally, the instance's Validate method is registered with the request's ValidateResponse event, ensuring that the validator will execute at the appropriate time.

You can make any changes you wish and simply save the code file and rebuild. Your coded Web performance test will automatically appear alongside your other tests in Test Manager and Test View.

Note

Another advantage of coded Web performance tests is protocol support. While normal Web performance tests can support both HTTP and HTTPS, they cannot use alternative protocols. A coded Web performance test can be used for other protocols, such as FTP.

For detailed descriptions of the classes and members available to you in the WebTesting namespace, see Visual Studio's Help topic titled "Microsoft.VisualStudio.TestTools.WebTesting Namespace."

Load tests are used to verify that your application will perform as expected while under the stress of multiple concurrent users. You configure the levels and types of load you wish to simulate and then execute the load test. A series of requests will be generated against the target application, and Visual Studio will monitor the system under test to determine how well it performs.

Load testing is most commonly used with Web performance tests to conduct smoke, load, and stress testing of ASP.NET applications. However, you are certainly not limited to this. Load tests are essentially lists of pointers to other tests, and they can include any other test type except for manual tests and coded UI tests.

For example, you could create a load test that includes a suite of unit tests. You could stress-test layers of business logic and database access code to determine how that code will behave when many users are accessing it concurrently, regardless of which application uses those layers.

As another example, ordered tests can be used to group a number of tests and define a specific order in which they will run. Because tests added to a load test are executed in a randomly selected order, you may find it useful to first group them with an ordered test, and then include that ordered test in the load test. You can find more information on ordered tests in Chapter 12.

The discussion in this section describes how to create a load test using the New Load Test Wizard. You'll examine many options that you can use to customize the behavior of your load tests.

As described earlier in this chapter in the section "Web Performance Tests," a test project is used to contain your tests, and, like Web performance tests, load tests are placed in test projects. You can either use the New Test option of the Test menu and specify a new or existing test project, or you can right-click on an existing test project and choose Add

Whether from a test project or the Test menu, when you add a new load test, the New Load Test Wizard is started. This wizard will guide you through the many configuration options available for a load test.

A load test is composed of one or more scenarios. A scenario is a grouping of Web performance and/or unit tests, along with a variety of preferences for user, browser, network, and other settings. Scenarios are used to group similar tests or usage environments. For example, you may wish to create a scenario for simulating the creation and submission of an expense report by your employees, whereby your users have LAN connectivity and all use Internet Explorer 7.0.

When the New Load Test Wizard is launched, the first screen describes the load test creation process. Click Next and you will be prompted to assign a name to your load test's first scenario, as shown in Figure 13-12.

Note that the New Load Test Wizard only supports the creation of a single scenario in your load test, but you can easily add more scenarios with the Load Test Editor after you complete the wizard.

The second option on this page is to configure think times. You may recall from the earlier section "Web Performance Tests," that think time is a delay between each request, which can be used to approximate how long a user will pause to read, consider options, and enter data on a particular page. These times are stored with each of a Web performance test's requests. The think time profile panel enables you to turn these off or on.

If you enable think times, you can either use them as is, or apply a normal distribution that is centered around your recorded think times as a mean. The normal distribution is generally recommended if you want to simulate the most realistic user load, based on what you expect the average user to do. You can also configure the think time between test iterations to model a user who pauses after completing a task before moving to the next task.
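As a rough illustration of what a normal distribution centered on a recorded think time means, consider this Python sketch. The spread used by Visual Studio is not stated here, so the relative_std_dev parameter (20 percent of the recorded value) is purely an assumption, as is the choice to clamp negative samples to zero.

```python
import random


def sample_think_time(recorded_seconds, rng, relative_std_dev=0.2):
    """Draw a think time from a normal distribution whose mean is the recorded value.

    relative_std_dev is an assumed spread, expressed as a fraction of the mean.
    """
    if recorded_seconds <= 0:
        return 0.0
    value = rng.gauss(recorded_seconds, recorded_seconds * relative_std_dev)
    return max(0.0, value)  # a think time cannot be negative


rng = random.Random(2010)
samples = [sample_think_time(14, rng) for _ in range(10_000)]
print("mean of samples:", round(sum(samples) / len(samples), 2))
```

Individual virtual users pause for slightly different lengths of time, but the average stays close to the recorded 14 seconds.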

You can click on any step on the left-hand side to jump to that page of the wizard or click Next to navigate through sequential pages.

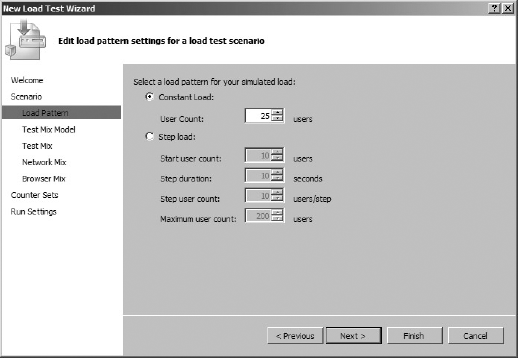

The next step is to define the load pattern for the scenario. The load pattern, shown in Figure 13-13, enables simulation of different types of user load.

In the wizard, you have two load pattern options: Constant and Step. A constant load enables you to define a number of users that will remain unchanged throughout the duration of the test. Use a constant load to analyze the performance of your application under a steady load of users. For example, you may specify a baseline test with 100 users. This load test could be executed prior to release to ensure that your established performance criteria remain satisfied.

A step load defines a starting and maximum user count. You also assign a step duration and a step user count. Every time the number of seconds specified in your step duration elapses, the number of users is incremented by the step user count, unless the maximum number of users has been reached. Step loads are very useful for stress-testing your application, finding the maximum number of users your application will support before serious issues arise.
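The step pattern's arithmetic can be written as a single function of elapsed time. The following Python sketch assumes that users are added only when a full step duration has elapsed; the function and parameter names are illustrative.

```python
def step_load_user_count(elapsed_seconds, initial_users, max_users,
                         step_duration, step_user_count):
    """Return the simulated user count after elapsed_seconds of a step load."""
    if step_duration <= 0:
        raise ValueError("step_duration must be positive")
    completed_steps = int(elapsed_seconds // step_duration)
    return min(max_users, initial_users + completed_steps * step_user_count)


# Start at 10 users and add 10 more every 30 seconds, up to a ceiling of 100.
for t in (0, 29, 30, 95, 600):
    print(t, "seconds ->", step_load_user_count(t, 10, 100, 30, 10), "users")
```

With these settings the ceiling of 100 users is reached after nine steps, or 270 seconds, and the load stays flat from then on.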

Note

A third type of load profile pattern, called "Goal Based," is available only through the Load Test Editor. See the section "Editing Load Tests," later in this chapter, for more details.

You should begin with a load test that has a small constant user load and a relatively short execution time. Once you have verified that the load test is configured and working correctly, increase the load and duration as you require.

The test mix model (shown in Figure 13-14) determines how often each test in your load test is selected relative to the others. It gives you several options for realistically modeling user load. The options for test mix model are as follows:

Based on the total number of tests — This model allows you to assign a percentage to each test that dictates how many times it should be run. Each virtual user will run each test corresponding to the percentage assigned to that test. An example of where this might be useful is if you know that the average visitor views three photos on your Web site for every one comment that they leave on a photo. To model that scenario, you would create a test for viewing photos and a test for leaving comments, and assign them percentages of 75 percent and 25 percent, respectively.

Based on the number of virtual users — This model allows you to assign a percentage of virtual users who should run each test. This model might be useful if you know that, at any given time, 80 percent of your visitors are browsing the catalog of your e-commerce Web site, 5 percent are registering for new accounts, and 15 percent are checking out.

Based on user pace — This model executes each test a specified number of times per virtual user per hour. An example of a scenario where this might be useful is if you know that the average user checks email five times per hour, and looks at a stock portfolio once an hour. When using this test mix model, the think time between iterations value from the Scenario page of the wizard is ignored.

Based on sequential test order — If you know that your users generally perform steps in a specific order (for example, logging in, then finding an item to purchase, then checking out) you can use this test mix model to simulate a sequential test behavior for all virtual users. This option is functionally equivalent to structuring your tests as ordered tests.
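Two of these models reduce to simple arithmetic, sketched below in Python. The first function picks a test at random in proportion to its assigned percentage, as in the photo-viewing example. The second converts a user pace into the interval between test starts. The test names and function names are hypothetical.

```python
import random
from collections import Counter


def pick_test(test_mix, rng):
    """Choose one test name at random, weighted by its assigned percentage."""
    names = list(test_mix)
    weights = [test_mix[name] for name in names]
    return rng.choices(names, weights=weights, k=1)[0]


def pace_interval_seconds(tests_per_user_per_hour):
    """Seconds between test starts for one virtual user under a user-pace mix."""
    return 3600 / tests_per_user_per_hour


test_mix = {"ViewPhoto": 75, "LeaveComment": 25}
rng = random.Random(42)
counts = Counter(pick_test(test_mix, rng) for _ in range(10_000))
print(counts)

# Checking email five times per hour means one start every 720 seconds.
print(pace_interval_seconds(5))
```

Over many selections the observed counts approach the assigned percentages, although any short run can deviate from them.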

Note

Don't worry if you are having a difficult time choosing a test mix model right now. You can always play with different test mix models later as you learn more about the expected behavior of your application's users. You may also discover that your application exhibits different usage patterns at different times of the day, during marketing promotions, or during some other seasonality.

The option you select on this dialog will impact the options available to you on the next page of the wizard.

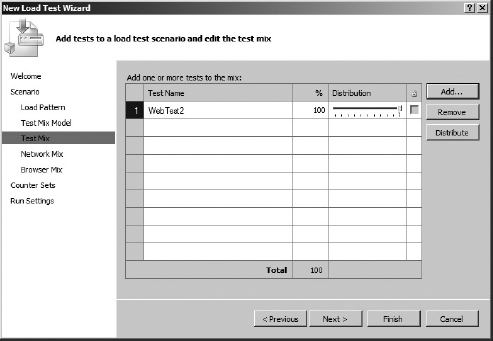

Now, select the tests to include in your scenario, along with the relative frequency with which they should run. Click the Add button and you will be presented with the Add Tests dialog shown in Figure 13-15.

By default, all of the tests (except manual tests and coded UI tests) in your solution will be displayed. You can constrain these to a specific test list with the "Select test list to view" drop-down. Select one or more tests and click OK. To keep this example simple, only add the Web performance test you created earlier in this chapter.

Next, you will return to the test mix step. Remember that this page will vary based on the test mix model you selected in the previous step. Figure 13-16 assumes that you selected "Based on the total number of tests" as your test mix model.

Use the sliders to assign the chance (in percentage) that a virtual user will select that test to execute. You may also type a number directly into the numeric fields. Use the lock checkbox in the far-right column to freeze tests at a certain number, while using the sliders to adjust the remaining "unlocked" test distributions. The Distribute button resets the percentages evenly between all tests. But, since you only have a single test in your test mix right now there is nothing else to configure on this page, and the slider will be disabled.

You can then specify the kinds of network connectivity you expect your users to have (such as LAN, Cable-DSL, and Dial-up). This step is shown in Figure 13-17.

Like the test mix step described earlier, you can use sliders to adjust the percentages, lock a particular percent, or click the Distribute button to reset to an even distribution.

As with the test mix settings, each virtual user will select a network type at random according to the percentages you set. A new network type is selected each time a test is chosen for execution. This also applies to the browser mix described next.

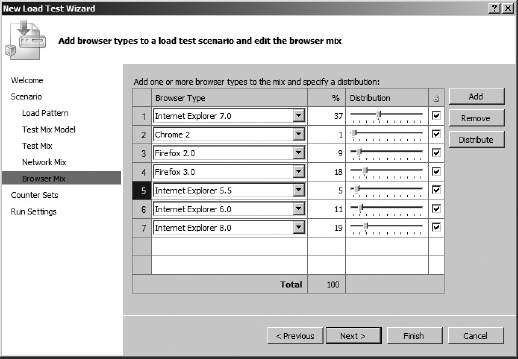

The next step (applicable only when Web performance tests are part of the load test) is to define the distribution of browser types that you wish to simulate. Visual Studio will then adjust the headers sent to the target application according to the selected browser for that user.

As shown in Figure 13-18, you may add one or more browser types, and then assign a percent distribution for their use.

A vital part of load testing is the tracking of performance counters. You can configure your load test to observe and record the values of performance counters, even on remote machines. For example, your target application is probably hosted on a different machine from the one on which you're running the test. In addition, that machine may be calling to other machines for required services (such as databases or Web services). Counters from all of these machines can be collected and stored by Visual Studio.

A counter set is a group of related performance counters. All of the contained performance counters will be collected and recorded on the target machine when the load test is executed.

Note

Once the wizard is complete, you can use the editor to create your own counter sets by right-clicking on Counter Sets and selecting Add Custom Counter Set. Right-click on the new counter set and choose Add Counters. Use the resulting dialog box to select the counters and instances you wish to include.

Select machines and counter sets using the wizard step shown in Figure 13-19. Note that this step is optional. By default, performance counters are automatically collected and recorded for the machine running the load test. If no other machines are involved, simply click Next.

To add a machine to the list, click Add Computer and enter the name of the target machine. Then, check any counter sets you wish to track to enable collection of the associated performance counters from the target machine.

Note

If you encounter errors when trying to collect performance counters from remote machines, be sure to visit Ed Glas's blog post on troubleshooting these problems at http://tinyurl.com/bp39hj.

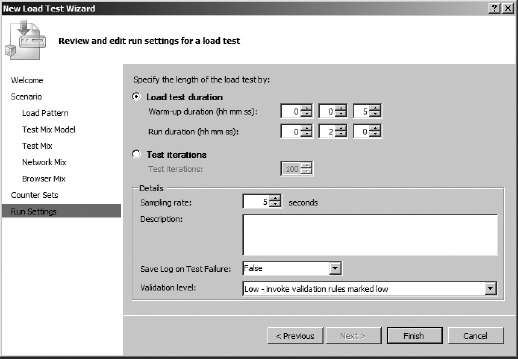

The final step in the New Load Test Wizard is to specify the test's run settings, as shown in Figure 13-20. A load test may have more than one run setting, but the New Load Test Wizard will only create one. In addition, run settings include more details than are visible through the wizard. These aspects of run settings are covered later in the section "Editing Load Tests."

First, select the timing details for the test. "Warm-up duration" specifies a window of time during which (although the test is running) no information from the test is tracked. This gives the target application a chance to complete actions such as just-in-time (JIT) compilation or caching of resources. Once the warm-up period ends, data collection begins and will continue until the "Run duration" value has been reached.

The "Sampling rate" determines how often performance counters will be collected and recorded. A higher frequency (lower number) will produce more detail, but at the cost of a larger test result set and slightly higher strain on the target machines.
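The trade-off between sampling rate and result size is easy to estimate. The Python sketch below is back-of-the-envelope arithmetic only; it assumes one sample per counter per interval and ignores any extra sample taken at the start or end of the run.

```python
def sample_count(run_duration_seconds, sampling_rate_seconds):
    """Approximate number of samples taken per counter during a run."""
    if sampling_rate_seconds <= 0:
        raise ValueError("sampling rate must be positive")
    return run_duration_seconds // sampling_rate_seconds


def stored_values(run_duration_seconds, sampling_rate_seconds, counter_count):
    """Approximate number of counter values recorded for the whole run."""
    return sample_count(run_duration_seconds, sampling_rate_seconds) * counter_count


# A 10-minute run that tracks 200 counters (a hypothetical figure).
for rate in (5, 15, 60):
    print(f"every {rate}s ->", stored_values(600, rate, 200), "values")
```

Under these assumptions, moving from a 15-second to a 5-second sampling rate triples the amount of stored data.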

Any description you enter will be stored for the current run setting. Save Log on Test Failure specifies whether or not a load test log should be saved in the event that tests fail. Often, you will not want to save a log on test failure, since broken tests will skew the results for actual test performance.

Finally, the "Validation level" setting indicates which Web performance test validation rules should be executed. This is important, because the execution of validation rules is achieved at the expense of performance. In a stress test, you may be more interested in raw performance than in whether a set of validation rules passes. There are three options for validation level:

Low — Only validation rules marked with Low level will be executed.

Medium — Validation rules marked Low or Medium level will be executed.

High — All validation rules will be executed.

Click Finish to complete the wizard and create the load test.

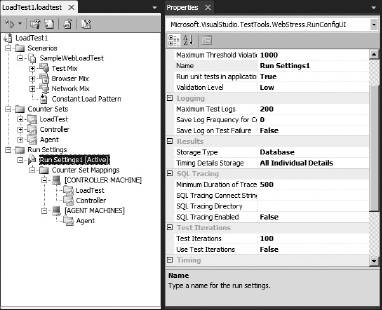

After completing the New Load Test Wizard (or whenever you open an existing load test), you will see the Load Test Editor shown in Figure 13-21.

The Load Test Editor displays all of the settings you specified in the New Load Test Wizard. It allows access to more properties and options than the wizard, including the capability to add scenarios, create new run settings, configure SQL tracing, and much more.

As you've already seen, scenarios are groups of tests and user profiles. They are a good way to define a large load test composed of smaller specific testing objectives.

For example, you might create a load test with two scenarios. The first includes tests of the administrative functions of your site, including ten users with the corporate-mandated Internet Explorer 8.0 on a LAN. The other scenario tests the core features of your site, running with 90 users who have a variety of browsers and connections. Running these scenarios together under one load test enables you to more effectively gauge the overall behavior of your site under realistic usage.

The New Load Test Wizard generates load tests with a single scenario, but you can easily add more using the Load Test Editor. Right-click on the Scenarios node and choose Add Scenario. You will then be prompted to walk through the Add Scenario Wizard, which is simply a subset of the New Load Test Wizard that you've already seen.

Run settings, as shown on the right-hand side of Figure 13-21, specify such things as duration of the test run, where and if results data is stored, SQL tracing, and performance counter mappings.

A load test can have more than one run setting, but as with scenarios, the New Load Test Wizard only supports the creation of one. You might want multiple run settings to enable you to easily switch between different types of runs. For example, you could switch between a long-running test that runs all validation rules, and another shorter test that runs only those marked as Low level.

To add a new run setting, right-click on the Run Settings node (or the load test's root node) and choose Add Run Setting. You can then modify any property or add counter set mappings to this new run setting node.

You can gather tracing information from a target SQL Server instance through SQL Tracing. Enable SQL Tracing through the run settings of your load test. As shown in Figure 13-21, the SQL Tracing group has four settings.

First, set the SQL Tracing Enabled setting to True. Then click the SQL Tracing Connect String setting to make the ellipsis button appear. Click that button and configure the connection to the database you wish to trace.

Use the SQL Tracing Directory setting to specify the local path or Universal Naming Convention (UNC) path to the directory in which you want the SQL Trace details stored.

Finally, you can specify a minimum threshold for logging of SQL operations. The Minimum Duration of Traced SQL Operations setting specifies the minimum time (in milliseconds) that an operation must take in order for it to be recorded in the tracing file.

As you saw in the New Load Test Wizard, you had two options for load profile patterns: Constant and Step. A third option, Goal Based, is only available through the Load Test Editor.

The goal-based pattern is used to raise or lower the user load over time until a specific performance counter range has been reached. This is an invaluable option when you want to determine the peak loads your application can withstand.

To access the load profile options, open your load test in the Load Test Editor and click on your current load profile, which will be either Constant Load Profile or Step Load Profile. In the Properties window, change the Pattern value to Goal Based. You should now see a window similar to Figure 13-22.

First, notice the User Count Limits section. This is similar to the step pattern in that you specify an initial and maximum user count, but you also specify a maximum user count increment and decrement and minimum user count. The load test will dynamically adjust the current user count according to these settings in order to reach the goal performance counter threshold.

By default, the pattern will be configured against the % Processor Time performance counter. To change this, enter the category (for example, Memory, System, and so on), the computer from which it will be collected (leave this blank for the current machine), and the counter name and instance — which is applicable if you have multiple processors.

You must then tell the test about the performance counter you selected. First, identify the range you're trying to reach using the High-End and Low-End properties. Set the Lower Values Imply Higher Resource Utilization option if a lower counter value indicates system stress. For example, you would set this to True when using the Memory category's Available MBytes counter. Finally, you can tell the load test to remain at the current user load level when the goal is reached with the Stop Adjusting User Count When Goal Achieved option.
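The way these settings interact can be sketched as a small feedback loop. The Python example below is a simplified model, not the algorithm Visual Studio uses: it assumes the user count always changes by the full maximum increment or decrement, and all names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class GoalSettings:
    low_end: float
    high_end: float
    min_users: int
    max_users: int
    max_increment: int
    max_decrement: int
    lower_values_imply_higher_utilization: bool = False


def next_user_count(current_users, counter_value, s):
    """Return the user count for the next interval, given the latest counter sample."""
    if s.low_end <= counter_value <= s.high_end:
        return current_users  # inside the goal range: hold steady
    under_utilized = counter_value < s.low_end
    if s.lower_values_imply_higher_utilization:
        under_utilized = not under_utilized  # e.g. Available MBytes
    if under_utilized:
        return min(s.max_users, current_users + s.max_increment)
    return max(s.min_users, current_users - s.max_decrement)


# Goal: keep % Processor Time between 70 and 90.
cpu_goal = GoalSettings(70, 90, min_users=1, max_users=200,
                        max_increment=10, max_decrement=5)
print(next_user_count(50, 40.0, cpu_goal))  # below range: add users
print(next_user_count(50, 95.0, cpu_goal))  # above range: remove users
print(next_user_count(50, 80.0, cpu_goal))  # in range: unchanged
```

When Lower Values Imply Higher Resource Utilization is set, the direction of the adjustment is reversed, so a low Available MBytes reading reduces the load instead of increasing it.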

A load test run can collect a large amount of data. This includes performance counter information from one or more machines, details about which test passed, and durations of various actions. You may choose to store this information in a SQL Server database.

To select a results store, you must modify the load test's run settings. Refer back to Figure 13-21. The local run settings have been selected in the Load Test Editor. In the Results section of the Properties window is a setting called Storage Type, which can either be set to None or Database.

In order to use the Database option, you must first configure an instance of SQL Server or SQL Express using a database creation script. The script, LoadTestResultsRepository.sql, is found under the Common7\IDE directory of your Visual Studio installation directory. You may run this script any way you choose, such as with Query Manager or SQL Server's SQLCMD utility.

Once created, the new LoadTest database can be used to store data from load tests running on the local machine or even remote machines. Running remote load tests is described later in this chapter in the section "Distributed Load Tests."

There are several ways to execute a load test. You can use various windows in Visual Studio, the Load Test Editor, Test Manager and Test View, or you can use command-line tools. For details on using the command line, see the section "Command-Line Test Execution," later in this chapter.

In the Load Test Editor, you can click the Run button at the upper-left corner, or right-click on any load test setting node and select Run Load Test.

From the Test Manager and Test View windows, check or select one or more load tests and click the Run Tests button. In Test View, you may also right-click on a test and select Run Selection.

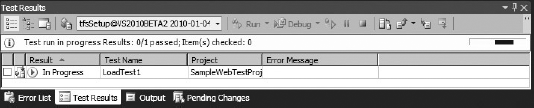

If you ran your test from either Test Manager or Test View, you will see the status of your test in the Test Results window, as shown in Figure 13-23.

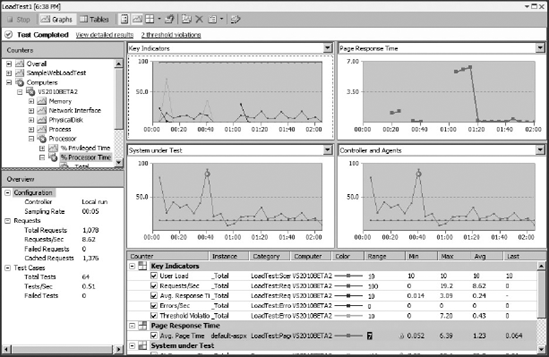

Once the status of your test is In Progress or Complete, you can double-click to see the Load Test Monitor window, shown in Figure 13-24. You may also right-click and choose View Test Results Details. When a load test is run from the Load Test Editor, the Test Results window is bypassed, immediately displaying the Load Test Monitor.

You can observe the progress of your test and then continue to use the same window to review results after the test has completed. If you have established a load test result store, then you will also be prompted to view additional details at the completion of the load test run (see the earlier section, "Storing Load Test Run Data").

At the top of the screen, just under the file tabs, is a toolbar with several view options. First, if you are viewing detailed information from a results store, you will have a Summary view that displays key information about your load test. The next two buttons allow you to select between the Graphs and Tables views. The Details view (available if you are viewing detailed information from a results store) provides a graphical view of virtual users over time. The Show Counters Panel and graph options buttons are used to change the way these components are displayed.

The most obvious feature of the Load Test Monitor is the set of four graphs, which is selected by default. These graphs plot a number of selected performance counters over the duration of the test.

The tree in the left-hand (Counter) pane shows a list of all available performance counters, grouped into a variety of sets — for example, by machine. Expand the nodes to reveal the tracked performance counters. Hover over a counter to see a plot of its values in the graph. Double-click on the counter to add it to the graph and legend.

Note

Selecting performance counters and knowing what they represent can require experience. With so many available counters, it can be a daunting task to know when your application isn't performing at its best. Fortunately, Microsoft has applied its practices and recommendations to predefine threshold values for each performance counter to help indicate that something might be wrong.

As the load test runs, the graph is updated at each snapshot interval. In addition, you may notice that some of the nodes in the Counters pane are marked with a red error or yellow warning icon. This indicates that the value of a performance counter has exceeded a predefined threshold and should be reviewed. For example, Figure 13-24 indicates threshold violations for the % Processor Time counter. In fact, you can see small warning icons in the graph itself at the points where the violations occurred. You'll use the Thresholds view to review these in a moment.
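To make the idea of a threshold violation concrete, here is a minimal Python sketch that checks sampled counter values against a warning level and a critical level. The function names, the sample data, and the threshold values of 80 and 95 are all hypothetical, and the "greater than or equal" comparison is an assumption; it is not a description of how Visual Studio evaluates its predefined thresholds.

```python
def classify_sample(value, warning, critical):
    """Classify one counter sample against two threshold levels."""
    if value >= critical:
        return "critical"
    if value >= warning:
        return "warning"
    return "ok"


def find_violations(samples, warning, critical):
    """Return (elapsed_seconds, value, level) for each violating sample.

    samples is a list of (elapsed_seconds, value) pairs, one per
    sampling interval.
    """
    violations = []
    for elapsed, value in samples:
        level = classify_sample(value, warning, critical)
        if level != "ok":
            violations.append((elapsed, value, level))
    return violations


if __name__ == "__main__":
    # Hypothetical % Processor Time samples taken every five seconds.
    cpu_samples = [(5, 40.0), (10, 85.0), (15, 97.0)]
    for violation in find_violations(cpu_samples, warning=80.0, critical=95.0):
        print(violation)
```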

The list at the bottom of the screen is a legend that shows details of the selected counters. Those that are checked appear in the graph with the indicated color. If you select a counter, it will be displayed with a bold line.

Finally, the bottom-left (Overview) pane shows the overall results of the load test.

When you click the Tables button, the main panel of the load test results window changes to show a drop-down list with a table. Use the drop-down list to view each of the available tables for the load test run. Each of these tables is described in the following sections.

The Tests table goes beyond the detail of the Summary pane, listing all tests in your load test and providing summary statistics for each. Tests are listed by name and containing scenario for easy identification. You will see the total count of runs, pass/fail details, as well as tests per second and seconds per test metrics.

The Pages table shows all of the pages accessed during the load test. Included with each page are details of the containing scenario and Web performance test, along with performance metrics. The Total column shows the number of times that page was rendered during the test. The Page Time column reflects the average response time for each page. Page Time Goal and % Meeting Goal are used when a target response time was specified for that page. Finally, the Last Page Time shows the response time from the most recent request to that page.
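The relationship between these columns can be sketched with a small Python function that derives them from a list of response times for one page. This is an illustration of the arithmetic only; the function name and dictionary keys are hypothetical, and treating a response time equal to the goal as meeting the goal is an assumption.

```python
def page_statistics(response_times, goal_seconds=None):
    """Summarize one page's response times (in seconds, in request order)."""
    if not response_times:
        raise ValueError("at least one response time is required")
    total = len(response_times)
    stats = {
        "total": total,                               # Total column
        "page_time": sum(response_times) / total,     # average response time
        "last_page_time": response_times[-1],         # most recent request
    }
    if goal_seconds is not None:
        meeting = sum(1 for t in response_times if t <= goal_seconds)
        stats["page_time_goal"] = goal_seconds
        stats["pct_meeting_goal"] = 100.0 * meeting / total
    return stats


if __name__ == "__main__":
    # Hypothetical timings for a single page with a 1.5-second goal.
    print(page_statistics([0.5, 1.5, 1.0, 3.0], goal_seconds=1.5))
```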

A transaction is a defined subset of steps tracked together in a Web performance test. For example, you can wrap the requests from the start to the end of your checkout process in a transaction named Checkout for easy tracking. For more details, see the section "Adding Transactions," earlier in this chapter.

In this table, you will see any defined transactions listed, along with the names of the containing scenario and Web performance test. Details include the count, response time, and elapsed time for each transaction.

The SQL Trace table will only be enabled if you previously configured SQL Tracing for your load test. Details for doing that can be found in the earlier section "SQL Tracing."

This table shows the slowest SQL operations that occurred on the machine specified in your SQL Tracing settings. Note that only those operations that take longer than the Minimum Duration of Traced SQL Operations will appear.

By default, the operations are sorted with the slowest at the top of the list. You can view many details for each operation, including duration, start and end time, CPU, login name, and others.
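The filtering and ordering just described can be sketched in Python as follows. The record layout (a dictionary with `text` and `duration_ms` keys) and the function name are hypothetical, chosen only to show the effect of the minimum-duration setting and the slowest-first default sort.

```python
def slowest_operations(operations, minimum_duration_ms):
    """Keep operations longer than the minimum, slowest first."""
    kept = [op for op in operations if op["duration_ms"] > minimum_duration_ms]
    return sorted(kept, key=lambda op: op["duration_ms"], reverse=True)


if __name__ == "__main__":
    # Hypothetical traced operations.
    traced = [
        {"text": "SELECT ... FROM Orders", "duration_ms": 750},
        {"text": "UPDATE Cart ...", "duration_ms": 120},
        {"text": "EXEC GetCatalog", "duration_ms": 2300},
    ]
    for op in slowest_operations(traced, minimum_duration_ms=500):
        print(op["duration_ms"], op["text"])
```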

The top of Figure 13-24 indicates that there are "2 threshold violations." You may either click on that text, or select the Threshold table to see the details. You will see a list similar to the one shown in Figure 13-25.

Each violation is listed according to the sampling time at which it occurred. You can see details about which counter on which machine failed, as well as a description of what the violating and threshold values were.

As with threshold violations, if your test encountered any errors, you will see a message such as "4 errors." Click on this text or the Errors table button to see a summary list of the errors. This will include the error type (such as Total or Exception) and the error's subtype. SubType will contain the specific Exception type encountered — for example, FileNotFoundException. Also shown are the count of each particular error and the message returned from the last occurrence of that error.
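The shape of this summary can be illustrated with a short Python sketch that groups individual error records by type and subtype, keeping a count and the most recent message for each group. The record fields and the function name are hypothetical and are not taken from the load test results schema.

```python
def summarize_errors(errors):
    """Group error records (given in time order) by (type, subtype).

    Returns a dict mapping (type, subtype) to a dict holding the count
    and the message from the last occurrence.
    """
    summary = {}
    for error in errors:
        key = (error["type"], error["subtype"])
        entry = summary.setdefault(key, {"count": 0, "last_message": None})
        entry["count"] += 1
        entry["last_message"] = error["message"]
    return summary


if __name__ == "__main__":
    # Hypothetical error records from a load test run.
    records = [
        {"type": "Exception", "subtype": "FileNotFoundException", "message": "a.css missing"},
        {"type": "Exception", "subtype": "FileNotFoundException", "message": "b.js missing"},
        {"type": "Exception", "subtype": "TimeoutException", "message": "request timed out"},
    ]
    for key, entry in summarize_errors(records).items():
        print(key, entry["count"], entry["last_message"])
```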

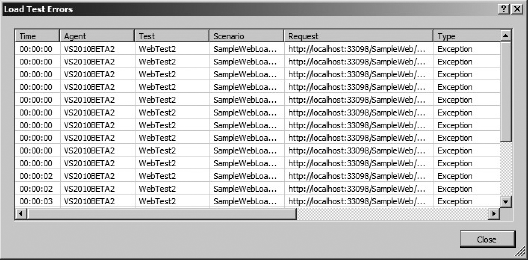

If you configured a database to store the load test results data, you can right-click on any entry and choose Errors. This will show the Load Test Errors window, as shown in Figure 13-26.

This table displays each instance of the error, including stack and details (if available), according to the time at which they occurred. Other information (such as the containing test, scenario, and Web request) is displayed when available.

If you are utilizing a load test results store (see the earlier section "Storing Load Test Run Data"), then you will have the option of viewing detailed results for your load test run. Click on View detailed results (shown at the top of Figure 13-24) to access these additional options as buttons on the top of the Load Test Monitor.

The Summary view provides a concise view of several key statistics about your load test run. The Detail view allows you to see a graph showing the number, duration, and status of every virtual user within your load test at any given point in time. This view can be helpful for pinpointing times when certain behaviors occur, such as contention between two virtual user sessions attempting to access the same functionality in your Web application. The Create Excel Report button allows you to export detailed results of your load test run into a spreadsheet for further analysis.

To execute a Web performance or load test from the command line, first launch a Visual Studio 2010 command prompt. From the Start menu, select Programs

The MSTest.exe utility is found under the Common7\IDE directory of your Visual Studio installation directory, but will be available from any directory when using the Visual Studio command prompt.

From the directory that contains your solution, use the MSTest program to launch the test as follows:

MSTest /testcontainer:<Name>.<extension>

The target can be a Web performance test, a load test, or an assembly that contains tests such as unit tests. For example, to execute a load test named LoadTest1 in the SampleWebTestProject subfolder, enter the following command:

MSTest /testcontainer:SampleWebTestProject\LoadTest1.loadtest

This will execute the specified test(s) and display details, such as pass/fail, which run configuration was used, and where the results were stored.

You can also run tests that are grouped into a test list. First, specify the /testmetadata:<filename> option to load the metadata file containing the test list definitions. Then, select the test list to execute with the /testlist:<listname> option.

Remember that tests can have more than one test settings file. Specify a test settings file using the /testsettings:<filename> option.

By default, results are stored in an XML-based file called a TRX file. The default form is MSTest.MMDDYYYY.HHMMSS.trx. To store the run results in an alternate file, use the /resultsfile:<filename> option.
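If you launch MSTest from a script, the options described so far can be assembled programmatically. The following Python sketch is one way to do that; it uses only the `/testcontainer`, `/testmetadata`, `/testlist`, `/testsettings`, and `/resultsfile` options named in this section. The function name, its parameters, and the file name `run1.trx` are hypothetical.

```python
def build_mstest_command(test_container=None, test_metadata=None,
                         test_list=None, test_settings=None,
                         results_file=None):
    """Return the argument list for an MSTest invocation."""
    if not test_container and not test_metadata:
        raise ValueError("specify a test container or a metadata file")
    if test_list and not test_metadata:
        raise ValueError("/testlist requires /testmetadata")

    args = ["MSTest"]
    if test_container:
        args.append("/testcontainer:" + test_container)
    if test_metadata:
        args.append("/testmetadata:" + test_metadata)
    if test_list:
        args.append("/testlist:" + test_list)
    if test_settings:
        args.append("/testsettings:" + test_settings)
    if results_file:
        args.append("/resultsfile:" + results_file)
    return args


if __name__ == "__main__":
    # Run one load test and store the results in a named TRX file.
    print(build_mstest_command(
        test_container=r"SampleWebTestProject\LoadTest1.loadtest",
        results_file="run1.trx"))
```

The resulting list can be passed to `subprocess.run` from a Visual Studio command prompt environment, where MSTest is on the path.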

More options are available. To view them, run the following command:

MSTest /help

In larger-scale efforts, a single machine may not have enough power to simulate the number of users you need to generate the required stress on your application. Fortunately, Visual Studio 2010 load testing includes features supporting the execution of load tests in a distributed environment.

There are a number of roles that the machines play. Client machines are typically developer machines on which the load tests are created and selected for execution. The controller is the "headquarters" of the operation, coordinating the actions of one or more agent machines. The controller also collects the test results from each associated agent machine. The agent machines actually execute the load tests and provide details to the controller. The controller and agents are collectively referred to as a test rig.

There are no requirements for the location of the application under test. Generally, the application is installed either on one or more machines outside the rig or locally on the agent machines, but the architecture of distributed testing is flexible.

Before using controllers and agents, you must install the required Windows services on each machine. The Visual Studio Agents 2010 package includes a setup utility for these services, which allows you to install the test controller and test agent.

Installing the test controller will install a Windows service for the controller, and will prompt you to assign a Windows account under which that service will run. Refrain from registering your test controller with a team project collection if you want to run load tests from Visual Studio. Enable "Configure for Load Testing" and select a SQL Server or SQL Server Express instance where you want to store your load test results.

Use the "Manage virtual user licenses" button to administer your licenses of load agent virtual user packs. Virtual user packs must be licensed based on the number of concurrent virtual users you wish to simulate. You will be unable to run load tests until you have assigned at least one virtual user pack to your test controller.

Note

Install your controller and verify that the Visual Studio Test Controller Windows service is running before configuring your agent machines.

After the controller service has been installed, run the Test Agent setup on each agent machine, specifying a user under whom the service should run and the name of the controller machine.

Your test controller and test agents can be configured later using the respective entries on the Start Menu under Programs

Once you have run the installation packages on the controller and agent machine(s), configure the controller by choosing Test

Type the name of a machine in the Controller field and press Enter. Ensure that the machine you specify has had the required controller services installed. The Agents panel will then list any currently configured agents for that controller, along with each agent's status.

The "Load test results store" points to the repository you are using to store load test data. Click the ellipsis button to select and test a connection to your repository. If you have not already configured a repository, refer to the earlier section "Storing Load Test Run Data."

The Agents panel will show any test agents that have been registered with your test controller. Temporarily suspend an agent from the rig by clicking the Offline button. Restart the agent services on a target machine with the Restart button.

You also have options for clearing temporary log data and directories, as well as restarting the entire rig.

Using the Manage Test Controller dialog just described, select an agent and click the Properties button. You will be able to modify several settings, described in the following sections.

When running a distributed load test, the load test being executed by the controller has a specific user load profile. This user load is then distributed to the agent machines according to their individual weightings.

For example, suppose two agents are running under a controller that is executing a load test with ten users. If the agents' weights are each 50, then five users will be sent to each agent.
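The proportional split can be sketched in Python as shown below. The function and agent names are hypothetical, weights are assumed to be whole numbers, and the handling of leftover users when the split is uneven (handing them to the agents with the largest remainders) is an assumption made for the example rather than a documented controller behavior.

```python
def distribute_users(total_users, weights):
    """Split total_users across agents in proportion to integer weights.

    weights maps agent name to its weighting. Any users left over after
    the whole-number split go to the agents with the largest remainders.
    """
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("total weight must be positive")

    shares = {}
    remainders = {}
    for agent, weight in weights.items():
        shares[agent], remainders[agent] = divmod(total_users * weight, total_weight)

    leftover = total_users - sum(shares.values())
    by_remainder = sorted(weights, key=lambda agent: remainders[agent], reverse=True)
    for agent in by_remainder[:leftover]:
        shares[agent] += 1
    return shares


if __name__ == "__main__":
    # Ten users across two equally weighted agents: five each.
    print(distribute_users(10, {"agent1": 50, "agent2": 50}))
```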

The IP switching setting indicates the range of IP addresses to be used for calls from this agent to the target Web application.

Once your controller and agents have been installed and configured, you are ready to execute a load test against them. You specify a controller for the test and agent properties in the test settings. You may recall seeing the test settings when we covered Web performance tests.

Open your test settings by choosing Test

Under "Test execution method," choose Remote execution, and then enter or select the name of a valid controller machine.

The Roles panel and "Agent attributes for selected role" panel are used if you want to restrict the agents that will execute your tests to a subset of those registered with your test controller. Otherwise, all available agents will be utilized.

When you have finished making your changes, you may wish to create a new test settings file especially for remote execution. To do so, choose Save As and specify a new file.