Using Microsoft Test Manager to create and manage test plans

Authoring manual tests and publishing the results

Taking advantage of fast-forward for manual testing to speed up test runs

Across the software development industry, manual testing still makes up about 70 percent of the testing efforts as compared to creating automated tests, or specialized testing efforts such as performance and scale testing. Yet, manual testing has historically been overlooked by most software development tool vendors. Microsoft is seeking to change that disparity with Visual Studio 2010 by building an entirely new set of tools targeted specifically at the generalist tester. A generalist tester is a person who tests software manually by directly interacting with the software in the way a user might, and filing bugs when the tester encounters broken functionality or unexpected behavior.

In this chapter, you will learn how Visual Studio 2010 can make generalist testers more efficient at authoring, managing, and executing manual test cases. You will also gain an understanding of how the new testing framework in Visual Studio 2010 bridges the gap between testers and developers by capturing rich diagnostics during test runs, which can then be analyzed by developers to help them diagnose and resolve bugs.

Microsoft Test Manager is a new tool designed specifically for generalist testing teams. With Test Manager, you can create and manage test plans and test cases, author and execute manual tests, and file rich bugs. In Chapter 16, you will also see how Test Manager can be used to create and manage physical and virtual test environments.

Test Manager requires a connection to Visual Studio Team Foundation Server 2010. Team Foundation Server is used for storing all testing artifacts used by Test Manager, such as test plans, test cases, bugs, and the results of test runs. Test Manager also relies on the use of Team Foundation Build for building the software that you are testing.

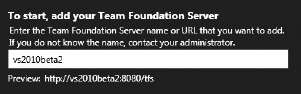

The first time you start Microsoft Test Manager, you will be prompted to connect to Team Foundation Server, as shown in Figure 14-1. Type in the name of your server as provided by your Team Foundation Server administrator. If your Team Foundation Server is configured for a non-standard port, type the server name as servername:portnumber. If your Team Foundation Server instance has been configured for a non-standard virtual application directory, you may need to supply the full URI path; consult your Team Foundation Server administrator for assistance. Click Add when you are finished.
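The two forms of server entry described above can be illustrated with a small, hypothetical helper that expands what you type into a full server URI. It is a sketch, not something Test Manager exposes: the function name is invented, and the defaults (HTTP, port 8080, virtual directory `tfs`) are assumptions based on a typical Team Foundation Server 2010 installation, so confirm the real values with your administrator.

```python
from urllib.parse import urlunsplit

# Assumed defaults for a typical Team Foundation Server 2010 installation;
# your administrator may have configured different values.
DEFAULT_PORT = 8080
DEFAULT_VDIR = "tfs"


def server_uri(entry: str, vdir: str = DEFAULT_VDIR, scheme: str = "http") -> str:
    """Expand 'servername' or 'servername:portnumber' into a full server URI."""
    host, sep, port = entry.partition(":")
    port_number = int(port) if sep else DEFAULT_PORT
    return urlunsplit((scheme, f"{host}:{port_number}", f"/{vdir}", "", ""))


print(server_uri("tfs01"))         # http://tfs01:8080/tfs
print(server_uri("tfs01:9090"))    # http://tfs01:9090/tfs
print(server_uri("tfs01", "alm"))  # http://tfs01:8080/alm
```

The third call shows the case that needs the full URI: when the virtual directory differs from the default, the server name alone is not enough.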

Next, you will be prompted to connect to a team project, as shown in Figure 14-2. Select your team project and click "Connect now."

A test plan is used within Test Manager to manage your entire testing effort for a given iteration. This includes your test cases, test results, the configurations you plan to test (for example, different operating systems and Web browsers), and several other settings that you will learn about in this chapter.

You will usually have different test plans for different iterations of your application's development lifecycle. For example, early test plans may focus on testing core functionality, whereas future test plans may be targeted at fit-and-finish (such as layout, rendering, spelling, and so on).

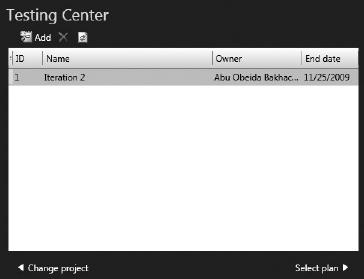

If your team project doesn't already include a test plan, you will need to create one, as shown in Figure 14-3. Click on Add to create a new plan. Once created, select the plan and click "Select plan."

You are now ready to begin working with your test plan. If at any time you want to switch to a different test plan or Team Foundation Server instance, you can click on the name of your test plan in the upper-right hand corner of Test Manager.

You should spend a few minutes familiarizing yourself with the navigation menu at the top of Test Manager. Test Manager is divided into two activity centers, the Testing Center and the Lab Center, which can be toggled by clicking on the text for Testing Center. This chapter will focus on the Testing Center. (You will learn more about the Lab Center in Chapter 16.)

Each activity center consists of several activities. Activities can be accessed by clicking the headings for each activity center, then the subheadings underneath those headings. The Testing Center is divided into the following four main areas of activities:

Plan — The Plan area is used to manage your overall test plan. This includes the plan's properties, as well as the individual test suites and test cases that make up your plan.

Test — The Test area is used to view the list of test cases that are ready to be run. From here, you can launch test runs to execute test cases and save the results, file bugs, and so on.

Track — The Track area allows you to change the build that you are currently testing. This tab also helps testers discover which tests might be most important to run, based on the build in use.

Organize — The Organize area provides an easy way of accessing and modifying all of your test cases, test configurations, and other test plans.

You will learn more about these areas in the remainder of this chapter.

For now, let's focus on configuring the properties of your test plan. Click on Plan and then click on Properties. Test Manager then displays the test plan properties activity for your test plan, as shown in Figure 14-4.

The upper portion of your test plan's properties includes metadata that you can use to describe the plan (such as name, description, and owner). This metadata can be useful for planning purposes, but it won't actually impact the functionality of your test plan. For example, setting your plan's State to Inactive or the Iteration Start Date to occur in the future won't prevent this plan from being used by testers. It's only useful for describing your plan.

Let's take a look at the rest of the properties you can set for your test plan.

Test settings define which data diagnostic adapters will be used when conducting your test runs. Data diagnostic adapters were introduced in Chapter 12. They can collect data from the machines being tested or affect those machines (such as by emulating a slower network connection). When developers receive a bug, this data can be very useful, because it shows how the bug was encountered and even the state of the application at various points in time leading up to its discovery.

From within your plan properties, you can select the default test settings to use for manual test runs and for automated test runs. You can also create a new test setting entry, or modify existing test settings. You will learn more about test settings for automated runs in Chapter 16 when you learn about configuring test environments.

Figure 14-5 shows an example of test settings for manual runs. The "Data and Diagnostics" tab allows you to configure which data diagnostic adapters should be enabled when this test setting is used. Note that some data diagnostic adapters have additional options that can be configured (such as the maximum length for video recordings).

Note

Chapter 12 provides more information on data diagnostic adapters.

Note that data diagnostic adapters have varying amounts of overhead, including start-up time, CPU usage, and storage space for the various artifacts that will be included with test results. Also note that some data diagnostic adapters cannot be used simultaneously with other data diagnostic adapters. The product documentation includes a matrix that explains this in greater detail.

It is a good practice for the testing team to work with the development team in defining which data diagnostic adapters should be enabled for each machine within the environment. This helps to ensure that developers have everything they need to diagnose a problem when they receive a bug, along with attachments from the test run.

This may result in creating two different test settings. One "lightweight" test setting can be used for everyday testing and to capture basic diagnostic information. Another "full" test setting can be used to collect any and all diagnostics that may eventually help a developer to troubleshoot an issue. If, after finding an issue using the "lightweight" test setting, a tester believes that additional diagnostic data might be useful to the developer, he or she can run the test again using the "full" test setting.

As your testing progresses, you will periodically select new builds with which to test. From your test plan's properties, you can first configure the filter for your builds to match the build definition (as defined in Team Foundation Build) and, optionally, the build quality to use as a filter from among all available builds.

For example, it is common to have a tester scout a build before the rest of the team tries the build. Scouting usually involves installing the software and running some initial tests to ensure that it's worth using by the rest of the team. Once a build is scouted, the status of that build can be changed to indicate that it's a suitable build candidate to be used by the rest of the team.
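The build filter amounts to matching on build definition and, optionally, build quality. The following Python sketch is purely illustrative: the `Build` type, the `candidate_builds` function, and the build numbers and quality names are hypothetical examples, not values read from Team Foundation Build.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Build:
    number: str
    definition: str
    quality: Optional[str] = None  # set once a tester has scouted the build


def candidate_builds(builds: List[Build], definition: str,
                     quality: Optional[str] = None) -> List[Build]:
    """Builds from one build definition, optionally narrowed to one build quality."""
    return [b for b in builds
            if b.definition == definition
            and (quality is None or b.quality == quality)]


builds = [
    Build("Main_20100301.1", "Main", quality="Initial Test Passed"),
    Build("Main_20100302.1", "Main"),  # not scouted yet
    Build("Dev_20100302.1", "Dev", quality="Initial Test Passed"),
]
scouted = candidate_builds(builds, "Main", "Initial Test Passed")
print([b.number for b in scouted])  # ['Main_20100301.1']
```

Leaving `quality` unset shows every build from the definition, which is what a scout would use before any quality has been assigned.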

After you configure a build definition and filter, you can click on Modify to view the Assign Build dialog shown in Figure 14-6. Start by choosing the build with which to begin testing, and click "Assign to plan." Note that previous builds are no longer available to assign to your plan after you do this.

After you choose your initial build, you can view newer builds by using the "Available builds" drop-down. When examining a newer build, any work items (such as requirements or bugs) that have been changed since your currently selected build will be displayed in the lower portion of the dialog. This is determined by compiling a list of work items that are linked to changesets from all builds between the current build and the build you are considering.
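The way the dialog compiles this list can be sketched as a union over the builds that follow your current build, up to and including the one you are considering. This is a hypothetical model, not Test Manager's implementation: the dictionaries standing in for build-to-changeset and changeset-to-work-item links are assumptions, as is the choice to exclude the current build's own changesets and include the candidate's. The check that rejects older builds mirrors the restriction on assigning builds described in this section.

```python
from typing import Dict, List, Set


def changed_work_items(builds: List[str],
                       changesets_by_build: Dict[str, List[int]],
                       work_items_by_changeset: Dict[int, Set[int]],
                       current: str, candidate: str) -> Set[int]:
    """Work items linked to changesets in builds after `current`, up to `candidate`."""
    start, end = builds.index(current), builds.index(candidate)
    if end <= start:
        # Mirrors the rule that a plan can't move back to an older build
        raise ValueError("candidate must be newer than the current build")
    items: Set[int] = set()
    for build in builds[start + 1:end + 1]:
        for changeset in changesets_by_build.get(build, []):
            items |= work_items_by_changeset.get(changeset, set())
    return items


builds = ["Build.1", "Build.2", "Build.3"]  # oldest to newest
changesets = {"Build.2": [101], "Build.3": [102, 103]}
links = {101: {12}, 102: {15}, 103: {12, 17}}
print(sorted(changed_work_items(builds, changesets, links, "Build.1", "Build.3")))  # [12, 15, 17]
```

Because the result is a set, a work item touched in several intermediate builds (item 12 here) is listed only once.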

Note

Chapter 19 provides more information on changesets.

This information can help you decide whether to continue testing with your existing build, or to switch to a newer build (by clicking on "Assign to plan"). For example, maybe your testing efforts for a given feature are blocked until a task is implemented or a bug is fixed. In Figure 14-6, you can see that one user story, three tasks, and two bugs have been impacted since the currently assigned build. Clicking on "Assign to plan" would update the test plan to use that newer build. Afterward, results from test runs would be recorded against this newer build.

Note

Assigning a new build to a test plan affects the entire team working on that test plan. Also note that you can't assign builds older than the one you have already selected. For these reasons, carefully consider which newer builds to assign to your test plan.

You can also access the Assign Build activity by clicking on Test, then Assign Build.

Test impact analysis is a powerful feature that can help improve the productivity of testers by allowing them to quickly identify tests to re-run based on changes to code. Test impact analysis can be enabled to run in the background while tests are being executed. This feature records which sections of code get executed while each test is run. These tests can be automated tests (for example, unit tests, load tests, or coded UI tests) as well as manual tests, but the code you are analyzing must be managed code (that is, .NET Framework 2.0 and above).

Note

Chapter 12 provides more information on test impact analysis.

To use this feature, click Track, then Recommended Tests.

This activity works similarly to that of the Assign Build activity by comparing the results of your currently selected build to that of an older build. Use the Recommended Tests activity to quickly compile a list of tests that might be useful to re-run. To mark a test to be re-run, click that test (or select a range of tests), and then click the "Reset to active" button. This causes that test case to appear as Active from the Run Tests activity (which you will learn about later).
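Conceptually, recommending tests comes down to intersecting the code each test exercised (recorded while test impact analysis was enabled) with the code that changed between builds. The sketch below assumes coverage is recorded as sets of method names; the method names and the `recommended_tests` helper are hypothetical, and real test impact data is gathered and compared by Team Foundation Server rather than by code like this.

```python
from typing import Dict, List, Set


def recommended_tests(coverage: Dict[str, Set[str]], changed: Set[str]) -> List[str]:
    """Tests whose recorded coverage overlaps the code changed between builds."""
    return sorted(test for test, touched in coverage.items() if touched & changed)


coverage = {
    "Create account": {"AccountService.Create", "Validator.CheckPassword"},
    "Sign in": {"AuthService.SignIn"},
    "Change password": {"AccountService.Update", "Validator.CheckPassword"},
}
print(recommended_tests(coverage, {"Validator.CheckPassword"}))
# ['Change password', 'Create account']
```

A test missing from the result is not proven safe: changes to test data or to libraries that aren't being analyzed never show up in `changed`.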

Note

You should be careful not to rely too heavily on test impact analysis, because some factors that affect your tests are not captured by it. These include changes to test data (which may result in different paths through a code base), and changes to other libraries or applications with which the application under test interacts but which aren't being analyzed by test impact analysis. For this reason, you should examine your test plan from multiple angles (for example, test impact analysis, changes to work items, and so on) and routinely consider re-running all of your tests, regardless of whether they are known to have been impacted.

Often, your software must be supported on a variety of hardware and software configurations. Correspondingly, your test plan should account for these configurations if they have the potential to impact the functionality or behavior of the application you are testing. Test Manager allows you to define test configurations to represent the matrix of environments that you want to test.

The test plan properties page allows you to select the default test configurations that should be applied to tests in your plan. You can override these defaults for individual test cases. For example, if you want all of your tests to be run on Windows Vista with Internet Explorer 7 and on Windows 7 with Internet Explorer 8 by default, specify both configurations in your test plan properties.

Figure 14-7 shows the Test Configuration Manager that is used to build the matrix of test configurations you might wish to include in your test plan. Creating a new test configuration allows you to select one or more configuration variables (such as operating system and browser) and their assigned values.

Configuration variables for operating system and browser are provided to you by default. But you might want to create your own configuration variables, or modify the existing variables to include additional browser and operating system choices. You can do this by clicking on "Manage configuration variables." You can create configuration variables for anything that you want to track for your testing efforts. For example, maybe it's important to test with different operating system languages, service pack levels, or even keyboard layouts. All of these changes in the environment can be represented using configuration variables.

After you have created your configuration variables in Test Configuration Manager, click New to assign variables and their values to a test configuration. These test configurations can then be added to your test plan from within the Test Plan Properties activity.
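A test configuration matrix is essentially the Cartesian product of your configuration variables' values, from which you then pick the combinations you actually need. In this sketch the variable names and values mirror the examples in this section; the `configuration_matrix` helper itself is hypothetical and only illustrates how quickly the matrix grows as you add variables.

```python
from itertools import product
from typing import Dict, List


def configuration_matrix(variables: Dict[str, List[str]]) -> List[Dict[str, str]]:
    """Every combination of configuration variable values."""
    names = list(variables)
    return [dict(zip(names, values))
            for values in product(*(variables[name] for name in names))]


variables = {
    "Operating System": ["Windows Vista", "Windows 7"],
    "Browser": ["Internet Explorer 7", "Internet Explorer 8"],
}
matrix = configuration_matrix(variables)
print(len(matrix))  # 4

# Keep only the pairs the plan actually targets
wanted = {("Windows Vista", "Internet Explorer 7"), ("Windows 7", "Internet Explorer 8")}
plan_configurations = [c for c in matrix
                       if (c["Operating System"], c["Browser"]) in wanted]
print(len(plan_configurations))  # 2
```

Adding a third variable with three values (say, service pack level) would triple the full matrix to 12 combinations, which is why selecting only the combinations that matter is usually worthwhile.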

In Chapter 16, you will learn how the new Lab Management feature of Visual Studio 2010 can be used to help you run tests in a variety of environments to quickly address a wide range of test configurations. For now, you will be running all of your tests locally.

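If you click Plan, then Contents, Test Manager displays the Contents planning activity, where you manage the test suites and test cases that make up your plan.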

Test cases are organized into one of the following three types of test suites:

Requirements-based test suite — This includes any test cases that are linked to requirement work items via a "Tests" relationship. For any given iteration of an application's development, you will usually want to start by adding all the requirements that are being implemented in that iteration. This way, you can create and execute test cases that verify an application is on track to deliver the promised functionality. Click on "Add requirements" to add a requirements-based test suite to your plan. You will be prompted to select the requirement to which to bind your suite.

Query-based test suite — This allows you to specify a dynamic work item query for selecting test cases. For example, you might want to include all test cases with a priority of 1, even if they are for requirements that were implemented and tested in earlier iterations. This can help ensure that critical functionality that used to work doesn't break (or regress) as the application progresses. Click New and choose Query-based suite to add a query-based test suite to your plan.

Static test suite — This is simply a list of test cases that can be added manually to the suite. A static test suite can also be used as a container for other test suites, giving you a hierarchical option for organizing your tests. Click New and choose Suite to add a static test suite to your plan.
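The three suite types differ mainly in how membership is decided. This hypothetical model (the `ManualTestCase` type and the helper functions are invented for illustration) treats a requirements-based suite as a filter on the requirement link, a query-based suite as a predicate that is re-evaluated on demand, and a static suite as a fixed list.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class ManualTestCase:
    id: int
    title: str
    priority: int
    requirement_id: Optional[int] = None  # set by a "Tests" link


def requirements_suite(cases: List[ManualTestCase], requirement_id: int) -> List[ManualTestCase]:
    return [c for c in cases if c.requirement_id == requirement_id]


def query_suite(cases: List[ManualTestCase],
                predicate: Callable[[ManualTestCase], bool]) -> List[ManualTestCase]:
    # Evaluated each time the suite is viewed, so new matches appear automatically
    return [c for c in cases if predicate(c)]


cases = [ManualTestCase(1, "Create account", 1, requirement_id=42),
         ManualTestCase(2, "Change password", 2, requirement_id=42)]
static_suite = [cases[0]]  # chosen by hand; never changes on its own

cases.append(ManualTestCase(3, "Sign in", 1))  # a new priority-1 test case
print([c.id for c in requirements_suite(cases, 42)])                  # [1, 2]
print([c.id for c in query_suite(cases, lambda c: c.priority == 1)])  # [1, 3]
print([c.id for c in static_suite])                                   # [1]
```

The new "Sign in" case shows up in the query-based suite without any further action, while the static suite still holds only the case that was added to it by hand.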

You can also copy suites from other plans by clicking on the blue arrow. For example, when you create your Beta 2 test plan, you might want to carry forward some of the Beta 1 test suites.

If you highlight a test suite, you will see all of that test suite's test cases to the right. You will learn how to work with test cases next. For now, note that you can change the State of a test suite by clicking on the State drop-down. Test suites can have one of the following three valid states:

In planning — This indicates that you are still authoring your test cases, and that they aren't yet ready to run.

In progress — This means that test cases in this suite should be run by the testing team. Only test suites that are "In progress" will show up in the Test activity for testers to run. This is the default state for new test suites.

Completed — This should be used when you no longer want to run the test cases that make up this suite. For example, if all of the test cases that make up this suite are passing for current builds, then you may deem it unnecessary to continue to run those tests.

You can add a test case to a requirements-based test suite or a static test suite by first highlighting that suite, and then clicking New or Add on the right side of the activity window. Click New to create a brand new test case, or Add to browse for an existing test case. When you add test cases to a requirements-based test suite, a "Tests/Tested By" link will be made between your test case work item and the requirement work item.

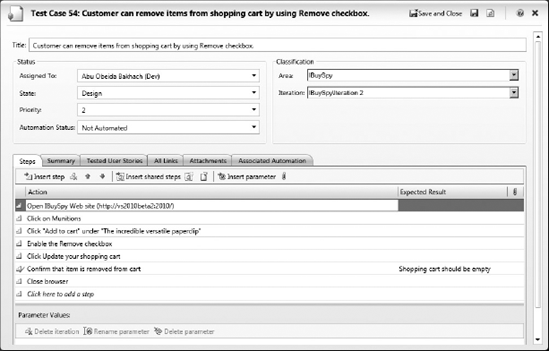

Clicking on New will display a new test case form. Figure 14-9 shows a test case that has already been authored.

The top portion of this form should look familiar if you've worked with any other work items in Team Foundation Server before. But the Steps tab is where the test case form gets interesting, since this is where you can author the steps that a generalist tester should take when running this test case.

You can start by simply typing the actions that you want the tester to perform during the test case. Each step should go on a new row. You can place your cursor on a new row and begin typing, or press Enter when you are ready to type a new row. You can also use the toolbar to manage the insertion/deletion of steps or to move steps up or down in the list of test steps.

The Expected Result column is used to tell the tester what he or she should be verifying as the tester runs the test case. For example, after creating a new account, the tester should see a message indicating that the account creation was successful. Specifying an expected result changes the test step to be a verification step. The tester will be expected to report on the status of each verification step to indicate whether or not that test step was successful.

You can also add attachments (such as an image) to a test step to provide further instructions to a tester about what to do or what the tester should be verifying. To add an attachment, right-click on a test step and click "Manage test step attachments." You will be prompted to upload the files that you want to attach to this test step.

Finally, parameters can be used to provide different values for a test step. For example, you might want to test the process of creating a new user account by trying different values for username, password, and so on. Instead of writing a new test case for each set of values you want to test, you can simply parameterize a single test case with multiple values. Each row of data you specify will result in a separate iteration of the test case during a test run. To create a new parameter, use the "@" symbol within a test step preceding a variable name, as shown here:

Type @username and @password and click OK

This will create two new parameters, username and password, in the Parameter Values table at the bottom of the test case. You can then supply values for these parameters within the table. These values will be used later on when running the test. Each row of your Parameter Values table will correspond to a unique iteration when running this test case.
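The `@` convention can be modeled with a regular expression: each `@name` becomes a parameter, and each row of the Parameter Values table produces one iteration with the values substituted. This is a sketch of the behavior described above, assuming parameter names consist of letters, digits, and underscores; the helper names are hypothetical, and this is not how Test Manager parses steps internally.

```python
import re
from typing import Dict, List

PARAMETER = re.compile(r"@(\w+)")


def parameter_names(step: str) -> List[str]:
    """Parameters introduced with '@', in first-seen order, without duplicates."""
    return list(dict.fromkeys(PARAMETER.findall(step)))


def iterations(step: str, rows: List[Dict[str, str]]) -> List[str]:
    """One concrete version of the step per row of parameter values."""
    return [PARAMETER.sub(lambda match: row[match.group(1)], step) for row in rows]


step = "Type @username and @password and click OK"
rows = [{"username": "alice", "password": "Pa55word!"},
        {"username": "bob", "password": ""}]  # second row tries an empty password
print(parameter_names(step))  # ['username', 'password']
for text in iterations(step, rows):
    print(text)
# Type alice and Pa55word! and click OK
# Type bob and  and click OK
```

Two rows yield two iterations of the same test case, which is the point of parameterizing rather than copying the test case.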

Note

Parameter Values can also be used by coded UI tests, as you will see in Chapter 15.

There may be times when you have steps within your test plan that are repeated across multiple test cases. A good example of this is the process of creating an account, or signing into a Web site, before completing other steps within a test case. Instead of authoring (and maintaining) these common steps within each test case, you can utilize shared steps.

Shared steps allow you to author and maintain common test steps within a unique container. Like test cases, shared steps are also persisted as work items within Team Foundation Server. Shared steps are most valuable for protecting your test cases in the event that these common test steps change, such as if you modify the process of creating an account or signing into the application. Instead of needing to change these steps within multiple test cases, you can simply update the shared steps work item. Test cases that include those shared steps will be updated automatically. Action recordings, which you will learn about later, are also stored within shared steps. This means that you can update the action recordings for a set of shared steps in a single location, instead of needing to re-create the action recording for each test case that includes those shared steps.
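The maintenance benefit of shared steps comes from reference semantics: test cases point at one shared steps work item instead of holding their own copies. The hypothetical classes below illustrate that idea in Python; they are not the Team Foundation Server object model.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass
class SharedSteps:
    id: int
    title: str
    steps: List[str]


@dataclass
class ManualTestCase:
    title: str
    steps: List[Union[str, SharedSteps]]

    def expanded_steps(self) -> List[str]:
        """Flatten the test case, resolving shared steps by reference."""
        result: List[str] = []
        for step in self.steps:
            if isinstance(step, SharedSteps):
                result.extend(step.steps)  # read from the single shared copy
            else:
                result.append(step)
        return result


sign_in = SharedSteps(7, "Sign in", ["Open the home page",
                                     "Type @username and @password",
                                     "Click Sign In"])
checkout = ManualTestCase("Checkout", [sign_in, "Add an item to the cart", "Click Checkout"])
profile = ManualTestCase("Edit profile", [sign_in, "Click My Profile"])

# The sign-in page changes: update the shared steps once...
sign_in.steps.insert(2, "Clear the Remember me check box")

# ...and every test case that uses them sees the new step.
print(checkout.expanded_steps()[2])  # Clear the Remember me check box
print(profile.expanded_steps()[2])   # Clear the Remember me check box
```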

To create shared test steps from within a test case, highlight the first step in the series of common steps that you wish to convert into shared steps. While pressing the Shift key, click on the last step in the list of steps that you wish to convert into shared steps. Now, right-click on this range of steps and select "Create shared steps," as shown in Figure 14-10.

You will be prompted to give your shared steps a name. Afterward, the common steps in your test case will be collapsed into a single, bolded test step, as shown in Figure 14-11. You can open and edit shared steps by right-clicking on them and selecting "Open shared steps." You can also insert other shared steps by right-clicking and choosing "Insert shared steps."

Earlier, you learned how test configurations can be assigned to a test plan. This defines the default test configurations that all test cases in this test plan should utilize. However, you can override your test plan's test configurations setting for individual test cases, or for an individual test suite.

To override the test configuration for an individual test case, first select a test suite from within the Contents planning activity. Then select a test case from the right-hand pane of the activity. Click Configurations to display the Select Test Configurations activity shown in Figure 14-12. Click on "All configurations" to display the full list of configurations available. From here, you can select the test configurations that should be assigned to this test case.

To apply new test configurations to an entire test suite, right-click the test suite and choose "Select test configurations for all tests."

You can assign test cases to the testers who should run them. Do this by selecting a test suite and then clicking on Assign from within the Plan Contents activity. The Assign Testers activity will appear, allowing you to assign test cases to individual testers.

Note

Assigning testers to test cases is only used as a guide to help the test team divide up work. Test Manager won't prevent a tester from running test cases that are assigned to another tester.

Now that you know how to work with test plans, it's time to learn how to run test cases and track their results using Test Manager.

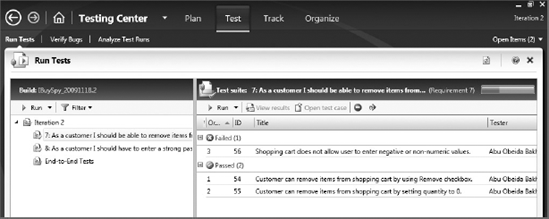

Open the Run Tests activity (click Test, then Run Tests).

Any test suites that are set to a status of "In progress" will be shown along the left side of this activity pane. Along the right side of this activity pane you will see the test cases within the currently selected test suite.

Note that each test case may be listed multiple times if there are multiple test configurations assigned to that test case. You can use the Filter button to choose which test configurations you are ready to test. This way, you can show just the test configurations that you can support on the machine you are currently testing with.
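The Run Tests list can be thought of as one row per pair of test case and configuration, where a test case uses the plan's default configurations unless they have been overridden for that test case. The following sketch is hypothetical (the configuration labels and helper names are invented) and shows both the expansion and the kind of filtering the Filter button performs.

```python
from typing import Dict, List, Optional, Set, Tuple

Row = Tuple[str, str]  # (test case, configuration)


def run_list(cases: List[str], plan_defaults: List[str],
             overrides: Optional[Dict[str, List[str]]] = None) -> List[Row]:
    """One row per test case and configuration; overrides replace plan defaults."""
    overrides = overrides or {}
    return [(case, config)
            for case in cases
            for config in overrides.get(case, plan_defaults)]


def filter_rows(rows: List[Row], supported: Set[str]) -> List[Row]:
    """Keep only rows whose configuration this machine can test."""
    return [row for row in rows if row[1] in supported]


rows = run_list(["Create account", "Sign in"],
                plan_defaults=["Vista + IE7", "Windows 7 + IE8"],
                overrides={"Sign in": ["Windows 7 + IE8"]})
print(len(rows))  # 3
print(filter_rows(rows, {"Windows 7 + IE8"}))
# [('Create account', 'Windows 7 + IE8'), ('Sign in', 'Windows 7 + IE8')]
```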

This view also shows you the status of each test case from the last time it was run (passed, failed, or inconclusive for tests that have not yet been run). Tests that are not ready to be run will be marked with a blocked icon. You might want to block certain test cases if they are not yet implemented in the current build you are using, or if you know that they will fail because they depend on other test cases that are failing. For example, a test case that relies on logging in as a new user account could fail if the test case for creating a new account is failing. You can toggle which test cases are blocked by using the "Block test" and "Reset test to active" buttons on the right-hand side of the activity pane.
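Deciding what to block often means following dependencies: if creating an account fails, everything that needs a new account is suspect, and so is anything that depends on those tests in turn. Test Manager leaves this judgment to you; the helper below is a hypothetical aid, assuming you keep your own map of which test cases depend on which.

```python
from typing import Dict, List, Set


def blocking_candidates(depends_on: Dict[str, Set[str]], failing: Set[str]) -> List[str]:
    """Tests that depend, directly or transitively, on a failing test case."""
    blocked: Set[str] = set()
    changed = True
    while changed:  # repeat until no new test becomes blocked
        changed = False
        for test, prerequisites in depends_on.items():
            if test not in blocked and prerequisites & (failing | blocked):
                blocked.add(test)
                changed = True
    return sorted(blocked)


depends_on = {
    "Sign in as new user": {"Create account"},
    "Edit profile": {"Sign in as new user"},
    "Browse catalog": set(),
}
print(blocking_candidates(depends_on, failing={"Create account"}))
# ['Edit profile', 'Sign in as new user']
```

The result is only a list of candidates; you still decide which of them to mark with "Block test."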

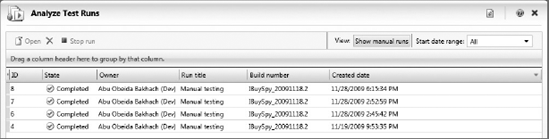

You can learn more about previous runs for a test case by selecting that test case and clicking on "View results." You can also use the Analyze Test Runs activity (click Test, then Analyze Test Runs) to review the test runs recorded for your test plan.

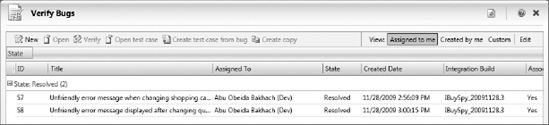

The My Bugs activity, available from the Test area, lists the bugs that belong to you.

You can also construct a custom query from this view, such as to build a query composed of the bugs belonging to all of the members of your team.

Microsoft Test Runner is used to exercise test runs. To start a test run, return to the Run Tests activity (click Test, then Run Tests), select the test cases you want to run, and click Run.

Note

You can also run all of the active tests within a test suite by clicking on the Run icon located above the list of test suites. Click the down arrow next to the Run icon and choose "Run with options" if you want to override the test plan's default test settings, build, or test environment for your test run. For example, you may decide to perform most of your testing with a test setting that has a minimum number of data diagnostic adapters enabled. This can minimize system overhead and speed up your test runs. Then, if you find a bug, you can re-run the test with a test setting that is configured to capture more information (for example, a video recording, or IntelliTrace file), which can help the developer diagnose and fix the bug.

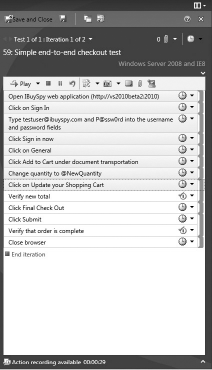

Test Runner will be launched as shown in Figure 14-16. Test Runner is now ready to help you run the test cases that you selected for this run.

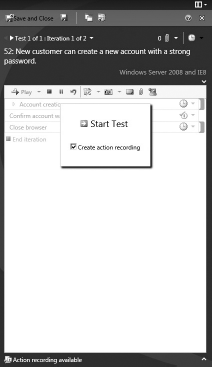

Test Runner allows you to record an action recording that can be used to "fast-forward" through test steps during future test runs. This feature is known as fast-forward for manual testing. Playing back an action recording can dramatically speed up a test run by performing actions far faster than a human could perform them. This can also make a generalist tester's job less mundane by allowing the tester to focus on examining an application for bugs, instead of following a mindless script of clicking and typing repeatedly. Action recordings can even be used as the basis for creating fully automated coded UI tests, as you will see in Chapter 15.

To create an action recording, select "Create action recording" and click on Start Test.

Test Runner will now open a list of test steps for the test case you are currently running, as shown in Figure 14-17. If a step has an Expected Result value, it will be shown here as well to help guide the tester about what he or she should be validating.

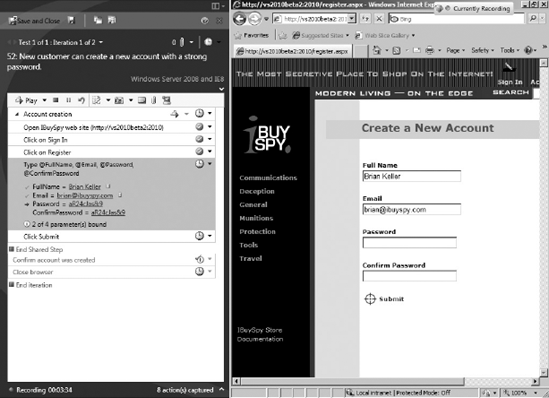

If you chose to create an action recording, then every interaction you have with the applications you are testing will be captured. To gather a clean recording, you should be careful not to perform steps that are not part of your test case. This includes launching other applications or interacting with the desktop. Interactions with the Test Runner user interface are excluded from your action recording by default, so you don't have to worry about these polluting your recording. You can exclude other applications (such as an instant messaging application) by configuring the test settings for Action Recording and Action Log, as shown in Figure 14-5. You can also use the Pause button on the Test Runner toolbar to pause the action recording, allowing you to interact with other applications before returning to your test run.

Note

The ability of Microsoft Test Runner to capture action recordings depends on the type of application being tested. See the "Supported Technology" section later in this chapter for more information.

As you are running a test case, you can report on whether each test step passes or fails. Do this by clicking on the drop-down to the right of each test step, or by using the keyboard shortcuts. By default, Windows Key + Shift + P will pass a test step, and Windows Key + Shift + F will fail a test step.

You are only required to report on the status of validation steps, those that are marked with a checkmark icon. Failing to indicate whether or not a validation step has passed will cause the test case to default to a failed state.
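The rule for rolling step results up into a test case result can be sketched as follows. This is a hypothetical model of the behavior described above; the names are invented, and treating a failed action step as failing the whole test case is an assumption, not something stated in this chapter.

```python
from enum import Enum
from typing import List, Tuple


class StepResult(Enum):
    PASSED = "Passed"
    FAILED = "Failed"
    NOT_MARKED = "Not marked"


def rollup_outcome(steps: List[Tuple[bool, StepResult]]) -> str:
    """steps holds (is_validation_step, result) pairs in test-step order."""
    for is_validation, result in steps:
        if result is StepResult.FAILED:
            return "Failed"  # assumption: any failed step fails the test case
        if is_validation and result is StepResult.NOT_MARKED:
            return "Failed"  # unmarked validation steps default to failed
    return "Passed"  # unmarked action steps are allowed


print(rollup_outcome([(False, StepResult.NOT_MARKED), (True, StepResult.PASSED)]))  # Passed
print(rollup_outcome([(False, StepResult.PASSED), (True, StepResult.NOT_MARKED)]))  # Failed
```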

If you are capturing an action recording, you should report on the status of each test step as you perform it. This makes it possible for the action recording log to correlate individual test steps with the actions that they are composed of. This is important for playing back individual test steps later on, and when using the action recording log to create coded UI tests.

If your test has parameter values, these will be automatically copied to your clipboard as they are encountered in your test case. This way, you can simply place your cursor where these values should be entered and press Ctrl + V to paste them. If you are capturing an action recording, Test Runner will remember the field that you pasted those values into, and bind that field to the parameter. This binding will be used later during playback. Figure 14-17 shows a test step with parameter values being bound to fields on a Web application.

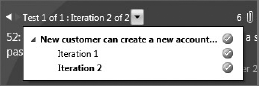

After you have finished running your test case iteration, click on "End iteration." If your test run included multiple test cases, or multiple iterations for a given test case, then you can select the next test case or iteration to run from the drop-down, as shown in Figure 14-18. A test case will consist of multiple iterations if you are using parameter values. Each row of your parameter values will generate a unique iteration.

If an action recording is available for the test case you are running, you will see the text "Action recording available" at the bottom of Test Runner. This means that you can use this action recording to play back one or more test steps.

To play back an action recording, select the first step that you wish to play back. Then, press Shift and click on the last step that you want to play back. Now, click on Play, as shown in Figure 14-19.

Test Runner will begin playing back the actions that you recorded earlier. This includes launching applications, clicking on windows and controls, and entering values. It is important that you don't use your mouse or keyboard while this is being played back, or else you might interfere with the playback.

You can use action recordings to play back an entire test case or just sections of a test case. You can also launch playback multiple times within a test case, selecting a new range of test steps each time. Launching multiple times is helpful to give you a chance to inspect the application and verify that it's behaving properly. You may also choose to play back steps of the recording that you know work, and manually perform actions that may not match the last recording (such as if the user interface changed for a given set of steps). Depending on the type of user interface change, it may eventually become necessary to re-record the action recording for a test case.

Fast-forward for manual testing requires that your application be built using one of several supported technologies. The testing framework must understand the underlying technology in order to interact with the application being tested. The list of supported technologies is expected to grow over time, and Visual Studio 2010 offers an extensibility framework that allows third parties to build their own testing providers. However, if your application uses a technology for which no testing provider is available, you will not be able to benefit from fast-forward for manual testing.

Note

For a complete list of supported technologies and caveats, consult the Visual Studio 2010 product documentation.

The results of test runs conducted with Test Runner can be published to Team Foundation Server. When you are finished with a test run, click Save and Close to save your test run results. Alternatively, you can abandon a test run by clicking the X to close Test Runner. Saved test results can be viewed later from within Test Manager, as shown in Figure 14-20.

Depending on the test settings you are using, and whether you are capturing an action recording, your test runs may include a variety of attachments. These might include a video recording, action recordings, system information, or any other artifacts captured by the diagnostic data adapters configured in your test settings. You can also capture additional information (such as screenshots or comments) by using the toolbar above the list of test steps within Test Runner.

Finally, you can use Test Runner to file bugs by clicking the "Create bug" button. When you file a bug with Test Runner, all of the attachments from your test run are included with the bug. This makes it easier for developers to understand how the bug was discovered and gives them richer information that may help them resolve it later on. For example, if you opted to capture an IntelliTrace file, it will be included here. When the developer opens this bug, he or she can use this data to diagnose and fix the bug more quickly.

Note

Saving the results of a failed test run does not automatically file a bug. If you don't file a bug for a failed test, then the developer may never learn that there is a problem.

Over time, you may decide to add automated tests to your test plan. Automated tests are more expensive to author and maintain, but they can run without manual interaction, which makes them well suited to quickly catching bugs caused by changes to source code. In Chapter 12, you learned how to use Visual Studio to manage automated tests (such as unit tests, coded UI tests, and Web performance tests). You can also manage automated tests as part of the same test plans that you use within Test Manager.

Automated tests can be run as part of your automated builds, and the status of those tests can be published along with the rest of the tests within your test plan. The main advantage of managing your automated tests along with your manual tests is that you can gain a consolidated view across your entire test plan of how your application's quality is trending.
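Test Manager produces this consolidated view for you, but the idea behind it is simple enough to sketch. The following hypothetical example assumes that each test result is a tuple of (test case ID, "manual" or "automated", outcome) and computes the pass rate for each kind of test as well as for the plan as a whole; the `pass_rates` function, the tuple layout, and the sample IDs are illustrative assumptions, not part of any Team Foundation Server API.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

# Hypothetical result record: (test case ID, "manual" or "automated", outcome).
Result = Tuple[int, str, str]


def pass_rates(results: Iterable[Result]) -> Dict[str, float]:
    """Return the fraction of passed results per kind of test and for "all"."""
    passed: Counter = Counter()
    total: Counter = Counter()
    for _case_id, kind, outcome in results:
        # Count each result once under its own kind and once under "all".
        for key in (kind, "all"):
            total[key] += 1
            if outcome == "Passed":
                passed[key] += 1
    return {key: passed[key] / total[key] for key in total}


results = [
    (101, "manual", "Passed"),
    (102, "manual", "Failed"),
    (201, "automated", "Passed"),
    (202, "automated", "Passed"),
]
print(pass_rates(results))
```

Because manual and automated results feed the same summary, a drop in either kind shows up in the plan-wide figure.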

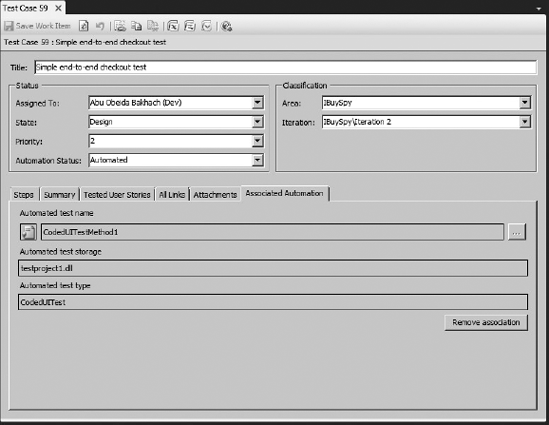

To use automated tests within Test Manager, you must first create an automated test in Visual Studio 2010 and check it in to Team Foundation Server version control. Next, from within Visual Studio 2010, open the work item corresponding to the test case that you want to automate. Click the Associated Automation tab, as shown in Figure 14-21.

Use the ellipsis (...) to the right of the "Associated test name" field to browse for the automated test you want to use when running this test case. After you select a test, the remaining fields on this form are populated for you. Save the work item.

Now, when you run this test from within Test Manager, it will be run automatically without requiring user intervention. Additionally, if you configure Team Foundation Build to run this test as part of an automated build (see Chapter 21), then the status of this test will automatically be reported back to your test plan, so there is no need to run this test from within Test Manager unless you want to reproduce a test run.

You will learn how to create a coded UI test in Chapter 15. After creating a coded UI test, you may wish to revisit this topic to wire up your coded UI test as associated automation for an existing test case.

Note

Before you can run automated tests within Test Manager for the first time, you must define an automated test environment and automated test settings for your test plan. Test settings within Test Manager were introduced in this chapter; automated test settings and test environments are covered in greater detail in Chapter 16.

This chapter provided you with a basic understanding of how Microsoft Test Manager can help testers author, manage, and execute manual test plans. You learned how features such as test impact analysis and changesets can help you determine which test cases to run next.

You learned how Microsoft Test Runner guides a generalist tester through the steps that make up a test case, and allows for granular reporting of whether each test step passed or failed. You saw how action recordings can make generalist testers more efficient by helping them "fast-forward" through ranges of test steps.

You also learned how Visual Studio 2010 can improve communications between testers and developers by automatically capturing rich information about test runs. This information can help developers understand how bugs were encountered, and can even provide them with information to help them more quickly resolve those bugs.

In Chapter 15, you will discover how you can convert manual test cases into fully automated UI tests by starting with the action recordings you captured using Microsoft Test Runner. In Chapter 16, you will learn how Microsoft Test Manager can be used to create virtual environments for running your tests.