13

Applied Machine Learning: Identifying Credit Default

In recent years, we have witnessed machine learning gaining more and more popularity in solving traditional business problems. Every so often, a new algorithm is published, beating the current state of the art. It is only natural for businesses (in all industries) to try to leverage the incredible powers of machine learning in their core functionalities.

Before specifying the task we will be focusing on in this chapter, we provide a brief introduction to the field of machine learning. The machine learning domain can be broken down into two main areas: supervised learning and unsupervised learning. In the former, we have a target variable (label), which we try to predict as accurately as possible. In the latter, there is no target, and we try to use different techniques to draw some insights from the data.

We can further break down supervised problems into regression problems (where a target variable is a continuous number, such as income or the price of a house) and classification problems (where the target is a class, either binary or multi-class). An example of unsupervised learning is clustering, which is often used for customer segmentation.

In this chapter, we tackle a binary classification problem set in the financial industry. We work with a dataset contributed to the UCI Machine Learning Repository, which is a very popular data repository. The dataset used in this chapter was collected in a Taiwanese bank in October 2005. The study was motivated by the fact that—at that time—more and more banks were giving credit (either cash or via credit cards) to willing customers. On top of that, more people, regardless of their repayment capabilities, accumulated significant amounts of debt. All of this led to situations in which some people were unable to repay their outstanding debts. In other words, they defaulted on their loans.

The goal of the study was to use some basic information about customers (such as gender, age, and education level), together with their past repayment history, to predict which of them were likely to default. The setting can be described as follows—using the previous 6 months of repayment history (April-September 2005), we try to predict whether the customer will default in October 2005. Naturally, such a study could be generalized to predict whether a customer will default in the next month, within the next quarter, and so on.

By the end of this chapter, you will be familiar with a real-life approach to a machine learning task, from gathering and cleaning data to building and tuning a classifier. Another takeaway is understanding the general approach to machine learning projects, which can then be applied to many different tasks, be it churn prediction or estimating the price of new real estate in a neighborhood.

In this chapter, we focus on the following recipes:

- Loading data and managing data types

- Exploratory data analysis

- Splitting data into training and test sets

- Identifying and dealing with missing values

- Encoding categorical variables

- Fitting a decision tree classifier

- Organizing the project with pipelines

- Tuning hyperparameters using grid search and cross-validation

Loading data and managing data types

In this recipe, we show how to load a dataset from a CSV file into Python. The very same principles can be used for other file formats as well, as long as they are supported by pandas. Some popular formats include Parquet, JSON, XLM, Excel, and Feather.

pandas has a very consistent API, which makes finding its functions much easier. For example, all functions used for loading data from various sources have the syntax pd.read_xxx, where xxx should be replaced by the file format.

We also show how certain data type conversions can significantly reduce the size of DataFrames in the memory of our computers. This can be especially important when working with large datasets (GBs or TBs), which can simply not fit into memory unless we optimize their usage.

In order to present a more realistic scenario (including messy data, missing values, and so on) we applied some transformations to the original dataset. For more information on those changes, please refer to the accompanying GitHub repository.

How to do it...

Execute the following steps to load a dataset from a CSV file into Python:

- Import the libraries:

import pandas as pd - Load the data from the CSV file:

df = pd.read_csv("../Datasets/credit_card_default.csv", na_values="") dfRunning the snippet generates the following preview of the dataset:

Figure 13.1: Preview of the dataset. Not all columns were displayed

The DataFrame has 30,000 rows and 24 columns. It contains a mix of numeric and categorical variables.

- View the summary of the DataFrame:

df.info()Running the snippet generates the following summary:

RangeIndex: 30000 entries, 0 to 29999 Data columns (total 24 columns): # Column Non-Null Count Dtype --- ------ -------------- ----- 0 limit_bal 30000 non-null int64 1 sex 29850 non-null object 2 education 29850 non-null object 3 marriage 29850 non-null object 4 age 29850 non-null float64 5 payment_status_sep 30000 non-null object 6 payment_status_aug 30000 non-null object 7 payment_status_jul 30000 non-null object 8 payment_status_jun 30000 non-null object 9 payment_status_may 30000 non-null object 10 payment_status_apr 30000 non-null object 11 bill_statement_sep 30000 non-null int64 12 bill_statement_aug 30000 non-null int64 13 bill_statement_jul 30000 non-null int64 14 bill_statement_jun 30000 non-null int64 15 bill_statement_may 30000 non-null int64 16 bill_statement_apr 30000 non-null int64 17 previous_payment_sep 30000 non-null int64 18 previous_payment_aug 30000 non-null int64 19 previous_payment_jul 30000 non-null int64 20 previous_payment_jun 30000 non-null int64 21 previous_payment_may 30000 non-null int64 22 previous_payment_apr 30000 non-null int64 23 default_payment_next_month 30000 non-null int64 dtypes: float64(1), int64(14), object(9) memory usage: 5.5+ MBIn the summary, we can see information about the columns and their data types, the number of non-null (in other words, non-missing) values, the memory usage, and so on.

We can also observe a few distinct data types: floats (floating-point numbers, such as 3.42), integers, and objects. The last ones are the

pandasrepresentation of string variables. The number next tofloatandintindicates how many bits this type uses to represent a particular value. The default types use 64 bits (or 8 bytes) of memory.The basic

int8type covers integers in the following range: -128 to 127.uint8stands for unsigned integer and covers the same total span, but only the non-negative values, that is, 0 to 255. By knowing the range of values covered by specific data types (please refer to the link in the See also section), we can try to optimize allocated memory. For example, for features such as the month of purchase (represented by numbers in the range 1-12), there is no point in using the defaultint64, as a much smaller type would suffice.

- Define a function for inspecting the exact memory usage of a DataFrame:

def get_df_memory_usage(df, top_columns=5): print("Memory usage ----") memory_per_column = df.memory_usage(deep=True) / (1024 ** 2) print(f"Top {top_columns} columns by memory (MB):") print(memory_per_column.sort_values(ascending=False) .head(top_columns)) print(f"Total size: {memory_per_column.sum():.2f} MB")We can now apply the function to our DataFrame:

get_df_memory_usage(df, 5)Running the snippet generates the following output:

Memory usage ---- Top 5 columns by memory (MB): education 1.965001 payment_status_sep 1.954342 payment_status_aug 1.920288 payment_status_jul 1.916343 payment_status_jun 1.904229 dtype: float64 Total size: 20.47 MBIn the output, we can see that the 5.5+ MB reported by the

infomethod turned out to be almost 4 times more. This is still very small in terms of current machines’ capabilities, however, the memory-saving principles we show in this chapter apply just as well to DataFrames measured in gigabytes.

- Convert the columns with the

objectdata type into thecategorytype:object_columns = df.select_dtypes(include="object").columns df[object_columns] = df[object_columns].astype("category") get_df_memory_usage(df)Running the snippet generates the following overview:

Memory usage ---- Top 5 columns by memory (MB): bill_statement_sep 0.228882 bill_statement_aug 0.228882 previous_payment_apr 0.228882 previous_payment_may 0.228882 previous_payment_jun 0.228882 dtype: float64 Total size: 3.70 MBJust by converting the

objectcolumns into apandas-native categorical representation, we managed to reduce the size of the DataFrame by ~80%!

- Downcast the numeric columns to integers:

numeric_columns = df.select_dtypes(include="number").columns for col in numeric_columns: df[col] = pd.to_numeric(df[col], downcast="integer") get_df_memory_usage(df)Running the snippet generates the following overview:

Memory usage ---- Top 5 columns by memory (MB): age 0.228882 bill_statement_sep 0.114441 limit_bal 0.114441 previous_payment_jun 0.114441 previous_payment_jul 0.114441 dtype: float64 Total size: 2.01 MBIn the summary, we can see that after a few data type conversions, the column that takes up the most memory is the one containing customers’ ages (you can see that in the output of

df.info(), not shown here for brevity). That is because it is encoded using afloatdata type and downcasting using theintegersetting was not applied tofloatcolumns.

- Downcast the

agecolumn using thefloatdata type:df["age"] = pd.to_numeric(df["age"], downcast="float") get_df_memory_usage(df)

Running the snippet generates the following overview:

Memory usage ----

Top 5 columns by memory (MB):

bill_statement_sep 0.114441

limit_bal 0.114441

previous_payment_jun 0.114441

previous_payment_jul 0.114441

previous_payment_aug 0.114441

dtype: float64

Total size: 1.90 MB

Using various data type conversions, we have managed to reduce the memory size of our DataFrame from 20.5 MB to 1.9 MB, which is a 91% reduction.

How it works...

After importing pandas, we loaded the CSV file by using the pd.read_csv function. When doing so, we indicated that empty strings should be interpreted as missing values.

In Step 3, we displayed a summary of the DataFrame to inspect its contents. To get a better understanding of the dataset, we provide a simplified description of the variables:

limit_bal—the amount of the given credit (NT dollars)sex—biological sexeducation—level of educationmarriage— marital statusage—age of the customerpayment_status_{month}—status of payments in one of the previous 6 monthsbill_statement_{month}—the number of bill statements (NT dollars) in one of the previous 6 monthsprevious_payment_{month}—the number of previous payments (NT dollars) in one of the previous 6 monthsdefault_payment_next_month—the target variable indicating whether the customer defaulted on the payment in the following month

In general, pandas tries to load and store data as efficiently as possible. It automatically assigns data types (which we can inspect by using the dtypes method of a pandas DataFrame). However, there are some tricks that can lead to much better memory allocation, which definitely makes working with larger tables (in hundreds of MBs, or even GBs) easier and more efficient.

In Step 4, we defined a function for inspecting the exact memory usage of a DataFrame. The memory_usage method returns a pandas Series with the memory usage (in bytes) for each of the DataFrame’s columns. We converted the output into MBs to make it easier to understand.

When using the memory_usage method, we specified deep=True. That is because the object data type, unlike other dtypes (short for data types), does not have a fixed memory allocation for each cell. In other words, as the object dtype usually corresponds to text, it means that the amount of memory used depends on the number of characters in each cell. Intuitively, the more characters in a string, the more memory that cell uses.

In Step 5, we leveraged a special data type called category to reduce the DataFrame’s memory usage. The underlying idea is that string variables are encoded as integers, and pandas uses a special mapping dictionary to decode them back into their original form. This is especially useful when dealing with a limited number of distinct values, for example, certain levels of education, country of origin, and so on. To save memory, we first identified all the columns with the object data type using the select_dtypes method. Then, we changed the data type of those columns from object to category. We did so using the astype method.

We should know when it is actually profitable (from the memory’s perspective) to use the category data type. A rule of thumb is to use it for variables with a ratio of unique observations to the overall number of observations lower than 50%.

In Step 6, we used the select_dtypes method to identify all numeric columns. Then, using a for loop iterating over the identified columns, we converted the values to numeric using the pd.to_numeric function. This might strike as odd, given that we first identified the numeric columns and then converted them to numeric again. However, the crucial part is the downcast argument of the function. By passing the "integer" value, we have optimized the memory usage of all the integer columns by downcasting the default int64 data type to smaller alternatives (int32 and int8).

Even though we applied the function to all numeric columns, only the ones that contained integers were successfully transformed. That is why in Step 7 we additionally downcasted the float column containing the clients’ ages.

There’s more…

In this recipe, we have mentioned how to optimize the memory usage of a pandas DataFrame. We first loaded the data into Python, then we inspected the columns, and at the end we converted the data types of some columns to reduce memory usage. However, such an approach might not be possible, as the data might simply not fit into memory in the first place.

If that is the case, we can also try the following:

- Read the dataset in chunks (by using the

chunkargument ofpd.read_csv). For example, we could load just the first 100 rows of data. - Read only the columns we actually need (by using the

usecolsargument ofpd.read_csv). - While loading the data, use the

column_dtypesargument to define the data types used for each of the columns.

To illustrate, we can use the following snippet to load our dataset and while doing so indicate that the selected three columns should have a category data type:

column_dtypes = {

"education": "category",

"marriage": "category",

"sex": "category"

}

df_cat = pd.read_csv("../Datasets/credit_card_default.csv",

na_values="", dtype=column_dtypes)

If all of those approaches fail, we should not give up. While pandas is definitely the gold standard of working with tabular data in Python, we can leverage the power of some alternative libraries, which were built specifically for such a case. Below you can find a list of libraries you could use when working with large volumes of data:

Dask: an open-source library for distributed computing. It facilitates running many computations at the same time, either on a single machine or on clusters of CPUs. Under the hood, the library breaks down a single large data processing job into many smaller tasks, which are then handled bynumpyorpandas. As the last step, the library reassembles the results into a coherent whole.Modin: a library designed to parallelizepandasDataFrames by automatically distributing the computation across all of the system’s available CPU cores. The library divides an existing DataFrame into different parts such that each part can be sent to a different CPU core.Vaex: an open-source DataFrame library specializing in lazy out-of-core DataFrames. Vaex requires negligible amounts of RAM for inspecting and interacting with a dataset of arbitrary size, all thanks to combining the concepts of lazy evaluations and memory mapping.datatable: an open-source library for manipulating 2-dimensional tabular data. In many ways, it is similar topandas, with special emphasis on speed and the volume of data (up to 100 GB) while using a single-node machine. If you have worked with R, you might already be familiar with the related package calleddata.table, which is R users’ go-to package when it comes to the fast aggregation of large data.cuDF: a GPU DataFrame library that is part of NVIDIA’s RAPIDS, a data science ecosystem spanning multiple open-source libraries and leveraging the power of GPUs.cuDFallows us to use apandas-like API to benefit from the performance boost without going into the details of CUDA programming.polars: an open-source DataFrame library that achieves phenomenal computation speed by leveraging Rust (programming language) with Apache Arrow as its memory model.

See also

Additional resources:

- Dua, D. and Graff, C. (2019). UCI Machine Learning Repository [http://archive.ics.uci.edu/ml]. Irvine, CA: University of California, School of Information and Computer Science.

- Yeh, I. C. & Lien, C. H. (2009). “The comparisons of data mining techniques for the predictive accuracy of probability of default of credit card clients.” Expert Systems with Applications, 36(2), 2473-2480. https://doi.org/10.1016/j.eswa.2007.12.020.

- List of different data types used in Python: https://numpy.org/doc/stable/user/basics.types.html#.

Exploratory data analysis

The second step of a data science project is to carry out Exploratory Data Analysis (EDA). By doing so, we get to know the data we are supposed to work with. This is also the step during which we test the extent of our domain knowledge. For example, the company we are working for might assume that the majority of its customers are people between the ages of 18 and 25. But is this actually the case? While doing EDA we might also run into some patterns that we do not understand, which are then a starting point for a discussion with our stakeholders.

While doing EDA, we can try to answer the following questions:

- What kind of data do we actually have, and how should we treat different data types?

- What is the distribution of the variables?

- Are there outliers in the data and how can we treat them?

- Are any transformations required? For example, some models work better with (or require) normally distributed variables, so we might want to use techniques such as log transformation.

- Does the distribution vary per group (for example, sex or education level)?

- Do we have cases of missing data? How frequent are these, and in which variables do they occur?

- Is there a linear relationship (correlation) between some variables?

- Can we create new features using the existing set of variables? An example might be deriving an hour/minute from a timestamp, a day of the week from a date, and so on.

- Are there any variables that we can remove as they are not relevant for the analysis? An example might be a randomly generated customer identifier.

Naturally, this list is non-exhaustive and carrying out the analysis might spark more questions than we initially had. EDA is extremely important in all data science projects, as it enables analysts to develop an understanding of the data, facilitates asking better questions, and makes it easier to pick modeling approaches suitable for the type of data being dealt with.

In real-life cases, it makes sense to first carry out a univariate analysis (one feature at a time) for all relevant features to get a good understanding of them. Then, we can proceed to multivariate analysis, that is, comparing distributions per group, correlations, and so on. For brevity, we only show selected analysis approaches to selected features, but a deeper analysis is highly encouraged.

Getting ready

We continue with exploring the data we loaded in the previous recipe.

How to do it...

Execute the following steps to carry out the EDA of the loan default dataset:

- Import the libraries:

import pandas as pd import numpy as np import seaborn as sns - Get summary statistics of the numeric variables:

df.describe().transpose().round(2)Running the snippet generates the following summary table:

Figure 13.2: Summary statistics of the numeric variables

- Get summary statistics of the categorical variables:

df.describe(include="object").transpose()Running the snippet generates the following summary table:

Figure 13.3: Summary statistics of the categorical variables

- Plot the distribution of age and split it by sex:

ax = sns.kdeplot(data=df, x="age", hue="sex", common_norm=False, fill=True) ax.set_title("Distribution of age")Running the snippet generates the following plot:

Figure 13.4: The KDE plot of age, grouped by sex

By analyzing the kernel density estimate (KDE) plot, we can say there is not much difference in the shape of the distribution per sex. The female sample is slightly younger, on average.

- Create a pairplot of selected variables:

COLS_TO_PLOT = ["age", "limit_bal", "previous_payment_sep"] pair_plot = sns.pairplot(df[COLS_TO_PLOT], kind="reg", diag_kind="kde", height=4, plot_kws={"line_kws":{"color":"red"}}) pair_plot.fig.suptitle("Pairplot of selected variables")Running the snippet generates the following plot:

Figure 13.5: A pairplot with KDE plots on the diagonal and fitted regression lines in each scatterplot

We can make a few observations from the created pairplot:

- The distribution of

previous_payment_sepis highly skewed—it has a very long tail. - Connected to the previous point, we can observe some very extreme values of

previous_payment_sepin the scatterplots. - It is difficult to draw conclusions from the scatterplots, as there are 30,000 observations on each of them. When plotting such volumes of data, we could use transparent markers to better visualize the density of the observation in certain areas.

- The outliers can have a significant impact on the regression lines.

Additionally, we can separate the sexes by specifying the

hueargument:pair_plot = sns.pairplot(data=df, x_vars=COLS_TO_PLOT, y_vars=COLS_TO_PLOT, hue="sex", height=4) pair_plot.fig.suptitle("Pairplot of selected variables")Running the snippet generates the following plot:

Figure 13.6: The pairplot with separate markers for each sex

While we can gain some more insights from the diagonal plots with the split per sex, the scatterplots are still quite unreadable due to the sheer volume of plotted data.

As a potential solution, we could randomly sample from the entire dataset and only plot the selected observations. A possible downside of that approach is that we might miss some observations with extreme values (outliers).

- The distribution of

- Analyze the relationship between age and limit balance:

ax = sns.jointplot(data=df, x="age", y="limit_bal", hue="sex", height=10) ax.fig.suptitle("Age vs. limit balance")Running the snippet generates the following plot:

Figure 13.7: A joint plot showing the relationship between age and limit balance, grouped by sex

A joint plot contains quite a lot of useful information. First of all, we can see the relationship between two variables on the scatterplot. Then, we can also investigate the distributions of the two variables individually using the KDE plots along the axes (we can also plot histograms instead).

- Define and run a function for plotting the correlation heatmap:

def plot_correlation_matrix(corr_mat): sns.set(style="white") mask = np.zeros_like(corr_mat, dtype=bool) mask[np.triu_indices_from(mask)] = True fig, ax = plt.subplots() cmap = sns.diverging_palette(240, 10, n=9, as_cmap=True) sns.heatmap(corr_mat, mask=mask, cmap=cmap, vmax=.3, center=0, square=True, linewidths=.5, cbar_kws={"shrink": .5}, ax=ax) ax.set_title("Correlation Matrix", fontsize=16) sns.set(style="darkgrid") corr_mat = df.select_dtypes(include="number").corr() plot_correlation_matrix(corr_mat)Running the snippet generates the following plot:

Figure 13.8: Correlation heatmap of the numeric features

We can see that age seems to be uncorrelated to any of the other features.

- Analyze the distribution of age in groups using box plots:

ax = sns.boxplot(data=df, y="age", x="marriage", hue="sex") ax.set_title("Distribution of age")Running the snippet generates the following plot:

Figure 13.9: Distribution of age by marital status and sex

The distributions seem quite similar within marital groups, with men always having a higher median age.

- Plot the distribution of limit balance for each sex and education level:

ax = sns.violinplot(x="education", y="limit_bal", hue="sex", split=True, data=df) ax.set_title( "Distribution of limit balance per education level", fontsize=16 )Running the snippet generates the following plot:

Figure 13.10: Distribution of limit balance by education level and sex

Inspecting the plot reveals a few interesting patterns:

- The largest balance appears in the group with the Graduate school level of education.

- The shape of the distribution is different per education level: the Graduate school level resembles the Others category, while the High school level is similar to the University level.

- In general, there are few differences between the sexes.

- Investigate the distribution of the target variable per sex and education level:

ax = sns.countplot("default_payment_next_month", hue="sex", data=df, orient="h") ax.set_title("Distribution of the target variable", fontsize=16)Running the snippet generates the following plot:

Figure 13.11: Distribution of the target variable by sex

By analyzing the plot, we can say that the percentage of defaults is higher among male customers.

- Investigate the percentage of defaults per education level:

ax = df.groupby("education")["default_payment_next_month"] .value_counts(normalize=True) .unstack() .plot(kind="barh", stacked="True") ax.set_title("Percentage of default per education level", fontsize=16) ax.legend(title="Default", bbox_to_anchor=(1,1))Running the snippet generates the following plot:

Figure 13.12: Percentage of defaults by education level

Relatively speaking, most defaults happen among customers with a high-school education, while the fewest defaults happen in the Others category.

How it works...

In the previous recipe, we already explored two DataFrame methods that are useful for starting exploratory data analysis: shape and info. We can use them to quickly learn the shape of the dataset (number of rows and columns), what data types are used for representing each feature, and so on.

In this recipe, we are mostly using the seaborn library, as it is the go-to library when it comes to exploring data. However, we could use alternative plotting libraries. The plot method of a pandas DataFrame is quite powerful and allows for quickly visualizing our data. Alternatively, we could use plotly (and its plotly.express module) to create fully interactive data visualizations.

In this recipe, we started the analysis by using a very simple yet powerful method of a pandas DataFrame—describe. It printed summary statistics, such as the count, mean, min/max, and quartiles of all the numeric variables in the DataFrame. By inspecting these metrics, we could infer the value range of a certain feature, or whether the distribution is skewed (by looking at the difference between the mean and median). Also, we could easily spot values outside the plausible range, for example, a negative or very young/old age.

We can include additional percentiles in the describe method by passing an extra argument, for example, percentiles=[.99]. In this case, we added the 99th percentile.

The count metric represents the number of non-null observations, so it is also a way to determine which numeric features contain missing values. Another way of investigating the presence of missing values is by running df.isnull().sum(). For more information on missing values, please see the Identifying and dealing with missing values recipe.

In Step 3, we added the include="object" argument while calling the describe method to inspect the categorical features separately. The output was different from the numeric features: we could see the count, the number of unique categories, which one was the most frequent, and how many times it appeared in the dataset.

We can use include="all"to display the summary metrics for all features—only the metrics available for a given data type will be present, while the rest will be filled with NA values.

In Step 4, we showed a way of investigating the distribution of a variable, in this case, the age of the customers. To do so, we created a KDE plot. It is a method of visualizing the distribution of a variable, very similar to a traditional histogram. KDE represents the data using a continuous probability density curve in one or more dimensions. One of its advantages over a histogram is that the resulting plot is less cluttered and easier to interpret, especially when considering multiple distributions at once.

A common source of confusion around the KDE plots is about the units on the density axis. In general, the kernel density estimation results in a probability distribution. However, the height of the curve at each point gives a density, instead of the probability. We can obtain a probability by integrating the density across a certain range. The KDE curve is normalized so that the integral over all possible values is equal to 1. This means that the scale of the density axis depends on the data values. To take it a step further, we can decide how to normalize the density when we are dealing with multiple categories in one plot. If we use common_norm=True, each density is scaled by the number of observations so that the total area under all curves sums to 1. Otherwise, the density of each category is normalized independently.

Together with a histogram, the KDE plot is one of the most popular methods of inspecting the distribution of a single feature. To create a histogram, we can use the sns.histplot function. Alternatively, we can use the plot method of a pandas DataFrame, while specifying kind="hist". We show examples of creating histograms in the accompanying Jupyter notebook (available on GitHub).

An extension of this analysis can be done by using a pairplot. It creates a matrix of plots, where the diagonal shows the univariate histograms or KDE plots, while the off-diagonal plots are scatterplots of two features. This way, we can also try to see if there is a relationship between the two features. To make identifying the potential relationships easier, we have also added the regression lines.

In our case, we only plotted three features. That is because with 30,000 observations it can take quite some time to render the plot for all numeric columns, not to mention losing readability with so many small plots in one matrix. When using pairplots, we can also specify the hue argument to add a split for a category (such as sex, or education level).

We can also zoom into a relationship between two variables using a joint plot (sns.jointplot). It is a type of plot that combines a scatterplot to analyze the bivariate relationship and KDE plots or histograms to analyze the univariate distribution. In Step 6, we analyzed the relationship between age and limit balance.

In Step 7, we defined a function for plotting a heatmap representing the correlation matrix. In the function, we used a couple of operations to mask the upper triangular matrix and the diagonal (all diagonal elements of the correlation matrix are equal to 1). This way, the output is much easier to interpret. Using the annot argument of sns.heatmap, we could add the underlying numbers to the heatmap. However, we should only do so when the number of analyzed features is not too high. Otherwise, they will become unreadable.

To calculate the correlations, we used the corr method of a DataFrame, which by default calculates the Pearson’s correlation coefficient. We did this only for numeric features. There are also methods for calculating the correlation of categorical features; we mention some of them in the There’s more… section. Inspecting correlations is crucial, especially when using machine learning algorithms that assume linear independence of the features.

In Step 8, we used box plots to investigate the distribution of age by marital status and sex. A box plot (also called a box-and-whisker plot) presents the distribution of data in such a way that facilitates comparisons between levels of a categorical variable. A box plot presents the information about the distribution of the data using a 5-number summary:

- Median (50th percentile)—represented by the horizontal black line within the boxes.

- Interquartile range (IQR)—represented by the box. It spans the range between the first quartile (25th percentile) and the third quartile (75th percentile).

- The whiskers—represented by the lines stretched from the box. The extreme values of the whiskers (marked as horizontal lines) are defined as the first quartile − 1.5 IQR and the third quartile + 1.5 IQR.

We can use the box plots to gather the following insights about our data:

- The points marked outside of the whiskers can be considered outliers. This method is called Tukey’s fences and is one of the simplest outlier detection techniques. In short, it assumes that observations lying outside of the [Q1 – 1.5 IQR, Q3 + 1.5 IQR] range are outliers.

- The potential skewness of the distribution. A right-skewed (positive skewness) distribution can be observed when the median is closer to the lower bound of the box, and the upper whisker is longer than the lower one. Vice versa for the left-skewed distributions. Figure 13.13 illustrates this.

Figure 13.13: Determining the skewness of distribution using box plots

In Step 9, we used violin plots to investigate the distribution of the limit balance feature per education level and sex. We created them by using sns.violinplot. We indicated the education level with the x argument. Additionally, we set hue="sex" and split=True. By doing so, each half of the violin represented a different sex.

In general, violin plots are very similar to box plots and we can find the following information in them:

- The median, represented by a white dot.

- The interquartile range, represented as the black bar in the center of a violin.

- The lower and upper adjacent values, represented by the black lines stretched from the bar. The lower adjacent value is defined as the first quartile − 1.5 IQR, while the upper one is defined as the third quartile + 1.5 IQR. Again, we can use the adjacent values as a simple outlier detection technique.

Violin plots are a combination of a box plot and a KDE plot. A definite advantage of a violin plot over a box plot is that the former enables us to clearly see the shape of the distribution. This is especially useful when dealing with multimodal distributions (distributions with multiple peaks), such as the limit balance violin in the Graduate school education category.

In the last two steps, we investigated the distribution of the target variable (default) per sex and education. In the first case, we used sns.countplot to display the count of occurrences of both possible outcomes for each sex. In the second case, we opted for a different approach. We wanted to plot the percentage of defaults per education level, as comparing percentages between groups is easier than comparing nominal values. To do so, we first grouped by education level, selected the variable of interest, calculated the percentages per group (using the value_counts(normalize=True) method), unstacked (to remove multi-index), and generated a plot using the already familiar plot method.

There’s more...

In this recipe, we introduced a range of possible approaches to investigate the data at hand. However, this requires many lines of code (quite a lot of them boilerplate) each time we want to carry out the EDA. Thankfully, there is a Python library that simplifies the process. The library is called pandas_profiling and with a single line of code, it generates a comprehensive summary of the dataset in the form of an HTML report.

To create a report, we need to run the following:

from pandas_profiling import ProfileReport

profile = ProfileReport(df, title="Loan Default Dataset EDA")

profile

We could also create a profile using the new (added by pandas_profiling) profile_report method of a pandas DataFrame.

For practical reasons, we might prefer to save the report as an HTML file and inspect it in a browser instead of the Jupyter notebook. We can easily do so using the following snippet:

profile.to_file("loan_default_eda.html")

The report is very exhaustive and contains a lot of useful information. Please see the following figure for an example.

Figure 13.14: Example of a deep-dive into the limit balance feature

For brevity’s sake, we will only discuss selected elements of the report:

- An overview giving information about the size of the DataFrame (number of features/rows, missing values, duplicated rows, memory size, breakdown per data type).

- Alerts warning us about potential issues with our data, including a high percentage of duplicated rows, highly correlated (and potentially redundant) features, features that have a high percentage of zero values, highly skewed features, etc.

- Different measures of correlation: Spearman’s

, Pearson’s r, Kendall’s

, Pearson’s r, Kendall’s  , Cramér’s V, and Phik (

, Cramér’s V, and Phik ( ). The last one is especially interesting, as it is a recently developed correlation coefficient that works consistently between categorical, ordinal, and interval variables. On top of that, it captures non-linear dependencies. Please see the See also section for a reference paper describing the metric.

). The last one is especially interesting, as it is a recently developed correlation coefficient that works consistently between categorical, ordinal, and interval variables. On top of that, it captures non-linear dependencies. Please see the See also section for a reference paper describing the metric. - Detailed analysis of missing values.

- Detailed univariate analysis of each feature (more details are available by clicking Toggle details in the report).

pandas-profiling is the most popular auto-EDA tool in Python’s vast ecosystem of libraries. However, it is definitely not the only one. You can also investigate the following:

sweetviz—https://github.com/fbdesignpro/sweetvizautoviz—https://github.com/AutoViML/AutoVizdtale—https://github.com/man-group/dtaledataprep—https://github.com/sfu-db/datapreplux—https://github.com/lux-org/lux

Each one of them approaches EDA a bit differently. Hence, it is best to explore them all and pick the tool that works best for your needs.

See also

For more information about Phik (![]() ), please see the following paper:

), please see the following paper:

- Baak, M., Koopman, R., Snoek, H., & Klous, S. (2020). “A new correlation coefficient between categorical, ordinal and interval variables with Pearson characteristics.” Computational Statistics & Data Analysis, 152, 107043. https://doi.org/10.1016/j.csda.2020.107043.

Splitting data into training and test sets

Having completed the EDA, the next step is to split the dataset into training and test sets. The idea is to have two separate datasets:

- Training set—on this part of the data, we train a machine learning model

- Test set—this part of the data was not seen by the model during training and is used to evaluate its performance

By splitting the data this way, we want to prevent overfitting. Overfitting is a phenomenon that occurs when a model finds too many patterns in data used for training and performs well only on that particular data. In other words, it fails to generalize to unseen data.

This is a very important step in the analysis, as doing it incorrectly can introduce bias, for example, in the form of data leakage. Data leakage can occur when, during the training phase, a model observes information to which it should not have access. We follow up with an example. A common scenario is that of imputing missing values with the feature’s average. If we had done this before splitting the data, we would have also used data from the test set to calculate the average, introducing leakage. That is why the proper order would be to split the data into training and test sets first and then carry out the imputation, using the data observed in the training set. The same goes for setting up rules for identifying outliers.

Additionally, splitting the data ensures consistency, as unseen data in the future (in our case, new customers that will be scored by the model) will be treated in the same way as the data in the test set.

How to do it...

Execute the following steps to split the dataset into training and test sets:

- Import the libraries:

import pandas as pd from sklearn.model_selection import train_test_split - Separate the target from the features:

X = df.copy() y = X.pop("default_payment_next_month") - Split the data into training and test sets:

X_train, X_test, y_train, y_test = train_test_split( X, y, test_size=0.2, random_state=42 ) - Split the data into training and test sets without shuffling:

X_train, X_test, y_train, y_test = train_test_split( X, y, test_size=0.2, shuffle=False ) - Split the data into training and test sets with stratification:

X_train, X_test, y_train, y_test = train_test_split( X, y, test_size=0.2, stratify=y, random_state=42 ) - Verify that the ratio of the target is preserved:

print("Target distribution - train") print(y_train.value_counts(normalize=True).values) print("Target distribution - test") print(y_test.value_counts(normalize=True).values)

Running the snippet generates the following output:

Target distribution - train

[0.77879167 0.22120833]

Target distribution - test

[0.77883333 0.22116667]

In both sets, the percentage of payment defaults is around 22.12%.

How it works...

After importing the libraries, we separated the target from the features using the pop method of a pandas DataFrame.

In Step 3, we showed how to do the most basic split. We passed X and y objects to the train_test_split function. Additionally, we specified the size of the test set, as a fraction of all observations. For reproducibility, we also specified the random state. We had to assign the output of the function to four new objects.

In Step 4, we took a different approach. By specifying test_size=0.2 and shuffle=False, we assigned the first 80% of the data to the training set and the remaining 20% to the test set. This might come in handy when we want to preserve the order in which the observations are becoming available.

In Step 5, we also specified the stratification argument by passing the target variable (stratify=y). Splitting the data with stratification means that both the training and test sets will have an almost identical distribution of the specified variable. This parameter is very important when dealing with imbalanced data, such as in cases of fraud detection. If 99% of data is normal and only 1% covers fraudulent cases, a random split can result in the training set not having any fraudulent cases. That is why when dealing with imbalanced data, it is crucial to split it correctly.

In the very last step, we verified if the stratified train/test split resulted in the same ratio of defaults in both datasets. To verify that, we used the value_counts method of a pandas DataFrame.

In the rest of the chapter, we will use the data obtained from the stratified split.

There’s more...

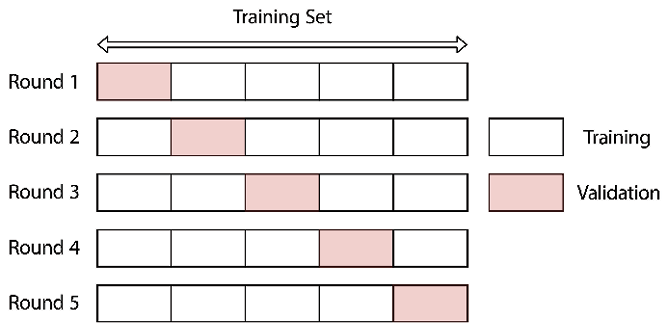

It is also common to split data into three sets: training, validation, and test. The validation set is used for frequent evaluation and tuning of the model’s hyperparameters. Suppose we want to train a decision tree classifier and find the optimal value of the max_depth hyperparameter, which decides the maximum depth of the tree.

To do so, we can train the model multiple times using the training set, each time with a different value of the hyperparameter. Then, we can evaluate the performance of all these models, using the validation set. We pick the best model of those, and then ultimately evaluate its performance on the test set.

In the following snippet, we illustrate a possible way of creating a train-validation-test split, using the same train_test_split function:

import numpy as np

# define the size of the validation and test sets

VALID_SIZE = 0.1

TEST_SIZE = 0.2

# create the initial split - training and temp

X_train, X_temp, y_train, y_temp = train_test_split(

X, y,

test_size=(VALID_SIZE + TEST_SIZE),

stratify=y,

random_state=42

)

# calculate the new test size

new_test_size = np.around(TEST_SIZE / (VALID_SIZE + TEST_SIZE), 2)

# create the valid and test sets

X_valid, X_test, y_valid, y_test = train_test_split(

X_temp, y_temp,

test_size=new_test_size,

stratify=y_temp,

random_state=42

)

We basically ran train_test_split twice. What is important is that we had to adjust the sizes of the test_size input in such a way that the initially defined proportions (70-10-20) were preserved.

We also verify that everything went according to plan: that the size of the datasets corresponds to the intended split and that the ratio of defaults is the same in each set. We do so using the following snippet:

print("Percentage of data in each set ----")

print(f"Train: {100 * len(X_train) / len(X):.2f}%")

print(f"Valid: {100 * len(X_valid) / len(X):.2f}%")

print(f"Test: {100 * len(X_test) / len(X):.2f}%")

print("")

print("Class distribution in each set ----")

print(f"Train: {y_train.value_counts(normalize=True).values}")

print(f"Valid: {y_valid.value_counts(normalize=True).values}")

print(f"Test: {y_test.value_counts(normalize=True).values}")

Executing the snippet generates the following output:

Percentage of data in each set ----

Train: 70.00%

Valid: 9.90%

Test: 20.10%

Class distribution in each set ----

Train: [0.77879899 0.22120101]

Valid: [0.77878788 0.22121212]

Test: [0.77880948 0.22119052]

We have indeed verified that the original dataset was split with the intended 70-10-20 ratio and that the distribution of defaults (target variable) was preserved due to stratification. Sometimes, we do not have enough data to split it into three sets, either because we do not have that many observations in general or because the data can be highly imbalanced, and we would remove valuable training samples from the training set. That is why practitioners often use a method called cross-validation, which we describe in the Tuning hyperparameters using grid search and cross-validation recipe.

Identifying and dealing with missing values

In most real-life cases, we do not work with clean, complete data. One of the potential problems we are bound to encounter is that of missing values. We can categorize missing values by the reason they occur:

- Missing completely at random (MCAR)—The reason for the missing data is unrelated to the rest of the data. An example could be a respondent accidentally missing a question in a survey.

- Missing at random (MAR)—The missingness of the data can be inferred from data in another column(s). For example, a missing response to a certain survey question can to some extent be determined conditionally by other factors such as sex, age, lifestyle, and so on.

- Missing not at random (MNAR)—When there is some underlying reason for the missing values. For example, people with very high incomes tend to be hesitant about revealing it.

- Structurally missing data—Often a subset of MNAR, the data is missing because of a logical reason. For example, when a variable representing the age of a spouse is missing, we can infer that a given person has no spouse.

Some machine learning algorithms can account for missing data, for example, decision trees can treat missing values as a separate and unique category. However, many algorithms either cannot do so or their popular implementations (such as the ones in scikit-learn) do not incorporate this functionality.

We should only impute features, not the target variable!

Some popular solutions to handling missing values include:

- Drop observations with one or more missing values—while this is the easiest approach, it is not always a good one, especially in the case of small datasets. We should also be aware of the fact that even if there is only a small fraction of missing values per feature, they do not necessarily occur for the same observations (rows), so the actual number of rows we might need to remove can be much higher. Additionally, in the case of data missing not at random, removing such observations from the analysis can introduce bias into the results.

- We can opt to drop the entire column (feature) if it is mostly populated with missing values. However, we need to be cautious as this can already be an informative signal for our model.

- Replace the missing values with a value far outside the possible range, so that algorithms such as decision trees can treat it as a special value, indicating missing data.

- In the case of dealing with time series, we can use forward-filling (take the last-known observation before the missing one), backward-filling (take the first-known observation after the missing one), or interpolation (linear or more advanced).

- Hot-deck imputation—in this simple algorithm, we first select one or more of the other features correlated with the one containing missing values. Then, we sort the rows of the dataset by these selected features. Lastly, we iterate over the rows from top to bottom and replace each missing value with the previous non-missing value in the same feature.

- Replace the missing values with an aggregate metric—for continuous data, we can use the mean (when there are no clear outliers in the data) or median (when there are outliers). In the case of categorical variables, we can use mode (the most common value in the set). Potential disadvantages of mean/median imputation include the reduction of variance in the dataset and distorting the correlations between the imputed features and the rest of the dataset.

- Replace the missing values with aggregate metrics calculated per group—for example, when dealing with body-related metrics, we can calculate the mean or median per sex, to more accurately replace the missing data.

- ML-based approaches—we can treat the considered feature as a target, and use complete cases to train a model and predict values for the missing observations.

In general, exploring the missing values is part of the EDA. We briefly touched upon it when analyzing the report generated with pandas_profiling. But we deliberately left it without much coverage until now, as it is crucial to carry out any kind of missing value imputation after the train/test split. Otherwise, we cause data leakage.

In this recipe, we show how to identify the missing values in our data and how to impute them.

Getting ready

For this recipe, we assume that we have the outputs of the stratified train/test split from the previous recipe, Splitting data into training and test sets.

How to do it...

Execute the following steps to investigate and deal with missing values in the dataset:

- Import the libraries:

import pandas as pd import missingno as msno from sklearn.impute import SimpleImputer - Inspect the information about the DataFrame:

X.info()Executing the snippet generates the following summary (abbreviated):

RangeIndex: 30000 entries, 0 to 29999 Data columns (total 23 columns): # Column Non-Null Count Dtype --- ------ -------------- ----- 0 limit_bal 30000 non-null int64 1 sex 29850 non-null object 2 education 29850 non-null object 3 marriage 29850 non-null object 4 age 29850 non-null float64 5 payment_status_sep 30000 non-null object 6 payment_status_aug 30000 non-null object 7 payment_status_jul 30000 non-null objectOur dataset has more columns, however, the missing values are only present in the 4 columns visible in the summary. For brevity, we have not included the rest of the output.

- Visualize the nullity of the DataFrame:

msno.matrix(X)Running the line of code results in the following plot:

Figure 13.15: The nullity matrix plot of the loan default dataset

The white bars visible in the columns represent missing values. We should keep in mind that when working with large datasets with only a few missing values, those white bars might be quite difficult to spot.

The line on the right side of the plot describes the shape of data completeness. The two numbers indicate the maximum and minimum nullity in the dataset. When an observation has no missing values, the line will be at the maximum right position and have a value equal to the number of columns in the dataset (23 in this case). As the number of missing values starts to increase within an observation, the line moves towards the left. The nullity value of 21 indicates that there is a row with 2 missing values in it, as the maximum value for this dataset is 23 (the number of columns).

- Define columns with missing values per data type:

NUM_FEATURES = ["age"] CAT_FEATURES = ["sex", "education", "marriage"] - Impute numerical features:

for col in NUM_FEATURES: num_imputer = SimpleImputer(strategy="median") num_imputer.fit(X_train[[col]]) X_train.loc[:, col] = num_imputer.transform(X_train[[col]]) X_test.loc[:, col] = num_imputer.transform(X_test[[col]]) - Impute categorical features:

for col in CAT_FEATURES: cat_imputer = SimpleImputer(strategy="most_frequent") cat_imputer.fit(X_train[[col]]) X_train.loc[:, col] = cat_imputer.transform(X_train[[col]]) X_test.loc[:, col] = cat_imputer.transform(X_test[[col]])

We can verify that neither the training nor test sets contain missing values using the info method.

How it works...

In Step 1, we imported the required libraries. Then, we used the info method of a pandas DataFrame to view information about the columns, such as their type and the number of non-null observations. The difference between the total number of observations and the non-null ones corresponds to the number of missing observations. Another way of inspecting the number of missing values per column is to run X.isnull().sum().

Instead of imputing, we could also drop the observations (or even columns) containing missing values. To drop all rows containing any missing value, we could use X_train.dropna(how="any", inplace=True). In our sample case, the number of missing values is not large, however, in a real-life dataset it can be considerable or the dataset might be too small for the analysts to be able to remove observations. Alternatively, we could also specify the thresh argument of the dropna method to indicate in how many columns an observation (row) needs to have missing values in order to be dropped from the dataset.

In Step 3, we visualized the nullity of the DataFrame, with the help of the missingno library.

In Step 4, we defined lists containing features we wanted to impute, one list per data type. The reason for this is the fact that numeric features are imputed using different strategies than categorical features. For basic imputation, we used the SimpleImputer class from scikit-learn.

In Step 5, we iterated over the numerical features (in this case, only the age feature), and used the median to replace the missing values. Inside the loop, we defined the imputer object with the correct strategy ("median"), fitted it to the given column of the training data, and transformed both the training and test data. This way, the median was estimated by using only the training data, preventing potential data leakage.

In this recipe, we used scikit-learn to deal with the imputation of missing values. However, we can also do this manually. To do so, for each column with any missing values (either in the training or test set), we need to calculate the given statistic (mean/median/mode) using the training set, for example, age_median = X_train.age.median(). Afterward, we need to use this median to fill in the missing values for the age column (in both the training and test sets) using the fillna method. We show how to do it in the Jupyter notebook available in the book’s GitHub repository.

Step 6 is analogous to Step 5, where we used the same approach to iterate over categorical columns. The difference lies in the selected strategy—we used the most frequent value ("most_frequent") in the given column. This strategy can be used for both categorical and numerical features. In the latter case, it corresponds to the mode.

There’s more...

There are a few more things worth mentioning when covering handling missing values.

More visualizations available in the missingno library

In this recipe, we have already covered the nullity matrix representation of missing values in a dataset. However, the missingno library offers a few more helpful visualizations:

msno.bar—generates a bar chart representing the nullity in each column. Might be easier to quickly interpret than the nullity matrix.msno.heatmap—visualizes the nullity correlation, that is, how strongly the presence/absence of one feature impacts the presence of another. The interpretation of the nullity correlation is very similar to the standard Pearson’s correlation coefficient. It ranges from -1 (when one feature occurs, then the other one certainly does not) through 0 (features appearing or not appearing have no effect on each other) to 1 (if one feature occurs, then the other one certainly does too).msno.dendrogram—allows us to better understand the correlations between variable completion. Under the hood, it uses hierarchical clustering to bin features against one another by their nullity correlation.

Figure 13.16: Example of the nullity dendrogram

To interpret the figure, we need to analyze it from a top-down perspective. First, we should look at cluster leaves, which are linked at a distance of zero. Those fully predict each other’s presence, that is, one feature might always be missing when the other is present, or they might always both be present or missing, and so on. Cluster leaves with a split close to zero predict each other very well.

In our case, the dendrogram links together the features that are present in every observation. We know this for certain, as we have introduced the missing observations by design in only four features.

ML-based approaches to imputing missing values

In this recipe, we mentioned how to impute missing values. Approaches such as replacing the missing values with one large value or the mean/median/mode are called single imputation approaches, as they replace missing values with one specific value. On the other hand, there are also multiple imputation approaches, and one of those is Multiple Imputation by Chained Equations (MICE).

In short, the algorithm runs multiple regression models, and each missing value is determined conditionally on the basis of the non-missing data points. A potential benefit of using an ML-based approach to imputation is the reduction of bias introduced by single imputation. The MICE algorithm is available in scikit-learn under the name of IterativeImputer.

Alternatively, we could use the nearest neighbors imputation (implemented in scikit-learn's KNNImputer). The underlying assumption of the KNN imputation is that a missing value can be approximated by the values of the same feature coming from the observations that are closest to it. The closeness to the other observations is determined using other features and some form of a distance metric, for example, the Euclidean distance.

As the algorithm uses KNN, it comes with some of its drawbacks:

- Requires tuning of the k hyperparameter for best performance

- We need to scale the data and preprocess categorical features

- We need to pick an appropriate distance metric (especially in cases when we have a mix of categorical and numerical features)

- The algorithm is sensitive to outliers and noise in data

- Can be computationally expensive as it requires calculating the distances between every pair of observations

Another of the available ML-based algorithms is called MissForest (available in the missingpy library). Without going into too much detail, the algorithm starts by filling in the missing values with the median or mode imputation. Then, it trains a Random Forest model to predict the feature that is missing using the other known features. The model is trained using the observations for which we know the values of the target (so the ones that were not imputed in the first step) and then makes predictions for the observations with the missing feature. In the next step, the initial median/mode prediction is replaced with the one coming from the RF model. The process of looping through the missing data points is repeated several times, and each iteration tries to improve upon the previous one. The algorithm stops when some stopping criterion is met or we exhaust the allowed number of iterations.

Advantages of MissForest:

- Can handle missing values in both numeric and categorical features

- Does not require data preprocessing (such as scaling)

- Robust to noisy data, as Random Forest makes little to no use of uninformative features

- Non-parametric—it does not make assumptions about the relationship between the features (MICE assumes linearity)

- Can leverage non-linear and interaction effects between features to improve imputation performance

Disadvantages of MissForest:

- Imputation time increases with the number of observations, features, and the number of features containing missing values

- Similar to Random Forest, not very easy to interpret

- It is an algorithm and not a model object we can store somewhere (for example, as a pickle file) and reuse whenever we need to impute missing values

See also

Additional resources are available here:

- Azur, M. J., Stuart, E. A., Frangakis, C., & Leaf, P. J. (2011). “Multiple imputation by chained equations: what is it and how does it work?” International Journal of Methods in Psychiatric Research, 20(1), 40-49. https://doi.org/10.1002/mpr.329.

- Buck, S. F. (1960). “A method of estimation of missing values in multivariate data suitable for use with an electronic computer.” Journal of the Royal Statistical Society: Series B (Methodological), 22(2), 302-306. https://www.jstor.org/stable/2984099.

- Stekhoven, D. J. & Bühlmann, P. (2012). “MissForest—non-parametric missing value imputation for mixed-type data.” Bioinformatics, 28(1), 112-118.

- van Buuren, S. & Groothuis-Oudshoorn, K. (2011). “MICE: Multivariate Imputation by Chained Equations in R.” Journal of Statistical Software 45 (3): 1–67.

- Van Buuren, S. (2018). Flexible Imputation of Missing Data. CRC press.

miceforest—a Python library for fast, memory-efficient MICE with LightGBM.missingpy—a Python library containing the implementation of the MissForest algorithm.

Encoding categorical variables

In the previous recipes, we have seen that some features are categorical variables (originally represented as either object or category data types). However, most machine learning algorithms work exclusively with numeric data. That is why we need to encode categorical features into a representation compatible with the ML models.

The first approach to encoding categorical features is called label encoding. In this approach, we replace the categorical values of a feature with distinct numeric values. For example, with three distinct classes, we use the following representation: [0, 1, 2].

This is already very similar to the outcome of converting to the category data type in pandas. Let’s assume we have a DataFrame called df_cat, which has a feature called feature_1. This feature is encoded as the category data type. We can then access the codes of the categories by running df_cat["feature_1"].cat.codes. Additionally, we can recover the mapping by running dict(zip(df_cat["feature_1"].cat.codes, df_cat["feature_1"])). We can also use the pd.factorize function to arrive at a very similar representation.

One potential issue with label encoding is that it introduces a relationship between the categories, while often there is none. In a three-classes example, the relationship looks as follows: 0 < 1 < 2. This does not make much sense if the categories are, for example, countries. However, this can work for features that represent some kind of order (ordinal variables). For example, label encoding could work well with a rating of service received, on a scale of Bad-Neutral-Good.

To overcome the preceding problem, we can use one-hot encoding. In this approach, for each category of a feature, we create a new column (sometimes called a dummy variable) with binary encoding to denote whether a particular row belongs to this category. A potential drawback of this method is that it significantly increases the dimensionality of the dataset (curse of dimensionality). First, this can increase the risk of overfitting, especially when we do not have that many observations in our dataset. Second, a high-dimensional dataset can be a significant problem for any distance-based algorithm (for example, k-Nearest Neighbors), as—on a very high level—a large number of dimensions causes all the observations to appear equidistant from each other. This can naturally render the distance-based models useless.

Another thing we should be aware of is that creating dummy variables introduces a form of redundancy to the dataset. In fact, if a feature has three categories, we only need two dummy variables to fully represent it. That is because if an observation is neither of the two, it must be the third one. This is often referred to as the dummy-variable trap, and it is best practice to always remove one column (known as the reference value) from such an encoding. This is especially important in unregularized linear models.

Summing up, we should avoid label encoding as it introduces false order to the data, which can lead to incorrect conclusions. Tree-based methods (decision trees, Random Forest, and so on) can work with categorical data and label encoding. However, one-hot encoding is the natural representation of categorical features for algorithms such as linear regression, models calculating distance metrics between features (such as k-means clustering or k-Nearest Neighbors), or Artificial Neural Networks (ANN).

Getting ready

For this recipe, we assume that we have the outputs of the imputed training and test sets from the previous recipe, Identifying and dealing with missing values.

How to do it...

Execute the following steps to encode categorical variables with label encoding and one-hot encoding:

- Import the libraries:

import pandas as pd from sklearn.preprocessing import LabelEncoder, OneHotEncoder from sklearn.compose import ColumnTransformer - Use Label Encoder to encode a selected column:

COL = "education" X_train_copy = X_train.copy() X_test_copy = X_test.copy() label_enc = LabelEncoder() label_enc.fit(X_train_copy[COL]) X_train_copy.loc[:, COL] = label_enc.transform(X_train_copy[COL]) X_test_copy.loc[:, COL] = label_enc.transform(X_test_copy[COL]) X_test_copy[COL].head()Running the snippet generates the following preview of the transformed column:

6907 3 24575 0 26766 3 2156 0 3179 3 Name: education, dtype: int64We created a copy of

X_trainandX_test, just to show how to work withLabelEncoder, but we do not want to modify the actual DataFrames we intend to use later.We can access the labels stored within the fitted

LabelEncoderby using theclasses_attribute.

- Select categorical features for one-hot encoding:

cat_features = X_train.select_dtypes(include="object") .columns .to_list() cat_featuresWe will apply one-hot encoding to the following columns:

['sex', 'education', 'marriage', 'payment_status_sep', 'payment_status_aug', 'payment_status_jul', 'payment_status_jun', 'payment_status_may', 'payment_status_apr']

- Instantiate the

OneHotEncoderobject:one_hot_encoder = OneHotEncoder(sparse=False, handle_unknown="error", drop="first") - Create the column transformer using the one-hot encoder:

one_hot_transformer = ColumnTransformer( [("one_hot", one_hot_encoder, cat_features)], remainder="passthrough", verbose_feature_names_out=False ) - Fit the transformer:

one_hot_transformer.fit(X_train)Executing the snippet prints the following preview of the column transformer:

Figure 13.17: Preview of the column transformer with one-hot encoding

- Apply the transformations to both training and test sets:

col_names = one_hot_transformer.get_feature_names_out() X_train_ohe = pd.DataFrame( one_hot_transformer.transform(X_train), columns=col_names, index=X_train.index ) X_test_ohe = pd.DataFrame(one_hot_transformer.transform(X_test), columns=col_names, index=X_test.index)

As we have mentioned before, one-hot encoding comes with the potential disadvantage of increasing the dimensionality of the dataset. In our case, we started with 23 columns. After applying one-hot encoding, we ended up with 72 columns.

How it works...

First, we imported the required libraries. In the second step, we selected the column we wanted to encode using label encoding, instantiated the LabelEncoder, fitted it to the training data, and transformed both the training and the test data. We did not want to keep the label encoding, and for that reason we operated on copies of the DataFrames.

We demonstrated using label encoding as it is one of the available options, however, it comes with quite serious drawbacks. So in practice, we should refrain from using it. Additionally, scikit-learn's documentation warns us with the following statement: This transformer should be used to encode target values, i.e. y, and not the input X.

In Step 3, we started the preparations for one-hot encoding by creating a list of all the categorical features. We used the select_dtypes method to select all features with the object data type.

In Step 4, we created an instance of OneHotEncoder. We specified that we did not want to work with a sparse matrix (a special kind of data type, suitable for storing matrices with a very high percentage of zeros), we dropped the first column per feature (to avoid the dummy variable trap), and we specified what to do if the encoder finds an unknown value while applying the transformation (handle_unknown='error').

In Step 5, we defined the ColumnTransformer, which is a convenient approach to applying the same transformation (in this case, the one-hot encoder) to multiple columns. We passed a list of steps, where each step was defined by a tuple. In this case, it was a single tuple with the name of the step ("one_hot"), the transformation to be applied, and the features to which we wanted to apply the transformation.

When creating the ColumnTransformer, we also specified another argument, remainder="passthrough", which has effectively fitted and transformed only the specified columns, while leaving the rest intact. The default value for the remainder argument was "drop", which dropped the unused columns. We also specified the value of the verbose_feature_names_out argument as False. This way, when we use the get_feature_names_out method later, it will not prefix all feature names with the name of the transformer that generated that feature.

If we had not changed it, some features would have the "one_hot__" prefix, while the others would have "remainder__".

In Step 6, we fitted the column transformer to the training data using the fit method. Lastly, we applied the transformations using the transform method to both training and test sets. As the transform method returns a numpy array instead of a pandas DataFrame, we had to convert them ourselves. We started by extracting the names of the features using the get_feature_names_out. Then, we created a pandas DataFrame using the transformed features, the new column names, and the old indices (to keep everything in order).

Similar to handling missing values or detecting outliers, we fit all the transformers (including one-hot encoding) to the training data only, and then we apply the transformations to both training and test sets. This way, we avoid potential data leakage.

There’s more...

We would like to mention a few more things regarding the encoding of categorical variables.

Using pandas for one-hot encoding

Alternatively to scikit-learn, we can use pd.get_dummies for one-hot encoding categorical features. The example syntax looks like the following:

pd.get_dummies(X_train, prefix_sep="_", drop_first=True)

It’s good to know this alternative approach, as it can be easier to work with (column names are automatically accounted for), especially when creating a quick Proof of Concept (PoC). However, when productionizing the code, the best approach would be to use the scikit-learn variant and create the dummy variables within a pipeline.

Specifying possible categories for OneHotEncoder

When creating ColumnTransformer, we could have additionally provided a list of possible categories for all the considered features. A simplified example follows:

one_hot_encoder = OneHotEncoder(

categories=[["Male", "Female", "Unknown"]],

sparse=False,

handle_unknown="error",

drop="first"

)

one_hot_transformer = ColumnTransformer(

[("one_hot", one_hot_encoder, ["sex"])]

)

one_hot_transformer.fit(X_train)

one_hot_transformer.get_feature_names_out()

Executing the snippet returns the following:

array(['one_hot__sex_Female', 'one_hot__sex_Unknown'], dtype=object)

By passing a list (of lists) containing possible categories for each feature, we are taking into account the possibility that the specific value does not appear in the training set, but might appear in the test set (or as part of the batch of new observations in the production environment). If this were the case, we would run into errors.

In the preceding code block, we added an extra category called "Unknown" to the column representing sex. As a result, we will end up with an extra “dummy” column for that category. The male category was dropped as the reference one.

Category Encoders library

Aside from using pandas and scikit-learn, we can also use another library called Category Encoders. It belongs to a set of libraries compatible with scikit-learn and provides a selection of encoders using a similar fit-transform approach. That is why it is also possible to use them together with ColumnTransformer and Pipeline.

We show an alternative implementation of the one-hot encoder.

Import the library:

import category_encoders as ce

Create the encoder object:

one_hot_encoder_ce = ce.OneHotEncoder(use_cat_names=True)

Additionally, we could specify an argument called drop_invariant, to indicate that we want to drop columns with no variance, so for example columns filled with only one distinct value. This could help with reducing the number of features.

Fit the encoder, and transform the data:

one_hot_encoder_ce.fit(X_train)

X_train_ce = one_hot_encoder_ce.transform(X_train)

This implementation of the one-hot encoder automatically encodes only the columns containing strings (unless we specify only a subset of categorical columns by passing a list to the cols argument). By default, it also returns a pandas DataFrame (in comparison to the numpy array, in the case of scikit-learn's implementation) with the adjusted column names. The only drawback of this implementation is that it does not allow for dropping the one redundant dummy column of each feature.

A warning about one-hot encoding and decision tree-based algorithms

While regression-based models can naturally handle the OR condition of one-hot-encoded features, the same is not that simple with decision tree-based algorithms. In theory, decision trees are capable of handling categorical features without the need for encoding.

However, its popular implementation in scikit-learn still requires all features to be numerical. Without going into too much detail, such an approach favors continuous numerical features over one-hot-encoded dummies, as a single dummy can only bring a fraction of the total feature information into the model. A possible solution is to use either a different kind of encoding (label/target encoding) or an implementation that handles categorical features, such as Random Forest in the h2o library or the LightGBM model.

Fitting a decision tree classifier

A decision tree classifier is a relatively simple yet very important machine learning algorithm used for both regression and classification problems. The name comes from the fact that the model creates a set of rules (for example, if x_1 > 50 and x_2 < 10 then y = 'default'), which taken together can be visualized in the form of a tree. The decision trees segment the feature space into a number of smaller regions, by repeatedly splitting the features at a certain value. To do so, they use a greedy algorithm (together with some heuristics) to find a split that minimizes the combined impurity of the children nodes. The impurity in classification tasks is measured using the Gini impurity or entropy, while for regression problems the trees use the mean squared error or the mean absolute error as the metric.