5

State Estimation

The theme of the previous two chapters will now be extended to the case in which the variables of interest change over time. These variables can be either real-valued vectors (as in Chapter 4) or discrete class variables that only cover a finite number of symbols (as in Chapter 3). In both cases, the variables of interest are called state variables.

The state of a system is a description of the aspects of the system that allow us to predict its behaviour over time. For example, a system describing elevator controller can have basic enumeration of states such as ‘closed’, ‘closing’, ‘open’ and ‘opening’, which can be the elements of a finite state space. A state space that is described using more than one variable, such as a counter for seconds and a counter for minutes in a clock system, can be described as having more than one state variables (in the example it can be ‘seconds’ and ‘minutes’).

The design of a state estimator is based on a state space model, which describes the underlying physical process of the application. For instance, in a tracking application, the variables of interest are the position and velocity of a moving object. The state space model gives the connection between the velocity and the position (which, in this case, is a kinematical relation). Variables, like position and velocity, are real numbers. Such variables are called continuous states.

Although a system is supposed to move through some sequence of states over time, instead of getting to see the states, usually we only get to make a sequence of observations of the system, where the observations (also known as measurements) give partial, noisy information about the actual underlying state. To infer the information about the current state of the system given the history of observations, we need an observer (also known as a measurement model), which describes how the data of an observation system depends on the state variables. For instance, in a radar tracking system, the measurements are the azimuth and range of the object. Here, the measurements are directly related to the two-dimensional position of the object if represented in polar coordinates.

The estimation of a discrete state variable is sometimes called mode estimation or labelling. An example is in speech recognition where – for the recognition of a word – a sequence of phonetic classes must be estimated from a sequence of acoustic features. Here, too, the analysis is based on a state space model and a measurement model (in fact, each possible word has its own state space model).

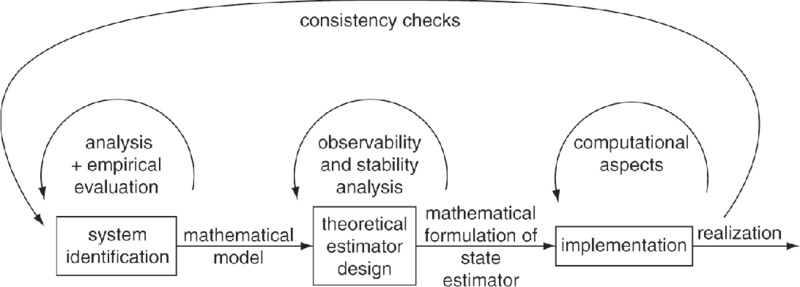

The outline of the chapter is as follows. Section 5.1 gives a framework for estimation in dynamic systems. It introduces the various concepts, notations and mathematical models. Next, it presents a general scheme to obtain the optimal solution. In practice, however, such a general scheme is of less value because of the computational complexity involved when trying to implement the solution directly. Therefore, the general approach needs to be worked out for different cases. Section 5.2 is devoted to the case of infinite discrete-time state variables. Practical solutions are feasible if the models are linear-Gaussian (Section 5.2.1). If the model is not linear, one can resort to suboptimal methods (Section 5.2.2). Section 5.3 deals with the finite discrete-time state case. Sections 5.4 and 5.5 contain introductions to particle filtering and genetic algorithms for state estimation, respectively. Both methods can be used to handle non-linear and non-Gaussian models covering the continuous and the discrete cases, and even mixed cases (i.e. combinations of continuous and discrete states). The chapter finalizes with Section 5.6, which deals with the practical issues such as implementations, deployment and consistency checks. The chapter confines itself to the theoretical and practical aspects of state estimation.

5.1 A General Framework for Online Estimation

Usually, the estimation problem is divided into three paradigms:

- Online estimation (optimal filtering)

- Prediction

- Retrodiction (smoothing, offline estimation).

Online estimation is the estimation of the present state using all the measurements that are available, that is all measurements up to the present time. Prediction is the estimation of future states. Retrodiction is the estimation of past states.

This section sets up a framework for the online estimation of the states of discrete-time processes. Of course, most physical processes evolve in continuous time. Nevertheless, we will assume that these systems can be described adequately by a model where the continuous time is reduced to a sequence of specific times. Methods for the conversion from continuous-time to discrete-time models are described in many textbooks, for instance, on control engineering.

5.1.1 Models

We assume that the continuous time t is equidistantly sampled with period Δ. The discrete time index is denoted by an integer variable i. Hence, the moments of sampling are ti = iΔ. Furthermore, we assume that the estimation problem starts at t = 0. Thus, i is a non-negative integer denoting the discrete time.

5.1.1.1 The State Space Model

The state at time i is denoted by x(i) ∈ X, where X is the state space. For finite discrete state space, X = Ω = {ω1, …, ωk}, where ωk is the kth symbol (label or class) out of K possible classes. For infinite discrete state space, X = Ω = {ω1, …, ωk, …}, where 1 ≤ k < ∞. For real-valued vectors with dimension m, we have ![]() . In all these cases we assume that the states can be modelled as random variables.

. In all these cases we assume that the states can be modelled as random variables.

For a causal system with input vector u(i), an mth-order state space model takes the form

where w(i) is the system noise (also known as process noise).

Suppose for a moment that we have observed the state of a process during its whole history, that is from the beginning of time up to the present. In other words, the sequence of states x(0), x(1), …, x(i) are observed and as such fully known (i denotes the present time). In addition, suppose that – using this sequence – we want to estimate (predict) the next state x(i + 1). We need to evaluate the conditional probability density p(x(i + 1)|x(0), x(1), …, x(i)). Once this probability density is known, the application of the theory in Chapters 3 and 4 will provide the optimal estimate of x(i + 1). For instance, if X is a real-valued vector space, the Bayes estimator from Chapter 4 provides the best prediction of the next state (the density p(x(i + 1)|x(0), x(1), …, x(i)) must be used instead of the posterior density).

Unfortunately, the evaluation of p(x(i + 1)|x(0), x(1), …, x(i)) is a nasty task because it is not clear how to establish such a density in real-world problems. Things become much easier if we succeed to define the state such that the so-called Markov condition applies:

The probability of x(i + 1) depends solely on x(i) and not on the past states. In order to predict x(i + 1), knowledge of the full history is not needed. It suffices to know the present state. If the Markov condition applies, the state of a physical process is a summary of the history of the process.

Example 5.1 Motion tracking

Suppose a particle P is moving on a two-dimensional plane whose position at the time i is denoted by the coordinate vector (x(i), y(i)). Also at the time moment i the speed of P is expressed as (vx(i), vy(i)), where vx(i) and vy(i) represent the velocity components along the x-axis and y-axis, respectively. The continuous time t is equidistantly sampled with period Δ. If Δ is small enough, it can be assumed that P’s moving speed is approximately uniform during a sampling interval. Thus the position of P can be recursively calculated as

where wx(i) and wy(i) are the approximation errors. Define the state variable as

Then we have

where

and

Equation (5.4) is in the form of (5.1). Furthermore, it is easy to see that the Markov condition (5.2) holds if {wx(i)} and {wy(i)} are independent white random sequences with normal distribution (see Equation (5.14) below).

Example 5.2 The density of a substance mixed with a liquid

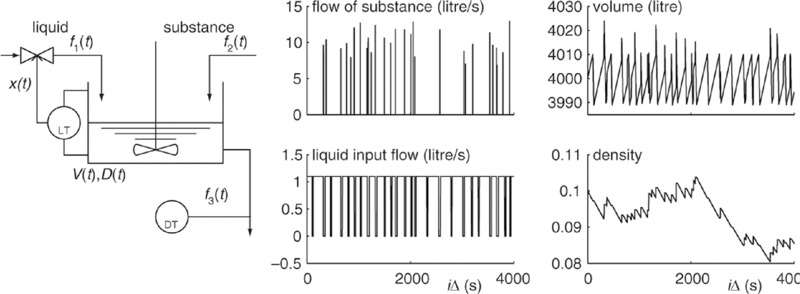

Mixing and diluting are tasks frequently encountered in the food industries, paper industry, cement industry, and so. One of the parameters of interest during the production process is the density D(t) of some substance. It is defined as the fraction of the volume of the mix that is made up by the substance.

Accurate models for these production processes soon involve a large number of state variables. Figure 5.1 is a simplified view of the process. It is made up by two real-valued state variables and one discrete state. The volume V(t) of the liquid in the barrel is regulated by an on/off feedback control of the input flow f1(t) of the liquid: f1(t) = f0x(t). The on/off switch is represented by the discrete state variable x(t) ∈ {0, 1}. A hysteresis mechanism using a level detector (LT) prevents jitter of the switch. The x(t) switches to the ‘on’ state (=1) if V(t) < Vlow and switches back to the ‘off’ state (=0) if V(t) > Vhigh.

The rate of change of the volume is ![]() with f2(t) the volume flow of the substance and f3(t) the output volume flow of the mix. We assume that the output flow is governed by Torricelli's law:

with f2(t) the volume flow of the substance and f3(t) the output volume flow of the mix. We assume that the output flow is governed by Torricelli's law: ![]() . The density is defined as

. The density is defined as ![]() , where VS(t) is the volume of the substance. The rate of change of VS(t) is

, where VS(t) is the volume of the substance. The rate of change of VS(t) is ![]() . After some manipulations the following system of differential equations appears:

. After some manipulations the following system of differential equations appears:

A discrete time approximation (notation: V(i) ≡ V(iΔ), D(i) ≡ D(iΔ), and so on) is

This equation is of the type x(i + 1) = f(x(i), u(i), w(i)) with x(i) = [V(i) D(i) x(i)]T. The elements of the vector u(i) are the known input variables, which is the non-random part of f2(i). The vector w(i) contains the random input, i.e. the random part of f2(i). The probability density of x(i + 1) depends on the present state x(i), but not on the past states.

Figure 5.1 shows a realization of the process. Here, the substance is added to the volume in chunks with an average volume of 10 litres and at random points in time.

Figure 5.1 A density control system for the process industry.

If the transition probability density p(x(i + 1)|x(i)) is known together with the initial probability density p(x(0)), then the probability density at an arbitrary time can be determined recursively:

The joint probability density of the sequence x(0), x(1), …, x(i) follows readily:

5.1.1.2 The Measurement Model

Unfortunately in most cases we cannot directly observe the system states. Instead we can only observe the system outputs such as the data from the sensor in relation to the state. This means, in addition to the state space model, we also need a measurement model that describes the data from the system output. Suppose that at moment i the measurement data are z(i) ∈ Z, where Z is the measurement space. For the real-valued state variables, the measurement space is often a real-valued vector space, that is ![]() for some positive integer n. For the discrete case, one often assumes that the measurement space is also discrete. The measurement model is described by

for some positive integer n. For the discrete case, one often assumes that the measurement space is also discrete. The measurement model is described by

where v(i) is the measurement noise.

The probabilistic model of the measurement system is fully defined by the conditional probability density p(z(i)|x(0), …, x(i), z(0), …, z(i − 1)). We assume that the sequence of measurements starts at time i = 0. In order to shorten the notation, the sequence of all measurements up to the present will be denoted by

We restrict ourselves to memoryless measurement systems which is systems where z(i) depends on the value of x(i), but not on previous states nor on previous measurements. In other words:

The state model (5.1) together with the measurement model (5.8) forms the general discrete-time state space model for state estimation. However, this general model is not very useful until we move to more specific situations, which will be discussed later in this chapter.

5.1.2 Optimal Online Estimation

Figure 5.2 presents an overview of the scheme for the online estimation of the state. The connotation of the phrase online is that for each time index i an estimate ![]() of x(i) is produced based on Z(i), that is, based on all measurements that are available at that time. The crux of optimal online estimation is to maintain the posterior density p(x(i)|Z(i)) for running values of i. This density captures all the available information of the current state x(i) after having observed the current measurement and all previous ones. With the availability of the posterior density, the methods discussed in Chapters 3 and 4 become applicable. The only work to be done, then, is to adopt an optimality criterion and to work this out using the posterior density to get the optimal estimate of the current state.

of x(i) is produced based on Z(i), that is, based on all measurements that are available at that time. The crux of optimal online estimation is to maintain the posterior density p(x(i)|Z(i)) for running values of i. This density captures all the available information of the current state x(i) after having observed the current measurement and all previous ones. With the availability of the posterior density, the methods discussed in Chapters 3 and 4 become applicable. The only work to be done, then, is to adopt an optimality criterion and to work this out using the posterior density to get the optimal estimate of the current state.

Figure 5.2 An overview of online estimation.

The maintenance of the posterior density is done efficiently by means of a recursion. From the posterior density p(x(i)|Z(i)), valid for the current period i, the density p(x(i + 1)|Z(i + 1)), valid for the next period i + 1, is derived. The first step of the recursion cycle is a prediction step. The knowledge about x(i) is extrapolated to the knowledge about x(i + 1). Using Bayes’ theorem for conditional probabilities in combination with the Markov condition (5.2), we have

At this point, we increment the counter i so that p(x(i + 1)|Z(i)) now becomes p(x(i)|Z(i − 1). The increment can be done anywhere in the loop, but the choice to do it at this point leads to a shorter notation of the second step.

The second step is an update step. The knowledge gained from observing the measurement z(i) is used to refine the density. Using – once again – the theorem of Bayes, now in combination with the conditional density for memoryless sensory systems (5.9), gives

where c is a normalization constant:

The recursion starts with the processing of the first measurement z(0). The posterior density p(x(0)|Z(0)) is obtained using p(x(0)) as the prior.

The outline for optimal estimation, expressed in Equations (5.10), (5.11) and (5.12), is useful in the discrete case (where integrals turn into summations). For continuous states, a direct implementation is difficult for two reasons:

- It requires efficient representations for the multidimensional density functions.

- It requires efficient algorithms for the integrations over a multidimensional space.

Both requirements are hard to fulfil, especially if m is large. Nonetheless, many researchers have tried to implement the general scheme. One of the most successful endeavours has resulted in what is called particle filtering. First, however, the discussion will be focused on special cases.

5.2 Infinite Discrete-Time State Variables

As addressed in the last section, for continuous states a direct implementation is difficult. In order to overcome this difficulty, the continuous-time models are converted to infinite discrete-time models by reducing the continuous time to a sequence of specific times. The starting point is the general scheme for online estimation discussed in the previous section and illustrated in Figure 5.2. Suppose that both the state and the measurements are discrete real-valued vectors with dimensions of m and n, respectively. As stated before, in general a direct implementation of the scheme is still difficult even if the variables are discrete-time. Fortunately, there are circumstances that allow a fast implementation. For instance, in the special case, where the models are linear and the disturbances have a normal distribution, an implementation based on an ‘expectation and covariance matrix’ representation of the probability densities is feasible (Section 5.2.1). If the models are non-linear, but the non-linearity is smooth, linearization techniques can be applied (Section 5.2.2). If the models are highly non-linear but the dimensions m and n are not too large, numerical methods are possible (Sections 5.4 and 5.5).

5.2.1 Optimal Online Estimation in Linear-Gaussian Systems

Most literature in optimal estimation in dynamic systems deals with the particular case in which both the state model and the measurement model are linear, and the disturbances are Gaussian (the linear-Gaussian systems). Perhaps the main reason for the popularity is the mathematical tractability of this case.

5.2.1.1 Linear-Gaussian State Space Models

The state model is said to be linear if the state space model (5.1) can be expressed by a so-called linear system equation (or linear state equation, linear plant equation, linear dynamic equation):

where F(i) is the system matrix. It is an m × m matrix where m is the dimension of the state vector; m is called the order of the system. The vector u(i) is the control vector (input vector) of dimension l. Usually, the vector is generated by a controller according to some control law. As such the input vector is a deterministic signal that is fully known, at least up to the present. L(i) is the gain matrix of dimension m × l. Sometimes the matrix is called the distribution matrix as it distributes the control vector across the elements of the state vector.

w(i) is the process noise (system noise, plant noise). It is a sequence of random vectors of dimension m. The process noise represents the unknown influences on the system, for instance, formed by disturbances from the environment. The process noise can also represent an unknown input/control signal. Sometimes process noise is also used to take care of modelling errors. The general assumption is that the process noise is a white random sequence with normal distribution. The term ‘white’ is used here to indicate that the expectation is zero and the autocorrelation is governed by the Kronecker delta function:

where Cw(i) is the covariance matrix of w(i). Since w(i) is supposed to have a normal distribution with zero mean, Cw(i) defines the density of w(i) in full.

The initial condition of the state model is given in terms of the expectation E[x(0)] and the covariance matrix Cx(0). In order to find out how these parameters of the process propagate to an arbitrary time i, the state equation (5.13) must be used recursively:

The first equation follows from E[w(i)] = 0. The second equation uses the fact that the process noise is white, that is E[w(i)wT(j)] = 0 for i ≠ j (see Equation (5.14)).

If E[x(0)] and Cx(0) are known, then Equation (5.15) can be used to calculate E[x(1)] and Cx(1). From that, by reapplying (5.15), the next values, E[x(2)] and Cx(2), can be found, and so on. Thus, the iterative use of Equation (5.15) gives us E[x(i)] and Cx(i) for arbitrary i > 0.

In the special case, where neither F(i) nor C(i) depends on i, the state space model is time invariant. The notation can then be shortened by dropping the index, that is F and Cw. If, in addition, F is stable (i.e. the magnitudes of the eigenvalues of F are all less than one), the sequence Cx(i), i = 0, 1, … converges to a constant matrix. The balance in Equation (5.15) is reached when the decrease of Cx(i) due to F compensates for the increase of Cx(i) due to Cw. If such is the case, then

which is the discrete Lyapunov equation.

5.2.1.2 Prediction

Equation (5.15) is the basis for prediction. Suppose that at time i an unbiased estimate ![]() is known together with the associated error covariance Ce(i), which was introduced in Section 4.2.2. The best predicted value (MMSE) of the state for ℓ samples ahead of i is obtained by the recursive application of Equation (5.15). The recursion starts with

is known together with the associated error covariance Ce(i), which was introduced in Section 4.2.2. The best predicted value (MMSE) of the state for ℓ samples ahead of i is obtained by the recursive application of Equation (5.15). The recursion starts with ![]() and terminates when E[x(i + ℓ)] is obtained. The covariance matrix Ce(i) is a measure of the magnitudes of the random fluctuations of x(i) around

and terminates when E[x(i + ℓ)] is obtained. The covariance matrix Ce(i) is a measure of the magnitudes of the random fluctuations of x(i) around ![]() . As such it is also a measure of uncertainty. Therefore, the recursive usage of Equation (5.15) applied to Ce(i) gives Ce(i + ℓ), that is the uncertainty of the prediction. With that, the recursive equations for the prediction become

. As such it is also a measure of uncertainty. Therefore, the recursive usage of Equation (5.15) applied to Ce(i) gives Ce(i + ℓ), that is the uncertainty of the prediction. With that, the recursive equations for the prediction become

Example 5.3 Prediction of motion tracking Example 5.1 formulated a simple motion tracking problem with the state equation (5.4), where

and

According to Equation (5.17), the one step ahead prediction (ℓ = 0) can be expressed as

where ![]() represents the input term. The prediction error covariance at the time i is

represents the input term. The prediction error covariance at the time i is

where ![]() is the covariance of

is the covariance of ![]() , which is

, which is

if ![]() is a white random sequence. Suppose the initial prediction error covariance is 0, that is

is a white random sequence. Suppose the initial prediction error covariance is 0, that is

Then ![]() can be generated recursively according to Equation (5.19). By Equation (5.4),

can be generated recursively according to Equation (5.19). By Equation (5.4), ![]() can be set to 0. Also suppose the sample interval is 1, that is

can be set to 0. Also suppose the sample interval is 1, that is

This example will be extended to a complete example of online estimation with a discrete Kalman filter by establishing the measurement model in Example 5.4.

5.2.1.3 Linear-Gaussian Measurement Models

A special form of Equation (5.8) is the linear measurement model, which takes the following form:

where H(i) is the so-called measurement matrix, which is an n × m matrix and v(i) is the measurement noise. It is a sequence of random vectors of dimension n. Obviously, the measurement noise represents the noise sources in the sensory system. Examples are thermal noise in a sensor and the quantization errors of an AD converter.

The general assumption is that the measurement noise is a zero mean, white random sequence with normal distribution. In addition, the sequence is supposed to have no correlation between the measurement noise and the process noise:

where Cv(i) is the covariance matrix of v(i). Cv(i) specifies the density of v(i) in full.

The measurement system is time invariant if neither H nor Cv depends on i.

5.2.1.4 The Discrete Kalman Filter

The concepts developed in the previous section are sufficient to transform the general scheme presented in Section 5.1 into a practical solution. In order to develop the estimator, first the initial condition valid for i = 0 must be established. In the general case, this condition is defined in terms of the probability density p(x(0)) for x(0). Assuming a normal distribution for x(0) it suffices to specify only the expectation E[x(0)] and the covariance matrix Cx(0). Hence, the assumption is that these parameters are available. If not, we can set E[x(0)] = 0 and let Cx(0) approach infinity, that is Cx(0) → ∞ I. Such a large covariance matrix represents the lack of prior knowledge.

The next step is to establish the posterior density p(x(0)|z(0)) from which the optimal estimate for x(0) follows. At this point, we enter the loop of Figure 5.2. Hence, we calculate the density p(x(1)|z(0)) of the next state and process the measurement z(1) resulting in the updated density p(x(1)|z(0), z(1)) = p(x(1)|Z(1)). From that, the optimal estimate for x(1) follows. This procedure has to be iterated for all of the next time cycles.

The representation of all the densities that are involved can be given in terms of expectations and covariances. The reason is that any linear combination of Gaussian random vectors yields a vector that is also Gaussian. Therefore, both p(x(i + 1)|Z(i)) and p(x(i)|Z(i)) are fully represented by their expectations and covariances. In order to discriminate between the two situations a new notation is needed. From now on, the conditional expectation E[x(i)|Z(j)] will be denoted by ![]() . It is the expectation associated with the conditional density p(x(i)|Z(j)). The covariance matrix associated with this density is denoted by C(i|j).

. It is the expectation associated with the conditional density p(x(i)|Z(j)). The covariance matrix associated with this density is denoted by C(i|j).

The update, that is the determination of p(x(i)|Z(i)) given p(x(i)|Z(i − 1)), follows from Section 4.1.5, where it has been shown that the unbiased linear MMSE estimate in the linear-Gaussian case equals the MMSE estimate, and that this estimate is the conditional expectation. Application of Equations (4.33) and (4.45) to (5.20) and (5.21) gives

The interpretation is as follows: ![]() is the predicted measurement. It is an unbiased estimate of z(i) using all information from the past. The so-called innovation matrix S(i) represents the uncertainty of the predicted measurement. The uncertainty is due to two factors: the uncertainty of x(i) as expressed by C(i|i − 1) and the uncertainty due to the measurement noise v(i) as expressed by Cv(i). The matrix K(i) is the Kalman gain matrix. This matrix is large when S(i) is small and C(i|i − 1)HT(i) is large, that is when the measurements are relatively accurate. When this is the case, the values in the error covariance matrix C(i|i) will be much smaller than C(i|i − 1).

is the predicted measurement. It is an unbiased estimate of z(i) using all information from the past. The so-called innovation matrix S(i) represents the uncertainty of the predicted measurement. The uncertainty is due to two factors: the uncertainty of x(i) as expressed by C(i|i − 1) and the uncertainty due to the measurement noise v(i) as expressed by Cv(i). The matrix K(i) is the Kalman gain matrix. This matrix is large when S(i) is small and C(i|i − 1)HT(i) is large, that is when the measurements are relatively accurate. When this is the case, the values in the error covariance matrix C(i|i) will be much smaller than C(i|i − 1).

The prediction, that is the determination of p(x(i + 1)|Z(i)) given p(x(i)|Z(i)), boils down to finding out how the expectation ![]() and the covariance matrix C(i|i) propagate to the next state. Using equations (5.13) and (5.15) we have

and the covariance matrix C(i|i) propagate to the next state. Using equations (5.13) and (5.15) we have

At this point, we increment the counter and x(i + 1|i) and C(i + 1|i) become x(i|i − 1) and C(i|i − 1). These recursive equations are generally referred to as the discrete Kalman filter (DKF).

In the Gaussian case, it does not matter much which optimality criterion we select. MMSE estimation, MMAE estimation and MAP estimation yield the same result, that is the conditional mean. Hence, the final estimate is found as ![]() . It is an absolute unbiased estimate and its covariance matrix is C(i|i). Therefore, this matrix is often called the error covariance matrix.

. It is an absolute unbiased estimate and its covariance matrix is C(i|i). Therefore, this matrix is often called the error covariance matrix.

In the time invariant case, and assuming that the Kalman filter is stable, the error covariance matrix converges to a constant matrix. In that case, the innovation matrix and the Kalman gain matrix become constant as well. The filter is said to be in the steady state. The steady state condition simply implies that C(i + 1|i) = C(i|i − 1). If the notation for this matrix is shortened to P, then Equations (5.22) and (5.23) lead to the following equation:

Equation (5.24) is known as the discrete algebraic Ricatti equation. Usually, it is solved numerically. Its solution implicitly defines the steady state solution for S, K and C.

Example 5.4 Application to the motion tracking In this example we reconsider the motion tracking described in Example 5.3. We model the measurements with the model: ![]() where

where ![]() is the constant matrix

is the constant matrix

Figure 5.3 shows the results of the motion tracking. The covariance of the measurement noise is ![]() . The filter is initiated with

. The filter is initiated with ![]() to be the actual position of the object. Recall that in Example 5.3, Cx(0) is set to be 1. It can be seen from Figure 5.3 that if both system error and measurement error are white noise with covariance 1, the motion tracking works quite well.

to be the actual position of the object. Recall that in Example 5.3, Cx(0) is set to be 1. It can be seen from Figure 5.3 that if both system error and measurement error are white noise with covariance 1, the motion tracking works quite well.

Figure 5.3 Motion tracking: both system and measurement errors are white noises with covariance 1.

Listing 5.1 is the code of this example.

Listing 5.1 Discrete Kalman filter for motion tracking

clear

x=(1:80);

for idx = 1: length(x)

y(idx)=(idx-22)*(idx-22)/20;

end

y=2*randn(1,length(x)) + y;

kalman = [];

for idx = 1: length(x)

location = [x(idx),y(idx),0,0];

if isempty(kalman)

stateModel = [1 0 1 0;0 1 0 1;0 0 1 0;0 0 0 1];

measurementModel = [1 0 0 0;0 1 0 0;0 0 1 0;0 0 0 1];

kalman = vision.KalmanFilter(stateModel,measurementModel,

'ProcessNoise',1,'MeasurementNoise',1);

kalman.State = location;

else

trackedLocation = predict(kalman);

plot(location(1),location(2),'k+');

trackedLocation = correct(kalman,location);

pause(0.2);

plot(trackedLocation(1),trackedLocation(2),'ro');

end

endFor comparison, Figure 5.4 depicts the tracking results when the system noise becomes 1 × 10−4 (=0.0001) while the measurement noise becomes 4. This time the performance is much worse after time step 28.

Figure 5.4 Motion tracking: measurement errors are white noise with covariance 4.

5.2.2 Suboptimal Solutions for Non-linear Systems

This section extends the discussion on state estimation to the more general case of non-linear systems and non-linear measurement functions:

The vector f( ·, ·, ·) is a non-linear, time variant function of the state x(i) and the control vector u(i). The control vector is a deterministic signal. Since u(i) is fully known, it only causes an explicit time dependency in f( ·, ·, ·). Without loss of generality, the notation can be shortened to f(x(i), i) because such an explicit time dependency is already implied in that shorter notation. If no confusion can occur, the abbreviation f(x(i)) will be used instead of f(x(i), i) even if the system does depend on time. As before, w(i) is the process noise. It is modelled as zero mean, Gaussian white noise with covariance matrix Cw(i). The vector h( ·, ·) is a nonlinear measurement function. Here too, if no confusion is possible, the abbreviation h(x(i)) will be used covering both the time variant and the time invariant case. v(i) represents the measurement noise, modelled as zero mean, Gaussian white noise with covariance matrix Cv(i).

Any Gaussian random vector that undergoes a linear operation retains its Gaussian distribution. A linear operator only affects the expectation and the covariance matrix of that vector. This property is the basis of the Kalman filter. It is applicable to linear-Gaussian systems and it permits a solution that is entirely expressed in terms of expectations and covariance matrices. However, the property does not hold for non-linear operations. In non-linear systems, the state vectors and the measurement vectors are not Gaussian distributed, even though the process noise and the measurement noise might be. Consequently, the expectation and the covariance matrix do not fully specify the probability density of the state vector. The question is then how to determine this non-Gaussian density and how to represent it in an economical way. Unfortunately, no general answer exists to this question.

This section seeks the answer by assuming that the non-linearities of the system are smooth enough to allow linear or quadratic approximations. Using these approximations, Kalman-like filters become within reach. These solutions are suboptimal since there is no guarantee that the approximations are close.

An obvious way to get the approximations is by application of a Taylor series expansion of the functions. Ignorance of the higher-order terms of the expansion gives the desired approximation. The Taylor series exists by virtue of the assumed smoothness of the non-linear function; it does not work out if the non-linearity is a discontinuity, that is saturation, dead zone, hysteresis, and so on. The Taylor series expansions of the system equations are as follows:

where ei is the Cartesian basis vector with appropriate dimension. The ith element of ei is one; the others are zeros; ei can be used to select the ith element of a vector: eTix = xi. F(x) and H(x) are Jacobian matrices. Fixx(x) and Hjxx(x) are Hessian matrices. These matrices are defined in Appendix B.4. HOT are the higher-order terms. The quadratic approximation arises if the higher-order terms are ignored. If, in addition, the quadratic term is ignored, the approximation becomes linear, e.g. ![]() .

.

5.2.2.1 The Linearized Kalman Filter

The simplest approximation occurs when the system equations are linearized around some fixed value ![]() of x(i). This approximation is useful if the system is time invariant and stable, and if the states swing around an equilibrium state. Such a state is the solution of

of x(i). This approximation is useful if the system is time invariant and stable, and if the states swing around an equilibrium state. Such a state is the solution of

Defining ![]() , the linear approximation of the state equation (5.25) becomes

, the linear approximation of the state equation (5.25) becomes ![]() . After some manipulations:

. After some manipulations:

By interpreting the term ![]() as a constant control input and by compensating the offset term

as a constant control input and by compensating the offset term ![]() in the measurement vector, these equations become equivalent to Equations (5.13) and (5.20). This allows the direct application of the DKF as given in Equations (5.22) and (5.23).

in the measurement vector, these equations become equivalent to Equations (5.13) and (5.20). This allows the direct application of the DKF as given in Equations (5.22) and (5.23).

Many practical implementations of the discrete Kalman filter are inherently linearized Kalman filters because physical processes are seldom exactly linear and often a linear model is only an approximation of the real process.

Example 5.5 A linearized model for volume density estimation In Section 5.1.1 we introduced the non-linear, non-Gaussian problem of the volume density estimation of a mix in the process industry (Example 5.2). The model included a discrete state variable to describe the on/off regulation of the input flow. We will now replace this model by a linear feedback mechanism:

The liquid input flow has now been modelled by f1(i) = α(V0 − V(i)) + w1(i). The constant V0 = Vref + (c − f2)/α (with ![]() realizes the correct mean value of V(i). The random part w1(i) of f1(i) establishes a first-order AR model of V(i), which is used as a rough approximation of the randomness of f1(i). The substance input flow f2(i), which in Example 5.2 appears as chunks at some discrete points of time, is now modelled by

realizes the correct mean value of V(i). The random part w1(i) of f1(i) establishes a first-order AR model of V(i), which is used as a rough approximation of the randomness of f1(i). The substance input flow f2(i), which in Example 5.2 appears as chunks at some discrete points of time, is now modelled by ![]() , that is a continuous flow with some randomness.

, that is a continuous flow with some randomness.

The equilibrium is found as the solution of V(i + 1) = V(i) and D(i + 1) = D(i). The results are

The expressions for the Jacobian matrices are

The considered measurement system consists of two sensors:

- A level sensor that measures the volume V(i) of the barrel.

- A radiation sensor that measures the density D(i) of the output flow.

The latter uses the radiation of some source, for example X-rays, that is absorbed by the fluid. According to Beer–Lambert's law, Pout = Pinexp ( − μD(i)), where μ is a constant depending on the path length of the ray and on the material. Using an optical detector the measurement function becomes z = Uexp ( − μD) + v with U a constant voltage. With that, the model of the two sensors becomes

with the Jacobian matrix:

The best fitted parameters of this model are as follows:

| Volume | Substance | Output | Measurement |

| control | flow | flow | system |

| Δ = 1 (s) | Vref = 4000 (l) | ||

| V0 = 4001 (l) | c = 1 (l/s) | U = 1000 (V) | |

| α = 0.95 (1/s) | μ = 100 | ||

Figure 5.5 shows the real states (obtained from a simulation using the model from Example 5.2), observed measurements, estimated states and estimation errors. It can be seen that:

- The density can only be estimated if the real density is close to the equilibrium. In every other region, the linearization of the measurement is not accurate enough.

- The estimator is able to estimate the mean volume but cannot keep track of the fluctuations. The estimation error of the volume is much larger than indicated by the 1σ boundaries (obtained from the error covariance matrix). The reason for the inconsistent behaviour is that the linear-Gaussian AR model does not fit well enough.

Figure 5.5 Linearized Kalman filtering applied to the volume density estimation problem.

5.2.2.2 The Extended Kalman Filter

A straightforward generalization of the linearized Kalman filter occurs when the equilibrium point ![]() is replaced with a nominal trajectory

is replaced with a nominal trajectory ![]() , recursively defined as

, recursively defined as

Although the approach is suitable for time variant systems, it is not often used. There is another approach with almost the same computational complexity, but with better performance. That approach is the extended Kalman filter (EKF).

Again, the intention is to keep track of the conditional expectation x(i|i) and the covariance matrix C(i|i). In the linear-Gaussian case, where all distributions are Gaussian, the conditional mean is identical to the MMSE estimate (= minimum variance estimate), the MMAE estimate and the MAP estimate (see Section 4.1.3). In the present case, the distributions are not necessarily Gaussian and the solutions of the three estimators do not coincide. The extended Kalman filter provides only an approximation of the MMSE estimate.

Each cycle of the extended Kalman filter consists of a ‘one step ahead’ prediction and an update, as before. However, the tasks are much more difficult now because the calculation of, for instance, the ‘one step ahead’ expectation:

requires the probability density p(x(i + 1)|Z(i)) (see Equation (5.10)). However, as stated before, it is not clear how to represent this density. The solution of the EKF is to apply a linear approximation of the system function. With that, the ‘one step ahead’ expectation can be expressed entirely in terms of the moments of p(x(i)|Z(i)).

The EKF uses linear approximations of the system functions using the first two terms of the Taylor series expansions. Suppose that at time i we have the updated estimate ![]() and the associated approximate error covariance matrix C(i|i). The word ‘approximate’ expresses the fact that our estimates are not guaranteed to be unbiased due to the linear approximations. However, we do assume that the influence of the errors induced by the linear approximations is small. If the estimation error is denoted by e(i), then

and the associated approximate error covariance matrix C(i|i). The word ‘approximate’ expresses the fact that our estimates are not guaranteed to be unbiased due to the linear approximations. However, we do assume that the influence of the errors induced by the linear approximations is small. If the estimation error is denoted by e(i), then

In these expressions, ![]() is our estimate. It is available at time i and therefore is deterministic. Only e(i) and w(i) are random. Taking the expectation on both sides of (5.28), we obtain approximate values for the ‘one step ahead’ prediction:

is our estimate. It is available at time i and therefore is deterministic. Only e(i) and w(i) are random. Taking the expectation on both sides of (5.28), we obtain approximate values for the ‘one step ahead’ prediction:

We have approximations instead of equalities for two reasons. First, we neglect the possible bias of ![]() , that is a non-zero mean of e(i). Second, we ignore the higher-order terms of the Taylor series expansion.

, that is a non-zero mean of e(i). Second, we ignore the higher-order terms of the Taylor series expansion.

Upon incrementing the counter, ![]() becomes

becomes ![]() and we now have to update the prediction

and we now have to update the prediction ![]() by using a new measurement z(i) in order to get an approximation of the conditional mean

by using a new measurement z(i) in order to get an approximation of the conditional mean ![]() . First we calculate the predicted measurement

. First we calculate the predicted measurement ![]() based on

based on ![]() using a linear approximation of the measurement function, that is

using a linear approximation of the measurement function, that is ![]() . Next, we calculate the innovation matrix S(i) using that same approximation. Then we apply the update according to the same equations as in the linear-Gaussian case.

. Next, we calculate the innovation matrix S(i) using that same approximation. Then we apply the update according to the same equations as in the linear-Gaussian case.

The predicted measurements are

The approximation is based on the assumption that E[e(i|i − 1)]≅0 and on the Taylor series expansion of h( · ). The innovation matrix becomes

From this point on, the update continues as in the linear-Gaussian case (see Equation (5.22)):

Despite the similarity of the last equation with respect to the linear case, there is an important difference. In the linear case, the Kalman gain K(i) depends solely on deterministic parameters: H(i), F(i), Cw(i), Cv(i) and Cx(0). It does not depend on the data. Therefore, K(i) is fully deterministic. It could be calculated in advance instead of online. In the EKF, the gains depend upon the estimated states ![]() through

through ![]() , and thus also upon the measurements z(i). Therefore, the Kalman gains are random matrices. Two runs of the extended Kalman filter in two repeated experiments lead to two different sequences of the Kalman gains. In fact, this randomness of K(i) can cause unstable behaviour.

, and thus also upon the measurements z(i). Therefore, the Kalman gains are random matrices. Two runs of the extended Kalman filter in two repeated experiments lead to two different sequences of the Kalman gains. In fact, this randomness of K(i) can cause unstable behaviour.

Example 5.6 The extended Kalman filter for volume density estimation Application of the EKF to the density estimation problem introduced in Example 5.2 and represented by a linear-Gaussian model in Example 5.5 gives the results as shown in Figure 5.6. Compared with the results of the linearized KF (Figure 5.5) the density errors are now much more consistent with the 1σ boundaries obtained from the error covariance matrix. However, the EKF is still not able to cope with the non-Gaussian disturbances of the volume.

Note also that the 1σ boundaries do not reach a steady state. The filter remains time variant, even in the long term.

Figure 5.6 Extended Kalman filtering for the volume density estimation problem.

5.2.2.3 The Iterated Extended Kalman Filter

A further improvement of the update step in the extended Kalman filter is within reach if the current estimate ![]() is used to get an improved linear approximation of the measurement function yielding an improved predicted measurement

is used to get an improved linear approximation of the measurement function yielding an improved predicted measurement ![]() . In turn, such an improved predicted measurement can improve the current estimate. This suggests an iterative approach.

. In turn, such an improved predicted measurement can improve the current estimate. This suggests an iterative approach.

Let ![]() be the predicted measurement in the ℓth iteration and let

be the predicted measurement in the ℓth iteration and let ![]() be the ℓth improvement of

be the ℓth improvement of ![]() . The iteration is initiated with

. The iteration is initiated with ![]() . A naive approach for the calculation of

. A naive approach for the calculation of ![]() simply uses a relinearization of h( · ) based on

simply uses a relinearization of h( · ) based on ![]() :

:

Hopefully, the sequence ![]() , with ℓ = 0, 1, 2, …, converges to a final solution.

, with ℓ = 0, 1, 2, …, converges to a final solution.

A better approach is the so-called iterated extended Kalman filter (IEKF). Here, the approximation is made that both the predicted state and the measurement noise are normally distributed. With that, the posterior probability density (Equation (5.11))

being the product of two Gaussians, is also a Gaussian. Thus, the MMSE estimate coincides with the MAP estimate and the task is now to find the maximum of p(x(i)|Z(i)). Equivalently, we maximize its logarithm. After the elimination of the irrelevant constants and factors, it all boils down to minimizing the following function w.r.t. x:

For brevity, the following notation has been used:

The strategy to find the minimum is to use the Newton–Raphson iteration starting from ![]() . In the ℓth iteration step, we already have an estimate

. In the ℓth iteration step, we already have an estimate ![]() obtained from the previous step. We expand f(x) in a second-order Taylor series approximation:

obtained from the previous step. We expand f(x) in a second-order Taylor series approximation:

where ∂f/∂x is the gradient and ∂2f/∂x2 is the Hessian of f(x) (see Appendix B.4). The estimate ![]() is the minimum of the approximation. It is found by equating the gradient of the approximation to zero. Differentiation of Equation (5.30) w.r.t. x gives

is the minimum of the approximation. It is found by equating the gradient of the approximation to zero. Differentiation of Equation (5.30) w.r.t. x gives

The Jacobian and Hessian of Equation (5.29), in explicit form, are

where ![]() is the Jacobian matrix of h(x) evaluated at

is the Jacobian matrix of h(x) evaluated at ![]() . Substitution of Equation (5.32) in (5.31) yields the following iteration scheme:

. Substitution of Equation (5.32) in (5.31) yields the following iteration scheme:

The result after one iteration, that is ![]() , is identical to the ordinary extended Kalman filter. The required number of further iterations depends on how fast

, is identical to the ordinary extended Kalman filter. The required number of further iterations depends on how fast ![]() converges. Convergence is not guaranteed, but if the algorithm converges, usually a small number of iterations suffice. Therefore, it is common practice to fix the number of iterations to some practical number L. The final result is set to the last iteration, that is

converges. Convergence is not guaranteed, but if the algorithm converges, usually a small number of iterations suffice. Therefore, it is common practice to fix the number of iterations to some practical number L. The final result is set to the last iteration, that is ![]() .

.

Equation (4.44) shows that the factor (C− 1p + HℓTC− 1vHℓ)− 1 is the error covariance matrix associated with x(i|i):

This insight gives another connotation to the last term in Equation (5.33) because, in fact, the term C(i|i)HTℓCv− 1 can be regarded as the Kalman gain matrix Kℓ during the ℓth iteration (see Equation (4.20)).

Example 5.7 The iterated EKF for volume density estimation In the previous example, the EKF was applied to the density estimation problem introduced in Example 5.2. The filter was initiated with the equilibrium state as prior knowledge, that is ![]() . Figure 5.7(b) shows the transient that occurs if the EKF is initiated with E[x(0)] = [2000 0]T. It takes about 40(s) before the estimated density reaches the true densities. This slow transient is due to the fact that in the beginning the linearization is poor. The iterated EKF is of much help here. Figure 5.7(c) shows the results. From the first measurement, the estimated density is close to the real density. There is no transient.

. Figure 5.7(b) shows the transient that occurs if the EKF is initiated with E[x(0)] = [2000 0]T. It takes about 40(s) before the estimated density reaches the true densities. This slow transient is due to the fact that in the beginning the linearization is poor. The iterated EKF is of much help here. Figure 5.7(c) shows the results. From the first measurement, the estimated density is close to the real density. There is no transient.

Figure 5.7 Iterated extended Kalman filtering for the volume density estimation problem. (a) Measurements. (b) Results from the EKF. (c) Results from the iterated EKF (number of iterations = 20).

The extended Kalman filter is widely used because for a long period of time no viable alternative solution existed. Nevertheless, it has numerous disadvantages:

- It only works well if the various random vectors are approximately Gaussian distributed. For complicated densities, the expectation–covariance representation does not suffice.

- It only works well if the non-linearities of the system are not too severe because otherwise the Taylor series approximations fail. Discontinuities are deadly for the EKF's proper functioning.

- Recalculating the Jacobian matrices at every time step is computationally expensive.

- In some applications, it is too difficult to find the Jacobian matrix analytically. In these cases, numerical approximations of the Jacobian matrix are needed. However, this introduces other types of problems because now the influence of having approximations rather than the true values comes in.

- In the EKF, the Kalman gain matrix depends on the data. With that, the stability of the filter is no longer assured. Moreover, it is very hard to analyse the behaviour of the filter.

- The EKF does not guarantee unbiased estimates. In addition, the calculated error covariance matrices do not necessarily represent the true error covariances. The analysis of these effects is also hard.

5.3 Finite Discrete-Time State Variables

We consider physical processes that are described at any time as being in one of a finite number of states. Examples of such processes are:

- The sequence of strokes of a tennis player during a game, for example service, backhand-volley, smash, etc.

- The sequence of actions that a tennis player performs during a particular stroke.

- The different types of manoeuvres of an airplane, for example a linear flight, a turn, a nose dive, etc.

- The sequence of characters in a word and the sequence of words in a sentence.

- The sequence of tones of a melody as part of a musical piece.

- The emotional modes of a person: angry, happy, astonished, etc.

These situations are described by a state variable x(i) that can only take a value from a finite set of states Ω = {ω1, …, ωK}.

The task is to determine the sequence of states that a particular process goes through (or has gone through). For that purpose, at any time measurements z(i) are available. Often the output of the sensors is real valued, but nevertheless we will assume that the measurements take their values from a finite set. Thus, some sort of discretization must take place that maps the range of the sensor data on to a finite set Z = {ϑ1, …, ϑN}.

This section first introduces a state space model that is often used for discrete state variables, that is the hidden Markov model. This model will be used in the next subsections for online and offline estimation of the states.

5.3.1 Hidden Markov Models

A hidden Markov model (HMM) is an instance of the state space model discussed in Section 5.1.1. It describes a sequence x(i) of discrete states starting at time i = 0. The sequence is observed by means of measurements z(i) that can only take values from a finite set. The model consists of the following ingredients:

- The set Ω containing the K states ωk that x(i) can take.

- The set Z containing the N symbols ϑn that z(i) can take.

- The initial state probability p0(x(0)).

- The state transition probability pt(x(i)|x(i − 1)).

- The observation probability pz(z(i)|x(i)).

The expression p0(x(0)) with x(0) ∈ {1, …, K} denotes the probability that the random state variable ![]() takes the value ωx(0). Thus

takes the value ωx(0). Thus ![]() . Similar conventions hold for other expressions, like pt(x(i)|x(i − 1)) and pz(z(i)|x(i)).

. Similar conventions hold for other expressions, like pt(x(i)|x(i − 1)) and pz(z(i)|x(i)).

The Markov condition of an HMM states that p(x(i)|x(0), …, x(i − 1)), that is the probability of x(i) under the condition of all previous states, equals the transition probability. The assumption of the validity of the Markov condition leads to a simple, yet powerful model. Another assumption of the HMM is that the measurements are memoryless. In other words, z(i) only depends on x(i) and not on the states at other time points: p(z(j)|x(0), …, x(i)) = p(z(j)|x(j)).

An ergodic Markov model is one for which the observation of a single sequence x(0), x(1), …, x(∞) suffices to determine all the state transition probabilities. A suitable technique for that is histogramming, that is the determination of the relative frequency with which a transition occurs (see Section 6.2.5). A sufficient condition for ergodicity is that all state probabilities are non-zero. In that case, all states are reachable from everywhere within one time step. Figure 5.8 is an illustration of an ergodic model.

Figure 5.8 A three-state ergodic Markov model.

Another type is the so-called left–right model (see Figure 5.9). This model has the property that the state index k of a sequence is non-decreasing as time proceeds. Such is the case when pt(k|ℓ) = 0 for all k < l. In addition, the sequence always starts with ω1 and terminates with ωK. Thus, p0(k) = δ(k, 1) and pt(k|K) = δ(k, K). Sometimes, an additional constraint is that large jumps are forbidden. Such a constraint is enforced by letting pt(k|ℓ) = 0 for all k > ℓ + Δ. Left–right models find applications in processes where the sequence of states must obey some ordering over time. An example is the stroke of a tennis player. For instance, the service of the player follows a sequence like: ‘take position behind the base line’, ‘bring racket over the shoulder behind the back’, ‘bring up the ball with the left arm’, etc.

Figure 5.9 A four-state left–right model.

In a hidden Markov model the state variable x(i) is observable only through its measurements z(i). Now, suppose that a sequence Z(i) = {z(0), z(1), …, z(i)} of measurements has been observed. Some applications require the numerical evaluation of the probability p(Z(i)) of a particular sequence. An example is the recognition of a stroke of a tennis player. We can model each type of stroke by an HMM that is specific for that type, thus having as many HMMs as the types of strokes. In order to recognize the stroke, we calculate for each type of stroke the probability p(Z(i)|type of stroke) and select the one with maximum probability.

For a given HMM and a fixed sequence Z(i) of acquired measurements, p(Z(i)) can be calculated by using the joint probability of having the measurements Z(i) together with a specific sequence of state variables, that is X(i) = {x(0), x(1), …, x(i)}. First we calculate the joint probability p(X(i), Z(i)):

where it is assumed that the measurement z(i) only depends on x(i). Then p(Z(i)) follows from summation over all possible state sequences:

Since there are Ki different state sequences, the direct implementation of Equations (5.34) and (5.35) requires on the order of (i + 1)Ki + 1 operations. Even for modest values of i, the number of operations is already impractical.

A more economical approach is to calculate p(Z(i)) by means of recursion. Consider the probability p(Z(i), x(i)). This probability can be calculated from the previous time step i − 1 using the following expression:

The recursion must be initiated with p(z(0), x(0)) = p0(x(0))pz(z(0)|x(0)). The probability p(Z(i)) can be retrieved from p(Z(i), x(i)) by

The so-called forward algorithm uses the array F(i, x(i)) = p(Z(i), x(i)) to implement the recursion.

Algorithm 5.1 The forward algorithm

- Initialization:

- Recursion:

- for x(i) = 1, …, K

- for x(i) = 1, …, K

In each recursion step, the sum consists of K terms and the number of possible values of x(i) is also K. Therefore, such a step requires on the order of K2 calculations. The computational complexity for i time steps is on the order of (i + 1)K2.

5.3.2 Online State Estimation

We now focus our attention on the situation of having a single HMM, where the sequence of measurements is processed online so as to obtain real-time estimates ![]() of the states. This problem completely fits within the framework of Section 5.1. Therefore, the solution provided by Equations (5.10) and (5.11) is valid, albeit that the integrals must be replaced by summations.

of the states. This problem completely fits within the framework of Section 5.1. Therefore, the solution provided by Equations (5.10) and (5.11) is valid, albeit that the integrals must be replaced by summations.

However, in line with the previous section, an alternative solution will be presented that is equivalent to the one in Section 5.1. The alternative solution is obtained by deduction of the posterior probability:

In view of the fact that Z(i) are the acquired measurements (and are known and fixed) the maximization of p(x(i)|Z(i)) is equivalent to the maximization of p(Z(i), x(i)). Therefore, the MAP estimate is found as

The probability p(Z(i), x(i)) follows from the forward algorithm.

Example 5.8 Online licence plate detection in videos This example demonstrates the ability of HMMs to find the licence plate of a vehicle in a video. Figure 5.10 is a typical example of one frame of such a video. The task is to find all the pixels that correspond to the licence plate. Such a task is the first step in a licence plate recognition system.

A major characteristic of video is that a frame is scanned line-by-line and that each video line is acquired from left to right. The real-time processing of each line individually is preferable because the throughput requirement of the application is demanding. Therefore, each line is individually modelled as an HMM. The hidden state of a pixel is determined by whether the pixel corresponds to a licence plate or not.

The measurements are embedded in the video line (see Figure 5.11). However, the video signal needs to be processed in order to map it on to a finite measurement space. Simply quantizing the signal to a finite number of levels does not suffice because the amplitudes of the signal alone are not very informative. The main characteristic of a licence plate in a video line is a typical pattern of dark–bright and bright–dark transitions due to the dark characters against a bright background, or vice versa. The image acquisition is such that the camera–object distance is about constant for all vehicles. Therefore, the statistical properties of the succession of transitions are typical for the imaged licence plate regardless of the type of vehicle.

One possibility to decode the succession of transitions is to apply a filter bank and to threshold the output of each filter, thus yielding a set of binary signals. In Figure 5.11, three high-pass filters have been applied with three different cut-off frequencies. Using high-pass filters has the advantage that the thresholds can be zero. Therefore, the results do not depend on the contrast and brightness of the image. The three binary signals define a measurement signal z(i) consisting of N = 8 symbols. Figure 5.12 shows these symbols for one video line. Here, the symbols are encoded as integers from 1 up to 8.

Due to the spatial context of the three binary signals we cannot model the measurements as memoryless symbols. The trick to avoid this problem is to embed the measurement z(i) in the state variable x(i). This can be done by encoding the state variable as integers from 1 up to 16. If i is not a licence plate pixel, we define the state as x(i) = z(i). If i is a licence plate pixel, we define x(i) = z(i) + 8. With that, K = 16. Figure 5.12 shows these states for one video line.

Figure 5.10 Licence plate detection.

Figure 5.11 Definitions of the measurements associated with a video line.

Figure 5.12 States and measurements of a video line.

The embedding of the measurements in the state variables is a form of state augmentation. Originally, the number of states was 2, but after this particular state augmentation, the number becomes 16. The advantage of the augmentation is that the dependence, which does exist between any pair z(i), z(j) of measurements, is now properly modelled by means of the transition probability of the states, yet the model still meets all the requirements of an HMM. However, due to our definition of the state, the relation between state and measurement becomes deterministic. The observation probability degenerates into

In order to define the HMM, the probabilities p0(k) and pt(k|ℓ) must be specified. We used a supervised learning procedure to estimate p0(k) and pt(k|ℓ). For that purpose, 30 images of 30 different vehicles, similar to the one in Figure 5.10, were used. For each image, the licence plate area was manually indexed. Histogramming was used to estimate the probabilities.

Application of the online estimation to the video line shown in Figures 5.11 and 5.12 yields results like those shown in Figure 5.13. The figure shows the posterior probability for having a licence plate. According to our definition of the state, the posterior probability of having a licence plate pixel is p(x(i) > 8|Z(i)). Since by definition online estimation is causal, the rise and decay of this probability shows a delay. Consequently, the estimated position of the licence plate is biased towards the right. Figure 5.14 shows the detected licence plate pixels.

Figure 5.13 Online state estimation.

Figure 5.14 Detected licence plate pixels using online estimation.

5.3.3 Offline State Estimation

In non-real-time applications the sequence of measurements can be buffered before the state estimation takes place. The advantage is that not only ‘past and present’ measurements can be used but also ‘future’ measurements. These measurements can prevent the delay that inherently occurs in online esti-mation.

The problem is formulated as follows. Given a sequence Z(I) = {z(0), …, z(I)} of I + 1 measurements of a given HMM, determine the optimal estimate of the sequence x(0), …, x(I) of the underlying states.

Up to now, the adjective ‘optimal’ meant that we determined the individual posterior probability P(x(i)|measurements) for each time point individually, and that some cost function was applied to determine the estimate with the minimal risk. For instance, the adoption of a uniform cost function for each state leads to an estimate that maximizes the individual posterior probability. Such an estimate minimizes the probability of having an erroneous decision for such a state.

Minimizing the error probabilities of all individually estimated states does not imply that the sequence of states is estimated with minimal error probability. It might even occur that a sequence of ‘individually best’ estimated states contains a forbidden transition, that is a transition for which pt(x(i)|x(i − 1)) = 0. In order to circumvent this, we need a criterion that involves all states jointly.

This section discusses two offline estimation methods. One is an ‘individually best’ solution. The other is an ‘overall best’ solution.

5.3.3.1 Individually Most Likely State

Here the strategy is to determine the posterior probability p(x(i)|Z(I)) and then to determine the MAP estimate: x(i|I) = arg max p(x(i)|Z(I)). As stated earlier, this method minimizes the error probabilities of the individual states. As such, it maximizes the expected number of correctly estimated states.

Section 5.3.1 discussed the forward algorithm, a recursive algorithm for the calculation of the probability p(x(i), Z(i)). We now introduce the backward algorithm, which calculates the probability p(z(i + 1), …, z(I)|x(i)). During each recursion step of the algorithm, the probability p(z(j), …, z(I)|x(j − 1)) is derived from p(z(j + 1), …, Z(I)|x(j)). The recursion proceeds as follows:

The algorithm starts with j = I and proceeds backwards in time, that s I, I − 1, I − 2, … until finally j = i + 1. In the first step, the expression p(z(I + 1)|x(I)) appears. Since that probability does not exist (because z(I + 1) is not available), it should be replaced by 1 to have the proper initialization.

The availability of the forward and backward probabilities suffices for the calculation of the posterior probability:

As stated earlier, the individually most likely state is the one that maximizes p(x(i)|Z(I)). The denominator of Equation (5.36) is not relevant for this maximization since it does not depend on x(i).

The complete forward–backward algorithm is as follows.

Algorithm 5.2 The forward–backward algorithm

- Perform the forward algorithm as given in Section 5.3.1, resulting in the array F(i, k) with i = 0, …, I and k = 1, …, K.

- Backward algorithm:

-

Initialization:

B(I, k) = 1 for k = 1, …, K

-

Recursion:

for i = I − 1, I − 2, …, 0 and x(i) = 1, …, K

-

- MAP estimation of the states:

The forward–backward algorithm has a computational complexity that is on the order of (I + 1)K2. The algorithm is therefore feasible.

5.3.3.2 The Most Likely State Sequence

A criterion that involves the whole sequence of states is the overall uniform cost function. The function is zero when the whole sequence is estimated without any error. It is unity if one or more states are estimated erroneously. Application of this cost function within a Bayesian framework leads to a solution that maximizes the overall posterior probability:

The computation of this most likely state sequence is done efficiently by means of a recursion that proceeds forwards in time. The goal of this recursion is to keep track of the following subsequences:

For each value of x(i), this formulation defines a particular partial sequence. Such a sequence is the most likely partial sequence from time zero and ending at a particular value x(i) at time i given the measurements z(0), …, z(i). Since x(i) can have K different values, there are K partial sequences for each value of i. Instead of using Equation (5.37), we can equivalently use

because p(X(i)|Z(i)) = p(X(i), Z(i))p(Z(i)) and Z(i) is fixed.

In each recursion step the maximal probability of the path ending in x(i) given Z(i) is transformed into the maximal probability of the path ending in x(i + 1) given Z(i + 1). For that purpose, we use the following equality:

Here the Markov condition has been used together with the assumption that the measurements are memoryless.

The maximization of the probability proceeds as follows:

The value of x(i) that maximizes p(x(0), …, x(i), x(i + 1), Z(i + 1)) is a function of x(i + 1):

The so-called Viterbi algorithm uses the recursive equation in (5.38) and the corresponding optimal state dependency expressed in (5.39) to find the optimal path. For that, we define the array

Algorithm 5.3 The Viterbi algorithm

-

Initialization:

for x(0) = 1, …, K

- Q(0, x(0)) = p0(x(0))pz(z(0)|x(0))

- R(0, x(0)) = 0

-

Recursion:

for i = 2, …, I and x(i) = 1, …, K

- Termination:

-

Backtracking:

for i = I − 1, I − 2, …, 0

The computational structure of the Viterbi algorithm is comparable to that of the forward algorithm. The computational complexity is also on the order of (i + 1)K2.

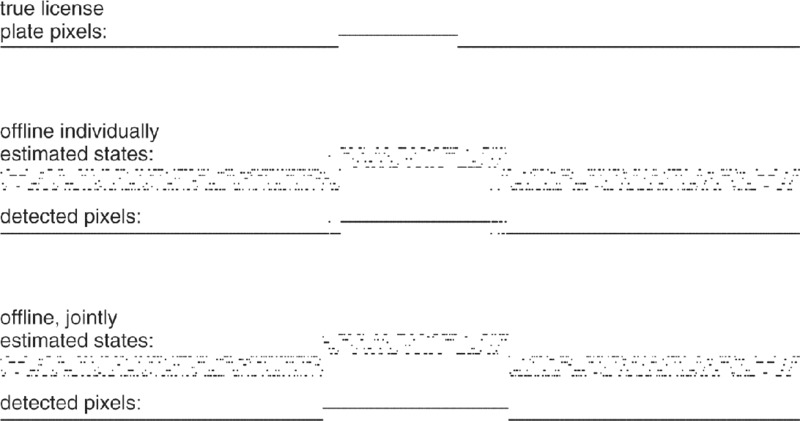

Example 5.9 Offline licence plate detection in videos Figure 5.15 shows the results of the two offline state estimators applied to the video line shown in Figure 5.11. Figure 5.16 provides the results of the whole image shown in Figure 5.10.

Both methods are able to prevent the delay that is inherent in online estimation. Nevertheless, both methods show some falsely detected licence plate pixels on the right side of the plate. These errors are caused by a sticker containing some text. Apparently, the statistical properties of the image of this sticker are similar to the one on a licence plate.

A comparison between the individually estimated states and the jointly estimated states shows that the latter are more coherent and that the former are more fragmented. Clearly, such a fragmentation increases the probability of having erroneous transitions of estimated states. However, generally the resulting erroneous regions are small. The jointly estimated states do not show many of these unwanted transitions. However, if they occur, then they are more serious because they result in a larger erroneous region.

Figure 5.15 Offline state estimation

Figure 5.16 Detected licence plate pixels using offline estimation. (a) Individually estimated states. (b) Jointly estimated states.

5.3.3.3 Matlab® Functions for HMM

The Matlab® functions for the analysis of hidden Markov models are found in the Statistics toolbox. There are five functions:

hmmgenerate: given pt( · | · ) and pz( · | · ), generate a sequence of states and observations.hmmdecode: given pt( · | · ), pz( · | · ) and a sequence of observations, calculate the posterior probabilities of the states.hmmestimate: given a sequence of states and observations, estimate pt( · | · ) and pz( · | · ).hmmtrain: given a sequence of observations, estimate pt( · | · ) and pz( · | · ).hmmviterbi: given pt( · | · ), pz( · | · ) and a sequence of observations, calculate the most likely state sequence.

The function hmmtrain() implements the so-called Baum–Welch algorithm.

5.4 Mixed States and the Particle Filter

Sections 5.2 and 5.3 focused on special cases of the general online estimation problem. The topic of Section 5.2 was infinite discrete state estimation and in particular the linear-Gaussian case or approximations of that. Section 5.3 discussed finite discrete state estimation. We now return to the general scheme of Figure 5.2. The current section introduces the family of particle filters (PF). It is a group of estimators that try to implement the general case. These estimators use random samples to represent the probability densities, just as in the case of Parzen estimation (see Section 6.3.1). Therefore, particle filters are able to handle non-linear, non-Gaussian systems, continuous states, discrete states and even combinations. In the sequel we use probability densities (which in the discrete case must be replaced by probability functions).

5.4.1 Importance Sampling

A Monte Carlo simulation uses a set of random samples generated from a known distribution to estimate the expectation of any function of that distribution. More specifically, let x(k), k = 1, …, K be samples drawn from a conditional probability density p(x|z). Then the expectation of any function g(x) can be estimated by

Under mild conditions, the right-hand side asymptotically approximates the expectation as K increases. For instance, the conditional expectation and covariance matrix are found by substitution of g(x) = x and ![]() , respect-ively.

, respect-ively.

In the particle filter, the set x(k) depends on the time index i. It represents the posterior density p(x(i)|Z(i)). The samples are called the particles. The density can be estimated from the particles by some kernel-based method, for instance, the Parzen estimator discussed in Section 6.3.1.

A problem in the particle filter is that we do not know the posterior density beforehand. The solution is to get the samples from some other density, say q(x), called the proposal density. The various members of the PF family differ (among other things) in their choice of this density. The expectation of g(x) w.r.t. p(x|z) becomes

where

The factor 1/p(z) is a normalizing constant. It can be eliminated as follows:

Using Equations (5.40) and (5.41) we can estimate E[g(x)|z] by means of a set of samples drawn from q(x):

Being the ratio of two estimates, E[g(x)|z] is a biased estimate. However, under mild conditions, E[g(x)|z] is asymptotically unbiased and consistent as K increases. One of the requirements is that q(x) overlaps the support of p(x).

Usually, the shorter notation for the unnormalized importance weights w(k) = w(x(k)) is used. The so-called normalized importance weights are ![]() . Using that, expression (5.42) simplifies to

. Using that, expression (5.42) simplifies to

5.4.2 Resampling by Selection

Importance sampling provides us with samples x(k) and weights w(k)norm. Taken together, they represent the density p(x|z). However, we can transform this representation to a new set of samples with equal weights. The procedure to do that is selection. The purpose is to delete samples with low weights and to retain multiple copies of samples with high weights. The number of samples does not change by this; K is kept constant. The various members from the PF family may differ in the way they select the samples. However, an often used method is to draw the samples with replacement according to a multinomial distribution with probabilities w(k)norm.

Such a procedure is easily accomplished by calculation of the cumulative weights:

We generate K random numbers r(k) with k = 1, …, K. These numbers must be uniformly distributed between 0 and 1. Then, the kth sample x(k)selected in the new set is a copy of the jth sample x(j), where j is the smallest integer for which w(j)cum ≥ r(k).

Figure 5.17 is an illustration. The figure shows a density p(x) and a proposal density q(x). Samples x(k) from q(x) can represent p(x) if they are provided with weights w(k)norm∝p(x(k))/q(x(k)). These weights are visualized in Figure 5.17(d) by the radii of the circles. Resampling by selection gives an unweighted representation of p(x). In Figure 5.17(e), multiple copies of one sample are depicted as a pile. The height of the pile stands for the multiplicity of the copy.

Figure 5.17 Representation of a probability density. (a) A density p(x). (b) The proposal density q(x). (c) The 40 samples of q(x). (d) Importance sampling of p(x) using the 40 samples of q(x). (e) Selected samples from (d) as an equally weighted sample representation of p(x).

5.4.3 The Condensation Algorithm

One of the simplest applications of importance sampling combined with resampling by selection is in the so-called condensation algorithm (‘conditional density optimization’). The algorithm follows the general scheme of Figure 5.2. The prediction density p(x(i)|Z(i − 1)) is used as the proposal density q(x). Therefore, at time i, we assume that a set x(k) is available that is an unweighted representation of p(x(i)|Z(i − 1)). We use importance sampling to find the posterior density p(x(i)|Z(i)). For that purpose we make the following substitutions in Equation (5.40):

The weights w(k)norm that define the representation of p(x(i)|z(i)) are obtained from

Next, resampling by selection provides an unweighted representation x(k)selected. The last step is the prediction. Using x(k)selected as a representation for p(x(i)|Z(i)), the representation of p(x(i + 1)|Z(i)) is found by generating one new sample x(k) for each sample x(k)selected using p(x(i + 1)|x(k)selected) as the density to draw from. The algorithm is as follows.

Algorithm 5.4 The condensation algorithm

- Initialization:

- Set i = 0

- Draw K samples x(k), k = 1, …, K, from the prior probability density p(x(0))

- Update using importance sampling:

- Set the importance weights equal to w(k) = p(z(i)|x(k))

- Calculate the normalized importance weights:

- Resample by selection:

- Calculate the cumulative weights

- For k = 1, …, K:

- Generate a random number r uniformly distributed in [0, 1]

- Find the smallest j such that w(j)cum ≥ r(k)

- Set x(k)selected = x(j)

- Calculate the cumulative weights