Introduction

This chapter covers the concepts of open virtualization on IBM Power Systems. It introduces the IBM PowerKVM version 2 virtualization stack and covers the following topics:

•Quick introduction to virtualization

•Introduction and basic concepts of PowerKVM

•IBM Power Systems

•IBM PowerKVM v2 software stack

•A comparison of IBM PowerKVM and IBM PowerVM®

•Terminology used throughout this book

1.1 IBM Power Systems

IBM Power Systems is a family of servers built for big data solutions by using advanced IBM POWER processors. This family of servers includes scale-out servers and enterprise class servers from small to very large configurations. Power Systems are known for having high availability and extreme performance, among many other advantages that are covered later in this section.

Only a subset of these servers is covered in this book. This subset is referred to as IBM scale-out systems, which include Linux-only servers that are based on the IBM POWER8 processor.

1.1.1 POWER8 processors

POWER8 is the most recent family of processors for the Power Systems. Each POWER8 chip is a high-end processor that can have up to 12 cores, two memory controllers per processor, and a PCI generation 3 controller, as shown in Figure 1-1.

The processor also has 96 MB of shared L3 cache plus 512 KB of L2 cache per core.

Figure 1-1 12-core IBM POWER8 architecture

The following features can augment performance of the IBM POWER8 processor:

•Support for DDR3 and DDR4 memory through memory buffer chips that offload the memory support from the IBM POWER8 memory controller

•L4 cache within the memory buffer chip that reduces the memory latency for local access to memory behind the buffer chip (the operation of the L4 cache is transparent to applications running on the POWER8 processor, and up to 128 MB of L4 cache can be available for each POWER8 processor)

•Hardware transactional memory

•On-chip accelerators, including on-chip encryption, compression, and random number generation accelerators

•Coherent Accelerator Processor Interface, which allows accelerators plugged into a PCIe slot to access the processor bus by using a low-latency, high-speed protocol interface

•Adaptive power management

•Microthreading, which allows up to four concurrent VMs to be dispatched simultaneously on a single core

1.1.2 IBM scale-out servers

IBM scale-out systems are a family of servers built for scale-out workloads, including Linux applications that support a complete stack of open software, ranging from the hypervisor to the cloud management.

The scale-out servers provide many benefits for cloud workloads, including security, simplified management, and virtualization capabilities. They are developed using open source methods.

At the time of publication, there are two base system models that are part of this family of servers:

•IBM Power System S812L (8247-21L)

•IBM Power System S822L (8247-22L)

IBM PowerKVM Version 2 is supported only on this family of machines.

IBM Power System S812L (8247-21L)

The S812L server, shown in Figure 1-2, is a powerful single-socket entry server. This server contains one POWER8 dual-chip module (DCM) that runs at 3.42 GHz (#EPLP4) or 3.02 GHz (#ELPD). This machine can support up to 12 IBM POWER8 cores, which provide up to 96 CPU threads when using SMT 8 mode.

Figure 1-2 Front view of the Power S812L

For more information about the S812L, see IBM Power Systems S812L and S822L Technical Overview and Introduction, REDP-5098.

IBM Power System S822L (8247-22L)

The S822L server, depicted in Figure 1-3, is a powerful two-socket server. This server supports up to two IBM POWER8 processors that run at 3.42 GHz (#ELP4) or 3.02 GHz (#ELPD), reaching up to 24 cores and 192 threads when configured with SMT 8.

Figure 1-3 Front view of the Power S822L

Figure 1-4 shows the rear view of both models.

Figure 1-4 S812L and S822L rear view

1.1.3 Power virtualization

IBM Power Systems servers have traditionally been virtualized with PowerVM, and this continues to be an option on IBM scale-out servers.

With the introduction of the Linux-only scale-out systems with POWER8 technology, a new virtualization mechanism is supported on Power Systems. This mechanism is known as a kernel-based virtual machine (KVM), and the port for Power Systems is called PowerKVM.

KVM is known as the de facto open source virtualization mechanism. It is currently used by many software companies.

IBM PowerKVM is a product that combines the resilience and performance of Power Systems with the openness of KVM, which provides several advantages:

•Higher workload consolidation with processor overcommitment and memory sharing

•Dynamic addition and removal of virtual devices

•Microthreading scheduling granularity

•Integration with IBM PowerVC and OpenStack

•Simplified management using open source software

•Avoids vendor lock-in

•Uses POWER8 hardware features, such as SMT8 and microthreading

For more information about IBM PowerKVM capabilities, check section 1.3.2, “KVM” on page 10.

1.1.4 Simultaneous multithreading

Simultaneous multithreading (SMT) is the ability of a single physical core to simultaneously dispatch instructions from more than one hardware thread context. Because there are eight threads per physical core, additional instructions can be executed in parallel. This hardware feature improves overall efficiency of processor use.

In a POWER8 processor configured with SMT 8, up to 96 threads are available per socket, and each of them is represented as a processor in the Linux operating system.
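The thread counts quoted in this chapter follow from multiplying cores by the SMT mode. The following minimal Python sketch reproduces that arithmetic; the function name `logical_cpus` and the list of accepted SMT modes are assumptions made for illustration, not part of any PowerKVM tool.

```python
def logical_cpus(cores: int, smt_mode: int = 8) -> int:
    """Return the number of hardware threads that Linux sees as processors."""
    # Assumption of this sketch: the core runs in SMT 1, 2, 4, or 8 mode.
    if smt_mode not in (1, 2, 4, 8):
        raise ValueError("SMT mode must be 1, 2, 4, or 8")
    if cores < 1:
        raise ValueError("cores must be a positive integer")
    return cores * smt_mode


print(logical_cpus(12))  # one 12-core socket in SMT 8 mode: 96
print(logical_cpus(24))  # two-socket, 24-core S822L in SMT 8 mode: 192
```

Lowering the SMT mode reduces the number of processors that the operating system sees without changing the number of physical cores.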

1.1.5 Memory architecture

Power Systems based on the POWER8 processor use a nonuniform memory access (NUMA) memory architecture. NUMA is a memory architecture on symmetric multiprocessing (SMP) systems. With this architecture, memory in different nodes has different access times, depending on the processor that is using it. The memory access can be local or remote, depending on whether the memory to be accessed is attached to the same processor or to a different memory controller.

On the PowerKVM supported machines, there are up to three different memory nodes, and each of them has different performance when accessing other memory nodes. Figure 1-5 on page 6 shows a two-socket server with a processor accessing local memory and another accessing remote memory.

PowerKVM is aware of the NUMA topology on the virtual machines, and tuning memory access might help the system performance.

To see the machine NUMA topology, use this command:

# virsh nodeinfo

For more information about memory tuning, see 5.3.3, “Configuring NUMA” on page 83.

Figure 1-5 Local and remote memory access in a NUMA architecture

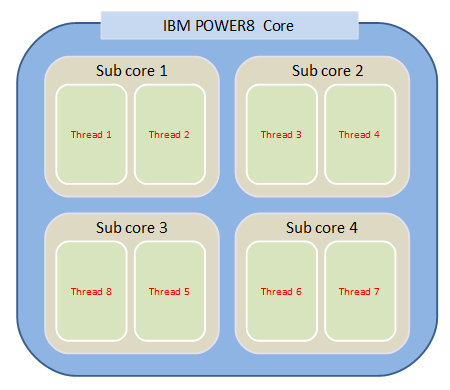

1.1.6 Microthreading

Microthreading is a POWER8 feature that enables a POWER8 core to be split into as many as four subcores. This gives PowerKVM the capacity to support more than one virtual machine per core. Microthreading is especially useful when a virtual machine does not have enough workload to use a whole core, because up to four guests can run in parallel on the core.

Figure 1-6 shows the threads and the subcores on a POWER8 core when microthreading is enabled and configured to support four subcores per core.

Figure 1-6 IBM POWER8 core with microthreading enabled
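To make the subcore split concrete, the following Python sketch divides the eight hardware threads of one core evenly among its subcores. It is only an illustration: the function name, the contiguous thread numbering, and the allowed subcore counts (1, 2, or 4) are assumptions of this sketch and do not come from the PowerKVM tooling.

```python
from typing import List

THREADS_PER_CORE = 8  # a POWER8 core has eight hardware threads


def split_into_subcores(subcores: int) -> List[List[int]]:
    """Group the thread IDs of one core into equally sized subcores."""
    # Assumption: a core is split into 1, 2, or 4 subcores.
    if subcores not in (1, 2, 4):
        raise ValueError("subcores must be 1, 2, or 4")
    per_subcore = THREADS_PER_CORE // subcores
    return [
        list(range(i * per_subcore, (i + 1) * per_subcore))
        for i in range(subcores)
    ]


# Four subcores leave two threads for each guest running on the core.
print(split_into_subcores(4))  # [[0, 1], [2, 3], [4, 5], [6, 7]]
```

With four subcores, each guest that is dispatched on the core has two hardware threads available to it.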

1.1.7 RAS features

Power Systems are known for reliability, due to the servers’ reliability, availability, and serviceability (RAS) features. These are some of the features that are available on IBM POWER8 servers:

•Redundant bits in the cache area

•Innovative ECC memory algorithm

•Redundant and hot-swap cooling

•Redundant and hot-swap power supplies

•Self-diagnosis and self-correction of errors during run time

•Automatic reconfiguration to mitigate potential problems from suspected hardware

•Self-heal or automatically substitute functioning components for failing components

For more information about the Power Systems RAS features, see IBM Power Systems S812L and S822L Technical Overview and Introduction, REDP-5098.

1.2 Virtualization

For practical purposes, this book focuses on server virtualization, especially ways to run an operating system inside a virtual machine and how this virtual machine acts. There are many advantages when the operating system runs in a virtual machine rather than in a real machine, as later sections of this book explain.

With virtualization, there are two main pieces of software, the hypervisor and the guest:

•Hypervisor

The hypervisor is the operating system and firmware that runs directly on the hardware and provides virtualization support for the guests. One traditional example of a hypervisor for Power Systems is PowerVM server virtualization software.

•Guest

Guest is the usual name for the virtual machine. As Figure 1-7 illustrates, a guest always runs inside a hypervisor. In the PowerVM and IBM System z® world, a guest is called a logical partition (LPAR).

Figure 1-7 Guests and hypervisor

Notes:

The terms virtual machine and guest are used interchangeably in this book.

Also see 1.3.2, “KVM” on page 10.

1.2.1 PowerKVM hardware

At the time of writing, only IBM PowerLinux Systems built on POWER8 processors support PowerKVM as the hypervisor. As described in 1.1.2, “IBM scale-out servers” on page 3, these models make up part of the class of servers that support PowerKVM:

•8247-21L: IBM Power System S812L

•8247-22L: IBM Power System S822L

1.2.2 PowerKVM versions

Table 1-1 shows the available versions of the PowerKVM stack.

Table 1-1 PowerKVM versions

| Product name | Program number | Sockets |
|---|---|---|
| IBM PowerKVM 2.1 | 5765-KVM | up to 2 |
| IBM PowerKVM 2.1, 1-year maintenance | 5771-KVM | up to 2 |
| IBM PowerKVM 2.1, 3-year maintenance | 5773-KVM | up to 2 |

1.2.3 PowerKVM 2.1 considerations

There are several things to consider related to the first PowerKVM release:

•PowerKVM does not support IBM AIX® or IBM i operating systems.

•PowerKVM cannot be managed by the Hardware Management Console (HMC).

•The SPICE graphic model is not supported.

•PowerKVM supports a subset of the I/O adapters (as PowerKVM is developed, adapter support continually changes).

•Only one operating system is allowed to run on the host system. PowerKVM does not provide multiboot support.

PowerKVM guest limits

Table 1-2 lists the guest limits, or the maximum amount of resources to assign to PowerKVM virtual machines.

Table 1-2 Maximum amount of resources per virtual machine

| Resources | Recommended maximum |
|---|---|
| Guest memory | 512 GB |
| Guest vCPUs | 160 |
| Virtual network devices | 8 |
| Number of virtual machines per core | 20 |
| Number of para-virtualized devices | 32 PCI device slots per virtual machine and 8 PCI functions per device slot |
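When planning guests, the limits in Table 1-2 can be checked before a virtual machine is defined. The following Python sketch shows one way to do that; the `GuestPlan` class, the function name, and the sample guests are hypothetical, and only the three per-guest limit values are taken from the table.

```python
from dataclasses import dataclass
from typing import List

# Per-guest recommended maximums from Table 1-2
MAX_GUEST_MEMORY_GB = 512
MAX_GUEST_VCPUS = 160
MAX_VIRTUAL_NICS = 8


@dataclass
class GuestPlan:
    """Planned resources for one guest (hypothetical helper type)."""
    name: str
    memory_gb: int
    vcpus: int
    virtual_nics: int


def check_guest_limits(plan: GuestPlan) -> List[str]:
    """Return one message per recommended maximum that the plan exceeds."""
    problems = []
    if plan.memory_gb > MAX_GUEST_MEMORY_GB:
        problems.append(f"{plan.name}: memory exceeds {MAX_GUEST_MEMORY_GB} GB")
    if plan.vcpus > MAX_GUEST_VCPUS:
        problems.append(f"{plan.name}: vCPUs exceed {MAX_GUEST_VCPUS}")
    if plan.virtual_nics > MAX_VIRTUAL_NICS:
        problems.append(f"{plan.name}: network devices exceed {MAX_VIRTUAL_NICS}")
    return problems


print(check_guest_limits(GuestPlan("web01", 64, 16, 2)))   # no problems
print(check_guest_limits(GuestPlan("big01", 1024, 200, 9)))  # three problems
```

An empty list means that the planned guest stays within the recommended maximums.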

1.2.4 Where to download PowerKVM

You can download IBM PowerKVM from the IBM Fix Central web page.

After you download the ISO, you can use the ISO to install it from a NetBoot server or burn a DVD and use it to install. Both methods are covered in Chapter 2, “Host installation and configuration” on page 19.

1.3 Software stack

This section covers all of the major software in a common IBM PowerKVM deployment.

1.3.1 QEMU

QEMU is open source software that hosts the virtual machines on a KVM hypervisor. It is the software that manages and monitors the virtual machines and performs the following basic operations:

•Create virtual image disks.

•Change the state of a virtual machine:

– Start a virtual machine.

– Stop a virtual machine.

– Suspend a virtual machine.

– Resume a virtual machine.

– Take and restore snapshots.

– Delete a virtual machine.

•Handle the I/O between guests and the hypervisor.

In a simplified view, you can consider QEMU the user space tool for handling virtualization and KVM the kernel space module.

QEMU can also work as an emulator, but that situation is not covered in this book.

QEMU monitor

QEMU provides a virtual machine monitor that helps control the virtual machine and performs most of the operations required by a virtual machine. The monitor can inspect low-level details of the virtual machine, such as the CPU registers and I/O device states, and it can perform actions such as ejecting a virtual CD.

You can use the command shown in Figure 1-8 to see the block devices that are attached to a QEMU image.

Figure 1-8 QEMU monitor screen example

To run a QEMU monitor by using the virsh command, use the following parameters:

# virsh qemu-monitor-command --hmp <domain> <monitor command>
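When the monitor is driven from a script, the same virsh invocation can be assembled as an argument list. The following Python sketch builds that list for the `info block` monitor command, which lists the block devices of a guest; the function name `hmp_command` and the domain name `guest01` are hypothetical.

```python
import shlex
from typing import List


def hmp_command(domain: str, monitor_command: str) -> List[str]:
    """Build the argument list for a virsh human monitor (HMP) command."""
    if not domain or not monitor_command:
        raise ValueError("domain and monitor command are both required")
    # The monitor command stays a single argument so that virsh receives it
    # whole, even when it contains spaces.
    return ["virsh", "qemu-monitor-command", "--hmp", domain, monitor_command]


argv = hmp_command("guest01", "info block")
# Print the command as it would be typed in a shell.
print(shlex.join(argv))
```

On a host where libvirt is installed, the resulting list can be passed to `subprocess.run(argv, check=True)`. Keeping the arguments in a list avoids shell quoting problems.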

1.3.2 KVM

Kernel-based virtual machine (KVM) is part of an open source virtualization infrastructure that turns the Linux kernel into an enterprise-class hypervisor.

QEMU is another part of this infrastructure, and the term KVM usually refers to the combined QEMU and KVM software stack. Throughout this publication, KVM refers to the whole infrastructure that turns the Linux operating system into a usable hypervisor.

The whole stack used to enable this infrastructure is described in 1.3, “Software stack” on page 9.

KVM requires hardware virtualization extensions, as described in section 5.1.2, “Hardware-assisted virtualization” on page 73.

KVM performance

Because KVM is a very thin layer over the firmware, it can deliver enterprise-grade performance to the virtual machines and can consolidate a large amount of work on a single server. One of the important advantages of virtualization is the possibility of using resource overcommitment.

Resource overcommitment

Overcommitment is a mechanism to expose more CPU, I/O, and memory to the guest machine than exists on the real server, thereby increasing the resource use and improving the server consolidation.
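As a rough illustration of CPU overcommitment, the following Python sketch compares the virtual CPUs assigned to all guests with the hardware threads of the host. The function name and the guest sizes are made up for this example; a ratio above 1.0 means that more virtual CPUs are exposed than hardware threads exist.

```python
from typing import Iterable


def cpu_overcommit_ratio(guest_vcpus: Iterable[int], host_threads: int) -> float:
    """Return assigned vCPUs divided by the hardware threads of the host."""
    if host_threads < 1:
        raise ValueError("host_threads must be a positive integer")
    return sum(guest_vcpus) / host_threads


# Hypothetical host: one 12-core socket in SMT 8 mode (96 threads)
# running four guests with 32, 32, 64, and 64 vCPUs.
ratio = cpu_overcommit_ratio([32, 32, 64, 64], host_threads=96)
print(ratio)  # 2.0
```

The same comparison applies to memory when the sum of the guest memory sizes exceeds the physical memory of the host.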

SPEC performance

KVM is designed to deliver high virtualization performance, and there are virtualization-specific benchmarks to measure it. Possibly the most important one at the moment is SPECvirt, which is published by the Standard Performance Evaluation Corporation (SPEC).

SPECvirt is a benchmark that addresses performance evaluation of data center servers that are used in virtualized server consolidation. It measures performance of all of the important components in a virtualized environment, from the hypervisor to the application running in the guest operating system.

For more information about the benchmark and the KVM results, check the SPEC web page.

1.3.3 Open Power Abstraction Layer

IBM Open Power™ Abstraction Layer (OPAL) is a small layer of firmware that is available on the POWER8 machines. It provides support for the PowerKVM software stack.

OPAL is part of the firmware that interacts with the hardware and exposes it to the PowerKVM hypervisor.

1.3.4 Guest operating system

The following operating systems are supported as guests in the PowerKVM environment:

•Red Hat Enterprise Linux Version 6.5 or later

•SUSE Linux Enterprise Server (SLES) 11 SP3 or later

•Ubuntu 14.04 or later

1.3.5 Libvirt software

Libvirt software is the open source infrastructure that provides the low-level virtualization capabilities in most available hypervisors, including KVM, Xen, VMware, and IBM PowerVM. The purpose of libvirt is to provide a friendlier environment for end users.

Libvirt provides different ways of access, from a command line called virsh to a low-level API for many programming languages.

The main component of the libvirt software is the libvirtd daemon. This is the component that interacts directly with QEMU or KVM software.

This book covers only the command-line interface. See Chapter 4, “Managing guests from a CLI” on page 59. There are many command-line tools to handle the virtual machines, such as virsh, guestfish, virt-df, virt-clone, and virt-image.

For more information about the libvirt commands, see the online documentation.

1.3.6 Virsh interface

Virsh is the command-line interface used to handle the virtual machines. It works by connecting to the libvirt API, which connects to the hypervisor software (QEMU in the PowerKVM scenario), as Figure 1-9 shows. Virsh is the main command for managing the hypervisor from the command line.

Figure 1-9 Virsh and libvirt architecture

For more information about virsh, see Chapter 4, “Managing guests from a CLI” on page 59.

Virtual machine definition

A virtual machine can be created directly by using virsh commands. Every virtual machine managed by virsh is represented by an XML file. This means that all of the virtual machine settings, such as the number of CPUs, the amount of memory, the disks, and the I/O devices, are defined in an XML file.
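To give an idea of what such an XML file contains, the following Python sketch generates a small fragment with the name, memory, and virtual CPU count of a guest. It is not a complete definition, because a real guest also needs operating system and device sections, and the function name and the guest values are hypothetical.

```python
import xml.etree.ElementTree as ET


def minimal_domain_xml(name: str, memory_kib: int, vcpus: int) -> str:
    """Return a partial libvirt domain definition as an XML string."""
    domain = ET.Element("domain", {"type": "kvm"})
    ET.SubElement(domain, "name").text = name
    # The unit attribute states how libvirt should interpret the number.
    ET.SubElement(domain, "memory", {"unit": "KiB"}).text = str(memory_kib)
    ET.SubElement(domain, "vcpu").text = str(vcpus)
    return ET.tostring(domain, encoding="unicode")


# Hypothetical guest with 4 GiB of memory and two virtual CPUs
print(minimal_domain_xml("guest01", 4 * 1024 * 1024, 2))
```

The full XML of an existing guest can be displayed with the `virsh dumpxml` command.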

For more information, see 6.3, “Storage pools” on page 95.

1.3.7 Intelligent Platform Management Interface

Intelligent Platform Management Interface (IPMI) is an out-of-band communication interface and a software implementation that controls the hardware operation. It is a layer below the operating system, so the system is still manageable even if the machine does not have an operating system installed. It is also used to get the console to install an operating system in the machine.

On an IBM Power System, the IPMI server is hosted in the service processor controller. This means that the commands directed to the server should use the service processor IP address, not the hypervisor IP address.

These are some of the IPMI tools that work with PowerKVM:

•OpenIPMI

•FreeIPMI

•IPMItool

Table 1-3 shows the most-used IPMI commands:

Table 1-3 Frequently used IPMI commands

| Command | Description |
|---|---|
| sol | Serial over LAN console |
| power | Control the machine power state |
| sensor | Show machine sensors, such as memory and CPU faults |
| fru | Information about machine field-replaceable unit (FRU) parts |
| user | Configure user accounts on the IPMI server |
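The commands in Table 1-3 are subcommands of the IPMI client. As a sketch of how they might be invoked with IPMItool, the following Python helper assembles the argument list for a remote session to the service processor. The helper name, the address 192.0.2.10, and the user name admin are placeholders. The password is deliberately left out of the argument list, on the assumption that ipmitool prompts for it.

```python
from typing import List


def ipmitool_command(service_processor: str, user: str,
                     subcommand: List[str],
                     interface: str = "lanplus") -> List[str]:
    """Build an ipmitool argument list for a remote IPMI session."""
    if not subcommand:
        raise ValueError("an IPMI subcommand is required")
    # -H takes the service processor address, not the hypervisor address.
    return ["ipmitool", "-I", interface, "-H", service_processor,
            "-U", user, *subcommand]


# Placeholder address and user name, for illustration only
print(ipmitool_command("192.0.2.10", "admin", ["power", "status"]))
print(ipmitool_command("192.0.2.10", "admin", ["sol", "activate"]))
print(ipmitool_command("192.0.2.10", "admin", ["sensor", "list"]))
```

Passing the list to `subprocess.run` on a machine with IPMItool installed runs the command. The `sol activate` subcommand opens the Serial over LAN console that is used during installation.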

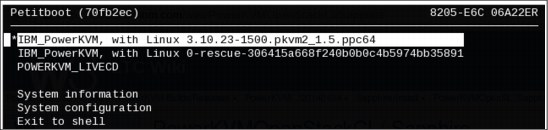

1.3.8 Petitboot

Petitboot is an open source, platform-independent boot loader based on Linux. It is part of the PowerKVM hypervisor stack, where it boots the hypervisor operating system.

Petitboot includes graphical and command-line interfaces, and can be used as a client or server boot loader. For this document, only the basic use will be covered.

Figure 1-10 Petitboot screen

For more information about this software, check the Petitboot web page.

1.3.9 Kimchi

Kimchi is a local management tool meant to manage a few servers virtualized with PowerKVM. Kimchi has been integrated into PowerKVM and allows initial host configuration, as well as managing virtual machines from a web browser through an HTML5 interface.

The main goal of Kimchi is to provide a friendly user interface for PowerKVM, allowing users to operate the server by using a browser most of the time. These are some of the other Kimchi features:

•Firmware update

•Backup of the configuration

•Host monitoring

•Virtual machine templates

•VM guest console

•VM guest VNC

•Boot and Install from a data stream

To connect to the Kimchi page, point the browser to the hypervisor on HTTPS port 8001, as shown in Figure 1-11.

Figure 1-11 Kimchi home panel

For more information about the Kimchi project, see Chapter 3, “Managing guests from a web interface” on page 41 or the project web page.

1.3.10 Slimline Open Firmware

Slimline Open Firmware (SLOF) is an open source firmware used on PowerKVM to boot the guest OS.

SLOF is a machine-independent firmware based on the IEEE-1275 standard, also known as the Open Firmware Standard. It runs only during boot time. After that, it is no longer needed in the system, so it is removed from memory. You can see the SLOF architecture in Figure 1-12.

Figure 1-12 SLOF during VM boot

For more information, see the SLOF web page.

1.3.11 Virtio drivers

Virtio is a virtualization standard that enables high-performance communication between guests and hypervisor. This is based on the guest being virtual machine-aware and, as a result, cooperating with the hypervisor.

Figure 1-13 Virtio architecture for network stack

There are many Virtio drivers that are supported by QEMU. The main ones are described in Table 1-4.

Table 1-4 Virtio drivers

| Device driver | Description |
|---|---|
| virtio-blk | Virtual device for block devices |
| virtio-net | Virtual device for network driver |
| virtio-pci | Low-level virtual driver to allow PCI communication |
| virtio-scsi | Virtual storage interface that supports advanced SCSI hardware |
| virtio-balloon | Virtual driver that allows dynamic memory allocation |
| virtio-console | Virtual device that allows console handling |
| virtio-serial | Virtual device driver for multiple serial ports |
| virtio-rng | Virtual device driver that exposes the hardware random number generator to the guest |

From the guest point of view, the drivers need to be loaded in the guest operating system.

Note: ibmveth and ibmvscsi are also paravirtualized drivers.

1.3.12 RAS stack

The RAS tools are a set of applications for managing Power Systems, and they are part of the PowerKVM stack. These tools work on low-level configurations. Their primary goal is to change the POWER processor configuration, such as enabling and disabling SMT, enabling microthreading, discovering how many cores are configured in the machine, and determining the CPU operating frequency. The following tools are a subset of the packages in powerpc-utils:

•ppc64_utils

•drmgr

•lsslot

•update_flash

•bootlist

•opal_errd

•lsvpd

•lsmcode

1.4 Comparison of PowerVM and PowerKVM features

Table 1-5 compares IBM PowerVM and IBM PowerKVM features.

Table 1-5 Comparison of IBM PowerKVM and IBM PowerVM features

| Feature | IBM PowerVM | IBM PowerKVM |
|---|---|---|
| Micropartitioning | Yes | Yes |
| Dynamic logical partition | Yes | Partial |
| SR-IOV support | Yes | No |
| Shared storage pools | Yes | Yes |
| Live partition mobility | Yes | Yes |
| Memory compression | Yes (IBM Active Memory™ Expansion) | No (zswap can be installed manually) |
| Memory page sharing | Yes (described as Active Memory Deduplication) | Yes (described as Kernel SamePage Merging, or KSM) |
| NPIV | Yes | No |
| License | Proprietary | Open source |
| PCI passthrough | Yes | Yes |
| Supported machines | All IBM Power Systems | IBM scale-out systems only |
| Supported operating systems in the guest | IBM AIX, IBM i, Linux | Linux |
| Different editions | Yes (Express, Standard, and Enterprise) | No |
| Sparse disk storage | Yes (thin provisioning) | Yes (qcow2 image) |
| Adding devices to the guest | DLPAR | Hot plug |

1.5 Terminology

Table 1-6 lists the terms used for PowerKVM and the counterpart terms for KVM on x86 and for PowerVM. Also see “Abbreviations and acronyms” on page 119.

Table 1-6 Terminology comparing KVM and PowerVM

| IBM PowerKVM | KVM on x86 | IBM PowerVM |
|---|---|---|
| flexible service processor (FSP) | integrated management module (IMM) | flexible service processor (FSP) |
| guest, virtual machine | guest, virtual machine | LPAR |
| hot plug | hot plug | dynamic LPAR |
| hypervisor, host | hypervisor, host | hypervisor |
| Image formats: qcow2, raw, nbd, and other image formats | qcow2, raw, nbd, and other image formats | proprietary |
| IPMI | IPMI | HMC |
| kernel samepage merging (KSM) | kernel samepage merging | Active Memory Deduplication |
| Kimchi and virsh | Kimchi and virsh | HMC and IVM (Integrated Virtualization Manager) |
| KVM host user space (QEMU) | KVM host user space (QEMU) | VIOS (Virtual I/O Server) |
| Open Power Abstraction Layer (OPAL) | UEFI (Unified Extensible Firmware Interface) and BIOS | PowerVM hypervisor driver (pHyp) |
| Preboot Execution Environment (PXE) | Preboot eXecution Environment | BOOTP and TFTP, NIM |
| SLOF (Slimline Open Firmware) | SeaBIOS | Open Firmware, SMS |
| Virtio drivers, ibmvscsi, and ibmveth | Virtio drivers | ibmvscsi, ibmveth |
| VNC (Virtual Network Computing) | VNC | None |
| zswap | zswap | Active Memory Expansion (AME) |
