Interfaces and interactions

6.1 Introduction

6.2 Paradigms

6.3 Interface types

6.4 Which interface?

The main aims of this chapter are:

- To introduce the notion of a paradigm and set the scene for how the various interfaces have developed in interaction design.

- To overview the many different kinds of interfaces.

- To highlight the main design and research issues for each of the different interfaces.

- To consider which interface is best for a given application or activity.

6.1 Introduction

Until the mid-1990s, interaction designers concerned themselves largely with developing efficient and effective user interfaces for desktop computers aimed at the single user. This involved working out how best to present information on a screen such that users would be able to perform their tasks, including determining how to structure menus to make options easy to navigate, designing icons and other graphical elements to be easily recognized and distinguished from one another, and developing logical dialog boxes that are easy to fill in. Advances in graphical interfaces, speech and handwriting recognition, together with the arrival of the Internet, cell phones, wireless networks, sensor technologies, and an assortment of other new technologies providing large and small displays, have changed the face of human–computer interaction. During the last decade designers have had many more opportunities for designing user experiences. The slew of technological developments has encouraged different ways of thinking about interaction design and an expansion of research in the field. For example, innovative ways of controlling and interacting with digital information have been developed that include gesture-based, tactile-based, and emotion-based interaction. Researchers and developers have also begun combining the physical and digital worlds, resulting in novel interfaces, including ‘mixed realities,’ ‘augmented realities,’ ‘tangible interfaces,’ and ‘wearable computing.’ A major thrust has been to design new interfaces that extend beyond the individual user: supporting small- and large-scale social interactions for people on the move, at home, and at work.

While making for exciting times, having so many degrees of freedom available within which to design can be daunting. The goal of this chapter is to consider how to design interfaces for different environments, people, places, and activities. To begin with, we give an overview of paradigmatic developments in interaction design. We then present an overview of the major interface developments, ranging from WIMPs (windows, icons, menus, pointer) to wearables. For each one, we outline the important challenges and issues confronting designers, together with illustrative research findings and products.

It is not possible to describe all the different types of interface in one book, let alone one chapter, and so we have necessarily been selective in what we have included. There are many excellent practitioner-oriented handbooks available that cover in more detail design concerns for a particular kind of interface or technology/application (see end of the chapter for examples). These include web, multimedia, and more recently handheld/mobile technology design. Here, we provide an overview of some of the key research and design concerns for a selection of interfaces, some of which are only briefly touched upon while others, that are more established in interaction design, are described in depth. Nevertheless, the chapter is much longer than the others in the book and can be read in sections or simply dipped into to find out about a particular type of interface.

6.2 Paradigms

Within interaction design, a paradigm refers to a particular approach that has been adopted by the community of researchers and designers for carrying out their work, in terms of shared assumptions, concepts, values, and practices. This follows from the way the term has been used in science to refer to a set of practices that a community has agreed upon, including:

- The questions to be asked and how they should be framed.

- The phenomena to be observed.

- The way findings from experiments are to be analyzed and interpreted (Kuhn, 1962).

In the 1980s, the prevailing paradigm in human–computer interaction was how to design user-centered applications for the desktop computer. Questions about what and how to design were framed in terms of specifying the requirements for a single ‘user’ interacting with a screen-based ‘interface.’ Task analytic and usability methods were developed based on an individual user's cognitive capabilities. The acronym WIMP was used as one way of characterizing the core features of an interface for a single user: this stood for Windows, Icons, Menus, and Pointer. This was later superseded by the GUI (graphical user interface), a term that has stuck with us ever since.

Within interaction design, many changes took place in the mid- to late 1990s. The WIMP interface with its single thread, discrete event dialog was considered to be unnecessarily limiting, e.g. Jacob, 1996. Instead, many argued that new frameworks, tools, and applications were needed to enable more flexible forms of interaction to take place, having a higher degree of interactivity and parallel input/output exchanges. At the same time, other kinds of non-WIMP interfaces were experimented with. The shift in thinking, together with technological advances, led to a new generation of user–computer environments, including virtual reality, multimedia, agent interfaces, pen-based interfaces, eye-movement-based interfaces, tangible interfaces, collaborative interfaces, and ubiquitous computing. The effect of moving interaction design ‘beyond the desktop’ resulted in many new challenges, questions, and phenomena being considered. New methods of designing, modeling, and analyzing came to the fore. At the same time, new theories, concepts, and ideas entered the stage. A turn to the ‘social,’ the ‘emotional,’ and the ‘environmental’ began shaping what was studied, how it was studied, and ultimately what was designed. Significantly, one of the main frames of reference—the single user—was replaced by a set of others, including people, places, and context.

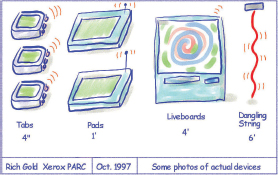

One of the most influential developments that took place was the birth of ubiquitous computing (Weiser, 1991). A main idea was that the advent of ubiquitous computing (or ‘UbiComp’ as it is commonly known) would radically change the way people think about and interact with computers. In particular, computers would be designed to be part of the environment, embedded in a variety of everyday objects, devices, and displays (see Figure 6.1). The idea behind Weiser's vision was that a ubiquitous computing device would enter a person's center of attention when needed and move to the periphery of their attention when not, enabling the person to switch calmly and effortlessly between activities without having to figure out how to use a computer in performing their tasks. In essence, the technology would be unobtrusive and largely disappear into the background. People would be able to get on with their everyday and working lives, interacting with information and communicating and collaborating with others without being distracted or becoming frustrated with technology.

Figure 6.1 Examples of sketches for the new ubiquitous computing paradigm

The grand vision of ubiquitous computing has led to many new challenges, themes, and questions being articulated in interaction design and computer science. These include:

- How to enable people to access and interact with information in their work, social, and everyday lives, using an assortment of technologies.

- How to design user experiences for people using interfaces that are part of the environment but where there are no obvious controlling devices.

- How and in what form to provide contextually-relevant information to people at appropriate times and places to support them while on the move.

- How to ensure that information that is passed around via interconnected displays, devices, and objects, is secure and trustworthy.

The shift in thinking about ubiquitous computing has resulted in many new research and design activities and an extended vocabulary. Other terms, including pervasive computing, the disappearing computer, and ambient intelligence, have evolved that have different emphases and foci (we view them here as overlapping). In the next section, we describe the many new (and old) forms of interfaces that have been developed.

6.3 Interface Types

There are many kinds of interface that can be used to design for user experiences. A plethora of adjectives have been used to describe these, including graphical, command, speech, multimodal, invisible, ambient, mobile, intelligent, adaptive, and tangible. Some of the interface types are primarily concerned with a function (e.g. to be intelligent, to be adaptive, to be ambient), while others focus on the interaction style used (e.g. command, graphical, multimedia), the input/output device used (e.g. pen-based, speech-based), or the platform being designed for (e.g. PC, microwave). Here, we describe a selection of established and novel interfaces, outlining their benefits, and main design and research issues. We also include descriptions of illustrative products or prototypes that have been developed for each. The interface types are broken down into three decades loosely ordered in terms of when they were developed (see Table 6.1). It should be noted that this classification is not strictly accurate since some interface types emerged across the decades. The purpose of breaking them down into three sections is to make it easier to read about the many types.

1980s Interfaces

|

1990s interfaces

|

2000s interfaces

|

Table 6.1 The selection of interfaces, grouped into three decades, covered in this chapter that have evolved during the last 30 years

6.3.1 1980s interfaces

In this section we cover command and WIMP/GUI interfaces.

Command Interfaces

Command line driven interfaces require the user to type in commands that are typically abbreviations, e.g. ls, at the prompt symbol appearing on the computer display to which the system responds, e.g. by listing current files using a keyboard. Another way of issuing commands is through pressing certain combinations of keys, e.g. Shift+ Alt+ Ctrl. Some commands are also a fixed part of the keyboard, for example, ‘delete,’ ‘enter,’ and ‘undo,’ while other function keys can be programmed by the user as specific commands, e.g. F11 standing for ‘print’.

Activity 6.1

If you have not previously had any experience of a command-based interface, ask someone to set up a session for you on a UNIX terminal. Try using the UNIX commands SORT and ctrl D (to stop).

First type at the prompt sort

Then type in some names of animals, e.g.

Tiger

Elephant

Antelope

Snake

Then use the end of input command

^D (Ctrl D)

What happens on the screen? Do the same for a list of random numbers and see what happens.

Comment

The command SORT alphabetically or numerically sorts the typed input from the keyboard and will display your typed-in list of animals in alphabetical order and your list of numbers in numerical order. The same is true for a list of numbers. It is a very quick and efficient way of sorting and does not require selecting and specifying what to sort in a dialog box (as is required in GUI interfaces).

Advantages of command line based interfaces are their efficiency, precision, and speed. Users can issue a large number of commands that require one-step actions. Histories of interactions can be readily viewed at the prompt, allowing the user to see a trace of their interactions. However, efficiency and speed come at a cost. As you yourself may have experienced, there is a huge overhead in having to learn and remember all the key combinations or abbreviations or names used in a command line based system. While some people are able to remember the meaning of a large number of command names/keys and able to recall them when needed, many users find it difficult. This is especially so for infrequently used commands, where the user has to look them up in a manual or table. This can be time-consuming and break the flow of the interaction. Furthermore, selecting the wrong function key or combination of keys can occur when commands have been designed arbitrarily, e.g. F10 for save, or follow inconsistent rules, e.g. using Ctrl plus first letter of an operation for some commands and not others, such as Ctrl + F keys for Find, and Ctrl +V for Paste.

Box 6.1: Expert Use of Commands in AutoCAD

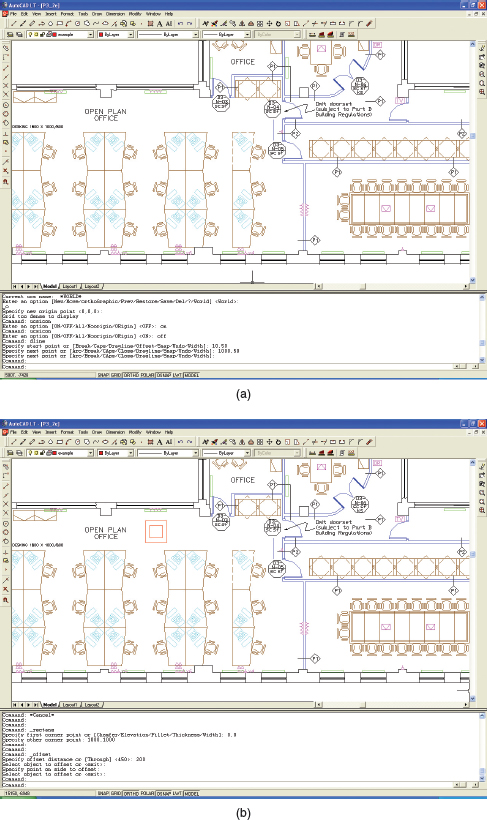

AutoCAD LT is a Windows version of a popular drafting package used by a variety of professionals in the building and engineering trade. It is designed to be used by expert draughtsmen/women and offers a sophisticated interface that combines a number of styles. It is a good example of using the different interaction styles for the most appropriate actions. Figure 6.2 illustrates the application's interface. The user has the option of issuing commands using the menus at the top of the screen, the toolbar just below the menus, or by entering the commands directly in the window offered at the bottom (note that this window can be resized, and most expert users keep this portion of the screen to only 3 or 4 lines at most). In addition, entry of point locations on the drawing can be done using a pointing device or by entering coordinates at the command line. The keyboard function keys offer shortcuts for other commands, and there is a set of buttons at the bottom of the screen offering further commands.

The command line portion of the screen has several purposes. First, commands issued using the toolbar, menus, function keys, or pointing device are echoed in the command line window. Second, the user is prompted regarding the appropriate options or other entries needed to complete a command. Third, the command line can be used to enter commands, point locations, or dimensions directly from the keyboard. Fourth, error messages are displayed here.

The two screens shown in Figure 6.2 illustrate some of the steps used to draw the two red squares in the top left of the drawing in Figure 6.2(b) (this was part of the action necessary to add some filing cabinets to the office layout shown). The first screen illustrates some of the commands issued in order to set up a coordinate system in the correct location on the drawing. This is reflected in the first 9 lines of the command line portion of the screen. Note the different responses recorded in the dialog window. The option ‘o’ was chosen using another menu command, the default for the new origin point was accepted by hitting ‘return’ at the keyboard. The user then attempted to turn the grid on by pressing the GRID button at the bottom of the screen, but this resulted in the error message “Grid too dense to display.” The command ‘ucsicon’ was typed via the keyboard. This command displays the coordinate origin on the drawing, and the user first turned this ‘on’ to check that it was in the right place, and then turned it ‘off.’ Both ‘on’ and ‘off’ were typed at the keyboard.

The second screen shows some of the dialog for drawing the two squares. The rectangle and offset commands this time were issued using the toolbar, and the coordinates were entered using the keyboard.

Figure 6.2 (a) The first step in drawing the new cabinets is to set up the coordinate system in the correct location. (b) Screen dump showing some of the commands issued in order to draw the red squares at the top of the drawing

In the early 1980s, much research investigated ways of optimizing command-based interfaces. The form of the commands (e.g. use of abbreviations, full names, familiar names), syntax (e.g. how best to combine different commands), and organization (e.g. how to structure options) are examples of some of the main areas that have been investigated (Shneiderman, 1998). A further concern was which names to use as commands that would be the easiest to remember. A number of variables were tested, including how familiar users were with the chosen names. Findings from a number of studies, however, were inconclusive; some found specific names were better remembered than general ones (Barnard et al., 1982), others showed names selected by users themselves were preferable (e.g. Ledgard et al., 1981; Scapin, 1981), while yet others demonstrated that high-frequency words were better remembered than low-frequency ones (Gunther et al., 1986).

The most relevant design principle is consistency (see Chapter 1). The method used for labeling/naming the commands should be chosen to be as consistent as possible, e.g. always use first letters of operation when using abbreviations.

Command-line interfaces have been largely superseded by graphical interfaces that incorporate commands as menus, icons, keyboard shortcuts, and pop-up/predictable text commands as part of an application. Where command line interfaces continue to have an advantage is when users find them easier and faster to use than equivalent menu-based systems (Raskin, 2000) and for performing certain operations as part of a complex software package, as we saw in Box 6.2. They also provide scripting for batch operations and are being increasingly used on the Web, where the search bar acts as a general purpose command line facility, e.g. www.yubnub.org. Many programmers prefer managing their files at the DOS/UNIX shell level of an operating system, while using command line text editors, like vi, when coding and debugging.

WIMP/GUI Interfaces

The Xerox Star interface (described in Chapter 2) led to the birth of the WIMP and subsequently the GUI, opening up new possibilities for users to interact with a system and for information to be presented and represented at the interface. Specifically, new ways of visually designing the interface became possible, that included the use of color, typography, and imagery (Mullet and Sano, 1995). The original WIMP comprises:

- Windows (that could be scrolled, stretched, overlapped, opened, closed, and moved around the screen using the mouse).

- Icons (that represented applications, objects, commands, and tools that were opened or activated when clicked on).

- Menus (offering lists of options that could be scrolled through and selected in the way a menu is used in a restaurant).

- Pointing device (a mouse controlling the cursor as a point of entry to the windows, menus, and icons on the screen).

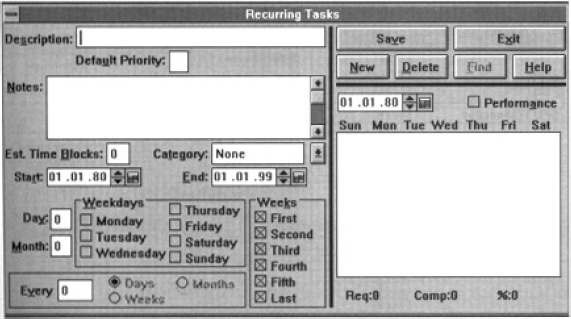

The first generation of WIMP interfaces was primarily boxy in design; user interaction took place through a combination of windows, scroll bars, checkboxes, panels, palettes, and dialog boxes that appeared on the screen in various forms (see Figure 6.3). Application programmers were largely constrained by the set of widgets available to them, of which the dialog box was most prominent. (A widget is a standardized display representation of a control, like a button or scroll bar, that can be manipulated by the user.)

Figure 6.3 The boxy look of the first generation of GUIs. The window presents several checkboxes, notes boxes, and options as square buttons

The basic building blocks of the WIMP are still part of the modern GUI, but have evolved into a number of different forms and types. For example, there are now many different types of icons and menus, including audio icons and audio menus, 3D animated icons, and 2D icon-based menus. Windows have also greatly expanded in terms of how they are used and what they are used for; for example, a variety of dialog boxes, interactive forms, and feedback/error message boxes have become pervasive. In addition, a number of graphical elements that were not part of the WIMP interface have been incorporated into the GUI. These include toolbars and docks (a row or column of available applications and icons of other objects such as open files) and rollovers (where text labels appear next to an icon or part of the screen as the mouse is rolled over it). Here, we give an overview of the design issues concerning the basic building blocks of the WIMP/GUI: windows, menus, and icons.

Window design. Windows were invented to overcome the physical constraints of a computer display, enabling more information to be viewed and tasks to be performed at the same screen. Multiple windows can be opened at any one time, e.g. web pages and word documents, enabling the user to switch between them, when needing to look or work on different documents, files, and applications. Scrolling bars within windows also enable more information to be viewed than is possible on one screen. Scrollbars can be placed vertically and horizontally in windows to enable upwards, downwards, and sideways movements through a document.

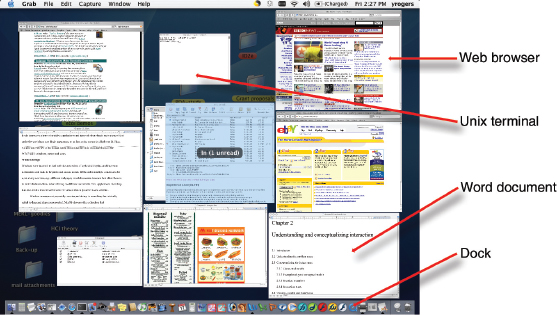

One of the disadvantages of having multiple windows open is that it can be difficult to find specific ones. Various techniques have been developed to help users locate a particular window, a common one being to provide a list as part of an application menu. MacOS also provides a function that shrinks all windows that are open so they can be seen side by side on one screen (see Figure 6.4). The user needs only to press one function key and then move the cursor over each one to see what they are called. This technique enables the user to see at a glance what they have in their workspace and also enables them to easily select one to come to the forefront. Another option is to display all the windows open for a particular application, e.g. Word.

Figure 6.4 A window management technique provided in MacOS: pressing the F9 key causes all open windows to shrink and be placed side by side. This enables the user to see them all at a glance and be able to rapidly switch between them

A particular kind of window that is commonly used in GUIs is the dialog box. Confirmations, error messages, checklists, and forms are presented through them. Information in the dialog boxes is often designed to guide user interaction, with the user following the sequence of options provided. Examples include a sequenced series of forms (i.e. Wizards) presenting the necessary and optional choices that need to be filled in when choosing a PowerPoint presentation or an Excel spreadsheet. The downside of this style of interaction is that there can be a tendency to cram too much information or data entry fields into one box, making the interface confusing, crowded, and difficult to read (Mullet and Sano, 1995).

Box 6.2: The joys of filling in forms on the web

For many of us, shopping on the Internet is generally an enjoyable experience. For example, choosing a book on Amazon or flowers from Interflora can be done at our leisure and convenience. The part we don't enjoy, however, is filling in the online form to give the company the necessary details to pay for the selected items. This can often be a frustrating and time-consuming experience. It starts with having to create an account and a new password. Once past this hurdle, a new interactive form pops up for the delivery address and credit card details. The standard online form has a fixed format making it cumbersome and annoying to fill in, especially for people whose address does not fit within its constraints. Typically, boxes are provided (asterisked for where they must be filled in) for: address line 1 and address line 2, providing no extra lines for addresses that have more than two lines; a line for the town/city; and a line for the zip code (if the site is based in the USA) or other postal code (if based in another country). The format for the codes is different, making it difficult for non-US residents (and US residents for other country sites) to fill in this part. Further boxes are provided for home, work, and cell phone number, fax number, and email address (is it really necessary to provide all of these?) and credit card type, name of the owner, and credit card number.

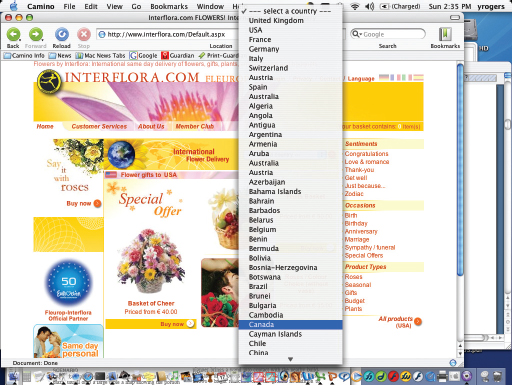

One of the biggest gripes about online registration forms is the country of residence box that opens up as a never-ending menu, listing all of the countries in the world in alphabetical order. Instead of typing in the country they live in, users are forced to select the one they are from, which is fine if they happen to live in Australia or Austria but not if they live in Venezuela or Zambia. Some menus place the host site country first, but this can be easily overlooked if the user is primed to look for the letter of their country (see Figure 6.5).

This is an example of where the design principle of recognition over recall (see Chapter 3) does not apply and where the converse is true. A better design might be to have a predictive text option, where users need only to type in the first two or so letters of the country they are from to cause a narrowed down list of choices to appear that they can then select from at the interface.

Activity 6.2

Go to the Interflora site (interflora.co.uk) and click on the international delivery option at the top of the homepage. How are the countries ordered? Is it an improvement to the scrolling pop-up menu?

At the time of writing this chapter, eight countries were listed at the top starting with the United Kingdom, then the USA, France, Germany, Italy, Switzerland, Austria, and Spain (see Figure 6.5). This was followed by the remaining set of countries listed in alphabetical order. The reason for having this particular ordering could be that the top eight are the countries who have most customers, with the UK residents using the service the most. More recently, the website had changed to using a table format grouping all the countries in alphabetical order using four columns across the page (see Table 6.2). Do you think this is an improvement? It took me about 8 seconds to select Sri Lanka, having overshot the target the first time I scrolled through, and 6 seconds to scroll through the more recent table (see below) using the web browser scrollbar.

Table 6.2 An excerpt of the listing of countries in alphabetical order using a table format

Research and design issues

A key research concern is window management—finding ways of enabling users to move fluidly between different windows (and monitors) and to be able to rapidly switch their attention between them to find the information they need or to work on the document/task within each of them—without getting distracted. Studies of how people use windows and multiple monitors have shown that window activation time (i.e. the time a window is open and interacted with) is relatively short, an average of 20 seconds, suggesting that people switch frequently between different documents and applications (Hutchings et al., 2004). Widgets like the taskbar in the Windows environment are used as the main method of switching between windows.

Microsoft and Apple are also continuously researching new ways of making switching between applications and documents simpler and coming up with new metaphors and organizing principles. An example is the ‘galleries’ concept (part of Microsoft Office 12), which provides users with a set of options to choose from (instead of a dialog box) when working on a document, spreadsheet, presentation, etc.

To increase the legibility and ease of use of information presented in windows, the design principles of spacing, grouping, and simplicity should be used (discussed in Chapter 3). An early overview of window interfaces—that is still highly relevant today—is Brad Myers's taxonomy of window manager interfaces (Myers, 1988).

Menu design. Just like restaurant menus, interface menus offer users a structured way of choosing from the available set of options. Headings are used as part of the menu to make it easier for the user to scan through them and find what they want. Figure 6.6 presents two different styles of restaurant menu, designed to appeal to different cultures: the American one is organized into a number of categories including starters (“new beginnings”), soups and salads (“greener pastures”) and sandwiches, while the Japanese burger menu is presented in three sequential categories: first the main meal, next the side order, and lastly the accompanying drink. The American menu uses enticing text to describe in more detail what each option entails, while the Japanese one uses a combination of appetizing photos and text.

Figure 6.6 Two different ways of classifying menus designed for different cultures

Interface menu designs have employed similar methods of categorizing and illustrating options available that have been adapted to the medium of the GUI. A difference is that interface menus are typically ordered across the top row or down the side of a screen using category headers as part of a menu bar. The contents of the menus are also for the large part invisible, only dropping down when the header is selected or rolled over with a mouse. The various options under each menu are typically ordered from top to bottom in terms of most frequently used options and grouped in terms of their similarity with one another, e.g. all formatting commands are placed together.

There is a number of menu interface styles, including flat lists, drop-down, pop-up, contextual, and expanding ones, e.g. scrolling and cascading. Flat menus are good at displaying a small number of options at the same time and where the size of the display is small, e.g. PDAs, cell phones, cameras, iPod. However, they often have to nest the lists of options within each other, requiring several steps to be taken by a user to get to the list with the desired option. Once deep down in a nested menu the user then has to take the same number of steps to get back to the top of the menu. Moving through previous screens can be tedious.

Expanding menus enable more options to be shown on a single screen than is possible with a single flat menu list. This makes navigation more flexible, allowing for the selection of options to be done in the same window. However, as highlighted in Figure 6.5, it can be frustrating having to scroll through tens or even hundreds of options. To improve navigation through scrolling menus, a number of novel controls have been devised. For example, the iPod provides a physical scrollpad that allows for clockwise and anti-clockwise movement, enabling long lists of tunes or artists to be rapidly scrolled through.

The most common type of expanding menu used as part of the PC interface is the cascading one (see Figure 6.7), which provides secondary and even tertiary menus to appear alongside the primary active drop-down menu, enabling further related options to be selected, e.g. selecting ‘track changes’ from the ‘tools’ menu leads to a secondary menu of three options by which to track changes in a Word document. The downside of using expanding menus, however, is that they require precise mouse control. Users can often end up making errors, namely overshooting or selecting the wrong options. In particular, cascading menus require users to move their mouse over the menu item, while holding the mouse pad or button down, and then when the cascading menu appears on the screen to move their cursor over to the next menu list and select the desired option. Most of us (even expert GUI users) have experienced the frustration of under- or over-shooting a menu option that leads to the desired cascading menu and worse, losing it as we try to maneuver the mouse onto the secondary or tertiary menu. It is even worse for people who have poor motor control and find controlling a mouse difficult.

Contextual menus provide access to often-used commands associated with a particular item, e.g. an icon. They provide appropriate commands that make sense in the context of a current task. They appear when the user presses the Control key while clicking on an interface element. For example, clicking on a photo in a website together with holding down the Control key results in a small set of relevant menu options appearing in an overlapping window, such as ‘open it in a new window,’ ‘save it,’ or ‘copy it.’ The advantage of contextual menus is that they provide a limited number of options associated with an interface element, overcoming some of the navigation problems associated with cascading and expanding menus.

Activity 6.3

Open an application that you use frequently (e.g. wordprocessor, email, web browser) and look at the menu header names (but do not open them just yet). For each one (e.g. File, Edit, Tools) write down what options you think are listed under each. Then look at the contents under each header. How many options were you able to remember and how many did you put in the wrong category? Now try to select the correct menu header for the following options (assuming they are included in the application): replace, save, spelling, and sort. Did you select the correct header each time or did you have to browse through a number of them?

Comment

Popular everyday applications, like wordprocessors, have grown enormously in terms of the functions they now offer. My current version of Word, for example, has 12 menu headers and 18 toolbars. Under each menu header there are on average 15 options, some of which are hidden under subheadings and only appear when they are rolled over with the mouse. Likewise, for each toolbar there is a set of tools that is available, be it for drawing, formatting, web, table, or borders. I find I can remember best the location of frequently used commands like spelling and replace. However, this is not because I remember which header is associated with which command, but more because of their spatial location. For infrequently used commands, like sorting a list of references into alphabetical order, I spend time flicking through the menus to find the command ‘sort.’ It is difficult to remember that the command ‘sort’ should be under the ‘table’ heading since what I am doing is not a table operation but using a tool to organize a section of my document. It would be more intuitive if the command was under the ‘tool’ header along with similar tools like ‘spelling.’ What this example illustrates is just how difficult it can be to group menu options into clearly defined and obvious categories. Some fit into several categories, while it can be difficult to group others. The placement of options in menus can also change between different versions of an application as more functions are added.

Research and design issues

Similar to command names, it is important to decide which are the best terms to use for menu options. Short phrases like ‘bring all to front’ can be more informative than single words like ‘front.’ However, the space for listing menu items is often restricted, such that menu names need to be short. They also need to be distinguishable, i.e. not easily confused with one another so that the user does not choose the wrong one by mistake. Operations such as ‘quit’ and ‘save’ should also be clearly separated to avoid the accidental loss of work.

The choice of which type of menu to use will often be determined by the application and type of system. Which is best will depend on the number of options that are on offer and the size of the display to present them in. Flat menus are best for displaying a small number of options at one time, while expanding menus are good for showing a large number of options, such as those available in file and document creation/editing applications.

Many guidelines exist for menu design, emphasizing the structuring, the navigation, and the number of items per menu. For example, an excerpt from ISO 9241, a major international standard for interaction design, considers grouping in menu design, as shown in Figure 6.8.

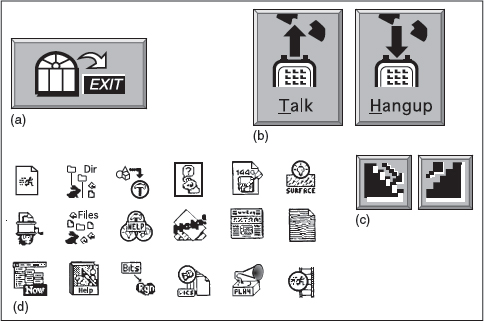

Icon design. The appearance of icons at the interface came about following the Xerox Star project (see Figure 2.1). They were used to represent objects as part of the desktop metaphor, namely, folders, documents, trashcans, and in- and out-trays. An assumption behind using icons instead of text labels is that they are easier to learn and remember, especially for non-expert computer users. They can also be designed to be compact and variably positioned on a screen.

Icons have become a pervasive feature of the interface. They now populate every application and operating system, and are used for all manner of functions besides representing desktop objects. These include depicting tools (e.g. paintbrush), applications (e.g. web browser), and a diversity of abstract operations (e.g. cut, paste, next, accept, change). They have also gone through many changes in their look and feel: black and white, color, shadowing, photorealistic images, 3D rendering, and animation have all been used.

While there was a period in the late 1980s/early 1990s when it was easy to find poorly designed icons at the interface (see Figure 6.9), icon design has now come of age. Interface icons look quite different; many have been designed to be very detailed and animated, making them both visually attractive and informative. The result is the design of GUIs that are highly inviting and emotionally appealing, and that feel alive. For example, Figure 6.10 contrasts the simple and jaggy Mac icon designs of the early 1990s with those that were developed as part of the Aqua range for the more recent operating environment Mac OSX. Whereas early icon designers were constrained by the graphical display technology of the day, they now have more flexibility. For example, the use of anti-aliasing techniques enables curves and non-rectilinear lines to be drawn, enabling more photo-illustrative styles to be developed (anti-aliasing means adding pixels around a jagged border of an object to visually smooth its outline).

Figure 6.9 Poor icon set from the early 1990s. What do you think they mean and why are they so bad?

Figure 6.10 Early and more recent Mac icon designs for the TextEdit application

Icons can be designed to represent objects and operations at the interface using concrete objects and/or abstract symbols. The mapping between the representation and underlying referent can be similar (e.g. a picture of a file to represent the object file), analogical (e.g. a picture of a pair of scissors to represent ‘cut’), or arbitrary (e.g. the use of an X to represent ‘delete’). The most effective icons are generally those that are isomorphic since they have direct mapping between what is being represented and how it is represented. Many operations at the interface, however, are of actions to be performed on objects, making it more difficult to represent them using direct mapping. Instead, an effective technique is to use a combination of objects and symbols that capture the salient part of an action through using analogy, association, or convention (Rogers, 1989). For example, using a picture of a pair of scissors to represent ‘cut’ in a wordprocessing application provides sufficient clues as long as the user understands the convention of ‘cut’ for deleting text.

The greater flexibility offered by current GUI interfaces has enabled developers to create icon sets that are distinguishable, identifiable, and memorable. For example, different graphical genres have been used to group and identify different categories of icons. Figure 6.11 shows how colorful photo-realistic images have been used, each slanting slightly to the left, for the category of user applications, e.g. email, whereas monochrome straight-on and simple images have been used for the class of utility applications, e.g. printer set-up. The former have a fun feel to them, whereas the latter have a more serious look about them.

Figure 6.11 Contrasting genres of Aqua icons used for the Mac. The top row of icons have been designed for user applications and the bottom row for utility applications

Another approach has been to develop glossy, logo-style icons that are very distinctive, using only primary colors and symbols, having the effect of making them easily recognizable, such as those developed by Macromedia and Microsoft to represent their popular media applications (see Figure 6.12).

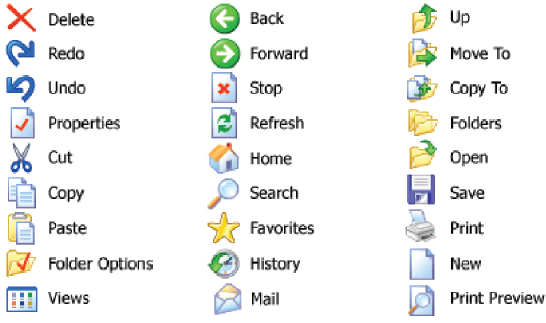

Icons that appear in toolbars or palettes as part of an application or presented on small device displays, e.g. PDAs, cell phones, digital cameras, have much less screen estate available. Because of this, they are typically designed to be simple, emphasizing the outline form of an object or symbol and using only grayscale or one or two colors. They tend to convey the tool and action performed on them using a combination of concrete objects and abstract symbols, e.g. a blank piece of paper with a plus sign representing a new blank document, an open envelope with an arrow coming out of it indicating a new message has arrived. Again, the goal should be to design a palette or set of icons that are easy to recognize and distinguishable from one another. Figure 6.13 provides examples of simple toolbar icons from Windows XP.

Figure 6.12 Logo-based icons for Microsoft and Macromedia applications (Powerpoint, Word, Dreamweaver, Flash) that are distinctive

Figure 6.13 Examples of simple and distinguishable icons used in Windows XP toolbar. A combination of objects, abstract symbols, and depictions of tools is used to represent common objects and operations

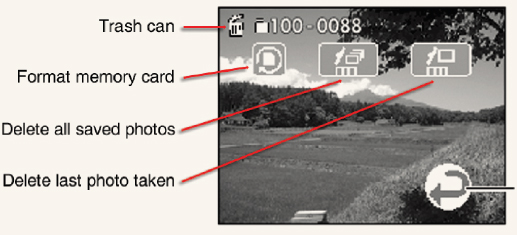

Sketch simple icons to represent the following operations to appear on a digital camera LCD screen:

- Delete last picture taken.

- Delete all pictures stored.

- Format memory card.

Show them to your peers or friends, tell them that they are icons for a new digital camera intended to be really simple to use, and see if they can understand what each represents.

Comment

Figure 6.14 shows Toshiba's icons based on analogy and convention that are presented on the LCD display of the camera.

Figure 6.14 Icons used by Toshiba for three of its digital camera operations

The trashcan, which has become the conventional GUI icon to represent the command ‘to delete,’ has been used in combination with a representation of a single photo or a stack of photos, indicating what is deleted. The icon (to the left of them) uses a combination of an object and symbol: the image is of a memory card and the arrow conveys a complete circle. (The reason why one occasionally needs to format a memory card is to remove any residual memory files that can accumulate.) A key design issue is to make the three icons distinct from one another, especially the ‘delete last photo taken’ from the ‘delete all saved photos.’

Various books on how to design icons (e.g. Caplin, 2001; Horton, 1994) are now available together with sets of guidelines, standards, and style guides. There are also many icon builders and icon sets, e.g. ClipArt, providing a wealth of resources for designers, so that they do not have to draw or invent icons from scratch. Apple Computer Inc. has always been very good at providing their developers with style guides, explaining why certain designs are preferable to others and how to design icon sets. On its developers' website (developer.apple.com), advice is given on how and why certain graphical elements should be used when developing different types of icon. Among the various guidelines, it suggests that different categories of application (e.g. user, utility) should be represented by a different genre (see Figure 6.11) and recommends displaying a tool to communicate the nature of a task, e.g. a magnifying glass for searching, a camera for a photo editing tool. Microsoft has also begun providing more extensive guidance and step-by-step procedures on how to design icons for its applications on its website.

To help disambiguate the meaning of icons, text labels can be used under, above, or to the side of their icons (see Figure 6.13). This method is effective for toolbars that have small icon sets, e.g. those appearing as part of a web browser) but is not as good for applications that have large icon sets, e.g. photo editing or wordprocessing, since the screen can get very cluttered and busy; and conversely, making it sometimes harder and longer to find an icon. To prevent text/icon clutter at the interface, a rollover function can be used, where a text label appears adjacent to or above an icon, after one second of the user holding the cursor over it and for as long as the user keeps the cursor on it. This method allows identifying information to be temporarily displayed when needed.

6.3.2 1990s Interfaces

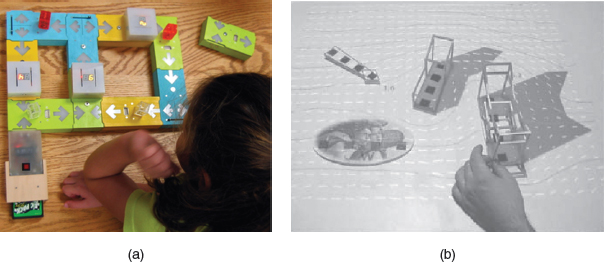

In this section we cover advanced graphical interfaces (including multimedia, virtual reality, and information visualization), speech-based, pen, gesture, and touch interfaces, and appliance interfaces.

Advanced Graphical Interfaces

A number of advanced graphical interfaces exist now that extend how users can access, explore, and visualize information. These include interactive animations, multimedia, virtual environments, and visualizations. Some are designed to be viewed and used by individuals; others by a group of users who are collocated or at a distance. Many claims have been made about the benefits they bring compared with the traditional GUI. Below we describe two major developments: multimedia and virtual environments, and then briefly touch upon visualizations.

Multimedia. Multimedia, as the name implies, combines different media within a single interface, namely, graphics, text, video, sound, and animations, and links them with various forms of interactivity. It differs from previous forms of combined media, e.g. TV, in that the different media can be interacted with by the user (Chapman and Chapman, 2004). Users can click on hotspots or links in an image or text appearing on one screen that leads them to another part of the program where, say, an animation or a video clip is played. From there they can return to where they were previously or move on to another place.

Many multimedia narratives and games have been developed that are designed to encourage users to explore different parts of the game or story by clicking on different parts of the screen. An assumption is that a combination of media and interactivity can provide better ways of presenting information than can either one alone. There is a general belief that ‘more is more’ and the ‘whole is greater than the sum of the parts’ (Lopuck, 1996). In addition, the ‘added value’ assumed from being able to interact with multimedia in ways not possible with single media (i.e. books, audio, video) is easier learning, better understanding, more engagement, and more pleasure (see Scaife and Rogers, 1996).

One of the distinctive features of multimedia is its ability to facilitate rapid access to multiple representations of information. Many multimedia encyclopedias and digital libraries have been designed based on this multiplicity principle, providing an assortment of audio and visual materials on a given topic. For example, if you want to find out about the heart, a typical multimedia-based encyclopedia will provide you with:

- One or two video clips of a real live heart pumping and possibly a heart transplant operation.

- Audio recordings of the heart beating and perhaps an eminent physician talking about the cause of heart disease.

- Static diagrams and animations of the circulatory system, sometimes with narration.

- Several columns of hypertext, describing the structure and function of the heart.

Hands-on interactive simulations have also been incorporated as part of multimedia learning environments. An early example is the Cardiac Tutor, developed to teach students about cardiac resuscitation, that required students to save patients by selecting the correct set of procedures in the correct order from various options displayed on the computer screen (Eliot and Woolf, 1994). A more recent example is BioBLAST®, a multimedia environment for high school biology classes, that incorporates simulation models based on NASA's research to enable students to develop and test their own designs for a life support system for use on the Moon (see Figure 6.15). The learning environment provides a range of simulators that are combined with online resources.

Multimedia CD-ROMs (and more recently interactive websites) have mainly been developed for training, educational, and entertainment purposes. It is generally assumed that learning (e.g. reading and scientific inquiry skills) and playing can be enhanced through interacting with engaging multimedia interfaces. But what actually happens when users are given unlimited, easy access to multiple media and simulations? Do they systematically switch between the various media and ‘read’ all the multiple representations on a particular subject? Or, are they more selective in what they look at and listen to?

Figure 6.15 Screen dump from the multimedia environment BioBLAST

Anyone who has interacted with an educational CD-ROM knows just how tempting it is to play the video clips and animations, while skimming through accompanying text or static diagrams. The former are dynamic, easy and enjoyable to watch, whilst the latter are viewed as static, boring, and difficult to read from the screen. For example, in an evaluation of Don Norman's CD-ROM of his work (First Person), students consistently admitted to ignoring the text at the interface in search of clickable icons of the author, which when selected would present an animated video of him explaining some aspect of design (Rogers and Aldrich, 1996). Given the choice to explore multimedia material in numerous ways, ironically, users tend to be highly selective as to what they actually attend to, adopting a ‘channel hopping’ mode of interaction. While enabling the users to select for themselves the information they want to view or features to explore, there is the danger that multimedia environments may in fact promote fragmented interactions where only part of the media is ever viewed. This may be acceptable for certain kinds of activities, e.g. browsing, but less optimal for others, e.g. learning about a topic. One way to encourage more systematic and extensive interactions (when it is considered important for the activity at hand) is to require certain activities to be completed that entail the reading of accompanying text, before the user is allowed to move on to the next level or task.

Box 6.3: Accessible Interactive TV Services for all

TV now provides many digital channels, of which sports, news, and movie channels are very popular. In addition, a range of interactive TV services are being offered that enable users to browse the web, customize their viewing choices, play interactive games, do their banking and shopping, and take an active part in a number of broadcast shows, e.g. voting. Besides offering a wide diversity of choices to the general public, there is much potential for empowering disabled and elderly users, by enabling them to access the services from the comfort of their own armchair. But it requires a new sensitivity to ‘interactive’ design, taking into account specific usability issues for those with impaired motor control, poor vision, and hearing difficulties (Newell, 2003). For example, remote controls need to be designed that can be manipulated with poor dexterity, text/icons need to be readable for those with poor eyesight, while navigation methods need to be straightforward for viewers who are not experienced with multimedia-based interfaces.

Activity 6.5

Go to the interactivities section on our accompanying website (http://www.id-book.com) and try to design the interface for a cell phone. How did the multimedia representations and interactivity help you to create a design?

Comment

The multimedia interactivity provides a constrained way of completing the task, involving a hands-on physical design activity and the selection of contextually relevant guidelines that are meant to help you think about the rationale behind your choices. However, rather than go through them step-by-step, it can be tempting simply to add a widget component to the template and move on to the next screen without reading or reflecting upon the guidelines. Sometimes, one can be so focused on comparing the visual interface components provided on the right-hand side of the screen that it is easy to forget to look at the left-hand side where the guidelines are.

A key research question is how to design interactive multimedia to help users explore, keep track of, and integrate the multiple representations of information provided, be it a digital library, a game, or learning material. As mentioned above, one technique is to provide hands-on interactivities and simulations at the interface that require the user to complete a task, solve a problem, or explore different aspects of a topic. Specific examples include electronic notebooks that are integrated as part of the interface, where users can copy, download, or type in their own material; multiple-choice quizzes that give feedback on how the user has done; interactive puzzles where the user has to select and position different pieces in the right combination; and simulation-type games where the user has to follow a set of procedures to achieve some goal for a given scenario. Another approach is to employ ‘dynalinking,’ where information depicted in one window explicitly changes in relation to what happens in another. This can help users keep track of multiple representations and see the relationship between them (Scaife and Rogers, 1996).

Specific guidelines are available that recommend how best to combine multiple media in relation to different kinds of task, e.g. when to use audio with graphics, sound with animations, and so on for different learning tasks. For example, Alty (1991) suggests that audio information is good for stimulating imagination, movies for action information, text for conveying details, whilst diagrams are good at conveying ideas. From such generalizations it is possible to devise a presentation strategy for learning. This can be along the lines of: first, stimulate the imagination through playing an audio clip; then, present an idea in diagrammatic form; then, display further details about the concept through hypertext. Sutcliffe and his colleagues have also developed guidelines, based on cognitive engineering principles, that recommend how to link different media together to give coherent and comprehensive presentations (Faraday and Sutcliffe, 1997; Sutcliffe, 2003). Quintana et al. (2002) have developed a set of guidelines for learner-centered design (LCD) that outline various features that can be used to guide and prompt students in multimedia learning environments. Examples include process maps and flow diagrams.

Virtual reality and virtual environments. Virtual reality and virtual environments are computer-generated graphical simulations, intended to create “the illusion of participation in a synthetic environment rather than external observation of such an environment” (Gigante, 1993, p. 3). Virtual reality (VR) is the generic term that refers to the experience of interacting with an artificial environment, which makes it feel virtually real. The term ‘virtual environment’ (VE) is used more specifically to describe what has been generated using computer technology (although both terms are used interchangeably). Images are displayed stereoscopically to the users—most commonly through shutter glasses—and objects within the field of vision can be interacted with via an input device like a joystick. The 3D graphics can be projected onto CAVE (Cave Automatic Virtual Environment) floor and wall surfaces (see Figure 2.12), desktop machines, or large shared displays, e.g. IMAX screens.

One of the main attractions of VRs/VEs is that they can provide opportunities for new kinds of experience, enabling users to interact with objects and navigate in 3D space in ways not possible in the physical world or a 2D graphical interface. The resulting user experience can be highly engaging; it can feel as if one really is flying around a virtual world. People can become immersed in and highly captivated by the experience (Kalawsky, 1993). For example, in the Virtual Zoo project, Allison et al. (1997) found that people were highly engaged and very much enjoyed the experience of adopting the role of a gorilla, navigating the environment, and watching other gorillas respond to their movements and presence (see Figure 6.16).

Figure 6.16 The Virtual Gorilla Project. On the left a student wears a head-mounted display and uses a joystick to interact with the virtual zoo. On the right are the virtual gorillas she sees and which react to her movements

One of the advantages of VRs/VEs is that simulations of the world can be constructed to have a higher level of fidelity with the objects they represent compared with other forms of graphical interface, e.g. multimedia. The illusion afforded by the technology can make virtual objects appear to be very life-like and behave according to the laws of physics. For example, landing and take-off terrains developed for flight simulators can appear to be very realistic. Moreover, it is assumed that learning and training applications can be improved through having a greater fidelity with the represented world. A sense of ‘presence’ can also make the virtual setting seem convincing. By presence is meant “a state of consciousness, the (psychological) sense of being in the virtual environment” (Slater and Wilbur, 1997, p. 605), where someone is totally engrossed by the experience, and behaves in a similar way to how he/she would if at an equivalent real event.

Another distinguishing feature of VRs/VEs is the different viewpoints they offer. Players can have a first-person perspective, where their view of the game or environment is through their own eyes, or a third-person perspective, where they see the world through a character visually represented on the screen, commonly known as an avatar. An example of a first-person perspective is that experienced in first-person shooter games such as DOOM, where the player moves through the environment without seeing a representation of themselves. It requires the user to imagine what he/she might look like and decide how best to move around. An example of a third-person perspective is that experienced in the game Tomb Raider, where the player sees the virtual world above and behind the avatar of Lara Croft. The user controls Lara's interactions with the environment by controlling her movements, e.g. making her jump, run, or crouch. Avatars can be represented from behind or from the front, depending on how the user controls its movements. First-person perspectives are typically used for flying/driving simulations and games, e.g. car racing, where it is important to have direct and immediate control to steer the virtual vehicle. Third-person perspectives are more commonly used in games, learning environments, and simulations where it is important to see a representation of self with respect to the environment and others in it. In some virtual environments it is possible to switch between the two perspectives, enabling the user to experience different viewpoints on the same game or training environment.

Early VRs/VEs were developed using head-mounted displays. However, they have been found to be uncomfortable to wear, sometimes causing motion sickness and disorientation. They are also expensive and difficult to program and maintain. Nowadays, desktop VRs/VEs are mostly used; software toolkits are now available that make it much easier to program a virtual environment, e.g. VRML, 3D Alice. Instead of moving in a physical space with a head-mounted display, users interact with a desktop virtual environment—as they would any other desktop application—using mice, keyboards, or joysticks as input devices. The desktop virtual environment can also be programmed to present a more realistic 3D effect (similar to that achieved in 3D movies shown at IMAX cinemas), requiring users to wear a pair of shutter glasses.

Research and design issues

VRs/VEs have been developed to support learning and training for numerous skills. Researchers have designed them to help people learn to drive a vehicle, fly a plane, and perform delicate surgical operations—where it is very expensive and potentially dangerous to start learning with the real thing. Others have investigated whether people can learn to find their way around a real building/place before visiting it by first navigating a virtual representation of it, e.g. Gabrielli et al., (2002). VEs have also been designed to help people practice social skills, speaking skills, and confront their social phobias, e.g. Cobb et al., (1999); Slater et al., (1999). An underlying assumption is that the environment can be designed as a ‘safe’ place to help people gently overcome their fears (e.g. spiders, talking in public) by confronting them through different levels of closeness and unpleasantness, e.g. seeing a small virtual spider move far away, seeing a medium one sitting nearby, and then finally touching a large one. Studies have shown that people can readily suspend their disbelief, imagining a virtual spider to be a real one or a virtual audience to be a real audience. For example, Slater et al. (1999) found that people rated themselves as being less anxious after speaking to a virtual audience that was programmed to respond to them in a positive fashion than after speaking to virtual audiences programmed to respond to them negatively.

Core design issues that need to be considered when developing virtual environments are: what are the most effective ways of enabling users to navigate through them, e.g. first versus third person; how to control their interactions and movements, e.g. use of head and body movements; how best to enable them to interact with information in them, e.g. use of keypads, pointing, joystick buttons; and how to enable users to collaborate and communicate with others in the virtual environment. A central concern is the level of realism to aim for. Is it necessary to design avatars and the environments they inhabit to be life-like, using ‘rich’ graphics, or can simpler and more abstract forms be used, but which nonetheless are equally capable of engendering a sense of presence? For more on this topic see the dilemma box below.

Guidelines are available for helping to design virtual environments, that focus on how best to support navigation and user control, including where to place landmarks and objects in large-scale environments to ease navigation (Vinson, 1999) and the rules of everyday life that can be contravened, e.g. enabling avatars to walk through virtual walls and buildings (Sutcliffe, 2002).

Dilemma Realism versus abstraction?

One of the challenges facing interaction designers is whether to use realism or abstraction when designing an interface. This means designing objects either to (i) give the illusion of behaving and looking like real-world counterparts or (ii) appear as abstractions of the objects being represented. This concern is particularly relevant when implementing conceptual models that are deliberately based on an analogy with some aspect of the real world. For example, is it preferable to design a desktop to look like a real desktop, a virtual house to look like a real house, or a virtual terrain to look like a real terrain? Or, alternatively, is it more effective to design representations as simple abstract renditions, depicting only a few salient features?

One of the main benefits of using realism at the interface is that it can enable people, especially computer phobics and novices, to feel more comfortable when learning an application. The rationale behind this is that such representations can readily tap into people's understanding of the physical world. Hence, realistic interfaces can help users initially understand the underlying conceptual model. In contrast, overly schematic and abstract representations can appear to be too computer-like and may be off-putting to the newcomer. The advantage of more abstract interfaces, however, is that they can be more efficient to use. Furthermore, the more experienced users become, the more they may find ‘comfortable’ interfaces no longer to their liking. A dilemma facing designers, therefore, is deciding between creating interfaces to make novice users feel comfortable (but more experienced users less comfortable) and designing interfaces to be effective for more experienced users (but maybe harder to learn by novices).

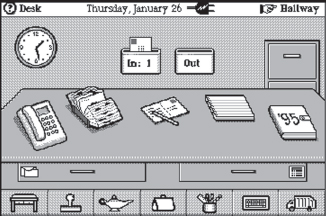

One of the earliest attempts at using realism at the interface was General Magic's office system Magic Cap, which was rendered in 3D. To achieve this degree of realism required using various perceptual cues such as perspective, shadowing, and shading. The result was a rather cute interface (see Figure 6.17). Although their intentions were well-grounded, the outcome was less successful. Many people commented on how childish and gawky it looked, having the appearance of illustrations in a children's picture book rather than a work-based application.

Mullet and Sano (1995) also point out how a 3D rendition of an object like a desk nearly always suffers from both an unnatural point of view and an awkward rendering style that ironically destroy the impression of being in a real physical space. One reason for this is that 3D depictions conflict with the effective use of display space, especially when 2D editing tasks need to be performed. As can be seen in Figure 6.17, these kinds of task were represented as ‘flat’ buttons that appear to be floating in front of the desk, e.g. mail, program manager, task manager.

For certain kinds of applications, using realism can be very effective for both novices and experienced users. Computer-based games fall into this category, especially those where users have to react rapidly to dynamic events that happen in a virtual world in real time, say flying a plane or playing a game of virtual football. Making the characters in the game resemble humans in the way they look, move, dress, and behave also makes them seem more convincing and lifelike, enhancing the enjoyment and fun factor.

Activity 6.6

Many games have been ported from the PC platform to the cell phone. Because of the memory and screen size limitations of the phone device, however, much simpler and more abstract representations have to be used. To what extent does this adaptation of the interface affect the experience of playing the same game?

Comment

The most effective games to have been ported over to the cell phone are highly addictive games that use simple graphics and do not require the user to navigate between different windows. Examples are Snake (see Figure 6.18), Tetris, and Snood, where the goal of the game is to move an object (e.g. a snake, abstract shapes, a shooter) small distances in order to eat food, fill a container, or delete shapes. More complex games, like World of Warcraft—which are very popular on the PC platform—do not port over nearly as well. It is simply too difficult to navigate and engage in the same level of interaction that makes the game enjoyable and addictive when played on a PC. Similar to the debate over text-based command games versus advanced graphical games, the extent to which the interaction style affects the user experience varies in terms of how engaging they are; but both can be equally enjoyable (see Activity 2.7).

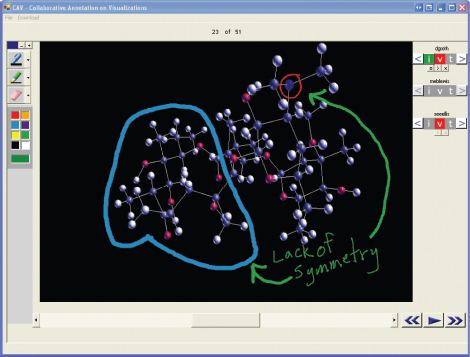

Information visualization. Information visualization is a growing field concerned with the design of computer-generated visualizations of complex data that are typically interactive and dynamic. The goal is to amplify human cognition (see Chapter 3), enabling users to see patterns, trends, and anomalies in the visualization and from this to gain insight (Card et al., 1999). Specific objectives are to enhance discovery, decision-making, and explanation of phenomena. Most interactive visualizations have been developed for use by experts to enable them to understand and make sense of vast amounts of dynamically changing domain data or information, e.g. satellite images or research findings, that take much longer to achieve if using only text-based information.

Figure 6.18 Two screenshots from the game Snake—the one on the left is played on a PC and the one on the right on a cell phone. In both games, the goal is to move the snake (the blue thing and the black squares, respectively) towards targets that pop up on the screen (e.g. the bridge, the star) and to avoid obstacles (e.g. a flower, the end of the snake's tail). When a player successfully moves his snake head over or under a target, the snake increases its length by one blob or block. The longer the snake gets the harder it is to avoid obstacles. If the snake hits an obstacle the game is over. On the PC version there are lots of extra features that make the game more complicated, including more obstacles and ways of moving. The cell phone version has a simple 2D bird's eye representation, whereas the PC version adopts a 3D third-person avatar perspective

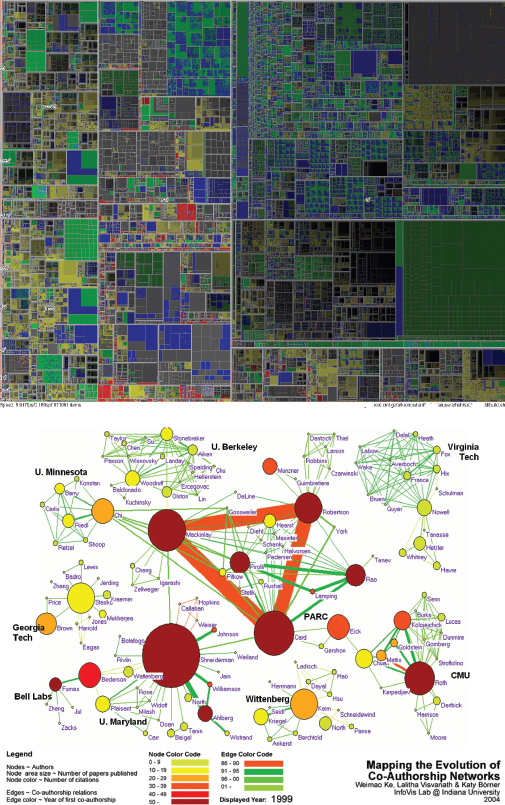

Common techniques that are used for depicting information and data are 3D interactive maps that can be zoomed in and out of and which present data via webs, trees, clusters, scatterplot diagrams, and interconnected nodes (Bederson and Shneiderman, 2003; Chen, 2004). Hierarchical and networked structures, color, labeling, tiling, and stacking are also used to convey different features and their spatial relationships. At the top of Figure 6.19 is a typical treemap, called MillionVis, that depicts one million items all on one screen using the graphical techniques of 2D stacking, tiling, and color (Fekete and Plaisant, 2002). The idea is that viewers can zoom in to parts of the visualization to find out more about certain data points, while also being able to see the overall structure of an entire data set. The treemap has been used to visualize file systems, enabling users to understand why they are running out of disk space, how much space different applications are using, and also for viewing large image repositories that contain Terabytes of satellite images. Similar visualizations have been used to represent changes in stocks and shares over time, using rollovers to show additional information, e.g. Marketmap on SmartMoney.com

The visualization at the bottom of Figure 6.19 depicts the evolution of co-authorship networks over time (Ke et al., 2004). It uses a canonical network to represent spatially the relationships between labeled authors and their place of work. Changing color and thickening lines that are animated over time convey increases in co-authoring over time. For example, the figure shows a time slice of 100 authors at various US academic institutions, in which Robertson, Mackinlay, and Card predominate, having published together many times more than with the other authors. Here, the idea is to enable researchers to readily see connections between authors and their frequency of publishing together with respect to their location over time. (Note: Figure 6.19 is a static screen shot for 1999.) Again, an assumption is that it is much easier to read this kind of diagram compared with trying to extract the same information from a text description or a table.

Research and design issues

Much of the research in information visualization has focused on developing algorithms and interactive techniques to enable viewers to explore and visualize data in novel ways. There has been less research on how visualizations are used in practice and whether they can amplify cognition, enabling people to discover and make better informed decisions, about policy or research. Key design issues include whether to use animation and/or interactivity, what form of coding to use, e.g. color or text labels, whether to use a 2D or 3D representational format, what forms of navigation, e.g. zooming or panning, and what kinds and how much additional information, e.g. rollovers or tables of text, to provide. The type of metaphor to be used is also an important concern, e.g. one based on flying over a geographical terrain or one that represents documents as part of an urban setting. There are, at the time of writing, no clear-cut guidelines on how to design effective visualizations; designers often apply relevant research findings from cognitive psychology (see Chapter 3) and graphical design, e.g. Tufte (1999). An overriding principle is to design a visualization that is easy to comprehend and easy to make inferences from. If too many variables are depicted in the same visualization it can make it much more difficult for the viewer to read and make sense of what is being represented.

Web-based Interfaces

Early websites were largely text-based, providing hyperlinks to different places or pages of text. Much of the design effort was concerned with how best to structure information at the interface to enable users to navigate and access it easily and quickly. Jakob Nielsen (2000) adapted his and Ralf Molich's usability guidelines (Nielsen and Molich, 1990) to make them applicable to website design, focusing on simplicity, feedback, speed, legibility, and ease of use. He has also stressed how critical download time is to the success of a website. Simply, users who have to wait too long for a page to appear are likely to move on somewhere else. One of Nielsen's recommendations is that it is best to have very few graphics on the homepage of a site but offer users the chance to see pictures of products, or maps, etc., only when they explicitly ask for them. This can be achieved by using thumbnails—miniaturized versions of the full picture—as links.

Figure 6.19 Two types of visualizations, one using flat colored blocks and the other animated color networks that expand and change color over time

Nielsen has become renowned for his doggedness on insisting that ‘vanilla’ websites are the most usable and easiest to navigate. True to his word, to this day, Nielsen has resisted including any graphics or photos on his homepage (useit.com). Instead he provides a series of hyperlinks to his alertboxes, books, reports, consulting services, and news articles. Other interaction designers, however, do not support his stance, arguing that it is possible to have both aesthetically pleasing and usable sites. A main reason for emphasizing the importance of graphical design is to make web pages distinctive, striking, and pleasurable for the user when they first view them and also to make them readily recognizable on their return.

It is not surprising, therefore, to see that nearly all commercial, public service, and personal websites have adopted more of a ‘multi-flavor’ rather than a ‘vanilla’ approach; using a range of graphics, images, and animations on their homepages. Website design took off in a big way in the early 2000s when user-centered editing tools, e.g. Dreamweaver, and programming languages, e.g. php, Flash and XML, emerged providing opportunities for both designers and the general public to create websites to look and behave more like multimedia environments. Groups of technologies, such as Ajax (asynchronous Javascript and XML) also started to appear, enabling applications to be built that are largely executed on a user's computer, allowing the development of reactive and rich graphical user interfaces. Many web-based interactivities and applications have been developed, including online pop quizzes, agents, recommenders, chatrooms, interactive games, and blogs. There is also an increasing number of PC-based applications that have become web-based, such as email, e.g. Gmail, and photo storing and sharing, e.g. Flickr. Web browsers also started to be developed for a range of platforms besides the PC, including interactive TV, cell phones, and PDAs.

Steve Krug (2000) has characterized the debate on usability versus attractiveness in terms of the difference between how designers create websites and how users actually view them. He argues that web designers create sites as if the user was going to pore over each page, reading the finely crafted text word for word, looking at the use of images, color, icons, etc., examining how the various items have been organized on the site, and then contemplating their options before they finally select a link. Users, however, behave quite differently. They will glance at a new page, scan part of it, and click on the first link that catches their interest or looks like it might lead them to what they want. Much of the content on a web page is not read. In his words, web designers are “thinking great literature” (or at least “product brochure”) while the user's reality is much closer to a “billboard going by at 60 miles an hour” (Krug, 2000, p. 21). While somewhat of a caricature of web designers and users, his depiction highlights the discrepancy between the meticulous ways designers create their websites with the rapid and less than systematic approach that users take to look at them.

Similar to newspapers, magazines, and TV, working out how to brand a web page to catch and keep ‘eyeballs’ is central to whether a user will stay on it and, importantly, return to it. We have talked about the need to keep screens uncluttered so that people can find their way around and see clearly what is available. However, there may be occasions when the need to maintain a brand overrides this principle. For example, the website for the Swedish newspaper Aftonbladet, while very busy and crowded (see Figure 6.20), was designed to continue the style of the paper-based version, which also has a busy and crowded appearance.

Figure 6.20 The front web page of the Aftonbladet newspaper

Advertisers also realize how effective flashing ads and banners can be for promoting their products, similar to the way animated neon light adverts are used in city centers, such as London's Piccadilly Circus. The homepage of many online newspapers, including the Aftonbladet is full of flashing banners and cartoon animations, many of which are adverts for other products (see http://www.aftonbladet.se for the full animation effects). Music and other sounds have also begun to be used to create a certain mood and captivate users. While online adverts are often garish, distracting, and can contravene basic usability principles, they are good at luring users' attention. As with other media, e.g. TV, newspapers and magazines, advertisers pay significant revenues to online companies to have their adverts placed on their websites, entitling them to say where and how they should appear.