6.3. Image-Based Relighting

The image-based lighting techniques we have seen so far are useful for lighting synthetic objects, lighting synthetic environments, and rendering synthetic objects into real-world scenes with consistent lighting. However, we have not yet seen techniques for creating renderings of real-world objects illuminated by captured illumination. Of particular interest would be to illuminate people with these techniques, since most images created for film and television involve people in some way. Certainly, if it were possible to create a very realistic computer model of a person, then this model could be illuminated using the image-based lighting techniques already presented. However, since creating photoreal models of people is still a difficult process, a more direct route is desirable.

There actually is a more direct route, and it requires nothing more than a special set of images of the person in question. The technique is based on the fact that light is additive, which can be explained by thinking of a person, two light sources, and three images. Suppose that the person sits still and is photographed lit by both light sources, one to each side. Then, two more pictures are taken, each with only one of the lights on. If the pixel values in the images are proportional to the light levels, then the additivity of light dictates that the sum of the two one-light images will look the same as the two-light image. More usefully, the color channels of each one-light image can be scaled independently before the images are added, allowing us to create an image of a person illuminated with a bright orange light to one side and a dim blue light to the other. I first learned about this property from Paul Haeberli's Grafica Obscura web site [15].
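The two-light example can be sketched in a few lines of Python with NumPy. This is only an illustration under stated assumptions: the function name `combine_two_lights`, the random stand-in images, and the particular orange and blue scale factors are all hypothetical, and the pixel values are assumed to be linear (proportional to light levels), as the argument above requires.

```python
import numpy as np

def combine_two_lights(img_left, img_right, tint_left, tint_right):
    """Scale each one-light image per color channel and add the results.

    img_left, img_right: float arrays of shape (H, W, 3) with linear
    (not gamma-encoded) pixel values, one image per light source.
    tint_left, tint_right: length-3 RGB scale factors for each light.
    """
    img_left = np.asarray(img_left, dtype=np.float64)
    img_right = np.asarray(img_right, dtype=np.float64)
    # Broadcasting applies each RGB factor to its own color channel.
    return img_left * np.asarray(tint_left) + img_right * np.asarray(tint_right)

# Tiny synthetic stand-ins for the two one-light photographs (assumption).
rng = np.random.default_rng(0)
left_only = rng.random((4, 4, 3))
right_only = rng.random((4, 4, 3))

# Unit scale factors should reproduce the two-light photograph.
both_lights = combine_two_lights(left_only, right_only, (1, 1, 1), (1, 1, 1))

# A bright orange light on one side and a dim blue light on the other.
orange = (2.0, 1.0, 0.2)   # hypothetical values
blue = (0.1, 0.15, 0.4)    # hypothetical values
relit = combine_two_lights(left_only, right_only, orange, blue)
```

With real photographs, the images would first need to be converted to linear values (for example, by undoing the camera's response curve), since the sum is only physically meaningful when pixel values are proportional to light.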

In a complete lighting environment, the illumination comes not from just two directions, but from a whole sphere of incident illumination. If there were a way to light a person from a dense sampling of directions distributed across the whole sphere of incident illumination, it should be possible to recombine tinted and scaled versions of these images to show how the person would look in any lighting environment. The light stage device [8] shown in Figure 6.29 was designed to acquire precisely such a data set. The device's 250-watt halogen spotlight is mounted on a two-axis rotation mechanism such that the light can spiral from the top of the sphere to the bottom in approximately 1 minute. During this time, one or more digital video cameras can record the subject's appearance as illuminated by approximately 2,000 lighting directions distributed throughout the sphere. A subsampled light stage data set of a person's face can be seen in Figure 6.30a.

Figure 6.29. (a) Light Stage 1, a manually operated device for lighting a person's face from every direction in the sphere of incident illumination directions. (b) A long-exposure photograph of acquiring a Light Stage 1 data set. As the light spirals down, one or more video cameras record the different illumination directions on the subject in approximately 1 minute. The recorded data is shown in Figure 6.30.

Figure 6.30. (a) A data set from the light stage, showing a face illuminated from the full sphere of lighting directions. Ninety-six of the 2,048 images in the full data set are shown. (b) The Grace Cathedral light probe image. (c) The light probe sampled into the same longitude-latitude space as the light stage data set. (d) The images in the data set scaled to the same color and intensity as the corresponding directions of incident illumination. Figure 6.31a shows a rendering of the face as illuminated by the Grace Cathedral environment obtained by summing these scaled images.

Figure 6.30c shows the Grace Cathedral lighting environment remapped to be the same resolution and in the same longitude-latitude coordinate mapping as the light stage data set. For each image of the face in the data set, the resampled light probe indicates the color and intensity of the light from the environment in the corresponding direction. Thus, we can multiply the red, green, and blue color channels of each light stage image by the amount of red, green, and blue light in the corresponding direction in the lighting environment to obtain the modulated data set that appears in Figure 6.30d. Figure 6.31a shows the result of summing all of the scaled images in Figure 6.30d, producing an image of the subject as he would appear illuminated by the light of Grace Cathedral. Results obtained for three more lighting environments are shown in Figure 6.31b-d.
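The multiply-and-sum step just described can be written compactly. The following is a minimal sketch, not the code used to produce the figures: the function name `relight`, the array layout, and the optional `solid_angle` weighting are assumptions made for illustration, and the light probe is assumed to have already been resampled to one RGB value per light stage direction.

```python
import numpy as np

def relight(light_stage_images, env_rgb, solid_angle=None):
    """Sum light stage images weighted by the environment's color.

    light_stage_images: (N, H, W, 3) linear images, one per lighting direction.
    env_rgb: (N, 3) environment color resampled to the same N directions.
    solid_angle: optional (N,) per-direction weights (a hypothetical extra,
        useful if the directions do not cover equal areas of the sphere).
    """
    images = np.asarray(light_stage_images, dtype=np.float64)
    weights = np.asarray(env_rgb, dtype=np.float64)
    if solid_angle is not None:
        weights = weights * np.asarray(solid_angle, dtype=np.float64)[:, None]
    # Scale the R, G, B channels of image n by weights[n], then sum over n.
    return np.einsum("nhwc,nc->hwc", images, weights)

# Synthetic stand-in data set: 6 directions, 2x2 pixels (assumption).
rng = np.random.default_rng(1)
images = rng.random((6, 2, 2, 3))

# An environment with white light from direction 2 only returns image 2.
env_single = np.zeros((6, 3))
env_single[2] = (1.0, 1.0, 1.0)
relit_single = relight(images, env_single)

# A uniform warm environment tints the sum of all the images.
warm = np.array([1.0, 0.8, 0.6])   # hypothetical color
relit_warm = relight(images, np.tile(warm, (6, 1)))
```

For a full-resolution data set the same sum applies unchanged; only the array sizes grow, which is why the data access cost dominates the computation.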

Figure 6.31. Renderings of the light stage data set from Figure 6.30 as illuminated by four image-based lighting environments. (a) Grace Cathedral. (b) Eucalyptus Grove. (c) Uffizi Gallery. (d) St. Peter's Basilica.

The process of computing the weighted sum of the face images is a simple computation, but it requires accessing hundreds of megabytes of data for each rendering. The process can be accelerated by performing the computation on compressed versions of the original images. In particular, if the images are compressed using an orthonormal transform such as the discrete cosine transform, the linear combination of the images can be computed directly from the basis coefficients of the original images as shown in [25]. The Face Demo program written by Chris Tchou and Dan Maas (Figure 6.32) uses DCT-compressed versions of the face data sets to allow interactive face relighting with either light probe images or user-controlled light sources in real time. More recent work has used spherical harmonics [24, 23] and wavelets [22] to perform real-time image-based lighting on virtual light stage data sets of 3D computer graphics models.
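The reason the sum can be computed on transform coefficients is that the transform is linear: the DCT of a weighted sum of images equals the weighted sum of their DCTs. The sketch below checks this numerically on small single-channel images. It is an illustration only, not the method of [25] or the Face Demo implementation: the helper `dct_matrix`, the image sizes, and the weights are assumptions, and the actual compression step (quantizing or discarding small coefficients, which is where the savings come from) is omitted.

```python
import numpy as np

def dct_matrix(n):
    """Orthonormal DCT-II matrix C of size n x n, so that C @ C.T = I."""
    k = np.arange(n)[:, None]   # frequency index (rows)
    x = np.arange(n)[None, :]   # sample index (columns)
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * x + 1) * k / (2 * n))
    c[0, :] = np.sqrt(1.0 / n)  # the constant basis row has its own scale
    return c

# Synthetic stand-in data: 5 single-channel images of 8x8 pixels (assumption).
rng = np.random.default_rng(2)
images = rng.random((5, 8, 8))
weights = rng.random(5)         # one lighting weight per image

C_h = dct_matrix(images.shape[1])
C_w = dct_matrix(images.shape[2])

# 2D DCT of every image at once: C_h @ image @ C_w.T for each image.
coeffs = C_h @ images @ C_w.T

# Weighted sum computed on the coefficients, followed by one inverse DCT.
combined_coeffs = np.tensordot(weights, coeffs, axes=1)
relit_from_coeffs = C_h.T @ combined_coeffs @ C_w

# Reference: the same weighted sum computed directly on the pixels.
relit_direct = np.tensordot(weights, images, axes=1)
```

In this sketch `relit_from_coeffs` and `relit_direct` agree to floating-point precision. With real compressed data the agreement would only be approximate, to the extent that coefficients have been discarded or quantized.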

Figure 6.32. The Face Demo program written by Chris Tchou and Dan Maas allows light stage data sets to be interactively reilluminated using DCT-compressed data. The program is available at http://www.debevec.org/FaceDemo/.

The face renderings in Figure 6.31 are highly realistic and require no use of 3D models or recovered reflectance properties. However, the image summation technique that produced them works only for still images of the face. One of our group's current projects involves capturing light stage data sets of people in different expressions and from different angles, and blending between these expressions and viewpoints to create 3D animated faces. For this project, we have built a second light stage device that uses a rotating semicircular arm of strobe lights to capture a 500-image data set in less than 10 seconds. We have also used the device to record the reflectance properties of various cultural artifacts, as described in [16].

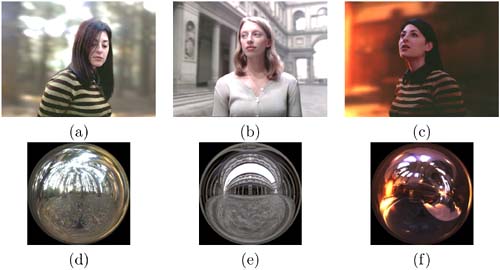

Our most recent lighting apparatus, Light Stage 3, is a lighting reproduction system consisting of a full sphere of color LED light sources [10]. Each of the 156 light sources can be independently driven to any RGB color, which allows a captured lighting environment to be approximately reproduced in a laboratory or studio. Figure 6.33 shows a subject standing within Light Stage 3 illuminated by a reproduction of the Grace Cathedral lighting environment. Figure 6.34 shows three subjects rendered into three different lighting environments. In these renderings, the subject has been composited onto an image of the background environment using a matte obtained from an infrared matting system.

Figure 6.33. Light Stage 3. A subject is illuminated by a real-time reproduction of the light of Grace Cathedral.

Figure 6.34. Light Stage 3 composite images. (a-c) Live-action subjects composited into three different environments using Light Stage 3 to reproduce the environment's illumination on the subject. (d-f) Corresponding lighting environments used in the composites. The subjects were composited over the backgrounds using an infrared compositing system [10].