Now, let's take a quick look at our application. You should have the sample solution loaded into Microsoft Visual Studio:

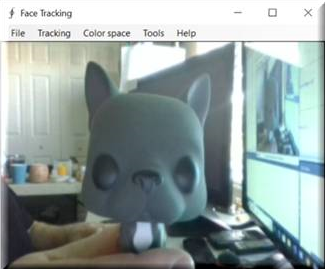

And here's a look at our sample application running. Say hi to Frenchie, everyone!

As you can see, we have a very simple screen that is dedicated to our video capture device. In this case, the laptop camera is our video capture device. Frenchie is kindly posing in front of the camera for us, and as soon as we enable facial tracking, look what happens:

Frenchie's facial features are now being tracked. Surrounding Frenchie, you can see the tracking containers (white boxes) and our angle detector (red line). As we move Frenchie around, the tracking container and angle detector follow him. That's all well and good, but what happens if we enable facial tracking on a real human face? As you can see in the following screenshot, the tracking containers and angles track the face of our guest poser, just as they did for Frenchie:

As our poser moves his head from side to side, the camera tracks this, and you can see the angle detectors adjusting to what they recognize as the angle of the face. You will also notice that this image is in black and white rather than color. This is a histogram back projection view, and it is an option that you can change.
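The black-and-white view makes more sense once you know what back projection does: every pixel is replaced by how common its value is in the region being tracked, so face-like pixels turn bright and everything else goes dark. The following is a minimal sketch under simplifying assumptions (a grayscale `byte[row, column]` image, a fixed number of bins, and a made-up `BackProjectionSketch` class); it is not the code Accord.NET uses, and real trackers typically build the histogram from color (for example, hue) instead of gray levels.

```csharp
using System;

// Illustrative histogram back projection on an 8-bit grayscale image.
// BackProjectionSketch is a hypothetical name, not an Accord.NET type.
public static class BackProjectionSketch
{
    public static byte[,] BackProject(byte[,] img, int roiX, int roiY, int roiW, int roiH, int bins = 16)
    {
        int h = img.GetLength(0), w = img.GetLength(1);

        // Map a pixel value (0..255) to a histogram bin (0..bins-1).
        int Bin(byte v) => v * bins / 256;

        // 1. Build the model histogram from the region being tracked.
        var hist = new int[bins];
        for (int y = roiY; y < roiY + roiH; y++)
            for (int x = roiX; x < roiX + roiW; x++)
                hist[Bin(img[y, x])]++;

        int max = 0;
        foreach (int count in hist)
            max = Math.Max(max, count);

        // 2. Replace every pixel with how common its bin is in the model,
        //    scaled so the most common bin becomes 255 (white).
        var result = new byte[h, w];
        for (int y = 0; y < h; y++)
            for (int x = 0; x < w; x++)
                result[y, x] = max == 0 ? (byte)0 : (byte)(255 * hist[Bin(img[y, x])] / max);

        return result;
    }
}
```

Pixels whose values never appear in the tracked region come out black, which is why the background drops away in the back projection view.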

Even as we move farther away from the camera and other objects come into view, the facial detector can keep track of our face among the noise. This is the same basic idea behind the facial recognition systems you see in movies, albeit in a much simpler form, and within minutes you can be up and running with your own face detection and tracking application too!

Now that we've seen the outside, let's look under the hood at what is going on.

We need to ask ourselves exactly what problem we are trying to solve here. Well, we are trying to detect (notice I did not say recognize) faces in images. While this is easy for a human, a computer needs very detailed instructions to accomplish this feat. Luckily for us, there is a very famous algorithm called the Viola-Jones algorithm that will do the heavy lifting for us. Why did we pick this algorithm?

- A very high detection rate with very few false positives.

- Fast enough for real-time processing.

- Very good at distinguishing faces from non-faces. Detecting faces is the first step in facial recognition.

This algorithm requires that the camera have a full-frontal, upright view of the face. To be detected, the face needs to point straight at the camera: not tilted, and not looking up or down. Remember, for the moment, we are only interested in facial detection.

To delve into the technical side of things, our algorithm will require four stages to accomplish its job. They are:

- Haar feature selection

- Creating an integral image

- AdaBoost training

- Cascading classifiers
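The first two stages fit together: a Haar feature is the difference between the pixel sums of adjacent rectangles, and the integral image makes each of those sums cost only four array lookups, no matter how large the rectangle is. Here is a minimal sketch, assuming a grayscale image stored as `byte[row, column]`; `HaarSketch` and its methods are illustrative names, not Accord.NET types.

```csharp
using System;

// Illustrative integral image and two-rectangle Haar feature.
// HaarSketch is a hypothetical name, not an Accord.NET type.
public static class HaarSketch
{
    // ii[y, x] holds the sum of all pixels above and to the left of (x, y).
    // The extra zero row and column remove the need for bounds checks.
    public static long[,] IntegralImage(byte[,] img)
    {
        int h = img.GetLength(0), w = img.GetLength(1);
        var ii = new long[h + 1, w + 1];
        for (int y = 1; y <= h; y++)
        {
            long rowSum = 0;
            for (int x = 1; x <= w; x++)
            {
                rowSum += img[y - 1, x - 1];
                ii[y, x] = ii[y - 1, x] + rowSum;
            }
        }
        return ii;
    }

    // Sum of the pixels in the rectangle (x, y, width, height):
    // four lookups regardless of the rectangle's size.
    public static long RectSum(long[,] ii, int x, int y, int width, int height)
    {
        return ii[y + height, x + width] - ii[y, x + width]
             - ii[y + height, x] + ii[y, x];
    }

    // Two-rectangle feature: lower half minus upper half. A large positive
    // value suggests a dark band (eyes) above a bright band (cheeks).
    public static long TwoRectFeature(long[,] ii, int x, int y, int width, int height)
    {
        int half = height / 2;
        long upper = RectSum(ii, x, y, width, half);
        long lower = RectSum(ii, x, y + half, width, half);
        return lower - upper;
    }
}
```

Because `RectSum` runs in constant time, a feature that spans 400 pixels costs the same to evaluate as one that spans 4, which matters when many features must be tested on every search window.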

We must start by stating that all human faces share some similar properties, such as the eye region being darker than the upper cheeks, the bridge of the nose being brighter than the eyes, and the forehead often being lighter than the rest of the face. Our algorithm matches these up by using what are known as Haar features. We can come up with matchable facial features by looking at the location and size of the eyes, mouth, bridge of the nose, and so forth. However, here's our problem.

In a 24x24 pixel window, there are a total of 162,336 possible features. Obviously, trying to evaluate them all would be prohibitively expensive, if it would work at all. So, we are going to use a technique known as adaptive boosting, or more commonly, AdaBoost. It's another one for your buzzword list; you've heard it everywhere and perhaps even read about it. Our learning algorithm will use AdaBoost to select the best features and train classifiers to use them. Let's stop and talk about it for a moment.
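The 162,336 figure can be reproduced by counting, assuming the five basic Haar feature shapes (two-rectangle horizontal and vertical, three-rectangle horizontal and vertical, and four-rectangle). Each shape is scaled by every integer multiple that still fits in the window and then slid to every position. `FeatureCount` is an illustrative name.

```csharp
using System;

// Counts every position and scale of the five basic Haar feature shapes
// inside a square detection window. FeatureCount is a hypothetical name.
public static class FeatureCount
{
    // Base shapes as (width, height) in cells.
    private static readonly (int W, int H)[] Shapes =
    {
        (2, 1), (1, 2), (3, 1), (1, 3), (2, 2)
    };

    public static long Count(int window)
    {
        long total = 0;
        foreach (var (bw, bh) in Shapes)
        {
            // Scale the shape by every integer multiple that still fits...
            for (int w = bw; w <= window; w += bw)
                for (int h = bh; h <= window; h += bh)
                    // ...and slide it to every position inside the window.
                    total += (long)(window - w + 1) * (window - h + 1);
        }
        return total;
    }
}
```

`FeatureCount.Count(24)` returns 162,336, matching the figure above, and the count grows quickly with the window size, which is why the detector needs a way to pick only the most useful features.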

AdaBoost can be used with many types of learning algorithms and is often considered one of the best out-of-the-box classifiers for many tasks. You usually won't notice how good and fast it is until you switch to another algorithm and time it. I have done this countless times, and I can tell you the difference is very noticeable.

Boosting takes the outputs of other, weak learning algorithms (weak learners) and combines them into a weighted sum that is the final output of the boosted classifier. The adaptive part of AdaBoost comes from the fact that subsequent learners are tweaked in favor of those instances that were misclassified by previous classifiers. We must be careful with our data preparation, though, as AdaBoost is sensitive to noisy data and outliers (remember how we stressed those in Chapter 1, A Quick Refresher). Because it keeps increasing the weight of hard-to-classify samples, it can overfit noisy data more than some other algorithms, which is why in our earlier chapters we stressed data preparation for missing data and outliers. In the end, as long as each weak learner performs better than random guessing, AdaBoost can be a valuable addition to our process.
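To see the adaptive part in action, here is a minimal sketch of textbook discrete AdaBoost using one-dimensional decision stumps. It is not Accord.NET's implementation; in Viola-Jones, each stump would threshold a single Haar feature value, whereas here the feature is just a `double`. `Stump` and `AdaBoostSketch` are illustrative names.

```csharp
using System;
using System.Linq;

// A weak learner: a single threshold on one feature value.
public sealed class Stump
{
    public double Threshold;
    public int Polarity;   // +1: predict +1 when x >= Threshold; -1: the reverse
    public double Alpha;   // vote weight assigned by AdaBoost

    public int Predict(double x) => Polarity * (x >= Threshold ? 1 : -1);
}

// Hypothetical helper class, not an Accord.NET type.
public static class AdaBoostSketch
{
    // xs: feature values; ys: labels in {-1, +1}.
    public static Stump[] Train(double[] xs, int[] ys, int rounds)
    {
        int n = xs.Length;
        var weights = Enumerable.Repeat(1.0 / n, n).ToArray();
        var learners = new Stump[rounds];

        for (int r = 0; r < rounds; r++)
        {
            // Pick the stump with the lowest weighted error.
            Stump best = null;
            double bestErr = double.MaxValue;
            foreach (double t in xs)
                foreach (int p in new[] { 1, -1 })
                {
                    var s = new Stump { Threshold = t, Polarity = p };
                    double err = 0;
                    for (int i = 0; i < n; i++)
                        if (s.Predict(xs[i]) != ys[i]) err += weights[i];
                    if (err < bestErr) { bestErr = err; best = s; }
                }

            // More accurate learners get a bigger vote; the clamp avoids log(0).
            double e = Math.Min(Math.Max(bestErr, 1e-10), 1 - 1e-10);
            best.Alpha = 0.5 * Math.Log((1 - e) / e);

            // The adaptive step: raise the weight of misclassified samples.
            double sum = 0;
            for (int i = 0; i < n; i++)
            {
                weights[i] *= Math.Exp(-best.Alpha * ys[i] * best.Predict(xs[i]));
                sum += weights[i];
            }
            for (int i = 0; i < n; i++) weights[i] /= sum;

            learners[r] = best;
        }
        return learners;
    }

    // Final classifier: sign of the weighted sum of weak-learner votes.
    public static int Classify(Stump[] learners, double x) =>
        learners.Sum(s => s.Alpha * s.Predict(x)) >= 0 ? 1 : -1;
}
```

With `xs = { 1, 2, 3, 4, 5, 6 }` and `ys = { 1, 1, -1, -1, 1, 1 }`, no single threshold separates the classes, so a one-round model misclassifies the middle points. Three rounds of boosting combine three stumps that classify all six points correctly.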

With that brief description behind us, let's look under the covers at what's happening. For this example, we will again use the Accord framework and we will work with the Vision Face Tracking sample. You can download the latest version of this framework from its GitHub location: https://github.com/accord-net/framework.

We start by creating a FaceHaarCascade object. This object holds the cascade's stages, each of which is a group of weak classifiers built on Haar-like features. The cascade provides many stages, each containing a set of classifier trees that are used in the decision-making process, so technically we are now working with decision trees. The beauty of the Accord framework is that FaceHaarCascade automatically creates all these stages and trees for us without exposing us to the details.

Let's take a look at what a particular stage might look like:

```csharp
// Requires: using System.Collections.Generic; using Accord.Vision.Detection;
List<HaarCascadeStage> stages = new List<HaarCascadeStage>();
List<HaarFeatureNode[]> nodes;
HaarCascadeStage stage;

// Stage 0; the constructor argument is the stage threshold
stage = new HaarCascadeStage(0.822689414024353);
nodes = new List<HaarFeatureNode[]>();

// Each tree here is a single node: threshold, left value, right value,
// then weighted rectangles given as { x, y, width, height, weight }
nodes.Add(new[] { new HaarFeatureNode(0.004014195874333382, 0.0337941907346249, 0.8378106951713562,
    new int[] { 3, 7, 14, 4, -1 }, new int[] { 3, 9, 14, 2, 2 }) });
nodes.Add(new[] { new HaarFeatureNode(0.0151513395830989, 0.1514132022857666, 0.7488812208175659,
    new int[] { 1, 2, 18, 4, -1 }, new int[] { 7, 2, 6, 4, 3 }) });
nodes.Add(new[] { new HaarFeatureNode(0.004210993181914091, 0.0900492817163467, 0.6374819874763489,
    new int[] { 1, 7, 15, 9, -1 }, new int[] { 1, 10, 15, 3, 3 }) });

// Attach the trees to the stage and add the stage to the cascade
stage.Trees = nodes.ToArray();
stages.Add(stage);
```

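As you can see, under the hood we are building a set of small decision trees: each stage receives its trees, and each tree's node carries the numeric values for its feature. In this excerpt, every tree consists of a single node, that is, a decision stump.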
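The numbers in that listing make more sense once you see how a stage could use them. The sketch below assumes the usual boosted-cascade rule: each node votes its left or right value depending on whether the feature value falls below the node threshold, the stage passes if the summed votes reach the stage threshold, and a window counts as a face only if it passes every stage. `SketchNode`, `SketchStage`, and `CascadeSketch` are hypothetical types, not the Accord.NET classes, and the feature values are passed in precomputed (a real detector computes them from the integral image and normalizes them).

```csharp
using System;

// Hypothetical single-node tree (decision stump).
public sealed class SketchNode
{
    public double Threshold, LeftValue, RightValue;

    // Pick one of two votes depending on the feature value.
    public double Vote(double featureValue) =>
        featureValue < Threshold ? LeftValue : RightValue;
}

// Hypothetical stage: a boosted group of stumps with one threshold.
public sealed class SketchStage
{
    public double Threshold;
    public SketchNode[] Nodes;

    // A stage passes when the summed votes reach its threshold.
    public bool Passes(double[] featureValues)
    {
        double sum = 0;
        for (int i = 0; i < Nodes.Length; i++)
            sum += Nodes[i].Vote(featureValues[i]);
        return sum >= Threshold;
    }
}

public static class CascadeSketch
{
    // A window is reported as a face only if it passes every stage.
    // Most non-face windows are rejected by the first, cheapest stages,
    // which is what makes the cascade fast.
    public static bool IsFace(SketchStage[] stages, double[][] featureValuesPerStage)
    {
        for (int s = 0; s < stages.Length; s++)
            if (!stages[s].Passes(featureValuesPerStage[s]))
                return false;   // early rejection
        return true;
    }
}
```

Plugging in the three nodes from the listing, a window whose feature values all exceed the node thresholds collects the right-hand votes (about 2.22) and passes the 0.82 stage threshold, while a window below every threshold collects only about 0.28 and is rejected.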

Once the cascade is created, we can use it to create our HaarObjectDetector, which is what we will use for detection. It takes:

- Our facial cascade object

- The minimum window size to use when searching for objects

- Our search mode; in our case, we are searching for only a single object

- The re-scaling factor to use when re-scaling our search window during the search

- The scaling mode; here, the search starts with the largest window and works down to smaller ones

```csharp
HaarCascade cascade = new FaceHaarCascade();

detector = new HaarObjectDetector(cascade, 25, ObjectDetectorSearchMode.Single, 1.2f,
    ObjectDetectorScalingMode.GreaterToSmaller);
```
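To get a feel for what the minimum size of 25 and the scaling factor of 1.2 mean, the sketch below lists the square window sizes a greater-to-smaller search might visit: it starts with the largest window that fits the frame and divides by the scaling factor until it drops below the minimum. This mirrors the idea only; it is not Accord.NET's code, and the library's exact rounding and stopping rules may differ. `ScaleSketch` is a hypothetical name.

```csharp
using System;
using System.Collections.Generic;

// Illustrative multi-scale search: window sizes from largest to smallest.
// ScaleSketch is a hypothetical name, not an Accord.NET type.
public static class ScaleSketch
{
    public static List<int> WindowSizes(int frameWidth, int frameHeight, int minSize, double scaleFactor)
    {
        var sizes = new List<int>();
        double size = Math.Min(frameWidth, frameHeight);   // largest window that fits
        while (size >= minSize)
        {
            sizes.Add((int)size);
            size /= scaleFactor;                           // shrink: greater to smaller
        }
        return sizes;
    }
}
```

For a 160x120 frame, `WindowSizes(160, 120, 25, 1.2)` yields nine sizes: 120, 100, 83, 69, 57, 48, 40, 33, and 27. A larger scaling factor means fewer sizes and a faster search, at the cost of possibly skipping over a face whose size falls between two steps.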

Once the detector is created, we are ready to tackle our video capture source. In our examples, we will simply use the local camera to capture all images. However, the Accord.NET framework makes it easy to use other sources for image capture, such as .avi files, Motion JPEG (MJPEG) streams, and so forth.

We connect to the camera, select the resolution, and are ready to go:

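```csharp
// Requires: using System.Linq; using Accord.Video.DirectShow;
// Helper (name is ours): prefer a 320x240-style capture mode, that is, one
// 240 pixels tall or 320 pixels wide; otherwise fall back to the last mode.
private static VideoCapabilities SelectResolution(VideoCaptureDevice device)
{
    // The null-conditional in a foreach does not prevent a
    // NullReferenceException, so check for a missing device explicitly
    if (device == null)
        return null;

    foreach (var cap in device.VideoCapabilities)
    {
        if (cap.FrameSize.Height == 240)
            return cap;

        if (cap.FrameSize.Width == 320)
            return cap;
    }

    return device.VideoCapabilities.LastOrDefault();
}
```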

With the application now running and our video source selected, our application will look like the following. Once again, enter Frenchie the bulldog! Please excuse the mess; Frenchie is not the tidiest of pets!

For this demonstration, you will notice that Frenchie is facing the camera, and in the background we have two 55-inch monitors, as well as many other items my wife likes to refer to as junk (we'll be proper and call it noise)! This is done to show how the face detection algorithm can distinguish Frenchie's face from everything else. If our detector cannot handle this, it is going to get lost in the noise and will be of little use to us.

With our video source now coming in, we need to be notified when a new frame is received so that we can process it, apply our markers, and so on. We do this by attaching a handler to the NewFrameReceived event of the video source player, as follows; .NET developers should be very familiar with this pattern:

```csharp
this.videoSourcePlayer.NewFrameReceived += new Accord.Video.NewFrameEventHandler(this.videoSourcePlayer_NewFrame);
```

Let's look at what happens each time we are notified that a new video frame is available.

The first thing that we need to do is downsample the image, which reduces the number of pixels the detector has to scan:

```csharp
// Shrink each incoming frame to 160x120 before running the detector
ResizeNearestNeighbor resize = new ResizeNearestNeighbor(160, 120);
UnmanagedImage downsample = resize.Apply(im);
```
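Nearest-neighbor resizing is about the cheapest way to shrink a frame: each output pixel simply copies one input pixel, with no interpolation. If the camera delivers 320x240 frames, for example, halving each dimension leaves the detector a quarter of the pixels to scan. The sketch below shows the idea on a grayscale `byte[row, column]` image; `NearestNeighborSketch` is a hypothetical name, and Accord.NET's `ResizeNearestNeighbor` filter does the real work on actual images.

```csharp
using System;

// Illustrative nearest-neighbor resize on a grayscale image.
// NearestNeighborSketch is a hypothetical name, not an Accord.NET type.
public static class NearestNeighborSketch
{
    public static byte[,] Resize(byte[,] src, int newWidth, int newHeight)
    {
        int srcH = src.GetLength(0), srcW = src.GetLength(1);
        var dst = new byte[newHeight, newWidth];
        for (int y = 0; y < newHeight; y++)
        {
            int sy = y * srcH / newHeight;          // source row for this output row
            for (int x = 0; x < newWidth; x++)
            {
                int sx = x * srcW / newWidth;       // source column for this output column
                dst[y, x] = src[sy, sx];            // copy one pixel, no interpolation
            }
        }
        return dst;
    }
}
```

The trade-off is some blockiness in the result, which does not matter much here because the small image is only used to find the face, not to display it.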

With the image at a more manageable size, we will process the frame. If we have not found a facial region, we stay in detection mode, waiting for a frame that has a detectable face. If we have found a facial region, we reset our tracker, locate the face, shrink the search window so that it excludes as much background as possible, initialize the tracker, and apply the marker window to the image. All of this is accomplished with the following code:

```csharp
// Assumed from earlier in the handler: regions holds the faces found in the
// downsampled frame, and xscale/yscale convert downsampled coordinates back
// to full-frame coordinates
if (regions != null && regions.Length > 0)
{
    tracker?.Reset();

    // Will track the first face found
    Rectangle face = regions[0];

    // Reduce the face size to avoid tracking background:
    // start from a 1x1 rectangle at the face center (full-frame coordinates)
    Rectangle window = new Rectangle(
        (int)((face.X + face.Width / 2f) * xscale),
        (int)((face.Y + face.Height / 2f) * yscale), 1, 1);

    window.Inflate((int)(0.2f * face.Width * xscale), (int)(0.4f * face.Height * yscale));

    if (tracker != null)
    {
        tracker.SearchWindow = window;
        tracker.ProcessFrame(im);
    }

    marker = new RectanglesMarker(window);
    marker.ApplyInPlace(im);

    args.Frame = im.ToManagedImage();

    tracking = true;
}
else
{
    detecting = true;
}
```
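The window arithmetic in that listing is easy to misread, so here it is isolated in a small helper. It maps the center of a face found in the downsampled frame back to full-resolution coordinates, then grows a 1x1 rectangle around it. Because `Inflate` grows each side by the given amount, the final window is roughly 40% of the face's width and 80% of its height, which keeps most of the background out of the tracker's view. `ShrinkSketch` and `TrackingWindow` are hypothetical names; only `System.Drawing.Rectangle` is a real .NET type.

```csharp
using System;
using System.Drawing;

// Hypothetical helper that repeats the window arithmetic from the listing.
public static class ShrinkSketch
{
    public static Rectangle TrackingWindow(Rectangle face, float xscale, float yscale)
    {
        // 1x1 rectangle at the face center, in full-resolution coordinates
        var window = new Rectangle(
            (int)((face.X + face.Width / 2f) * xscale),
            (int)((face.Y + face.Height / 2f) * yscale), 1, 1);

        // Inflate adds the amount to every side, so the width grows by
        // 2 * 0.2 = 40% of the scaled face width, the height by 80%
        window.Inflate((int)(0.2f * face.Width * xscale), (int)(0.4f * face.Height * yscale));
        return window;
    }
}
```

For example, a 40x40 face at (40, 30) in a 160x120 frame, scaled by 2 to a 320x240 frame, becomes an 80x80 face at (80, 60); the helper returns a 33x65 window at (104, 68), centered on that face.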

If a face was detected, our image frame now looks like the following:

If Frenchie tilts his head to the side, our image frame now looks like the following: