All features and labels need to be added to the schema as mentioned in step 1 and step 2. If we don't do that, then DataVec will throw runtime errors during data extraction/loading.

In the preceding screenshot, the runtime exception is thrown by DataVec because of unmatched count of features. This will happen if we provide a different value for input neurons instead of the actual count of features in the dataset.

From the error description, it is clear that we have only added 13 features in the schema, which ended in a runtime error during execution. The first three features, named Rownumber, Customerid, and Surname, are to be added to the schema. Note that we need to tag these features in the schema, even though we found them to be noise features. You can also remove these features manually from the dataset. If you do that, you don't have to add them in the schema, and, thus, there is no need to handle them in the transformation stage

For large datasets, you may add all features from the dataset to the schema, unless your analysis identifies them as noise. Similarly, we need to add the other feature variables such as Age, Tenure, Balance, NumOfProducts, HasCrCard, IsActiveMember, EstimatedSalary, and Exited. Note the variable types while adding them to schema. For example, Balance and EstimatedSalary have floating point precision, so consider their datatype as double and use addColumnDouble() to add them to schema.

We have two features named gender and geography that require special treatment. These two features are non-numeric and their feature values represent categorical values compared to other fields in the dataset. Any non-numeric features need to transform numeric values so that the neural network can perform statistical computations on feature values. In step 2, we added categorical variables to the schema using addColumnCategorical(). We need to specify the categorical values in a list, and addColumnCategorical() will tag the integer values based on the feature values mentioned. For example, the Male and Female values in the categorical variable Gender will be tagged as 0 and 1 respectively. In step 2, we added the possible values for the categorical variables in a list. If your dataset has any other unknown value present for a categorical variable (other than the ones mentioned in the schema), DataVec will throw an error during execution.

In step 3, we marked the noise features for removal during the transformation process by calling removeColumns().

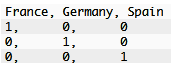

In step 4, we performed one-hot encoding for the Geography categorical variable.

Geography has three categorical values, and hence it will take the 0, 1, and 2 values after the transformation. The ideal way of transforming non-numeric values is to convert them to a value of zero (0) and one (1). It would significantly ease the effort of the neural network. Also, the normal integer encoding is applicable only if there exists an ordinal relationship between the variables. The risk here is we're assuming that there exists natural ordering between the variables. Such an assumption can result in the neural network showing unpredictable behavior. So, we have removed the correlation variable in step 6. For the demonstration, we picked France as a correlation variable in step 6. However, you can choose any one among the three categorical values. This is to remove any correlation dependency that affects neural network performance and stability. After step 6, the resultant schema for the Geography feature will look like the following:

In step 8, we created dataset iterators from the record reader objects. Here are the attributes for the RecordReaderDataSetIterator builder method and their respective roles:

- labelIndex: The index location in the CSV data where our labels (outcomes) are located.

- numClasses: The number of labels (outcomes) from the dataset.

- batchSize: The block of data that passes through the neural network. If you specify a batch size of 10 and there are 10,000 records, then there will be 1,000 batches holding 10 records each.

Also, we have a binary classification problem here, and so we used the classification() method to specify the label index and number of labels.

For some of the features in the dataset, you might observe huge differences in the feature value ranges. Some of the features have small numeric values, while some have very large numeric values. These large/small values can be interpreted in the wrong way by the neural network. Neural networks can falsely assign high/low priority to these features and that results in wrong or fluctuating predictions. In order to avoid this situation, we have to normalize the dataset before feeding it to the neural network. Hence we performed normalization as in step 9.

In step 10, we used DataSetIteratorSplitter to split the main dataset for a training or test purpose.

The following are the parameters of DataSetIteratorSplitter:

- totalNoOfBatches: If you specify a batch size of 10 for 10,000 records, then you need to specify 1,000 as the total number of batches.

- ratio: This is the ratio at which the splitter splits the iterator set. If you specify 0.8, then it means 80% of data will be used for training and the remaining 20% will be used for testing/evaluation.