Value Types (Structures)

Value types aren't as versatile as reference types, but they can provide better performance in many circumstances. The core value types (which include the majority of primitive types) are Boolean, Byte, Char, DateTime, Decimal, Double, Guid, Int16, Int32, Int64, SByte, Single, and TimeSpan. These are not the only value types, but rather the subset with which most Visual Basic developers consistently work. As you've seen, a value type holds its data directly rather than through a reference: a local variable of a value type stores its data on the stack, although a value type used as a field of a class is stored inline within that object on the heap.

Value types can also be referred to by their proper name: structures. The underlying principles and syntax of creating custom structures mirror those of creating classes, covered in the next chapter. This section focuses on some of the built-in types provided by the .NET Framework, in particular the built-in types known as primitives.

Boolean

The .NET Boolean type represents true or false. Variables of this type work well with the conditional statements that were just discussed. When you declare a variable of type Boolean, you can use it within a conditional statement directly. Test the following sample by creating a Sub called BoolTest within ProVB2012_Ch03 (code file: MainWindow.xaml.vb):

    Private Sub BoolTest()
        Dim blnTrue As Boolean = True
        Dim blnFalse As Boolean = False
        ' A Boolean can be used directly as the condition.
        If blnTrue Then
            TextBox1.Text = blnTrue & Environment.NewLine
            TextBox1.Text &= blnFalse.ToString()
        End If
    End Sub

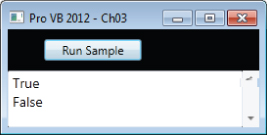

The results of this code are shown in Figure 3.4. Beyond the Boolean logic, a couple of things in the preceding code sample deserve review, both related to the update of TextBox1.Text. First, because you want two lines of text, you need to embed a line break into the text. There are two ways of doing this in Visual Basic. The first is to use the Environment.NewLine property, which is part of the core .NET Framework and returns the line-break sequence for the current platform. Alternatively, you may find a Visual Basic developer using the Visual Basic–specific constant vbCrLf (a carriage return followed by a line feed), which produces the same result on Windows.

Figure 3.4 Boolean results

The second issue related to that line is that you are concatenating the implicit value of the variable blnTrue with the value of Environment.NewLine. Note the use of an ampersand (&) for this action. This is a best practice in Visual Basic because, while Visual Basic does overload the plus (+) sign to support string concatenation, in this case the items being concatenated aren't necessarily strings. This matters because Option Strict has not been set to On. In that scenario, the system looks at the actual types of the operands, and if there were two integers side by side in your string concatenation you would get unexpected results: the code would process the + first and add the values numerically.

Thus, since neither you nor the sample download code has set Option Strict to On for this project, if you replace the preceding & with a +, you'll get a runtime conversion error (an InvalidCastException) in your application, because Visual Basic tries to convert the new-line string to a number. Therefore, in production code it is best practice to always use & to concatenate strings in Visual Basic unless you are certain that both sides of the concatenation will always be strings. However, neither of these issues directly affects the use of the Boolean values, which, when concatenated this way, provide their ToString() output (True or False), not a numeric value.
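To see the difference between & and + outside the WPF window, the following console sketch can be compiled on its own. It assumes Option Strict Off, matching the chapter's project, and the module name ConcatenationDemo is hypothetical. It shows & joining two Integers as text, + adding them, + converting a numeric String to Double, and the same rule failing at run time on the Boolean-plus-new-line case described above.

```vb
Option Strict Off

Imports System

Module ConcatenationDemo
    Sub Main()
        Dim a As Integer = 1
        Dim b As Integer = 2

        ' & always concatenates: both operands are converted to String.
        Console.WriteLine(a & b)      ' 12
        ' + between two Integers is numeric addition.
        Console.WriteLine(a + b)      ' 3
        ' With Option Strict Off, + between a String and a number converts the String to Double.
        Console.WriteLine("1" + b)    ' 3

        ' The same conversion fails at run time when the String is not numeric.
        Try
            Console.WriteLine(True + Environment.NewLine)
        Catch ex As InvalidCastException
            Console.WriteLine("InvalidCastException: " & ex.Message)
        End Try
    End Sub
End Module
```

Switching the first line to Option Strict On turns the last two + expressions into compile-time errors, which is usually preferable to discovering them at run time.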

Unfortunately, in the past developers had a tendency to tell the system to interpret a variable created as a Boolean as an Integer. This is referred to as implicit conversion and is related to Option Strict. It is not a best practice, and when .NET was introduced, it caused issues for Visual Basic, because the integer representation of True in other languages doesn't match that of Visual Basic.

Within Visual Basic, True has been implemented so that when it is converted to an integer, Visual Basic converts it to -1 (negative one). This is different from other languages, which typically use the integer value 1. Generally, languages agree on converting False to 0 and True to a nonzero value.

To create reusable code, it is always better to avoid implicit conversions. In the case of Booleans, if the code needs to check for an integer value, then you should explicitly convert the Boolean and create an appropriate integer. The code will be far more maintainable and less prone to unexpected results.
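The following console sketch contrasts the two built-in conversions with an explicit one. Visual Basic's CInt(True) returns -1, while the .NET Framework's Convert.ToInt32(True) returns 1, which is exactly the kind of mismatch that makes implicit conversions fragile. The helper name BoolToFlag is hypothetical; it simply states the intended mapping with the If operator.

```vb
Option Strict On

Imports System

Module BooleanConversionDemo
    ' Hypothetical helper: an explicit, self-documenting mapping of True to 1 and False to 0.
    Function BoolToFlag(value As Boolean) As Integer
        Return If(value, 1, 0)
    End Function

    Sub Main()
        Dim flag As Boolean = True
        ' Visual Basic's own conversion maps True to -1.
        Console.WriteLine(CInt(flag))             ' -1
        ' The .NET Framework Convert class maps True to 1.
        Console.WriteLine(Convert.ToInt32(flag))  ' 1
        ' Stating the mapping explicitly removes the ambiguity.
        Console.WriteLine(BoolToFlag(flag))       ' 1
    End Sub
End Module
```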

Integer Types

There are three sizes of integer types in .NET. The Short is the smallest (16 bits), the Integer represents a 32-bit value, and the Long type is an eight-byte, or 64-bit, value. In addition, each of these types has two alternatives: the corresponding .NET Framework structure name (Int16, Int32, or Int64) and an unsigned version (UInt16, UInt32, or UInt64). In all, Visual Basic supports the nine integer types described in Table 3.2.

Table 3.2 Visual Basic Integer Types
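Because each Visual Basic keyword is an alias for a .NET Framework structure, the ranges of these types can be read directly from their MinValue and MaxValue fields. The following console sketch (module name hypothetical) prints them using the Visual Basic keywords for the unsigned types (UShort, UInteger, ULong) and confirms that Integer and Int32 are the same type.

```vb
Option Strict On

Imports System

Module IntegerRanges
    Sub Main()
        ' Short = Int16, Integer = Int32, Long = Int64; the U-prefixed keywords are unsigned.
        Console.WriteLine("Short    {0} to {1}", Short.MinValue, Short.MaxValue)
        Console.WriteLine("UShort   {0} to {1}", UShort.MinValue, UShort.MaxValue)
        Console.WriteLine("Integer  {0} to {1}", Integer.MinValue, Integer.MaxValue)
        Console.WriteLine("UInteger {0} to {1}", UInteger.MinValue, UInteger.MaxValue)
        Console.WriteLine("Long     {0} to {1}", Long.MinValue, Long.MaxValue)
        Console.WriteLine("ULong    {0} to {1}", ULong.MinValue, ULong.MaxValue)

        ' The keyword and the structure name denote one and the same type.
        Console.WriteLine(GetType(Integer) Is GetType(Int32))  ' True
    End Sub
End Module
```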

Short

A Short value is limited to the maximum value that can be stored in two bytes, or 16 bits, so its value can range from –32768 to 32767. This limitation may or may not be based on the amount of memory physically associated with the value; it is a definition of what must occur in the .NET Framework. This is important, because there is no guarantee that the implementation will actually use less memory than when using an Integer value. It is possible that in order to optimize memory or processing, the runtime will allocate the same amount of physical memory used for an Integer type and then just limit the possible values.

The Short (or Int16) value type can be used to map SQL smallint values when retrieving data through ADO.NET.

Integer

An Integer is defined as a value that can be safely stored and transported in four bytes (not as a four-byte implementation). This gives the Integer and Int32 value types a range from –2147483648 to 2147483647. This range is adequate to handle most tasks.

Note that Int32 is a structure, not a class. In Visual Basic .NET, Integer is simply the language keyword for System.Int32, and it remains a 32-bit value on 64-bit platforms, so the two names refer to the same type. The main reason to use the Int32 designation in place of an Integer declaration is to make the size explicit at interfaces, where a mismatch causes problems: for example, when code that expects a 32-bit value exchanges data with an interface that uses a 64-bit value.

Unless you are working on an external interface that requires a 32-bit value, the best practice is to use Integer. Whichever name you choose, be consistent: if you use Int32, use it throughout your application.

The Visual Basic .NET Integer value type matches the size of an Integer value in SQL Server.

Long

Long is the Visual Basic keyword for the Int64 structure. A Long occupies eight bytes, which means that its value can range from –9223372036854775808 to 9223372036854775807. This is a big range, and it matters because adding or multiplying Integer values can produce a result too large for an Integer, so you need a larger type to contain it. It's common while doing math operations on one type of integer to use a larger type to capture the result if there's a chance that the result could exceed the limit of the types being manipulated.

The Long value type matches the bigint type in SQL.
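The widening advice above can be sketched as a small console program. It assumes Visual Basic's default integer overflow checking is in effect (it is unless a project removes integer overflow checks), so multiplying two Integer values whose product exceeds Integer.MaxValue raises an OverflowException. Converting one operand to Long first moves the whole multiplication into 64-bit arithmetic. The function name MultiplyWide is hypothetical.

```vb
Option Strict On

Imports System

Module WideningDemo
    ' Hypothetical helper: multiplies two Integers using Long arithmetic.
    Function MultiplyWide(a As Integer, b As Integer) As Long
        ' Converting one operand to Long makes the compiler use Long multiplication.
        Return CLng(a) * b
    End Function

    Sub Main()
        Dim x As Integer = 100000
        Try
            ' Integer * Integer is computed as an Integer; 10^10 does not fit in 32 bits.
            Dim product As Integer = x * x
            Console.WriteLine(product)
        Catch ex As OverflowException
            Console.WriteLine("Overflow: " & ex.Message)
        End Try

        Console.WriteLine(MultiplyWide(x, x))  ' 10000000000
    End Sub
End Module
```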

Unsigned Types

Another way to gain additional range on the positive side of an integer type is to use one of the unsigned types. The unsigned types provide a useful buffer for holding a result that slightly exceeds the positive range of the corresponding signed type, but this isn't the main reason they exist. The UInt16 type happens to have the same characteristics as the Char type, while the UInt32 type has the same characteristics as a system memory pointer on a 32-bit system.

However, never write code that attempts to leverage this relationship. Such code isn't portable, because on a 64-bit system a memory pointer is 64 bits wide and corresponds to UInt64 instead. Certain low-level drivers do use this type of knowledge to interface with software that expects these values, and the unsigned types are the underlying implementation for other value types. This is why, when you move from a 32-bit system to a 64-bit system, you need new drivers for your devices, and why applications shouldn't rely on the same type of logic. In application code, the unsigned types are useful when larger integers are needed and all values are known to be positive.
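The following console sketch shows the legitimate use described above: holding positive values beyond Integer.MaxValue. It also shows that converting a negative value into an unsigned type is checked and fails at run time, assuming the default overflow checking. The module name is hypothetical.

```vb
Option Strict On

Imports System

Module UnsignedDemo
    Sub Main()
        ' UInteger (UInt32) covers 0 to 4294967295, about twice Integer's positive range.
        Dim big As UInteger = CUInt(Integer.MaxValue) + 1UI
        Console.WriteLine(big)                ' 2147483648
        Console.WriteLine(UInteger.MaxValue)  ' 4294967295

        ' Negative values are outside the unsigned range, so the conversion fails.
        Dim negative As Integer = -1
        Try
            Dim bad As UInteger = CUInt(negative)
            Console.WriteLine(bad)
        Catch ex As OverflowException
            Console.WriteLine("-1 cannot be stored in a UInteger")
        End Try
    End Sub
End Module
```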

Decimal Types

Just as there are several types to store integer values, there are three implementations of value types to store real number values, shown in Table 3.3.

Table 3.3 Memory Allocation for Real Number Types

Single

The Single type contains four bytes of data, and its values can range from 1.401298E-45 to 3.402823E38 for positive values and from –3.402823E38 to –1.401298E-45 for negative values.

It can seem strange that a value stored using four bytes (like the Integer type) can hold a number larger than even the Long type can. This is possible because of the way in which real numbers are stored: a real number is kept as a limited number of significant digits plus an exponent, so its level of precision varies with its magnitude. Note that the limits of the Single type listed above have six digits after the decimal point; a Single holds only about seven significant decimal digits. When a real number gets very large or very small, the stored value is limited by those significant digits.
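The following console sketch (module name hypothetical; Console output stands in for the chapter's TextBox) makes this trade-off concrete. A Single's maximum is far larger than Long.MaxValue, yet storing the nine-digit Integer 123456789 in a Single rounds it to the nearest representable value, 123456792. The sketch compares integers rather than printing the Single itself, because the default string format of a Single differs between the .NET Framework and newer .NET versions.

```vb
Option Strict On

Imports System

Module SinglePrecisionDemo
    Sub Main()
        ' A Single can hold values far beyond Long.MaxValue...
        Console.WriteLine(CDbl(Single.MaxValue) > CDbl(Long.MaxValue))  ' True

        ' ...but it keeps only about seven significant decimal digits.
        Dim original As Integer = 123456789
        Dim s As Single = original       ' Integer to Single is a widening conversion
        Dim roundTrip As Integer = CInt(s)
        Console.WriteLine(roundTrip)              ' 123456792
        Console.WriteLine(roundTrip - original)   ' 3
    End Sub
End Module
```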

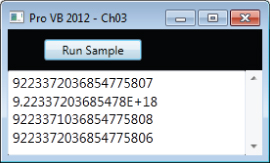

Because a real type holds far fewer significant digits than its maximum value has, it is possible to lose precision when working near the extremes. For example, while a Long can represent the value 9223372036854775805, the Single type rounds this value to 9.223372E18. This seems like a reasonable action to take, but it isn't reversible. The following code demonstrates how this loss of precision and data can result in errors. To run it, add a Sub called Precision to the ProVB2012_Ch03 project and call it from the Click event handler for the ButtonTest control (code file: MainWindow.xaml.vb):

    Private Sub Precision()
        Dim l As Long = Long.MaxValue
        Dim s As Single = Convert.ToSingle(l)
        TextBox1.Text = l & Environment.NewLine
        TextBox1.Text &= s & Environment.NewLine
        ' Equivalent to s = s - 1000000000000
        s -= 1000000000000
        l = Convert.ToInt64(s)
        TextBox1.Text &= l & Environment.NewLine
    End Sub

The code creates a Long that has the maximum value possible and outputs this value. Then it converts this value to a Single and outputs it in that format. Next, the value 1000000000000 is subtracted from the Single using the -= syntax, which is similar to writing s = s - 1000000000000. Finally, the code assigns the Single value back into the Long and outputs the resulting Long. The results, shown in Figure 3.5, probably aren't what you would expect.

Figure 3.5 Showing a loss of precision

The first thing to notice is how the output representation depends on the type. The Single value uses exponential notation instead of displaying all of the significant digits. More important, the value stored in the Single after the subtraction is not accurate compared with the result you would compute using the Long value. Therefore, both the Single and Double types have limitations in accuracy when you are doing math operations. These accuracy issues result from storage limitations and from the way binary numbers represent decimal numbers. To better address these issues for precise numbers, .NET provides the Decimal type.
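The effect of binary representation shows up even with small numbers. In the following console sketch (module name hypothetical), 0.1 has no exact binary representation, so adding it ten times as a Double does not produce exactly 1, whereas the same sum computed as a Decimal does.

```vb
Option Strict On

Imports System

Module BinaryFractionDemo
    Sub Main()
        Dim dSum As Double = 0
        Dim mSum As Decimal = 0

        For i As Integer = 1 To 10
            dSum += 0.1    ' 0.1 is stored as the nearest binary fraction
            mSum += 0.1D   ' 0.1D is stored exactly: the integer 1 with one decimal place
        Next

        Console.WriteLine(dSum = 1.0)  ' False
        Console.WriteLine(mSum = 1D)   ' True
    End Sub
End Module
```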

Double

The behavior of the previous example changes if you replace the value type Single with Double. A Double uses eight bytes to store values, and as a result has greater precision and range. The range for a Double is from 4.94065645841247E-324 to 1.79769313486232E308 for positive values and from –1.79769313486232E308 to –4.94065645841247E-324 for negative values. The precision has increased such that a number can contain 15 significant digits before rounding begins. This greater level of precision makes the Double value type a much more reliable variable for use in math operations, and many (though not all) operations can be represented with complete accuracy. To test this, change the sample code from the previous section so that instead of declaring the variable s as a Single you declare it as a Double (the listing renames it d), and rerun the code. Don't forget to also change the conversion call from ToSingle to ToDouble. The resulting code is shown here with the Sub called PrecisionDouble (code file: MainWindow.xaml.vb):

    Private Sub PrecisionDouble()
        Dim l As Long = Long.MaxValue
        Dim d As Double = Convert.ToDouble(l)
        TextBox1.Text = l & Environment.NewLine
        TextBox1.Text &= d & Environment.NewLine
        d -= 1000000000000
        l = Convert.ToInt64(d)
        TextBox1.Text &= l & Environment.NewLine
        ' Integer arithmetic on the original constant is exact.
        TextBox1.Text &= Long.MaxValue - 1
    End Sub

The results shown in Figure 3.6 look very similar to those from the Single version, except that they are almost correct: the result, as you can see, is off by just 1. By contrast, the method closes by subtracting 1 from the original Long.MaxValue constant, demonstrating that a 64-bit integer can be modified by exactly one and the result is accurate. The problem isn't specific to .NET; it can be replicated in every major development language that uses binary floating-point types. Whenever you represent very large or very small numbers by eliminating the precision of the least significant digits, you lose that precision. To resolve this, .NET provides the Decimal type, which avoids this issue.

Figure 3.6 Precision errors with doubles

Decimal

The Decimal type is a hybrid that consists of a 12-byte (96-bit) integer value combined with a 32-bit value that holds the location of the decimal point (the scale) and the sign of the overall value. A Decimal value consumes 16 bytes in total and can store a maximum value of 79228162514264337593543950335. This value can then be manipulated by adjusting where the decimal point is located. For example, the maximum value while accounting for four decimal places is 7922816251426433759354395.0335. This is because a Decimal isn't stored as a traditional binary floating-point number, but as a 12-byte integer value together with the location of the decimal point, which can sit up to 28 digits from the right. This means that a Decimal does not round decimal fractions the way a Double does.
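Decimal.GetBits exposes this layout: it returns four Integer values, the first three holding the 96-bit integer and the fourth holding the scale (bits 16 to 23) and the sign (bit 31). The following console sketch (module name hypothetical) decodes the value -123.4567, whose integer part is 1234567 with a scale of 4.

```vb
Option Strict On

Imports System

Module DecimalLayoutDemo
    Sub Main()
        Dim value As Decimal = -123.4567D

        ' parts(0..2) hold the 96-bit integer; parts(3) holds the scale and sign.
        Dim parts As Integer() = Decimal.GetBits(value)
        Dim scale As Integer = (parts(3) >> 16) And &HFF
        Dim isNegative As Boolean = parts(3) < 0   ' bit 31 is the sign bit

        Console.WriteLine(parts(0))          ' 1234567
        Console.WriteLine(scale)             ' 4
        Console.WriteLine(isNegative)        ' True
        Console.WriteLine(Decimal.MaxValue)  ' 79228162514264337593543950335
    End Sub
End Module
```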

As a result of the way values are stored, the smallest nonzero magnitude that a Decimal supports is 0.0000000000000000000000000001 (1E-28). The location of the decimal point is stored separately, and the Decimal type also stores whether its value is positive or negative separately from the actual value. This means that the positive and negative ranges are exactly the same, regardless of the number of decimal places.

Thus, the system makes a trade-off whereby the need to store a larger number of decimal places reduces the maximum value that can be kept at that level of precision. This trade-off makes a lot of sense. After all, it's not often that you need to store a number with 15 digits on both sides of the decimal point, and for those cases you can create a custom class that manages the logic and leverages one or more Decimal values as its properties. If you again modify the sample code from the last couple of sections so that it uses a Decimal for the interim value and for the conversions to and from Long, you'll find that the results are accurate.
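As a console sketch of that modification (the module and the helper name SubtractViaDecimal are hypothetical, and Console output stands in for the TextBox in the chapter's project), the round trip through a Decimal looks like this. Because a Long's 19 digits fit within a Decimal's 28 to 29 significant digits, every step is exact.

```vb
Option Strict On

Imports System

Module PrecisionDecimalDemo
    ' Hypothetical helper: converts to Decimal, subtracts, and converts back to Long.
    Function SubtractViaDecimal(start As Long, amount As Long) As Long
        Dim m As Decimal = Convert.ToDecimal(start)  ' exact conversion
        m -= amount                                  ' Long widens to Decimal
        Return Convert.ToInt64(m)
    End Function

    Sub Main()
        Dim l As Long = SubtractViaDecimal(Long.MaxValue, 1000000000000L)
        Console.WriteLine(l)                                    ' 9223371036854775807
        ' Same subtraction done entirely in Long arithmetic, for comparison.
        Console.WriteLine(l = Long.MaxValue - 1000000000000L)   ' True
    End Sub
End Module
```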

Char and Byte

The default character set under Visual Basic is Unicode. Therefore, when a variable is declared as type Char, Visual Basic creates a two-byte value, because each Char holds a single 16-bit (UTF-16) Unicode code unit. Visual Basic supports the declaration of a character value in three ways: placing a c after a single-character string literal tells the compiler that the value should be treated as a Char, or the Chr and ChrW functions can be used. The following code snippet shows how all three of these options work similarly; the difference between Chr and ChrW is the range of valid input values. ChrW accepts a broader range of values because it works with wide (Unicode) character codes.

    Dim chrLtr_a As Char = "a"c
    Dim chrAsc_a As Char = Chr(97)
    Dim chrAsc_b As Char = ChrW(98)

To convert characters into a string suitable for an ASCII interface, the runtime library needs to validate each character's value to ensure that it is within a valid range. This could have a performance impact for certain serial arrays. Fortunately, Visual Basic supports the Byte value type. This type contains a value between 0 and 255, which covers the ASCII character set (0 to 127) as well as 8-bit extended character sets.

In Visual Basic, the Byte value type expects a numeric value. Thus, to assign the letter “a” to a Byte, you must use the appropriate character code. One option to get the numeric value of a letter is to use the Asc method, as shown here:

    Dim bytLtrA As Byte = CByte(Asc("a"))  ' Asc returns an Integer (97)
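When a whole string needs to become bytes for an ASCII interface, the .NET Framework's System.Text.Encoding.ASCII does the per-character conversion in one call. The following console sketch (module name hypothetical) shows both approaches; it relies on the documented behavior of this encoder, which substitutes "?" (byte 63) for characters outside the 0 to 127 range.

```vb
Option Strict On

Imports System
Imports System.Text

Module AsciiBytesDemo
    Sub Main()
        ' Asc returns an Integer; CByte makes the narrowing conversion explicit.
        Dim bytLtrA As Byte = CByte(Asc("a"c))
        Console.WriteLine(bytLtrA)                         ' 97

        ' Encoding.ASCII converts an entire string to bytes in one call.
        Dim bytes As Byte() = Encoding.ASCII.GetBytes("abc")
        Console.WriteLine(BitConverter.ToString(bytes))   ' 61-62-63 (hexadecimal)

        ' A character outside 0-127 (here é, code 233) is replaced with "?".
        Dim nonAscii As Byte() = Encoding.ASCII.GetBytes(ChrW(233).ToString())
        Console.WriteLine(nonAscii(0))                     ' 63
    End Sub
End Module
```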

DateTime

You can declare date values using either the DateTime or the Date type; Date is the Visual Basic keyword for the DateTime structure. Visual Basic also provides a set of shared methods that return some common dates. The concept of shared methods is described in more detail in the next chapter, which covers object syntax, but, in short, shared methods are available even when you don't create an instance of a class.

For the DateTime structure, the Now method returns a Date value with the local date and time. The UtcNow method works similarly but returns the date and time as Coordinated Universal Time (UTC, for most purposes the same as Greenwich Mean Time), while the Today method returns the current local date with a zero time value. You can use these shared methods to initialize your variables, as shown in the following code sample (code file: MainWindow.xaml.vb):

    Private Sub Dates()
        Dim dtNow = Now()
        Dim dtToday = Today()
        TextBox1.Text = dtNow & Environment.NewLine
        TextBox1.Text &= dtToday.ToShortDateString & Environment.NewLine
        TextBox1.Text &= DateTime.UtcNow() & Environment.NewLine
        ' Date literals are always written in month/day/year order.
        Dim dtString = #12/13/2009#
        TextBox1.Text &= dtString.ToLongDateString()
    End Sub

Running this code results in the output shown in Figure 3.7.

Figure 3.7 Date formats
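The shared members described above differ in more than their time zone. In the following console sketch (module name hypothetical), DateTime.Today has a zero time of day, and the Kind property records whether a value came from Now (Local) or UtcNow (Utc). ToString is called on Kind explicitly so that the enumeration name, rather than its underlying number, is printed.

```vb
Option Strict On

Imports System

Module DateKindDemo
    Sub Main()
        Dim todayValue As DateTime = DateTime.Today
        ' Today keeps only the date part: the time of day is midnight.
        Console.WriteLine(todayValue.TimeOfDay = TimeSpan.Zero)  ' True

        ' Kind records the time basis of each value.
        Console.WriteLine(DateTime.Now.Kind.ToString())          ' Local
        Console.WriteLine(DateTime.UtcNow.Kind.ToString())       ' Utc

        ' Date is the Visual Basic keyword for the same DateTime structure.
        Console.WriteLine(GetType(Date) Is GetType(DateTime))    ' True
    End Sub
End Module
```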

As noted earlier, primitive values can be assigned directly within your code, but many developers seem unaware of the format, shown previously, for doing this with dates. Another key feature of the Date type is the capability to subtract dates in order to determine the difference between them. The Subtract method is demonstrated later in this chapter, with the resulting TimeSpan object used to output the number of milliseconds between the start and end times of a set of commands.
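As a quick sketch of date subtraction using the literal format shown in the Dates sample (the module name and the two dates are illustrative), subtracting one Date from another yields a TimeSpan. The - operator and the Subtract method produce the same result.

```vb
Option Strict On

Imports System

Module DateDifferenceDemo
    Sub Main()
        ' Date literals use month/day/year order regardless of locale.
        Dim startDate As Date = #12/13/2009#
        Dim endDate As Date = #1/1/2010#

        ' Subtracting one Date from another yields a TimeSpan.
        Dim span As TimeSpan = endDate - startDate
        Console.WriteLine(span.Days)         ' 19
        Console.WriteLine(span.TotalHours)   ' 456

        ' Subtract is the method form of the same operation.
        Console.WriteLine(endDate.Subtract(startDate) = span)  ' True
    End Sub
End Module
```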