Chapter 2

Quality

“It's baby alpaca. You like?”

“No me gusta.” (I don't like it.)

“But it's very fine cloth. Very soft…come feeeeeel!” There's nothing like having dusty alpaca thrust in your face, seconds after you've summarily rejected it. I sprang from my crouched position in the market stall, narrowly escaping the blow.

“No necessitoooo!” (No, I don't need it!)

“Que buscas, papi?” (What are you looking for?)

“Buscando por algo mas antigua.” (I'm looking for something older…)

Temuco, Chile. No, no one was trying to sell me a baby alpaca, but I was in yet another street-side mercado (market) perusing textiles and trinkets amid cloistered, canopied stalls. The vendors were Mapuche, an Andean people famed for, among other things, the intricate textiles they've meticulously produced for more than a millennium. With my broken Spanish and their broken English, we bartered through the afternoon as I was shown one manta (Andean Spanish for poncho) after another, each woven from alpaca, wool, or cotton. To the Mapuche, “baby alpaca” is code for quality but, to me, it means only “you're going to pay a lot for this manta!”

“Ay otras mas antiguas, con diseños o figuras?” (Anything older, with designs or figures?) As I disapprovingly rolled my eyes, thumbing over and pulling at the poor stitching of the last manta I'd been handed, Maria realized she wasn't dealing with the average tourist. You see, I'm the manta ringer…

I'd been backpacking for months throughout Central and South America, touring textile museums in Cusco, La Paz, Santiago, Valparaiso, and elsewhere, and spending my days filming and interviewing indigenous artisans as they worked and wove, observing their techniques and learning how they value and distinguish textile quality.

“Baby alpaca?!”

I spun around, wincing at the tired phrase, but thankfully the attention of the manta-mongers had shifted to newly arrived gringos who immediately began exclaiming over the first poncho they were shown, “Oh, that's so soft!”

I audibly groaned. Suckers.

It was a machine-made poncho that definitely wasn't baby alpaca, would probably disintegrate when washed, and whose colors would definitely bleed—but they were satisfied and departed just as quickly as they came.

Maria returned to me with undivided attention and a small stack of antique mantas that had been recessed from view. Beautiful stitching, natural colors, intricate designs, historic figures: I'd finally found the high-quality textiles for which the Mapuche are famous and which had enticed me to Patagonia.

My pursuit of quality ponchos has remarkable similarities to the pursuit of software quality, excepting of course the distinction that I'm a user of ponchos but a producer of software. Several general tenets about quality can be inferred from the previous scenario:

- Quality, like beauty, is in the eye of the beholder, in that one person's quality may not be another's. Maria believed (or at least was trying to convey) that “baby alpaca” denoted quality, while I valued stitching and classic design. Software quality also doesn't represent a tangible construct that can be unambiguously identified when separated from stakeholder perspective or intent.

- Quality comprises many disparate characteristics, not all of which are valued by any one person. Characteristics can describe functionality, which first and foremost for a poncho is to keep you warm and dry, or can describe performance, such as durability over time or the ability to be washed without colors bleeding. Software quality models also include functional and performance dimensions that specify not only what the software should accomplish but also how well it should accomplish it. Just like a poorly stitched poncho, software can sometimes seem to meet functional needs, but unravel over time or under duress.

- Quality (or the lack thereof) isn't always recognized and, without an organized understanding of the product or product type, it may be impossible to accurately determine (and prioritize) quality. I had studied textiles extensively and had objective criteria against which I could measure quality, but the tourists I encountered were not similarly knowledgeable. Dozens of characteristics that describe software quality exist in quality models, and the extent to which these dimensions and their interactions are understood can provide a framework and vocabulary that facilitate a defensible, standardized assessment of quality against internationally recognized criteria.

- Quality standards should be measurable, in that while quality standards are beneficial, they are typically useless if they can't be measured and validated against a baseline. I can't distinguish baby alpaca fibers from adult alpaca fibers, so being told I'm paying a premium for something I can't even identify provides no value and is nonsensical. Software development similarly benefits from measurable requirements that not only guide development itself, but also enable demonstration of software quality to customers and stakeholders. Without measurable standards, how do SAS practitioners even know when they've completed their software or if it meets product intent? They don't!

- Quality isn't always being pursued, because it has inherent trade-offs. As the tourists were shopping, I actually did interject and try to lead them to a “higher quality”—at least in my mind—poncho, to which they politely explained their rationale. The poncho was for a child so durability and performance were not valued—they just needed something. Moreover, they were running to catch a bus and had only a minute to shop, thus expedience outweighed product quality. Trade-offs always exist in software development, and the decision to choose one construct over another should be intentional, not made haplessly or out of ignorance. Function and performance define quality but inherently compete for a stake therein. Software quality, moreover, competes with project schedule and project cost, so many development projects may have goals that outpace quality, or embrace requirements that reference only function but not performance. The decision to exclude dimensions of quality is often justified but should be made judiciously.

- The lack of quality incurs inherent risks, whether quality is negligently omitted or intentionally deprioritized. As the tourists were leaving, I hollered after them “Make sure you wash that thing in Woolite so it doesn't disintegrate!” I was alerting them to the risk posed by the threat of a rough washer that could exploit weaknesses (vulnerabilities) in their cheap poncho. Risks are inherent in all software development projects, but, especially where other constructs are prioritized over quality, developers should understand software vulnerabilities and the specific risks they pose. In this way, the benefits and business value of quality inclusion can be weighed against the risks of quality exclusion.

DEFINING QUALITY

In a general, nontechnical sense, quality can be defined as “how good or bad something is or a high level of value or excellence.”1 A tourist might exclaim “That's a quality poncho!” while having no knowledge of pre-Columbian textiles. In a technical sense, however, quality is always assessed with respect to needs or requirements. The Institute of Electrical and Electronics Engineers (IEEE) defines quality as “the degree to which a system, component, or process meets specified requirements.”2 The International Organization for Standardization (ISO) defines software quality as the “degree to which the software product satisfies stated and implied needs when used under specified conditions.”3 This technical distinction is critical to understanding the context in which quality is discussed throughout this text, since software quality cannot be evaluated without knowledge of the functional and performance intent of software. Quality cannot be judged in a vacuum.

An important aspect of software quality is its inclusion of both functional and performance requirements. Functional requirements specify the expected behaviors and objective of software, or what it does. Performance requirements (once termed nonfunctional requirements) specify characteristics or attributes of software operation, such as how well the expected behaviors are performed. For example, if SAS analytic software is designed to produce an HTML report, the content, accuracy, completeness, and formatting of that report would be specified as functional requirements, as would any data cleaning operations, transformations, or other processes. But the ability of the software to perform efficiently with big data (i.e., scalability) or the ability of the software to run across both Windows and UNIX environments (i.e., portability) instead demonstrate aspects of its performance.

To develop software effectively, SAS practitioners should understand the dimensions of quality that may be required, whether inferred through implied needs or prescribed through formal requirements. Quality begins during software planning and design, when needs are identified and technical requirements are specified. Without requirements, developers won't know whether they are developing a reliable, enduring product or an ephemeral solution intended to be run once by a single user for a noncritical system. Moreover, without an accepted software quality model that defines dimensions of quality and the ways in which they interact, it's difficult to demonstrate when software development has been completed and whether its ultimate intent was achieved.

Avoiding the Quality Trap

Quality gets bandied about so frequently in everyday conversation that it's important to differentiate its very specific definition and role within software development. In general, when you speak of quality in isolation, such as “that's a quality alpaca,” you're expressing appreciation for product or service excellence. And, similarly, when you discover a high-quality Thai restaurant, you want to dine there often because the drunken noodle is delicious! In this sense, quality can represent an isolated assessment of the food, or it could represent a comparison of the high-quality Thai food to that of other Thai restaurants whose food was not exceptional. Thus, in assessing or describing quality, a comparison may intrinsically exist, but may be inferred rather than specified outright.

As a software user, I find this comparative use of quality is common. For example, I use Gmail because it offers greater functionality and performance than other email applications. In terms of functionality, I appreciate the threaded emails, overall layout, and ability to thoroughly customize the environment. In terms of performance, the high-availability service is extremely reliable and I trust the security of the Gmail servers and infrastructure. But in exclaiming that Gmail is high quality, I'm inherently making a comparison between this email application and email applications that I've previously used that offered less functionality or performance. Thus, a software user often determines quality based on his needs and his requirements and possibly a comparison of how well one software application meets those needs as compared to another.

As a software developer, however, I recognize that software quality is consistently defined by organizations like ISO and IEEE, which specify that quality must always be assessed against needs or requirements, rather than in isolation or comparatively to other software. These definitions are in line with the view espoused in product and project management literature: that quality represents “the degree to which a set of inherent characteristics fulfill requirements.”4 Without knowledge of the intent with which software was created, the software function and performance can be described, but it's impossible to determine software quality—this is the quality trap to which we fall victim, due to the chasm between generalized and industry-specific usage and definitions of quality.

So if the term quality carries so much baggage, how are you supposed to assess software quality or even discuss it? How do you tell a coworker that his software sucks? Software quality models are recognized as an industry standard because of the organized, standardized nomenclature that fully describes software performance. The primary objective of this text is to demonstrate the ISO software product quality model, its benefits, its limitations, and its successful implementation into SAS data analytic development and into the lexicon with which we discuss software and software requirements.

A New Quality Vocabulary

To begin a rudimentary assessment of software quality, imagine the following SAS data set exists:

    data sample;
       length char1 $20 char2 $20;
       char1="I love SAS";
       char2="SAS loves me";
    run;

You're now asked to assess the quality of the following SAS code, which simulates a much larger data transformation module within extract-transform-load (ETL) or other software:

    data uppersample;
       set sample;
       char1=upcase(char1);
       char2=upcase(char2);
    run;

So is the code high quality, low quality, or somewhere in the middle? In other words, would you rehire the SAS practitioner who wrote it, or fire him for being a screwup? Therein lies the quality trap. Given ISO and IEEE quality definitions, it's impossible to know whether this represents high- or low-quality software without first understanding the objective, needs, and requirements that spawned it.

You subsequently find an email from a manager requesting that the previous code be developed:

At this point, you can understand the ultimate project need and can assess that the code is high quality because it meets all requirements and was developed quickly. The only functional requirement given was capitalization, and the only performance requirement that can be inferred is speed of processing, since the manager needed the code written and executed quickly. It's not fancy, but it works and delivers the business value specified by the customer.

But now imagine that the manager instead had sent the following alternative email to request the software:

This alternative requirements definition specifies software with identical functionality (at least when applied to the Sample data set), but is more dynamic and complex. The manager requested a modular solution, so a SAS macro probably should be created. Moreover, given that the macro requires that the data set and variable names be dynamic, the module should be able to be reused to perform the same function in other contexts and on future data sets. This would be of tremendous advantage if the same team later required hundreds of variables to be capitalized in a larger data set, which would take much longer to code manually.

Given the alternative, more complex requirements, the original transformation code could be assessed to be of poor quality. It meets the ultimate functional objective (all variables are capitalized), but fails to meet the performance requirements specifying that it be modular, dynamic, and reusable. The SAS solution that does meet these additional performance requirements is demonstrated in the “Reusability Principles” section in chapter 18, “Reusability.” That solution's added complexity also demonstrates that including quality characteristics tends to increase code complexity and development time, an inherent trade-off in software development.
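The chapter 18 solution is not reproduced here. As a rough illustration only, a modular, dynamic version of the capitalization step might resemble the following minimal sketch. The macro name UPCASE_ALL, its parameters DSNIN and DSNOUT, and the temporary variables _CVARS and _I are hypothetical names chosen for this example, and the sketch assumes that every character variable in the input data set should be capitalized.

```sas
/* Re-create the Sample data set so the sketch runs on its own */
data sample;
   length char1 $20 char2 $20;
   char1="I love SAS";
   char2="SAS loves me";
run;

/* Hypothetical macro: capitalizes every character variable in DSNIN */
/* and writes the result to DSNOUT (both parameter names are assumed) */
%macro upcase_all(dsnin=, dsnout=);
   data &dsnout;
      set &dsnin;
      /* _CHARACTER_ references all character variables defined so far */
      array _cvars {*} _character_;
      do _i = 1 to dim(_cvars);
         _cvars{_i} = upcase(_cvars{_i});
      end;
      drop _i;
   run;
%mend upcase_all;

%upcase_all(dsnin=sample, dsnout=uppersample);
```

Because the data set names are parameters and the variables are discovered at run time, the same call pattern could be pointed at a data set with hundreds of character variables. The sketch deliberately omits exception handling, such as a missing input data set or an existing variable named _I, which production software would likely need to address.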

Dimensions of Quality

Given the specific use of quality within software development circles, the need still arises to describe software that exhibits a high (or low) degree of quality characteristics (i.e., performance requirements), irrespective of software needs or requirements. For example, back to the original question: If I'm unaware of the email that the manager sent the developer prescribing software requirements, how do I describe the quality of the code in a vacuum?

If backed into a corner, I might describe the code as relatively low quality, essentially making a comparison between this code and theoretical code that could have been written having the same functionality. More likely, I might state that the code is not production software, essentially evaluating the code against theoretical requirements that would likely exist if the code underpinned critical infrastructure, had dependencies, had multiple users, or was expected to have a long lifespan. However, a tremendous benefit of embracing a software quality model is that you can discuss the performance of software without dragging poor old quality into the fray. For example, I can instead say that the code lacks stability, reusability, and modularity—which concisely evaluates the software irrespective of needs and requirements.

The lexicon established through the adoption of software quality models benefits development environments because the derived vocabulary is essential to software performance evaluation. Only with unambiguous technical requirements that implement consistent nomenclature can quality be incorporated and later measured in software. It's always more precise and useful to state that software “lacked modularity and reusability” than to vaguely assert only that it “lacked quality.” Even when stakeholders choose not to implement dimensions of software quality in specific products, they will have gained a recognized model and vocabulary about which to discuss quality and consider its inclusion.

Data Quality and Data Product Quality

Within SAS literature, when quality is referenced, it nearly always describes data quality and, to a lesser extent, the quality of data products such as analytic reports. In fact, in data analytic development, quality virtually never describes software or code quality. This contrasts sharply with traditional software development literature, which describes characteristics of software quality and the methods through which that quality can be designed and developed in software.

This shift in quality focus is somewhat expected, given that data analytic development environments usually consider a data product to be the ultimate product and source of business value, as contrasted with traditional software development environments in which software itself is the ultimate product. The misfortune is that by allowing data quality and data product quality to eclipse (or replace) code quality, data analytic development environments unnecessarily fail to incorporate performance requirements throughout the SDLC. Thus, code quality takes a backseat to data quality during requirements definitions, design, development, testing, acceptance, and operation.

If the software that undergirds data products lacks quality, though, how convincingly can those data products really be shown to demonstrate quality? To illustrate this all-too-common paradox, I'll borrow an analogy from a friend who, while working for the Department of Defense (DoD), encountered a three-star general who was adamant that some trendlines in a SAS-produced analytic report were “not purple enough.” He was focused on the quality of the data product, to the total exclusion of interest in the quality of the underlying software. Was the software reliable? Was it robust, or could it be toppled by known vulnerabilities? Was it extensible in that it could be easily modified and repurposed if the general had a tactical need that required subtle variation of the analysis? No, none of these questions about code quality were asked, only “Can you make this more purple?!”

This text doesn't aim to diminish the importance of data quality and data product quality. Rather, it places code quality on par with data quality, with the understanding that any house built upon a poor foundation will fail.

SOFTWARE PRODUCT QUALITY MODEL

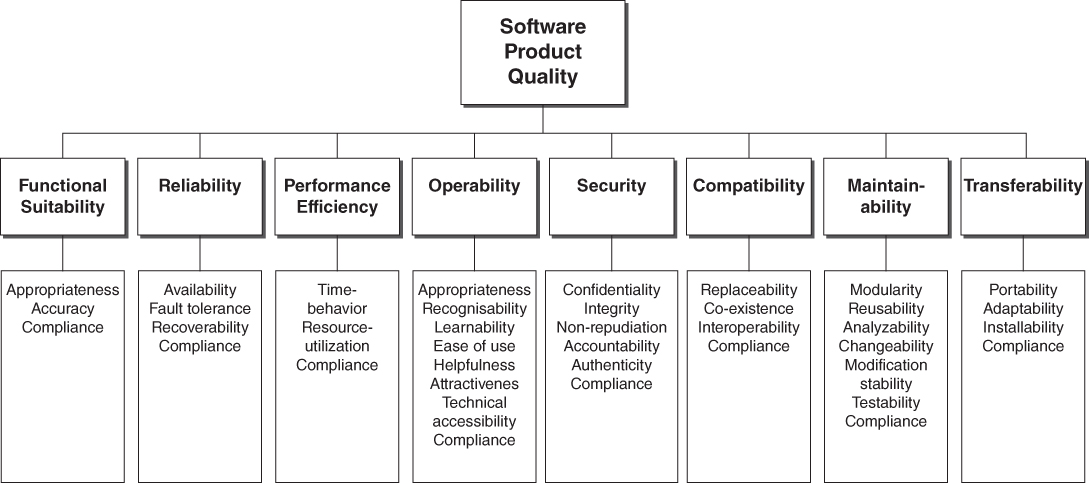

The software product quality model is a “defined set of characteristics, and of relationships between them, which provides a framework for specifying quality requirements and evaluating quality.”5 The ISO model, demonstrated in Figure 2.1, lists eight components and 38 subcomponents.

Figure 2.1 ISO Software Product Quality Model (reproduced from ISO/IEC 25000:2014).

An important distinction of the ISO model is that functionality is viewed as equivalent to seven other quality components. Because functionality varies by each specific SAS software product, functionality is not a focus of this text despite lying at the core of every software project. Thus, unless explicitly stated, all SAS code examples are assumed to meet software functional requirements. Many of the components and subcomponents of the software product quality model are described later in detail and represent individual chapters.

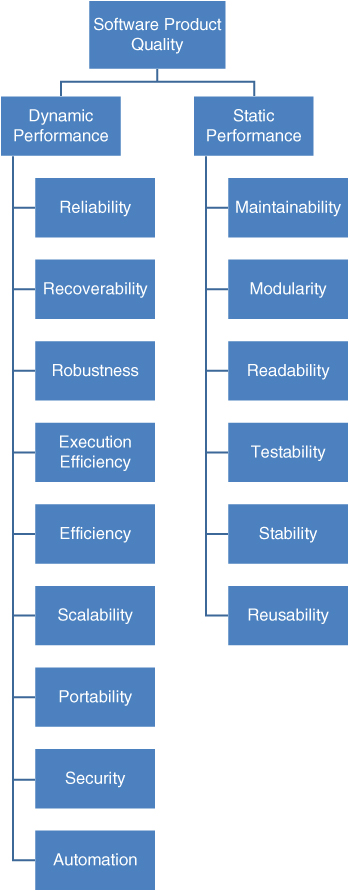

The software product quality model presented in Figure 2.1 differs slightly from but does not contradict the model inherently present in the organization of this text. The external quality dimensions, referenced as dynamic performance requirements, include reliability, recoverability, robustness, execution efficiency, efficiency, scalability, portability, security, and automation. The internal quality dimensions, referenced as static performance requirements, include maintainability, modularity, readability, testability, stability, and reusability. Figure 2.2 demonstrates the structure of this text as it fits within the ISO software product quality model.

Figure 2.2 Software Quality Model Demonstrated in Chapter Organization

Two quality dimensions—stability and automation—included in this text are not in the ISO model, so an explanation is warranted. Software stability describes software that resists maintenance and can be run without or with minimal modifications. In traditional software development environments, because software is produced for third-party users, developers have no access to their software once it has been tested, validated, and placed into operation. Thus, testing is valued because all defects and vulnerabilities should be eliminated before software is released. Failure to test software will necessitate that patches or updates are distributed to users, which can be costly and time-consuming and cause users to lose faith in software.

Base SAS software, unlike many software applications, can be run from an interactive mode in which the SAS application is opened manually, code is executed manually, and the log is reviewed manually. While sufficient for some purposes and environments, the interactive nature of SAS code encourages developers to continually modify code simply because they can, thus discouraging software stability. For this reason, an entire chapter distills the benefits of software stability, including its role as a prerequisite to code testing, reuse, extensibility, and automation.

Software automation describes software that can be run without (or with minimal) human intervention, and, in traditional software development, this is required because users expect to interact with an executable program rather than raw code they have to compile. The SAS interactive mode may be sufficient for executing some production software but, when SAS software must be reliably and regularly run, automation (and subsequent scheduling of batch jobs) can best achieve this objective. Automation of SAS software also includes spawning SAS sessions through software that improves performance through parallel processing.
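As a minimal sketch of spawning SAS sessions, the SYSTASK and WAITFOR statements can launch two batch sessions asynchronously from a parent session and then wait for both to finish. The program paths, log paths, and task names below are hypothetical, and the sketch assumes that the XCMD system option is enabled and that the `sas` command is available on the operating system path.

```sas
/* Hypothetical program and log paths; adjust to the local installation */
systask command "sas -sysin /home/user/etl_part1.sas -log /home/user/etl_part1.log"
   taskname=part1 status=rc1;
systask command "sas -sysin /home/user/etl_part2.sas -log /home/user/etl_part2.log"
   taskname=part2 status=rc2;

/* Pause the parent session until both child sessions have finished */
waitfor _all_ part1 part2;

/* STATUS= stores each child session's return code in a macro variable */
%put NOTE: Return codes: part1=&rc1 part2=&rc2;
```

Because SYSTASK runs asynchronously by default, the two child programs can execute in parallel, which is only appropriate when neither program depends on output from the other.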

External Software Quality

External software quality is “the degree to which a software product enables the behavior of a system to satisfy stated and implied needs when the system including the software is used under specified conditions.”6 External quality characteristics are those that can be observed by executing software. For example, software is shown to be reliable because it does not fail. Software is shown to be scalable because it is able to process big data effectively without failure or inefficiency.

External software quality is sometimes referred to as the black-box approach to quality, because the assessment of quality is made through examination of software execution alone, rather than inspection of the code. Thus, a black-box view of software efficiency can assess metrics such as execution time or resource utilization, but cannot determine whether SAS technical best practices that support efficient execution were implemented. Black-box testing is discussed in the “Functionality Testing” section in chapter 16, “Testability.”
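A black-box measurement of execution time can be sketched by recording the clock before and after a step, without inspecting how the step is written. The data set name TIMING_TEST, the macro variables START and ELAPSED, and the one-million-row loop are assumptions made for this example only.

```sas
/* FULLSTIMER writes detailed resource usage for each step to the log */
options fullstimer;

/* Record the start time before the step under test */
%let start = %sysfunc(datetime());

/* Hypothetical workload: one million capitalized values */
data timing_test;
   length char1 $20;
   do i = 1 to 1000000;
      char1 = upcase("I love SAS");
      output;
   end;
run;

/* %SYSEVALF performs the floating-point subtraction */
%let elapsed = %sysevalf(%sysfunc(datetime()) - &start);
%put NOTE: Step completed in &elapsed seconds.;
```

The elapsed time says nothing about whether efficient coding practices were followed inside the step; that judgment requires inspecting the code itself.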

In traditional software applications, users have no access to the underlying code and are only able to assess external software quality through a black-box approach. Thus, while internal software quality characteristics are important to building solid, enduring code that will be more easily maintained, only external software quality characteristics can be demonstrated to customers, users, and other stakeholders who may not have access to code. Because SAS software users often do have access to underlying code, the distinctions between black- and white-box testing (that is, testing internal software quality, which is further discussed in a later section) are less significant in SAS software development than in other languages.

Functionality

Functional suitability is “the degree to which the software product provides functions that meet stated and implied needs when the software is used under specified conditions.”7 Throughout this text, however, functionality is referenced rather than functional suitability, in keeping with prior ISO standards and the majority of literature.8 Moreover, functional requirements describe technical rather than performance specifications within requirements documentation—for example, specifying the exact hue of purple on analytic reporting to satisfy the general.

The central characteristic of external software quality is functionality, without which software would have no purpose. Functionality is omitted from this and many other software development texts because it describes specific software intent while performance quality characteristics can be generalized. With functionality removed, the remaining external software characteristics comprise dynamic performance attributes, such as reliability or efficiency, but without function, there is nothing to make reliable or efficient.

Dynamic Performance Requirements

A performance requirement is “the measurable criterion that identifies a quality attribute of a function or how well a functional requirement must be accomplished.”9 Dynamic performance requirements are performance attributes that can be observed during software execution. In other words, they represent all characteristics of external software quality except functionality, as demonstrated in Figure 2.3. Dynamic refers to the fact that software must be executing (i.e., in motion) to be assessed, whereas static performance attributes must be assessed through code inspection when software is at rest.

Figure 2.3 Interaction of Software Quality Constructs and Dimensions

Throughout this text, performance requirements are sometimes referred to as performance attributes, especially where the use is intended to demonstrate a quality characteristic that may not be required by specific software. For example, if a SAS practitioner is considering implementing scalability and security principles but decides to omit security from a software plan, security would be referenced as a performance attribute—not a performance requirement—to avoid confusion since the quality characteristic was not actually required by or implemented into the software. In general, however, the terms are interchangeable.

Because dynamic performance requirements can be observed by all stakeholders, they are traditionally more valued than static requirements, and are thus more readily prioritized into software design and requirements. For example, a customer can more easily comprehend the benefits of faster software because speed is measurable. However, unless the same customer has a background in software development, he may have a more difficult time comprehending the benefits of incorporating modularity or testability into software because these attributes cannot be directly measured or their effects observed during execution.

Internal Software Quality

Internal software quality is defined as the “degree to which a set of static attributes of a software product satisfy stated and implied needs when the software product is used under specified conditions.”10 These characteristics can only be observed through static examination of code and, sometimes in the case of readability, through additional software documentation that may exist. Testability sometimes can also be assessed through a formalized test plan and test cases, as discussed in chapter 16, “Testability.”

Internal software quality is sometimes referred to as the white-box (or glass-box) approach to quality, because the assessment of quality is made through code inspection rather than execution. A white-box view of software reusability assesses to what extent software can be reused based on reusability principles, but requires that code be reviewed either manually or through third-party software that parses code. Because users have no access to underlying code in traditional software applications that are encrypted, they have no way to assess internal software quality; if they lack technical experience, they may even have no awareness of its concepts.

Static Performance Requirements

Static performance requirements describe internal software quality, such as maintainability, modularity, or stability. Due to the inherent lack of visibility of internal software quality characteristics, in some organizations and for some software projects, it may be more difficult to encourage stakeholders to value and prioritize these characteristics into software as compared with the more observable dynamic performance requirements. For example, it's easy to demonstrate to a customer the benefits of increased speed, but to demonstrate the benefits of increased reusability requires a conversation not only about reusability but also software reuse.

In addition to being more difficult to observe, static performance requirements also provide less immediate gratification to stakeholders and instead represent an investment in the future of a software product. For example, when dynamic performance is improved to make software faster, the effect is not only observable but also immediate. When static performance is improved to make software more readable or modular, the changes are not observable (through software performance), and moreover, the improvement is only beneficial the next time the software needs to be inspected or modified. Modularity can facilitate improved dynamic performance, as discussed throughout chapter 7, “Execution Efficiency,” but, in general, static performance requirements are neither observable nor immediate. As the anticipated lifespan of software increases, however, static performance requirements become increasingly valuable because they improve software maintainability, one of the most critical characteristics in promoting software longevity.

Commingled Quality

The organization of individual dimensions of software quality is straightforward; however, placement of quality dimensions within superordinate structures (such as quality models) can become complex due to nomenclature variations. For example, external software quality comprises both functional and performance requirements, a dichotomy commonly made in software literature. This is reflected in the ISO software product quality model, which subsumes functional suitability (i.e., functionality) under software product quality.11

Other quality models, however, include performance characteristics but omit functionality. For example, many definitions of the iron triangle—the nexus of project scope, schedule, and cost—define scope as having separate quality and functional components, thus intimating a model in which quality omits functionality. As one example of this somewhat divergent view of quality, the International Institute of Business Analysis in its Guide to the Business Analysis Body of Knowledge® (BABOK Guide®) distinguishes quality from functionality in its requirements definitions:

- “Functional requirements: describe the capabilities that a solution must have in terms of the behavior and information that the solution will manage.”12

- “Non-functional requirements or quality of service requirements: do not relate directly to the behavior or functionality of the solution, but rather describe conditions under which a solution must remain effective or qualities that a solution must have.”13

More quality model commingling occurs because software performance requirements comprise both dynamic and static performance requirements, with the former representing external software quality characteristics (with functionality omitted) and the latter representing internal software quality characteristics. Because this text focuses on software performance and excludes functionality (in assuming that functional requirements have been attained in all scenarios), the quality structure consistently referenced throughout this text reflects the dichotomy of dynamic versus static performance.

These commingled quality constructs are demonstrated in Figure 2.3, which highlights the roles of functionality, reliability, and maintainability through these various interpretations of quality. Whether functionality is a component of quality, or functionality and quality are both components of scope, one thing is clear: software functionality and software performance compete for resources in a struggle to be prioritized into software product requirements. This and other trade-offs of quality are discussed in the following section.

The Cost of Quality

As demonstrated in Figure 2.3, inherent trade-offs, often referred to as constraints, exist whether developing software or other products. The PMBOK® Guide includes quality and five other constructs as the principal constraints to projects, but states that these should not be considered to be all-inclusive.14 As a constraint, quality competes against other constructs for value and prioritization within software projects during planning and design. In fact, when developers omit performance from software, look at all the amazing things they can get instead!

- Increased Scope: Without the necessity to code performance requirements into software, developers instead can focus on additional functionality. For example, rather than making SAS software robust to failure, additional analytic reports could be developed.

- Schedule: The inclusion of performance requirements almost always makes code more lengthy and complex; as a result, designing, developing, and testing phases each will be lengthened. Without performance prioritized, the customer and other stakeholders can receive their software much faster.

- Budget: Because performance requirements take time to implement, and because time is money, project budgets typically increase with the increase in performance requirements. By omitting performance from software, a development team can either reduce budget or focus that money toward other priorities. Happy hour, anyone?

- Resources: Project resources include not only personnel but also maintaining the heat and power. By sacrificing performance requirements, fewer developers may be required to work on a project to achieve the same functionality so fewer resources will be consumed.

- Risk: Risk is not a benefit but rather the often-unintended consequence when performance is not prioritized. Because many performance requirements are designed to mitigate or eliminate vulnerabilities in software, risk typically increases as performance requirements are devalued or omitted.

While constraint implies a limiting or restrictive effect, constraints can also be viewed from the opportunity cost perspective. For example, developers can implement performance requirements into software, or, if they choose not to, they can produce quicker software, cheaper software, or software with increased functionality. To assess an opportunity, however, its value must be known. Thus, an objective of this text is to familiarize developers with the benefits of incorporating performance within software so that the opportunity cost of quality truly can be gauged. For example, if a stakeholder has decided to implement additional functionality (thus excluding additional performance), the stakeholder at least will have understood the value of the performance that could have been added, and thus will have made an informed decision based on the opportunity cost of that performance. Only when stakeholders understand the value of quality and the risks of the lack of quality can quality be measured against other project constraints such as schedule, scope, or budget.

Establishing the value of quality can be difficult, especially where stakeholders disagree about the prioritization of constructs within a software development project. Sponsors who are funding software development will want it done inexpensively while users waiting for the software will want it developed quickly. Some developers may be more focused on the function of software and less concerned with performance attributes while other SAS practitioners may insist that the software be reliable, robust, maintainable, and modular. Thus, establishing consensus on what characteristics contribute to software quality and how to value that quality (against other project constructs) can seem insurmountable. Nevertheless, stakeholders, developers and non-developers alike, will be better positioned to discuss the inclusion of quality characteristics when they can utilize the shared terminology demonstrated within the software product quality model.

QUALITY IN THE SDLC

The phases of the SDLC are introduced in the “Software Development Life Cycle (SDLC)” section in chapter 1, “Introduction.” Quality is best incorporated into software throughout the SDLC, including software planning before a single line of code has been written. In an oversimplified example, consider that you need to sort a data set to retain only unique observations. Functional needs such as this (but obviously more complex) often spawn software projects and, in many cases, only functional objectives are stated at the project outset.

But industry, organizational, and other regulations and standards, as well as overall software objectives, will dictate not only what software must accomplish but also how well it must perform. Performance objectives include the manner in which software is intended to be run and, while they can change over the software lifespan, initial objectives and needs should be considered and discussed for inclusion as potential software performance requirements. Table 2.1 enumerates examples of performance-related questions that can be asked during software planning to spur performance inclusion.

Table 2.1 Common Objectives and Questions

| Performance Objective | Performance Question |
| --- | --- |
| Reliability, Longevity | Will the software be run only once, a few times, or is it intended to be enduring? |
| Reliability, Robustness | Will the software pose a risk if it fails? |
| Efficiency, Execution Efficiency | Does the software have any resource limitations or execution time constraints? |
| Scalability | How large are the input data sets and will they increase over time? |
| Portability | Should the software be able to run on different versions of SAS or on different operating systems? |
| Reusability | Should components of the software be intended to be reused in the future? |
| Testability | Should the software be written in a testable fashion, for example, if a formalized test plan is going to be implemented? |

Only through both function- and performance-related questions can stakeholders truly conceptualize software and all its complexities. Once the true needs of software are established in planning, other questions such as build-versus-buy can be discussed. For example, do you write (or modify) SAS software to sort the data set or purchase this functionality from a third-party vendor?

Another benefit of a formal design phase—even if for smaller software projects, this represents only an hour-long conversation—is the ability to redefine and prioritize needs of the project. In the initial scenario, the ultimate objective was stated to be the identification of unique observations that could be accomplished through sorting. However, a SAS index could also accomplish this objective without sorting any data, so a design phase would facilitate the discussion about the pros and cons of proposed technical solutions. The distinction between planning and design is sometimes made in that planning is needs-focused whereas design and development phases are solutions- and software-focused. Thus, the customer needs and objectives should be defined and understood during software planning, but the specific technical methods to achieve those objectives will materialize over time.
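To make the design discussion concrete, the sketch below meets the same functional objective (retain only unique observations) in two ways. It is written in Python purely as a neutral illustration rather than in SAS; the function names and sample rows are hypothetical, and the lookup set is only a loose stand-in for an approach that avoids sorting, not a model of how a SAS index works.

```python
def unique_by_sorting(rows, key):
    """Sort by key, then keep the first row of each run of equal keys."""
    result = []
    for row in sorted(rows, key=key):  # sorted() is stable, so ties keep input order
        if not result or key(result[-1]) != key(row):
            result.append(row)
    return result


def unique_without_sorting(rows, key):
    """Keep the first row seen for each key; input order is preserved."""
    seen = set()
    result = []
    for row in rows:
        k = key(row)
        if k not in seen:
            seen.add(k)
            result.append(row)
    return result


def by_id(row):
    """Hypothetical key: the first field identifies an observation."""
    return row[0]


# Hypothetical observations: (id, value)
rows = [("C", 3), ("A", 1), ("C", 9), ("B", 2), ("A", 7)]

print(unique_by_sorting(rows, by_id))
print(unique_without_sorting(rows, by_id))
```

Both functions retain the same three observations; only the output order differs. Whether that difference, or the cost of sorting, matters is the kind of question a design phase is meant to surface.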

Requiring Quality

Everything about quality begins and ends with requirements. Requirements specify the technical objectives that software must achieve, guiding software through design and development. Whether those same requirements have been achieved is determined through testing that validates software completion and demonstrates intended quality.

In chapters that introduce dynamic performance requirements, a recurring Requiring section describes how the respective quality characteristic can be expressed as technical requirements. The section is intended to demonstrate that, while the incorporation of performance is important and can occur throughout the SDLC, it's best implemented with planning and foresight and in response to specific needs and requirements.

As the number of stakeholders for a software project increases, the importance of formalized requirements commensurately increases. Where stakeholders represent developers who may be working together to build separate components of software, requirements help ensure they are developing toward identical specifications and a common goal. The extent to which requirements can unambiguously describe the body of development work enables developers to rely on information rather than interpretation. Stakeholders rarely enter project planning with identical conceptualizations of intended software function and performance; however, through codification and acceptance of requirements, stakeholders can inaugurate a software project with a shared view of software objectives and future functionality and performance.

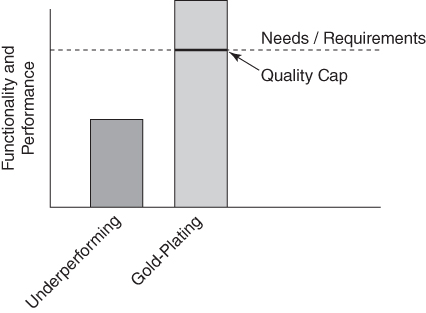

Without collective software requirements, at software completion, stakeholders may disagree about whether software is high quality, low quality, or even complete. Without an accepted body of requirements, stakeholders have nothing to assess software against except their own needs, which may vary substantially by individual. Moreover, undocumented needs have a tendency to morph throughout the SDLC through scope creep. Software requirements help avoid the misalignment that occurs when the software produced either exceeds or fails to meet customer needs, discussed in the following sections and demonstrated in Figure 2.4.

Figure 2.4 Underperformance and Gold-Plating

Note that while gold-plating may provide additional functionality or performance, this does not result in increased software quality because the customer did not need, had not required, or would not gain additional business value from this additional effort.

Avoid Underperformance

Underperforming doesn't make you an underachiever; at some point in your career, a customer will review your software and tell you it's not up to par. You failed to meet functional or performance objectives that were either implied or specified through technical requirements, so your software lacks anticipated quality. Underperformance represents a misalignment between the expected and delivered quality. However, when underperformance occurs in environments that rely primarily on performance needs that are implied rather than explicitly stated through technical requirements, this ambiguity can leave all stakeholders consternated. Developers may believe they delivered the intended software while customers and users feel otherwise, leaving a functional or performance gap.

A performance gap occurs when your manager requested efficient software and specified thresholds for run time, speed, and memory utilization, but the software you delivered fails to meet those objectives. The software is functionally sound, but its quality is diminished because performance is reduced. What if expected performance was only implied, not defined through requirements? Maybe the manager believed you understood that the software needed to complete in less than 15 minutes, but that information was never actually conveyed, or was confounded by other needs presented during software planning. The project manager wanted “fast SAS,” and you thought you delivered “fast SAS,” but because performance requirements were not defined or accepted (so they could be measured and validated), whose definition of “fast SAS” should be used to measure the quality of the software at completion?

Requirements that are stated and measurable can avoid underperformance, essentially by providing a checklist by which SAS practitioners can evaluate their code. Does it meet all functional requirements? Done. Does it meet all performance requirements? Done. Does it meet all test cases specified in a formalized test plan? Done. Establishing requirements ensures that quality is collectively defined during software planning and collectively measured and validated at software completion.

Avoid Gold-Plating

Continuing the development scenario in the “Quality in the SDLC” section, you're a researcher writing SAS software to sort data to select unique observations. You've asked yourself several performance-related questions during software planning and, in this end-user development scenario, business value is delivered almost exclusively through functionality, not performance. Thus, what matters is that the SAS software selects unique observations and produces an accurate data set. If the program fails, you as an end-user developer can restart it, so robustness is not that important. Because the data sets aren't very large, efficiency during execution also is not a priority because the processes will complete in seconds. And, because the data sets aren't expected to increase in size over time, data scalability should not be a priority in development.

This isn't to assert that quality doesn't matter in this scenario or in end-user development in general, but rather that if the incorporation of performance attributes poses no value and their absence poses a negligible (or at least acceptable) risk, then they shouldn't be included. Thus, in this scenario, if you spent hours empirically testing whether a SAS index, SQL procedure, or combined SORT procedure and DATA step would produce the fastest running code (or the most efficient use of system resources), that would have been a waste of time, at least for this project, because it would have delivered no additional business value while substantially delaying completion. Even if you did successfully achieve increased efficiency, the software quality by definition would not have increased because the additional performance fulfilled no performance requirements.

Gold-plating often occurs when software requirements lack clarity, leaving room for interpretation or assumption. A proud SAS practitioner might deliver an impressively robust and efficient program, expecting to be lauded by a manager, only to receive condemnation because the software didn't need to be robust. Rather, it needed to be completed yesterday, and the inclusion of that performance actually decreased software value because it delayed analysts' ability to use resultant data sets or data products. By creating technical requirements at project outset, a shared understanding of software value can be achieved that can guide SAS practitioners to focus on delivering only universally valued function and performance.

Gold-plating essentially answers the question: Can you ever have too much quality? Yes, you can. Delivering unanticipated performance can sometimes endear you to customers—your boss didn't ask you to make the software faster, but you did because you're just that good, and he heartily thanked you for the improvement. But delivering unwanted performance doesn't increase software quality and, as demonstrated, can decrease the value of software by causing schedule delays, budget overages, or the loss of valued function or performance that had to be eliminated because rogue developers gold-plated their software. Rogue is risky; don't do it.

Saying No to Quality

One technique to avoid gold-plating is not only to specify performance attributes that should be included in software, but also to specify quality characteristics that should not be included. Not all software is intended for production status or a long, happy life. Often in analytical environments, snippets of SAS code are produced for some tactical purpose and, after the subsequent results are analyzed, either discarded or empirically modified. This expedient software might not benefit from the incorporation of many performance characteristics. If its purpose shifts over time from tactically to strategically focused, however, or from supporting ancillary to critical infrastructure, commensurate performance should later be incorporated into the software.

This again illustrates the concept that some SAS software will be defined by functional requirements alone, to the exclusion of performance requirements. In cases in which the intent of software changes over time, reevaluation should determine whether the software requirements—including functional and performance—are still appropriate. But in cases where performance would provide no intrinsic value to software, performance characteristics should not be included and opportunity costs—such as additional functionality or reduced project cost—should instead be prioritized.

Saying Yes to Quality

Certain aspects of software intent or the development environment can warrant higher-performing software. In some circumstances, performance can be as highly valued and essential to software as functionality. This value may stem from additional performance being viewed as beneficial or, conversely, from a risk management perspective in which performance is prioritized to mitigate or eliminate specific risks. The following circumstances often dictate software projects requiring higher performance.

- Industry Regulations: Certain industries have specific software development regulations that must be followed; often, the software can or will be audited by government agencies. SAS software developed to support clinical trials research, for example, must typically follow the Food and Drug Administration (FDA) General Principles of Software Validation to ensure quality and performance standards are met.15

- Organizational Guidelines: Beyond industry regulations, organizations or teams often codify programmatic best practices that must be followed in software development and that often dictate the inclusion of quality characteristics. For example, some teams require that all SAS macros include a return code that can demonstrate the success or failure of the macro to facilitate exception handling and robustness.

- Software Underpinning Critical Infrastructure: ETL or other SAS software that supports critical components of a data analytic infrastructure should demonstrate increased performance commensurate to the risk of the unavailability of the system, software, or resultant data products.

- Scheduled Software: SAS software that is automated and scheduled for recurring execution should demonstrate increased performance. Inherent in automating and scheduling SAS jobs is the understanding that SAS practitioners will not be manually reviewing SAS logs, so reliability and robustness should be high.

- Software Having Dependent Processes or Users: If SAS software is trusted enough to have dependent processes or users rely on it, its level of performance should reflect the risk of failure not only of the software itself, but also of all dependencies.

- Software with Expected Longevity: SAS software intended to be operational for a longer duration should demonstrate increased performance. Static performance requirements are especially valuable in enduring software products because they support software maintainability, an investment that increases in value with the intended software lifespan.

Implied Requirements

All this talk of requirements may have some readers concerned that quality is synonymous with endless documentation. Some software development environments do produce significant documentation out of necessity, especially when several or all of the characteristics in the “Saying Yes to Quality” section are present. However, from the ISO definition of quality, it's clear that software quality can address implied as well as explicit needs. Moreover, the second of four Agile software development values states that “[w]orking software [is valued] over comprehensive documentation.”16 Thus, at the organizational or team level, stakeholders will need to assess the quantity and type of requirements that should have actual documentation versus those that might be equally served through an implied understanding of performance needs and requirements.

To provide one example, SAS practitioners on a close-knit team hopefully recognize individual strengths and weaknesses, and know and trust each other's technical capabilities. If team members are highly qualified and have a keen awareness of quality characteristics that can facilitate high-performing software, a requirement that software be “built modularly through dynamic SAS macro code” would probably be gratuitous and possibly offensive if it landed in requirements documentation. Especially in teams that have performed together efficiently and effectively (and who have implemented a quality model against which to judge the inclusion of technical requirements in software definitions), many references to performance can be omitted from technical specifications without detriment.

Measuring Quality

Quality is a respected pursuit but even ambition cannot overcome an aimless journey toward an unknown or undefined destination. Backpacking through Central and South America, I rarely knew which chicken bus or taxi I was taking or where I would be sleeping, but I always knew the end-game: a flight out of Buenos Aires months later. Because the flight was defined, it was a clear metric that I would achieve, miss, or possibly modify to extend the journey. While backpacking, I could continually alter my route, travel modalities, and sightseeing to ensure I arrived in Buenos Aires on time.

The quest for software quality can be a similarly convoluted and tortuous pursuit but it should have a defined destination. Through performance requirements, SAS practitioners are made aware of the necessary quality characteristics that must be built into software. Even within Agile development environments that implement iterative, rapid development, the expected functionality and performance are defined and stable within each time-boxed iteration. Performance requirements not only guide and spur design and development, but also should be used to evaluate the attainment of quality at software completion and during operation.

Throughout the dynamic performance requirement chapters, the Measuring sections demonstrate ways that performance can be quantitatively and qualitatively measured. Dynamic performance requirements more readily lend themselves to quantitative measurement than static performance requirements. You can specify that software should complete in 15 minutes, be able to process 3 million observations per hour, fail fewer than two times per month, or be operational within Windows but not UNIX. Dynamic performance requirements are not only measurable but also observable; thus, they are typically prioritized over static performance attributes in requirements documentation.
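Because thresholds like these are stated and measurable, checking them can be reduced to a simple comparison. The following is a minimal sketch, written in Python as a neutral illustration rather than in SAS; the metric names and the measured values are hypothetical, and the thresholds are only the examples given above.

```python
import operator

# Hypothetical requirement thresholds, taken from the examples in the text.
REQUIREMENTS = {
    "run_time_minutes": (operator.le, 15),               # complete in 15 minutes
    "observations_per_hour": (operator.ge, 3_000_000),   # process 3 million per hour
    "failures_per_month": (operator.lt, 2),              # fail fewer than two times
}


def evaluate(measured):
    """Compare each measured value with its threshold; return {metric: passed}."""
    return {
        metric: compare(measured[metric], limit)
        for metric, (compare, limit) in REQUIREMENTS.items()
    }


# Hypothetical measurements gathered at software completion.
measured = {
    "run_time_minutes": 12.5,
    "observations_per_hour": 2_400_000,
    "failures_per_month": 1,
}

results = evaluate(measured)
for metric, passed in results.items():
    print(f"{metric}: {'met' if passed else 'NOT met'}")
```

In this invented case the run-time and failure requirements are met but the throughput requirement is not, so the shortfall is identified by measurement rather than by competing definitions of "fast SAS."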

Static performance requirements are often more appropriately measured qualitatively or indirectly. For example, modularity might be approximated by counting lines per macro, or ensuring that all child processes generate return codes to signal success or failure to parent processes. However, modularity is more likely to be expressed as a desired trait of software and thus measured qualitatively. Some development environments similarly track how many times specific modules of code are reused and in what capacity or software, which can provide reuse metrics from which reusability can be inferred. But the best measurement of the inclusion (or exclusion) of static performance requirements is an astute SAS practitioner who can inspect the code and discern quality characteristics.
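As one way to approximate the lines-per-macro count mentioned above, the sketch below scans SAS source text and counts the lines from each %MACRO statement through its %MEND. It is written in Python as an illustration only; the function name and sample program are hypothetical, and it assumes that macro definitions are not nested and that %MACRO and %MEND each begin their own line.

```python
import re

MACRO_START = re.compile(r"^\s*%macro\s+(\w+)", re.IGNORECASE)
MACRO_END = re.compile(r"^\s*%mend\b", re.IGNORECASE)


def lines_per_macro(sas_source):
    """Count lines from each %MACRO statement through its %MEND, inclusive.

    Assumes non-nested definitions with %MACRO and %MEND on separate lines.
    """
    counts = {}
    current, start = None, 0
    for number, line in enumerate(sas_source.splitlines(), start=1):
        begin = MACRO_START.match(line)
        if begin and current is None:
            current, start = begin.group(1), number
        elif MACRO_END.match(line) and current is not None:
            counts[current] = number - start + 1
            current = None
    return counts


# Hypothetical SAS program used only to exercise the counter.
SAMPLE = """\
%macro load_data(dsn=);
  data work.raw;
    set &dsn;
  run;
%mend load_data;

%macro report;
  proc print data=work.raw;
  run;
%mend;
"""

print(lines_per_macro(SAMPLE))
```

A count like this is at best an indirect signal: a short macro is not necessarily modular, which is why the qualitative inspection described above remains the better measure.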

WHAT'S NEXT?

In chapter 3, “Communication,” techniques are demonstrated that support reliability, robustness, and modular software design by facilitating software communication. The robustness of software is facilitated through the identification and handling of return codes for individual SAS statements and automatic macro variables. User-generated return codes are demonstrated that facilitate communication between SAS parent and child modules, as are control tables that facilitate communication between different SAS sessions and batch jobs.