Basically, the MapReduce model can be implemented in several languages, but apart from that, Hadoop MapReduce is a popular Java framework for easily written applications. It processes vast amounts of data (multiterabyte datasets) in parallel on large clusters (thousands of nodes) of commodity hardware in a reliable and fault-tolerant manner. This MapReduce paradigm is divided into two phases, Map and Reduce, that mainly deal with key-value pairs of data. The Map and Reduce tasks run sequentially in a cluster, and the output of the Map phase becomes the input of the Reduce phase.

All data input elements in MapReduce cannot be updated. If the input (key, value) pairs for mapping tasks are changed, it will not be reflected in the input files. The Mapper output will be piped to the appropriate Reducer grouped with the key attribute as input. This sequential data process will be carried away in a parallel manner with the help of Hadoop MapReduce algorithms as well as Hadoop clusters.

MapReduce programs transform the input dataset present in the list format into output data that will also be in the list format. This logical list translation process is mostly repeated twice in the Map and Reduce phases. We can also handle these repetitions by fixing the number of Mappers and Reducers. In the next section, MapReduce concepts are described based on the old MapReduce API.

The following are the components of Hadoop that are responsible for performing analytics over Big Data:

The four main stages of Hadoop MapReduce data processing are as follows:

- The loading of data into HDFS

- The execution of the Map phase

- Shuffling and sorting

- The execution of the Reduce phase

The input dataset needs to be uploaded to the Hadoop directory so it can be used by MapReduce nodes. Then, Hadoop Distributed File System (HDFS) will divide the input dataset into data splits and store them to DataNodes in a cluster by taking care of the replication factor for fault tolerance. All the data splits will be processed by TaskTracker for the Map and Reduce tasks in a parallel manner.

Also, there are some alternative ways to get the dataset in HDFS with Hadoop components:

- Sqoop: This is an open source tool designed for efficiently transferring bulk data between Apache Hadoop and structured, relational databases. Suppose your application has already been configured with the MySQL database and you want to use the same data for performing data analytics, Sqoop is recommended for importing datasets to HDFS. Also, after the completion of the data analytics process, the output can be exported to the MySQL database.

- Flume: This is a distributed, reliable, and available service for efficiently collecting, aggregating, and moving large amounts of log data to HDFS. Flume is able to read data from most sources, such as logfiles, sys logs, and the standard output of the Unix process.

Using the preceding data collection and moving the framework can make this data transfer process very easy for the MapReduce application for data analytics.

Executing the client application starts the Hadoop MapReduce processes. The Map phase then copies the job resources (unjarred class files) and stores it to HDFS, and requests JobTracker to execute the job. The JobTracker initializes the job, retrieves the input, splits the information, and creates a Map task for each job.

The JobTracker will call TaskTracker to run the Map task over the assigned input data subset. The Map task reads this input split data as input (key, value) pairs provided to the Mapper method, which then produces intermediate (key, value) pairs. There will be at least one output for each input (key, value) pair.

Mapping individual elements of an input list

The list of (key, value) pairs is generated such that the key attribute will be repeated many times. So, its key attribute will be re-used in the Reducer for aggregating values in MapReduce. As far as format is concerned, Mapper output format values and Reducer input values must be the same.

After the completion of this Map operation, the TaskTracker will keep the result in its buffer storage and local disk space (if the output data size is more than the threshold).

For example, suppose we have a Map function that converts the input text into lowercase. This will convert the list of input strings into a list of lowercase strings.

To optimize the MapReduce program, this intermediate phase is very important.

As soon as the Mapper output from the Map phase is available, this intermediate phase will be called automatically. After the completion of the Map phase, all the emitted intermediate (key, value) pairs will be partitioned by a Partitioner at the Mapper side, only if the Partitioner is present. The output of the Partitioner will be sorted out based on the key attribute at the Mapper side. Output from sorting the operation is stored on buffer memory available at the Mapper node, TaskTracker.

The Combiner is often the Reducer itself. So by compression, it's not Gzip or some similar compression but the Reducer on the node that the map is outputting the data on. The data returned by the Combiner is then shuffled and sent to the reduced nodes. To speed up data transmission of the Mapper output to the Reducer slot at TaskTracker, you need to compress that output with the Combiner function. By default, the Mapper output will be stored to buffer memory, and if the output size is larger than threshold, it will be stored to a local disk. This output data will be available through

Hypertext Transfer Protocol (HTTP).

As soon as the Mapper output is available, TaskTracker in the Reducer node will retrieve the available partitioned Map's output data, and they will be grouped together and merged into one large file, which will then be assigned to a process with a Reducer method. Finally, this will be sorted out before data is provided to the Reducer method.

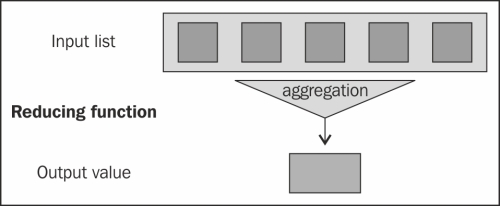

The Reducer method receives a list of input values from an input (key, list (value)) and aggregates them based on custom logic, and produces the output (key, value) pairs.

Reducing input values to an aggregate value as output

The output of the Reducer method of the Reduce phase will directly be written into HDFS as per the format specified by the MapReduce job configuration class.

Let's see some of Hadoop MapReduce's limitations:

- The MapReduce framework is notoriously difficult to leverage for transformational logic that is not as simple, for example, real-time streaming, graph processing, and message passing.

- Data querying is inefficient over distributed, unindexed data than in a database created with indexed data. However, if the index over the data is generated, it needs to be maintained when the data is removed or added.

- We can't parallelize the Reduce task to the Map task to reduce the overall processing time because Reduce tasks do not start until the output of the Map tasks is available to it. (The Reducer's input is fully dependent on the Mapper's output.) Also, we can't control the sequence of the execution of the Map and Reduce task. But sometimes, based on application logic, we can definitely configure a slow start for the Reduce tasks at the instance when the data collection starts as soon as the Map tasks complete.

- Long-running Reduce tasks can't be completed because of their poor resource utilization either if the Reduce task is taking too much time to complete and fails or if there are no other Reduce slots available for rescheduling it (this can be solved with YARN).

Since this book is geared towards analysts, it might be relevant to provide analytical examples; for instance, if the reader has a problem similar to the one described previously, Hadoop might be of use. Hadoop is not a universal solution to all Big Data issues; it's just a good technique to use when large data needs to be divided into small chunks and distributed across servers that need to be processed in a parallel fashion. This saves time and the cost of performing analytics over a huge dataset.

If we are able to design the Map and Reduce phase for the problem, it will be possible to solve it with MapReduce. Generally, Hadoop provides computation power to process data that does not fit into machine memory. (R users mostly found an error message while processing large data and see the following message: cannot allocate vector of size 2.5 GB.)

There are some classic Java concepts that make Hadoop more interactive. They are as follows:

- Remote procedure calls: This is an interprocess communication that allows a computer program to cause a subroutine or procedure to execute in another address space (commonly on another computer on shared network) without the programmer explicitly coding the details for this remote interaction. That is, the programmer writes essentially the same code whether the subroutine is local to the executing program or remote.

- Serialization/Deserialization: With serialization, a Java Virtual Machine (JVM) can write out the state of the object to some stream so that we can basically read all the members and write out their state to a stream, disk, and so on. The default mechanism is in a binary format so it's more compact than the textual format. Through this, machines can send data across the network. Deserialization is vice versa and is used for receiving data objects over the network.

- Java generics: This allows a type or method to operate on objects of various types while providing compile-time type safety, making Java a fully static typed language.

- Java collection: This framework is a set of classes and interfaces for handling various types of data collection with single Java objects.

- Java concurrency: This has been designed to support concurrent programming, and all execution takes place in the context of threads. It is mainly used for implementing computational processes as a set of threads within a single operating system process.

- Plain Old Java Objects (POJO): These are actually ordinary JavaBeans. POJO is temporarily used for setting up as well as retrieving the value of data objects.