2.4. FURTHER READING 13

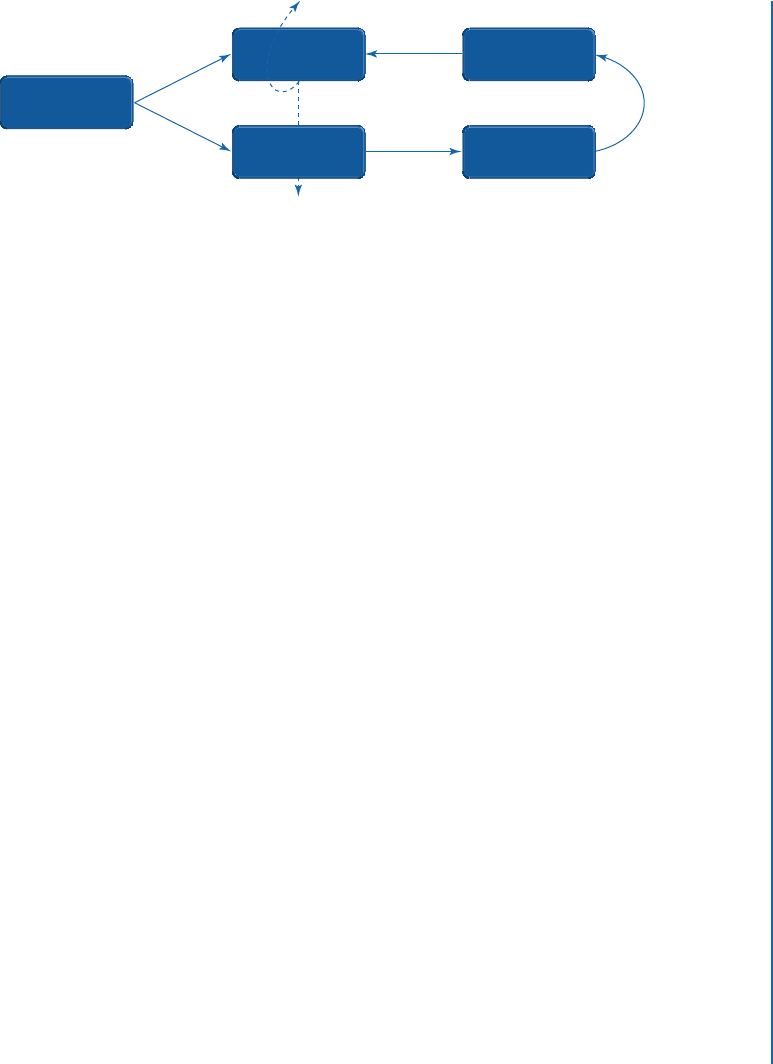

Critic

s

t

s

t

r

t

a

t

s

t+1

Actor

Observation

Reward

Environment

Figure 2.7: An actor-critic agent interacting with the environment. Dashed lines represent a

parameter update.

and based on the actions chosen by the actor network, the agent is given a reward. e value

function estimated by the critic network is then used to update the parameters of both networks.

2.4 FURTHER READING

In this chapter, a brief overview of relevant deep learning concepts was given. After reading this

chapter, you should have a basic understanding of how deep learning works, and the different

general approaches one can use to tackle deep learning problems. However, this chapter only

touched on the concepts relevant to later sections of the book, since a full review of deep learning

theory is out of the scope of this book. For a broader and more in-depth view of deep learning

theory, we provide the interested reader with some useful books in this field. For beginners,

Neural Networks and Deep Learning by Nielsen [77] provides an intuitive introduction to neu-

ral networks. For more in-depth reading, Deep Learning by Goodfellow et al. [78] provides a

comprehensive view of deep learning theory. For an introduction to reinforcement learning, we

recommend Reinforcement Learning: An Introduction by Sutton and Barto [56]. For a deeper look

at the mathematical background in machine learning, we recommend the books by Deisenroth

et al. [79] and Hastie et al. [80]. For a more hands-on introduction to deep learning, the books

by Chollet [81] and Géron [82] provide useful examples with code for those looking to learn

how to implement deep learning algorithms.

..................Content has been hidden....................

You can't read the all page of ebook, please click here login for view all page.