3.2. RESEARCH CHALLENGES 25

and a mid-to-mid learning approach were key to allowing the model to learn to drive the vehicle

while avoiding undesirable behaviors.

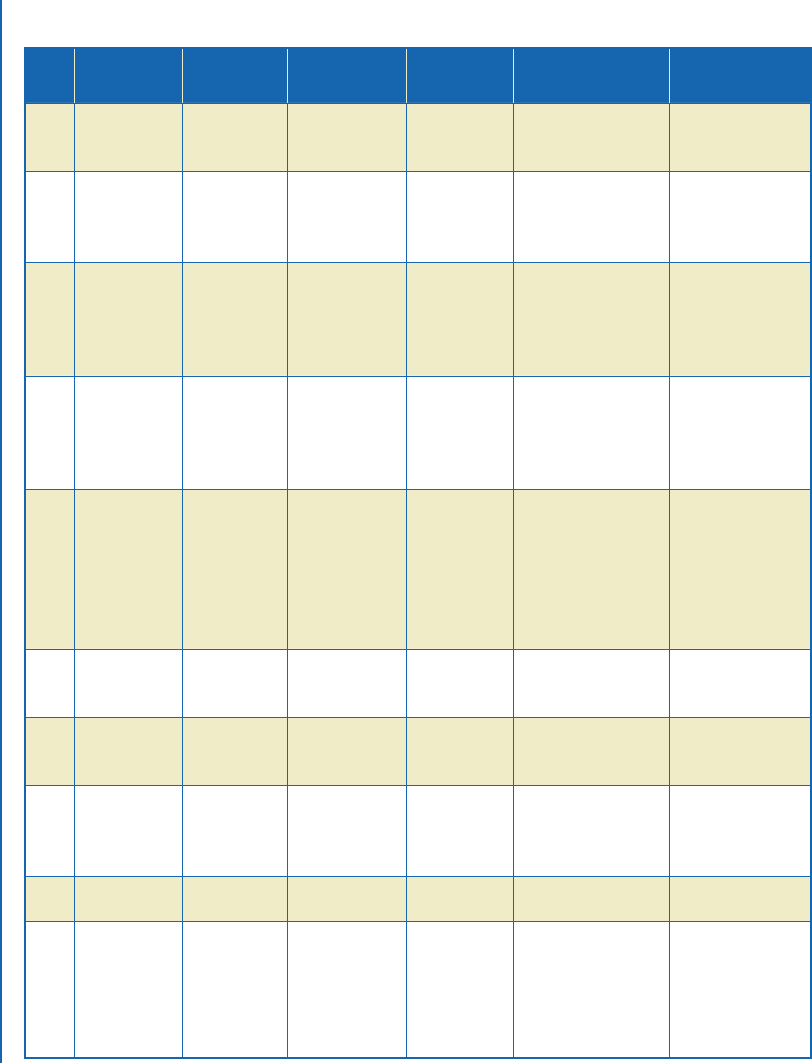

A summary of the full vehicle control techniques presented in this chapter can be seen

in Table 3.3. In contrast to the previous sections, a variety of learning and implementation

approaches were used in this section. Machine learning approaches have demonstrated strong

results for driving tasks such as lane keeping or vehicle following. While these results are impor-

tant, for fully automated driving, more complex tasks need to be considered. e network should

be able to consider low-level goals (e.g., lane keeping, vehicle following) alongside high-level

goals (e.g., route following, traffic lights). Early research has been carried out integrating high-

level context to the machine learning models, but these models are still far from human-level

performance.

3.2 RESEARCH CHALLENGES

e discussion in previous sections has shown that important results have been achieved in the

field of autonomous vehicle control using deep learning techniques. However, research in this

area is on-going, and there exists multiple research challenges that prevent these systems from

achieving the performance levels required for commercial deployment. In this section, we will

discuss some key research challenges that need to be overcome before the systems are ready

to be deployed. It is worth remembering that besides these research challenges, there are also

more general challenges preventing the deployment of autonomous vehicles such as legislative,

user acceptance, and economic challenges. However, since the focus here is on the research

challenges, for information on more general challenges we refer the interested readers to the

discussions in [115–120].

3.2.1 COMPUTATION

Computation requirements are an issue for any deep learning solution due to the vast amount of

training data (supervised learning) or training experience (reinforcement learning) required. Al-

though the increase in parallelisable computational power has (partly) sparked the recent interest

in deep learning, with deeper models and more complex problems the computational require-

ments are becoming an obstacle for deployment of deep neural network driven autonomous ve-

hicles [121–123]. Furthermore, the sample inefficiency, which many state-of-the-art deep rein-

forcement learning algorithms suffer from [124], means that a vast number of training episodes

are required to converge to an optimal control policy. Since training the policy in the real world

is often unfeasible, simulation is used as a solution. However, this results in high computation

requirements for reinforcement learning.

26 3. DEEP LEARNING FOR VEHICLE CONTROL

Table 3.3: A comparison of full vehicle control techniques

Ref.

Learning

Strategy

Network Inputs Outputs Pros Cons

[104] Supervised

learning

RNN Relative distance,

relative velocity

Desired accel-

eration

Adapts to driving

styles of other vehicles

Only considers a

simplifi ed roundabout

scenario

[

105] Supervised

reinforcement

learning

Feedforward

network with

two hidden

layers

Not mentioned Steering, accel-

eration,

braking

Fast training Unstable (can

steer off the road)

[

106] Reinforcement

learning

Feedforward/

Actor-Critic

Network

Position in lane,

velocity

Steering, gear,

brake, and ac-

celeration val-

ues (discretized

for DQN)

Continuous policy

provides smooth

steering

Simple simulation

environment

[107] Supervised

learning

CNN/Feedfor-

ward

Simulated camera

image

Steering angle,

binary

braking deci-

sion

Estimates safety of

the policy in any given

state, DAgger provides

robustness to

compounding errors

Simple simulation

environment, simpli-

fi ed longitudinal

output

[

109] Supervised

learning

CNN Camera image Steering and

throttle

High-speed driving,

learns to drive on low

cost cameras, robustness

of DAgger

to compounding errors

Trained only for

elliptical race tracks

with no other vehi-

cles, requires itera-

tively building the

dataset with the

reference policy

[110] Supervised

learning

CNN Image nine discrete

actions for

motion

Object-centric policy

provides attention to

important objects

Highly simplifi ed

action space

[

111] Reinforcement

learning

VAE-RNN Semantically seg-

mented image

Steering, accel-

eration

Improves collision

rates over braking only

policies

Only considers

imminent collision

scenarios

[113] Supervised

learning

CNN Camera image,

navigational

command

Steering angle,

acceleration

Takes navigational

commands into

account, generalizes to

new environments

Occasionally fails

to take correct turn

on fi rst attempt

[

112] Supervised

learning

CNN 360° view camera

image, route plan

Steering angle,

velocity

Takes route plan

into account

Lack of live testing

[114] Supervised

learning

CNN-RNN Pre-processed

top-down image

of surroundings

Heading, veloc-

ity, waypoint

Ease of transfer from

simulation to the

real world, robust

to deviations from

trajectory

Can output way-

points which make

turns infeasible, can

be over aggressive

with other vehicles in

new scenarios

..................Content has been hidden....................

You can't read the all page of ebook, please click here login for view all page.