8

CODING FOR IMPROVED COMMUNICATIONS

8.1 INTRODUCTION

Before delving into the details of error detection and forward error correction (FEC) techniques, it is useful to describe several baseband coding techniques. In the following sections, various forms of baseband pulse code modulation (PCM) are discussed. PCM codes and data compression codes are source coding techniques. Following the description of PCM waveforms, a variety of important coding‐related topics are introduced: gray coding1 in Section 8.3 and differential coding in Section 8.4; pseudo‐random noise (PRN) sequences in Section 8.5; binary cyclic codes in Section 8.6; cyclic redundancy check (CRC) codes in Section 8.7; data randomizing, or scrambling in Section 8.8; data interleaving in Section 8.9. Following these topics, several forms of FEC channel coding are discussed; Wagner coding and decoding in Section 8.10; convolutional coding and Viterbi decoding in Section 8.11; turbo codes (TCs), parallel concatenated convolutional codes (CCCs) (PCCCs), serially CCCs (SCCCs), double parallel CCCs (DPCCCs), and double serially CCCs (DSCCCs) with reference to the related hybrid CCCs (HCCCs), and self‐concatenated codes (SCCs) in Section 8.122 (the nonturbo codes are collectively referred to as turbo‐like codes); low‐density parity‐check (LDPC) codes, product codes (PCs), and turbo product codes (TPCs) are introduced in Section 8.13; and Bose–Chaudhuri–Hocquenghem (BCH) codes, including M‐ary Reed–Solomon (RS) codes and RS Viterbi (RSV) codes, are discussed in Section 8.14.

The subject of coding to improve the performance of communication systems is broad and, in certain instances, requires knowledge in specialized areas like matrix and Boolean algebra and the theory of groups, rings, and fields. For example, an understanding of polynomials in the Galois field (GF) is crucial in the coding and decoding of both block and cyclic codes. These disciplines will be engaged only to the extent that they can be applied in a practical way to the coding and decoding of the various techniques considered. Several coding techniques are listed in Figure 8.1.

FIGURE 8.1 Channel coding techniques.

8.2 PULSE CODE MODULATION

Baseband signals are characterized as those signals with the signal energy concentrated around zero frequency, although the zero‐frequency power spectral density, S(f = 0), may be zero. This is contrasted to bandpass signals that are associated with a carrier frequency around which the signal energy is concentrated. The data from an information source is typically formatted as binary data with a mark bit representing logic 1 and space bit representing logic 0 data. In cases involving analog information sources, the information is time‐sampled and amplitude‐quantized and represented as binary data. In either event, the resulting binary data can be represented as a form of PCM [2] that contains all of the source information. The binary formatted data is typically denoted as a series of pulses, or bits, of duration Tb with amplitude levels of 1 and 0. A series of data defined in this manner is referred to as unipolar non‐return‐to‐zero (NRZ) formatted data [3], and with the binary amplitude levels of 1 and −1 the data format is referred to as polar NRZ. These and other baseband data formats are the subject of this section. There are a number of PCM codes that have been devised to address issues unique to certain applications; however, the most significant characteristics of the PCM codes are the power spectral density (PSD), bit synchronization properties, and the bit‐error performance. Other important characteristics involve the error detection and correction (EDAC) capabilities; however, the more powerful techniques in this regard use redundancy through increased bandwidth or decision space. A review of 25 baseband PCM codes and their properties is presented by Deffebach and Frost [4] and much of this section is based on their work. Another treatment of this subject is given by Stallings [5].

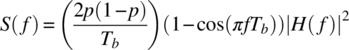

The PSD, with units of watt‐seconds/Hz, of binary PCM modulated random data sequences, with mark and space probabilities given by p and 1 − p, respectively, is evaluated by Bennett [6], Titsworth and Welch [7], and Lindsey and Simon [8] as

where Tb is the bit interval and Hm(f) and Hs(f) are the underlying spectrums of the mark and space bits. With unipolar formatted data, the mark data spectrum is defined as ![]() and the space data spectrum is zero, that is,

and the space data spectrum is zero, that is, ![]() , and upon substituting these results into (8.1) yields the unipolar PSD

, and upon substituting these results into (8.1) yields the unipolar PSD

With polar formatted data the space data spectrum is defined as ![]() so the polar PSD is given by

so the polar PSD is given by

These results are applied in the followings sections to evaluate the spectrums of various forms of unipolar and polar formatted PCM waveforms. Houts and Green [9] compare the spectral bandwidth utilization in terms of the percent of total power in bandwidths of {0.5,1,2,3}Rb for a variety of binary baseband coded waveform. In Section 8.2.5, the PCM demodulator bit‐error performance is examined.

8.2.1 NRZ Coded PCM

The designation NRZ denotes the binary format that characterizes a mark bit as being one amplitude level and space bit as another. When the space bit is represented by a zero level, the format is called unipolar NRZ. In contrast, when the level of the space bit is the negative of the mark bit level, the designation polar NRZ is used. The designation NRZ‐L indicates that the level of the binary format is changed whenever the level of the source data changes. These two PCM data formats are shown in Figure 8.2. The bipolar NRZ‐L format is a special tri‐level code for which a space source bit corresponds to a zero output level, while the level corresponding to the mark source data is bipolar, alternating between plus and minus levels.

FIGURE 8.2 Unipolar, polar, and bipolar NRZ‐L formatted data.

The designation NRZ‐M (NRZ‐S) indicates that changes in the formatted data occur when the source data is a mark (space); otherwise, the coded level remains the same. The unipolar NRZ‐M and NRZ‐S coded waveforms are depicted in Figure 8.3. This baseband coding results in differentially encoded data and is discussed in more detail in Section 8.4.

FIGURE 8.3 Unipolar NRZ‐M,‐S formatted data.

The advantage of differentially encoded data is that correct bit detection is maintained in the demodulator with a 180° error in the carrier phase as might occur in the channel or the demodulator carrier tracking loops. The penalty for using differential data coding is a degradation of the bit‐error performance with a 2 : 1 increase in the bit errors at high signal‐to‐noise ratios. Because the source data is encoded in the transitions of the PCM coded data, the absolute level is not as important; however, polar NRZ data with binary phase shift keying (BPSK) modulation results in the optimum detection performance through an additive white Gaussian noise (AWGN) channel.

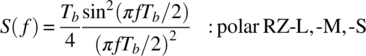

The PSD of the unipolar NRZ level, mark, space NRZ‐L,‐M,‐S formatted data is evaluated using (8.2) with the underlying spectrum characterized by the unit amplitude pulse rect(t/Tb − 1/2) with the square of the spectrum magnitude expressed as

The sampled spectrum expressed by (8.4) results in zero magnitude at ![]() for all n except n = 0, in which case, the magnitude is

for all n except n = 0, in which case, the magnitude is ![]() . With random source data, corresponding to p = 1/2, (8.2) becomes

. With random source data, corresponding to p = 1/2, (8.2) becomes

The PSD for the polar NRZ coded waveform is evaluated in a similar way using (8.3) and (8.4) and, assuming random data with p = 1/2, the result is

Therefore, the polar NRZ coded waveform has 6 dB more energy in the underlying spectrum, and there is no zero‐frequency impulse.

The spectrum for the bipolar NRZ‐L,‐M,‐S is evaluated by Sunde [10] as

The PSD in (8.4) is based on the PCM coded data having a constant amplitude over the entire bit interval, that is, the there is no pulse shaping, and, assuming random source data with p = 1/2, upon substituting |H(f)|2 using (8.4) into (8.7) yields the PSD for bipolar NRZ coded PCM expressed as

Equations (8.5), (8.6), and (8.8) are normalized by Tb and plotted in Figure 8.4 as the PSD for the NRZ coded PCM waveforms. The spectrum of the bipolar NRZ code does not have a direct current (DC) component so transmission over lines that are not DC coupled is possible as, for example, over long‐distance lines that use repeater amplifiers.

FIGURE 8.4 Power spectral density for NRZ coded PCM waveforms.

8.2.2 Return‐to‐Zero Coded PCM

Another form of baseband coding is return‐to‐zero (RZ) coding that is characterized by the NRZ‐L coding in Figure 8.2 with the exception that the bit interval is split, that is, the coded pulse level is nonzero over the first Tb/2 data interval and returns to zero over the remaining interval.3 The RZ coded PCM waveforms are depicted in Figure 8.5. In the unipolar case, the space bit is always zero and the mark bit is split. The polar case is similar; however, the space bit is split with the opposite polarity of the mark bit. The polar RZ code allows the bit timing to be established simply by full‐wave rectifying and narrowband filtering the received bit stream.

FIGURE 8.5 RZ formatted data.

In the bipolar case, the mark bit is split with alternating polarities and the space bit is always zero. By alternating the polarity of the mark bits, the bipolar RZ code provides for one bit‐error detection [11]. The bipolar RZ coded PCM is used in the Bell Telephone T1‐carrier system [12].

The PSD of the unipolar and polar RZ coded PCM waveforms are evaluated using (8.2) and (8.3) in a manner similar to that used for the unipolar and polar NRZ code; however, in these cases, the mark bit is split and characterized by the pulse rect(2t/Tb − 1/2) with the underlying square of the spectrum magnitude given by

Substituting (8.9) into (8.2) and recognizing that the spectrum is zero for all n ≠ 0, the PSD of the unipolar RZ coded waveform with random data is evaluated as

In a similar manner, the polar RZ PSD is evaluated using (8.3) with the result

The PSD of the bipolar RZ coded PCM waveform is characterized by Sunde [10] as in (8.7), with ![]() given by (8.9) so that, with random data,

given by (8.9) so that, with random data,

The PSD of the bipolar RZ coded PCM waveform is plotted in Figure 8.6.

FIGURE 8.6 Power spectral density for RZ coded PCM waveforms.

8.2.3 Biphase (Biϕ) or Manchester Coded PCM

Biphase (Biϕ), or Manchester, coded PCM is a commonly used waveform because of the robust bit timing recovery even without random data. For example, in an idle mode that uses either mark or space hold data, the demodulator timing can be maintained. The biphase‐level, ‐mark, ‐space (Biϕ‐L,‐M,‐S) coded waveforms are depicted in Figure 8.7. For the Biϕ‐L code, the mark bit is split and the space bit is the inverse of the mark bit. With the Biφ‐M code, a transition occurs at the beginning of each source data bit and the mark bit is split; however there is no change in the code‐bit level when a space source bit occurs. The Biϕ‐S code is similar to the Biϕ‐M with the role of the mark and space bits reversed.

FIGURE 8.7 Biphase Biϕ‐L,‐M,‐S polar formatted data.

The PSD of the biphase coded PCM waveform, using random data with p = 1/2, is evaluated by Batson [13] as

Equation (8.13) is normalized by Tb and plotted in Figure 8.8.

FIGURE 8.8 Power spectral density for Biϕ‐L,‐M,‐S coded PCM waveforms.

8.2.4 Delay Modulation or Miller Coded PCM

Delay modulation (DM), or Miller code, is a form of PCM where, for DM‐M coding, the code bit corresponding to each mark source bit is split, that is, the level changes in middle of each mark bit. The level of the code bit corresponding to a space source bit is unchanged unless it is followed by another space source bit in which case it is changed at the beginning of the space source bit. The DM‐S coding reverses the roles of the mark and space source bits, that is, the code bit corresponding to each space source bit is split and the level of the code bit corresponding to a mark source bit is unchanged unless it is followed by another mark source bit in which case it is changed at the beginning of the mark source bit. DM‐M,‐S coding is shown in Figure 8.9 for the source data sequence (1,0,0,1,1,0,1). To establish the correct phase of the bit timing in the demodulator an alternating mark‐space preamble must be transmitted.

FIGURE 8.9 Delay Modulation DM‐M,S coded PCM waveforms.

The PSD of the DM coded PCM waveform is evaluated for random data by Hecht and Guida [14] as

Equation (8.14) is normalized by Tb and plotted Figure 8.10.

FIGURE 8.10 Power spectral density for DM coded PCM waveforms.

8.2.5 Bit‐Error Performance of PCM

The bit‐error performance of phase modulated4 (PM), PCM, and (PCM/PM) coded waveforms [15] are expressed in terms of Q(x), the complement of the probability integral, where x is a function of the signal‐to‐noise ratio ![]() measured in the bandwidth corresponding to the information bit rate Rb. The approximate functional dependencies [16] of the indicated PCM/PM coded waveforms are expressed in (8.15) and are based on ideal demodulator bit timing. The approximations improve with increasing signal‐to‐noise ratio and the bit‐error results are shown in Figure 8.11.

measured in the bandwidth corresponding to the information bit rate Rb. The approximate functional dependencies [16] of the indicated PCM/PM coded waveforms are expressed in (8.15) and are based on ideal demodulator bit timing. The approximations improve with increasing signal‐to‐noise ratio and the bit‐error results are shown in Figure 8.11.

FIGURE 8.11 Bit‐error performance of PCM/PM coded waveforms.

The performance of frequency modulated (FM) PCM (PCM/FM) coded waveforms is dependent on the normalized frequency deviation Δf/Rb, pre‐detection filter bandwidth Bif /Rb, and post‐detection or video bandwidth Bv/Rb, where Rb is the information bit rate. Based these parameters and the pre‐detection signal‐to‐noise ratio γb and constants k and k1, the approximate bit‐error probability is expressed [16] as

The parameters in (8.16) are dependent on the format of the PCM/FM modulation, for example, NRZ‐L,‐M and Biϕ‐L, as indicated in Table 8.1. The signal‐to‐noise ratio, ![]() , is measured in a bandwidth corresponding to the bit rate Rb.

, is measured in a bandwidth corresponding to the bit rate Rb.

TABLE 8.1 Typical Parameter Sets for PCM/FM Coded Waveforms

| PCM/FM | k | k1 | Δf/Rb | Bv/Rb | Bif /Rb |

| NRZ‐L | 2.31 | 1 | 0.35 | 1/2 | 1 |

| NRZ‐M,‐S | 2.22 | 1 | 0.35 | 1/2 | 1 |

| Biφ‐La | 1.89 | 1/4 | 0.65 | 1 | 2 |

aManchester code.

The peak deviation, Δf, is the modulation frequency deviation from the carrier frequency fc with +Δf usually assigned to binary 1 (Mark) data and −Δf assigned to binary 0 (Space) data; the peak‐to‐peak deviation is 2Δf. Using a five‐pole, phase‐equalized, Butterworth intermediate frequency (IF) pre‐detection filter, the optimum peak deviation [17] for NRZ PCM/FM is ![]() and for Biφ PCM/FM

and for Biφ PCM/FM ![]() . The pre‐detection filter 3‐dB bandwidth [18, 19] is denoted as Bif with the condition Bif /Rb ≥ 1. The range of the normalized video bandwidth is 0.5 ≤ Bv/Rb ≤ 1; however, the recommended range is 0.7 ≤ Bv/Rb ≤ 1. The best values of these parameters are selected by examining the bit‐error performance of hardware tests or Monte Carlo computer simulations. Typical parameters sets are shown in Table 8.1 [20] for the indicated PCM/FM coded waveforms and the approximate bit‐error results are shown in Figure 8.12 [21, 22]. The bit‐error performance results apply to bit‐by‐bit detection corresponding to a filter matched to the bit duration. Performance improvements can be achieved using a maximum‐likelihood detector spanning multiple bits in the form of a decoding trellis [23, 24].

. The pre‐detection filter 3‐dB bandwidth [18, 19] is denoted as Bif with the condition Bif /Rb ≥ 1. The range of the normalized video bandwidth is 0.5 ≤ Bv/Rb ≤ 1; however, the recommended range is 0.7 ≤ Bv/Rb ≤ 1. The best values of these parameters are selected by examining the bit‐error performance of hardware tests or Monte Carlo computer simulations. Typical parameters sets are shown in Table 8.1 [20] for the indicated PCM/FM coded waveforms and the approximate bit‐error results are shown in Figure 8.12 [21, 22]. The bit‐error performance results apply to bit‐by‐bit detection corresponding to a filter matched to the bit duration. Performance improvements can be achieved using a maximum‐likelihood detector spanning multiple bits in the form of a decoding trellis [23, 24].

FIGURE 8.12 Approximate bit‐error performance of PCM/FM coded waveforms.

A supportive test, used to establish best parameter values, is the evaluation of the PSD of the modulated waveform. An analytical formulation of the PSD of the NRZ PCM/FM modulated waveform is expressed as [25]

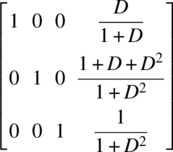

The parameters in (8.17) are defined in Table 8.2 and the spectrums are plotted in Figure 8.13 for peak deviations Δf/Rb = 0.25, 0.35, and 0.45. This analytical expression does not include the influence of the pre‐detection filter. The radio frequency (RF) bandwidth is defined as the occupied bandwidth corresponding to 99% spectral containment and the objective is to select a set of parameters that minimize the bit‐error performance and provide an acceptably low occupied bandwidth [26–29]. Spectral masks are discussed in Section 4.4.1 and examples of spectral containment for various modulations are given in Section 4.4.3 and following. Section 5.6 discusses binary FSK (BFSK) modulation with an emphasis on the PSD using various modulation indices.

TABLE 8.2 NRZ PCM/FM PSD Parameters Used in Equation (8.17)

| Normalized Parameter | Description |

| S(u) | Power spectral density w/r carrier powera |

| Bsa/Rbb | Spectrum analyzer resolution = 0.003 for Q ≅ 0.99 (used); =0.03 for Q ≅ 0.9 |

| D = 2Δf/Rb | Peak‐to‐peak deviation |

| X = 2u/Rb | Frequency deviation from carrier u = f − fc Hz |

aExpressed in decibels, the dimension is dBc.

bQ is related to narrowband spectral peaking when D ≅ integer value.

FIGURE 8.13 NRZ PCM/FM PSD sensitivity to peak deviation.

8.3 GRAY CODING

The performance benefits of gray coding is demonstrated in Section 4.2 where it is stated that the conversion from symbol‐error to bit‐error probability is expressed by the approximation

where k is the number of bits mapped into a single symbol. On the other hand, when the source bits are randomly assigned to a symbol interval, that is, when gray coding is not used, the conversion is bounded by

The bit‐error performance for these two symbol‐to‐bit‐error mapping techniques is shown in Figure 4.5 for multiphase shift keying (MPSK) modulated waveforms.

The function of gray coding is to minimize the number of bit errors in the process of converting from detected symbols to receive bits. For PSK modulation, gray coding ensures that adjacent symbols will differ in only one bit position. For example, consider the 8PSK modulated signal mapped into the two possible phase constellations shown in Figure 8.14.

FIGURE 8.14 Mapping of source bits to 8PSK phase constellation.

The most probable error condition occurs when the detected symbol is adjacent to the correctly transmitted symbol. For example, referring to Figure 8.14, if the correct symbol is (000), then the most likely error conditions are the detection of symbols (001) and (111) resulting, on average, in two bit errors. On the other hand, the Gray coded data results in symbol errors (001) and (100) with an average of only one bit error. In general, a k‐tuple of source bits ![]() results in the Gray coded k‐tuple

results in the Gray coded k‐tuple ![]() established using the encoding rule:

established using the encoding rule:

where ![]() denotes the exclusive‐or operation and b0 is considered to be the least significant bit (LSB).

denotes the exclusive‐or operation and b0 is considered to be the least significant bit (LSB).

The modulation of gray coded quadrature PSK (QPSK) unipolar binary data b′:{1,0} is accomplished in a relatively straightforward manner by translating to bi‐polar data d:{−1,1} as ![]() : i = 0, 1 as illustrated by the QPSK phase constellation in Figure 8.15. This mapping results in detected bipolar data estimates corresponding to the sign of the quadrature decisions {x,y} such that

: i = 0, 1 as illustrated by the QPSK phase constellation in Figure 8.15. This mapping results in detected bipolar data estimates corresponding to the sign of the quadrature decisions {x,y} such that ![]() :

:![]() where

where ![]() and

and ![]() . The gray decoded received bit estimates are determined as

. The gray decoded received bit estimates are determined as ![]() and the final step is the decoding of the gray coded received bit estimates. The decoding of a unipolar k‐tuple of Gray coded bits is accomplished using the following rule:

and the final step is the decoding of the gray coded received bit estimates. The decoding of a unipolar k‐tuple of Gray coded bits is accomplished using the following rule:

FIGURE 8.15 Quadrature phase constellation using gray coding.

8.4 DIFFERENTIAL CODING

The need for differential encoding arises to combat catastrophic error propagation resulting from inadvertent initialization or subsequent false‐lock conditions in the demodulator acquisition or phase tracking process. Although an initial phaselock error can be overcome by using a known preamble, subsequent phase slips, caused by channel noise or phase hits are effectively overcome by using differential encoding. Without differential encoding a phase hit, causing a false‐lock condition to occur, will result in a continual stream of data errors until the phase error is detected and corrected. For example, with MPSK modulation, if the receiver phaselock loop were to settle on a conditionally stable track point π radians out of phase, then all of the received bits will be in error—referred to as a catastrophic error condition. Differential encoding and decoding avoids catastrophic error events and is implemented in the modulator as

and, in the demodulator, as

where • represents the differential encoding operator and • ′ is the inverse operator. For binary data, the • and • ′ operators are represented by the exclusive‐or operation, denoted as ![]() .

.

Consider the simple case of differentially encoded BPSK (DEBPSK)5 to prevent catastrophic errors. The encoding, using the exclusive‐or operation, is shown in Figure 8.16 and an example of the received data sequence, with a received error forced at bit i = 6, is shown in Table 8.3. The phase error is assumed to result from a 180° phase‐step in the carrier frequency caused by the channel or a phase‐slip in the demodulator phaselock loop. This example shows that instead of continuing to output inverted data following the phase‐step, a single error occurs because of the differential encoding.

FIGURE 8.16 Differential encoding for DEBPSK modulation.

TABLE 8.3 Transmitted Data Sequence Demonstrating DEBPSK

| i | 0 1 2 3 4 5 6 7 8 9 10 11 … |

| di | ‐‐ 0 1 0 0 1 1 0 1 0 1 0 … |

| Di | 0 0 1 1 1 0 1 1 0 0 1 1 … |

| 0 0 1 1 1 0 0 0 1 1 0 0 … | |

| ‐‐ 0 1 0 0 1 0 0 1 0 1 0 … | |

In Table 8.3, the encoder and decoder are identically initialized. In Problem 3, the error events are examined under two cases: for a single received bit error in ![]() , that is, the phaselock loop tracking continues uninterrupted.

, that is, the phaselock loop tracking continues uninterrupted.

As a second example, consider differentially encoded QPSK (DEQPSK) with input data ![]() where Ii and Qi are the inphase and quadrature bit assignments, respectively. The objective is to encode the data so that receiver phase errors of π and ±π/2 will not result in a catastrophic error condition. The encoding and decoding functions shown in Figure 8.17 provide the correct received data for a fixed unknown phase of 0 or π radians provided that unique I and Q symbol synchronization sequences precede the data symbols. Although the differential decoder is self‐synchronizing, without the correct initialization,6 the first received symbol will be in error and this error is absorbed by the synchronization symbol. In this case, there are two, phase‐dependent, correct differential decoder initialization symbols, and because the phase is unknown the correct initialization symbol is unknown.

where Ii and Qi are the inphase and quadrature bit assignments, respectively. The objective is to encode the data so that receiver phase errors of π and ±π/2 will not result in a catastrophic error condition. The encoding and decoding functions shown in Figure 8.17 provide the correct received data for a fixed unknown phase of 0 or π radians provided that unique I and Q symbol synchronization sequences precede the data symbols. Although the differential decoder is self‐synchronizing, without the correct initialization,6 the first received symbol will be in error and this error is absorbed by the synchronization symbol. In this case, there are two, phase‐dependent, correct differential decoder initialization symbols, and because the phase is unknown the correct initialization symbol is unknown.

FIGURE 8.17 Differential encoding for DEQPSK modulation.

To accommodate unknown phases of nπ/2: n = 0, …, 3, caused by a channel phase hit or a false‐lock condition in the demodulator, the differential encoding and decoding algorithms in (8.24) and (8.25) provide the correct received data and are self‐synchronizing. As in the preceding example, a synchronization symbol is required to absorb the error in the first received symbol because of the unknown correct initialization state; in this case, there are four possible initialization states.

The decoding algorithm follows logically from the encoding process and is best seen by examining all of the discrete combinations as shown in Table 8.4. For example, when the data is the same in each channel, that is, Ii ⊕ Qi = 0, the phase error affects each channel identically and differential encoding of the I and Q channels individually is required; this is the situation depicted in Figure 8.17. However, when the data is different on each channel, that is, Ii ⊕ Qi = 1, the I and Q channels appear to be interchanged so differential encoding across the I and Q channels is required.

TABLE 8.4 Discrete Coding and Decoding of DEQPSK for Zero Channel Phase Shift

| IiQi | |||||

| 00 | 00 | 00 | 00 | 00 | 00 |

| 00 | 01 | 01 | 01 | 01 | 00 |

| 00 | 10 | 10 | 10 | 10 | 00 |

| 00 | 11 | 11 | 11 | 11 | 00 |

| 01 | 00 | 01 | 01 | 00 | 01 |

| 01 | 01 | 11 | 11 | 01 | 01 |

| 01 | 10 | 00 | 00 | 10 | 01 |

| 01 | 11 | 10 | 10 | 11 | 01 |

| 10 | 00 | 10 | 10 | 00 | 10 |

| 10 | 01 | 00 | 00 | 01 | 10 |

| 10 | 10 | 11 | 11 | 10 | 10 |

| 10 | 11 | 01 | 01 | 11 | 10 |

| 11 | 00 | 11 | 11 | 00 | 11 |

| 11 | 01 | 10 | 10 | 01 | 11 |

| 11 | 10 | 01 | 01 | 10 | 11 |

| 11 | 11 | 00 | 00 | 11 | 11 |

The simulated bit‐error performance of differentially encoded BPSK and QPSK are shown in Figure 8.18. These results are obtained using Monte Carlo simulations involving 100 Kbits for signal‐to‐noise ratios ≤6 dB: 1,000 Kbits for 6 dB < signal‐to‐noise ratios <10 dB, and 10,000 Kbits for signal‐to‐noise ratios ≥10 dB. The results are essentially the same for both modulations because gray coding is used with the QPSK modulation ensuring that one bit error occurs for each of the most probable symbol‐error conditions.

FIGURE 8.18 Performance of differentially encoded BPSK and QPSK.

8.5 PSEUDO‐RANDOM NOISE SEQUENCES

The subject of PRN7 sequences [30, 31] is an important topic in applications involving ranging, FEC coding, information and transmission security, anti‐jam, and code division multiple‐access (CDMA) communications. Furthermore, PRN sequences fill an important role in the generation of acquisition preambles for the determination of signal presence, frequency, and symbol timing for data demodulation. The noise‐like characteristics of binary PRN sequences exhibit random properties similar to that generated by the tossing of a coin with the binary outcomes: heads and tails. In this example, the random properties for a long sequence of j events are characterized as having a correlation response ci characterized by the delta function response ci = δ(j − i). A random sequence also exhibits unique run length properties; in that, defining a run of length ℓ as having ℓ contiguous events with identical outcomes, a run of length ℓ occurs with a probability of occurrence approaching Pr(ℓ) = 2−ℓ as the number of trials increases. This property also indicates that the probability of the number of heads or tails (ℓ = 1) in the tossing of a coin approaches 1/2 as the number of trials increases. Binary PRN sequences, generated using a linear feedback shift register [32] (LFSR) with module‐2 feedback from selected registers, exhibit these noise‐like characteristics. A useful property of LFSR generators is that the noise characteristics can be repeated simply by reinitializing the generator.

Sequences can be generated as polynomials over a GF with properties analogous to those of integers. Binary sequences contained in the GF(2)8 are particularly useful because of their simplicity and predictable pseudo‐random properties. The maximal linear PRN sequences, referred to as m‐sequences, generate the longest possible codes for a given number of m shift registers. The code length9 is Lm = 2m − 1 and repeats with a repetition time TL = Lm/fck where fck is the shift register clock frequency. The linear characteristic of the LFSR implementation results because of the property: for a given set of initial conditions SIj with outcomes SOj: j = 1, 2 then, for the initial conditions SI = SI1 ⊕ SI2, the output sequence is SO = SO1 ⊕ SO2 where ⊕ denotes modulo‐2 addition.

The LFSR PRN generators are characterized by m‐degree polynomials in x expressed as

with gm = g0 = 1. Some useful properties [33] of g(x) are as follows:

- A generator polynomial g(x) of degree m that is not divisible by any polynomial of degree less than m but if not divisible by a polynomial of any degree greater than 0, then g(x) is an irreducible polynomial.

- A polynomial g(x) of degree m is primitive if it generates all 2m distinct elements; a field with 2m distinct elements is called a GF(2m) field.

- An element, or root, α of g(x) in the GF(2m) is primitive if all powers αj: j ≠ 0 generates all nonzero elements of the field.

- An irreducible polynomial is primitive if g(α) = 0 where α is a primitive element.

- Polynomials that are both irreducible and primitive result in m‐sequences.

For each m, there exits at least one primitive polynomial of degree m. Irreducible and primitive polynomials are difficult to determine; however, Peterson and Weldon [34] have tabulated extensive list10 of irreducible polynomials over GF(2), that is, with binary coefficients, for degrees m ≤ 34; polynomials that are also primitive are identified.

The m‐sequences are generated based on the specific LFSR feedback connections; otherwise, non‐maximal sequences will result with periods less than TL. A feedback connection that does not result in an m‐sequence can result in one of several possible non‐maximal sequences of varying lengths <Lm depending on the initial LFSR settings. For a sequence of length Lm = 2m − 1 that conforms to an irreducible primitive polynomial, the maximum number m‐sequences that can be generated is determined as [35, 36]

where φ(Lm) Euler’s phi function evaluated as

Table 8.5 lists the number of m‐sequences for degrees m = 2 through 14.

TABLE 8.5 The Number of m‐Sequences and Polynomial Representationa

| Degree m | Lm | Nm | Prime Factors of Lm |

| 2 | 3 | 1 | Prime |

| 3 | 7 | 2 | Prime |

| 4 | 15 | 2 | 3*5 |

| 5 | 31 | 6 | Prime |

| 6 | 63 | 6 | 3*3*7 |

| 7 | 127 | 18 | Prime |

| 8 | 255 | 16 | 3*5*17 |

| 9 | 511 | 48 | 7*73 |

| 10 | 1,023 | 60 | 3*11*31 |

| 11 | 2,047 | 176 | 23*89 |

| 12 | 4,095 | 144 | 3*3*5*7*13 |

| 13 | 8,191 | 630 | Prime |

| 14 | 16,383 | 756 | 3*43*127 |

aRistenbatt [35]. Reproduced by permission of John Wiley & Sons, Inc.

For a given order, m, the irreducible primitive generator polynomial expressed by (8.26) is established by converting the octal notation to the equivalent binary notation and then associating the taps with the nonzero binary coefficients corresponding (bm = 1, bm−1, …, b1, b0 = 1). The LFSR feedback taps are the nonzero taps for the coefficient orders less than m. For example, from Table 8.6, the octal notation for the irreducible primitive generator polynomial corresponding to m = 3 is (13)o and the binary notation is (1011)b, so the generator polynomial is ![]() . The LFSR is implemented with descending coefficient order from left to right as shown in Figure 8.19. The generator clock frequency fck is also shown in the figure and the shift register delays correspond to the clock interval τ = 1/fck, which is denoted as the unit sample delay z−1. The generator must be initialized by the selection of the parameters (b2, b1, b0) recognizing that, for this example,

. The LFSR is implemented with descending coefficient order from left to right as shown in Figure 8.19. The generator clock frequency fck is also shown in the figure and the shift register delays correspond to the clock interval τ = 1/fck, which is denoted as the unit sample delay z−1. The generator must be initialized by the selection of the parameters (b2, b1, b0) recognizing that, for this example, ![]() where

where ![]() is the exclusive‐or operation. The initialization parameters characterize the state of the encoder, generally defined as (bn−1, bn−2, …, b0). If g(x) is irreducible and primitive, as in this example, the selection of the initial conditions simply results in one of the L = 2n − 1 cyclic shifts of the output PN sequence.11 If, however, g(x) is not primitive then mutually exclusive subsequences with length <Lm are generated and, taken collectively, the subsets contain all of the 2m − 1 states of the encoder (see Problem 5).

is the exclusive‐or operation. The initialization parameters characterize the state of the encoder, generally defined as (bn−1, bn−2, …, b0). If g(x) is irreducible and primitive, as in this example, the selection of the initial conditions simply results in one of the L = 2n − 1 cyclic shifts of the output PN sequence.11 If, however, g(x) is not primitive then mutually exclusive subsequences with length <Lm are generated and, taken collectively, the subsets contain all of the 2m − 1 states of the encoder (see Problem 5).

TABLE 8.6 Partial List of Binary Irreducible Primitive Polynomials of Degree ≤ 21 with the Minimum Number of Feedback Connectionsa

| m | g(x)b | m | g(x)b |

| 2 | 7 | 12 | 10123 |

| 3 | 13 | 13 | 20033 |

| 4 | 23 | 14 | 42103 |

| 5 | 45 | 15 | 100003 |

| 6 | 103 | 16 | 210013 |

| 7 | 211 | 17 | 400011 |

| 8 | 435 | 18 | 1000201 |

| 9 | 1021 | 19 | 2000047 |

| 10 | 2011 | 20 | 4000011 |

| 11 | 4005 | 21 | 10000005 |

aPeterson and Weldon [34]. Appendix C, Table C.2, © 1961 Massachusetts Institute of Technology. Reproduced by permission of The MIT Press.

bMinimum polynomial with root α in octal notation.

FIGURE 8.19 Third‐order LFSR implementation of PN sequence generator g(x) = x3 + x + 1.

8.6 BINARY CYCLIC CODES

Cyclic codes are a subset of linear codes that form the basis for a large variety of codes for detecting and correcting isolated single errors, multiple independent errors, and the more general situation involving bursts of errors. Cyclic code encoders are implemented using shift registers with appropriate feedback connections that are easily implemented and operate efficiently at high data rates. The following discussion of cyclic codes focuses on the encoding of systematic cyclic codes that are used for error checking of demodulated received data prior to passing the message data to the user. If errors are detected in the received data, the message may be blocked, sent to the user marked as containing errors, or scheduled for retransmission using an automatic repeat request (ARQ) protocol. In these applications, the cyclic code is referred to as a CRC code.

A systematic code is characterized as containing the uncoded message data followed by the parity‐check information as depicted in Figure 8.20. In the following description, the parity and message data, ri and mj, are based on the field elements in GF(2) and correspond to the binary bits {1,0}. The notation involving the parameter x denotes the polynomial representation of the cyclic‐code block of n transmitted bits, k information bits, and r = n − k parity bits. The information and parity polynomials are expressed as

and

so the cyclic coded message block, or code polynomial, in Figure 8.20 is constructed as

FIGURE 8.20 Coded message block.

The structure of the (n,k) cyclic code is based on the following properties; the proof of the last three properties are given by Lin [37].

- An (n,k) linear code, described by the code polynomial(8.32)is a cyclic code if every polynomial, described by shifting each element by i = 1, …, n − 1 places, is also a code polynomial of the (n,k) linear code. For example, the polynomial

(8.33)is also a code polynomial of the (n,k) linear code.

(8.33)is also a code polynomial of the (n,k) linear code.

- For an (n,k) cyclic code, there exists a unique polynomial g(x) of degree n − k expressed aswith g0, gn−k ≠ 0. Furthermore, every code polynomial v(x) is a multiple of g(x) and every polynomial of degree < = n − 1, which is a multiple of g(x) is a code polynomial.

- The generator polynomial of a (n,k) cyclic code v(x) is a factor of xn + 1,12 that is,(8.35)

- If g(x) is a polynomial of degree n − k and is a factor of xn + 1, then g(x) is the generator polynomial of the (n,k) cyclic code.

The generator polynomials are derived from irreducible polynomials that are difficult to determine; however, as mentioned previously, the tables13 of Peterson and Weldon [38] provide a list of all irreducible polynomials over GF(2) of degree ≤35.

The following description of constructing systematic cyclic codes from the message polynomial follows the development by Lin [39]. However, for simplicity and clarity, the construction is described using the (5,3) single‐error correcting code with generator polynomial given in octal form as (7)o; the binary equivalent (111)b corresponds to the polynomial

In (8.36), the LSB is the rightmost bit.14 The following example evaluates the cyclic code polynomial for the three‐bit message ![]() using the (5,3) code. In this case, the message polynomial is

using the (5,3) code. In this case, the message polynomial is ![]() and the corresponding code polynomial is obtained by dividing

and the corresponding code polynomial is obtained by dividing ![]() by the generator polynomial, that is, in this example, dividing

by the generator polynomial, that is, in this example, dividing ![]() by

by ![]() as follows:

as follows:

The remainder in (8.37) is r(x) = x and, using (8.31), the code polynomial for the message m4 is evaluated as

Therefore, the message bits (001) map into the cyclic code polynomial bits (01001). This result is shown in Table 8.7 with the other seven cyclic coded message blocks. The encoder for the example (5,3) cyclic code corresponding to Table 8.7 is shown in Figure 8.21 and the generalized r = n − k order encoder, generated using (8.34), is shown in Figure 8.22. As the uncoded message bits pass by the encoder, the bits are applied to the taped delay line according to the generator coefficients, 1 or 0 in the binary case. When the last message bit enters the generator, the switches, indicated by the dashed lines, are changed and the parity information contained in the registers is appended to the information bits forming the coded message block.15 These concepts are applied to the generation and performance evaluation of CRC codes discussed in Section 8.7.

TABLE 8.7 Code Polynomial Coefficients Corresponding to the (5,3) Cyclic Code

| i | mi | vi | |

| ri | mi | ||

| 0 | 0 0 0 | 0 0 | 0 0 0 |

| 1 | 1 0 0 | 1 1 | 1 0 0 |

| 2 | 0 1 0 | 1 0 | 0 1 0 |

| 3 | 1 1 0 | 0 1 | 1 1 0 |

| 4 | 0 0 1 | 0 1 | 0 0 1 |

| 5 | 1 0 1 | 1 0 | 1 0 1 |

| 6 | 0 1 1 | 1 1 | 0 1 1 |

| 7 | 1 1 1 | 0 0 | 1 1 1 |

FIGURE 8.21 Binary (5,3) second‐order encoder.

FIGURE 8.22 Generalized r‐th‐order encoder.

8.7 CYCLIC REDUNDANCY CHECK CODES

The CRC code of degree r is generated by multiplying an irreducible primitive polynomial, p(x), by (x + 1) resulting in the CRC code generator polynomial

The polynomial p(x), with degree r − 1, corresponds to the maximal length, or m‐sequence, of 2r−1 bits containing 2r−2 ones and 2r−2 − 1 zeros. Therefore, the underlying structure of the (n,k) CRC code is the r − 1 degree m‐sequence. CRC code parity bits are appended to the message information bits to form a systematic code; however, the purpose is to detect errors in the received (n,k) cyclic code for a variety of message lengths <k, referred to a shortened codes. The performance measure of CRC codes is the undetected error probability (Pue). The theoretical computation of Pue requires the Hamming weight distribution over the n‐bits corresponding to the 2k code words. For large values of n and k, evaluation of the Hamming weight distribution is impractical; however, using MacWilliams’ theorem [40, 41], the dual code (n,n − k) = (n,r) requires evaluation of the weight distribution (Bi) over the n‐bits corresponding to 2r code words. In this case, the undetected error probability, over the binary symmetric channel (BSC), with bit‐error probability p, is expressed as

The theoretical average undetected error probability of a binary (n,k) code, with equally probable messages, is expressed as [42]

and is plotted in Figure 8.23 for r = 16, 24, and 32 order CRC code generators and shortened messages corresponding to k = 24 and 256 bits.

FIGURE 8.23 Ensemble average of undetected error probability.

From (8.41), the average value of Pue at p = 0.5 is evaluated as

Equation (8.40) is used by Fujiwara et al. [43] to evaluate the performance of two International Telecommunication Union Telecommunication Standard (ITU‐T X.25)16 CRC codes, referred to as the frame check sequence (FCS) codes. Their evaluations provide detailed plots of Pue as a function of p, for the 16th‐order generators and various shortened code lengths; however, the results listed in Table 8.8 show the intersection of the bit‐error probability p corresponding to Pue = 1e−15. When compared to the r = 16th‐order generators in Figure 8.23, it is evident that these CRC codes perform considerably better than the average performance of ![]() . However, the performance trends are similar; in that, the performance for p = 0.5 is identical to the value predicted by (8.42) and the trend in the performance degradation as the shortened code length is increased approaching the natural, or underlying, code length of 2r is consistent with (8.42).

. However, the performance trends are similar; in that, the performance for p = 0.5 is identical to the value predicted by (8.42) and the trend in the performance degradation as the shortened code length is increased approaching the natural, or underlying, code length of 2r is consistent with (8.42).

TABLE 8.8 Simulated FCS Bit‐Error Probability Corresponding to Undetected Error Probability of 1e−15

| FCS Code | g(x)a | Shortened Length k | ||||

| 24 | 128 | 512 | 2048 | 16,384 | ||

| p at Pue = 1e−15 | ||||||

| ITU‐1 | 170037 | 1e−4 | 4e−5 | 1e−5 | 2.7e−6 | 3.2e−7 |

| ITU‐2 | 140001 | 1e−4 | 3e−5 | 1e−5 | 2.7e−6 | 3.2e−7 |

ag(x) designations in octal notation.

Using a simplified approach in the computation of the Hamming weight distribution (Bi) in (8.40), Wolf and Blakeney [44] verify the simulated performance of the ITU‐1 code in Table 8.8 and provide the performance of the additional codes listed in Table 8.9. Upon comparing the performance of the CRC‐16 code with that of the ITU‐1 code, there is not a significant difference; however, the CRC‐16Q code performance crosses the Pue = 1e−15 threshold at a lower bit‐error probability by about two and one orders of magnitude, respectively, for the 24‐ and 32‐bit shortened codes; this advantage diminishes rapidly as k increases. As seen from Figure 8.23 and demonstrated in Table 8.9 for the CRC‐24Q and ‐32Q codes, the most significant performance improvement over the range of shortened codes is obtained by increasing the degree of the generator polynomial.

TABLE 8.9 Simulated FCS Bit‐Error Probability Corresponding to Undetected Error Probability of 1e−15

| CRC Codes | g(x)a | Shortened Length k | |||||

| 24 | 32 | 64 | 256 | 512 | 1024 | ||

| p at Pue = 1e−15 | |||||||

| CRC‐16 | 100003 | 1e−4 | 8e−5 | 4e−5 | 1.8e−5 | 1e−5 | — |

| CRC‐16Q | 104307 | 9e−3 | 2.3e−3 | 9e−5 | 2.2e−5 | 1e−5 | — |

| CRC‐24Q | 404356 | — | 3e−2 | 2.3e−3 | 5.2e−3 | 2.8e−3 | — |

| 51 | |||||||

| CRC‐32Q | 200601 | — | — | 2.2e−2 | 1.3e−3 | 7e−4 | 4e−4 |

| 40231 | |||||||

ag(x) designations in octal notation.

The CRC message decoder is shown in Figure 8.24. As the decoded message data passes by the CRC decoder, the processing is identical to that of the CRC encoder creating parity, or check data, corresponding to the received message. Upon completion of the message, the switches, indicated by the dashed lines, are changed and the newly created message check data is compared to those appended to the received message. If a disagreement between the two sets of check data is detected, the message is declared to be in error.

FIGURE 8.24 CRC decoder.

8.8 DATA RANDOMIZING CODES

Data randomization, or scrambling, is required in many applications to avoid long source data sequences of ones, zeros, and other nonrandom data patterns that may disrupt the demodulator automatic gain control (AGC), symbol and frequency tracking functions, and other adaptive processing algorithms. Nonrandom data patterns may also result in transmitted spectrums containing harmonics that interfere with adjacent frequency channels and randomizers ensure that the transmitted signal spectral energy conforms to the theoretical spectrum of the modulated waveform that is based on random data. Randomizers can be implemented using nonbinary PRN generators initialized with a known starting seed [45]; however, in this section, the focus is on binary PRN generators that use LFSRs that conform to irreducible primitive polynomials [38]. The randomization of the data can be viewed as low‐level data security; however, the subject of data encryption provides a high level of communication security and involves specialized and often classified topics regarding implementation and performance evaluation.

Data randomization is implemented using either synchronous or asynchronous configurations. With the binary data randomizer initialized to a known state, the randomizer is synchronized by performing the exclusive‐or logical operation of the source bits with the PRN or LFSR generated feedback bits. The derandomization, in the demodulator, is accomplished in the same way; however, the derandomizer must be synchronized to the start‐of‐message (SOM) or message frame and initialized to the known state used in the modulator. In this case, the loss of demodulator timing during the message results in catastrophic errors and missed messages. The asynchronous randomizer is a self‐synchronizing configuration that avoids the synchronization issues and catastrophic error conditions; however, error multiplication by the number of LFSR taps occurs for each received bit error.

The following example of an asynchronous randomizer generated using the 7th‐order irreducible primitive polynomial ![]() is shown in Figure 8.25a and the derandomizer is shown in Figure 8.25b. Table 8.10 lists some generator polynomials with four or less feedback taps. These generators generate m‐sequences with periods of (2r − 1)Tb where r is the order of the generator.

is shown in Figure 8.25a and the derandomizer is shown in Figure 8.25b. Table 8.10 lists some generator polynomials with four or less feedback taps. These generators generate m‐sequences with periods of (2r − 1)Tb where r is the order of the generator.

FIGURE 8.25 Binary 7th‐order data randomization (Tb = bit interval).

TABLE 8.10 Some Randomizer Generator Polynomials with ≤ 4 LFSR Tapsa

| Order | Generatorb | Taps | Order | Generatorb | Taps |

| 6 | 103 | 2 | 13 | 20033 | 4 |

| 7 | 203 | 2 | 20065 | 4 | |

| 8 | 703 | 4 | 14 | 42103 | 4 |

| 543 | 4 | 15 | 100003 | 2 | |

| 9 | 1021 | 2 | 110013 | 4 | |

| 1131 | 4 | 122003 | 4 | ||

| 10 | 2011 | 2 | 16 | 210013 | 4 |

| 3023 | 4 | 200071 | 4 | ||

| 2431 | 4 | 312001 | 4 | ||

| 11 | 4005 | 2 | 17 | 400011 | 2 |

| 5007 | 4 | 400017 | 4 | ||

| 12 | 10123 | 4 | 400431 | 4 | |

| 11015 | 4 | 18 | 1000201 | 2 |

aPeterson and Weldon [34]. Appendix C, Table C.2, © 1961 Massachusetts Institute of Technology. Reproduced by permission of The MIT Press.

bOctal notation.

8.9 DATA INTERLEAVING

Controlled correlation between adjacent symbols of a modulated waveform is often used to provide significant system performance advantages for both spectrum control and detection gains. Some examples are partial‐response modulation (PRM), continuous phase modulation (CPM), tamed frequency modulation (TFM) [46–49], and Gaussian minimum shift keying (GMSK). Using these techniques significant system performance improvements are realized through waveform spectral control. On the other hand, communication channels can result in symbol correlation causing significant system performance degradation if mitigation techniques are not included in the system design. The communication channels that result in symbol correlation include narrowband channel (filters) resulting in intersymbol interference (ISI), fading channels resulting in signal multipath and scintillation, and various forms of correlated noise channels including impulse noise cause by lightning and man‐made interference including sophisticated jammers that have the potential to result in significant performance losses.

Various types of equalizers provide excellent protection against ISI and multipath. Also, burst‐error correction codes, like the RS code, can be used to mitigate these correlated channel errors [37]. However, many FEC codes, including the most commonly used convolutional codes, require randomly distributed errors entering the decoder. Data interleavers and deinterleavers provide an effective way to mitigate the impact of correlated channel errors. An important application of data interleavers, that cannot be understated, is their role in providing coding gain in the construction of turbo and turbo‐like codes discussed in Section 8.12.

Interleavers typically operate on source or coded bits; however, deinterleavers may also operate on demodulator soft decisions and require several bits of storage for each symbol. The interleaver accepts an ordered sequence of data and outputs a reordered sequence in which contiguous input symbols are separated by some minimum number of symbols. The minimum interleaved symbol interval between contiguous input symbols is the span of the interleaver. At the deinterleaver, the reverse operation is performed restoring the original order of the data. The utility of the interleaver lies in the fact that bursts of contiguous channel errors appearing on the interleaved data will appear as individual or isolated random errors at the output of the deinterleaver. Interleavers are characterized in terms of the block length, delay, and the span of adjacent source bits in the interleaved data sequence. A good interleaver design provides a large span with uniformly distributed symbol errors in the deinterleaved sequence. Interleaver frame synchronization must be established at the demodulator. Commonly used interleavers are the block interleaver and the convolutional interleaver [50, 51], which are discussed in the following sections. The convolutional interleaver is a subset of a more general class of interleavers described by Ramsey [50] and Newman [52].

8.9.1 Block Interleavers

Block interleavers are described in terms of an (M,N) matrix of stored symbols where consecutive input symbols are written to the matrix column by column until the matrix is filled17 and then forming the interleaved data sequence by reading the contents of the matrix row by row. A block interleaver is shown in Figure 8.26 and the following examples are specialized for a (4 × 3) matrix. In these examples, the matrix elements are initialized to zero and the input symbols are represented by a sequence of decimal integers {1,2,3,…}. Upon interleaving a block of NM symbols, if the process is repeated, the last symbol of the previous block will be followed by the first symbol of the next block resulting in zero span between these two contiguous input symbols. This problem can be circumvented by randomly reordering the addressing of rows and columns from block‐to‐block. Using two data interleaver matrices in a ping‐pong fashion is often preferred to the complications associated with clocking the data in and out of the same memory area. The deinterleaving is performed in the reverse order; in that, the received symbols are written to the deinterleaver matrix row by row and read to the output column by column. With block interleavers, bursts of contiguous channel errors longer than N symbols will result in short data bursts in the deinterleaved sequence. For example, if the interleaved sequence contains a burst of errors of length JM bits, then the errors in the deinterleaved sequence can be grouped as N bursts of length ≤J bits. However, in this characterization, some of the N deinterleaved output bursts may be contiguous. In general, block interleavers do not result in a uniform distribution of deinterleaved symbol errors.

FIGURE 8.26 (M,N) block interleaver.

The following example characterizes the block interleaver for M = 4 and N = 3.

The block interleaver characteristics are summarized as follows:

The expression for the delay simplifies to delay ≥2[NM + 1] − (N + M). As mentioned previously, the span is defined as the minimum interleaved symbol interval between contiguous input symbols.

Block interleavers are conveniently applied to block codes of length n by choosing N = n and then selecting the number of interleaver rows M to correspond to the channel correlation time, that is, the channel burst‐error length, in view of the error correction capability of the FEC code. For example, consider a t‐error correcting M‐ary block code denoted as (n,k,t). In this case, choose the interleaver span as N = n with M ≥ 2 chosen to provide adequate decoder error correction in view of the burst‐error length of the channel errors. Large values of M, however, will result in long data throughput delays.

8.9.2 Convolutional Interleavers

The convolutional interleaver is a special case of four types of (N1, N2) interleavers discussed by Ramsey and denoted as types I, II, III, and IV. The major difference in the implementation between the different types is the assignment of the input and output taps and the direction of commutation of the input and output data taps. The utility of the Ramsey interleavers is that, with the proper selection of the parameters N1 and N2, the output error distribution resulting from a burst of input errors is nearly uniform. The convolutional interleaver is similar to the type II Ramsey interleaver that requires that N1 + 1 and N2 be relatively prime; however, the convolutional interleaver requires that N2 = K (N1 − 1) where K is a constant integer. An advantage of convolutional interleavers over Ramsey interleavers is that they are relatively easy to implement.

The characteristics of convolutional interleavers are similar to those of block interleavers; however, the amount of storage required at the modulator and demodulator is NK(N + 1)/2 or about one‐half of that required by a block interleaver with similar characteristics. The interleaver parameters N and K are defined in Figure 8.27. Also, the end‐to‐end delay, that is, the delay from the interleaver input to the deinterleaver out, is NK(N − 1). The operation of the convolutional interleaver is characterized by a commutator that switches the input bits through N positions with the first position passed to the output without delay. With the deinterleaver commutator properly synchronized the first interleaved symbol is switched into the (N − 1)K register, while the content of the last storage element is shifted to the deinterleaver output. The convolutional interleaver characteristics are summarized as follows:

Here, the span is defined as the interleaved symbol interval between corresponding contiguous input symbols.

FIGURE 8.27 Convolutional Interleaver Implementation.

An example of the convolutional interleaver operation is shown in the following data sequences. In this example, the interleaver and deinterleaver are initialized with all zeros and a sequence of symbols consisting of decimal integers {1,2,3,…}, is applied to the input. The resulting out sequences are tabulated below for the case N = 3, K = 1 and the case N = 3, K = 2.

N = 3, K = 1 Interleaver:

| Input Sequence: | 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, … |

| Int’l Out: | 1, 0, 0, 4, 2, 0, 7, 5, 3, 10, 8, 6, 13, 11, 9, 16, 14, 12, 19, 17, 15, 22, 20, 18, … |

| Deint’l Out: | 0, 0, 0, 0, 0, 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, … |

N = 3, K = 2 Interleaver:

| Input sequence: | 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, … |

| Int’l out: | 1, 0, 0, 4, 0, 0, 7, 2, 0, 10, 5, 0, 13, 8, 3, 16, 11, 6, 19, 14, 9, 22, 17, 12, … |

| Deint’l out: | 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, … |

An example of the burst‐error characteristics of the convolutional interleaver is shown in the following data sequences for the N = 3, K = 2 interleaver with a burst of ten (10) contiguous channel errors following the 30th source symbol. In this example, the error bits are denoted as an X and the data record begins at the 30th symbol.

N = 3, K = 2 interleaver with 10 bit‐error burst, denoted as X, beginning at bit 30:

| Input sequence: | 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, |

| Deint’l input: | 18, X, X, X, X, X, X, X, X, X, X, 35, 30, 43, 38, 33, 46, 41, 36, 49, |

| Deint’l out: | 18, 19, 20, X, 22, 23, X, 25, X, X, 28, X, 30, X, X, 33, X, 35, 36, X, 38, 39, X, 41, 42, 43, … |

Evaluations of the interleaver performance include the uniformity of deinterleaved error events for various channel burst‐error lengths and the probabilities associated with the span of the interleaved sequence relative to the contiguous source symbols. However, the ultimate evaluation of the interleaver performance is the bit‐error probability for a specified waveform modulation and FEC code under various channel fading conditions.

8.10 WAGNER CODING AND DECODING

The Wagner code is a single‐error‐correcting block code denoted by (N, N − 1) where N is the block length containing N − 1 information bits. A typical block structure for the (N, N − 1) Wagner code is shown in Figure 8.28 where di = {0,1}, i = 1, 2, …, N − 1 represents the binary information bits and the single parity bit dN is determined from the modulo‐2 sum of the information bits as ![]() . The Wagner decoding process is quite simple. A hard decision is made to obtain an estimate of each received bit and the corresponding soft decisions are saved. A modulo‐2 addition is then performed on the five information bits and the parity bit. If the resulting parity bit is zero, the character is assumed to be correct. However, if the parity is one, the bit estimate corresponding to the smallest soft‐decision magnitude is inverted and the character is declared as being correct.

. The Wagner decoding process is quite simple. A hard decision is made to obtain an estimate of each received bit and the corresponding soft decisions are saved. A modulo‐2 addition is then performed on the five information bits and the parity bit. If the resulting parity bit is zero, the character is assumed to be correct. However, if the parity is one, the bit estimate corresponding to the smallest soft‐decision magnitude is inverted and the character is declared as being correct.

FIGURE 8.28 Wagner code structure.

The (6,5) Wagner code is sometimes applied to 7‐bit American Standard Code for Information Interchange (ASCII) character transmission. The first bit of the ASCII character is the start bit, which is followed by five information bits, and the seventh bit is the stop bit as shown in Figure 8.29. The start and stop bits are space or binary zero bits which are normally used for synchronization. In this situation, the Wagner coding is performed by replacing the stop bit with the parity bit formed as ![]() with

with ![]() , so the (6,5) Wagner code includes to the information bits and the stop bit. With this coding structure, the start bit is available for character synchronization.

, so the (6,5) Wagner code includes to the information bits and the stop bit. With this coding structure, the start bit is available for character synchronization.

FIGURE 8.29 Seven‐bit ASCII character with Wagner coding.

When differential coding is applied to the source data, as for example with compatible shift keying (CSK), the demodulation processing will result in two contiguous bit errors for every single‐error event. Therefore, to ensure detection of single‐error events, the parity check must be performed on every other bit. In this case, the start bit is used as the parity bit and is chosen to satisfy odd parity as ![]() with

with ![]() . Note that the parity check will detect all single‐error events with differential encoding; however, special consideration must be given to pairs of bit errors that span two adjacent characters because a detection error in the stop bit position will result in an error in the start (parity) bit in the next character. To ensure proper decoding in this case, a total of eight magnitude bits must be compared prior to the error correction. When the parity check fails on the current character block and the smallest magnitude is associated with the stop bit of the preceding character block, then the current parity bit is also assumed to be in error also and no error correction is performed on the current information bits.

. Note that the parity check will detect all single‐error events with differential encoding; however, special consideration must be given to pairs of bit errors that span two adjacent characters because a detection error in the stop bit position will result in an error in the start (parity) bit in the next character. To ensure proper decoding in this case, a total of eight magnitude bits must be compared prior to the error correction. When the parity check fails on the current character block and the smallest magnitude is associated with the stop bit of the preceding character block, then the current parity bit is also assumed to be in error also and no error correction is performed on the current information bits.

In the following sections, the character‐error performance of the Wagner code is examined. First, approximate expressions for the character‐error probability are developed for the three embodiments of the Wagner code discussed earlier, namely, the raw (N, N − 1) Wagner code, and Wagner coding applied to the 7‐bit ASCII character with and without differential encoding. The results of these analyses are approximate because the location of the bit in error is assumed to be known. The theoretical character‐error performance is then established based on using the soft decisions to identify the most likely bit to be in error. This analysis is exact in the sense that the soft decisions are used to locate the most probable bit in error.

8.10.1 Wagner Code Performance Approximation

The character‐error performance of a Wagner coded block can be approximated by assuming that all single errors are corrected and that all other error conditions are uncorrected and result in a character error. In this case, the character‐error probability of the raw (N, N − 1) Wagner code is simply

where the probability of a correct character, under the assumption of independent error events with probability Pbe, is given by

This result is based on the binomial distribution [53] and Pbe is the bit‐error probability of the underlying waveform modulation.

For the Wagner coded 7‐bit ASCII character without differential coding, the probability of a correct character is given by

With differential coding, the 7‐bit ASCII character is correctly decoded if a single bit‐error occurs among the six information bits. An error in the parity bit results in an error in the following information bits so the ASCII character cannot be corrected. The resulting probability of a correct character is then

The approximate performance results for the raw (N, N − 1) Wagner code are shown in Figure 8.30 for antipodal waveform modulation with AWGN. Figure 8.31 shows the approximate performance when the Wagner coded is applied to the 7‐bit ASCII character as discussed before. The signal‐to‐noise ratio (γc) for the underlying antipodal signaling of the coded bits is expressed in term to the code‐bit bandwidth 1/Tc, where Tc is the duration of the coded bit. The performance, however, is plotted as a function of the signal‐to‐noise ratio (γb) measured in the information bit bandwidth 1/Tb and the relationship is given by

FIGURE 8.30 Approximate performance of Wagner coded data (N‐bit character).

FIGURE 8.31 Approximate performance of Wagner coded data (7‐bit ASCII character).

For the raw Wagner code, ![]() and for the Wagner coded 7‐bit ASCII character Tb/Tc = 7/5 (= 1.46 dB).

and for the Wagner coded 7‐bit ASCII character Tb/Tc = 7/5 (= 1.46 dB).

8.10.2 (N, N − 1) Wagner Code Performance

In this section, the relationship between the bit‐error probability at the input to the Wagner decoder and the resulting character‐error probability at the decoder output is established. The theoretical performance in the AWGN channel is examined and the results are compared to Monte Carlo simulation results. The theoretical results apply for antipodal signaling such as BPSK, QPSK, OQPSK, or minimum shift keying (MSK); however, the simulation results are based on MSK waveform modulation with ideal symbol timing and phase tracking in the demodulator.

The general expression for the probability of a correctly received Wagner coded block is given by

When the noise is independent and identically distributed (iid) from bit to bit, this result can be expressed as

In the remainder of this section, the probability of correctly locating a single‐error event is analyzed using the stored soft decisions out of the optimally sampled matched filter.

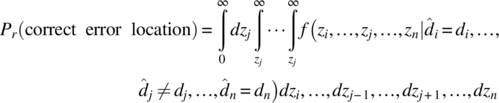

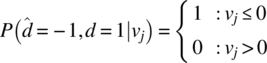

The location of a single‐error event in the j‐th bit is based upon the magnitude zj of the j‐th decision variable vj being less than the magnitudes zi of all the other bit decision variables vi, i = 1, …, N such that i ≠ j. Given the transmitted bit sequence {di} and the received sequence {![]() }, the probability of correctly locating the error is given by the general expression

}, the probability of correctly locating the error is given by the general expression

where f(−) is the conditional joint density function of the random variables ![]() . With independent source data, a memoryless channel, and ideal receiver timing, the AWGN channel decision variables, vi, are iid with distribution N(di, σn) for which the earlier result specializes to

. With independent source data, a memoryless channel, and ideal receiver timing, the AWGN channel decision variables, vi, are iid with distribution N(di, σn) for which the earlier result specializes to

The two conditioned distributions are found from a form of Bayes rule given by

Conditioning on an error event, that is, ![]() , the following relationships apply

, the following relationships apply

and

Upon combining these results with the normal distribution ![]() , the numerator in (8.58) becomes

, the numerator in (8.58) becomes

Using this result to evaluate the denominator in (8.58) conditioned on an error, it is noted that the denominator is simply the probability of a bit error, that is,

Since these results apply for vj > 0, upon substituting zj for vj in (8.61) the solution to (8.58) becomes

Evaluation of (8.58) conditioned on a correct decision, that is, ![]() , and proceeding in a similar manner leads to the conditional distribution

, and proceeding in a similar manner leads to the conditional distribution

Using (8.64) to evaluate the second integral in (8.57) results in

where Q(x) = 1 − Φ(x) and Φ(x) is the probability integral. Substituting (8.65) and (8.63) into (8.57) and, in turn, substituting (8.57) into the expression (8.55) for Pcc gives

This expression is computed numerically and the result is used to compute the character‐error probability expressed as

The solid curves in Figures 8.32 and 8.33 show the performance as a function of Eb/No for the (6,5) and (13,12) Wagner codes, respectively. The numerical integration is based on a resolution of 20 samples for each standard deviation σn over a range of 10 standard deviations above the mean value at zj = |dj|. The discrete circled data points for the (6,5) Wagner code are based on Monte Carlo simulations using 500 Kbits at each signal‐to‐noise ratio. Upon comparing these results with the approximations in Figure 8.30, it is seen that the approximate results are optimistic by about 1.0 dB at Pce = 10−5; owing to the fact that the bit‐error locations were assumed to be known.

FIGURE 8.32 Performance of MSK demodulator with (6,5) Wagner code.

FIGURE 8.33 Performance of MSK demodulator with (13,12) Wagner code.

The dotted curve in Figures 8.32 and 8.33 represent the character‐error performance if the information bits were not coded with the parity bit. These results are evaluated as

for N = 5 and 12, respectively, and they provide a reference for determining the coding gain of the Wagner coded characters which is about 2.0 dB at Pce = 10−5. The dotted curve in these figures is simply the uncoded bit‐error performance of antipodal signaling and is used to compute uncoded (5,5) and (12,12) Pce as a point of reference.

8.11 CONVOLUTIONAL CODES

The convolutional decoding discussed in this section focuses on the Viterbi decoding algorithm as it applies to a trellis decoding structure. Prior to the discovery of the trellis decoding structure, sequential decoding of convolutional codes, described by Wozencraft [54] and Fano [55], was used. Sequential decoding is based on metric computations on various branches through a code‐tree decoding structure [56]. In this case, the decoded data is associated with the branches or paths through the code tree that yield the largest metric. Heller and Jacobs [57] compare the advantages and disadvantages of the Viterbi and sequential decoding techniques in consideration of error performance, decoder delay, decoder termination sequences, code rates, quantization, and the sensitivity to channel conditions. The processing complexity is a major factor that distinguishes these two decoding procedures and, by this measure, Viterbi decoding is applicable to short constraint length codes (K ≤ 9); otherwise, the sequential decoding is more processing efficient.

The following description of convolutional coding draws upon the vast resource of research and publications on the subject [58–64] and other references cited throughout this section. Convolutional codes, unlike block codes, do not involve algebraic concepts in the decoding process and, therefore, result in more intuitive and, to some extent, straightforward processing algorithms. Throughout the following descriptions, binary data is considered and sequences are represented by polynomials ![]() with binary coefficients bi ∈ {1,0}. In this case, multiplication and summation of polynomials is performed using GF(2) arithmetic. For example, multiplication of two polynomials f(x) and f′(x) corresponds the coefficient multiplication

with binary coefficients bi ∈ {1,0}. In this case, multiplication and summation of polynomials is performed using GF(2) arithmetic. For example, multiplication of two polynomials f(x) and f′(x) corresponds the coefficient multiplication ![]() and summation is simply the modulo‐2 summation of the bi and

and summation is simply the modulo‐2 summation of the bi and ![]() coefficients.

coefficients.

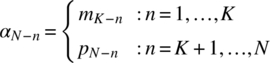

Convolutional encoders accept a continuous stream of source data at the rate Rb bps and generate a continuous stream of code bits, at a rate of ![]() where rc is the code rate, defined as

where rc is the code rate, defined as

The parameter k represents the number of source data bits, corresponding to a q‐ary symbol (q = 2k), that are entered into the encoder each code block and n represents the corresponding number of coded bits.

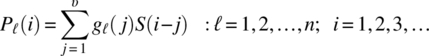

A single parity bit is influenced by υ = kK source bits and the n encoder parity bits are generated by the convolutional sum, expressed as

where gℓ(j) represents the ℓ‐th subgenerator of the code, S(j) is the stored array of source bits, and Pℓ(i) are the output parity bits corresponding to the ℓ‐th subgenerator. The parameter K is the constraint length of the code and corresponds to the number of stored M‐ary symbols during each code block. The index i, corresponding to the source data, can be arbitrarily long, distinguishing the convolutional codes from fixed‐length block codes. The encoder described by (8.70) can be viewed as a transversal, or finite impulse response (FIR), filter with unit‐pulse response described by the code generator.

The following description of the convolutional encoder is characterized in terms of the coding interval, or code block,18 defined as the encoder processing required to generate n parity bits in response to an input symbol of k source bits. This significantly simplifies the convolutional encoding and decoding description and notation. In the demodulator, the convolutional decoding is analogous to characterizing the trellis decoding as a recursion of multistate symbol decoding intervals.19 The encoding and decoding recognizes the correlative properties of preceding symbols, for example, the preceding K − 1 source symbols are processed in the encoder as described in the following.

In general, the convolutional encoder is implemented using binary arithmetic to compute the parity symbols by clocking a block of k source bits into shift registers and computing the corresponding n parity bits over the υ source bits stored in the encoder memory. To maintain real‐time operation, the duration of the n‐tuple of parity bits must equal the input symbol duration. The implementation of a GF(2k) convolution encoder is shown in Figure 8.34 where the binary arithmetic involves exclusive‐or operations.

FIGURE 8.34 Generalized GF(2k) nonsystematic convolutional encoder (rate rc = k/n, constraint length K).

The implementation in Figure 8.34 corresponds to one code block with the most recent k‐bit source symbol corresponding to the storage location 1 with the previous K − 1 symbols in the storage locations 2 through K. Following the message of length Tm seconds, the kK flush bits are used to return the encoder to the initial encoder state. Typically, the encoder is initialized to the zero state. The flush bits are also used in the decoder as described in Section 8.11.2. Each source symbol corresponds to a (k − 1)‐dimensional polynomial

where the bit bl0 is the rightmost or LSB of the input symbol. Taken collectively, the entire K symbols are denoted as the (υ − 1)‐dimensional polynomial

In Figure 8.34, the dotted lines, connecting the stored symbols to the subgenerators of the code, denote multiple connections from each subgenerator corresponding to the binary‐one coefficients of the (υ − 1)‐dimensional generator polynomial, expressed as

In the context of the code‐block processing, the subgenerators and stored data bits can be viewed as vectors with the parity formed by the scalar product ![]() .

.

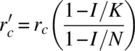

An important consideration in the modulator encoding is the mapping of the code bits20 to the waveform modulation symbol as depicted in Figure 8.35 [65, 66]. The encoder shown in Figure 8.34 results in a nonsystematic code, in that the coded output contains only parity‐check bits; however, the code‐bit mapping also applies to systematic codes that include the k source bits plus n − k appended parity‐check bits. In either case, the information bits or the most significant bit (MSB) parity‐check bits must be mapped to the most protected states of the modulated waveform. If an interleaver is used, the number of interleaver columns is selected to accommodate an integer number of transmitted symbol. For example, for a rate rc = 1/n code using MPSK symbol modulation with modulation efficiency ![]() and n = multiples of rm, a row‐column interleaver may be designed with rm columns and n/rm rows. In this example, rate matching is not necessary; however, puncturing of low‐rate codes has many advantages and requires rate matching to the symbol modulation waveform.