Understanding the profiling features in Visual Studio 2010

Understanding available profiling types

Using Performance Explorer to configure profiling sessions

Profiling reports and available views

Profiling JavaScript

One of the more difficult tasks in software development is determining why an application performs slowly or inefficiently. Before Visual Studio 2010, developers were forced to turn to external tools to effectively analyze performance. Fortunately, Visual Studio 2010 includes profiling tools that are fully integrated with both the IDE and other Visual Studio 2010 features.

This chapter introduces Visual Studio 2010's profiling tools. Note that the profiling features discussed in this chapter are available in Visual Studio 2010 Premium Edition or higher.

You'll learn how to use the profiler to identify problems such as inefficient code, over-allocation of memory, and bottlenecks. You will learn about the two main profiling options (sampling and instrumentation), including how to use each and when each should be applied. Visual Studio 2010 offers two sampling options: one for CPU sampling, and the other for memory allocation sampling. This chapter examines both. It also briefly reviews concurrency profiling, a method introduced in Visual Studio 2010 for detecting thread contention.

After learning how to run profiling analyzers, you will learn how to use the detailed reporting features that enable you to view performance metrics in a number of ways, including results by function, caller/callee inspection, call tree details, and other views.

Not all scenarios can be supported when using the Visual Studio 2010 IDE. For times when you need additional flexibility, you will learn about the command-line options for profiling applications. This will enable you to integrate profiling with your build process and to use some advanced profiling options.

Profiling is the process of observing and recording metrics about the behavior of an application. Profilers are tools used to help identify application performance issues. Issues typically stem from code that performs slowly or inefficiently, or code that causes excessive use of system memory. A profiler helps you to more easily identify these issues so that they can be corrected.

Sometimes, an application may be functionally correct and seem complete, but users quickly begin to complain that it seems "slow." Or, perhaps you're only receiving complaints from one customer, who finds a particular feature takes "forever" to complete. Fortunately, Visual Studio 2010 profiling tools can help in these situations.

A common use of profiling is to identify hotspots, sections of code that execute frequently, or for a long duration, as an application runs. Identifying hotspots enables you to turn your attention to the code that will provide the largest benefit from optimization. For example, halving the execution time of a critical method that runs 20 percent of the time can improve your application's overall performance by 10 percent.
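The arithmetic behind that estimate can be checked with a short sketch. The HotspotEstimate class and its FractionSaved method are hypothetical names used only for illustration; they are not part of Visual Studio or the profiler.

```csharp
using System;

public static class HotspotEstimate
{
    // Fraction of total run time saved when a method that accounts for
    // 'shareOfRunTime' of execution becomes 'speedupFactor' times faster.
    public static double FractionSaved(double shareOfRunTime, double speedupFactor)
    {
        if (shareOfRunTime < 0.0 || shareOfRunTime > 1.0)
            throw new ArgumentOutOfRangeException("shareOfRunTime");
        if (speedupFactor <= 0.0)
            throw new ArgumentOutOfRangeException("speedupFactor");

        return shareOfRunTime * (1.0 - 1.0 / speedupFactor);
    }

    public static void Main()
    {
        // A method using 20 percent of run time, made twice as fast,
        // saves 0.20 * (1 - 1/2) = 0.10 of the total run time.
        double saved = FractionSaved(0.20, 2.0);
        Console.WriteLine("Fraction of run time saved: " + saved);
    }
}
```

The same formula shows why hotspots matter: doubling the speed of a method that accounts for only 2 percent of run time would save just 1 percent overall.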

Most profiling tools fall into one (or both) of two types: sampling and instrumentation.

A sampling profiler takes periodic snapshots (called samples) of a running application, recording the status of the application at each interval, including which line of code is executing. Sampling profilers typically do not modify the code of the system under test, favoring an outside-in perspective.

Think of a sampling profiler as being like a sonar system. It periodically sends out sound waves to detect information, collecting data about how the sound reflects. From that data, the system displays the locations of detected objects.
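To make the idea concrete, here is a minimal simulation of sampling, not a real profiler. It walks a made-up timeline of function names and durations and records which function is "executing" at each sampling tick. The SamplingSketch class, the function names, and the durations are all assumptions chosen for illustration.

```csharp
using System;
using System.Collections.Generic;

public static class SamplingSketch
{
    // Walks a simulated timeline of (function name, duration in ticks) and
    // counts which function is active at every interval-th tick.
    public static Dictionary<string, int> Sample(
        IList<KeyValuePair<string, int>> timeline, int interval)
    {
        if (interval <= 0)
            throw new ArgumentOutOfRangeException("interval");

        var hits = new Dictionary<string, int>();
        int segmentStart = 0;      // tick at which the current segment begins
        int nextSample = interval; // tick at which the next sample is taken

        foreach (KeyValuePair<string, int> segment in timeline)
        {
            int segmentEnd = segmentStart + segment.Value;

            // Record every sample that falls inside this segment.
            while (nextSample <= segmentEnd)
            {
                int count;
                hits.TryGetValue(segment.Key, out count);
                hits[segment.Key] = count + 1;
                nextSample += interval;
            }
            segmentStart = segmentEnd;
        }
        return hits;
    }

    public static void Main()
    {
        // Hypothetical workload: two short functions around one long one.
        var timeline = new List<KeyValuePair<string, int>>
        {
            new KeyValuePair<string, int>("ParseInput", 30),
            new KeyValuePair<string, int>("Compute", 950),
            new KeyValuePair<string, int>("WriteOutput", 20)
        };

        foreach (KeyValuePair<string, int> hit in Sample(timeline, 100))
            Console.WriteLine(hit.Key + ": " + hit.Value + " samples");
    }
}
```

With a 100-tick interval, the long-running Compute segment collects nine of the ten samples, while the 30-tick ParseInput segment is never observed. That is the trade-off of sampling: low overhead, but short-lived code can be missed.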

The other type, an instrumentation profiler, takes a more invasive approach. Before running analysis, the profiler adds tracing markers (sometimes called probes) at the start and end of each function. This process is called instrumenting an application. Instrumentation can be performed in source code or, in the case of Visual Studio, by directly modifying an existing assembly. When the profiler is run, those probes are activated as the program execution flows in and out of instrumented functions. The profiler records data about the application and which probes were hit during execution, generating a comprehensive summary of what the program did.

Think of an instrumentation profiler as the traffic data recorders you sometimes see while driving. The tubes lie across the road and record whenever a vehicle passes over. By collecting the results from a variety of locations over time, an approximation of traffic flow can be inferred.
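The probe idea can be sketched by hand in source code. The example below is an illustration only: the ProbeSketch class and its members are hypothetical, and Visual Studio inserts its own probes into the compiled assembly rather than using anything like this. The sketch is also not thread-safe.

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;

public static class ProbeSketch
{
    // Per-function totals gathered by the probes.
    public sealed class Stats
    {
        public int Calls;
        public long ElapsedTicks;
    }

    private static readonly Dictionary<string, Stats> Results =
        new Dictionary<string, Stats>();

    // Entry probe: starts a timer. Disposing the returned object acts as
    // the exit probe and records the call and its elapsed time.
    public static IDisposable Enter(string functionName)
    {
        return new Probe(functionName);
    }

    public static Stats GetStats(string functionName)
    {
        Stats stats;
        return Results.TryGetValue(functionName, out stats) ? stats : new Stats();
    }

    private sealed class Probe : IDisposable
    {
        private readonly string _name;
        private readonly Stopwatch _timer;

        public Probe(string name)
        {
            _name = name;
            _timer = Stopwatch.StartNew();
        }

        public void Dispose()
        {
            _timer.Stop();
            Stats stats;
            if (!Results.TryGetValue(_name, out stats))
            {
                stats = new Stats();
                Results[_name] = stats;
            }
            stats.Calls++;
            stats.ElapsedTicks += _timer.ElapsedTicks;
        }
    }

    // A hand-instrumented function: the using block brackets the body.
    public static int SumTo(int n)
    {
        using (Enter("SumTo"))
        {
            int total = 0;
            for (int i = 1; i <= n; i++)
                total += i;
            return total;
        }
    }

    public static void Main()
    {
        for (int i = 0; i < 3; i++)
            SumTo(1000);

        Stats stats = GetStats("SumTo");
        Console.WriteLine("SumTo: " + stats.Calls + " calls, " + stats.ElapsedTicks + " ticks");
    }
}
```

Unlike sampling, every call is counted exactly, but each call also pays the cost of the probe. Only functions that carry a probe appear in the results.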

A key difference between sampling and instrumentation is that sampling profilers will observe your applications while running any code, including calls to external libraries (such as the .NET Framework). Instrumentation profilers gather data only for the code that you have specifically instrumented.

Visual Studio 2010 offers powerful profiling tools that you can use to analyze and improve your applications. The profiling tools offer both sampling and instrumented approaches. Like many Visual Studio features, profiling is fully integrated with the Visual Studio IDE and other Visual Studio features, such as work item tracking, the build system, version control check-in policies, and more.

Note

The profiling tools in Visual Studio can be used with both managed and unmanaged applications, but the object allocation tracking features only work when profiling managed code.

The profiling tools in Visual Studio are based upon two tools that have been used for years internally at Microsoft. The sampling system is based on the Low-Overhead Profiler (LOP) tool, and the instrumentation system is based on the Call Attributed Provider (CAP) tool. Of course, Microsoft did not simply repackage existing internal tools and call it a day. They invested considerable development effort to add new capabilities and to fully integrate them with other Visual Studio features.

The Visual Studio developers have done a good job making the profiler easy to use. You follow four basic steps to profile your application:

Create a performance session, selecting a profiling method (CPU sampling, instrumentation, memory sampling, or concurrency) and its target(s).

Use the Performance Explorer to view and set the session's properties.

Launch the session, executing the application and profiler.

Review the collected data as presented in performance reports.

Each step is described in the following sections.

Before describing how to profile an application, let's create a sample application that you can use to work through the content of this chapter. Of course, this is only for demonstration, and you can certainly use your own existing applications instead.

Create a new C# Console Application and name it DemoConsole. This application will demonstrate some differences between using a simple class and a structure.

First, add a new class file called WidgetClass.cs with the following class definition:

    namespace DemoConsole
    {
        public class WidgetClass
        {
            private string _name;
            private int _id;

            public int ID
            {
                get { return _id; }
                set { _id = value; }
            }

            public string Name
            {
                get { return _name; }
                set { _name = value; }
            }

            public WidgetClass(int id, string name)
            {
                _id = id;
                _name = name;
            }
        }
    }

Now, let's slightly modify that class to make it a value type. Make a copy of the WidgetClass.cs file named WidgetValueType.cs and open it. To make WidgetClass into a structure, change the word class to struct. Now, rename the two places you see WidgetClass to WidgetValueType and save the file.

You should have a Program.cs already created for you by Visual Studio. Open that file and add the following two lines in the Main method:

    ProcessClasses(2000000);
    ProcessValueTypes(2000000);

Add the following code to this file as well:

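            // ArrayList requires "using System.Collections;" at the top of Program.cs.

            // Fills an ArrayList with WidgetClass instances, then copies each Name.
            public static void ProcessClasses(int count)
            {
                ArrayList widgets = new ArrayList();
                for (int i = 0; i < count; i++)
                    widgets.Add(new WidgetClass(i, "Test"));

                string[] names = new string[count];
                for (int i = 0; i < count; i++)
                    names[i] = ((WidgetClass)widgets[i]).Name;
            }

            // Same work as ProcessClasses, using the WidgetValueType structure.
            public static void ProcessValueTypes(int count)
            {
                ArrayList widgets = new ArrayList();
                for (int i = 0; i < count; i++)
                    widgets.Add(new WidgetValueType(i, "Test"));

                string[] names = new string[count];
                for (int i = 0; i < count; i++)
                    names[i] = ((WidgetValueType)widgets[i]).Name;
            }
        }   // closes the Program class
    }       // closes the DemoConsole namespace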

You now have a simple application that performs identical operations on a class and a similar structure. Each method creates an ArrayList and adds 2 million instances of its type (WidgetClass or WidgetValueType). It then reads through the ArrayList, reading the Name property of each instance and storing that name in a string array. You'll see how the seemingly minor differences between the class and the structure affect the speed of the application, the amount of memory used, and the .NET garbage collection process.
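Before running the profiler, a rough manual measurement can serve as a sanity check. The sketch below is an assumption-laden stand-in, not a replacement for the profiling tools: the TimingSketch namespace, the Harness class, and the Measure method are hypothetical, and the widget types are redefined compactly so the example is self-contained. It uses Stopwatch for elapsed time and GC.CollectionCount for generation-0 collections, and the results will vary from machine to machine.

```csharp
using System;
using System.Collections;
using System.Diagnostics;

namespace TimingSketch
{
    public class WidgetClass
    {
        public int ID { get; set; }
        public string Name { get; set; }
        public WidgetClass(int id, string name) { ID = id; Name = name; }
    }

    public struct WidgetValueType
    {
        private readonly int _id;
        private readonly string _name;
        public int ID { get { return _id; } }
        public string Name { get { return _name; } }
        public WidgetValueType(int id, string name) { _id = id; _name = name; }
    }

    public static class Harness
    {
        public static string[] ProcessClasses(int count)
        {
            ArrayList widgets = new ArrayList();
            for (int i = 0; i < count; i++)
                widgets.Add(new WidgetClass(i, "Test"));

            string[] names = new string[count];
            for (int i = 0; i < count; i++)
                names[i] = ((WidgetClass)widgets[i]).Name;
            return names;
        }

        public static string[] ProcessValueTypes(int count)
        {
            ArrayList widgets = new ArrayList();
            for (int i = 0; i < count; i++)
                widgets.Add(new WidgetValueType(i, "Test"));

            string[] names = new string[count];
            for (int i = 0; i < count; i++)
                names[i] = ((WidgetValueType)widgets[i]).Name;
            return names;
        }

        // Runs 'work' once and prints elapsed time and gen-0 collections.
        public static void Measure(string label, Action work)
        {
            int gen0Before = GC.CollectionCount(0);
            Stopwatch timer = Stopwatch.StartNew();
            work();
            timer.Stop();
            int gen0 = GC.CollectionCount(0) - gen0Before;
            Console.WriteLine(label + ": " + timer.ElapsedMilliseconds +
                              " ms, " + gen0 + " gen-0 collections");
        }

        public static void Main()
        {
            Measure("Classes", () => ProcessClasses(2000000));
            Measure("Value types", () => ProcessValueTypes(2000000));
        }
    }
}
```

A measurement like this reports only totals. It cannot say which functions or allocations are responsible, which is the question the profiler reports answer.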

To begin profiling an application, you must first create a performance session. This is normally done using the Performance Wizard, which walks you through the most common settings. You may also create a blank performance session or base a new performance session on a unit test result. Each of these methods is described in the following sections.

The easiest way to create a new performance session is to use the Performance Wizard. In Visual Studio 2010, there is a new menu item called Analyze. That is where you will find the Performance Wizard and other profiler menu items. Select Analyze

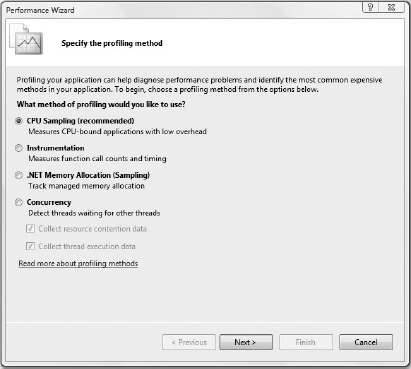

The first step, shown in Figure 9-1, is to select the profiling method.

Those of you familiar with this screen from Team System 2008 will notice the new profiling options. As mentioned earlier, Visual Studio 2010 has the following four profiling options:

CPU Sampling

Instrumentation

.NET Memory Allocation (Sampling)

Concurrency

CPU Sampling is the recommended method to get started, and is chosen by default, as you see in Figure 9-1.

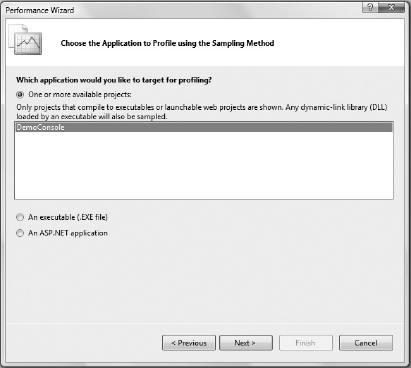

The second step, shown in Figure 9-2, is to select the application you will profile. In this case, you will profile the recently created DemoConsole application. You should see the DemoConsole application listed under "One or more available projects." If there are multiple applications listed there, you can select more than one to profile.

As you see in Figure 9-2, with Visual Studio 2010 you can also profile an executable (or .exe file) or an ASP.NET application. If you choose to profile an executable, then you must provide the path for the executable with any command-line arguments and the start-up directory. If you choose to profile an ASP.NET application, you must supply the URL for the Web application. Select the DemoConsole application as the target for profiling.

The final step in the wizard summarizes the selections made in Step 1 and Step 2. Click Finish to complete the wizard and create your new performance session. Note that, in Visual Studio 2010, the profiling session is set to start as soon as the wizard finishes. This is because the check box labeled "Launch profiling after the wizard finishes" is enabled by default, as shown in Figure 9-3. To save the settings and start a profiling session at a later time, disable this check box and click Finish. This check box is a new addition in Visual Studio 2010.

Although you can now run your performance session, you may want to change some settings. These settings are described later in this chapter in the section, "Setting General Session Properties."

There may be times (for example, when you're profiling a Windows Service) when manually specifying all of the properties of your session would be useful or necessary. In those cases, you can skip the Performance Wizard and manually create a performance session.

Create a blank performance session by selecting Analyze

After creating the blank performance session, you must manually specify the profiling mode, target(s), and settings for the session. As mentioned previously, performance session settings are described later in this chapter in the section "Setting General Session Properties."

The third option for creating a new performance session is from a unit test. Refer to Chapter 13 for a full description of the unit testing features in Visual Studio 2010.

There may be times when you have a test that verifies the processing speed (perhaps relative to another method or a timer) of a target method. Perhaps a test is failing because of system memory issues. In such cases, you might want to use the profiler to determine what code is causing problems.

To create a profiling session from a unit test, first run the unit test. Then, in the Test Results window, right-click on the test and choose Create Performance Session from the context menu, as shown in Figure 9-4.

Visual Studio 2010 then creates a new performance session with the selected unit test automatically assigned as the session's target. When you run this performance session, the unit test will be executed as normal, but the profiler will be activated and collect metrics on its performance.

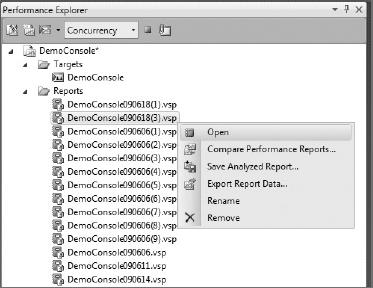

Once you have created your performance session, you can view it using the Performance Explorer. The Performance Explorer, shown in Figure 9-5, is used to configure and execute performance sessions and to view the results from the performance sessions.

The Performance Explorer features two folders for each session: Targets and Reports. Targets specifies which application(s) will be profiled when the session is launched. Reports lists the results from each of the current session's runs. These reports are described in detail later in this chapter.

Performance Explorer also supports multiple sessions. For example, you might have one session configured for sampling, and another for instrumentation. You should rename them from the default "Performance X" names for easier identification.

If you accidentally close a session in Performance Explorer, you can reopen it by using the Open option of the File menu. You will likely find the session file (ending with .psess) in your solution's folder.

Whether you used the Performance Wizard to create your session or added a blank one, you may want to review and modify the session's settings. Right-click on the session name (for example, DemoConsole) and choose Properties (refer to Figure 9-5). You will see the Property Pages dialog for the session. It features several sections, described next.

Note

This discussion focuses on the property pages that are applicable to all types of profiling sessions. These include the General, Launch, Tier Interactions, CPU Counters, Windows Events, and Windows Counters pages. The other pages each apply only to a particular type of profiling. The Sampling page is described later in this chapter in the section "Configuring a Sampling Session," and the Binaries, Instrumentation, and Advanced pages are described in the section "Configuring an Instrumentation Session," later in this chapter.

Figure 9-6 shows the General page of the Property Pages dialog.

The "Profiling collection" panel of this dialog reflects your chosen profiling type (that is, Sampling, Instrumentation, or Concurrency).

The ".NET memory profiling collection" panel enables the tracking of managed types. When the first option, "Collect .NET object allocation information," is enabled, the profiling system will collect details about the managed types that are created during the application's execution. The profiler will track the number of instances, the amount of memory used by those instances, and which members created the instances. If the first option is selected, then you can choose to include the second option, "Also collect .NET object lifetime information." If selected, additional details about the amount of time each managed type instance remains in memory will be collected. This will enable you to view further impacts of your application, such as its effect on the .NET garbage collector.

The options in the ".NET memory profiling collection" panel are off by default. Turning them on adds substantial overhead, and will cause both the profiling and report-generation processes to take additional time to complete. When the first option is selected, the Allocation view of the session's report is available for review. The second option enables display of the Objects Lifetime view. These reports are described later in this chapter in the section "Reading and Interpreting Session Reports."

In the "Data collection control" panel, you can choose whether the data collection control is launched when profiling starts. If you have checked the "Launch data collection control" checkbox, then, during the profiling session, you will see the "Data Collection Control" window, as shown in Figure 9-7.

Using this window, you can insert marks that are useful when analyzing the report after the profiling session is completed. To see them, choose the "Marks" view while viewing the report.

Finally, you can use the Report panel to set the name and location for the reports that are generated after each profiling session. By default, a timestamp is used after the report name so that you can easily see the date of the session run. Another default appends a number after each subsequent run of that session on a given day. (You can see the effect of these settings in Figure 9-17 later in this chapter, where multiple report sessions were run on the same day.)

For example, the settings in Figure 9-6 will run a sampling profile without managed type allocation profiling, and the data collection control will be launched. If run on January 1, 2010, it will produce a report named DemoConsole100101.vsp. Another run on the same day would produce a report named DemoConsole100101(1).vsp.
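The default naming pattern described above can be mimicked in a few lines. This is a sketch of the pattern only, based on the two example names in the text; ReportNameSketch and Build are hypothetical names, and this is not how Visual Studio itself generates report names.

```csharp
using System;
using System.Globalization;

public static class ReportNameSketch
{
    // Builds <session name><yyMMdd>[(<n>)].vsp, where n is the number of
    // earlier runs of the same session on that day.
    public static string Build(string sessionName, DateTime runDate, int priorRunsThatDay)
    {
        string stamp = runDate.ToString("yyMMdd", CultureInfo.InvariantCulture);
        string suffix = priorRunsThatDay > 0
            ? "(" + priorRunsThatDay.ToString(CultureInfo.InvariantCulture) + ")"
            : string.Empty;
        return sessionName + stamp + suffix + ".vsp";
    }

    public static void Main()
    {
        DateTime day = new DateTime(2010, 1, 1);
        Console.WriteLine(Build("DemoConsole", day, 0)); // DemoConsole100101.vsp
        Console.WriteLine(Build("DemoConsole", day, 1)); // DemoConsole100101(1).vsp
    }
}
```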

While the sample application has only one binary to execute and analyze, your projects may have multiple targets. In those cases, use the Launch property page to specify which targets should be executed when the profiling session is started or "launched." You can set the order in which targets will be executed using the "move up" and "move down" arrow buttons.

Targets are described later in this chapter in the section "Configuring Session Targets."

Tier Interaction profiling is a new capability introduced in Visual Studio 2010. This method captures additional information about the execution times of functions that interact with the database.

Multi-tier architecture is commonly used in many applications, with tiers for presentation, business, and database. With Tier Interaction profiling, you can now get a sense of the interaction between the application tier and the data tier, including how many calls were made and the time of execution.

As of this writing, Tier Interaction profiling only supports the capturing of execution times for synchronous calls using ADO.NET. It does not support native or asynchronous calls.

To start collecting tier interaction data, select the "Enable tier interaction profiling" checkbox, as shown in Figure 9-8.

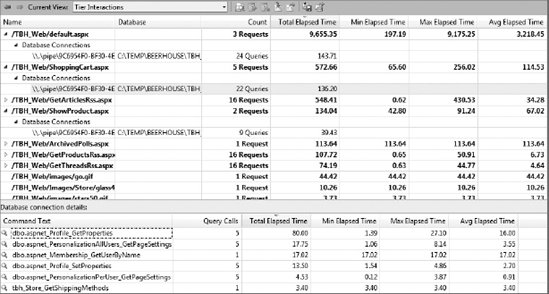

After you run the profiling with this selection turned on, you will be presented with the profiling report. Select the Tier Interactions view from the current view drop-down, shown in Figure 9-9. This example shows the results of running the profiling on the "TheBeerHouse" sample application. You can download this application from http://thebeerhouse.codeplex.com/.

This view shows the different ASPX pages that are called, and the number of times they were called. For example, the "default.aspx" page was requested three times, and the "ShoppingCart.aspx" page was requested five times.

In addition, this view also shows the associated database connections, and how many queries were called with each of the Web pages. For example, the "ShoppingCart.aspx" page made 22 queries. You will get this detail by expanding the "ShoppingCart.aspx" line.

The bottom window shows the details of the queries that were called, and the number of times each of these queries was called. This view also includes information on the timing of these queries. You can quickly see that the information captured about the interaction between the application tier and data tier can come in handy in debugging performance and bottleneck issues associated with the interaction between these two tiers.

The CPU Counters property page (shown in Figure 9-10) is used to enable the collecting of CPU-related performance counters as your profiling sessions run. Enable the counters by checking the Collect CPU Counters checkbox. Then, select the counters you wish to track from the "Available counters" list, and click the right-pointing arrow button to add them to the "Selected counters" list.

The Windows Events property page enables you to collect additional trace information from a variety of event providers. This can include items from Windows itself, such as disk and file I/O, as well as the .NET CLR. If you're profiling an ASP.NET application, for example, you can collect information from IIS and ASP.NET.

The Windows Counters property page (shown in Figure 9-11) is used to enable the collection of Windows counters. These are performance counters that can be collected at regular intervals. Enable the counters by checking the Collect Windows Counters box. Then, select the Counter Category you wish to choose from. Select the counters from the list, and click the right-pointing arrow button to add them to the list on the right.

If you used the Performance Wizard to create your session, you will already have a target specified. You can modify your session's targets with the Performance Explorer. Simply right-click on the Targets folder and choose Add Target Binary. Or, if you have a valid candidate project in your current solution, choose Add Target Project. You can also add an ASP.NET Web site target by selecting Add Existing Web Site.

Each session target can be configured independently. Right-click on any target and you will see a context menu like the one shown in Figure 9-12.

Note

The properties of a target are different from those of the overall session, so be careful to right-click on a target, not the performance session's root node.

If the session's mode is instrumentation, an Instrument option will be available instead of the "Collect samples" option. When this option is checked, the target will be included and observed when you run the session.

The other option is "Set as Launch." When you have multiple targets in a session, you should indicate which of the targets will be started when the session is launched. For example, you could have several assembly targets, each with launch disabled (unchecked), but one application .exe that uses those assemblies. In that case, you would mark the application's target with the "Set as Launch" property. When this session is launched, the application will be run, and data will be collected from the application and the other target assemblies.

If you select the Properties option, you will see a Property Pages dialog for the selected target (shown in Figure 9-13). Remember that these properties only affect the currently selected target, not the overall session.

If you choose Override Project Settings, you can manually specify the path and name of an executable to launch. You can provide additional arguments to the executable and specify the working directory for that executable as well.

Note

If the selected target is an ASP.NET application, this page will instead contain a "Url to launch" field.

The Tier Interactions property page will show up here if you have chosen the tier interaction for the performance session.

The Instrumentation property page (shown in Figure 9-14) has options to run executables or scripts before and/or after the instrumentation process occurs for the current target. You may exclude the specified executable from instrumentation as well.

Note

Because instrumenting an assembly modifies it, instrumenting a signed assembly invalidates its signature: the assembly no longer matches the signature originally generated. To work with signed assemblies, you must add a post-instrument event that calls the strong-naming tool, sn.exe. In the "Command-line" field, call sn.exe, supplying the assembly to sign and the key file to use for signing. You must also check the "Exclude from Instrumentation" option. Adding this step will sign those assemblies again, allowing them to be used as expected.

The Advanced property page is identical to the one under the General project settings. It is used to supply further command-line options to VSInstr.exe, the utility used by Visual Studio to instrument assemblies when running an instrumentation profiling session.

The Advanced property page is where you will specify the .NET Framework run-time to profile, as shown in Figure 9-15. As you see in the figure, the machine being used for demonstration purposes here has .NET 2.0 and .NET 4.0 Beta installed; hence, those two options can be seen in the drop-down.

Sampling is a very lightweight method of investigating an application's performance characteristics. Sampling causes the profiler to periodically interrupt the execution of the target application, noting which code is executing and taking a snapshot of the call stack. When sampling completes, the report will include data such as function call counts. You can use this information to determine which functions might be bottlenecks or critical paths for your application, and then create an instrumentation session targeting those areas.

Because you are taking periodic snapshots of your application, the resulting view might be inaccurate if the duration of your sampling session is too short. For development purposes, you could set the sampling frequency very high, enabling you to obtain an acceptable view in a shorter time. However, if you are sampling against an application running in a production environment, you might wish to minimize the sampling frequency to reduce the impact of profiling on the performance of your system. Of course, doing so will require a longer profiling session run to obtain accurate results.

By default, a sampling session will interrupt the target application every 10 million clock cycles. If you open the session property pages and click the Sampling page, as shown in Figure 9-16, you may select other options as well.

You can use the "Sampling interval" field to adjust the number of clock cycles between snapshots. Again, you may want a higher value (resulting in less frequent sampling) when profiling an application running in production, or a lower value for more frequent snapshots in a development environment. The exact value you should use will vary, depending on your specific hardware and the performance of the application you are profiling.
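To get a feel for what an interval means in practice, the cycle count can be converted to an approximate sampling rate. The sketch below assumes a hypothetical processor running at a constant 2 GHz. Real clock rates vary, so treat the figures as rough estimates; SamplingRateSketch and SamplesPerSecond are illustrative names only.

```csharp
using System;

public static class SamplingRateSketch
{
    // Approximate samples per second for a given clock rate and interval.
    public static double SamplesPerSecond(double clockHz, long intervalCycles)
    {
        if (intervalCycles <= 0)
            throw new ArgumentOutOfRangeException("intervalCycles");
        return clockHz / intervalCycles;
    }

    public static void Main()
    {
        const double assumedClockHz = 2.0e9; // hypothetical 2 GHz processor

        // Default interval of 10 million cycles: about 200 samples per second.
        Console.WriteLine(SamplesPerSecond(assumedClockHz, 10000000));

        // A tenfold smaller interval samples ten times as often.
        Console.WriteLine(SamplesPerSecond(assumedClockHz, 1000000));
    }
}
```

Under these assumptions, a session must run for several seconds at the default interval before it has collected even a thousand samples, which is why short sessions can give a misleading picture.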

If you have an application that is memory-intensive, you may try a session based on page faults. This causes sampling to occur when memory pressure triggers a page fault. From this, you will be able to get a good idea of what code is causing those memory allocations.

You can also sample based on system calls. In these cases, samples will be taken after the specified number of system calls (as opposed to normal user-mode calls) has been made. You may also sample based on a specific CPU performance counter (such as mispredicted branches or cache misses).

Instrumentation is the act of inserting probes or markers in a target binary, which, when hit during normal program flow, cause the logging of data about the application at that point. This is a more invasive way of profiling an application, but because you are not relying on periodic snapshots, it is also more accurate.

Note

Instrumentation can quickly generate a large amount of data, so you should begin by sampling an application to find potential problem areas, or hotspots. Then, based on those results, instrument specific areas of code that require further analysis.

When you're configuring an instrumentation session (refer to Figure 9-1 for the profiling method options), three additional property pages can be of use: Instrumentation, Binaries, and Advanced. The Instrumentation tab is identical to the Instrumentation property page that is available on a per-target basis, as shown in Figure 9-14. The difference is that the target settings are specific to a single target, whereas the session's settings specify executables to run before/after all targets have been instrumented.

Note

You have probably noticed the Profile JavaScript option in Figure 9-14. That option will be examined a little later in this chapter.

The Binaries property page is used to manage the location of your instrumented binaries. By checking "Relocate instrumented binaries" and specifying a folder, Visual Studio will take the original target binaries, instrument them, and place them in the specified folder.

For instrumentation profiling runs, Visual Studio automatically calls the VSInstr.exe utility to instrument your binaries. Use the Advanced property page to supply additional options and arguments (such as /VERBOSE) to that utility.

The .NET memory allocation profiling method interrupts the processor for every allocation of a managed object. The profiler will collect details about the managed types that are created during the application's execution. (See Figure 9-1 for the profiling method options.) The profiler will track the number of instances, the amount of memory used by those instances, and which members created the instances.

When you check the "Also collect .NET object lifetime information" option in the General properties page (Figure 9-6), additional details about the amount of time each managed type instance remains in memory will be collected. This will enable you to view further impacts of your application, such as its effect on the .NET garbage collector.

Concurrency profiling is a method introduced in Visual Studio 2010. Using this method, you can collect the following two types of concurrency data:

Once you have configured your performance session and assigned targets, you can execute (or launch) that session. In the Performance Explorer window (Figure 9-5), right-click on a specific session, and choose "Launch with profiling."

Note

Before you launch your performance session, ensure that your project and any dependent assemblies have been built in the Release configuration. Profiling a Debug build will not be as accurate, because such builds are not optimized for performance and will have additional overhead.

Because Performance Explorer can hold more than one session, you will designate one of those sessions as the current session. By default, the first session is marked as current. This enables you to click the green launch button at the top of the Performance Explorer window to invoke that current session.

You may also run a performance session from the command line. For details, see the section "Command-Line Profiling Utilities," later in this chapter.

When a session is launched, you can monitor its status via the output window. You will see the output from each of the utilities invoked for you. If the target application is interactive, you can use the application as normal. When the application completes, the profiler will shut down and generate a report.

When profiling an ASP.NET application, an instance of Internet Explorer is launched, with a target URL as specified in the target's "Url to launch" setting. Use the application as normal through this browser instance, and Visual Studio will monitor the application's performance. Once the Internet Explorer window is closed, Visual Studio will stop collecting data and generate the profiling report.

Note

You are not required to use the browser for interaction with the ASP.NET application. If you have other forms of testing for that application (such as the Web and load tests described in Chapter 13), simply minimize the Internet Explorer window and execute those tests. When you're finished, return to the browser window and close it. The profiling report will then be generated and will include usage data resulting from those Web and load tests.

When a session run is complete, a new session report will be added to the Reports folder for the executed session. The section "Setting General Session Properties," earlier in this chapter (as well as Figure 9-6), provides more details about how to modify the report name, location, and other additional properties in the General property page description.

As shown in Figure 9-17, the Reports folder holds all of the reports for the executions of that session.

Double-click on a report file to generate and view the report. Or, you can right-click on a report and select "Open" to view the report within Visual Studio (as shown in Figure 9-17).

In Visual Studio 2010, you can also compare two performance reports. With this capability, you can compare the results from a profiling session against a baseline. This will help, for example, in tracking the results from profiling sessions from one build to the next. To compare reports, right-click on a report name and select "Compare Performance Reports..." (as shown in Figure 9-17).

This will open up a dialog to select the baseline report and the comparison report, as shown in Figure 9-18.

Choose the baseline file and the comparison file, and click OK. This will generate an analysis that shows the delta between the two reports, and an indicator showing the directional move of the data between these two reports (Figure 9-19). This gives you a clear sense of how the application profile is changing between two runs.
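To make the idea of a delta and a directional indicator concrete, here is a minimal Python sketch of the same kind of comparison. It is not how Visual Studio computes the analysis; the function name `compare_reports`, the dictionary layout, and the percentages are all assumptions made for illustration.

```python
from typing import Dict, NamedTuple


class Delta(NamedTuple):
    baseline: float
    comparison: float
    delta: float
    direction: str  # "up", "down", or "same"


def compare_reports(baseline: Dict[str, float],
                    comparison: Dict[str, float]) -> Dict[str, Delta]:
    """Return a per-function delta for every function found in either report."""
    result = {}
    for name in sorted(set(baseline) | set(comparison)):
        before = baseline.get(name, 0.0)   # a function missing from a run counts as 0
        after = comparison.get(name, 0.0)
        change = after - before
        direction = "up" if change > 0 else "down" if change < 0 else "same"
        result[name] = Delta(before, after, change, direction)
    return result


# Hypothetical exclusive-sample percentages from two profiling runs.
baseline = {"ProcessValueTypes": 40.0, "ProcessClasses": 25.0}
comparison = {"ProcessValueTypes": 30.0, "ProcessClasses": 25.0, "Main": 1.0}

deltas = compare_reports(baseline, comparison)
for name, d in deltas.items():
    print(f"{name}: {d.baseline} -> {d.comparison} ({d.direction}, {d.delta:+.1f})")
```

A function that appears in only one report is treated as zero in the other, so newly introduced hotspots show up as an upward move rather than being dropped.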

Another useful option to consider when you right-click on a report is "Export Report Data...". When you select this option, it will display the Export Report dialog box shown in Figure 9-20. You can then select one or more sections of the report to send to a target file in XML or comma-delimited format. This can be useful if you have another tool that parses this data, or for transforming via XSL into a custom report view.
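As a rough illustration of what "another tool that parses this data" might look like, the following Python sketch ranks functions from a comma-delimited export. The column headers and numbers in `SAMPLE_EXPORT` are assumptions; the headers in a real export depend on the report sections and columns you select.

```python
import csv
import io

# Hypothetical export content. Real column headers depend on the exported section.
SAMPLE_EXPORT = """Function Name,Inclusive Samples,Exclusive Samples
Main,100,2
ProcessValueTypes,60,35
ProcessClasses,38,20
"""


def top_functions(csv_text, column, limit=2):
    """Return (function name, value) pairs sorted descending by a numeric column."""
    rows = csv.DictReader(io.StringIO(csv_text))
    ranked = sorted(
        ((row["Function Name"], int(row[column])) for row in rows),
        key=lambda pair: pair[1],
        reverse=True,
    )
    return ranked[:limit]


top = top_functions(SAMPLE_EXPORT, "Exclusive Samples")
print(top)
```

To read an actual exported file instead of the inline sample, pass the file's text to `top_functions` and adjust the column names to match.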

A performance session report is composed of a number of different views. These views offer different ways to inspect the large amount of data collected during the profiling process. The data in many views are interrelated, and you will see that entries in one view can lead to further detail in another view. Note that some views will have content only if you have enabled optional settings before running the session.

The amount and kinds of data collected and displayed by a performance session report can be difficult to understand and interpret at first. The following sections examine each section of a report, describing its meaning and how to interpret the results.

In any of the tabular report views, you can select which columns appear (and their order) by right-clicking in the report and selecting Choose Columns. Select the columns you wish to see, and how you want to order them, by using the move buttons.

The specific information displayed by each view will depend on the settings used to generate the performance session. Sampling and instrumentation will produce different contents for most views, and including .NET memory profiling options will affect the display as well. Before exploring the individual views that make up a report, it is important to understand some key terms.

Elapsed time includes all of the time spent between the beginning and end of a given function. Application time is an estimate of the actual time spent executing your code, subtracting system events. Should your application be interrupted by another during a profiling session, elapsed time will include the time spent executing that other application, but application time will exclude it.

Inclusive time combines the time spent in the current function with time spent in any other functions that it may call. Exclusive time will remove the time spent in other functions called from the current function.
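The relationship between inclusive and exclusive time can be sketched in a few lines of Python. The `Call` class and the timings below are hypothetical and exist only to show the arithmetic: a function's inclusive time is its own exclusive time plus the inclusive time of everything it calls.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Call:
    name: str
    exclusive_ms: float                      # time spent in this function's own body
    children: List["Call"] = field(default_factory=list)


def inclusive_ms(call: Call) -> float:
    """Inclusive time = own (exclusive) time + inclusive time of every callee."""
    return call.exclusive_ms + sum(inclusive_ms(child) for child in call.children)


# Hypothetical timings for a small call tree.
tree = Call("Main", 5.0, [
    Call("ProcessValueTypes", 20.0, [Call("ArrayList.Add", 30.0)]),
    Call("ProcessClasses", 10.0, [Call("ArrayList.Add", 15.0)]),
])

print(inclusive_ms(tree))              # 80.0
print(inclusive_ms(tree.children[0]))  # 50.0
```

In this sketch, Main has a small exclusive time (5 ms) but a large inclusive time (80 ms), which is the typical signature of a function that is not slow itself but sits above the real work.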

Note

If you forget these definitions, hover your mouse pointer over the column headers and a tool tip will give you a brief description of the column.

When you view a report, Summary view is displayed by default. There are two types of summary reports, depending on whether you ran a sampling or instrumented profile. Figure 9-21 shows a Summary view from a sampling profile of the DemoConsole application.

The Summary view in Visual Studio 2010 has three data sections (on the left of the screen), one Notifications section (in the top right of the screen), and a Report section (in the lower-right portion of the screen), as shown in Figure 9-21.

The first data section you see in the Summary view is the chart at the top showing the percentage of CPU usage. This chart provides a quick visual cue into any spikes you have in CPU usage. You can select a section of the chart (for example, a spike in the chart), and then you can either zoom in by selecting the "Zoom by Selection" link to the right of the chart, or you can filter the data by selecting the "Filter by Selection" link, also to the right of the chart.

The second section in the Summary view is the Hot Path. This shows the most expensive call paths. (They are highlighted with a flame icon next to the function name.) It's not a surprise that the call to "ProcessClasses" and to "ProcessValueTypes" were the expensive calls in this trivial example.

The third data section shows a list of "Functions Doing Most Individual Work." A large number of exclusive samples here indicate that a large amount of time was spent on that particular function.
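To see where exclusive sample counts come from, consider this simplified Python sketch of sample counting. It assumes each sample is a call stack listed from the outermost caller to the function executing at the moment of the sample; the stacks are invented for illustration, and the real profiler records considerably more detail.

```python
from collections import Counter


def count_samples(stacks):
    """Tally inclusive and exclusive sample counts from captured call stacks."""
    inclusive, exclusive = Counter(), Counter()
    for stack in stacks:
        # A function is counted once per sample if it is anywhere on the stack.
        for name in set(stack):
            inclusive[name] += 1
        # Only the function actually executing gets the exclusive sample.
        exclusive[stack[-1]] += 1
    return inclusive, exclusive


# Hypothetical samples, outermost caller first.
stacks = [
    ("Main", "ProcessValueTypes"),
    ("Main", "ProcessValueTypes", "ArrayList.Add"),
    ("Main", "ProcessClasses"),
    ("Main", "ProcessValueTypes"),
]

inclusive, exclusive = count_samples(stacks)
print(exclusive.most_common(1))
print(inclusive["Main"], exclusive["Main"])
```

Main is on every stack, so it has the highest inclusive count, yet it never appears as the executing function and so has no exclusive samples at all.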

Note

Notice that several of the functions aren't function names, but names of DLLs (for example, [clr.dll]). This occurs when debugging symbols are not available for a function sampled. This frequently happens when running sampling profiles, and occasionally with instrumented profiles. The section "Common Profiling Issues," later in this chapter, describes this issue and how to correct it.

For the DemoConsole application, this view isn't showing a lot of interesting data. At this point, you would normally investigate the other views. For example, you can click on one of the methods in the Hot Path to take you to the function details page, but because the DemoConsole application is trivial, sampling to find hotspots will not be as useful as the information you can gather using instrumentation. Let's change the profiling type to instrumentation and see what information is revealed.

At the top of the Performance Explorer window (Figure 9-17), change the drop-down field on the toolbar to Instrumentation. Click the Launch button (it's the third one with the green arrow) on the same toolbar to execute the profiling session, this time using instrumentation. Note that instrumentation profiling will take longer to run. When profiling and report generation are complete, you will see a Summary view similar to that shown in Figure 9-22.

The Summary view of an instrumented session has three sections similar to the Summary view of a sampling session.

You can also get to the Call Tree view (which will be examined shortly) or Functions view using the shortcut link provided below the Hot Path information.

The Summary view has an alternate layout that is used when the ".NET memory profiling collection" options are enabled on the General page of the session properties. Figure 9-23 shows this view.

Notice that the three main sections in this view are different. The first section, Functions Allocating Most Memory, shows the functions in terms of bytes allocated. The second section, Types With Most Memory Allocated, shows the types by bytes allocated, without regard to the functions involved. Finally, Types With Most Instances shows the types in terms of number of instances, without regard to the size of those instances.

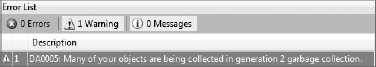

Also note the Notifications section and the Report section to the right of the CPU usage chart. If you click on the View Guidance link in the Notifications section, you will be shown any available errors or warnings. In this case, as shown in Figure 9-24, there is one warning. You'll learn what this means later in this chapter under the section, "Objects Lifetime View."

Using the Summary view, you can quickly get a sense of the most highly used functions and types within your application. In the following discussions, you'll see how to use the other views to dive into further detail.

Let's switch to the "Functions" view. You do that by selecting "Functions" from the "Current View" drop-down at the top of the report. In this view, you can begin to see some interesting results.

The Functions view shown in Figure 9-25 lists all functions sampled or instrumented during the session. For instrumentation, this will be functions in targets that were instrumented and called during the session. For sampling, this will include any other members/assemblies accessed by the application.

Note that ArrayList.Add and ArrayList.get_Item were each called 4 million times. This makes sense, because ProcessValueTypes and ProcessClasses (which use those methods) were each called 2 million times. However, if you look at the Hot Path information in the Summary views, there is a noticeable difference in the amount of time spent in ProcessValueTypes versus ProcessClasses. Remember that the code for each is basically the same; the only difference is that one works with structures, and the other with classes. You can use the other views to investigate further.

From the Functions view, right-click on any function, and you will be able to go to that function's source, see it in Modules view, see the function details, or see the function in Caller/Callee view (discussed in detail shortly). You can double-click on any function to switch to the Function Details view. You can also select one or more functions, right-click, and choose Copy to add the function name and associated data to the clipboard for use in other documents.

As with most of the views, you can click on a column heading to sort by that column. This is especially useful for the four "Time" columns shown in Figure 9-25. Right-clicking in the Functions view and selecting "Show in Modules view" will show the functions grouped under their containing binary.

In this view, you can see the performance differences between functions, which could help you to focus in on an issue.

Double-clicking on a function in the Functions view loads the Function Details view. Figure 9-26 shows the section of this view that is a clickable map with the calling function, the called functions, and the associated values.

The "Caller/Callee" view presents this data in a tabular fashion.

As shown in Figure 9-27, the Caller/Callee view displays a particular function in the middle, with the function(s) that call into it in the section above it, and any functions that it calls in the bottom section.

This is particularly useful for pinpointing the execution flow of your application, helping to identify hotspots. In Figure 9-27, the ProcessClasses method is in focus and shows that the only caller is the Main method. You can also see that ProcessClasses directly calls four functions. The sum of times in the caller list will match the time shown for the set function. For example, select the ArrayList.get_Item accessor by double-clicking or right-clicking it, and choose Set Function. The resulting window will then display a table similar to what is shown in Figure 9-28.

You saw ArrayList.get_Item in the main Functions view, but couldn't tell how much of that time resulted from calls by ProcessValueTypes or ProcessClasses. Caller/Callee view enables you to see this detail.

Notice that there are two callers for this function, and that the sum of their times equals the time of the function itself. In this table, you can see how much time the ArrayList.get_Item method actually took to process the 2 million requests from ProcessValueTypes versus those from ProcessClasses. This allows you to compare the processing times and, if they are substantially different, to drill down to find out what could be causing the performance difference.
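The per-caller breakdown can be pictured as a simple aggregation over call edges. The Python sketch below is only an illustration: the `EDGES` data and the `callers_of` helper are hypothetical, and the times are invented rather than taken from the figures.

```python
from collections import defaultdict

# (caller, callee, time in ms attributed to the callee on behalf of that caller).
# All values are hypothetical.
EDGES = [
    ("Main", "ProcessValueTypes", 50.0),
    ("Main", "ProcessClasses", 25.0),
    ("ProcessValueTypes", "ArrayList.get_Item", 12.0),
    ("ProcessClasses", "ArrayList.get_Item", 4.0),
]


def callers_of(edges, function):
    """Return the time spent in `function`, broken down by calling function."""
    totals = defaultdict(float)
    for caller, callee, ms in edges:
        if callee == function:
            totals[caller] += ms
    return dict(totals)


by_caller = callers_of(EDGES, "ArrayList.get_Item")
total = sum(by_caller.values())
print(by_caller, total)
```

The total for the function in focus is the sum of the per-caller entries, which mirrors the property described above: the caller rows add up to the row for the set function.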

The Call Tree view shows a hierarchical view of the calls executed by your application. The concept is somewhat similar to the Caller/Callee view, but in this view, a given function may appear twice if it is called by independent functions. If that same method were viewed in Caller/Callee view, it would appear once, with both parent functions listed at the top.

By default, the view will have a root (the function at the top of the list) of the entry point of the instrumented application. To quickly expand the details for any node, right-click and choose Expand All. Any function with dependent calls can be set as the new root for the view by right-clicking and choosing Set Root. This will modify the view to show that function at the top, followed by any functions that were called directly or indirectly by that function. To revert the view to the default, right-click and choose Reset Root.

Another handy option in the context menu is "Expand Hot Path." This will expand the tree to show the hot paths with the flame icon. This is a very helpful shortcut to jump right into the functions that are potential bottlenecks.
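One simplified way to think about a hot path is as the chain you get by repeatedly following the most expensive child in the call tree. The Python sketch below does exactly that. It is an approximation made for illustration, not the heuristic Visual Studio uses, and the tree values are hypothetical.

```python
def hot_path(node):
    """Follow the child with the largest inclusive value at each level.

    Each node is a tuple: (name, inclusive_value, list_of_child_nodes).
    """
    path = []
    while node is not None:
        name, _inclusive, children = node
        path.append(name)
        node = max(children, key=lambda child: child[1]) if children else None
    return path


# Hypothetical call tree with inclusive sample counts.
tree = ("Main", 100, [
    ("ProcessValueTypes", 60, [
        ("ArrayList.Add", 35, []),
        ("ArrayList.get_Item", 20, []),
    ]),
    ("ProcessClasses", 38, [
        ("ArrayList.Add", 20, []),
    ]),
])

path = hot_path(tree)
print(" -> ".join(path))
```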

If you configured your session for managed allocation profiling by choosing "Collect .NET object allocation information" on the General property page for your session (Figure 9-6), you will have access to the Allocation view. This view displays the managed types that were created during the execution of the profiled application.

You can quickly see the number of instances, the total bytes of memory used by those instances, and the percentage of overall bytes consumed by the instances of each managed type.

Expand any type to see the functions that caused the instantiations of that type. You will see the breakdown of instances by function as well, so, if more than one function created instances of that type, you can determine which created the most. This view is most useful when sorted by Total Bytes Allocated or "Percent of Total Bytes." This tells you which types are consuming the most memory when your application runs.
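The figures in this view amount to a grouping of allocation records by type, with a second-level breakdown by allocating function. The following Python sketch shows that grouping on invented data; the type names, sizes, and the `summarize` helper are assumptions for illustration only.

```python
from collections import defaultdict

# (managed type, allocating function, size in bytes). All values are hypothetical.
ALLOCATIONS = [
    ("Point", "ProcessValueTypes", 24),
    ("Point", "ProcessValueTypes", 24),
    ("Customer", "ProcessClasses", 32),
    ("Customer", "Main", 20),
]


def summarize(allocations):
    """Group allocations by type: instance count, total bytes, percent, per-function counts."""
    total_bytes = sum(size for _, _, size in allocations)
    by_type = defaultdict(
        lambda: {"instances": 0, "bytes": 0, "by_function": defaultdict(int)}
    )
    for type_name, function, size in allocations:
        entry = by_type[type_name]
        entry["instances"] += 1
        entry["bytes"] += size
        entry["by_function"][function] += 1
    for entry in by_type.values():
        entry["percent"] = 100.0 * entry["bytes"] / total_bytes
    return by_type


summary = summarize(ALLOCATIONS)
# Sort by total bytes, largest first, as you might in the Allocation view.
for name, entry in sorted(summary.items(), key=lambda kv: kv[1]["bytes"], reverse=True):
    print(name, entry["instances"], entry["bytes"], f'{entry["percent"]:.1f}%',
          dict(entry["by_function"]))
```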

Note

An instrumented profiling session will track and report only the types allocated directly by the instrumented code. A sampling session may show other types of objects. This is because samples can be taken at any time, even while processing system functions (such as security). Try comparing the allocations from sampling and instrumentation sessions for the same project. You will likely notice more object types in the sampling session.

As with the other report views, you can also right-click on any function to switch to an alternative view, such as source code, Functions view, or Caller/Callee view.

The Objects Lifetime view is available only if you have selected the "Also collect .NET object lifetime information" option of the General properties for your session (Figure 9-6). This option is only available if you have also selected the "Collect .NET object allocation information" option.

Note

The information in this view becomes more accurate the longer the application is run. If you are concerned about the results you see, increase the duration of your session run to help ensure that the trend is accurate.

Several of the columns are identical to those in the Allocation view table, including Instances, Total Bytes Allocated, and "Percent of Total Bytes." However, in this view, you can't break down the types to show which functions created them. The value in this view lies in the details about how long the managed type instances existed and their effect on garbage collection.

The columns in this view include the number of instances of each type that were collected during specific generations of the garbage collector. With COM, objects were immediately destroyed, and memory freed, when the count of references to that instance became zero. However, .NET relies on a process called garbage collection to periodically inspect all object instances to determine whether the memory they consume can be released.

Objects are placed into groups, called generations, according to how long each instance has remained referenced. Generation zero contains new instances, generation one instances are older, and generation two contains the oldest instances. New objects are more likely to be temporary or shorter in scope than objects that have survived previous collections. So, having objects organized into generations enables .NET to more efficiently find objects to release when additional memory is needed.

The view includes Instances Alive At End and Instances. The latter is the total count of instances of that type over the life of the profiling session. The former indicates how many instances of that type were still in memory when the profiling session terminated. This might be because the references to those instances were held by other objects. It may also occur if the instances were released right before the session ended, before the garbage collector acted to remove them. Having values in this column does not necessarily indicate a problem; it is simply another data item to consider as you evaluate your system.

Having a large number of generation-zero instances collected is normal, fewer in generation one, and the fewest in generation two. Anything else indicates there might be an opportunity to optimize the scope of some variables. For example, a class field that is only used from one of that class's methods could be changed to a variable inside that method. This would reduce the scope of that variable to live only while that method is executing.
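The rule of thumb above (most instances collected in generation zero, fewer in generation one, fewest in generation two) is easy to apply mechanically to lifetime data. This Python sketch flags types that break the pattern. The type names and counts are hypothetical, and a flagged type is only a candidate for a closer look, not proof of a problem.

```python
def unusual_lifetimes(rows):
    """Return types whose collected-instance counts do not follow gen0 >= gen1 >= gen2.

    rows maps a type name to (gen0_collected, gen1_collected, gen2_collected).
    """
    return [
        name
        for name, (gen0, gen1, gen2) in rows.items()
        if not (gen0 >= gen1 >= gen2)
    ]


# Hypothetical counts of instances collected in each generation.
lifetime_rows = {
    "System.String": (9000, 120, 3),   # the expected pattern
    "Customer": (10, 40, 500),         # most instances survive into generation two
}

flagged = unusual_lifetimes(lifetime_rows)
print(flagged)
```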

Like the data shown in the other report views, you should use the data in this view not as definitive indicators of problems, but as pointers to places where improvements might be realized. Also, keep in mind that, with small or quickly executing programs, allocation tracking might not have enough data to provide truly meaningful results.

Visual Studio abstracts the process of calling several utilities to conduct profiling. You can use these utilities directly if you need more control, or if you need to integrate your profiling with an automated batch process (such as your nightly build). The general flow is as follows:

1. Configure the target (if necessary) and environment.
2. Start the data logging engine.
3. Run the target application.
4. When the application has completed, stop the data logging engine.
5. Generate the session report.

These utilities can be found in your Visual Studio installation directory under Team Tools\Performance Tools. For help with any of the utilities, supply a /? argument after the utility name.
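The general flow above can be sketched as a short script that assembles the command lines for one sampling run. The tool names and switches used here (VSPerfCLREnv, VSPerfCmd, VSPerfReport, /sampleon, /start:sample, /output, /launch, /shutdown, /summary) are taken from the MSDN command-line profiling documentation rather than from this chapter, so confirm them with /? on your installation. The helper name `sampling_commands` and the file names are hypothetical. The script only builds and prints the commands; it does not run them.

```python
def sampling_commands(target_exe, report_file, managed=True):
    """Return the ordered command lines for one command-line sampling run."""
    commands = []
    if managed:
        # Step 1: set the environment variables needed to profile a .NET application.
        commands.append("VSPerfCLREnv /sampleon")
    # Step 2: start the data logging engine in sampling mode.
    commands.append(f"VSPerfCmd /start:sample /output:{report_file}")
    # Step 3: run the target application under the profiler.
    commands.append(f"VSPerfCmd /launch:{target_exe}")
    # Step 4: stop the data logging engine once the application has completed.
    commands.append("VSPerfCmd /shutdown")
    if managed:
        # Restore the environment variables changed in step 1.
        commands.append("VSPerfCLREnv /off")
    # Step 5: generate report files from the collected data.
    commands.append(f"VSPerfReport {report_file} /summary:all")
    return commands


for line in sampling_commands("DemoConsole.exe", "DemoConsole.vsp"):
    print(line)
```

Because the environment step changes variables for the current command prompt, the printed lines are best pasted into a single batch file or run in one console session, for example as a step in a nightly build.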

Table 9-1 lists the performance utilities that are available as of this writing:

Table 9-1. Performance tools

| UTILITY NAME | DESCRIPTION |
|---|---|
| | Used to instrument a binary |
| | Used to launch a profiling session |
| | Starts the monitor for the profiling sessions |
| | Used to generate a report once a profiling session is completed |
| | Used to set environment variables required to profile a .NET application |

Note

Refer to MSDN documentation at http://msdn.microsoft.com/en-us/library/bb385768(VS.100).aspx for more information on the command-line profiling tools.

Before Visual Studio 2010, if you were running your application from within a virtual machine (such as with Virtual PC, VMware, or Virtual Server), you were not able to profile that application. (Profiling relies upon a number of performance counters that are very close to the system hardware.) That is no longer the case. With Visual Studio 2010, you can now profile applications that are running within a virtual environment.

Visual Studio 2010 can also profile JavaScript, collecting performance data for script code alongside the rest of the application. To do that, start by setting up an instrumentation session. Then, in the Instrumentation property page, select the Profile JavaScript option, as shown in Figure 9-29.

When you run this profiling session, the profiler will include performance information on JavaScript functions, along with function calls in the application. This example again uses the "Beer House" application. (You can find this application source code at http://thebeerhouse.codeplex.com.)

Figure 9-30 shows the Function Details view with the called functions and the elapsed times. It also shows the associated JavaScript code in the bottom pane, and that helps in identifying any potential issues with the script. This feature will be very helpful to assess the performance of JavaScript functions and identify any issues with the scripts.

When you run a sampling session, the report includes profiling data from all the code in the project. In most cases, you are only interested in the performance information of your code. For example, you don't need to have the performance data of .NET Framework libraries, and, even if you have it, there is not a lot you can do with that data. In the Summary view of the profiling report, you can now toggle between viewing data for all code, or just the application code. The setting for that is in the Notifications section in the Summary view, as shown in Figure 9-31.

Profiling is a complex topic, and not without a few pitfalls to catch the unwary. This section documents a number of common issues you might encounter as you profile your applications.

When you review your profiling reports, you may notice that some function calls resolve to unhelpful entries such as [ntdll.dll]. This occurs because the application has used code for which it cannot find debugging symbols. So, instead of the function name, you get the name of the containing binary.

Debugging symbols, files with the .pdb extension (for "program database"), include the details that debuggers and profilers use to discover information about executing code. Microsoft Symbol Server enables you to use a Web connection to dynamically obtain symbol files for binaries as needed.

You can direct Visual Studio to use this server by choosing Tools

Note

The first time you render a report with symbols set to download from Microsoft Symbol Server, it will take significantly longer to complete.

If, perhaps because of security restrictions, your profiling system does not have Internet access, you can download and install the symbol packages for Windows from the Windows Hardware Developer Center. As of this writing, this is www.microsoft.com/whdc/devtools/debugging/symbols.mspx. Select the package appropriate for your processor and operating system, and install the symbols.

When running an instrumentation profile, be certain that you are not profiling a target for which you have previously enabled code coverage. Code coverage, described in Chapter 7, uses another form of instrumentation that observes which lines of code are accessed as tests are executed. Unfortunately, this instrumentation can interfere with the instrumentation required by the profiler.

If your solution has a test project and you have previously used code coverage, open your Test Run Configuration under Test

In this chapter, you learned about the value of using profiling to identify problem areas in your code. Visual Studio 2010 has extended the capabilities for profiling.

This chapter examined the differences between sampling and instrumentation, when each should be applied, and how to configure the profiler to execute each type. You learned about the different profiling methods. You saw the Performance Explorer in action, and learned how to create and configure performance sessions and their targets.

You then learned how to invoke a profiling session, and how to work with the reports that are generated after each run. You looked at each of the available report views, including Summary, Functions, Call Tree, and Caller/Callee.

While Visual Studio 2010 offers a great deal of flexibility in your profiling, you may find you must specify further options or profile applications from a batch application or build system. You learned about the command-line tools available for those cases. Profiling is a great tool that you can use to ensure the quality of your application.

In Chapter 11, you will learn about a great new capability introduced in Visual Studio 2010 called "IntelliTrace," and Chapter 10 introduces you to other nifty debugging capabilities (including data tips and breakpoints). You will see the capabilities of Visual Studio 2010 to track and manage changes to database schemas using source control, generate test data, create database unit tests, utilize static analysis for database code, and more.