There are a million ways to do a code review, but there’s one component that’s common to all—a single developer critically examining code. So it makes sense to ask what the data tells us about developers diving into code by themselves, without distraction and without discussion.

What are the best practices during quiet contemplation?

How much time should you spend on a code review in one sitting?

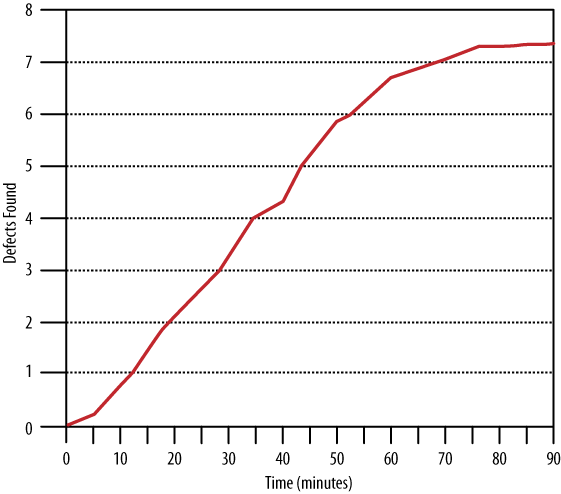

Figure 18-1 maps how efficient we are at finding defects over time [Dunsmore 2000]. The horizontal axis plots time passing; the vertical is the average number of defects a person found at this time index.

Looking early on in the code review, there’s a clear linear relationship between time and defects: every 10 minutes another defect is found (on average). This is encouraging—the more time spent in the review, the more defects are found.

That’s really efficient if you stop and think about it. What other software development processes do you know in which a new bug is found (and often fixed) every 10 minutes? Certainly not in the usual development/QA loop by the time you count the effort by both the tester and the developer.

But around the 60-minute mark, there’s a sudden drop-off. Now another 10 minutes doesn’t necessarily turn up another defect. The efficiency of finding defects drops sharply.

The usual theory explaining this effect is that around one hour we get mentally fatigued; it’s just a long time to be concentrating on a technical task. But whatever the cause, it’s clear that we shouldn’t review code for more than an hour at a time.

It’s logical that the more time you spend on a piece of code, the deeper and more nuanced your analysis will be and the more bugs you’ll find. It’s similarly logical that if you race through a review, you’ll find only the shallowest errors.

Where is the balance between spending enough time to root out important problems but not wasting time dwelling on decent code?

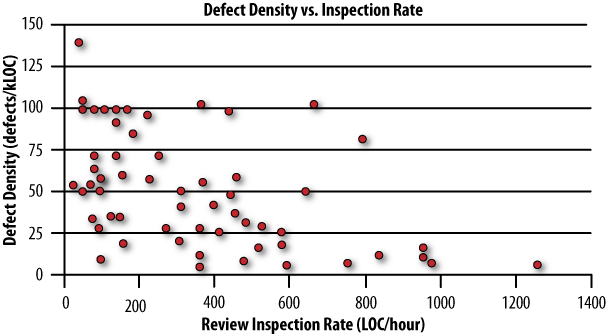

Figure 18-2 shows the effect of speed on ability to find defects in a study of 2,500 reviews [Cohen 2006].

On the horizontal axis we have the speed of the review measured in lines of code (LOC) per hour. The vertical axis shows defect density, which is the number of defects found per 1000 LOC (or kLOC). “Density” is used rather than “number of defects” because reviews with more lines of code will naturally have more defects, so this normalizes the results across reviews of different sizes.

Between zero and about 400 LOC/hour, you can see the defect density is evenly distributed between zero and 100 defects/kLOC. This distribution is expected. To see why, consider a review consisting of adding documentation to an interface. Three hundred lines of code might contain few or no defects—a low defect density. Now consider a review of brain-twisting, multithreaded, high-performance code in a core module that the entire application depends on. Perhaps just four lines of code were changed, but because of the complexity of the problem and the necessity of getting it right, several reviewers criticized the code and nitpicked the smallest of problems, and two defects were found—a high defect density.

In short, reviews will naturally have a range defect densities, so that spread is normal.

The problem comes with reviews faster than 400‒500 LOC/hour. Suddenly we rarely see a review with a high defect density. Of course that’s not because there are no defects, but because the reviewer is going too fast to find those defects.

Let’s put the previous two results together. If we should spent at most one hour in review, and if we can review at most 400 LOC/hour, then we conclude that we shouldn’t review more than 400 LOC at one time.

A sensible theory; let’s put it to the test.

Figure 18-3. As the size of the code under review increases, our ability to find all the defects decreases. Don’t review more than 400 lines of code at a time.

In Figure 18-3, we see how defect density is affected by the amount of code under review [Cohen 2006]. As in the last section, we expect to see a defect density spread between zero and 100 defects/kLOC, and we do see that with the smaller reviews. But as the review size increases, defect density drops off. As predicted, at around 300‒500 LOC, it’s clear that the reviews aren’t being effective.

The fact that this data matches the expected result so well lends credibility to all three results.

The typical version control “diff” utility displays modifications with only a few lines of surrounding context. Even more sophisticated tools typically display one file at a time.

Tools that limit your view result in similarly myopic code reviews. After all, you review what’s put in front of you!

Upon reflection, this seems like a bad practice. Consider what happens when you’re looking at a bug fix inside a single function. You inspect the change and see that it does fix the problem, but that’s not the end of the story! The next question is: what other code depends on this function, and might depend on the original behavior? If there are side effects, what other code is affected by that? Are there accompanying unit tests? Did unit tests for completely different functions have to change because of this modification, and is that acceptable?

So how important is it to perform code reviews with additional files, classes, documentation, and other context, as opposed to just looking at the immediate changes? In an experiment, reviewers were given various amounts of “context”—files related to the change at hand [Dunsmore 2000]. With a simple, automated method for generating the list of “related files” based on methods called or called by the code in question, 15% more defects were found. When the automated process was replaced by a human being making a judgment call about what the right associated files were, a full 30% more defects were found.

This suggests that you shouldn’t review snippets of code in isolation. At the very least you need to be in front of your IDE with the entire codebase at your fingertips. To the extent that the author of the code can highlight related code, that’s probably a good use of time as well.