The Information Integration System K2

In 1993, the invitational Department of Energy (DOE) workshop on genome informatics published a report that claimed that until all sequence data is gathered in a standard relational database, none of the queries in the appendix to the report could be answered (see Figure 8.1 for a listing of the queries) [1]. While the motivation for the statement was largely political, the gauntlet had been laid in plain view for database researchers: The data to answer the queries in the appendix were (by and large) available, but they were stored in a number of physically distributed databases. The databases represented their data in a variety of formats using different query interfaces. The challenge was, therefore, one of integrating heterogeneous, distributed databases and software programs in which the type of data was complex, extending well beyond the capabilities of relational technology.

As an example of the type of genomic data that is available online, consider the EMBL-format Swiss-Prot entry shown in Figure 8.2. Each line begins with a two-character code, which indicates the type of data contained in the line. For example, each entry is identified by an accession number (AC) and is timestamped by up to three dates (DT). The create date is mandatory, while the sequence update and annotation update dates only appear if the sequence or annotation has been modified since the entry was created. The sequence (SQ), a list of amino acids, appears at the end of the entry; the rest of the core data includes citation information (bibliographical references, lines beginning with R), taxonomic data (OC), a description of the biological source of the protein, and database references (DR), explicit links to entries in other databases: EMBL (annotated nucleotide sequence database); HSSP (homology derived secondary structure of proteins); Wormpep (predicted proteins from the Caenorhabditis elegans genome sequencing project); InterPro, Pfam, PRINTS, PROSITE (databases of protein families and domains, among other things). Annotation information, which is obtained by publications reporting new sequence data, review articles, and external experts, is mainly found in the feature table (FT), keyword lines (KW), and comment lines (CC), which do not appear in this example due to lack of space. Note that the bibliographical references are nested structures; there are two references, and the RP (Reference Position), RC (Reference Comment), RA (Reference Author), and RL (Reference Location) fields are specific to each reference. Similarly, the FT (Feature Table) is a nested structure in which each line contains a start and end position (e.g., 14 to 21), a type of feature (e.g., NP_BIND), and a description. The entry is designed to be read easily by a human being and structured enough to be machine parsed. However, several lines still contain a certain amount of structure that could be separated out during parsing. For example, the author list is a string, which could be parsed into a list of strings so as to be able to index into the individual authors. Similarly, the taxonomic data is also a string spread over several lines and could again be parsed into a list.

As shown in Figure 8.2, the type system for genomic data naturally goes beyond the sets of records of relational databases and include sequential data (lists), deeply nested record structures, and union types (variants). As an example of a union type, the format of the RL line depends on the type of publication: An unpublished entry contains a brief comment; a journal citation includes the journal abbreviation, the volume number, the page range, and the year; the format of a book citation includes the set of editor names, the name of the book, an optional volume, the page range, the publisher, city, and year. The structure of this SwissProt entry can be described precisely in a data definition language with sufficiently rich types. Such a description will be shown later on in Figure 8.5.

The database group at the University of Pennsylvania responded to the DOE challenge by developing a view integration (or integration on-the-fly) environment. In such an environment, the schemas of a collection of underlying data sources are merged to form a global schema in some common model (e.g., relational, complex value, or object-oriented). Users query this global schema using a high-level query language, such as Structured Query Language (SQL) [2], Object Query Language (OQL) [3], or Collection Programming Language (CPL) [4]; the system then determines what portion of the global query can be answered by which underlying data source, ships local queries off to the underlying data sources, and then combines answers from the underlying data sources to produce an answer to the global query. The initial view-integration environment developed by our group was called Kleisli, and it was designed and implemented by Limsoon Wong. Wong later re-designed and re-implemented the Kleisli system at Singapore’s Institute of Systems Science; this new version of Kleisli is described in Chapter 6 of this book. About the same time, other information integration projects were also developed [5, 6, 7], including the system based on the Object Protocol Model (OPM) [8].

K2 vs. Kleisli

K2 is a successor system to Kleisli that was designed and implemented at the University of Pennsylvania by Jonathan Crabtree, Scott Harker, and Val Tannen. Like Kleisli, K2 uses a complex value model of data and is based on the so-called monad approach (see Section 8.4). However, the design of K2 also contains a number of new ideas and redirections: First, the model incorporates a notion of dictionaries, which allows a natural representation of object-oriented classes [9] as well as Web-based data. Second, the internal language features a new approach to aggregate and collection conversion operations [10]. Third, the syntax of the language follows a mainstream query language for object-oriented databases called OQL [3] rather than the elegant but less familiar comprehension-style syntax originally used in CPL [4] (Kleisli now uses an adapted SQL syntax). Fourth, a separation is made between the mediator (global schema) level and the query level by introducing a mediator definition language for K2, K2MDL. K2MDL combines an Object Definition Language (ODL) specification of the global schema with OQL statements that describe the data mapping. The ability to specify intermediate mediators allows a large integration environment to be created in layers and componentized. Finally, to improve its portability, K2 is implemented in Java and makes use of several of the standard protocols and application programming interfaces (APIs) that are part of the Java platform,1 including Remote Method Invocation (RMI)2 and Java Data Base Connectivity (JDBC).3 Thus, K2 is not an extension of Kleisli, but rather a system implemented from scratch that shares with Kleisli some of its design principles, while featuring a number of distinct developments just outlined.

Overall, the goal of K2 is to provide a generic and flexible view integration environment appropriate for the complex data sources and software systems found throughout genomics, which is portable and appeals to common practices and standards.

8.1 APPROACH

A number of other techniques have also been developed over the past 11 years in response to the DOE challenge, including link-driven federations and warehouses. In a link-driven federation, users start by extracting entries of interest at one data source and then hop to other related data sources via Web links that have been explicitly created by the developers of the system. The Sequence Retrieval System (SRS) [11] presented in Chapter 5, LinkDB [12], and GeneCards [13] are examples of this approach. While the federation approach is easy to use, especially for novices, it does not scale well: When a new data source is added to the federation, connections between its entries and entries of all existing federation data sources must be added; this is commonly referred to as the N2 problem. Furthermore, if users are interested in a join between two data sources in the federation, they must manually perform the join by clicking on each entry in the first data source and following all connections to the second data source.4 In contrast, a join can be expressed in a single high-level query in a view or warehouse integration strategy. In general, the query languages supporting view or warehouse integration approaches are much more powerful and allow arbitrary restructuring of the retrieved data.

A warehouse strategy creates a central repository of information and annotations. One such example is the Genomics Unified Schema (GUS) [14], which integrates and adds value to data obtained from GenBank/EMBL/DDBJ, dbEST, and Swiss-Prot (and others) and contains annotated nucleotide (dioxyribonucleic acid [DNA], ribonucleic acid [RNA]), and amino acid (protein) sequences. Note that view integration systems can also be used to create warehouses, which are instantiations of the global schema. The advantage of a warehouse approach over a view integration is one of speed and reliability; because all data are local, delays and failures associated with networks can be avoided. Furthermore, there is greater control over the data. However, a warehouse is not dynamic: Not only must it be kept up-to-date with respect to the underlying data sources, but including a new data source or algorithm is time-consuming. A more extended discussion of the problems and benefits of the link, warehouse, and view integration approaches can be found in articles in the IBM Systems Journal and the Journal of Digital Libraries [14, 15]. K2 is a system for generating mediators. Mediators are middleware components that integrate domain-specific data from multiple sources, reducing and restructuring data to an appropriate virtual view [16]. A major benefit of mediation is the scalability and long-term maintenance of the integration systems structure.

Figure 8.3 is an example of how mediators can help the data integration task. In this example, each of the boxes represents a machine on which a copy of K2 is used to provide a mediator for some local, as well as external, data sources and views. Mediator 1 resides behind a company firewall and was built to integrate data from a local database and local copies of Swiss-Prot and GenBank, accessed through SRS, with the application program BLAST. Mediator 2 was then built outside the firewall to integrate data from some external data sources—PubMed and a patent database, which can only be accessed through a Web interface. An external copy of PubMed was used due to its size and the fact that the most recent version was always needed. Mediator 3 was then built within the company firewall to integrate data from Mediators 1 and 2, and Mediator 1 was enlarged to integrate data from Mediator 3.

K2 (together with Tsimmis [17]) distinguishes itself among approaches based on mediation in that it generates mediators starting from a concise, high-level description. This makes K2 especially appropriate for configurations in which many mediators are needed or in which mediators must be frequently changed due to instability in the data sources or in the client needs. Some of the salient features of K2’s mediation environment are:

![]() K2 has a universal internal data model with an external data exchange format for interoperation with similar components.

K2 has a universal internal data model with an external data exchange format for interoperation with similar components.

![]() It has interfaces based on the Object Data Management Group (ODMG) standard [3] for both data definition and queries.

It has interfaces based on the Object Data Management Group (ODMG) standard [3] for both data definition and queries.

![]() It integrates nested data, while offering a Java-based interface (JDBC) to relational database systems and an ODMG interface to object-oriented database systems.

It integrates nested data, while offering a Java-based interface (JDBC) to relational database systems and an ODMG interface to object-oriented database systems.

![]() It offers a new way to program integration/transformation/mediation in a very high-level declarative language (K2MDL) that extends ODMG.

It offers a new way to program integration/transformation/mediation in a very high-level declarative language (K2MDL) that extends ODMG.

![]() It has an extensible rule-based and cost-based optimizer.

It has an extensible rule-based and cost-based optimizer.

![]() External or internal decision-support systems can easily be included.

External or internal decision-support systems can easily be included.

![]() It is written entirely in Java, with corresponding consequences about portability.

It is written entirely in Java, with corresponding consequences about portability.

The basic functionality of a K2-generated mediator is to implement a data transformation from one or more data sources to one data target. The component contains a high-level (in ODMG/ODL) description of the schemas (for sources and the target) and of the transformation (in K2MDL). From the target’s perspective, the mediator offers a view that, in turn, can become a data source for another mediator.

An overview of the K2 architecture is given in Figure 8.4. In this diagram, clients can issue OQL queries or other commands against an integration schema constructed using K2MDL. The queries are then translated to the K2 internal language using K2MDL and query translators. This internal language expression is then optimized and executed using data drivers to ship sub-queries to external data sources and return results.

The remainder of this chapter walks through the architecture by describing the data model, illustrating K2MDL and OQL, and briefly discussing the internal language, data drivers, query optimization, and user interfaces. The chapter closes with a discussion of scalability and impact.

8.2 DATA MODEL AND LANGUAGES

ODMG was founded by vendors of object-oriented database management systems and is affiliated with the Object Management Group (OMG), who created the Common Object Request Broker Architecture (CORBA). The ODMG standard has two main components: The first is ODL, a data definition language that is used to define data elements. ODL is an extension of CORBA’s Interface Definition Language (IDL). The second is OQL, an enhanced SQL92-like language that is used for querying. By building on these standards, K2 leverages the following features:

![]() Seamless interoperability with relational, object-oriented, information retrieval (dictionaries), and electronic data interchange (EDI) formats (e.g., ASN.l)

Seamless interoperability with relational, object-oriented, information retrieval (dictionaries), and electronic data interchange (EDI) formats (e.g., ASN.l)

![]() Compatibility with the Universal Modeling Language (UML)

Compatibility with the Universal Modeling Language (UML)

![]() Integration with extensible markup language (XML) documents with a given Document Type Definition (DTD)

Integration with extensible markup language (XML) documents with a given Document Type Definition (DTD)

![]() Official bindings to Java, C++, and Smalltalk

Official bindings to Java, C++, and Smalltalk

![]() Industrial support from ODMG members (Ardent, Poet, Object Design)

Industrial support from ODMG members (Ardent, Poet, Object Design)

K2 uses ODMG’s ODL to represent the data sources to be integrated. It turns out that many biological data sources can be described as dictionaries whose keys are simple strings and whose entries are complex values.

A dictionary is simply a finite function. Therefore, it has a domain that is a finite set and associates to each element of the domain (called a key) a value (called an entry). The type of dictionary in ODL is denoted by dictionary<T1, T2> where T1 is the type of the keys and T2 is the type of the entries. In OQL, the entry in the dictionary, L, corresponding to the key, k, is denoted by L [k]. Because OQL has no syntax for the domain of a dictionary L, dom (L) is an addition to OQL for this purpose. Note that if L has type dictionary<T1, T2> then dom (L) has type set<Tl>.

Complex value data are built by arbitrarily nesting records (tuples); collections—such as sets, bags (multisets), and lists; and variants. Variants are pieces of data representing tagged alternatives (also known as tagged unions). To illustrate complex values (including variants), Figure 8.5 presents an ODL declaration for a class whose objects correspond to (parts of) Swiss-Prot entries. The attribute Ref returns complex values obtained by nesting sets, lists, records (struct in ODL), and variants, the latter identified by the keyword choice.

K2’s approach to data integration consists of two stages. In the first stage users specify data transformations between multiple sources and a single target. The target is virtual (unmaterialized) and is, in effect, a new view. The sources may consist of materialized data or virtual views that have been defined previously through similar data transformations.

In the second stage users formulate queries against the virtual views. OQL is an excellent vehicle for the second stage, but because it does not construct new classes as output, it is not expressive enough for the first stage. Hence, for defining sources-target transformations, K2 uses a new language, (K2MDL), which combines the syntax of ODL and OQL to express high-level specifications of middleware components called mediators, as explained previously. Some examples of K2MDL syntax are in the next section.

The rich type system used in K2 has allowed us to model a large range of practical data sources in a transparent and friendly manner.

8.3 AN EXAMPLE

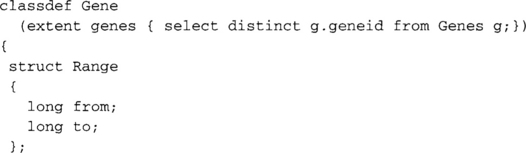

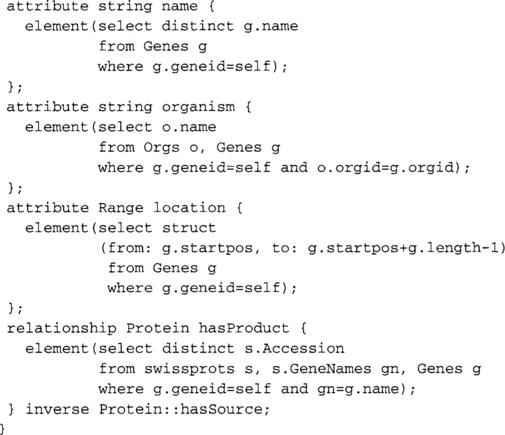

The K2 approach is illustrated with an example where the target data could be called an ontology, that is, a schema agreed upon by a class of users. It shows how a mediator generated by K2 could implement it in terms of standard data sources. Consider the following data description, which is given in ODL syntax:5

Data about proteins is recorded using a Swiss-Prot accession number, a recommended name, a set of alternate names, the protein sequence and its length, and a set of references to the genes that code for the protein. Data about genes consists of the name of the gene, the name of the organism from which it comes, the location of the gene in the genome, and a reference to the protein for which it codes. While most of our attributes have simple values, strings, and integers, alternateNames is a set of strings and location is a record. The schema also specifies that hasSource in Protein and hasProduct in Gene are more than just attributes; they form a relationship between the extents of the two classes and are inverses. This means that the two following statements are validated:

![]() Given a protein P, for each of the genes G in the set P. hasSource it is the case that G.hasProduct = P.

Given a protein P, for each of the genes G in the set P. hasSource it is the case that G.hasProduct = P.

![]() Given a gene G, it is the case that G belongs to the set (G.hasProduct). hasSource.

Given a gene G, it is the case that G belongs to the set (G.hasProduct). hasSource.

Now assume that the data about proteins and genes reside in (for illustration purposes) four materialized data sources: Swiss-Prot, Orgs, Genes, and ProteinSynonyms. Swiss-Prot contains some protein data, which can be accessed through an SRS driver that presents an object-oriented schema (i.e., a class). Orgs and Genes contain organism and gene data, respectively, in two relations (in the same or in separate relational databases). The SQL data description is given here for these relations, but in fact K2 uses an equivalent description in ODL syntax, based on the observation that relations are simply sets of records. Finally, a Web-based data source contains protein name synonyms and is modeled as a dictionary.

The K2MDL description of the integration and transformation that is performed when the sources Swiss-Prot, Orgs, Genes, and ProteinSynonyms are mapped into the ontology view. K2MDL descriptions look like the ODL definition in the ontology, enhanced with OQL expressions that compute the class extents, the attribute values, and the relationship connections (a related idea appears in a paper from the 1991 International Conference on Management of Data [18]).

The definition of the class Protein as a K2MDL classdef starts with an OQL statement that shows how to compute the extent proteins of this class by collecting the accession numbers from SwissProt. The elements of the extent are used as object identifiers (OIDs) for the objects in the class. The rest of the definition shows how to compute the value of each attribute for a generic object identified by the OID (denoted by the keyword self). The OQL function element (c) extracts the unique element of the collection, c, when c is a singleton, and raises an exception otherwise.

Note, for example, the computation of the value of the attribute alternateNames. For an object identified by self, find the Swiss-Prot entry, s, whose accession number is self, then use the description of s as a key in the dictionary ProteinSynonyms. The entry retrieved from the dictionary ProteinSynonyms [s.Description] is a set of records. Select from this set the records with names in English and collect those names into the answer. The value of the attribute is a set of strings. A further query posed against the class Protein may, for example, select objects whose alternateNames attribute contains a given synonym.

This example illustrates that relatively complex integrations and transformations can be expressed concisely and clearly, and can be easily modified.

8.4 INTERNAL LANGUAGE

The key to making ODL, OQL, and K2MDL work well together is the expressiveness of the internal framework of K2, which is based on complex values and dictionaries. ODL classes with extents are represented internally as dictionaries with abstract keys (the object identities). This framework opens the door to interesting optimizations that make the approach feasible.

The K2 internal language is organized by its type structure. There are base types such as string and number; record and variant types; collection types, namely sets, bags, and lists; and dictionary types. For each type construction there are two classes of operations: constructors, such as empty set and set union, and deconstructors, such as record field selection. The operations for collection types are inspired from the theory of monads [19] and are outlined in an article in Theoretical Computer Science [20]. For details specific to aggregates and collection conversions see Proceedings of the 7th International Conference on Category Theory and Computer Science [10]; the operations on dictionaries are described in the 1999 Proceedings of the International Conference on Database Theory [9].

The internal language derives its expressiveness from its flexibility. The primitives are chosen according to the principle of orthogonality, which says that their meaning should not overlap and that one should not be able to simulate one primitive through a combination of the others. This produces a language with fewer, but better understood, primitives. As an example, consider the following basic query statement:

This translates internally into

![]()

where SetU (x in S) T (x) is the set deconstructor suggested by the theory of monads and sngset (e) is a singleton set (i.e., the set with just one element, e).

The semantics of the set deconstructor is that of the union of a family of sets. For example, if S = {a1, …, an} then

![]()

This approach increases the overall language expressiveness by allowing any type-correct combination of the primitives (the language is fully compositional). At the same time it provides a systematic approach to the identification of optimization transformations by yielding an equational theory for the internal language. The formulas of such a theory are equalities between equivalent parts of queries, and they are used for rewriting queries in several of the stages of the optimizer. Finally, K2 exploits known efficient physical algorithms for operations such as joins by automatically identifying within queries the groups of primitives that compute these operations.

8.5 DATA SOURCES

K2 maps data from external sources into its internal language, as described previously. K2 also has a notion of functions, which are used to provide access both to stand-alone applications such as BLAST (a sequence similarity package) and to pre-defined and user-defined data conversion routines. This flexibility allows K2 to represent most data sources faithfully and usefully.

K2 accesses external information through data drivers. This is an intermediate layer between the K2 system proper and the actual data sources. There are two kinds of drivers in K2: those that are tightly integrated with the server and those that are more loosely connected.

Integrated data drivers (IDDs) are created by extending the two abstract Java classes that form K2’s driver API. The IDDs export a set of entry points to K2, connect to the data source, send queries to it, receive results from it, and package the results for use in the rest of the K2 system. The tight coupling of IDDs with the K2 system minimizes the overhead associated with connecting to the data source and allows for additional optimizations. K2 can also cache results of queries sent to the IDDs to improve overall speed.

K2 comes with an IDD that can connect to any relational database system that implements Sun’s JDBC API. A Sybase-specific IDD is also available, which takes advantage of some features of Sybase that are not available through JDBC. Oracle- and MySQL-specific versions are currently under development. To connect to a new relational database that supports JDBC, one merely needs to add the connection information to a configuration file, and K2 will automatically expose the underlying schema for querying. When the underlying schema changes, the K2 administrator must restart the IDD so it can rediscover the new schema. A procedure for automatically detecting and rediscovering schema changes is planned as a future enhancement.

Another IDD provided with K2 makes use of the World Wide Web Wrapper Factory (W4F), also developed at the University of Pennsylvania.6 W4F is a toolkit for the generation of wrappers for Web sources. New wrappers can easily be generated using W4F’s interface and can then be converted automatically to K2 drivers. Changes to the format of Web sources are partly handled by W4F’s declarative wrapper specification language. Large format changes require human intervention to re-define and re-generate wrappers.

A very powerful feature of K2 is its ability to distribute query execution using its IDD for Java RMI. This IDD can make an RMI connection to a remote K2 server and send it part of the local query for processing. Therefore, all that is required to connect to the remote K2 server and start distributing queries is a change to the local K2 configuration file.

Sometimes it is difficult to develop an IDD for a new type of data source. For example, to treat a group of flat files as a data source it is often easier to write a Perl script to handle the string manipulations involved rather than implementing them in Java, as would be required in an IDD. In fact, some data sources cannot be accessed at all from Java but only through APIs in other languages. To handle this, K2 has an IDD called the PipeDriver that does not connect to the data source directly, but to a decoupled data driver (DDD).

A DDD is a simple, stand-alone application, written in any language at all, that accepts queries through its standard input stream and writes results to its standard output. The PipeDriver takes care of sending queries to the DDD and converting the results into K2’s internal representation. It can run multiple DDDs at once to take advantage of parallelism, and it can make use of the caching mechanism built into IDDs, all of which simplifies the job of the DDD writer.

The DDD is responsible for establishing a connection to the data source (often nothing is required in this step), telling the PipeDriver it has made the connection, and waiting for a query to come in. When the DDD receives a query, it extracts the appropriate result from the data source and writes it out in a simple data exchange format. It then returns to waiting for the next query. This loop continues until the K2 server is brought down, at which point the PipeDriver tells the DDD to terminate.

DDDs have been written to connect with SRS, KEGG, and BLAST, as well as a number of Web-based sources. The time it takes to create a new DDD depends greatly on the capabilities of the data source for which it is being written and on how much intelligence is to be built into the DDD. For example, it only takes an hour or two to write a Perl script to connect to a simple document storage system; the script must be written to receive an ID, retrieve the document, and print it out in K2’s exchange format, taking into account any error conditions that might occur.

However, the DDD writer has the flexibility to create special-purpose DDDs of any complexity. One DDD has been written that performs queries over a collection of documents that come from a remote Web site. This DDD maintains a local disk cache of the documents to speed access. It is responsible for downloading new versions of out-of-date documents, taking concurrency issues into account, and parsing and indexing documents on the fly. It also supports a complex language for querying the documents and for retrieving structured subsets of their components. This DDD was written over the course of two weeks and has been expanded periodically since.

8.6 QUERY OPTIMIZATION

K2 has a flexible, extensible query optimizer that uses both rewrite rules and a cost model. The rewrite rules are used to transform queries into structurally minimal forms whose execution is always faster than the original query; this is independent of the nature of the data. On the other hand, a cost model uses information about the nature of the data, such as the size of the data sets, selectivity of joins, available bandwidth, and latency of the data sources. The cost information is used to choose between minimal queries that are incomparable with respect to the rewrite rules.

After translating the query into an abstract syntax tree, which K2 uses to represent queries internally, it is manipulated by applying a series of rewrite rules. This is where the bulk of K2’s optimization work is done.

First, a collection of rules is applied that simplifies the query by taking pieces expressed using certain kinds of tree nodes and replacing them with others. This reduces the number of types of nodes needed to deal with, thus reducing the number and complexity of the rewrite rules that follow.

Next, the query is normalized. Normalization rules include steps such as taking a function applied to a conditional structure and rewriting it so the function is applied to each of the expressions in the condition. Another normalization rule removes loops that range over collections known to be empty. Repeated application of the normalization rules, which currently number more than 20, reduces the query to a minimal, or normal, form.

A final set of rewrite rules are then applied to the normalized query. These rules include parallelizing the scanning of external data sources and pushing selections, projections, and joins down to the drivers where possible.

Even after all the rewrite rules have been applied, there may still be room for further optimization. In particular, a query may have a family of minimal forms rather than a single one. To choose between the minimal forms, the expected execution time of each version of the query is estimated using a cost model, and the fastest query form is chosen. The current cost model is still in the development stage. While it is functional and works well most of the time, it does not always choose the optimal form of the query.

8.7 USER INTERFACES

K2 has been developed using a client–server model. The K2 server listens for connections either through a socket or through Java RMI. It is easy to develop a client that can connect to K2 through one of these paths, issue queries, and receive results. Three basic clients come with K2: a text-based client, an RMI client, and one that runs as a servlet.

The interactive, text-based client connects to a K2 server through a socket connection. It accepts a query in OQL through a command-line-style interface, sends it to the server, gets the result back as formatted text, and displays it; then it waits for the next query to be entered. This simple client generally is used to test the socket connection to K2 and to issue simple queries during the development process. It is not intended to be an interface for end users.

The other type of user connection is through RMI. These connections are capable of executing multiple queries at once and can halt execution of queries in progress. This is the connection method that K2 servers use to connect to other K2 servers to distribute the execution of a query.

There is a client that makes an RMI connection to a K2 server with administrator privileges. The server restricts these connections to certain usernames connecting from certain IP addresses and requires a password. Currently, an administrator can examine the state of the server, add and remove connections to individual drivers, stop currently running queries, disconnect clients, and bring the server to a state where it can be stopped safely. More functionality is planned for administrators in the future.

A client that runs as a servlet is also included with K2. Using code developed at the Computational Biology and Informatics Laboratory (at the University of Pennsylvania), this servlet allows entry of ad hoc K2 queries and maintains the results for each username individually.

A major component of any user interface is the representation of the data to the user. As exemplified previously, a user (perhaps one serving a larger group) can define in K2MDL a transformed/integrated schema for a class of users and applications and can specify how the objects of this schema map to the underlying data sources. Users of this schema (called an ontology by some) can issue vastly simplified queries against it, without knowledge of the data sources themselves.

8.8 SCALABILITY

In theory, the K2 system can be used to interconnect an arbitrarily large number of data sources. In practice, the system has been configured with up to 30 data sources and software packages, including GUS, PubMed, MacOStat, GenBank, Swiss-Prot, BLAST, KEGG, and several relational databases maintained in Oracle, Sybase, and MySQL. Even when querying using this configuration, however, it has been rare to access more than five data sources and software packages in the same query.

The primary obstacles to scaling K2 to a larger system are (1) writing data drivers to connect to new data sources and (2) peak memory consumption. The difficulty of writing data drivers to external data sources has been mitigated to some extent by the fact that they are type specific rather than instance specific. For example, once an Oracle driver is written, it can be used for any Oracle data source. On the other hand, an AceDB driver must take into account the schema of the AceDB source and is therefore not generic. Drivers are also relatively simple in K2 because they merely perform data translation. Any complex, semantic transformations are performed by K2MDL code, which is high-level and therefore more maintainable.

While peak memory consumption has not as yet been an issue for the queries handled by K2 in the past, it could become a problem as applications become larger. K2 provides a means of limiting the number of queries it will run simultaneously and the number of data source connections it will maintain. This allows an administrator to tune the system to the capabilities of the machine on which it is running.

Because K2 is a view integration environment, it does not store any data locally; however, it may need to store intermediate results for operations that cannot be streamed (i.e., processed on the fly). At present, intermediate results are stored in main memory.

Examples of operations that cannot be streamed include sorting, set difference, nesting, and join. For example, when the difference of two data sets is taken, no output can be issued until both data sets are read; one of the data sets may have to be cached while the other is streamed and the difference is calculated. Similarly, although a join output can be produced as soon as a match is found between elements of the two data sets, data cannot be discarded until it is known not to match any future input from the other data set (see two International Conference on the Management of Data (SIGMOD) articles [21, 22] for discussions of implementations of operators in streaming environments). Thus, when data sets are large, these operations may need to cache temporary results in persistent memory. Such techniques are not currently part of the K2 system and require futher development.

Another difficulty of scaling to an arbitrarily large environment is the sheer complexity of understanding what information is available. To mitigate this, smaller mediated components can be composed to form larger mediated components. Thus, users need not be aware of the numerous underlying data sources and software systems, but they can interact with the system through a high-level interface representing the ontology of data.

8.9 IMPACT

As with Kleisli, a tremendous enhancement in productivity is gained by expressing complicated integrations in a few lines of K2MDL code as opposed to much larger programs written in Perl or C++. What this means for the system integrator is the ability to build central client-server or mix-and-match components that interoperate with other technologies. Among other things, K2 provides:

![]() Enhanced productivity (50 lines of K2MDL correspond to thousands of lines of C++)

Enhanced productivity (50 lines of K2MDL correspond to thousands of lines of C++)

![]() Maintainability and easy transitions (e.g., warehousing)

Maintainability and easy transitions (e.g., warehousing)

![]() Re-usability (structural changes easy to make at the mediation language level)

Re-usability (structural changes easy to make at the mediation language level)

K2 has been used extensively in applications within the pharmaceutical company GlaxoSmithKline. Some of the major benefits of the system exploited in these applications have been the ease with which ontologies can be represented in K2MDL and the ability to conveniently compose small mediators into larger mediators.

K2 was also used to implement a distributed genomic-neuroanatomical database. The system combines databases and software developed at the Center for Bioinformatics at the University of Pennsylvania—including databases of genetic and physical maps, genomic sequences, transcribed sequences, and gene expression data, all linked to external biology databases and internal project data (GUS [14])—with mouse brain atlas data and visualization packages developed at the Computer Vision Laboratory and Brain Mapping Center at Drexel University. The biological and medical value of the activity lay in the ability to correlate specific brain structures with molecular and physiological processes. The technological value of the activity was that K2 was ported to a Macintosh operating system environment (MacOSX), visualization packages were tightly integrated into the environment, and both an ethernet and a gigabit network were used in the application. The work was significantly facilitated by the fact that K2 is implemented in Java.

K2 now runs on Linux, Sun Solaris, Microsoft Windows, and Apple MacOS platforms.

8.10 SUMMARY

This chapter presented the K2 system for integrating heterogeneous data sources. K2 is general purpose, written in Java, and includes JDBC interfaces to relational sources as well as interfaces for a variety of special-format bioinformatics sources. The K2 system has a universal internal data model that allows for the direct representation of relational, nested complex value, object-oriented, information retrieval (dictionaries), and a variety of electronic data interchange (EDI) formats, including XML-based sources. The internal language features a set of equivalence laws on which an extensible optimizer is based. The system has user interfaces based on the ODMG standard, including a novel ODL–OQL combined design for high-level specifications of mediators.

ACKNOWLEDGMENTS

The authors would like to acknowledge the contributions of David Benton, Howard Bilofsky, Peter Buneman, Jonathan Crabtree, Carl Gustaufson, Kazem Lellahi, Yoni Nissanov, G. Christian Overton, Lucian Popa, and Limsoon Wong.

REFERENCES

[1] Robbins R.J., ed. Report of the Invitational DOE Workshop on Genome Informatics. Baltimore, MD: DOE, 1993.

[2] Melton, J., Simon, A. Understanding the New SQL. San Francisco: Morgan Kaufman; 1993.

[3] Cattell R.G.G., Barry D., eds. The Object Database Standard: ODMG 3.0. San Francisco: Morgan Kaufmann, 2000.

[4] March Buneman, P., Libkin, L., Suciu, D., et al. Comprehension Syntax. Special Interest Group on the Management of Data (SIGMOD) Record. 1994;23(no. 1):87–96.

[5] Chawathe, S., Garcia-Molina, H., Hammer, J., et al, The TSIMMIS Project: Integration of Heterogeneous Information Sources, Proceedings of the 10th Meeting of the Information Processing Society of Japan Conference. Tokyo, Japan. 1994.

[6] Levy, A., Srivastava, D., Kirk, T. Data Model and Query Evaluation in Global Information Systems. Journal of Intelligent Information Systems. 1995;5(no. 2):121–143.

[7] Haas, L.M., Schwarz, P.M., Kodali, P., et al. DiscoveryLink: A System for Integrated Access to Life Sciences Data Sources. IBM Systems Journal. 2001;40(no. 2):489–511.

[8] Chen, I-M.A., Markowitz, V.M. An Overview of the Object-Protocol Model (OPM) and OPM Data Management Tools. Information Systems. 1995;20(no. 5):393–418.

[9] Popa, L., Tannen, V. An Equational Chase for Path Conjunctive Queries, Constraints, and Views. In: Proceedings of the International Conference on Database Theory (ICDT), Lecture Notes in Computer Science. Heidelberg, Germany: Springer Verlag; 1999:39–57.

[10] Lellahi, K., Tannen, V., A Calculus for Collections and Aggregates. Moggi, E., Rosolini, G., eds. Proceedings of the 7 th International Conference on Category Theory and Computer Science, CTCS’97, Lecture Notes in Computer Science. Heidelberg, Germany: Springer-Verlag; 1997;vol. 1290:261–280.

[11] Etzold, T., Argos, P. SRS: An Indexing and Retrieval Tool for Flat File Data Libraries. Computer Applications of Biosciences. 1993;9(no. 1):49–57.

[12] Fujibuchi, W., Goto, S., Migimatsu, H., et al, DBGET/LinkDB: An IntegratedDatabase Retrieval System, Pacific Symposium on Biocomputing. 1998:683–694.

[13] Rebhan, M., Chalifa-Caspi, V., Prilusky, J., et al, GeneCards: Encyclopedia for Genes, Proteins and Diseases. Rehovot, Israel: Weizmann Institute of Science, Bioinformatics Unit and Genome Center; 1997. http://www.bioinformatics.weizmann.ac.il/cards

[14] Davidson, S., Crabtree, J., Brunk, B., et al. K2/Kleisli and GUS: Experiments in Integrated Access to Genomic Data Sources. IBM Systems Journal. 2001;40(no. 2):512–531.

[15] November Davidson, S., Overton, C., Tannen, V., et al. BioKleisli: A Digital Library for Biomedical Researchers. Journal of Digital Libraries. 1996;1(no. 1):36–53.

[16] March Wiederhold, G. Mediators in the Architecture of Future Information Systems. IEEE Computer. 1992;25(no. 3):38–49.

[17] Garcia-Molina, H., Papakonstantinou, Y., Quass, D., et al, The TSIMMIS Approach to Mediation: Data Models and Languages, Proceedings of the Second International Workshop on Next Generation Information Technologies and Systems. 1995:185–193.

[18] Abiteboul, S., Bonner, A. Objects and Views. In: Proceedings of the 1991 ACM SIGMOD International Conference on the Management of Data. San Francisco: ACM Press; 1991:238–247.

[19] MacLane, S. Categories for the Working Mathematician. Berlin, Germany: Springer-Verlag; 1971.

[20] Buneman, P., Naqvi, S., Tannen, V., et al. Principles of Programming with Collection Types. Theoretical Computer Science. 1995;149(no. 1):3–48.

[21] Ives, Z., Florescu, D., Friedman, M., et al. An Adaptive Query Execution System for Data Integration. In: Proceedings of the 1999 ACM SIGMOD International Conference on Management of Data. San Francisco: ACM Press; 1999:299–310.

[22] Chen, J., DeWitt, D., Tian, F., et al. NiagaraCQ: A Scalable Continuous Query System for Internet Databases. In: Proceedings of the 2000 ACM SIGMOD International Conference on Management of Data. San Francisco: ACM Press; 2000:379–390.

1See http://www.javasoft.com/j2se/.

2See http://www.javasoft.com/products/rmi-iiop/.

3See http://java.sun.com/products/jdbc/.

4A counterexample to this is SRS, in which a linking operator is provided to retrieve linked entries to a set of entries.

5This is, of course, a very simplified model of proteins and genes, but the intent of this example is to demonstrate K2MDL, not to develop a scientifically viable model.

6Information about W4F is available at http://db.cis.upenn.edu/Research/w4f.html.