3

Fundamentals of Statistical Data Analysis

Introduction

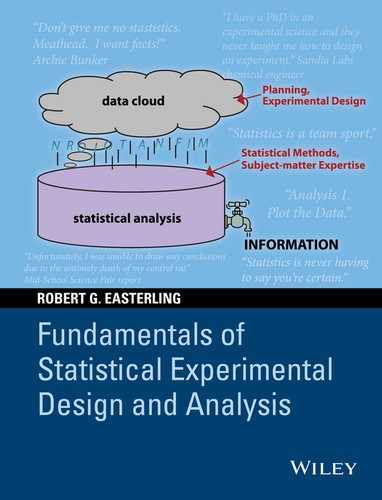

Once an experiment has been conducted and the data collected, the next task is to extract and communicate the information contained in the data (as depicted in the cloud cartoon; Fig. 1.1). The structure of an experiment dictates, to a large extent, the nature of the statistical data analysis to be carried out. (Indeed, careful planning of an experiment includes the anticipated analyses and even anticipated results.) In the remaining chapters in this book, detailed statistical data analyses will be discussed and illustrated in conjunction with the different experimental designs addressed. Some general principles and basic analyses, though, are set forth in this chapter and illustrated with simple two-treatment experiments. Two types of intertwined analysis are discussed—graphical and quantitative. My general approach is as follows:

Analysis 1. Plot the data! An analysis will often cycle between plots and calculations related to the plots as the message in the data is extracted and communicated.

Analysis 2. Do appropriate number crunching to characterize patterns seen in data plots, to separate and measure what is real from what could just be random variation, and to point the way to further data displays and analyses.

The two experiments addressed in some detail in this chapter are from the classic experimental design text, Box, Hunter, and Hunter (1978, 2005). The first, an experiment on the wear of boys’ shoes, was introduced in the preceding chapter. In this chapter, we continue that story through several layers of analyses and issues. The story gets a little lengthy, but it illustrates that there can be several legitimate ways to extract and communicate information from that data cloud.

Next, we take up the story of a gardener’s experiment that compares two tomato fertilizers. We also address what happens after the analysis: business decisions and consequences. Both of these examples are very simple experiments, but they illuminate fundamental issues and concepts that come into play in all experimental contexts.

Boys’ Shoes Experiment

Experimental design

Consider again the boys’ shoes experiment introduced in Chapter 2. The data from that BHH experiment are given in Table 3.1. Recall that each of 10 boys in the experiment wore one shoe of each sole material, A or B, randomly assigned to the left and right feet. They wore the shoes for some period of time after which the percent wear was measured. Thus, and this is important, each measured response, the percent wear on a shoe sole, is associated with a boy, a foot, and a material. This association is shown in Table 3.1.

Table 3.1 Boys’ Shoes Example: Percent Wear for Soles of Materials A and B; Material A on the Indicated Foot.

Source: Box, Hunter, and Hunter (2005, p. 81); reproduced with permission from John Wiley & Sons.

| Boy | Foot wearing A | Wear, A (%) | Wear, B (%) |
| --- | --- | --- | --- |
| 1 | L | 13.2 | 14.0 |
| 2 | L | 8.2 | 8.8 |
| 3 | R | 10.9 | 11.2 |
| 4 | L | 14.3 | 14.2 |
| 5 | R | 10.7 | 11.8 |
| 6 | L | 6.6 | 6.4 |
| 7 | L | 9.5 | 9.8 |
| 8 | L | 10.8 | 11.3 |
| 9 | R | 8.8 | 9.3 |
| 10 | L | 13.3 | 13.6 |

The reason for pointing out the association is that any data plot (Analysis 1) should initially reflect this association, in all its dimensions if possible. If, however, the data show no evidence of a particular association, then subsequent displays need not maintain the linkage. In the shoe experiment, the assignment of materials to feet was done by flipping a coin, with the result that seven boys wore material A on the left foot and three wore it on the right foot, as is also shown in Table 3.1.

Here’s a design issue right off: an alternative experimental design would have balanced the left/right (L/R) assignments—five randomly selected boys would have been assigned material A to their left feet, and the other five would have B on their left feet. If the experimenters had thought the L/R choice might have an appreciable effect, they might have incorporated such a balancing constraint in the design (it can be shown mathematically that equal replication maximizes the precision with which any L/R difference can be estimated). The experimenters (relying on subject-matter expertise—knowledge that both feet must experience very similar conditions) may not have expected a bias toward one foot or the other and so did not balance the L/R assignments, but because they took the precaution of recording the assignments, we can check that possibility.

(Though not given in the example, for a carefully carried out experiment, one might expect or hope that other, “ancillary,” data pertaining to the boys would have been obtained, such as age, weight, and the number of days the shoes were worn. The analysis would also look for relationships between these variables and shoe wear.)

Graphical displays

There are several ways to display the shoe-wear data. Because there is a pair of data for each boy, one appropriate plot is a scatter plot (an “XY (Scatter)” plot in Excel terminology, “Scatterplot” in Minitab) of the data pairs. Figure 3.1 shows a scatter plot of the B-material wear (Y axis) versus the A-material wear (X axis), for the 10 boys, with separate plotting symbols used for the foot on which the material A shoe was worn. If it was important to know which point corresponded with which boy, the points could have been labeled with the boy number. Figure 3.1 also shows an overlay of the equal-wear (45°) line. This line facilitates the visual comparison of shoe sole materials. Points above the line are cases for which there was more wear on the B-material sole than on the A-material sole and vice versa for points below the line.

Figure 3.1 Scatter Plot of B-Material Wear versus A-Material Wear; separate plotting symbols for the left and right foot assignments of material A.

From Figure 3.1, it is fairly clear that material A generally wore better (less thickness loss) than B in this experiment: in eight of the 10 cases, there was less wear with A than with B (the points above the diagonal line). In the two situations in which B wore less than A, the difference was comparatively small—these two points being quite close to the diagonal line. Furthermore, there is no evident separation of the three “A-right” points and the seven “A-left” points, so the expectation that there would be no foot bias is supported by the data. Subsequent plots will therefore not maintain the L/R distinction.

Although the data favor material A, the differences appear to be small, especially in comparison with the variation among boys. The wear percentages range from about 6 to 14% across the 10 boys, but the A–B differences, as can be seen from Table 3.1, are generally less than a percentage point. The important questions of whether the wear differences are statistically or practically or economically significant will be addressed later in this analysis.
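The comparisons read off the plots can also be checked numerically. The following is a minimal sketch in Python (not the software used in this text; the variable names are illustrative assumptions) that enters the Table 3.1 data, forms the B–A differences, and counts the cases in which A showed less wear.

```python
# Percent wear from Table 3.1; position i corresponds to boy i + 1.
wear_a = [13.2, 8.2, 10.9, 14.3, 10.7, 6.6, 9.5, 10.8, 8.8, 13.3]
wear_b = [14.0, 8.8, 11.2, 14.2, 11.8, 6.4, 9.8, 11.3, 9.3, 13.6]

# B - A differences, rounded to the one-decimal resolution of the data.
diffs = [round(b - a, 1) for a, b in zip(wear_a, wear_b)]

# Cases in which A showed less wear than B (points above the 45-degree line).
a_wins = sum(d > 0 for d in diffs)

# Average B - A difference across the 10 boys.
mean_diff = sum(diffs) / len(diffs)

print("B - A differences:", diffs)
print("A wore less in", a_wins, "of", len(diffs), "cases")
print("average difference:", round(mean_diff, 2))
print("range of wear:", min(wear_a + wear_b), "to", max(wear_a + wear_b))
```

Only one difference (boy 5) exceeds a percentage point in size, while the wear values themselves span roughly 6 to 14%.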

Two other plots make it easier to see the differences between A and B for the 10 boys (compared to reading the distances of the points in Fig. 3.1 from the diagonal line). One is a scatter plot of the A and B wear percents versus boy number, shown in Figure 3.2. Now, there is no intrinsic ordering of boys—it could have been alphabetical ordering, or by the date they turned in their shoes, or completely haphazard. So, the purpose of the plot is not to look for a relationship between shoe wear and boy number. The purpose is to facilitate the comparison of materials A and B across the 10 boys. (Note that if the boys had been characterized by variables such as age, weight, or number of days the shoes were worn, then it would have been meaningful, and maybe informative, to have plotted the A and B wear data vs. such variables.)

Figure 3.2 Wear % by Material and Boy.

Figure 3.2 shows clearly that A “won” eight of the 10 comparisons (less wear! more often!) and that in the two cases in which B won (boys 4 and 6), the difference was quite small in comparison with A’s winning margins. Details are still to come, but the thoughtful reader may have some intuition that the probability, say, of getting as many as eight heads (wins for A) in 10 fair tosses (boys) of a fair coin is fairly small, so, by comparison, the fact that A won eight of 10 comparisons is at least a hint that the material difference is “real,” not just random.

One other plot that can be used to show these data is a line plot. This plot (Fig. 3.3) is simply a collection of lines connecting the A and B wear data for each boy separately. We see that eight of 10 of the lines slope upward, again indicating more (worse) wear for B than A in eight of the 10 cases, while two lines have slightly negative slopes, reflecting the two cases in which B had less wear than A. The line plot is useful for a small number of comparisons, but if we had many more than 10 cases, the plot would become unreadable.

Figure 3.3 Line Plot of Wear Data for Materials A and B.

Figure 3.3 also shows quite markedly again the substantial differences among boys. The amount of sole wear ranged roughly from 6 to 14%. A shoe manufacturer is probably more interested in extremes than in average wear. If the company conducting this experiment could identify the factors leading to relatively high sole wear, say, physical characteristics of the boys or their activities, they might be able to design a more robust shoe and expand their market. Or they could print a warning on the box: Your Momma says, “Pick your feet up!” Figure 3.3 also shows the close association between shoe sole wear on a boy’s two feet. The differences between feet are small relative to the differences among boys. Everywhere one foot goes the other goes, too.

Significance testing

Displays of the data from the shoe experiment (Figs. 3.1–3.3) showed that sole material A, the currently used material, tended to wear better than the cheap potential substitute, material B. The differences varied, though, among the 10 boys, which is not surprising: shoe sole manufacturing and, especially, shoe wear are not perfectly repeatable or perfectly measured processes. There is bound to be some inherent variability of wear, even if the two shoes worn by a boy both had the same sole material and even if the two shoes traveled the same terrain. The question to be addressed is: Even in light of this inherent process and product variability, is there evidence that one material is better than the other? And if so, how much better?

Statistical methods address these questions by making comparisons:

We compare the “data we got” to a probability distribution of “data we might have gotten” (under specific assumptions).

This comparison is the basic idea of statistical “significance testing.” To develop this technique, the concept of a “probability distribution of data we might have gotten” needs to be explained. This requires a discussion of probability. Probability provides the framework against which an experiment’s data can be evaluated.

Probability and probability distributions

It is natural to think of probability in terms of games of chance. In a single fair toss of a fair coin, there is a probability of .5 that the result is a head and, consequently, a probability of .5 that the result is a tail (a 50–50 chance in common lingo). In lotteries, assuming a fair physical or computerized method of generating random numbers, the probability of a winning combination of numbers can be calculated (by the number of combinations that are winners divided by the total number of possible combinations). What the term probability means is that if, say, coin tossing was repeated an infinite number of times, a head would occur in half of the trials. Further, the sequence of heads and tails would be “random”; each outcome is independent of all the others. In the other example, the lottery would be won in the calculated fraction of a conceptual infinite replay of the lottery.

What, though, can we expect in a limited number of trials, say, 10? If a fair coin is fairly tossed 10 times, the 11 possible outcomes range from 10 heads and zero tails to zero heads and 10 tails. Intuitively, we know that some outcomes, such as 5 heads and 5 tails, are more likely (or probable) than other outcomes in that they would occur more often in repeated sets of 10 tosses than the extreme results of 10 heads or 10 tails. Probability theory supports and quantifies this intuition. Numerous science fair projects have tested the underlying theory.
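A computer can stand in for the science fair project. The sketch below is an illustration only: the seed and the number of repeated sets are arbitrary assumptions, and Python's pseudorandom generator plays the role of the fair coin. It tallies how often each number of heads occurs in repeated sets of 10 tosses.

```python
import random
from collections import Counter

rng = random.Random(2024)   # fixed seed (arbitrary) so the run is repeatable
n_sets = 20_000             # number of repeated sets of 10 tosses (arbitrary)

# Each of the 10 random bits plays the role of one fair toss (1 = head),
# so counting the 1 bits gives the number of heads in a set of 10 tosses.
heads_counts = Counter(
    bin(rng.getrandbits(10)).count("1") for _ in range(n_sets)
)

# Observed proportion of sets giving 0, 1, ..., 10 heads.
for heads in range(11):
    print(heads, round(heads_counts[heads] / n_sets, 3))
```

The middle outcomes should dominate the tally, with 10 heads or 10 tails turning up only rarely.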

Probability theory tells us the following: under the assumption of n “independent trials,” each with probability p of a particular outcome, the probability of observing exactly x of these outcomes is given by what is called the binomial probability distribution (Wikipedia 2014a and numerous statistical texts). (Independent trials mean that the outcome of one trial does not affect the outcome of other trials.) This probability distribution is a mathematical function that gives the probability of all of the possible outcomes, x = 0, 1, 2, …, n − 1, n. The mathematical expression for the binomial probability distribution is given in many statistical texts, and the distribution is available in spreadsheet software such as Excel and in statistical packages such as Minitab. Appendix 3.A to this chapter gives the formula for the binomial distribution and discusses statistical aspects of the distribution. For our present purposes, we will rely on software and forego the mathematics. Trust us.

For the fair coin-tossing case of n = 10 and p = .5, the binomial probability distribution of the number of heads is tabulated and plotted in Figure 3.4. Figure 3.4 shows that the probability of all heads (or all tails) is .001 (actually $.5^{10} = 1/1024 = .00098$). The most likely outcome, 5 heads and 5 tails, has a probability of almost 25%. This means that if a fair coin was fairly flipped 10 times, over and over, the proportion of cases in which five heads and five tails would result is .246. Other outcomes have lower probabilities that decrease as possible outcomes differ more and more from the most likely outcome of five heads and five tails. The probabilities of the 11 possible outcomes sum to 1.0. (It is a property of probability distributions that the sum of the probabilities for all possible outcomes must equal 1.0.) Note also that this distribution is symmetric: the probability of, say, 3 heads and 7 tails is the same as the probability of 7 heads and 3 tails, namely, .117. For a biased coin, in which case the probability of a head on a single toss is not .5, the distribution would not be symmetric.

Figure 3.4 Binomial Distribution. B(x:10, .5) denotes the probability of x heads in 10 fair tosses of a fair coin.
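For readers who want to reproduce the tabulated probabilities, here is a minimal sketch in Python. It uses the standard binomial formula, (number of ways to choose x of n trials) × p^x × (1 − p)^(n − x); the function name `binomial_pmf` is my own label, not something defined in this text.

```python
from math import comb

def binomial_pmf(x, n, p):
    """Probability of exactly x outcomes of interest in n independent trials."""
    return comb(n, x) * p**x * (1 - p) ** (n - x)

n, p = 10, 0.5
pmf = [binomial_pmf(x, n, p) for x in range(n + 1)]

# Tabulate the probability of x heads for x = 0, 1, ..., 10.
for x, prob in enumerate(pmf):
    print(x, round(prob, 3))

print("sum of probabilities:", sum(pmf))
```

Changing `p` to a value other than .5 shows the loss of symmetry for a biased coin.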

Sign test

Why do we care about this particular binomial distribution? Our interest is comparing shoe sole materials, not flipping coins. Well, if there really is NO difference between materials, the outcome, “A wears less than B,” would be expected to occur half the time, like the heads outcome for a fair coin toss. In this case, the wear test results for 10 boys would then be analogous to, or comparable to, 10 fair tosses of a fair coin. To evaluate the viability of the “hypothesis” of no difference between materials, it is thus appropriate to compare the experimental outcome (“the data we got”), namely, that eight of 10 cases had the result, A wears less than B, to the “probability distribution of data we might have gotten under the assumption of no real difference in materials.” This distribution is the binomial probability distribution with p = .5 portrayed in Figure 3.4.

Figure 3.5 shows the comparison. In statistical terminology, the binomial distribution to which the experimental outcome is compared is called the “reference distribution.” We “refer” the data (we got) to this probability distribution (of data we might have gotten) to evaluate the degree of agreement of the data with the situation of no real difference between materials.

Figure 3.5 Comparison of Shoe Experiment Results to the Binomial Distribution for n = 10, p = .5.

The message from the comparison in Figure 3.5 is that the outcome, A wins eight times in 10 trials, is fairly unlikely, just by chance, if there was no underlying difference between A and B. In particular, the probability of that particular outcome is only .044.

So, have we proved that A is better than B, beyond a reasonable doubt, as is required in jury trials? No, not at all. The evidence supporting that claim is strong, but not absolute. If A had won nine of the comparisons, the evidence would be stronger; if A had won all 10 comparisons, we would still not be absolutely certain that A was better: there is still a .001 probability of that extreme result just by chance. This is the sort of uncertainty we have to cope with in interpreting experimental data and making decisions based on the data and our subject-matter knowledge (statistics means never having to say you’re certain). In spite of this uncertainty, we are obviously more informed having done the experiment than if we had not.

Figure 3.5 shows the comparison of data we got to the distribution of data we might have gotten. The picture tells the story. The picture is a little indefinite, with respect to an unequivocal decision about the equality of the materials, but that’s because of the limited amount of data available to test the hypothesis of equality. As shown in the following subsections, by other analyses, we can sharpen the comparison substantially, but not eliminate all uncertainty.

Graphical comparisons of the data we got to a reference distribution of data we might have gotten can become a little unwieldy and take up too much space in a report or text. Statistical convention is to summarize this picture by a number called the “P-value.” The P-value tells the reader how far out on one tail or the other of the reference distribution the data we got fall. (Most distributions we deal with, such as the upcoming “bell-shaped curve” and the Normal distribution, are shaped so that the occurrence probability of possible outcomes decreases in the tails of the distribution.) More technically:

- The P-value equals the probability of an outcome that disagrees with the hypothesis, or assumption, used to construct the reference distribution, by as much as or more than the observed data do.

In our case, the outcomes that define the P-value are the cases when A wins 8, 9, or 10 of the comparisons. The outcome of 8 wins for A is what was observed; 9 and 10 are the other outcomes that define the upper tail: more decisive wins for A. Thus, the probability of these outcomes, by Figure 3.5, is P = .044 + .010 + .001 = .055. Because we considered only those cases for eight or more wins by A, which corresponds to the upper tail of the probability distribution in Figure 3.5, this would be called an upper one-tail P-value. The P-value tells us that the data we got correspond to the outcome that defines the upper .055 (or 5.5%) tail of its reference distribution. That is, reporting that the upper-tail P-value is .055, in this case, is numerical shorthand for the picture showing that the data we got fell at the .055 point of the upper tail of its reference distribution of possible data, calculated under the assumption of no real difference between the two materials.
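The upper-tail sum can be verified directly. This is a minimal sketch in Python, assuming only the counts stated above (10 comparisons, 8 wins for A); the variable names are illustrative.

```python
from math import comb

n = 10        # boys (paired comparisons)
a_wins = 8    # cases in which A showed less wear than B

# Under the assumption of no difference, each comparison behaves like a fair
# coin toss, so each of the 2**n win/loss patterns is equally likely.
# Count the patterns with 8, 9, or 10 wins for A and divide by the total.
upper_tail = sum(comb(n, k) for k in range(a_wins, n + 1)) / 2**n

print(round(upper_tail, 3))
```

The count is 45 + 10 + 1 = 56 patterns out of 1024, which rounds to the .055 reported above.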

In this situation, subject-matter knowledge (presumably) tells us that A should wear better than the cheaper material, B. That’s why B is cheaper. So, it is appropriate to focus our interest and analysis on the cases in which A won eight cases or more—the upper tail of the reference distribution. (If A had won only two of the 10 comparisons, or fewer, the message would be that B is cheaper and wears better. Changing to B is then a win–win situation.)

The process we have just gone through is called a “significance test” in statistical literature. Because this analysis only considered the direction, or sign (positive or negative) of the A versus B comparisons, the particular test used here is called the “sign test” (Wikipedia 2014b). The reference distribution for the sign test, in this example, is the binomial distribution of positive or negative outcomes, or heads and tails by analogy, for the case of n = 10 and p = .5.

Misinterpretation of P-values

There is a tendency to misinterpret a P-value as the probability, in this example, that the A and B shoe sole materials wear the same. However, “A and B shoe sole materials wear the same” is not a random variable, like the outcome of 10 tosses of a fair coin. This proposition doesn’t have a probability distribution, so you cannot make probability statements about it.

The P-value is simply a numerical summary of the comparison of the data we got (8 wins out of 10 trials for A) to the distribution of data we might have gotten if the proposition of no difference were true, namely, the binomial distribution with p = .5. The P-value summarizes that comparison by telling us how far out on the tail of that distribution the experiment’s outcome fell. The smaller the P-value, the sharper the level of disagreement between the data we got and the distribution of data we might have gotten.

As will be seen in the following sections, there can be more than one way to summarize the data we got and make the comparison to data we might have gotten, under the situation in which A and B wear the same.

Also, a P-value does not tell one anything about the magnitude of the effect that is being estimated from the data. For example, the small P-value for the boys’ shoes sign test does not indicate how much difference there is between the underlying probability that A wears better than B and the hypothesized value of p = .5. Statistically significant and practically meaningful are not the same thing. P-values have been a subject of much discussion in the scientific literature. For a good summary of the issues, see Nuzzo (2014).

Randomization test

The sign test we just carried out was based on considering only the sign of the B–A differences, case by case. In eight of the 10 cases, that difference was positive; B wore more than A. Summarizing the data in this way ignores the sizes of the differences in wear percentages. A large difference is not distinguished from a small difference with the same sign. The magnitudes of the differences tell us more about the A versus B difference. As we saw in the data plots, material A generally won by a larger margin in its eight wins than B did in its two wins. We can make a more sensitive comparison of the two materials if we consider the sizes of the differences. Size matters.

Think again about the hypothesis (assumption) of no real difference between the two materials. If that assumption is true, then the observed differences just reflect the random outcomes of assigning A and B to left and right feet. Boy 1 had A on his left foot and recorded 13.2% wear for A and 14.0% for B on his right foot. If the randomization had put B on his left foot, then, assuming no difference between materials, boy 1’s data would have been 14.0% for A and 13.2% for B. That is, his observed B–A difference in wear could have been either .8 or −.8%, each with probability .5. Similarly, for the rest of the 10 boys, each of their B–A differences would have been changed in sign if the foot assignment had been reversed. There are thus $2^{10} = 1024$ possible (and equally likely) outcomes for the signed differences between B and A under the assumption of no real difference between materials.

To compare the data we got to the distribution of data we might have gotten, if there was no difference between materials A and B, we need a summary statistic that reflects the size of the difference. A natural statistic is the average difference. For the observed data, the average difference between the B and A wear percentages (taking B–A) is .41%. We will call this average difference dbar. Now, for each of the 1024 possible A/B foot assignments, we can calculate the resulting d-values and their average, dbar. For example, if all 10 assignments were the opposite of the assignments in this experiment, dbar would equal −.41%. BHH (2005) did the full set of calculations to create the probability distribution of possible dbars, compared the “dbar we got” to this reference distribution, and found that only three of the 1024 possible average differences were greater than .41%; four of them were exactly .41%. With a “continuity correction,” they counted half of the latter four outcomes to obtain a (one-tail) P-value of (3 + 2)/1024 = 5/1024 = .005. The picture that this P-value summarizes is a histogram of all 1024 possible dbars, under the assumption of no difference between A and B materials, with the outcome we got, dbar = .41%, corresponding to an upper-tail probability of .005. This P-value is substantially stronger evidence against the assumption of no real difference between materials than the P-value of .055 for the sign test: the data we got are more extreme with respect to the randomization-test reference distribution than they were with respect to the sign-test reference distribution.
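The full enumeration is small enough to repeat on any computer. The sketch below is my own illustration in Python, not BHH's calculation. It flips the sign of each boy's B–A difference in all 1024 ways and counts the outcomes. Two choices here are assumptions made for convenience: the differences are held in tenths of a percent so the arithmetic is exact, and sums are compared rather than averages (equivalent, since n is fixed).

```python
from itertools import product

# Observed B - A differences from Table 3.1, in tenths of a percent.
diffs_tenths = [8, 6, 3, -1, 11, -2, 3, 5, 5, 3]
observed_sum = sum(diffs_tenths)   # 41 tenths, i.e., dbar = .41%

greater = equal = 0
# Each boy's difference either keeps or flips its sign: 2**10 assignments.
for signs in product((1, -1), repeat=len(diffs_tenths)):
    total = sum(s * d for s, d in zip(signs, diffs_tenths))
    if total > observed_sum:
        greater += 1
    elif total == observed_sum:
        equal += 1

n_assignments = 2 ** len(diffs_tenths)
# Continuity correction: outcomes tied with the observed value count half.
p_value = (greater + equal / 2) / n_assignments

print(greater, equal, n_assignments, round(p_value, 3))
```

The counts agree with those quoted from BHH: three assignments give a larger average difference and four tie with it.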

This calculation of the randomization test (Wikipedia 2014c) is particularly appropriate when in fact the experiment was conducted by randomly assigning materials to feet, as was the case here. Random assignment of treatments to experimental units establishes the validity of the randomization test. That’s important. It justifies comparing “the data we got” to a reference distribution of “data we might have gotten” based on the assumption of random treatment assignments. Similarly, randomization established the validity of the sign test. The two tests gave different results because two different summary statistics and corresponding reference distributions were used in the analyses.

The small P-value of .005 means that the observed outcome is in the extreme upper tail of the distribution of average differences generated under the assumption (hypothesis) that there is no difference between materials A and B. So, it is quite unusual (though still not impossible) to get a result, just by chance, in which the average B–A difference is as large as or larger than the experiment’s result of dbar = .41%. We have quite strong evidence that there is a real difference, on average, between the sole materials. Whether that average difference is important in selling shoes, the question that motivated this experiment, remains to be determined. We’ll get to it. But first, let’s consider one other approach to choosing the distribution of “data we might have gotten.”

Normal distribution theory t-test

The Normal distribution is a mathematical function that defines a “bell-shaped curve.” This curve, shown in Figure 3.6, is an example of a probability density function. The vertical axis is probability density. The density function has the property that the total area under the curve is 1.0, just as the sum of the probabilities of the eleven discrete outcomes of the binomial distribution in Figure 3.4 is 1.0. (The vertical axis for the other probability density functions illustrated in this text will not be labeled because it is not of intrinsic interest.) The Normal distribution, however, pertains to the distribution of a continuous variable, x. If you draw, or generate, a random value of x from a Normal distribution, the probability that x falls in a particular interval, say, from a to b, is given by the area under the curve between a and b. Software or the use of widely available tables of the Normal distribution can be used to calculate these probabilities.

Figure 3.6 The Standard Normal Distribution. Statistical convention is to denote a variable that has the standard normal distribution by z.

The Normal distribution is a mathematical ideal, but real-world populations may be adequately approximated by it. The more important characteristic, though, for analyzing real-world data is that random samples from a Normal distribution (e.g., computer generated) often look like real data, whether small numbers of observations or large. There are gaps, clusters, apparent outliers, longer tails in one direction or the other, etc. That is, real data we get from experiments and other sources can often look like a “random sample” from a Normal distribution (meaning independent observations generated, e.g., by a computer programmed to do so; see Appendix 3.B for a demonstration of random sampling from a Normal distribution). This is quite fortunate because an awful lot of statistical theory has been built on the model (assumption) of data obtained by random sampling from a Normal distribution. So, random samples from a Normal distribution can serve as a frame of reference and source of reference distributions for the “data we got.”

The Normal distribution, as a mathematical function, is characterized by two parameters—two quantities in the mathematical expression for the distribution. These parameters determine where the curve is centered and how spread out it is. Conventional symbols used for these parameters are μ (mu) for the distribution mean (center) and σ (sigma) for the distribution standard deviation (spread). The standard Normal distribution in Figure 3.6 corresponds to μ = 0 and σ = 1.0. (By way of comparison, the two parameters of the binomial distribution are n and p, and they define a particular binomial distribution and together determine the center and spread and shape of a particular binomial distribution.)

The Normal distribution is symmetric about its center, which is the distribution mean, μ. The Normal distribution also has the properties that 95% of the distribution falls in the interval, μ ± 1.96σ (typically rounded to μ ± 2σ), and 68% of the distribution falls in the interval, μ ± 1.0σ. Thus, the larger σ is, the more spread out the distribution is. As mentioned, software can calculate any probabilities of interest for a Normal distribution, given input values of μ and σ. Textbooks generally have a table of standard Normal distribution probabilities and percentiles. Much more extensive discussions of the Normal distribution can be found in many statistical texts. My focus in this chapter is on how the Normal distribution can help us evaluate the difference between shoe sole materials and, still to come, tomato fertilizers.
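As one example of the software route, the sketch below uses the `NormalDist` class from Python's standard `statistics` module to check the 95% and 68% figures. The helper name `central_probability` is hypothetical, introduced only for this illustration.

```python
from statistics import NormalDist

z = NormalDist(mu=0.0, sigma=1.0)   # the standard Normal distribution

def central_probability(dist, k):
    """Probability that a draw falls within k standard deviations of the mean."""
    upper = dist.cdf(dist.mean + k * dist.stdev)
    lower = dist.cdf(dist.mean - k * dist.stdev)
    return upper - lower

print(round(central_probability(z, 1.96), 3))   # interval mu +/- 1.96 sigma
print(round(central_probability(z, 1.0), 3))    # interval mu +/- 1.0 sigma

# The same proportions hold for any other choice of mu and sigma.
other = NormalDist(mu=10.0, sigma=2.0)
print(round(central_probability(other, 1.96), 3))
```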

Figure 3.7 shows an individual value plot (from Minitab) of the 10 differences, yet another way to display this experiment’s data. As we have noted before, two of the differences are negative; the other eight are positive. The pattern of variability among these 10 data points is not at all unusual when sampling from a Normal distribution (see Appendix 3.B), so to develop a reference distribution based on the Normal distribution model is a reasonable thing to do. (Ties, which are unusual for a continuous variable, result from the resolution of the measurements, which were rounded to one-tenth of a percent.)

Figure 3.7 Individual Value Plot of Shoe-Wear Differences (%). The average difference is indicated by the blue symbol.

Now, if there is no real difference between materials A and B, the appropriate Normal distribution model for measured differences would have a mean of zero. Thus, to address the question whether it is real or random, we will compare the data in Figure 3.7 to data that could have come from a Normal distribution with a mean of zero (but with unspecified standard deviation).

Eyeball-wise, is it easy to imagine a Normal distribution centered at zero yielding a random sample as off-centered from zero as the data in Figure 3.7? I don’t think so, but that’s a subjective impression based on my vast experience. The following analysis calibrates this visual impression.

As in the previous two analyses (the sign test and the randomization test), the comparison of the data we got to the distribution of data we might have gotten from a Normal distribution will be done using a summary statistic. In this case (theory tells us—Trust me!), the appropriate summary statistic is what statisticians call the t-statistic. This statistic is a function of the sample size, n, the data average, dbar, and s, the standard deviation of the observed differences. The sample standard deviation is equal to

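$$s = \sqrt{\frac{\sum_{i=1}^{n} (d_i - \bar{d})^2}{n - 1}}$$

where $d_i$ represents the ith wear difference, B–A, for the ith boy, and $\bar{d}$ (dbar) is the average of the n differences. For a random sample of data from a Normal distribution, s is an estimate of σ.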

In particular, Normal distribution theory tells us that in random sampling from a Normal distribution with mean μ, the statistic

$$t = \frac{\bar{d} - \mu}{s/\sqrt{n}}$$

has a known probability distribution, known as the “Student’s t-distribution.” This relationship is what links the data we got to the distribution of data we might have gotten for a particular value of μ. The t-distribution depends only on a parameter called the degrees of freedom (generally abbreviated df) associated with the standard deviation, s, namely, n − 1. That is, the distribution does not depend on the Normal distribution’s unknown mean, μ, or standard deviation, σ. For moderately large n (say, n > 30), the t-distribution is closely approximated by the standard Normal distribution.

The term “degrees of freedom” needs some explanation. The deviation of the ith difference from dbar is di − dbar. The above formula for s involves the sum of the squares of these deviations. A mathematical property of the unsquared deviations is that they sum to zero. This means that if you arbitrarily specified n − 1 of these deviations, the remaining deviation would be determined by subtraction (because the sum of all the deviations has to be zero). Hence, in engineering terminology applied to statistics, there are n − 1 degrees of freedom associated with the standard deviation, s.

If there is no real, underlying, difference between the two materials, then μ = 0. Substituting 0 for μ in the above expression for t leads to the test statistic, $t = \bar{d}/(s/\sqrt{n})$. The distribution of t-values we might have gotten when μ = 0 is the t-distribution with n − 1 degrees of freedom. Thus, calculating the t-statistic based on the data we got and comparing the calculated t to the t-distribution with n − 1 df provides another significance test for the comparison of the wear qualities of the two materials.

For the shoe data,

$$t = \frac{.41}{.387/\sqrt{10}} = \frac{.41}{.122} = 3.4$$

The t-distribution, more appropriately the family of t-distributions, is widely tabulated and available in software. Figure 3.8 displays the t-distribution with 9 df and shows where our observed t-value of 3.4 falls on this distribution and the corresponding tail probability, the P-value. Under this distribution, the probability of a t-value greater than or equal to 3.4 is .004. This one-tail P-value summarizes the graphical comparison in Figure 3.8 and indicates that the t-value we got is rather unusual if there is no difference between shoe materials. This t-test P-value is quite close to the .005 obtained under the randomization test, which hints at another reason that an analysis based on the Normal distribution can often be used: the t-test based on Normal distribution theory often provides a good approximation to the “exact” randomization test, a test which depended only on the assumption of random assignment of treatments to experimental units. Thus, we can often use the extensive Normal distribution-based analysis methods in place of the less available and sometimes complex randomization analyses.

Figure 3.8 Comparison of the Observed t-Value (3.4) to the t-Distribution with 9 df.

Figure 3.8 and the P-value summarizing that comparison tell us that the evidence is strongly against concluding that the observed (average) difference between materials is purely random. The evidence strongly indicates that the difference is real because it is very rare that a random sample from a Normal distribution with a mean of zero could yield data as far offset from zero as our observed shoe material differences (see Fig. 3.7).

This analysis is known as the “paired t-test analysis,” and it can be carried out by various statistical software packages. Table 3.2 shows the Minitab output for this analysis.

Table 3.2 Minitab Output for Paired t-Test: Boys’ Shoes.

Paired T for B–A

| | N | Mean | StDev | SE Mean |
| --- | --- | --- | --- | --- |
| B | 10 | 11.04 | 2.52 | .796 |
| A | 10 | 10.63 | 2.45 | .775 |
| Difference | 10 | .41 | .387 | .122 |

95% CI for mean difference: (.13, .69).

t-test of mean difference = 0 (vs. not = 0): t-value = 3.35, P-value = .009.

Table 3.2 introduces some new terminology: the column labeled SE Mean, which denotes the standard error of the mean, discussed in more detail below and in Appendix 3.B. The mean of interest in this analysis is the average wear difference, dbar = .41. The standard error associated with dbar is simply the denominator of the above t-statistic: $s/\sqrt{n} = .387/\sqrt{10} = .122$.

The ratio of dbar to its standard error is t = .41/.122 = 3.4. The t-value of 3.4 means that the difference between the observed average wear difference of .41% and zero is equal to 3.4 standard errors.

(For reasons to be discussed later, Table 3.2 pertains to the case of a two-tailed significance test: the P-value is the combined probability in the tail above t = 3.4 and the tail below t = −3.4. Thus, the P-value in Table 3.2 is twice the upper-tail P-value, rounded. Minitab’s two-tail analysis also includes a 95% confidence interval on the underlying average difference, which will be discussed in the following and used in subsequent analyses.)
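
To make the link between the summary statistics and the P-values concrete, here is a minimal sketch that recomputes the standard error, the t-value, and both tail probabilities from n = 10, dbar = .41, and s = .387. To stay self-contained it integrates the t density numerically with Simpson's rule rather than calling a statistics package; the function names (`t_pdf`, `t_upper_tail`, `paired_t`) are illustrative assumptions, not part of Minitab or Excel.

```python
import math

def t_pdf(x, df):
    """Density of Student's t-distribution with df degrees of freedom."""
    c = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return c * (1 + x * x / df) ** (-(df + 1) / 2)

def t_upper_tail(t, df, steps=2000):
    """P(T >= t) for t >= 0: 0.5 minus the Simpson's-rule area under the density on [0, t]."""
    h = t / steps  # steps must be even for Simpson's rule
    area = t_pdf(0.0, df) + t_pdf(t, df)
    for i in range(1, steps):
        area += (4 if i % 2 else 2) * t_pdf(i * h, df)
    return 0.5 - area * h / 3

def paired_t(dbar, s, n, mu0=0.0):
    """Return (standard error, t-value, one-tail probability beyond |t|)."""
    se = s / math.sqrt(n)
    t = (dbar - mu0) / se
    return se, t, t_upper_tail(abs(t), n - 1)

se, t, p_one = paired_t(dbar=0.41, s=0.387, n=10)
print(f"SE = {se:.3f}, t = {t:.2f}, one-tail P = {p_one:.3f}, two-tail P = {2 * p_one:.3f}")
```

With SciPy installed, `scipy.stats.t.sf(t, df)` returns the same upper-tail probability; the hand-rolled integration is used here only so the sketch runs without extra packages.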

Excel’s® analysis of the shoe data is shown in Table 3.3.

Table 3.3 Excel Paired t-Test Analysis of the Boys’ Shoe Data.

t-Test: Paired Two Samples for Means

| | B | A |
| --- | --- | --- |
| Mean | 11.04 | 10.63 |
| Variance | 6.34 | 6.01 |
| Observations | 10 | 10 |
| Pearson correlation | .99 | |
| Hypothesized mean diff. | 0 | |
| df | 9 | |
| t Stat | 3.35 | |
| P(T ≤ t) one tail | .004 | |
| P(T ≤ t) two tail | .009 | |

In Table 3.3, Pearson’s correlation is a summary statistic that measures the linear association of the A and B results. Graphically, it measures the linearity of the A–B data scatter plot in Figure 3.1. If the data points fell exactly on a straight line with a positive slope, the correlation coefficient would be 1.0. Perfect linearity with a negative slope would have a correlation coefficient of −1.0.
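
The correlation in Table 3.3 can be roughly recovered from numbers already in hand, because the variance of a difference satisfies Var(B − A) = Var(B) + Var(A) − 2r·sd(B)·sd(A). The sketch below solves that identity for r using the rounded variances from Table 3.3 and the standard deviation of the differences from Table 3.2; the function name is an illustrative assumption, and the rounded inputs mean the result is only approximate.

```python
import math

def correlation_from_variances(var_b, var_a, sd_diff):
    """Solve Var(B - A) = Var(B) + Var(A) - 2*r*sd(B)*sd(A) for r."""
    cov = (var_b + var_a - sd_diff ** 2) / 2
    return cov / math.sqrt(var_b * var_a)

r = correlation_from_variances(var_b=6.34, var_a=6.01, sd_diff=0.387)
print(f"r = {r:.2f}")
```

The same identity shows why pairing pays off here: because A and B wear are so highly correlated across boys, the differences vary far less (s = .387) than either material's wear does on its own (standard deviations of about 2.5).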

Summary and discussion: Significance tests

For the boys’ shoes experiment, we have illustrated the process of comparing “the data we got” to the distribution of “data we might have gotten” in three ways—three summary statistics giving rise to three reference distributions.

Sign test

The summary statistic used was the number of boys (eight), out of 10, for which B had more wear than A. The reference distribution was the binomial distribution based on the assumption (hypothesis) that the underlying probability of A winning the wear comparison was p = .5. This comparison is shown in Figure 3.5 and the test’s P-value was .055.

Randomization test

The summary statistic was the average wear difference, B–A, of .41%. The reference distribution was the collection of all 1024 possible average differences corresponding to all possible random assignments of plus or minus signs to the observed 10 differences between left and right shoe wear. BHH generated that distribution and this test’s one-tail P-value was .005.

t-Test

The summary statistic was the t-statistic, calculated under the hypothesis that the underlying mean difference in wear was μ = 0. The reference distribution was the t-distribution with nine degrees of freedom, generated from the assumption of an underlying Normal distribution of wear differences, centered on μ = 0, and the resulting one-tail P-value was .004.
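
The first two reference distributions are small enough to compute by brute force, as the sketch below illustrates. Two caveats apply. First, the ten B–A differences are not listed in this section; the values in `DIFFS_TENTHS` are an assumption (they reproduce the quoted summaries: average .41, standard deviation .387, two negative and eight positive differences) and should be checked against the chapter's data table. Second, the function names and the treatment of ties are illustrative choices, not necessarily BHH's.

```python
from itertools import product
from math import comb

def sign_test_upper_tail(wins, n, p=0.5):
    """P(X >= wins) for X ~ Binomial(n, p)."""
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(wins, n + 1))

# ASSUMED data: B-A differences in tenths of a percent (integers avoid rounding ties).
DIFFS_TENTHS = [8, 6, 3, -1, 11, -2, 3, 5, 5, 3]

def randomization_counts(diffs):
    """Enumerate all sign assignments; count totals above and equal to the observed total."""
    observed = sum(diffs)
    magnitudes = [abs(d) for d in diffs]
    greater = equal = 0
    for signs in product((1, -1), repeat=len(magnitudes)):
        total = sum(sign * m for sign, m in zip(signs, magnitudes))
        if total > observed:
            greater += 1
        elif total == observed:
            equal += 1
    return greater, equal, 2 ** len(magnitudes)

p_sign = sign_test_upper_tail(8, 10)
greater, equal, n_assignments = randomization_counts(DIFFS_TENTHS)
print(f"sign test P = {p_sign:.3f}")
print(f"randomization: {greater} greater, {equal} equal, out of {n_assignments}")
```

Under these assumed data, counting the ties at half weight gives (3 + 4/2)/1024, or about .005, which matches the randomization P-value quoted above; counting ties in full would give 7/1024, or about .007.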

Now, it should not be surprising or a concern that three ways of summarizing the data and creating corresponding reference distributions yield different answers. The messages, though, are all complementary: the experimental data all point to the conclusion that, for different ways of looking at the data, the apparent differences between materials are not just due to chance.

Nor is there any reason to expect or insist that only one answer is “right.” Theory would dictate a best answer only under specific assumptions. If it is assumed that the boys were a random sample from an assumed population and that the wear differences for that population have a Normal distribution, then the t-test is optimum. But, as has been discussed, the boys were likely a “judgment sample,” not a random sample from a defined population. Also, the assumption of Normality, while plausible, is at best an approximation to the ill-defined population’s actual distribution. If it is assumed that the shoe wear on the boys’ left and right feet would have been the same as they were in this experiment, even if the shoe was on the other foot, so to speak, then the randomization test is valid. The sign test rests on the weakest assumptions: nothing is assumed about the magnitude of the shoe-wear percentages; only the A or B winner on each boy is considered. So, this test ignores pertinent information, which is not an optimum thing to do. Nevertheless, the sign test is an easy, readily communicated, first way to look at the data and that is a valuable asset.

One further point is that in all three analyses, the conclusions apply just to the boys in the experiment: the shoe-wear differences among these 10 boys would be unusual if they were due just to chance; there must be a real underlying difference and that difference is large enough to stand out from the inherent variability of the experimental units. Any inference that these results apply to the general population of shoe-wearing boys depends on knowledge about these boys relative to that population. That knowledge is subject-matter knowledge, not statistical inference or magic. It is based on the way the boys were selected and what a shoe manufacturing company knows about their shoe-wearing habits relative to those of the general population. This dependence of the experiment’s meaningfulness and utility on subject-matter knowledge puts pressure on the experimental design to assure that the experiment involves meaningful experimental units (both in number and nature), as we discussed in Chapter 2. Good statistics relies on good subject-matter involvement. Understanding this interaction creates buy-in from all involved in planning and conducting experiments, interpreting the results, and acting on the information obtained.

The reader should not despair. This example and its analyses do not support the old saw that “Statisticians can get any answer you want.” Statistics is about dealing with uncertainty. It must be recognized, as just illustrated, that there can generally be more than one way to measure uncertainty. But, nevertheless, we learn from data; we advance the state of knowledge. We have learned that it would be unusual for the observed differences in this experiment to occur “just by chance.” We need to examine the implications of that difference. Should we switch to the cheaper material? Will the customer notice the difference and stop buying our shoes? Is it right to sacrifice quality for profits? These questions, which are much more important than whether we should use a randomization test or a t-test, are addressed in the following.

The data analysis process illustrated here, at some length, is generally called “significance testing” and sometimes “hypothesis testing.” The formal approach to hypothesis testing (well covered in many statistical texts) is to express the problem in terms of decision-making. A “null hypothesis” is stated (such as μ = 0) and, in essence, the decision rule is that if the resulting test statistic falls in a particular region of the reference distribution (generally either one selected tail or two tails) having a specified occurrence probability (often .05), the hypothesis will be rejected. Some regulatory or quality control applications, in which the “false-positive” probability must be controlled, call for this formality. Information-gathering, knowledge-generating experiments have a less prescribed objective. It’s what we learn from the comparisons of data we got to the distribution of data we might have gotten that is the objective in this context. Decisions, such as what shoe sole material to use, require (in Archie Bunker’s terminology) “facts,” not just “statistics.”

Economic analysis: The bigger picture

Material B is cheaper than A and it doesn’t wear as well. If the shoe manufacturer switches to B, the company will save money but may lose customers if it becomes apparent that the shoes do not wear as well as what customers have come to expect. Let’s examine that trade-off.

In the experiment, the average percent wear over the duration of the experiment was about 10%, and the B–A average difference was about .4%. Suppose that shoe wear-out is operationally defined as 50% wear. That amount of wear, according to shoe lore, let us say, is the approximate condition that would prompt a boy’s parents to buy new shoes. Let’s project forward and suppose that the B–A average difference at that level of wear would also be a factor of five larger, namely, 5 × (.4%) = 2%. Thus, if material A would provide 1 year of wear, material B would wear out 2% sooner, that is, about .02(365) = 7 days sooner. Surely, no one would notice that difference (“Don’t call me Shirley,” Airplane 1980). Let’s tell the boss to go with the cheaper material and expect a nice bonus from the cost savings achieved.

But don’t be hasty. There are other characteristics of a shoe that are important to customers. What if material B soles don’t sound as good (I once had a pair of sneakers that were so squeaky I donated them to charity), look as good, or feel as good as material A soles? (Were the boys asked to score these attributes? Careful planning before the experiment would have seen that they were.) If the new sole has any of these characteristics, we may lose customers for these reasons.

One other consideration is that the difference in wear-out times for the two materials varies. For some boys, the difference in shoe lifetimes (50% wear-out times) would be larger than the average value of 2%. Suppose we’re willing to make the working assumption that the differences would vary among our customer population with approximately the standard deviation observed among the 10 boys in the experiment. That standard deviation was about .4%. Thus, for the “plus two-sigma” person (only about 2.5% of the distribution exceeds the mean plus two-sigma point on the Normal distribution), the wear difference at a nominal 10% wear would be .4 + 2(.4) = 1.2%. Projecting forward to 50% wear-out by multiplying by five means that wear-out time in such instances would be about 6% less for B than for A. For a 1-year life, this means material B would wear out about 3 weeks sooner. That may be noticeable and cost us some customers. The bottom line is that manufacturing cost savings and increased profits could be wiped out by the loss of customers. One can envision further cost/benefit analysis that would address this possibility.
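
The back-of-the-envelope projections above can be laid out as a short calculation. This is only a sketch of the arithmetic in the text, under its working assumptions (wear differences scale by a factor of five from 10% wear to 50% wear-out, and a shoe lasts one year); the function name `projected_shortfall` is introduced here for illustration.

```python
def projected_shortfall(diff_pct, scale=5.0, life_days=365):
    """Scale a wear difference seen at about 10% wear up to 50% wear-out.

    Returns (percent sooner, days sooner) for material B relative to A.
    """
    lifetime_pct = scale * diff_pct
    return lifetime_pct, lifetime_pct / 100 * life_days

mean_diff, sd_diff = 0.4, 0.4  # rounded average and standard deviation of B-A (%)

nominal = projected_shortfall(mean_diff)
plus_two_sigma = projected_shortfall(mean_diff + 2 * sd_diff)

print(f"average customer:  {nominal[0]:.0f}% sooner, about {nominal[1]:.0f} days")
print(f"plus-two-sigma:    {plus_two_sigma[0]:.0f}% sooner, about {plus_two_sigma[1]:.0f} days")
```

Changing `scale` or `life_days` shows how sensitive the conclusion is to those working assumptions, which is the kind of what-if analysis a fuller cost/benefit study would pursue.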

Note further that this sort of back-of-the-envelope economic analysis is based on the observed mean and standard deviation of wear differences for only 10 boys. Even without dealing with this additional uncertainty technically (by methods discussed later), it’s apparent that this limited amount of data raises the risk of making a wrong decision.

Then there’s ethics. Suppose the shoe company’s slogan is “Always the best,” meaning they pride themselves on using the best available materials and methods to produce shoes. If they cut corners on shoe sole material, what’s next? More cheap substitutes for other parts of the shoe, nearly as good as the original material? The product could gradually lose its quality, lose its reputation, lose business, go bankrupt!! (Schlitz beer experienced just this sort of decline in the early 1970s: “The reformulated product resulted in a beer that not only lost much of the flavor and consistency of the traditional formula but spoiled more quickly, rapidly losing public appeal” Wikipedia 2014d). Does the shoe design team want to risk starting the company down this slippery slope? Maybe the prudent thing is a larger, more informative experiment that is capable of resolving some of the questions arising from this experiment. Maybe the boss wants a decision right now, though. What’s a body to do?

This may be an overly dramatic turn in my story, but it makes this point: there are often more than mere technical issues involved in designing, conducting, and analyzing the data from an experiment. Bosses, customers, thesis advisors, regulators, and others can all have a stake in the outcome and can all have agendas. For example, if the suppliers of sole materials A and B knew about the experiment being planned to compare their materials, they would want to assure that the experiment was not in some subtle or inadvertent way biased against their material. When the Department of Defense tests a proposed new multimillion dollar system to determine its combat readiness, there will be many interested parties with a stake in how the experiment is designed, conducted, and analyzed. Such interest is not sinister, only realistic. In fact, you want people to be interested in the experiment. That’s the best way to assure that subject-matter knowledge is fully engaged in the project. The humble statistician working this project has to know how to work ethically, intelligently, and effectively in this environment, not just crunch numbers.

Statistical confidence intervals

A significance test can tell you whether an observed difference, for example, between means, is real or could easily be random, but it doesn’t tell you how large or small an actual underlying difference could be. For example, for the 10 boys in the shoe sole experiment, the average wear difference was .4%. The significance test told us that an underlying average difference (this underlying difference being the parameter, μ, in the “distribution of data we might have gotten”) of zero would not be consistent with the data. But how large or how small might that underlying difference be, consistent with the data? There is uncertainty in drawing conclusions based on data. We need to look at that uncertainty before deciding which sole material to use.

The degree of agreement of the data with any hypothesized or conjectured value of μ, not just zero, can be evaluated using the t-statistic:

$$t = \frac{\bar{d} - \mu}{s/\sqrt{n}}$$

For example, if the supplier of material B claimed, “We think our material will wear more than material A, on average, by only .5%,” then we would evaluate the data against that claim by the statistic

$$t = \frac{.41 - .50}{.122} = -.74$$

By comparing this t-value to the t-distribution with 9 df (shown in Fig. 3.8), we can see that this t-value is not far from the middle of the distribution; the software for the t-distribution shows that it falls at the lower .24 point, not particularly unusual. There is no evidence in the data to contradict the supplier’s claim.

Someone representing the shoe manufacturer might say, “If material B wears more than 1% more than material A, though, we wouldn’t like that.” (Perhaps this person has already done the cost/benefit study mentioned earlier.) Is it possible to get the data we got if indeed the underlying value of μ was 1.0? The t-statistic for evaluating this conjecture is

$$t = \frac{.41 - 1.0}{.122} = -4.84$$

This value is far out on the lower tail of the t(9) distribution (P-value <.001), so the data put to rest the shoe rep’s worry about a 1% increase in wear using material B.

These calculations show that values of μ of 0 or 1% are not at all consistent with the data. But μ = .5% is. In general, and intuitively, values of μ close to .41, the data average, are more consistent with the data than values of μ further away from .41. This notion of closeness, or consistency, is characterized in statistics by the calculation of statistical confidence intervals. The objective of confidence intervals is to define a “ballpark” of values of μ that are consistent (in agreement) with the observed data to a specified degree.

To derive a confidence interval, we start with the test statistic for characterizing the agreement of the data with any possible value of μ:

$$t = \frac{\bar{d} - \mu}{s/\sqrt{n}}$$

Values of μ that are consistent with the data are those that lead to a t-value in the middle of the t-distribution with n − 1 df. To be specific about “middle,” let’s consider the question: What values of μ lead to a t-value in the middle 95% of the t-distribution? The answer, algebraically, is given by this inequality:

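$$-t_{.025} \le \frac{\bar{d} - \mu}{s/\sqrt{n}} \le t_{.025}$$

where $t_{.025}$ is the upper .025 point on the t-distribution with n − 1 df. Rearranging this inequality leads to the following inequality for μ:

$$\bar{d} - t_{.025}\,\frac{s}{\sqrt{n}} \le \mu \le \bar{d} + t_{.025}\,\frac{s}{\sqrt{n}}$$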

This inequality on μ defines what is called a 95% statistical confidence interval on μ, the underlying mean of the Normal distribution used as a reference model for our experimental data. The end points of this interval are the “confidence limits.” By changing the percentile of the distribution used to characterize “middle” and thus changing the t-value in the inequality, we can obtain 90%, 75%, etc. confidence intervals.

For the shoe data, with n = 10, there are 9 df, so from tables or software, we find that $t_{.025}$ = 2.26. Thus, the 95% confidence interval on μ for the shoe data is given by

$$.41 \pm 2.26(.122) = .41 \pm .28 = (.13, .69)$$

In round numbers, we can summarize this calculation by saying that the data indicate that the average wear difference between the materials is about .4%, but it could be as low as .1% or as high as .7%, at the 95% confidence level.

This confidence interval is shown graphically in Figure 3.9. The t-statistic, as a function of μ, is given by

$$t = \frac{.41 - \mu}{.122}$$

so t is a linear function of μ.

Figure 3.9 Illustration of Confidence Interval: Boys’ Shoes Experiment.

The solid line in Figure 3.9 plots t versus μ. At the right side of the figure is the t-distribution with 9 df. Its center 95% is the interval from −2.26 to 2.26. The two horizontal arrows in the figure correspond to these end points. These arrows intersect the line at μ = .13 and μ = .69. Thus, as defined earlier in this section, for μ between these two values, the resulting t-statistic is in the middle 95% of the t(9) distribution. The interval (.13%, .69%) is the 95% confidence interval on μ. Values of μ in this interval are consistent with the data to the extent that corresponding t-values are in the middle 95% of the t(9) distribution.
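
The equivalence between "μ lies in the confidence interval" and "the t-value for μ lies in the middle 95% of the t(9) distribution" can be checked numerically. The sketch below takes the multiplier 2.26 from the text rather than computing it, and uses the summary values n = 10, dbar = .41, and s = .387; the function names are illustrative assumptions.

```python
import math

def t_stat(dbar, s, n, mu):
    """t-value measuring agreement of the data with a conjectured mean mu."""
    return (dbar - mu) / (s / math.sqrt(n))

def confidence_interval(dbar, s, n, t_mult):
    """Two-sided interval dbar +/- t_mult * s / sqrt(n)."""
    half_width = t_mult * s / math.sqrt(n)
    return dbar - half_width, dbar + half_width

DBAR, S, N = 0.41, 0.387, 10
T_025 = 2.26  # upper .025 point of the t-distribution with 9 df, as quoted in the text

low, high = confidence_interval(DBAR, S, N, T_025)
print(f"95% CI: ({low:.2f}, {high:.2f})")

for mu in (0.0, 0.5, 1.0):
    t = t_stat(DBAR, S, N, mu)
    print(f"mu = {mu}: t = {t:.2f}, in middle 95%: {abs(t) <= T_025}")
```

Small differences in the last digit relative to the hand calculations can arise because the sketch does not round the standard error to .122 before dividing.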

Discussion

The denominator of the t-statistic is $s/\sqrt{n}$, which is equal to .12% in this case. This quantity, as discussed earlier with respect to Table 3.2, is called the “standard error of the difference” and might be denoted by SE(dbar). (Technically, a standard error is the estimate of the standard deviation of the distribution of the estimate. That is, the distribution of an average difference, dbar, has a standard deviation of $\sigma/\sqrt{n}$, where σ is the standard deviation of the distribution of differences. Replacing the unknown σ by its estimate, s, provides the standard error.) The standard error pretty well determines the width of the confidence interval on the underlying difference, μ, because the t-value multiplier, for a given confidence level, for at least moderate degrees of freedom is fairly constant. For example, for 95% confidence and a sample size of 30 or more, the half-width of the confidence interval is essentially 2.0 times the standard error of the difference.

Algebraically and graphically, we have found that an underlying average wear difference, μ, between .13 and .69% is consistent with the data to the degree that a calculated t-value for μ in this interval will fall in the middle 95% of the t(9) distribution. In statistical terminology, the 95% confidence interval for μ, based on this experiment’s data, is the interval (.13%, .69%). Now, what do we do with this information?

Earlier (p. 48), to analyze the effect of the findings of this experiment, we multiplied the average wear difference in this experiment (.4%) by a factor of 5.0 to estimate the average wear difference in a shoe’s lifetime. To get a conservative upper bound on the average lifetime wear difference, we multiply the upper end of the confidence interval, .69%, by 5.0 to get 3.5%. Thus, the average wear difference could be as high as 3.5% at the 97.5% confidence level (the upper end of a two-sided 95% confidence interval is an upper 97.5% confidence limit). Applied to a year’s time, this means that the average difference could be .035 × 365 = 13 days, essentially two weeks. One might suppose this average wear difference would still not be too noticeable by customers.

Earlier (p. 49), we also considered an upper percentile on the wear difference distribution as another way to interpret this experiment’s results. It is possible, by the method known as statistical tolerance limits, to obtain a confidence interval on a percentile of interest, but doing so is beyond the scope of this chapter. The essential point is that statistical confidence limits can be used to characterize the uncertainty with which this experiment can estimate characteristics of the underlying wear difference distribution.

The selected confidence level in this example was the 95% level for the two-sided interval. Other choices are, of course, possible. It is sometimes useful to calculate confidence intervals at a variety of confidence levels. Choice of confidence level corresponds to how conservative one wants to be in defining the range of plausible parameter values to consider. There are no definitive rules for choosing a confidence level, but subject-matter considerations, like the cost of adopting a seriously unsatisfactory material, should help make the determination. Conventional confidence levels are 90, 95, and 99%.

Why calculate statistical confidence limits?

Confidence limits are not just for perfunctory reporting—the “statistically correct” thing to do. They are to be used to help guide subsequent decisions. In particular, they provide a ballpark for economic or other analyses. Here, we found that, nominally, B would wear out about a week sooner than A. Using the upper end of a 95% confidence interval on the underlying average wear difference led to the conclusion that, conservatively, B would wear out 2 weeks sooner than A. If management’s view is that neither of these differences is likely to affect sales, the nominal and the conservative analysis would lead to the same conclusion and action: switch to the cheaper material.

In general, confidence intervals provide limits for subsequent parametric analyses pertaining to subsequent actions or decisions. If the same decision would be reached for any value of μ in its confidence interval, then this decision is robust to the uncertainty inherent in the limited amount of data from the experiment. If the same decision would not be made at both ends of the confidence interval, then management is either faced with a risky decision they may not want to make or a decision that more data are needed in order to reduce the risk to a tolerable level.

Sample size determination

Because of the small number of subjects (boys) in this experiment and the resulting uncertainty about what the underlying average wear difference between materials B and A might be, one possible course of action would be to run a follow-up experiment designed to provide more definitive information. More precision will require a larger number of participants. How many subjects do we need and how do we decide?

Consider the 95% confidence limits on the underlying mean difference, μ:

$$\bar{d} \pm t_{.025}\,\frac{s}{\sqrt{n}}$$

For our 10 boys, that confidence interval was (.13%, .69%). Suppose a cost/benefit analysis by the company’s green-eyeshade analysts and lawyers led to the conclusion that if the underlying mean difference in wear (B–A) was no more than .5%, then the company would be comfortable replacing material A by B: the risk of the reduced wear leading to loss of customers would be minimal.

Let’s translate that objective into this statistical criterion: if the data from this follow-up experiment result in the upper end of the 95% confidence interval on μ being .5% or less, we will conclude the case for changing materials is adequately made. That is, we want to choose n so that

$$\bar{d} + t_{.025}\,\frac{s}{\sqrt{n}} \le .5$$

Of course, we don’t know what values of dbar and s will result in the follow-up experiment. For planning purposes, though, let’s use the results from the first experiment (I’m assuming that the second experiment follows basically the same protocol as the first): dbar = .41% and s = .39%. How large would n have to be so that the upper 97.5% confidence limit on μ would be equal to .5%?

To answer this question, we need to solve for n in the equation

$$.41 + t_{.025}\,\frac{.39}{\sqrt{n}} = .5$$

For a first cut, set the t-value, which is a function of n, equal to 2.0. Then, the equation to solve is

$$.41 + 2.0\,\frac{.39}{\sqrt{n}} = .5$$

which leads to n = 75. For 74 df, $t_{.025}$ = 1.99, close enough to 2.0 that there is no need to refine the analysis.
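
Solving that equation for n gives n = (t·s/(.5 − dbar))², which is easy to wrap in a small function for repeating the analysis with other planning values. This is a sketch under the text's planning assumptions (dbar = .41, s = .39, target upper limit .5); the function name `n_for_upper_limit` is hypothetical.

```python
def n_for_upper_limit(dbar, s, target, t_mult=2.0):
    """Sample size at which dbar + t_mult * s / sqrt(n) equals target."""
    if target <= dbar:
        raise ValueError("target must exceed dbar for a finite sample size")
    return (t_mult * s / (target - dbar)) ** 2

# First cut: t-value set to 2.0, as in the text.
n_first = n_for_upper_limit(dbar=0.41, s=0.39, target=0.5)

# Refinement: t-value for 74 df (1.99, as quoted in the text).
n_refined = n_for_upper_limit(dbar=0.41, s=0.39, target=0.5, t_mult=1.99)

print(f"first cut: n = {n_first:.1f}; refined: n = {n_refined:.1f}")
```

Because n grows with the square of s/(target − dbar), modestly more conservative planning values for dbar or s can raise the required number of boys considerably, which is why a suite of such calculations is worth running before recruiting.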

Thus, by the objective we set, our follow-up experiment would require 75 boys. Of course, there is no guarantee that the same dbar and s would be obtained, so we might want to repeat this sample size analysis with somewhat conservative working values of these summary statistics. We might also want to consider other confidence levels. Out of a suite of such analyses, we could arrive at the number of boys to recruit. Also, while we’re at it, we ought to fix the shortcomings in the original experiment—collect data on boy characteristics and evaluate other characteristics of the shoe materials. We also want to be careful on how we select the boys to assure that they will fairly represent the customer population.

Of course, some bright engineer might say, “Why go to all the trouble to recruit a bunch of unruly, unreliable boys whose shoe-wearing habits we can’t really control. Give me your budget and I’ll build a shoe-wear testing machine and we’ll control the variables that affect shoe sole wear.” Never discount technology. Never underestimate, though, the value of realistic testing.

There are more detailed ways to address sample size determination, but I will defer those to the next experiment.

Tomato Fertilizer Experiment

Experimental design

In another example from BHH (1978, 2005), an experiment to compare two fertilizers was conducted as follows: the experimental units were 11 tomato plants (presumably all of the same variety, approximate size, and health), planted in one row of a garden. The experimenter randomly assigned five of the plants to get Fertilizer A and six to get a possibly improved Fertilizer B. Experimental protocol and plant spacing, let us further assume, assured that the fertilizer used on one plant would not bleed into the adjacent plant sites. The tomatoes were harvested when ripe and weighed resulting in the total weight of tomatoes, by plant, given in Table 3.4. The question of interest is whether these data suggest choosing one fertilizer over the other. Analysis 1: Let’s plot the data.

Table 3.4 Results of Tomato Fertilizer Experiment.

| Position | Fertilizer | Yield (lbs.) |
| --- | --- | --- |
| 1 | A | 29.9 |
| 2 | A | 11.4 |
| 3 | B | 26.6 |
| 4 | B | 23.7 |
| 5 | A | 25.3 |
| 6 | B | 28.5 |
| 7 | B | 14.2 |
| 8 | B | 17.9 |
| 9 | A | 16.5 |
| 10 | A | 21.1 |
| 11 | B | 24.3 |

Box, Hunter, and Hunter (2005, p. 78); reproduced with permission from John Wiley & Sons.

Analysis 1: Plot the data

How should these data be plotted? We cannot plot A yields versus B yields, a la the boys’ shoes, because the experimental units are not paired and they’re randomly distributed along the row. In this case, each yield is associated with a fertilizer and, thanks to BHH(!), a row position. (I applaud that inclusion because some experimenters do not keep track of such ancillary information. Recall in Chapter 1 that ancillary data pertaining to wire bonding led to Ed Thomas finding and then helping to correct problems in the integrated circuit bonding and testing processes.) As we will soon see, having this ancillary variable recorded is a key to interpreting the data and to extracting (kicking and screaming) the message in these data. There is a tendency in textbooks not to record such information. Never ignore ancillary data.

A straightforward way to display all the data, capturing every dimension, is shown in Figure 3.10, which is a scatter plot of yield versus position, with different plotting symbols for the two fertilizers. This plot shows all three dimensions of the data: yield, fertilizer, and row position. Right away, as should be the case with a good display, we “see” important information about the relationship of yield to fertilizer and row position: there appears to be a distinct trend in soil quality or fertility, resulting in tomato yield that generally drops off from left to right along the row. If we had not kept track of the tomato yields by position as well as fertilizer, we would not have learned about the fertility trend. Further, the plant in position 2 had unusually low yield, relative to its neighbors. Perhaps tomato worms or disease infected that plant; perhaps there was a recording error: the actual yield might have been 21.4 lbs., not 11.4. If we had not been able to associate the yields with position, this “outlier” would not have been detected: the yield on that plant would look consistent with the variability among all the plants that got Fertilizer A, similar to the case of the Opel and Chevette in the car data in Chapter 1.

Figure 3.10 Data from Tomato Fertilizer Experiment. Yield, in pounds, is plotted versus row position, by fertilizer.

There is a principle involved here:

- Outliers need to be explained, if possible.

Statistical techniques such as plotting the data and statistical significance tests for outliers (see, e.g., Barnett and Lewis 1994) can identify apparent outliers; subject-matter insight is needed to explain them. Note that in this case, plotting the yields versus position allowed the possible outlier to be identified. Plots of the A and B data that ignore position would not (could not) identify position 2 as a possible outlier. If an outlier cannot be explained, my practice is to analyze the data with and without the outlier(s) to see if it makes a difference in the conclusions and subsequent actions.
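The point that position 2 stands out only when position is taken into account can be checked with a little arithmetic. The following is a minimal sketch, not an analysis from BHH: the two helper functions and the idea of comparing each interior plant with the average of its two neighbors are assumptions made for illustration.

```python
from statistics import mean, stdev

# Table 3.4: (position, fertilizer, yield in lbs.)
DATA = [
    (1, "A", 29.9), (2, "A", 11.4), (3, "B", 26.6), (4, "B", 23.7),
    (5, "A", 25.3), (6, "B", 28.5), (7, "B", 14.2), (8, "B", 17.9),
    (9, "A", 16.5), (10, "A", 21.1), (11, "B", 24.3),
]

def neighbor_gaps(data):
    """Yield minus the average of the two adjacent plants (interior positions only)."""
    yields = [y for _, _, y in data]
    return {
        data[i][0]: yields[i] - (yields[i - 1] + yields[i + 1]) / 2
        for i in range(1, len(data) - 1)
    }

def z_within_group(data, fertilizer, position):
    """Standardized distance of one plant from its own fertilizer group's mean."""
    group = [y for _, f, y in data if f == fertilizer]
    target = next(y for p, _, y in data if p == position)
    return (target - mean(group)) / stdev(group)

gaps = neighbor_gaps(DATA)
most_unusual = max(gaps, key=lambda p: abs(gaps[p]))  # position with the largest gap
z_a = z_within_group(DATA, "A", 2)
print(most_unusual, round(gaps[most_unusual], 2), round(z_a, 2))
```

Under these assumptions, position 2 sits roughly 17 lbs. below the average of its neighbors, the largest such gap in the row, yet it is only about 1.3 standard deviations below the Fertilizer A mean. Ignoring position, it would not look unusual.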

Now, with respect to the reason the experiment was run—Fertilizer A versus B—the yields from the two fertilizers are pretty well intermingled in Figure 3.10 (setting aside position 2 from this assessment). There’s no evidence of an advantage of one fertilizer over the other. The message from this experiment in this garden is that:

- It is more important where you plant your tomatoes than what fertilizer you use!

And note that we arrived at this finding from just the picture, no number crunching required. The experiment is not a failure, though, unless you are the developer of Fertilizer B who expected it to do better than the competition, Fertilizer A. The gardener has learned that she could increase yield by improving and equalizing the soil quality in her garden and that she can choose fertilizer next year based on other considerations, such as cost or environmental impact.

The value of randomization

One further important point to make is the value of randomization. If the gardener had run a convenient experiment, such as Fertilizer A on the left half of the row and Fertilizer B on the right half, misleading results would have been obtained. The soil-quality trend would have been wrongly interpreted as a fertilizer difference. In statistical terminology, row position would be confounded with fertilizer type. Randomization mixed up the fertilizer assignments so that we had an essentially fair comparison of the two fertilizers. (Note, though, that randomization could by chance have produced the convenient allocation, although with small probability. In that event, a prudent experimenter would rerandomize the assignments to protect against possible soil-quality trends.)
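How small is that probability? A minimal sketch follows, assuming every choice of five positions out of 11 is equally likely; the function names `randomize` and `is_convenient` are hypothetical, and "convenient" is taken to mean that each fertilizer occupies one unbroken stretch of the row.

```python
import math
import random

def randomize(n_plants=11, n_a=5, seed=None):
    """Randomly pick which row positions get Fertilizer A; the rest get B."""
    rng = random.Random(seed)
    a_positions = set(rng.sample(range(1, n_plants + 1), n_a))
    return ["A" if p in a_positions else "B" for p in range(1, n_plants + 1)]

def is_convenient(layout):
    """True if each fertilizer occupies one unbroken stretch of the row."""
    changes = sum(1 for x, y in zip(layout, layout[1:]) if x != y)
    return changes == 1

n_layouts = math.comb(11, 5)   # number of equally likely assignments
p_convenient = 2 / n_layouts   # A-block on the left end, or on the right end

layout = randomize(seed=1)
while is_convenient(layout):   # rerandomize if the draw splits the row in two
    layout = randomize()
print(n_layouts, round(p_convenient, 4), layout)
```

There are 462 possible assignments, so a split-row layout turns up with probability 2/462, or well under 1%. The `while` loop is the rerandomization safeguard described in the parenthetical note.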

If the experimenter had suspected a fertility trend beforehand, the experiment could have been designed differently to minimize the effect of such a trend. For example, as Box, Hunter, and Hunter (2005) suggest, fertilizer assignment could have been done randomly within each adjacent pair of plants (which would require an even number of plants). Such a design approach would be based on subject-matter knowledge that fertility generally varies gradually over an area such as a garden, not erratically from plot to plot. Thus, if there is a trend, adjacent plots would be more similar than nonadjacent plots. The resulting experiment would then have been structured like the boys’ shoes example. There would be five or six pairs (blocks) of experimental units in the experiment, based on proximity.
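Randomizing within adjacent pairs might be sketched as follows. This is an illustration under stated assumptions, not BHH's procedure: the function name is hypothetical, and a row of 12 plants is assumed so that the pairs come out even.

```python
import random

def randomize_within_pairs(n_plants, seed=None):
    """Assign A and B at random within each adjacent pair of row positions."""
    if n_plants % 2:
        raise ValueError("pairing adjacent plants requires an even number")
    rng = random.Random(seed)
    layout = []
    for _ in range(n_plants // 2):
        pair = ["A", "B"]
        rng.shuffle(pair)      # coin flip: which plant of the pair gets A
        layout.extend(pair)
    return layout

layout = randomize_within_pairs(12, seed=3)
pairs = [tuple(layout[i:i + 2]) for i in range(0, len(layout), 2)]
print(pairs)
```

Every pair contains one plant of each fertilizer, so a gradual fertility trend affects both fertilizers about equally within a pair.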

The importance of ancillary data

There is a lesson in both the boys’ shoes experiment and the tomato fertilizer experiment: the need to think about and record ancillary data (also called concomitant data) pertaining to the experimental units and experimental conditions, in addition to the treatments and responses of primary interest. Row position and L/R foot assignment are two such ancillary variables in these two examples. Then, such data need to be used in investigating possible, perhaps unanticipated, relationships that could either enhance understanding or invalidate findings. In the shoe example, we were left wondering if boy variables, such as age and weight, influenced shoe wear. Better to have thought of these possibilities before the experiment was run, recorded the data, and then done an analysis to see if such factors contribute to shoe wear.

A New Tomato Experiment

Back to the tomato story: suppose that the gardener rototills nutrients into her garden, works the soil, and then has soil testing done that confirms that she has achieved improved and more uniform soil quality in her garden. Suppose she also expands her tomato patch so that the following year she can plant 16 plants and then, being a good scientist, always looking for ways to improve things, she conducts an experiment to compare Fertilizer A to a new candidate, Fertilizer C.

Analysis 1: Plot the data

Data (randomly generated by the author’s computer) from the gardener’s year 2 experiment are given in Table 3.5 and plotted in Figure 3.11. Note the larger and more consistent yields than were obtained in Experiment 1. She learned from her experience—the previous year’s data. Her designed experiment was the basis for that learning.

Table 3.5 Results of Year 2 Tomato Experiment.

| Position | Fertilizer | Yield (lbs.) |
| --- | --- | --- |
| 1 | A | 30.5 |
| 2 | A | 28.8 |
| 3 | C | 32.0 |
| 4 | A | 29.0 |
| 5 | A | 27.1 |
| 6 | A | 30.1 |
| 7 | C | 26.6 |
| 8 | C | 34.2 |
| 9 | C | 28.7 |
| 10 | C | 32.8 |
| 11 | C | 30.6 |
| 12 | A | 30.8 |
| 13 | A | 26.9 |
| 14 | C | 32.8 |
| 15 | C | 29.4 |
| 16 | A | 28.8 |

Yield in Pounds.

Figure 3.11 Tomato Yield by Position and Fertilizer: Experiment 2.

Figure 3.11 (in contrast to Fig. 3.10) shows no evidence of a fertility trend along the row of tomato plants (but it’s good that we could check this). There is some (visual) evidence that Fertilizer C produces higher yield than A: the top four yields are all from Fertilizer C (but so was the lowest single yield). There is substantial overlap among plant yields for the two fertilizers, though, so it remains to be seen whether these results indicate a real difference in fertilizers or could easily be due only to the variability of tomato yields in this garden. Stay tuned.

Figure 3.11 shows that tomato yield is not associated with position, so the data display can be simplified by ignoring position. Figure 3.12 displays a “side-by-side dot plot” of the tomato yields: the yields are simply plotted along a single axis, with separate plots for the two fertilizers. The average yields for the two fertilizers are also indicated and connected. Figure 3.12 tells us that Fertilizer C apparently leads to higher yields, on average by about two pounds, but with more variability. The range of the A yields lies entirely within the range of the C yields. Are these apparent differences between A and C real or could they just be random? It is now time to carry out some quantitative analyses that calibrate our eyeball impression by evaluating the extent to which the observed difference between fertilizers could be “real or random.”

Figure 3.12 Dot Plot of Yields by Fertilizer: Experiment 2.
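The numbers behind the dot plot can be tabulated directly from Table 3.5. The following is a minimal sketch; the variable names are my own, and the sample standard deviation is used as the measure of variability, which is an assumption since the text does not say how spread was judged.

```python
from statistics import mean, stdev

# Table 3.5 yields (lbs.), listed in row order within each fertilizer
YIELD_A = [30.5, 28.8, 29.0, 27.1, 30.1, 30.8, 26.9, 28.8]
YIELD_C = [32.0, 26.6, 34.2, 28.7, 32.8, 30.6, 32.8, 29.4]

summary = {
    name: {"n": len(y), "mean": mean(y), "sd": stdev(y), "min": min(y), "max": max(y)}
    for name, y in (("A", YIELD_A), ("C", YIELD_C))
}
diff = summary["C"]["mean"] - summary["A"]["mean"]  # C-minus-A gap in average yield

for name, s in summary.items():
    print(name, {k: round(v, 2) for k, v in s.items()})
print("C minus A:", round(diff, 2))
```

The averages differ by a little under two pounds in favor of Fertilizer C, the C standard deviation is the larger of the two, and the smallest and largest A yields both fall inside the C range.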

Significance tests

As with the boys’ shoes experiment, there are a variety of significance tests that are appropriate for the second tomato fertilizer experiment. In Experiment 2, the experimental units were more homogeneous: yield was not a function of row position as it was in Experiment 1. The analysis methods we will illustrate are based on models that assume we have homogeneous data, as would occur in independent random samples from each of two distributions. The Experiment 1 data were not consistent with this model. Instead, tomato yield depended on location as well as, possibly, fertilizer. Thus, in a model for this situation, there would be a different distribution of possible yields at each position in the row. The yields would be position dependent, not random across positions. It is possible to construct a model and analysis for this situation, called the “analysis of covariance,” but that analysis is beyond the scope of this text. It should be noted, however, that the randomization test is valid even in the presence of a fertility trend and the t-test is still valid as a useful approximation to the randomization test, even though the assumptions on which it is based are not a particularly good model for these data.

One’s intent in a statistical analysis should go beyond conducting a significance test and finding a P-value. (Statistical) life does not end with a P-value. The analysis goal, as with the boys’ shoes, is a more general description of the relationships found in the data and the implications of those relationships for further actions. Our graphical analysis of Experiment 1 showed that yield was dependent on position, an important finding that led to further spadework and experimentation.

The above plot of the Experiment 2 data (Fig. 3.12) shows some evidence of a real difference between fertilizers. Similar to the boys’ shoes experiment, the analysis objective is to compare the data we got with a reference distribution of data we might have gotten, if there were no difference in fertilizers.

If there is no difference between fertilizers, then the 16 yields obtained would have been obtained regardless of what fertilizer was used—the observed yields reflect only the intrinsic quality of the soil and plant at each site. Any additional effect of fertilizer (it is assumed) would be the same for the two fertilizers, so a different random assignment would have resulted in the same 16 yields, but with different labels corresponding to the two fertilizers. Thus, we can choose a summary statistic that measures the difference between fertilizers and then generate the reference distribution of that statistic by calculating it for all the 12 870 (= the number of combinations of 16 objects selected 8 at a time = 16!/(8!8!)) possible random assignments of fertilizers to experimental units. As with the boys’ shoes experiment, we will consider three summary statistics: one that reflects just the ordering of the yields and two that consider the magnitudes of the yields for the two fertilizers.
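With only 12 870 possible assignments, the reference distribution can be enumerated outright. The following is a minimal sketch that takes the difference in average yield as the summary statistic and counts relabelings at least as favorable to C as the one observed (a one-sided comparison). The function name is hypothetical, and the yields are entered in tenths of a pound so that all comparisons are exact integer comparisons.

```python
import math
from itertools import combinations

# Table 3.5 yields in tenths of a pound (integers avoid floating-point ties)
YIELD_A = [305, 288, 290, 271, 301, 308, 269, 288]
YIELD_C = [320, 266, 342, 287, 328, 306, 328, 294]

def randomization_p_value(group_a, group_c):
    """Share of all relabelings whose C-minus-A mean gap is at least the observed gap."""
    pooled = group_a + group_c
    observed_a_sum = sum(group_a)
    n_assignments = 0
    n_as_extreme = 0
    for labels in combinations(range(len(pooled)), len(group_a)):
        a_sum = sum(pooled[i] for i in labels)
        n_assignments += 1
        # Group sizes are fixed, so a smaller A total means a larger C-minus-A gap.
        if a_sum <= observed_a_sum:
            n_as_extreme += 1
    return n_assignments, n_as_extreme / n_assignments

n_assignments, p_value = randomization_p_value(YIELD_A, YIELD_C)
print(n_assignments, math.comb(16, 8), round(p_value, 4))
```

The first two printed numbers should agree at 12870, confirming that every assignment was visited. The third is the proportion of assignments that favor C by at least as much as the actual one did.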

Rank sum test

The rank sum significance test, like the sign test for paired data, is based only on the data ordering, not the yields themselves. To do this test, first rank the combined data (the 16 tomato yields in Table 3.5) from low to high, with the smallest observation having rank 1 and the largest observation having rank 16. The summary statistic is then the sum of the ranks of the observations in one of the groups. (The summed ranks of one group determine the summed ranks of the other group, so there is no need to sum the ranks of the second group; it can be determined by subtraction.) If, for example, the group being counted generally has the smallest yields, the rank sum will be small relative to the sum of the ranks of the other group. For the tomato fertilizer data, the ranks of the eight Fertilizer A results are 2, 3, 4, 6, 7, 9, 10, and 12, which sum to 53. Is this unusually small or large? Don’t know. We need a reference (probability) distribution against which to compare this result.
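The ranking step is easy to mechanize. The following is a minimal sketch, not code from the text: the helper name `midranks` is hypothetical, and tied yields are given the average of the ranks they span, which is one common convention. Run on the yields as printed in Table 3.5, it gives a Fertilizer A rank sum of 54 rather than the 53 quoted above, because the 28.7 lbs. Fertilizer C yield falls just below the two tied 28.8 lbs. Fertilizer A yields. The one-unit gap does not change the logic of the test.

```python
# Table 3.5: (fertilizer, yield in lbs.) in row order
DATA = [
    ("A", 30.5), ("A", 28.8), ("C", 32.0), ("A", 29.0),
    ("A", 27.1), ("A", 30.1), ("C", 26.6), ("C", 34.2),
    ("C", 28.7), ("C", 32.8), ("C", 30.6), ("A", 30.8),
    ("A", 26.9), ("C", 32.8), ("C", 29.4), ("A", 28.8),
]

def midranks(values):
    """Rank values from low (1) to high; tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1                       # extend the run of tied values
        average_rank = (i + j) / 2 + 1   # sorted slots i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    return ranks

ranks = midranks([y for _, y in DATA])
rank_sum_a = sum(r for (f, _), r in zip(DATA, ranks) if f == "A")
rank_sum_c = sum(r for (f, _), r in zip(DATA, ranks) if f == "C")
print(rank_sum_a, rank_sum_c, rank_sum_a + rank_sum_c)
```

The two rank sums always add to 1 + 2 + … + 16 = 136, which is why the second can be found by subtraction.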

As a reference distribution for the rank sum statistic for Experiment 2, consider a random selection of eight integers, without replacement, from the numbers 1, 2, …, 16. For example, one could shuffle 16 cards numbered one through 16, then deal the top eight cards (physically or via computer simulation), and calculate their sum. Carrying out this randomization repeatedly would generate a reference distribution for the rank sum statistic. If there is no difference between fertilizers, then “the data we got,” as summarized by the “rank sum” statistic for those data, should be like data we could get from this random selection and summing of eight ranks.
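Both routes to this reference distribution, dealing cards repeatedly and listing every possible deal, are short enough to sketch. The function names below are hypothetical, and the lower-tail probability is just one way to summarize where an observed rank sum falls. The value 53 is the rank sum quoted in the text.

```python
import random
from collections import Counter
from itertools import combinations

def exact_rank_sum_distribution(n_total=16, n_group=8):
    """Count how many equally likely selections give each possible rank sum."""
    return Counter(sum(c) for c in combinations(range(1, n_total + 1), n_group))

def simulated_rank_sums(n_draws, n_total=16, n_group=8, seed=None):
    """Shuffle-and-deal version: sum the top n_group cards, n_draws times."""
    rng = random.Random(seed)
    cards = list(range(1, n_total + 1))
    sums = []
    for _ in range(n_draws):
        rng.shuffle(cards)
        sums.append(sum(cards[:n_group]))
    return sums

def lower_tail(dist, observed):
    """Probability of a rank sum at or below the observed value."""
    total = sum(dist.values())
    return sum(count for s, count in dist.items() if s <= observed) / total

dist = exact_rank_sum_distribution()
print(sum(dist.values()), min(dist), max(dist))   # 12870 36 100
print(lower_tail(dist, 53))                       # tail area for the quoted rank sum
```

The exact distribution runs from 36 (ranks 1 through 8) to 100 (ranks 9 through 16) and is symmetric about 68. The simulated version approaches it as the number of deals grows.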