This chapter will cover various workflow methods. © Disney.

The previous chapter focused on what tools the artist has available in software packages. This chapter will focus on how to use them. It will stress the importance of establishing a lighting workflow that enables the artist to be as efficient and effective as possible. This workflow will be explained from pre-lighting to work-in-progress lighting and through final render. The more efficient the workflow, the more lighting iterations the artist will be able to output before the deadline, which ultimately leads to the best-looking images.

Before beginning any project, the first thing the artist should do is create or gather reference. The specifics of how to gather, organize, and process reference were discussed in Chapter 2, but the importance of this step cannot be reiterated enough. Always use reference!

Creating Color Keys and Mockups

After reference images have been gathered, it is important for the artist to create mockups and/or color keys as a blueprint for the lighting he or she hopes to achieve. At larger studios an art department or design artists could provide this, but in many cases the lighter will design and create these on his or her own.

Color keys show the overall color palette for a sequence of shots or a project in terms of the key direction and overall lighting quality through a handful of example images. They can be done digitally as well as in fine art mediums such as watercolors, colored charcoal, colored pencils, and even in crayons. Color keys are standard at larger animation houses but all projects, regardless of scope, can benefit from these visual designs. They certainly don’t have to be highly detailed or specific because they are meant to give an overall impression. Art directors have even suggested keeping the color keys thumbnail sized since, if their structure will work at that scale, they will work at a larger size. Color keys are important assets that will ultimately give the artist a strong understanding of the visual script of the project.

Figure 4.1 Color Key and Concept Art from the animated short The Fantastic Flying Books of Mr. Morris Lessmore. Property of Moonbot Studios.

Once a color key is established, the next stage is to mock up and design the lighting for the shot. The fastest and most accessible mockup is the quick sketch. A quick sketch is a good way to get thoughts onto paper to begin fleshing out ideas. These sketches could be simple diagrams from a bird’s eye view laying out the positions of the lights or even crude stick people. The purpose is to start expressing the idea visually to better communicate the possibilities for the overall look.

Preparing the Shot

The set is built, the character is animated, and through the use of reference and color keys the artist has a good idea of how the image will look. The shot is ready to go! So what is next? Whether one is a new lighter or a seasoned pro, this moment can be daunting.

The first step is to modify the render settings to allow the shot to function as efficiently as possible during the early testing process. When first setting up the shot, render settings should be lowered. Anti-aliasing can be scaled back, render samples can be reduced, and motion blur turned off. The main goal of these modifications is to ensure the iterated, work-in-progress (WIP) renders will run as quickly as possible. In these beginning stages of lighting, the artist wants to make many adjustments to establish the general look of the shot without being hindered by waiting for long renders to see the results. Having slow work-in-progress renders is deflating to the artist because it leads to lack of focus and a slow turnaround.

The artist also needs to determine the image aspect ratio. The aspect ratio is the ratio of the image’s width to its height. Standard-definition video uses a 4:3 (640 × 480) aspect ratio, but that is being used less frequently. HD aspect ratios have become increasingly common among monitors and other displays. These aspect ratios are 16:9 and are normally 1280 × 720 or 1920 × 1080. In film, aspect ratios can stretch even wider to allow for a more cinematic and dramatic look. In Ben-Hur (1959), the screen was stretched to a ratio of 2.75:1.
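The relationship between a pixel resolution and its aspect ratio can be checked with a few lines of Python. This is only an illustrative sketch; the function name `aspect_ratio` is a hypothetical helper, not part of any rendering package.

```python
from math import gcd


def aspect_ratio(width, height):
    """Reduce a pixel resolution to its simplest width:height ratio."""
    divisor = gcd(width, height)
    return width // divisor, height // divisor


# The resolutions mentioned in the text.
for w, h in [(640, 480), (1280, 720), (1920, 1080)]:
    ratio_w, ratio_h = aspect_ratio(w, h)
    print(f"{w} x {h} -> {ratio_w}:{ratio_h} ({w / h:.2f}:1)")
```

Expressing the ratio as a single number (for example 1.78:1 for 16:9) makes it easier to compare against the wider film formats.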

Figure 4.2a Common aspect ratios for film and television.

Figure 4.2b Wide aspect ratios like this one can provide a more cinematic and dramatic look to a film. © mptvimages.com.

Figure 4.3 By reducing the size of the image the artist can greatly reduce render times and optimize his or her workflow.

Figure 4.4 Isolating the render to only the area the artist is focused on is also an effective optimization tool.

Once the resolution and aspect ratio are determined, the artist should decrease the size of the overall render to reduce the render time when doing test renders. Generally, WIP frames do not need to be larger than 600 pixels in any dimension in order for the artist to make accurate observations about most of the lighting. This is often a decrease in size of the final render setting by 50–75 percent, but that is completely normal. Scaling the image down to 50 percent of the height and 50 percent of the width can reduce render times down to a quarter of the original. This step can save the artist huge amounts of time, allowing him or her to focus on the lighting and spend less time waiting for renders.
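The arithmetic behind this saving can be sketched as follows. The helper names and the 600-pixel default are taken from the guideline above or invented for illustration, and the sketch assumes render time is roughly proportional to the number of pixels, which is a simplification.

```python
def wip_resolution(width, height, max_dim=600):
    """Scale a final resolution so its longest side is at most max_dim pixels.

    Returns the scaled width, scaled height, and the scale factor used.
    """
    scale = min(1.0, max_dim / max(width, height))
    return int(width * scale), int(height * scale), scale


def pixel_fraction(scale):
    """Fraction of the original pixel count left after uniform scaling."""
    return scale * scale


wip_w, wip_h, scale = wip_resolution(1920, 1080)
print(f"WIP size: {wip_w} x {wip_h} (scale {scale:.4f})")
print(f"Pixels to render at 50 percent scale: {pixel_fraction(0.5):.0%}")
```

Because both dimensions shrink, the pixel count falls with the square of the scale factor, which is why a half-size render is about a quarter of the work.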

Most renderers also give the artist the option of rendering only sections of the frame. If the artist is only working on the lights for a character on the far left side of the screen, rendering the entire frame is unproductive. By isolating the area that is required, the artist eliminates unnecessary processing and analysis, and turnaround on the frames becomes much faster.
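As a rough illustration of how much work a region render avoids, the sketch below computes the share of the frame covered by a rectangular region. The function name and the pixel-coordinate convention (left, top, right, bottom) are assumptions made for this example; each renderer defines its render region in its own way.

```python
def region_fraction(frame_w, frame_h, left, top, right, bottom):
    """Fraction of the frame's pixels that fall inside a rectangular region.

    Coordinates are in pixels and are clamped to the frame bounds.
    """
    region_w = max(0, min(right, frame_w) - max(left, 0))
    region_h = max(0, min(bottom, frame_h) - max(top, 0))
    return (region_w * region_h) / (frame_w * frame_h)


# A character occupying the left quarter of a 1920 x 1080 frame.
fraction = region_fraction(1920, 1080, 0, 0, 480, 1080)
print(f"Region covers {fraction:.0%} of the frame")
```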

At this point, the artist would also be wise to turn off any geometry that is not influencing the look of the scene. If the scene is taking place in the forest, make sure the forest is pruned down to exclude extra, unnecessary set pieces. Only the objects that are visible to the camera or are close enough to cast shadow into the scene should be included. All other set pieces can be removed. This can be done for cities and even interiors, where rooms that are not visible in the scene can have their geometry removed to make the scene more efficient.

Linear vs. sRGB

Fully understanding linear workflow is an incredibly dense topic that could populate an entire book on its own. From the artist’s perspective, working with the linear workflow produces a greater range of values in the image. The dark values will contain more variation and a softer transition to true black. The lighter parts of the image will also have a greater range of white values, which will give the artist more control when compositing the image and doing final color adjustments.

To understand the linear workflow one must understand gamma. Gamma is an adjustment to an image that influences only the midpoints and leaves the white point set to 1 and black set to 0. Gamma controls default to 1. When gamma is applied as an exponent to the pixel value, raising it darkens the midpoints and lowering it brightens them. By default, most monitors or displays users interact with have a gamma of 2.2.

In other words, all the images seen on almost every computer monitor are adjusted darker than they should be. So why do humans not perceive this? It is because every digitized image is counter-balanced against this adjustment and therefore appears relatively correct on screen. By working in linear workspace, the artist is choosing to break this double correction and work without it.
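The double correction described here can be sketched numerically. The example below assumes a simple power-law gamma of 2.2 applied to values normalized between 0 and 1; the actual sRGB transfer function is piecewise and only approximately matches this curve, and the function names are hypothetical.

```python
DISPLAY_GAMMA = 2.2  # assumed display gamma, as described in the text


def display_response(value, gamma=DISPLAY_GAMMA):
    """Approximate what the display does: raise the value to the gamma power.

    0 and 1 are left unchanged; midpoints are darkened.
    """
    return value ** gamma


def encode_for_display(linear_value, gamma=DISPLAY_GAMMA):
    """Counter-balance the display by applying the inverse power."""
    return linear_value ** (1.0 / gamma)


mid_gray = 0.5
print(f"Display alone darkens 0.5 to {display_response(mid_gray):.3f}")
encoded = encode_for_display(mid_gray)
print(f"Encoded value stored in the image: {encoded:.3f}")
print(f"Encoded value as shown on screen: {display_response(encoded):.3f}")
```

The encoded image looks correct on screen, but its stored values are no longer proportional to light intensity, which is why lighting calculations are done on linear values instead.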

It is during this pre-lighting phase that the artist should determine whether to work with a linear workflow or sRGB. This may depend on the software package being used or the final destination for the images being created. Either way, since the rendered image will look distinctively different using these two methods, the artist should make this decision prior to lighting. Switching to a linear workflow while a shot is being lit will require the artist to go back and make adjustments to all colored texture maps, which is incredibly time consuming and tedious.

Figure 4.5 Normally, an image is gamma corrected to match the monitor’s shifting color. By working in a linear workflow the artist is able to bypass that process and generate a greater range of values.

Figure 4.6 The image on the left is using the linear workflow while the image on the right is not.

Many major animation studios work with some form of a linear workflow and can have it embedded into the pipeline. Although some software packages work in linear workflow out of the box, most individual users and small studios will be required to set up that workflow themselves. Every software package has a different way of creating this workflow and often requires making adjustments to both the lights and the materials.

Understanding the Roles of Each Light

In the beginning it is essential to understand the tools that are available to the lighter. Next is understanding how each of these lights will be utilized. How will each light be placed around the scene in order for the artist to achieve the aesthetic goals? There is a standard way of lighting that was born out of photography and film that serves as the basis for CG terminology of lighting and its practices.

Key

Anyone familiar with film, theater, or photographic lighting will know the term “key light.” The key light is the main or primary light source in the scene. It generally dominates the overall look and is responsible for the largest portion of illumination as well as the dominant shadow in most circumstances. If it is an outdoor, daytime scene, then it is generally going to be the sun. For an interior scene, it will be whatever artificial lamp or window is generating the majority of the overall lighting. The overall quality of the key light is the biggest contributor to the look of a shot. If the position of the key light is flattening the subject then it will be almost impossible to generate good shaping. Key lights are generally positioned to the side of the main subject relative to the camera in order to create variation. The lighter is obviously obligated to position the light where the story dictates but the goal is to never have the key too frontal because this will make the subject flat, except for the extremely rare case where that is desired. The color of the key is also incredibly important. If the color of the key light is off and makes the scene appear unappealing there is very little the other lights can do to counteract that. In other words, establishing a good key light with proper settings is the most important thing a lighter will do with each shot.

Figure 4.7 The image on the left is the character with all white materials and only the key light. The right image is the key as it appears in the final image.

Fill

The fill light is the key’s complement and serves to add light value to the shadow cast by the key. If the key light is coming in from screen left, then the fill will complement that by “filling” in the dark parts on the screen-right side. This will enable the key’s shadow to be lifted above pure black and allow it to achieve a look of believability.

The intensity and color of the fill light are completely dependent on the scene. Less intense fill lights are necessary for scenes with higher contrast levels while more intense fill lights can decrease contrast. That is why the overall contrast level of a shot can be termed the “key to fill ratio.”

The color of the fill light is dependent on the color of the environment surrounding the subject. Take, for example, the bright sunny day with a blue sky surrounding the subject. The fill light in this instance will be that same sky blue color and the shadows will be filled accordingly.

Bounce

Generally speaking, the key light comes from above the subject in most lighting scenarios. In outdoor scenes being lit by the sun or moon this is always the case. This is because the sun and moon are always above the horizon line. Characters, unless being depicted as evil, are not generally lit from underneath since the look is unnatural and unflattering.

In natural lighting scenarios light from above the subject hits the ground below and causes secondary light rays to bounce up, filling in the dark areas on the underside of the subject. This type of secondary light is simulated with a light called the bounce light. Bounce light is the broad, soft light that comes up from below. The intensity and color of the bounce is dependent on the intensity of the key and the surface it is bouncing off. The stronger the key and the more reflective the surface, the more pronounced the bounce light will be and the greater the color of the ground surface will influence the color of the bounce.

Figure 4.8 The image on the left is the character with all white materials and just the fill light. The right image is the key and the fill light as they appear in the final image.

Figure 4.9 The image on the left is the character with all white materials and only the bounce light. The right image is the key light, the fill light, and the bounce light as they appear in the final image.

Rim/Kick

Here is where an artist can get into lights that exist merely for aesthetic reasons. The rim light exists to help separate the subject from the background and make its outline readable and prominent. Often the rim light is not driven by anything practical in the scene, but just a desire to make the subject’s outline more readable.

This is particularly true when a character is holding a small object like a pencil or pointing his or her finger. A rim light can be implemented to better read the outline of that object and ensure the audience can see what is happening. Usually the rim light exists on the side opposite the key, but in a given situation it can also be successfully used on the key side as well.

Kick lights also fall into the category of lights normally based on strictly aesthetic goals and are similar to rim lights. They are lights positioned at low angles to help add additional variation to flatly lit areas. Kick lights are often utilized on the fill side of an object when the fill light becomes too diffuse or too flat in one region. They have been known to exist on the key side as well to provide a bit of additional shaping. Often the kick light will add just a bit of value, especially in regard to specular values, to the fill side of the subject.

Figure 4.10 The image on the left is the character with all white materials and only the kick and rim lights. The right image is the key light, the fill light, the bounce light, the kick light, and the rim light as they appear in the final image.

Utility

A utility light is any additional, practical light that exists in a scene that needs to be accounted for. These can be anything from glowing cell phone screens to flashlights or police sirens. They are simply any other light in the scene used to show integration between all the light emitting sources and objects in the scene.

Figure 4.11 Four different shots that all benefit from utility lights. Still from the animated short The Tale of Mr. Revus (top left and bottom right), property of Marius Herzog. Still from the animated short Edmond était un âne (Edmond was a Donkey) (top right). Property of Papy3d/ONF NFB/ARTE. Still from the animated short Little Freak (bottom left). Property of Edwin Schaap.

The prep work has been completed and now it is time to light. Beginning artists get overly excited by this process and start quickly adding all the lights they think they will need to the scene. They have a rough vision of how the scene is supposed to look and expect the first render to match this vision. Lighting does not work that way. Lighting takes time and patience. There may be a vision in the artist’s mind, but it takes meticulous placement and adjustments to the settings for each light to reach that goal. To accomplish this, artists must establish a routine of slowly adding lights to the scene to systematically control the look of each and every one.

Creating Lights

Now that there is a firm understanding of the functions of the lights, it is time to start creating the light rig. The first step is to create the key light. There are many ways to accomplish this, but the following steps are a common method of positioning the key light.

1. Decide which light type works best to simulate the key light in the reference. Spotlight? Area light? Create the key light.

2. Put the key light in roughly the desired location within the scene.

3. Set the intensity and color to match the color key. (Do not feel pressured to get these attributes perfect. They will almost definitely need to be updated and modified once additional lights are added.)

4. Check the shadow being cast by the light to ensure it matches the reference’s shape and softness.

5. Double check to ensure the previous adjustments did not hinder the shaping. There should be variations in values as the light moves across objects to show volume and space.

6. Verify that there are no extreme highlights or bright patches caused by the key light. The key should not be causing any area of the subject to be overly bright or blown out. This is because once all the other lights are added (fills, bounces, kicks, rims, and so on) their values will be added on top of the key’s contribution and create a large, flat section of bright whites.
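The concern in step 6 is additive: each light's contribution stacks on top of the others. A minimal sketch of that check is shown below. The light names and values are invented for illustration, and the sketch assumes contributions at a given point simply sum, with 1.0 treated as the white point.

```python
def combined_value(contributions):
    """Sum the per-light contributions at a single point on the subject."""
    return sum(contributions.values())


def is_blown_out(contributions, white_point=1.0):
    """True when the summed contributions exceed the white point."""
    return combined_value(contributions) > white_point


# Hypothetical values sampled at the brightest spot on a character's cheek.
balanced_key = {"key": 0.60, "fill": 0.15, "bounce": 0.10, "rim": 0.10}
hot_key = {"key": 0.90, "fill": 0.15, "bounce": 0.10, "rim": 0.10}

for label, lights in [("balanced key", balanced_key), ("hot key", hot_key)]:
    total = combined_value(lights)
    print(f"{label}: total {total:.2f}, blown out: {is_blown_out(lights)}")
```

A key that looks acceptable on its own can leave no headroom once the remaining lights are added, which is why it is checked before they are created.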

Once the key light is in place, move on to the next light in the scene and repeat until all of the basic lights have been created. A good order is to begin with the key then complement that light with a fill. Move on to the bounce light coming up from beneath and the sky light from above. Then finish up with any rims or kicks to help add definition and volume to the object. Also, construct any necessary utility lights at this point as they will greatly influence the attribute settings of the other lights and the overall final look.

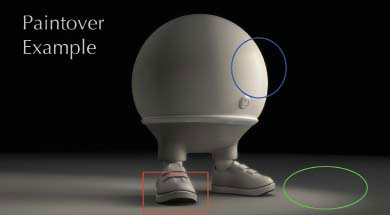

Each light gets positioned with the same care as the key. Is the light creating any overly flat areas? Does the light match the intended look? Does the light’s intensity cause any areas to go too bright and blow out? Does the color of the light accurately represent the surroundings? Some artists even prefer to make the light an exaggerated value like a bright red while positioning to help get a better understanding of that light’s contribution. By adding lights one at a time, the artist should always be able to maintain control of each light’s influence on the scene.

Figure 4.12 Example of adding each light to the scene individually and leaving them on as the artist adds the next one.

Another method of working is to turn off all lights except the one being created so the artist knows exactly where it’s being placed. For example, the artist would create the key as in the previous workflow. Then, when it is time to create the fill light, instead of leaving the key light on, the key light is turned off and the artist can just focus on the positioning of the fill light. The artist would repeat this process for each light until the end when all the lights are turned back on.

Once all the lights are roughly in place, it is time to analyze the overall look. Adjustments will be made to get all the lights to work together well. When making these adjustments, it is wise to change them one at a time to better understand how each modification is impacting the entire shot.

Figure 4.13 Example of adding each light to the scene individually and then turning it off before adding the next one.

Figure 4.14 Once the image is complete the artist can analyze the results, critique the work, and begin to identify areas that can be improved upon.

Interactive Light Placement

Developing an efficient system for positioning lights is incredibly important to the process. One effective method commonly used is interactive light placement. This involves moving and positioning the light from its own vantage point. In some software packages, the artist can select the light object and then choose to treat that light as if it were the camera. The artist can tumble, dolly, or zoom the light the same way the artist would normally work with the camera. This is especially useful whenever a spotlight needs to be in a specific spot, like in a situation when the light needs to shine just past an object or the light’s shadow needs to be shaped in a specific way to frame the scene. By using interactive placement, the artist is able to see through the light and control exactly where the light is illuminating: wherever this view is pointed is where the light will shine. In some software packages, the artist will even have the ability to see and control the edge and penumbra settings of the spotlight. This is not possible with all light types, but it can be a very effective method of light placement.

Isolating Lights

Even after the lights are created it is still the best workflow to continue isolating lights whenever tweaks are needed. Isolating lights reduces in-progress render times, creating faster turnarounds. The artist will also need to remain in constant connection with how each light is influencing the scene and isolating lights allows that control. Losing sight of a light’s contribution will cause the artist to lose control of the shot and the look of the final image.

Never Light over Black

While working and implementing all these workflows, the artist might find a situation where he or she is lighting one object or character over a black background. This is because only that object’s lighting needs to be adjusted so everything else has been hidden and the subject in question can now be the focus. This is a great workflow for saving time, but aesthetically the lighting artist needs to be mindful and cautious to avoid lighting against a black background. Lighting over black can give an artist an inaccurate read of the character or object being lit especially in the darker tones around the edges. These dark edges will blend with the background and go unnoticed until the final image is pieced back together.

A solution to this problem is to light the character over a previously rendered still of the background. The still can either be loaded as a backdrop in the render view or as an image plane behind the character in the 3D environment. An image plane is just a single plane facing the camera with the image of the background applied as its texture. The plane is normally parented to the camera and always visible no matter where the camera is pointing. This way the artist can still benefit from the reduced render time while maintaining the visual integrity of the shot.

Saving Frames and Comparing Changes

Software packages are often able to cache or save rendered frames, allowing the artist to easily refer back to previous images. This is an incredibly useful tool since the ability to compare back to previous versions allows the lighter to see exactly what changed from the previous version and avoid any unexpected, unintended alterations to the look. The basic workflow when lighting is to render the frame, save that image, make one adjustment, re-render, see if the change is what was expected and repeat.
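The comparison step can also be made numerical. The sketch below treats two saved renders as small grids of grayscale values and reports which pixels changed beyond a tolerance. The function name, the grid representation, and the tolerance are assumptions for illustration; production tools would load real image files instead.

```python
def changed_pixels(before, after, tolerance=0.01):
    """List (x, y, difference) for every pixel that changed beyond tolerance.

    Both images are lists of rows of grayscale values in the 0 to 1 range
    and are assumed to have the same dimensions.
    """
    changes = []
    for y, (row_before, row_after) in enumerate(zip(before, after)):
        for x, (b, a) in enumerate(zip(row_before, row_after)):
            if abs(a - b) > tolerance:
                changes.append((x, y, a - b))
    return changes


saved_frame = [[0.2, 0.2], [0.5, 0.5]]
new_frame = [[0.2, 0.2], [0.5, 0.7]]  # one adjustment brightened one pixel

for x, y, diff in changed_pixels(saved_frame, new_frame):
    print(f"pixel ({x}, {y}) changed by {diff:+.2f}")
```

If an adjustment meant for one area shows differences somewhere else, that is the kind of unintended alteration the saved frame is meant to reveal.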

Isolating Problems through Exaggerated Values

At times it is necessary to identify which light is causing problems in the scene. Perhaps a light is casting a stray shadow or an undesired specular highlight. In a complex scene, it can be difficult to identify which light is causing the issue. A quick diagnostic method is to give lights exaggerated values to help identify the source of the problem. If the artist is trying to identify which light is causing a shadow across a character’s face, he or she would select a few suspected lights and change their shadow color parameter to pure red, green, orange, blue, etc. In the subsequent render, if the problematic shadow appears red, the artist knows it was the light with the red shadow color. If none of the colors show up, more digging is required. This method can help save valuable time.
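The bookkeeping for this diagnostic can be sketched in a few lines. The light names below are hypothetical, and the sketch only tracks which debug color was given to which light; setting the shadow color itself is done in the lighting package.

```python
# Distinct, easily recognized debug colors (RGB, 0 to 1 range).
DEBUG_COLORS = {
    "red": (1.0, 0.0, 0.0),
    "green": (0.0, 1.0, 0.0),
    "blue": (0.0, 0.0, 1.0),
    "orange": (1.0, 0.5, 0.0),
}


def assign_debug_colors(suspect_lights):
    """Map each debug color name to one suspected light."""
    if len(suspect_lights) > len(DEBUG_COLORS):
        raise ValueError("more suspect lights than debug colors; test in batches")
    return dict(zip(DEBUG_COLORS, suspect_lights))


def identify_light(assignment, observed_color):
    """Return the light that was given the observed color, or None."""
    return assignment.get(observed_color)


assignment = assign_debug_colors(["key_spot", "window_fill", "rim_left"])
print(identify_light(assignment, "red"))     # the light assigned red
print(identify_light(assignment, "orange"))  # None: more digging is required
```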

Figure 4.15 Exaggerating values can help the artist identify problematic areas and solve them more quickly.

Creating Wedges

A wedge is a tool that allows the artist to set up a series of frames where everything is identical except for one variable that is changed, rendered, and later analyzed. It is like running a science experiment on the shot. There is the single variable (the individual attribute being tested) and the control (everything else in the shot that remains the same). The goal is to create a series of rendered frames the artist can go through frame by frame to determine which image looks the best and lock the value of that attribute to whatever the value was at that frame.

Animating the value of the intensity of a light is a simple wedge that can be run. The first thing to do is lock the animation so the render is working with a static pose. Then animate the value of the light between the minimum and maximum value reasonable given the scenario. Normally ten frames are enough to achieve a good breakdown and to find a suitable value.
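The values an intensity wedge steps through can be worked out ahead of time. The sketch below assumes the intensity is animated linearly from the minimum on the first frame to the maximum on the last; the function name and the example range are hypothetical.

```python
def intensity_wedge(min_value, max_value, frames=10):
    """Return {frame: intensity}, stepping linearly from min to max."""
    if frames < 2:
        raise ValueError("a wedge needs at least two frames")
    step = (max_value - min_value) / (frames - 1)
    return {frame: min_value + step * (frame - 1) for frame in range(1, frames + 1)}


# A ten-frame wedge over an assumed reasonable range for this light.
for frame, intensity in intensity_wedge(0.0, 9.0).items():
    print(f"frame {frame:2d}: intensity {intensity:.1f}")
```

Once a frame is chosen, the table gives the value to lock the attribute to.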

A wedge can also be used when determining the position of a light. The most common wedge for positioning is to create a rotation wedge around the Y-axis. This can be done when first positioning the key light and will be easy to accomplish by following these steps:

1. Create a spotlight with the aiming point constrained to the character or focal point of the scene.

2. Place the spotlight the correct distance away and raise it to the pitch desired.

3. Reposition the axis point of the light to the same spot as the aim of the spotlight.

4. Set a key frame on the Y-rotation value on frame 1.

5. Move thirty-six frames ahead, and spin the light around the character or focal point by 360 degrees. Set another key frame. Now when the artist scrubs through those frames in between, the light should be circling the subject.

Render those frames and the artist will be able to see how the subject looks when the key light is in various positions (every 10 degrees) around the subject. Use this lighting test to determine the best key position for the shot. If the general key direction for the shot has been estimated by a color key, then the artist should focus the wedge on only that region.
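The frame-to-angle mapping produced by these steps can be sketched as follows. It assumes the rotation is interpolated linearly between the two key frames; if the animation curve eases in or out, the per-frame steps will not be uniform. The function name and defaults are hypothetical.

```python
def rotation_wedge(start_frame=1, frame_span=36, total_degrees=360.0):
    """Return {frame: Y rotation in degrees} for a linear rotation wedge."""
    degrees_per_frame = total_degrees / frame_span
    return {
        start_frame + offset: degrees_per_frame * offset
        for offset in range(frame_span + 1)
    }


wedge = rotation_wedge()
for frame in (1, 2, 10, 37):
    print(f"frame {frame:2d}: key light rotated {wedge[frame]:.0f} degrees")
```

To focus the wedge on a region suggested by a color key, the same mapping can be built with a smaller `total_degrees` so the frames are spent on finer steps within that range.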

Figure 4.16 Wedges are a great way to analyze an image and make aesthetic decisions. Here is an example of both an intensity wedge (top) and a light rotation wedge (bottom).

Wedges are especially useful when working with a director, art director, or other supervisor. Normally these interactions end with the directors giving an abstract note such as making an object brighter or darker. By rendering a wedge of the brightness, the artist and the directors can go through the frames to come to a consensus of a concrete value that will work best for the scene.

Light Linking

In computer graphics, lighting artists have the ability to have certain lights influence only certain objects. This is known as light linking and can be used in a variety of situations. Sometimes the character looks better with a key light in one position while the background looks better with the key light in a slightly different position. Other times there is a light that needs to shine through a wall or ceiling in order to properly simulate a bounce or fill light and illuminate the scene as accurately as possible.

Light linking can be very dangerous. If not used correctly, light linking can immediately make an image begin to look disjointed. Objects will not appear to interact correctly and a character could pass through a shadow in the environment and not get darker. It is therefore important for artists to understand that light linking should only be used when absolutely necessary. In some studios light linking controls have been taken away from the artists in a move designed to make the images more cohesive and structured more realistically.

Breaking a scene down into render layers is a big part of the lighter’s job in many production situations. Render layers can be used to take a very large, complex scene that is difficult to render and break it down into smaller, more manageable chunks. They are also necessary for certain types of compositing procedures that may need to be done on a shot. Render layers can also increase work efficiency. Adjustments to the lighting of one element may only require one render layer to be redone instead of re-rendering the entire scene. Additionally, render layers give the artist increased control in postproduction.

Render layers do come with their negatives. Adding render layers certainly adds to the complexity of the scene and good organization must be utilized. If not done well, render layers can easily be combined incorrectly in the composite and cause false layering or other aesthetic mistakes. Also, more render layers means more storage necessary on the file system and more content management. All of these negatives can be overcome, but the artist must be trained, organized, and must understand the craft.

Setting Up Render Layers

There are several different methods of breaking shots out into render layers. Layers can be broken out based on objects. In this case the characters can be rendered separately from each other and the foreground of the set can be rendered separately from the background. Layers can also be broken out by individual components of the scene, where all the diffuse values, the specular values and the cast shadows have separate render layers.

Figure 4.17 Artists must take great care when assembling render layers to ensure they are working together properly.

There are countless combinations to the methodology of these breakouts. Certain studios have pipelines that must be followed while individuals also have their own preferences. Ultimately, as long as the artist is organized and mindful, any breakout of layers can be assembled into an aesthetically pleasing final image.

Beauty Render Layers

The first method of breaking renders into layers is by separating them into their own beauty passes. Beauty passes consist of each individual object or group of objects in the scene rendered separately. This includes individual render layers for the foreground, background, characters, and any other element in the scene worth separating.

Careful consideration must be taken into account whenever these layer breakouts occur. The artist cannot just cut elements out and paste them back together later without considering how they will work with one another. Doing so without great care may lead to layers looking segmented and separated from one another.

The first component to analyze is how these rendered elements interact with each other and influence one another. These interactions must be accounted for. For example, if the character is standing over the environment, does his or her shadow cast on the ground and surrounding elements? If so, that shadow must be taken into account in the environment layer. If the character were turned off completely, the background layers would be missing the shadow of the character cast on the floor.

This is achieved by having the character calculated into the background layer. The render settings are adjusted so the character itself is not visible but the cast shadow is. Each software package has a different way of handling these settings, but all should have the functionality to do so.

Conversely, the background would need to be accounted for in the character layer. Does the character’s animation include the feet slightly penetrating the floor? If that is the case, the floor is held out of the character’s render to account for this. This is often done by assigning a shader to the ground that will punch that area out of the character’s alpha channel. Otherwise, the character will appear to be floating above the ground when the two images are pieced together.

Separating beauty layers can be extremely beneficial in postproduction. When the artist wants to make adjustments isolated only to the characters, background, or other layers, he or she no longer is required to go back into the rendering software. These adjustments can quickly be made in the compositor and no re-renders are required, thus saving time.
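The way a held-out character layer recombines with its background can be sketched with the standard "over" operation. This is a minimal illustration, assuming premultiplied RGBA pixels in the 0 to 1 range; the function name and all pixel values are hypothetical and not taken from any particular compositing package.

```python
def over(fg, bg):
    """Porter-Duff 'over' for premultiplied (r, g, b, a) pixels."""
    inv_alpha = 1.0 - fg[3]
    return tuple(f + b * inv_alpha for f, b in zip(fg, bg))


# Hypothetical sample pixels, premultiplied RGBA in the 0-1 range.
background = (0.2, 0.4, 0.6, 1.0)         # environment layer, character shadow baked in
character_solid = (0.8, 0.5, 0.3, 1.0)    # pixel fully covered by the character
character_edge = (0.4, 0.25, 0.15, 0.5)   # half-covered edge pixel
character_heldout = (0.0, 0.0, 0.0, 0.0)  # foot pixel punched out by the floor holdout

solid = over(character_solid, background)
edge = over(character_edge, background)
heldout = over(character_heldout, background)

print(solid)    # the character completely hides the background
print(edge)     # a blend of character and background
print(heldout)  # the background shows through where the floor held the foot out
```

Because the held-out foot pixel has zero alpha, the floor from the background layer shows through and the character reads as planted on the ground rather than pasted over it.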

Lighting Attribute Layers

Another way to break out render layers for a shot is to separate out the individual lighting attributes like diffuse, specular, and shadows. The main benefit of breaking these out into separate layers is to have more control over the detailed look of the shot. If more specularity is needed on a certain component, the artist can increase the value in the compositor as opposed to going back into the 3D scene, relighting, and doing a costly re-render. The artist could potentially waste an entire day waiting on a render that would have been unnecessary if he or she had broken that attribute out as a separate layer.

Figure 4.18 Example of beauty render layers.

Diffuse/Specular Layers

Diffuse and specular layers are very common lighting attribute layers. They are generally simple to set up and can give a huge amount of control. Creating a specular only or diffuse only pass can be done a few different ways. The first is to create a render layer where all the lights in that layer only emit either diffuse or specular light. The second is a similar method, but requires all the diffuse and specular values of the materials in the scene to be adjusted according to what layer is being rendered. Either way, the goal of the render is to have these two elements as separate components.
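Once the two passes exist, the adjustment in the compositor amounts to scaling each pass and adding them back together. The sketch below assumes the passes are additive in linear light, which is a common but not universal setup; the function name, gains, and pixel values are hypothetical.

```python
def recombine(diffuse, specular, diffuse_gain=1.0, specular_gain=1.0):
    """Rebuild a beauty pixel from additive diffuse and specular passes."""
    return tuple(d * diffuse_gain + s * specular_gain
                 for d, s in zip(diffuse, specular))


# Hypothetical RGB values for one pixel of each pass.
diffuse_px = (0.30, 0.20, 0.10)
specular_px = (0.10, 0.10, 0.10)

original = recombine(diffuse_px, specular_px)
hotter_spec = recombine(diffuse_px, specular_px, specular_gain=1.5)

print(original)     # the two passes summed at their rendered levels
print(hotter_spec)  # 50 percent more specular, with no re-render
```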

Figure 4.19 Example of diffuse and specular passes.

Shadow Passes

Shadow passes are another very common attribute layer. In the beauty layers section the shadows of the character were embedded into the background layer, but the artist also has the option of working with shadows separately. To do this, all the objects in the scene receiving shadows should have their visibility turned on and all of their materials set to a flat, white lambert material. Any objects casting shadows are included in the scene with their normal materials applied, but with their visibility turned off and shadow casting left on. This will result in an image that allows the artist to isolate the shadowed areas and gives him or her the freedom to adjust the shadow contribution in postproduction if needed.

Figure 4.20 Example of beauty renders of the character and background with the shadow pass broken out separately.

Figure 4.21 In this shot, the artist altered the shadow to showcase the character’s inner animal. Image from the animated film Feral, property of Daniel Sousa.

Other than allowing for greater aesthetic control, shadow passes could provide an opportunity to save on render time. If the camera for the scene is locked off and the environment being rendered is stationary, the artist can choose to render only a single frame of the background and then apply the shadow pass of the animated character to give it all the elements needed. The character pass then can be layered on top, finishing the shot. By not rendering every frame of the background for the entire shot, a significant amount of time can be saved.
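The single-frame background trick can be sketched as follows: one rendered background pixel is reused on every frame, and only the per-frame shadow value changes. This is an illustration under stated assumptions, namely that the shadow pass has been reduced to a value where 1.0 means unshadowed and 0.0 means fully shadowed; the function, the density control, and the sample values are hypothetical.

```python
def apply_shadow(background, shadow, density=1.0):
    """Darken a background pixel by a shadow-pass value.

    shadow:  1.0 = fully lit, 0.0 = fully shadowed (assumed convention)
    density: 0.0 ignores the shadow, 1.0 applies it at full strength
    """
    factor = 1.0 - density * (1.0 - shadow)
    return tuple(c * factor for c in background)


# One background pixel, rendered once and reused for the whole locked-off shot.
static_background = (0.6, 0.5, 0.4)

# Hypothetical shadow values at that pixel as the animated character passes by.
shadow_per_frame = {1: 1.0, 2: 0.5, 3: 0.25}

frames = {
    frame: apply_shadow(static_background, shadow, density=0.8)
    for frame, shadow in shadow_per_frame.items()
}

for frame, pixel in frames.items():
    print(frame, pixel)
```

The density argument stands in for the kind of postproduction control the separate pass makes possible: the shadow can be lightened or deepened without touching the background render.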

Shadow passes also allow the opportunity to have greater control over silhouettes and shadows that are the center of interest for a given shot. For example, situations arise when the artist would want to have greater control of the shadow in the compositor in order to distort the shape or modify it in some way. It is also possible for the shadow pass to be from a completely different version of the character and applied to the scene if the story calls for that. This second version of the character could be behaving completely differently as a storytelling point in an animated film.

Specialty Layers

In CG lighting, there are other layers that are prevalent but do not necessarily coincide with specific lighting attributes. These layers are common to the lighter’s workflow and should be treated with the same care.

Figure 4.22 An ambient occlusion render is one that calculates the proximity from one object to the next and displays the results with a visual representation of values from 0 to 1, or black to white.

Ambient Occlusion

Ambient occlusion is a layer available in most render packages. An ambient occlusion render is one that calculates the proximity from one object to the next and displays the results with a visual representation of values from 0 to 1, or black to white. In other words, ambient occlusion, or AO for short, estimates how much each point on a surface is occluded from light by other objects in the scene. Normally, this is achieved by applying specialty shaders to the objects in the scene. The values are determined from rays that are fired into the scene looking to hit an object. Once a ray hits that object, further rays are sent out from the hit point to see how far they travel before reaching another bit of geometry. The nearer those two objects are, the darker the resulting image is. The greater the distance those rays travel before hitting another object, the closer to white the image becomes.

In the composite, this image can be used in a couple of ways. The most common is to simulate the contact shadow where two objects meet. This is normally done by multiplying the ambient occlusion pass by the beauty render. This will allow the darker parts of the ambient occlusion render to darken the appropriate areas of the beauty render. Additionally, ambient occlusion renders can be given color to simulate a stronger bounce value between two objects. More will be discussed about integrating ambient occlusion passes in later chapters.
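The multiply described above, along with the tinted variation, can be sketched per pixel. The function below is a hypothetical illustration, assuming an AO value where 1.0 is unoccluded and 0.0 is fully occluded; it is not the node of any specific compositor.

```python
def multiply_ao(beauty, ao, tint=(0.0, 0.0, 0.0)):
    """Multiply a beauty pixel by an AO value, optionally tinting occluded areas.

    ao:   1.0 = unoccluded (white), 0.0 = fully occluded (black)
    tint: color the occlusion moves toward; black gives a plain multiply
    """
    return tuple(b * (t + (1.0 - t) * ao) for b, t in zip(beauty, tint))


beauty_px = (0.8, 0.8, 0.8)  # hypothetical beauty pixel near a contact point

plain = multiply_ao(beauty_px, ao=0.5)
warm = multiply_ao(beauty_px, ao=0.5, tint=(0.2, 0.1, 0.0))

print(plain)  # neutral darkening where the objects meet
print(warm)   # same darkening, shifted warm to suggest bounce light
```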

The setting most used to fine tune ambient occlusion shaders is the spread angle. This is the angle the ray takes after hitting the initial surface. The spread angle will control how hard or soft to make the ambient occlusion render look. The artist will normally change the spread angle to allow the look to echo reflective qualities of materials.

Ambient occlusion renders also have a tendency to be noisy and grainy. This is a result of too few rays being fired into the scene. In most cases, the ambient occlusion shader gives the artist the option of increasing the number of rays, but be careful as it can dramatically escalate render times.
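The trade-off between ray count and grain can be illustrated with a toy simulation. This is a simplified model, not a renderer: each "pixel" estimates the same true occlusion value by firing a number of random rays, and the spread of those estimates stands in for visible noise. All names and values are assumptions made for the example.

```python
import random
import statistics


def estimate_occlusion(true_occlusion, ray_count, rng):
    """Monte Carlo estimate: the fraction of rays that hit nearby geometry."""
    hits = sum(1 for _ in range(ray_count) if rng.random() < true_occlusion)
    return hits / ray_count


def pixel_noise(ray_count, pixel_count=2000, true_occlusion=0.5, seed=1):
    """Spread of the estimate across pixels that should all look identical."""
    rng = random.Random(seed)
    estimates = [
        estimate_occlusion(true_occlusion, ray_count, rng)
        for _ in range(pixel_count)
    ]
    return statistics.pstdev(estimates)


for rays in (16, 64, 256):
    # Cost grows in step with the ray count, while noise falls more slowly.
    print(rays, round(pixel_noise(rays), 4))
```

In this model, quadrupling the ray count only roughly halves the noise, which is one way to see why pushing sample counts up can escalate render times so quickly.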

Reflection Passes

Reflections are an element that will often need to be controlled independently. This comes up in a variety of circumstances. Often it is just a simple reflection in a mirror, a window, or in a character’s eye. The artist will often want to control these reflections on his or her own since reflections often need to be fine tuned to meet an aesthetic goal. The result is a reflection pass that can be composited in later.

The best way to set up a reflection layer is to first turn off reflections in the original render. Then the material for the reflective element will need to be set up to allow for pure reflections with the accurate amount of reflective blur applied. The reflection contribution can always be toned down in the post process if a pure reflection is too strong. To obtain a pure reflection, the object must be given a material that can calculate the reflective surface. A blinn shader is often the easiest and most straightforward way of approaching this. The settings are shown in Figure 4.24.

Figure 4.23 By creating a separate pass for the reflections the artist is able to quickly adjust their contribution to the final image.

Now the reflective object will have a pure reflection assigned to it. The artist must be sure to keep the material attributes consistent with those of the original object. For example, if there is a bump map or spec matte associated with the object, it should also have those attributes in the reflection pass. For some reflection passes to feel convincing, an artist may need to render a facing ratio pass to compensate for the fact that different materials reflect different amounts depending on the viewing angle. The final step is to add the desired surrounding lights and geometry to be reflected and rendered.

Figure 4.24 A reflection pass can be created by using a blinn shader with these settings.

Figure 4.25 Mattes and RGB mattes are an excellent way to isolate areas of the render for adjustments in postproduction.

Matte/RGB Matte Layers

A valuable and inexpensive render layer that will give the artist additional control in the composite is the matte pass. Matte passes are a way to isolate the alpha channel of a particular object or section of an object with the intention of tweaking it in postproduction. An example might be to create a matte layer for the shirt of a particular character with the plan of slightly shifting its color in the composite. This method is incredibly useful when trying to make minor tweaks while trying to avoid a re-render of the entire shot. Since matte passes normally only need isolated elements and usually lighting isn’t required, the render times are much, much faster.

The easiest way to set one up is to use a simple shader that does not have reflectivity or specularity. Assign that material only to the object to be isolated. The only other objects calculated are those that come between that object and the camera. For those, just assign a shader that will punch out the alpha (black hole) along with no reflection or specularity either. Then, once in the composite, the alpha channel generated in this render can be used to make the necessary adjustments.

For greater control over more elements in the shot, artists will often create RGB matte layers in order to handle up to three different mattes in one render. Instead of applying a white surface shader (or similar) material to the objects, the artist will apply either a pure red (1 0 0), green (0 1 0), or blue (0 0 1) color. Then, once in the compositor, each one of these matte layers can be isolated by pulling an alpha from only the red, green, or blue color channel of the image. This method will reduce render times even further by limiting the renders to one RGB pass instead of three regular matte passes while still giving the artist more control over the final image. Implementing matte passes is discussed further in later chapters.
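Pulling a matte from one channel and using it to limit a color tweak can be sketched as below. The assignment of the red channel to a shirt, the gain values, and the function names are all hypothetical, chosen only to mirror the shirt example given earlier.

```python
def split_mattes(rgb_matte_pixel):
    """Treat each channel of an RGB matte pixel as its own matte value."""
    red, green, blue = rgb_matte_pixel
    return {"red": red, "green": green, "blue": blue}


def grade_through_matte(beauty, matte, gain):
    """Apply a per-channel gain, blended in by how much the matte covers the pixel."""
    return tuple(c * (1.0 + (k - 1.0) * matte) for c, k in zip(beauty, gain))


beauty_px = (0.5, 0.4, 0.3)
shirt_gain = (1.0, 1.2, 0.8)  # hypothetical color shift for the shirt

inside = split_mattes((1.0, 0.0, 0.0))["red"]   # pixel fully on the shirt
edge = split_mattes((0.5, 0.5, 0.0))["red"]     # pixel shared with the green element
outside = split_mattes((0.0, 0.0, 1.0))["red"]  # pixel on the blue element only

print(grade_through_matte(beauty_px, inside, shirt_gain))
print(grade_through_matte(beauty_px, edge, shirt_gain))
print(grade_through_matte(beauty_px, outside, shirt_gain))
```

Pixels outside the shirt matte come back unchanged, so the adjustment stays isolated to the one element even though all three mattes arrived in a single render.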

RGB Light Layers

Lighting properties can be controlled in much the same way as an RGB matte layer. The lights within the scene can be altered to emit either pure red, green, or blue light. The resulting image can be handled identically to the RGB matte pass, except this time each channel isolates the area of the image a given light is influencing. Additionally, the lights can be broken down even further into their diffuse and specular values, and RGB lights can isolate just these components to be controlled independently as well. This will ultimately give the artist even more control in the postproduction process. The method is especially beneficial when time is pressing since, as with RGB mattes, the artist saves render time and file storage by controlling three separate elements with one render.
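One way to use an RGB light pass in the comp is to treat each channel as a grayscale measure of one light's contribution and assign each light a new color and intensity. The sketch below is a simplification under that assumption (it ignores how surface color interacts with colored lights), and the key, fill, and rim assignments are hypothetical.

```python
def relight(rgb_light_pixel, light_colors):
    """Rebuild a lighting value from an RGB light pass.

    Each channel of rgb_light_pixel holds one light's grayscale contribution;
    light_colors supplies the color (including intensity) chosen in the comp.
    """
    out = [0.0, 0.0, 0.0]
    for contribution, color in zip(rgb_light_pixel, light_colors):
        for i in range(3):
            out[i] += contribution * color[i]
    return tuple(out)


# Hypothetical pass: red = key light, green = fill light, blue = rim light.
light_pass_px = (0.5, 0.2, 0.1)

comp_colors = [
    (1.0, 0.9, 0.8),  # warm key
    (0.4, 0.5, 0.8),  # cool fill
    (1.0, 1.0, 1.0),  # neutral rim
]

relit = relight(light_pass_px, comp_colors)
print(relit)
```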

Shadows are another element that can be broken down into RGB elements and either controlled or applied in the comp. The layer would be set up the same way as the normal render layer, but the shadow pass will be altered so the shadow color is a pure red, green, or blue.

Ultimately, there are an infinite number of render layers that can be created. The methodologies in this section should be viewed as general guidelines to some of the most popular ones, but certainly not hardened rules. RGB mattes and light attribute layers, for example, can actually be run as subcomponents of shaders through buffers or AOVs rather than render layers in some software packages. There are layers that will be shot specific and will not fall into one of these norms. The only limitation is the creativity of the artist to construct a way to control the different elements of the scene.

All that being said, it is important to note that in most situations more is not better. Just because an artist has the ability to break out each element separately, it is not always the best method. More render layers can mean more complexity that could lead to a disjointed final image if not controlled skillfully. The artist must always be sure to be mindful when deciding what layers need to be broken out. By harnessing this ability, the artist will gain ultimate control and focus solely on building the look he or she wants.

Figure 4.26 The same principle for isolating RGB channels can be applied to beauty, diffuse, specular, reflection, and many other passes. In these example images the rendered image is above with each red, green, and blue channel isolated in the image below.

For a lighter, rendering is a crucial part of the job. Whether it is generating quick test renders or running high resolution beauty frames for a giant billboard, understanding and utilizing the ins and outs of this process is mandatory.

Image File Types

Part of rendering an image is determining the file type. This file type can have a huge impact on the size of the file being generated and the overall quality. There are different file types that can be used and they each have their advantages and disadvantages.

JPEG

JPEG images are compressed images designed to give decent image quality within a small file size. JPEGs are generally used for posting on the Internet, sending through emails, or any other time a smaller file size is required. This smaller file size comes with a price. The amount of compression is substantial and can cause artifacts in the image. This is simply because getting the smaller file means dropping information from the image which can lead to a loss of detail causing visual problems. JPEGs are great in many situations but should never be used when delivering a final image.

TIFF or TARGA

When making the leap from test renders to final images, the artist wants to move into “lossless” file formats like TIFFs or TARGAs. These formats are referred to as lossless since they do not discard image data when being saved, unlike “lossy” formats like JPEGs, which throw away information to shrink the file. While these formats produce larger files, they preserve all of the rendered image information and are therefore more versatile once the image is being composited. TIFFs are generally more common than TARGAs since they are supported by more of the programs artists use regularly.
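The difference between lossless and lossy storage can be shown with a few bytes of data. In this sketch, Python's zlib stands in for a lossless scheme, and coarse quantization stands in for a lossy one. The quantization step is only an illustration of discarding data and is not how JPEG compression works internally.

```python
import zlib

# Hypothetical 8-bit scanline: a smooth ramp from 0 to 255.
scanline = bytes(range(256))

# Lossless: compress, decompress, and get back exactly the same values.
restored = zlib.decompress(zlib.compress(scanline))

# Lossy stand-in: throw away the low four bits of every value.
lossy = bytes((value // 16) * 16 for value in scanline)

print(restored == scanline)  # the lossless round trip preserves every value
print(lossy == scanline)     # the lossy version no longer matches the original
print(len(set(scanline)), len(set(lossy)))  # distinct tones before and after
```

Once the low bits are gone there is no way to recover them, which is why a lossy format is a poor choice for images that will be adjusted further in the composite.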

OpenEXR

OpenEXR is a high dynamic range file format created by Industrial Light & Magic specifically for computer graphics. These files can be saved as either lossless or lossy images. More impressively, OpenEXR files have the capability to store more data than just the RGBA channels of a normal image. They have the capacity to store many render layers and/or right and left eye stereo frames in one image file. This capability makes OpenEXR files very attractive to high end companies, and the format has become more and more common since its release in 2003.

Figure 4.27 Upon closer inspection, JPEG images can appear much lower resolution than TIFFs.

Bit Depth

Another render setting to consider is bit depth. Bit depth refers to the number of memory bits devoted to storing an image’s color information, usually quoted per color channel of each pixel. The greater the bit depth, the more color data the image has to handle finer gradations. Generally speaking, images are stored at 8-, 16-, or 32-bit color depths depending on the need; 32-bit images are known as floating point images because their values are stored as floating point numbers, which lets them represent a seemingly countless number of colors and store white values above 1.

Generally speaking, 8-bit images are a decent render quality, but will contain some banding and image artifacting; 16-bit images are usually free of banding and, visually speaking, have a continuing uninterrupted tone; 32-bit or floating-point images are commonly used because of their ability to store the most data and a high dynamic range. Obviously, file size will increase as bit depth goes up and images become more complex.
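Banding comes from the limited number of levels available to represent a smooth gradient. The sketch below quantizes a dark ramp at 8 and 16 bits per channel and counts how many distinct tones survive; the ramp and the helper function are hypothetical, and integer storage is assumed for both depths.

```python
def quantize(value, bits):
    """Round a 0-1 value to the nearest level an integer image of this depth can store."""
    max_level = (1 << bits) - 1
    return round(value * max_level) / max_level


# A dark gradient from 0.0 to 0.125 sampled at 1001 points.
ramp = [i / 8000 for i in range(1001)]

levels_8 = len({quantize(v, 8) for v in ramp})
levels_16 = len({quantize(v, 16) for v in ramp})

print(levels_8)   # 33 distinct tones: visible steps in a smooth gradient
print(levels_16)  # 1001 distinct tones: every sample keeps its own value
```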

Render Quality

File type and compression are not the only things that can cause artifacts in an image. Other artifacts include the common CG problems of aliasing (stair-stepping) and noise (or chatter). Depending on the renderer, render quality is a blanket term that centers on the number of samples used during the render. The quality presets are often termed something like “low, medium, and high” or “draft and production.” The lower settings are meant for work-in-progress tests while the higher settings are intended for final images. Renderers will often default to these lower settings assuming the artist will initially be testing the image in the renderer. Increasing these render quality settings can greatly increase render times since more calculations are necessary but can also greatly improve image quality.

Figure 4.28 Aliased edges and render “noise” are common problems in CG renders that must be addressed.

Image Outputting Structure

Once the image and file settings are in place, it is time to set up a structure to organize the outputting images. This is a crucial step that often gets overlooked by artists first starting out. By creating an organized structure for outputting the images, the artist always knows where the rendered images are being stored and can access them quickly and easily. This will save hours of time later when trying to find old frames or re-rendering images already created but lost in the mass.

Each artist or project has their own structure and naming convention. These naming conventions must be followed exactly, especially on collaborative projects, so all participants are on the same page. Generally speaking, these conventions include having an image folder for each shot and subfolders for each rendered layer and their corresponding z-depth render or other secondary image frames. Then, within those directories, the individual image files live with their own naming convention, usually something like the name of the render layer and the frame number. It is important to pad the frame numbers so they will always play in sequence regardless of the software being used to process the images. Many software packages and renderers allow the artist to automate these output settings through variables like “%f” or “%l” to designate the current frame or layer being rendered.
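A naming convention like the one described can be captured in a small helper. The folder layout, layer names, and four-digit padding below are assumptions chosen for illustration, not a studio standard.

```python
from pathlib import PurePosixPath


def frame_path(root, shot, layer, frame, ext="exr", padding=4):
    """Build root/shot/layer/layer.0001.ext with a zero-padded frame number."""
    filename = f"{layer}.{frame:0{padding}d}.{ext}"
    return PurePosixPath(root) / shot / layer / filename


print(frame_path("images", "shot_010", "character_beauty", 7))
# images/shot_010/character_beauty/character_beauty.0007.exr

# Why padding matters: plain text sorting puts frame 10 before frame 2.
unpadded = sorted(f"bg.{n}.exr" for n in (1, 2, 10))
padded = sorted(f"bg.{n:04d}.exr" for n in (1, 2, 10))

print(unpadded)  # ['bg.1.exr', 'bg.10.exr', 'bg.2.exr']
print(padded)    # ['bg.0001.exr', 'bg.0002.exr', 'bg.0010.exr']
```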

Figure 4.29 By creating an organized image file structure the artist can save lots of time and headaches in the long run.

Overnight Render Settings and Tricks

There are additional tricks and techniques that can be used during work-in-progress renders that can speed up one’s workflow. The obvious one is just to reduce the resolution and image size. As previously mentioned with work-in-progress lighting renders, it is common for daily renders to be around 33–50 percent the total size of the final. This generally leaves enough data to be viewed and analyzed while allowing the rendered frames to complete overnight.

The second method is intended to aid longer shots. Often, animated shots can be quite long and last for twenty to thirty seconds or several hundred frames. These shots can be driven by dialogue so the characters are in a fixed position. It is therefore possible to only render every second, fourth, eighth, or even tenth frame depending on how slow and how long the shot is. By rendering incremental frames in this way, the artist can significantly increase overnight render efficiency and overall quality of the work while still being able to analyze samples of the entire shot.
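The two tricks above combine multiplicatively. The sketch below lists the frames for an incremental render and gives a rough cost estimate, assuming render cost scales with pixel count times frame count (real renders rarely scale this cleanly). The function names are hypothetical, and always including the last frame is a choice made here for illustration.

```python
def frames_to_render(first, last, step):
    """Every step-th frame from first to last, always including the last frame."""
    frames = list(range(first, last + 1, step))
    if frames and frames[-1] != last:
        frames.append(last)
    return frames


def relative_cost(resolution_scale, frame_step):
    """Rough fraction of the full render cost for a scaled, stepped render."""
    return (resolution_scale ** 2) / frame_step


frames = frames_to_render(1, 240, 8)
print(len(frames), frames[:4], frames[-2:])  # 31 frames instead of 240

# Half resolution means a quarter of the pixels; every eighth frame divides again.
print(relative_cost(0.5, 8))  # 0.03125, about 3 percent of the full cost
```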

During production, there are also little tests the artist can complete to ensure there are no problems when a shot is sent to final render. This includes running a segment of frames (usually five to ten frames) with the final render settings to verify that the final image will look as expected. Additionally, the artist can choose to run those frames with various render settings which will determine the best look with the most efficient render times. By doing this, the artist is hopefully able to identify any problems early on so there isn’t a panic as the deadline approaches.

Final Render Settings and Tricks

The first thing that must be done when preparing the shot for final render is to enable motion blur (if it is being used in the shot) and to increase all the anti-alias and sample settings to final render quality. These settings will be tweaked based on the project requirements since often projects with very tight schedules will choose to bypass motion blur because the additional render time is too much to absorb. Once these settings are determined, the final renders are launched and the shot is done, right? Unfortunately, this is rarely the case.

Despite the fact that testing has occurred, it is almost inevitable that render artifacts will emerge. A major problem in animated projects is noise and chatter. This book has addressed this previously, but there is another way of tackling the problem other than increasing the render quality. In many cases, the best way to solve a noisy scene is to render it at a higher image size and then scale it down in postproduction. The larger render provides more samples for each final pixel, which results in less noise once the image is scaled back down. Also, if only a small segment of the screen is problematic, some renderers give the artist the option of only rendering a small section of the frame. In this case, the artist can render that section at a higher resolution, scale it down, and layer it over the original in postproduction to eliminate the chatter.
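The effect of rendering large and scaling down can be illustrated with a toy image. This sketch assumes independent random noise of the same strength on every pixel at both resolutions and a simple 2x2 box filter for the downscale; a real renderer and compositor will behave less ideally. All names and values are hypothetical.

```python
import random
import statistics


def render_noisy(width, height, rng, signal=0.5, noise=0.1):
    """Stand-in for a render: a flat gray image with random per-pixel noise."""
    return [[signal + rng.gauss(0.0, noise) for _ in range(width)]
            for _ in range(height)]


def downscale_2x(image):
    """Box-filter downscale: average each 2x2 block into one pixel."""
    return [
        [(image[y][x] + image[y][x + 1]
          + image[y + 1][x] + image[y + 1][x + 1]) / 4.0
         for x in range(0, len(image[0]), 2)]
        for y in range(0, len(image), 2)
    ]


def noise_level(image):
    """Standard deviation of all pixel values in the image."""
    return statistics.pstdev([value for row in image for value in row])


rng = random.Random(7)
native = render_noisy(64, 64, rng)       # rendered at final size
oversized = render_noisy(128, 128, rng)  # rendered at double size
scaled = downscale_2x(oversized)         # scaled back to final size

print(round(noise_level(native), 3), round(noise_level(scaled), 3))
```

Each final pixel is the average of four rendered pixels, so the noise in the scaled image comes out at roughly half that of the native render in this model.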

There are countless other adjustments to render settings that can be made on a per shot or per show basis. If the background is exceptionally noisy but the camera doesn’t move, is it possible to only render a still frame and use that for the duration of the shot? Is it a 2D camera move that can be tracked in postproduction so only one frame of the background can be rendered? Can certain objects have lower render qualities and still appear the same visually? There may be objects that are either highly motion blurred, too far from the camera, or too out of focus to see clearly so reducing their render size could help. These are the types of questions lighters must learn to ask during the final render process to make it go as smoothly and efficiently as possible.

Ultimately, each artist develops his or her own system for working through an animated project. But with some pre-planning and attention to detail, the artist can work efficiently, save precious time, and eliminate much of the stress associated with being disorganized and unknowing. Following this type of workflow will make an artist better, faster, and much happier.


Q. What is your current job/role at your company?

A. I am an Art Director at Blue Sky Studios. My background is in illustration, storyboarding, set and character design, as well as color theory. I’ve been art directing at Blue Sky for the last nine years.

Q. What inspired you to work in this industry?

A. Even as an illustrator, I was always inspired as much by film composition and lighting as I was fine art and illustration. I’ve always been fascinated by the marriage of art and technology, so when the opportunity presented itself to design on Blue Sky’s Robots I jumped at the chance. It became clear to me very quickly that this medium was a perfect fit for my sensibilities. I love the process and the collaborative nature of filmmaking (which was very refreshing after seven years of freelancing as an illustrator in solitude).

Q. What non-CG artwork inspires you?

A. Lately, I’ve been really inspired by the work of Cartoon Saloon, first by The Secret of Kells and more recently by the gorgeous and charming Song of the Sea. The graphic motifs, the designs and intricate compositions … The beautiful use of textures and shapes. It all appeals to what drew me to illustration and animation in the first place. I find a lot of inspiration in European comics, children’s books, graphic novels, and experiments in sequential art narratives such as the work of Brian Selznick or Shaun Tan. My friends and co-workers constantly inspire me with their personal projects; one of the perks of working at a studio like Blue Sky is being surrounded by such strong and varied talent. Movies have always been a great source of inspiration for me, especially the old Ridley Scott and Spielberg films from the 70s and 80s, and then animated classics like The Secret of NIMH, Sleeping Beauty and The Iron Giant.

Q. Can you give us an idea of what your process is when you are designing a color key for a sequence? In other words, how do you take a scene that is meant to be dramatic or emotional and translate that to visuals?

A. There are a lot of factors involved in planning color keys for a sequence: time of day, weather conditions in the scene, the emotional content or theme of the scene … It all starts from the story and what the scene is trying to convey. How are the characters feeling? Do they feel alone in their situation? How do we as storytellers want the audience to feel? Do we want them to feel like they’re in the scene with the characters? Or is the shot more objective, and are we trying to put some distance between the audience and the characters?

The answers to these questions (and many more) help determine whether a shot is backlit, beauty-lit, front three-quarter lit, diffusely lit to create atmosphere … Bouncing soft warm light into the face of a character can romanticize a shot. Harsh top light can make the character feel oppressed in the scene. Is the character’s intent clear, thus calling for direct lighting on the face with a clear view of their eyes? Or are they hiding something that would call for us to throw shadow across their face? Will the character read as a light shape over a dark background? Or vice versa? If the scene is emotionally intense, we may use a lot of contrast in the scene. We might use a limited value range if we’re trying to convey a specific mood.

There are a lot of possibilities for any scenario and no absolute right answers, but part of my job as art director is working closely with the directors to get a good feeling for the “pulse” of the film and making my creative choices based on that. There might be sequences in multiple films I work on that are similar to one another, but I’ll make completely different decisions based on what comes before them and what happens after.

In terms of the nuts and bolts of designing a color key, we start as simply as possible—basic figure/ground relationship between the character and background. What is the main source of light in the scene? Is there anything in the story which dictates the light direction or a light source? What direction is the key light coming from and does that direction work for all the shots in a sequence? If it’s an exterior, how does the sky light affect the scene? If it’s an interior, are there direct light sources in the environment or diffuse light sources? The set design really dictates how we will light the scene, so planning ahead is critical. If we want dappled light to set a peaceful mood for a forest scene, we need enough trees to motivate that treatment. If the scene takes place in an interior setting, we need practical light sources to help us feature our characters.

Once the basics in a color key are solid, then we can start looking at whether we need to use a rim light. Do we need any volumetrics? How much atmosphere or dust in the air is present? How will we use depth of field?

We plan color keys thinking about how all the shots flow together as an entire sequence rather than a series of shots. This forces us to think about one main idea for the lighting setup in a scene, and then if we need to deviate from that setup for a specific shot we’ll find ways to do it without it jarring the audience.

Q. Can you tell us some of the techniques you use to get the audience to focus on the character or main action of the sequence?

A. This really begins with the set design and set dressing. We design and arrange our sets so that even when the characters aren’t there, your eye wants to go to the right place. The character becomes the final piece to the puzzle of any given composition. When it feels appropriate, we create a pool of light in the scene where the characters will be staged. If this feels too manufactured, we might use the ground material to vignette the focal area of a shot instead (some well-placed moss can be very helpful in a case like this). We use set dressing elements (trees, rocks, furniture …) to help guide your eye to the right place either by balancing out a composition or by framing the subject of the shot.

Q. Is there one sequence or project that you are particularly proud of?

A. One of the most challenging and satisfying sequences I’ve worked on was the pod patch sequence in Epic. We had to transform one location from an idyllic pond to a frantic battleground, build the set and plan the lighting to work from any angle, whether on the water’s surface or up in the trees looking down, and slowly evolve the lighting from majestic dappled sunlight to stormy skies over the course of more than eight minutes of film. All this while populating the scene with hundreds if not thousands of characters who (by design) blended in with their natural surroundings. It was some of the hardest and most gratifying work on any film I’ve worked on.

Q. If you could tell yourself one piece of advice when you were first starting out in this industry, what would it be?

A. It’s funny—I never actively pursued a career in animation. An opportunity presented itself and I thought it seemed like a good idea at the time (that was fifteen years ago!). I thought I was going to be an illustrator all my life, so I’m not sure any advice would have done me a whole lot of good early on. That said, here’s something I wish I had learned earlier—it would have saved me a lot of grief:

Don’t buy into the idea that anyone has everything figured out. Making these films is an incredibly complex undertaking, and no one place has a perfect process. Always look for ways to do things better and smarter and learn to trust your fellow artists. I think this applies to both the technical and the creative aspects of making CG films.

Q. In your opinion, what are some of the things you would say make a successful lighting artist?

A. Needless to say, there’s a technical threshold that must be met by any successful lighting artist, but from my non-technical point of view, here are a few things that I’ve found to be important:

First and foremost, the ability to break a shot down into its simplest form and make it structurally sound. A lot of lighting (and traditional) artists get caught up in the details early and might create a shot or image with a lot of pretty things in it, but it will be fundamentally flawed. Once the shot works in its simplest form, then the bells and whistles will make it that much more successful.

The successful lighting artists I’ve worked with have fantastic observational skills and notice the little things that make an image convincing: the way light blooms at the edge of frame, the reflected light cast by a brushed metal surface, where to let the focus soften in a shot so it isn’t perfectly CG crisp, etc. In our films, realism isn’t necessarily the goal. I prefer the term “naturalism.” If the shot looks and feels right while conveying the emotional tone of the sequence, that can be more convincing than pure realism. This goes hand in hand with understanding visual storytelling and having a strong sense of what details help tell the story and which ones distract from it.

I also think that the most successful artists (of any collaborative medium) are good communicators and learn to interpret creative notes in ways that capture their intent and spirit, not just their literal aspects. Communication is also key in figuring out the aesthetic tastes of the person giving the notes (which can be incredibly helpful).