Creating an interlanguage of the social web

The discursive practice of markup

Untagged digital text is a linear data-type, a string of sequentially ordered characters. Such a string is regarded as unmarked text. Markup adds embedded codes or tags to text, turning something which is, from the point of view of computability, a flat and unstructured data-type, into something which is potentially computable (Buzzetti and McGann 2006).

Tagging or markup does two things. First, it does semantic work. It notates a text (or image, or sound) according to a knowledge representation of its purported external referrents. It connects a linear text with a formal conceptual scheme. Second, it does structural work. It notates a text with one particular semantics, the semantics of text itself, referring specifically to the text’s internal structures.

In the first, semantic agenda, a tag schema constitutes a controlled vocabulary describing a particular field in a formally defined and conceptually rigorous way. The semantics of each tag is defined with as little ambiguity as possible in relation to the other tags in a tag schema. Insofar as the tags relate to each other—they are indeed a language—they can be represented by means of a tag schema making structural connections (a < Person > is named by < GivenNames > and < Surname >) and counter distinctions against each other (the < City > Sydney as distinct from the < Surname > of the late eighteenth-century British Colonial Secretary after which the city was named). Schemas define tags paradigmatically.

To take Kant’s example of the willow and the linden tree, and express it the way a tagging schema might, we could mark up these words semantically as < tree > willow </tree > and < tree > linden tree </tree >. The tagging may have a presentational effect if these terms need highlighting, if they appear as keywords in a scientific text, for instance; or it may assist in search. This markup tells us some things about the structure of reality, and with its assistance we would be able to infer that a < tree > beech </tree > falls into the same category of represented meaning. Our controlled vocabulary comes from somewhere in the field of biology. In that field, a < tree > is but one instance of a < plant >. We could represent these structural connections visually by means of a taxonomy. However, < tree > is not an unmediated element of being; rather, it is a semantic category. How do we create this tag-category? How do we come to name the world in this way?

Eco (1999) provides Kant’s answer:

I see, for example, a willow and a linden tree. By comparing these objects, first of all, I note they are different from each other with regard to the trunk, branches, leaves etc.; but then, on reflecting only upon what they have in common: the trunk, branches and the leaves themselves, and by abstracting from their size, their shape, etc., I obtain the concept of a tree.

What follows is a process Kant calls ‘the legislative activity of the intellect’. From the intuition of trees, the intellect creates the concept of tree. ‘[T]o form concepts from representations it is… necessary to be able to compare, reflect, and abstract; these three logical operations of the intellect, in fact, are the essential and universal conditions for the production of any concept in general’ (quoted in Eco 1999, pp. 74–75).

Trees exist in the world. This is unexceptionable. We know they exist because we see them, we name them, we talk about them. We do not talk about trees because they are mere figment of conceptual projection, the result of a capricious act of naming. There is no doubt that there is something happening, ontologically speaking. However, we appropriate trees to thought, meaning, representation and communication through mental processes which take the raw material of sensations and from these construct abstractions in the form of concepts and systems of concepts or schemas. These concepts do not transparently represent the world; they represent how we figure the world to be.

And how do we do this figuring? When we use the concept ‘tree’ to indicate what is common to willows, linden trees and beeches, it is because our attention has been fixed on specific, salient aspects of apprehended reality—what is similar (though not the same) between the two trees, and what is different from other contiguous realities, such as the soil and the sky. But equally, we could have fixed our attention on another quality, such as the quality of shade, in which respect a tree and a built shelter share similar qualities.

Tags and tag schemas build an account of meaning through mental processes of abstraction. This is by no means an ordinary, natural or universal use of words. Vygotsky and Luria make a critical distinction between complex thinking and conceptual thinking. Complex thinking collocates things that might typically be expected to be found together: a tree, a swing, grass, flower beds, a child playing and another tree—for their circumstantial contiguity, the young child learns to call these a playground. From the point of view of consciousness and language, the world hangs together through syncretic processes of agglomeration. A playground is so named because it is this particular combination of things. The young child associates the word ‘playground’ with a concrete reference point. Conceptual thinking also uses a word, and it is often the same word as complex thinking. However, its underlying cognitive processes are different. Playground is defined functionally, and the word is used ‘as a means of actively centring attention, of abstracting certain traits, and symbolising them by the sign’ (Vygotsky 1962; also referred to in Cope and Kalantzis 1993 and Luria 1981).

Then, beyond the level of the word-concept, a syntax of abstraction is developed in which concept relates to concept. This is the basis of theoretical thinking, and the mental construction of accounts of a reality underlying what is immediately apprehended, and not even immediately visible (Cope and Kalantzis 1993). The way we construct the world mentally is not just a product of individual minds; it is mediated by the acquired structures of language with all its conceptual and theoretical baggage—the stuff of socialised worldviews and learned cultures.

Conceptual thinking represents a kind of ‘reflective consciousness’ or metaconsciousness. Markup tags are concepts in this sense and tag schemas are theories that capture the underlying or essential character of a field. When applied to the particularities of a specific piece of content, they work as a kind of abstracting metacommentary, relating the specifics of a piece of content to the generalised nature of the field. Academic, disciplinary work requires a kind of socio-semantic activity at a considerable remove from commonsense associative thinking.

Markup tags do not reflect reality in an unmediated way, as might be taken to be the case in a certain sense of the word ‘ontology’. Nor do they represent it comprehensively. Rather, they highlight focal points of attention relevant to a particular expressive domain or social language. In this sense, they represent worldviews. They are cultural artefacts. A tag does not exhaustively define the meaning function of the particular piece of content it marks up. Rather, it focuses on a domain-specific aspect of that content, as relevant to the representational or communicative purposes of a particular social language. In this sense ‘schema’ is a more useful concept than ‘ontology’, which tends to imply that our representations of reality embody unmediated truths of externalised being.

Notwithstanding these reservations, there is a pervasive underlying reality, an ontological grounding, which means that schemas will not work if they are mere figments of the imagination. Eco characterises the relationship between conceptualisation and the reality to which it refers as a kind of tension. On the one hand

being can be nothing other than what is said in many ways… every proposition regarding that which is, and that which could be, implies a choice, a perspective, a point of view… [O]ur descriptions of the world are always perspectival, bound up with the way we are biologically, ethnically, psychologically, and culturally rooted in the horizon of being (Eco 1999).

But this does not mean that anything goes. ‘We learn by experience that nature seems to manifest stable tendencies… [S]omething resistant has driven us to invent general terms (whose extension we can always review and correct)’ (Eco 1999). The world can never be simply a figment of our concept-driven imaginations. ‘Even granting that the schema is a construct, we can never assume that the segmentation of which it is the effect is completely arbitrary, because… it tries to make sense of something that is there, of forces that act externally on our sensor apparatus by exhibiting, at the least, some resistances’ (Eco 1999). Or as Latour says of the work of scientists, the semantic challenge is to balance facticity with social constructivism of disciplinary schemata (Latour 2004).

Structural markup

Of the varieties of textual semantic markup, one is peculiarly self-referential, the markup of textual structure, or schemas which represent the architectonics of text. A number of digital tagging schemas have emerged, which provide a functional account of the processes of containing, describing, managing and transacting text. They give a functional account of the world of textual content. Each tagging schema has its own functional purpose. A number of these tagging schemas have been created for the purpose of describing the structure of text, and to facilitate its rendering to alternative formats. These schemas are mostly derivatives of SGML, HTML and XHTML, and are designed primarily for rendering transformations through web browsers. Created originally for technical documentation, DocBook structures book text for digital and print renderings. The Text Encoding Initiative is ‘an international and interdisciplinary standard that helps libraries, museums, publishers, and individual scholars represent all kinds of literary and linguistic texts for online research and teaching’ (http://www.tei-c.org/).

Although the primary purpose of each schema may be a particular form of rendering, this belies the rigorous separation of semantics and structure from presentation. Alternative stylesheet transformations could be applied to render the marked up text in a variety of ways on a variety of rendering devices.

These tagging schemas do almost everything conceivable in the world of the written word. They can describe text comprehensively, and they support the manufacture of variable renderings of text on the fly by means of stylesheet transformations. The typesetting and content capture schemas provide a systematic account of structure in written text, and through stylesheet transformations they can render text to paper, to electronic screens of all sizes and formats, or to synthesised audio.

Underlying this is a fundamental shift in the processes of text work, described in Chapter 4, ‘What does the digital do to knowledge making?’. A change of emphasis occurs in the business of signing—broadly conceived as the design of meaning—from configuring meaning form (the specifics of the audible forms of speaking and the visual form of written text) to ‘marking up’ for meaning function in such a way that alternative meaning forms, such as variable visual (written) and audio forms of language, can be rendered by means of automated processes from a common digital source.

In any digital markup framework that separates the structure from presentation, the elementary unit of meaning function is marked by the tag, specifying the meaning function for the most basic ‘chunk’ of represented content. Tags, in other words, describe the meaning function of a unit of content. For instance, a word or phrase may be tagged as < Emphasis >, < KeywordTerm > or < OtherLanguageTerm >. These tags describe the peculiar meaning function of a piece of content. In this sense, a system of tags works like a functional grammar; it marks up key features of the information architecture of a text. Tags delineate critical aspects of meaning function, and they do this explicitly by means of a relatively consistent and semantically unambiguous metalanguage. This metalanguage acts as a kind of running commentary on meaning functions which are otherwise embedded, implicit or to be inferred from context.

Meaning form follows mechanically from the delineation of meaning function, and this occurs in a separate stylesheet transformation space. Depending on the stylesheet, for instance, each of the three functional tags < Emphasis >, < KeywordTerm > and < OtherLanguageTerm > may be rendered to screen or print either as boldface or italics, or as an audible intonation in the case of rendering as synthesised voice.

Given the pervasiveness of structural markup, one might expect that an era of rapid and flexible transmission of content would quickly dawn. But this has not occurred, or at least not yet, and for two reasons. The first is the fact that, although almost all content created over the past quarter of a century has been digitised, the formats are varied and incompatible. Digital content is everywhere, but most of it has been created, and continues to be created, using typographically oriented markup frameworks. These frameworks are embedded in software packages that provide tools for working with text which mimic the various trades of the Gutenberg universe: an author may use Word; a desktop publisher or latter-day typesetter may use inDesign; and a printer will use a PDF file as if it were a virtual forme or plate. The result is sticky file flow and intrinsic difficulties in version control and digital repository maintenance. How and where is a small correction made to a book that has already been published? Everything about this relatively simple problem, as it transpires, remains complex, slow and expensive. However, in a fully comprehensive, integrated file flow, things that are slow and expensive today should become easier and cheaper—a small change by an author to the source text could be approved by a publisher so that the very next copy of that work could include that change.

To return to the foundational question of the changed means of production of meaning in semantic and structural text-work environment, we want to extend the distinction of ‘meaning form’ from ‘meaning function’. Signs are the elementary components of meaning. And ‘signs’, say Kress and Leeuwen, are ‘motivated conjunctions of signifiers (forms) and signifieds (meanings)’ (Kress and van Leeuwen 1996). Rephrasing, we would call motivated meanings, the products of the impulse to represent the world and communicate those representations, ‘meaning functions’. The business of signing, motivated as it is by representation (meaning interpreted oneself) and communication (meaning interpreted by others), entails an amalgam of function (a reason to mean) and form (the use of representational resources which might adequately convey that meaning).

The meaning function may be a flower in a garden on which we have fixed our focus for a moment through our faculties of perception and imagination. For that moment, this particular flower captures our attention and its features stand out from its surroundings. The meaning function is our motivation to represent this meaning and to communicate about it. How we represent this meaning function is a matter of meaning form. The meaning form we choose might be iconic—we could draw a sketch of the flower, and in this case, the act of signing (form meets function) is realised through a process of visual resemblance. Meaning form—the drawing of the flower—looks like meaning function, or what we mean to represent: the flower. Or the relation between meaning form and function may be, as is the case for language, arbitrary. The word ‘flower’, a symbolic form, has no intrinsic connection with the meaning function it represents. In writing or in speech the word ‘flower’ conventionally represents this particular meaning function in English. We can represent the object to ourselves using this word in a way which fits with a whole cultural domain of experience (encounters with other flowers in our life and our lifetime’s experience of speaking about and hearing about flowers). On the basis of this conventional understanding of meaning function, we can communicate our experience of this flower or any aspect of its flower-ness to other English speakers.

This, in essence, is the stuff of signing, the focal interest of the discipline of semiotics. It is an ordinary, everyday business, and the fundamental ends do not change when employing new technological means. It is the stuff of our human natures. The way we mean is one of the distinctive things that makes us human.

One of the key features of the digital revolution is the change in the mechanics of conjoining meaning functions with meaning forms in structural and semantic markup. We are referring here to a series of interconnected changes in the means of production of signs. Our perspective is that of a functional linguistics for digital text. Traditional grammatical accounts of language trace the limitlessly complex structures and patterns of language in the form of its immediately manifest signs. Only after the structure of forms has been established is the question posed, ‘what do these forms mean?’. In contrast, functional linguistics turns the question of meaning around the other way: ‘how are meanings expressed?’. Language is conceived as a system of meanings; its role is to realise or express these meanings. It is not an end in itself; it is a means to an end (Halliday 1994). Meaning function underlies meaning form. An account of meaning form must be based on a functional interpretation of the structures of meaning. Meaning form of a linguistic variety comprises words and their syntactical arrangement, as well as the expressive or presentational processes of phonology (sounding out words or speaking) and graphology (writing). Meaning form needs to be accounted for in terms of meaning function.

Structural and semantic markup adds a second layer of meaning to the process of representation in the form of a kind of meta-semantic gloss. This is of particular value in the deployment of specialised disciplinary discourses. Such discourses rely on a high level of semantic specificity. The more immersed you are in that particular discourse—the more critical it is to your livelihood or identity in the world, for instance—the more important these subtle distinctions of meaning are likely to be. Communities of practice identify themselves by the rigorous singularity of purpose and intent within their particular domain of practice, and this is reflected in the relative lack of terminological ambiguity within the discourse of disciplinary practice of that domain. In these circumstances semantic differences between two social languages in substantially overlapping domains is likely to be absolutely critical. This is why careful schema mapping and alignment is such an important task in the era of semantic and structural markup.

Metamarkup: developing markup frameworks

We now want to propose in general terms an alternative framework to the formalised semantic web, a framework that we call an instance of ‘semantic publishing’. The computability of the web today is little better than the statistical frequency analyses of character clusters that drive search algorithms, or the flat and featureless world of folksonomies and conceptual popularity rankings in tag clouds. Semantic publishing is a counterpoint to these simplistic modes of textual computability. We also want to advocate a role for conceptualisation and theorisation in textual computability, against an empiricism which assumes that the right algorithms are all we need to negotiate the ‘data deluge’—at which point, it is naively thought, all we need to do is calculate and the world will speak for itself (Anderson 2009). In practical terms, we have been working through and experimenting with this framework in the nascent CGMeaning online schema making and schema matching environment.

Foundations to the alternative framework to the formalised semantic web

The framework has five foundations.

Foundation 1 Schema making should be a site of social dialectic, not ontological legislation

Computable schemas today are made by small groups of often nameless experts, and for that are inflexibly resistant to extensibility and slow to change. The very use of the word ‘ontology’ gives an unwarranted aura of objectivity to something that is essentially a creature of human-semantic configuration.

Schemas, however, are specific constructions of reality within the frame of reference of highly particularised social languages that serve disciplinary, professional or other specialised purposes. Their reality is a social reality. They are no more and no less than a ‘take’ on reality, which reflects and represents a particular set of human interests. These interests are fundamentally to get things done—funnels of commitment, to use Scollon’s words (Scollon 2001)—rather than mere reflections of inert, objectified ‘being’. Schemas, in other words, are ill served by the immutable certainty implied by the word ‘ontology’. Reality does not present itself through ontologies in an unmediated way. Tagging schemas are better understood to be mediated relationships of meaning rather than static pictures of reality.

However, the socio-technical environments of their construction today do not support the social dialectic among practitioners that would allow schemas to be dynamic and extensible. CGMeaning attempts to add this dimension. Users can create or import schemata—XML tags, classification schemes, database structures—and establish a dialogue between a ‘curator’ who creates or imports a schema ready for extension or further schema alignment—and the community of users which may need additional tags, finer or distinctions or new definitions of tag content, and clarifications of situations of use by exemplification. In other words, rather than top-down imposition as if any schema ever deserved the objectifying aura of ‘ontology’, and rather than the fractured failure to discuss and refine meanings of ‘folksonomies’, we need the social dialectic of curator-community dialogue about always provisional, always extensible schemata. This moves the question of semantics from the anonymous hands of experts into the agora of collective intelligence.

Foundation 2 From a one-layered linear string, to a double-layered string with meta-semantic gloss

Schemas should not only be sites of social dialectic about tag meanings and tag relations. Semantic markup practices establish a dialectic between the text and its markup. These practices require that authors mark up or make explicit meanings in their texts. Readers may also be invited to mark up a text in a process of computable social-notetaking. A specialised text may already be some steps removed from vernacular language. However, markup against a schema may take these meanings to an even more finely differentiated level of semantic specificity. The text-schema dialectic might work like this: an instance mentioned in the text may be evidence of a concept defined in a schema, and the act of markup may prompt the author or reader to enter into critical dialogue in their disciplinary community about the meanings as currently expressed in the tags and tag relations. The second layer, in other words, does not necessarily and always formally fix meanings, or force them to be definitive. Equally, at times, it might open the schema to further argumentation and clarification, a process Brandom calls ‘giving and asking for reasons’ (Brandom 1994). ‘Such systems develop and sustain themselves by marking their own operations self-reflexively; [they] facilitate the self-reflexive operations of human communicative action’ (Buzzetti and McGann 2006).

In other words, we need to move away from the inert objectivity of imposed ontologies, towards a dialogue between text and concept, reader and author, specific instance and conceptual generality. Markup can stabilise meaning, bring texts into conformance with disciplinary canons. Equally, in the dialogical markup environment we propose here, it can prompt discussions about concepts and their relations which have dynamically incremental or paradigm-shifting consequences on the schema.

In this process, we will also move beyond the rigidities of XML, in which text is conceived as an ‘ordered hierarchy of content objects’, like a nested set of discrete Chinese boxes (Renear, Mylonas and Durand 1996). Meaning and text, contrary to this representational architecture, ‘are riven with overlapping and recursive structures of various kinds just as they always engage, simultaneously, hierarchical as well as non-hierarchical formations’ (Buzzetti and McGann 2006). Our solution in CGMeaning is to allow authors and readers to ‘paint’ overlapping stretches of text with their semantic tags.

Foundation 3 Making meanings explicit

Markup schemas—taxonomies as well as folksonomies—rarely have readily accessible definitions of tag meanings. In natural language, meanings are given and assumed. However, in the rather unnatural language of scholarly technicality, meanings need to be described with higher degree of precision, to novices and also for expert practitioners at points of conceptual hiatus or questionable applicability. For this reason, CGMeaning provides and infrastructure for dictionary-formation, but with some peculiar ground rules.

Dictionaries of natural language capture the range of uses, nuances, ambiguities and metaphorical slippages. They describe language as a found object, distancing themselves from any normative judgement about use (Jackson 2002). However, even for their agnosticism about the range of situations of use, natural language dictionaries are of limited value given discourse and context-specific range of possible uses. Fairclough points out that ‘it is of limited value to think of a language as having a vocabulary which is documented in “the” dictionary, because there are a great many overlapping and competing vocabularies corresponding to different domains, institutions, practices, values and perspectives’ (Fairclough 1992). Gee calls these domain-specific discourses ‘social languages’ (Gee 1996). The conventional dictionary solution to the problem of ambiguity is to list the major alternative meanings of a word, although this can only reflect gross semantic variation. No dictionary could ever capture comprehensively the never-ending subtleties and nuances ascribed differentially to a word in divergent social languages.

The dictionary infrastructure in CGMeaning is designed so there is only one meaning per concept/tag, and this is on the basis of a point of salient conceptual distinction that is foundational to the logic of the specific social language and the schema that supports it, for instance: ‘Species are groups of biological individuals that have evolved from a common ancestral group and which, over time, have separated from other groups that have also evolved from this ancestor.’ Definitions in this dictionary space shunt between natural language and unnatural language, between (in Vygotsky’s psychological terms) complex association and the cognitive work of conceptualisation. In the unnatural language of disciplinary use, semantic saliences turn on points of generalisable principle. This is how disciplinary work is able at times to uncover the not-obvious, the surprising, the counter-intuitive. This is when unnatural language posits singularly clear definitions that are of strategic use, working with abstractions that are powerfully transferable from one context of application to another, or in order to provide a more efficient pedagogical alternative for novices than the impossible expectation of having to reinvent the world by retracing the steps of its every empirical discovery. Schemata represent the elementary stuff of theories and paradigms, the congealed sedimentations of collective intelligence.

Moreover, unlike a natural language dictionary, a dictionary for semantic publishing defines concepts—which may be represented by a word or a phrase—and not words. Concepts are not necessarily nouns or verbs. In fact, in many cases concepts can be conceived as either states or processes, hence the frequently easy transliteration of nouns into verbs and the proliferation in natural language of non-verb hybrids such as gerunds and particles. For consistency’s sake and to reinforce the idea of ‘concept’, we would use the term ‘running’ instead of ‘run’ as the relevant tag in a hypothetical sports schema. In fact, the process of incorporating actions into nouns, or ‘nominalisation’, is one of the distinctive discursive moves of academic disciplines and typical generally of specialised social languages (Martin and Halliday 1993). As Martin points out, ‘one of the main functions of nominalisation is in fact to build up technical taxonomies of processes in specialised fields. Once technicalised, these nominalisations are interpretable as things’ (Martin 1992). This, incidentally, is also a reason why we would avoid the belaboured intricacies of RDF (Resource Description Framework), which attempts to build sentence-like propositions in the subject - > predicate - > object format.

Moreover, this kind of dictionary accommodates both ‘lumpers’ (people who would want to aggregate by more general saliences) and ‘splitters’ (people who would be more inclined to make finer conceptual distinctions)—to employ terms used to characterise alternative styles of thinking in biological taxonomy. Working with concepts allows for the addition of phrases which can do both of these things, and to connect them, in so doing adding depth to the conceptual dialogue and delicacy to its semantics.

Another major difference is that this kind of dictionary is not alphabetically ordered (for which, in any event, in the age of digital search, there is no longer any need). Rather, it is arranged in what we call ‘supermarket order’. In this way, things can be associated according to the rough rule of ‘this should be somewhere near this’, with the schema allowing many other formal associations in addition to the best-association shelving work of a curator. There is no way you can know the precise location of the specific things you need purchase among the 110,000 different products in a Wal-Mart store, but mostly you can find them without help by a process of rough association. More rigorous connections will almost invariably be multiple and cross-cutting, and with varying contiguities according to the interest of the author or reader or the logic of the context. However, an intuitive collocation can be selected for the purpose of synergistic search.

Furthermore, this kind of dictionary does a rigorous job of defining concepts whose order of abstraction is higher (on what principle of salience is this concept a kind of or a part of a superordinate concept?), defining by distinction (what differentiates sibling concepts?) and exemplifying down (on what principles are subsidiary concepts instances of this concept?).

A definition may also describe essential, occasional or excluded properties. It may describe these using a controlled vocabulary of quantitative properties (integers, more than, less than, nth, equal to, all/some/none of, units of measurement etc.) and qualitative properties (colours, values). A definition may also shunt between natural language and more semantically specified schematic language by making semantic distinctions between a technical term and its commonsense equivalent in natural language.

Finally a definition may include a listing of some or all exemplary instances. In fact, the instances become part of the dictionary as well, thus (and once more, unlike a natural language dictionary) including any or every possible proper noun. This is a point at which schemata build transitions between the theoretical (conceptual) and the empirical (instances).

Foundation 4 Positing relations

A simple taxonomy specifies parent, child and sibling relations. However, it does this without the level of specificity required to support powerful semantics. For example, in parent–child relations we would want to distinguish hyponymy ‘a kind of’ from meronymy ‘a part of’. In addition, a variety of cross-cutting and intersecting relations can be created, including relations representing a range of semantic primitives such as ‘also called’, ‘opposite of’, ‘is not’, ‘is like but [in specified respects] not the same as’, ‘possesses’, ‘causes’, ‘is an example of’, ‘is associated with’, ‘is found in/at [time or place]’, ‘is a [specify quality] relation’ and ‘is by [specify type of] comparison’. Some of these relations may be computable using first order logic or description logics (Sowa 2000, 2006), others not.

Foundation 5 Social schema alignment

Schemata with different foci sometimes have varying degrees of semantic overlap. They sometimes talk, in other words, about the same things, albeit with varied perspectives and serving divergent interests. CGMeaning builds a social space for schema alignment, using an ‘interlanguage’ mechanism. Although initially developed in the case of one particular instantiation of problem of interoperability—for the electronic standards that apply to publishing (Common Ground Publishing 2003)—the core technology is applicable to the more general problem of interoperability characterised by the semantic publishing.

Developing an interlanguage mechanism

By filtering schemata through the ‘interlanguage’ mechanism, a system is created that allows conversation and information interchange between disjoint schemas. In this way, it is possible to create functionalities for data framed within the paradigm of one schema which extend well beyond those originally conceived by that schema. This may facilitate interoperability between schemas, allowing data originally designed for use in one schema for a particular set of purposes to be used in another schema for a different set of purposes.

The interlanguage mechanism means that metadata newly created through its apparatus to be interpolated into any number of metadata schemas. It also provides a method by means of which data harvested in one metadata schema can be imported into another. From a functional point of view, some of this process can be fully automated, and some the subject of automated queries requiring a human-user response.

The interlanguage mechanism, in sum, is designed to function in two ways:

![]() for new data, a filter apparatus provides full automation of interoperability on the basis of the semantic and syntactical rules

for new data, a filter apparatus provides full automation of interoperability on the basis of the semantic and syntactical rules

![]() for data already residing in an schema, data automatically passes through a filter apparatus using the interlanguage mechanism, and passes on into other schemas or ontologies even though the data had not originally been designed for the destination schema.

for data already residing in an schema, data automatically passes through a filter apparatus using the interlanguage mechanism, and passes on into other schemas or ontologies even though the data had not originally been designed for the destination schema.

The filter apparatus is driven by a set of semantic and syntactical rules as outlined below, and throws up queries whenever an automated translation of data is not possible in terms of those semantic rules.

The interlanguage apparatus is designed to be able to read tags, and thus interpret the data which has been marked up by these tags, according to two overarching mechanisms, and a number of submechanisms. The two overarching mechanisms are the superordination mechanism and the composition mechanism—drawing in part here on some distinctions made in systemic-functional linguistics (Martin 1992).

The superordination mechanism constructs tag-to-tag ‘is a…’ relationships. Within the superordination mechanism, there are the submechanisms of hyponymy (‘includes in its class…’), hyperonymy (‘is a class of…’), co-hyperonoymy (‘is the same as…’), antinomy (‘is the converse of…’) and series (‘is related by gradable opposition to…’).

The composition mechanism constructs tag-to-tag ‘has a.’ relationships. Within the composition mechanism, there are the submechanisms of meronymy (‘is a part of…’), co-meronymy (‘is integrally related to but exclusive of…’), consistency (‘is made of…’), collectivity (‘consists of…’).

These mechanisms can be fully automated in the case of new data formation within any schema, in which case, deprecation of some aspects of an interoperable schema may be required as a matter of course at the point of data entry. In the case of legacy data generated in schemas without anticipation of, or application of, the interlanguage mechanism, data can be imported in a partially automated way. In this case, tag-by-tag or field-by-field queries are automatically generated according to the filter mechanisms of:

![]() taxonomic distance (testing whether the relationships of composition and superordination are too distant to be necessarily valid)

taxonomic distance (testing whether the relationships of composition and superordination are too distant to be necessarily valid)

![]() levels of delicacy (testing whether an aggregated data element needs to be disaggregated and re-tagged)

levels of delicacy (testing whether an aggregated data element needs to be disaggregated and re-tagged)

![]() potential semantic incursion (identifying sites of ambiguity)

potential semantic incursion (identifying sites of ambiguity)

![]() the translation of silent into active tags or vice versa (at what level in the hierarchy of composition or superordination data needs to be entered to effect superordinate transformations).

the translation of silent into active tags or vice versa (at what level in the hierarchy of composition or superordination data needs to be entered to effect superordinate transformations).

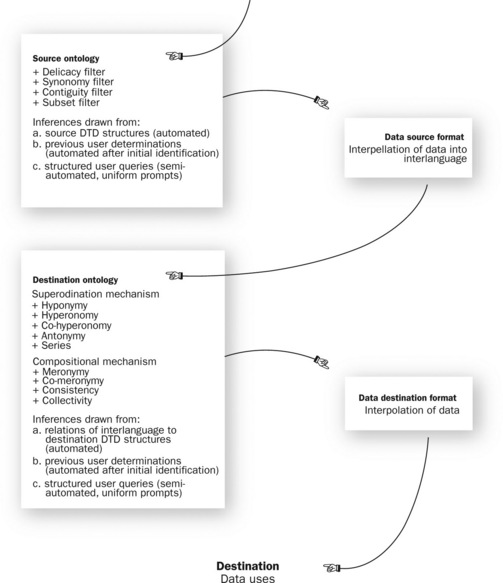

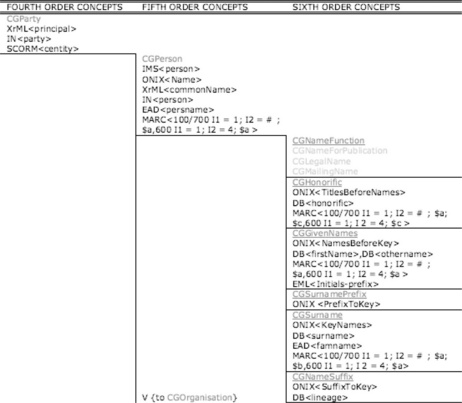

The interlanguage mechanism (Figure 13.1) is located in CGMeaning, a schema-building and alignment tool. This software defines and determines:

![]() database structures for storage of metadata and data

database structures for storage of metadata and data

![]() synonyms across the tagging schemas for each schema being mapped

synonyms across the tagging schemas for each schema being mapped

![]() two definitional layers for every tag: underlying semantics and application-specific semantics; in this regard, CGMeaning creates the space for application-specific paraphrases can be created for different user environments; the underlying semantics necessarily generates abstract dictionary definitions which are inherently not user-friendly; however, in an application, each concept-tag needs to be described and defined in ways that are intelligible within that domain; it is these application-specific paraphrases that render to the application interface in the first instance

two definitional layers for every tag: underlying semantics and application-specific semantics; in this regard, CGMeaning creates the space for application-specific paraphrases can be created for different user environments; the underlying semantics necessarily generates abstract dictionary definitions which are inherently not user-friendly; however, in an application, each concept-tag needs to be described and defined in ways that are intelligible within that domain; it is these application-specific paraphrases that render to the application interface in the first instance

![]() export options into an extensible range of electronic standards expressed as XML schemas

export options into an extensible range of electronic standards expressed as XML schemas

![]() CGMeaning, which manages the superordination and compositional mechanisms described above, as well as providing an interface for domain-specific applications in which interoperability is required.

CGMeaning, which manages the superordination and compositional mechanisms described above, as well as providing an interface for domain-specific applications in which interoperability is required.

Following are some examples of how this mechanism may function. In one scenario, new data might be constructed according to a source schema which has already become ‘aware’ by means of previous applications of the interlanguage mechanism as a consequence of the application of the mechanism. In this case, the mechanism commences with the automatic interpellation of data, as the work of reading and querying the source schema has already been performed. In these circumstances, the source schema in which the new data is constructed becomes a mere facade for the interlanguage, taking the form of a user interface behind which the processes of subordination and composition occur.

In another scenario, a quantum of legacy source data is provided, marked up according to the schematic structure of a particular source schema. The interlanguage mechanism then reads the structure and semantics immanent in the data, interpreting this from schema and the way the schema is realised in that particular instance. It applies four filters: a delicacy filter, a synonymy filter, a contiguity filter and a subset filter. The apparatus is able to read into the schema and its particular instantiation an inherent taxonomic or schematic structure. Some of this is automated, as the relationships of tags is unambiguous based on the readable structure of the schema and evidence drawn from its instantiation in a concrete piece of data. The mechanism is also capable of ‘knowing’ the points at which it is possible there might be ambiguity, and in this case throws up a structured query to the user. Each human response to a structured query becomes part of the memory of the mechanism, with implications drawn from the user response and retained for later moments when interoperability is required by this or another user. On this basis, the mechanism interpellates the source data into the interlanguage format, while at the same time automatically ‘growing’ the interlanguage itself based on knowledge acquired in the reading of the source data and source schema.

Having migrated into the interlanguage format, the data is then reworked into the format of the destination schema. It is rebuilt and validated according to the mechanisms of superordination (hyponymy, hyperonymy, co-hyperonomy, antonymy and series) and composition (meronymy, co-meronymy, consistency, collectivity). A part of this process is automated, according to the inherent structures readable into the destination schema, or previous human readings that have become part of the accumulated memory of the interlanguage mechanism. Where the automation of the rebuilding process cannot be undertaken by the apparatus with assurance of validity (when a relation is not inherent to the destination schema, nor can it be inferred from accumulated memory in which this ambiguity was queried previously), a structured query is once again put to the user, whose response in turn becomes a part of the memory of the apparatus, for future use. On this basis, the data in question is interpolated into its destination format. From this point, it can be used in its destination context or schema environment, notwithstanding the fact that the data had not been originally formatted for use in that environment.

Key operational features of this mechanism include:

![]() the capacity to absorb effectively and easily deploy new schemas which refer to domains of knowledge, information and data that substantially overlap (vertical ontology-over-ontology integration); the mechanism is capable of doing this without the exponential growth in the scale of the task characteristic of the existing ‘crosswalk’ method

the capacity to absorb effectively and easily deploy new schemas which refer to domains of knowledge, information and data that substantially overlap (vertical ontology-over-ontology integration); the mechanism is capable of doing this without the exponential growth in the scale of the task characteristic of the existing ‘crosswalk’ method

![]() the capacity to absorb schemas representing new domains that do not overlap with the existing range of domains and ontologies representing these domains (horizontal ontology-beside-ontology integration)

the capacity to absorb schemas representing new domains that do not overlap with the existing range of domains and ontologies representing these domains (horizontal ontology-beside-ontology integration)

![]() the capacity to extend indefinitely into finely differentiated subdomains within the existing range of domains connected by the interlanguage, but not yet this finely differentiated (vertical ontology-within-ontology integration).

the capacity to extend indefinitely into finely differentiated subdomains within the existing range of domains connected by the interlanguage, but not yet this finely differentiated (vertical ontology-within-ontology integration).

In the most challenging of cases—in which the raw digital material is created in a legacy schema, and in which that schema is not already known to the interlanguage from previous interactions—the mechanism:

![]() interprets structure and semantics from the source schema and its instantiation in the case of the particular quantum of source data, using the filter mechanisms described above

interprets structure and semantics from the source schema and its instantiation in the case of the particular quantum of source data, using the filter mechanisms described above

![]() draws inferences in relation to the new schema and the particular quantum of data, applying these automatically and presenting structured queries in cases where the apparatus and its filter mechanism ‘knows’ that supplementary human interpretation is required

draws inferences in relation to the new schema and the particular quantum of data, applying these automatically and presenting structured queries in cases where the apparatus and its filter mechanism ‘knows’ that supplementary human interpretation is required

![]() stores any automated or human-supplied interpretations for future use, thus building knowledge and functional useability of this schema into the interlanguage.

stores any automated or human-supplied interpretations for future use, thus building knowledge and functional useability of this schema into the interlanguage.

These inferences then become visible to subsequent users, and capable of amendment by users, through the CGMeaning interface, which:

![]() interpellates the data into the interlanguage format

interpellates the data into the interlanguage format

![]() creates a crosswalk from new schema into a designated destination schema, for instance a new format for structuring or rendering text, using the superordination and composition mechanisms; these are automated in cases where the structure and semantics of the destination schema are self-evident, or they are the subject of structured queries where they are not, or they are drawn from the CGMeaning repository in instances where the same query has been answered by an earlier user

creates a crosswalk from new schema into a designated destination schema, for instance a new format for structuring or rendering text, using the superordination and composition mechanisms; these are automated in cases where the structure and semantics of the destination schema are self-evident, or they are the subject of structured queries where they are not, or they are drawn from the CGMeaning repository in instances where the same query has been answered by an earlier user

To give another example, the source schema is already known to the interlanguage, by virtue of automated validations based not only on the inherent structure of the schema, but also many validations against a range of data instantiations of that schema, and numerous user clarifications of queries. In this case, by entering data in an interface that ‘knowingly’ relates to an interlanguage which has been created using the mechanisms provided here, there is no need for the filter mechanisms nor the interpolation processes that are necessary in the case of legacy data and unknown source schemas; rather, data is entered directly into the interlanguage format, albeit through the user interface ‘facade’ of the source schema. The apparatus then interpolates the data onto the designated destination schema.

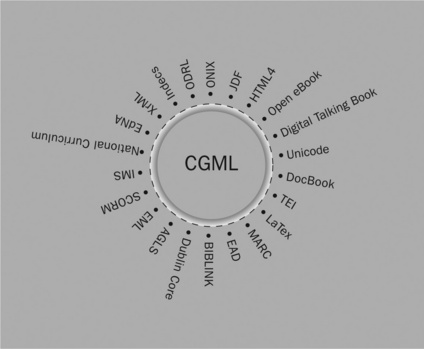

Schema alignment for semantic publishing: the example of Common Ground Markup Language

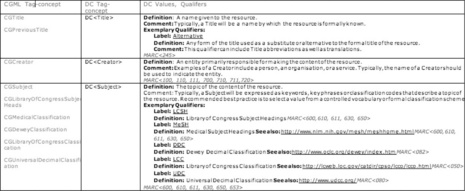

Common Ground Markup Language (CGML) is a schema for marking up and storing text as structured data, created in the tag definition and schema alignment environment, CGMeaning. The storage medium can be XML files, or it can be a database in which fields are named by tags, and from which exports produce XML files marked up for structure and semantics, ready for rendering through available stylesheet transformations. The result is text that is more easily located by virtue of the clarity and detail of metadata markup, and capable of a range of alternative renderings. CGML structures and stores data on the basis of a functional account of text, not just as an object but as a process of collaborative construction. The focal point of CGML is a functional grammar of text, as well as a kind of grammar (in the metaphorical sense of generalised reflection) of the social context of text work. However, with CGML, ‘functional’ takes on a peculiarly active meaning. The markup manufactures the text in the moment of rendering, through the medium of stylesheet transformation in one or several rendering processes or media spaces.

In CGML, as is the case for any digital markup framework that separates structure and semantics from presentation, the elementary unit of meaning function is marked by the tag. The tag specifies the meaning function for the most basic ‘chunk’ of represented content. Tags, in other words, describe the meaning function of a unit of content. For instance, a word or phrase may be tagged as < Emphasis >, < KeywordTerm > or < OtherLanguageTerm >. These describe the peculiar meaning function of a piece of content. In this sense, a system of tags works like a partial functional grammar: they mark up key features of the information architecture of a text. Tags delineate critical aspects of meaning function, and they do this explicitly by means of a relatively consistent and semantically unambiguous metalanguage. This metalanguage acts as a kind of running commentary on meaning functions, which are otherwise embedded, implicit or to be inferred from context.

Meaning form follows mechanically from the delineation of meaning function, and this occurs in a separate stylesheet transformation space. Depending on the stylesheet, each of the three functional tags < Emphasis >, < KeywordTerm > and < OtherLanguageTerm > may be rendered to screen or print either as boldface or italics, or as a particular intonation in the case of rendering as synthesised voice. Stylesheets, incidentally, are the exception to the XML rule strictly to avoid matters of presentation; meaning form is their exclusive interest.

CGML, in other words, is a functional schema for authorship and publishing. CGML attempts to align the schemas we will describe shortly, incorporating their varied functions. CGML is an interlanguage. Its concepts constitute a paradigm for representational work, drawing on a historically familiar semantics, but adapting this to the possibilities of the internet. Its key devices are thesaurus (mapping against functional schemas) and dictionary (specifying a common ground semantics). These are the semantic components for narrative structures of text creation, or the retrospective stories that can be told of the way in which, for instance, authors, publishers, referees, reviewers, editors and the like construct and validate text. The purpose of this work is both highly pragmatic (such as a description of an attempt to create a kind of functional grammar of the book) and highly theoretical (a theory of meaning function capable of assisting in the partially automated construction and publication of variable meaning forms).

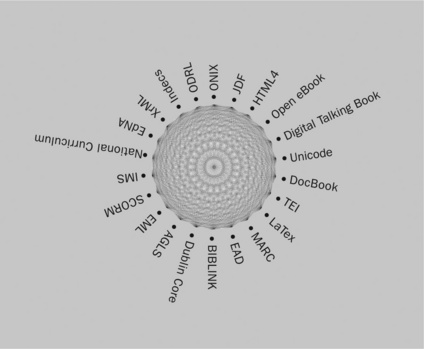

In the era of digital media, the social language of textuality is expressed in a number of schemas. It is increasingly the case that these schemas perform a wide ranging, fundamental and integrated set of functions. They contain the content—the electronic files that provide structural and semantic shape for the data which will be rendered as a book. They describe the content—for the purposes of data transfer, warehousing and retrieval. They manage the content—providing a place where job process instructions and production data are stored. And they transact the content.

A number of digital tagging schemas have emerged which provide a functional account of these processes of containing, describing, managing and transacting text. More broadly, they provide a functional account of the world of textual content in general. Each tagging schema has its own functional purpose, or ‘funnel of commitment’, to use Scollon’s terminology. We will briefly describe a few of these below, categorising them into domains of professional and craft interest: typesetting and content capture, electronic rendering, print rendering, resource discovery, cataloguing, educational resource creation, e-commerce and digital rights management. The ones we describe are those we have mapped into CGML.

Typesetting and content capture

Unicode (http://www.unicode.org) appears destined to become the new universal character encoding standard, covering all major language and scripts (Unicode 2010), and replacing the American Standard Code for Information Interchange (ASCII), which was based solely on Roman script.

A number of tagging schemas have been created for the purpose of describing the structure of text, and to facilitate its rendering to alternative formats. These schemas are mostly derivatives of SGML. HTML (W3C 2010a) and XHTML (W3C 2010b) are designed primarily for rendering transformations through web browsers. The OASIS/UNESCO sanctioned DocBook standard is for structuring book text, which can subsequently be rendered electronically or to print (DocBook Technical Committee 2010). The Text Encoding Initiative is ‘an international and interdisciplinary standard that helps libraries, museums, publishers and individual scholars represent all kinds of literary and linguistic texts for online research and teaching’ (http://www.tei-c.org).

Although the primary purpose of each schema may be a particular form of rendering, this belies the rigorous separation of semantics and structure from presentation. Alternative stylesheet transformations could be applied to render the marked up text in a variety of ways. Using different stylesheets, it is possible, for instance, to render DocBook either as typesetting for print or as HTML.

Electronic rendering

Electronic rendering can occur in a variety of ways—as print facsimiles in the form of Portable Document Format (PDF), or as HTML readable by means of a web browser. Other channel alternatives present themselves as variants or derivatives of HTML: the Open eBook Standard for handheld electronic reading devices (International Trade Standards Organization for the eBook Industry 2003) and Digital Talking Book (ANSI/NISO 2002), facilitating the automated transition of textual material into audio form for the visually impaired or the convenience of listening to a text rather than reading it.

Print rendering

The Job Definition Format (JDF) appears destined to become universal across the printing industry (http://www.cip4.org/). Specifically for variable print, Personalised Print Markup Language (PPML) has also emerged (PODi 2003).

Created by a cross-industry international body, the Association for International Cooperation for the Integration of Processes in Pre-Press, Press and Post-Press, the JDF standard has been embraced and supported by all major supply-side industry participants (equipment and business systems suppliers). It means that one electronic file contains all data related to a particular job. It is free (in the sense that there is no charge for the use of the format) and open (in the sense that its tags are transparently presented in natural language; it is unencrypted, its coding can be exposed and it can be freely modified, adapted and extended by innovators—in sharp distinction to proprietary software).

The JDF functions as a digital addendum to offset print, and as the driver of digital print. Interoperability of JDF with other standards will mean, for instance, that a book order triggered through an online bookstore (the ONIX space, as described below) could generate a JDF wrapper around a content file as an automated instruction to print and dispatch a single copy.

The JDF serves the following functions:

![]() Pre-press—Full job specification, integrating pre-press, press and post-press (e.g. binding) elements, in such a way that these harmonise (the imposition matches the binding requirements, for example). This data is electronically ‘tagged’ to the file itself, and in this sense it actually ‘makes’ the ‘printing plate’.

Pre-press—Full job specification, integrating pre-press, press and post-press (e.g. binding) elements, in such a way that these harmonise (the imposition matches the binding requirements, for example). This data is electronically ‘tagged’ to the file itself, and in this sense it actually ‘makes’ the ‘printing plate’.

![]() Press—The job can then go onto any press from any manufacturer supporting the JDF standard (and most major manufacturers now do). This means that the press already ‘knows’ the specification developed at the pre-press stage.

Press—The job can then go onto any press from any manufacturer supporting the JDF standard (and most major manufacturers now do). This means that the press already ‘knows’ the specification developed at the pre-press stage.

![]() Post-press—Once again, any finishing is determined by the specifications already included in the JDF file, and issues such as page format and paper size are harmonised across all stages in the manufacturing process.

Post-press—Once again, any finishing is determined by the specifications already included in the JDF file, and issues such as page format and paper size are harmonised across all stages in the manufacturing process.

The effects of wide adoption of this standard by the printing industry include:

![]() Automation—There is no need to enter the job specification data from machine to machine, and from one step in the production process to the next. This reduces the time and thus the cost involved in handling a job.

Automation—There is no need to enter the job specification data from machine to machine, and from one step in the production process to the next. This reduces the time and thus the cost involved in handling a job.

![]() Human error reduction—As each element of a job specification is entered only once, this reduces waste and unnecessary cost.

Human error reduction—As each element of a job specification is entered only once, this reduces waste and unnecessary cost.

![]() Audit trail—Responsibility for entering specification data is pushed further back down the supply chain, ultimately even to the point where a customer will fill out the ‘job bag’ simply by placing an order through an online B-2-B interface. This shifts the burden of responsibility for specification, to some degree, to the initiator of an order, and records by whom and when a particular specification was entered. This leads to an improvement in ordering and specification procedures.

Audit trail—Responsibility for entering specification data is pushed further back down the supply chain, ultimately even to the point where a customer will fill out the ‘job bag’ simply by placing an order through an online B-2-B interface. This shifts the burden of responsibility for specification, to some degree, to the initiator of an order, and records by whom and when a particular specification was entered. This leads to an improvement in ordering and specification procedures.

![]() Equipment variations—The standard reduces the practical difficulties previously experienced using different equipment supplied by different manufacturers. This creates a great deal of flexibility in the use of plant.

Equipment variations—The standard reduces the practical difficulties previously experienced using different equipment supplied by different manufacturers. This creates a great deal of flexibility in the use of plant.

Resource discovery

Resource discovery can be assisted by metadata schemas that use tagging mechanisms to provide an account of the form and content of documents. In the case of documents locatable on the internet, Dublin Core is one of the principal standards, and is typical of others (Dublin Core Metadata Initiative 2010). It contains a number of key broadly descriptive tags: < title >, < creator >, < subject >, < description >, < publisher >, < contributor >, < date >, < resource type >, < format >, < resource identifier >, < source >, < language >, < relation >, < coverage > and < rights >. The schema is designed to function as a kind of electronic ‘catalogue card’ to digital files, so that it becomes possible, for instance, to search for Benjamin Disraeli as an author < creator > because you want to locate one of his novels, as opposed to writings about Benjamin Disraeli as a British prime minister < subject > because you have an interest in British parliamentary history. The intention of Dublin Core is to develop more sophisticated resource discovery tools than the current web-based search tools which, however fancy their algorithms, do little more than search indiscriminately for words and combinations of words.

A number of other schemas build on Dublin Core, such as the Australian standard for government information (Australian Government Locator Service 2003), and the EdNA and UK National Curriculum standards for electronic learning resources. Other schemas offer the option of embedding Dublin Core, as is the case with the Open eBook standard.

Cataloguing

The Machine Readable Catalog (MARC) format was initially developed in the 1960s by the US Library of Congress (MARC Standards Office 2003a; Mason 2001). Behind MARC is centuries of cataloguing practice, and its field and coding alternatives run to many thousands. Not only does MARC capture core information such as author, publisher or page extent. It also links into elaborate traditions and schemas for the classification of content such as the Dewey Decimal Classification system or the Library of Congress Subject Headings. MARC is based on ISO 2709 ‘Format for Information Exchange’. MARC has recently been converted into an open XML standard.

The original markup framework for MARC was based on nonintuitive alphanumeric tags. Subsequent related initiatives have included a simplified and more user-friendly version of MARC: the Metadata Object Description Schema (MARC Standards Office 2003c) and a standard specifically for the identification, archiving and location of electronic content, the Metadata Encoding and Transmission Standard (MARC Standards Office 2003b).

Various ‘crosswalks’ have also been mapped against other tagging schemas, notably MARC to Dublin Core (MARC Standards Office 2001) and the MARC to the ONIX e-commerce standard (MARC Standards Office 2000). In similar territory, although taking somewhat different approaches to MARC, are Biblink (UK Office for Library and Information Networking 2001) and Encoded Archival Description Language (Encoded Archival Description Working Group 2002).

Educational texts

Cutting across a number of areas—particularly rendering and resource discovery—are tagging schemas designed specifically for educational purposes. EdNA (EdNA Online 2000) and the UK National Curriculum Metadata Standard (National Curriculum Online 2002) are variants of Dublin Core.

Rapidly rising to broader international acceptance, however, is the Instructional Management Systems (IMS) Standard (IMS Global Learning Consortium 2003) and the related Shareable Content Object Reference Model (ADL/SCORM 2003). Not only do these standards specify metadata to assist in resource discovery. They also build and record conversations around interactive learning, manage automated assessment tasks, track learner progress and maintain administrative systems for teachers and learners. The genesis of IMS was in the area of metadata and resource discovery, and not the structure of learning texts. One of the pioneers in the area of structuring and rendering learning content (building textual information architectures specific to learning and rendering these through stylesheet transformations for web browsers) was Educational Modelling Language (OUL/EML 2003). Subsequently, EML was grafted into the IMS suite of schemas and renamed the IMS Learning Design Specification (IMS Global Learning Consortium 2002).

E-commerce

One tagging schema has emerged as the dominant standard for B-2-B e-commerce in the publishing supply chain—the ONIX, or the Online Information Exchange standard, initiated in 1999 by the Association of American Publishers, and subsequently developed in association with the British publishing and bookselling associations (EDItEUR 2001; Mason and Tsembas 2001). The purpose of ONIX is to capture data about a work in sufficient detail to be able automatically to upload new bookdata to online bookstores such as Amazon.com, and to communicate comprehensive information about the nature and availability of any work of textual content. ONIX sits within the broader context of interoperability with ebXML, an initiative of the United Nations Centre for Trade Facilitation and Electronic Business.

Digital rights management

Perhaps the most contentious area in the world of tagging is that of digital rights management (Cope and Freeman 2001). Not only does this involve the identification of copyright owners and legal purchasers of creative content; it can also involve systems of encryption by means of which content is only accessible to legitimate purchasers; and systems by means of which content can be decomposed into fragments and recomposed by readers to suit their specific needs. The < indecs > or Interoperability of Data in E-Commerce Systems framework was first published in 2000, the result of a two-year project by the European Union to develop a framework for the electronic exchange of intellectual property (< indecs > 2000). The conceptual basis of < indecs > has more recently been applied in the development of the Rights Data Dictionary for the Moving Pictures Expert Group’s MPEG-21 framework for distribution of electronic content (Multimedia Description Schemes Group 2002). From these developments and discussions, a comprehensive framework is expected to emerge, capable of providing markup tools for all manner of electronic content (International DOI Foundation 2002; Paskin 2003).

Among the other tagging schemas marking up digital rights, Open Digital Rights Language (ODRL) is an Australian initiative, which has gained wide international acceptance and acknowledgement (ODRL 2002); and Extensible Rights Markup Language (XrML) was created in Xerox’s PARC laboratories in Paulo Alto. Its particular strengths are in the areas of licensing and authentication (XrML 2003).

What tagging schemas do

The tagging schemas we have mentioned here do almost everything conceivable in the world of the written word. They can describe that world comprehensively, and to a significant degree they can support its manufacture. The typesetting and content capture schemas provide a systematic account of structure in written text, and through stylesheet transformations they can literally print text to paper, or render it electronically to screen or manufacture synthesised audio. Digital resource discovery and electronic library cataloguing schemas provide a comprehensive account of the form and content of non-digital as well as digital texts. Educational schemas attempt to operationalise the peculiar textual structures of traditional learning materials and learning conversations, where a learner’s relation to text is configured into an interchange not unlike the ATM conversation we described in Chapter 4, ‘What does the digital do to knowledge making?’. E-commerce and digital rights management schemas move texts around in a world where intellectual property rights regulate their flow and availability.

Tagging schemas and processes may be represented as paradigm (using syntagmatic devices such as taxonomy) or as narrative (an account of the ‘funnel of commitment’ and the alternative activity sequences or navigation paths in the negotiation of that commitment). Ontologies are like theories, except, unlike theories, they do not purport to be hypothetical or amenable to testing; they purport to tell of the world, or at the very least of a part of the world, like it is—in our case that part of the world inhabited by authors, publishers, librarians, bookstore workers and readers.

The next generation of ontology-based markup brings with it the promise of more accurate discovery, machine translation and, eventually, artificial intelligence. A computer really will be able to interpret the difference between Cope, cope and cope. Even in the case of the < author > with the seemingly unambiguous < surname > Kalantzis, there is semantic ambiguity that markup can eliminate or at least reduce, by collocating structurally related data (such as date of birth) to distinguish this Kalantzis from others and by knowing to avoid association with the transliteration of the common noun in Greek, which means ‘tinker’.

In the world of XML, tags such as < author > and < surname > are known as ‘elements’, which may well have specified ‘attributes’; and the ontologies are variously known as, or represented in, ‘schemas’, ‘application profiles’ or the ‘namespaces’ defined by ‘document type definitions’ or DTDs. As our interest in this chapter is essentially semantic, we use the concepts of ‘tag’ and ‘schema’. In any event, as mentioned earlier, ‘ontology’ seems the wrong concept insofar as tag schemas are not realities; they are specific constructions of reality within the frame of reference of highly particularised social languages. Their reality is a social reality. They are no more and no less than a ‘take’ on reality, which reflects and represents a particular set of human interests. These interests are fundamentally to get things done (funnels of commitment) more than they are mere reflections of objectified, inert being. Schemas, in other words, have none of the immutable certainty implied by the word ‘ontology’. Reality does not present itself in an unmediated way. Tagging schemas are mediated means rather than static pictures of reality.

Most of the tagging frameworks relating to authorship and publishing introduced above were either created in XML or have now been expressed in XML. That being the case, you might expect that an era of rapid and flexible transmission of content would quickly dawn. But this has not occurred, or at least not yet, and for two reasons. The first is the fact that, although almost all content created over the past quarter of a century has been digitised, most digital content has been created, and continues to be created, using legacy design and markup frameworks. These frameworks are embedded in software packages that provide tools for working with text which mimic the various trades of the Gutenberg universe: an author may use Word; a desktop publisher or latter-day typesetter may use Quark; and a printer will use a PDF file as if it were a virtual forme or plate. The result is sticky file flow and intrinsic difficulties in version control and digital repository maintenance (Cope 2001). How and where is a small correction made to a book that has already been published? Everything about this relatively simple problem, as it transpires, becomes complex, slow and expensive. However, in a fully comprehensive, integrated XML-founded file flow, things that are slow and expensive today should become easier and cheaper—a small change by an author to the source text could be approved by a publisher so that the very next copy of that book purchased online and printed on demand could include that change. Moreover, even though just about everything available today has been digitised somewhere, in the case of books and other written texts, the digital content remains locked away for fear that it might get out and about without all users paying for it when they should. Not only does this limit access, but what happens, for instance, when all you want is a few pages of a text and you do not want to pay for the whole of the printed version? And what about access for people who are visually impaired? It also puts a dampener on commercial possibilities for multichannel publishing, such as the student or researcher who really has to have a particular text tonight, and will pay for it if they can get it right away in an electronic format— particularly if the cost of immediate access is less than the cost of travelling to the library specially.

The second reason is that a new era of semantic text creation and transmission has not yet arrived. Even though XML is spreading quickly as a universal electronic lingua franca, each of its tagging schema describes its worlds in its own peculiar way. Tags may well be expressed in natural languages—this level of simplicity, openness, transparency is the hallmark of the XML world. But herein lies a trap. There is no particular problem when there is no semantic overlap between schemas. However, as most XML application profiles ground themselves in some ontological basics (such as people, place and time), there is nearly always semantic overlap between schemas. The problem is that, in everyday speech, the same word can mean many things, and XML tags express meaning functions in natural language.

The problem looms larger in the case of specialised social languages. These often develop a high level of technical specificity, and this attaches itself with a particular precision to key words. The more immersed you are in that particular social language—the more critical it is to your livelihood or identity in the world, for instance—the more important these subtle distinctions of meaning are likely to be. Communities of practice identify themselves by the rigorous singularity of purpose and intent within their particular domain of practice, and this is reflected in the relative lack of terminological ambiguity within the social language that characterises that domain. As any social language builds on natural language, there will be massive ambiguities if the looser and more varied world of everyday language is assumed to be homologous with a social language which happens to use some of the same terminology.

The semantic differences between two social languages in substantially overlapping domains are likely to be absolutely critical. Even though they are all talking about text and can with equal facility talk about books, it is the finely differentiated ways of talking about book that make authors, publishers, printers, booksellers and librarians different from each other. Their social language is one of the ways you can tell the difference between one type of person and another. These kinds of difference in social language are often keenly felt and defended. Indeed, they often become the very basis of professional identity.

This problem of semantics is the key dilemma addressed by this chapter, and the focal point of the research endeavour which has produced CGML. Our focus in this research has been the means of creation and communication of textual meaning, of which the book is an archetypical instance. Each of the schemas we have briefly described above channels a peculiar set of ‘funnels of commitment’ in relation to books—variously that of the author, typesetter, printer, publisher, bookseller, librarian and consumer. And although they are all talking about the same stuff—textual meaning in the form of books or journal articles—they talk about it in slightly different ways, and the differences are important. The differences distinguish the one funnel of commitment, employing its own peculiar social language to realise that commitment, from another. It is precisely the differences that give shape and form to the tagging schemas which have been the subject of our investigations.

The schemas we have identified range in size from a few dozen tags to a few thousand, and, the total number of tags across just these schemas would be in the order of tens of thousands. This extent alone would indicate that the full set of tags provides the basis for a near-definitive account of textual meaning. And although it seems as if these schemas were written almost yesterday, they merely rearticulate social languages that have developed through 500 years of working with the characteristic information architectures of mechanically reproduced writing, bibliography and librarianship, the book trade and readership. Given that they are all talking about authorship and publishing, the amount of overlap (the number of tags that represent a common semantic ground across all or most schemas) is unremarkable. What are remarkable are the subtle variations in semantics depending on the particular tagging schema or social language; and these variations can be accounted for in terms of the subtly divergent yet nevertheless all-important funnels of commitment.

So, after half a century of computing and a quarter of a century of the mass digitisation of text, nothing is really changing in the core business of representing the world using the electrical on/off switches of digitisation. The technology is all there, and has been for a while. The half-millennium-long shift is in the underlying logic behind the design of textual meaning. This shift throws up problems which are not at root technical; rather they are semantic. Interoperability of tagging schemas is not a technical problem, or at least it is a problem for which there are relatively straightforward technical solutions. The problem, and its solution, is semantic.