Interoperability and the exchange of humanly usable digital content

Throughout this book, it has been clearly articulated that the emergence and use of schemas and standards are increasingly important to the effective functioning of research networks. What is also equally emphasised is the danger posed if the use of schemas and standards results in excessive and negative system constraints—a means of exerting unhelpful control over distributed research activities. But, how realistically can a balance be facilitated between the positive benefits derived from the centralised coordination through the use standards versus the benefits from allowing self-organisation and emergence to prevail at the edge of organisational networks?

In this chapter we set out to explore how differing approaches to such problems are actually finding expression in the world. To do this, we have engaged in a detailed comparison of three different transformation systems, including the CGML system discussed at length in the previous chapter. We caution against any premature standardisation on any system due to externalities associated with, for example, the semantic web itself.

Introduction

In exploring the theme of interoperability we are interested in the practical aspects of what Magee describes as a ‘framework for commensurability’ in Chapter 12, ‘A framework for commensurability’, and what Cope and Kalantzis describe as the ‘dynamics of difference’ discussed in Chapter 4, ‘What does the digital do to knowledge making?’. Magee suggests it is possible to draw on the theoretical underpinnings outlined in this book to construct a framework that embraces correspondence, coherentist and consensual notions of truth:

it ought now be possible to describe a framework which examines conceptual translation in terms of denotation—whether two concepts refer to the same objective things in the world; connotation—how two concepts stand in relation to other concepts and properties explicitly declared in some conceptual scheme; and use—or how two concepts are applied by their various users (Magee, Chapter 11, ‘On commensurability’).

Cope and Kalantzis suggest something that could be construed as similar when they say that the new media underpinning academic knowledge systems requires a new ‘conceptualising sensibility’.

But what do these things mean in the world of global interoperability? How are people currently affecting content exchanges in the world so that it proves possible to enter data once for one particular set of purposes, but be able to exchange this content for use related to another set of purposes in a different context?

In addressing this question, our chapter has four overarching objectives. The first is simple. We aim to make a small contribution to a culture of spirited problem solving in relation to the design and management of academic knowledge systems and the complex and open information networks that form part of these systems. For example, in the case of universities, there is the challenge of building information networks that contribute to two-way data and information flows. That is, a university information system needs to support a strategy of reflexivity by enabling the university to contribute to the adaptive capacity of the society it serves and enabling itself to adapt to the changes in society and the world.

The second objective is to facilitate debate about technical and social aspects of interoperability and content exchanges in ways that do not restrict this debate to information systems personnel only. As Cope, Kalantzis and Magee all highlight, this challenge involves the difficult task of constructively negotiating commensurability. This will require considerable commitment by all in order to support productive enagements across multiple professional boundaries.

The third objective is to highlight that new types of infrastructures will be required to mediate and harmonise the dynamics of difference. The reader will notice that a large part of the chapter draws on the emergence (and importance) of standards and a trend towards standardisation. A normative understanding of knowledge involves embracing the complex interplay between the benefits of both self-organisation and standardisation. Therefore, we think that a normative approach to knowledge now requires that the system patterns of behaviour emerge concurrently at different levels of hierarchy as has also been discussed extensively in Chapter 6, ‘Textual representations and knowledge support-systems in research intensive networks’, by Vines, Hall and McCarthy. Any approach to the challenge of interoperability has to take this matter very seriously—as we do.

The fourth objective is to highlight that in the design of new infrastructures, specific attention must be given to the distinctiveness of two types of knowledge—tacit and explicit knowledge. This topic has been well discussed throughout this book. We claim that an infrastructure that fails to acknowledge the difference between explicit and tacit knowledge will be dysfunctional in comparison with one that does. To this end, we are grateful to Paul David for suggesting the use of the phrase ‘human interpretive intelligence’, which we refer to extensively in this chapter. This phrase aims to convey that there is a dynamic interaction between tacit and explicit forms of knowledge representation. That is, explicit knowledge cannot exist in the first place without the application of human interpretative intelligence. We agree very much with Magee where in Chapter 3, ‘The meaning of meaning’, he expounds the idea that meaning is bound to the context within which content is constructed and conveyed.

The means by which we progress this chapter is by analysing one particular technical concern—the emerging explosion in the use of Extensible Markup Language (XML). The rise of XML is seen as an important solution to the challenges of automated (and semi-automated) information and data processing. However, the problem of interoperability between XML schemas remains a global challenge that has yet to be resolved. Thus this chapter focuses on how to achieve interoperability in the transformation of humanly usable digital content from one XML content storage system to another. We claim that it will be necessary to use human interpretive intelligence in any such transformation system and that a technology that fails to acknowledge this, and to make suitable provision for it, will be dysfunctional in comparison with one that does. We further claim that a choice about how such content is translated will have implications for whether the form of representation to which the content is transformed gives access to the meanings which users will wish to extract from it.

Our chapter examines three translation systems: Contextual Ontology_X Architecture (the COAX system), the Common Ground Markup Language (the CGML system) and OntoMerge (the Onto-Merge System). All three systems are similar to the extent that they draw on ‘merged ontologies’ as part of the translation process. However, they differ in the ways in which the systems are designed and how the translation processes work. The CGML and COAX systems are examples of XML to XML to XML based approaches. The OntoMerge system is an example of an XML to Ontology (using web based ontology languages such as OWL) to XML approach.

We think that the leading criterion for selecting any XML translation system is the ease of formulating expanded frameworks and revised theories of translation that create commensurability between source and destination XML content and merged ontologies. We discuss this criterion using three sub-criteria: the relative commensurability creation load in using each system, the system design to integrate the use of human interpretive intelligence, and facilities for the use of human interpretive intelligence in content translations.

In a practical sense this chapter addresses the challenge of how to achieve interoperability in the storage and transfer of humanly usable digital content, and what must be done to exchange such content between different systems in as automated a way as possible. Automated exchanges of content can assist with the searching for research expertise within a university. Such search services can be secured by linking together multiple and disparate databases. An example of this is the University of Melbourne’s ‘Find an Expert’ service hosted on the university’s website (University of Melbourne 2010).

In this chapter we are concerned with requirements for the automated exchange of content, when this content is specifically designed for human use. As described by Cope and Kalantzis elsewhere in this book, interoperability of humanly usable content allows such content, originally stored in one system for a particular set of purposes, to be transferred to another system and used for a different set of purposes. We are concerned with the design of an efficient converter system, or translator system, hereafter referred to as a transformation system, that makes possible a compatibility of content across different systems.

Our interest in this subject arises because there are substantial sunk costs associated with storing humanly usable content in particular ways. There are also significant costs of translating content from one system to another. These costs will, in due course, become sunk costs also. Therefore, in addressing this topic, our expectation is that, over time, the costs incurred in the storage and transfer of humanly usable digital content will not be regarded as sunk costs, but as investments to secure the continued access to humanly usable digital content, now and into the future.

Many people, who are focused on their own particular purposes, have described aspects of the challenges associated with the need for exchanges of humanly usable content. Norman Paskin, the first Director of the Digital Object Identifier (DOI) Foundation, has described difficulties which must be faced when trying to achieve interoperability in content designed for the defence of intellectual property rights. These include the need to make interoperability possible across different kinds of media (such as books, serials, audio, audiovisual, software, abstract works and visual material), across different functions (such as cataloguing, discovery, workflow and rights management), across different levels of metadata (from simple to complex), across semantic barriers, and across linguistic barriers (Paskin 2006). Rightscom describe the need to obtain interoperability in the exchange of content, including usage rights, to support the continued development of an e-book industry (2006, p. 40). McLean and Lynch describe the challenges of facilitating interoperability between library and e-learning systems (2004, p. 5). The World Wide Web Consortium (W3C) is itself promoting a much larger vision of this same challenge through the semantic web:

The semantic web is about two things. It is about common formats for integration and combination of data drawn from diverse sources, where on the original Web mainly concentrated on the interchange of documents. It is also about language for recording how the data relates to real world objects. That allows a person, or a machine, to start off in one database, and then move through an unending set of databases which are connected not by wires but by being about the same thing (W3C undated).

We argue that that there are two, deeper, questions relating to achieving interoperability of humanly usable content that have not been sufficiently discussed. The aim of this chapter is to show that these two challenges are interrelated.

First, we claim that it will not be possible to dispense with human intervention in the translation of digital content. A technology that fails to acknowledge this, and to make suitable provision for it, will be dysfunctional in comparison with one that does. The reason for this is that digital content is ascribed meaning by those people who use it; the categories used to organise content reflect these meanings. Different communities of activity ascribe different meanings and thus different categories. Translation of the elements of content from one set of categories to another cannot, we claim, be accomplished without the application of what we will call ‘human interpretive intelligence’. (We particularly acknowledge Paul David’s contribution and thank him for suggesting the use of this term, which we have adopted throughout this chapter.) In what follows, we will provide a detailed explanation of how this is achieved by one translation system, and will argue that any system designed to translate humanly usable content must make this possible.

Second, in order to achieve interoperability of digital content, we claim a choice about how such content is translated will have implications for whether the form of representation to which the content is transformed, gives access to the meanings that users will subsequently wish to extract from it. This has ramifications about the way in which translations are enacted. This, we argue, requires the application of human interpretive intelligence as described above in order that in the translation process content is mapped between the source and destination schemas.

We advance our general argument in a specific way—by comparing three existing systems which already exist for the abstracting of content to support interoperability. We have used this approach because these three different systems (a) make different assumptions about the use of interpretive intelligence, and (b) rely on different ontological and technical means for incorporating the use of this intelligence. The first system is the Common Ground Markup Language (CGML), whose component ontology has been used to construct the CGML interlanguage. We have termed this the CGML system. The second system is called the Contextual Ontology_X Architecture XML schema, whose component ontology is OntologyX. We have termed this the COAX system. The third system is called OntoMerge, whose architecture includes a means of merging and storing different ontologies (a feature referred to as the OntoEngine). We have termed this the OntoMerge System.

The ontological assumptions of these three different systems differ in that CGML system uses noun-to-noun mapping rules to link the underlying digital elements of content (we use the word ‘digital element’ in this chapter synonymously with the idea of a ‘digital entity’). In contrast, the COAX system uses verbs and the linkages between digital elements that are generated when verbs are used (as we shall see below, it uses what we have termed ‘verb triples’). OntoMerge also uses nouns, but these act as noun-predicates. In a way similar to the COAX system, these noun-predicates also provide linkages between digital elements in the same way as does the use of verb triples in the COAX system. The CGML system is built around the use of human interpretive intelligence, whereas the COAX and OntoMerge systems attempt to economise on the use of such intelligence.

We aim to show that points (a) and (b) above are related, in a way that has significant implications for how well exchanges of content can be managed in the three systems. We argue that choice of ontological and technical design in the COAX and the OntoMerge systems makes it much harder to apply human interpretive intelligence, and thus it is no surprise that these systems attempt to economise on the use of that intelligence. But we regard the use of such intelligence as necessary. We think this because explicit knowledge is reliant on being accessed and applied through tacit processes.

All three systems are still in their proof-of-concept stage. Because of this, a description of how all these systems abstract content is provided in the body of this chapter. We should not be understood as advancing the interests of one system over another. Rather, our purpose in analysing the systems is to highlight the possibility of locking into an inefficient standard. We agree with Paul David (2007a, p. 137) when he suggests that ‘preserving open options for a longer period than impatient market agenda would wish is a major part of such general wisdom that history has to offer public policy makers’.

The outline of the remainder of this chapter is as follows. In the next section, we discuss the translation problem and outline a generalised model for understanding the mechanisms for translating digital content. This model is inclusive of two different approaches to the problem: what we have called the XML-based interlanguage approach and the ontology-based interlanguage approach. In the following sections we discuss these different approaches in some detail, using the three different systems—CGML, COAX and OntoMerge—as case study illustrations. Then we provide some criteria for how we might choose any system that addresses the XML translation problem. In the final sections we highlight some emergent possibilities that might arise based on different scenarios that could develop and draw our conclusions.

The transformation of digital content

With current digital workflow practices, translatability and interoperability of content is normally achieved in an unsystematic way by ‘sticking together’ content originally created, so as to comply with separately devised schemas. Interoperability is facilitated manually by the creation of procedures that enable content-transfers to be made between the different end points of digital systems.

For example, two fields expressing similar content might be defined as ‘manager’ in one electronic schema and ‘supervisor’ in another schema. The local middleware solution is for an individual programmer to make the judgement that the different tags express similar or the same content and then manually to create a semantic bridge between the two fields— so that ‘manager’ in one system is linked with ‘supervisor’ in the other system. These middleware programming solutions then provide a mechanism for the streaming of data held in the first content storage system categorised under the ‘manager’ field to another content storage system in which the data will be categorised under the ‘supervisor’ field. Such programming fixes make translatability and interoperability possible within firms and institutions and, to a lesser extent, along the supply chains that connect firms, but only to the extent that the ‘bridges’ created by the programmer really do translate two tags with synonymous content. We can call this the ‘localised patch and mend’ approach. And we note both that it does involve ‘human interpretive intelligence’, the intelligence of the programmer formulating the semantic bridges, and also that the use of such intelligence only serves to formulate a model for translation from one schema to another. And as should be plain to everyone, such models can vary in the extent to which they correctly translate the content of one schema to another.

Such an approach is likely to be advocated by practical people, for an obvious reason. The introduction of new forms of information architecture is usually perceived as disruptive of locally constructed approaches to information management. New more systematic approaches, which require changes to content management systems, are often resisted if each local need can be ‘fixed’ by a moderately simple intervention, especially if it is perceived that these more systematic approaches don’t incorporate local human interpretive intelligence into information management architecture. We believe that such patch and mend approaches to translatability might be all very well for locally defined operations. But such localised solutions risk producing outcomes in which the infrastructure required to support the exchange of content within a global economy remains a hopeless jumble of disconnected fixes. This approach will fail to support translatability among conflicting schemas and standards.

The structure of the automated translation problem

In order to overcome the limitations of the patch and mend approach, we provide a detailed structure of the problem of creating more generalised translation/transformation systems. This is illustrated in Figure 14.1. This figure portrays a number of schemas, which refer to different ontological domains. For example, such domains might be payroll, human resources and marketing within an organisation; or they might include the archival documents associated with research inputs and outputs of multiple research centres within a university such as engineering, architecture or the social sciences.

Our purpose is to discuss the transfer of content from one schema to another—and to examine how translatability and interoperability might be achieved in this process. In Figure 14.1 we provide details of what we have called the ‘translation/transformation architecture’.

To consider the details of this translation/transformation architecture, it is first necessary to discuss the nature of the various schemas depicted in Figure 14.1. Extensible Markup Language (XML) was developed after the widespread adoption of Hypertext Markup Language (HTML), which was itself a first step towards achieving a type of interoperability. HTML is a markup language of a kind that enables interoperability, but only between computers: it enables content to be rendered to a variety of browser-based output devices like laptops and computers. However, HTML does this through a very restricted type of interoperability. XML was specifically designed to address the inadequacies of HTML in ways which make it, effectively, more than a markup language. First, like HTML it uses tags to define the elements of the content in a way that gives meaning to them for computer processing (but unlike HTML there is no limit on the kinds of tags which can be used). This function of XML is often referred to as the semantic function, a term which we will use in what follows. Second, in XML a framework of tags can be used to create structural relationships between other tags. This function of XML is often referred to as the syntactical function, a term which we will also use. In what follows we will call a framework of tags an XML Schema—the schemas depicted in Figure 14.1 are simply frameworks of tags. The extensible nature of XML—the ability to create schemas using it—gives rise to both its strength and weakness. The strength is that ever-increasing amounts of content are being created to be ‘XML compliant’. Its weakness is that more and more schemas are being created in which this is done.

In response to this, a variety of industry standards, in the form of XML schemas, are being negotiated in many different sectors among industry practitioners. These are arising because different industry sectors see the benefit of using the internet as a means of managing their exchanges (e.g. in managing demand-chains and value-networks) because automated processing by computers can add value or greatly reduce labour requirements. In order to reach negotiated agreements about such standards, reviews are undertaken by industry-standards bodies which define, and then describe, the standard in question. These negotiated agreements are then published as XML schemas. Such schemas ‘express shared vocabularies and allow machines to carry out rules made by people’ (W3C 2000). The advantage of the process just described is that it allows an industry-standards body to agree on an XML schema which is sympathetic to the needs of that industry and declares this to be an XML standard for that industry.

But the adoption of a wide range of different XML standards is not sufficient to address the challenge of interoperability in the way we have previously defined this. Obviously reaching negotiated agreements about the content of each XML standard does not address the problem of what to do when content must be transferred between two standards. This problem is obviously more significant, the larger the semantic differences between the ways in which different industry standards handle the same content. This problem is further compounded by the fact that many of these different schemas can be semantically incommensurable.

Addressing the problem of incommensurability

The problem of incommensurability lies at the heart of the challenges associated with the automated translation problem. Instances of XML schemas are characterised by the use of ‘digital elements’ or ‘tags’ that are used to ‘mark up’ unstructured documents, providing both structure and semantic content. At face value, it would seem that the translation problem and the problem of incommensurability could be best addressed by implementing a rule based tag-to-tag transformation of XML content. The translation ‘rules’ would be agreed through negotiated agreements among a number of localised stakeholders. This would make this approach similar, but slightly different from the ‘patch and mend’ approach discussed previously, where effectively the arbitrary use of the human interpretive intelligence of the programmer is used to resolve semantic differences.

However, in considering the possibility of either a patch and mend or a rule-based approach, we think there is a necessity to ask the question: do tags have content that can be translated from one XML-schema to another, through rule-based tag-to-tag transformations? Or put another way, do such transformations really provide ‘translations’ of ‘digital content’?

To answer this question we have to be clear about what we mean by ‘digital content’. This question raises an ontological issue in the philosophical, rather than the information technology, sense of this term. The sharable linguistic formulations about the world (claims and metaclaims that are speech-, computer- or artifact-based—in other words, cultural information used in learning, thinking, and acting) found in documents and electronic media have two aspects (Popper 1972, Chapter 3). The first is the physical pattern of markings or bits and bytes constituting the form of documents or electronic messages embodying the formulations. This physical aspect comprises concrete objects and their relationships. In the phrase ‘digital content’, ‘digital’ refers to the ‘digital’ character of the physical, concrete objects used in linguistic formulations.

The second aspect of such formulations is the pattern of abstract objects that conscious minds can grasp and understand in documents or other linguistic products when they know how to use the language employed in constructing it. The content of a document is the pattern of these abstract objects. It is what is expressed by the linguistic formulation that is the document (Popper 1972, Chapter 3). And when content is expressed using a digital format, rather than in print, or some other medium, that pattern of abstract objects is what we mean by ‘digital content’.

Content, including digital content, can evoke understanding and a ‘sense of meaning’ in minds, although we cannot know for certain whether the same content presented to different minds evokes precisely the same ‘understanding’ or ‘sense of meaning’. The same content can be expressed by different concrete objects. For example, different physical copies of Hamlet, with different styles of printing having different physical form, can nevertheless express the same content. The same is true of different physical copies of the American Constitution. In an important sense when we refer to the American Constitution, we do not refer to any particular written copy of it, not even to the original physical document, but rather to the pattern of abstract objects that is the content of the American Constitution and that is embodied in all the different physical copies of it that exist.

Moreover, when we translate the American Constitution, or any other document for that matter, from one natural language—English—to another, say German, it is not the physical form that we are trying to translate, to duplicate, or, at least, to approximate in German, but rather it is the content of the American constitution that we are trying to carry or convey across natural languages. This content cannot be translated by mapping letters to letters, or words to words across languages, simply because content doesn’t reside in letters or words. Letters and words are the tools we use to create linguistic content. But, they, themselves, in isolation from context, do not constitute content. Instead, it is the abstract pattern of relationships among letters, words, phrases and other linguistic constructs that constitutes content. It is these same abstract patterns of relationships that give rise to the linguistic context of the assertions expressed as statements in documents, or parts of documents. The notion of linguistic context refers to the language and patterns of relationships that surrounds the assertions contained within documents. The linguistic context is distinct from the social and cultural context associated with any document, because social and cultural context is not necessarily included in the content itself. Rather social and cultural context if it is captured can be included in metadata attached to that content.

We think that it is linguistic context, in this sense of the term, which makes the problem of translation between languages so challenging. This principle of translation between natural languages is inclusive of XML-based digital content. With XML content it is the abstract patterns of objects in documents or parts of documents—combining natural language expressions, relationships among the abstract objects, the XML tags, relationships among the tags, and relationships among the natural language expressions and the XML tags—that give rise to that aspect of content we call linguistic context. This need for sensitivity towards linguistic context we think forms part of what Cope and Kalantzis extol as the need for new ‘conceptualization sensibilities’. And we contend that a translation process that takes into account this sensitivity towards context involves what Cope and Kalantzis describe as the ‘dynamics of difference’.

Once a natural language document is marked up in an XML schema the enhanced document is a meta-language document, couched in XML, having a formal structure. It is the content of such a meta-language document, including the aspect of it we have called linguistic context that we seek to translate, when we refer to translating XML-based digital content from one XML language to another.

So, having said what we mean by ‘digital content’, we now return to the question: do XML tags have content that can be translated from one XML-language to another, through rule-based tag-to-tag transformations? Or, put another way, do such transformations really provide ‘translations’ of ‘digital content’? As a matter of general rule, we think not, because of the impact of linguistic context embedded in the content. We contend that this needs to be taken into account, and that tag-to-tag translation systems do not do this.

Therefore, we think something else is needed to alleviate the growing XML babel by means of a semi-automated translation approach. To address this matter and to set a framework for comparing three different transformation models and the way these different models cater for the application of human interpretive intelligence, we now analyse the details of the translation/transformation architecture outlined in Figure 14.1.

System components of a translation/transformation architecture

To address the challenge of incommensurability and linguistic context as described in the previous section, we argue that the design of effective transformation architecture must allow for the application of human interpretive intelligence. The fundamental concern of this chapter is to explore the means by which this can be achieved. Further details of the technical design choice outlined in Figure 14.1 are now summarised in Figure 14.2.

As a means of exploring aspects of the technical design choice when considering different transformation architectures, it is helpful to understand the ‘translation/transformation system’ as the underlying assumptions and rules governing the translation from one XML schema to another, including the infrastructure systems that are derived from these assumptions (see the later section where we discuss infrastructure systems in more detail). In turn, the transformation mechanisms are best understood as the ‘semantic bridges’ or the ‘semantic rules’ that create the connections between each individual digital elements within each XML schema and the translation/transformation system. In this chapter we refer to such connections as axioms. We now discuss the details of these concepts in turn.

The translation/transformation system

The design of a translation/transformation system involves the consideration of the means by which the entities that comprise multiple XML schemas are mapped together. This process is called ontology mapping. In this process, we think that in order to integrate incommensurable tags or fields and relations across differing XML schemas, human interpretive intelligence is required to create new ontological categories and hierarchical organisations and reorganisations of such categories. Importantly, when this happens, the ontology mapping process gives rise to the creation of an expanded ontology (ontology creation)—and results in what we will call a ‘merged ontology’. We call this merged ontology an interlanguage.

This idea of a merged ontology (or interlanguage) we think has important implications for the role of categories. We are aware that many might think that the reliance on categories can lead to excessive rigidity in thought processes. However, we think that the use of human interpretive intelligence as a means of addressing incommensurable tags or fields and relations across differing XML schemas addresses this problem. The important point is that in explicitly addressing incommensurability, expanded ontologies with different categories and hierarchies of categories evolve, through time, according to very specific contexts of occurrence and application. Therefore, through time, we think that the growth of an interlanguage is best understood as an evolutionary process because its continued existence and expansion is reliant on the continued critiquing of its function and relevance by the human-centric social system that surrounds it.

The interlanguage approach to translation follows the pattern of the translation/transformation architecture presented in Figure 14.1. This is because in that architecture, the transformation system is the interlanguage. The interlanguage comprises the framework of terms, expressions and rules, which can be used to talk about documents encoded using the different XML schemas that have been mapped into the transformation system.

A key point is that an interlanguage can only apply to schemas whose terms, entities and relationships have been mapped into it and therefore are modeled by it. The model includes both the relationships among terms in the interlanguage and the mapping relationships between these terms and the terms or other entities in the XML schemas that have been mapped. The model expresses a broader framework that encompasses the ontologies of the XML schemas whose categories and relationships have been mapped into it—thus the term merged ontology.

An interlanguage model will be projectable in varying degrees to XML schemas that have not been mapped into it, provided there is overlap in semantic content between the new schemas and previously mapped schemas. Speaking generally, however, an interlanguage will not be projectable to other schemas without an explicit attempt to map the new schema to the interlanguage. Since the new schema may contain terms and relationships that are not encompassed by the framework (model, theory) that is the interlanguage, it may be, and often is, necessary to add new terms and relationships, as well as new mapping rules to the interlanguage. This cannot be done automatically, and human interpretive intelligence must be used for ontology creation. As we will see when we compare three different systems analysed in this chapter, there is potential to make the ontology merging process a semi-automated activity. We shall see that the OntoMerge system is an example of this.

The transformation mechanisms

Transformation mechanisms provide the semantic bridges and semantic rules between individual XML schemas and each translation/transformation system. As we shall see, the design of different transformation mechanisms is shaped by the assumptions or rules governing the transformation system itself. This includes whether human interpretive intelligence is applied during the content translation process itself, or whether the content translation process is fully automated. One of the key themes highlighted in this chapter is the extent to which the translation of XML content can be fully automated. In the examples we discuss, the COAX and OntoMerge systems are designed to automate the content translation process. In contrast, the CGML system builds within it the capacity to apply human interpretive intelligence as part of the execution of the translation process.

An interlanguage as a theory of translation

One way to look at the problem of semi-automated translation of XML content is to recognise that an interlanguage is a theory or model of a meta-meta-language. The idea of a meta-meta language arises because each XML schema defines a meta-language which is used to markup text and when different XML schemas are mapped together the framework for cross mapping these schemas becomes a meta-meta-language. The interlanguage is supplemented with translation/transformation rules, and therefore is constantly being tested, refuted and reformulated in the face of new content provided by new XML schemas. This is a good way of looking at things because it focuses our attention on the idea that an interlanguage is a fallible theoretical construct whose success is contingent on its continuing ability to provide a means of interpreting new experiences represented by XML schemas not encountered in the past.

We now turn to two different examples of addressing the translation/transformation challenge—the XML-based transformation approach and the ontology-based transformation approach.

The XML-based interlanguage approach: two examples

In the XML-based interlanguage approach, the transformation system or interlanguage is itself a merged ontology expressed as an XML schema. The schema provides a linguistic framework that can be used to compare and relate different XML schemas. It can also be used along with the transformation mechanisms to translate XML documents marked up in one schema into XML documents using different schemas.

We will now supplement this general characterisation of XML-based interlanguages with an account of two examples: the Common Ground Markup Language (CGML) and the Contextual Ontology_X Architecture (COAX). The theoretical foundations of the CGML system have been written up elsewhere in this book. CGML forms part of an academic publishing system owned by Common Ground Publishing, formerly based in Australia and now based in the Research Park at the University of Illinois in Champaign, Illinois. The foundations of the COAX system have been written up by Rightscom (2006). The COAX system forms part of an approach to global infrastructure which is being pioneered by the International Digital Object Identifier (DOI) Foundation. It uses an ontology called Ontology_X, which is owned by Rightscom in the UK.

The difference in ontological structure between the CGML and the COAX systems is visualised in Figure 14.3.

The CGML translation/transformation architecture

With the CGML system, the digital elements that become part of this transformation system all emanate from the activities of practitioners as expressed in published XML standards. CGML uses semantics and syntax embodied in those standards. All digital tags in the CGML system define nouns or abstract nouns. But these are defined as a kind of a word. CGML tags can be understood as lexical items, including pairs or groups of words which in a functional sense combine to form a noun or abstract noun, such as < CopyEditor > .

A key element of the CGML system is the CGML ‘interlanguage’. This is an ‘apparatus’ that is used to describe and translate to other XML-instantiated languages (refer to Chapter 13 for details). In particular, the CGML application provides a transformation system through which the digital elements expressed in one XML standard can be transformed and expressed in another standard. As Cope and Kalantzis highlight in Chapter 13, ‘Creating an interlanguage of the social web’, the interlanguage is governed by two ‘semantic logics’. The first is that of distinction-exclusion. This helps identify tags whose meanings exist as parallel branches (sibling relations)—those tags which have synonymous meanings across different standards and, by implication, those that do not. For example, a < Person > is not an < Organisation > because an < Organisation > is defined as a legally or conventionally constituted group of < Persons >. The second logic is that of relation-inclusion. This determines tags contained within sub-branches (parent–child relations), which are semantically included as part of the semantics of the superordinate branch. For example, a < Party > to a creative or contractual relationship can be either a < Person > or an < Organisation > .

As outlined in Chapter 13, ‘Creating an interlanguage of the social web’, the impact of these two logics gives rise to the semantic and the syntactical rules that are embedded within the CGML tag dictionary and the CGML thesaurus. The CGML tag thesaurus takes each tag within any given schema as its starting point, reproduces the definitions and provides examples. Against each of these tags, a CGML synonym is provided. The semantics of each of these synonyms are coextensive with, or narrower than, the tag against which the mapping occurs. The CGML tag dictionary links the tag concept to a semantically explicit definition.

The CGML Dictionary does not purport to be about external referents as ‘meaning’; rather, it is built via the interlanguage technique from other languages that purport to have external referents. As a consequence, its meanings are not the commonsense meanings of the lifeworld of everyday experience, but derivative of specialised social languages. In one sense, therefore, the dictionary represents a ‘scaffold for action’ with the CGML Dictionary being more like a glossary than a normal dictionary. Its building blocks are the other tag-concepts of the CGML interlanguage. A rule of ‘lowest common denominator semantics’ is rigorously applied.

Obviously, the contents of the thesaurus and the dictionary can be extended, each time a new XML standard or even, indeed, a localised schema is mapped into the CGML interlanguage. Thus, the interlanguage can continuously evolve through time in a type of lattice of cross-cutting processes that map existing and emerging tagging schemas.

By systematically building on the two logics described above, Cope and Kalantzis highlight that the interlanguage mechanism (or apparatus, as they call it) does not manage structure and semantics per se. Rather they suggest (in Chapter 13) that it automatically manages the structure and semantics of structure and semantics. Its mechanism is meta-structural and meta-semantic. It is aimed at interoperability of schemas which purport to describe the world rather than reference the world.

How the CGML system works

The key requirement for the CGML system to work on a particular XML document or content is that the XML standard underlying the document has already been mapped into the CGML interlanguage. The mapping process requires a comparison of the noun tags in the XML standard with the nouns or abstract nouns in the existing CGML ontology at the time of the mapping. This process requires the application of human interpretive intelligence. Generally speaking, there will be considerable overlap in noun tags between the CGML system and the XML standard: the ‘common ground’ between them. For the remaining tags, however, it will be necessary to construct transformation rules that explicitly map the noun tags in the standard to noun and abstract noun tags in CGML. Where this can’t be done, new noun (or abstract noun) tags are taken from the standard and added to CGML, which means placing the new tags in the context of the hierarchical taxonomy of tags that is CGML, and also making explicit the translation rules between the tags in the standard and the new tags that have been added to the CGML system.

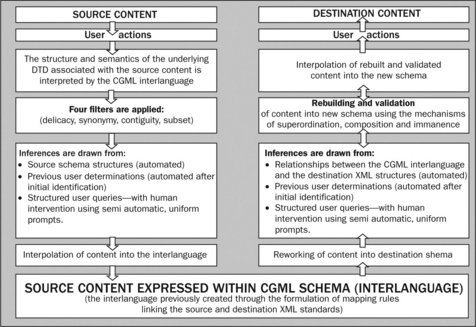

An overview of the resulting revised CGML translation/transformation system, as it is applied to XML content, is provided in Figure 14.4. We can describe how this system works on content as a two-stage process.

Stage 1 Merge the source XML content with the CGML XML schema

To begin with, the CGML interlanguage apparatus interprets the semantics and syntax of the source content. It is able to do this from the underlying XML standard (or schema) and the particular way the source content is expressed within the semantic and syntactic structure of the standard (or schema) itself. For this to be possible, it is, once again, necessary that each XML standard has already been mapped into the CGML interlanguage. The translation of the source content into the CGML interlanguage is then carried out by passing the source content through the delicacy, synonymn, contiguity and subset filters explained by Cope and Kalantzis in Chapter 13, ‘Creating an interlanguage of the social web’.

The translation can at times occur in automated ways, when inferences can be drawn from the underlying XML standard. Where it is not possible to construe any direct relationships between content elements from the source standard and the interlanguage, a structured query is thrown up for the user to respond to. It is in this process that human interpretive intelligence is applied to enable translation. User responses to these structured queries become part of the accumulated ‘recordings’ of semantic translations. These responses are then built up into a ‘bank’ of previous translations. The results stored in this bank can be used to refine the operation of the filters so that as the transformation process is repeated fewer and fewer structured queries are thrown up for the user to respond to. It is possible, in principle, for the filters to be ‘trained’, in a similar way to that in which voice recognition software is trained to recognise the speech of particular speakers. The user responses grow the knowledge about translation contained in the translation system, and also grow the ontology resident in CGML. This shows that ‘human interpretive intelligence’ is about solving problems that appear in the translation system, and raises the question of whether such a hybrid approach might have an advantage over other XML-based systems that do not provide for human intervention in problem solving and the growth of translation knowledge.

Stage 2 Transform CGML XML content into the destination standard

Once the content is interpolated into the CGML interlanguage, the content is structured at a sufficient level of delicacy to enable the CGML transformation system to function. Some reconfiguration of the content is necessary so that appropriate digital elements can be represented according to the semantic and syntactic structures of the destination XML standard. This process involves passing the content ‘backwards’ through only two of the filters but with the backwards filter constraints set according to the requirements of the destination standard. Only the contiguity and subset filters are required, because when content is structured within the CGML interlanguage apparatus, the content already exists at its lowest level of delicacy and semantic composition and thus the delicacy and synonymy filters are not needed. The three mechanisms associated with this backwards filtering process are superordination, composition and immanence and a number of other ‘sub-mechanisms’—as outlined by Cope and Kalantzis in Chapter 13, ‘Creating an interlanguage of the social web’.

This translation can also occur in automated ways when inferences can be drawn from the destination standard. In the case where the filter constraints prevent an automated passing of content, then a structured query is thrown up for the user to respond to. User responses are then built up into a bank of previous translations. The results stored in this bank can also be used to refine the operation of the filters so that, as the translation process is repeated, fewer and fewer structured queries are thrown up for the user to respond to in this second stage as well as in the first stage.

The COAX translation/transformation architecture

The COAX transformation system differs from the CGML system principally in the importance that verbs play in the way in which the COAX system manages semantics and syntax: ‘Verbs are… the most influential terms in the ontology, and nouns, adjectives and linking terms such as relators all derive their meanings, ultimately, from contexts and the verbs that characterize them’ (Rightscom 2006, p. 46).

Rightscom gives the reason for this:

COA semantics are based on the principle that meaning is derived from the particular functions which entities fulfil in contexts. An entity retains its distinct identity across any number of contexts, but its attributes and roles (and therefore its classifications) change according to the contexts in which it occurs (2006, p. 46).

The origins of this approach go back to the < indecs > metadata project. < indecs > was a project supported by the European Commission, and completed in 2000, which particularly focused on interoperability associated with the management of intellectual property. The project was designed as a fast track, infrastructure project aimed at finding practical solutions to interoperability affecting all types of rights-holders in a network, e-commerce environment. It focused on the practical interoperability of digital content identification systems and related rights metadata within multimedia e-commerce (Info 2000, p. 1).

The semantics and syntactical transformations associated with the COAX system depend on all tags within the source standard being mapped against an equivalent term in the COAX system. By working in this way, Rust (2005, slide 13) suggests that the COAX system can be understood as an ontology of ontologies. Rightscom explains the process by which an XML source standard is mapped into COAX:

For each schema a once-off specific ‘COA mapping’ is made, using Ontology_X. This mapping is made in ‘triples’, and it represents both the syntax and semantics of the schema. For example, it not only contains the syntactic information that element X is called ‘Author’ and has a Datatype of ‘String’ and Cardinality of ‘1-n’, but it contains the semantic information that ‘X IsNameOf Y’ and that ‘Y IsAuthorOf Z’. It is this latter dimension which is unusual and distinguishes the COAX approach from more simple syntactic mappings which do not make the semantics explicit (2006, p. 27).

Even though we are describing the COAX system as an XML to XML to XML translation system, it is entirely possible that this methodology could emerge into a fully fledged semantic web application that draws on RDF and OWL specifications.

The ways in which the COAX system specifies the triples it uses is in compliance with the W3C RDF standard subject–predicate–object triple model (Rightscom 2006, p. 16).

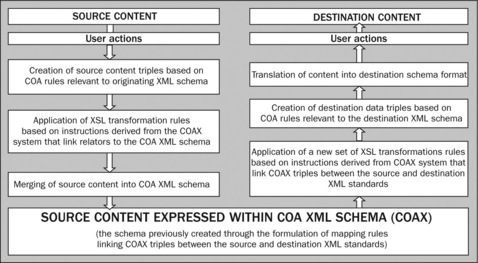

How the COAX transformation system works

The COAX transformation system works as a two-staged process (Figure 14.5).

Stage 1 Merging the source XML content with the COA XML schema

To begin with, all ‘elements’ from the source content are assigned ‘relators’ using a series of rules which are applied using the COAX transformation system. A ‘relator’ is an abstraction expressing a relationship between two elements—the domain and range of the relator (Rightscom 2006, p. 57). The relators may be distinct for each different XML standard and all originate from the COAX dictionary. The relators and the rules for assigning relators will have been created when each XML standard is mapped into the COAX system. Obviously for a user to be able to proceed in this way, it is necessary that each XML standard has already been mapped into the COAX system.

The linked elements created by the assigning of relators are expressed as XML structured triples. The COA model is ‘triple’ based, because content is expressed as sets of domain–relator–range statements such as ‘A HasAuthor B’ or ‘A IsA EBook’ (Rightscom 2006, p. 25). This domain–relator–range is in compliance with the W3C RDF standard subject–predicate–object triple model (Rightscom 2006, p. 16). These triples contain the semantic and syntactic information which is required for content transformation. Notice that relators must be constructed to express relationships between every pair of digital elements if these elements are to be used within the COAX transformation system. The aggregation of triples form the basis of the COAX data dictionary. Data dictionary items are created when the elements in the source and destination XML schemas are mapped against each using relators as the basis for mapping COAX triples.

Once the content is specified as a set of triples, then the COAX system uses the Extensible Stylesheet Language (XSL). XSL is an XML-based language, which is used for the transformation of XML documents (Wikipedia 2006) to further transform the source content, now expressed as triples, into a form whose semantics and syntax are compliant with the COAX schema. The XSL transformations (XSLTs) used to do this contain within them rules generated by Rightscom that are specific to the transformation of the content from the set of triples into the COAX interlanguage. For a user to be able to do this requires that the necessary rules and XSLT instructions have been mapped into the COAX system. Unlike the CGML XML content translation process, the COAX process of merging XML content into COAX is completely automatic and there is no facility for user queries to determine how content not represented in COAX may be integrated into the merged COAX ontology. Thus the use of human interpretive intelligence in COAX is limited to the contributions of Rightscom experts who create the bridge mappings of XML standards to COAX.

Stage 2 Transform the COAX content into the destination standard

Once the content is structured according to the COAX schema requirements, a new set of XSLTs is applied to the content in order to transfer the content from the COAX schema format into a set of triples which contain the semantic and syntactic information needed to convert the content into a form compatible with the destination XML standard. The new XSLTs used to do this also contain within them rules generated by Rightscom, and are specific to the transformation of COAX-formatted content to the destination standard. Again, for a user to be able to do this it is necessary that each XML standard has already been mapped into the COAX system.

A further series of rules are then applied using the COAX transformation system to translate the content, expressed as a set of triples, into the format of the destination XML standard. These rules will be different for each destination XML standard and are created when each XML standard is mapped into the COAX system. Again, for a user to be able to proceed in this way, it is necessary that the destination XML standard has already been mapped into the COAX system.

The ontology-based interlanguage approach: OntoMerge

In the ontology-based interlanguage approach, the transformation system or interlanguage (see Figure 14.1) is different from what we have described with CGML and COAX. This difference in approach arises because an ontology-based interlanguage approach does not result in an XML to XML to XML translation. Rather, it is an XML to ontology to XML translation. Like in the XML-based interlanguage approach, the transformation system is itself a merged ontology. But unlike the XML-based interlanguage approach the merged ontology is not expressed as an XML schema. In contrast, it is expressed as semantic web ontology through the use of object-oriented modelling languages such as Ontology Web Language (OWL). OWL is designed for use by applications that need to process the content of information instead of just presenting information to humans (W3C 2004a). Processing the content of information includes the ability to lodge queries across differing information systems that might be structured using different schema frameworks.

In achieving the objective of ‘processing content’ these merged ontologies, in turn can be used in two ways. First, they can assist with the translation of different ontologies through the continuous expansion of the transformation system to create larger, more expansive merged ontologies. Second, they can be used in the translation of one XML document to another. This highlights that XML schemas and ontologies are not the same thing. An XML schema is a language for restricting the structure of XML documents and provides a means for defining the structure, content and semantics of these documents (W3C 2000). In contrast, ontology represents the meaning of terms in vocabularies and the inter-relationships between those terms (W3C 2004a).

But despite the differences in the way the interlanguage mechanisms work, the overall system architecture is the same as that outlined in Figure 14.1. That is, the transformation system is the interlanguage, but the interlanguage is expressed as a semantic web ontology using object-orientated modelling languages. The ontology comprises a framework of terms, expressions and rules, which can be used as a basis for analysing documents encoded using the different XML schema that have been mapped into the transformation system and for supporting queries between different information systems that have been structured across different ontologies. To work through the differences in the ways the semantic web interlanguage systems work, we now turn to one example of such a translation/transformation architecture—OntoMerge.

OntoMerge: an example of an ontology-based translation system

OntoMerge is an online service for ontology translation, developed by Dejing Dou, Drew McDermott and Peishen Qui (2004a, 2004b) located at Yale University. It is an example of a translation/transformation architecture that is consistent with the design principles of the semantic web. Some of the design principles of the semantic web that form part of the OntoMerge approach include the use of formal specifications such as Resource Description Framework (RDF), which is a general-purpose language for representing information in the web (W3C 2004b); OWL and the predecessor language such as DARPA Agent Markup Language (DAML), the objective of which has been to develop a language and tools to facilitate the concept of the semantic web; Planning Domain Definition Language (PDDL) (Yale University undated a); and the Ontology Inference Layer (OIL).

To develop OntoMerge, the developers have also built their own tools to do translations between the PDDL and DAML. They have referred to this as PDDAML (Yale University undated b).

serves as a semi-automated nexus for agents and humans to find ways of coping with notational differences between ontologies with overlapping subject areas. OntoMerge is developed on top of PDDAML (PDDL-DAML Translator) and OntoEngine (inference engine).

and produces the concepts or instance data translated to the target ontology (Yale University undated c).

More recently, OntoMerge has acquired the capability to accept DAML + OIL and OWL ontologies, as well. Like OWL, DAML + OIL is a semantic markup language for web resources. It builds on earlier W3C standards such as RDF and RDF Schema, and extends these languages with richer modelling primitives (W3C 2001). For it to be functional, OntoMerge requires merged ontologies in its library. These merged ontologies specify relationships among terms from different ontologies.

OntoMerge relies heavily on Web-PDDL, a strongly typed, first-order logic language, as its internal representation language. Web-PDDL is used to describe axioms, facts and queries. It also includes a software system called OntoEngine, which is optimised for the ontology-translation task (Dou, McDermott and Qui 2004a, p. 2). Ontology translation may be divided into three parts:

![]() syntactic translation from the source ontology expressed in a web language, to an internal representation, e.g., syntactic translation from an XML language to an internal representation in Web-PDDL

syntactic translation from the source ontology expressed in a web language, to an internal representation, e.g., syntactic translation from an XML language to an internal representation in Web-PDDL

![]() semantic translation using this internal representation; this translation is implemented using the merged ontology derived from the source and destination ontologies, and the inference engine to perform formal inference

semantic translation using this internal representation; this translation is implemented using the merged ontology derived from the source and destination ontologies, and the inference engine to perform formal inference

![]() syntactic translation from the internal representation to the destination web language.

syntactic translation from the internal representation to the destination web language.

In doing the syntactic translations, there’s also a need to translate between Web-PDDL and OWL, DAML or DAML + OIL. OntoMerge uses its translator system PDDAML to do these translations:

Ontology merging is the process of taking the union of the concepts of source and target ontologies together and adding the bridging axioms to express the relationship (mappings) of the concepts in one ontology to the concepts in the other. Such axioms can express both simple and complicated semantic mappings between concepts of the source and target ontologies (Dou, McDermott and Qi 2004a, pp. 7–8).

Assuming that a merged ontology exists, located typically at some URL, OntoEngine tries to load it in. Then it loads the dataset (facts) in and does forward chaining with the bridging axioms, until no new facts in the target ontology are generated (Dou, McDermott and Qi 2004a, p. 12).

Merged ontologies created for OntoMerge act as a ‘bridge’ between related ontologies. However, they also serve as new ontologies in their own right and can be used for further merging to create merged ontologies of broader and more general scope.

Ontology merging requires human interpretive intelligence to work successfully, because ontology experts are needed to construct the necessary bridging axioms (or mapping terms) from the source and destination ontologies. Sometimes, also, new terms may have to be added to create bridging axioms, and this is another reason why merged ontologies have to be created from their component ontologies. A merged ontology contains all the terms of its components and any new terms that were added in constructing the bridging axioms.

Dou, McDermott, and Qi themselves emphasise heavily the role of human interpretive intelligence in creating bridging axioms:

In many cases, only humans can understand the complicated relationships that can hold between the mapped concepts. Generating these axioms must involve participation from humans, especially domain experts. You can’t write bridging axioms between two medical-informatics ontologies without help from biologists. The generation of an axiom will often be an interactive process. Domain experts keep on editing the axiom till they are satisfied with the relation expressed by it. Unfortunately, domain experts are usually not very good at the formal logic syntax that we use for the axioms. It is necessary for the axiom-generating tool to hide the logic behind the scenes whenever possible. Then domain experts can check and revise the axioms using the formalism they are familiar with, or even using natural-language expressions (Dou, McDermott and Qi 2004b, p. 14).

OntoMerge and the translation of XML content

It is in considering the problem of XML translations that a distinguishing feature of the OntoMerge system is revealed when compared with the CGML and COAX approaches. OntoMerge is not reliant on the declaration of XML standards in order for its transformation architecture to be developed. This reflects OntoMerge’s semantic web origins and the objective of ‘processing the content of information’. Because of this, the OntoMerge architecture has developed as a ‘bottom up’ approach to content translation. We say this because with OntoMerge, when the translation of XML content occurs there is no need to reference declared in XML standards in the OntoMerge transformation architecture. Nor is there any assumption that content needs to be XML standards based to enact successful translation. In other words, OntoMerge is designed to start at the level of content and work upwards towards effective translation. We highlight this perceived benefit, because, in principle, this bottom-up approach to translation has the benefit of bypassing the need for accessing XML standards-compliant content. We say work upwards because, as we emphasise in the next paragraph, OntoMerge relies on access to semantic web ontologies to execute successful translations.

Within the OntoMerge system architecture there is a need to distinguish between a ‘surface ontology’ and a ‘standard (or deep) ontology’. A surface ontology is an internal representation of the ontology derived from the source XML content when the OntoEngine inference engine is applied to the source content. In contrast, a standard ontology focuses on domain knowledge and thus is independent of the original XML specifications (Qui, McDermott and Dou 2004, p. 7).

Thus surface and standard ontologies in the ontology-based interlanguage approach appear to be equivalent to XML schemas and standards in the XML-based interlanguage approach. So, whereas with the XML-based interlanguage approach, there is a reliance on declared XML standards, with the ontology-based interlanguage approach there is a need to draw on published libraries of standard ontologies or semantic web ontologies. In translating XML content using the OntoMerge system architecture the dataset is merged with the surface ontology into what we have called a surface ontology dataset. In turn, this is subsequently merged with the standard ontology to create what we have termed a standard ontology dataset. The details of how this merging takes place are described in the following section. Once the content is expressed in the standard ontology dataset it can then be translated into a standard ontology dataset related to the destination content schema. The choice of the standard ontology is determined by its relatedness to the nature of the source content. In executing such translations of XML content, the OntoMerge system also uses bridging axioms. These are required in the translation between different standard ontology datasets. Thus with the OntoMerge system bridging axioms act as the transformation mechanisms and are central to the translation of XML content (Qui, McDermott and Dou 2004, p. 13). With both the CGML and COAX systems, the transformation mechanisms play a similar role to that of the bridging axioms in OntoMerge. In CGML, we saw that rules associating noun-to-noun mappings were critical to performing translations. For COAX, we saw that COAX triple to COAX triple mappings were the most influential terms in the ontology. In OntoMerge, the key to translation are the bridging axioms that map predicates to predicates in the source and destination standard ontologies respectively.

Predicates are terms that relate subjects and objects and are central to RDF and RDF triples. In RDF, a resource or subject (or name) is related to a value or object by a property. The property, the predicate (or relator) expresses the nature of the relationship between the subject and the object (or the name and the value). The assertion of an RDF triple says that some relationship indicated by the triple holds between the things donated by the subject and the object of the triple (W3C 2004b). Examples of predicates include: ‘author’, ‘creator’, ‘personal data’, ‘contact information’ and ‘publisher’. Some examples of statements using predicates are:

Routledge is the publisher of All Life Is Problem Solving

John’s postal address is 16 Waverly Place, Seattle, Washington

With OntoMerge, then, predicates are nouns, and bridging axioms are mapping rules that use predicates to map nouns-to-nouns as in CGML. However, there are significant differences in approach because these nouns as predicates carry with them the inferred relationships between the subjects and objects that define them as predicates in the first place. This is the case even though the bridging axioms are not defined using triples mapped to triples as in the case of COAX.

The importance of predicates is fundamental to the OntoMerge inference engine, OntoEngine, because one of the ways of discriminating ‘the facts’ embedded within datasets loaded into OntoEngine is through the use of predicates. That is, predicates form the second tier of indexing structure of OntoEngine. This in turn provides the foundation for OntoEngine to undertake automated reasoning: ‘When some dataset in one or several source ontologies are input, OntoEngine can do inference in the merged ontology, projecting the resulting conclusions into one of several target ontologies automatically’ (Dou, McDermott and Qui 2004b, p. 8).

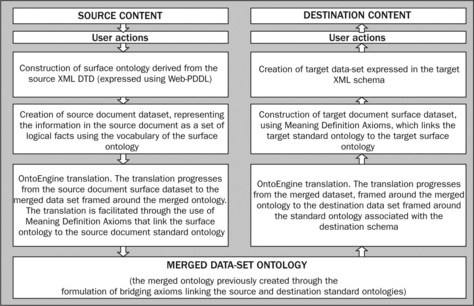

How the OntoMerge system works

There are some differences in the way OntoMerge is used to translate XML content versus how it is applied to translate ontologies. The general approach taken to XML content translation has been outlined by Dou, McDermott and Qi as follows:

We can now think of dataset translation this way: Take the dataset and treat it as being in the merged ontology covering the source and target. Draw conclusions from it. The bridging axioms make it possible to draw conclusions from premises some of which come from the source and some from the target, or to draw target-vocabulary conclusions from source-language premises, or vice versa. The inference process stops with conclusions whose symbols come entirely from the target vocabulary; we call these target conclusions. Other conclusions are used for further inference. In the end, only the target conclusions are retained; we call this projecting the conclusions into the target ontology. In some cases, backward chaining would be more economical than the forward-chain/project process… In either case, the idea is to push inferences through the pattern (2004b, p. 5).

The details of this approach to translating XML documents into other XML standards are outlined in Figure 14.6. As with the CGML and COAX approaches, we have also described how this system works as a two-staged process.

Stage 1 Transform source content schema into the merged ontology data set

To begin with the OntoEngine inference engine is used to build automatically a surface ontology from the source XML content’s Document Type Definition (DTD) file. A DTD defines the legal building blocks of an XML document and defines the document structure with a list of legal elements and attributes (W3 Schools undated). The surface ontology is the internal representation of the ontology derived from the source DTD file and is expressed using Web-PDDL (mentioned previously). Drawing on the vocabulary of the surface ontology, OntoEngine is used to extract automatically a dataset from the original XML content and the surface ontology. Though Qui, McDermott and Dou (2004, p. 5) don’t use this term, we call the new dataset the source document surface dataset.

This dataset represents the information in the source document ‘as a set of logical facts using the vocabulary of the surface ontology’. The need then is to merge this dataset further into a standard ontology dataset. In order to accomplish this merging, there is a requirement that the surface ontology has been merged with the standard ontology through the formulation of different types of bridging axioms called Meaning Definition Axioms (MDAs) (Qui, McDermott and Dou 2004, p. 2). According to Qui, McDermott and Dou, MDAs are required to assign meaning to the source XML content. This is achieved by relating the surface ontology to the particular standard ontology. As in the case of formulating bridging axioms, these MDAs are created with the help of human interpretive intelligence. Once these MDAs are formulated, the merging process can proceed with an automatic translation of the source document surface dataset to a dataset expressed in the standard ontology. The translation is also undertaken using the OntoEngine inference engine. The translation is able to proceed because of inferences derived from logical facts that are accessible from the use of MDAs that link the surface ontology dataset to the standard ontology. We now call this the source document merged ontology dataset.

Stage 2 Transform the source document merged ontology dataset into the destination XML schema

The source document merged ontology dataset is then translated to the destination document merged ontology. This translation is executed using OntoEngine and once again this translation is conditional on the formulation of bridging axioms that link the source and destination standard ontologies. The translation results in the creation of a destination document merged ontology dataset. In turn, this is translated into a destination document surface ontology dataset also using OntoEngine. This translation is conditional on MDAs being formulated that link the destination standard ontology with the destination surface ontology. The destination document surface ontology dataset is subsequently used along with the DTD file for the destination XML standard or schema, to create a destination dataset expressed in the destination XML standard (or schema). This completes the translation.

Differences in approach

The OntoMerge approach to XML translation is different from the CGML and COAX approaches in that it is pursued in a much more bottom-up rather than top-down manner. That is, when someone is faced with an XML translation problem and wants to use the OntoMerge approach, they don’t look to translate their surface ontologies to a single interlanguage model as represented by a model like the CGML or COAX systems. Rather, they first have to search web libraries to try to find a standard ontology (written in DAML, DAML + OIL or OWL) closely related to the knowledge domain of the surface ontology that has been merged with the surface ontology through the formulation of MDAs. Second, they also need to search web libraries for an earlier merged ontology that already contains bridging axioms linking the source standard ontology and the destination standard ontology. Third, they also need to search web libraries for previously developed ontologies that merge the destination standard ontology with the destination surface ontology through the formulation of meaning definition axioms. If no such merged ontologies exist, then no translation can be undertaken until domain experts working with OntoMerge can construct MDAs mapping the predicates in their surface ontologies to an ontology already available in DAML, DAML + OIL or OWL ontology libraries. Thus far, there appears to be no standard deep ontological framework that merges all the ontologies that have been developed in OntoMerge application work. There are many islands in the OntoMerge stream. But the centralised integration offered by CGML and COAX is absent.

Evaluating approaches to interoperability

In previous sections we have discussed the general nature of the transformation/translation problem from digital content expressed in one XML schema to the same digital content expressed in another. We have then reviewed three systems for performing such translations. Two of these systems originated in the world of XML itself and aim to transform the ad hoc ‘patch-and-mend’ and rule-based tag-to-tag approaches to transformation with something that would be more automatic, more efficient and equally effective. The third system originated in the world of the semantic web as a solution to the need for a software tool that would assist in performing ontology translations. The OntoMerge system was developed to solve this generalised problem and was found to be applicable to the problem of XML schema translations. As we have seen previously, all three systems rely on a merged ontology approach that creates either a generalised interlanguage, or in the case of OntoMerge a less generalised non-XML-based interlanguage that will work to translate one XML document to another.

In this section of the chapter we confront the problem of system choice. How do we select among competing architectures designed to enable XML translations? System selection, of course, requires one or more criteria, which we now proceed to outline and discuss. Then we illustrate the application of the criterion or criteria to the three illustrative systems reviewed earlier.

A generalised criterion for selecting among systems develops from the problem of semantic incommensurability that arises during the process of translating XML content between different schemas or standards. It is the criterion of ease of creating such commensurability (Firestone and McElroy 2003, p. 161; Popper 1970, pp. 56–57) where needed, so that transformations of XML content between one schema (or standard) and another can be implemented. In general, the system supporting the greatest relative ease and convenience in creating commensurability is to be preferred.