3.6. Constrained Problems

The major difference between constrained and unconstrained problems is that, for a constrained problem, an optimal solution must be sought within a feasible region, whereas for an unconstrained problem the feasible region is the entire design domain. For a constrained problem, bringing an infeasible design into the feasible region is critical; to do so, the gradients of the active constraints are taken into consideration when determining the search direction for the next design. In this section, we first outline the nature of the constrained optimization problem and the basic concepts behind its solution techniques. In Section 3.6.2, we discuss a widely accepted strategy for dealing with constraint functions, the so-called ε-active strategy. Thereafter, in Sections 3.6.3–3.6.5, we discuss the mainstream techniques for solving constrained optimization problems: sequential linear programming (SLP), sequential quadratic programming (SQP), and the feasible direction method. These techniques are capable of solving general optimization problems with multiple constraints and many design variables. Before closing out this section, we introduce the penalty method, which converts a constrained problem into an unconstrained one that can then be solved using the methods discussed in Section 3.5. For illustration, we use simple examples with one or two design variables. As in Section 3.5, we offer sample MATLAB scripts (see Appendix A) for solving the example problems.

3.6.1. Basic Concept

Recall the mathematical definition of the constrained optimization problem:

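$$\text{Minimize} \quad f(\mathbf{x}) \tag{3.67a}$$

$$\text{subject to} \quad g_i(\mathbf{x}) \le 0, \quad i = 1, \ldots, m \tag{3.67b}$$

$$h_j(\mathbf{x}) = 0, \quad j = 1, \ldots, p \tag{3.67c}$$

$$x_k^{\ell} \le x_k \le x_k^{u}, \quad k = 1, \ldots, n \tag{3.67d}$$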

As with unconstrained optimization, all numerical methods for constrained problems are based on an iterative process in which the design is updated along a search direction n by a step size α. The next design point xk+1 is obtained from the current design point xk (some methods also use information from previous design iterations) as

$$\mathbf{x}^{k+1} = \mathbf{x}^{k} + \alpha_k \mathbf{n}^{k} \tag{3.68}$$

For an unconstrained problem, the search direction n considers only the gradient of the objective function. For constrained problems, however, optimal solutions must be sought in the feasible region. Therefore, the active constraints, in addition to the objective function, must be considered when determining both the search direction and the step size. As with unconstrained problems, all algorithms need an initial design to start the iterative process. The difference is that, for a constrained problem, the starting design can be either feasible or infeasible, as illustrated in Figure 3.15a for a constrained optimization problem with two design variables, x1 and x2. The feasible region of the problem is shown on the surface of the objective function and is also projected onto the x1–x2 plane.

If the initial design is inside the feasible region, such as point A0 or B0, we minimize the objective function by moving along a descent direction (say, the steepest descent direction), as if we were dealing with an unconstrained problem. We continue these iterations until either a minimum point is reached, as on the search path starting at point A0, or a constraint is encountered, that is, the boundary of the feasible region is reached, as on the path starting at point B0. Once the constraint boundary is encountered at point B1, one strategy is to travel along a line tangent to the boundary, such as the direction B1B2 illustrated in Figure 3.15b. This leads to an infeasible point, from which the constraint violations are corrected to return to the feasible point B3. From there, the preceding steps are repeated until the optimum point is reached.

When there are no equality constraints, another strategy is to deflect the tangential direction B1B2 toward the feasible region by a small angle θ. A line search is then performed through the feasible region to reach the boundary point B4, as shown in Figure 3.15b, and the procedure is repeated from there.

When the starting point is infeasible, such as point C0 or D0 in Figure 3.15a, one strategy is to correct the constraint violations to reach the constraint boundary. From there, the strategies described in the preceding paragraphs can be followed to reach the optimum point. For D0, for example, a path similar to B1B2B3 or B1B4 in Figure 3.15b is followed. The case of point C0 is easier because the descent direction of the objective function also corrects the constraint violations.

A good analogy for finding the minimum of a constrained problem is a ball rolling in a fenced, downhill field. The fence is the boundary of the feasible region, and the surface of the field is the objective function. When the ball is released at a location (i.e., the initial design), it rolls downhill under gravity. If the initial point is chosen such that the ball does not encounter the fence, the ball rolls to a local low point (a minimum point). If the ball hits the fence, it rolls along the fence until it reaches a low point. If the initial point is outside the fenced area, the ball must first be thrown into the fenced area before it starts rolling.

Several algorithms based on the strategies described above have been developed and evaluated. Some algorithms work better than others for certain classes of problems. In this section, we focus on general algorithms that place no restriction on the form of the objective or constraint functions. Most of the algorithms described in this chapter can handle both feasible and infeasible initial designs.

In general, numerical algorithms for solving constrained problems start by linearizing the objective and constraint functions at the current design. The linearized subproblem is solved to determine the search direction n. Once the search direction is found, a line search is carried out to find an adequate step size α for the next design iteration. Following these general steps, we introduce three methods: SLP, SQP, and the feasible direction method. Before discussing these techniques, we present the ε-active strategy, which determines the constraints to be treated as active during the design iterations.

3.6.2. ε-Active Strategy

An ε-active constraint strategy (Arora 2012), shown in Figure 3.16, is often employed in solving constrained optimization problems. The inequality constraints in Eq. 3.67b and the equality constraints in Eq. 3.67c are first normalized by their respective bounds:

![]() (3.69a)

and

![]() (3.69b)

Typically, when bi (or ei) lies between two parameters, CT (typically −0.03) and CTMIN (typically 0.005), the constraint gi (or hj) is active, as shown in Figure 3.16. When bi is less than CT, the constraint is inactive (feasible). When bi is larger than CTMIN, the constraint is violated. Note that ε = CTMIN − CT, which is 0.035 for these typical values.
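The classification rule above can be written as a small Python function. This is a minimal sketch that assumes the normalized value bi of Eq. 3.69 has already been computed; the function name `classify_constraint` is hypothetical, and treating both ends of the band CT ≤ bi ≤ CTMIN as active is an assumption.

```python
# Typical thresholds quoted in the text for the epsilon-active strategy.
CT = -0.03     # below CT: constraint is inactive
CTMIN = 0.005  # above CTMIN: constraint is violated


def classify_constraint(b, ct=CT, ctmin=CTMIN):
    """Classify a normalized constraint value b (cf. Eq. 3.69).

    Hypothetical helper; the band ct <= b <= ctmin is treated as
    epsilon-active (inclusive bounds are an assumption).
    """
    if b < ct:
        return "inactive"
    if b <= ctmin:
        return "active"
    return "violated"


values = [-0.50, -0.02, 0.003, 0.10]
print([classify_constraint(b) for b in values])
print("epsilon =", CTMIN - CT)  # width of the epsilon-active band
```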

3.6.3. The Sequential Linear Programming Algorithm

The original optimization problem stated in Eq. 3.67 is first linearized by writing first-order Taylor expansions of the objective and constraint functions at the current design xk, as follows.

Minimize the linearized objective function:

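$$f(\mathbf{x}^{k} + \Delta\mathbf{x}^{k}) \approx f(\mathbf{x}^{k}) + \nabla f^{T}(\mathbf{x}^{k})\,\Delta\mathbf{x}^{k} \tag{3.70a}$$

subject to the linearized inequality constraints

$$g_i(\mathbf{x}^{k} + \Delta\mathbf{x}^{k}) \approx g_i(\mathbf{x}^{k}) + \nabla g_i^{T}(\mathbf{x}^{k})\,\Delta\mathbf{x}^{k} \le 0, \quad i = 1, \ldots, m \tag{3.70b}$$

and the linearized equality constraints

$$h_j(\mathbf{x}^{k} + \Delta\mathbf{x}^{k}) \approx h_j(\mathbf{x}^{k}) + \nabla h_j^{T}(\mathbf{x}^{k})\,\Delta\mathbf{x}^{k} = 0, \quad j = 1, \ldots, p \tag{3.70c}$$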

in which ∇f(xk), ∇gi(xk), and ∇hj(xk) are the gradients of the objective function, the ith inequality constraint, and the jth equality constraint, respectively, evaluated at the current design xk; Δxk is the design change; and ≈ denotes approximate equality.
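To make the linearization concrete, the sketch below builds the first-order Taylor model of a single function at xk, approximating the gradient by forward differences. Finite differences are an assumption made for illustration (analytical or sensitivity-based gradients can be used instead); the helper names `fd_gradient` and `linearize` and the example constraint g(x) = x1²/6 + x2²/6 − 1 are hypothetical.

```python
import numpy as np


def fd_gradient(fun, x, h=1e-6):
    """Forward-difference approximation of the gradient of fun at x."""
    x = np.asarray(x, dtype=float)
    f0 = fun(x)
    grad = np.empty_like(x)
    for i in range(x.size):
        xp = x.copy()
        xp[i] += h
        grad[i] = (fun(xp) - f0) / h
    return grad


def linearize(fun, xk, h=1e-6):
    """Return the first-order Taylor model dx -> fun(xk) + grad(xk)^T dx."""
    xk = np.asarray(xk, dtype=float)
    f0 = fun(xk)
    grad = fd_gradient(fun, xk, h)
    return lambda dx: f0 + grad @ np.asarray(dx, dtype=float)


def g(x):
    """Illustrative inequality constraint g(x) <= 0."""
    return x[0] ** 2 / 6.0 + x[1] ** 2 / 6.0 - 1.0


g_lin = linearize(g, [1.0, 1.0])
# Linear prediction vs. exact value for the design change dx = (0.1, 0.1)
print(g_lin([0.1, 0.1]), g(np.array([1.1, 1.1])))
```

The gap between the two printed values (about 0.0033) is the second-order term neglected by the linearization, which is why the design change per iteration must be kept reasonably small.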

To simplify the mathematical notations in our discussion, we rewrite the linearized equations in Eq. 3.70 as

![]() (3.71a)

![]() (3.71b)

![]() (3.71c)

![]() (3.71d)

where

![]()

![]()

![]()

![]()

![]()

![]()

![]()

and

![]()

Note that f(xk) is dropped in Eq. 3.71a because it is a constant at the kth iteration and does not affect the solution dk. Δiℓk and Δiuk are the move limits, that is, the maximum allowed decrease and increase in the ith design variable at the kth design iteration. The move limits make the linearized subproblem bounded and give the design changes directly, without a line search for a step size α; therefore, no line search is required in SLP. Choosing adequate move limits is critical to SLP. More about the move limits will be discussed in Example 3.16.

As discussed earlier, the SLP algorithm starts with an initial design x0. At the kth design iteration, we evaluate the objective and constraint functions, as well as their gradients, at the current design xk. We then select move limits Δiℓk and Δiuk to define the LP subproblem of Eq. 3.71, solve the subproblem for dk, and update the design for the next iteration as xk+1 = xk + dk. The process repeats until the convergence criteria are met. In general, the convergence criteria for an LP subproblem include

![]() (3.72)

Although the SLP algorithm is a simple and straightforward approach to solving constrained optimization problems, it should not be used as a black-box approach for engineering design problems. The selection of move limits is in essence trial and error and can be best achieved in an interactive mode. Also, the method may not converge to the precise minimum because no descent function is defined, and the line search is not performed along the search direction to compute a step size. Nevertheless, this method may be used to obtain improved designs in practice. It is a good method to include in our toolbox for solving constrained optimization problems.
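The SLP iteration described above can be sketched in Python with SciPy's `linprog` as the LP solver. This is a minimal illustration, not the MATLAB script of Appendix A: it handles inequality constraints only and uses the same move limit for every variable, and the move-limit halving rule, the penalty-based acceptance test, and the convergence test (current design feasible within `eps1` and ‖d‖ ≤ `eps2`) are assumptions chosen for illustration. The function `slp`, its parameters, and the test problem are hypothetical.

```python
import numpy as np
from scipy.optimize import linprog


def slp(f, grad_f, g, jac_g, x0, move=0.5, penalty=100.0,
        eps1=1e-6, eps2=1e-6, max_iter=100):
    """Minimal SLP sketch for: minimize f(x) subject to g(x) <= 0.

    Each iteration solves the LP subproblem
        minimize   grad_f(xk)^T d
        subject to g(xk) + jac_g(xk) d <= 0,  -delta <= d <= delta
    where delta holds the (equal) move limits of all design variables.
    """
    x = np.asarray(x0, dtype=float)
    delta = np.full(x.size, move)

    def merit(z):
        # Assumed acceptance test: objective plus a penalty on the largest violation.
        return f(z) + penalty * max(0.0, float(np.max(g(z))))

    for _ in range(max_iter):
        gx = np.asarray(g(x), dtype=float)
        A = np.atleast_2d(jac_g(x))
        res = linprog(grad_f(x), A_ub=A, b_ub=-gx,
                      bounds=list(zip(-delta, delta)), method="highs")
        if not res.success:
            raise RuntimeError("LP subproblem failed; try larger move limits")
        d = res.x
        # Assumed convergence test: current design feasible and design change tiny.
        if max(0.0, float(np.max(gx))) <= eps1 and np.linalg.norm(d) <= eps2:
            break
        if merit(x + d) < merit(x):
            x = x + d              # accept the step: x_{k+1} = x_k + d_k
        else:
            delta = 0.5 * delta    # reject the step and tighten the move limits
    return x


# Illustrative problem: minimize (x1-2)^2 + (x2-2)^2 subject to x1 + x2 - 2 <= 0.
def f(x):
    return (x[0] - 2.0) ** 2 + (x[1] - 2.0) ** 2


def grad_f(x):
    return np.array([2.0 * (x[0] - 2.0), 2.0 * (x[1] - 2.0)])


def g(x):
    return np.array([x[0] + x[1] - 2.0])


def jac_g(x):
    return np.array([[1.0, 1.0]])


x_opt = slp(f, grad_f, g, jac_g, [0.0, 0.0])
print(x_opt)
```

On this toy problem (optimum at x = (1, 1)), the first two LP steps run to the move limits and land on the optimum. Any nonzero step the LP subproblem proposes afterward lies along the constraint boundary and worsens the design, so it is rejected and the move limits shrink until ‖d‖ falls below `eps2`. This kind of oscillation is why the choice of move limits is so important in SLP.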

3.6.4. The Sequential Quadratic Programming Algorithm

The SQP algorithm incorporates second-order information about the problem functions in determining a search direction n and step size α. A search direction in the design space is calculated by utilizing the values and the gradients of the objective and constraint functions. A quadratic programming subproblem is defined as

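$$\text{Minimize} \quad \nabla f^{T}(\mathbf{x}^{k})\,\mathbf{d} + \tfrac{1}{2}\,\mathbf{d}^{T}\mathbf{d} \tag{3.74a}$$

$$\text{subject to} \quad g_i(\mathbf{x}^{k}) + \nabla g_i^{T}(\mathbf{x}^{k})\,\mathbf{d} \le 0, \quad i = 1, \ldots, m \tag{3.74b}$$

$$h_j(\mathbf{x}^{k}) + \nabla h_j^{T}(\mathbf{x}^{k})\,\mathbf{d} = 0, \quad j = 1, \ldots, p \tag{3.74c}$$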

in which a quadratic term in d is added to the linearized objective function, and the constraint functions (3.74b) and (3.74c) are identical to those of the LP subproblem, except that no move limits need to be defined. The solution d of the QP subproblem defines the search direction n (where n = d/||d||). Once the search direction is determined, a line search is carried out to find an adequate step size α. The process repeats until the convergence criteria defined in Eq. 3.72 are met.
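A minimal sketch of the SQP direction-finding step follows. It assumes the quadratic term is ½dᵀd (an identity Hessian, a common simplified choice; a quasi-Newton approximation of the Hessian of the Lagrangian is another widely used option). SciPy's SLSQP routine is borrowed here purely as a solver for the small QP subproblem; the function name `qp_direction` and the example data are hypothetical, and the line search for α that would follow is not shown.

```python
import numpy as np
from scipy.optimize import minimize


def qp_direction(grad_f, gx, A, hx=None, N=None):
    """Solve the QP subproblem for the design change d (sketch):

        minimize   grad_f^T d + 0.5 d^T d
        subject to gx + A d <= 0    (linearized inequality constraints)
                   hx + N d  = 0    (linearized equality constraints, optional)

    Returns d and the normalized search direction n = d / ||d||.
    """
    c = np.asarray(grad_f, dtype=float)
    gx = np.asarray(gx, dtype=float)
    A = np.atleast_2d(np.asarray(A, dtype=float))
    # SciPy's "ineq" convention is fun(d) >= 0, so the inequality is negated.
    cons = [{"type": "ineq", "fun": lambda d: -(gx + A @ d), "jac": lambda d: -A}]
    if hx is not None:
        hx = np.asarray(hx, dtype=float)
        N = np.atleast_2d(np.asarray(N, dtype=float))
        cons.append({"type": "eq", "fun": lambda d: hx + N @ d, "jac": lambda d: N})
    # SLSQP is used here only as a convenient solver for this small QP.
    res = minimize(lambda d: c @ d + 0.5 * d @ d, np.zeros(c.size),
                   jac=lambda d: c + d, constraints=cons, method="SLSQP")
    d = res.x
    norm = np.linalg.norm(d)
    return d, (d / norm if norm > 0 else d)


# Illustrative data: grad f = (-3, -3) and one linearized constraint -1 + d1 + d2 <= 0
d, n = qp_direction([-3.0, -3.0], [-1.0], [[1.0, 1.0]])
print(d, n)
```

For these data, the unconstrained QP step −∇f = (3, 3) would violate the linearized constraint, so the solution is pulled back onto it: d = (0.5, 0.5), and n points along (1, 1)/√2.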

3.6.5. Feasible Direction Method

The basic idea of the feasible direction method is to determine a search direction that moves from the current design point to an improved feasible point in the design space. Thus, given a design xk, an improving feasible search direction nk is determined such that, for a sufficiently small step size αk > 0, the new design xk+1 = xk + αknk is feasible and the new objective function value is smaller than the current one; that is, f(xk+1) < f(xk). Note that n is a normalized vector, defined as n = d/||d||, where d is the unnormalized search direction obtained from the subproblem discussed below.

Because the objective function must decrease along the search direction dk without violating the constraints, and considering only inequality constraints, the direction must satisfy

$$\nabla f^{T}(\mathbf{x}^{k})\,\mathbf{d}^{k} < 0 \tag{3.76}$$

and

$$\nabla g_i^{T}(\mathbf{x}^{k})\,\mathbf{d}^{k} < 0, \quad i \in I_k \tag{3.77}$$

Ik is the potential constraint set at the current point, defined as

$$I_k = \left\{\, i \;\middle|\; g_i(\mathbf{x}^{k}) + \varepsilon \ge 0,\ i = 1, \ldots, m \,\right\} \tag{3.78}$$

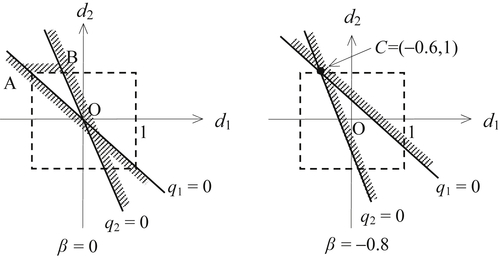

Note that ε is a small positive number selected to determine the ε-active constraints, as discussed in Section 3.6.2, and that gi(x) is normalized as in Eq. 3.69a. The inequality constraints in the set of Eq. 3.78 are either violated or ε-active, meaning that they must be considered in determining a search direction that brings the design into the feasible region. Equations 3.76 and 3.77 are referred to as the usability and feasibility requirements, respectively. A geometric interpretation of these requirements is shown in Figure 3.17 for a two-variable optimization problem, in which the search direction n points into the usable-feasible region.
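The usability and feasibility requirements, together with the potential constraint set, can be checked directly for a candidate direction. In this sketch, the constraint values are assumed to be normalized as in Eq. 3.69a, eps = 0.03 is only an illustrative default, and the function names and data are hypothetical.

```python
def potential_set(gvals, eps):
    """Indices of violated or epsilon-active constraints (cf. Eq. 3.78)."""
    return [i for i, gi in enumerate(gvals) if gi + eps >= 0.0]


def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))


def is_usable_feasible(d, grad_f, gvals, grads_g, eps=0.03):
    """Check the usability (Eq. 3.76) and feasibility (Eq. 3.77) requirements."""
    usable = dot(grad_f, d) < 0.0
    feasible = all(dot(grads_g[i], d) < 0.0 for i in potential_set(gvals, eps))
    return usable and feasible


grad_f = [-1.0, -1.0]                 # gradient of f at xk
gvals = [0.0, -0.5]                   # normalized g1 (active) and g2 (inactive)
grads_g = [[1.0, 0.0], [0.0, 1.0]]    # gradients of g1 and g2 at xk
print(is_usable_feasible([-0.5, 1.0], grad_f, gvals, grads_g))  # True
print(is_usable_feasible([1.0, 0.0], grad_f, gvals, grads_g))   # False
```

The second direction reduces f but increases the active constraint g1, so it fails the feasibility requirement. Because g2 is not in the potential set, it places no condition on d.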

This method has been developed and applied mostly to optimization problems with inequality constraints. This is because the search direction n is determined from a linearized subproblem (discussed next) at the current feasible point, and the step size α is chosen to reduce the objective function while maintaining the feasibility of the design. Because linear approximations are used, it is difficult to maintain feasibility with respect to equality constraints. Although some procedures have been developed to treat equality constraints in these methods, we describe the method only for problems with inequality constraints.

The desired search direction d must satisfy the usability and feasibility requirements while giving the largest reduction in the objective function. Mathematically, it is obtained by solving the following linear subproblem in d:

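$$\text{Minimize} \quad \beta \tag{3.79a}$$

$$\text{subject to} \quad \nabla f^{T}(\mathbf{x}^{k})\,\mathbf{d} \le \beta \tag{3.79b}$$

$$\nabla g_i^{T}(\mathbf{x}^{k})\,\mathbf{d} \le \beta, \quad i \in I_k \tag{3.79c}$$

$$-1 \le d_j \le 1, \quad j = 1, \ldots, n \tag{3.79d}$$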

Note that this is a linear programming problem. If β < 0, then d is an improving feasible direction. If β = 0, then the current design satisfies the KKT necessary conditions and the optimization process is terminated. To compute the improved design in this direction, a step size α is needed.

In the constraints of the subproblem stated in Eqs. 3.79b and 3.79c, the same parameter β is employed. As demonstrated in Case B of Example 3.18, using the same β leads to a search direction n that splits the angle between −∇f and −∇g1, where g1 is an active constraint function.

To determine a better feasible direction d, the constraints of Eq. 3.79c can be modified as

$$\nabla g_i^{T}(\mathbf{x}^{k})\,\mathbf{d} \le \theta_i\,\beta, \quad i \in I_k \tag{3.81}$$

where θi is called the push-off factor. The greater the value of θi, the more the direction vector d is pushed into the feasible region. The reason for introducing θi is to prevent the iterations from repeatedly hitting the constraint boundary and slowing down the convergence.
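The direction-finding subproblem can be posed directly as an LP in the unknowns d and β. This sketch uses SciPy's `linprog`; it assumes normalized constraint values, forms the potential set with an illustrative eps = 0.03, and takes theta = 1 for the constraints with a common β, while other theta values give the push-off form. The function name `feasible_direction`, its defaults, and the example data are hypothetical.

```python
import numpy as np
from scipy.optimize import linprog


def feasible_direction(grad_f, gvals, grads_g, eps=0.03, theta=1.0):
    """Direction-finding LP of the feasible direction method (sketch):

        minimize   beta
        subject to grad_f^T d     <= beta
                   grad_g_i^T d   <= theta_i * beta,   i in I_k
                   -1 <= d_j <= 1

    theta may be a scalar or one push-off factor per constraint.
    Returns (d, beta, I_k).
    """
    grad_f = np.asarray(grad_f, dtype=float)
    gvals = np.asarray(gvals, dtype=float)
    grads_g = np.atleast_2d(np.asarray(grads_g, dtype=float))
    theta = np.broadcast_to(np.asarray(theta, dtype=float), gvals.shape)
    n = grad_f.size
    active = [i for i, gi in enumerate(gvals) if gi + eps >= 0.0]  # I_k
    # Unknowns z = [d_1, ..., d_n, beta]; each row r encodes r . z <= 0.
    rows = [np.append(grad_f, -1.0)]
    rows += [np.append(grads_g[i], -theta[i]) for i in active]
    cost = np.zeros(n + 1)
    cost[-1] = 1.0                                   # minimize beta
    bounds = [(-1.0, 1.0)] * n + [(None, None)]      # beta is unrestricted
    res = linprog(cost, A_ub=np.vstack(rows), b_ub=np.zeros(len(rows)),
                  bounds=bounds, method="highs")
    if not res.success:
        raise RuntimeError(res.message)
    d, beta = res.x[:n], res.x[n]
    return d, beta, active


d, beta, active = feasible_direction([-1.0, -1.0], [0.0, -0.5],
                                     [[1.0, 0.0], [0.0, 1.0]])
print(d, beta, active)
```

For these data the LP gives β = −0.5 < 0, so d = (−0.5, 1) is an improving feasible direction; g2 lies outside the potential set and imposes no condition on d. Increasing θ for g1 pushes d further away from the g1 boundary.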

3.6.6. Penalty Method

A penalty method replaces a constrained optimization problem with a series of unconstrained problems whose solutions ideally converge to the solution of the original constrained problem. The unconstrained problems are formed by adding to the objective function a term, called a penalty function, that consists of a penalty parameter multiplied by a measure of constraint violation. This measure is nonzero when the constraints are violated and zero in the region where no constraints are violated.

Recall that the constrained optimization problem can be stated as

$$\text{Minimize} \quad f(\mathbf{x}), \quad \mathbf{x} \in S \tag{3.83}$$

where S is the set of feasible designs defined by equality and inequality constraints. Using the penalty method, Eq. 3.83 is first converted to an unconstrained problem as

$$\Phi(\mathbf{x}, r_p) = f(\mathbf{x}) + r_p\, p(\mathbf{x}) \tag{3.84}$$

where f(x) is the original objective function, p(x) is an imposed penalty function, and rp is a multiplier that determines the magnitude of the penalty. The function Φ(x, rp) is called the pseudo-objective function.

There are numerous ways to create a penalty function. One of the simplest is the exterior penalty (Vanderplaats 2007), in which the penalty function is defined as

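$$p(\mathbf{x}) = \sum_{i} \left[\max\left(0,\, g_i(\mathbf{x})\right)\right]^{2} + \sum_{j} \left[h_j(\mathbf{x})\right]^{2} \tag{3.85}$$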

From Eq. 3.85, we see that no penalty is imposed if all constraints are satisfied. However, whenever one or more constraints are violated, the squares of the violated constraint values are included in the penalty function.

If we choose a small value for the multiplier rp, the pseudo-objective function Φ(x, rp) can be minimized easily, but the solution may have large constraint violations. On the other hand, a large value of rp ensures near satisfaction of all constraints but may create a poorly conditioned optimization problem that is unstable and difficult to solve numerically. Therefore, a better strategy is to start with a small rp and minimize Φ(x, rp). Then, we increase rp by a factor γ (say, γ = 10) and minimize Φ(x, rp) again, each time using the solution of the previous optimization problem as the initial design to speed up the process. We repeat these steps until a satisfactory result is obtained. In general, the solutions of the successive unconstrained problems eventually converge to the solution of the original constrained problem.
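The strategy above can be sketched with SciPy's `minimize` (BFGS) standing in for the unconstrained methods of Section 3.5. The function name `exterior_penalty`, the starting value rp = 1, γ = 10, the fixed number of outer iterations, and the one-variable test problem are illustrative assumptions; a practical implementation would instead stop once the constraint violation or the change in the solution is small enough.

```python
import numpy as np
from scipy.optimize import minimize


def exterior_penalty(f, g, x0, h=None, rp=1.0, gamma=10.0, n_outer=5):
    """Sequential exterior-penalty sketch.

    Minimizes Phi(x, rp) = f(x) + rp * p(x), where p(x) is the sum of squared
    violations of g(x) <= 0 plus the sum of squared h(x) (if h is given),
    then multiplies rp by gamma and warm-starts from the previous solution.
    """
    def phi(x, rp):
        p = np.sum(np.maximum(0.0, g(x)) ** 2)   # only violated g_i contribute
        if h is not None:
            p += np.sum(np.asarray(h(x)) ** 2)
        return f(x) + rp * p

    x = np.asarray(x0, dtype=float)
    history = []
    for _ in range(n_outer):
        x = minimize(phi, x, args=(rp,), method="BFGS").x   # unconstrained solve
        history.append((rp, x.copy()))
        rp *= gamma
    return x, history


# Illustrative problem: minimize x^2 subject to g = 1 - x <= 0 (optimum x* = 1).
x_star, history = exterior_penalty(lambda x: x[0] ** 2,
                                   lambda x: np.array([1.0 - x[0]]), [0.0])
for rp, x in history:
    print(f"rp = {rp:g}: x = {x[0]:.6f}")
```

For this problem, each unconstrained minimum lies at x = rp/(1 + rp), slightly outside the feasible region, and it approaches x* = 1 as rp grows. This shows how the exterior penalty method approaches the optimum from the infeasible side.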
